Abstract

We propose a stochastic approximation (SA) based method with randomization of samples for policy evaluation using the least squares temporal difference (LSTD) algorithm. Our proposed scheme is equivalent to running regular temporal difference learning with linear function approximation, albeit with samples picked uniformly from a given dataset. Our method results in an O(d) improvement in complexity in comparison to LSTD, where d is the dimension of the data. We provide non-asymptotic bounds for our proposed method, both in high probability and in expectation, under the assumption that the matrix underlying the LSTD solution is positive definite. The latter assumption can be easily satisfied for the pathwise LSTD variant proposed by Lazaric (J Mach Learn Res 13:3041–3074, 2012). Moreover, we also establish that using our method in place of LSTD does not impact the rate of convergence of the approximate value function to the true value function. These rate results coupled with the low computational complexity of our method make it attractive for implementation in big data settings, where d is large. A similar low-complexity alternative for least squares regression is well-known as the stochastic gradient descent (SGD) algorithm. We provide finite-time bounds for SGD. We demonstrate the practicality of our method as an efficient alternative for pathwise LSTD empirically by combining it with the least squares policy iteration algorithm in a traffic signal control application. We also conduct another set of experiments that combines the SA-based low-complexity variant for least squares regression with the LinUCB algorithm for contextual bandits, using the large scale news recommendation dataset from Yahoo.

1 Introduction

Several machine learning problems involve solving a linear system of equations from a given set of training data. In this paper, we consider the problem of policy evaluation in reinforcement learning (RL). The objective here is to estimate the value function \(V^\pi\) of a given policy \(\pi\). Temporal difference (TD) methods are well-known in this context, and they are known to converge to the fixed point \(V^\pi = {\mathcal {T}}^\pi (V^\pi )\), where \({\mathcal {T}}^\pi\) is the Bellman operator (see Sect. 3.1 for a precise definition).

The TD algorithm stores an entry representing the value function estimate for each state, making it computationally difficult to implement for problems with large state spaces. A popular approach to alleviate this curse of dimensionality is to parameterize the value function using a linear function approximation architecture. For every s in the state space \({\mathcal {S}}\), we approximate \(V^\pi (s) \approx \theta ^\textsf {T}\phi (s)\), where \(\phi (\cdot )\) is a d-dimensional feature vector with \(d \ll |{\mathcal {S}}|\), and \(\theta\) is a tunable parameter. The function approximation variant of TD is known to converge to the fixed point of \(\varPhi \theta = \varPi {\mathcal {T}}^\pi (\varPhi \theta )\), where \(\varPi\) is the orthogonal projection onto the space within which we approximate the value function, and \(\varPhi\) is the feature matrix that characterizes this space (Tsitsiklis and Van Roy 1997). For a detailed treatment of this subject matter, the reader is referred to the classic textbooks (Bertsekas and Tsitsiklis 1996; Sutton and Barto 1998).

Batch reinforcement learning is a popular paradigm for policy learning. Here, we are provided with a (usually) large set of state transitions \({\mathcal {D}}\triangleq \{(s_i,r_i,s'_{i}),\ i=1,\ldots ,T\}\) obtained by simulating the underlying Markov decision process (MDP). For every \(i=1,\ldots ,T\), the 3-tuple \((s_i,r_i,s'_{i})\) corresponds to a transition from state \(s_i\) to \(s'_i\) and the resulting reward is denoted by \(r_i\). The objective is to learn an approximately optimal policy from this set. Least squares policy iteration (LSPI) (Lagoudakis and Parr 2003) is a well-known batch RL algorithm in this context, and it is based on the idea of policy iteration. A fundamental component of LSPI is least squares temporal difference (LSTD) (Bradtke and Barto 1996), which is introduced next.

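LSTD estimates the fixed point of \(\varPi {\mathcal {T}}^\pi\), for a given policy \(\pi\), using empirical data \({\mathcal {D}}\). The LSTD estimate is given as the solution to

\[ {\hat{\theta }}_T = {\bar{A}}_T^{-1}\, {\bar{b}}_T, \quad \text{where } {\bar{A}}_T = \frac{1}{T}\sum _{i=1}^{T} \phi (s_i)\big (\phi (s_i) - \beta \phi (s'_i)\big )^\textsf {T} \text{ and } {\bar{b}}_T = \frac{1}{T}\sum _{i=1}^{T} r_i\, \phi (s_i). \tag{1} \]

Here \(\beta \in [0,1)\) denotes the discount factor.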

We consider a special variant of LSTD called pathwise LSTD, proposed by Lazaric et al. (2012). The idea behind pathwise LSTD is to (i) have the dataset \({\mathcal {D}}\) created using a sample path simulated from the underlying MDP for the policy \(\pi\), and (ii) set \(s_T'=0\) while computing \({\bar{A}}_T\) defined above. The latter setting ensures the existence of the LSTD solution \({\hat{\theta }}_T\) under the condition that the family of features on the dataset \({\mathcal {D}}\) are linearly independent.

Our primary focus in this work is to solve the LSTD system in a computationally efficient manner. Solving (1) is computationally expensive, especially when d is large. For instance, in the case when \({\bar{A}}_T\) is invertible, the complexity of the approach above is \(O(d^2 T)\), where \(\bar{A}_T^{-1}\) is computed iteratively using the Sherman–Morrison lemma. On the other hand, if we employ the Strassen algorithm or the Coppersmith–Winograd algorithm for computing \({\bar{A}}_T^{-1}\), the complexity is of the order \(O(d^{2.807})\) and \(O(d^{2.375})\), respectively, in addition to the \(O(d^2 T)\) complexity for computing \({\bar{A}}_T\). An approach for solving (1) without explicitly inverting \({\bar{A}}_T\) is computationally expensive as well.
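To make the \(O(d^2 T)\) cost concrete, the following is a minimal sketch of an LSTD solver that maintains the inverse through rank-one Sherman–Morrison updates. The function name, the `(phi, r, phi_next)` sample layout, and the small ridge term `eps` used to initialise the inverse are assumptions made for illustration; they are not taken from the paper.

```python
def lstd_sherman_morrison(samples, beta, eps=1e-6):
    """Illustrative LSTD solve with O(d^2) work per sample.

    samples: list of (phi, r, phi_next), with phi and phi_next lists of length d.
    eps: hypothetical ridge term, so the recursion can start from (eps * I)^{-1}.
    The 1/T factors of A_bar and b_bar cancel, so unnormalised sums are used.
    """
    d = len(samples[0][0])
    a_inv = [[(1.0 / eps if i == j else 0.0) for j in range(d)] for i in range(d)]
    b = [0.0] * d
    for phi, r, phi_next in samples:
        # Rank-one term u v^T with u = phi and v = phi - beta * phi_next.
        v = [p - beta * q for p, q in zip(phi, phi_next)]
        a_u = [sum(a_inv[i][k] * phi[k] for k in range(d)) for i in range(d)]
        v_a = [sum(v[k] * a_inv[k][j] for k in range(d)) for j in range(d)]
        denom = 1.0 + sum(v[k] * a_u[k] for k in range(d))
        # Sherman-Morrison: (A + u v^T)^{-1} = A^{-1} - A^{-1} u v^T A^{-1} / denom.
        for i in range(d):
            for j in range(d):
                a_inv[i][j] -= a_u[i] * v_a[j] / denom
        for k in range(d):
            b[k] += r * phi[k]
    return [sum(a_inv[i][k] * b[k] for k in range(d)) for i in range(d)]
```

The double loop over `i` and `j` is the \(O(d^2)\) term that is paid once per sample, which is the cost the O(d) scheme discussed in this paper avoids.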

From the above discussion, it is evident that LSTD scales poorly with the number of features, making it inapplicable for large datasets with many features. We propose the batchTD algorithm to alleviate the high computation cost of LSTD in high dimensions. The batchTD algorithm replaces the inversion of the \({\bar{A}}_T\) matrix by the following iterative procedure that performs a fixed point iteration (see Fig. 1 for an illustration): Set \(\theta _0\) arbitrarily and update

\[ \theta _n = \theta _{n-1} + \gamma _n \big (r_{i_n} + \beta \theta _{n-1}^\textsf {T}\phi (s'_{i_n}) - \theta _{n-1}^\textsf {T}\phi (s_{i_n})\big )\, \phi (s_{i_n}), \tag{2} \]

where each \(i_n\) is chosen uniformly at random from the set \(\{1,\ldots ,T\}\), and \(\gamma _n\) are step-sizes that satisfy standard stochastic approximation conditions. The random sampling is sufficient to ensure convergence to the LSTD solution. Each update iteration (2) is of order O(d), and our bounds show that after T iterations, the iterate \(\theta _T\) is very close to the LSTD solution, with high probability. The advantage of the scheme above is that it incurs a computational cost of O(dT), while a traditional LSTD solver based on the Sherman–Morrison lemma would require \(O(d^2T)\).
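The O(d) per-iteration cost can be seen in a short sketch of a TD(0)-style update driven by uniformly sampled transitions. This is an illustration under assumptions: the function name, the `(phi, r, phi_next)` sample layout, the zero initialisation, and the `step_size` callback are hypothetical choices, not the authors' implementation.

```python
import random


def batch_td(samples, beta, num_iters, step_size, seed=0):
    """Illustrative batchTD loop: one uniformly drawn transition per update.

    samples: list of (phi, r, phi_next) with feature vectors as lists of length d.
    step_size: callable n -> gamma_n (hypothetical interface).
    """
    rng = random.Random(seed)
    d = len(samples[0][0])
    theta = [0.0] * d  # theta_0 may be arbitrary; zero is used here
    for n in range(1, num_iters + 1):
        phi, r, phi_next = rng.choice(samples)  # i_n uniform on {1, ..., T}
        value = sum(t * p for t, p in zip(theta, phi))
        next_value = sum(t * p for t, p in zip(theta, phi_next))
        td_error = r + beta * next_value - value
        gamma = step_size(n)
        # O(d) work: a scaled copy of the sampled feature vector is added.
        theta = [t + gamma * td_error * p for t, p in zip(theta, phi)]
    return theta
```

A call such as `batch_td(samples, 0.5, 2000, lambda n: 1.0 / n)` would use a step size that satisfies the standard stochastic approximation conditions; the constants in the step sizes analysed in the paper are not reproduced here.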

The update rule in (2) resembles that of TD(0) with linear function approximation, justifying the nomenclature ‘batchTD’. Note that regular TD(0) with linear function approximation uses a sample path from the Markov chain underlying the policy considered. In contrast, the batchTD algorithm performs the update iteration using a sample picked uniformly at random from a dataset. We establish, through non-asymptotic bounds, that using batchTD in place of LSTD does not impact the convergence rate of LSTD to the true value function. The advantage with batchTD is the low computational cost in comparison to LSTD.
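One way to see why uniform sampling targets the LSTD solution is to average a single update over the random index. The short derivation below is a sketch; it assumes the standard empirical quantities \({\bar{A}}_T = \frac{1}{T}\sum _{i=1}^{T} \phi (s_i)(\phi (s_i) - \beta \phi (s'_i))^\textsf {T}\) and \({\bar{b}}_T = \frac{1}{T}\sum _{i=1}^{T} r_i \phi (s_i)\), and treats \(\theta\) as fixed.

```latex
\begin{aligned}
\mathbb{E}_{i_n}\!\left[\big(r_{i_n} + \beta\,\theta^{\mathsf T}\phi(s'_{i_n}) - \theta^{\mathsf T}\phi(s_{i_n})\big)\,\phi(s_{i_n})\right]
&= \frac{1}{T}\sum_{i=1}^{T} r_i\,\phi(s_i)
 \;-\; \frac{1}{T}\sum_{i=1}^{T} \phi(s_i)\big(\phi(s_i) - \beta\,\phi(s'_i)\big)^{\mathsf T}\theta \\
&= \bar{b}_T - \bar{A}_T\,\theta .
\end{aligned}
```

The mean update direction therefore vanishes exactly at \(\theta = {\bar{A}}_T^{-1}{\bar{b}}_T = {\hat{\theta }}_T\), whenever \({\bar{A}}_T\) is invertible.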

From a theoretical standpoint, the scheme (2) comes under the purview of stochastic approximation (SA). Stochastic approximation is a well-known technique that was originally proposed for finding zeroes of a nonlinear function in the seminal work of Robbins and Monro (1951). Iterate averaging is a standard approach to accelerate the convergence of SA schemes and was proposed independently by Ruppert (1991) and Polyak and Juditsky (1992). Non-asymptotic bounds for Robbins–Monro schemes have been provided by Frikha and Menozzi (2012) and extended to incorporate iterate averaging by Fathi and Frikha (2013). The reader is referred to Kushner and Yin (2003) for a textbook introduction to SA.

Improving the complexity of TD-like algorithms is a popular line of research in RL. The popular Computer Go setting (Silver et al. 2007), with dimension \(d=10^6\), and several practical application domains (e.g. transportation, networks) involve high-feature dimensions. Moreover, considering that linear function approximation is effective with a large number of features, our O(d) improvement in complexity of LSTD by employing a TD-like algorithm on batch data is meaningful. For other algorithms treating this complexity problem, see GTD (Sutton et al. 2009a), GTD2 (Sutton et al. 2009b), iLSTD (Geramifard et al. 2007) and the references therein. In particular, iLSTD is suitable for settings where the features admit a sparse representation.

In the context of improving the complexity of LSTD, our contributions can be summarized as follows: First, through finite sample bounds, we show that our batchTD algorithm (2) converges to the pathwise LSTD solution at the optimal rate of \(O(n^{-1/2})\) in expectation (see Theorem 4.2 in Sect. 4). By projecting the iterate (2) onto a compact and convex subset of \({\mathbb {R}}^d\), we are able to establish high probability bounds on the error \(\left\| \theta _n - {\hat{\theta }}_T\right\| _2\). In particular, we show that, with probability \(1-\delta\), the batchTD iterate \(\theta _n\) constructs an \(\epsilon\)-approximation of the corresponding pathwise LSTD solution with \(O(d\ln (1/\delta )/\epsilon ^2)\) complexity, irrespective of the number of batch samples T. The above rate results are for a step-size choice that is inversely proportional to the number of iterations of (2), and also require the knowledge of the minimum eigenvalue of the symmetric part of \({\bar{A}}_T\). We overcome the latter dependence on the knowledge of the minimum eigenvalue through iterate averaging. As an aside, we note that using completely parallel arguments to those used in arriving at non-asymptotic bounds for batchTD, one could derive bounds for the regular TD algorithm with linear function approximation, albeit for the special case when the underlying samples arrive in an i.i.d. fashion. Second, through a performance bound, we establish that using our batchTD algorithm in place of LSTD does not impact the rate of convergence of the approximate value function to the true value function.

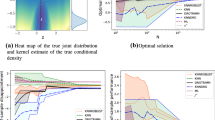

Third, we investigate the rates when larger step sizes (\(\varTheta (n^{-\alpha })\) where \(\alpha \in (1/2, 1)\)) are used in conjunction with averaging of the iterates, i.e., the well-known Polyak–Ruppert averaging scheme. The rate obtained in high probability for the iterate-averaged variant is of the order \(O(n^{-\alpha /2})\), with the added advantage that, unlike the non-averaged case, the step-size choice does not require knowledge of the minimum eigenvalue of the symmetric part of \({\bar{A}}_T\). Further, with iterate averaging the complexity of the algorithm stays at O(d) per iteration, as before. Fourth, we consider a traffic control application, and implement a variant of LSPI which uses the batchTD algorithm in place of LSTD. In particular, for the experiments we employ the step-sizes that were used to derive the non-asymptotic bounds mentioned above. We demonstrate that, by running batchTD for a small number of iterations (\(\sim 500\)) on large problems with feature dimension \(\sim 4000\), one obtains performance that is almost as good as regular LSTD at a significantly lower computational cost.
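The iterate-averaged variant can be sketched by keeping a running mean next to the TD iterate, which adds only O(d) work per step. The function name, the constants `c` and `alpha`, and the sample layout below are illustrative assumptions; the exact step-size constants used in the analysis are not reproduced.

```python
import random


def averaged_batch_td(samples, beta, num_iters, c=1.0, alpha=0.75, seed=0):
    """Illustrative Polyak-Ruppert averaging on top of a batchTD-style update.

    Step size gamma_n = c * n**(-alpha) with alpha in (1/2, 1); c and alpha
    are hypothetical defaults. Returns (last iterate, averaged iterate).
    """
    rng = random.Random(seed)
    d = len(samples[0][0])
    theta = [0.0] * d
    theta_bar = [0.0] * d
    for n in range(1, num_iters + 1):
        phi, r, phi_next = rng.choice(samples)
        gamma = c * n ** (-alpha)
        value = sum(t * p for t, p in zip(theta, phi))
        next_value = sum(t * p for t, p in zip(theta, phi_next))
        td_error = r + beta * next_value - value
        theta = [t + gamma * td_error * p for t, p in zip(theta, phi)]
        # Running mean of theta_1, ..., theta_n, updated in O(d).
        theta_bar = [m + (t - m) / n for m, t in zip(theta_bar, theta)]
    return theta, theta_bar
```

Note that the step size here depends only on `n`, `c`, and `alpha`, which mirrors the point that no eigenvalue information enters the step-size choice.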

We now turn our attention to solving least squares regression problems via the popular stochastic gradient descent (SGD) method. Many practical machine learning algorithms require computing the least squares solution at each iteration in order to make a decision. As in the case of LSTD, classic least squares solution schemes, such as those based on the Sherman–Morrison lemma, have a complexity of the order \(O(d^2)\). A practical alternative is to use an SA-based iterative scheme that is of the order O(d). Such SA-based schemes, when applied to the least squares parameter estimation context, are well known in the ML literature as SGD algorithms.

We also analyze the low-complexity SGD alternative for the classic least squares parameter estimation problem. Using the same template as for the results of batchTD, we derive non-asymptotic bounds, which hold both in high probability as well as in expectation, for the tracking error \(\Vert \theta _n - {\hat{\theta }}_T\Vert _2\). Here \(\theta _n\) is the SGD iterate, while \({\hat{\theta }}_T\) is the least squares solution. We describe a fast variant of the LinUCB (Li et al. 2010) algorithm for contextual bandits, where the SGD iterate is used in place of the least squares solution. We demonstrate the empirical usefulness of the SGD-based LinUCB algorithm using the large scale news recommendation dataset from Yahoo (Webscope 2011). We observe that, using the step-size suggested by our bounds, the SGD-based LinUCB algorithm exhibits low tracking error, while providing significant computational gains.
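For the regression setting, the O(d) alternative can be sketched as plain SGD on the squared loss with uniformly sampled data points. The update form, function name, `(x, y)` data layout, and `step_size` callback are assumptions chosen for illustration and are not taken from the paper's pseudocode.

```python
import random


def sgd_least_squares(data, num_iters, step_size, seed=0):
    """Illustrative SGD for least squares with uniform sampling from a batch.

    data: list of (x, y) with x a list of length d and y a float.
    step_size: callable n -> gamma_n (hypothetical interface).
    """
    rng = random.Random(seed)
    d = len(data[0][0])
    theta = [0.0] * d
    for n in range(1, num_iters + 1):
        x, y = rng.choice(data)
        residual = y - sum(t * xi for t, xi in zip(theta, x))
        gamma = step_size(n)
        # Negative gradient of 0.5 * (y - theta^T x)^2 is residual * x.
        theta = [t + gamma * residual * xi for t, xi in zip(theta, x)]
    return theta
```

In a LinUCB-style loop, the returned `theta` would stand in for the exact least squares solution; how the confidence term is handled in the fast variant is not shown here.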

The rate results coupled with the low complexity of our schemes, in the context of LSTD as well as least squares regression, make them more amenable to practical implementation in the canonical big data settings, where the dimension d is large. This is amply demonstrated in our applications in transportation and recommendation systems domains, where we establish that batchTD and SGD perform almost as well as regular LSTD and regression solvers, albeit with much less computation (and with less memory). Note that the empirical evaluations are for higher level machine learning algorithms—least squares policy iteration (LSPI) (Lagoudakis and Parr 2003), and linear bandits (Dani et al. 2008; Li et al. 2010), which use LSTD and regression in their inner loops.

The rest of the paper is organized as follows: In Sect. 2, we discuss related work. In Sect. 3, we present the batchTD algorithm, and in Sect. 4 we provide the non-asymptotic bounds for this algorithm. In Sect. 5, we analyze a variant of our algorithm that incorporates iterate averaging. In Sect. 6, we compare our bounds to those in recent work. In Sect. 7, we describe a variant of LSPI that uses batchTD in place of LSTD. Next, in Sect. 8, we provide detailed proofs of convergence, and derivation of rates. We provide experiments on a traffic signal control application in Sect. 9. In Sect. 10, we provide extensions to solve the problem of least squares regression and in Sect. 11, we provide a set of experiments that tests a variant of the LinUCB algorithm using an SGD subroutine for least squares regression. Finally, in Sect. 12 we provide the concluding remarks.

2 Literature review

2.1 Previous work related to LSTD

In Chapter 6 of Konda (2002), the author establishes that LSTD has the optimal asymptotic convergence rate, while Antos et al. (2008) and Lazaric et al. (2012) provide a finite time analysis for LSTD and LSPI. Recent work by Tagorti and Scherrer (2015) provides sample complexity bounds for LSTD(\(\lambda\)). LSPE(\(\lambda\)), which is an algorithm that is closely related to LSTD(\(\lambda\)), is analyzed by Yu and Bertsekas (2009). The authors there provide asymptotic rate results for LSPE(\(\lambda\)), and show that it matches that of LSTD(\(\lambda\)). Also related is the work by Pires and Szepesvári (2012), where the authors study linear systems in general, and as a special case, provide error bounds for LSTD with improved dependence on the underlying feature dimension.

A closely related contribution that is geared towards improving the computational complexity of LSTD is iLSTD (Geramifard et al. 2007). However, the analysis for iLSTD requires that the feature matrix be sparse, while we provide finite-time bounds for our fast LSTD algorithm without imposing sparsity on the features. Another line of related previous work is GTD (Sutton et al. 2009a), and its later enhancement GTD2 (Sutton et al. 2009b). The latter algorithms feature an update iteration that can be viewed as gradient descent and operate in the online setting similar to the regular TD algorithm with function approximation. However, the advantage with GTD/GTD2 is that these algorithms are provably convergent to the TD fixed point even when the policy used for collecting samples differs from the policy being evaluated—the so-called off-policy setting. Recent work by Liu et al. (2015) provides finite time analysis for the GTD algorithm. Unlike GTD-like algorithms, we operate in an offline setting with a batch of samples provided beforehand. LSTD is a popular algorithm here, but has a bad dependency in terms of computational complexity on the feature dimension, and we bring this down from \(O(d^2)\) to O(d) by running an algorithm that closely resembles TD on the batch of samples. This algorithm is shown to retain the convergence rate of LSTD.

To the best of our knowledge, efficient SA algorithms that approximate LSTD without impacting its rate of convergence have not been proposed before in the literature. The high probability bounds that we derive for batchTD do not directly follow from earlier work on LSTD algorithms. Concentration bounds for SA schemes have been derived by Frikha and Menozzi (2012). While we use their technique for proving the high-probability bound on batchTD iterate (see Theorem 4.2), our analysis is more elementary, and we make all the constants explicit for the problem at hand. Moreover, in order to eliminate a possible exponential dependence of the constants in the resulting bound on the reciprocal of the minimum eigenvalue of the symmetric part of \({\bar{A}}_T\), we depart from the argument by Frikha and Menozzi (2012).

Finite sample analysis of TD with linear function approximation has received more attention in recent works (cf. Dalal et al. 2018; Bhandari et al. 2018; Lakshminarayanan and Szepesvari 2018). A detailed comparison of our bounds to those in the aforementioned references is provided in Sect. 6.

This paper is an extended version of an earlier work (see Prashanth et al. 2014). This work corrects the errors in the earlier work through significant changes to the proofs, and includes additional simulation experiments. Finally, Narayanan and Szepesvári (2017) list a few problems with the results and proofs in the conference version (Prashanth et al. 2014), and the corrections incorporated in this work address their comments.

2.2 Previous work related to SGD

Finite time analysis of SGD methods has been provided by Bach and Moulines (2011). While the bounds by Bach and Moulines (2011) are given in expectation, many machine learning applications require high probability bounds, which we provide for our case. Regret bounds for online SGD techniques have been given by Zinkevich (2003) and Hazan and Kale (2011). The gradient descent algorithm by Zinkevich (2003) is in the setting of optimising the average of convex loss functions whose gradients are available, while that by Hazan and Kale (2011) is for strongly convex loss functions.

In comparison to previous work w.r.t. least squares regression, we highlight the following differences:

Earlier works on strongly convex optimization (cf. Hazan and Kale 2011) require the knowledge of the strong convexity constant in deciding the step-size. While one can regularize the problem to get rid of the step-size dependence on \(\mu\), it is not straightforward to choose the regularization constant. Notice that for SGD type schemes, one requires that the matrix \({\bar{A}}_T\) have a minimum positive eigenvalue \(\mu\). Equivalently, this implies that the original problem is regularized with \(T\mu\). This may turn out to be too high a regularization and hence it is desirable to have SGD get rid of this dependence without changing the problem itself. This is precisely what iterate-averaged SGD achieves, i.e., optimal rates both in high probability and expectation even for the un-regularized problem. To the best of our knowledge, there is no previous work that provides non-asymptotic bounds, both in high probability and in expectation, for iterate-averaged SGD.

Our analysis is for the classic SGD scheme that is anytime, whereas the epoch-GD algorithm by Hazan and Kale (2011) requires the knowledge of the time horizon.

While the algorithm by Bach and Moulines (2013) is shown to exhibit the optimal rate of convergence without assuming strong convexity, the bounds there are in expectation only. In contrast, for the special case of strongly convex functions, we derive high-probability bounds in addition to bounds in expectation. Furthermore, the bound in expectation from Bach and Moulines (2011) is not optimal for a strongly convex function in the sense that the initial error (which depends on where the algorithm started) is not forgotten as fast as the rate that we derive.

On a minor note, our analysis is simpler since we work directly with least squares problems, and we make all the constants explicit for the problems considered.

3 TD with uniform sampling on batch data (batchTD)

We propose here a stochastic approximation variant of the LSTD algorithm, whose iterates converge to the same fixed point as the regular LSTD algorithm, while incurring much smaller overall computational cost. The algorithm, which we call batchTD, is a simple stochastic approximation scheme that updates incrementally using samples picked uniformly from batch data. The results that we present establish that the batchTD algorithm computes an \(\epsilon\)-approximation to the LSTD solution \({\hat{\theta }}_T\) with probability \(1-\delta\), while incurring a complexity of the order \(O(d\ln (1/\delta )/\epsilon ^2)\), irrespective of the number of samples T. In turn, this enables us to give a performance bound for the approximate value function computed by the batchTD algorithm.

In the following section, we provide a brief background on LSTD and pathwise LSTD. In the subsequent section, we present our batchTD algorithm.

3.1 Background

Consider an MDP with state space \({\mathcal {S}}\) and action space \({\mathcal {A}}\), both assumed to be finite. Let \(p(s,a,s')\), \(s,s' \in {\mathcal {S}}, a\in {\mathcal {A}}\) denote the probability of transitioning from state s to \(s'\) on action a. Let \(\pi\) be a stationary randomized policy, i.e., \(\pi (s, \cdot )\) is a distribution over \({\mathcal {A}}\), for any \(s\in {\mathcal {S}}\). The value function \(V^\pi\) is defined by

\[ V^\pi (s) \triangleq {\mathbb {E}}\left[ \sum _{t=0}^{\infty } \beta ^t\, r(s_t, a_t) \,\Big |\, s_0 = s\right] , \quad a_t \sim \pi (s_t, \cdot ), \tag{3} \]

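where \(s_t\) denotes the state of the MDP at time t, \(\beta \in [0,1)\) the discount factor, and r(s, a) denotes the instantaneous reward obtained in state s under action a. The value function \(V^\pi\) can be expressed as the fixed point of the Bellman operator \({\mathcal {T}}^\pi\) defined by

\[ {\mathcal {T}}^\pi (V)(s) \triangleq \sum _{a \in {\mathcal {A}}} \pi (s,a) \left[ r(s,a) + \beta \sum _{s' \in {\mathcal {S}}} p(s,a,s')\, V(s')\right] . \tag{4} \]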

When the cardinality of \({\mathcal {S}}\) is huge, a popular approach is to parameterize the value function using a linear function approximation architecture, i.e., for every \(s \in {\mathcal {S}}\), approximate \(V^\pi (s) \approx \phi (s)^\textsf {T}\theta\), where \(\phi (s)\) is a d-dimensional feature vector for state s with \(d \ll |{\mathcal {S}}|\), and \(\theta\) is a tunable parameter. With this approach, the idea is to find the best approximation to the value function \(V^\pi\) in \({\mathcal {B}}=\{\varPhi \theta \mid \theta \in {\mathbb {R}}^d\}\), which is a vector subspace of \({\mathbb {R}}^{|{\mathcal {S}}|}\). In this setting, it is no longer feasible to find the fixed point \(V^\pi ={\mathcal {T}}^\pi V^\pi\). Instead, one can approximate \(V^\pi\) within \({\mathcal {B}}\) by solving the following projected system of equations:

\[ \varPhi \theta = \varPi {\mathcal {T}}^\pi (\varPhi \theta ). \tag{5} \]

In the above, \(\varPhi\) denotes the feature matrix with rows \(\phi (s)^\textsf {T}, \forall s \in {\mathcal {S}}\), and \(\varPi\) is the orthogonal projection onto \({\mathcal {B}}\). Assuming that the matrix \(\varPhi\) has full column rank, it is easy to derive that \(\varPi = \varPhi (\varPhi ^\textsf {T}\varPsi \varPhi )^{-1}\varPhi ^\textsf {T}\varPsi\), where \(\varPsi\) is the diagonal matrix whose diagonal elements form the stationary distribution (assuming it exists) of the Markov chain associated with the policy \(\pi\).

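The solution \(\theta ^*\) of (5) can be re-written as follows (cf. Bertsekas 2012, Section 6.3):

\[ \theta ^* = A^{-1} b, \quad \text{where } A = \varPhi ^\textsf {T}\varPsi (I - \beta P)\varPhi \text{ and } b = \varPhi ^\textsf {T}\varPsi \mathcal{R}, \tag{6} \]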

where \(P = [P(s,s')]_{s,s'\in {\mathcal {S}}}\) is the transition probability matrix with components \(P(s,s') = \sum _{a \in {\mathcal {A}}} \pi (s,a) p(s,a,s')\), \(\mathcal{R}\) is the vector with components \(\sum _{a \in {\mathcal {A}}} \pi (s,a) r(s,a)\), for each \(s\in {\mathcal {S}}\), and \(\varPsi\) the stationary distribution (assuming it exists) of the Markov chain for the underlying policy \(\pi\).

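In the absence of knowledge of the transition dynamics P and stationary distribution \(\varPsi\), LSTD is an approach which can approximate the solution \(\theta ^*\) using a batch of samples obtained from the underlying MDP. In particular, it requires a dataset \({\mathcal {D}}= \{(s_i,r_i,s'_i),\ i=1,\ldots ,T\}\), where each tuple \((s_i,r_i,s_i')\) in the dataset represents a state-reward-next-state triple chosen by the policy. The LSTD solution approximates A, b, and \(\theta ^*\) with \(\bar{A}_T\), \(\bar{b}_T\), and \({\hat{\theta }}_T\), respectively, using the samples in \({\mathcal {D}}\) as follows:

\[ {\hat{\theta }}_T = {\bar{A}}_T^{-1}\, {\bar{b}}_T, \quad \text{where } {\bar{A}}_T = \frac{1}{T}\sum _{i=1}^{T} \phi (s_i)\big (\phi (s_i) - \beta \phi (s'_i)\big )^\textsf {T} \text{ and } {\bar{b}}_T = \frac{1}{T}\sum _{i=1}^{T} r_i\, \phi (s_i). \tag{7} \]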

Denoting the current state feature \((T\times d)\)-matrix by \(\varPhi \triangleq (\phi (s_1),\dots , \phi (s_T))^\textsf {T}\), next state feature \((T\times d)\)-matrix by \(\varPhi ' \triangleq (\phi (s_1'),\dots , \phi (s_T'))^\textsf {T}\), and reward \((T\times 1)\)-vector by \(\mathcal{R} = (r_1,\dots ,r_T)^\textsf {T}\), we can rewrite \({\bar{A}}_T\) and \({\bar{b}}_T\) as follows (see footnote 1):

\[ {\bar{A}}_T = \frac{1}{T}\, \varPhi ^\textsf {T}(\varPhi - \beta \varPhi '), \quad {\bar{b}}_T = \frac{1}{T}\, \varPhi ^\textsf {T}\mathcal{R}. \]

It is not clear whether \({\bar{A}}_T\) is invertible for an arbitrary dataset \({\mathcal {D}}\). One way to ensure invertibility is to adopt the approach of pathwise LSTD, proposed by Lazaric et al. (2012). The pathwise LSTD algorithm is an on-policy version of LSTD. It obtains the samples \({\mathcal {D}}\) by simulating a sample path of the underlying MDP using policy \(\pi\), so that \(s_i' = s_{i+1}\) for \(i = 1,\dots ,T-1\). The dataset thus obtained is perturbed slightly by setting the feature of the next state of the last transition, \(\phi (s_T')\), to zero. This perturbation, as suggested by Lazaric et al. (2012), is crucial to ensure that the system of equations that we solve as an approximation to (6) is well-posed. For the sake of completeness, we make this precise in the following discussion, which is based on Sections 2 and 3 of Lazaric et al. (2012).

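Define the empirical Bellman operator \({\hat{T}}: {\mathbb {R}}^T \rightarrow {\mathbb {R}}^T\) as follows: For any \(y \in {\mathbb {R}}^T\),

\[ ({\hat{T}} y)_i \triangleq {\left\{ \begin{array}{ll} r_i + \beta y_{i+1}, &{} 1 \le i < T, \\ r_T, &{} i = T. \end{array}\right. } \]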

Let \(\hat{\mathcal{R}}\) be a \(T\times 1\) vector with entries \(r_i\), \(i=1,\ldots ,T\), and let \((\hat{\mathcal{V}} y)_i = y_{i+1}\) if \(i<T\) and 0 otherwise. Then, it is clear that \({\hat{T}} y = \hat{\mathcal{R}} + \beta \hat{\mathcal{V}} y\).
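As a small illustration of the decomposition \({\hat{T}} y = \hat{\mathcal{R}} + \beta \hat{\mathcal{V}} y\), the sketch below applies the shift operator and adds the rewards. The function names are hypothetical, and 0-based Python indexing replaces the 1-based indices used in the text.

```python
def shift_operator(y):
    """(V_hat y)_i = y_{i+1} for i < T and 0 for i = T."""
    return list(y[1:]) + [0.0]


def empirical_bellman(y, rewards, beta):
    """T_hat y = R_hat + beta * V_hat y on a single sample path of length T."""
    shifted = shift_operator(y)
    return [r + beta * v for r, v in zip(rewards, shifted)]


def empirical_norm(y):
    """||y||_T = sqrt((1/T) * sum_i y_i^2)."""
    return (sum(v * v for v in y) / len(y)) ** 0.5
```

For rewards (1, 2, 3), y = (10, 20, 30) and \(\beta = 0.5\), the operator returns (11, 17, 3): the last entry keeps only the reward because the path ends there.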

Let \({\mathcal {G}}_T \triangleq \{ (\phi (s_1)^\textsf {T}\theta ,\ldots ,\phi (s_T)^\textsf {T}\theta )^\textsf {T}\mid \theta \in {\mathbb {R}}^d\} \subset {\mathbb {R}}^T\) be the vector sub-space of \({\mathbb {R}}^T\) within which pathwise LSTD approximates the true values of the value function corresponding to the states \(s_1,\dots , s_T\); it is the empirical analogue of \({\mathcal {B}}\) defined earlier. It is easy to see that \({\mathcal {G}}_T = \{ \varPhi \theta \mid \theta \in {\mathbb {R}}^d\}\). Let \({\hat{\varPi }}\) be the orthogonal projection onto \({\mathcal {G}}_T\) using the empirical norm, which is defined as follows: \(\Vert f \Vert ^2_T \triangleq T^{-1}\sum _{i=1}^{T} f(s_i)^2\), for any function f. Notice that \({\hat{\varPi }} {\hat{T}}\) is a contraction mapping, since, for any \(y, z \in {\mathbb {R}}^T\),

\[ \Vert {\hat{\varPi }} {\hat{T}} y - {\hat{\varPi }} {\hat{T}} z \Vert _T \le \Vert {\hat{T}} y - {\hat{T}} z \Vert _T = \beta \Vert \hat{\mathcal{V}} (y - z) \Vert _T \le \beta \Vert y - z \Vert _T. \]

Hence, by the Banach fixed point theorem, there exists some \(v^* \in {\mathcal {G}}_T\) such that \({\hat{\varPi }} {\hat{T}} v^* = v^*\).

Suppose that the feature matrix \(\varPhi\) is full rank, an assumption that is standard in the analysis of TD-like algorithms and also beneficial in the sense that it ensures that the system of equations we attempt to solve is well-posed. Then, it is easy to see that there exists a unique \({\hat{\theta }}_T\) such that \(v^* = \varPhi {\hat{\theta }}_T\). Moreover, replace \({\bar{A}}_T\) in (7) with

\[ {\bar{A}}_T = \frac{1}{T}\, \varPhi ^\textsf {T}(I - \beta {\hat{P}})\varPhi , \]

where \({\hat{P}}\) is a \(T\times T\) matrix with \({\hat{P}}(i,i+1)=1\) for \(i=1,\ldots ,T-1\), and all other entries equal to 0. It is then clear that \({\bar{A}}_T\) is invertible and \({\hat{\theta }}_T\) is the unique solution to (7).

Remark 1

(Regular versus Pathwise LSTD) For a large dataset \({\mathcal {D}}\) generated from a sample path of the underlying MDP for policy \(\pi\), the difference in the matrix used as \({\bar{A}}_T\) in LSTD and pathwise LSTD is negligible. In particular, the difference in \(\ell _2\)-norm of \({\bar{A}}_T\) composed with and without zeroing out the next state in the last transition of \({\mathcal {D}}\) can be upper bounded by a constant multiple of \(\dfrac{1}{T}\). As mentioned earlier, zeroing out the next state in the last transition of \({\mathcal {D}}\) together with a full-rank \(\varPhi\) makes the system of equations in (7) well-posed. As an aside, the batchTD algorithm, which we describe below, would work as a good approximation to LSTD, as long as one ensures that \({\bar{A}}_T\) is positive definite. Pathwise LSTD presents one approach to achieve the latter requirement, and it is an interesting future research direction to derive other conditions that ensure \({\bar{A}}_T\) is positive definite.

3.2 Update rule and pseudocode for the batchTD algorithm

The idea is to perform an incremental update that is similar to TD, except that the samples are drawn uniformly at random from the dataset \({\mathcal {D}}\). Recall that, in the case of pathwise LSTD, the dataset consists of the transitions along a sample path simulated from the underlying MDP for a given policy \(\pi\), i.e., \(s'_i = s_{i+1}\), \(i=1,\ldots ,T-1\), and \(\phi (s'_T)=0\).

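The full pseudocode for batchTD is given in Algorithm 1. Starting with an arbitrary \(\theta _0\), we update the parameter \(\theta _n\) as follows:

\[ \theta _n = \varUpsilon \Big (\theta _{n-1} + \gamma _n \big (r_{i_n} + \beta \theta _{n-1}^\textsf {T}\phi (s'_{i_n}) - \theta _{n-1}^\textsf {T}\phi (s_{i_n})\big )\, \phi (s_{i_n})\Big ), \tag{10} \]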

where each \(i_n\) is chosen uniformly at random from the set \(\{1,\ldots ,T\}\). In other words, we pick a sample with uniform probability 1/T from the set \({\mathcal {D}}= \{(s_i,r_i,s'_i),\ i=1,\ldots ,T\}\), and use it to perform a fixed point iteration in (10). The quantities \(\gamma _n\) above are step sizes that are chosen in advance, and satisfy standard stochastic approximation conditions, i.e., \(\sum _{n}\gamma _n = \infty\), and \(\sum _n\gamma _n^2 <\infty\). The operator \(\varUpsilon\) projects the iterate \(\theta _n\) onto the nearest point in a closed ball \({\mathcal {C}}\subset {\mathbb {R}}^d\) with a radius H that is large enough to include \({\hat{\theta }}_T\). Note that projection via \(\varUpsilon\) amounts to scaling down the \(\ell _2\)-norm of the iterate \(\theta _n\) so that it does not exceed H, and is a computationally inexpensive operation.
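The projection step is simple enough to sketch directly. The code below assumes the ball is centred at the origin (consistent with the description of scaling down the norm); the function names and the `(phi, r, phi_next)` sample layout are illustrative assumptions.

```python
import math


def project_to_ball(theta, radius):
    """Nearest point to theta in the closed l2-ball of the given radius (origin-centred)."""
    norm = math.sqrt(sum(t * t for t in theta))
    if norm <= radius:
        return list(theta)
    scale = radius / norm
    return [scale * t for t in theta]


def projected_batch_td_step(theta, sample, beta, gamma, radius):
    """One projected TD-style update on a single sampled transition, in O(d)."""
    phi, r, phi_next = sample
    value = sum(t * p for t, p in zip(theta, phi))
    next_value = sum(t * p for t, p in zip(theta, phi_next))
    td_error = r + beta * next_value - value
    updated = [t + gamma * td_error * p for t, p in zip(theta, phi)]
    return project_to_ball(updated, radius)
```

Both the norm computation and the rescaling are O(d), so the projection does not change the per-iteration complexity.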

In the next section, we present non-asymptotic bounds for the error \(\left\| \theta _n - {\hat{\theta }}_T\right\| _2\) that hold with high probability, and in expectation, for the projected iteration in (10). Further, we also provide an error bound that holds in expectation for a variant of (10) without involving the projection operation. From the bounds presented below, we can infer that, for a step size choice that is inversely proportional to the number n of iterations, obtaining the optimal \(O\left( 1/\sqrt{n}\right)\) rate requires the knowledge of the minimum eigenvalue \(\mu\) of \(\frac{1}{2}\left( {\bar{A}}_T + {\bar{A}}_T^\textsf {T}\right)\), where \({\bar{A}}_T\) is a matrix made from the features used in the linear approximation (see assumption (A1) below). Subsequently, in Sect. 5, we present non-asymptotic bounds for a variant of the batchTD algorithm, which employs iterate averaging. The bounds for iterate-averaged batchTD establish that the knowledge of the eigenvalue \(\mu\) is not needed to obtain a rate of convergence that can be made arbitrarily close to \(O\left( 1/\sqrt{n}\right)\).

4 Main results for the batchTD algorithm

Map of the results: Theorem 4.1 proves almost sure convergence of batchTD iterate \(\theta _n\) to LSTD solution \({\hat{\theta }}_T\), with and without projection. Theorem 4.2 provides finite time bounds both in high probability, and in expectation for the error \(\Vert \theta _n - {\hat{\theta }}_T \Vert _2\), where \(\theta _n\) is given by (10). We require high probability bounds to qualify the rate of convergence of the approximate value function \(\varPhi \theta _n\) to the true value function, i.e., a variant of Theorem 1 by Lazaric et al. (2012) for the case of the batchTD algorithm. Theorem 4.5 presents a performance bound for the special case when the dataset \({\mathcal {D}}\) comes from a sample path of the underlying MDP for the given policy \(\pi\). Note that the first three results above hold irrespective of whether the dataset \({\mathcal {D}}\) is based on a sample path or not. However, the performance bound is for a sample path dataset only, and is used to illustrate that using batchTD in place of regular LSTD does not harm the overall convergence rate of the approximate value function to the true value function.

We state all the results in Sects. 4.2–4.5 and provide detailed proofs of all the claims in Sect. 8. Also, all the results are by default for the projected version of the batchTD algorithm, i.e., \(\theta _n\) given by (10), while Sect. 4.4 presents the results for the projection-free batchTD variant. In particular, the latter section provides both asymptotic convergence and a bound in expectation for the error \(\Vert \theta _n - {\hat{\theta }}_T \Vert _2\) for the projection-free variant of batchTD.

4.1 Assumptions

We make the following assumptions for the analysis of the batchTD algorithm:

- (A1): The matrix \(\bar{A}_T\) is positive definite, which implies the smallest eigenvalue \(\mu\) of its symmetric part \(\frac{1}{2}\left( \bar{A}_T + \bar{A}_T^\textsf {T}\right)\) is greater than zero (see Footnote 2).

- (A2): Bounded features: \(\left\| \phi (s_i) \right\| _2\le \varPhi _{\max }< \infty ,\) for \(i=1,\ldots ,T\).

- (A3): Bounded rewards: \(|r_i|\le R_{\max } < \infty\) for \(i=1,\ldots ,T\).

- (A4): The set \({\mathcal {C}}\triangleq \{\theta \in {\mathbb {R}}^d \mid \left\| \theta \right\| _2\le H\}\) used for projection through \(\varUpsilon\) satisfies \(H > \frac{\left\| {\bar{b}}_T\right\| _2}{\mu }\), where \(\mu\) is as defined in (A1).
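For a small dataset, (A1) and (A4) can be checked numerically. The sketch below is illustrative only. It assumes that \({\bar{A}}_T = \frac{1}{T}\sum _i \phi (s_i)(\phi (s_i) - \beta \phi (s'_i))^\textsf {T}\) and \({\bar{b}}_T = \frac{1}{T}\sum _i r_i \phi (s_i)\); these definitions come from an earlier section that is not part of this excerpt. The function names are hypothetical, and the eigenvalue routine is restricted to \(d=2\) so that a closed form can be used.

```python
import math

def lstd_matrices(phis, next_phis, rewards, beta):
    """Return (A_bar, b_bar) under the assumed definitions
    A_bar = (1/T) sum_i phi_i (phi_i - beta * phi'_i)^T,
    b_bar = (1/T) sum_i r_i phi_i."""
    T, d = len(phis), len(phis[0])
    A = [[0.0] * d for _ in range(d)]
    b = [0.0] * d
    for f, g, r in zip(phis, next_phis, rewards):
        for j in range(d):
            b[j] += r * f[j] / T
            for k in range(d):
                A[j][k] += f[j] * (f[k] - beta * g[k]) / T
    return A, b

def min_eig_symmetric_part_2x2(A):
    """Smallest eigenvalue of (A + A^T)/2 for a 2x2 matrix (closed form)."""
    a, c = A[0][0], A[1][1]
    off = 0.5 * (A[0][1] + A[1][0])
    return 0.5 * (a + c) - math.sqrt(0.25 * (a - c) ** 2 + off ** 2)

def radius_lower_bound(b, mu):
    """Any projection radius H must exceed ||b_bar||_2 / mu, as in (A4)."""
    return math.sqrt(sum(x * x for x in b)) / mu
```

With `phis = [[1, 0], [0, 1]]`, `next_phis = [[0, 1], [0, 0]]` (the last next-state feature zeroed, as in the pathwise variant), `rewards = [1, 0]` and `beta = 0.9`, the symmetric part of \({\bar{A}}_T\) has smallest eigenvalue 0.275, so (A1) holds and any \(H\) above roughly 1.82 satisfies (A4).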

In the following sections, we present results for the generalized setting, i.e., the dataset \({\mathcal {D}}\) does not necessarily come from a sample path of the underlying MDP, but we assume that the matrix \({\bar{A}}_T\) is positive definite (see (A1)). For pathwise LSTD, (A1) can be replaced by the following assumption:

- (A1’): The matrix \(\varPhi\) is full rank.

Recall that the pathwise LSTD algorithm perturbs the data set slightly, as discussed in Sect. 3.1 above. Thus, from (9), we have

The inequality above holds because \(\left\| {\hat{P}} v\right\| _2\le \left\| v \right\| _2\), and \(\left\| {\hat{P}}^\textsf {T}v\right\| _2\le \left\| v \right\| _2\), leading to the fact that \(\lambda _{\min }\left( I - \frac{\beta }{2}\left( {\hat{P}} + {\hat{P}}^\textsf {T}\right) \right) \ge (1-\beta ).\) Thus, it is easy to infer that (A1’) implies (A1), using (11) in conjunction with the fact that a full rank \(\varPhi\) implies \(\mu '>0\).
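The display referred to as (11) is not reproduced in this excerpt. A hedged sketch of how the argument might run is given next. It assumes that, for pathwise LSTD, \({\bar{A}}_T = \frac{1}{T}\varPhi ^\textsf {T}(I - \beta {\hat{P}})\varPhi\), and that \(\mu '\) denotes the minimum eigenvalue of \(\frac{1}{T}\varPhi ^\textsf {T}\varPhi\) (as in Theorem 4.5). For any \(\theta \in {\mathbb {R}}^d\), write \(v = \varPhi \theta\). Then

$$\begin{aligned} \theta ^\textsf {T}{\bar{A}}_T \theta&= \frac{1}{T} v^\textsf {T}\left( I - \beta {\hat{P}}\right) v = \frac{1}{T} v^\textsf {T}\left( I - \frac{\beta }{2}\left( {\hat{P}} + {\hat{P}}^\textsf {T}\right) \right) v \\&\ge \frac{1-\beta }{T} \left\| v \right\| _2^2 = (1-\beta )\, \theta ^\textsf {T}\left( \frac{1}{T}\varPhi ^\textsf {T}\varPhi \right) \theta \ge (1-\beta )\,\mu ' \left\| \theta \right\| _2^2. \end{aligned}$$

Since \(\theta ^\textsf {T}{\bar{A}}_T \theta = \theta ^\textsf {T}\,\frac{1}{2}\left( {\bar{A}}_T + {\bar{A}}_T^\textsf {T}\right) \theta\), minimizing over unit vectors \(\theta\) would give \(\mu \ge (1-\beta )\mu '\), which is positive whenever \(\varPhi\) has full rank and \(\beta < 1\).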

Note that the dataset is assumed to be fixed for all the results presented below.

4.2 Asymptotic convergence

Theorem 4.1

Assume (A1)–(A4), and also that the step sizes \(\gamma _n \in {\mathbb {R}}_+\) satisfy \(\sum _{n}\gamma _n = \infty\), and \(\sum _n\gamma _n^2 <\infty\). Then, for the iterate \(\theta _n\) updated according to (10), we have \(\theta _n \rightarrow {\hat{\theta }}_T\) almost surely as \(n \rightarrow \infty\).

Proof

See Sect. 8.1. \(\square\)

4.3 Non-asymptotic bounds

The main result that bounds the computational error \(\left\| \theta _n - {\hat{\theta }}_T \right\| _2\) with explicit constants is given below.

Theorem 4.2

(Error bounds for batchTD) Assume (A1)–(A4). Set \(\gamma _n= \frac{c_0c}{(c+n)}\) such that \(c_0\in (0,\mu ((1+\beta )^2\varPhi _{\max }^4)^{-1}]\) and \(c_0 c > \frac{1}{\mu }\). Then, for any \(\delta >0\), we have

In the above, \(K_1(n)\) and \(K_2(n)\) are functions of order O(1), defined by (see Footnote 3):

Proof

See Sect. 8.2. \(\square\)
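The step-size conditions of Theorem 4.2 can be turned into a small helper. The sketch below is one admissible choice among many, under the assumption that \(\mu\) is known: it takes the largest \(c_0\) allowed by the theorem and sets \(c\) so that \(c_0 c \mu = \eta\) for a user-chosen \(\eta > 1\). The function name and the default `eta=2.0` are hypothetical.

```python
def step_size_schedule(mu, beta, phi_max, eta=2.0):
    """Return (c0, c, gamma) with gamma(n) = c0 * c / (c + n).

    c0 is the largest value in (0, mu / ((1 + beta)^2 * phi_max^4)],
    and c is chosen so that c0 * c * mu == eta > 1.
    """
    if mu <= 0 or eta <= 1:
        raise ValueError("need mu > 0 and eta > 1")
    c0 = mu / ((1.0 + beta) ** 2 * phi_max ** 4)
    c = eta / (c0 * mu)

    def gamma(n):
        return c0 * c / (c + n)

    return c0, c, gamma
```

For example, `step_size_schedule(0.5, 0.5, 1.0)` gives \(c_0 \approx 0.222\) and \(c = 18\). The dependence on \(\mu\) is explicit here, which is the practical drawback that the regularized and iterate-averaged variants aim to remove.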

A few remarks are in order.

Remark 2

(Initial versus sampling error) The bound in expectation above can be re-written as

The first term on the RHS above is the initial error, while the second term is the sampling error. The initial error depends on the initial point \(\theta _0\) of the algorithm. The sampling error arises out of a martingale difference sequence that depends on the random deviation of the stochastic update from the standard fixed point iteration. From (15), it is evident that the initial error is forgotten at the rate \(O\left( \dfrac{1}{n^{c_0 c\mu /2}} \right)\). Since \(c_0 c \mu >1\), the former rate is faster than the rate \(O(1/\sqrt{n})\) at which the sampling error decays.

Remark 3

(Rate dependence on the minimum eigenvalue \(\mu\)) We note that setting c such that \(c_0 c \mu = \eta \in (1,\infty )\) we can rewrite the constants in Theorem 4.2 as:

So both the bounds in expectation and high probability have a linear dependence on the reciprocal of \(\mu\). Note also that the constant \((R_{\max }+(1+\beta )H\varPhi _{\max }^2)\) is nothing more than a bound on the size of the random innovations made by the algorithm at each time step.

Remark 4

(Eigenvalue dependence on \(\beta\)) Notice that the eigenvalue \(\mu\) is implicitly dependent on \(\beta\):

Clearly, as \(\beta\) increases, it is harder to satisfy the assumption that \(\mu > 0\). Moreover, for pathwise LSTD (see Sect. 3.1), the inequality in (11) underlines an implicit linear dependence of the rates on the reciprocal of \((1 - \beta )\). However, the bounds’ exact sensitivity to this reciprocal is data-dependent.

Remark 5

(Regularization) To obtain the best performance from the batchTD algorithm, we need to know the value of \(\mu\). However, we can get rid of this dependency easily by explicitly regularizing the problem. In other words, instead of the LSTD solution (7), we obtain the following regularized variant:

where \(\mu\) is now a constant set in advance. The update rule for this variant is

This algorithm retains all the properties of the non-regularized batchTD algorithm, except that it converges to the solution of (16) rather than to that of (7). In particular, the conclusions of Theorem 4.2 hold without requiring assumption (A1), but measuring \(||\theta _n-{\hat{\theta }}_T^{reg}||_2\), the error to the regularized fixed point \({\hat{\theta }}_T^{reg}\).

Remark 6

(Computational complexity) Our theoretical results in Theorem 4.2 show that, with probability \(1-\delta\), batchTD constructs an \(\epsilon\)-approximation of the pathwise LSTD solution with \(O(d\ln (1/\delta )/\epsilon ^2)\) complexity. In other words, for the batchTD estimate to be within a distance \(\epsilon >0\) of the LSTD solution, the number of iterations of (10) would be proportional to \(\frac{\ln (1/\delta )}{\epsilon ^2}\). This observation, coupled with the fact that each iteration of (10) is of order O(d), gives the stated overall complexity and establishes the advantage of batchTD over pathwise LSTD from a time-complexity viewpoint.

However, batchTD requires storing the entire dataset for the purpose of random sampling. To reduce the storage requirement of batchTD, one could use mini-batching of the dataset, i.e., store smaller subsets of the dataset and run batchTD updates on these mini-batches. It is an interesting direction for future work to analyze such an approach and recommend appropriate mini-batch sizes based on the parameters of the underlying policy evaluation problem. For the case of regression, such an approach has been recommended in earlier works, cf. Roux et al. (2012).

Remark 7

(TD with linear function approximation) One could use arguments completely parallel to those in the proof of Theorem 4.2 to obtain rate results for TD(0) with linear function approximation under i.i.d. samples. A similar observation holds for the bounds presented below for the projection-free variant of batchTD in Theorem 4.4, and for the iterate-averaged variant of batchTD in Theorem 5.1.

The bounds for TD with linear function approximation under i.i.d. sampling would be a side benefit, while the primary message from our work is that one could run TD(0) on a batch, and obtain a computational advantage, with performance comparable to that of LSTD. We have used pathwise LSTD to drive home this point.

Finally, note that the regular TD with linear function approximation is under non i.i.d. sampling (or involving a Markov noise component), and deriving non-asymptotic bounds for such a setting is beyond the scope of this paper.

4.4 Projection-free variant of the batchTD algorithm

Here we consider a projection-free variant of batchTD that updates according to (10), but with \(\varUpsilon (\theta ) = \theta ,\ \forall \theta \in {\mathbb {R}}^d\). We now present the results for batchTD without a non-trivial projection, under assumptions similar to the projected variant of batchTD, i.e., bounded rewards, features, and a positive lower bound on the minimum eigenvalue \(\mu\) of the symmetric part of \({\bar{A}}_T\). The results include asymptotic convergence and a bound in expectation on the error \(\Vert \theta _n - {\hat{\theta }}_T\Vert _2\). However, we are unable to derive bounds in high probability without having the iterates explicitly bounded using \(\varUpsilon\), and it would be an interesting future research direction to get rid of this operator for the bounds in high probability.

Theorem 4.3

Assume (A1)–(A3), and also that the step sizes \(\gamma _n \in {\mathbb {R}}_+\) satisfy \(\sum _{n}\gamma _n = \infty\), and \(\sum _n\gamma _n^2 <\infty\). Then, for the iterate \(\theta _n\) updated according to (10) without projection (i.e., \(\varUpsilon\) is the identity map), we have \(\theta _n \rightarrow {\hat{\theta }}_T\) almost surely as \(n \rightarrow \infty\).

Proof

See Sect. 8.1. \(\square\)

Using a slightly different proof technique, we are able to give a bound in expectation for the error of the non-projected batchTD, in the result below.

Theorem 4.4

(Expectation error bound for batchTD without projection) Assume (A1)–(A3). Set \(\gamma _n= \frac{c_0c}{(c+n)}\) such that \(c_0\in (0,\mu ((1+\beta )^2\varPhi _{\max }^4)^{-1}]\) and \(c_0 c \mu \in (1,\infty )\). Then, we have

where \(K_1(n)\) is a function of order O(1), defined by:

Proof

See Sect. 8.3. \(\square\)

4.5 Performance bound

We can combine our error bounds above with the performance bound derived by Lazaric et al. (2012) for pathwise LSTD. The theorem below shows that using batchTD in place of pathwise LSTD does not impact the overall convergence rate.

Theorem 4.5

(Performance bound) Let \({\tilde{v}}_n \triangleq \varPhi \theta _n\) denote the approximate value function obtained after n steps of batchTD, and let v denote the true value function, evaluated at the states \(s_1, \ldots , s_T\) along the sample path. Then, under the assumptions (A1)–(A4), with probability \(1-2\delta\) (taken w.r.t. the random path sampled from the MDP, and the randomization in batchTD), we have

where \(\Vert f \Vert ^2_T \triangleq \dfrac{1}{T}\sum \limits _{i=1}^{T} f(s_i)^2\), for any function f and \(\mu '\) is the minimum eigenvalue of \(\dfrac{1}{T}\varPhi ^\textsf {T}\varPhi\) (see also (11)).

Proof

The result follows by combining Theorem 4.2 above with Theorem 1 of Lazaric et al. (2012) using a triangle inequality. \(\square\)

Remark 8

The approximation and estimation errors (first and second terms in the RHS of (20)) are artifacts of function approximation and least squares methods, respectively. The third term is a consequence of using batchTD in place of the LSTD. Setting \(n=T\) in the above theorem, we observe that using our scheme in place of LSTD does not impact the rate of convergence of the approximate value function \({\tilde{v}}_n\) to the true value function v. Further, the performance bound in Theorem 4.5, considering only the dimension d, minimum eigenvalue \(\mu\) and sample size T, is of the order \(O\left( \frac{\sqrt{d}}{\mu \sqrt{T}}\right)\), which is better than the order \(O\left( \frac{d}{\mu T^{1/4}}\right)\) on-policy performance bound for GTD/GTD2 in Proposition 4 of Liu et al. (2015).

Remark 9

(Generalization bounds) While Theorem 4.5 holds for only states along the sample path \(s_1,\ldots , s_T\), it is possible to generalize the result to hold for states outside the sample path. This approach has been adopted by Lazaric et al. (2012) for regular LSTD, and the authors there provide performance bounds over the entire state space assuming a stationary distribution exists for the given policy \(\pi\), and the underlying Markov chain is mixing fast (see Lemma 4 by Lazaric et al. (2012)). In the light of the result in Theorem 4.5 above, it is straightforward to provide generalization bounds similar to Theorems 5 and 6 of Lazaric et al. (2012) for batchTD as well, and the resulting rates from these generalization bound variants for batchTD are the same as that for regular LSTD. We omit these obvious generalizations, and refer the reader to Section 5 of Lazaric et al. (2012) for further details.

5 Iterate averaging

Iterate averaging is a popular approach for which it is not necessary to know the value of the constant \(\mu\) (see (A1) in Sect. 4) to obtain the (optimal) approximation error of order \(O(n^{-1/2})\). Introduced independently by Ruppert (1991) and Polyak and Juditsky (1992), the idea here is to use a larger step-size \(\gamma _n \triangleq c_0\left( c/(c+n)\right) ^\alpha\), and then use the averaged iterate, defined as follows:

where \(\theta _n\) is the iterate of the batchTD algorithm, presented earlier. The following result bounds the distance of the averaged iterate to the LSTD solution.

Theorem 5.1

(Error Bound for iterate averaged batchTD) Assume (A1)–(A4). Set \(\gamma _n= c_0\left( \frac{c}{c+n}\right) ^\alpha\), with \(\alpha \in (1/2,1)\) and \(c,c_0>0\). Then, for any \(\delta >0\), and any \(n>n_0\triangleq \max \left\{ \left\lfloor \left( \left( \frac{2c_0(1+\beta ^2)\varPhi _{\max }^4}{\mu }\right) ^{1/\alpha } - 1\right) c\right\rfloor ,0\right\}\), we have

where

Proof

The proofs of both the high probability bound and the bound in expectation proceed by splitting the analysis into the error before and after \(n_0\). The individual terms in the definition of \(K_2^{IA}(n)\) can be classified based on whether they are bounding the error before or after \(n_0\). In particular, the term labelled (E4) in the definition of \(K_2^{IA}(n)\) is a bound on the error before \(n_0\), while the terms collected under (E3) are a bound on the error after \(n_0\).

While the proof of the bound in expectation involves splitting the analysis before and after \(n_0\), the resulting bound via \(K_1^{IA}(n)\) does not have a clear split into additive terms that directly correspond to before or after \(n_0\). However, from the proof presented later, it is apparent that \(C_1\) arises out of a bound on the initial error before \(n_0\), the term involving the factor labelled (E1) in the definition of \(K_1^{IA}(n)\) arises out of a bound on the sampling error before \(n_0\). Further, \(C_0\) arises out of a bound on the initial error after \(n_0\), and the term labelled (E2) in \(K_1^{IA}(n)\) is used to bound the sampling error after \(n_0\).

For a detailed proof, the reader is referred to Sect. 8.4. \(\square\)
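The averaging scheme analysed in Theorem 5.1 can be maintained online at O(d) cost per iteration. The sketch below is illustrative: the exact indexing of the averaged iterate (for instance, whether \(\theta _0\) is included) is not visible in this excerpt, so the code simply averages whatever iterates it is given. Both function names are hypothetical.

```python
def averaged_iterates(iterates):
    """Yield the running mean of the iterates seen so far.

    Uses the incremental form avg_k = avg_{k-1} + (theta_k - avg_{k-1}) / k,
    so no past iterate needs to be stored.
    """
    avg = None
    for k, theta in enumerate(iterates, start=1):
        if avg is None:
            avg = list(theta)
        else:
            avg = [a + (t - a) / k for a, t in zip(avg, theta)]
        yield list(avg)

def averaging_step_size(n, c0, c, alpha):
    """gamma_n = c0 * (c / (c + n))**alpha, with alpha in (1/2, 1)."""
    if not 0.5 < alpha < 1.0:
        raise ValueError("alpha must lie in (1/2, 1)")
    return c0 * (c / (c + n)) ** alpha
```

Because \(\alpha < 1\), this step size decays more slowly than \(c_0 c/(c+n)\), and its choice does not involve \(\mu\).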

A few remarks are in order.

Remark 10

(Explicit constants) Unlike Fathi and Frikha (2013), where the authors provide concentration bounds for general stochastic approximation schemes, our results provide an explicit \(n_0\), after which the error of iterate averaged batchTD is nearly of the order O(1/n).

Remark 11

(Rate dependence on eigenvalue) From the bounds in Theorem 5.1, it is evident that the dependency on the knowledge of \(\mu\) for the choice of c can be removed through averaging of the iterates, while obtaining a rate that is close to \(1/\sqrt{n}\). In particular, iterate averaging results in a rate that is of the order \(O\left( 1/n^{\alpha /2}\right)\), where the exponent \(\alpha\) has to be chosen strictly less than 1. Setting \(\alpha =1\) causes the constant \(C_0\) as well as \(K_1^{IA}(n), K_2^{IA}(n)\) to blow up and hence, there is a loss of \((1-\alpha )/2\) in the rate exponent, when compared to non-averaged batchTD. However, unlike the latter, iterate averaged batchTD does not need the knowledge of \(\mu\) in setting the step size \(\gamma _n\).

Remark 12

(Decay rate of initial error) The bound in expectation in Theorem 5.1 can be re-written as follows:

Thus, the initial error is forgotten at the rate O(1/n), and this is slower than the corresponding rate obtained for the case of non-averaged batchTD (see Remark 2). Hence, as suggested by earlier works on stochastic approximation (cf. Fathi and Frikha 2013), it is preferred to average after a few iterations since the initial error is not forgotten faster than the sampling error with averaging.

Remark 13

(Computational cost vs. accuracy) Let \(\epsilon , \delta > 0\). Then, the number of iterations n required to achieve an accuracy \(\epsilon\), i.e., \(\left\| {\bar{\theta }}_n - {\hat{\theta }}_T \right\| _2\le \epsilon\) with probability \(1-\delta\), is of the order \(O\left( \frac{1}{\epsilon ^{2/\alpha }} \log \left( \frac{1}{\delta } \right) \right)\). On the other hand, the corresponding number of iterations for the non-averaged case (see Theorem 4.2) is \(O\left( \frac{1}{\epsilon ^{2}} \log \left( \frac{1}{\delta } \right) \right)\).
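To get a feel for the gap between the two orders in Remark 13, the following sketch evaluates both expressions with the hidden constant set to one. The constant is a placeholder and not a value from the paper, so only the ratio between the two counts is meaningful. The function name is hypothetical.

```python
import math

def iterations_needed(eps, delta, alpha=None, const=1.0):
    """Order-of-magnitude iteration count for accuracy eps w.p. 1 - delta.

    alpha=None  -> non-averaged batchTD: eps^(-2)       * log(1/delta)
    alpha given -> iterate averaging:    eps^(-2/alpha) * log(1/delta)
    `const` stands in for the problem-dependent constant (assumed 1 here).
    """
    exponent = 2.0 if alpha is None else 2.0 / alpha
    return const * eps ** (-exponent) * math.log(1.0 / delta)

plain = iterations_needed(0.1, 0.05)
averaged = iterations_needed(0.1, 0.05, alpha=0.75)
ratio = averaged / plain   # equals eps^(2 - 2/alpha), independent of delta
```

The ratio grows as \(\epsilon\) shrinks or as \(\alpha\) moves away from 1, which is the price paid for not needing \(\mu\) in the step size.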

6 Recent works: a comparison

Non-asymptotic bounds for TD(0) with linear function approximation are derived in three recent works; see Dalal et al. (2018); Bhandari et al. (2018); Lakshminarayanan and Szepesvari (2018). In Dalal et al. (2018); Lakshminarayanan and Szepesvari (2018), the authors consider the i.i.d. sampling case, while Bhandari et al. (2018) provide bounds in the i.i.d. as well as the more general Markov noise settings. As noted earlier in Remark 7, our analysis could be re-used to derive bounds for TD with linear function approximation in the i.i.d. sampling scenario, while the case of Markov noise is not handled by us. This observation justifies a comparison of the bounds that we derive for batchTD to those in the aforementioned references for TD under i.i.d. sampling, and we provide this comparison below.

In comparison to the references Bhandari et al. (2018) and Lakshminarayanan and Szepesvari (2018), we would like to point out that we derive non-asymptotic bounds that hold with high probability, in addition to bounds that hold in expectation. The aforementioned references provide bounds that hold in expectation only.

The bound in expectation that we derived in Theorem 4.2 matches the bound derived in Bhandari et al. (2018), up to constants. Note that our result in Theorem 4.2, as well as those in (Bhandari et al. 2018) are for the projected variant of TD(0). In addition, we also provide a bound in expectation in Theorem 4.4 for the projection-free variant of TD(0).

Continuing the comparison with Bhandari et al. (2018), the bounds in their work require the knowledge of the minimum eigenvalue \(\mu\), which is unknown in a typical RL setting. We get rid of this problematic eigenvalue dependence through iterate averaging, while obtaining a nearly optimal rate of the order \(O\left( n^{-\alpha /2}\right)\), where \(\frac{1}{2}< \alpha <1\).

The bounds by Dalal et al. (2018) are for TD(0) with linear function approximation under the i.i.d. sampling case, allowing a comparison of bounds for batchTD with their results. The bound in expectation on the error \(\left\| \theta _n - \theta ^*\right\| _2\) in Theorem 3.1 of Dalal et al. (2018) is \(O(\frac{1}{n^\sigma })\), where \(0<\sigma <\frac{1}{2}\). Here \(\theta _n\) is the TD(0) iterate, and \(\theta ^{*}\) is the TD fixed point. In contrast, the bound we obtain in Theorem 4.4 is \(O(\frac{1}{\sqrt{n}})\). Both results are for the projection-free variant. However, our bound involves a stepsize that requires the knowledge of \(\mu\) (see (A1)), while their stepsize is \(\varTheta (\frac{1}{n^{2\sigma }})\). Our results for the iterate-averaged variant in Theorem 5.1 get rid of this stepsize dependence, and the rate we obtain for this variant is comparable to that in Theorem 3.1 of Dalal et al. (2018).

Continuing the comparison with Dalal et al. (2018), we first note that the high-probability bound in Theorem 4.2 in our work, which is for the case when \(\mu\) is known, has a rate of order \(O\left( \frac{1}{\sqrt{n}}\right)\), while the iterate averaged variant in Theorem 5.1 exhibits a rate \(O\left( \frac{1}{n^{\alpha /2}}\right)\), where \(\frac{1}{2}< \alpha < 1\). On the other hand, the rate from the bounds in Theorem 3.6 of Dalal et al. (2018) is limited by a problem-dependent parameter \(\lambda\) that is below the minimum eigenvalue (which is \(\mu\) in our notation). Further, our high probability bound in Theorem 4.2 applies for all n, while that in Theorem 5.1 is for all \(n\ge n_0\), with \(n_0\) explicitly specified (as a function of the underlying parameters). In contrast, the bound in Theorem 3.6 of Dalal et al. (2018) applies to sufficiently large n, where the threshold beyond which the bound applies is not explicitly specified. Finally, we project the iterates to keep them bounded, while the bounds by Dalal et al. (2018) do not involve a projection operator. Note that we require projection for the high-probability bounds, while we derive a bound in expectation for the projection-free variant (see Theorem 4.4).

In Lakshminarayanan and Szepesvari (2018), the authors derive non-asymptotic bounds in expectation, which could be applied for TD(0) with linear function approximation, or even to our batchTD algorithm. Lakshminarayanan and Szepesvari (2018) derive lower bounds, while we focus on Theorem 1, which contains the upper bound. Our bound in expectation in Theorem 4.2 is comparable to that in Theorem 1 there, since the overall rate is \(O(\frac{1}{\sqrt{n}})\) in either case, and both results assume knowledge about underlying dynamics (through the minimum eigenvalue \(\mu\) in our case, while through a certain distribution constant for setting the stepsize there). Further, unlike Lakshminarayanan and Szepesvari (2018), we derive bounds for the iterate-averaged variant, which gets rid of the problematic stepsize dependence, at a compromise in the rate, which turns out to be \(O(\frac{1}{n^{\alpha }})\), with \(\alpha < \frac{1}{2}\).

7 Fast LSPI using batchTD (fLSPI)

LSPI (Lagoudakis and Parr 2003) is a well-known algorithm for control based on the policy iteration procedure for MDPs. We propose a computationally efficient variant of LSPI, which we shall henceforth refer to as fLSPI. The latter algorithm works by substituting the regular LSTDQ with batchTDQ—an algorithm that is quite similar to batchTD described earlier. We first briefly describe the LSPI algorithm and later provide a detailed description of fLSPI.

7.1 Background for LSPI

We are given a set of samples \({\mathcal {D}}\triangleq \{(s_i,a_i,r_i,s'_{i}),i=1,\ldots ,T\}\), where each sample i denotes a one-step transition of the MDP from state \(s_i\) to \(s'_i\) under action \(a_i\), while resulting in a reward \(r_i\). The objective is to find an approximately optimal policy using this set. This is in contrast with the goal of LSTD, which aims to approximate the state-value function of a particular policy (see Sect. 3.1).

For a given stationary policy \(\pi\), the Q-value function \(Q^\pi (s,a)\) for any state \(s\in {\mathcal {S}}\) and action \(a\in {\mathcal {A}}({\mathcal {S}})\) is defined as follows:

In the above, the initial state s and the action a in s are fixed, and thereafter the actions taken are governed by the policy \(\pi\). This function can be thought of as the value function for a policy \(\pi\) in state s, given that the first action taken is the action a. As before, we parameterize the Q-value function using a linear function approximation architecture,

where \(\phi (s,a)\) is a d-dimensional feature vector corresponding to the tuple (s, a) and \(\theta\) is a tunable policy parameter.

LSPI is built in the spirit of policy iteration algorithms. These perform policy evaluation and policy improvement in tandem. For the purpose of policy evaluation, LSPI uses an LSTD-like algorithm called LSTDQ, which learns an approximation to the Q- (state-action value) function. It does this for any policy \(\pi\), by solving the linear system

As in the case of LSTD, the above can be seen as approximately solving a system of equations similar to (6), but in this case for the Q-value function. The pathwise LSTDQ variant is obtained by forming the dataset \({\mathcal {D}}\) from a sample path of the underlying MDP for a given policy \(\pi\), and also zeroing out the feature vector of the next state-action tuple in the last sample of the dataset.

The policy improvement step uses the approximate Q-value function to derive a greedily updated policy as follows:

Since this policy is provably better than \(\pi\), iterating this procedure allows LSPI to find an approximately optimal policy.
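The greedy improvement step can be sketched as follows, assuming the linear parameterization \(Q(s,a) \approx \theta ^\textsf {T}\phi (s,a)\) described above. The names `greedy_policy`, `phi_sa`, and `actions` are hypothetical, and ties are broken in favour of the first maximizing action, a detail the text does not specify.

```python
def greedy_policy(theta, phi_sa, actions):
    """Return the policy s -> argmax_a theta^T phi(s, a).

    phi_sa(s, a) gives the d-dimensional feature vector of the pair (s, a);
    actions(s) lists the actions available in state s.
    """
    def q_value(s, a):
        return sum(t * x for t, x in zip(theta, phi_sa(s, a)))

    def policy(s):
        return max(actions(s), key=lambda a: q_value(s, a))

    return policy
```

Evaluating the returned policy costs one inner product per available action, so the improvement step never needs an explicit table over states.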

7.2 fLSPI algorithm

The fLSPI algorithm works by substituting the regular LSTDQ with its computationally efficient variant batchTDQ. The overall structure of fLSPI is given in Algorithm 2.

For a given policy \(\pi\), batchTDQ approximates LSTDQ solution (26) by an iterative update scheme as follows (starting with an arbitrary \(\theta _0\)):

From Sect. 2.2, it is evident that the claims in Proposition 8.1 and Theorem 4.2 hold for the above scheme as well.
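Neither the batchTDQ update nor Algorithm 2 is reproduced in this excerpt, so the following is only a plausible sketch of how the pieces might fit together. It assumes that batchTDQ is the TD(0) update applied to state-action features with the next action chosen by the policy under evaluation, and that each evaluation is warm-started from the previous parameter. Projection is omitted for brevity. All names are hypothetical.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def batch_tdq(dataset, phi_sa, policy, beta, c0, c, n_iters, theta0, seed=0):
    """TD(0)-style evaluation of `policy` on resampled (s, a, r, s_next)."""
    rng = random.Random(seed)
    theta = list(theta0)
    for n in range(1, n_iters + 1):
        s, a, r, s_next = rng.choice(dataset)        # uniform sampling
        f = phi_sa(s, a)
        f_next = phi_sa(s_next, policy(s_next))      # next action from policy
        td_error = r + beta * dot(theta, f_next) - dot(theta, f)
        gamma = c0 * c / (c + n)
        theta = [t + gamma * td_error * x for t, x in zip(theta, f)]
    return theta

def flspi(dataset, phi_sa, actions, beta, c0, c, n_iters, d, n_policy_iters=5):
    """Alternate batchTDQ evaluation with greedy improvement (a sketch)."""
    theta = [0.0] * d
    for _ in range(n_policy_iters):
        frozen = list(theta)                         # policy uses old theta

        def policy(s, w=frozen):
            return max(actions(s), key=lambda a: dot(w, phi_sa(s, a)))

        theta = batch_tdq(dataset, phi_sa, policy, beta, c0, c, n_iters, theta)
    return theta
```

On a one-state problem with two actions (reward 0 for action 0, reward 1 for action 1, \(\beta = 0.5\), one-hot features), this sketch settles near \(\theta = (1, 2)\), so that the greedy policy selects action 1.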

Remark 14

Error bounds for fLSPI can be derived along the lines of those for regular on-policy LSPI by Lazaric et al. (2012), and we omit the details.

8 Convergence proofs

Let \({\mathcal {F}}_n\) denote the \(\sigma\)-field generated by \(\theta _0,\ldots ,\theta _n\), \(n\ge 0\). Let

Recall that we denote the current state feature \((T\times d)\)-matrix by \(\varPhi \triangleq (\phi (s_1)^\textsf {T},\dots , \phi (s_T)^\textsf {T})\), the next state feature \((T\times d)\)-matrix by \(\varPhi ' \triangleq (\phi (s_1')^\textsf {T},\dots , \phi (s_T')^\textsf {T})\), and the reward \((T\times 1)\)-vector by \(\mathcal{R} = (r_1,\dots ,r_T)^\textsf {T}\). Recall also that the LSTD solution is given by

Finally we note also that the pathwise LSTD solution has the same form as above, except that \(\varPhi ' \triangleq {\hat{P}} \varPhi = (\phi (s_1')^\textsf {T},\dots , \phi (s_{T-1}')^\textsf {T},\mathbf {0}^\textsf {T})\), where \(\mathbf {0}\) is the \(d\times 1\)-zero-vector.

8.1 Proof of asymptotic convergence

Proof of Theorem 4.3 (batchTD without projection):

Proof

We first rewrite (10) as follows:

where \(\varDelta M_n = f_n(\theta _{n-1}) - {\mathbb {E}}(f_n(\theta _{n-1})\mid {\mathcal {F}}_{n-1})\) is a martingale difference sequence, with \(f_n(\cdot )\) as defined in (28).

The ODE associated with (29) is \({\dot{\theta }}(t) = q(\theta (t))\).

In the above, \(q(\theta (t)) \triangleq -{\bar{A}}_T \theta (t) + \bar{b}_T\).

To show that \(\theta _n\) converges a.s. to \({\hat{\theta }}_T\), one requires that the iterate \(\theta _n\) remains bounded a.s. Both boundedness and convergence can be inferred from Theorems 2.1–2.2(i) of Borkar and Meyn (2000), provided we verify assumptions (A1)–(A2) there. These assumptions are as follows: (a1) The function q is Lipschitz. For any \(\eta \in {\mathbb {R}}\), define \(q_{\eta }(\theta ) = q(\eta \theta )/\eta\). Then, there exists a continuous function \(q_\infty\) such that \(q_{\eta } \rightarrow q_{\infty }\) as \(\eta \rightarrow \infty\) uniformly on compact sets. Furthermore, the origin is a globally asymptotically stable equilibrium for the ODE \({\dot{\theta }}(t) = q_{\infty }(\theta (t))\).

(a2) The martingale difference \(\{\varDelta M_{n}, n\ge 1\}\) is square-integrable with

for some \(C_0 < \infty\).

We now verify (a1) and (a2) in our context. Notice that \(q_\eta (\theta )\triangleq -{\bar{A}}_T \theta + {\bar{b}}_T/\eta\) converges to \(q_{\infty }(\theta (t))=-{\bar{A}}_T \theta (t)\) as \(\eta \rightarrow \infty\). Since the matrix \({\bar{A}}_T\) is positive definite by (A1), the aforementioned ODE has the origin as its globally asymptotically stable equilibrium. This verifies (a1).

For verifying (a2), notice that

The first inequality follows from the fact that for any scalar random variable Y, \({\mathbb {E}}\left( Y - {\mathbb {E}}\left[ Y\mid {\mathcal {F}}_n\right] \right) ^2 \le {\mathbb {E}}Y^2\), while the second inequality follows from (A2) and (A3). The claim follows. \(\square\)

Proof of Theorem 4.1 (batchTD with projection):

Proof

We first rewrite (10) as follows:

where \(\varDelta M_n\), \({\mathcal {F}}_n\) and \(f_n(\theta )\) are as defined in (28).

From (A3) and the fact that the iterate \(\theta _n\) is projected onto a compact and convex set \({\mathcal {C}}\), it is easy to see that the norm of the martingale difference \(\varDelta M_n\) is upper bounded by \(2\left( R_{\max }\varPhi _{\max }+(1+\beta )H\varPhi _{\max }^2\right)\). Thus, (32) can be seen as a discretization of the ODE

where \(\check{\varUpsilon }(\theta ) = \lim _{\tau \rightarrow 0} \left[ \left( \varUpsilon \left( \theta + \tau f(\theta )\right) - \theta \right) /\tau \right]\) , for any bounded continuous f. The operator \(\check{\varUpsilon }\) ensures that \(\theta\) governed by (33) evolves within the set \({\mathcal {C}}\) that contains \(\hat{\theta }_T\). As in the proof of Lemma 4.1 by Yu (2015), we have

where the inequality follows from (A1). From the foregoing, we have that \(\left\| {\hat{\theta }}_T \right\| _2\le \frac{\left\| {\bar{b}}_T\right\| _2}{\mu } < H \Rightarrow {\hat{\theta }}_T \in {\mathcal {C}}\). Following similar arguments as before, it can be inferred that at any boundary point \(\theta\) of \({\mathcal {C}}\), \(\langle \theta , -{\bar{A}}_T \theta + {\bar{b}}_T \rangle < 0\), and hence the ODE (33) has \({\hat{\theta }}_T\) as its globally asymptotically stable equilibrium. The claim now follows from Theorem 2 in Chapter 2 of Borkar (2008) (or even Theorem 5.3.1 on pp. 191–196 of Kushner and Clark (1978)). \(\square\)

8.2 Proofs of finite-time error bounds for batchTD

To obtain high probability bounds on the computational error \(\Vert \theta _n - {\hat{\theta }}_T \Vert _2\), we consider separately the deviation of this error from its mean (see (34) below), and the size of its mean itself (see (35) below). In this way the first quantity can be directly decomposed as a sum of martingale differences, and then a standard martingale concentration argument applied, while the second quantity can be analyzed by unrolling iteration (10).

Proposition 8.1 below gives these results for general step sequences. The proof involves two martingale analyses, which also form the template for the proofs for the least squares regression extension (see Sect. 10), and the iterate averaged variant of batchTD (see Theorem 5.1).

After proving the results for general step sequences, we give the proof of Theorem 4.2, which gives explicit rates of convergence of the computational error in high probability for a specific choice of step sizes.

Proposition 8.1

Let \(z_n = \theta _n - {\hat{\theta }}_T\), where \(\theta _n\) is given by (10). Under (A1)–(A4), we have \(\forall \epsilon > 0\),

- (1) a bound in high probability for the centered error:

$$\begin{aligned} {\mathbb {P}}\left( \left\| z_n \right\| _2- {\mathbb {E}}\left\| z_n \right\| _2\ge \epsilon \right) \le \exp \left( - \dfrac{\epsilon ^2}{4 \left( R_{\max }+(1+\beta )H\varPhi _{\max }^2\right) ^2\sum \limits _{k=1}^{n} L^2_k}\right) , \end{aligned}$$(34)

where \(L_k \triangleq \gamma _k \prod _{j=k+1}^{n} (1 - \gamma _{j} (2\mu - \gamma _{j}(1+\beta )^2\varPhi _{\max }^4))^{1/2}\),

(2) and a bound in expectation for the non-centered error:

$$\begin{aligned}&{\mathbb {E}}\left( \left\| z_n\right\| _2\right) ^2 \le \underbrace{\left[ \prod _{k = 1}^n \left( 1 - \gamma _k(2\mu - \gamma _k(1+\beta )^2\varPhi _{\max }^4)\right) \left\| z_0\right\| _2\right] ^2}_{\textbf{initial error}}\nonumber \\&\quad + \underbrace{4\sum _{k=1}^{n}\gamma _k^2 \left[ \prod _{j = k}^{n-1} \left( 1 - \gamma _j(2\mu - \gamma _j(1+\beta )^2\varPhi _{\max }^4)\right) \right] ^2}_{\textbf{sampling error}} \left( R_{\max }+(1+\beta ) H \varPhi _{\max }^2\right) ^2. \end{aligned}$$(35)

As mentioned earlier, the initial error relates to the starting point \(\theta _0\) of the algorithm, while the sampling error arises out of a martingale difference sequence (see Step 1 in Sect. 8.2.2 below for a precise definition).

We establish later, in Sect. 8.2.3, that under a suitable choice of step sizes, the initial error is forgotten faster than the sampling error.
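To get a feel for the relative size of the two terms, the right-hand side of (35) can be evaluated numerically for any step sequence. The sketch below transcribes the two terms literally; the function name and the parameter values in the usage lines are hypothetical and chosen only for illustration.

```python
def expectation_bound_terms(gammas, mu, beta, phi_max, r_max, H, z0_norm):
    """Return (initial_error, sampling_error) as written on the right-hand side of (35).

    gammas[k-1] holds the step size gamma_k for k = 1, ..., n.
    """
    C = (1.0 + beta) ** 2 * phi_max ** 4
    B = r_max + (1.0 + beta) * H * phi_max ** 2
    n = len(gammas)
    # factors[k-1] = 1 - gamma_k * (2 mu - gamma_k (1 + beta)^2 phi_max^4)
    factors = [1.0 - g * (2.0 * mu - g * C) for g in gammas]

    # Initial error: [prod_{k=1}^n factor_k * ||z_0||]^2
    initial = z0_norm
    for f in factors:
        initial *= f
    initial = initial ** 2

    # Sampling error: 4 * sum_k gamma_k^2 [prod_{j=k}^{n-1} factor_j]^2 * B^2
    sampling = 0.0
    suffix = 1.0                      # empty product for k = n
    for k in range(n, 0, -1):
        if k < n:
            suffix *= factors[k - 1]  # now suffix = prod_{j=k}^{n-1} factor_j
        sampling += gammas[k - 1] ** 2 * suffix ** 2
    sampling *= 4.0 * B ** 2
    return initial, sampling


# Usage with hypothetical constants and the step size gamma_k = c0 * c / (c + k).
c0, c = 0.2, 20.0
gammas = [c0 * c / (c + k) for k in range(1, 1001)]
initial, sampling = expectation_bound_terms(
    gammas, mu=0.5, beta=0.5, phi_max=1.0, r_max=1.0, H=1.0, z0_norm=1.0
)
print("initial error term:", initial)
print("sampling error term:", sampling)
```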

We claim that the terms of the form \(1 - \gamma _j(2\mu - \gamma _j \varPhi _{\max }^4(1 + \beta )^2)\), which go into a product in the Lipschitz constant \(L_i\) as well as in the initial/sampling error terms of the expectation bound, are positive. This claim can be seen as follows:

where the inequality above follows from the fact that \(\mu \le (1+\beta )\varPhi _{\max }^2\).
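One way to verify the claim is by completing the square. The following is a sketch that uses only the stated fact \(\mu \le (1+\beta )\varPhi _{\max }^2\); it is not necessarily the exact chain of steps used in (36):

$$\begin{aligned} 1 - \gamma _j\left( 2\mu - \gamma _j \varPhi _{\max }^4(1 + \beta )^2\right)&= 1 - 2\gamma _j\mu + \gamma _j^2(1+\beta )^2\varPhi _{\max }^4 \\&\ge 1 - 2\gamma _j(1+\beta )\varPhi _{\max }^2 + \gamma _j^2(1+\beta )^2\varPhi _{\max }^4 = \left( 1 - \gamma _j(1+\beta )\varPhi _{\max }^2\right) ^2 \ge 0. \end{aligned}$$

The first inequality is strict whenever \(\mu < (1+\beta )\varPhi _{\max }^2\), and the square vanishes only when \(\gamma _j(1+\beta )\varPhi _{\max }^2 = 1\), so the term is strictly positive outside the case where both hold with equality.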

In Sect. 8.2.3, to establish the rates of Theorem 4.2, we first prove that \(\sum _{i=1}^n L_i^2\) is an order 1/n term, and the claim of positivity of \(L_i\) is necessary for the aforementioned proof.

8.2.1 Proof of Proposition 8.1 part (1)

Proof

The proof gives a martingale analysis of the centered computational error. It proceeds in three steps:

Step 1 (Decomposition of error into a sum of martingale differences)

Recall that \(z_n\triangleq \theta _n - {\hat{\theta }}_T\). We rewrite \(\left\| z_n \right\| _2- {\mathbb {E}}\left\| z_n \right\| _2\) as follows:

where \(g_k \triangleq {\mathbb {E}}[\left\| z_n\right\| _2\left| {\mathcal {F}}_k \right. ]\), \(D_k \triangleq g_k - {\mathbb {E}}[ g_k \left| {\mathcal {F}}_{k-1} \right. ]\), and \({\mathcal {F}}_k\) denotes the \(\sigma\)-field generated by the random variables \(\{\theta _i,i\le k\}\) for \(k \ge 0\).
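The definitions above telescope. Spelled out as a sketch, under the assumption that \(\theta _0\) is deterministic (so that \(g_0 = {\mathbb {E}}\left\| z_n\right\| _2\)), and using that \(g_n = \left\| z_n\right\| _2\) since \(z_n\) is \({\mathcal {F}}_n\)-measurable and that \({\mathbb {E}}[g_k \mid {\mathcal {F}}_{k-1}] = g_{k-1}\) by the tower property:

$$\begin{aligned} \left\| z_n \right\| _2- {\mathbb {E}}\left\| z_n \right\| _2= g_n - g_0 = \sum _{k=1}^{n} \left( g_k - g_{k-1}\right) = \sum _{k=1}^{n} D_k. \end{aligned}$$

Each \(D_k\) has zero conditional mean given \({\mathcal {F}}_{k-1}\), which is what makes \((D_k)\) a martingale difference sequence.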

Recall that \(f_k(\theta ) \triangleq (\theta ^\textsf {T}\phi (s_{i_k}) - (r_{i_k} + \beta \theta ^\textsf {T}\phi (s_{i_{k}}') ))\phi (s_{i_k})\) denotes the random innovation at time k, given that \(\theta _{k-1} = \theta\).

Step 2 (Showing that \(g_k\) is a Lipschitz function of the random innovation \(f_k\)) (Footnote 4)

The next step is to show that the functions \(g_k\) are Lipschitz continuous in the random innovation at time k, with Lipschitz constants \(L_k\). It then follows immediately that the martingale difference \(D_k\) is a Lipschitz function of the \(k^{th}\) random innovation with the same Lipschitz constant, which is the property leveraged in Step 3 below. In order to obtain Lipschitz constants with no exponential dependence on the inverse of \((1-\beta )\mu\) we depart from the general scheme of Frikha and Menozzi (2012), and use our knowledge of the form of the random innovation \(f_k\) to eliminate the noise due to the rewards between time k and time n:

Let \(\varTheta _j^k(\theta )\) denote the value of the random iterate at instant j evolving according to (10) and beginning from the value \(\theta\) at time k.

First we note that as the projection, \(\varUpsilon\), is non-expansive,

Expanding the random innovation terms, we have

where \(a_{j}\triangleq [\phi (s_{i_{j}})\phi (s_{i_{j}})^\textsf {T}- \beta \phi (s_{i_{j}})\phi (s_{i_{j}}')^\textsf {T}]\). Note that

Recall that \(\varPhi ^\textsf {T}\triangleq (\phi (s_1),\dots ,\phi (s_T))\), and \({\varPhi '}^\textsf {T}\triangleq (\phi (s_1'),\dots ,\phi (s_T'))\). Let \(\varDelta \triangleq \mathrm {diag}( \left\| \phi (s_1) \right\| _2^2, \dots , \left\| \phi (s_{T}) \right\| _2^2 )\). Then, for any vector \(\theta\), we have

For the equality in (39), we have used that \(\sum _{k = 1}^T\phi (s_k)\phi (s_k)^\textsf {T}= \varPhi ^\textsf {T}\varPhi\), and similar identities. Further, the inequality in (40) can be inferred using the following fact:

where we have used assumption (A1) for the last inequality above. The last term in (40) follows from \(|\theta ^\textsf {T}{\varPhi '}^\textsf {T}\varDelta \varPhi ' \theta | \le \left\| \theta \right\| _2^2\varPhi _{\max }^4\), where we have used assumption (A2) that ensures features are bounded. The inequality in (41) can be inferred as follows:

In the above, we have used the boundedness of features to infer \(|\theta ^\textsf {T}\varPhi ^\textsf {T}\varDelta \varPhi \theta | \le \left\| \theta \right\| _2^2\varPhi _{\max }^4\), and \(|\theta ^\textsf {T}{\varPhi '}^\textsf {T}\varDelta \varPhi ' \theta | \le \left\| \theta \right\| _2^2\varPhi _{\max }^4\).

Hence, from the tower property of conditional expectations, it follows that:

Finally, writing f and \(f'\) for two possible values of the random innovation at time k, and writing \(\theta = \theta _{k-1} +\gamma _k f\) and \(\theta ' = \theta _{k-1} +\gamma _k f'\) and using Jensen’s inequality, we have that

which proves that the functions \(g_k\) are \(L_k\)-Lipschitz in the random innovations at time k. Recall that \(D_k=g_k - g_{k-1}\), and hence, the Lipschitz constant of \(D_k\) is \(\max \left( L_k,L_{k-1}\right)\). However, from (36), we have \(L_k > L_{k-1}\), leading to a Lipschitz constant of \(L_k\) for \(D_k\).

Step 3 (Applying a sub-Gaussian concentration inequality)

Now we derive a standard martingale concentration bound in the lemma below. Note that, for any \(\lambda >0\),

The last equality above follows from (37), while the first inequality follows from Markov’s inequality.

Let Z be a zero-mean random variable (r.v.) satisfying \(|Z|\le B\) w.p. 1, and let g be an L-Lipschitz function. Letting \(Z'\) denote an independent copy of Z and \(\varepsilon\) a Rademacher r.v., we have

In the above, we have used Jensen’s inequality in (44), the fact that distribution of \(g(Z)-g(Z')\) is the same as \(\varepsilon (g(Z)-g(Z'))\) in (45), a result from Example 2.2 in Wainwright (2019) in (46), the fact that g is L-Lipschitz in (47), and the boundedness of Z in (48).

Note that by (A3) and the projection step of the algorithm, \(f_k(\theta _{k-1})\) is a bounded random variable with \(\left\| f_k(\theta _{k-1})\right\| _2<R_{\max }+(1+\beta )H\varPhi _{\max }^2\), and, conditioned on \({\mathcal {F}}_{k-1}\), \(D_k\) is Lipschitz in \(f_k(\theta _{k-1})\) with constant \(L_k\). Hence, we obtain

leading to

The proof of Proposition 8.1 part (1) follows by optimizing over \(\lambda\) in (49). \(\square\)
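The optimization over \(\lambda\) is a one-line calculation. As a sketch, assume that the bound being optimized has the form \(\exp (-\lambda \epsilon + \alpha \lambda ^2)\) with \(\alpha = \left( R_{\max }+(1+\beta )H\varPhi _{\max }^2\right) ^2\sum _{k=1}^{n} L_k^2\), which is the form consistent with (34); the symbol \(\alpha\) is introduced only for this illustration. Then

$$\begin{aligned} \frac{d}{d\lambda }\left( -\lambda \epsilon + \alpha \lambda ^2\right) = 0 \iff \lambda ^* = \frac{\epsilon }{2\alpha }, \qquad \exp \left( -\lambda ^*\epsilon + \alpha (\lambda ^*)^2\right) = \exp \left( -\frac{\epsilon ^2}{4\alpha }\right) , \end{aligned}$$

which matches the right-hand side of (34).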

8.2.2 Proof of Proposition 8.1 part (2)

Proof

The proof of this result also follows a martingale analysis. In contrast to the high probability bound, here we work directly with the error, rather than the centered error, and split it into predictable and martingale parts. Bounding the predictable part then bounds the influence of the initial error, and bounding the martingale part bounds the error due to sampling.

Step 1 (Extract a martingale difference from the update)

First, by using that \(\bar{A}_T = {\mathbb {E}}((\phi (s_{i_n}) - \beta \phi (s_{i_n}'))\phi (s_{i_n})^\textsf {T}\mid {\mathcal {F}}_{n-1})\) and that \({\mathbb {E}}(f_n({\hat{\theta }}_T)\mid {\mathcal {F}}_{n-1}) = 0\), we can rearrange the update rule (10) to get

where \(\varDelta M_n : = f_n(\theta _{n-1}) - {\mathbb {E}}( f_n(\theta _{n-1}) \mid {\mathcal {F}}_{n-1})\) is a martingale difference.
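The zero-mean property \({\mathbb {E}}(f_n({\hat{\theta }}_T)\mid {\mathcal {F}}_{n-1}) = 0\) used above can be checked numerically: under uniform sampling, the conditional expectation is just the average of the innovation over the dataset. The sketch below uses a hypothetical batch of four transitions in \({\mathbb {R}}^2\); the helper names, and the convention that the LSTD solution solves \(A\theta = b\) with A the dataset average of \(\phi (s_i)(\phi (s_i) - \beta \phi (s_i'))^\textsf {T}\) and b the average of \(r_i\phi (s_i)\), are assumptions made for illustration.

```python
def innovation(theta, phi, r, phi_next, beta):
    """f(theta) = (theta^T phi - (r + beta * theta^T phi')) * phi."""
    v = theta[0] * phi[0] + theta[1] * phi[1]
    v_next = theta[0] * phi_next[0] + theta[1] * phi_next[1]
    scale = v - (r + beta * v_next)
    return (scale * phi[0], scale * phi[1])


def lstd_solution(data, beta):
    """Solve A theta = b with A = mean phi (phi - beta phi')^T and b = mean r phi (d = 2)."""
    A = [[0.0, 0.0], [0.0, 0.0]]
    b = [0.0, 0.0]
    for phi, r, phi_next in data:
        diff = (phi[0] - beta * phi_next[0], phi[1] - beta * phi_next[1])
        for i in range(2):
            b[i] += r * phi[i] / len(data)
            for j in range(2):
                A[i][j] += phi[i] * diff[j] / len(data)
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return ((A[1][1] * b[0] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - A[1][0] * b[0]) / det)


# Hypothetical batch of transitions (phi(s_i), r_i, phi(s_i')).
data = [
    ((1.0, 0.0), 1.0, (0.0, 1.0)),
    ((0.0, 1.0), 0.0, (1.0, 0.0)),
    ((0.6, 0.8), 0.5, (0.6, 0.8)),
    ((0.8, -0.6), -1.0, (1.0, 0.0)),
]
beta = 0.9
theta_hat = lstd_solution(data, beta)

# Average of f_i(theta_hat) over the batch = expectation under uniform sampling.
mean_f = [0.0, 0.0]
for phi, r, phi_next in data:
    f = innovation(theta_hat, phi, r, phi_next, beta)
    mean_f[0] += f[0] / len(data)
    mean_f[1] += f[1] / len(data)
print("mean innovation at theta_hat:", mean_f)
```

The average vanishes up to floating-point error because it equals \(A{\hat{\theta }}_T - b\) for the A and b defined in the sketch.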

Step 2 (Apply Jensen’s inequality to the square of the norm)

From Jensen’s inequality, and the fact that the projection in the update rule (10) is non-expansive, we obtain

Note that the cross-terms have vanished in (50) since \(\varDelta M_n\) is a martingale difference, independent of the other terms, given \({\mathcal {F}}_{n-1}\).

Step 3 (Unroll the iteration)

Using assumptions (A1) and (A2), we have

Furthermore, by assumption (A3), and the projection step, the martingale differences \(\varDelta M_n\) are bounded in norm by \(2(R_{\max }+(1+\beta )H\varPhi _{\max }^2)\). By applying the tower property of conditional expectations repeatedly together with (51) we arrive at the following bound:

\(\square\)

8.2.3 Derivation of rates given in Theorem 4.2

Proof

To obtain the rates specified in the bound in expectation in Theorem 4.2, we simplify the bound in expectation in Proposition 8.1 using the choice \(\gamma _n = \frac{c_0 c}{(c+n)}\), with \(c_0\in (0,\mu ((1 + \beta )^2\varPhi _{\max }^4)^{-1}]\) and \(2c_0 c\mu \in (1,\infty )\). Consider the sampling error term in (35) under the aforementioned choice for the step size.

In the above, the inequality in (52) uses the fact that \(1 - \gamma _j(2\mu - \gamma _j(1+\beta )^2\varPhi _{\max }^4) > 0\), a claim that was established earlier in (36). The inequality in (53) uses \(c_0\in (0,\mu ((1 + \beta )^2\varPhi _{\max }^4)^{-1}]\). The inequality in (54) follows by using \(\ln (1+u)\le u\). To infer the inequality in (55), we use \(\sum _{j=k+1}^{n} (c+j)^{-1} \ge \int _{x=c+k+1}^{n+c+1} x^{-1} dx\), which holds because the LHS is an upper Riemann sum for the RHS. Now, evaluating the integral of \(x^{-1}\), the exponential term inside the summand of (54) becomes:

and the inequality in (55) follows by substituting the bound on the RHS above. We obtain the final inequality, (56), by upper bounding the term \(\sum _{k=1}^n (k + c + 1)^{2 c_0 c \mu } (k+c)^{-2}\) on the RHS of (55) as follows:

where the inequality in (57) holds because

Further, the inequality in (58) follows from the fact that \((1 + 1/c)^{2c} \le e^2\) for all \(c>0\), and the inequality in (59) follows by comparison of a sum with an integral together with the assumption that \(c_0 c \mu > 1\).

Similarly, the initial error term in (35) can be simplified from the hypothesis that \(c_0 c\mu \in (1,\infty )\) and \(c_0\in (0,\mu ((1 + \beta )^2\varPhi _{\max }^4)^{-1}]\) as follows:

The last inequality above follows again from a comparison with an integral: \(\sum _{j = 1}^n\frac{1}{c+j} \ge \int _{c+1}^{c+n} x^{-1}dx = \ln \frac{n+c}{c+1}\). Hence, we obtain

and the result concerning the bound in expectation in Theorem 4.2 now follows.

We now derive the rates for the high-probability bound in Theorem 4.2. With \(\gamma _n = \frac{c_0 c}{(c+n)}\), and \(c_0\in (0,\mu ((1 + \beta )^2\varPhi _{\max }^4)^{-1}]\), we have

Inequality (62) follows from the assumption on \(c_0\). To obtain the inequality (63), as in the rates for the bound in expectation, we have taken the exponential of the logarithm of the product, brought the product outside the logarithm as a sum, and applied the inequality \(\ln (1 - x) \le -x\), which holds for \(x\in [0,1)\). The inequality in (64) can be inferred in a manner analogous to that in (55), while that in (65) follows in a similar manner as (58).

We now find three regimes for the rate of convergence, based on the choice of c. Each case is again derived from a comparison of the sum in (65) with an appropriate integral:

(i) \(\sum _{i=1}^{n} L_i^2 = O\left( (n+c)^{-c_0 c\mu }\right)\) when \(c_0 c \mu \in (0,1)\),

(ii) \(\sum _{i=1}^{n} L_i^2 = O\left( n^{-1}\ln n\right)\) when \(c_0 c \mu =1\), and

(iii) \(\sum _{i=1}^{n} L_i^2 \le \frac{c_0^2 c^2 e }{(c_0 c \mu - 1)}(n+c)^{-1}\) when \(c_0 c \mu \in (1,\infty )\).
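The bound in regime (iii) can be checked numerically by computing the Lipschitz constants \(L_k\) from their definition in Proposition 8.1 and comparing \(\sum _k L_k^2\) with \(\frac{c_0^2c^2e}{c_0c\mu -1}(n+c)^{-1}\). The following is an illustrative sketch; the function name and all constants (\(\mu\), \(\beta\), \(\varPhi _{\max }\), \(R_{\max }\), H, \(\epsilon\)) are hypothetical values chosen so that \(c_0 = \mu ((1+\beta )^2\varPhi _{\max }^4)^{-1}\) and \(c_0 c\mu = 2\).

```python
import math


def lipschitz_constants(n, c0, c, mu, beta, phi_max):
    """L_k = gamma_k * prod_{j=k+1}^n (1 - gamma_j (2 mu - gamma_j (1+beta)^2 phi_max^4))^(1/2)."""
    C = (1.0 + beta) ** 2 * phi_max ** 4
    gam = [c0 * c / (c + k) for k in range(1, n + 1)]   # gam[k-1] = gamma_k
    L = [0.0] * n
    suffix = 1.0                                        # prod over j = k+1..n, empty for k = n
    for k in range(n, 0, -1):
        L[k - 1] = gam[k - 1] * math.sqrt(suffix)
        suffix *= 1.0 - gam[k - 1] * (2.0 * mu - gam[k - 1] * C)
    return L


# Hypothetical constants placing the step sizes in regime (iii).
mu, beta, phi_max, r_max, H = 0.5, 0.5, 1.0, 1.0, 1.0
c0 = mu / ((1.0 + beta) ** 2 * phi_max ** 4)   # upper end of the allowed range for c0
c = 2.0 / (c0 * mu)                            # gives c0 * c * mu = 2 > 1
n = 1000

L = lipschitz_constants(n, c0, c, mu, beta, phi_max)
sum_sq = sum(x * x for x in L)
bound = c0 ** 2 * c ** 2 * math.e / (c0 * c * mu - 1.0) / (n + c)

# Tail probability from (34) for a deviation of size eps.
eps = 1.0
B = r_max + (1.0 + beta) * H * phi_max ** 2
tail = math.exp(-eps ** 2 / (4.0 * B ** 2 * sum_sq))

print("sum of L_k^2:", sum_sq)
print("regime (iii) bound:", bound)
print("tail bound from (34):", tail)
```

Since \(\sum _k L_k^2\) decays like 1/n in this regime, the tail bound from (34) tightens exponentially in \(n\epsilon ^2\).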

Thus, setting \(c \in (1/(c_0\mu ),\infty )\), the high probability bound from Proposition 8.1 gives

where \(K_{\mu ,c,c_0,\beta }\triangleq \dfrac{c_0^2c^2 e\left( R_{\max } + (1+\beta )H\varPhi _{\max }^2\right) ^2}{(c_0 c \mu - 1)}\). The high probability bound in Theorem 4.2 now follows. \(\square\)

8.3 Proof of expectation bound for batchTD without projection

The proof of the theorem follows just as the proof of Theorem 4.2 but using the following proposition in place of Proposition 8.1 part 2. The proof of the following proposition differs from that of Proposition 8.1 part 2 in that the decomposition of the computational error extracts a noise term dependent only on \({\hat{\theta }}_T\) rather than on \(\theta _n\), and so projection is not needed.

Proposition 8.2

Let \(z_n = \theta _n - {\hat{\theta }}_T\), where \(\theta _n\) is given by (10) with \(\varUpsilon (\theta )=\theta , \ \forall \theta \in {\mathbb {R}}^d\). Under (A1)–(A4), we have \(\forall \epsilon > 0\),

Proof

The proof involves two steps.

Step 1 (Unrolling the error recursion)

First, by rearranging the update rule (10) we obtain an iteration for the computational error \(z_n = \theta _n - {\hat{\theta }}_T\), and subsequently unroll this iteration:

where \(\varPi _k^n \triangleq \prod _{j = k}^n \left( I - \gamma _j(\phi (s_{i_j}) - \beta \phi (s_{i_j}'))\phi (s_{i_j})^\textsf {T}\right)\) for \(1\le k \le n\), and \(\varPi _k^n=I\) for \(k>n\) (Footnote 5). In the above, we have used that the random increment at time n has the form \(f_n(\theta ) = (\theta ^\textsf {T}\phi (s_{i_n}) - (r_{i_n} + \beta \theta ^\textsf {T}\phi (s'_{i_{n}}) ))\phi (s_{i_n})\). Notice that by the definition of the LSTD solution, we have that \({\mathbb {E}}(f_n({\hat{\theta }}_T)\mid {\mathcal {F}}_{n-1}) = 0\), and so \(f_n({\hat{\theta }}_T)\) is a zero mean random variable.

Step 2 (Taking the expectation of the norm)

From Jensen’s inequality, we obtain

where we have used the inequality \(\left\| x - y \right\| _2^2 \le 3 \left\| x \right\| _2^2 + 3 \left\| y \right\| _2^2\) for any two vectors x, y.

Using assumptions (A1) and (A2), we have

Furthermore, by assumption (A3), the random variables \(f_n({\hat{\theta }}_T)\) are bounded in norm by (\(R_{\max }+(1+\beta )\left\| {\hat{\theta }}_T\right\| _2\varPhi _{\max }^2\)). So, by applying the tower property of conditional expectations repeatedly together with (69) we arrive at the following bound:

\(\square\)

Proof of Theorem 4.4