Abstract

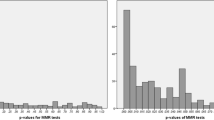

Most organizational researchers understand the detrimental effects of measurement errors in testing relationships among latent variables and hence adopt structural equation modeling (SEM) to control for measurement errors. Nonetheless, many of them revert to regression-based approaches, such as moderated multiple regression (MMR), when testing for moderating and other nonlinear effects. The predominance of MMR is likely due to the limited evidence showing the superiority of latent interaction approaches over regression-based approaches, combined with the complicated procedures previously required for testing latent interactions. In this teaching note, we first briefly explain the latent moderated structural equations (LMS) approach, which estimates latent interaction effects while controlling for measurement errors. Then we explain the reliability-corrected single-indicator LMS (RCSLMS) approach, which tests latent interactions with summated scales while correcting for measurement errors and yields results that approximate those from LMS. Next, we report simulation results illustrating that LMS and RCSLMS outperform MMR in terms of the accuracy of point estimates and confidence intervals for interaction effects under various conditions. We then show how LMS and RCSLMS can be implemented with Mplus, providing an example-based tutorial that demonstrates a 4-step procedure for testing a range of latent interactions and the decisions required at each step. Finally, we conclude with answers to some frequently asked questions about testing latent interactions. As supplementary files to support researchers, we provide a narrated PowerPoint presentation, all Mplus syntax and output files, data sets for the numerical examples, and Excel files for conducting the log-likelihood difference test and plotting latent interaction effects.

Notes

As in most discussions of SEM, measurement errors in this article refer only to random measurement errors. Systematic measurement errors, such as method effects and researcher-introduced bias, can be accounted for only through specific research designs and other modeling approaches.
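Here is a minimal Monte Carlo sketch in Python that makes the cost of random measurement error in MMR concrete. It is illustrative only and does not reproduce the simulation design reported in this article. Its assumptions are all hypothetical choices: independent, standard-normal true scores for X and Z, equal reliability ρ for both observed measures, and a true interaction coefficient of 0.3. Under these idealized assumptions the reliability of the observed product term is ρ². The MMR estimate of the interaction should therefore shrink toward roughly 0.3 × ρ² as reliability falls.

```python
import random


def ols(rows, y):
    """Ordinary least squares: solve (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        # Partial pivoting for numerical stability
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def mmr_interaction_estimate(reliability, n=20000, true_b3=0.3, seed=1):
    """Simulate Y = 0.3X + 0.3Z + b3*XZ + e, observe X and Z with random error, return MMR's b3."""
    rng = random.Random(seed)
    # True scores have variance 1, so error variance = (1 - reliability) / reliability
    err_sd = ((1 - reliability) / reliability) ** 0.5
    rows, y = [], []
    for _ in range(n):
        x, z = rng.gauss(0, 1), rng.gauss(0, 1)
        y.append(0.3 * x + 0.3 * z + true_b3 * x * z + rng.gauss(0, 1))
        x_obs = x + rng.gauss(0, err_sd)  # random measurement error only
        z_obs = z + rng.gauss(0, err_sd)
        rows.append([1.0, x_obs, z_obs, x_obs * z_obs])  # intercept, X, Z, XZ
    return ols(rows, y)[3]


for rel in (1.0, 0.9, 0.7):
    print(f"reliability={rel}: MMR b3 = {mmr_interaction_estimate(rel):.3f}")
```

The hand-written normal-equations solver keeps the example dependency-free. In practice a library routine such as `numpy.linalg.lstsq` would be the more robust choice.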

Equation 1 shows that either X or Z can be treated as the moderator; that is, the independent variable and the moderator are treated identically in statistical terms. Theory and hypotheses should therefore determine which variable is the independent variable and which is the moderator (Dawson, 2014).
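The symmetry can be seen by regrouping terms in two ways. This assumes Equation 1 has the standard MMR form, with intercept b_0, main effects b_1 and b_2, and interaction coefficient b_3, which is consistent with the b1 and b3 labels used in the simple-slope note:

$$ \begin{aligned} Y &= b_0 + b_1 X + b_2 Z + b_3 XZ + e \\ &= b_0 + b_2 Z + (b_1 + b_3 Z)\,X + e \\ &= b_0 + b_1 X + (b_2 + b_3 X)\,Z + e \end{aligned} $$

In the second line b_3 changes the slope of X as Z varies. In the third line the same b_3 changes the slope of Z as X varies. Because the algebra is identical, the designation of the moderator has to come from theory.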

The reliability level should be set a priori; a sensitivity analysis using multiple levels of reliability is not recommended because that would be exploratory (Savalei, 2019). Based on the results of a simulation study, Savalei (2019) recommended fixing the measurement error a priori using a slightly overestimated reliability (+0.05) when the researcher is comfortable guessing the reliability. However, if the reliability is underestimated (−0.05) or overestimated by a larger margin (+0.15), this approach yields less accurate and less precise parameter estimates, lower confidence interval (CI) coverage, and inflated Type I error rates compared with either SEM or the single-indicator approach with Cronbach's alpha correction. These negative consequences were more pronounced when the sample size approached 200.
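The sketch below shows where the a priori reliability enters a single-indicator correction. The single indicator is the summated or averaged scale score, and its loading is fixed at 1. Its residual variance is then fixed at (1 − reliability) × the observed variance of the scale score. The helper names and the data are hypothetical. Whether the reliability plugged in is Cronbach's alpha or an a priori guess, such as the slightly overestimated value discussed above, is the researcher's decision. The resulting number would then be fixed as the indicator's residual variance in the SEM program.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of item columns, each holding one score per respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # summated scale per respondent
    sum_item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def fixed_error_variance(scale_scores, reliability):
    """Residual variance to fix for a single indicator whose loading is fixed at 1.

    Observed variance = true-score variance + error variance, and
    reliability = true-score variance / observed variance, so
    error variance = (1 - reliability) * observed variance.
    """
    return (1 - reliability) * variance(scale_scores)


# Hypothetical responses: three items, six respondents
items = [[4, 5, 3, 4, 2, 5], [4, 4, 3, 5, 2, 4], [5, 5, 2, 4, 3, 4]]
scale = [sum(s) / len(s) for s in zip(*items)]  # mean-of-items scale score
alpha = cronbach_alpha(items)
print(f"alpha = {alpha:.3f}, fixed error variance = {fixed_error_variance(scale, alpha):.4f}")
```

Averaging rather than summing the items does not change the reliability. It only rescales the observed variance, so the fixed error variance scales with it.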

Little, Slegers, and Card (2006) suggested an effects-coding method as an alternative way of identifying and scaling latent variables. In this method, the sum of the factor loadings across indicators is fixed to the number of indicators (e.g., the sum is fixed at 4 when the construct has four indicators), and the sum of the indicator intercepts is fixed at 0. The effects-coding method is statistically equivalent to the marker variable method, which fixes the factor loading of one marker indicator to 1; the two differ only in the scale and intercept of the latent variable. The effects-coding method is particularly useful for interpreting latent means. However, it may not be appropriate for estimating latent interactions, because the latent variables that form the interaction should be centered (so that their means are zero) to improve the interpretability of the estimated regression coefficients. In addition, interpreting unstandardized regression coefficients becomes more challenging under effects coding. Moreover, the effects-coding method requires all indicators to have the same response scale, whereas the marker variable method in LMS does not. We also caution against achieving identification by standardizing the latent variables (fixing their variances at 1), because the standard errors of the estimated parameters may be biased. Given these issues, we recommend the marker variable method for identification when LMS is used.
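The equivalence of the two scaling methods can be checked numerically. This sketch covers only the covariance structure of a one-factor model, and the parameter values are hypothetical. It converts a marker-variable solution to effects coding by dividing each loading by the average loading and multiplying the factor variance by the square of that average. The model-implied covariance matrix is unchanged, so only the scale of the latent variable differs.

```python
def implied_covariance(loadings, factor_variance, residual_variances):
    """Model-implied covariance matrix of a one-factor model: lambda * phi * lambda' + Theta."""
    k = len(loadings)
    return [[loadings[i] * loadings[j] * factor_variance
             + (residual_variances[i] if i == j else 0.0)
             for j in range(k)] for i in range(k)]


def marker_to_effects_coding(loadings, factor_variance):
    """Rescale a marker-variable solution so that the loadings sum to the number of indicators."""
    c = sum(loadings) / len(loadings)  # average loading under marker-variable scaling
    return [lam / c for lam in loadings], factor_variance * c ** 2


# Hypothetical marker-variable solution (first loading fixed at 1)
marker_loadings, marker_phi = [1.0, 0.8, 1.2, 0.6], 0.5
residuals = [0.3, 0.4, 0.35, 0.5]
eff_loadings, eff_phi = marker_to_effects_coding(marker_loadings, marker_phi)
print("effects-coded loadings:", [round(v, 3) for v in eff_loadings],
      "sum =", round(sum(eff_loadings), 6))
print("factor variance:", marker_phi, "->", round(eff_phi, 4))
```

The sketch omits intercepts. Under effects coding, the intercepts sum to 0 and the loadings sum to the number of indicators, so the latent mean equals the average of the indicator means. This is why the method is convenient for interpreting latent means, but it is also why the latent variable is not centered at zero.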

Although rare, there may be instances where Model 2 does not fit the data adequately and yet Model 3 fits the data significantly better than Model 2. Unfortunately, in such situations we cannot tell whether Model 3 fits the data adequately, because LMS does not provide a chi-square test of model fit or the conventional fit indices derived from it.
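Model 2 and Model 3 are compared with a log-likelihood difference test, and the sketch below shows the arithmetic involved. It is not the authors' Excel file. It assumes the scaled log-likelihood difference formula that Mplus documents for MLR estimation:

cd = (p0·c0 − p1·c1) / (p0 − p1), TRd = −2(L0 − L1) / cd

Here L is the log-likelihood, p the number of free parameters, c the scaling correction factor, and subscript 0 denotes the nested model. With ML estimation, c0 = c1 = 1 and the result is the ordinary likelihood-ratio test. All numbers in the example are hypothetical. If cd turns out negative, the strictly positive variant (Satorra & Bentler, 2010; Asparouhov & Muthén, 2013) would be needed, and this sketch does not implement it.

```python
import math


def chi2_sf(x, df):
    """Upper-tail chi-square probability via the series for the regularized lower incomplete gamma."""
    if x <= 0:
        return 1.0
    a, half_x = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 0
    while abs(term) > 1e-15 * abs(total):
        n += 1
        term *= half_x / (a + n)
        total += term
    lower = math.exp(a * math.log(half_x) - half_x - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def loglikelihood_difference_test(ll0, p0, ll1, p1, c0=1.0, c1=1.0):
    """Scaled log-likelihood difference test of a nested model (0) against a general model (1)."""
    df = p1 - p0
    cd = (p0 * c0 - p1 * c1) / (p0 - p1)  # difference-test scaling correction
    trd = -2.0 * (ll0 - ll1) / cd
    return trd, df, chi2_sf(trd, df)


# Hypothetical values: Model 2 (no interaction) vs. Model 3 (adds one latent interaction term)
trd, df, p = loglikelihood_difference_test(ll0=-1000.0, p0=20, ll1=-995.0, p1=21, c0=1.10, c1=1.20)
print(f"TRd = {trd:.3f}, df = {df}, p = {p:.4f}")
```

Adding a single latent interaction term gives df = 1. The series-based p-value is adequate for ordinary test statistics, but it loses precision for extremely large ones.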

However, these five levels are arbitrary. If other levels of the moderator can be meaningfully identified (at least five levels for a continuous moderator, because the confidence intervals are not linear), those more specific levels should be used instead. For example, when the moderator is a directly observed variable measured on a 5-point scale, using 1, 2, 3, 4, and 5 as the five levels may be more meaningful. Note that because the moderator is mean-centered when it is converted into a latent variable before the latent interaction effects are estimated, each level of the moderator in a simple slope test should be mean-centered as well. For example, if the mean of the moderator Z is 3.25 on a 5-point scale, this mean should be subtracted from each of the five levels, and the simple slope of the relationship between X and Y at Z = 1 can be defined under MODEL CONSTRAINT as follows:

$$ {\mathrm{Slope}}_{2\mathrm{L}} = \mathrm{b}1 + \mathrm{b}3 \ast (-2.25); \quad \text{! } 1 - 3.25 = -2.25 $$

Little's MCAR test is also available in the R package BaylorEdPsych by A. Alexander Beaujean at https://www.rdocumentation.org/packages/BaylorEdPsych/versions/0.5.
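The simple-slope note above mean-centers the moderator levels 1 to 5 around 3.25. A small helper can write the centered constraints for every level at once. This is a hypothetical convenience script, not part of Mplus or the supplementary files. The parameter names Slope1 to Slope5 are illustrative. It also assumes that b1 and b3 are the labels given to the X → Y and XZ → Y paths in the MODEL command.

```python
def simple_slope_constraints(levels, moderator_mean, b_x="b1", b_xz="b3"):
    """Build Mplus-style MODEL CONSTRAINT lines for simple slopes of X at raw moderator levels.

    Each raw level is mean-centered first because the latent moderator has a mean of zero.
    The labels b_x and b_xz must match the labels assigned in the MODEL command.
    """
    names = [f"Slope{i}" for i in range(1, len(levels) + 1)]  # hypothetical parameter names
    lines = ["MODEL CONSTRAINT:", "NEW(" + " ".join(names) + ");"]
    for name, level in zip(names, levels):
        centered = round(level - moderator_mean, 6) + 0.0  # "+ 0.0" avoids printing -0
        lines.append(f"{name} = {b_x} + {b_xz}*({centered:g});  "
                     f"! {level:g} - {moderator_mean:g} = {centered:g}")
    return "\n".join(lines)


print(simple_slope_constraints([1, 2, 3, 4, 5], moderator_mean=3.25))
```

Generating the lines from the observed mean avoids arithmetic slips when the same constraints are rewritten for several moderators or samples. The comment after each `!` keeps the centering visible in the Mplus input.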

References

Aguinis, H. (1995). Statistical power problems with moderated multiple regression in management research. Journal of Management, 21, 1141–1158. https://doi.org/10.1016/0149-2063(95)90026-8.

Aguinis, H., Edwards, J. R., & Bradley, K. J. (2017). Improving our understanding of moderation and mediation in strategic management research. Organizational Research Methods, 20, 665–685. https://doi.org/10.1177/1094428115627498.

Aguinis, H., & Gottfredson, R. K. (2010). Best-practice recommendations for estimating interaction effects using moderated multiple regression. Journal of Organizational Behavior, 31, 776–786. https://doi.org/10.1002/job.686.

Aiken, L. S., & West, S. G. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, London: Sage.

Algina, J., & Moulder, B. C. (2001). A note on estimating the Jöreskog–Yang model for latent variable interaction using LISREL 8.3. Structural Equation Modeling, 8, 40–52. https://doi.org/10.1207/S15328007SEM0801_3.

Asparouhov, T., & Muthén, B. (2013). Computing the strictly positive Satorra-Bentler chi-square test in Mplus. Mplus Web Notes: No. 12. Retrieved from http://www.statmodel.com/examples/webnotes/SB5.pdf.

Becker, T. E. (2005). Potential problems in the statistical control of variables in organizational research: A qualitative analysis with recommendations. Organizational Research Methods, 8, 274–289. https://doi.org/10.1177/1094428105278021.

Bernerth, J. B., & Aguinis, H. (2016). A critical review and best practice recommendations for control variable usage. Personnel Psychology, 69, 229–283. https://doi.org/10.1111/peps.12103.

Bollen, K. A. (1989). Structural equations with latent variables. New York, NY: John Wiley.

Bollen, K. A. (1995). Structural equation models that are nonlinear in latent variables. In P. V. Marsden (Ed.), Sociological methodology 1995 (Vol. 25). Washington, DC: American Sociological Association.

Bollen, K. A., & Paxton, P. (1998). Two-stage least squares estimation of interaction effects. In R. E. Schumacker & G. A. Marcoulides (Eds.), Interaction and nonlinear effects in structural equation modeling (pp. 125–151). Mahwah, NJ: Erlbaum.

Bryant, F. B., & Satorra, A. (2012). Principles and practice of scaled difference chi-square testing. Structural Equation Modeling: A Multidisciplinary Journal, 19, 372–398. https://doi.org/10.1080/10705511.2012.687671.

Busemeyer, J. R., & Jones, L. E. (1983). Analysis of multiplicative combination rules when the causal variables are measured with error. Psychological Bulletin, 93, 549–562. https://doi.org/10.1037/0033-2909.93.3.549.

Cham, H., West, S. G., Ma, Y., & Aiken, L. S. (2012). Estimating latent variable interactions with nonnormal observed data: A comparison of four approaches. Multivariate Behavioral Research, 47, 840–876. https://doi.org/10.1080/00273171.2012.732901.

Cheung, G. W. (2009). A multiple-perspective approach in analyzing data in congruence research. Organizational Research Methods, 12, 63–68. https://doi.org/10.1177/1094428107310091.

Cheung, G. W., & Lau, R. S. (2017). Accuracy of parameter estimates and confidence intervals in moderated mediation models: A comparison of regression and latent moderated structural equations. Organizational Research Methods, 20, 746–769. https://doi.org/10.1177/1094428115595869.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). New Jersey: Lawrence Erlbaum.

Collins, L. M., Schafer, J. L., & Kam, C. M. (2001). A comparison of inclusive and restrictive strategies in modern missing data procedures. Psychological Methods, 6, 330–351. https://doi.org/10.1037//1082-989X.6.4.330.

Cortina, J. M. (1993). Interaction, nonlinearity, and multicollinearity: Implications for multiple regression. Journal of Management, 19, 915–922. https://doi.org/10.1177/014920639301900411.

Cortina, J. M., Chen, G., & Dunlap, W. P. (2001). Testing interaction effects in LISREL: Examination and illustration of available procedures. Organizational Research Methods, 4(4), 324–360. https://doi.org/10.1177/109442810144002.

Cortina, J. M., Markell-Goldstein, H. M., Green, J. P., & Chang, Y. (2019). How are we testing interactions in latent variable models? Surging forward or fighting shy? Organizational Research Methods. https://doi.org/10.1177/1094428119872531.

Cudeck, R. (1989). Analysis of correlation matrices using covariance structure models. Psychological Bulletin, 105(2), 317–327. https://doi.org/10.1037/0033-2909.105.2.317.

Dawson, J. F. (2014). Moderation in management research: What, why, when, and how. Journal of Business and Psychology, 29, 1–19. https://doi.org/10.1007/s10869-013-9308-7.

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B, 39, 1–38. https://doi.org/10.1111/j.2517-6161.1977.tb01600.x.

Du, D., Derks, D., Bakker, A. B., & Lu, C. (2018). Does homesickness undermine the potential of job resources? A perspective from the work-home resources model. Journal of Organizational Behavior, 39, 96–112. https://doi.org/10.1002/job.2212.

Edwards, J. R. (2009). Latent variable modeling in congruence research: Current problems and future directions. Organizational Research Methods, 12, 34–62. https://doi.org/10.1177/1094428107308920.

Enders, C. K. (2001). A primer on maximum likelihood algorithms available for use with missing data. Structural Equation Modeling: A Multidisciplinary Journal, 8, 128–141. https://doi.org/10.1207/S15328007SEM0801_7.

Ford, J. K., MacCallum, R. C., & Tait, M. (1986). The application of exploratory factor analysis in applied psychology: A critical review and analysis. Personnel Psychology, 39, 291–314. https://doi.org/10.1111/j.1744-6570.1986.tb00583.x.

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18, 39–50. https://doi.org/10.1177/002224378101800104.

Goodman, S. A., & Svyantek, D. J. (1999). Person-organization fit and contextual performance: Do shared values matter. Journal of Vocational Behavior, 55, 254–275. https://doi.org/10.1006/jvbe.1998.1682.

Graham, J. W. (2003). Adding missing-data-relevant variables to FIML-based structural equation models. Structural Equation Modeling, 10, 80–100. https://doi.org/10.1207/S15328007SEM1001_4.

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2009). Multivariate data analysis (7th ed.). Upper Saddle River, NJ: Prentice Hall.

Hall, R. J., Snell, A. F., & Foust, M. S. (1999). Item parceling strategies in SEM: Investigating the subtle effects of unmodeled secondary constructs. Organizational Research Methods, 2, 233–256. https://doi.org/10.1177/109442819923002.

Harring, J. R., Weiss, B. A., & Li, M. (2015). Assessing spurious interaction effects in structural equation modeling: A cautionary note. Educational and Psychological Measurement, 75, 721–738. https://doi.org/10.1177/0013164414565007.

Hayduk, L. A. (1987). Structural equation modeling with LISREL: Essentials and advances. Baltimore, MD: Johns Hopkins University Press.

Hayes, A. F. (2013). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. New York: Guilford Press.

Johnson, P. O., & Neyman, J. (1936). Tests of certain linear hypotheses and their application to some educational problems. Statistical Research Memoirs, 1, 57–93.

Jöreskog, K. G., & Yang, F. (1996). Nonlinear structural equation models: The Kenny-Judd model with interaction effects. In G. A. Marcoulides & R. E. Schumacker (Eds.), Advanced structural equation modeling: Issues and techniques (pp. 57–88). Mahwah, NJ: Erlbaum.

Judge, T. A., Rodell, J. B., Klinger, R. L., Simon, L. S., & Crawford, E. R. (2013). Hierarchical representations of the five-factor model of personality in predicting job performance: Integrating three organizing frameworks with two theoretical perspectives. Journal of Applied Psychology, 98, 875–925. https://doi.org/10.1037/a0033901.

Karasek, R. (1985). Job content instrument: Questionnaire and user’s guide (rev. 1.1). Los Angeles: University of Southern California.

Kelava, A., Werner, C. S., Schermelleh-Engel, K., Moosbrugger, H., Zapf, D., Ma, Y., Cham, H., Aiken, L. S., & West, S. G. (2011). Advanced nonlinear latent variable modeling: Distribution analytic LMS and QML estimators of interaction and quadratic effects. Structural Equation Modeling: A Multidisciplinary Journal, 18, 465–491. https://doi.org/10.1080/10705511.2011.582408.

Kenny, D. A., & Judd, C. M. (1984). Estimating the nonlinear and interactive effects of latent variables. Psychological Bulletin, 96, 201–210. https://doi.org/10.1037/0033-2909.96.1.201.

Klein, A., & Moosbrugger, H. (2000). Maximum likelihood estimation of latent interaction effects with the LMS method. Psychometrika, 65, 457–474. https://doi.org/10.1007/BF02296338.

Klein, A. G., & Muthén, B. O. (2007). Quasi maximum likelihood estimation of structural equation models with multiple interaction and quadratic effects. Multivariate Behavioral Research, 42, 647–674. https://doi.org/10.1080/00273170701710205.

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed., pp. 33–34). New York: The Guilford Press.

Lau, R. S., & Cheung, G. W. (2012). Estimating and comparing specific mediation effects in complex latent variable models. Organizational Research Methods, 15, 3–16. https://doi.org/10.1177/1094428110391673.

Ledgerwood, A., & Shrout, P. E. (2011). The trade-off between accuracy and precision in latent variable models of mediation processes. Journal of Personality and Social Psychology, 101, 1174–1188. https://doi.org/10.1037/a0024776.

Little, R. J. A. (1988). A test of missing completely at random for multivariate data with missing values. Journal of the American Statistical Association, 83, 1198–1202.

Little, T. D., Slegers, D. W., & Card, N. A. (2006). A non-arbitrary method of identifying and scaling latent variables in SEM and MACS models. Structural Equation Modeling: A Multidisciplinary Journal, 13, 59–72. https://doi.org/10.1207/s15328007sem1301_3.

Marsh, H. W., Wen, Z., & Hau, K. T. (2004). Structural equation models of latent interactions: Evaluation of alternative estimation strategies and indicator construction. Psychological Methods, 9, 275–300. https://doi.org/10.1037/1082-989X.9.3.275.

Maslowsky, J., Jager, J., & Hemken, J. (2015). Estimating and interpreting latent variable interactions: A tutorial for applying the latent moderated structural equations method. International Journal of Behavioral Development, 39, 87–96. https://doi.org/10.1177/0165025414552301.

Mooijaart, A., & Satorra, A. (2009). On insensitivity of the chi-square model test to nonlinear misspecification in structural equation models. Psychometrika, 74, 443–455.

Muthén, B. (2012). Latent variable interactions. Technical note (pp. 1–9). Retrieved from http://www.statmodel.com/download/LV%20interaction.pdf.

Muthén, L. K., & Muthén, B. O. (1998–2014). Mplus user's guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Newman, D. A. (2014). Missing data: Five practical guidelines. Organizational Research Methods, 17, 372–411. https://doi.org/10.1177/1094428114548590.

Peterson, M. F., Smith, P. B., Akande, A., Ayestaran, S., Bochner, S., Callan, V., et al. (1995). Role conflict, ambiguity, and overload: A 21-nation study. Academy of Management Journal, 38, 429–452. https://doi.org/10.2307/256687.

Preacher, K. J., & Selig, J. P. (2012). Advantages of Monte Carlo confidence intervals for indirect effects. Communication Methods and Measures, 6, 77–98. https://doi.org/10.1080/19312458.2012.679848.

Preacher, K. J., Zhang, Z., & Zyphur, M. J. (2016). Multilevel structural equation models for assessing moderation within and across levels of analysis. Psychological Methods, 21, 189–205. https://doi.org/10.1037/met0000052.

Rubin, D. B. (1976). Inference and missing data. Biometrika, 63, 581–592.

Sardeshmukh, S. R., & Vandenberg, R. J. (2017). Integrating moderation and mediation: A structural equation modeling approach. Organizational Research Methods, 20, 721–745.

Satorra, A., & Bentler, P. M. (2010). Ensuring positiveness of the scaled difference chi-square test statistic. Psychometrika, 75, 243–248. https://doi.org/10.1007/s11336-009-9135-y.

Savalei, V. (2019). A comparison of several approaches for controlling measurement error in small samples. Psychological Methods, 24(3), 352–370. https://doi.org/10.1037/met0000181.

Stroebe, M., van Vliet, T., Hewstone, M., & Willis, H. (2002). Homesickness among students in two cultures: Antecedents and consequences. British Journal of Psychology, 93, 147–168. https://doi.org/10.1348/000712602162508.

Su, R., Zhang, Q., Liu, Y., & Tay, L. (2019). Modeling congruence in organizational research with latent moderated structural equations. Journal of Applied Psychology, 104(11), 1404–1433. https://doi.org/10.1037/apl0000411.

Umbach, N., Naumann, K., Brandt, H., & Kelava, A. (2017). Fitting nonlinear structural equation models in R with package nlsem. Journal of Statistical Software, 77, 1–20. https://doi.org/10.18637/jss.v077.i07.

Van Veldhoven, M., de Jonge, J., Broersen, S., Kompier, M., & Meijman, T. (2002). Specific relationships between psychosocial job conditions and job-related stress: A three-level analytic approach. Work & Stress, 16, 207–228. https://doi.org/10.1080/02678370210166399.

Cite this article

Cheung, G.W., Cooper-Thomas, H.D., Lau, R.S. et al. Testing Moderation in Business and Psychological Studies with Latent Moderated Structural Equations. J Bus Psychol 36, 1009–1033 (2021). https://doi.org/10.1007/s10869-020-09717-0

DOI: https://doi.org/10.1007/s10869-020-09717-0