Abstract

Research universities are strongly committed to research as part of their core academic mission. They are widely recognized for their research excellence, which places them in the top positions of the main world university rankings. To examine what distinguishes this group of universities, we analyze the scientific production of a sample of them over a 5-year period. On the one hand, we analyze their research preferences, measured as the relative percentage of publications in the different subject areas; on the other hand, we calculate the similarity between them in research preferences. To select a set of research universities, we studied the leading university rankings of Shanghai, QS, Leiden and Times Higher Education (THE). Although all four rankings have well-established methodologies and hold great prestige, we chose THE because the data were readily available for the study we had in mind. We then selected the twenty academic institutions with the highest scores in the latest edition, the THE World University Rankings 2020, and, to contrast their impact, compared them with the twenty institutions with the lowest scores in this ranking. We extracted publication data from the Scopus database for each university and applied bibliometric indicators from Elsevier’s SciVal. We used cosine similarity and agglomerative hierarchical clustering to examine and compare affinities in research preferences among the universities. In addition, a cluster analysis in VOSviewer was used to classify the total scientific production into the four major fields (health sciences, physical sciences, life sciences and social sciences).
As expected, the results show that top universities have strong research profiles that make them world leaders in those areas, and cosine similarity indicates that some of them are more akin to each other than others. The results provide clues for enhancing existing collaboration, defining and redirecting lines of research, and seeking new partnerships to face the current pandemic and find ways to tackle the COVID-19 outbreak.


Introduction

Higher education institutions (HEIs) differ in size, scope, history, mission and subject focus. This diversity reflects the extensive growth and development of the academic sector since the medieval period (European Commission 2019). Among these institutions are the research universities, which emphasize the preeminence of high-level research.

Research universities are considered the key higher education institutions that place advanced research at the core of their missions, with statements such as “research-intensive” or “research-based” (Mammadov and Aypay 2020; Taylor 2006).

These kinds of universities occupy similar positions in the higher education market and share similar challenges, ambitions, incentives and motivations (Leydesdorff et al. 2019). In general, these institutions give high priority to discovering new scientific knowledge across an extensive range of fields, standing out through disruptive innovations, and training future researchers in their wide-ranging PhD programs (Mohrman et al. 2008).

Research universities are a modern invention: they emerged in Prussia in the early nineteenth century, and in the United States only in the aftermath of the Civil War. Since then, they have contributed to national economies as well as to local and regional economies (Atkinson and Blanpied 2008).

The Carnegie Classification of Institutions of Higher Education, the framework for classifying colleges and universities in the United States, classified 131 American research universities (public and private non-profit) as "R1: Doctoral Universities – Very high research activity": these universities have very high levels of research activity, both in aggregate and per capita. They form a small nucleus of highly productive “super research universities” (Carnegie Classification 2019; Carnegie Foundation 2001; Fernandez and Baker 2017).

The League of European Research Universities (LERU) is an established consortium of research-intensive universities across Europe. The League represents 23 leading universities that push the frontiers of innovative research and share the values of high-quality teaching within an environment of internationally competitive research. According to LERU, research universities bring cutting-edge knowledge and are the ultimate source of most innovation in the economy, society and culture of their countries. They provide an education grounded in skepticism, creativity and high-level capability (LERU 2019).

Europe is home to some of the oldest leading research universities in the world, such as the Paris-Sorbonne University (founded 1150), the University of Heidelberg (1386) and the Catholic University of Leuven (1425), which produce large numbers of publications and are globally interconnected (Powell and Dusdal 2017a).

Furthermore, the Russell Group in the United Kingdom (UK) represents 24 leading research-intensive universities. These world-class universities play an important part in the intellectual and academic life of the UK and have a major social, economic and cultural impact across the country and around the globe (Russell Group 2020). Some of these research universities have been recognized as among the world’s most powerful and productive universities in terms of publications per year. Some are highly specialized in certain areas in which they have become world leaders, such as the University of Oxford with its strong scientific grounding in medical studies.

Powell and Dusdal (2017a, b) examined the research systems of three countries, Germany, France and the United Kingdom, to find out what made them leaders in the organizational development of research and scientific innovation. The differences in productivity among these three countries could not be fully explained by differences in overall economic investment or in the number of researchers committed to science. Rather, they are a matter of institutionalization and of the organizational settings in which researchers produce science. The key finding of their study was that the institutionalization of research universities in these countries supports high levels of national scientific productivity.

The Times Higher Education World University Rankings (THE) are one of the leading global performance tables that judge research-intensive universities across all of their core missions. An institution must meet a set of five criteria to be included in the Overall Ranking, and two of them relate explicitly to the concept of a research university: (1) Sufficient publications: an institution must publish more than 1000 papers over the previous 5 years and more than 150 publications in any single year (thresholds are also applied per subject for the subject rankings); (2) Subject breadth: an institution must not be focused on a single narrow subject area, defined as more than 80% of its publication output coming from one subject area (THE-World University Rankings 2020).

Besides THE, there are other institutional rankings. The Academic Ranking of World Universities, also known as the Shanghai Ranking, was originally compiled and issued by Shanghai Jiao Tong University in 2003 and emphasizes Nobel Prize laureates and ground-breaking publications. The QS World University Rankings are compiled using six simple metrics that capture university performance: Academic Reputation, Employer Reputation, Faculty/Student Ratio, Citations per Faculty, International Faculty Ratio and International Student Ratio (QS World University Rankings 2020). The SCImago Institutions Rankings (SIR) classify academic and research-related institutions by a composite indicator that combines three sets of indicators based on research performance, innovation outputs and societal impact measured by web visibility (SCImago Institutions Rankings (SIR), 2020). The CWTS Leiden Ranking 2020 offers a sophisticated set of bibliometric indicators that provide university-level statistics on scientific impact, collaboration, open access publishing and gender diversity (CWTS Leiden Ranking 2020).
After studying these ranking methodologies, we chose THE because of the comprehensive nature of its indicators and because we had data available from the THE WUR, the Scopus database and SciVal (SciVal 2019; Scopus 2019a).

Several studies have examined the research productivity of universities (Toutkoushian et al. 2003; Butler 2007; Chen et al. 2010; Cloete et al. 2011; Huang 2012; Jung 2012; McGill and Settle 2012; Abramo and D’Angelo 2014; Altbach 2015; Musiige and Maassen 2015; Budd 2017; Webber 2011; Bolli et al. 2016; Hu et al. 2017). Van Raan (2008) observed that, based on the field-normalized indicator, the top research universities are about twice as efficient in production and citations as the bottom-performing universities.

Following this brief introduction to the framework of research universities, and given that we have not found studies that analyze the publication profiles of research universities, we pose the following research questions: Q1: What makes these universities stand out scientifically? Q2: Do their research preferences differ from those of other universities? Q3: Are research universities similar in their research preferences? Q4: What are the most commonly used keywords in their production? Q5: Do research universities collaborate with each other?

Our main research hypothesis is that top-ranked research universities excel in certain areas, becoming world leaders in these fields. Relatedly, we expect research universities to be similar in research preferences, although some are more analogous to each other than others. We also study how top universities are influencing the rest of the universities in the ranking, particularly those in the lower positions.

Data and method

We used the latest edition of the Times Higher Education (THE) World University Rankings, 2020, to select a sample of research universities. Founded in 2004, the THE World University Rankings are one of the most globally recognized university ranking systems: they support students deciding where to study, early-career researchers choosing their next host institutions, research agencies allocating research budgets, and policymakers planning and checking scientific results for funding programs. The 2020 edition encompasses a total of 1400 institutions (THE 2020).

We chose the 20 leading research universities by overall score, listed below in descending order:

| Rank | Institution | Country |
|---|---|---|
| 1 | University of Oxford | United Kingdom |
| 2 | California Institute of Technology | United States |
| 3 | University of Cambridge | United Kingdom |
| 4 | Stanford University | United States |
| 5 | Massachusetts Institute of Technology (MIT) | United States |
| 6 | Princeton University | United States |
| 7 | Harvard University | United States |
| 8 | Yale University | United States |
| 9 | University of Chicago | United States |
| 10 | Imperial College London | United Kingdom |
| 11 | University of Pennsylvania | United States |
| 12 | Johns Hopkins University | United States |
| 13 | University of California, Berkeley | United States |
| 14 | ETH Zurich (Swiss Federal Institute of Technology in Zurich) | Switzerland |
| 15 | University College London (UCL) | United Kingdom |
| 16 | Columbia University | United States |
| 17 | University of California, Los Angeles | United States |
| 18 | University of Toronto | Canada |
| 19 | Cornell University | United States |
| 20 | Duke University | United States |

The American institutions on the list (California Institute of Technology; Stanford University; Massachusetts Institute of Technology; Princeton University; Harvard University; Yale University; University of Chicago; University of Pennsylvania; Johns Hopkins University; University of California, Berkeley; Columbia University; University of California, Los Angeles; Cornell University; and Duke University) are classified as "R1: Doctoral Universities – Very high research activity" in the Carnegie Classification. Furthermore, the British universities on the list (University of Oxford, University of Cambridge, Imperial College London and University College London) belong to the Russell Group and LERU and are also part of the Golden Triangle. The Golden Triangle is a term initially used to describe the group of elite, highly funded universities located in the southern English cities of Oxford, Cambridge and London. These universities are prominent in education, research and innovation both in the UK and internationally. The institutions of the Golden Triangle receive some of the highest research incomes, funding and grants from the UK government and from European and global agencies, and hold the largest financial endowments of all British universities.

For the purpose of comparing publication profiles between universities we decided to select the twenty universities that have the lowest scores:

| Rank | Institution | Country |
|---|---|---|
| 1001+ | Warsaw University of Technology | Poland |
| 1001+ | Wenzhou Medical University | China |
| 1001+ | University of West Bohemia | Czech Republic |
| 1001+ | Western Paraná State University (Unioeste) | Brazil |
| 1001+ | West University of Timişoara | Romania |
| 1001+ | Wrocław University of Science and Technology | Poland |
| 1001+ | Yamagata University | Japan |
| 1001+ | Yamaguchi University | Japan |
| 1001+ | University of Yamanashi | Japan |
| 1001+ | Yanshan University | China |
| 1001+ | Yarmouk University | Jordan |
| 1001+ | Yazd University | Iran |
| 1001+ | Yeditepe University | Turkey |
| 1001+ | Yıldız Technical University | Turkey |
| 1001+ | Yokohama National University | Japan |
| 1001+ | Yuan Ze University | Taiwan |
| 1001+ | Zagazig University | Egypt |
| 1001+ | University of Zagreb | Croatia |
| 1001+ | University of Zanjan | Iran |
| 1001+ | Zhejiang University of Technology | China |

As can be observed, these universities are located in China, Japan, Turkey and other countries, and the majority are based in Asia: at least 7 of the 20 are outside Asia, and 5 (25%) are in Europe. Appearing in the last ranking positions does not mean that these universities lack quality. Lower-ranked universities can be a better choice for some students: even though ranking position can be essential in deciding where to study, students also consider other factors when choosing a university, such as financial considerations, the employment rate after graduation, professional training, the learning environment and overall student life satisfaction (University World News 2013).

The Scopus database was used to extract the scientific output of the institutions; Scopus remains the exclusive data source powering the flagship THE rankings. Scopus is the largest abstract and citation database of peer-reviewed research literature, with over 24,000 titles, including 4200 open access journals, from more than 5000 international publishers. Its coverage includes the journals indexed in Web of Science (WoS) and is statistically balanced in terms of topics, countries, languages and publishers (de Moya-Anegon et al. 2007). In addition, SciVal was used to extract other metrics. SciVal is a research evaluation tool based on Scopus data that allows users to visualize research performance, benchmark against peers, develop strategic partnerships, identify and analyze new and emerging research topics, and find collaborators. All data were downloaded in December 2019 (cutoff date).

We extracted the publications of each institution in a 5-year publication window from 2014 to 2018 (the same window used in THE 2020). A total of 1,160,626 documents were downloaded (all document types included). We then classified each title into its scientific area based on the Scopus ASJC (All Science Journal Classification) scheme, which allowed us to count the number of publications each university has in the different subject areas. Scopus has over 300 sub-subject areas, which aggregate into 27 broad subject areas. The table below shows the broad subject area classification (Scopus 2019b):

| Field | Subject areas |
|---|---|
| Physical sciences | Chemical Engineering; Chemistry; Computer Science; Earth and Planetary Sciences; Energy; Engineering; Environmental Science; Materials Science; Mathematics; Physics and Astronomy; Multidisciplinary |
| Health sciences | Medicine; Nursing; Veterinary; Dentistry; Health Professions; Multidisciplinary |
| Social sciences | Arts and Humanities; Business, Management and Accounting; Decision Sciences; Economics, Econometrics and Finance; Psychology; Social Sciences; Multidisciplinary |
| Life sciences | Agricultural and Biological Sciences; Biochemistry, Genetics and Molecular Biology; Immunology and Microbiology; Neuroscience; Pharmacology, Toxicology and Pharmaceutics; Multidisciplinary |
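The relative percentage of publications per subject area, which serves in this study as a university's research profile, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: the university names and records are hypothetical, each record is assumed to carry the ASJC broad areas of its journal, and shares are assumed to be computed against the university's total number of publications. Because Scopus can assign a journal to more than one area, such shares may add up to more than 100%.

```python
from collections import Counter

# Hypothetical toy records: (university, ASJC broad areas of the paper's journal).
# A journal may belong to several areas, so one paper can count in several areas.
PUBLICATIONS = [
    ("Univ A", ["Medicine"]),
    ("Univ A", ["Medicine", "Biochemistry, Genetics and Molecular Biology"]),
    ("Univ A", ["Physics and Astronomy"]),
    ("Univ B", ["Engineering", "Physics and Astronomy"]),
    ("Univ B", ["Engineering"]),
]


def subject_profiles(publications):
    """Return {university: {area: % of that university's papers in the area}}."""
    totals = Counter()   # number of papers per university
    area_counts = {}     # university -> Counter of areas
    for univ, areas in publications:
        totals[univ] += 1
        # set() ensures a paper is counted at most once per area
        area_counts.setdefault(univ, Counter()).update(set(areas))
    return {
        univ: {area: 100.0 * n / totals[univ] for area, n in counts.items()}
        for univ, counts in area_counts.items()
    }


profiles = subject_profiles(PUBLICATIONS)
print(profiles["Univ A"])  # shares for Univ A; they sum to more than 100
```

Normalizing by each university's own total is what lets institutions of very different sizes be compared on preferences rather than volume.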

The VOSviewer software tool was used to create the term maps. VOSviewer was developed at Leiden University’s Centre for Science and Technology Studies (CWTS) and provides techniques for constructing and visualizing bibliometric networks. These networks may include, for instance, journals, researchers or individual publications, and they can be constructed from citation, bibliographic coupling, co-citation or co-authorship relations. VOSviewer also offers text mining functionality that can be used to construct and visualize co-occurrence networks of important terms extracted from a body of scientific literature (VOSviewer 2020).

The data gathered was processed using the XLSTAT statistical program.

Scientific collaboration is an implicit and fundamental aspect of research universities, since they participate in projects funded by international research agencies that involve a large number of collaborating partners, such as EU-funded projects involving organizations from different EU or associated countries. For this reason, we decided to include an analysis of scientific collaboration in this study.

The Times Higher Education World University Rankings 2020 use calibrated performance indicators designed to provide comprehensive and balanced comparisons that are trusted by students, academics, university leaders, industry and governments. The performance indicators are grouped into five areas: Teaching (the learning environment); Research (volume, income and reputation); Citations (research influence); International outlook (staff, students and research); and Industry Income (knowledge transfer).

The variables relevant to this study are:

- Research (volume, income and reputation): 30%
  - Reputation survey: 18%
  - Research income: 6%
  - Research productivity: 6%

The most prominent indicator in this category looks at a university’s reputation for research excellence among its peers, based on the responses to THE’s annual Academic Reputation Survey.

Research income is scaled against academic staff numbers and adjusted for purchasing-power parity (PPP). This indicator is fully normalised to take account of each university’s distinct subject profile, reflecting the fact that research grants in science subjects are often bigger than those awarded for the highest-quality social science, arts and humanities research.

To measure productivity, THE counts the number of publications in academic journals indexed by Elsevier’s Scopus database per scholar, scaled for institutional size and normalized for subject.

- Citations (research influence): 30%

This indicator examines research influence by capturing the average number of citations received by a university’s published work. The data include all indexed publications between 2014 and 2018 and the citations made to these publications in the 6 years from 2014 to 2019. The data are normalized to reflect variations in citation volume between subject areas, so that institutions with high levels of research activity in subjects with traditionally high citation counts do not gain an unfair advantage.

Variables extracted from Scopus and SciVal:

- Scientific production: total documents produced by a research university during the study period from 2014 to 2018. All document types are considered (articles, conference proceedings, reviews, books, book chapters, etc.). A document counts towards a research university’s production if at least one of its authors is affiliated with that university (according to the affiliations listed in the authorship credits).
- Scientific production in the different subject areas: publications produced by these universities in the 27 subject areas and over 300 sub-subject areas.
- Citations: number of citations to the set of publications from 2014 to 2018.
- Citations per publication: the total number of citations divided by the total number of publications.
- Collaboration %: percentage of publications by collaboration type: international, national, institutional or single authorship.
- Collaboration impact: the average number of citations received by publications with international, national or institutional collaborators.

- Field-weighted citation impact (FWCI): the ratio of the total citations actually received by a set of publications to the total citations that would be expected based on the average of the subject field. An FWCI of:
  - exactly 1 means that the output performs just as expected for the global average;
  - more than 1 means that the output is more cited than expected according to the global average (for example, 1.23 means 23% more cited than expected);
  - less than 1 means that the output is cited less than expected according to the global average.

- Outputs in top citation percentiles: how many publications of each research university are among the top 1%, 5%, 10% or 25% most-cited documents worldwide.
- Publications in top journal percentiles: how many publications of each research university appear in the top 1%, 5%, 10% or 25% most-cited journals.
- Academic-corporate collaboration: the degree of collaboration between research universities and corporate affiliations or industrial sectors.
- Academic-corporate collaboration impact: the average number of citations received by outputs co-authored by researchers from both academic and corporate affiliations.
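Several of the indicators above can be illustrated with a short sketch. It assumes hypothetical publication records that already carry each paper's citation count, the expected (world-average) citations for comparable publications, and one (institution, country) pair per author; the field names and values are made up. FWCI follows the ratio-of-totals definition given above, and SciVal's own implementation may aggregate differently. The four collaboration classes (single author; one institution; several institutions in one country; several countries) are our reading of the categories, stated here as an assumption.

```python
from collections import Counter

# Hypothetical publication records (illustrative values, not study data).
PUBS = [
    {"citations": 30, "expected": 10.0, "authors": [("Oxford", "UK"), ("MIT", "US")]},
    {"citations": 5, "expected": 10.0, "authors": [("Oxford", "UK")]},
    {"citations": 25, "expected": 20.0, "authors": [("Oxford", "UK"), ("UCL", "UK")]},
]


def citations_per_publication(pubs):
    """Total citations divided by total publications."""
    return sum(p["citations"] for p in pubs) / len(pubs)


def fwci(pubs):
    """Total actual citations divided by total expected citations."""
    actual = sum(p["citations"] for p in pubs)
    expected = sum(p["expected"] for p in pubs)
    return actual / expected


def collaboration_type(authors):
    """Classify a paper from its (institution, country) pairs, one per author."""
    if len(authors) == 1:
        return "single"
    if len({country for _, country in authors}) > 1:
        return "international"
    if len({inst for inst, _ in authors}) > 1:
        return "national"
    return "institutional"


def collaboration_percentages(pubs):
    """Share (%) of publications in each collaboration class."""
    counts = Counter(collaboration_type(p["authors"]) for p in pubs)
    return {kind: 100.0 * n / len(pubs) for kind, n in counts.items()}


print(citations_per_publication(PUBS))
print(fwci(PUBS))
print(collaboration_percentages(PUBS))
```

With these toy records the set receives 60 citations against 40 expected, so its FWCI is 1.5, which in the terms above means 50% more cited than expected.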

Analysis of scientific collaboration between research universities:

We calculated the scientific collaboration among the twenty research universities over the study period, for example, how many publications Cambridge and Oxford have in common. We also calculated the percentage of collaboration with universities not included in the sample.
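The two collaboration measures just described, pairwise co-publication counts and the share of a university's output co-authored with institutions outside the sample, can be sketched as follows. The document IDs and institution sets are hypothetical toy data, and each document's affiliations are assumed to have already been matched to institution names.

```python
from itertools import combinations

# Hypothetical sample of research universities and toy documents
# (document ID -> set of co-authoring institutions).
SAMPLE = {"Oxford", "Cambridge", "Imperial"}
DOCS = {
    "d1": {"Oxford"},
    "d2": {"Oxford", "Cambridge"},
    "d3": {"Oxford", "Cambridge", "Imperial"},
    "d4": {"Oxford", "Univ X"},
    "d5": {"Cambridge", "Univ Y"},
}


def co_publications(docs, sample):
    """Number of shared documents for every pair of sample universities."""
    return {
        (a, b): sum(1 for insts in docs.values() if a in insts and b in insts)
        for a, b in combinations(sorted(sample), 2)
    }


def outside_share(docs, sample, univ):
    """% of univ's documents co-authored with at least one non-sample institution."""
    own = [insts for insts in docs.values() if univ in insts]
    outside = [insts for insts in own if insts - sample]
    return 100.0 * len(outside) / len(own) if own else 0.0


print(co_publications(DOCS, SAMPLE))
print(outside_share(DOCS, SAMPLE, "Oxford"))
```

Here Cambridge and Oxford share two documents (d2 and d3), and one of Oxford's four documents (d4) involves an institution outside the sample.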

Measuring the research preferences of universities through cosine similarity and agglomerative hierarchical clustering

We have applied cosine similarity and agglomerative hierarchical clustering to analyze the research similarities among research universities.

Cosine similarity measures the similarity between two vectors of an inner product space. It is measured by the cosine of the angle between two vectors and determines whether two vectors are pointing in roughly the same direction. It is often used to measure document similarity in text analysis.

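For two vectors $A$ and $B$ with $n$ components, the cosine similarity is defined as:

$$\cos(\theta) = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2}\,\sqrt{\sum_{i=1}^{n} B_i^2}}$$

where, in this study, $A_i$ and $B_i$ are the numbers of publications of two universities in subject area $i$.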

Because this analysis compares universities’ research preferences across subject areas rather than their absolute research output, cosine similarity is more appropriate than Euclidean distance: the cosine depends only on the direction of the vectors, not on their length, so two universities with the same proportional distribution of publications score 1 even if one publishes far more than the other. The angle between two vectors is therefore used as a measure of their divergence (the corresponding cosine distance is 1 minus the cosine similarity). A cosine value of 0 means that the two vectors are at 90° to each other (orthogonal) and have no match; the closer the value is to 1, the smaller the angle and the greater the match between the vectors (Singhal 2001; Zhao and Suzuki 2015; Zhigang et al. 2017; Lin et al. 2018).

The publications of each research university in the different subject areas were counted, and a disciplinary distribution vector was constructed for every research university to calculate the degree of disciplinary similarity between any two research universities with the cosine similarity formula. After calculating the similarity between research universities, we applied agglomerative hierarchical clustering.
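A minimal sketch of the cosine similarity formula applied to publication-count vectors follows. The area order and counts are hypothetical. Univ B has exactly half of Univ A's output in every area, so their similarity is 1, which is the scale invariance that makes the cosine suitable for comparing research preferences.

```python
import math

# Hypothetical publication counts per area, in the fixed order
# [Medicine, Physics and Astronomy, Engineering]. Illustrative only.
PROFILES = {
    "Univ A": [1200, 200, 100],
    "Univ B": [600, 100, 50],   # same proportions as Univ A, half the volume
    "Univ C": [100, 800, 700],
}


def cosine_similarity(u, v):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_matrix(profiles):
    """Cosine similarity for every ordered pair of universities."""
    names = list(profiles)
    return {(a, b): cosine_similarity(profiles[a], profiles[b])
            for a in names for b in names}


matrix = similarity_matrix(PROFILES)
print(round(matrix[("Univ A", "Univ B")], 3))
print(round(matrix[("Univ A", "Univ C")], 3))
```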

Agglomerative hierarchical clustering

Cluster analysis makes it possible to identify the natural structure of a dataset and to categorize objects into different clusters, where objects within the same cluster are quite similar to each other (Arbelaitz et al. 2013). Hierarchical clustering (Zhang et al. 1996) reveals a hierarchical structure of the input dataset instead of a single partition. It builds a hierarchy of clusters (presented in a dendrogram) with one of two strategies: the agglomerative hierarchical clustering algorithm, or AGNES (agglomerative nesting), which works bottom-up, and the divisive hierarchical clustering algorithm, or DIANA (divisive analysis), which works top-down.

Agglomerative hierarchical clustering (AHC) is the bottom-up approach: each object starts in a separate cluster (Bouguettaya et al. 2015), and AHC then merges clusters into larger ones until a specific termination criterion is reached. At each step, the two clusters with the minimum distance between them (the most similar ones) are merged. How that distance is measured depends on the linkage criterion: complete linkage measures the distance between two clusters by the two farthest data points located in different clusters, whereas single linkage uses the two nearest ones (Barirani et al. 2013; Sammour 2019).
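The data were processed in XLSTAT. Purely as an illustration, the sketch below reproduces the general AHC procedure with SciPy instead. The publication counts are hypothetical, and the use of complete linkage, cosine distance (1 minus cosine similarity) and a cut into two clusters are our assumptions, not the authors' reported settings.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import pdist

NAMES = ["Univ A", "Univ B", "Univ C", "Univ D"]
# Hypothetical publication counts per subject area (one row per university).
X = np.array([
    [1200, 200, 100],
    [600, 100, 60],
    [100, 800, 700],
    [80, 900, 650],
], dtype=float)

# Condensed vector of pairwise cosine distances (1 - cosine similarity).
D = pdist(X, metric="cosine")
# Bottom-up merging; complete linkage uses the farthest pair between clusters.
Z = linkage(D, method="complete")
# Cut the resulting hierarchy into (at most) two flat clusters.
labels = fcluster(Z, t=2, criterion="maxclust")

for name, label in zip(NAMES, labels):
    print(name, label)
```

`scipy.cluster.hierarchy.dendrogram(Z, labels=NAMES)` can draw the corresponding dendrogram when matplotlib is installed.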

The text mining function of VOSviewer (van Eck and Waltman 2011) was used to create a map based on the text in the title and abstract fields of the research universities’ publications. We chose fractionalization and binary counting, and set 5 as the minimum number of documents in which a term must occur to remain in the analysis. The number of terms meeting this threshold was 2500; terms that did not occur in a sufficiently large number of documents were removed from the analysis. Of the remaining terms, we chose to keep 60%, and VOSviewer removed the terms that appeared least relevant (van Eck and Waltman 2017; Waltman and van Eck 2013; Waltman et al. 2010). We also used a thesaurus file to remove non-informative terms and to merge synonymous terms. A thesaurus file is a plain text file consisting of two tab-delimited columns.
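VOSviewer performs term extraction and relevance scoring itself. The sketch below only illustrates two of the settings described above, binary counting with a minimum-documents threshold and a thesaurus that merges or removes terms. It assumes terms have already been extracted per document, and the terms and thesaurus entries are hypothetical. The `label` / `replace by` header and the convention that an empty replacement drops a term reflect our understanding of VOSviewer's thesaurus format and should be checked against its manual.

```python
import csv
import io
from collections import Counter

# Hypothetical thesaurus: term -> replacement ("" means drop the term).
THESAURUS = {
    "covid-19": "coronavirus disease",
    "sars-cov-2 infection": "coronavirus disease",
    "paper": "",
}


def write_thesaurus(mapping, stream):
    """Write a two-column, tab-delimited thesaurus with a header row."""
    writer = csv.writer(stream, delimiter="\t", lineterminator="\n")
    writer.writerow(["label", "replace by"])
    writer.writerows(mapping.items())


def binary_term_counts(doc_terms, mapping, min_docs):
    """Count the documents each term occurs in (at most once per document)."""
    counts = Counter()
    for terms in doc_terms:
        cleaned = {mapping.get(t, t) for t in terms}  # merge synonyms
        cleaned.discard("")                           # drop removed terms
        counts.update(cleaned)                        # presence, not frequency
    return {term: n for term, n in counts.items() if n >= min_docs}


DOC_TERMS = [
    ["covid-19", "vaccine", "paper"],
    ["sars-cov-2 infection", "covid-19", "vaccine"],
    ["vaccine", "paper"],
]
buffer = io.StringIO()
write_thesaurus(THESAURUS, buffer)
print(buffer.getvalue())
print(binary_term_counts(DOC_TERMS, THESAURUS, min_docs=2))
```

In the second document both synonyms map to the same term, so binary counting records it only once for that document.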

Results

Overview of the scientific performance of research universities

Table 1 shows indicators measuring the research performance of the top 20 research universities. We extracted indicators from THE 2020 (overall, research, citations) and applied metrics from SciVal (scholarly output, citation count, collaboration, etc.).

The three universities with the highest THE overall score are the University of Oxford, the California Institute of Technology and the University of Cambridge. Among the THE indicators, the University of Oxford had the highest Research score (99.6) and Stanford University the highest Citations score (99.9). Regarding SciVal metrics, Harvard University produced the largest number of publications (149,867) and received the largest number of citations (2,843,045), while the Massachusetts Institute of Technology (MIT) achieved the highest average number of citations per publication (20.6).

ETH Zurich was the most collaborative university, with 65% of its papers produced in collaboration. Stanford University had the highest collaboration impact (27.4) and FWCI (2.66). The California Institute of Technology had the highest percentage of output in the top 10% citation percentiles (32.7). It is worth noting that the Jet Propulsion Laboratory, a federally owned laboratory funded by NASA, is operated as a division of the California Institute of Technology, which may partly explain the university’s high position in these metrics. MIT had the highest percentage of publications in the top 10% journal percentiles (53.8).

In Academic-Corporate Collaboration and Academic-Corporate Collaboration Impact, the Massachusetts Institute of Technology (8.7) and Yale University (57.4), respectively, stood out from the others.

As the metrics show, all of these universities share high levels of productivity, collaboration and impact. All of them have a high proportion of publications in top 10% journals and an FWCI above 2, meaning that their publications were cited more than twice as often as would be expected based on the world average for similar publications.

Table 2 shows the Spearman correlations among all the indicators for the research universities.

The overall score is strongly correlated with Research (0.883), Citations per Publication (0.719) and Output in Top 10% Citation Percentiles (0.712), and moderately with FWCI (0.559).

Research is correlated with Output in Top 10% Citation Percentiles (0.666) and with Citations per Publication (0.586). It is not surprising that the THE Citations metric is related to Citations per Publication (0.764), Collaboration Impact (0.530), FWCI (0.937) and Output in Top 10% Citation Percentiles (0.511), since all of these measure citation impact and both THE Citations and FWCI are normalized by subject field.

The results indicate that the main research indicators in the ranking are highly correlated with SciVal impact indicators, which suggests that high levels of impact are associated with universities reaching top positions in the ranking compared to others.
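Spearman correlations such as those in Table 2 can be computed with SciPy, used here only as a stand-in for XLSTAT. The indicator values below are hypothetical toy numbers for five universities, not the study's data.

```python
from itertools import combinations

from scipy.stats import spearmanr

# Hypothetical indicator values for five universities (toy data).
INDICATORS = {
    "overall": [90, 85, 80, 75, 70],
    "fwci": [2.4, 2.6, 2.1, 2.0, 1.9],
    "citations_per_pub": [18.0, 20.5, 15.2, 16.1, 12.3],
}


def spearman_table(indicators):
    """Spearman's rho for every pair of indicators (rank-based correlation)."""
    table = {}
    for a, b in combinations(indicators, 2):
        rho, _p_value = spearmanr(indicators[a], indicators[b])
        table[(a, b)] = float(rho)
    return table


for (a, b), rho in spearman_table(INDICATORS).items():
    print(f"{a} vs {b}: {rho:.3f}")
```

Because Spearman's rho works on ranks, it captures whether universities are ordered the same way by two indicators, which suits ranking data better than a linear correlation on raw scores.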

Research preferences of the top 20 research universities

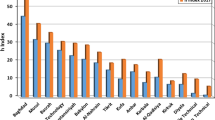

On average, research universities publish most in Medicine, Physics and Astronomy, and Engineering. However, some have a strong and well-defined research profile, such as the California Institute of Technology in Physics and Astronomy and in Earth and Planetary Sciences; Harvard University, Johns Hopkins University and the University of Pennsylvania in Medicine; and MIT in Physics and Astronomy and in Engineering, among others (Fig. 1).

The smallest value is 0.03 (Veterinary) and the largest is 64.68 (Medicine). The mean value across universities and subject areas is 6.113, with a standard deviation of around 8.511. As shown in Table 3, some universities have a higher standard deviation than others, for example Johns Hopkins University, Harvard University and the California Institute of Technology, which have clearly defined publication profiles in Medicine and in Physics and Astronomy. The University of California, Berkeley has the lowest standard deviation and is one of the universities whose output is most evenly distributed across disciplines.
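The standard deviation of a university's subject-area shares works as a simple indicator of how concentrated its profile is, as in the comparison above. The sketch uses hypothetical shares over five areas. Whether Table 3 uses the sample or population standard deviation is not stated, so the sample version is assumed here.

```python
from statistics import mean, stdev

# Hypothetical subject-area shares (% of output) over the same five areas.
SHARES = {
    "Specialised Univ": [64.0, 5.0, 3.0, 2.0, 1.0],
    "Balanced Univ": [15.0, 14.0, 13.0, 12.0, 11.0],
}


def profile_spread(shares):
    """Mean and sample standard deviation of one university's area shares."""
    return mean(shares), stdev(shares)


for name, shares in SHARES.items():
    avg, sd = profile_spread(shares)
    print(f"{name}: mean={avg:.2f}, sd={sd:.2f}")
```

A high standard deviation signals output concentrated in a few areas; a low one signals output spread more evenly across disciplines.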

Cosine similarity

The cosine distances between two research universities were calculated based on the data in Table 3. Table 4 shows the research universities with the most and the least similarity in the publication in subject areas. Princeton University and University of California at Berkeley, both important American universities in the world with similar economic status and structure, are the closest in the vector space of research subjects (0.906).

The next closest pair is Princeton University and the University of Cambridge, two prominent research universities in different countries (0.902). The third is Harvard University and University College London (0.895): although their absolute publication counts differ, both have a similar disciplinary structure and similar research preferences. The most dissimilar pair is Imperial College London and Stanford University (0.675).
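
For two universities with vectors a and b over the same n subject areas, cosine similarity is cos(a, b) = Σ a_i·b_i / (√(Σ a_i²)·√(Σ b_i²)). Because publication counts and shares are non-negative, the value lies between 0 and 1. The pure-Python sketch below uses hypothetical counts to check why a difference in absolute output does not by itself lower the similarity: converting a university's counts to percentages multiplies its vector by a positive constant, and that leaves the cosine unchanged.

```python
from math import isclose, sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length subject-area vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must cover the same subject areas")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def to_percent(counts):
    """Rescale raw counts to percentages of their sum (a positive constant factor)."""
    total = sum(counts)
    return [100 * c / total for c in counts]


# Hypothetical publication counts in four subject areas; the first
# university publishes roughly ten times as much as the second.
large = [12000, 9000, 4000, 1500]
small = [1100, 950, 380, 160]

raw = cosine_similarity(large, small)
pct = cosine_similarity(to_percent(large), to_percent(small))
print(round(raw, 3), isclose(raw, pct))  # the second value is True
```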

As shown in Table 5, the resulting adjacency matrix gives the level of similarity between each pair of research universities in sub-subject areas. For example, the cosine similarity between the Massachusetts Institute of Technology (MIT) and ETH Zurich is 0.901, whereas that between MIT and the University of Pennsylvania is 0.728. This means that MIT is more similar to ETH Zurich than to the University of Pennsylvania in its distribution of publications across sub-subject areas.
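
An adjacency matrix like Table 5 can be sketched by applying cosine similarity to every pair of universities. Sorting the unordered pairs then yields most- and least-similar lists of the kind reported in Table 4. In this minimal pure-Python sketch, the three universities ("Univ A" to "Univ C") and their shares are hypothetical placeholders.

```python
from itertools import combinations
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length share vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def similarity_matrix(profiles):
    """Symmetric matrix {name: {name: cosine}} over all universities."""
    names = list(profiles)
    return {p: {q: cosine_similarity(profiles[p], profiles[q]) for q in names} for p in names}


def ranked_pairs(matrix):
    """All unordered pairs as (cosine, name, name), from most to least similar."""
    pairs = [(matrix[p][q], p, q) for p, q in combinations(matrix, 2)]
    return sorted(pairs, reverse=True)


# Hypothetical sub-subject-area shares for three placeholder universities.
profiles = {
    "Univ A": [40.0, 30.0, 5.0, 2.0],
    "Univ B": [38.0, 28.0, 8.0, 3.0],
    "Univ C": [5.0, 10.0, 45.0, 30.0],
}
m = similarity_matrix(profiles)
pairs = ranked_pairs(m)
print("most similar:", pairs[0][1:], "least similar:", pairs[-1][1:])
```

The diagonal of the matrix is 1 (each university compared with itself), so only the pairs above the diagonal are ranked.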

Scientific similarity between the 20 leading and the 20 lowest-ranked universities in THE ranking

We created Table 6 to find out whether there are research similarities between the twenty leading research universities and the twenty lowest-ranked universities in THE ranking.

The cosine similarity matrix between the world's top- and lowest-ranked universities was calculated from publication data. As shown in Table 6, the resulting adjacency matrix gives the level of similarity between pairs of universities. For example, the cosine similarity between Universidade Estadual do Oeste do Paraná and Columbia University is 0.872, whereas that between Universidade Estadual do Oeste do Paraná and Cornell University is 0.659. This means that Universidade Estadual do Oeste do Paraná is more similar to Columbia University than to Cornell University in its distribution of research subjects. Zhejiang University of Technology and the Massachusetts Institute of Technology are also very similar (0.860).
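
A comparison between two different groups can be sketched as a rectangular, non-symmetric matrix, assuming that the lowest-ranked universities form the rows and the top-ranked universities form the columns. That orientation is an assumption about how Table 6 is laid out. From such a matrix, one can pick each low-ranked university's closest top-ranked counterpart. The names and shares in this sketch are hypothetical placeholders.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length share vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def cross_similarity(rows, cols):
    """Rectangular matrix: one row per low-ranked, one column per top-ranked university."""
    return {r: {c: cosine_similarity(rows[r], cols[c]) for c in cols} for r in rows}


def closest_match(matrix):
    """For each row university, the column university with the highest cosine."""
    return {r: max(sims, key=sims.get) for r, sims in matrix.items()}


# Hypothetical shares over four subject areas; all names are placeholders.
top = {"Top 1": [45.0, 10.0, 8.0, 5.0], "Top 2": [10.0, 40.0, 30.0, 4.0]}
low = {"Low 1": [35.0, 12.0, 6.0, 9.0], "Low 2": [8.0, 30.0, 25.0, 2.0]}
matrix = cross_similarity(low, top)
print(closest_match(matrix))
```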

Based on the data in Table 6, the top and lowest-ranked universities have similar publication patterns. Not surprisingly, these results are consistent with top research universities influencing, and being followed by, the rest of the universities ranked by THE, particularly those in the 1000+ band. This constitutes an opportunity to enhance research collaboration, especially with universities in countries that hold abundant and unique natural resources, not to mention the talent of local researchers and their universities.

Partnerships with other universities and networks open up global opportunities for students and staff. Collaboration among different kinds of universities brings a diversity of views and backgrounds, which enriches the quality of research and improves each university's research profile. Top universities could benefit greatly from collaborating with lower-ranked universities, which may offer approaches not previously considered and make their research more prolific and varied. Participation in international networks links universities to like-minded institutions around the world, helping them remain at the vanguard of transnational research exchanges.

Agglomerative hierarchical clustering (AHC)

The dendrogram in Fig. 2 shows the hierarchical relationship in publication preferences among the twenty research universities. Its y-axis can be used to evaluate the similarity between research universities.

The height axis displays the distance (dissimilarity) between observations and/or clusters, and the horizontal bars indicate the point at which two clusters or observations are merged. For example, ETH Zurich and the Massachusetts Institute of Technology (MIT) are merged at a distance of 4, the smallest merge distance of all. California Institute of Technology and Princeton University are merged at 6. Finally, the cluster formed by ETH Zurich and MIT and the cluster formed by California Institute of Technology and Princeton University are merged at a distance of 10.

University of Cambridge and University of California at Berkeley are more similar to each other than they are to University of Chicago, Stanford University and Imperial College London.

University of Chicago and Stanford University are more similar to each other than they are to University of Cambridge, University of California at Berkeley, and Imperial College London.

Imperial College London is substantially different from all of these research universities.
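
The merge sequence behind a dendrogram like Fig. 2 can be sketched as a naive agglomerative procedure over Euclidean distances, the distance named in the Conclusions. The linkage criterion is not stated in this section, so complete linkage is an assumption here. The toy 2-D points labelled A to E are also hypothetical. With the study's data, each point would be a university's vector of subject-area shares.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def complete_linkage(c1, c2, points):
    """Assumed linkage: largest Euclidean distance between members of two clusters."""
    return max(dist(points[i], points[j]) for i in c1 for j in c2)


def agglomerative(points, names):
    """Naive AHC: repeatedly merge the two closest clusters.

    Returns (members_a, members_b, height) tuples in merge order, which is
    the information a dendrogram draws.
    """
    clusters = [(i,) for i in range(len(points))]
    merges = []
    while len(clusters) > 1:
        # Find the pair of clusters with the smallest linkage distance.
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = complete_linkage(clusters[a], clusters[b], points)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append(([names[i] for i in clusters[a]], [names[i] for i in clusters[b]], d))
        merged = clusters[a] + clusters[b]
        # Delete the larger index first so index a stays valid.
        del clusters[b]
        del clusters[a]
        clusters.append(merged)
    return merges


# Hypothetical 2-D points standing in for subject-area share vectors.
points = [[0, 0], [0, 3], [10, 0], [10, 4], [30, 0]]
names = ["A", "B", "C", "D", "E"]
merges = agglomerative(points, names)
for left, right, height in merges:
    print(left, right, round(height, 2))
```

Each printed row corresponds to one horizontal bar of the dendrogram, and the last value is its height. If SciPy is available, `scipy.cluster.hierarchy.linkage(X, method="complete", metric="euclidean")` computes the same merges in matrix form, and `scipy.cluster.hierarchy.dendrogram` draws them.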

In Fig. 3, we created a co-occurrence term map from the title and abstract fields of the publications of the top 20 research universities. We chose binary counting, which means that only the presence or absence of a term in a publication matters, not the number of times it appears.
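
The difference between binary and full counting can be sketched on two toy abstracts. Under binary counting, a term contributes at most 1 per publication. Under full counting, every occurrence counts. As an assumption for illustration, this sketch matches single lowercase words, whereas VOSviewer extracts noun-phrase terms. The documents and term list are hypothetical.

```python
import re
from collections import Counter


def term_occurrences(documents, terms, binary=True):
    """Count in how many documents (binary) or how many times (full) each term appears.

    Assumption: single lowercase words stand in for VOSviewer's noun-phrase terms.
    """
    counts = Counter()
    for text in documents:
        tokens = [t for t in re.findall(r"[a-z]+", text.lower()) if t in terms]
        if binary:
            counts.update(set(tokens))  # presence or absence only
        else:
            counts.update(tokens)  # every occurrence
    return counts


docs = [
    "Patient outcomes: each patient was followed for a year.",
    "Cell structure of the patient sample.",
]
terms = {"patient", "cell", "structure"}
print(term_occurrences(docs, terms, binary=True)["patient"])   # 2
print(term_occurrences(docs, terms, binary=False)["patient"])  # 3
```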

The terms used in the publications are grouped into four clusters: Cluster 1 or red (terms related to health sciences), Cluster 2 or green (physical sciences), Cluster 3 or blue (social sciences) and Cluster 4 or yellow (terms related to life sciences). Cluster 1 contains the largest number of terms, which is consistent with the universities' average research preference for Medicine. The weight given to the color of a cluster at a given point is determined by the number of items belonging to that cluster in the neighborhood of that point.

A high number of occurrences indicates that a term is repeatedly chosen by universities in their publications. The terms used most often by the leading universities may therefore give other researchers an overview of the research topics associated with them, pointing either to topics they may wish to avoid or to potential topics they could explore in future work.

The most frequent term in Cluster 1 (red) is Patient, with 4896 occurrences and a relevance score of 0.81; in Cluster 2 (green), Research, with 4490 occurrences and a relevance of 0.67; in Cluster 3 (blue), Structure, with 4197 occurrences and a relevance of 0.29; and in Cluster 4 (yellow), Cell, with 3967 occurrences and a relevance of 0.82.

On reflection, the terms extracted from the universities' publications are fairly general. A keyword-based study may therefore be more informative, and we consider it relevant future work.

Fig. 4 shows the most common terms used in titles only. The links represent relations between terms.

In the map, the relationships between terms are based on distance: the closer two circles are, the more strongly the terms are related. The size of each circle reflects the number of occurrences of the term. As shown in Fig. 4, the terms in titles are concentrated on subjects such as “measurement”, “production”, “search”, “channel”, “preface” and “galaxy”. The distribution of terms across the map shows that scholars at research universities use words from many different subjects in their titles.

To find out how research universities collaborate with each other, we searched the Scopus affiliation field over the same publication window.

Scientific collaboration among 20 leading universities

The twenty research universities co-authored a total of 63,361 documents with one another. Of these, 29,844 were published in Open Access (Gold OA, full Open Access, hybrid, Open Archive and Promotional Access) and 33,517 in other forms (subscription or Green OA). The three main funding sponsors behind these publications are the National Institutes of Health (10,034), the National Science Foundation (7699) and the European Research Council (2821).

Fig. 5 shows the evolution of collaborative publications among the research universities.

Collaborative publications increase slightly over the years. International collaboration has been an important priority in the institutional research strategies of all major research universities, alongside the substantial expansion of the ‘endless frontier’ of research. For research universities, international networks generate recognition, demonstrate their broader engagement and status as institutions, and help attract students and staff from an international context. Collaboration has also grown substantially at the level of individual researchers and research groups.

It is worth remembering that universities are increasingly aware that high levels of collaboration, especially international collaboration, lead to greater visibility and therefore more citations. Growth in international research collaboration is almost certainly enhanced by incentives provided by funding agencies such as the National Science Foundation (NSF), the Wellcome Trust and the European Framework Programmes.

Figure 6 represents the areas where universities concentrate most of their publications when they collaborate.

The three areas in which research universities collaborated most are Medicine (23,136), Physics and Astronomy (12,711) and Biochemistry, Genetics and Molecular Biology (11,614). In contrast, Business, Management and Accounting (704), Decision Sciences (685) and Health Professions (466) are the subject areas with the fewest joint publications (Fig. 6).

The three universities with the most collaborative publications are the University of Oxford (11,220), UCL (10,168) and the University of Cambridge (9647), all of them in the United Kingdom (Fig. 7).

The topics involved in the collaborations can be outlined through the keywords assigned to each publication. Keywords provide quick access to scientific publications and are highly effective in bibliometric analyses of the knowledge structure of scientific fields (Zhang et al. 2016; Vargas-Quesada et al. 2017). Keywords offer a practical description of research hotspots and topics of prominence (the attention researchers pay to a set of related research problems and concepts). In the present study, VOSviewer was used to create a knowledge map of keyword co-occurrence with 1500 terms in 11 clusters and 16,119 links (Fig. 8), and to identify the top 5 keywords.
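
The counts behind a keyword co-occurrence map can be sketched directly from per-publication keyword lists. Occurrences give the circle sizes and the top-keyword ranking. Each unordered pair of keywords that appears together in at least one publication is a link, and its strength is the number of such publications. The sketch assumes binary counting per publication and uses hypothetical keyword lists. VOSviewer's own layout and clustering steps are not reproduced.

```python
from collections import Counter
from itertools import combinations


def keyword_network(keyword_lists):
    """Occurrence counts per keyword and co-occurrence counts per keyword pair."""
    occurrences = Counter()
    links = Counter()
    for keywords in keyword_lists:
        # Binary counting: each keyword (and each pair) counts once per publication.
        unique = sorted(set(k.lower() for k in keywords))
        occurrences.update(unique)
        links.update(combinations(unique, 2))  # unordered pairs in sorted order
    return occurrences, links


# Hypothetical author keywords for three collaborative publications.
pubs = [
    ["Measurement", "Galaxy", "Observation"],
    ["Search", "Event", "Measurement"],
    ["Measurement", "Search"],
]
occ, links = keyword_network(pubs)
print(occ.most_common(2))  # [('measurement', 3), ('search', 2)]
print(len(links), links[("measurement", "search")])  # 6 2
```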

The top 5 keywords were “Measurement”, “Search”, “Observation”, “Event” and “Galaxy”.

Conclusions

Our study has analyzed the research preferences of the twenty leading research universities in the 2020 edition of THE World University Rankings. We used the Scopus database to extract data and SciVal to compute indicators, with a 5-year publication window from 2014 to 2018.

Most of the universities in our sample are located in the United States, and all of these are classified as "R1: Doctoral Universities – Very high research activity" in the Carnegie Classification.

The top twenty research universities share high levels of scientific collaboration and a high number of publications in top journals. As indicated by their FWCI, their publications have been cited twice as often as would be expected based on the world average for similar publications.
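
FWCI compares the citations a publication actually receives with the average number received by similar publications (same field, publication type and year). A value of 1 therefore means exactly the world average, and a value of 2 means twice as many citations as expected. The sketch below uses hypothetical publications and takes the expected citation counts as given, without deriving them from Scopus. It also assumes, as our reading of the SciVal/Snowball Metrics definition, that the value for a set of publications is the arithmetic mean of the per-publication ratios.

```python
from statistics import mean


def fwci(publications):
    """Set-level FWCI as the mean of per-publication ratios (assumed definition).

    Each publication is (citations_received, expected_citations), where the
    expected value is the average for similar publications (field, type, year).
    Expected citations must be positive.
    """
    return mean(received / expected for received, expected in publications)


# Hypothetical publications: (citations received, expected citations).
pubs = [(20, 10.0), (6, 4.0), (15, 6.0)]
print(fwci(pubs))  # 2.0, i.e. cited twice as often as expected
```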

According to the Spearman correlation analysis, THE's Research indicator and SciVal's Citations per Publication, FWCI and Output in Top 10% Citation Percentiles are associated with the universities' Overall score in the 2020 edition.

Through cosine similarity, we found that research universities are quite similar to each other in research preferences, although some are more closely related than others, such as Princeton University and the University of Cambridge, or Johns Hopkins University and the University of California at Los Angeles. Regarding sub-subject areas, we found that the University of Oxford is very similar to MIT and ETH Zurich.

With agglomerative hierarchical clustering (AHC), we classified the research universities into groups according to their dissimilarity, using Euclidean distance.

The data in this study suggest that the lowest-ranked universities are similar in research preferences to the top twenty.

The terms used in the publications of the research universities are grouped into four clusters: Cluster 1 or red (terms related to health sciences), Cluster 2 or green (terms related to physical sciences), Cluster 3 or blue (terms related to social sciences) and Cluster 4 or yellow (terms related to life sciences). Most of the terms are concentrated in Cluster 1 (health sciences).

The twenty research universities have a total of 63,361 publications in collaboration, and collaborative publications increased slightly over the years. They collaborated most in Medicine; Physics and Astronomy; and Biochemistry, Genetics and Molecular Biology. The University of Oxford, UCL and the University of Cambridge are the most collaborative universities. The University of Washington is the university that collaborates most with the 20 research universities.

We also presented an analysis of the similarity between the leading universities and those in the 1000+ band of THE WUR, and indicated the opportunity this represents for enhancing international collaboration and partnerships in a win-win relationship.

Next steps and future work

In future research, we would like to conduct a deeper analysis of the research patterns of the lowest-ranked universities compared with the top-ranked ones, explore further opportunities beyond those presented in this study, and possibly extend the band to universities ranked in the 800+ positions. We could also analyse the scientific collaboration between runner-up universities and top universities to understand the benefits obtained from this diversity. In addition, we would like to analyse the differences between comprehensive and specialized universities, which would make it possible to compare more universities by research field and specialization; for this we would use bibliographic databases to extract publications. We would also like to find out how beneficial these collaborations are in terms of visibility and impact. International co-authorship also increases an institution's citations, yet there is untapped potential to enhance collaboration among institutions.

Another idea for future research is to analyze the contribution of these twenty universities to scientific production on the 17 UN Sustainable Development Goals (SDGs): No Poverty; Zero Hunger; Good Health and Well-being; Quality Education; Gender Equality; Clean Water and Sanitation; Affordable and Clean Energy; Decent Work and Economic Growth; Industry, Innovation, and Infrastructure; Reduced Inequalities; Sustainable Cities and Communities; Responsible Consumption and Production; Climate Action; Life Below Water; Life On Land; Peace, Justice, and Strong Institutions; and Partnerships for the Goals. To do this, we would extract each university's publications related to each of the 17 SDGs, examine how its production is distributed across these challenges, and apply cosine similarity to see whether the universities are also similar to each other on these issues.

References

Abramo, G., & D’Angelo, C. A. (2014). How do you define and measure research productivity? Scientometrics, 101, 1129–1144. https://doi.org/10.1007/s11192-014-1269-8.

Altbach, P. G. (2015). What counts for academic productivity in research universities? International Higher Education, 79, 6–7. https://doi.org/10.6017/ihe.2015.79.5837.

Arbelaitz, O., Gurrutxaga, I., Muguerza, J., Pérez, J. M., & Perona, I. (2013). An extensive comparative study of cluster validity indices. Pattern Recognition, 46(1), 243–256.

Atkinson, R. C., & Blanpied, W. A. (2008). Research universities: Core of the US science and technology system. Technology in Society, 30(1), 30–48.

Barirani, A., Agard, B., & Beaudry, C. (2013). Competence maps using agglomerative hierarchical clustering. Journal of Intelligent Manufacturing, 24(2), 373–384.

Bolli, T., Olivares, M., Bonaccorsi, A., Daraio, C., Aracil, A. G., & Lepori, B. (2016). The differential effects of competitive funding on the production frontier and the efficiency of universities. Economics of Education Review, 52, 91–104. https://doi.org/10.1016/j.econedurev.2016.01.007.

Bouguettaya, A., Yu, Q., Liu, X., Zhou, X., & Song, A. (2015). Efficient agglomerative hierarchical clustering. Expert Systems with Applications, 42(5), 2785–2797.

Budd, J. (2017). Faculty publications and Citations: A longitudinal examination. College & Research Libraries, 78(1), 80. https://doi.org/10.5860/crl.78.1.80.

Butler, L. (2007). Assessing university research: A plea for a balanced approach. Science and Public Policy, 34(8), 565–574. https://doi.org/10.3152/030234207X254404.

Carnegie Foundation. (2001). The Carnegie classification of institutions of higher education. California: Carnegie Foundation.

Carnegie classification of institutions of higher education (2019). Retrieved 13 May 2020, from https://carnegieclassifications.iu.edu/

Chen, Y., Nixon, M. R., Gupta, A., & Hoshower, L. (2010). Research productivity of accounting faculty: An exploratory study. American Journal of Business Education, 3(2), 101–115.

Cloete, N., Bailey, T., & Pillay, P. (2011). Universities and economic development in Africa. Cape Town: African Minds.

CWTS Leiden Ranking. (2020). Retrieved November 24, from https://www.leidenranking.com/.

European Commission (2019). Retrieved 13 May 2020, from https://eacea.ec.europa.eu/national-policies/eurydice/content/types-higher-education-institutions-91_en

Fernandez, F., & Baker, D. (2017). Science production in the United States: An unexpected synergy between mass higher education and the super research University. In J. J. W. Powell, D. P. Baker, & F. Fernandez (Eds.), The century of science (international perspectives on education and society) (Vol. 33, pp. 85–111). Bingley: Emerald Publishing Limited.

Hu, Z., Guo, F., & Hou, H. (2017). Mapping research spotlights for different regions in China. Scientometrics, 110, 779–790.

Huang, M. (2012). Exploring the h-index at the institutional level: A practical application in world university rankings. Online Information Review, 36(4), 534–547. https://doi.org/10.1108/14684521211254059.

Jung, J. (2012). International research collaboration among academics in China and South Korea. International Journal of Chinese Education, 1(2), 235–254. https://doi.org/10.1163/22125868-12340005.

Lancho-Barrantes, B. S., & Cantu-Ortiz, F. J. (2019). Quantifying the research preferences of top research universities: Why they make a difference? Paper presented at the 17th international conference on scientometrics and informetrics, ISSI 2019 - Proceedings (vol. 2, pp. 1488–1499).

League of European Research Universities (2019). www.leru.org.

Leydesdorff, L., Bornmann, L., & Mingers, J. (2019). Statistical significance and effect sizes of differences among research universities at the level of nations and worldwide based on the Leiden Rankings. Journal of the Association for Information Science and Technology, 70(5), 509–525. https://doi.org/10.1002/asi.24130.

Lin, G., Hu, Z., & Hou, H. (2018). Research preferences of the G20 countries: A bibliometrics and visualization analysis. Current Science, 115(8), 1477–1485.

Mammadov, R., & Aypay, A. (2020). Efficiency analysis of research universities in Turkey. International Journal of Educational Development. https://doi.org/10.1016/j.ijedudev.2020.102176.

McGill, M. M., & Settle, A. (2012). Identifying effects of institutional resources and support on computing faculty research productivity, tenure, and promotion. International Journal of Doctoral Studies, 7, 167.

Mohrman, K., Ma, W., & Baker, D. P. (2008). The research university in transition: The emerging global model. Higher Education Policy, 21(1), 5–27.

Moya-Anegón, F., Chinchilla-Rodríguez, Z., Vargas-Quesada, B., Corera-Álvarez, E., Muñoz-Fernández, F. J., González-Molina, A., et al. (2007). Coverage analysis of Scopus: A journal metric approach. Scientometrics, 73(1), 53–78.

Musiige, G., & Maassen, P. (2015). Faculty perceptions of the factors that influence research productivity at Makerere University. Knowledge production and contradictory functions in African higher education, pp. 109–127. https://zenodo.org/record/824663#.X72HyhP7R0s.

Powell, J. J., & Dusdal, J. (2017a). Science production in Germany, France, Belgium, and Luxembourg: Comparing the contributions of research universities and institutes to science, technology, engineering, mathematics, and health. Minerva, 55(4), 413–434.

Powell, J. J. W., & Dusdal, J. (2017b). The European Center of science productivity: Research universities and institutes in France, Germany, and the United Kingdom. International Perspectives on Education and Society, 33, 55–83.

QS World University Rankings. (2020). Retrieved November 24, from https://www.topuniversities.com/qs-world-university-rankings.

Sammour, M. (2019). An agglomerative hierarchical clustering with association rules for discovering climate change patterns. International Journal of Advanced Computer Science and Applications, 10(3), 233–240. https://doi.org/10.14569/IJACSA.2019.0100330.

SciVal (2019). Retrieved 28 Jan 2019, from https://www.scival.com/.

Scopus (2019a). Retrieved 28 Jan 2019, from https://www.scopus.com/home.uri.

Scopus (2019b). ASJC classification. Retrieved 13 Dec 2019, from https://service.elsevier.com/app/answers/detail/a_id/14834/supporthub/scopus/.

Singhal, A. (2001). Modern information retrieval: A brief overview. IEEE Data Engineering Bulletin, 24, 35–43.

Taylor, J. (2006). Managing the unmanageable: The management of research in research-intensive universities. Higher Education Management and Policy, 18(2), 3–4.

The Russell Group. Retrieved 13 May 2020, from https://russellgroup.ac.uk/about/.

Toutkoushian, R. K., Porter, S. R., Danielson, C., & Hollis, P. R. (2003). Using publications counts to measure an institution’s research productivity. Research in Higher Education, 44(2), 121–148.

University World News (2013). The role of research universities in developing countries. Retrieved 28 Jan 2019, from https://www.universityworldnews.com/post.php?story=20130811091502202.

van Eck, N. J., & Waltman, L. (2017). Citation-based clustering of publications using CitNetExplorer and VOSviewer. Scientometrics, 111(2), 1053–1070.

Van Raan, A. F. J. (2008). Bibliometric statistical properties of the 100 largest European research universities: Prevalent scaling rules in the science system. Journal of the American Society for Information Science and Technology, 59(3), 461–475. https://doi.org/10.1002/asi.20761.

Vargas-Quesada, B., Chinchilla-Rodríguez, Z., & Rodriguez, N. (2017). Identification and visualization of the intellectual structure in graphene research. Frontiers in Research Metrics and Analytics, 2, 7. https://doi.org/10.3389/frma.2017.00007.

VOSviewer - Visualizing scientific landscapes. (2020). Retrieved November 24, from https://www.vosviewer.com/.

Waltman, L., & van Eck, N. J. (2013). A smart local moving algorithm for large-scale modularity-based community detection. European Physical Journal B. https://doi.org/10.1140/epjb/e2013-40829-0.

Waltman, L., van Eck, N. J., & Noyons, E. C. M. (2010). A unified approach to mapping and clustering of bibliometric networks. Journal of Informetrics, 4(4), 629–635.

Webber, K. L. (2011). Measuring Faculty Productivity. In J. Shin, R. Toutkoushian, & U. Teichler (Eds.), University Rankings. The changing academy – the changing academic profession in international comparative perspective. Dordrecht: Springer.

World University Rankings | Times Higher Education (THE). (2020). Retrieved November 24, from https://www.timeshighereducation.com/world-university-rankings.

Zhang, T., Ramakrishnan, R., & Livny, M. (1996). BIRCH: An efficient data clustering method for very large databases. SIGMOD, 25(2), 103–114.

Zhang, J., Yu, Q., Zheng, F., Long, C., Lu, Z., & Duan, Z. (2016). Comparing keywords plus of WOS and author keywords: A case study of patient adherence research. Journal of the Association for Information Science and Technology, 67, 967–972. https://doi.org/10.1002/asi.23437.

Zhao, K., & Suzuki, E. (2015). Clustering classifiers learnt from local datasets based on cosine similarity, pp. 150–159. https://doi.org/10.1007/978-3-319-25252-0_16.

Zhigang, H., Gege, L., & Haiyan, H. (2017). Research preferences of the G20 countries: A bibliometrics and visualization analysis. Paper presented at the ISSI 2017 - 16th International Conference on Scientometrics and Informetrics, Conference Proceedings, pp. 709–720.

Cite this article

Lancho-Barrantes, B.S., Cantu-Ortiz, F.J. Quantifying the publication preferences of leading research universities. Scientometrics 126, 2269–2310 (2021). https://doi.org/10.1007/s11192-020-03790-1

DOI: https://doi.org/10.1007/s11192-020-03790-1