Abstract

This meta-analysis investigated the extent to which relative metacomprehension accuracy can be increased by interventions that aim to support learners’ use of situation-model cues as a basis for judging their text comprehension. These interventions were delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting a comprehension-test expectancy. First, the general effectiveness of situation-model-approach interventions was examined. The results revealed that, across 28 effect sizes (comprising a total of 2,236 participants), situation-model-approach interventions exerted a medium positive effect (g = 0.46) on relative metacomprehension accuracy. Second, the interventions were examined individually. The results showed that, with the exception of self-explaining, each intervention had a significant positive effect on relative metacomprehension accuracy. Yet, there was a tendency for setting a comprehension-test expectancy to be particularly effective. A further meta-analysis on comprehension in the selected studies revealed that, overall, the situation-model-approach interventions were also beneficial for directly improving comprehension, albeit the effect was small. Taken together, the findings demonstrate the utility of situation-model-approach interventions for supporting self-regulated learning from texts.


Introduction

Much of the learning students engage in is based on reading text material. For example, students complete reading assignments, review class notes, and read books and articles to write a term paper. When doing so, they must monitor and judge their comprehension of the material. Students who more accurately judge which material they have comprehended well and which they have comprehended less well, that is, students who exhibit greater relative metacomprehension accuracy, can better regulate their learning, for instance, by selectively restudying. This adaptive regulation in turn results in improved comprehension (e.g., Rawson et al. 2011; Shiu and Chen 2013; Thiede et al. 2003, 2012). However, learners typically show poor relative metacomprehension accuracy (see, e.g., Prinz et al. 2020; Thiede et al. 2009). One major reason for inaccurate metacomprehension is that learners base their judgments on inappropriate cues (e.g., the quantity of recallable details; Thiede et al. 2010). Hence, interventions that help learners use more appropriate cues (e.g., the reproducibility of key ideas) have been developed, such as delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting the right test expectancy (see, e.g., Griffin et al. 2013, 2019a; Wiley et al. 2016b). Yet, to date, it is unclear to what extent these interventions improve relative metacomprehension accuracy. Therefore, we conducted a meta-analysis to uncover and compare their effectiveness. The present meta-analysis extends previous narrative reviews in two important ways. First, clear sets of rules were predefined to search for and select studies for inclusion, and sophisticated statistical methods (e.g., weighting effect sizes by their precision) were used to aggregate the selected studies. This increases statistical power and yields a reliable estimate of the average effect size (see, e.g., Higgins and Green 2011). In addition, the meta-analysis indicates to what extent the effect is consistent across studies (see, e.g., Borenstein et al. 2009). Second, this meta-analysis has the potential to contribute to greater evidence-based practice. There have been calls for education to place increased emphasis on empirical evidence as a basis for decisions about instructional practices. Methodologically sound meta-analyses that synthesize findings to reveal effective methods are a central requirement for such evidence-based practice (see, e.g., Slavin 2008).
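To show what "weighting effect sizes by their precision" and checking consistency across studies involve, the Python sketch below works through both steps under stated assumptions. It is an illustration, not a description of this meta-analysis's actual procedure. First, it computes a bias-corrected standardized mean difference (Hedges' g) and its sampling variance for each intervention-versus-control comparison. Second, it pools the comparisons with inverse-variance weights and reports Cochran's Q and I² as consistency indices.

This passage does not say which estimator was used, so the DerSimonian-Laird random-effects model below is an assumption. The function names and all input numbers are likewise hypothetical and are not taken from the reviewed studies.

```python
from dataclasses import dataclass
from math import sqrt


def hedges_g(m_treat, sd_treat, n_treat, m_ctrl, sd_ctrl, n_ctrl):
    """Bias-corrected standardized mean difference (Hedges' g) and its sampling variance."""
    df = n_treat + n_ctrl - 2
    sd_pooled = sqrt(((n_treat - 1) * sd_treat**2 + (n_ctrl - 1) * sd_ctrl**2) / df)
    d = (m_treat - m_ctrl) / sd_pooled
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    var_d = (n_treat + n_ctrl) / (n_treat * n_ctrl) + d**2 / (2 * (n_treat + n_ctrl))
    return j * d, j**2 * var_d


@dataclass
class PooledEffect:
    estimate: float  # precision-weighted mean effect
    se: float        # standard error of the mean effect
    tau2: float      # estimated between-study variance
    q: float         # Cochran's Q (heterogeneity statistic)
    i2: float        # percentage of variability attributable to heterogeneity


def random_effects_pool(effects, variances):
    """Assumed DerSimonian-Laird random-effects pooling of k effect sizes."""
    k = len(effects)
    w = [1 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]  # random-effects weights
    estimate = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    se = sqrt(1 / sum(w_star))
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    return PooledEffect(estimate, se, tau2, q, i2)


# Hypothetical comparisons: mean (SD) of learners' gamma correlations and group size,
# intervention condition first, control condition second.
comparisons = [
    (0.45, 0.35, 40, 0.25, 0.38, 40),
    (0.38, 0.40, 25, 0.20, 0.42, 27),
    (0.52, 0.33, 60, 0.30, 0.36, 58),
]
effects, variances = zip(*(hedges_g(*c) for c in comparisons))
pooled = random_effects_pool(list(effects), list(variances))
print(f"g = {pooled.estimate:.2f} (SE = {pooled.se:.2f}), Q = {pooled.q:.2f}, I2 = {pooled.i2:.0f}%")
```

More precise studies (larger groups, smaller variances) receive larger weights. The between-study variance tau² widens the standard error when studies disagree more than sampling error alone would predict.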

Difficulties with and Importance of Relative Metacomprehension Accuracy

The earliest studies that used and established the typical relative metacomprehension-accuracy research paradigm were conducted by Maki and Berry (1984) and Glenberg and Epstein (1985). In this paradigm, participants read several texts, predict their comprehension of each text, and complete a test on each text. Relative metacomprehension accuracy is then operationalized as the intraindividual correlation between a participant’s predictions and actual test performance scores across the set of texts. Typically, Gamma or Pearson correlation coefficients are computed. These coefficients range from −1.00 to +1.00, with stronger positive correlations indicating greater accuracy (see, e.g., also Griffin et al. 2019a).
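To make this operationalization concrete, the Python sketch below computes relative metacomprehension accuracy for a single learner. It correlates the learner's predictions with their test scores across a set of texts, first as the Goodman-Kruskal gamma and then, alternatively, as a Pearson correlation. Gamma compares every pair of texts: a pair is concordant when the prediction and the score order the two texts the same way, discordant when they order them oppositely, and ignored when either is tied. The function names and the six-text data are hypothetical illustrations, not data from any study reviewed here.

```python
from itertools import combinations
from math import sqrt


def goodman_kruskal_gamma(predictions, scores):
    """Gamma = (C - D) / (C + D) over all pairs of texts; tied pairs are dropped."""
    if len(predictions) != len(scores):
        raise ValueError("need exactly one prediction and one test score per text")
    concordant = discordant = 0
    for (p1, s1), (p2, s2) in combinations(zip(predictions, scores), 2):
        product = (p1 - p2) * (s1 - s2)
        if product > 0:      # prediction and score order the two texts the same way
            concordant += 1
        elif product < 0:    # prediction and score order the two texts oppositely
            discordant += 1
        # product == 0: tie on prediction or score, ignored by gamma
    if concordant + discordant == 0:
        return None  # undefined, e.g., identical predictions for every text
    return (concordant - discordant) / (concordant + discordant)


def pearson_r(predictions, scores):
    """Pearson correlation between predictions and scores; None if either has zero variance."""
    n = len(predictions)
    mean_p = sum(predictions) / n
    mean_s = sum(scores) / n
    sxy = sum((p - mean_p) * (s - mean_s) for p, s in zip(predictions, scores))
    sxx = sum((p - mean_p) ** 2 for p in predictions)
    syy = sum((s - mean_s) ** 2 for s in scores)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)


# Hypothetical learner: six texts, predictions on a 1-7 scale,
# scores = inference questions answered correctly (out of 4) per text.
predictions = [6, 4, 5, 2, 3, 5]
scores = [3, 2, 4, 1, 2, 2]
print(round(goodman_kruskal_gamma(predictions, scores), 3))  # 0.818 (10 concordant, 1 discordant, 4 tied pairs)
print(round(pearson_r(predictions, scores), 3))              # 0.745
```

In this paradigm, one such coefficient is computed per participant. Conditions are then compared on the mean of these per-learner coefficients. A learner who gives the same prediction for every text yields no defined gamma, which is why the sketch returns None in that case.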

Research has demonstrated that learners are typically poor at accurately discriminating between more and less well-understood texts. A recent meta-analysis showed that relative metacomprehension accuracy is on average as low as +.24 (i.e., without any intervention or support; Prinz et al. 2020). This is in line with previous narrative reviews suggesting that the average level of relative metacomprehension accuracy is between +.20 and +.30 (Dunlosky and Lipko 2007; Lin and Zabrucky 1998; Maki 1998; Thiede et al. 2009; Weaver et al. 1995). However, it is important that learners accurately judge their comprehension of texts because it enables them to effectively regulate their studying by devoting time and resources to where they are needed (e.g., De Bruin et al. 2011; Schleinschok et al. 2017; Thiede et al. 2003, 2012). Consequently, to support text-based learning, it is crucial to discover ways to improve relative metacomprehension accuracy.

The Role of Cue Utilization for Relative Metacomprehension Accuracy

The cue-utilization framework provides an explanation for why relative metacomprehension accuracy is commonly poor (Griffin et al. 2009; cf. Koriat 1997; for an overview of constraints on relative metacomprehension accuracy, see Thiede et al. 2009). This framework supposes that learners do not have direct access to their cognitive states but have to infer their level of comprehension based on cues. In doing so, they can use a variety of cues, and judgment accuracy depends on how strongly the cues used are tied to the mental text representation that determines performance on the respective comprehension test. Heuristic cues, such as domain familiarity (e.g., Glenberg et al. 1987) or topic interest (e.g., Lin et al. 1996), are available whether or not a text has been read and are therefore insensitive to idiosyncrasies of a specific mental text representation. Hence, they typically yield inaccurate judgments. Representation-based cues, such as ease of processing (e.g., Maki et al. 1990) or accessibility of textual information (e.g., Baker and Dunlosky 2006), become available only during or after reading and are therefore more closely related to a particular mental text representation. Thus, they generally yield more accurate judgments.

Nonetheless, not all representation-based cues are equally valid indicators of comprehension. Their validity depends on how well the level of mental text representation the cues relate to matches the level tapped by the comprehension test. According to the construction-integration model of text comprehension (Kintsch 1998), a learner concurrently constructs a mental representation of a text on multiple levels. The surface level is constructed as the words are encoded. The textbase level is constructed as the words are parsed into propositions and as links between the propositions are formed. The situation-model level is constructed as different pieces of textual information are connected with each other and with the learner’s prior knowledge. The situation model represents a learner’s deep understanding and is what is typically referred to as text comprehension. Thus, a comprehension test should assess a learner’s situation model by requiring inferences or application of the textual information. It should not assess the learner’s surface or textbase representations by demanding recall of specific textual information; monitoring of such recall is often referred to as metamemory rather than metacomprehension (see, e.g., Griffin et al. 2013, 2019a; for a detailed discussion of designing texts and tests that assess the situation model, see Wiley et al. 2005). When a comprehension test actually assesses the situation model, metacomprehension will be less accurate if learners use cues tied to their surface or textbase representations and more accurate if they use cues that tap their situation model. This perspective is called the situation-model approach to metacomprehension (Griffin et al. 2019a; Wiley et al. 2016b). However, Thiede et al. (2010, Experiment 1; see also Jaeger and Wiley 2014, Experiment 2) gathered self-reports of cue use and found that, rather than using situation-model cues, learners tend to default to heuristic cues or to representation-based cues related to their memory of specific textual information, leading to less accurate judgments.

Potential explanations for why learners often focus on heuristic and memory-based cues are provided by the effort monitoring and regulation framework (De Bruin et al. 2020). For one, this framework suggests that monitoring and regulation can be impaired through unnecessary cognitive load imposed by the learning task or the inadequate distribution of load between the task and metacognitive processes. Such resource constraints might force learners to draw on more easily available heuristic and memory-based cues (cf. Griffin et al. 2009). In addition, the framework suggests that learners sometimes misinterpret cues, in particular, their invested mental effort. Research has indicated that, although high mental effort does not necessarily indicate that learning is ineffective, learners tend to judge their learning as low when they experience high mental effort (e.g., Baars et al. 2014, 2018, Experiment 2; Dunlosky et al. 2006; Koriat et al. 2009; Schleinschok et al. 2017; see also Baars et al. 2020; Carpenter et al. 2020). Hence, learners are confronted with the difficulty that their invested mental effort is a salient but not necessarily predictive cue of their actual learning and particularly their deeper comprehension.

Situation-Model-Approach Interventions to Improve Relative Metacomprehension Accuracy

Following the situation-model approach, interventions have been developed to increase relative metacomprehension accuracy by encouraging learners to use cues that are related to their situation model. More precisely, these situation-model-approach interventions are designed to help learners generate, attend to, or select situation-model cues (see, e.g., Griffin et al. 2013, 2019a; Wiley et al. 2016b).

Cue-Generation Interventions

Some situation-model-approach interventions support the generation of situation-model cues. They do so either by requiring encoding on the situation-model level, as is the case for self-explaining and concept mapping, or by requiring retrieval of the situation model, as is the case for delayed-generation tasks (Thiede et al. 2019).

Delayed Generation of Summaries, Keywords, and Diagrams

Research has shown that delayed-generation tasks, such as writing a summary of each text, listing keywords that capture the essence of each text, and completing a diagram for each text, can lead to enhanced relative metacomprehension accuracy. More precisely, when these tasks were completed after a short delay, that is, after all texts had been read, relative metacomprehension accuracy was higher than when they were completed immediately after reading each text or when no task was completed (delayed-summary writing: Anderson and Thiede 2008; Engelen et al. 2018, Experiment 1; Thiede and Anderson 2003; Thiede et al. 2010, Experiment 1; delayed-keywords listing: De Bruin et al. 2011; Engelen et al. 2018, Experiment 1; Shiu and Chen 2013; Thiede et al. 2003, 2005, 2012, Study 2, 2017; Waldeyer and Roelle 2020; delayed-diagram completion: Van de Pol et al. 2019, Experiment 1; Van Loon et al. 2014; see also Van de Pol et al. 2020). It is important to note that neither delayed-nongenerative tasks (i.e., thinking about a text or reading keywords) nor simply delaying predictions affected relative metacomprehension accuracy (Thiede et al. 2005). Consequently, both the generation and the delay between reading and the task play a crucial role in improving relative metacomprehension accuracy. First, a generation task encourages learners to access a representation of the text from memory prior to making a judgment. Specifically, writing a summary, listing keywords, or completing a diagram functions as a kind of self-test, providing learners with cues such as how successfully they can retrieve textual information. Second, the surface and textbase representations of a text fade quite rapidly, whereas the situation model is more stable over time (Kintsch et al. 1990). Hence, when performing a generation task immediately after reading, learners have easy access to and may primarily use their surface and textbase representations. However, the cues obtained from this experience are of limited predictive validity for later comprehension-test performance, which is largely determined by the situation model. In contrast, performing a generation task after a delay allows the surface and textbase representations to decay, forcing learners to access and use their situation model. The cues gained from this experience are more predictive of later comprehension-test performance and therefore improve relative metacomprehension accuracy (see, e.g., also Griffin et al. 2013, 2019a).

Some studies provided support for the proposed mechanisms underlying the effectiveness of delayed-generation tasks by investigating learners’ cue use (Anderson and Thiede 2008; Engelen et al. 2018, Experiment 1; Thiede et al. 2010, Experiment 1; Thiede and Anderson 2003; Van de Pol et al. 2019, Experiment 1; Van Loon et al. 2014). For example, Anderson and Thiede (2008) conducted qualitative analyses of the generated summaries. The results indicated that, when writing summaries immediately, the learners frequently based their predictions on how well they felt they could reproduce details reported in a text. In contrast, when writing summaries after a delay, the learners often used their perception of how well they could describe the gist ideas of a text to predict their comprehension. Thus, delayed-summary writing enhanced the learners’ use of situation-model cues, producing a higher accuracy level (see also Thiede and Anderson 2003; Van de Pol et al. 2019, Experiment 1; Van Loon et al. 2014). Furthermore, Thiede et al. (2010, Experiment 1) had learners report which cues they used when making their predictions following delayed-, immediate-, and no-summary writing. Most learners reported relying on how much textual information they could recall. This cue supported accurate predictions only in the delayed-summary condition. This result indicates that delayed-generation tasks render representation-based cues more valid by shifting the focus to the situation model. However, although some studies found that delayed-keywords listing is also effective in increasing relative metacomprehension accuracy for primary- and secondary-school students (De Bruin et al. 2011, Experiments 1 and 2; Thiede et al. 2017), some studies did not find benefits for these younger learners (De Bruin et al. 2011, Experiment 2; Engelen et al. 2018, Experiment 1; Pao 2014). Similarly, for delayed-summary writing, one study did not find a benefit for primary-school students (Engelen et al. 2018, Experiment 1). This suggests that the favorable impact of delayed-generation tasks may not occur consistently. Presumably, the quality of the keywords (e.g., whether they refer to central ideas or unimportant facts), summaries (e.g., whether they cover all main ideas), or diagrams (e.g., whether relations are clear), as well as learners’ cognitive capacity to generate them, plays a role in the tasks’ effectiveness (cf., e.g., De Bruin et al. 2011; Roebers et al. 2007).

Self-Explaining

Self-explaining is the activity of explaining to oneself the meaning of information presented in a text as well as how it fits together with other information and the overall theme (e.g., Chi 2000). Self-explaining during reading can increase relative metacomprehension accuracy (Griffin et al. 2008, Experiment 2, 2019b, Experiment 4; Wiley et al. 2016a; Wiley et al. 2008, Experiment 2). By considering the relevance of textual information and trying to make inferences during self-explaining, learners simultaneously construct and reflect on their situation model, generating situation-model cues. Hence, in contrast to delayed-generation tasks, which prompt learners to draw on an already constructed situation model (i.e., retrieval-based cue-generation intervention), self-explaining encourages learners to focus on their situation model during reading (i.e., encoding-based cue-generation intervention; Thiede et al. 2019). Relatedly, in contrast to delayed-generation tasks, which need to be performed after a time lag to increase access to situation-model cues, self-explaining directly involves the situation model, making a delay superfluous (see, e.g., also Griffin et al. 2019a; Wiley et al. 2016b). Griffin et al. (2008, Experiment 2) found a positive effect of self-explaining on relative metacomprehension accuracy above mere rereading and independent of individual differences in working-memory capacity and reading skill. This finding suggests that the effect of self-explaining is not tied to the alleviation of processing constraints that can impede monitoring but rather attributable to the generation of situation-model cues. However, in a study by Jaeger and Wiley (2014, Experiment 2), self-explaining failed to improve relative metacomprehension accuracy, indicating that the effect of this intervention may be of limited reliability.

Concept Mapping

Concept mapping is the activity during which learners visually depict the connections among concepts presented in a text (e.g., Weinstein and Mayer 1986). Concept mapping during reading has been found to enhance relative metacomprehension accuracy (Redford et al. 2012, Experiment 2; Thiede et al. 2010, Experiment 2). Concept mapping may be effective for two reasons. First, the activity yields an external visual representation of a learner’s understanding. Thus, when, for example, trying to comprehend later parts of a text, a learner can review earlier content on the concept map. This eases working-memory demands, freeing resources that can be devoted to monitoring. Second, similar to self-explaining, concept mapping prompts learners not only to build a situation model but also to reflect on it, generating situation-model cues (i.e., encoding-based cue-generation intervention; Thiede et al. 2019; see, e.g., also Redford et al. 2012; Wiley et al. 2016b). Supporting this assumption, Thiede et al. (2010, Experiment 2) found that learners used their perception of how many appropriate connections between concepts they could make in a concept map as a situation-model cue for predicting their comprehension, which led to an increased accuracy level. Moreover, Redford et al. (2012, Experiment 2) showed that only learners who constructed concept maps, and not learners who received completed concept maps, achieved greater relative metacomprehension accuracy than learners in a no-intervention control group. This outcome provides evidence that the act of constructing concept maps is critical because it enables learners to generate relevant cues.

Cue-Attention Intervention

A further intervention that can promote relative metacomprehension accuracy is repeated reading, which aims to facilitate attention to situation-model cues (Dunlosky and Rawson 2005; Griffin et al. 2008, Experiment 1; Rawson et al. 2000). During a first reading, learners may often lack the cognitive resources to engage in comprehension and monitoring processes concurrently and therefore primarily rely on heuristic cues. In contrast, during a second reading, many of the processes involved in comprehension do not have to be re-executed (e.g., Millis et al. 1998). This allows learners to put more resources into careful monitoring (see, e.g., also Griffin et al. 2019a). Accordingly, Griffin et al. (2008) found that the relative metacomprehension accuracy of learners with low working-memory capacity or low reading skill was limited after a single reading. However, after rereading, their accuracy increased to the level of learners with high working-memory capacity or high reading skill. The authors argued that rereading provides a second chance at monitoring, which is especially beneficial for learners whose limited cognitive capabilities prevent thorough monitoring during the first pass (for alternative but empirically less supported explanations for the rereading effect, see also Dunlosky and Rawson 2005; Rawson et al. 2000). In addition, Rawson et al. (2000) ruled out the possibility that improved relative metacomprehension accuracy after rereading is an artifact of familiarity with all texts prior to providing predictions or of increased test reliability. Furthermore, as indicated previously, when rereading was combined with a self-explanation intervention, both were independently beneficial for enhancing relative metacomprehension accuracy. This outcome supports the theoretical distinction between the two mechanisms: Interventions like self-explaining enable the generation of situation-model cues, whereas rereading enhances attention to these cues (Griffin et al. 2008, Experiment 2). It should be noted, however, that some studies did not find a benefit of rereading over reading once (Chiang et al. 2010; Margolin and Snyder 2018), and, in some studies, learners exhibited low relative metacomprehension accuracy despite rereading (Bugg and McDaniel 2012; Pao 2014; Redford et al. 2012, Experiment 1).

Cue-Selection Intervention

A common feature of the interventions described so far is that they prompt learners to engage in additional tasks as a means of increasing access to situation-model cues. However, even when learners have enhanced access to such cues, they might not use them if they do not understand that this is the level of mental text representation that will be assessed during testing. Hence, the comprehension-test-expectancy intervention is another effective approach to improving relative metacomprehension accuracy because it guides learners toward selecting situation-model cues: Learners are told that upcoming tests will assess their deeper understanding and receive corresponding sample test questions (Griffin et al. 2019b; Thiede et al. 2011; Wiley et al. 2008, 2016a). For example, in a study by Griffin et al. (2019b, Experiment 1), learners in the memory-test-expectancy group were told that their memory of specific details would be tested, and they completed sample memory test questions about practice texts. In contrast, learners in the comprehension-test-expectancy group were told that their ability to draw inferences would be tested, and they completed sample inference test questions about practice texts. Learners in the no-expectancy group were only told that they would be taking tests and did not receive any sample test questions. For the critical texts, all learners completed both memory and inference tests. The results revealed that the learners in the no-expectancy and memory-test-expectancy groups made judgments that were more predictive of their performance on the memory tests than on the inference tests (i.e., greater relative metamemory accuracy). However, the learners in the comprehension-test-expectancy group made judgments that were more closely related to their performance on the inference tests than on the memory tests (i.e., greater relative metacomprehension accuracy).

Prior research has indicated that learners typically view text comprehension in terms of memory of textual information rather than deep understanding and hence anticipate tests requiring recall (see, e.g., Wiley et al. 2005). Consequently, learners tend to rely on memory-based instead of situation-model cues to evaluate their comprehension (Jaeger and Wiley 2014, Experiment 2; Thiede et al. 2010, Experiment 1). Thus, poor relative metacomprehension accuracy results at least in part from learners’ incorrect assumptions about what comprehension means and, therefore, about what they will be tested on. The comprehension-test-expectancy intervention is useful for overriding this reading-for-memory mindset so that learners select appropriate cues and make accurate judgments (see, e.g., also Griffin et al. 2019a; Wiley et al. 2016b). The test-expectancy effect emerged even when the intervention was implemented after reading (Griffin et al. 2019b, Experiment 3). This result confirms that test expectancies influence which cues learners select when making judgments rather than learners’ access to particular cues. Moreover, when combined with self-explaining, the comprehension-test-expectancy intervention provided a unique, nonoverlapping benefit for relative metacomprehension accuracy (Griffin et al. 2019b, Experiment 4; see also Wiley et al. 2008, Experiment 2). This finding supports the theoretical distinction between interventions that promote cue generation and those that promote cue selection.

The Present Study

The reviewed literature shows that interventions aimed at increasing the use of situation-model cues usually support relative metacomprehension accuracy. However, the extent of their effectiveness is unclear. Therefore, we conducted a meta-analysis examining the overall effectiveness of situation-model-approach interventions. In doing so, we focused on the most thoroughly investigated interventions described above, namely delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting a comprehension-test expectancy. We expected to find a positive average effect across all interventions (Hypothesis 1) as well as for each intervention individually (Hypothesis 2).

The different interventions come with different costs and benefits. For example, in contrast to interventions that are executed directly during reading, such as self-explaining, delayed-generation tasks have to be performed several minutes after reading to yield positive effects. Moreover, whereas some interventions, such as rereading, can easily be implemented in instruction, others, such as concept mapping, require training before learners can carry them out in a way that leads to positive effects. In addition, some interventions, such as setting a comprehension-test expectancy, do not facilitate external diagnostic processes, whereas others, such as summary writing, produce outputs that enable instructors to evaluate learners’ understanding. Yet, the effectiveness of the interventions relative to each other is unknown. Thus, in a further step, we statistically compared their effectiveness (Research Question 1).

Although all of the situation-model-approach interventions aim at enhancing learners’ use of situation-model cues, they do so in different ways. Specifically, they can be classified into interventions promoting the generation of, attention to, or selection of situation-model cues (see, e.g., Griffin et al. 2013; Wiley et al. 2016b). This theoretical distinction has been empirically supported, as the effects of self-explaining (cue-generation intervention) did not overlap with the effects of rereading (cue-attention intervention) and setting a comprehension-test expectancy (cue-selection intervention; Griffin et al. 2008, Experiment 2; Griffin et al. 2019b, Experiment 4; Wiley et al. 2008, Experiment 2). Furthermore, Thiede et al. (2019) suggested distinguishing between encoding-based and retrieval-based cue-generation interventions. Some cue-generation interventions, namely self-explaining and concept mapping, encourage learners to encode a text in a manner that supports the construction of a situation model and thus the availability of situation-model cues. Other cue-generation interventions, namely delayed-generation tasks, prompt learners to retrieve information about their situation model after reading and hence also increase the availability of situation-model cues. As it is unclear whether the different intervention types (encoding-based cue-generation interventions, retrieval-based cue-generation interventions, the cue-attention intervention, and the cue-selection intervention) vary in their effectiveness for supporting relative metacomprehension accuracy, we explored to what extent their effects differ (Research Question 2).

Finally, although not the focus of our study, we were interested in the impact of the interventions not only on relative metacomprehension accuracy but also on comprehension (Research Question 3). To draw conclusions about the overall effectiveness of the interventions for supporting self-regulated learning from texts, both their indirect effects via metacomprehension and their direct effects on comprehension need to be considered.

Method

Literature Search and Study Selection

In a recent meta-analysis, we investigated learners’ baseline level of relative metacomprehension accuracy (Prinz et al. 2020). In the context of this recent meta-analysis, we conducted a comprehensive literature search for studies on relative metacomprehension accuracy, yielding 800 unique records. After a first screening of these records for relevance to the study aim, 214 records remained. Then, the following inclusion criteria were applied:

- The focus was learning from texts (i.e., more than one text; not learning of single sentences or vocabulary).
- Metacomprehension judgments were provided before testing (i.e., predictions).
- Relative metacomprehension accuracy was operationalized with the Gamma or Pearson correlation.
- There was a sample or condition in which participants did not receive an intervention or any other kind of manipulation (e.g., prejudgment recall of the texts, sample questions about the critical texts, monetary incentives to provide accurate judgments).
- Sufficient statistical information for effect size computation was reported.

In addition, the following exclusion criteria were set:

- The data were also reported in another record (i.e., when a study was published in a journal as well as in conference proceedings or a dissertation, only the journal article was included).
- Participants were learners with learning disabilities or second-language learners.
- The authors of the meta-analysis were not proficient in the language in which the study was reported (e.g., Japanese).
- The texts were presented in the context of a multimedia learning environment, or illustrations or animations were presented along with the texts.
- Participants had the opportunity to regulate their learning before testing.
- Paradigm-atypical reading procedures, predictions, or tests were applied (e.g., participants were allowed to highlight text, participants made their predictions 1 day after reading the texts, the texts were available during testing).

After this second screening, 66 eligible records remained (see Prinz et al. 2020, for more details). For the present meta-analysis, we used this pool to select the relevant studies. To do so, the following additional inclusion criteria were applied:

-

Learners were school or university students. Prior research has shown that, although school students’ baseline level of relative metacomprehension accuracy is usually lower than that of university students (e.g., De Bruin et al. 2011; Redford et al. 2012, Experiment 2; Thiede et al. 2012, Study 1; see also Prinz et al. 2020), interventions that can improve relative metacomprehension accuracy among university students also have the potential to do so among school students (e.g., De Bruin et al. 2011; Redford et al. 2012, Experiment 2; see also Thiede et al. 2017). Therefore, we focused on learners at all education levels.

- The texts were expository texts.

- Comprehension of each text was assessed via questions that required inferences or application of the textual information (because the interventions target this kind of knowledge, that is, the situation model).

- A control condition in which participants did not receive an intervention was compared to a condition in which participants received a situation-model-approach intervention (i.e., an intervention implemented with the goal of focusing learners on situation-model cues).

- For each particular intervention (i.e., with a similar implementation), more than one study had to meet the criteria for inclusion described so far. This led to the exclusion of the following interventions: generating drawings (Schleinschok et al. 2017; Thiede et al. 2019; but see Van de Pol et al. 2020, for an overview of the impact of drawing and mapping activities), generating explanations for others (Fukaya 2013), generating and answering questions (Bugg and McDaniel 2012), receiving advance organizers (Dunlosky et al. 2002), reading texts with analogies (Wiley et al. 2018), and completing sentence-sorting tasks (Thomas and McDaniel 2007). The following interventions remained for inclusion: delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting a comprehension-test expectancy.

- The typical implementation of the intervention was used. With regard to the delayed-generation interventions, the critical feature was that participants had to read all texts before being required to generate summaries, keywords, or diagrams. Although it was not a requirement for inclusion, it turned out that, in all studies on delayed-keywords listing identified for this meta-analysis, the participants received example keywords (a minor deviation occurred in the study by Engelen et al. 2018, Experiment 1, in which the participants not only received two example keywords but also had to list three more keywords themselves). Moreover, in all studies on delayed-diagram completion, the participants received brief training on how to complete diagrams. With respect to delayed-summary writing, the participants received no examples or training in any study except that by Engelen et al. (2018, Experiment 1), in which they were asked to complete a two-sentence summary for a short practice text. The self-explanation intervention had to require participants to self-explain during reading. Additionally, in all studies included, the participants were provided with a short example text along with potential self-explanation statements. Similarly, the requirement for the concept-mapping intervention was that participants had to construct concept maps during reading. In addition, in all studies, the participants received extensive instruction and training on constructing concept maps. The rereading intervention simply had to be implemented such that participants read a text twice before providing the prediction for that text. Finally, the critical feature with regard to the comprehension-test-expectancy intervention was that participants’ knowledge about the upcoming comprehension tests had to be manipulated via both (a) an explicit description of the nature of the test questions and (b) sample test questions about practice texts.

As indicated previously, an inclusion criterion was that the control condition received no intervention. In the studies on delayed-diagram completion, after reading the texts, the participants in the control group completed a picture-matching task, which required them to find the differences between two pictures. As this filler task is very unlikely to affect learning processes, the studies were deemed appropriate for inclusion. Note, moreover, that in the studies on test expectancy, the participants in the control group neither received information about the nature of the upcoming test questions nor sample test questions.

- Sufficient statistical information for effect size computation was available. If the required information was neither reported nor obtained through a previous author request, we asked the authors to provide it. When this was unsuccessful, the study could not be included.

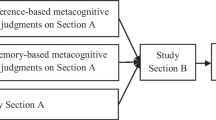

After applying these inclusion and exclusion criteria, a total of 28 effect sizes from 17 records remained. The studies were published between 2000 and 2020 and comprised a total of 2,236 participants. The flowchart in Fig. 1 provides a visual overview of the selection process. Table 1 presents the studies with corresponding effect size information for each intervention.

Coding of Studies

All studies were double coded by a trained research assistant and the first author of this meta-analysis. Discrepancies were resolved through discussion. In this way, an agreement rate of 100% was achieved.

Outcome Measures

The main outcome measure was relative metacomprehension accuracy computed as an intraindividual correlation. To remain as consistent as possible in terms of the outcome measure used, we focused on Gamma correlations and sought to avoid Pearson correlations. When a study did not provide Gamma correlations, we first checked whether the information could be identified in the reviews by Thiede et al. (2009) or Wiley et al. (2016b) before making author requests.
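To make the outcome measure concrete, the following Python sketch computes a Goodman-Kruskal gamma for a single participant from per-text judgments and per-text test scores. The function name and the six-text example are hypothetical illustrations, not data from any included study; pairs tied on either variable are skipped, as in the standard definition of gamma, although individual primary studies may have computed gamma with different software or tie handling.

```python
from itertools import combinations


def goodman_kruskal_gamma(judgments, scores):
    """Hypothetical helper: gamma between one learner's judgments and test scores.

    Each position corresponds to one text. Pairs of texts tied on either the
    judgment or the score are neither concordant nor discordant and are skipped.
    Returns None when every pair is tied (gamma is undefined).
    """
    if len(judgments) != len(scores):
        raise ValueError("judgments and scores must have the same length")
    concordant = discordant = 0
    for (j1, s1), (j2, s2) in combinations(zip(judgments, scores), 2):
        direction = (j1 - j2) * (s1 - s2)
        if direction > 0:
            concordant += 1  # both variables order the two texts the same way
        elif direction < 0:
            discordant += 1  # the variables order the two texts oppositely
    if concordant + discordant == 0:
        return None
    return (concordant - discordant) / (concordant + discordant)
```

For hypothetical judgments `[6, 2, 5, 3, 7, 4]` and scores `[4, 1, 3, 3, 5, 2]`, there are 13 concordant pairs, 1 discordant pair, and 1 tied pair, so gamma is 12/14, or about .86. Because gamma uses only the ordering of texts, it captures relative rather than absolute accuracy.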

As we were also interested in the impact of the interventions on comprehension, we additionally coded this outcome measure. Specifically, we focused on comprehension computed as the mean performance across the comprehension tests per participant. When a study reported the median rather than the mean test performance, we requested the mean from the authors.

Moderator Variables

For each study, we coded the specific intervention under investigation. Hence, the intervention was a moderator variable with seven categories: delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting a comprehension-test expectancy.

Based on this moderator variable, we constructed a second moderator variable that reflected the type of intervention and had four categories: (a) cue-generation encoding-based intervention (i.e., self-explaining and concept mapping), (b) cue-generation retrieval-based intervention (i.e., delayed-summary writing, delayed-keywords listing, and delayed-diagram completion), (c) cue-attention intervention (i.e., rereading), and (d) cue-selection intervention (i.e., setting a comprehension-test expectancy).

Effect Size Computation

We used Hedges’s g (Hedges 1981) as the effect size measure. By incorporating a correction factor, Hedges’s g provides a more conservative effect size estimate for small sample sizes compared to Cohen’s d (see, e.g., Borenstein et al. 2009). Interpretative benchmarks according to Cohen (1988) were used: g = 0.20 indicates a small effect, g = 0.50 a medium effect, and g = 0.80 a large effect.
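A minimal Python sketch of the computation, assuming the effect size is derived from group means and standard deviations (the formulas follow those presented in Borenstein et al. 2009; the function name and all numbers are hypothetical, and CMA can also derive g from other reported statistics, which this sketch does not cover):

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Hypothetical helper: Hedges's g and its variance for two independent groups.

    Group 1 is the intervention condition, group 2 the control condition.
    """
    df = n1 + n2 - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / sd_pooled  # Cohen's d
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    # Small-sample correction factor J (< 1) shrinks d toward zero.
    j = 1 - 3 / (4 * df - 1)
    return j * d, j ** 2 * var_d
```

With hypothetical mean gammas of .41 versus .14, a common SD of .45, and 40 participants per condition, d is 0.60, J is about 0.990, and g is about 0.594. The correction matters most for small samples, because J approaches 1 as df grows.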

Procedure and Analysis

All statistical analyses were conducted with the Comprehensive Meta-Analysis Program (Borenstein et al. 2013). An alpha level of .05 was used for the analyses unless stated otherwise.

Average Effect

To examine the impact of the situation-model-approach interventions, we computed the average effect and tested whether it differed significantly from zero. In doing so, each effect size was weighted by the inverse of its variance and a random-effects model was used. A random-effects model was preferred to a fixed-effect model because the selected studies are not exact replications but differ with regard to sample, design, materials, and procedure. Therefore, the true effect size likely varies across studies rather than being fixed. Specifically, the application of a random-effects model implies that the observed effect sizes are assumed to differ from the average effect due to sampling error (within-study variance) and a true-variation component (between-studies variance; see, e.g., Hunter and Schmidt 2015; Lipsey and Wilson 2001). The method of moments was used to estimate the variance between studies (see, e.g., Borenstein et al. 2009).
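The weighting scheme described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the CMA implementation: the function name is hypothetical, the between-studies variance uses the method-of-moments (DerSimonian-Laird) estimator, and the p value uses a normal approximation.

```python
import math


def random_effects_dl(effects, variances):
    """Hypothetical helper: inverse-variance random-effects pooling.

    Between-studies variance tau^2 is estimated with the method of moments
    (DerSimonian-Laird); each effect is then weighted by 1 / (v_i + tau^2).
    """
    k = len(effects)
    # Fixed-effect weights are needed first to compute Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Dispersion beyond what sampling error predicts, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to every within-study variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    mean = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    z = mean / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p under a standard normal
    return {
        "mean": mean,
        "se": se,
        "ci95": (mean - 1.96 * se, mean + 1.96 * se),
        "tau2": tau2,
        "q": q,
        "p": p,
    }
```

For two hypothetical effects of 0.2 and 0.8, each with variance 0.01, tau² is 0.17 and the pooled mean is 0.5 with a standard error of 0.3, compared with about 0.07 under a fixed-effect model. This illustrates why a random-effects model yields wider, more conservative intervals when studies differ in their true effects.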

From Experiment 1 by Thiede et al. (2010), we extracted effect size information for two subgroups (i.e., college students required and college students not required to take a remedial reading course; see also footnote b for Table 1). Similarly, Experiment 2 by De Bruin et al. (2011) provided effect size information for two subgroups (i.e., fourth graders and sixth graders). As the subgroups contributed independent information, we used subgroups within studies instead of studies as the unit of analysis (see, e.g., Borenstein et al. 2009).

Moreover, Experiment 4 by Griffin et al. (2019b) reported data on two interventions, self-explaining and setting a comprehension-test expectancy, with the same control group serving as the point of comparison. Hence, to avoid problems with multiple comparisons (i.e., the lack of independence between the effect for self-explaining versus control and the effect for test expectancy versus control), we created a composite score, namely the mean of the effect for self-explaining versus control and the effect for test expectancy versus control. The variance of this composite score was computed based on the variance of each effect size and the correlation between the two effects (which was set to r = .50 given the equal sample size in conditions; see, e.g., Borenstein et al. 2009). The same issue applied to Experiment 1 by Engelen et al. (2018), which compared delayed-summary writing and delayed-keywords listing to the same control group. Again, we calculated a composite score that was the mean of the effect for delayed-summary writing versus control and the effect for delayed-keywords listing versus control. The composite score’s variance was computed based on the variance of each effect size and the correlation between the two effects (which was set to r = .50 given the approximately equal sample size in conditions; see, e.g., Borenstein et al. 2009).
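The composite score and its variance can be written as a short Python sketch. The formula for the variance of the mean of two correlated effects follows Borenstein et al. (2009); the function name and the example values are hypothetical.

```python
import math


def composite_effect(g1, v1, g2, v2, r=0.5):
    """Hypothetical helper: mean of two effects that share a control group.

    Var = (1/4) * (V1 + V2 + 2 * r * sqrt(V1 * V2)), where r is the assumed
    correlation between the two effects (0.5 for equal group sizes).
    """
    mean = (g1 + g2) / 2
    var = 0.25 * (v1 + v2 + 2 * r * math.sqrt(v1 * v2))
    return mean, var
```

For hypothetical effects of 0.70 and 0.30, each with variance 0.04, the composite is 0.50 with variance 0.03. Treating the two effects as independent (r = 0) would give 0.02, overstating the precision of the shared-control comparison.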

Publication Bias

We used three indicators to check for publication bias. We again applied a random-effects model. First, we generated a funnel plot. The effect size was mapped on the x-axis and the standard error on the y-axis. In the absence of publication bias, the effect sizes would be distributed symmetrically around the average effect. In the presence of publication bias, some effect sizes would be missing near the bottom of the plot, where studies with small samples are located (because they generally have larger standard errors). Specifically, there would be a gap on the left side where the studies with small, nonsignificant effects would have been plotted if they had been published (see, e.g., Borenstein et al. 2009).

Second, we conducted Rosenthal’s (1979) fail-safe N test. This test indicates how many unpublished zero-effect studies would need to be retrieved and incorporated into the meta-analysis before the p value for the average effect would become nonsignificant.
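A Python sketch of the fail-safe N and of the tolerance level reported with it in the Results. The function names are hypothetical. The criterion `z_alpha = 1.645` (one-tailed alpha of .05) follows Rosenthal's original formulation and is an assumption here; the criterion CMA applied in this analysis is not stated in the text, so it is left as a parameter.

```python
def fail_safe_n(z_values, z_alpha=1.645):
    """Hypothetical helper: Rosenthal's (1979) fail-safe N.

    Number of additional zero-effect studies N such that the Stouffer
    combined Z, sum(z) / sqrt(k + N), falls to z_alpha.
    """
    k = len(z_values)
    return sum(z_values) ** 2 / z_alpha ** 2 - k


def rosenthal_tolerance(k):
    """Hypothetical helper: rule of thumb that fail-safe N should exceed 5k + 10."""
    return 5 * k + 10
```

With k = 26 effect sizes, `rosenthal_tolerance(26)` returns 140, the threshold against which the fail-safe N of 843 is compared in the Results.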

Third, we conducted a trim-and-fill analysis (Duval and Tweedie 2000a, b). This analysis first trims the effect sizes on the right side of the funnel plot that have no counterparts on the left side, causing the asymmetry, and uses the remaining effect sizes to calculate an unbiased estimate of the average effect. Then, to correct the variance of the effects, the plot is filled by reinserting the trimmed effect sizes on the right side and by adding their imputed counterparts on the left side.

Moderator Analyses

We conducted moderator analyses to compare the effectiveness of the interventions. First, we tested for significant heterogeneity in effect sizes by calculating the Q statistic. Q reflects the total amount of study-to-study variation observed and can be compared to the expected variation (under the assumption that all variation is due to within-study error) to test the null hypothesis that all studies share a common true effect size (see, e.g., Borenstein et al. 2009; Cochran 1954). Additionally, we calculated the I2 statistic. I2 reflects the degree of heterogeneity, that is, what proportion of the total observed variation can be attributed to true variation in effect sizes (see, e.g., Higgins et al. 2003; Huedo-Medina et al. 2006). I2 ranges from 0 to 100%. Higgins et al. (2003) suggest that values around 25%, 50%, and 75% may be interpreted as low, moderate, and high, respectively.
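Both heterogeneity statistics can be sketched in Python. The function names are hypothetical; Q uses fixed-effect (inverse-variance) weights, and I² = (Q − df) / Q, truncated at zero and expressed as a percentage (Higgins et al. 2003).

```python
def i_squared(q, df):
    """Hypothetical helper: percentage of observed variation due to true heterogeneity."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def heterogeneity(effects, variances):
    """Hypothetical helper: Cochran's Q (fixed-effect weights), its df, and I^2."""
    w = [1.0 / v for v in variances]
    mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - mean) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    return q, df, i_squared(q, df)
```

As a check against the Results, `i_squared(70.46, 25)` gives about 64.5, which rounds to the reported 65%, and `i_squared(72.74, 25)` gives about 65.6, which rounds to the reported 66%.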

The categorical moderator variables were analyzed with an analog to the analysis of variance adapted for meta-analysis. In doing so, mixed-effects models were applied. In mixed-effects models, the average effect within each moderator category (i.e., each particular intervention or intervention type) is calculated using a random-effects model and the difference across categories is assessed using a fixed-effect model (i.e., the categories are assumed to be exhaustive rather than sampled at random; see, e.g., Borenstein et al. 2009). In each moderator analysis, we pooled the within-category estimates of the variance of true effect sizes and applied this common estimate to all studies to obtain a more accurate estimate of the between-studies variance. As an effect size measure, we computed R2, which reflects the proportion of true variation among studies explained by a moderator. We applied the interpretative thresholds provided by Cohen (1988): R2 = .01 denotes a small effect, R2 = .09 a medium effect, and R2 = .25 a large effect. Furthermore, the Q statistic was used to determine whether any two moderator categories significantly differed from each other (see, e.g., Borenstein et al. 2009). As already stated, based on Experiment 4 by Griffin et al. (2019b), effect sizes for self-explaining and setting a comprehension-test expectancy were calculated using the same control group. Likewise, based on Experiment 1 by Engelen et al. (2018), effect sizes for delayed-summary writing and delayed-keywords listing were computed using the same control group. In the moderator analyses, the two effects from one study were treated as independent effects so that they could be included in the study pool for both interventions.
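The mixed-effects subgroup comparison with a pooled within-category tau² can be sketched in Python as follows. This assumes that the pooled option works as described in Borenstein et al. (2009), with Q, df, and the scaling constant C summed across categories and R² = 1 − tau²(within) / tau²(total); the function names are hypothetical and the sketch has not been checked against CMA output.

```python
from collections import defaultdict


def _q_df_c(effects, variances):
    """Hypothetical helper: Cochran's Q, its df, and the moment-estimator constant C."""
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - mean) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    return q, len(effects) - 1, c


def subgroup_analysis(effects, variances, groups):
    """Hypothetical helper: mixed-effects comparison of moderator categories.

    Returns the random-effects mean per category, Q_between with its df,
    and R^2 = 1 - tau^2_within / tau^2_total.
    """
    by_group = defaultdict(list)
    for y, v, g in zip(effects, variances, groups):
        by_group[g].append((y, v))

    # Pool Q, df, and C across categories to obtain one common tau^2.
    q_sum = df_sum = c_sum = 0.0
    for pairs in by_group.values():
        q, df, c = _q_df_c([y for y, _ in pairs], [v for _, v in pairs])
        q_sum, df_sum, c_sum = q_sum + q, df_sum + df, c_sum + c
    tau2_within = max(0.0, (q_sum - df_sum) / c_sum) if c_sum > 0 else 0.0

    # Random-effects mean and total weight within each category.
    means, weights = {}, {}
    for g, pairs in by_group.items():
        w = [1.0 / (v + tau2_within) for _, v in pairs]
        weights[g] = sum(w)
        means[g] = sum(wi * y for wi, (y, _) in zip(w, pairs)) / weights[g]

    # Between-category test treats the categories as fixed.
    grand = sum(weights[g] * means[g] for g in means) / sum(weights.values())
    q_between = sum(weights[g] * (means[g] - grand) ** 2 for g in means)

    # Proportion of true variation explained by the moderator, floored at zero.
    q_t, df_t, c_t = _q_df_c(effects, variances)
    tau2_total = max(0.0, (q_t - df_t) / c_t) if c_t > 0 else 0.0
    r2 = max(0.0, 1 - tau2_within / tau2_total) if tau2_total > 0 else 0.0
    return means, q_between, len(means) - 1, r2
```

With two hypothetical categories, A = [0.2, 0.4] and B = [0.8, 1.0] (all variances 0.01), the pooled tau² is 0.01, the category means are 0.3 and 0.9, and Q_between is 18 on 1 df. Pooling tau² across categories trades some flexibility for a more stable estimate when categories contain only a few studies.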

Results

The Average Effect of Situation-Model-Approach Interventions on Relative Metacomprehension Accuracy

We calculated the weighted mean correlation in the control and intervention conditions, respectively. There was a small average correlation in the control condition, r = .14, 95% CI [.09, .20], k = 26, and a medium-to-large average correlation in the intervention condition, r = .41, 95% CI [.33, .48], k = 26. Accordingly, the average effect across all situation-model-approach interventions on relative metacomprehension accuracy was significantly positive and of medium size, g = 0.46, 95% CI [0.33, 0.60], p < .001, k = 26 (as described in the Procedure and Analysis section, there were 26 effect sizes for this analysis because the two effects from Griffin et al. 2019b, Experiment 4, and Engelen et al. 2018, Experiment 1, were combined into one composite score, respectively; see Table 1 for the effect size information concerning the individual studies). Hence, in accordance with Hypothesis 1, situation-model-approach interventions are generally effective in improving relative metacomprehension accuracy (see Footnote 3).

The set of effect sizes was heterogeneous, Q(25) = 70.46, p < .001, I2 = 65%. The I2 statistic indicated that 65% of the observed variation could be attributed to true variation among studies, which reflects moderate-to-high heterogeneity (Higgins et al. 2003). Thus, we conducted a moderator analysis to compare the effectiveness of the particular interventions.

Publication Bias

First, the funnel plot in Fig. 2 shows that the spread of effect sizes about the average effect is not perfectly symmetrical. Specifically, toward the bottom of the plot, there is a gap on the left side, suggesting that some studies with small, nonsignificant effects are missing. This indicates that publication bias might be present. Second, Rosenthal’s (1979) fail-safe N test revealed that 843 studies with an effect size of zero would be needed to render the average effect nonsignificant. According to a guideline by Rosenthal (1991), because the fail-safe N exceeds 5k + 10 (5 × 26 + 10 = 140 < 843), the average effect seems to be robust to potential publication bias. Third, the trim-and-fill analysis imputed four studies. However, the resulting estimate of the unbiased average effect was g = 0.41, 95% CI [0.28, 0.54], which represents only a small change from the observed average effect (g = 0.46). In conclusion, the impact of publication bias in the present study seems to be negligible.

Comparisons of the Interventions with Regard to Relative Metacomprehension Accuracy

Interventions

The moderator analysis with intervention revealed a significant positive average effect of small-to-medium size for rereading and delayed-keywords listing, of medium size for delayed-summary writing, of medium-to-large size for delayed-diagram completion, and of large size for setting a comprehension-test expectancy. In addition, there was a marginally significant positive average effect of medium size for concept mapping (p = .097). The average effect for self-explaining was not significant (see Table 2). Hence, apart from self-explaining, the results are in line with Hypothesis 2 in that each particular intervention supports relative metacomprehension accuracy.

The moderator analysis was not significant, Q(6) = 5.71, p = .456, R2 = .03. Pairwise comparisons with Bonferroni correction of the alpha level (corrected alpha = .002) showed that none of the differences between the interventions reached the set level of significance (see Table 3). However, the average effect for setting a comprehension-test expectancy tended to be larger than the average effects for delayed-keywords listing and rereading (ps < .05). Therefore, with respect to Research Question 1, the results indicate that the interventions do not substantially differ with regard to their impact on relative metacomprehension accuracy. Yet, there is a tendency for the test-expectancy intervention to be particularly effective, especially compared with delayed-keywords listing and rereading.
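The corrected alpha levels reported here and in the following sections appear to be .05 divided by the number of pairwise comparisons among the moderator categories. A small Python sketch of that arithmetic (the function name is hypothetical):

```python
from math import comb


def bonferroni_pairwise_alpha(n_categories, alpha=0.05):
    """Hypothetical helper: number of pairwise comparisons and the per-test alpha."""
    n_pairs = comb(n_categories, 2)
    return n_pairs, alpha / n_pairs
```

Seven interventions yield 21 pairwise comparisons and a per-test alpha of about .0024 (reported as .002); four intervention types yield 6 comparisons and about .0083 (reported as .008).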

Concerning delayed-summary writing, delayed-keywords listing, self-explaining, and rereading, the test of heterogeneity was significant (see Table 2). For delayed-keywords listing, there was some variation with regard to learners’ education level. However, an exploratory moderator analysis revealed that, although the average effect was only marginally significant for school students, g = 0.27, 95% CI [− 0.03, 0.57], k = 5, it did not significantly differ from the average effect for university students, g = 0.58, 95% CI [0.03, 1.14], k = 2; Q(1) = 0.94, p = .333, R2 < .01. We refrained from conducting further moderator analyses within each intervention because there were only a few studies for each, causing too little variability in the data (i.e., few cases per moderator category; e.g., there was only one study with school students for delayed-summary writing and none for self-explaining and rereading).

Intervention Types

The moderator analysis with intervention type revealed a significant positive average effect of small-to-medium size for the cue-generation encoding-based interventions and the cue-attention intervention, of medium size for the cue-generation retrieval-based interventions, and of large size for the cue-selection intervention (see Table 4).

The moderator analysis was also not significant, Q(3) = 4.07, p = .254, R2 = .10. Pairwise comparisons with Bonferroni correction of the alpha level (corrected alpha = .008) showed that none of the differences between the intervention types reached the set level of significance (see Table 5). However, consistent with the analysis of the seven specific interventions, the average effect for the cue-selection intervention tended to be larger than the average effect for the cue-attention intervention (p = .029). Hence, concerning Research Question 2, it can be concluded that the different intervention types do not substantially differ in their effectiveness for supporting relative metacomprehension accuracy. Yet, the cue-selection intervention of setting the right test expectancy tends to be more effective than the cue-attention intervention, that is, rereading.

Apart from the cue-selection intervention, the test of heterogeneity for the other intervention types was significant (see Table 4). For the cue-generation retrieval-based interventions, there was some variation regarding learners’ education level. An exploratory moderator analysis revealed that, although both average effects were significant, the one for school students, g = 0.31, 95% CI [0.10, 0.52], k = 8, was marginally significantly smaller than the one for university students, g = 0.62, 95% CI [0.39, 0.86], k = 7; Q(1) = 3.77, p = .052, R2 = .27. We did not run further moderator analyses within each intervention type because there were only a few studies for each, restricting the variability in the data (i.e., few cases per moderator category).

The Average Effect of Situation-Model-Approach Interventions on Comprehension

The average effect across the situation-model-approach interventions on comprehension was significantly positive but small, g = 0.13, 95% CI [0.002, 0.26], p = .046, k = 26 (see Table 6 for the effect size information concerning the individual studies). Thus, with respect to Research Question 3, the results suggest that situation-model-approach interventions generally improve not only metacomprehension but also comprehension, although to a lesser extent (see Footnote 4).

The set of effect sizes was heterogeneous with moderate-to-high heterogeneity, Q(25) = 72.74, p < .001, I2 = 66% (Higgins et al. 2003). Hence, we next compared the effectiveness of the particular interventions in a moderator analysis.

Comparisons of the Interventions with Regard to Comprehension

Interventions

The moderator analysis with intervention showed that there was a significant medium-to-large positive average effect for concept mapping and a marginally significant small-to-medium positive average effect for the test-expectancy intervention (p = .054). None of the other interventions yielded a significant average effect (see Table 7).

The moderator analysis was significant, Q(6) = 15.57, p = .016, R2 = .37. Pairwise comparisons with Bonferroni correction of the alpha level (corrected alpha = .002) showed that the average effect for delayed-summary writing was significantly smaller than the average effects for concept mapping and setting a comprehension-test expectancy and tended to be smaller than the average effect for self-explaining as well (p = .015). Moreover, the average effect for delayed-keywords listing tended to be smaller than the average effects for concept mapping and setting a comprehension-test expectancy (p < .05; see Table 8). Hence, with respect to Research Question 3, the results further indicate that there is a tendency for concept mapping and the test-expectancy intervention to be particularly effective, whereas delayed-summary writing and delayed-keywords listing are rather ineffective for supporting comprehension.

Only for rereading was the test of heterogeneity significant (see Table 7). We refrained from conducting further moderator analyses within this intervention, however, because of too little variability in the data.

Intervention Types

The moderator analysis with intervention type revealed that there was a significant medium positive average effect for the cue-generation encoding-based interventions and a marginally significant small-to-medium positive average effect for the cue-selection intervention (p = .054). The average effects for the other two intervention types were not significant (see Table 9).

The moderator analysis was significant, Q(3) = 12.03, p = .007, R2 = .37. In line with the analysis of the seven specific interventions, pairwise comparisons with Bonferroni correction of the alpha level (corrected alpha = .008) showed that the average effect for the cue-generation retrieval-based interventions was significantly smaller than the average effect for the cue-generation encoding-based interventions and tended to be smaller than the average effect for the cue-selection intervention as well (p = .017; see Table 10). Thus, concerning Research Question 3, these results reinforce that the cue-generation retrieval-based interventions do not support comprehension, whereas the cue-generation encoding-based interventions and the cue-selection intervention of setting the right test expectancy are beneficial in this regard.

The test of heterogeneity was significant for the cue-generation retrieval-based interventions and the cue-attention intervention (see Table 9). Concerning the cue-generation retrieval-based interventions, we ran an exploratory moderator analysis with learners’ education level. The average effects for school students, g = − 0.03, 95% CI [− 0.19, 0.14], k = 8, and university students, g = − 0.05, 95% CI [− 0.24, 0.14], k = 7, were not significant and did not significantly differ from each other, Q(1) = 0.03, p = .874, R2 < .01. We did not run further moderator analyses within each intervention type because there were only a few studies for each, restricting the variability in the data (i.e., few cases per moderator category).

Discussion

The present meta-analysis investigated to what extent relative metacomprehension accuracy can be enhanced through interventions that promote learners’ use of situation-model cues for judging their comprehension. In particular, these interventions were delayed-summary writing, delayed-keywords listing, delayed-diagram completion, self-explaining, concept mapping, rereading, and setting a comprehension-test expectancy.

Effectiveness of Situation-Model-Approach Interventions

By showing that situation-model-approach interventions generally improve relative metacomprehension accuracy, the results of this meta-analysis reinforce previous narrative reviews (e.g., Griffin et al. 2013, 2019a; Wiley et al. 2016b). In addition, the results reveal that the improvement is substantial (i.e., a medium effect). Therefore, the interventions should be used in instruction to support the accuracy of learners’ judgments about their comprehension and thus their self-regulated learning from texts.

With the exception of self-explaining, each of the investigated situation-model-approach interventions reliably enhances relative metacomprehension accuracy. Yet, the test-expectancy intervention seems to be particularly effective, especially in comparison to delayed-keywords listing and rereading. Accordingly, a further analysis on the intervention types showed that the cue-selection intervention of setting the appropriate test expectancy tends to be more beneficial than the cue-attention intervention of rereading. Hence, this meta-analysis extends prior research not only by revealing the overall degree of effectiveness of the interventions but also by indicating which intervention is comparatively more effective (i.e., test expectancy), less effective (i.e., rereading), or unreliable (i.e., self-explaining).

The average effect for self-explaining was heterogeneous and not significant. Of the two studies on self-explaining in the present meta-analysis, the effect was significantly positive in the study by Griffin et al. (2019b, Experiment 4) but not significant and even negative in the study by Jaeger and Wiley (2014, Experiment 2; see Table 1). As further moderator analyses within this intervention were not feasible with two studies, we compared the studies on variables that could potentially have caused the discrepancy in effects (see Prinz et al. 2020). However, the studies were very similar with regard to sample, design, materials, and procedure, so differences in these aspects are unlikely to explain the variation among effects. In the study by Jaeger and Wiley (2014, Experiment 2), analyses of self-reported cue use showed that self-explaining did not increase learners’ use of situation-model cues but actually tended to reduce the likelihood of using such cues compared to when learners did not engage in self-explaining. A possible reason for this outcome is that being required to read and simultaneously self-explain cognitively overwhelmed the learners, preventing them from generating or at least monitoring situation-model cues. Indeed, previous research on problem-solving tasks revealed a similar outcome: In contrast to other generation activities, such as fully or partially generating the solution procedure by oneself, self-explaining the solution steps did not improve monitoring accuracy, presumably because it was too cognitively demanding and thus led to low-quality explanations that provided invalid cues (e.g., Baars et al. 2018; see also Van Gog et al. 2020). Hence, the benefit of self-explaining for relative metacomprehension accuracy might depend on learners’ available cognitive resources.
In fact, in studies that applied a combined self-explanation and rereading intervention, the effect on relative metacomprehension accuracy was consistently positive (Griffin et al. 2008, Experiment 2; Wiley et al. 2008, Experiment 2). Self-explaining during rereading might ensure that learners can allocate ample resources to generating and monitoring situation-model cues. Therefore, an important direction for future research is to explore what condition, such as higher working-memory capacity, or what support, such as rereading instructions, is needed for the self-explanation intervention to reliably improve relative metacomprehension accuracy.

There was also significant heterogeneity among the effects for delayed-summary writing, delayed-keywords listing, and rereading. As there were rather few studies on each particular intervention, with little variability in methodological implementation, we again could not conduct moderator analyses to explain the variability in effects. Hence, more studies on these interventions including the examination of potential moderating influences are needed. For example, it seems likely that, as in the case of self-explaining, the effect of rereading depends on individual differences in cognitive capacity and reading skill. In fact, Griffin et al. (2008) found that rereading particularly benefited learners with low working-memory capacity or low reading skill (see also Ikeda and Kitagami 2013). Furthermore, the effects of delayed-summary writing and delayed-keywords listing might depend on the quality of the resulting products and learners’ cognitive capacity to produce them. For instance, if keywords do not tap central ideas from a text but rather unimportant facts, their benefit for yielding situation-model cues might be limited. This assumption is indirectly supported by the finding that, for the cue-generation retrieval-based interventions taken together (for these interventions jointly, there was sufficient variation regarding learners’ education level to conduct a moderator analysis), the average effect tended to be smaller for school students than for university students. Thus, when applying delayed-generation tasks to promote relative metacomprehension accuracy in instruction, learners with lower cognitive capacity, especially younger learners, need to be supported in generating sophisticated products, for example, by receiving extensive training first.

Beyond the effects on relative metacomprehension accuracy, the effects on comprehension as well as practical considerations play important roles in determining the interventions’ utility for supporting self-regulated learning from texts. Concerning comprehension, our results showed that, although the effect is comparatively small, situation-model-approach interventions are generally beneficial. Moreover, there is a tendency for concept mapping and setting a comprehension-test expectancy to be particularly effective, especially compared with delayed-generation tasks. Concerning practical utility, the test-expectancy intervention has the advantages that it does not require training, a delay after reading, or additional instruction time beyond reading, and it has the potential to improve learners’ metacognitive knowledge. In fact, the last two aspects are unique benefits of the test-expectancy intervention. Whereas all other examined interventions require learners to engage in an additional task that has the by-product of improving relative metacomprehension accuracy, the test-expectancy intervention helps learners actively apply the appropriate learning goal to adjust their metacognitive processes as they monitor and judge their comprehension. An advantage of this approach is that learners can internalize this goal and adopt it in future learning episodes (cf. Griffin et al. 2019b). In contrast, rereading, for example, is easy to implement because it does not require training or a delay, but additional instruction time has to be spent on reading and no general metacognitive knowledge can be acquired. Given the overall picture, setting a comprehension-test expectancy appears to be a particularly beneficial intervention for enhancing self-regulated learning from texts.

Consequently, it seems important to shed more light on how the test-expectancy intervention exerts its effects. More generally, the mechanisms underlying the effectiveness of situation-model-approach interventions should be investigated more closely. For instance, at a more fine-grained level, the interventions might improve cue use by reducing cognitive load. This proposition is in line with the effort monitoring and regulation framework (De Bruin et al. 2020), which suggests that, for effective self-regulated learning, cognitive load needs to be optimized during and after the primary task. Cue-generation and cue-attention interventions might optimize cognitive load during learning, whereas cue-selection interventions might optimize cognitive load at the time of judgment. More precisely, cue-generation and cue-attention interventions might help learners devote cognitive resources to monitoring. For example, during rereading, the reduced need for comprehension-related processing (e.g., Millis et al. 1998) might free cognitive capacity for monitoring. Generating summaries, keywords, diagrams, or concept maps might promote monitoring by enabling cognitive offloading, as written text and graphics provide an external representation of a learner’s understanding (cf. Nückles et al. 2020). Concept mapping, for instance, yields a visual representation of a learner’s situation model that can be reinspected during learning, which might relieve working memory and leave more capacity for monitoring. Moreover, concept mapping, self-explaining, and delayed-generation tasks simply provide additional opportunities to engage with the learning material at the situation-model level and hence to monitor the situation model. In contrast, the cue-selection intervention of setting the appropriate test expectancy might alleviate cognitive load at the time of judgment by helping learners focus on relevant cues. 
Learners may often be overwhelmed by the many available cues and hence rely on heuristic and memory-based cues because these cues are more salient and less effortful to use (cf. Griffin et al. 2009). When learners are informed about what comprehension entails and which cues are therefore useful for evaluating their comprehension, however, they might be better able to disregard heuristic and memory-based cues and to concentrate on situation-model cues. In conclusion, our results are consistent with the view that optimizing cognitive load both during and after the primary task of comprehending a text helps learners judge their understanding more accurately. However, reducing the load after the primary task, that is, at the time of judgment, seems particularly effective.

The effort monitoring and regulation framework (De Bruin et al. 2020) also proposes that self-regulated learning can be impaired when learners misinterpret their invested mental effort. That is, although high mental effort is not necessarily indicative of poor learning, learners tend to judge their learning as low when experiencing high mental effort (e.g., Baars et al. 2014, 2018, Experiment 2; Dunlosky et al. 2006; Koriat et al. 2009; Schleinschok et al. 2017; see also Baars et al. 2020; Carpenter et al. 2020). Hence, because learners’ invested mental effort is a salient but not necessarily predictive cue of their comprehension, they need support in correctly interpreting or disregarding this cue. Situation-model-approach interventions may decrease learners’ reliance on effort by providing more valid cues. This assumption is supported by Schleinschok et al. (2017), who investigated the impact of generating a drawing after reading each passage on relative metacomprehension accuracy. Their results showed that, in general, the higher the learners’ cognitive load during the learning task, the lower they judged their comprehension to be (cue utilization). However, compared with learners’ judgments, their cognitive load had limited predictive validity for their actual comprehension (cue diagnosticity). Thus, cognitive load was used as a cue for judging comprehension but was hardly diagnostic of later comprehension performance. Yet, the learners in the drawing condition also used the quality of their drawings as a cue (cue utilization), which was highly predictive of their actual comprehension (cue diagnosticity), presumably contributing to their higher level of accuracy (Experiment 1). Similarly, other cue-generation interventions might provide situation-model cues that learners can use in place of or in addition to their experienced mental effort, thereby enhancing relative metacomprehension accuracy. 
Moreover, the cue-selection intervention of setting the right test expectancy might alert learners to valid cues, thereby decreasing their reliance on mental effort.
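To make the distinction between cue utilization and cue diagnosticity concrete, the following Python sketch computes both as Pearson correlations for a single learner across several texts: utilization as the correlation between a cue (here, experienced cognitive load) and the learner's comprehension judgments, and diagnosticity as the correlation between the same cue and test performance. The helper names and the four-text data set are hypothetical illustrations, not Schleinschok et al.'s (2017) data or analysis procedure, and the choice of Pearson r (rather than, e.g., gamma or a multilevel model) is an assumption.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def cue_profile(cue, judgments, performance):
    """Hypothetical helper: how strongly a cue drives judgments (utilization)
    and how well it predicts actual comprehension (diagnosticity)."""
    return {
        "utilization": pearson(cue, judgments),
        "diagnosticity": pearson(cue, performance),
    }


# Hypothetical learner, four texts: higher experienced load -> lower judgments,
# but load is unrelated to test performance.
load = [2, 4, 6, 8]
judgments = [8, 6, 4, 2]
performance = [3, 4, 4, 3]

profile = cue_profile(load, judgments, performance)
print(profile)  # {'utilization': -1.0, 'diagnosticity': 0.0}
```

In this toy case the cue is strongly used (|r| = 1) but has no diagnostic value (r = 0), the pattern described for cognitive load; a valid situation-model cue, such as drawing quality, would ideally show high values on both.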

Limitations and Future Research

There are a few limitations that need to be considered when interpreting the present results, and they point to some important directions for future research. First, this meta-analysis reveals only that the level of accuracy changes as a function of situation-model-approach interventions; it leaves open the question of how this accuracy comes about. More concretely, although some studies investigated the (meta)cognitive processes underlying learners’ judgments, such as their cue use (e.g., Thiede et al. 2010, Experiment 1), the precise mechanisms are not yet clear, and alternative or additional processes, such as cognitive load, may play a role. Future research should therefore shed light on the proposed relations between cognitive load, cue use, and judgment accuracy, for example, by using self-reports of cue use or think-aloud methodology during reading and judgment making. Based on this understanding, further interventions might be designed to optimize cognitive load and help learners focus on valid cues. Relatedly, different intervention types seem to support relative metacomprehension accuracy via different pathways, namely via the generation of, attention to, or selection of situation-model cues (Griffin et al. 2008, Experiment 2; Griffin et al. 2019b, Experiment 4; Wiley et al. 2008, Experiment 2). Additionally, encoding-based and retrieval-based cue-generation interventions can be distinguished (Thiede et al. 2019). However, although the retrieval-based cue-generation interventions appear to be less beneficial for supporting comprehension than the encoding-based ones, there were no differences in relative metacomprehension accuracy between these two intervention types. Therefore, for relative metacomprehension accuracy, it does not seem to matter when or through which cognitive processes situation-model cues are generated, but rather that they are generated so that learners can use them to judge their comprehension. 
Yet, to more reliably reveal the impact of the kind of processing, that is, encoding versus retrieval, future studies should examine one particular intervention and only vary the processing task. For example, it could be varied whether learners draw directly during or after reading (cf. Thiede et al. 2019).

Second, there were only a few studies on some of the interventions under investigation, in particular, delayed-diagram completion, self-explaining, and concept mapping. Therefore, the conclusions regarding these interventions should be treated with caution, and further studies need to be conducted. Furthermore, it has to be emphasized that the studies on a given intervention are not exact replications but differ in specific aspects of sample, design, materials, and procedure. However, we set several inclusion criteria; for instance, only studies that examined expository texts and used inference or application questions were eligible. In addition, the studies were required to use a highly similar implementation of the respective intervention and a control condition in which the participants did not receive an intervention. In this way, we aimed to keep a clear focus and to obtain valid results concerning the investigated interventions. Yet, a larger empirical base would increase confidence in the results and allow for a more comprehensive examination of potential moderators, such as individual-difference variables (e.g., working-memory capacity, reading skill) or methodological aspects (e.g., text length, sample questions). In fact, the heterogeneity statistics for some of the interventions and intervention types indicated true between-studies variance that moderators might explain. Moreover, it should be noted that further interventions to promote the use of situation-model cues have been explored. For instance, the generation of drawings (Schleinschok et al. 2017; Thiede et al. 2019) or explanations (Fukaya 2013) has also proved successful for enhancing relative metacomprehension accuracy. However, because fewer than two studies on these interventions met the inclusion criteria, they could not be investigated here. Therefore, future research should replicate the studies on these interventions as well.
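As a reminder of what heterogeneity statistics quantify, the following Python sketch computes Cochran's Q, I², and the DerSimonian-Laird estimate of the between-studies variance τ² from a set of effect sizes and their sampling variances, using inverse-variance weights. It is a textbook version offered for illustration; that it matches the exact estimator used in this meta-analysis is an assumption, and the three effect sizes are hypothetical.

```python
def heterogeneity(effects, variances):
    """Cochran's Q, I^2 (in percent), and DerSimonian-Laird tau^2
    for effect sizes with known sampling variances."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two effect sizes with variances")
    weights = [1.0 / v for v in variances]  # inverse-variance (precision) weights
    sum_w = sum(weights)
    # Fixed-effect (precision-weighted) mean effect
    pooled = sum(w * g for w, g in zip(weights, effects)) / sum_w
    q = sum(w * (g - pooled) ** 2 for w, g in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variability attributable to true between-studies variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # DerSimonian-Laird moment estimator of tau^2, truncated at zero
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c)
    return {"Q": q, "df": df, "I2": i2, "tau2": tau2}


# Hypothetical example: three studies of one intervention (Hedges' g, variance)
result = heterogeneity([0.2, 0.5, 0.8], [0.04, 0.04, 0.04])
print({k: round(v, 3) for k, v in result.items()})
# {'Q': 4.5, 'df': 2, 'I2': 55.556, 'tau2': 0.05}
```

In this hypothetical example, roughly 56% of the observed variability would be attributed to true between-studies differences, which is the kind of residual variance that moderator analyses (e.g., by education level) attempt to explain.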

Third, the present study focused on metacomprehension accuracy in terms of relative accuracy. Absolute accuracy and bias are further measures of metacomprehension accuracy that refer to the difference between judgments and performance. Specifically, absolute accuracy is the absolute difference, and bias is the signed difference, reflecting over- or underconfidence. These two measures are also highly important in the context of self-regulated learning (see, e.g., De Bruin and Van Gog 2012; Dunlosky and Thiede 2013). For example, overconfidence can lead to the premature termination of studying and thus impair learning (e.g., Dunlosky and Rawson 2012). Compared with relative accuracy, absolute accuracy and bias assess different aspects of metacomprehension accuracy, have different strengths and limitations, and are often affected by different factors (e.g., Maki et al. 2005; Schraw 2009). However, the impact of situation-model-approach interventions on absolute accuracy or bias has so far been investigated comparatively seldom. Consequently, future research should explore whether the interventions improve all of these measures, which might support self-regulated learning most effectively. Beyond other measures, other learning tasks might also be considered. This study focused specifically on self-regulated learning from texts. A particular feature of learning in this context is the goal that learners build and monitor their situation model. Hence, it is an open question to what extent the present findings transfer to other tasks, such as vocabulary learning or problem solving, which aim at memorization or the acquisition of procedures. Although the situation-model approach gave rise to the interventions under investigation and helps explain their effects, other theoretical frameworks and interventions might be more useful for other learning tasks. Thus, future research should assess which interventions effectively support self-regulated learning involving other tasks.
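The three accuracy measures can be illustrated with a short Python sketch for one learner who judged, and was then tested on, several texts. Relative accuracy is computed here as a Goodman-Kruskal gamma correlation between judgments and performance, a coefficient commonly used in metacomprehension research; which coefficient each included study used is not stated here, so treat gamma as an assumption. Absolute accuracy and bias follow the definitions given in the text, with judgments and performance assumed to lie on the same percentage scale. The function names and data are hypothetical.

```python
from itertools import combinations


def gamma(judgments, performance):
    """Goodman-Kruskal gamma: (concordant - discordant) / (concordant + discordant),
    ignoring pairs tied on either variable."""
    concordant = discordant = 0
    for i, j in combinations(range(len(judgments)), 2):
        product = (judgments[i] - judgments[j]) * (performance[i] - performance[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    if concordant + discordant == 0:
        return None  # undefined, e.g., when all judgments are identical
    return (concordant - discordant) / (concordant + discordant)


def absolute_accuracy(judgments, performance):
    """Mean unsigned difference; 0 means perfect calibration."""
    return sum(abs(j - p) for j, p in zip(judgments, performance)) / len(judgments)


def bias(judgments, performance):
    """Mean signed difference; > 0 overconfidence, < 0 underconfidence."""
    return sum(j - p for j, p in zip(judgments, performance)) / len(judgments)


# Hypothetical learner: predicted vs. actual test scores (%) for four texts
judged = [80, 60, 40, 90]
scored = [60, 70, 20, 80]

print(round(gamma(judged, scored), 3))    # 0.667
print(absolute_accuracy(judged, scored))  # 15.0
print(bias(judged, scored))               # 10.0
```

In this toy case the learner discriminates fairly well between better- and less-understood texts (gamma of about .67) yet overestimates performance by 10 percentage points on average, illustrating how relative accuracy, absolute accuracy, and bias can diverge.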

Fourth, the interventions were less beneficial for improving comprehension than metacomprehension. This result indicates that conditions that lead to more accurate judgments and hence benefit learning in the long run do not necessarily benefit learning in the short run. It should be noted, however, that most studies specifically aimed at promoting metacomprehension, whereas it was not their goal to directly improve comprehension. For example, Jaeger and Wiley (2014, Experiment 2) remarked that their study on self-explaining did not involve practice or feedback, which has been central to self-explanation interventions designed to enhance comprehension (e.g., McNamara 2004), because their primary intention was to improve metacomprehension. In fact, for situation-model-approach interventions to increase relative metacomprehension accuracy, it is not necessary that learners construct a high-quality situation model. Instead, the critical feature is that learners use cues related to their situation model even if these cues indicate that their comprehension is poor. Nonetheless, in the ideal case, an intervention should improve all aspects of self-regulated learning, namely comprehension, metacomprehension accuracy, and regulation. Hence, further research should seek to uncover interventions meeting this requirement.

Conclusion