Abstract

An exponential increase in the availability of information over the last two decades has asked for novel theoretical frameworks to examine how students optimally learn under these new learning conditions, given the limitations of human processing ability. In this special issue and in the current editorial introduction, we argue that such a novel theoretical framework should integrate (aspects of) cognitive load theory and self-regulated learning theory. We describe the effort monitoring and regulation (EMR) framework, which outlines how monitoring and regulation of effort are neglected but essential aspects of self-regulated learning. Moreover, the EMR framework emphasizes the importance of optimizing cognitive load during self-regulated learning by reducing the unnecessary load on the primary task or distributing load optimally between the primary learning task and metacognitive aspects of the learning task. Three directions for future research that derive from the EMR framework and that are discussed in this editorial introduction are: (1) How do students monitor effort? (2) How do students regulate effort? and (3) How do we optimize cognitive load during self-regulated learning tasks (during and after the primary task)? Finally, the contributions to the current special issue are introduced.

Introduction

Over the last two decades, education has been confronted with dramatic increases in the availability of information and in the technological possibilities for accessing and learning from this information (Jungwirth 2002). Even though acquiring declarative knowledge and domain-specific skills remains at the heart of contemporary education, these changes require an additional emphasis on supporting learners to effectively manage the information processing load. Specifically, these changes entail that learners have to devote substantial mental effort not only to processing the to-be-learned content (i.e., object-level processing) but also to self-regulating their learning processes (i.e., meta-level processing). The necessity to adequately distribute the available cognitive resources between these two types of processing is in itself a challenge to learners. Learners should, for example, know how to adapt their learning strategies when learning from hypermedia versus a traditional textbook, or when learning in a face-to-face versus a MOOC environment (Wong et al. 2019). Moreover, they should learn how to manage distractions and avoid multi-tasking (Wiradhany et al. 2019). However, the requirements of effective management of the information processing load go even further. For instance, in monitoring their learning, learners have to deal with the problem that the mental effort they invested in object-level processing is a highly salient but not necessarily predictive cue of their actual learning. That is, the relation between effort and learning is not unidirectional. Putting in high effort does not necessarily mean learning is effective, and experiencing effort as too high does not always mean learning is ineffective.
Accurate self-monitoring of effort is essential, though, as it affects students’ regulation of effort and interacts with their learning goals: If learners set low learning goals, they may strive towards putting in minimal effort and may not be inclined to self-monitor object-level task performance accurately. In summary, most learning environments ask for effective monitoring and regulation of effort by the individual learner and require effective instructional design to optimize cognitive load when engaging with learning tasks on the object level and meta level.

Building on De Bruin and Van Merriënboer (2017), we argue that these challenges ask for integration of cognitive load theory (CLT) and self-regulated learning theory (SRL). First, to enhance our understanding of how learners self-monitor learning, taking a cognitive load perspective to examine how learners incorporate mental effort into their monitoring processes would be highly informative. Second, to enhance our understanding of the design of learning tasks and learning environments in relation to the cognitive load experienced by learners, a self-regulation perspective that focuses on how learners regulate mental effort would be valuable. Third, to enhance the building of declarative knowledge and domain-specific skills, an integrated cognitive load and self-regulated learning perspective that investigates the cognitive demands of self-regulating learning and how to optimize cognitive load during self-regulated learning could be highly beneficial.

In this editorial, we introduce and explain the effort monitoring and regulation (EMR) framework, which aims to provide the basis for future research in this field. Moreover, we sketch three research questions that emanate from the framework and that provide the basis for the contributions to this special issue. Note that integrated CLT-SRL research is in its embryonic stage, with little empirical data to date. The goal of this special issue is to review existing research and research paradigms that appear particularly promising for initiating CLT-SRL research related to monitoring and regulation of effort.

Effort Monitoring and Regulation Framework

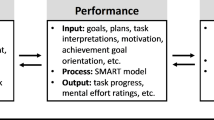

Figure 1 depicts the EMR framework, including the three research questions. The framework builds on the Nelson and Narens (1990) model, showing a meta level at which metacognitive monitoring and regulation take place, which, in turn, is based on input from the object level, at which learners engage with a learning task. Cognitive load is positioned in the middle, as it has direct links with both levels (meta and object) and both processes (monitoring and control) in the framework. It should be noted that, beyond effort cues, various other cues (e.g., fluency, familiarity) will affect monitoring, regulation, and learning. Moreover, interactions with individual differences, task characteristics, and learning context need to be taken into account when investigating the monitoring and regulation of effort.

Research Question 1: How Do Students Monitor Effort?

Starting with the upper right-hand part of the EMR framework, an important input to the meta level is how students monitor effort, both in relation to engaging with a task (i.e., how do learners experience effort during learning?) and in relation to the self-regulatory aspects within and across tasks (i.e., how do students use effort as a cue when monitoring learning and performance?). When students monitor their learning, they base their judgments on whatever information they have available that is indicative of their current learning. These pieces of information, typically called “cues,” vary in how diagnostic they are of actual learning (Koriat 1997). For example, when judging their comprehension of texts, students’ ability to explain the text to someone else is a more predictive cue than the fluency of reading the text (Thiede et al. 2010). However, students often mistakenly judge a fluently read text as a well-understood text. This fluency bias indicates that the effort students invest in learning seems to be used as a cue during monitoring. Moreover, self-rated mental effort is found to be negatively related to judgments of learning (JOLs) (e.g., Baars et al. 2013). Alternatively, students may be learning, even when there are no performance effects visible yet (Soderstrom and Bjork 2015). This may lead students to interpret their invested effort as in vain. Research is needed to understand how students use effort as a cue for monitoring and how this affects further self-regulation of learning. Effort monitoring cues can relate to intrinsic, extraneous, and germane load (see Sweller et al. 1998), although it is unclear whether and how students can monitor the difference between these types of load and thus use them as distinctive cues. Moreover, students’ beliefs about effort, e.g., whether they interpret high effort as an indicator of actually learning (effort as important) or as an indicator of not learning (effort as impossibility) (Oyserman et al. 2018), also help give meaning to the effort cues they experience during self-monitoring at the meta level.

It is worth mentioning that studies that measured cue use always did so indirectly, that is, inferring the use of cues based on the result of metacognitive prompts (e.g., the diagram completion and summarization studies; Thiede et al. 2003; Van Loon et al. 2014). To more directly measure cue use, for future research, a different approach is recommended: Students could be directly observed (or retrospectively prompted) when generating and utilizing cues to monitor and regulate their learning. We propose that the existing metacognitive cue prompt research is expanded to include direct measurement of mental effort as a cue for monitoring and regulation of learning and to incorporate process measures of cue elicitation and utilization. This research should also examine the relation between effort ratings and judgments of learning or performance, including potential effects of timing, sequencing, and phrasing/depiction of effort ratings and judgments of learning. Little is known as to how these design aspects affect the quality of measures of monitoring and regulation of learning, but research by Händel and Fritzsche (2015) showed that students are sensitive to these aspects when judging their learning. A judgment scale that used “smileys” led to higher monitoring judgments than a numerical, verbal, or −/+ scale. All in all, this research will further our understanding of how learners experience effort, how effort is used as a cue for judging learning and performance, and how it interacts with effort beliefs, task characteristics, and individual differences.

Research Question 2: How Do Students Regulate Effort?

Understanding how students experience effort and how they use cues related to mental effort is also of the essence for understanding and optimizing regulation (the left-hand part of the framework; related to research question 2). Regulation of effort is often data driven (see Fig. 1, data-driven cues): as a result of task experience, learners decide to decrease, increase, or maintain their current level of invested effort. Regulation of effort can also be goal driven (Fig. 1, upper left corner), whenever learners set a goal prior to or during a learning task and monitor and regulate learning towards that goal (Koriat et al. 2014). Task experience (data-driven cues) may also affect goal-driven effort regulation, i.e., when learners adjust a goal based on interacting with the task. Whether students act in a data-driven or a goal-driven manner affects their interpretation of effort; experiencing high effort may be interpreted as positive when learning is mainly goal driven, but as negative when no explicit goal is set (i.e., data driven). Moreover, goal-driven learning often becomes (partly) data driven due to effort and learning cues resulting from task interaction. Hence, the question of how learners regulate effort (research question 2) is intertwined with the question of how learners monitor effort (research question 1).

Regulating effort is particularly challenging because there is typically a non-linear relation between invested effort and achieved learning outcomes. In the case of learning conditions that create “desirable difficulties,” this challenge is even greater, because these learning conditions require high effort and do not show an immediate learning benefit, but lead to higher learning and more transfer at a delay (Bjork 1994). Testing yourself (versus restudying the learning material), for example, shows less learning at an immediate test but better remembering and transfer at a test 2 days later (Roediger and Karpicke 2006). Because desirable difficulties optimize long-term learning, understanding how students can be supported to regulate effort towards desirable difficulties is of great importance. In a similar vein, how students regulate or self-manage their mental effort at the object level is also of interest to the second research question. Research has shown that students are able to self-manage their cognitive load when provided with specific instructions on how to do so (“guided self-management”; Roodenrys et al. 2012). For example, instructing students to reorganize text and diagrams to minimize the search for information in the learning task improved their recall and transfer test performance compared with students in a split-attention or an integrated condition (Sithole et al. 2017). In general, examining what cues students use to regulate their effort during learning tasks and how that corresponds to cue use when monitoring effort and learning is central to research question 2. To our knowledge, this topic has largely been ignored.

Research Question 3: How Do We Optimize Cognitive Load on SRL Tasks (During and After the Primary Task)?

The third research question that emanates from the EMR framework is how cognitive load during self-regulated learning can be optimized. One facet of this research question relates to how the learning task can be designed in such a way that it helps learners manage the information processing load of the respective object-level processes and meta-level processes. For instance, several effective means to enhance monitoring accuracy such as prompting learners to generate keywords (e.g., De Bruin et al. 2011; Thiede et al. 2003; Waldeyer and Roelle 2020) or to complete a diagram (e.g., van de Pol et al. 2019) engage learners in writing down their responses to the respective prompts. By this means, the information processing load is likely reduced because learners do not need to hold all of the keywords/diagram components and the concomitant diagnostic cues in working memory when they judge their level of learning. Similarly, in learning journal writing, learners are prompted to write down their monitoring judgments, which likely frees cognitive capacity for subsequent regulation (e.g., Nückles et al. 2009; Roelle et al. 2017). Research is needed (1) to examine the effects of these design features on reducing cognitive load and (2) to understand how to support students’ distribution of cognitive load over time between the object-level primary task and their self-monitoring and self-regulation at the meta level. Together, this will help optimize cognitive load during the self-regulation of learning.

A second facet of research question 3 refers to the training of self-regulated learning skills. Based on cognitive load theory, it can be assumed that the cognitive load that is due to self-regulation processes (i.e., monitoring or regulation) decreases with increasing expertise regarding these processes. In terms of both monitoring (e.g., Händel et al. 2020; Hübner et al. 2010; Roelle et al. 2012) and task selection skills (i.e., a type of regulation activity; see Raaijmakers et al. 2018a, 2018b), there are promising results that show beneficial training effects. Yet, it is unclear whether training decreases processing load for metacognitive prompts (e.g., keyword prompt, diagram completion prompt) and to what extent self-regulated learning skills transfer to other domains. Specifically, training interventions require a clear model of the monitoring and learning processes that are affected and the cues they generate. Such models still have to be specified for several metacognitive interventions. Unraveling these processes will then enable examining what practice schedule maximizes transfer to other contexts and domains.

A third facet of research question 3 that is closely related to the second facet is to what extent metacognitive prompts should be explicitly related to monitoring and regulation. Should learners be made aware that, for example, the keywords they generate can inform them about their level of learning and should be used when monitoring and regulating learning? Until now, this relation was never made explicit, but some learners of course may have inferred it. On the one hand, making this relation explicit could aid in cue utilization by more actively referring to the cues generated through the cue prompt when monitoring and regulating learning. On the other hand, it potentially increases cognitive load and has the risk of overloading learners as they may spend time and effort on analyzing cues that are scarcely diagnostic.

The Contributions of the Special Issue

Prinz, Golke, and Wittwer

The paper by Prinz et al. (this issue) provides a meta-analysis of the effect of situation-model-approach interventions (such as delayed summary writing and delayed keyword listing) on metacomprehension accuracy. Their findings show that these interventions have a medium-sized positive effect on metacomprehension accuracy and a small direct effect on comprehension. Setting a comprehension-test expectancy seemed to be a particularly effective intervention compared with the other interventions. This paper relates specifically to research question 1 and in part to research question 3, and the authors present a number of interesting insights in relation to the EMR framework. First, they posit that situation-model-approach interventions may help learners rely less on the often-erroneous interpretation of effort as a cue that learning is low and help learners focus on diagnostic cues such as indicators of comprehension level. Moreover, the cue generation interventions could ease working memory load by allowing for cognitive off-loading (e.g., putting a summary on paper, listing keywords).

van de Pol, van Loon, van Gog, Braumann, and de Bruin

The paper by van de Pol et al. (this issue) reviews the effects of engaging students in generative visualization activities (i.e., diagramming, concept mapping, and drawing) on monitoring and regulation accuracy of both students and teachers, which relates to research question 1 of the EMR framework. Furthermore, the study provides insight into students’ and teachers’ actual cue utilization during the formation of the respective judgments. As main findings, the authors report that all three visualization activities can foster judgment accuracy and that several moderating factors such as the degree of access to the generative products during the formation of the judgments and the instructions that learners receive prior to engaging in the generative activities matter. Moreover, the study highlights that students do not necessarily utilize the (diagnostic) cues that become available through the respective generative activities and that when they utilize the (diagnostic) cues, both students and teachers likely have difficulties in judging the cue manifestation, that is, the degree to which a generative product that could inform them about their/their students’ level of comprehension is correct or erroneous. The authors sketch potential solutions for this suboptimality as well as reason about how the mental effort that is invested in the generative visualization activities could serve as a (further) diagnostic cue and how future research could investigate the role of mental effort in this generation paradigm.

Baars, Wijnia, de Bruin, and Paas

The paper by Baars et al. (this issue) provides a meta-analysis of the relation between mental effort and judgments of learning and thus directly relates to research question 1 of the EMR framework. Their findings indicate that overall, there is a medium-sized negative correlation between mental effort and judgments of learning and that several factors moderate this relationship. One of these moderating factors is the degree to which learners regulate their learning in a data-driven or in a goal-driven manner. The correlation between mental effort and judgments of learning increases (i.e., approaches zero/the positive range) with an increasing degree to which learners engage in goal-driven regulation. A further moderating factor seems to be the type of task. The negative correlation was found to be stronger for problem-solving tasks than for reading or word learning tasks. The authors provide potential explanations for these moderating effects as well as give suggestions for how future research could address the underlying mechanisms behind the (different) correlations between mental effort and judgments of learning.

Scheiter, Ackerman, and Hoogerheide

The paper by Scheiter et al. (this issue) reviews methods for measuring the degree of mental effort invested in a task, addressing question 1 of the EMR framework. A very common approach for measuring effort is for learners themselves to provide self-appraisals of the mental effort they have invested in a task. However, like other subjective judgments (i.e., metacognitive assessments of one’s own learning), such self-appraisals of mental effort may be biased. The authors review the limited research measuring mental effort through both subjective appraisals and objective measures (e.g., eye tracking, EEG) and provide evidence of dissociations between the two. The authors propose that self-appraisals of mental effort, like metacognitive judgments, may be based on cues and heuristics that are not diagnostic of actual effort and could mislead these appraisals. They propose directions for future research aimed at understanding how learners interpret effort appraisals, directly measuring the alignment between subjective and objective effort measures, and identifying the cues and heuristics that learners rely on when making self-appraisals of mental effort.

Carpenter, Endres, and Hui

The paper by Carpenter et al. (this issue) reviews students’ experiences of learning from retrieval practice, both during memory-based tasks and during problem-solving tasks. During both types of tasks, students generally experience retrieval practice as more effortful than restudy. Although this leads to avoidance of retrieval practice in self-regulated learning contexts for memory-based tasks, this is less the case for problem-solving tasks. With a focus on research questions 1 and 2, the paper describes how learners mostly take a data-driven perspective and interpret high effort as an indicator of achieving little or no learning. However, the higher effort associated with retrieval practice is a desirable difficulty that eventually leads to more durable (i.e., long term) learning than, e.g., restudy. Students struggle to recognize when a higher effort is and when it is not a sign of desirable difficulty. Given the clear benefits of retrieval practice on long-term learning, particularly for memory-based learning tasks, interventions are needed to aid learners in acknowledging the higher effort as a desirable difficulty and increase the use of retrieval practice during self-regulated learning. Making students aware of the benefits of retrieval practice and providing hints are promising ways to do so. The authors describe how future research should focus, among others, on collecting observational measures of students’ self-regulated learning decisions and collect multiple measures of effort during retrieval (subjective and objective) to examine how they relate to the perceived effectiveness of retrieval practice as a strategy to enhance learning.

van Gog, Hoogerheide, and van Harsel

The paper by van Gog et al. (this issue) reviews the literature on self-regulated learning with problem-solving tasks. In terms of research question 1 of the EMR framework, the review shows how and in which circumstances learner engagement in the generative activity of problem-solving (during or after example studying) can enhance judgment accuracy. Moreover, the authors discuss the role of mental effort in the process of deriving (diagnostic) cues from the engagement in problem-solving as well as describe how future research could analyze and optimize learners’ utilization of mental effort in this process (e.g., by analyzing the origin of the mental effort and also attending to the quality of the problem-solving). The paper of van Gog et al. also relates to the process of regulation. The authors highlight that training learners in task selection based on both their prior problem-solving performance and the invested mental effort is a promising means to optimize effort regulation in self-regulated learning with problem-solving tasks, which relates to research question 2 of the EMR framework. In sketching fruitful paths for future research concerning the role of effort regulation in this paradigm, the authors discuss how incorporating motivational measures, informing learners about the benefits of engaging in effortful tasks, and implementing methods such as eye tracking could be useful.

Eitel, Endres, and Renkl

The paper by Eitel et al. (this issue) reviews the literature with respect to how learners themselves can manage cognitive load, addressing research question 3 of the EMR framework. Although cognitive load can be influenced by aspects of instruction design (e.g., instructions, the complexity of the information), it can also be influenced by the ways in which learners manage the task (e.g., how they process the instructions or how they integrate multiple pieces of information), such that even in situations that involve suboptimal instructional design, cognitive load can be effectively self-managed by engaging in compensatory processing. Such processing requires effort and attention and is thus likely to diminish with increased study duration. To illustrate, the authors describe an empirical study in which students read chapters about atomic models in chemistry with or without seductive details that were irrelevant to the learning contents. Although seductive details had no effect on learners’ ratings of cognitive load or on their test performance over the first chapter, seductive details significantly increased cognitive load and decreased test performance over the final (fifth) chapter. Thus, in a suboptimal instruction design (involving seductive details), learners initially appeared to effectively manage their cognitive load through compensatory processing (i.e., ignoring the seductive details) but did not sustain this processing over the course of the study duration. The authors outline areas for future research based on individual student differences that may predict willingness to sustain mental effort across lengthy study durations, and how instruction may be designed to encourage this sustained effort.

Nückles, Roelle, Glogger-Frey, Waldeyer, and Renkl

The paper by Nückles et al. (this issue) describes how a self-regulation view in writing-to-learn is a promising theoretical perspective to scaffold self-regulated learning because of the cognitive off-loading features written text has to offer. That is, the written text provides learners with a memory aid and external representation of what they are learning. The paper presents a research program, the Freiburg Self-Regulated-Journal-Writing Approach, which investigated several instructional support methods that optimize cognitive load during self-regulated learning by journal writing. Thereby, this paper relates to research question 2 and provides a comprehensive answer to research question 3. In the paper, four different lines of research are discussed. The first line of research concerns prompting germane processing in journal writing; for example, prompting cognitive and metacognitive strategies can enhance students’ learning outcomes. The second line of research showed that having students self-explain worked examples about journal writing improved their response to prompts and their learning outcomes and supported transfer to other topics. Also, worked examples enabled learners to self-manage their cognitive load while engaging in journal writing. The third line of research highlights the importance of adapting or fading prompting and feedback in relation to the expertise of the learner when using journal writing. Finally, the fourth line of research centers on motivation; it was found that the effort learners have to invest in effective journal writing has motivational costs. Yet, prompting students to reflect on the personal relevance of the learning materials or emphasizing mastery goals can ameliorate these motivational costs. In sum, the Freiburg Self-Regulated-Journal-Writing Approach provides many insights on how to support journal writing and optimize cognitive load in order to scaffold self-regulated learning. In doing so, the paper discusses many fruitful ideas for future research integrating self-regulated learning with cognitive load theory.

Wirth, Stebner, Trypke, Schuster, and Leutner

The paper by Wirth et al. (this issue) introduces a novel, interactive layers model of self-regulated learning and cognitive load and, as such, resonates with research questions 1, 2, and 3. First, it describes how self-regulated learning can be unconscious, not loading onto working memory, but relying on so-called resonant states in sensory memory. As an example, think of an experienced driver on the highway who responds effectively to other cars and curves in the road while talking to the passenger, without remembering any of it seconds later. Alternatively, and more commonly studied in the literature, self-regulated learning is conscious and related to schema construction in working memory. Both conscious and unconscious self-regulation are described as taking place in three different layers: a content layer, a learning strategy layer, and a metacognitive layer. The model is then applied to explain existing inconsistent empirical findings in the literature.

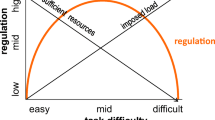

Seufert

The first discussion paper by Seufert (this issue) provides a critical reflection on the empirical and theoretical contributions of this special issue, including this editorial introduction. She emphasizes the importance of gaining an understanding of how learners’ effort is used as a cue, and how it can bias students’ judgments of their learning. She continues to describe, in a comprehensive model, how self-regulation can be seen as an inverted U-shaped function of task difficulty, with an optimal balance between resources and load, and thus optimal self-regulation, at medium task difficulty. Seufert concludes that more elaborate analyses of effort appraisals, including research into the different origins of load, are needed to further the understanding of effort as a cue during self-regulated learning. This research should broaden the scope to include appraisals of learning resources, including motivational and affective states.

Dunlosky, Badali, Rivers, and Rawson

The second discussion paper by Dunlosky et al. (this issue) also examines the contributions to this special issue and provides several insightful reflections. The authors emphasize that mental effort per se does not cause learning (e.g., schema construction), but instead, learning is always the result of the learning processes that are causing (more or less) mental effort. In some of the research and writing on cognitive load, this is not always sufficiently clear. They acknowledge the importance of understanding students’ perceptions of effort and how these influence self-regulated learning and student achievement. However, they also argue that it will be particularly difficult for students to use mental effort as a diagnostic cue when monitoring learning, as the diagnosticity of the effort cue depends on the relation between effort and learning outcomes; a relation that is typically unknown to students during learning. They continue to analyze the challenges measuring objective and subjective effort that research faces and conclude that a general process theory is needed that describes how learning process cues relate to perceived effort cues to further our understanding and allow empirical examinations.

References

Baars, M., Visser, S., Van Gog, T., De Bruin, A., & Paas, F. (2013). Completion of partially worked examples as a generation strategy for improving monitoring accuracy. Contemporary Educational Psychology, 38(4), 395–406. https://doi.org/10.1016/j.cedpsych.2013.09.001.

Baars, M., Wijnia, L., de Bruin, A. B. H., & Paas, F. (this issue). The relation between student’s effort and monitoring judgments during learning: A Metaanalysis. Educational Psychology Review.

Bjork, R. A. (1994). Memory and metamemory considerations in the training of human beings. In J. Metcalfe & A. Shimamura (Eds.), Metacognition: Knowing about knowing (pp. 185–209). Cambridge: MIT Press.

Carpenter, S., Endres, T., & Hui, L. (this issue). Students’ Use of Retrieval in Self-Regulated Learning: Implications for Monitoring and Regulating Effortful Learning Experiences. Educational Psychology Review.

De Bruin, A. B. H., Thiede, K. W., Camp, G., & Redford, J. (2011). Generating keywords improves metacomprehension and self-regulation in elementary and middle school children. Journal of Experimental Child Psychology, 109(3), 294–310. https://doi.org/10.1016/j.jecp.2011.02.005.

De Bruin, A. B. H., & van Merriënboer, J. J. G. (2017). Bridging cognitive load and self-regulated learning research. Learning and Instruction, 52, 1–98.

Dunlosky, J., Badali, S., Rivers, M. L., & Rawson, K. A. (this issue). The role of effort in understanding educational achievement: Objective effort as an explanatory construct versus effort as student perception. Educational Psychology Review.

Eitel, A., Endres, T., & Renkl, A. (this issue). Self-management as a bridge between cognitive load and self-regulated learning. The illustrative case of seductive details. Educational Psychology Review.

Händel, M., & Fritzsche, E. S. (2015). Students’ confidence in their performance judgements: A comparison of different response scales. Educational Psychology, 35(3), 377–395. https://doi.org/10.1080/01443410.2014.895295.

Händel, M., Harder, B., & Dresel, M. (2020). Enhanced monitoring accuracy and test performance: Incremental effects of judgment training over and above repeated testing. Learning and Instruction, 65, 101245. https://doi.org/10.1016/j.learninstruc.2019.101245.

Hübner, S., Nückles, M., & Renkl, A. (2010). Writing learning journals: Instructional support to overcome learning-strategy deficits. Learning and Instruction, 20(1), 18–29. https://doi.org/10.1016/j.learninstruc.2008.12.001.

Jungwirth, B. (2002). Information overload: Threat or opportunity. Journal of Adolescent & Adult Literacy, 45(5), 90–91.

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349–370.

Koriat, A., Nussinson, R., & Ackerman, R. (2014). Judgments of learning depend on how learners interpret study effort. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40, 1624–1637.

Nelson, T. O., & Narens, L. (1990). Metamemory: A theoretical framework and new findings. The Psychology of Learning and Motivation, 26, 125–141.

Nückles, M., Hübner, S., & Renkl, A. (2009). Enhancing self-regulated learning by writing learning protocols. Learning and Instruction, 19, 259–271. https://doi.org/10.1016/j.learninstruc.2008.05.002.

Nückles, M., Roelle, J., Glogger-Frey, I., Waldeyer, J., & Renkl, A. (this issue). The self-regulation-view in writing-to-learn: using journal writing to optimize cognitive load in self-regulated learning. Educational Psychology Review.

Oyserman, D., Elmore, K., Novin, S., Fisher, O., & Smith, G. C. (2018). Guiding people to interpret experienced difficulty as importance highlights their academic possibilities and improves their academic performance. Frontiers in Psychology, 9, 1–13.

Prinz, A., Golke, S., & Wittwer, J. (this issue). To what extent do situation-model-approach interventions improve relative metacomprehension accuracy? Meta-analytic insights. Educational Psychology Review.

Raaijmakers, S. F., Baars, M., Paas, F., van Merriënboer, J. J., & Van Gog, T. (2018a). Training self-assessment and task-selection skills to foster self-regulated learning: Do trained skills transfer across domains? Applied Cognitive Psychology, 32(2), 270–277. https://doi.org/10.1002/acp.3392.

Raaijmakers, S. F., Baars, M., Schaap, L., Paas, F., Van Merriënboer, J., & Van Gog, T. (2018b). Training self-regulated learning skills with video modeling examples: Do task-selection skills transfer? Instructional Science, 46(2), 273–290. https://doi.org/10.1007/s11251-017-9434-0.

Roediger, H. L., & Karpicke, J. D. (2006). Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science, 17(3), 249–255. https://doi.org/10.1111/j.1467-9280.2006.01693.x.

Roelle, J., Krüger, S., Jansen, C., & Berthold, K. (2012). The use of solved example problems for fostering strategies of self-regulated learning in journal writing. Education Research International, 2012, 1–14. https://doi.org/10.1155/2012/751625.

Roelle, J., Nowitzki, C., & Berthold, K. (2017). Do cognitive and metacognitive processes set the stage for each other? Learning and Instruction, 50, 54–64. https://doi.org/10.1016/j.learninstruc.2016.11.009.

Roodenrys, K., Agostinho, S., Roodenrys, S., & Chandler, P. (2012). Managing one’s own cognitive load when evidence of split attention is present. Applied Cognitive Psychology, 26(6), 878–886. https://doi.org/10.1002/acp.2889.

Scheiter, K., Ackerman, R., & Hoogerheide, V. (this issue). Looking at mental effort appraisals through a metacognitive lens: Are they biased? Educational Psychology Review.

Seufert, T. (this issue). Building bridges between Self-Regulation and Cognitive Load: an invitation for a broad and differentiated attempt. Educational Psychology Review.

Sithole, S., Chandler, P., Abeysekera, I., & Paas, F. (2017). Benefits of guided self-management of attention on learning accounting. Journal of Educational Psychology, 109(2), 220–232. https://doi.org/10.1037/edu0000127.

Soderstrom, N. C., & Bjork, R. A. (2015). Learning versus performance: An integrative review. Perspectives on Psychological Science, 10(2), 176–199.

Sweller, J., Van Merriënboer, J. J. G., & Paas, F. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296. https://doi.org/10.1023/A:1022193728205.

Thiede, K. W., Anderson, M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66–73. https://doi.org/10.1037/0022-0663.95.1.66.

Thiede, K. W., Griffin, T. D., Wiley, J., & Anderson, M. C. M. (2010). Poor metacomprehension accuracy as a result of inappropriate cue use. Discourse Processes, 47(4), 331–362.

van de Pol, J., de Bruin, A. B. H., van Loon, A. H., & van Gog, T. (2019). Students’ and teachers’ monitoring and regulation of students’ text comprehension: Effects of metacomprehension cue availability. Contemporary Educational Psychology, 56, 236–249. https://doi.org/10.1016/j.cedpsych.2019.02.001.

Van de Pol, J., van Loon, M., van Gog, T., Braumann, S., & de Bruin, A. (this issue). Mapping and Drawing to Improve Students’ and Teachers’ Monitoring and Regulation of Students’ Learning from Text: Current Findings and Future Directions. Educational Psychology Review.

Van Gog, T., Hoogerheide, V., & van Harsel, M. (this issue). The role of mental effort in fostering self-regulated learning with problem-solving tasks. Educational Psychology Review.

Van Loon, M. H., De Bruin, A. B. H., Van Gog, T., Van Merriënboer, J. J., & Dunlosky, J. (2014). Can students evaluate their understanding of cause-and-effect relations? The effects of diagram completion on monitoring accuracy. Acta Psychologica, 151, 143–154. https://doi.org/10.1016/j.actpsy.2014.06.007.

Waldeyer, J., & Roelle, J. (2020). The keyword effect: A conceptual replication, effects on bias, and an optimization. Metacognition and Learning. Advance online publication. https://doi.org/10.1007/s11409-020-09235-7.

Wiradhany, W., Vugt, M. K., & Nieuwenstein, M. R. (2019). Media multitasking, mind-wandering, and distractibility: A large-scale study. Attention, Perception, and Psychophysics, 82(3), 1112–1124. https://doi.org/10.3758/s13414-019-01842-0.

Wirth, J., Stebner, F., Trypke, M., Schuster, C., & Leutner, D. (this issue). An interactive layers model of self-regulated learning and cognitive load. Educational Psychology Review.

Wong, J., Baars, M., Davis, D., Van Der Zee, T., Houben, G. J., & Paas, F. (2019). Supporting self-regulated learning in online learning environments and MOOCs: A systematic review. International Journal of Human–Computer Interaction, 35(4–5), 356–373.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

For a list of members of the EARLI Emerging Field Group (EFG) “Monitoring and Regulation of Effort” at the time of writing, see the Appendix.

Appendix

List of all other members (in alphabetical order) of the EARLI Emerging Field Group “Monitoring and Regulation of Effort” at the time of writing the editorial introduction paper (September 2019; note that the special issue contributions were written after that, from September 2019 to September 2020).

Rakefet Ackerman, Technion University, Israel

Felicitas Biwer, Maastricht University, The Netherlands

Tino Endres, University of Freiburg, Germany

Vincent Hoogerheide, Utrecht University, The Netherlands

Luotong Hui, Maastricht University, The Netherlands

Tamara van Gog, Utrecht University, The Netherlands/Leibniz Institut für Wissensmedien, Germany

Eva Janssen, Utrecht University, The Netherlands

Jeroen van Merriënboer, Maastricht University, The Netherlands

Fred Paas, Erasmus University Rotterdam/University of Wollongong

Andrey Podolskiy, National Research University, Russia

Alexander Renkl, University of Freiburg, Germany

Juliane Richter, Leibniz Institut für Wissensmedien, Germany

Daria Savel’eva, National Research University, Russia

Katharina Scheiter, Leibniz Institut für Wissensmedien, Germany

Stoo Sepp, University of Wollongong, Australia

Yael Sidi, Technion University, Israel

Ferdinand Stebner, Ruhr-Universität Bochum, Germany

Melanie Trypke, Ruhr-Universität Bochum, Germany

Julia Waldeyer, Ruhr-Universität Bochum, Germany

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Bruin, A.B.H., Roelle, J., Carpenter, S.K. et al. Synthesizing Cognitive Load and Self-regulation Theory: a Theoretical Framework and Research Agenda. Educ Psychol Rev 32, 903–915 (2020). https://doi.org/10.1007/s10648-020-09576-4

DOI: https://doi.org/10.1007/s10648-020-09576-4