Abstract

Ambiguity affects the decisions of people who exhibit a distaste for it and require a premium for dealing with it. Do ambiguity-neutral subjects completely disregard ambiguity and react to any vague news? Online vending platforms often attempt to affect buyers’ decisions with messages like “20 people are looking at this item right now” or “The average score based on 567 reviews is 7.9/10”. We augment the two-color Ellsberg experiment with similarly worded signals about the unknown probability of success. All decision-makers, including ambiguity-neutral ones, recognize and account for ambiguity; ambiguity-neutral subjects are less likely to respond to vague signals. The difference between the decisions of ambiguity-neutral and non-neutral subjects vanishes for high-precision signals; still, fewer than 60% of subjects choose the ambiguous urn, even for high communicated probabilities of success. We conjecture that participants may discard information if they see no contradiction between it and their prior beliefs, so that the latter are not updated. Higher confidence makes subjects more likely to discard the news, and empirically, ambiguity-neutral subjects appear more confident than ambiguity-averse ones.


1 Introduction

News affects the behavior of individuals, organizations, and whole markets even if the conveyed message lacks precision.Footnote 1 A special type of imprecise news can be seen in the on-screen notifications common in online stores and booking platforms: “Booked 4 times on your dates in the last 6 h on our site. Last booked for your dates 26 min ago.” (booking.com), “In high demand: 28 booked in the last day” (rentalcars.com), “Customer reviews: 4.1 out of 5; total 4354 customer ratings” (amazon.co.uk), etc. We place messages of this type in the context of decisions under uncertainty and experimentally show that ambiguity attitudes explain reactions to them. Ambiguity-neutral subjects are less likely to change their choices in response to vague news, implying that vagueness somehow matters to them despite their neutrality to ambiguity; they also disregard messages that communicate very high probabilities of success. A possible explanation is that subjects with high confidence, which appears to correlate with ambiguity-neutrality, do not update their prior beliefs.

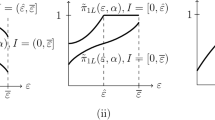

Models of decisions under uncertainty agree that when ambiguity is resolved, the decisions of subjects who are not ambiguity-neutral become indistinguishable from those of ambiguity-neutral subjects. Empirically, for example, Baillon et al. (2017) show, using real data, that as soon as more information about the dynamics of stock option performance becomes available, ambiguity-averse subjects form beliefs close to those of their ambiguity-neutral peers. It is not clear, though, whether ambiguity-neutral subjects fully incorporate all incoming information into their decisions; in our data, for example, a significant fraction of ambiguity-neutral subjects respond to positive news, but this fraction is nowhere close to 100 per cent (see Fig. 1). Theoretical models offer qualitatively different predictions in this regard. In the multiple-priors framework (Gilboa and Schmeidler 1989), a signal (communication of information) affects the decisions of ambiguity-averse subjects only if it alters the lowest expected utility. Therefore, a vague signal that changes the set of priors but leaves the worst expectation unchanged might change the choices of ambiguity-neutral subjects (if their belief is affected) while leaving ambiguity-averse behavior unchanged. In second-order models (e.g. Klibanoff et al. 2005; Nau 2006; Neilson 2010), the response of ambiguity-averse subjects depends on how exactly signals affect the whole second-order distribution; theoretically, they can both underreact and overreact to news compared with ambiguity-neutral subjects. Neo-additive capacities (Chateauneuf et al. 2007) explicitly weigh the probabilistic and the non-probabilistic components of the decision functional. As long as the worst and the best outcomes remain unaffected, the neo-additive approach implies that news communicating a probability value has a higher impact on probabilistically sophisticated subjects, as the latter assign a weight of unity to the probabilistic component.
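The multiple-priors argument above can be made concrete with a small numerical sketch. The assumptions here are ours, not the paper's: the prize is worth 1, the ambiguity-averse subject evaluates urn A by the worst prior in an interval of probabilities of Red (maxmin expected utility), and the ambiguity-neutral subject is represented by a single belief at the midpoint of that interval. The hypothetical signal raises only the upper bound of the interval.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PriorSet:
    """Interval [low, high] of possible probabilities of Red in urn A."""
    low: float
    high: float

def maxmin_value(priors: PriorSet) -> float:
    # Maxmin expected utility with u(prize) = 1, u(nothing) = 0:
    # the bet is valued under the worst prior in the set.
    return priors.low

def neutral_value(priors: PriorSet) -> float:
    # Assumption: a single belief equal to the interval's midpoint
    # (e.g. a uniform second-order prior over the interval).
    return (priors.low + priors.high) / 2

def choice(value_a: float, p_risky: float = 0.5) -> str:
    # Choose the ambiguous urn A only if it beats the 50-50 risky urn B;
    # ties go to B here purely for simplicity.
    return "A" if value_a > p_risky else "B"

before = PriorSet(0.2, 0.8)
after = PriorSet(0.2, 1.0)  # hypothetical vague signal: worst case unchanged

for label, ps in (("before", before), ("after", after)):
    print(label, choice(maxmin_value(ps)), choice(neutral_value(ps)))
```

Under these assumptions the maxmin decision-maker keeps choosing B after the signal, while the single-belief decision-maker switches to A, which is the asymmetry the multiple-priors framework predicts.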
Importantly, the above (and other) theoretical models assume that ambiguity-neutral subjects are homogeneous in how they deal with ambiguity, an assumption that appears to conflict with our data.

Fig. 1 Fractions (y-axis) of ambiguity-averse and ambiguity-neutral subjects choosing the urn with an unknown composition (urn A) in Ellsberg-type two-color experiments. “Ellsberg (color)”, where color = red or blue, depicts choices in treatments without signals: “If a [color] ball is drawn you will get the prize. Would you prefer to draw the ball from Urn A or Urn B?” Further questions are conditioned on color = red and include signals (formulated on the x-axis) about the choices and draws of hypothetical other participants. Subjects are classified as ambiguity-averse if in the standard Ellsberg experiment they prefer the unambiguous urn regardless of the color on which the prize is conditioned; subjects who choose different urns in the two Ellsberg questions are classified as ambiguity-neutral. The total number of ambiguity-neutral (AN) subjects is 204, and of ambiguity-averse (AA) subjects 1035. The number of ambiguity-averse subjects choosing urn A in “Ellsberg (Red)” and “Ellsberg (Blue)” is zero by definition.

The fact that ambiguity-neutral subjects, by definition, neither like nor dislike ambiguity does not have to imply that they neglect it. When subjects receive information, they first evaluate it before making a decision. The information is tested against the prior belief subjects hold (the probability of success in the ambiguous urn): if there is no contradiction between the prior and the data, the prior stands; if the data contradict the prior, the latter is updated to fit the data.Footnote 2 This belief then informs decision-making. Subjects who are more confident in their prior are less likely to update beliefs in response to new information. The literature (e.g. Dominiak et al. 2012) and our observations suggest that such subjects are more likely to be ambiguity-neutral; thus they effectively take the ambiguity of news into account by discarding the news. This approach is similar to ideas used in Ulrich (2013), where investors receive signals (observe inflation data) and apply a likelihood ratio test to identify whether their reference model is trustworthy, and in Fryer et al. (2019), where agents receive an imprecise (open to interpretation) signal, which is first interpreted and then used to form beliefs. In our case, the signal is interpreted from the perspective of whether or not it confirms the prior belief.
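One way to formalize "testing the signal against the prior" is sketched below. This is a hypothetical illustration, not the procedure used in the paper: we assume the prior is a single probability of Red in urn A (0.5 by default), that a signal "k out of n drew Red" is checked with an exact two-sided binomial test, and that a subject's confidence is represented by the significance level `alpha` (a smaller `alpha` means a more confident subject who needs stronger evidence to abandon the prior). The function names are ours.

```python
from math import comb

def binom_two_sided_p(k: int, n: int, p0: float = 0.5) -> float:
    """Exact two-sided binomial test of H0: p = p0 after k successes in n
    trials: total probability of outcomes no more likely than k under H0."""
    pmf = [comb(n, x) * p0**x * (1 - p0) ** (n - x) for x in range(n + 1)]
    observed = pmf[k]
    # Small relative tolerance so outcomes tied with k (e.g. by symmetry) count.
    return min(1.0, sum(q for q in pmf if q <= observed * (1 + 1e-9)))

def belief_after_signal(k: int, n: int, prior: float = 0.5, alpha: float = 0.05) -> float:
    """Hypothetical rule: keep the prior unless the signal rejects it at
    level alpha; otherwise adopt the observed frequency k/n."""
    if binom_two_sided_p(k, n, prior) < alpha:
        return k / n
    return prior

for k, n in [(12, 20), (16, 20), (120, 200)]:
    print(f"{k}/{n}: p = {binom_two_sided_p(k, n):.4f}, "
          f"belief (alpha=0.05) = {belief_after_signal(k, n, alpha=0.05)}, "
          f"belief (alpha=0.001) = {belief_after_signal(k, n, alpha=0.001)}")
```

With these choices, 12/20 never contradicts a prior of 0.5, whereas 16/20 and 120/200 lead the less confident subject (`alpha=0.05`) to update; at `alpha=0.001` none of the three signals overturns the prior. A likelihood ratio test, as in Ulrich (2013), would be an alternative way to define "contradiction".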

As highlighted in Fig. 1, we extend a standard two-color Ellsberg (1961) experiment by adding signals about the probability of success in the ambiguous urn, and investigate how subjects with different ambiguity attitudes respond to variations in ambiguity, in the communicated probability of success, and in both. A number of studies have previously analyzed the impact of varying levels of ambiguity on decisions. Early studies by Curley and Yates (1985) and Bowen et al. (1994) represented ambiguity as an interval of possible probability values and varied both the length and the center of this interval in order to detect changes in the average ambiguity attitude of the sample. Budescu et al. (2002) and Du and Budescu (2005) use the same approach but focus on subjects’ sensitivity to gain/loss framing, as well as to the domain of uncertainty (outcomes or probabilities).Footnote 3 Another approach to varying ambiguity is used by Ahmed and Skogh (2006), who make subjects' payoffs dependent on a draw from an urn whose composition is either unknown, described in a way that limits but does not fully reveal the likelihood of success, or described well enough to give a precise probability of it. In their data, when ambiguity is high, subjects prefer to share losses, but as ambiguity decreases, subjects switch towards insurance. However, because no distinction is made between ambiguity-neutral and ambiguity-averse subjects, it is difficult to judge the extent to which subjects' decisions are governed by ambiguity attitudes.Footnote 4 Our focus is on the differences between ambiguity-neutral and non-neutral subjects, and in particular on the way signals about the likelihood of success, and the ambiguity of those signals, affect decisions.

To signal probabilities under ambiguity, we imitate online stores and booking websites, which often tempt buyers with messages like "5 people are looking at this item at the moment", "This hotel was booked 13 times on our site" or "Score based on 527 reviews: 7.9/10". These messages may be ordered according to their informational strength: the first tells us about other people making a decision but not about their decisions or the outcomes; the second reports on some decisions previously made but ignores the outcomes. The third message is the most informative of the three, reporting the average outcome and its reliability (the number of “trials”). We use this idea to compose signals in our experiments. For example, we tell our subjects that 12 [hypothetical] participants before them chose the ambiguous urn in the Ellsberg task, or that 12 out of 20 actually drew a red ball from that urn. As messages of this type are common in everyday life, they are easy to understand. From a statistical perspective, they may be conveniently interpreted as a frequentist representation of probability or as reports on the number of successes in Bernoulli trials. Subjects make decisions sequentially, starting with the standard Ellsberg task, which is used to distinguish between ambiguity-averse, -neutral, and -seeking participants. They then progress through signals akin to those described above. We collect data from five independent experiments, both lab-based and online, with and without monetary incentives, with the number of participants ranging from 109 to 892, giving us a total of 1182 valid responsesFootnote 5 from ambiguity-averse and ambiguity-neutral subjects. The large total number of responses is crucial, as ambiguity-neutral subjects are a key benchmark in our approach and their share in experiments on ambiguity attitudes is usually small; online experiments offer a good opportunity to collect large samples.
We then conduct a difference-in-means analysis, complemented with probit regressions controlling for gender, age and knowledge of statistics, to establish the effects of signals on decisions.

On the one hand, providing some information, however vague, potentially reduces ambiguity, which is why we expect a positive impact on ambiguity-averse subjects. Indeed, in all tasks we detect a significant effect of signals on subjects' choices; ambiguity-averse subjects are likely to react even to very vague news bearing little information about the probability of success (see Fig. 1 for a preview of our results). On the other hand, vague news lacks reliability, so a vague signal may not be strong enough to reject prior beliefs about the fundamentals, implying a limited effect through the fundamentals (probability of success) channel. Theoretically, ambiguity-neutral subjects only respond to changes in fundamentals. In our data, a large share of them remain unaffected by the news even though the communicated probability of success is evidently above 0.5, while a significant fraction do change their decisions. This highlights the heterogeneity of the ambiguity-neutral cohort, which we attribute to confidence. The difference between ambiguity-averse and ambiguity-neutral subjects in their responses to signals becomes less significant once they face signals that convey some probability of success; subjects with a better knowledge of mathematical statistics and probabilities are more likely to respond to such signals. The strongest response is observed for the signal that communicates the highest likelihood of success. Varying the precision of the signal also produces a significant effect on subjects' choices. All main results hold at the aggregate level for the pooled data (controlling for experiments), for the lab versus online and the incentivized versus unincentivized subsamples, and for each individual experiment, which ensures the consistency of findings across them.

2 Methodology and data

Our data come from both online and lab experiments. Jumping ahead, the main results are identical across all independent online experiments reported in this paper, which adds validity to the online design. As a comparison benchmark, lab experiments confirm the online findings. There is an ongoing debate in the literature on the validity and generalizability of results from lab experiments (e.g. Rubinstein 2001 and 2013, Levitt and List 2007, Falk and Heckman 2009, to mention a few). Appendix A discusses the pros and cons of online versus lab experimental settings (see, e.g. Reips, 2000, and Birnbaum, 2004, for an overview). Our main reason for going online is the data on ambiguity-neutrality: we need a large enough sample to ensure that the cohort of ambiguity-neutral subjects is of a reasonable size. As on average about 60% of subjects are found ambiguity-averse, with some studies reporting more than 70% (see Oechssler and Roomets 2015), and as the Ellsberg test can falsely classify ambiguity-neutral subjects as ambiguity-averse or -seeking, in a worst-case scenario we could be left with about 10–15% of subjects deemed ambiguity-neutral. In a typical lab session, this could mean as few as 3–5 participants in a cohort. To overcome this problem, lab results in our paper are based on several sessions. An online experiment is a rather inexpensive alternative for obtaining the required large number of responses. Another issue is the payment scheme, which often becomes a point of critique. For robustness, we conducted several experiments with different payment schemes as well as without monetary incentives; the results are qualitatively the same. Consistency of results across individual experiments is reported in Sect. “Individual experiments”.

2.1 Questionnaire and variables

Subjects answer a questionnaire consisting of four parts, see Appendix B. In Part I, they face the standard Ellsberg task and report whether they would bet on urn \(A\) (ambiguous) or \(B\) (risky) if they need to draw a red (in question Q1) or a blueFootnote 6 (in question Q2) ball in order to win. This task is used as a simple test of ambiguity attitudes. For most of the analysis, our focus is on ambiguity-aversion and ambiguity-neutrality. We code subject \(i\)'s ambiguity aversion as a binary variable \(AA_{i}\), which takes a value of 1 if the subject is classified as ambiguity-averse, and 0 if the subject is ambiguity-neutral according to this test. Neither ambiguity-averse nor ambiguity-seeking subjects can be falsely classified as ambiguity-neutral, yet some ambiguity-neutral subjects may be falsely classified as ambiguity-averse. For this reason, any differences we find between the cohorts with \(AA_{i} = 1\) and \(AA_{i} = 0\) are conservative estimates, which would only become more pronounced if truly ambiguity-neutral subjects were removed from the cohort with \(AA_{i} = 1\).
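The Part I classification rule can be written as a short function. This is a minimal sketch with hypothetical function names: answers are coded "A" (ambiguous urn) and "B" (risky urn), and ambiguity-seeking subjects are mapped to `None`, since \(AA_{i}\) is only defined for the averse and neutral cohorts.

```python
def classify_attitude(q1_red: str, q2_blue: str) -> str:
    """Classify a subject from Part I: q1_red / q2_blue are the urns ("A"
    ambiguous, "B" risky) chosen when betting on Red (Q1) and on Blue (Q2)."""
    if {q1_red, q2_blue} - {"A", "B"}:
        raise ValueError("answers must be 'A' or 'B'")
    if q1_red == q2_blue == "B":
        return "averse"    # avoids the ambiguous urn for both colors
    if q1_red == q2_blue == "A":
        return "seeking"   # prefers the ambiguous urn for both colors
    return "neutral"       # switches urns between the two colors

def aa_indicator(q1_red: str, q2_blue: str):
    """AA_i = 1 (averse) or 0 (neutral); None marks ambiguity-seeking
    subjects, which this sketch leaves out of the AA/AN comparison."""
    return {"averse": 1, "neutral": 0}.get(classify_attitude(q1_red, q2_blue))
```

The rule also shows why the misclassification runs in one direction only: a subject who chooses different urns for the two colors can never be labelled averse or seeking.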

In Part II, subjects are told that the prize in all subsequent tasks is conditioned on drawing Red, as in Q1. Each question contains a signal that refers to the choices and draws of hypothetical "other participants". The wording “other participants” was inspired by messages like “Five other users are looking at this hotel at the moment”, common on booking websites. For online participants, the questions and the "other participants" are hypothetical by the design of the survey. Although the word "hypothetical" does not appear in the questions, the numbers were chosen so that subjects would not associate the questions with the real participants in the lab. To avoid deception, in the lab sessions an effort was made to explain in the introduction that the questions were hypothetical and the outcomes would be computer-modelled. It was also made clear that hypothetical balls are returned to the urns after each draw. In informal post-experiment feedback, participants both in the lab and onlineFootnote 7 confirmed that no confusion arose in this regard. We use different incentive schemes in different settings to ensure that results are not driven by a potential misunderstanding of the questions or incentives.

In Q3, subjects learn that 12 “other participants” chose the ambiguous urn (\(A\)), while in Q4 they learn that 12 out of 20 participants did so. Neither signal explicitly communicates anything about the distribution of balls in urn \(A\), yet subjects may interpret them as doing so. Responses of ambiguity-neutral subjects serve as a litmus test for the probabilistic component of signals. Question Q5 communicates that 12 out of 20 "other participants" drew a red ball from the ambiguous urn. This signal is designed to indicate the likely distribution of balls in urn \(A\) without removing ambiguity completely. Questions Q6 and Q7 differ from Q5 in either the communicated frequency of drawing Red from \(A\) (16/20 participants instead of 12/20) or the total number of observations on which this frequency is based (120/200 participants drew Red from \(A\) instead of 12/20). We associate these signals with a higher probability of success in \(A\) (16/20 > 12/20) and with a further reduction in ambiguity (an increase in the precision of the signal), respectively. All numbers are chosen to simplify any calculations subjects might wish to perform.
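Reading Q5–Q7 as reports of Bernoulli trials makes the distinction between "higher probability" and "higher precision" explicit. The sketch below is illustrative only: it computes, for each signal, the frequentist estimate \(k/n\) with its standard error and, as an alternative reading, the posterior mean and standard deviation of a Beta distribution that starts from a uniform Beta(1, 1) prior. The uniform prior and the function names are our assumptions, not part of the experimental design.

```python
from math import sqrt

SIGNALS = {            # question: (red draws from urn A, participants)
    "Q5": (12, 20),
    "Q6": (16, 20),
    "Q7": (120, 200),
}

def frequentist(k: int, n: int):
    """Estimated probability of Red and its standard error."""
    p_hat = k / n
    return p_hat, sqrt(p_hat * (1 - p_hat) / n)

def beta_posterior(k: int, n: int, a0: float = 1.0, b0: float = 1.0):
    """Posterior mean and sd of the probability of Red after k successes in
    n trials, starting from a Beta(a0, b0) prior (uniform by default)."""
    a, b = a0 + k, b0 + n - k
    mean = a / (a + b)
    sd = sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return mean, sd

for q, (k, n) in SIGNALS.items():
    print(q, frequentist(k, n), beta_posterior(k, n))
```

Under either reading, Q5 and Q7 convey the same point estimate of 0.6, but the standard error for Q7 is smaller by a factor of \(\sqrt{10}\) (about 3.2), whereas Q6 shifts the estimate itself to 0.8.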

In Part IV, participants are asked to rate their proficiency in statistics and probabilities, and to indicate their age and gender. Along with revealed confidence, answers to these demographic questions serve as control variables. Variable \(STATS\) captures subjects' proficiency in statistics: \(STATS = 1\) for subjects who assess their knowledge of statistics and probabilities as higher than 3, the median of the five-point scale used in question Q10, and 0 otherwise. \(FEMALE\) takes the value 1 for female subjects and 0 for males, as reported in answers to question Q11.

\(YOUNG\) distinguishes between younger (age reported in question Q12 is below 25, \(YOUNG = 1\)) and older (\(YOUNG = 0\)) cohorts of subjects. This split is dictated by the distribution of observations in the age groups and is conveniently consistent with the definition of "youth" by the UN.Footnote 8
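The three binary controls can be built directly from the Part IV answers. In this minimal sketch the container `PartIVAnswers` and its field names are hypothetical; the thresholds (self-rating above 3, age below 25) are those stated in the text.

```python
from dataclasses import dataclass

@dataclass
class PartIVAnswers:
    """Hypothetical container for one subject's Part IV answers."""
    stats_rating: int  # Q10: self-assessed knowledge of statistics, 1..5
    gender: str        # Q11: "female" or "male"
    age: int           # Q12: age in years

def control_dummies(ans: PartIVAnswers) -> dict:
    """Binary controls as defined in the text."""
    return {
        "STATS": int(ans.stats_rating > 3),   # above the scale median of 3
        "FEMALE": int(ans.gender == "female"),
        "YOUNG": int(ans.age < 25),           # under-25 cohort
    }

print(control_dummies(PartIVAnswers(stats_rating=4, gender="female", age=22)))
```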

Table 1 describes the data from all five experiments. For comparison, Oechssler and Roomets (2015) summarize the percentages of subjects classified as ambiguity-averse in 39 experimental studies: if extremes are omitted, on average 57.1% of subjects are found ambiguity-averse, with the percentage ranging predominantly between 45 and 75%. Our observations lie comfortably within these limits.

2.2 Recruitment and incentives

For the lab experiments, subjects are recruited on campus and represent a mix of students and staff. Experiment Lab1 took place at the Higher School of Economics in Perm (Russia), with recruitment through a newsletter and announcements in lectures. Due to space limitations, two sessions were held to collect answers, totaling 109 subjects. In each session, subjects were informed that once all answers had been collected, three questions would be selected randomly, and for them urns \(A\) and \(B\) would be reconstructedFootnote 9 in front of the audience in a special prize-drawing session. Based on the actual draws and subjects' choices in the relevant questions, the participant with the highest number of correct guesses in these three questions would receive the main prize (RUR 3000, about 70% of the official minimum monthly wage at that time), and the runner-up would receive the second prize (RUR 2000); any ties are resolved by randomization among the participants with the highest number of correct guesses. The competition between the winner and the runner-up is not a problem, as it still creates incentives to provide the highest number of correct guesses.
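The Lab1 prize rule can be sketched as follows. This is a hypothetical simplification: `winning_urns` records, for each question, which urn(s) produced a winning ball in the physical prize-drawing session, and a subject's guess counts as correct if the chosen urn is among them. Ties are broken at random by shuffling the subjects before a stable sort on the score.

```python
import random

def lab1_prizes(choices, winning_urns, n_questions=3, rng=None):
    """Hypothetical sketch of the Lab1 prize rule.
    choices: {subject_id: {question: "A" or "B"}}
    winning_urns: {question: set of urns whose draw produced a winning ball}
    Returns (winner, runner_up): main prize (RUR 3000), second prize (RUR 2000)."""
    rng = rng or random.Random()
    selected = rng.sample(sorted(winning_urns), n_questions)
    scores = {s: sum(ch[q] in winning_urns[q] for q in selected)
              for s, ch in choices.items()}
    order = list(scores)
    rng.shuffle(order)                    # random order breaks ties...
    order.sort(key=lambda s: -scores[s])  # ...because sort() is stable
    return order[0], order[1]
```

Shuffling before a stable sort gives every subject tied on the highest score the same chance of ranking first, which matches the randomized tie-breaking described above.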

Experiment Lab2 took place at the ESSEXLab of the University of Essex (UK). The ESSEXLab maintains a database of students and staff who have pre-registered for participation in computerized lab experiments; subjects are recruited by emails sent to randomly selected individuals from this database. The experiment was programmed with z-Tree.Footnote 10 After all answers are collected, the software emulates urns \(A\) and \(B\) by randomizing outcomes: the probability of drawing Red is set at 0.5 both for urn \(B\) (risky) in all questions and for urn \(A\) (ambiguous) in questions Q1–Q4; at 0.6 for urn \(A\) in questions Q5 and Q7; and at 0.8 for urn \(A\) in question Q6. These distributions for \(A\) were not communicated to the subjects, who were instead explicitly told the following: "…disregard information from previous questions and focus only on the information given in the particular question you are answering. You can assume that this information is a correct description of the situation. It is NOT used to misguide you." Subjects receive £2 for each question where their answer matches the computer-modelled draw. A minimum payoff of £5 was guaranteed to participants; the average payoff was £17.
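The per-question payment rule in Lab2 can be sketched as a small simulation. Assumptions, labelled as ours: an answer "matches the computer-modelled draw" when the ball drawn from the chosen urn has the winning color (Blue in Q2, Red elsewhere); only the £2-per-match rule and the £5 floor are modelled, so the sketch does not attempt to reproduce the reported average payoff. The `draw` argument lets a test inject a deterministic random-number source.

```python
import random

# Probability of Red in urn A per question, as set in the Lab2 emulation;
# urn B (risky) is 0.5 in every question.
P_RED_A = {"Q1": 0.5, "Q2": 0.5, "Q3": 0.5, "Q4": 0.5,
           "Q5": 0.6, "Q6": 0.8, "Q7": 0.6}
P_RED_B = 0.5
# Q2 is the Ellsberg question that pays on Blue; all others pay on Red.
WINNING_COLOR = {q: ("Blue" if q == "Q2" else "Red") for q in P_RED_A}

def lab2_payoff(choices, draw=random.random, per_win=2.0, minimum=5.0):
    """choices: {question: "A" or "B"} for one subject.
    draw: source of uniform [0, 1) numbers (injectable for testing).
    Pays per_win for each question whose emulated draw from the chosen urn
    has the winning color, subject to a guaranteed minimum (in GBP)."""
    wins = 0
    for q, urn in choices.items():
        p_red = P_RED_A[q] if urn == "A" else P_RED_B
        color = "Red" if draw() < p_red else "Blue"
        wins += color == WINNING_COLOR[q]
    return max(minimum, per_win * wins)
```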

In all online experiments, subjects were recruited by snowball sampling, with an initial invitation sent by email within the professional network of the experimenters and posted on social networks with a request to re-post. In experiment Web1, a prize (a £100 cheque) was promised to the participant with the highest number of answers matching computer-generated draws, with ties resolved by random allocation, as in experiment Lab1. Subjects had the option to provide their email address to be contacted if they won; about two-thirds of them did. Other online experiments had no monetary incentives. We controlled for intrinsic non-monetary motivation (Vinogradov and Shadrina 2013) by dropping observations from incomplete questionnaires. Adding experiments with no monetary incentives allows us to control for the effect of the random assignment of the prize on decisions under ambiguity: effectively, the randomization device embedded in such an incentive scheme forms a compound lottery together with the tasks subjects face in the experiment. Theoretically, this may distort subjects' choices. Having experiments with no monetary incentives removes this distortion.

2.3 Analysis

Each subject performs a series of tasks under different information conditions, which we call treatments. A question from the original Ellsberg task will be chosen as the baseline treatment (control). Our objective is to measure the effect of a change in the information condition on subjects' choices. Denote subject \(i\)'s choice in treatment \(j = 1..J\) as \(T_{i,j} \in \left\{ {A,B} \right\}\). One observation is the response of one subject in one treatment (including the control).Footnote 11 Each observation can be assigned a number \(n = J \cdot \left( {i - 1} \right) + j\), establishing a one-to-one correspondence between subject-treatment tuples and observations. For each observation of subject \(i\), we define \(J\) values of the response variable as follows:
\[ R_{J \cdot (i - 1) + j} = \begin{cases} 1, & \text{if } T_{i,j} = A, \\ 0, & \text{if } T_{i,j} = B. \end{cases} \]

Knowing the values of control variables \(x_{i}\) for each subject \(i\), we similarly define for each observation \(n\) the subject-specific control variable \(x_{n}\) as \(x_{J \cdot (i - 1) + j} = x_{i}\) for each \(j = 1..J\). The same procedure is applied to ambiguity aversion, \(AA_{n}\).

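Finally, we define \(J\) signal-specific indicators \(s_{j}\) (with \(j = 1..J\)) with the following values for each observation \(n\):
\[ s_{j,n} = \begin{cases} 1, & \text{if } n = J \cdot (i - 1) + j \text{ for some subject } i, \\ 0, & \text{otherwise.} \end{cases} \]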

With this notation, each observation \(n = J \cdot \left( {i - 1} \right) + j\) consists of the response \(R_{{\text{n}}}\) of subject \(i\) to signal \(j\) (treatment \(j\)), subject \(i\)'s ambiguity aversion \(AA_{n} = AA_{i}\), other subject-specific factors \(x_{n}\) and a treatment indicator \(s_{j,n}\), which takes a value of 1 if observation \(n\) corresponds to treatment \(j\). It follows that the number of observations entering each particular regression estimate equals the number of subjects' responses times the number of signals used in that regression. This equips us with a tool to estimate the impact of signals, ambiguity aversion and behavioral factors on the response variable \(R_{n}\). All variables are binary. All estimates will be obtained from probit regressions with standard errors clustered at the subject level, controlling for experiment-specific fixed effects.
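The stacking of subject-treatment pairs into observations can be sketched in a few lines. This is a hypothetical illustration: the function name and the dictionary row layout are ours, the response is coded 1 when urn \(A\) is chosen (as the discussion of results implies), and experiment identifiers for the fixed effects are omitted.

```python
def stack(choices, controls, aa):
    """choices[i-1][j-1]: subject i's urn ("A"/"B") in treatment j.
    controls[i-1]: dict of subject-level controls x_i; aa[i-1]: AA_i (0/1).
    Returns one row per observation n = J*(i-1)+j with R_n, AA_n, x_n, s_{j,n}."""
    J = len(choices[0])
    rows = []
    for i, subject_choices in enumerate(choices, start=1):
        if len(subject_choices) != J:
            raise ValueError("every subject needs one choice per treatment")
        for j, urn in enumerate(subject_choices, start=1):
            row = {"n": J * (i - 1) + j, "subject": i,
                   "R": int(urn == "A"), "AA": aa[i - 1]}
            row.update(controls[i - 1])          # x_n = x_i
            for k in range(1, J + 1):            # s_{k,n} = 1 iff k == j
                row[f"s{k}"] = int(k == j)
            rows.append(row)
    return rows
```

The stacked rows could then be passed to any probit routine that supports subject-clustered standard errors (for example, statsmodels' `Probit(...).fit(cov_type="cluster", cov_kwds={"groups": ...})` with the `subject` column as groups), adding experiment dummies for the fixed effects; this is one option, not necessarily the software used for the estimates reported here.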

It only remains to define the control condition. The Ellsberg task is the one with the least information on the ambiguous urn and lends itself as a control; however, it contains two questions (color conditions). Conveniently, ambiguity-averse subjects, the way we classify them, choose only the risky urn (\(B\)) in both questions (similarly, ambiguity-seeking subjects choose the ambiguous urn \(A\) in both questions). Ambiguity-neutral subjects are expected to randomize 50–50 between \(A\) and \(B\), yet in our data 66.2% of ambiguity-neutral subjects chose the urn with the unknown distribution, \(A\), in the first question, when asked to bet on Red, and 33.8% chose it in the second question, betting on Blue (since ambiguity-neutral subjects, by our classification, choose different urns in the two questions, these two fractions necessarily sum to 100%). This suggests either a color bias (subjects believe they are luckier when they bet on Red than on Blue) or a question order bias (subjects choose option \(A\) first, as it comes first on the screen when reading from top to bottom and from left to right, and then answer the second question so as to be consistent with the first one, in line with ambiguity-neutrality; as the question with betting on Red comes first, it attracts more choices of \(A\)). All subsequent treatments, however, clearly specify that the prize would only be awarded for drawing Red, and come as a single question for each signal. The color bias can be ruled out because, when asked about Red only, especially in questions Q3 and Q4, the fraction of ambiguity-neutral subjects choosing \(A\) becomes comparable to that in the Ellsberg task conditioned on Blue. Also note the counter-intuitive effect: although participants bet on Red only in both Q1 and Q3–Q7, ambiguity-neutral subjects are less likely to choose the ambiguous urn \(A\) when they receive favorable information than when they do not. This also points towards a possible order bias. To correct for the order bias, we choose the second question from the Ellsberg task as the control condition and denote it as \(s_{{{\text{ctrl}}}}\).
This approach provides a conservative estimate of the differences between ambiguity-averse and -neutral subjects, as it makes the two groups closer to each other in their initial choices. As a robustness test, we will use two alternative specifications of the control condition. First, we will show that the main results hold if the first ("Red") Ellsberg question is chosen as the control. Second, we will designate Q3 ("12 other participants prefer urn \(A\)") as an alternative control condition. Moreover, we will redefine the control condition again when comparing choices across "probabilistic" signals. This will allow us to contrast the effects of signals in the ambiguity domain, i.e. compared to the original choices under high ambiguity, with those in the probability domain, i.e. focusing on differences generated by signals that communicate probabilities.

To make the notation more self-explanatory, we denote signals as \(s_{{12{\text{pref}}}}\) for question Q3 ("12 other subjects prefer urn \(A\)") and \(s_{{12/20{\text{pref}}}}\) for question Q4 ("12 out of 20 subjects prefer urn \(A\)"), and use the communicated ratios of successes in \(A\) as subscripts in \(s_{12/20}\), \(s_{16/20}\) and \(s_{120/200}\) for questions Q5–Q7. This corresponds to the notation \(s_{j}\) used earlier.

3 Results

We first present results for the effects of signals on subjects' choices as compared to the original Ellsberg task, which we call the "total effect". Then we study "marginal effects" of signals, i.e. changes in choices between different treatments, with the main focus on the probability domain. We further proceed with an analysis of behavioral factors that affect decisions.

3.1 Total effects

A strictly positive and significant fraction of ambiguity-averse subjects choose the ambiguous prospect after signals \(s_{{{\text{12pref}}}}\) and \(s_{{12/20{\text{pref}}}}\) (recall that all ambiguity-averse subjects, by our classification, choose \(B\) in the control treatment), although these signals do not explicitly hint at any particular value of the probability of success in \(A\). The non-probabilistic nature of these signals is confirmed by the absence of a significant change in the choices of ambiguity-neutral subjects; see differences \(s_{{{\text{12pref}}}} - s_{{{\text{ctrl}}}}\) and \(s_{{\text{12/20pref}}} - s_{{{\text{ctrl}}}}\) in Table 2. The magnitudes of the changes in the fractions of ambiguity-averse and -neutral subjects look similar, yet initially a large fraction of ambiguity-neutral subjects chose \(A\) while no ambiguity-averse subjects did, which explains the difference in significance. When, however, a signal hints at a particular value of the probability of success, both ambiguity-averse and ambiguity-neutral subjects react to such news, even though the news is still vague. In all treatments, a larger fraction of ambiguity-neutral than of ambiguity-averse subjects choose \(A\), in line with our expectations. Differences in means in Table 2 demonstrate a stronger response of ambiguity-averse than of ambiguity-neutral subjects to the probabilistic signals \(s_{12/20}\), \(s_{16/20}\) and \(s_{120/200}\); as expected, we observe that the choices of ambiguity-averse subjects converge to those of ambiguity-neutrals and that probabilistic signals affect the decisions of ambiguity-neutral subjects to a lesser degree than those of ambiguity-averse subjects. Note also that the weak response of ambiguity-neutral subjects to signals manifests itself in the fact that in no treatment do more than 60% of them choose the ambiguous urn, \(A\).
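Because the same subjects answer both the control and each signal question, a difference in the share choosing \(A\) can be assessed with a paired test. The test behind Table 2 is not specified in this section; as one possible paired alternative, the sketch below pairs the change in the share choosing \(A\) with an exact two-sided McNemar test on the discordant pairs. The function names are hypothetical.

```python
from math import comb

def share_choosing_a(choices):
    """Fraction of "A" choices in a list of "A"/"B" answers."""
    return sum(c == "A" for c in choices) / len(choices)

def paired_difference(control, treated):
    """control[k], treated[k]: the same subject's choice in the control and in
    a signal treatment. Returns (change in share choosing A, exact two-sided
    McNemar p-value computed from the discordant pairs)."""
    if len(control) != len(treated):
        raise ValueError("choices must be paired by subject")
    to_a = sum(c == "B" and t == "A" for c, t in zip(control, treated))
    to_b = sum(c == "A" and t == "B" for c, t in zip(control, treated))
    m = to_a + to_b
    diff = share_choosing_a(treated) - share_choosing_a(control)
    if m == 0:
        return diff, 1.0
    # Under H0, each discordant pair is equally likely to switch either way.
    tail = sum(comb(m, x) for x in range(min(to_a, to_b) + 1)) / 2**m
    return diff, min(1.0, 2 * tail)
```

For ambiguity-averse subjects every control answer is \(B\), so all their discordant pairs are switches towards \(A\); for ambiguity-neutral subjects switches in both directions partly offset each other, which is one reason the two groups can show similar changes in shares but different significance.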

Importantly, the difference between the effects of the two non-probabilistic signals, \(s_{{12/20{\text{pref}}}} - s_{{12{\text{pref}}}}\), is also significant for ambiguity-averse subjects, although it is rather small, while it remains insignificant for ambiguity-neutrals. Even a small change in the formulation of the message that makes it look somewhat more plausible affects the behavior of ambiguity-averse participants. Generally, comparing signals \(s_{{\text{12/20pref}}}\) to \(s_{120/200}\) with signal \(s_{{12{\text{pref}}}}\) instead of \(s_{{{\text{ctrl}}}}\) yields results very similar to the above, confirming that our findings are not biased by the choice of the control condition. Remarkably, though, this comparison for ambiguity-neutral subjects reveals little change generated by signals \(s_{12/20}\) and \(s_{120/200}\), indicating that the communicated probability of success is not high enough to generate a significant change in choices. Note, however, that the results here are also internally consistent: ambiguity-neutral subjects respond equally to signals with different precision but the same probability, even when the basis for comparison is changed (\(s_{{12{\text{pref}}}}\) instead of \(s_{{{\text{ctrl}}}}\)).

This simple analysis of differences does not account for the heterogeneity of subjects. To address this, we estimate the impact of signals on subjects' choices, controlling for subject-specific parameters, see Table 3.Footnote 12 Signal notation is now used to refer both to the regressions constructed for the corresponding treatments and to the dummies used in those regressions. Each regression contrasts the relevant treatment with the control condition \(s_{{{\text{ctrl}}}}\); hence each dummy takes a value of 0 if the relevant observation comes from the control condition. All signals appear to significantly affect choices. The weakest, albeit still significant, impact comes through \(s_{{12{\text{pref}}}}\) and \(s_{{12/20{\text{pref}}}}\), which are the least informative by design. Signaling a higher probability (as in \(s_{16/20}\)) has the strongest impact on subjects. To compare the relative strength of the impact, note that the coefficients for \(s_{{12{\text{pref}}}}\) and \(s_{{12/20{\text{pref}}}}\) are not statistically different, while those for \(s_{12/20}\), \(s_{16/20}\) and \(s_{120/200}\) differ significantly from them and from each other (Wald test, \(p < 0.01\) for all pairs). In all treatments, ambiguity-averse subjects are less likely to choose \(A\). A good understanding of mathematical statistics makes subjects more likely to respond to the probabilistic signals \(s_{12/20}\) to \(s_{120/200}\). The role of gender is not consistently visible, although there is a weak tendency for female subjects to choose \(A\) more frequently.
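The pairwise coefficient comparisons rely on Wald tests. For two coefficients, the statistic is \(W = (b_1 - b_2)^2 / (\operatorname{Var} b_1 + \operatorname{Var} b_2 - 2\operatorname{Cov}(b_1, b_2))\), which is \(\chi^2\)-distributed with one degree of freedom under the null of equality. The sketch below computes it from hypothetical inputs; the covariance term must come from a joint estimation of both coefficients, and setting it to zero is only appropriate if the two estimates are independent.

```python
from math import erfc, sqrt

def wald_equal(b1, b2, var1, var2, cov12=0.0):
    """Wald test of H0: b1 == b2 for two estimated coefficients.
    var1, var2: their sampling variances; cov12: their covariance.
    Returns (chi-squared statistic with 1 df, p-value)."""
    var_diff = var1 + var2 - 2 * cov12
    if var_diff <= 0:
        raise ValueError("variance of b1 - b2 must be positive")
    w = (b1 - b2) ** 2 / var_diff
    # For 1 degree of freedom, P(chi2 > w) = P(|Z| > sqrt(w)) = erfc(sqrt(w / 2)).
    return w, erfc(sqrt(w / 2))
```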

In Table 3, ambiguity attitude has a universal effect on subjects' choices across treatments: as expected, being ambiguity-averse makes subjects less likely to choose A. The question, however, is whether they are also more or less likely to respond to signals than ambiguity-neutral subjects. To investigate, we include interaction terms between ambiguity aversion, AA, and the signal dummies, using the same controls as above. The signal terms without interaction reflect the impact of signals on decisions through updating of the prior, while the interaction terms capture the effect of signals on the ambiguity premium of ambiguity-averse subjects. Results in Table 4 confirm that the first two signals affect subjects' decisions solely through ambiguity premiums, while signals \(s_{12/20}\) to \(s_{120/200}\) work by updating the probability of success. Ambiguity-averse subjects are more affected by probabilistic signals: all interaction coefficients are positive, working against the separate effect of ambiguity aversion, and the response is stronger to probabilistic signals (e.g., the coefficients for \(s_{12/20\text{pref}} \times AA\) and \(s_{12/20} \times AA\) differ at \(p < 0.01\), Wald test). Because these positive and significant interaction terms counteract the separate effect of AA, the responses of ambiguity-averse subjects to probabilistic signals are more aligned with those of ambiguity-neutrals, consistent with Table 3.
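The interaction specification can be read cell by cell. The sketch below assumes a logit link and uses hypothetical coefficients, not the Table 4 estimates. It shows that a signal shifts the log-odds of choosing A by `b_signal` for ambiguity-neutral subjects and by `b_signal + b_inter` for ambiguity-averse ones. Setting `b_signal = 0` mimics a signal that works solely through the ambiguity premium.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def cell_probabilities(b0, b_signal, b_aa, b_inter):
    """P(choose A) by cohort and condition in a logit with a signal x AA interaction."""
    return {
        ("AN", "control"): logistic(b0),
        ("AN", "signal"): logistic(b0 + b_signal),
        ("AA", "control"): logistic(b0 + b_aa),
        ("AA", "signal"): logistic(b0 + b_signal + b_aa + b_inter),
    }


def log_odds_shift(b_signal, b_inter, averse):
    """Change in the log-odds of choosing A caused by the signal."""
    return b_signal + (b_inter if averse else 0.0)


# Hypothetical coefficients: the signal leaves ambiguity-neutrals unchanged
# (b_signal = 0) and acts only through the interaction term for AA subjects.
probs = cell_probabilities(b0=0.0, b_signal=0.0, b_aa=-1.2, b_inter=0.8)
for cell, prob in probs.items():
    print(cell, round(prob, 3))
```

Because `b_inter` is positive while `b_aa` is negative, the signal narrows the gap between the two cohorts without closing it, which is the pattern described in the text.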

3.2 Robustness

First, we re-define \(s_{\text{ctrl}}\) so that it corresponds to the first question in the Ellsberg task (recall that above we used the second question from the Ellsberg task as the control condition). As in Table 4, we measure the effects of all signals on subjects' choices relative to this benchmark. Results in Table 5 confirm the main findings (see the coefficients for \(AA\) and the interaction terms), except that we now observe a significant negative effect of signals on choices, which we attribute to the order bias discussed in Sect. “Analysis”. Counter-intuitively, ambiguity-neutral subjects become less likely to choose A after they receive signals; for this reason, the difference between their response to signals and that of their ambiguity-averse peers becomes even larger than in Table 4 (see the interaction terms).

Second, we re-define the control condition again, taking \(s_{12\text{pref}}\), by design the least informative of all signals, as the new \(s_{\text{ctrl}}\), and re-estimate the same model as in Table 4. This gives a more conservative estimate, as some ambiguity-averse subjects have already responded to signal \(s_{12\text{pref}}\). Still, the effects of further signals are sizeable, and the estimates confirm the main findings, see Table 6. Consistent with Table 2, the results show that the communicated probability in \(s_{12/20}\) and \(s_{120/200}\) is not high enough to generate any significant change in the behavior of ambiguity-neutral subjects, this time also controlling for their gender, age, and knowledge of statistics. The equality of coefficients also demonstrates that ambiguity-averse subjects respond (or fail to respond) equally to these two signals. Yet the significant interaction terms confirm a stronger response of ambiguity-averse subjects, both to these two signals and to \(s_{16/20}\).

So far, we have ignored the ambiguity-seeking cohort, mainly because the usual focus is on ambiguity aversion and the fraction of ambiguity-seeking subjects is typically small. All theoretical considerations apply to ambiguity-seeking subjects with the opposite sign. In particular, under all signals more of them should choose A, while a reduction in ambiguity should make fewer ambiguity-seeking subjects choose A. If their confidence differs from that of the ambiguity-neutral cohort, their updating may differ as well. Results in Table 7 are in line with this interpretation.

Finally, we turn to the ability of subjects to understand the probabilistic nature of the information we mean to communicate with our signals. This is a key element of our design, as we aimed to affect the subjects' priors. We use their self-assessed proficiency in statistics to divide the sample into two groups: those with high proficiency in statistics (STATS > 3), and those who report they are not very familiar with probabilities and statistics (STATS ≤ 3). We expect subjects with higher proficiency in statistics to recognize that the signals communicate the probability of success, so that these signals play a more pronounced role for them than for those with little understanding of statistics. Table 8 confirms this conjecture: only the signal that communicates the highest probability of success, \(s_{16/20}\), significantly affects the choices of the cohort less familiar with statistics, whereas the “statisticians” respond to all probabilistic signals. Notably, the coefficients for the interaction terms are greater in the low-STATS cohort, which indicates that ambiguity-averse subjects in this cohort recognize a reduction in ambiguity and respond to it, even though ambiguity-neutral subjects on average do not change their choices.

3.3 Individual experiments

Above, we pooled data from several experiments, controlling for experiment-specific fixed effects. Table 9 presents the main results for individual experiments, as well as for incentivized versus non-incentivized and online versus lab-based experiments.Footnote 13 All main results hold: ambiguity aversion is robustly associated with a stronger reaction to all signals, and ambiguity-neutral subjects robustly react to \(s_{16/20}\). In experiment Web1 they also react equally to \(s_{12/20}\) and \(s_{120/200}\), while in all other experiments except Lab1 we are unable to detect any significant response of ambiguity-neutral subjects to these two signals. In Lab1, ambiguity-neutral subjects seem to react to \(s_{120/200}\) but not to \(s_{12/20}\). This may be due to insufficient incentives and the resulting randomizing behavior of otherwise ambiguity-averse subjects, see the discussion in Appendix A.

All control variables also show consistent effects in individual experiments, except for gender: female subjects are more likely to choose \(A\) in online experiments but less likely to do so in the lab (although the latter effect is insignificant, most likely due to the small number of observations). This may reflect different impacts of the online environment (typically accessed from home, at a convenient time, relaxed and comfortable) and the lab environment (formal, scheduled, less comfortable) on female and male subjects. In our study gender is a control variable, and this finding does not affect our main results; it does, however, suggest caution in experimental investigations of gender gaps in decisions, as results may be sensitive to the environment.

4 Discussion

Our results can be summarized as follows. First, subjects crudely classified as ambiguity-averse by the Ellsberg test are persistently less likely than their ambiguity-neutral peers to choose the ambiguous prospect in all treatments. Second, not all ambiguity-neutral subjects respond to signals: even for the highest-probability signal in our setting, designed to communicate an 80% probability of success, only about 58% of ambiguity-neutral subjects prefer urn A (the ambiguous urn).

One can explain this result by assuming that the ambiguity-neutral group is heterogeneous and differs from the ambiguity-averse cohort in terms of confidence levels.Footnote 14 More confident subjects are less likely to respond to signals, as the latter do not provide enough evidence to reject the prior, given the confidence level. If ambiguity-averse subjects are less confident, it comes as little surprise that they react more strongly to positive news about "the fundamentals" (the probability of success). Ambiguity-averse subjects also respond to news that bears little information about the fundamentals; the effect appears to work through a perceived reduction in ambiguity.Footnote 15 Although it is conceivable that subjects update their probabilistic priors based on the reported behavior of other subjects (e.g., if they believe that others possess information that they do not, which is quite plausible if no information is available), the non-response of ambiguity-neutral decision-makers rules this possibility out. The reaction of ambiguity-averse subjects to these questions (\(s_{12\text{pref}}\) and \(s_{12/20\text{pref}}\)) may already be seen as evidence of their lower confidence relative to their ambiguity-neutral counterparts. We now seek further evidence of heterogeneity in confidence and its correlation with ambiguity attitudes.
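One stylized way to formalize the "enough evidence to reject the prior" step is to treat a message such as "12 out of 20" as a binomial sample from urn A and let the decision-maker revise the prior only if an exact binomial test rejects it at level `alpha`, with a more confident decision-maker modelled by a smaller `alpha`. This is our illustrative assumption, not the model estimated in the paper; the function names are hypothetical.

```python
import math


def binom_two_sided_p(k, n, p0):
    """Exact two-sided binomial p-value: total probability of outcomes
    no more likely than the observed k under success probability p0."""
    pmf = [math.comb(n, i) * p0**i * (1.0 - p0) ** (n - i) for i in range(n + 1)]
    cutoff = pmf[k] * (1.0 + 1e-9)   # small tolerance for floating-point ties
    return min(1.0, sum(q for q in pmf if q <= cutoff))


def updates_prior(k, n, prior, alpha):
    """Stylized rule: revise the prior only if the message rejects it at level alpha.
    A more confident decision-maker is represented by a smaller alpha."""
    return binom_two_sided_p(k, n, prior) < alpha


for k, n in [(12, 20), (16, 20), (120, 200)]:
    p = binom_two_sided_p(k, n, 0.5)
    verdicts = {a: updates_prior(k, n, 0.5, a) for a in (0.05, 0.01, 0.001)}
    print(f"{k}/{n}: p = {p:.4f}, update at alpha = {verdicts}")
```

With a prior of 0.5, "12 out of 20" never rejects the prior (p ≈ 0.50), "16 out of 20" rejects it at 5% but not at 1% (p ≈ 0.012), and "120 out of 200" rejects it at 1% but not at 0.1% (p ≈ 0.006). In this toy version, high enough confidence mutes the response to all three messages.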

In the post-experiment survey we asked subjects: “If you pick the wrong color you can return the ball to the urn and instead pick again. Will you pick again from the same urn?” (Q8). Answers to this question provide a measure of subjects’ confidence, taking the value 1 (“I will pick from the same urn”) for higher confidence and 0 (“I will pick from a different urn”) for lower confidence. Figure 2 compares the average confidence of the cohorts of subjects who choose A after each of our signals. The average confidence of the ambiguity-neutral subsample remains roughly unchanged at 69–74%; the differences are not significant. For ambiguity-averse subjects, our measure is insignificantly lower, at 68–69%, for the “uninformative” signals \(s_{12\text{pref}}\) and \(s_{12/20\text{pref}}\), and jumps to about 80% for \(s_{12/20}\) to \(s_{120/200}\) (the difference from ambiguity-neutrals and from the “uninformative” signals is statistically significant at \(p < 0.05\)). This suggests that a sub-cohort with higher confidence responds to more informative signals, as we assumed in the description of the information processing stage.

Fig. 2 Average confidence of ambiguity-neutral (AN) and ambiguity-averse (AA) subjects choosing A under each signal. Confidence is a dummy equal to 1 if the subject answers "I would draw from the same urn" when asked "If you are given a second chance, would you draw from the same urn or from the other urn?"
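The text does not say which test produced the significance levels reported for Fig. 2. As an assumed illustration only, a two-sample test of proportions compares the shares of confident subjects in two cohorts. The counts below are hypothetical, and the test treats the cohorts as independent, which is only an approximation when the same subjects appear under several signals.

```python
import math


def two_proportion_ztest(x1, n1, x2, n2):
    """Two-sided z-test for equal proportions x1/n1 and x2/n2 (pooled variance)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    if se == 0.0:
        raise ValueError("test undefined when all or no subjects are confident")
    z = (p1 - p2) / se
    return z, math.erfc(abs(z) / math.sqrt(2.0))   # 2 * (1 - Phi(|z|))


# Hypothetical counts: 80 of 100 confident subjects in one cohort, 70 of 100 in another.
z, p = two_proportion_ztest(80, 100, 70, 100)
print(f"z = {z:.3f}, p = {p:.3f}")
```

In this hypothetical case a ten-percentage-point gap with 100 subjects per cohort is not significant at the 5% level, which illustrates why cohort sizes matter for the comparisons in Fig. 2.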

While we interpret the above measure as confidence, it has some drawbacks. First, it is binary and does not give a clear picture of the difference in confidence between subjects who choose A in uninformative and informative questions. Second, it is noisy: an ambiguity-neutral subject with a prior of 0.5 might switch from one urn to the other regardless of confidence. Unlike a confidence-driven change in choices, this switching is random, owing to indifference. While ambiguity-neutrals with other priors should switch only if they are not confident in their priors, the presence of such “random switchers” creates noise and may lead to an underestimation of confidence in the ambiguity-neutral cohort. We therefore turn to a different approach to assess the confidence of our respondents.
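The direction of this bias can be made explicit under a simple assumption of our own: suppose a share \(r\) of the ambiguity-neutral subjects choosing A are indifferent "random switchers" who answer "same urn" with probability 1/2, while the remaining subjects report confidence at a true rate \(c\). The measured rate \(\hat{c}\) then satisfies

\[
\hat{c} = (1 - r)\,c + \frac{r}{2}, \qquad \hat{c} - c = r\left(\frac{1}{2} - c\right), \qquad c = \frac{\hat{c} - r/2}{1 - r}.
\]

Since \(\hat{c}\) is a weighted average of \(c\) and 1/2, a measured value above 1/2, such as the 69–74% observed for ambiguity-neutrals, implies \(c > \hat{c}\). For a purely hypothetical \(r = 0.2\) and \(\hat{c} = 0.7\), the implied true rate is \(c = 0.6/0.8 = 0.75\).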

In the Lab 2 experiment we asked post-experiment questions of the type: “In question 5 you were asked ‘12 people out of 20 picked a red ball from urn A. Which urn would you prefer now?’ Did you have any particular number of red balls in mind when making the choice?” Subjects could choose ranges \([n, n + l]\) with \(n \ge 0\) and \(n + l \le 100\), and were advised to choose “from 0 to 100” if they did not have any particular number of balls in mind; if they thought the number of red balls was \(n\), they were to indicate \([n, n]\). The length of the interval, \(l\), may be seen as a subjective perception of the ambiguity that surrounds the decision. Yet ambiguity in each question is exogenous and identical for all subjects, so differences in perceptions can be seen as reflecting subjects’ confidence in their choices.Footnote 16 We take the value \(100 - l\) to measure confidence: subjects who report shorter ranges are seen as more confident in their prior. Averages of this measure per signal are shown in Fig. 3; the results mimic the above assessment made through the crude binary measure of confidence. Generally, improving the information content of the signal makes more subjects with higher confidence choose A. Ambiguity-neutral subjects on average demonstrate higher confidence, which explains why many of them do not respond to informative though vague signals.Footnote 17
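A short sketch of how the interval answers translate into the confidence score \(100 - l\) and into per-signal averages of the kind plotted in Fig. 3. The function names, the tuple input format and the example answers are illustrative assumptions.

```python
def interval_confidence(lower, upper):
    """Confidence score 100 - l for a reported range [n, n + l] of red balls (0..100)."""
    if not 0 <= lower <= upper <= 100:
        raise ValueError("expected 0 <= n <= n + l <= 100")
    return 100 - (upper - lower)


def mean_confidence_by_signal(answers):
    """Average confidence per signal from {signal: [(n, n + l), ...]}."""
    return {
        signal: sum(interval_confidence(lo, hi) for lo, hi in ranges) / len(ranges)
        for signal, ranges in answers.items()
    }


# Hypothetical answers: (0, 100) = no number in mind, (40, 40) = a point belief.
answers = {"12/20": [(0, 100), (40, 40), (30, 60)], "16/20": [(70, 80), (60, 90)]}
result = mean_confidence_by_signal(answers)
print(result)
```

The score runs from 0 ("from 0 to 100", no number in mind) to 100 (a single number), so higher averages indicate tighter, more confident priors.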

5 Conclusion

We have presented results of an Ellsberg-type experiment with imprecise signals about the probability of success in the ambiguous urn. Our signals are verbal, similar to messages often used in online stores, and vary in the level of ambiguity, the communicated probability, or both. We conjecture that at the information processing stage decision-makers assess whether their prior needs updating. This assessment is part of the decision process of all subjects, independent of their ambiguity attitudes. If confidence is high and the precision of the signal is low, the prior belief may persist despite the news subjects receive. This approach explains, for example, why ambiguity-neutral subjects may respond differently to signals with different levels of ambiguity: vague signals do not reject the prior belief and thus have no impact on decisions, while signals with low ambiguity (high precision) lead to an updating of beliefs and thus affect decisions. Ambiguity neutrality is still present, reflected in the zero ambiguity premium that ambiguity-neutral subjects require for dealing with ambiguity, even though they recognize it. However, ambiguity attitudes appear to be correlated with confidence, which is the key factor behind the assessment that does or does not lead to updating of beliefs.

Empirically, we find that ambiguity-neutral subjects are indeed less responsive to vague news. Despite varying the communicated probability of success and the precision, we were unable to get more than 60% of them to choose the ambiguous urn in the Ellsberg-type experiment. Responses of the ambiguity-averse and ambiguity-neutral cohorts to signals in our experiments indicate that ambiguity-neutral subjects are on average more confident than ambiguity-averse ones, and it is this lack of confidence that makes ambiguity-averse subjects react to vague news. Vagueness of news therefore has two effects on decisions: one is reflected in the “pricing” of ambiguity (the ambiguity premium), given some belief, and the other in the decision whether to update beliefs, which applies to all subjects regardless of their ambiguity attitudes. The latter effect may also differentiate between subjects with different ambiguity attitudes if attitudes correlate with confidence. Our observations, as well as some observations from other studies, point towards such a correlation. More research is needed to better understand how confidence and ambiguity attitudes relate to each other and to the decisions people make under uncertainty.

Notes

Qualitative corporate news (lacking numbers and hard evidence) stimulates trading activity of short sellers (von Beschwitz et al., 2017) and drives stock prices even if it bears little factual information (von Beschwitz et al., 2015; Boudoukh et al., 2013). Service quality signals of various precision and reliability, available through online reviews and ranking systems, influence consumer choices (Vermeulen and Seegers, 2009). Players' decisions in game shows are sensitive to moderators' comments even if those are ambiguous. For example, Eichberger and Vinogradov (2015) discuss lowest-unmatched bid auctions, where a player wins if his/her bid is unmatched (no other player places such a bid) and is the lowest among all unmatched bids. In their data, the bidding pattern changes sharply when the moderator announces the winning bid is "below €20" or "below €300", although in both cases the winning bid (unknown to players) was just under €15, and 80% of participants had already placed bids under €20 before the announcements. This fraction fell by some 30% after the second announcement.

There is a large literature on updating ambiguous beliefs, starting from Gilboa and Schmeidler’s (1993) axiomatization of maximum-likelihood updating (see, e.g., Eichberger et al. 2007 and 2010 for a brief review and characterization of approaches). However, theoretical approaches typically derive updated preferences conditional on the realization of an event, which may have a clear interpretation as the color of a ball drawn in the Ellsberg experiment (e.g., in Cohen et al., 2000) but is not as straightforwardly linked to the signals in our study.

As a variation of this approach, Kramer and Budescu (2005) make both urns in the Ellsberg task ambiguous, yet with different degrees of ambiguity: for better [imprecise] probabilities of success, they found less ambiguity avoidance, although when subjects choose between urns with imprecise and precise probabilities, ambiguity avoidance increases in the likelihood of success. The mechanics of this behavior are unclear.

They attribute this change in behavior to the inability of participants to calculate a fair insurance premium when probabilities are not given. Equally, one could argue that if subjects form beliefs as in Chew and Sagi (2006, 2008) and Abdellaoui et al. (2011), insurance premiums can be calculated, yet they would differ between the demand and supply sides due to different effects of ambiguity aversion. The absence of insurance may thus be an equilibrium outcome, similar to no trading in Dow and Werlang (1992) or no deposits in the banking equilibrium in Vinogradov (2012). Other approaches to varying ambiguity include, e.g., presenting subjects with multiple sources (Eichberger et al. 2015) or varying the number of colors in the urn (Dimmock et al. 2016b).

Valid responses are complete and non-duplicate, a filter used in the online versions of our experiment. A response is complete if the subject has made a choice in all tasks, which are presented sequentially. Subjects who quit the online system before completing all tasks are deemed to have low intrinsic motivation and are excluded from the analysis (Vinogradov and Shadrina 2013). A response is non-duplicate if it comes from a unique IP address; this helps ensure that all subjects are unique and none took part twice.
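The two validity criteria can be sketched as a filter. The record layout (`ip`, `choices`) is hypothetical, and counting IP duplicates only among complete responses is our assumption. Consistent with the note that the filter also removes subjects sharing an access point, every response from a repeated IP is dropped, not only the later ones.

```python
from collections import Counter


def valid_responses(responses, n_tasks):
    """Keep complete responses whose IP address occurs only once among them."""
    complete = [
        r for r in responses
        if len(r["choices"]) == n_tasks and all(c is not None for c in r["choices"])
    ]
    # Drop every response from a repeated IP (not just the later ones), which also
    # removes distinct subjects behind a shared access point.
    ip_counts = Counter(r["ip"] for r in complete)
    return [r for r in complete if ip_counts[r["ip"]] == 1]


# Hypothetical records; None marks a task left unanswered before quitting.
responses = [
    {"ip": "10.0.0.1", "choices": ["A", "B"]},
    {"ip": "10.0.0.2", "choices": ["A", None]},   # incomplete: excluded
    {"ip": "10.0.0.3", "choices": ["B", "B"]},    # duplicate IP: both excluded
    {"ip": "10.0.0.3", "choices": ["A", "A"]},
]
kept = valid_responses(responses, n_tasks=2)
print([r["ip"] for r in kept])   # prints: ['10.0.0.1']
```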

In some experiments we used black color instead of blue.

In the pilot experiment, not reported in this paper, participants were recruited via a Facebook account, and the feedback was collected via Facebook, too. This does not violate the anonymity of participants, as their comments on Facebook cannot be linked to their answers in the experiment. In other online experiments, participants had an option to leave feedback at the end of the experiment.

"Definition of Youth", United Nations fact sheet, https://www.un.org/esa/socdev/documents/youth/fact-sheets/youth-definition.pdf.

Identical machine-wrapped chocolates in blue and red foil were used as balls, and non-transparent bags as urns. All chocolates were distributed among the audience after the experiment as a participation reward. The distribution of chocolates in urn \(A\) was determined in front of the audience by the following mechanism: subjects submitted numbers from 1 to 9, not knowing what would happen afterwards; then the fraction of subjects who submitted numbers from 5 to 9 was calculated and multiplied by 100, and this value was taken as the number of red balls in urn \(A\). Further details are in Vinogradov and Shadrina (2013).
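The mechanism that fixes the composition of urn A can be written as a small function. Rounding a non-integer value to a whole number of balls is our assumption, since the note does not say how such cases were handled; the function name is illustrative.

```python
def red_balls_in_urn_a(submissions):
    """Number of red balls in urn A: share of submissions in 5..9, times 100."""
    if not submissions or any(not 1 <= s <= 9 for s in submissions):
        raise ValueError("each submission must be a number from 1 to 9")
    share_high = sum(1 for s in submissions if s >= 5) / len(submissions)
    # Assumption: non-integer values are rounded with Python's round (halves to even).
    return round(share_high * 100)


print(red_balls_in_urn_a([1, 5, 9, 3]))   # 2 of 4 submissions are 5..9, prints: 50
```

Because subjects did not know how their numbers would be used, the resulting composition is unknown to them in advance, which is what makes urn A ambiguous.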

The software license requires that we mention its use in our experiment and cite Fischbacher (2007).

For methodological aspects of the within-subject design see, e.g. Charness et al. (2012).

Note that, as discussed in Sect. “Analysis”, the number of observations equals the number of subjects' responses times the number of signals used. In Tables 3 to 8, each regression uses 2 signals, the treatment \(s_j\) and the control condition, so the number of observations is double the number of responses.
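The stacking behind these observation counts can be sketched as follows: each subject contributes one row for the control condition and one for the treatment, so a two-signal regression has twice as many rows as responses. The signal labels and data layout are hypothetical. The `control` argument lets the same code redefine the benchmark, as in the robustness checks that use \(s_{12\text{pref}}\) as the control.

```python
def stack_treatment_vs_control(choices, treatment, control="ctrl"):
    """Long-format rows (subject, signal_dummy, chose_A) for one regression.

    choices maps subject id -> {signal label: 1 if urn A was chosen else 0}.
    """
    rows = []
    for subject, by_signal in choices.items():
        rows.append((subject, 0, by_signal[control]))     # control condition
        rows.append((subject, 1, by_signal[treatment]))   # treatment s_j
    return rows


# Hypothetical choices of three subjects under three signal labels.
choices = {
    1: {"ctrl": 0, "12pref": 0, "16/20": 1},
    2: {"ctrl": 1, "12pref": 1, "16/20": 1},
    3: {"ctrl": 0, "12pref": 1, "16/20": 0},
}
rows = stack_treatment_vs_control(choices, "16/20")
alt = stack_treatment_vs_control(choices, "16/20", control="12pref")
print(len(rows), len(alt))   # prints: 6 6
```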

Here each regression uses 6 signals, five treatments \(s_j\) and one control condition, so the number of observations equals the number of responses times six.

An alternative explanation would be that some ambiguity-neutral subjects update beliefs pessimistically and thus become effectively ambiguity-averse after a signal (see Eichberger et al. 2010). We thank an anonymous reviewer for pointing out this possibility. Our data cannot reject such a scenario, as we assume ambiguity attitudes to be fixed and measure them only once, through the standard Ellsberg task at the beginning of each experiment. The explanation we offer allows for constant ambiguity attitudes, which is particularly appealing, and bears some similarity to the role confidence plays in the generalized Bayesian updating rule in Eichberger et al. (2010), for which they demonstrate that ambiguity attitudes remain unaffected by updating. In particular, Eichberger et al. (2010) write “The less likely it was for the conditioning event to arise under her “additive belief” π, the less confidence the individual attaches to the additive component of the updated neo-additive capacity”. The intuition is similar to ours: using the probabilistic prior, decision-makers assess the likelihood of the observed event and, based on that, decide to what extent they trust the prior. If the observed event suggests the prior is not reliable, it is updated towards the value dictated by ambiguity attitudes; otherwise the probabilistic prior receives a higher weight, and pessimism or optimism matters less.

This effect holds both for ambiguity-aversion and ambiguity-seeking.

Confidence is the interpretation Epstein and Schneider (2007) give to the set of priors.

Perhaps a better and more straightforward measure of confidence would be to ask “How confident are you in this answer?”, as in Lamla and Vinogradov (2019), whose dataset contains this confidence information for individual inflation expectations and, separately, ambiguity attitudes measures for each subject. Preliminary estimates using this data confirm our above result that confidence (measured through answers to this question) also appears to be higher among ambiguity-neutral subjects.

Unfortunately, this also removes all subjects that use the same access point (e.g., a wireless router) to access the internet.

Online experiments were run on www.surveymonkey.com.

References

Abdellaoui, M., Baillon, A., Placido, L., & Wakker, P. P. (2011). The rich domain of uncertainty: Source functions and their experimental implementation. The American Economic Review, 101(2), 695–723.

Ahmed, A. M., & Skogh, G. (2006). Choices at various levels of uncertainty: An experimental test of the restated diversification theorem. Journal of Risk and Uncertainty, 33(3), 183–196.

Baillon, A., Bleichrodt, H., Keskin, U., l’Haridon, O., & Li, C. (2017). The effect of learning on ambiguity attitudes. Management Science, 64(5), 2181–2198.

Birnbaum, M. H. (2004). Human Research and Data Collection via the Internet. Annual Review of Psychology, 55, 803–832.

Boudoukh, J., Feldman, R., Kogan, S., & Richardson, M. (2013). Which news moves stock prices? A textual analysis. NBER Working Paper No. 18725, National Bureau of Economic Research, January 2013.

Bowen, J., Qiu, Z. L., & Li, Y. (1994). Robust tolerance for ambiguity. Organizational Behavior and Human Decision Processes, 57(1), 155–165.

Budescu, D. V., Kuhn, K. M., Kramer, K. M., & Johnson, T. R. (2002). Modeling certainty equivalents for imprecise gambles. Organizational Behavior and Human Decision Processes, 88(2), 748–768.

Carroll, C. D. (2003). Macroeconomic expectations of households and professional forecasters. The Quarterly Journal of Economics, 118(1), 269–298.

Charness, G., Gneezy, U., & Kuhn, M. A. (2012). Experimental methods: Between-subject and within-subject design. Journal of Economic Behavior and Organization, 81(1), 1–8.

Chateauneuf, A., Eichberger, J., & Grant, S. (2007). Choice under uncertainty with the best and worst in mind: Neo-additive capacities. Journal of Economic Theory, 137(1), 538–567.

Chew, S. H., & Sagi, J. S. (2006). Event Exchangeability: Probabilistic Sophistication without Continuity or Monotonicity. Econometrica, 74, 771–786.

Chew, S. H., & Sagi, J. S. (2008). Small worlds: Modeling attitudes toward sources of uncertainty. Journal of Economic Theory, 139(1), 1–24.

Cohen, M., Gilboa, I., Jaffray, J. Y., & Schmeidler, D. (2000). An experimental study of updating ambiguous beliefs. Risk, Decision and Policy, 5(2), 123–133.

Curley, S. P., & Yates, J. F. (1985). The center and range of the probability interval as factors affecting ambiguity preferences. Organizational Behavior and Human Decision Processes, 36(2), 273–287.

Curley, S. P., Yates, J. F., & Abrams, R. A. (1986). Psychological sources of ambiguity avoidance. Organizational Behavior and Human Decision Processes, 38(2), 230–256.

Dimmock, S. G., Kouwenberg, R., Mitchell, O. S., & Peijnenburg, K. (2016a). Ambiguity aversion and household portfolio choice puzzles: Empirical evidence. Journal of Financial Economics, 119(3), 559–577.

Dimmock, S. G., Kouwenberg, R., & Wakker, P. P. (2016b). Ambiguity attitudes in a large representative sample. Management Science, 62(5), 1363–1380.

Dominiak, A., Duersch, P., & Lefort, J. P. (2012). A dynamic Ellsberg urn experiment. Games and Economic Behavior, 75(2), 625–638.

Dominitz, J., & Manski, C. F. (2004). How should we measure consumer confidence? The Journal of Economic Perspectives, 18(2), 51–66.

Dow, J., & da Costa Werlang, S. R. (1992). Uncertainty aversion, risk aversion, and the optimal choice of portfolio. Econometrica, 60, 197–204.

Du, N., & Budescu, D. V. (2005). The effects of imprecise probabilities and outcomes in evaluating investment options. Management Science, 51(12), 1791–1803.

Eichberger, J., Grant, S., & Kelsey, D. (2007). Updating Choquet beliefs. Journal of Mathematical Economics, 43(7–8), 888–899.

Eichberger, J., Grant, S., & Kelsey, D. (2010). Comparing three ways to update Choquet beliefs. Economics Letters, 107(2), 91–94.

Eichberger, J., Oechssler, J., & Schnedler, W. (2015). How do subjects view multiple sources of ambiguity? Theory and Decision, 78(3), 339–356.

Eichberger, J., & Vinogradov, D. (2015). Lowest-Unmatched Price Auctions. International Journal of Industrial Organization, 43, 1–17.

Ellsberg, D. (1961). Risk, Ambiguity and the Savage Axioms. The Quarterly Journal of Economics, 75(4), 643–669.

Epstein, L. G., & Schneider, M. (2007). Learning under ambiguity. The Review of Economic Studies, 74(4), 1275–1303.

Falk, A., & Heckman, J. J. (2009). Lab experiments are a major source of knowledge in the social sciences. Science, 326(5952), 535–538.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2), 171–178.

Frisch, D., & Baron, J. (1988). Ambiguity and Rationality. Journal of Behavioral Decision Making, 1, 149–157.

Fryer, R. G., Jr., Harms, P., & Jackson, M. O. (2019). Updating beliefs when evidence is open to interpretation: Implications for bias and polarization. Journal of the European Economic Association, 17(5), 1470–1501.

Gilboa, I., & Schmeidler, D. (1989). Maxmin expected utility with non-unique prior. Journal of Mathematical Economics, 18(2), 141–153.

Gilboa, I., & Schmeidler, D. (1993). Updating ambiguous beliefs. Journal of Economic Theory, 59(1), 33–49.

Hollard, G., Massoni, S., & Vergnaud, J. C. (2016). In search of good probability assessors: an experimental comparison of elicitation rules for confidence judgments. Theory and Decision, 80(3), 363–387.

Horton, J. J., Rand, D. G., & Zeckhauser, R. J. (2011). The online laboratory: Conducting experiments in a real labor market. Experimental Economics, 14(3), 399–425.

Klibanoff, P., Marinacci, M., & Mukerji, S. (2005). A smooth model of decision making under ambiguity. Econometrica, 73(6), 1849–1892.

Kramer, K. M., & Budescu, D. V. (2005). Exploring Ellsberg's paradox in vague-vague cases. In Experimental business research (pp. 131–154). Springer US.

Krantz, J. H., & Dalal, R. (2000). Validity of Web-Based Psychological Research. In M. H. Birnbaum (Ed.), Psychology Experiments on the Internet (pp. 35–60). New York: Academic Press.

Kühberger, A., & Perner, J. (2003). The role of competition and knowledge in the Ellsberg task. Journal of Behavioral Decision Making, 16, 181–191.

Lamla, M. J., & Vinogradov, D. V. (2019). Central bank announcements: Big news for little people? Journal of Monetary Economics, 108, 21–38.

Levitt, S. D., & List, J. A. (2007). What do laboratory experiments measuring social preferences reveal about the real world? The Journal of Economic Perspectives, 21(2), 153–174.

McFadden, D. L., Bemmaor, A. C., Caro, F. G., Dominitz, J., Jun, B. H., Lewbel, A., et al. (2005). Statistical analysis of choice experiments and surveys. Marketing Letters, 16(3), 183–196.

Nau, R. F. (2006). Uncertainty aversion with second-order utilities and probabilities. Management Science, 52(1), 136–145.

Neilson, W. S. (2010). A simplified axiomatic approach to ambiguity aversion. Journal of Risk and Uncertainty, 41(2), 113–124.

Oechssler, J., & Roomets, A. (2015). A test of mechanical ambiguity. Journal of Economic Behavior & Organization, 119, 153–162.

Reips, U. (2000). The Web Experiment Method: Advantages, Disadvantages, and Solutions. In M. H. Birnbaum (Ed.), Psychology Experiments on the Internet (pp. 89–117). San Diego, CA: Academic Press.

Rubinstein, A. (2001). A Theorist's View of Experiments. European Economic Review, 45, 615–628.

Rubinstein, A. (2013). Response Time and Decision Making: A Free Experimental Study. Judgment and Decision Making, 8(5), 540–551.

Thomas, L. B. (1999). Survey measures of expected US inflation. The Journal of Economic Perspectives, 13(4), 125–144.

Trautmann, S. T., Vieider, F. M., & Wakker, P. P. (2008). Causes of ambiguity aversion: Known versus unknown preferences. Journal of Risk and Uncertainty, 36(3), 225–243.

Ulrich, M. (2013). Inflation ambiguity and the term structure of US Government bonds. Journal of Monetary Economics, 60(2), 295–309.

Vermeulen, I. E., & Seegers, D. (2009). Tried and tested: The impact of online hotel reviews on consumer consideration. Tourism Management, 30(1), 123–127.

Vinogradov, D. (2012). Destructive effects of constructive ambiguity in risky times. Journal of International Money and Finance, 31(6), 1459–1481.

Vinogradov, D., & Shadrina, E. (2013). Non-monetary incentives in online experiments. Economics Letters, 119(3), 306–310.

von Beschwitz, B., Keim, D. B., & Massa, M. (2015). First to 'Read' the News: News Analytics and Institutional Trading. CEPR Discussion Paper No. 10534.

von Beschwitz, B., Chuprinin, O., & Massa, M. (2017). Why Do Short Sellers Like Qualitative News? Journal of Financial and Quantitative Analysis, forthcoming.

Acknowledgements

We thank Aurelien Baillon, David Budescu, Soo-Hong Chew, Jürgen Eichberger, Jacob Sagi, Elena Shadrina, Peter Wakker, as well as seminar participants at ETH-Zürich, University of Essex, University of Glasgow and University of Hamburg, and participants of iCare conference at HSE in Perm and JE on Ambiguity and Strategic Interactions at the University of Grenoble for helpful comments, suggestions and encouragement. Special thanks go to Yury Vorontsov and Orhon Can Dagtekin who provided valuable assistance in data collection and preliminary analysis at the early stages of the project. All remaining errors are ours. Dmitri Vinogradov acknowledges support from the Russian Foundation for Basic Research grant RFBR 18-010-01166.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. Online versus lab experiments

A number of factors may make results of lab experiments differ from those obtained online. Fear of negative evaluation (FNE) by others is known to affect attitudes to ambiguity (Curley et al. 1986). Although experiments can be designed so that preferences are not revealed to experimenters, thus avoiding FNE in the experimental tasks (Trautmann et al. 2008), FNE continues to affect incentives in the lab: when the experiment becomes boring and the expected payoff no longer suffices to keep subjects motivated (Rubinstein 2013), they may still continue in order to avoid possible negative evaluation by other participants and by the experimenters, who would see them interrupting and leaving the lab. In addition, it has become the norm to offer subjects a show-up fee in the lab. This fee enters the total payoff together with an elaborate incentive scheme designed to reveal subjects' preferences and beliefs. The scheme itself may be quite complicated; in particular, for lottery choices it involves a randomization device that needs to be explained to the subjects. Suspicion is a known problem: participants may believe that experimenters manipulate the randomization device so as to minimize payoffs (see, e.g., Frisch and Baron 1988; Kühberger and Perner 2003; Dimmock et al. 2016a, for a discussion of the issue). Confusion about the payoff structure and suspicion may reduce the effectiveness of incentives, especially given the guaranteed show-up fee. Subjects who continue despite lacking incentives would tend to choose at random. In our setting this is a particular problem, as subjects randomizing between the two urns in the Ellsberg experiment are classified as ambiguity-neutral.
Online experiments rule this out: subjects can leave the experiment at any moment; suspicion does not arise, as all questions are hypothetical and randomization takes place in the minds of participants; and, with no show-up fee, there is no incentive to continue once motivation is lost.
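To make the concern concrete, the sketch below enumerates the four equally likely answer pairs to Q1 and Q2 for a subject who picks each urn with probability 1/2. It assumes a classification rule that this appendix does not spell out: choosing the 50/50 urn (urn B) in both questions counts as ambiguity-averse, choosing the ambiguous urn (urn A) in both counts as ambiguity-seeking, and choosing one of each counts as ambiguity-neutral. The function `classify` and its labels are illustrative only.

```python
from itertools import product


def classify(q1, q2):
    """Classify a subject from the Part I answers (assumed rule, see lead-in).

    Urn A is taken to be the ambiguous urn and urn B the 50/50 urn.
    """
    if q1 == q2 == "B":
        return "averse"   # avoids the ambiguous urn for both colors
    if q1 == q2 == "A":
        return "seeking"  # prefers the ambiguous urn for both colors
    return "neutral"      # one of each: consistent with indifference


# A purely random responder: each of the four answer pairs has probability 1/4.
shares = {}
for q1, q2 in product("AB", repeat=2):
    label = classify(q1, q2)
    shares[label] = shares.get(label, 0) + 0.25

print(shares)  # {'seeking': 0.25, 'neutral': 0.5, 'averse': 0.25}
```

Under this rule, half of all purely random responders would be recorded as ambiguity-neutral, so random choosing would inflate that group in particular.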

Although evidence suggests that online experiments can yield results similar to those obtained in a lab (see e.g. Krantz and Dalal 2000), concerns may arise with regard to data validity; Birnbaum (2004) discusses methodological issues related to online surveys, and Horton et al. (2011) summarize approaches that help validate the data. Vinogradov and Shadrina (2013) argue that it is the non-monetary intrinsic motivation of subjects (such as curiosity and willingness to help) that matters for the quality of the data collected and its comparability with the lab. To control for this, in line with their results, we omit all incomplete responses. We also remove multiple submissions, i.e., occasional duplicate entries from the same IP address.Footnote 18 The web platformFootnote 19 uses cookies to detect whether the survey had already been taken from the same computer. To minimize the attrition effect, a large initial sample of responses was acquired. Snowball sampling may introduce a sampling bias; to minimize it, the survey was introduced to subjects via different channels. Some authors suggest that participants may wish to cheat in online experiments by submitting answers they believe the experimenters would regard as correct (Reips 2000). To prevent this, the (preliminary) findings from the experiment and possible answers were not available to participants while the experiment was running. It also helps that the Ellsberg task has no right or wrong answer, which removes any incentive to cheat. Running a series of experiments under different arrangements allows us to reduce potential biases further.
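The two filtering steps described above (dropping incomplete responses and keeping a single submission per IP address) can be sketched as follows. The record layout, the field names (`ip`, `Q1`, `Q2`) and the decision to keep the first complete submission from each address are assumptions for illustration; the paper does not state the order in which the filters were applied, and the cookie check is not modelled.

```python
def clean(responses, required):
    """Drop incomplete responses, then keep the first submission per IP address.

    `responses` is a list of dicts; field names are hypothetical.
    `required` lists the question keys that must be answered.
    """
    seen_ips = set()
    kept = []
    for r in responses:
        # Incomplete: any required answer is missing or empty.
        if any(r.get(q) in (None, "") for q in required):
            continue
        # Duplicate: a complete response from this IP was already kept.
        if r["ip"] in seen_ips:
            continue
        seen_ips.add(r["ip"])
        kept.append(r)
    return kept


rows = [
    {"ip": "192.0.2.1", "Q1": "B", "Q2": "B"},
    {"ip": "192.0.2.1", "Q1": "A", "Q2": "B"},  # duplicate IP
    {"ip": "192.0.2.2", "Q1": "A", "Q2": None},  # incomplete
    {"ip": "192.0.2.3", "Q1": "A", "Q2": "B"},
]
print([r["ip"] for r in clean(rows, ["Q1", "Q2"])])  # ['192.0.2.1', '192.0.2.3']
```

Filtering incomplete responses first means that an abandoned attempt does not block a later complete submission from the same address.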

The clarity of the questions was tested by trialling the experiments as face-to-face surveys, whose feedback helped ensure that our instructions were clear. We piloted the experiment online in 2011, obtaining 765 complete responses via snowballing; 68.4% of these subjects were classified as ambiguity-averse and 11.2% as ambiguity-neutral. Findings from this pilot are similar to those we report in the paper, but because the pilot lacks data on gender and age, we did not include it in the sample used here. Informal post-experiment feedback from the pilot, as well as from the main experiments, confirmed that subjects understood the tasks correctly.
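As a quick arithmetic check on the pilot figures, the snippet below recovers the integer counts implied by the reported shares, assuming they are percentages of the 765 complete responses rounded to one decimal place. The helper `implied_counts` is hypothetical.

```python
def implied_counts(pct, n, decimals=1):
    """Return every integer count k in [0, n] whose share 100*k/n rounds to pct."""
    return [k for k in range(n + 1) if round(100 * k / n, decimals) == pct]


n = 765
averse = implied_counts(68.4, n)
neutral = implied_counts(11.2, n)
print(averse, neutral, n - averse[0] - neutral[0])  # [523] [86] 156
```

Each share matches exactly one count (523 ambiguity-averse and 86 ambiguity-neutral subjects), which leaves 156 pilot responses in neither category.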

Generally, unincentivized surveys are not uncommon, and data from them are regarded as reliable: for example, the Michigan Survey of Consumers is a major source of inflation expectations data for the U.S. (e.g., Thomas 1999; Carroll 2003; Dominitz and Manski 2004). Although subjects are usually paid a fee to complete the (rather long) questionnaire, individual answers are not incentivized, and the payoff does not depend on the accuracy of forecasts. McFadden et al. (2005) review possible biases in surveys and suggest remedies; in particular, they note that hypothetical questions ("vignette surveys") and abstract questions (like "On a scale of 0 to 100, where 0 means no chance, and 100 means certainty, what would you say is the probability of …?") yield answers that are highly predictive of actual subsequent behavior. Hollard et al. (2016) show that simply asking subjects to state their subjective beliefs, without incentives, performs well in eliciting those beliefs compared with incentivized rules.

Appendix B. Questionnaire and instructions

The following questionnaire was used without major alterations in all experiments reported in this paper. Minor alterations concerned the availability, in experiments Web1 and Web2, of an Indifferent option in addition to the options to choose urn A or urn B. This does not affect the classification of subjects as ambiguity-averse or ambiguity-neutral, as all experiments included a version of questions Q1 and Q2 without the indifference option. In the analysis of choices, the indifference option may lead us to underestimate the fraction of subjects who choose \(A\), so our estimates are conservative.
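The direction of this bias can be made explicit with simple bounds. With hypothetical counts of subjects choosing urn A, urn B and Indifferent, the share choosing A when Indifferent is counted as a non-A answer is a lower bound on the share that would choose A if the indifferent subjects had to pick an urn (holding the other subjects' choices fixed); assigning all of them to A gives the upper bound. The function name and the counts below are illustrative.

```python
def share_a_bounds(n_a, n_b, n_ind):
    """Bounds on the share choosing urn A if indifferent subjects had to pick an urn.

    Lower bound: no indifferent subject picks A (the share observed when
    'Indifferent' counts as a non-A choice). Upper bound: all of them pick A.
    Assumes subjects who chose A or B would keep their choices.
    """
    n = n_a + n_b + n_ind
    return n_a / n, (n_a + n_ind) / n


# Hypothetical counts: 30 choose A, 50 choose B, 20 are indifferent.
print(share_a_bounds(30, 50, 20))  # (0.3, 0.5)
```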

The questionnaire:

Consider two identical urns each of which has 100 balls colored red and blue. One of the urns has an unknown number of balls of each color. The other one has exactly 50 red and 50 blue balls.

Balls are returned to the urns after each draw.

Part I

Q1. If a red ball is drawn you will get the prize. Would you prefer to draw the ball from Urn A or Urn B?

Q2. If a blue ball is drawn you will get the prize. Would you prefer to draw the ball from Urn A or Urn B?

Part II

From now on you can get the prize only if the red ball is drawn.

Q3. 12 people before you preferred urn A to urn B when asked to draw a red ball. Which urn would you prefer now?

Q4. 12 out of 20 people before you preferred urn A to urn B when asked to pick a red ball. Which urn would you prefer now?

Q5. 12 people out of 20 picked a red ball from urn A. Which urn would you prefer now?

Q6. 16 people out of 20 picked a red ball from urn A. Which urn would you prefer now?

Q7. 120 out of 200 people picked a red ball from urn A. Which urn would you prefer now?

Part III

Q8. If you pick the wrong color you can return the ball to the urn and instead pick again. Will you pick again from the same urn?

Q9. 8 out of 10 people who used their second chance before you, have changed the urn to pick the ball. Would you prefer now the same urn for your second chance?

Part IV

Q10. Please rate your knowledge of probability and statistics on the scale of 1 to 5, 1 being unfamiliar with probability and statistics (basic knowledge or no knowledge) and 5 being solid in these subjects (have taken a course on them, studied them somewhere else etc.).

Q11. Sex (M = male, F = female)

Q12. Age (subjects choose on the following scale: below 25, 25 – 35, 36 – 45, 46 – 55, 56 – 65, above 65)

END OF THE QUESTIONNAIRE.
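Questions Q5 to Q7 communicate frequencies of red draws from urn A with different precision. As an illustration only, and not the model used in the paper, the sketch below treats each message as 20 or 200 independent draws with replacement and updates a uniform Beta(1, 1) prior on the share of red balls in urn A; the continuous prior approximates the discrete 0 to 100 red balls the urn can actually hold. The function `beta_posterior` is hypothetical.

```python
from math import sqrt


def beta_posterior(red, draws, a=1.0, b=1.0):
    """Posterior mean and standard deviation of the share of red balls in urn A.

    Starts from a Beta(a, b) prior (a = b = 1 is uniform) and observes `red`
    red balls in `draws` draws with replacement (conjugate Beta-binomial update).
    """
    a_post, b_post = a + red, b + draws - red
    total = a_post + b_post
    mean = a_post / total
    var = a_post * b_post / (total ** 2 * (total + 1))
    return mean, sqrt(var)


for label, red, draws in [("Q5", 12, 20), ("Q6", 16, 20), ("Q7", 120, 200)]:
    mean, sd = beta_posterior(red, draws)
    print(f"{label}: posterior mean {mean:.3f}, sd {sd:.3f}")
```

Q5 and Q7 convey the same 60% frequency, but the posterior standard deviation under Q7 (about 0.034) is roughly a third of that under Q5 (about 0.10). Under this prior all three signals move the posterior mean above 0.5, so a subject who updated in this way and maximized expected value would switch to urn A in Q5 to Q7.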

Experiment instructions (only used in lab experiments):

Welcome. This is an experiment on decision-making. If you read the following instructions carefully, you can, depending on your decisions, earn a considerable amount of money. It is therefore very important that you read these instructions carefully.

It is prohibited to communicate with the other participants during the experiment. If you have a question at any time raise your hand and the experimenter will come to your desk to answer it. Please switch off your mobile phone or any other devices which may disturb the experiment. Please use the computer only for entering your decisions. Please only use the decision forms provided, do not start or end any programs, and do not change any settings.

During the experiment you can earn "points". At the end of the experiment these points will be converted to cash at the following rate:

1 point = £2

In this experiment we guarantee a minimum earning of £5. The maximum you can earn in this experiment is £32. The experiment consists of three parts. In each of them you will deal with two urns containing red and blue balls each. One urn (urn B) has exactly 50 red balls and 50 blue balls in it. The other urn (urn A) has also 100 balls but the exact number of red and blue balls in it is unknown; you only know that balls in it are either red or blue, there can be no balls of any other color.
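The conversion of points into cash can be sketched as below. The instructions do not say how the £5 minimum combines with point earnings; this sketch assumes it acts as a floor (pay the larger of £5 and the converted points) rather than as an add-on fee, which is the reading under which the £32 maximum corresponds to a whole number of points (16). The names are hypothetical.

```python
POINT_VALUE_GBP = 2  # exchange rate stated in the instructions: 1 point = GBP 2
MINIMUM_GBP = 5      # guaranteed minimum earning


def payout_gbp(points):
    """Convert points to pounds, assuming the minimum is a floor, not a fee."""
    return max(MINIMUM_GBP, POINT_VALUE_GBP * points)


for pts in (0, 2, 3, 9, 16):
    print(pts, payout_gbp(pts))
```

Under the alternative additive reading, pay would be `MINIMUM_GBP + POINT_VALUE_GBP * points`, and a £32 maximum would then require 13.5 points.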

In the first part of the experiment you will be asked to select either urn A or urn B. The computer will draw a ball from your selected urn. If this ball is of the designated color (red or blue, depending on the question), you will get the prize (1 point). If there is an option "indifferent" and you choose this option, the computer will decide for you, from which urn the ball should be drawn. If you prefer to make the decision yourself, please select either urn A or urn B, not "indifferent". This part contains four questions: two with indifference option and two without it.
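The draw procedure described in this paragraph can be simulated as follows. Two points are assumptions not stated in the instructions: that the computer resolves "indifferent" by picking each urn with equal probability, and that urn A holds a fixed number `red_in_a` of red balls chosen by the experimenter. The function `draw_trial` is a hypothetical sketch, not the software used in the lab.

```python
import random


def draw_trial(choice, color, red_in_a, rng):
    """Simulate one Part I question.

    choice: 'A', 'B' or 'indifferent'; color: designated color, 'red' or 'blue'.
    red_in_a: red balls among the 100 in urn A (unknown to subjects).
    Returns (urn used, color drawn, points won).
    """
    if choice == "indifferent":
        urn = rng.choice(["A", "B"])  # the computer decides for the subject
    else:
        urn = choice
    red = red_in_a if urn == "A" else 50  # urn B always has 50 red, 50 blue
    ball = "red" if rng.randrange(100) < red else "blue"
    return urn, ball, int(ball == color)  # prize of 1 point on a match


rng = random.Random(2024)
print(draw_trial("indifferent", "red", 30, rng))
```

Passing a seeded `random.Random` instance keeps the simulation reproducible without touching the global random state.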

In the second part you will be given more information. Each piece of information refers to a new situation. In all situations, urn B contains 50 red balls and 50 blue balls, as before. Please treat each question separately, disregard information from previous questions and focus only on the information given in the particular question you are answering. You can assume that this information is a correct description of the situation. It is NOT used to misguide you. This part contains five questions. In each of them you will get the prize (1 point) if the computer draws a RED ball from your selected urn, and zero if the drawn ball is blue.

In the third part we will ask questions about yourself and the way you made your decisions. Please provide accurate answers, you can earn extra points here. We might also ask you to answer one of the previous questions again. Please treat this question as a new one, you are not required to remember and reproduce your answer to a similar question in the first or second part, just give an accurate answer as if you didn't answer this question before. This question concludes the experiment.

Once the experiment ends, you will be shown your choices, the colors of balls drawn from the respective urns, and your earnings from the respective questions. All extra earnings and the total payoff will be shown in a separate screen.

Thank you for your participation!

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vinogradov, D., Makhlouf, Y. Signaling probabilities in ambiguity: who reacts to vague news? Theory Decis 90, 371–404 (2021). https://doi.org/10.1007/s11238-020-09759-z

DOI: https://doi.org/10.1007/s11238-020-09759-z