Abstract

National statistical organizations often rely on non-exhaustive surveys to estimate industry-level production functions in years in which a full census is not conducted. When analyzing data from non-census years, we propose selecting an estimator based on a weighting of its in-sample and predictive performance. We compare Cobb–Douglas functional assumption to existing nonparametric shape constrained estimators and a newly proposed estimator. For simulated data, we find that our proposed estimator has the lowest weighted errors. Using the 2010 Chilean Annual National Industrial Survey, a Cobb–Douglas specification describes at least 90% as much variance as the best alternative estimators in practically all cases considered providing two insights: the benefits of using application data for selecting an estimator, and the benefits of structure in noisy data. Finally for the five largest manufacturing industries, we find that a 30% sample, on average, achieves 60% of the R-squared value that would have been achieved with a full census; however, the variance across industries and samples is large.

Similar content being viewed by others

Notes

We will primarily focus on establishment censuses and surveys performed by census bureaus where an establishment is defined as a single physical location where business is conducted or where services or industrial operations are performed. An example is the U.S. annual survey of manufacturers. This survey is conducted annually, except for years ending in 2 and 7 in which a census is performed, Foster et al. (2008). This survey includes approximately 50,000 establishments selected from the census universe of 346,000, or approximately a 15% sampling, Fort et al. (2013).

The Chilean National Institute of Statistics uses survey and census. Whereas, in other parts of economics the terms sample and population are commonly used for the same concept.

A body of economic literature of a less computational and more aggregate in nature is the growth accounting literature, Solow (1957) and Barro et al. (2003). These methods rely on price information, a cost minimization assumption and parametric forms to deterministically compute the coefficients of a first order approximation of a production function using observed input cost shares (see for example Syverson 2011). This literature foregoes any model adequacy check of the production model.

Because of our focus on a conditional-mean estimator, we do not model inefficiency and therefore do not report inefficiency levels.

For example Cobb–Douglas is widely used in Monte Carlo simulations, Andor and Hesse (2014); however, the product economics literature does not provide any support for this standard assumption. The Trans-log functional function is more flexible than the Cobb–Douglas functional form; however, the function parameters and domain have to be selected carefully to assure the Trans-log function satisfies concavity. For this reasons, we sacrifice flexibility and select the Cobb–Douglas functional form so that estimates consistent with concavity are more likely to result.

A partitioned region is referred to as a basis region, Hannah and Dunson (2013).

We use lowercase letters as indices and capital letters to indicate the number of components.

The tolerance is set to 1% and CNLS is used as the large model in all of our examples. Initially, we do not use the Generalized Cross Validation (GCV) score approximation used by Hannah and Dunson (2013), because they assert that GCV’s predictive results are only comparable with full cross validation strategies for problems with \(n\ge 5000\), which are larger than the datasets we consider in this paper.

The advantage of the machine learning based comparison methods over standard Monte Carlo simulation methods is that we do not need to specify a functional form to generate data. However, we are only able to make statements about the estimator for particular application dataset under consideration. Monte Carlo simulation methods might still be preferred to explore a larger set of potential data generation processes and their associated production functions.

In-sample error is the deviations between the estimated function and the true function measured on the input vectors that appear in the observed sample.

Predictive error is the deviations between the estimated function and the true function measured on input vectors that are unobserved in the sample.

The distinction between comparing the true function and observed output is through Monte Carlo simulations we know the true function and can compare our estimators to the true function. However, when using application data, we can only compare to the observed output in the sample.

Note that the estimator “hat” character is over \(E(Er{r}_{ISf}^{nL})\) rather than \(Er{r}_{ISf}^{nL}\), the in-sample error for the particular learning set fitted with the production function.

The concept described here is referred to as sample-specificity or the estimator’s performance in a specific sample. If we use the same set of data (the training set) for estimating the model and for evaluating the model there are significant benefits to overfitting. Even if we use the same DGP to generate more observations for the input vectors used in fitting the model, we would expect a worse fit because this new output vector would have a different draw of random error terms. It is this difference between evaluating fit on the training set and evaluating fit on a new draw of the output vector for the same input vectors. This is why we use the algorithm proposed in Hastie et al. (2009).

Computing the in-sample error provides a more detailed measure of the quality of the production function on a full set than the learning error\({\overline{MSE}}_{yIS}^{nL}={\sum }_{v=1}^{V}{\sum }_{i=1}^{{n}_{L}}{({\hat{f}}_{vi}-{Y}_{vi})}^{2}/{n}_{L}v\), because it averages performance across many possible \({\epsilon }_{i}\) residual values for the learning set input vector.

Specifically, if we intend to use the learning set’s residual sum of squares, calculation of an estimator \({\sigma }^{2}\) would require knowledge of the functional estimator’s effective number of parameters. However, effective parameters can be difficult to calculate for both nonparametric and sieve estimators.

As a reviewer pointed out, the parametric Cobb–Douglas in multiplicative form with an additive error term is not only challenging in cross-sectional data, but also in a panel setting due to the incidental parameters issue. Specifically analyzing the asymptotic performance, the variance of the noise term is a function of both \(T\) and \(N\), and unless both of these values approach infinite, the estimate of the noise variance will not converge to the true value.

We experimented with a variety of starting values including linear and Cobb–Douglas estimates, zero values (the default values in Matlab), and the true values. While the starting values of zero perform quit poorly, all other starting value result is very similar estimates.

Note that the definition of \({R}_{FS}^{2}\) implies that if the evaluated estimator fails to explain more variability than the simply taking the mean of the output variable over the full sample, we will instead use the mean as our estimator.

We reemphasize that unlike cross validation procedures in which the goal is to estimate \(E(Er{r}_{y}^{n})\), our goal is to estimate \(E(Er{r}_{y}^{nL})\).

Again, expectations and averages over the error and optimism metrics discussed are done over all possible learning sets of a given size.

The maximum attainable, i.e., using the full set as the learning set, noise-to-total variance levels of our real datasets are very similar to those of our low noise settings. Compare \((1-{\overline{MSE}}_{FSy}^{nL})/var({Y}_{FS})\) in our low noise settings against the \({R}_{FS}^{2}\) results of the \(100\)% survey real datasets.

Recall we use the Cobb–Douglas function with an additive error term is used to maintain consistency of the error structure across estimators.

While nonparametric estimators avoid potential functional form misspecification, nonparametric estimates of functional derivatives tend to have higher variances than parametric estimators, Hansen (2018).

Within the class of monotonically increasing and concave production function, the additional assumption of homotheticity can be imposed. A homothetic production function \(f({\boldsymbol{X}})\) can be written as \(g(h({\boldsymbol{X}}))\) where \(g\) is a monotonically increasing function and \(h\) is homogeneous of degree 1. Practically what nonhomothetic implies is that while input isoquants are convex for any output level, the shape of the input isoquant can vary across output levels.

We calculate the MRTS as the marginal product of capital as a ratio to the marginal product of labor. Thus, we impose a positive sign convention.

As mentioned, increased testing set variance as the learning set size increases does not seem to be large enough to affect the variance of our estimates across the different learning and testing set sizes considered.

In Appendix 6.6, we further explore the sensitivity of the results shown in Fig. 3 to our assumption of a finite population of firms and discuss the consequences of considering an infinite amount of unobserved firms when assessing the predictive capabilities of our estimators, thus only evaluating estimator performance in terms of predictive error.

These are hyperplanes which have zero coefficients on some input dimensions, implying it is possible to obtain output without the zero-coefficient inputs.

Due to the increased computational burden of using larger datasets, we present results for a single replicate of the DGP for each sample size and only include learning set results. For this section we report RMSE results rather than MSE results because the latter are small and the differences are indistinguishable across settings.

References

Afriat SN (1967) The construction of utility functions from expenditure data. Int Econ Rev 8(1):67–77

Afriat SN (1972) Efficiency estimation of production functions. Int Econ Rev 13(3):568–598

Aigner D, Lovell CK, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Economet 6(1):21–37

Allen DM (1974) The relationship between variable selection and data agumentation and a method for prediction. Technometrics 16(1):125–127

Andor M, Hesse F (2014) The stoned age: the departure into a new era of efficiency analysis? A Monte Carlo comparison of stoned and the “oldies” (sfa and dea). J Product Anal 41(1):85–109

Andor M, Parmeter C (2017) Pseudolikelihood estimation of the stochastic frontier model. Appl Econ 49(55):5651–5661

Balázs G, György A, Szepesvári C (2015) Near-optimal max-affine estimators for convex regression. Proceedings of the eighteenth international conference on artificial intelligence and statistics, PMLR 38:56–64

Barro R, Sala-i-Martin X (2003) Economic growth. The MIT Press, Cambridge, MA

Breiman L (1992) The little bootstrap and other methods for dimensionality selection in regression: X-fixed prediction error. J Am Stat Assoc 87(419):738–754

Breiman L (1993) Hinging hyperplanes for regression, classification, and function approximation. IEEE Trans Inf Theory 39(3):999–1013

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Burman P (1989) A comparative study of ordinary cross-validation, v-fold cross-validation and the repeated learning-testing methods. Biometrika 76(3):503–514

Chambers RG et al. (1988) Applied production analysis: a dual approach. Cambridge University Press. Cambridge, UK

Chen X (2007) Large sample sieve estimation of semi-nonparametric models. Handb Economet 6:5549–5632

De Loecker J, Goldberg PK, Khandelwal AK, Pavcnik N (2016) Prices, markups, and trade reform. Econometrica 84(2):445–510

Efron B (2004) The estimation of prediction error: covariance penalties and cross-validation. J Am Stat Assoc 99(467):619–632

Fare R, Rolf F, Grosskopf S, Lovell CK et al. (1994) Production frontiers. Cambridge University Press. Cambridge, UK

Fort TC, Haltiwanger J, Jarmin RS, Miranda J (2013) How firms respond to business cycles: the role of firm age and firm size. IMF Econ Rev 61(3):520–559

Foster L, Haltiwanger J, Syverson C (2008) Reallocation, firm turnover, and efficiency: selection on productivity or profitability? Am Econ Rev 98(1):394–425

Frisch R (1964) Theory of production. Springer Science & Business Media. Berlin, Germany

Geisser S (1975) The predictive sample reuse method with applications. J Am Stat Assoc 70(350):320–328

Hannah L, Dunson D (2012) Ensemble methods for convex regression with applications to geometric programming based circuit design. ICML'12 Proceedings of the 29th International Conference on International Conference on Machine Learning, 147–154

Hannah L, Dunson D (2013) Multivariate convex regression with adaptive partitioning. J Mach Learn Res 14(1):3261–3294

Hansen BE (2018) Econometrics. University of wisconsin, department of economics. Madison, WI

Hastie T, Tibshiraniand R, Friedman J (2009) The elements of statistical learning. Springer series in statistics. Springer, New York Inc, New York

Huang R, Szepesvári C (2014) A finite-sample generalization bound for semiparametric regression: partially linear models. In Proceedings of the artificial intelligence and statistics, pp 402–410

Hwangbo H, Johnson AL Ding Y (2015) Power curve estimation: functional estimation imposing the regular ultra passum law. Working Paper, SSRN https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2621033

Kuosmanen T (2008) Representation theorem for convex nonparametric least squares. Economet J 11(2):308–325

Kuosmanen T, Saastamoinen A, Sipiläinen T (2013) What is the best practice for benchmark regulation of electricity distribution? comparison of dea, sfa and stoned methods. Energy Policy 61:740–750

Kuosmanen T, Kortelainen M (2012) Stochastic non-smooth envelopment of data: semi-parametric frontier estimation subject to shape constraints. J Product Anal 38(1):11–28

Lee C-Y, Johnson AL, Moreno-Centeno E, Kuosmanen T (2013) A more efficient algorithm for convex nonparametric least squares. Eur J Oper Res 227(2):391–400

Mazumder R, Choudhury A, Iyengar G, Sen B (2019) A computational framework for multivariate convex regression and its variants. J Am Stat Assoc 114:318–331

Olesen OB, Ruggiero J (2014) Maintaining the regular ultra passum law in data envelopment analysis. Eur J Oper Res 235(3):798–809

Olesen OB, Ruggiero J (2018) An improved afriat-diewert-parkan nonparametric production function estimator. Eur J Oper Res 264(3):1172–1188

Shephard R (1970). Theory of cost and production functions princeton university press. Princeton, New Jersey

Simar L, Zelenyuk V (2011) Stochastic fdh/dea estimators for frontier analysis. J Product Anal 36(1):1–20

Simar L, Van Keilegom I, Zelenyuk V (2017) Nonparametric least squares methods for stochastic frontier models. J Product Anal 47(3):189–204

Solow RM (1957) Technical change and the aggregate production function. Rev Econ Stat 39(3):312–320

Stone M (1974) Cross-validatory choice and assessment of statistical predictions. J R Stat Soc Ser B Methodol 36(2):111–147

Syverson C (2011) What determines productivity? J Econ Lit 49(2):326–365

White H, Wooldridge J (1991) Some results on sieve estimation with dependent observations. In: Nonparametric and semiparametric methods in econometrics and statistics: proceedings of the fifth international symposium in economic theory and econometrics, Chapter 18, Cambridge University Press, pp 459–493

Yagi D, Chen Y, Johnson AL, Kuosmanen T (2018) Shape constrained kernel-weighted least squares: Application to production function estimation for chilean manufacturing industries. J Bus Econ Stat, published online: https://doi.org/10.1080/07350015.2018.1431128

Yagi D, Chen Y, Johnson AL, Morita H (2019) An axiomatic nonparametric production function estimator: Modeling production in Japan’s cardboard industry. arXiv, Preprint at: https://arxiv.org/abs/1906.08359

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Insights from machine learning for evaluating production function estimators on manufacturing survey data

Appendix: Insights from machine learning for evaluating production function estimators on manufacturing survey data

This appendix includes:

Results for the case with 3 and 4 regressors; More extensive simulated dataset results (Appendix 6.1),

CAPNLS: Formulation and scalability to larger datasets (Appendix 6.2).

Parametric Bootstrap algorithm to calculate expected optimism (Appendix 6.3).

Comparison between different error structures for the parametric Cobb–Douglas function (Appendix 6.4).

Cobb–Douglas results with multiplicative residual assumption for Chilean manufacturing data (Appendix 6.5).

Application Results for Infinite Populations (Appendix 6.6).

Comprehensive Application Results: MPSS, MP, and MRTS (Appendix 6.7).

1.1 More extensive simulated dataset results

In this section, we provide additional Monte Carlo simulation results for the bivariate case and present results for the trivariate and tetravariate cases. The performance of all estimators deteriorates significantly due to the curse of dimensionality. The results reported are for the performance metric full set error, \(\widehat{E(Er{r}_{FS}^{nL})}\), which is a weighting of the expected in-sample error and the expected predictive error. While the parametric estimator should have the best performance in terms of expected predictive error, it is not the case for expected in-sample error. The parametric estimator is generally not able to fit the observed data with noise as well as the nonparametric estimators because of parametric estimator’s lack of flexibility. Or stated differently, the nonparametric estimators benefit from being able to overfit the observed sample. We see the effects of lack of flexibility for the parametric estimator relative to the nonparametric estimator is pronounced in higher dimensional low noise cases.

1.1.1 Bi-variate input Cobb–Douglas DGP

In this section we reproduce Table 1 of the text, but also include computational times. We run our experiments on a computer with Intel Core2 Quad CPU 3.00 GHz and 8GB RAM using MATLAB R2016b and the solvers quadprog and lsqnonlin.

1.1.2 Tri-variate input Cobb–Douglas DGP

We consider the data generation process (DGP) \({Y}_{i}={X}_{i1}^{0.4}{X}_{i2}^{0.3}{X}_{i3}^{0.2}+{\epsilon }_{i}\), where \({\epsilon }_{i} \sim N(0,{\sigma }^{2})\), \(\sigma =\{0.01,0.05,0.1,0.2,0.3,0.4\}\) for our six noise settings having the same small and large noise split as the previous example, and \({X}_{ij} \sim Unif(0.1,1)\) for \(j=1,2,3,\ i=1,\ldots ,{n}_{L}\). Again, CNLS’s expected full set errors exceed the displayed range for the learning-to-full set scenarios regardless of noise level, due to the poor predictive error values, which are partly linked to the higher proportion of ill-defined hyperplanesFootnote 29 CNLS fits. Compared to bi-variate case, the parametric estimator deteriorates relative to the nonparametric estimators, i.e., the higher errors for the parametric estimator exceed the small scale of most panels in Fig. 4.

Figure 5, however, shows that the errors given by the parametric estimator are lower than the errors for CNLS in learning-to-full settings. Further, CAP’s expected performance deteriorates relative to the bi-variate example. As in the bi-variate example, as the learning set grows, the expected full set errors gap between CAPNLS and the correctly specified parametric estimator either favors CAPNLS at every learning set size or becomes more favorable for CAPNLS as the learning set size increases. Finally, Fig. 5 shows that CAPNLS can accurately recover a production function even when noise composes nearly 85% of the variance, as shown when \(\sigma =0.4\).

In Table 11, we observe that all methods fit slightly more hyperplanes than for the bi-variate example. The increase in the number of hyperplanes with increased dimensionality is moderate for both CAPNLS and CAP at all settings. For CNLS, while the number of hyperplanes does not significantly increase for \(n=100\), it significantly increases for the two larger datasets. The runtimes for all methods are also higher than in the previous example, i.e., CAPNLS’s times nearly double, although staying below one minute for all scenarios.

1.1.3 Tetravariate input Cobb–Douglas DGP

We consider the DGP \({Y}_{i}={X}_{i1}^{0.3}{X}_{i2}^{0.25}{X}_{i3}^{0.25}{X}_{i4}^{0.1}+{\epsilon }_{i}\), where \({\epsilon }_{i} \sim N(0,{\sigma }^{2})\) and \({X}_{ij} \sim Unif(0.1,1)\) for \(j=1,2,3,4;\ i=1,\ldots ,{n}_{L}\) and \(\sigma =\{0.01,0.05,0.1,0.2,0.3,0.4\}\) for our six noise settings. The performance of all estimators further deteriorates due the curse of dimensionality. Figures 6 and 7 show that for this higher dimensional example, the parameters in the parametric estimator are increasingly difficult to learn, and thus the parametric estimator can only predict the true function up to a certain accuracy, namely \({\bar{MSE}}_{FSf}^{nL}=0.015\), and then tends to plateau at this error level even as the learning set size increases. Moreover, the benefits of CAPNLS over the other nonparametric methods are similar to the tri-variate example for the small noise settings, but significantly larger for the large noise settings. Finally, the gap between CAPNLS and all the other functional estimators, parametric or nonparametric, favors CAPNLS for all noise settings and learning set sizes.

Table 12 shows that the number of hyperplanes needed to fit the four-variate input production function does not significantly increase from the trivariate-input case for any of the methods. CAPNLS has 40–60% longer runtimes compared to the trivariate-input case. The runtime increase with dimensionality; however, this is not a severe concern, because the input information to fit a production function (or output in the case of a cost function) rarely exceeds four variables. The maximum recorded runtime for CAPNLS is still below two minutes, i.e., it is not large in absolute terms.

1.1.4 More extensive simulated dataset results

Tables 13–30 provide more detail results. This information was show in Figs. 1 and 2 in the main text and Figs. 4–7 in the Appendix.

1.2 CAPNLS: formulation and scalability to larger datasets

1.2.1 CAPNLS as a series of quadratic programs

Taking advantage of the linearly-constrained quadratic programming structure of CAPNLS is essential to achieve computational feasibility. Therefore, we write Problem (5) in the standard form

Starting with the objective function from (4), we let \(\tilde{{\boldsymbol{X}}}=({\boldsymbol{1}},{\boldsymbol{X}})\) and write

where we have dropped constant \({\sum }_{i=1}^{N}{Y}_{i}^{2}\) and multiplied times one half. To write the last expression in Eq. (6.2.2) in standard form, we first write \({\sum }_{i=1}^{N}{({{\boldsymbol{\beta }}}_{[i]}^{T}{\tilde{{\boldsymbol{X}}}}_{i})}^{2}\) using matrix operations. We define observation-to-hyperplane \(n(d+1)\times K(d+1)\)-dimensional mapping matrix \(P\), with elements \(P((i-1)(d+1)+j,([i]-1)(d+1)+i)\) = \({\tilde{X}}_{ij},\ i=1,\cdots \ ,n,\ j=1,\cdots \ ,d+1\) and all other elements equal to zero. Similarly, we define \(n\times n(d+1)\)-dimensional observation-specific vector product matrix \(S\), with elements \(S(i,(i-1)(d+1)+l)=1\) for \(l=1,\cdots \ ,3,i=1,\cdots \ ,n,j=1,\cdots \ ,d+1\). Then, we concatenate vectors \({({{\boldsymbol{\beta }}}_{k})}_{(k=1)}^{K}\) in \(K(d+1)\times 1\)-dimensional vector \({\boldsymbol{\beta }}\). It follows that

from which we have that \(H={P}^{T}({S}^{T}S)P\) and \(g=-{P}^{T}{S}^{T}{\boldsymbol{Y}}\).

To write in the Afriat Inequality constraints as \(nK\times K(d+1)\) - dimensional matrix \(A\), we let elements \(A\left(\right.K(i-1)+k,\ j+(d+1)([i]-1)\left)\right.\)= \({\tilde{X}}_{ij},\ i=1,\cdots \ ,n,\ j=1,\cdots \ ,d+1,\ k=1,\cdots \ ,K,\) and let all other elements equal zero. Finally, we define \(K(d+1)\) – dimensional vector \({\boldsymbol{l}}\) to have elements \({\boldsymbol{l}}\left(\right.(k-1)(d+1)+1\left)\right.=0,\ k=1,\cdots \ ,K\), and all other elements be equal to negative infinity.

1.2.2 Scalability of CAPNLS to larger datasets

To demonstrate the performance of CAPNLS in large data sets, we revisit the DGP used in the Tri-variate case, specifically, \({Y}_{i}={X}_{i1}^{0.4}{X}_{i2}^{0.3}{X}_{i3}^{0.2}+{\epsilon }_{i}\), where \({\epsilon }_{i} \sim N(0,{\sigma }^{2})\), \(\sigma =0.1\) and \({X}_{ij} \sim Unif(0.1,1)\) for \(j=1,2,3,\ i=1,\cdots \ ,n\), and \(n=500,1000,2000,3000\), and \(5000\). Table 31 reports the estimator’s performance.Footnote 30

We first conduct standard CAPNLS analysis and report learning errors, number of fitted hyperplanes and runtime results. Runtimes for datasets up to \(2000\) observations are well below the one hour threshold, but there are significant scalability challenges for datasets larger than \(2000\) observations. Thus, we apply the Fast CAP stopping criterion in Hannah and Dunson (2013), which measures the GCV score improvement by the addition of one more hyperplane and stops the algorithm if no improvement has been achieved in two consecutive additions. Unlike Fast CAP, however, we apply it directly to the learning error against observations. We denote the results for those runs with the CAPNLSF superscript and observe that differences are minimal compared to following our standard partitioning strategy. This alternative stopping rule results in a highly scalable algorithm which can fit datasets up to \(5000\) observations in \(\sim\!\! 40\) minutes.

1.3 Parametric Bootstrap algorithm to calculate expected optimism

We apply the following algorithm from Efron (2004) to compute in-sample optimism. First, we assume a Gaussian density \(p({\boldsymbol{Y}})=N(\hat{{\boldsymbol{Y}}},{\hat{\sigma }}^{2}{\boldsymbol{I}})\), where \({\boldsymbol{Y}}\) is the vector of estimated output values of the estimator for which we are assessing the in-sample optimism. We use \({\boldsymbol{I}}\) to indicate an identity matrix. We obtain \({\sigma }^{2}\) from the residuals of a “big” model presumed to have negligible bias. Given CNLS’s high flexibility and complex description (many hyperplanes), we choose it as our “big” model. Although obtaining an unbiased estimate for \({\sigma }^{2}\) from CNLS’s residuals is complicated, i.e., there are no formal results regarding the effective number of parameters CNLS uses, using \(MS{E}_{yLearn}^{CNLS}\) as \({\sigma }^{2}\) results in a downward biased estimator of \({\sigma }^{2}\). This downward bias in fact results in improved efficiency for the parametric bootstrap algorithm and is an example of a “little” bootstrap, see Breiman (1992). Thus, we let \(\hat{\sigma }=MS{E}_{yLearn}^{CNLS}\). Efron (2004) then suggests to run a large number \(B\) of simulated observations \({{\boldsymbol{Y}}}^{* }\) from \(p({\boldsymbol{Y}})\), fit them to obtain estimates \({\hat{{\boldsymbol{Y}}}}^{* }\), and estimate \(co{v}_{i}=cov({\hat{Y}}_{i},{Y}_{i})\) computing

We select \(B=500\) for all our experiments based on observed convergence of the \({\sum }_{i=1}^{N}{\hat{cov}}_{i}\) quantity. Further, we note that if the researcher is not comfortable with the assumption made about the size of \(MS{E}_{yLearn}^{CNLS}\) relative to \({\sigma }^{2}\), sensitivity analysis (by adding a multiplier \(c\,>\,1\), such that \(p({\boldsymbol{Y}})=N(\hat{{\boldsymbol{Y}}},c{\hat{\sigma }}^{2}{\boldsymbol{I}})\)) can be performed. Finally, we also note that non-Gaussian distributions can be used to draw the bootstrapped \({{\boldsymbol{Y}}}^{* }\) vectors. This is especially useful when considering inefficiency, because it can imply a skewed distribution.

1.4 Comparison between different error structures for the parametric Cobb–Douglas function

This Appendix compares the performance of the standard log-linear Cobb–Douglas with an additive error estimator and the multiplicative Cobb–Douglas with an additive error estimator. Figures 8–13 consider the bivariate, trivariate, and tetravariate case with both a low and high noise setting. We find both parametric estimators preform very similarly with the correctly specified additive error estimator performing slightly better.

1.5 Further Cobb–Douglas results with multiplicative residual assumption for Chilean manufacturing data

This section presents the parameter estimates for the additive Cobb–Douglas specification. Estimates are presented for the five largest industries in the Chilean manufacturing data. Table 32 shows that returns to scale (RTS) is decreasing (DRS) for Other Metal Products (2899), Wood (2010) and Structural Use Metal (2811). However, RTS are increasing (IRS) for Plastics (2520) and Bakeries (1541). All RTS estimates are with an additive factor of \(0.2\) from constant returns to scale.

1.5.1 Cobb–Douglas results with multiplicative residual

In this subsection, we reconstruct Table 3 from the main text, but consider the alternative parametric estimator, a log-linear Cobb–Douglas function with an multiplicative error term (CDM).

1.6 Application results for infinite populations

In this Appendix we explore the sensitivity of our application insights in the case when predictive ability at any point of the production function is equally important. This represents a key departure from the assumptions made in the main body of the paper, as it translates into not weighting in-sample and predictive errors. Rather, since we are interested in the descriptive ability of the fitted production function on an infinite number of unobserved input vectors, we only consider the predictive error. To illustrate the consequences of this alternative assumptions in detail, we present our results in Fig. 14 which is analogous to Fig. 3. In Fig. 14 we show replicate-specific as well as averaged \({R}^{2}\) values. In this case, rather than using \({R}_{FS}^{2}\) as our predictive power indicator, we use \(R_{Pred}^2 = \max (1 - (E(\widehat {Er{r_y}})/Var({Y_{FS}}),0))\).

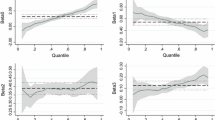

In Fig. 14, we observe that for the majority of studied industries, weighting the in-sample error with the predictive error, the direct consequence of our finite full population assumption, does not affect the diagnostic of the mean predictive power of our production function models. Using our notation, this means that for most industries the expected \({R}_{Pred}^{2}\) for each given subsample size did not differ greatly from \({R}_{FS}^{2}\). However, the \({R}_{Pred}^{2}\) figures have significantly higher variance than their \({R}_{FS}^{2}\) counterparts for each subsample size. For all industries except for industry code 2811, we obtain at least one replicate with negligible predictive power. For some industries, such as Industry Codes 2520 and 1541, this causes the predictive power bound to be very wide (although the upper bound and mean values increase monotonically in the subsample size).

1.7 Comprehensive application results: MPSS, MP, and MRTS

Tables 34–39 provide more detail results regarding MPSS, MP for both capital and labor, and MRTS for different percentiles of the four inputs (capital, labor, energy, and services). This information was summarized in Tables 5–9 in the main text.

Rights and permissions

About this article

Cite this article

Preciado Arreola, J.L., Yagi, D. & Johnson, A.L. Insights from machine learning for evaluating production function estimators on manufacturing survey data. J Prod Anal 53, 181–225 (2020). https://doi.org/10.1007/s11123-019-00570-9

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11123-019-00570-9

Keywords

- Convex nonparametric least squares

- Adaptive partitioning

- Multivariate convex regression

- Nonparametric stochastic frontier