Abstract

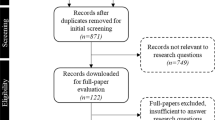

Although systematic reviews are considered the primary means for generating cumulative knowledge and their results are often used to inform evidence-based practice, the robustness of their meta-analytic summary estimates is rarely investigated. Consequently, the results of published systematic reviews and, by extension, our cumulative knowledge have come under scrutiny. Using a comprehensive approach to sensitivity analysis, we examined the impact of outliers and publication bias, as well as their combined effect, on meta-analytic results concerning employee turnover. Our analysis of 205 distributions from seven recently published meta-analyses revealed that meta-analytic results on turnover are often affected by publication bias and, less frequently, by outliers. Moreover, we observed that 33% of the recommendations for practice provided in the original systematic reviews on turnover were not robust to outliers and/or publication bias; if implemented by practitioners, these recommendations could yield unexpected consequences and, thus, widen the science-practice gap. We argue that practitioners should be skeptical about implementing practices recommended by meta-analytic studies that do not include sensitivity analyses. To improve sensitivity analysis reporting rates and, thus, the transparency of meta-analytic findings and recommendations for practice, we introduce open-access software (metasen.shinyapps.io/gen1/) that conducts all analyses performed in the current study. We provide guidelines and recommendations for future turnover studies and for sensitivity analyses in the meta-analytic context.


Notes

Bosco et al. (2015, Table 5) reported |r| = .10 and median N = 306 among 270 attitude-turnover relations, which corresponds to observed power of .42 for a two-tailed test under the bivariate normal model in the G*Power software (Faul, Erdfelder, Buchner, & Lang, 2009). The corresponding values for relations between attitudes and all types of behaviors were |r| = .16 and median N = 220 across 7958 correlations, which corresponds to observed power of .66.
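The power figures above can be approximated in a few lines. The sketch below is a minimal illustration, assuming the common Fisher-z normal approximation for a two-tailed test of ρ = 0 at α = .05; it is not G*Power's exact bivariate normal routine, and the function name `power_correlation` is hypothetical. Under this approximation, both reported values are reproduced to two decimals.

```python
import math
from statistics import NormalDist


def power_correlation(r, n, alpha=0.05):
    """Approximate two-tailed power of the test of H0: rho = 0.

    Assumption: Fisher's z approximation, z = atanh(r) * sqrt(n - 3),
    rather than G*Power's exact bivariate normal computation.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    delta = math.atanh(abs(r)) * math.sqrt(n - 3)  # expected test statistic
    # Rejection probability in the upper tail plus the (tiny) lower tail.
    upper = 1 - std_normal.cdf(z_crit - delta)
    lower = std_normal.cdf(-z_crit - delta)
    return upper + lower


if __name__ == "__main__":
    # Median values reported by Bosco et al. (2015, Table 5).
    print(round(power_correlation(0.10, 306), 2))  # 0.42 (attitude-turnover)
    print(round(power_correlation(0.16, 220), 2))  # 0.66 (attitudes, all behaviors)
```

Small discrepancies from G*Power can appear in the third decimal because the exact routine does not rely on the Fisher-z approximation.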

We use the term “naïve” to denote meta-analytic results that are unadjusted for publication bias and/or other sources of bias, such as outliers (Copas & Shi, 2000).

Most analyses were conducted on Fisher’s z-transformed Pearson correlation coefficients; in these cases, results were back-transformed into Pearson’s r for interpretation. The precision-effect test and precision-effect estimate with standard error (PET-PEESE) and the one-sample-removed analyses were conducted on untransformed correlation coefficients.
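To make the two scales concrete, the sketch below pools correlations on Fisher’s z scale and back-transforms the mean to r, and it runs a one-sample-removed (leave-one-out) pass on untransformed r. It is a minimal illustration under stated assumptions: the fixed-effect weights n - 3 on the z scale and the sample-size weights on the r scale are choices made for this example, not necessarily those used in the study, and both function names are hypothetical.

```python
import math


def pooled_r_fisher(rs, ns):
    """Fixed-effect mean computed on Fisher's z scale, reported as r.

    Each r is transformed with z = atanh(r); var(z) = 1 / (n - 3), so the
    inverse-variance weight is n - 3. The pooled z is back-transformed
    with tanh for interpretation.
    """
    zs = [math.atanh(r) for r in rs]
    ws = [n - 3 for n in ns]
    z_bar = sum(w * z for w, z in zip(ws, zs)) / sum(ws)
    return math.tanh(z_bar)


def one_sample_removed(rs, ns):
    """Leave-one-out means on the untransformed r scale.

    Hypothetical weighting: each r is weighted by its sample size n
    (a bare-bones, sample-size-weighted mean). Returns one pooled
    estimate per omitted sample, in the original order.
    """
    estimates = []
    for i in range(len(rs)):
        kept = [(r, n) for j, (r, n) in enumerate(zip(rs, ns)) if j != i]
        total_n = sum(n for _, n in kept)
        estimates.append(sum(r * n for r, n in kept) / total_n)
    return estimates


if __name__ == "__main__":
    print(round(pooled_r_fisher([0.10, 0.50], [103, 103]), 2))  # 0.31
    print(one_sample_removed([0.10, 0.30, 0.50], [100, 100, 100]))
```

Because atanh stretches values near ±1, the back-transformed mean of .10 and .50 (equal n) is about .31 rather than the raw average of .30. A wide spread among the leave-one-out means would point to an influential sample.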

We examined whether our robustness results changed after removing all omnibus distributions (i.e., main effects) from our reanalysis of the seven datasets. After identifying and removing 56 omnibus distributions, we observed that 139 of the remaining 145 (96%) moderator-level distributions yielded a naïve meta-analytic mean estimate that may be misestimated to a noticeable degree (i.e., by at least 20%). This result is similar to that for the full set of distributions (i.e., omnibus and moderator-level distributions; 190/201, or 95%). Therefore, we report results for the full set of distributions henceforth.
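The screening rule can be expressed as a short check. This sketch assumes, hypothetically, that “misestimated by at least 20%” means the absolute difference between the naïve mean and at least one adjusted mean (for example, a PET-PEESE or trim-and-fill estimate) is 20% or more of the naïve mean; the source does not spell out the denominator, and the function names are illustrative. The last lines recompute the two proportions reported above.

```python
def may_be_misestimated(naive, adjusted_estimates, threshold=0.20):
    """Return True if any adjusted mean departs from the naive mean by at
    least `threshold`, expressed as a proportion of the naive mean.

    Hypothetical operationalization of the 20% rule; using the naive mean
    as the denominator is an assumption.
    """
    if naive == 0:
        raise ValueError("relative difference is undefined when naive == 0")
    return any(abs(naive - adj) / abs(naive) >= threshold
               for adj in adjusted_estimates)


def percent(part, whole):
    """Share of distributions flagged, as a percentage."""
    return 100 * part / whole


if __name__ == "__main__":
    print(may_be_misestimated(0.30, [0.29, 0.22]))  # True: .08 / .30 is about 27%
    print(may_be_misestimated(0.30, [0.29, 0.28]))  # False: largest gap is about 7%
    print(round(percent(139, 145), 1))  # 95.9 (moderator-level distributions)
    print(round(percent(190, 201), 1))  # 94.5 (full set of distributions)
```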

We greatly appreciate Dr. Julie Hancock’s willingness to share these data with us.

Cohen’s (1988) effect size benchmarks were originally intuited from results reported in the 1960 volume of the Journal of Abnormal and Social Psychology. Although Cohen’s benchmarks have become the norm, recent evidence suggests that they may not represent the effect sizes generally observed across substantive relations in the social sciences (Bosco et al., 2015; Richard, Bond, & Stokes-Zoota, 2003). Therefore, adapting guidelines from Kepes and McDaniel (2015), we consider bias “small,” “medium,” or “large” when the observed standardized mean difference between a naïve and an adjusted meta-analytic mean is less than .18, between .18 and .32, or greater than .32, respectively. These cutoffs were adapted from Bosco et al.’s (2015) benchmarks for the 33rd and 50th percentiles of effect sizes observed in applied psychology.
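The classification rule can be sketched as follows. It assumes, hypothetically, that the naïve and adjusted means are correlations converted to the d metric with the standard formula d = 2r / √(1 - r²) before differencing; the source does not state how the standardized difference was computed, and the function names are illustrative. Boundary handling follows the wording above: below .18 is small, .18 through .32 is medium, and above .32 is large.

```python
import math


def r_to_d(r):
    """Standard conversion from a correlation to a standardized mean difference."""
    return 2 * r / math.sqrt(1 - r ** 2)


def classify_gap(gap):
    """Label an absolute d-metric gap using the .18 and .32 cutoffs."""
    gap = abs(gap)
    if gap < 0.18:
        return "small"
    if gap <= 0.32:
        return "medium"
    return "large"


def bias_size(naive_r, adjusted_r):
    """Assumption: the gap is |d(naive) - d(adjusted)|."""
    return classify_gap(r_to_d(naive_r) - r_to_d(adjusted_r))


if __name__ == "__main__":
    print(bias_size(0.30, 0.20))  # gap of about .22 -> medium
    print(round(r_to_d(0.09), 2), round(r_to_d(0.16), 2))  # 0.18 0.32
```

Under this conversion, r = .09 and r = .16 map onto the two cutoffs, which is one plausible route from correlation-metric benchmarks to a d-metric rule; that reading is an inference, not something stated here.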

References

Ada, S., Sharman, R., & Balkundi, P. (2012). Impact of meta-analytic decisions on the conclusions drawn on the business value of information technology. Decision Support Systems, 54, 521–533. https://doi.org/10.1016/j.dss.2012.07.001.

Aguinis, H., Dalton, D. R., Bosco, F. A., Pierce, C. A., & Dalton, C. M. (2011). Meta-analytic choices and judgment calls: Implications for theory building and testing, obtained effect sizes, and scholarly impact. Journal of Management, 37, 5–38. https://doi.org/10.1177/0149206310377113.

Aguinis, H., & Edwards, J. R. (2014). Methodological wishes for the next decade and how to make wishes come true. Journal of Management Studies, 51, 143–174. https://doi.org/10.1111/joms.12058.

Allen, D. G. (2008). Retaining talent: A guide to analyzing and managing employee turnover. SHRM Foundation Effective Practice Guidelines Series, 1–43.

Aytug, Z. G., Rothstein, H. R., Zhou, W., & Kern, M. C. (2012). Revealed or concealed? Transparency of procedures, decisions, and judgment calls in meta-analyses. Organizational Research Methods, 15, 103–133. https://doi.org/10.1177/1094428111403495.

Banks, G. C., Field, J. G., Oswald, F. L., O’Boyle, E. H., Landis, R. S., Rupp, D. E., & Rogelberg, S. G. (2019). Answers to 18 questions about open science practices. Journal of Business and Psychology, 34, 257–270. https://doi.org/10.1007/s10869-018-9547-8.

Banks, G. C., Kepes, S., & McDaniel, M. A. (2012). Publication bias: A call for improved meta-analytic practice in the organizational sciences. International Journal of Selection and Assessment, 20, 182–196. https://doi.org/10.1111/j.1468-2389.2012.00591.x.

Banks, G. C., Kepes, S., & McDaniel, M. A. (2015). Publication bias: Understanding the myths concerning threats to the advancement of science. In C. E. Lance & R. J. Vandenberg (Eds.), More statistical and methodological myths and urban legends (pp. 36–64). New York, NY: Routledge.

Barrick, M. R., & Zimmerman, R. D. (2005). Reducing voluntary, avoidable turnover through selection. Journal of Applied Psychology, 90, 159–166. https://doi.org/10.1037/0021-9010.90.1.159.

Bettis, R. A. (2012). The search for asterisks: Compromised statistical tests and flawed theories. Strategic Management Journal, 33, 108–113. https://doi.org/10.1002/smj.975.

Borenstein, M., Hedges, L. V., Higgins, J. P., & Rothstein, H. R. (2009). Introduction to meta-analysis. West Sussex: Wiley.

Bosco, F. A., Aguinis, H., Singh, K., Field, J. G., & Pierce, C. A. (2015). Correlational effect size benchmarks. Journal of Applied Psychology, 100, 431–449. https://doi.org/10.1037/a0038047.

Carter, E. C., Schönbrodt, F. D., Gervais, W. M., & Hilgard, J. (2019). Correcting for bias in psychology: A comparison of meta-analytic methods. Advances in Methods and Practices in Psychological Science, 2, 115–144. https://doi.org/10.1177/2515245919847196.

Cascio, W. F. (2006). Managing human resources: Productivity, quality of work life, profits (7th ed.). Burr Ridge, IL: Irwin/McGraw-Hill.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Hillsdale, NJ: Erlbaum.

Copas, J., & Shi, J. Q. (2000). Meta-analysis, funnel plots and sensitivity analysis. Biostatistics, 1, 247–262. https://doi.org/10.1093/biostatistics/1.3.247.

Dalton, D. R., Aguinis, H., Dalton, C. M., Bosco, F. A., & Pierce, C. A. (2012). Revisiting the file drawer problem in meta-analysis: An assessment of published and nonpublished correlation matrices. Personnel Psychology, 65, 221–249. https://doi.org/10.1111/j.1744-6570.2012.01243.x.

Dunlap, W. P. (1994). Generalizing the common language effect size indicator to bivariate normal correlations. Psychological Bulletin, 116, 509–511. https://doi.org/10.1037/0033-2909.116.3.509.

Duval, S., & Tweedie, R. (2000). A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. Journal of the American Statistical Association, 95, 89–98. https://doi.org/10.1080/01621459.2000.10473905.

Eysenck, H. J. (1978). An exercise in mega-silliness. American Psychologist, 33, 517. https://doi.org/10.1037/0003-066X.33.5.517.a.

Fanelli, D. (2012). Negative results are disappearing from most disciplines and countries. Scientometrics, 90, 891–904. https://doi.org/10.1007/s11192-011-0494-7.

Faul, F., Erdfelder, E., Buchner, A., & Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41, 1149–1160. https://doi.org/10.3758/brm.41.4.1149.

Ferguson, C. J., & Brannick, M. T. (2011). Publication bias in psychological science: Prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychological Methods, 17, 120–128. https://doi.org/10.1037/a0024445.

Field, A. P., & Gillett, R. (2010). How to do a meta-analysis. British Journal of Mathematical and Statistical Psychology, 63, 665–694. https://doi.org/10.1348/000711010x502733.

Geyskens, I., Krishnan, R., Steenkamp, J.-B. E. M., & Cunha, P. V. (2009). A review and evaluation of meta-analysis practices in management research. Journal of Management, 35, 393–419. https://doi.org/10.1177/0149206308328501.

Greenhouse, J. B., & Iyengar, S. (2009). Sensitivity analysis and diagnostics. In H. Cooper, L. V. Hedges, & J. C. Valentine (Eds.), The handbook of research synthesis and meta-analysis (2nd ed., pp. 417–433). New York, NY: Russell Sage Foundation.

Grubbs, F. E. (1969). Procedures for detecting outlying observations in samples. Technometrics, 11, 1–21. https://doi.org/10.1080/00401706.1969.10490657.

Hancock, J. I., Allen, D. G., Bosco, F. A., McDaniel, K. R., & Pierce, C. A. (2013). Meta-analytic review of employee turnover as a predictor of firm performance. Journal of Management, 39, 573–603. https://doi.org/10.1177/0149206311424943.

Hancock, J. I., Allen, D. G., & Soelberg, C. (2017). Collective turnover: An expanded meta-analytic exploration and comparison. Human Resource Management Review, 27, 61–86. https://doi.org/10.1016/j.hrmr.2016.06.003.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. New York, NY: Academic Press.

Hom, P. W., Lee, T. W., Shaw, J. D., & Hausknecht, J. P. (2017). One hundred years of employee turnover theory and research. Journal of Applied Psychology, 102, 530–545. https://doi.org/10.1037/apl0000103.

Hunter, J. E., & Schmidt, F. L. (1983). Quantifying the effects of psychological interventions on employee job performance and work-force productivity. American Psychologist, 38, 473–478. https://doi.org/10.1037/0003-066x.38.4.473.

Hunter, J. E., & Schmidt, F. L. (2004). Methods of meta-analysis: Correcting error and bias in research findings (2nd ed.). Thousand Oaks, CA: Sage.

Jiang, K., Liu, D., McKay, P. F., Lee, T. W., & Mitchell, T. R. (2012). When and how is job embeddedness predictive of turnover? A meta-analytic investigation. Journal of Applied Psychology, 97, 1077–1096. https://doi.org/10.1037/a0028610.

Jick, T. D. (1979). Mixing qualitative and quantitative methods: Triangulation in action. Administrative Science Quarterly, 24, 602–611. https://doi.org/10.2307/2392366.

Kepes, S., Banks, G. C., McDaniel, M. A., & Whetzel, D. L. (2012). Publication bias in the organizational sciences. Organizational Research Methods, 15, 624–662. https://doi.org/10.1177/1094428112452760.

Kepes, S., Bennett, A. A., & McDaniel, M. A. (2014). Evidence-based management and the trustworthiness of our cumulative scientific knowledge: Implications for teaching, research, and practice. Academy of Management Learning & Education, 13, 446–466. https://doi.org/10.5465/amle.2013.0193.

Kepes, S., Bushman, B. J., & Anderson, C. A. (2017). Violent video game effects remain a societal concern: Reply to Hilgard, Engelhardt, and Rouder (2017). Psychological Bulletin, 143, 775–782. https://doi.org/10.1037/bul0000112.

Kepes, S., & McDaniel, M. A. (2015). The validity of conscientiousness is overestimated in the prediction of job performance. PLoS One, 10, e0141468. https://doi.org/10.1371/journal.pone.0141468.

Kepes, S., McDaniel, M. A., Brannick, M. T., & Banks, G. C. (2013). Meta-analytic reviews in the organizational sciences: Two meta-analytic schools on the way to MARS (the Meta-Analytic Reporting Standards). Journal of Business and Psychology, 28, 123–143. https://doi.org/10.1007/s10869-013-9300-2.

Kepes, S., & Thomas, M. A. (2018). Assessing the robustness of meta-analytic results in information systems: Publication bias and outliers. European Journal of Information Systems, 27, 90–123. https://doi.org/10.1080/0960085x.2017.1390188.

Kristof-Brown, A. L., Zimmerman, R. D., & Johnson, E. C. (2005). Consequences of individuals’ fit at work: A meta-analysis of person–job, person–organization, person–group, and person–supervisor fit. Personnel Psychology, 58, 281–342. https://doi.org/10.1111/j.1744-6570.2005.00672.x.

Lee, T. W., Hom, P. W., Eberly, M. B., Li, J., & Mitchell, T. R. (2017). On the next decade of research in voluntary employee turnover. Academy of Management Perspectives, 31, 201–221. https://doi.org/10.5465/amp.2016.0123.

Macaskill, P., Walter, S. D., & Irwig, L. (2001). A comparison of methods to detect publication bias in meta-analysis. Statistics in Medicine, 20, 641–654. https://doi.org/10.1002/sim.698.

McGaw, B., & Glass, G. V. (2016). Choice of the metric for effect size in meta-analysis. American Educational Research Journal, 17, 325–337. https://doi.org/10.3102/00028312017003325.

McShane, B. B., Böckenholt, U., & Hansen, K. T. (2016). Adjusting for publication bias in meta-analysis: An evaluation of selection methods and some cautionary notes. Perspectives on Psychological Science, 11, 730–749. https://doi.org/10.1177/1745691616662243.

Moreno, S. G., Sutton, A. J., Turner, E. H., Abrams, K. R., Cooper, N. J., Palmer, T. M., & Ades, A. E. (2009). Novel methods to deal with publication biases: Secondary analysis of antidepressant trials in the FDA trial registry database and related journal publications. British Medical Journal, 339, b2981. https://doi.org/10.1136/bmj.b2981.

Nosek, B. A., Spies, J. R., & Motyl, M. (2012). Scientific utopia: II. Restructuring incentives and practices to promote truth over publishability. Perspectives on Psychological Science, 7, 615–631. https://doi.org/10.1177/1745691612459058.

O’Boyle, E. H., Rutherford, M. W., & Banks, G. C. (2014). Publication bias in entrepreneurship research: An examination of dominant relations to performance. Journal of Business Venturing, 29, 773–784. https://doi.org/10.1016/j.jbusvent.2013.10.001.

O’Boyle, E. H., Banks, G. C., & Gonzalez-Mulé, E. (2017). The chrysalis effect: How ugly initial results metamorphosize into beautiful articles. Journal of Management, 43, 376–399. https://doi.org/10.1177/0149206314527133.

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349, aac4716. https://doi.org/10.1126/science.aac4716.

Orlitzky, M. (2011). How can significance tests be deinstitutionalized? Organizational Research Methods, 15, 199–228. https://doi.org/10.1177/1094428111428356.

Park, T.-Y., & Shaw, J. D. (2013). Turnover rates and organizational performance: A meta-analysis. Journal of Applied Psychology, 98, 268–309. https://doi.org/10.1037/a0030723.

Peters, J. L., Sutton, A. J., Jones, D. R., Abrams, K. R., & Rushton, L. (2007). Performance of the trim and fill method in the presence of publication bias and between-study heterogeneity. Statistics in Medicine, 26, 4544–4562. https://doi.org/10.1002/sim.2889.

Peters, J. L., Sutton, A. J., Jones, D. R., Abrams, K. R., & Rushton, L. (2008). Contour-enhanced meta-analysis funnel plots help distinguish publication bias from other causes of asymmetry. Journal of Clinical Epidemiology, 61, 991–996. https://doi.org/10.1016/j.jclinepi.2007.11.010.

Richard, F. D., Bond, C. F., & Stokes-Zoota, J. J. (2003). One hundred years of social psychology quantitatively described. Review of General Psychology, 7, 331–363. https://doi.org/10.1037/1089-2680.7.4.331.

Rothstein, H. R., Sutton, A. J., & Borenstein, M. (2005). Publication bias in meta-analyses. In H. R. Rothstein, A. J. Sutton, & M. Borenstein (Eds.), Publication bias in meta-analysis: Prevention, assessment, and adjustments (pp. 1–7). West Sussex: Wiley.

Rousseau, D. M., & McCarthy, S. (2007). Educating managers from an evidence-based perspective. Academy of Management Learning & Education, 6, 84–101. https://doi.org/10.5465/amle.2007.24401705.

Rubenstein, A. L., Eberly, M. B., Lee, T. W., & Mitchell, T. R. (2018). Surveying the forest: A meta-analysis, moderator investigation, and future-oriented discussion of the antecedents of voluntary employee turnover. Personnel Psychology, 71, 23–65. https://doi.org/10.1111/peps.12226.

Rupp, D. E. (2011). Ethical issues faced by editors and reviewers. Management and Organization Review, 7, 481–493. https://doi.org/10.1111/j.1740-8784.2011.00227.x.

Schalken, N., & Rietbergen, C. (2017). The reporting quality of systematic reviews and meta-analyses in industrial and organizational psychology: A systematic review. Frontiers in Psychology, 8, 1–12. https://doi.org/10.3389/fpsyg.2017.01395.

Schmidt, F. L. (1992). What do data really mean? Research findings, meta-analysis, and cumulative knowledge in psychology. American Psychologist, 47, 1173–1181. https://doi.org/10.1037/0003-066x.47.10.1173.

Schmidt, F. L., & Hunter, J. E. (2003). History, development, evolution, and impact of validity generalization and meta-analysis methods, 1975-2001. In K. R. Murphy (Ed.), Validity generalization: A critical review (pp. 31–65). Mahwah, NJ: Lawrence Erlbaum.

Schmidt, F. L., & Hunter, J. E. (2015). Methods of meta-analysis: Correcting error and bias in research findings (3rd ed.). Newbury Park, CA: Sage.

Shen, W., Kiger, T. B., Davies, S. E., Rasch, R. L., Simon, K. M., & Ones, D. S. (2011). Samples in applied psychology: Over a decade of research in review. Journal of Applied Psychology, 96, 1055–1064. https://doi.org/10.1037/a0023322.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22, 1359–1366. https://doi.org/10.1177/0956797611417632.

Stanley, T. D. (2017). Limitations of PET-PEESE and other meta-analysis methods. Social Psychological and Personality Science, 8, 581–591. https://doi.org/10.1177/1948550617693062.

Stanley, T. D., & Doucouliagos, H. (2014). Meta-regression approximations to reduce publication selection bias. Research Synthesis Methods, 5, 60–78. https://doi.org/10.1002/jrsm.1095.

Steel, R. P., Hendrix, W. H., & Balogh, S. P. (1990). Confounding effects of the turnover base rate on relations between time lag and turnover study outcomes: An extension of meta-analysis findings and conclusions. Journal of Organizational Behavior, 11, 237–242. https://doi.org/10.1002/job.4030110306.

Sutton, A. J. (2005). Evidence concerning the consequences of publication and related biases. In H. R. Rothstein, A. J. Sutton, & M. Borenstein (Eds.), Publication bias in meta-analysis: Prevention, assessment, and adjustments (pp. 175–192). West Sussex: Wiley.

Terrin, N., Schmid, C. H., Lau, J., & Olkin, I. (2003). Adjusting for publication bias in the presence of heterogeneity. Statistics in Medicine, 22, 2113–2126. https://doi.org/10.1002/sim.1461.

Thoemmes, F., MacKinnon, D. P., & Reiser, M. R. (2010). Power analysis for complex mediational designs using Monte Carlo methods. Structural Equation Modeling: A Multidisciplinary Journal, 17, 510–534. https://doi.org/10.1080/10705511.2010.489379.

van Assen, M. A. L. M., van Aert, R. C. M., & Wicherts, J. M. (2015). Meta-analysis using effect size distributions of only statistically significant studies. Psychological Methods, 20, 293–309. https://doi.org/10.1037/met0000025.

Van Iddekinge, C. H., Roth, P. L., Putka, D. J., & Lanivich, S. E. (2011). Are you interested? A meta-analysis of relations between vocational interests and employee performance and turnover. Journal of Applied Psychology, 96, 1167–1194. https://doi.org/10.1037/a0024343.

Vevea, J. L., & Woods, C. M. (2005). Publication bias in research synthesis: Sensitivity analysis using a priori weight functions. Psychological Methods, 10, 428–443. https://doi.org/10.1037/1082-989X.10.4.428.

Viechtbauer, W. (2017). Meta-analysis package for R: Package ‘metafor.’ R package version 2.0-0.

Viechtbauer, W., & Cheung, M. W. L. (2010). Outlier and influence diagnostics for meta-analysis. Research Synthesis Methods, 1, 112–125. https://doi.org/10.1002/jrsm.11.


Electronic supplementary material

ESM 1

(DOCX 3953 kb)


Cite this article

Field, J.G., Bosco, F.A. & Kepes, S. How robust is our cumulative knowledge on turnover? J Bus Psychol 36, 349–365 (2021). https://doi.org/10.1007/s10869-020-09687-3


DOI: https://doi.org/10.1007/s10869-020-09687-3