Abstract

Second-order unconditionally stable schemes of linear multi-step methods, and their equivalent single-step methods, are developed in this paper. The parameters of the linear two-, three-, and four-step methods are determined for optimal accuracy, unconditional stability and tunable algorithmic dissipation. The linear three- and four-step schemes are presented for the first time. As an alternative, corresponding single-step methods, spectrally equivalent to the multi-step ones, are developed by introducing the required intermediate variables. Their formulations are equivalent to that of the corresponding multi-step methods; their use is more convenient, owing to being self-starting. Compared with existing second-order methods, the proposed ones, especially the linear four-step method and its alternative single-step one, show higher accuracy for a given degree of algorithmic dissipation. The accuracy advantage and other properties of the newly developed schemes are demonstrated by several illustrative examples.

Similar content being viewed by others

1 Introduction

Direct time integration methods are powerful numerical tools for solving the ordinary differential and differential-algebraic equations arising in structural dynamics, multi-body dynamics and many other fields. A lot of excellent integration methods have been developed and are available in the literature; they are often classified as explicit and implicit methods. Explicit methods are easy to implement, but their intrinsic conditional stability limits the step size. Implicit methods can be designed to be unconditionally stable, so they are suitable when the accuracy requirements are not very strict.

In addition, time integration methods also can be categorized as multi-step and multi-stage techniques. The multi-step methods employ the states of several previous steps to express the current one, whereas the multi-stage utilize the states of selected collocation points. The single-step methods, which only use the information of the last step, may be included in both classes and represent the connecting link between them.

The most representative methods in the multi-stage class belong to the Runge-Kutta family [6], including the explicit [7], the diagonally implicit [19], and the fully implicit Runge–Kutta methods [16]. Based on the Gauss quadrature, the fully implicit s-stage Runge–Kutta methods can reach up to 2sth-order accuracy. However, the improvement in accuracy is realized at a dramatic increase in computational cost, so these higher-order schemes are not very useful in practice. In this family, the explicit and diagonally implicit Runge–Kutta methods are more widely-used.

The composite methods, which can be classified in the multi-stage class, have been greatly developed in recent years. They divide each step into several sub-steps, in which different schemes may be adopted. For example, the combination of the trapezoidal rule and the 3-point Euler backward scheme yields the two-sub-step Bathe method [4]. It has the maximum degree of algorithmic dissipation, while also retaining preferable low-frequency accuracy. Considering this advantage, the Bathe method was found to perform well in many fields [3, 5, 36]. As a extensions of the Bathe method, several two-sub-step implicit methods with tunable algorithmic dissipation, including the Kim and Reddy [25], the Kim and Choi [23], the \(\rho _\infty \)-Bathe [35] and the simple ones without acceleration vectors [22], were subsequently proposed. All these methods include the standard Bathe method as a special case; they present the same properties with an appropriate choice of the parameters [26]. To achieve higher accuracy, the three- and four-sub-step implicit composite methods [8, 18, 29, 30, 42] were also developed, adopting similar ideas. In addition, many efforts have been devoted to the development of explicit composite methods, producing several good candidates, such as the Noh and Bathe [34], the Soares [37], and many other methods [21, 24, 43].

In the multi-step class, including many existing single-step methods, Dahlquist [11] has revealed that the methods of higher than second-order accuracy cannot achieve unconditionally stable, known as Dahlquist’s second barrier. As a result, for implicit methods in this class, second-order unconditionally stable schemes are of most concern.

Owing to the simplicity, the single-step methods have received a lot of attention after the 1950s, generating many widely-used schemes, including the Newmark method [33], the Wilson-\(\theta \) method [39], the HHT-\(\alpha \) method proposed by Hilbert, Hughes, and Taylor [15], the WBZ-\(\alpha \) method by Wood, Bossak, and Zienkiewicz [40], the generalized-\(\alpha \) (G-\(\alpha \)) method [9, 17], the GSSSS (generalized single-step single-solve) method [45], and others reviewed in [38]. These single-step methods can display the identical spectral characteristics of the corresponding linear multi-step methods [45]. However, the linear two-step method with optimal parameters [31, 44] can provide better accuracy than most existing single-step methods under the same degree of algorithmic dissipation.

Besides, representative multi-step methods also include the explicit Adams–Bashforth methods [2], the implicit Adams–Moulton methods [13] and the backward differentiation formulas (BDFs) [12]. The Adams–Bashforth and Adams–Moulton methods are designed to achieve higher-order accuracy, but most of them have poor stability. In practical applications, the implicit Adams–Moulton methods are usually used in tandem with the Adams–Bashforth methods as predictor-corrector pairs, which are essentially explicit. The BDFs, although unconditionally stable only up to second order, are particularly useful for solving stiff problems and differential-algebraic equations, owing to their strong algorithmic dissipation. One drawback of the multi-step methods is that they are not self-starting, so another method has to be used to solve the first steps, until there are enough to start the multi-step procedure. As a consequence, multi-step methods may not be as convenient to use as single-step ones.

This paper focuses on second-order accurate and unconditionally stable schemes of the linear multi-step methods. To acquire these accuracy and stability properties, the linear two-step method has been originally studied in [31, 32, 44]. Seeking higher accuracy, the linear three-, and four-step methods are discussed in this paper. From linear analysis, their optimal parameters are determined by minimizing the global error, under the constraints of second-order accuracy, unconditional stability and tunable algorithmic dissipation. The resulting optimal schemes present better accuracy than the linear two-step method, the linear four-step scheme being the best among them. Furthermore, to avoid additional start-up procedures, the single-step methods, spectrally equivalent to the linear multi-step ones, are proposed as well. Instead of utilizing the states of previous steps, the single-step methods get additional information by introducing intermediate variables. With proper parameters, they can achieve the same properties as the linear multi-step methods. Finally, the newly developed methods are applied to solve a few illustrative examples, and the solutions are compared with those of some widely-used implicit second-order schemes.

This paper is organised as follows. The formulations of the linear multi-step methods and the corresponding single-step ones are presented in Sect. 2. The optimization of the parameters is implemented in Sect. 3. The detailed properties of the proposed schemes are discussed in Sect. 4. Numerical examples are provided in Sect. 5, and conclusions are drawn in Sect. 6.

2 Formulations

The linear multi-step method for solving the initial-value problem of the generic first-order implicit differential equation \({\varvec{f}}({\varvec{x}},\dot{{\varvec{x}}},t)={\varvec{0}},{\varvec{x}}(t_0)={\varvec{x}}_0\) can be cast as

where \({\varvec{x}}_k\) and \(\dot{{\varvec{x}}}_k\) are the numerical approximations of \({\varvec{x}}(t_k)\) and \(\dot{{\varvec{x}}}(t_k)\), respectively, \(\Delta t=t_k-t_{k-1}\) is the time step size, assumed as constant for simplicity, \(\alpha _j\) and \(\beta _j\) are scalar parameters. The initial condition \({\varvec{x}}_0\) is supposed to be given in advance.

Applied to the linear structural dynamics problem, expressed as

where \({\varvec{M}}, {\varvec{C}}, {\varvec{K}}\) are the mass matrix, damping matrix, and stiffness matrix, respectively, \({\varvec{R}}(t)\) is the external load, \({\varvec{q}}\), \(\dot{{\varvec{q}}}\) and \(\ddot{{\varvec{q}}}\) denote the displacement, velocity and acceleration vectors, respectively, \({\varvec{q}}_0\) and \({\varvec{v}}_0\) are the known initial displacement and velocity vectors, this method can be formulated as

From Eq. (3), \({\varvec{q}}_k\), \(\dot{{\varvec{q}}}_k\) and \(\ddot{{\varvec{q}}}_k\) can be solved by combining the linear multi-step scheme and the discrete dynamics equation at \(t_k\).

Table 1 provides the step-by-step solution of the linear r-step method for solving the linear structural dynamics problems. To start the procedure of the linear r-step method, the initial \(k < r\) steps need to be prepared by another method. The single-step scheme

which can be obtained by setting \(\alpha _1=1\), \(\beta _1=1-\beta _0\) and \(\alpha _{j}=0, \beta _{j}=0\ (j=2,3,\cdots ,r)\) in Eq. (1b), is adopted in Table 1. Certainly, other methods, such as the trapezoidal rule, and the combination of single-step and multi-step methods, can also be used for solving the first \(r-1\) steps. The scheme in Eq. (4) is employed since it shares the same effective stiffness matrix as the linear r-step method. When applied to linear systems, no additional matrix factorization is requested. With the start-up procedure, the linear multi-step method almost does not require additional computational costs compared with the existing single-step methods. The employed single-step scheme is first-order accurate and unconditionally stable when \(\beta _0>1/2\), and it becomes the second-order trapezoidal rule when \(\beta _0=1/2\). When applied to nonlinear problems, the Newton-Raphson iteration needs to be used to solve the nonlinear equations per step.

To completely save the start-up procedure of initial steps, the single-step method, which can be used as an alternative of the linear r-step method in Eq. (1b), is also proposed as

where \(\dot{{\varvec{x}}}_k^{j}\ (j=1,2,\cdots ,r-1)\) are intermediate variables, and \(\gamma _j\ (j=0,1,\cdots ,2r-2)\) are scalar parameters. When applied to the linear structural dynamics problem (2), this method yields

Clearly, a series of intermediate variables are introduced by this method. These variables are only useful to proceed the current and next step, but do not need to be stored and output. From Eq. (5), they can be seen as the shift approximations of \(\dot{{\varvec{x}}}_k\), so \(\dot{{\varvec{x}}}_0^{1}=\dot{{\varvec{x}}}_0^{2}=\cdots =\dot{{\varvec{x}}}_0^{r-1}=\dot{{\varvec{x}}}_0\) is assumed at the initial time. By choosing appropriate values for the \(\gamma _j\) parameters, the single-step method of Eq. (5) can achieve spectral characteristics identical to those of the linear r-step method of Eq. (1b), which will be demonstrated in Sect. 3.

Table 2 presents the step-by-step solution of the single-step method for solving the linear structural dynamics problems. The intermediate variables of step \(k-1\) are useful to update the state vectors of the current step, and after obtaining the intermediate variables of step k, the storage occupied by these variables of step \(k-1\) can be released. Therefore, this single-step method only requires to store \(2(r-1)\) vectors additionally. The additional storage is negligible compared to the overall storage. In terms of costs, although the single-step methods need to update the intermediate variables additionally, the required efforts of vector addition and subtraction operations are also negligible compared to the matrix operations per step, especially for large finite element systems. Therefore, it is reasonable to say that the proposed single-step methods have equivalent computational costs to the existing single-step methods. Due to being free from the need of additional start-up procedures, the proposed single-step method can be regarded as a more convenient alternative of the linear r-step method for applications.

3 Optimization

In this section, the linear spectral analysis is executed to determine the parameters and optimize the properties. According to Dahlquist’s second barrier [11], only the second-order unconditionally stable schemes are concerned here.

Generally, the test equation for linear analysis is

where \(\xi \) is the damping ratio, and \(\omega \) is the natural frequency. It can be equivalently reduced to the first-order equation

Decomposing the coefficient matrix in Eq. (8) gives the simplified first-order equation

where \(\text {i}=\sqrt{-1}\). To simplify the analysis, Eq. (9) is used as the test equation here.

Applied to Eq. (9), the recurrence equation of the linear r-step method, shown in Eq. (1b), is

The characteristic polynomial can be written as

where the generic characteristic root is denoted by \(\mu \). According to Eq. (11), this polynomial has r characteristic roots \(\mu _1, \mu _2, \cdots , \mu _r\). One of them, called the principal root, dominates the quality of numerical solutions; the other roots are spurious.

Similarly, applying the single-step method of Eq. (5) to the test equation (9) yields the compact scheme

where \({\varvec{A}}\in {\mathbb {C}}^{r\times r}\) is the amplification matrix, which governs the stability and accuracy characteristics of a method. From Eq. (12), the difference scheme only with respect to x can be written as

where \(A_j\ (j=1,\cdots ,r)\) is the sum of the jth-order principal minors of \({\varvec{A}}\). The characteristic polynomial of \({\varvec{A}}\) is

Equation (14) shows a form similar to that of Eq. (11), and they can be identical by choosing appropriate values for the respective parameters.Then these two methods can be called spectrally equivalent to each other.

As representatives of the proposed formulation, the linear two-, three-, and four-step methods, respectively referred to as LMS2, LMS3 and LMS4 in the following, and the alternative single-step methods, correspondingly named SS2, SS3 and SS4, are discussed here. After determining the parameters of the linear multi-step methods, the parameters of the single-step methods are directly obtained by comparing the respective characteristic polynomials. To our knowledge, apart from LMS2 [31, 44], the second-order unconditionally stable schemes of the higher-step methods have not been proposed yet.

3.1 LMS2 and SS2

Setting \(r=2\) in Eq. (1b) yields the formulation of LMS2 as

It is second-order accurate and unconditionally stable with the optimal parameters

The detailed process of parameter optimization is presented in [44]. In Eq. (16), all parameters are controlled by a single parameter \(\rho _\infty \), which is the asymptotic spectral radius, defined as \(\max \{|\mu _j|,j=1,2,\cdots ,r\}\) when \(|\lambda \Delta t|\rightarrow {+\infty }\). Generally, \(\rho _\infty \) is used to denote the degree of algorithmic dissipation. A smaller \(\rho _\infty \) means stronger algorithmic dissipation for the high-frequency content.

As shown in Eq. (5), the corresponding single-step scheme SS2 has the form

From Eq. (14), SS2’s characteristic polynomial is

where

Meanwhile, the characteristic polynomial of LMS2 can be obtained by Eq. (11) and the parameters in Eq. (16) as

Equation (18) is the same as Eq. (20) when the parameters satisfy

Therefore, SS2 with the parameters in Eq. (21) is spectrally equivalent to LMS2 with the parameters in Eq. (16), so the single-step method SS2 also has second-order accuracy, unconditional stability and tunable algorithmic dissipation.

3.2 LMS3 and SS3

According to Eq. (1b), LMS3 can be formulated as

This method is also expected to have second-order accuracy, unconditional stability, and to offer tunable algorithmic dissipation. With respect to Eq. (10), the local truncation error is defined as

Expanding \(\sigma \) at \(t_k\) gives

where

so this method is second-order accurate if

To maximize the low-frequency accuracy for a given degree of algorithmic dissipation, the characteristic roots of Eq. (11) when \(|\lambda \Delta t|\rightarrow {+\infty }\) need to be equal [9], as \(-\rho _\infty \), which implies that

Equations (26) and (27) impose six conditions, so only one parameter, e.g. \(\beta _0\), is now adjustable.

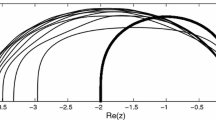

To evaluate the stability, \(\mu =(1+z)/(1-z)\) is assumed; the stability condition \(|\mu |\le 1\) is equivalent to \(\text {Re}(z)\le 1\). With this assumption, the characteristic polynomial of Eq. (11) can be transformed into

where the real and imaginary parts of the coefficients, denoted by \(p_j\) and \(q_j\), respectively, are determined by the scalar parameters and \(\lambda \Delta t\). When \(r=3\), the generalized Routh array [41] with respect to Eq. (28) is listed in Table 3. According to the generalized Routh stability criterion [41], the roots of Eq. (28) satisfy \(\text {Re}(z)\le 1\) only when

Then this method is unconditionally stable if the constraints in Eq. (29) hold for any \(\lambda \) in the complex left-half-plane, including the imaginary axis. To simplify the analysis, the undamped case, i.e. \(\xi =0\), which implies \(\lambda =\pm \omega \text {i}\), is considered first. The desirable range of \(\beta _0\) that makes this method unconditionally stable can be obtained as

To determine \(\beta _0\) uniquely, the global error, defined as \(E_k=x(t_k)-x_k\), is checked. Subtracting the recursive scheme, as in Eq. (10), from the right-hand side of Eq. (23), yields

Assuming \(E_k\approx E_{k-1}\approx E_{k-2}\approx E_{k-3}\), we can obtain

so the global error can be measured by the constant

The optimal \(\beta _0\) that minimizes EC is finally obtained as

Then, the other parameters can be obtained from Eqs. (26)–(27).

With the set of parameters obtained above, the characteristic polynomial of Eq. (11) can be written as

The unconditional stability for the damped cases is checked by solving Eq. (35) with different values of \(\xi \). As expected, the condition \(|\mu |\le 1\) is satisfied for any \(\xi >0\) and \(\omega \Delta t>0\), so this method is unconditionally stable. For illustration, the absolute values of the characteristic roots \(|\mu |\) as a function of \(\Delta t/T\), where \(T=2\pi /\omega \), for the cases \(\xi =0.0\) and \(\xi =0.1\) are plotted in Figs. 1 and 2 , respectively.

Consequently, the optimal parameters of LMS3 have been obtained, as shown in Eqs. (26), (27) and (34). The resulting scheme has optimal accuracy, unconditional stability and tunable algorithmic dissipation.

According to Eq. (5), the single-step scheme SS3 is

Its characteristic polynomial is

where

By comparing Eqs. (37) and (35), SS3 is spectrally equivalent to LMS3 when its parameters are

where \(\gamma _1\) and \(\gamma _3\) can be solved from the quadratic equation \(y^2-(\gamma _1+\gamma _3)y+\gamma _1\gamma _3=0\). Note that if \(\rho _\infty <1\), \(\gamma _1\) and \(\gamma _3\) are complex conjugate numbers. As such, SS3 yields complex intermediate variables, nonetheless resulting in real-valued \({\varvec{x}}_k\) and \(\dot{{\varvec{x}}}_k\), as explained in the following.

As \(\dot{{\varvec{x}}}_0^1=\dot{{\varvec{x}}}_0^2=\dot{{\varvec{x}}}_0\) is assumed, the recurrence equations of the first few steps after eliminating the intermediate variables can be written as

where

The parameters in Eq. (41) are all real numbers, so the computed state vectors are also real. From Eq. (40), the schemes of the first two steps reduce to first-order accurate, and from the third step on, SS3 shows the same properties of LMS3. Actually, it can be verified that \({\varvec{x}}_3\) and \({\varvec{x}}_4\) in Eq. (40) can be reorganized as

The same formulation can also be found in the fifth step, the sixth step and so on, which further validates the equivalence of SS3 and LMS3. In another way, SS3 can be seen as LMS3 with the specific start-up procedure shown in Eq. (40).

3.3 LMS4 and SS4

Similar to LMS2 and LMS3, LMS4 is cast as

Its local truncation error is

which can be also expanded in the form of Eq. (24) with the coefficients

This method is also second-order accurate if

Likewise, the requirement of tunable algorithmic dissipation imposes four conditions, as

Then two parameters remain free, selected as \(\beta _0\) and \(\alpha _1\).

To investigate the stability, the generalized Routh array for Eq. (28) with \(r=4\) is listed in Table 4.

This method is unconditionally stable if the constraints

are satisfied for any \(\lambda \) in the complex left-half-plane, including the imaginary axis. At the same time, the error constant, defined as

needs to be minimized. Under these conditions, the optimal \(\beta _0\) and \(\alpha _1\) are obtained as

Then other parameters can be obtained from Eqs. (46) and (47). With this set of parameters, the characteristic polynomial of LMS4 can be written as

With \(\xi =0.0\) and \(\xi =0.1\), the solutions of \(|\mu |\) versus \(\Delta t/T\) are shown in Figs. 3 and 4 respectively. As can be seen, this method is also unconditionally stable for the damped cases.

The alternative single-step scheme SS4 has the form as

The characteristic polynomial can be written as

where

By comparing Eqs. (53) and (51), SS4 is spectrally equivalent to LMS4 when its parameters satisfy

where \(\gamma _1\),\(\gamma _3\) and \(\gamma _5\) can be obtained by solving the equation \(y^3-(\gamma _1+\gamma _3+\gamma _5)y^2+(\gamma _1\gamma _3+\gamma _1\gamma _5+\gamma _3\gamma _5)y-\gamma _1\gamma _3\gamma _5=0\). If \(\rho _\infty <1\), two of \(\gamma _1\), \(\gamma _3\) and \(\gamma _5\) are complex.

By using \(\dot{{\varvec{x}}}_0^1=\dot{{\varvec{x}}}_0^2=\dot{{\varvec{x}}}_0^3=\dot{{\varvec{x}}}_0\), the first several steps without the intermediate variables can be also written as

where

One can check that the first three steps only have first-order accuracy, but from the fourth step on, the same formulation as LMS4 can be reproduced. Therefore, SS4 can be also regarded as LMS4 with the specific start-up procedure shown in Eq. (56).

In conclusion, the parameters of LMS2, LMS3, LMS4, SS2, SS3, and SS4 for optimal accuracy, unconditional stability and tunable algorithmic dissipation have been determined in this section. From the parameters of LMS2, LMS3 and LMS4, we can see that when \(0\le \rho _\infty <1\), \(\beta _0>1/2\) and when \(\rho _\infty =1\), \(\beta _0=1/2\) , so the single-step scheme used as the start-up procedure in Table 1 is first-order unconditionally stable as \(0\le \rho _\infty <1\), and recovers the trapezoidal rule as \(\rho _\infty =1\). As opposed to LMS2, other schemes are here presented for the first time. The single-step schemes SS2, SS3 and SS4 can present properties identical to those of the corresponding multi-step method, except for the first few steps.

4 Properties

4.1 Accuracy and stability

Due to the equivalence of single-step and linear multi-step methods, the proposed single-step methods are not considered in this section. Firstly, the error constants (ECs), which are employed to measure the global errors, as in Eqs. (33) and (49), of LMS2, LMS3 and LMS4 are plotted in Fig. 5. As can be seen, LMS4 gives the smallest global error, followed by LMS3, and LMS2, which means that the accuracy can be improved by using the information of more previous steps. As \(\rho _\infty \) increases, the gap between them gradually decreases; when \(\rho _\infty =1\), these schemes all become the equivalent multi-step expressions of the trapezoidal rule.

Considering the test equation (9), the exact solution has the form

Using the linear multi-step method, the numerical solution can be expressed as

where \(\mu _p\) and \(\mu _s\) respectively denote the principal and spurious roots. As shown in Figs. 1, 2, 3 and 4, the one with the largest norm is the principal root when \(\rho _\infty <1\), where the effects of spurious roots with smaller norms can be filtered out at the beginning. For the special case of \(\rho _\infty =1\), the principal root is \((2+\Delta t\lambda )/(2-\Delta t\lambda )\), and the spurious roots are all equal to \(-1\), so the spurious roots have larger norms if \(\xi >0\), as shown in Figs. 2 and 4 . However, substituting the recurrence equation of the trapezoidal rule, as

to the first \(r-1\) steps of the recurrence equation of the linear multi-step method with \(\rho _\infty =1\), as shown in Eq. (10), yields that

It follows that, for this case, the large spurious roots do not contribute to the solution, that is \(c_1=c_2=\cdots =c_{r-1}=0\) in Eq. (59). Therefore, only the contribution of the principal root \(\mu _p\) is considered in the following.

Representing \(\mu _p=\text {e}^{\left( -{\overline{\xi }}\pm \text {i}\sqrt{1-{\overline{\xi }}^2}\right) {\overline{\omega }}\Delta t}\), the numerical solution can be expressed in a form similar to that of the exact solution, as

where \({\overline{\xi }}\) and \({\overline{\omega }}\) are the damping ratio and natural frequency of the numerical solution, and they can be obtained from the norm \(|\mu _p|\) and phase \(\angle \mu _p\) as

By comparing Eqs. (58) and (62), \({\overline{\xi }}\) and \(({\overline{T}}-T)/T\) (\({\overline{T}}=2\pi /{\overline{\omega }}\)), known as the amplitude decay ratio (AD) and period elongation ratio (PE), can be used to measure the amplitude and period accuracy, respectively.

Figures 6, 7 and 8 display the spectral radii (SR=\(|\mu _p|\)), AD(\(\%\)) and PE(\(\%\)), respectively, of LMS2, LMS3, LMS4 and two representative implicit methods, the generalized-\(\alpha \) method and the \(\rho _\infty \)-Bathe method, referred to as G-\(\alpha \) and Bathe respectively, for the undamped case. Because of the two-sub-step scheme, the computational cost required by the Bathe method per step is twice that of other methods approximately, so the abscissa is set as \({\Delta }t/(nT)\) (\(n=2\) for Bathe and \(n=1\) for other methods) to compare the methods under equivalent computational costs. In addition to \(\rho _\infty \), the \(\rho _\infty \)-Bathe method can also change the spectral characteristics by adjusting the splitting ratio \(\gamma \), so three cases of \(\gamma =\gamma _0\), \(\gamma =\gamma _0-0.2\) and \(\gamma =\gamma _0+1\) are considered in these figures, where the value of \(\gamma _0\) can refer to [35]. When \(\gamma =\gamma _0\), its two sub-steps share the same effective stiffness matrix for linear problems.

As illustrated in Fig. 6, all methods can provide tunable algorithmic dissipation in the high-frequency domain by adjusting the parameter \(\rho _\infty \). For a specified \(0\le \rho _\infty <1\), LMS4 shows the spectral radius closest to 1 in the low-frequency region, so its dissipation error is the smallest, followed by LMS3, LMS2 and G-\(\alpha \). With a small \(\rho _\infty \), the Bathe method with \(\gamma =\gamma _0\) and \(\gamma =\gamma _0-0.2\) have the spectral radii close to that of LMS3 in the low-frequency region, but when \(0.6\le \rho _\infty <1\), they have larger dissipation errors than G-\(\alpha \). The Bathe method with \(\gamma =\gamma _0+1\) shows the largest dissipation error for any \(0\le \rho _\infty <1\). When \(\rho _\infty =1\), all methods, except the Bathe method with \(\gamma =\gamma _0-0.2\) and \(\gamma =\gamma _0+1\), present the same properties as the trapezoidal rule.

Figures 7 and 8 focus on the low-frequency behaviour; they display results similar to those of Fig. 6. LMS4 is the most accurate among these methods in terms of both amplitude and period, followed by LMS3, LMS2, and G-\(\alpha \). The Bathe method with \(\gamma =\gamma _0+1\) shows the largest errors, and when \(\rho _\infty \) is close to 1, the Bathe method with \(\gamma =\gamma _0\) and \(\gamma =\gamma _0-0.2\) are less accurate than G-\(\alpha \). From [35], for a given \(\rho _\infty \) and \(\gamma \in (0,1)\), the Bathe method with \(\gamma =\gamma _0\) possesses the largest amplitude decay and the smallest period error, which also can be observed by comparing the cases of \(\gamma =\gamma _0\) and \(\gamma =\gamma _0-0.2\).

Owing to the higher accuracy, the proposed LMS3 and LMS4 are really better candidates than the existing second-order methods. Since the developed single-step schemes, SS2, SS3 and SS4, are spectrally equivalent to LMS2, LMS3 and LMS4, respectively, SS3 and SS4 also have the same accuracy advantages.

4.2 Overshoot

From [14], the methods of unconditional stability are possible to show pathological growth at the initial steps, that is the so-called “overshoot”. For a convergent method, there is no overshoot as \(\omega \Delta t\rightarrow 0\), so generally, only the case of \(\omega \Delta t\rightarrow +\infty \) needs to be concerned. With the assumption, the recursive schemes of the linear r-step method, applied to the test model \(\ddot{q}+\omega ^2q=0\), can be written as

Since the overshooting phenomenon only occurs at the first few steps, the numerical results of the rth step are considered here. From Eq. (64), they depend on the start-up procedure for computing the first \(r-1\) steps. If the single-step method as show in Eq. (4) is employed, that is

the approximate results at step r of LMS2, LMS3 and LMS4 can be obtained as

respectively, where the parameters are represented by \(\rho _\infty \). The results indicate that LMS2, LMS3 and LMS4 have zero-order overshoot in both displacement and velocity.

If the trapezoidal rule is used to solve the first \(r-1\) steps, that is

the approximate results at step r of LMS2, LMS3 and LMS4 have the form as

Also, no overshoot can be observed from the results. Therefore, as long as the method used for the start-up procedure does not have overshoot, the proposed multi-step methods also exhibit no overshoot from the rth step.

For the proposed single-step methods, SS2, SS3 and SS4, the results of the first step, applied to the model \(\ddot{q}+\omega ^2q=0\), are checked. With the assumption \(\omega \Delta t\rightarrow +\infty \), they can be expressed as

respectively. The numerical results indicate that the proposed single-step methods also have zero-order overshoot in both displacement and velocity.

In addition, the numerical example used in [14] to measure the overshooting behaviour is also considered here, which has the form as

With \(\Delta t/T=10\), the numerical displacements and velocities given by the proposed multi-step methods, single-step methods and the multi-step methods with the trapezoidal rule (TR) as the start-up procedure, are summarized in Figs. 9 and 10 .

From the results, when \(\rho _\infty <1\), the displacements show a decaying trend from the beginning; the velocities also begin to decay after the initial disturbance caused by the non-zero initial displacement. When \(\rho _\infty =1\), both the displacements and velocities present periodic increases and decreases, since no algorithmic dissipation exists. In all the results, no pathological growth can be observed, which further validates that the proposed methods have no overshoot in both displacement and velocity.

5 Illustrative solutions

To check the performance of the proposed LMS2, LMS3, LMS4, SS2, SS3, and SS4, several illustrative examples are simulated in this section, and the G-\(\alpha \) and Bathe method are also employed for comparison. In order to compare under the close computational costs, the step size of the two-sub-step Bathe method is set as twice that of the other methods. The G-\(\alpha \) developed in [1], which improves the acceleration accuracy to second-order, is used in the computations. Unless otherwise specified, the single-step method in Eq. (4) is used as the start-up procedure for the linear multi-step methods.

Single degree-of-freedom example. The single-degree-of-freedom linear equation [22]

where

is considered to check the convergence rate. The global errors (GE), defined as

within [0, 10] versus \(\Delta t/n\) for these methods using \(\rho _\infty =0\) are plotted in Fig. 11. Second-order convergence rate can be clearly observed in all methods. The Bathe method with \(\gamma =\gamma _0+1\) shows significantly larger errors than others. Besides, the absolute errors (AE), calculated by

with \(\Delta t=0.01\) for the case \(\rho _\infty =0\) and \(\rho _\infty =0.6\) are plotted in Figs. 12 and 13, respectively. In both cases, LMS4 and SS4 predict the most accurate solutions, followed by LMS3 and SS3, LMS2 and SS2, consistent with the discussion in Sec. 4. If the Bathe method with \(\gamma =\gamma _0+1\) is excluded, G-\(\alpha \) shows the largest errors when \(\rho _\infty =0\), and the Bathe method with \(\gamma =\gamma _0-0.2\) is the most inaccurate when \(\rho _\infty =0.6\). The Bathe method with \(\gamma =\gamma _0\) shows higher accuracy than the method with \(\gamma =\gamma _0-0.2\), so only this scheme is considered in the following examples.

To exhibit the effect of different start-up procedures on the accuracy, Fig. 14 plots the absolute errors given by LMS4 following the procedure in Table 1, SS4, and LMS4 using the trapezoidal rule for the first three steps with \(\Delta t=0.01\). In addition to the trapezoidal rule, the other two start-up procedures are first-order accurate. From the results, the accuracy difference can be observed at the beginning, but gradually disappears over time. The single-step method SS4 has very close accuracy to LMS4 with the trapezoidal rule as the start-up procedure in the whole time. Therefore, the start-up procedure has a very limited impact on the overall accuracy. For linear systems, the procedure shown in Table 1 and the single-step method are more recommended, since they only need one factorization of the effective stiffness matrix.

Stiff-soft mass-spring system. The mass-spring oscillator [5], shown in Fig. 15, is considered as a model problem to test the dissipation ability of a method. The motion of \(m_0\) is prescribed as \(q_0=\sin (1.2t)\), and other parameters are \(k_1=10^7\), \(k_2=1\), \(m_0=0\), \(m_1=m_2=1\). The governing equation of \(m_1\) and \(m_2\) can be written as

With zero initial conditions and \(\Delta t/n=0.1309\), the numerical solutions of \(m_1\) by these methods using \(\rho _\infty =0\) are summarized in Fig. 16. The results of \(q_1\) are overlapping with each other, and from \({\dot{q}}_1(v_1)\) and \(\ddot{q}_1(a_1)\), these methods all can filter out the rigid component in the first several steps. Because the first \(r-1\) steps of the linear r-step method and the corresponding single-step method cannot offer the prescribed degree of algorithmic dissipation, the proposed methods need to experience more steps of high-frequency oscillations.

Figure 17 plots the acceleration of \(m_1\) given by LMS4 with different start-up procedures. One can see that SS4 shows stronger dissipation in the first three steps, and LMS4 using the trapezoidal rule as the start-up procedure exhibits larger oscillation due to being non-dissipative. At the sixth step, the stiff component has been filtered out in all schemes.

Wave propagation in a clamped-free bar. As shown in Fig. 18, the clamped-free bar [18, 35], excited by the load F at the free end, is considered. The system parameters are the elastic modulus \(E=3\times 10^7\), cross-sectional area \(A=1\), density \(\rho =7.3\times 10^{-4}\), length \(L=200\), and the step load \(F=10^4\). The bar is meshed by 1000 linear two-node finite elements.

With different CFL numbers (the ratio of the propagation length per step \(\sqrt{E/\rho }\Delta t\) to the element length L/1000), the velocity at the midpoint \(x=100\) given by these methods using \(\rho _\infty =0\) are plotted in Figs. 19–23. From the results, the spurious oscillation can be observed in all methods with CFL=1.0 (CFL=2.0 for the Bathe method), and it lasts longer for LMS3, SS3, LMS4 and SS4. However, with a smaller CFL number, which is different for each method, all methods can rapidly filter out the high-frequency oscillation. Among these methods, LMS4 and SS4 provide favorable results with a larger CFL number, so they require fewer steps than other methods.

A two-dimensional wave propagation problem. The two-dimensional wave propagation problem, a pre-stressed membrane excited by the concentrated load at the center [20, 28], as shown in Fig. 24, is considered. Its transverse governing equation can be expressed as

where the exact wave velocity \(c_0\) is assumed as 1. The concentrated load has the form as

Because of the symmetry, only the domain \(\left[ 0,15\frac{1}{6}\right] \times \left[ 0,15\frac{1}{6}\right] \) needs to be solved. It is meshed regularly by four-node rectangular elements with nodes equally spaced. The consistent mass matrix is used in all solutions.

With zero initial conditions, the displacements at \(t\approx 13\) given by these methods with \(\rho _\infty =0\) and the CFL number suggested from the last example are shown in Figs. 25–27, where the membrane is meshed by \(70\times 70\), \(105\times 105\) and \(140\times 140\) elements, respectively. By the mesh of \(140\times 140\) elements, Fig. 28 plots the obtained displacements and velocities at the angle of \(\theta =0\) and \(\theta =\pi /4\), where \(\theta \) denotes the angle between the wave propagation direction and the x-axis. Except for the trapezoidal rule, other methods all can predict reasonably accurate solutions. The linear multi-step method shows almost identical results to its corresponding single-step method. From the above two examples, the proposed methods perform very well in the wave propagation problems with an appropriate CFL number. A more detailed analysis of the proposed methods for wave propagation problems, such as the optimal CFL numbers for different \(\rho _\infty \), other advantages and disadvantages, is still deserved in the future.

Spring-pendulum model. The spring-pendulum model [10] shown in Fig. 29 is solved. Its motion equation is expressed as

The system parameters are set as \(m=1~\mathrm {kg}\), \(L_0=0.5~\mathrm {m}\), \(g=9.81~\mathrm {m/s^2}\), \(k=98.1~\mathrm {N/m}\) and \(98.1\times 10^6~\mathrm {N/m}\) for modelling the compliant and stiff cases, respectively. The initial conditions are

With \(\Delta t/n=0.01~\mathrm {s}\), Figs. 30–31 show the numerical results of r and \(\theta \), as well as their absolute errors for the compliant case, where \(\rho _\infty =0\) is adopted in all methods, and the reference solution is obtained using the Bathe method with \(\Delta t=10^{-5}~\mathrm {s}\). For the nonlinear problem, LMS4 and SS4 still provide the most accurate results, and G-\(\alpha \) yields the largest errors. The single-step schemes also show close accuracy to the corresponding multi-step methods.

Figure 32 plots the computed r and \(\theta \) for the stiff case by using \(\Delta t/n=0.01~\mathrm {s}\) and \(\rho _\infty =0\). Since the algorithmic dissipation of the proposed methods works from the rth step, LMS4 and SS4 needs more steps to damp out the stiff component. From about the sixth step, all methods can give reasonable results for r.

Truss element. In this example, the classic pendulum model [27], as shown in Fig. 33, is considered. It is discretized as 1 truss element considering the Green strain. This example is usually used to check the energy-conserving characteristic of a method for nonlinear structural dynamics. The system parameters are the length \(L=3.0443~\mathrm {m}\), density per unit length \(\rho A=6.57~\mathrm {kg/m}\), axial stiffness \(EA=10^4~\mathrm {N}\), and \(10^{10}~\mathrm {N}\) for the compliant and stiff cases. The pendulum is excited by the horizontal velocity \(v_0=7.72~\mathrm {m/s}\) at the free end. The gravity is not considered, so the pendulum makes continuous rotations in this plane, along with axial straining.

With \(\Delta t/n=0.01~\mathrm {s}\) and \(\rho _\infty =0\), Figs. 34–35 present the computed results for the compliant and stiff cases, respectively. Figure 34 displays the axial deformation \(\Delta L\) and total energy for the compliant case. Significant amplitude and energy decay can be observed with the G-\(\alpha \), LMS2 and SS2 methods. LMS4 and SS4 exhibit better energy-conserving properties for the nonlinear system owing to their higher accuracy. Figure 35 gives the axial deformation \(\Delta L\) and rotation angle \(\theta \) for the stiff case. Plots similar to those of Fig. 32 can be observed. All these methods can effectively filter out the stiff component after a few oscillations.

6 Conclusions

In this paper, second-order unconditionally stable schemes of linear multi-step methods, represented by LMS2, LMS3, and LMS4, are discussed. From linear analysis, the parameters, controlled by \(\rho _\infty \), of LMS3 and LMS4 are given for optimal accuracy, unconditional stability and controllable algorithmic dissipation. In addition, single-step methods, spectrally equivalent to the linear multi-step ones, are proposed by using intermediate state variables. The parameters of the latter methods, named SS2, SS3, and SS4, are also obtained by enabling them to have the same characteristic polynomials as LMS2, LMS3, and LMS4, respectively. The resulting schemes show equivalent formulations of the corresponding linear multi-step methods, except for the first few steps, in which the linear multi-step methods require a dedicated start-up procedure. Being self-starting, the equivalent single-step schemes are more convenient to use.

Compared with some representative second-order methods, including LMS2, G-\(\alpha \) and the Bathe method, the newly developed LMS3 and LMS4 show higher amplitude and period accuracy for a given degree of algorithmic dissipation. Among them, LMS4 is the most accurate, followed by LMS3, and LMS2, which indicates that employing the states of more past steps helps improving accuracy. The same conclusion can be obtained for SS2, SS3 and SS4. Finally, the above methods mentioned are applied to some illustrative examples. LMS4 and SS4 exhibit accuracy advantage and favorable energy-conserving characteristics in linear and nonlinear examples. The algorithmic dissipation of these methods also perform well in the wave propagation problem and stiff systems.

References

Arnold M, Brüls O (2007) Convergence of the generalized-\(\alpha \) scheme for constrained mechanical systems. Multibody SysDyn 18(2):185–202

Bashforth F, Adams JC (1883) An attempt to test the theories of capillary action: by comparing the theoretical and measured forms of drops of fluid. Cambridge University Press, Cambridge

Bathe KJ (2007) Conserving energy and momentum in nonlinear dynamics: a simple implicit time integration scheme. Comput Struct 85(7–8):437–445

Bathe KJ, Baig MMI (2005) On a composite implicit time integration procedure for nonlinear dynamics. Comput Struct 83(31–32):2513–2524

Bathe KJ, Noh G (2012) Insight into an implicit time integration scheme for structural dynamics. Comput Struct 98:1–6

Butcher JC (1964) Implicit Runge–Kutta processes. Math Comput 18(85):50–64

Butcher JC (2016) Numerical methods for ordinary differential equations. Wiley, Hoboken

Chandra Y, Zhou Y, Stanciulescu I, Eason T, Spottswood S (2015) A robust composite time integration scheme for snap-through problems. Comput Mech 55(5):1041–1056

Chung J, Hulbert G (1993) A time integration algorithm for structural dynamics with improved numerical dissipation: the generalized-\(\alpha \) method. J Appl Mech 60:371–375

Chung J, Lee JM (1994) A new family of explicit time integration methods for linear and non-linear structural dynamics. Int J Numer Meth Eng 37(23):3961–3976

Dahlquist GG (1963) A special stability problem for linear multistep methods. BIT Numer Math 3(1):27–43

Gear CW (1971) Numerical initial value problems in ordinary differential equations. Prentice Hall PTR, New Jersey

Hairer E, Nørsett SP, Wanner G (1993) Solving ordinary differential equations I: Nonstiff problems, 2nd edn. Springer Verlag, Berlin

Hilber HM, Hughes TJ (1978) Collocation, dissipation and [overshoot] for time integration schemes in structural dynamics. Earthq Eng Struct Dyn 6(1):99–117

Hilber HM, Hughes TJ, Taylor RL (1977) Improved numerical dissipation for time integration algorithms in structural dynamics. Earthq Eng Struct Dyn 5(3):283–292

Iserles A (2009) A first course in the numerical analysis of differential equations. Cambridge University Press, Cambridge

Jay OL, Negrut D (2009) A second order extension of the generalized–\(\alpha \) method for constrained systems in mechanics. In: Multibody dynamics. Springer, New York, pp 143–158

Ji Y, Xing Y (2020) An optimized three-sub-step composite time integration method with controllable numerical dissipation. Comput Struct 231:106210

Kennedy CA, Carpenter MH (2019) Diagonally implicit Runge–Kutta methods for stiff ODEs. Appl Numer Math 146:221–244

Kim KT, Zhang L, Bathe KJ (2018) Transient implicit wave propagation dynamics with overlapping finite elements. Comput Struct 199:18–33

Kim W (2019) A new family of two-stage explicit time integration methods with dissipation control capability for structural dynamics. Eng Struct 195:358–372

Kim W (2020) An improved implicit method with dissipation control capability: The simple generalized composite time integration algorithm. Appl Math Model 81:910–930

Kim W, Choi SY (2018) An improved implicit time integration algorithm: the generalized composite time integration algorithm. Comput Struct 196:341–354

Kim W, Lee JH (2018) An improved explicit time integration method for linear and nonlinear structural dynamics. Comput Struct 206:42–53

Kim W, Reddy J (2017) An improved time integration algorithm: A collocation time finite element approach. Int J Struct Stab Dyn 17(02):1750024

Kim W, Reddy J (2020) A comparative study of implicit and explicit composite time integration schemes. Int J Struct Stab Dyn, p 2041003

Kuhl D, Crisfield M (1999) Energy-conserving and decaying algorithms in non-linear structural dynamics. Int J Numer Meth Eng 45(5):569–599

Kwon SB, Bathe KJ, Noh G (2020) An analysis of implicit time integration schemes for wave propagations. Comput Struct 230:106188

Li J, Yu K, He H (2020) A second-order accurate three sub-step composite algorithm for structural dynamics. Appl Math Model 77:1391–1412

Li J, Yu K, Li X (2019) A novel family of controllably dissipative composite integration algorithms for structural dynamic analysis. Nonlinear Dyn 96(4):2475–2507

Masarati P, Lanz M, Mantegazza P (2001) Multistep integration of ordinary, stiff and differential-algebraic problems for multibody dynamics applications. In: Xvi Congress Nazionale AIDAA, pp 1–10

Masarati P, Morandini M, Mantegazza P (2014) An efficient formulation for general-purpose multibody/multiphysics analysis. J Comput Nonlinear Dyn 9(4)

Newmark NM (1959) A method of computation for structural dynamics. J Eng Mech Div 85(3):67–94

Noh G, Bathe KJ (2013) An explicit time integration scheme for the analysis of wave propagations. Comput Struct 129:178–193

Noh G, Bathe KJ (2019) The Bathe time integration method with controllable spectral radius: the \(\rho _{\infty }\)-Bathe method. Comput Struct 212:299–310

Noh G, Ham S, Bathe KJ (2013) Performance of an implicit time integration scheme in the analysis of wave propagations. Comput Struct 123:93–105

Soares D Jr (2016) A novel family of explicit time marching techniques for structural dynamics and wave propagation models. Comput Methods Appl Mech Eng 311:838–855

Tamma KK, Har J, Zhou X, Shimada M, Hoitink A (2011) An overview and recent advances in vector and scalar formalisms: space/time discretizations in computational dynamics–a unified approach. Archives Comput Methods Eng 18(2):119–283

Wilson EL (1968) A computer program for the dynamic stress analysis of underground structures. Tech. rep. California Univ Berkeley Structural Engineering Lab, California

Wood W, Bossak M, Zienkiewicz O (1980) An alpha modification of Newmark’s method. Int J Numer Meth Eng 15(10):1562–1566

Xie X (1985) Stable polynomials with complex coefficients. In: 24th IEEE conference on decision and control, pp 324–325

Zhang H, Xing Y (2018) Optimization of a class of composite method for structural dynamics. Comput Struct 202:60–73

Zhang H, Xing Y (2019) Two novel explicit time integration methods based on displacement–velocity relations for structural dynamics. Comput Struct 221:127–141

Zhang J (2020) A-stable two-step time integration methods with controllable numerical dissipation for structural dynamics. Int J Numer Meth Eng 121:54–92

Zhou X, Tamma KK (2004) Design, analysis, and synthesis of generalized single step single solve and optimal algorithms for structural dynamics. Int J Numer Meth Eng 59(5):597–668

Acknowledgements

The first and second authors acknowledge the financial support by China Scholarship Council.

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, H., Zhang, R. & Masarati, P. Improved second-order unconditionally stable schemes of linear multi-step and equivalent single-step integration methods. Comput Mech 67, 289–313 (2021). https://doi.org/10.1007/s00466-020-01933-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00466-020-01933-y