Abstract

We rigorously justify the mean-field limit of an N-particle system subject to Brownian motions and interacting through the Newtonian potential in \({\mathbb {R}}^3\). Our result leads to a derivation of the Vlasov–Poisson–Fokker–Planck (VPFP) equations from the regularized microscopic N-particle system. More precisely, we show that the maximal distance between the exact microscopic trajectories and the mean-field trajectories is bounded by \(N^{-\frac{1}{3}+\varepsilon }\) (\(\frac{1}{63}\le \varepsilon <\frac{1}{36}\)) with a blob size of \(N^{-\delta }\) (\(\frac{1}{3}\le \delta <\frac{19}{54}-\frac{2\varepsilon }{3}\)) up to a probability of \(1-N^{-\alpha }\) for any \(\alpha >0\). Moreover, we prove the convergence rate between the empirical measure associated to the regularized particle system and the solution of the VPFP equations. The technical novelty of this paper is that our estimates rely on the randomness coming from the initial data and from the Brownian motions.

1 Introduction

Systems of interacting particles are quite common in physics and biosciences, and they are usually formulated according to first principles (such as Newton’s second law). For instance, particles can represent galaxies in cosmological models [1], molecules in a fluid [33], or ions and electrons in plasmas [61]. Such particle systems are also relevant as models for the collective behavior of certain animals like birds, fish, insects, and even micro-organisms (such as cells or bacteria) [5, 13, 48].

Such systems are often described by a time-dependent trajectory on the N-body configuration space. Depending on whether the equation of motion is of first or second order, the configuration space is given by the collection of all particle positions or of all positions and velocities. The latter is used to translate the second order equation into a first order system. The trajectory is then given by the integral curves of the respective flow.

Since the numerical and analytical treatment of many-body systems is often complicated, one seeks effective descriptions in terms of collective degrees of freedom of the system, for example temperature, pressure or, as is the topic of the present manuscript, the density.

In this paper, we are interested in the second order case, in particular the classical Newtonian dynamics of N indistinguishable particles interacting through pair interaction forces and subject to Brownian noise. Denote by \(x_i\in {\mathbb {R}}^3\) and \(v_i\in {\mathbb {R}}^3\) the position and velocity of particle i. The evolution of the system is given by the following stochastic differential equations (SDEs),

where k(x) models the pairwise interaction between the individuals, and \(\{B_i\}_{i=1}^N\) are independent realizations of Brownian motions which account for extrinsic random perturbations such as random collisions against the background. In the presence of friction, model (1) is known as the interacting Ornstein–Uhlenbeck model in the probability or statistical mechanics community. In particular, we refer readers to [49, 58] by Olla, Varadhan and Tremoulet for the scaling limit of the Ornstein–Uhlenbeck system. In this manuscript, we take the interaction kernel to be the Coulombian kernel

for some real number a. The case \(a>0\) corresponds, for example, to the electrostatic (repulsive) interaction of charged particles in a plasma, while the case \(a<0\) describes the attraction between massive particles subject to gravitation. We refer readers to [37, 61] for the original modelings.

Since the number N of particles is large, it is extremely complicated to investigate the microscopic particle system (1) directly. Fortunately, it can be studied through macroscopic descriptions of the system based on the probability density for the particles on phase space. These macroscopic descriptions are usually expressed as continuous partial differential equations (PDEs). The analysis of the scaling limit of the interacting particle system to the macroscopic continuum model is usually called the mean-field limit. For the second order particle system (1), it is expected to be approximated by the following Vlasov–Poisson–Fokker-Planck (VPFP) equations

where \(f(x,v,t):~(x,v,t) \in {\mathbb {R}}^3\times {\mathbb {R}}^3\times [0,\infty )\rightarrow {\mathbb {R}}^+\) is the probability density function in the phase space (x, v) at time t, and

is the charge density introduced by f(x, v, t). We denote by \(E(x,t):=k*\rho (x,t)\) the Coulombian or gravitational force field.

The intent of this research is to show the mean-field limit of the particle system (1) towards the Vlasov–Poisson–Fokker–Planck equations (3). In particular, we quantify how close these descriptions are for a given N. When \(\sigma =0\) (there is no randomness coming from the noise), mean-field limit results for interacting particle systems with globally Lipschitz forces have been obtained by Braun and Hepp [9] and Dobrushin [16]. Bolley et al. [5] presented an extension of the classical theory to particle systems with only locally Lipschitz interaction forces. Such kernels \(k\in W_{loc}^{1,\infty }\) were also used in the context of neuroscience [6, 57]. The last few years have seen great progress in mean-field limits for singular forces by treating them with an N-dependent cut-off. In particular, Hauray and Jabin [32] discussed mildly singular force kernels satisfying \(|k(x)|\le \frac{C}{|x|^\alpha }\) with \(\alpha <d-1\) in dimensions \(d\ge 3\). For \(1<\alpha <d-1\), they performed the mean-field limit for typical initial data, where they chose the cut-off to be \(N^{-\frac{1}{2d}}\). For \(\alpha < 1\), they proved molecular chaos without cut-off. Unfortunately, their method failed precisely at the Coulomb threshold \(\alpha = d-1\). More recently, Boers and Pickl [4] proposed a novel method for deriving mean-field equations with interaction forces scaling like \(\frac{1}{|x|^{3\lambda -1}}\) \((5/6<\lambda <1)\), and they were able to obtain a cut-off as small as \( N^{-\frac{1}{d}}\). Furthermore, Lazarovici and Pickl [39] extended the method in [4] to include the Coulomb singularity and they obtained a microscopic derivation of the Vlasov–Poisson equations with a cut-off of \(N^{-\delta }\) \((0<\delta <\frac{1}{d})\). Subsequently, the cut-off parameter was reduced to as small as \(N^{-\frac{7}{18}}\) in [23] by using the second order nature of the dynamics.
When \(\sigma >0\), the random particle method for approximating the VPFP system with the Coulombian kernel was studied in [26], where the initial data was chosen on a mesh and the cut-off parameter could be \(N^{-\delta }\) \((0<\delta <\frac{1}{d})\). Most recently, Carrillo et al. [12] also investigated the singular VPFP system but with i.i.d. initial data, and obtained the propagation of chaos through a cut-off of \(N^{-\delta }\) \((0<\delta <\frac{1}{d})\), which was a generalization of [39]. We also note that Jabin and Wang [34] rigorously justified the mean-field limit and propagation of chaos for the Vlasov systems with \(L^\infty \) forces and vanishing viscosity (\(\sigma _N\rightarrow 0\) as \(N\rightarrow \infty \)) by using a relative entropy method. They were also able to give quantitative estimates for stochastic systems with interaction kernel in the negative Sobolev space \(W^{-1,\infty }\) [36]. Moreover, [54] introduced a relative entropy method at the level of the empirical measure based on the energy of the system, which led to a mean-field limit for quite singular interactions of Riesz potential form without a cut-off. Lastly, for a general overview of this topic we refer readers to [13, 31, 35, 56].

When the interacting kernel k is singular, it poses problems for both theory and numerical simulations. An easy remedy is to regularize the force with an N-dependent cut-off parameter and get \(k^N\). The delicate question is how to choose this cut-off. On the one hand, the larger the cut-off is, the smoother \(k^N\) will be, and the easier it will be to show the convergence; however, the regularized system is then a poorer approximation of the actual system. On the other hand, the smaller the cut-off is, the closer \(k^N\) is to the real k, and thus the less information will be lost through the cut-off. Consequently, the balance between accuracy (small cut-off) and regularity (large cut-off) is crucial, and the works reviewed above address this balance. In this manuscript, we set \(\sigma >0\). Compared with the recent work [12], the main technical innovation of this paper is that we fully use the randomness coming from the initial conditions and the Brownian motions to significantly improve the cut-off. Note that in [12] the size of the cut-off can be very close to but larger than \(N^{-\frac{1}{d}}\). However, we manage to reduce the cut-off size to be smaller than \(N^{-\frac{1}{d}}\) (see Remark 1.4), which is a sort of average minimal distance between N particles in dimension d. This manuscript significantly improves the ideas presented in [10]. There the potential is split up into a more singular and a less singular part. The less singular part is controlled in the usual manner, while the mixing coming from the Brownian motion is used to estimate the more singular part. The technical innovation in the present paper is that, because the support of the singular part is small, the number of particles subject to the singular part of the interaction can be bounded using a Law of Large Numbers argument.
Again using the Law of Large Numbers based on the randomness coming from the Brownian motion, we show that the leading order of the singular part of the interaction can be replaced by its expectation value. This step is a key point of the present manuscript. The replacement by the expectation value, i.e. the integration of the force against the probability density, gives the regularization of the singular part and gives a significant improvement of our estimates. This is carried out in Lemma 3.3, the proof of which can be found in Sect. 5. [10] and the present paper are, to our knowledge, so far the only results where the mixing from the Brownian motion has been used in the derivation of a mean-field limit for an interacting many-body system.

As a companion of (1), some also consider the first order stochastic system

As before, one can expect that as the number of the particles N goes to infinity we can get the continuous description of the dynamics as the following nonlinear PDE

where f(x, t) is now the spatial density.

The particle system (5) has many important applications. One of the best known classical applications is in fluid dynamics with the Biot-Savart kernel

It can be treated by the well-known vortex method introduced by Chorin in 1973 [14]. The convergence of the vortex method for two and three dimensional inviscid (\(\sigma =0\)) incompressible fluid flows was first proved by Hald et al. [24, 25] and by Beale and Majda [2, 3]. When the effect of viscosity is involved (\(\sigma >0\)), the vortex method is replaced by the so-called random vortex method, obtained by adding a Brownian motion to every vortex. The convergence analysis of the random vortex method for the Navier–Stokes equations was given by [22, 46, 47, 51] in the 1980s. For more recent results we refer to [17, 19, 36, 54]. Another well-known application of the system (5) is to choose the interaction to be the Poisson kernel

where \(C_d>0\) and k is set to be attractive. Now the system (5) coincides with the particle models to approximate the classical Keller–Segel (KS) equation for chemotaxis [38, 52]. We mainly refer to [10, 18, 28, 29, 30, 43, 44] for the mean-field limit of the KS system. Concerning the size of the cut-off, more specifically, [43] chose the cut-off to be \((\ln N)^{-\frac{1}{d}}\), which was significantly improved in [28], where the cut-off size could be as small as \(N^{-\frac{1}{d(d+1)}}\log (N)\). In [10, 29], the cut-off size was almost optimal, coming fairly close to \(N^{-\frac{1}{d}}\). Many techniques used in this manuscript are adapted from these papers. For the Poisson–Nernst–Planck equation (k is set to be repulsive), [41, 43] proved the mean-field limit without a cut-off.

The rest of the introduction is split into three parts. We start by introducing the microscopic random particle system in Sect. 1.1. Then we present some results on the existence of solutions to the macroscopic mean-field VPFP equations in Sect. 1.2. Lastly, our main theorem is stated in Sect. 1.3, where we prove the closeness of the approximation of solutions to the VPFP equations by the microscopic system.

1.1 Microscopic Random Particle System

We are interested in the time evolution of a system of N interacting Newtonian particles with noise in the \(N\rightarrow \infty \) limit. The motion of the system studied in this paper is described by trajectories on phase space, i.e. a time-dependent map \(\Phi _t:{\mathbb {R}}\rightarrow {\mathbb {R}}^{6N}\). We use the notation

where \(x^t_j\) stands for the position of the \(j^{\text {th}}\) particle at time t and \(v^t_j\) stands for the velocity of the \(j^{\text {th}}\) particle at time t. The system is a Newtonian system with a noise term coupled to the velocity, whose evolution is governed by a system of SDEs of the type

where k is the Coulomb kernel (2) modeling interaction between particles and \(B_i^t\) are independent realizations of Brownian motions.

We regularize the kernel k by a blob function \(0\le \psi (x)\in C^2({\mathbb {R}}^3)\), \(\text{ supp } \psi (x)\subseteq B(0,1)\) and \(\int _{{\mathbb {R}}^3}\psi (x)dx=1\). Let \(\psi _\delta ^N=N^{3\delta }\psi (N^{\delta }x)\), then the Coulomb kernel with regularization has the form

Thus one has the regularized microscopic N-particle system for \(i=1,2\ldots ,N\)

Here the initial condition \(\Phi _0\) of the system is independent and identically distributed (i.i.d.) with the common probability density given by \(f_0\). The corresponding regularized VPFP equations are

1.2 Existence of Classical Solutions to the Vlasov–Poisson–Fokker-Planck System

The existence of weak and classical solutions to VPFP equations (3) and related systems has been very well studied. Degond [15] first showed the existence of a global-in-time smooth solution for the Vlasov–Fokker–Planck equations in one and two space dimensions in the electrostatic case. Later on, Bouchut [7, 8] extended the result to three dimensions when the electric field was coupled through a Poisson equation, and the results were given in both the electrostatic and gravitational case. Also, Victory and O’Dwyer [59] showed existence of classical solutions for VPFP equations when the spatial dimension was less than or equal to two, and local existence for all other dimensions. Then Bouchut in [7] proved the global existence of classical solutions for the VPFP system (3) in dimension \(d=3\). His proof relied on the techniques introduced by Lions and Perthame [42] concerning the existence of solutions to the Vlasov–Poisson system in three dimensions. The long time behavior of the VPFP system was studied by Ono and Strauss [50], Carpio [11] and Carrillo et al. [55].

The existence results in [7, 59] are most appropriate for this work. We summarize them in the following theorem, which was also used in [26, Theorem 2.1].

Theorem 1.1

(Classical solutions of the VPFP equations) Let the initial data \(0\le f_0(x,v)\) satisfy the following properties:

(a) \(f_0\in W^{1,1}\cap W^{1,\infty }({\mathbb {R}}^6)\);

(b) there exists an \(m_0>6\) such that

$$\begin{aligned} (1+|v|^2)^{\frac{m_0}{2}}f_0\in W^{1,\infty }({\mathbb {R}}^6)\,. \end{aligned} \tag{14}$$

Then for any \(T>0\), the VPFP equations (3) admit a unique classical solution on [0, T].

Remark 1.1

The proof of the above theorem given in [7, 59] indicates that the map

is a continuous map from [0, T] to \(W^{1,\infty }({\mathbb {R}}^3)\). This implies that smooth initial data remains smooth on every time interval [0, T]. So if, for any \(k\ge 1\), the initial data satisfies

(a) \(f_0\in W^{k,1}\cap W^{k,\infty }({\mathbb {R}}^6)\);

(b) there exists an \(m_0>6\) such that

$$\begin{aligned} (1+|v|^2)^{\frac{m_0}{2}}f_0\in W^{k,1}\cap W^{k,\infty }({\mathbb {R}}^6)\,, \end{aligned} \tag{16}$$

then the unique classical solution f maintains the regularity on [0, T] for any \(k\ge 1\):

The present paper also needs the uniform-in-time \(L^\infty \) bound of the charge density \(\rho \):

which was obtained in [53] by means of the stochastic characteristic method under the assumption that \(f_0\) is compactly supported in velocity. We also note that [12] provided a proof of the local-in-time \(L^\infty \) bound for \(\rho \) by employing the Feynman–Kac formula and assuming that the initial data has polynomial decay.

In this paper, we assume that the initial data \(f_0\) satisfies the following assumption:

Assumption 1.1

The initial data \(0\le f_0(x,v)\) satisfies

1. \(f_0\in W^{1,1}\cap W^{1,\infty }({\mathbb {R}}^6)\);

2. there exists an \(m_0>6\) such that

$$\begin{aligned} (1+|v|^2)^{\frac{m_0}{2}}f_0\in W^{1,1}\cap W^{1,\infty }({\mathbb {R}}^6); \end{aligned} \tag{19}$$

3. \(f_0(x,v)=0\) when \(|v|>Q_v\).

The above assumption makes sure that we have the regularity needed for this article: for any \(T>0\),

where \(C_{f_0}\) depends only on \(\Vert f_0\Vert _{W^{1,1}\cap W^{1,\infty }({\mathbb {R}}^6)}\), \(\Vert (1+|v|^2)^{\frac{m_0}{2}}f_0\Vert _{W^{1,1}\cap W^{1,\infty }({\mathbb {R}}^6)}\) and \(Q_v\). Note that the charge density \(\rho \) satisfies

Thus we have

We also note that equivalently one can estimate a bound for \(f^N\) and \(\rho ^N\) uniformly in N.

Remark 1.2

The assumption that \(f_0\) is compactly supported in the velocity variable is not required for the existence of solutions to the VPFP system. However, it is used to get the \(L^\infty \) bound of the charge density \(\rho \) (see [53]) and also in the proof of Lemma 3.1 (see (84)).

Remark 1.3

All our estimates below are also possible in the presence of sufficiently smooth external fields. Due to the fluctuation-dissipation principle it is more natural to add an external, velocity-dependent friction force to the system.

1.3 Statement of the Main Results

Our objective is to derive the macroscopic mean-field PDE (3) from the regularized microscopic particle system (12). We will do this by using probabilistic methods as in [10, 28, 29, 39]. More precisely, we shall prove the convergence rate between the solution of VPFP equations (3) and the empirical measure associated to the regularized particle system \(\Phi _t\) satisfying (12). We assume that the initial condition \(\Phi _0\) of the system is independent and identically distributed (i.i.d.) with the common probability density given by \(f_0\).

Given the solution \(f^N\) to the mean-field Eq. (13), we first construct an auxiliary trajectory \(\Psi _t\) from (13). Then we prove the closeness between \(\Phi _t\) and \(\Psi _t\). For the auxiliary trajectory

we shall consider again a Newtonian system with noise, however, this time not subject to the pair interaction but under the influence of the external mean field \(k^N*\rho ^N(x,t)\)

Here we let \(\Psi _t\) have the same initial condition as \(\Phi _t\) (i.i.d. with the common density \(f_0\)). Since the particles are just N identical copies of the same evolution, the independence is conserved. Therefore the \(\Psi _t\) are distributed i.i.d. according to the common probability density \(f^N\). We remark that the VPFP equation (13) is Kolmogorov’s forward equation for any solution of (24), and in particular their probability density \(f^N\) solves (13). This i.i.d. property will play a crucial role below, where we shall use the concentration inequality (see Lemma 2.5) on some functions depending on \(\Psi _t\).

Our main result states that the N-particle trajectory \(\Phi _t\) starting from \(\Phi _0\) (i.i.d. with the common density \(f_0\)) remains close to the mean-field trajectory \(\Psi _t\) with the same initial configuration \(\Phi _0=\Psi _0\) on any finite time interval [0, T]. More precisely, we prove that the probability of the event that the maximal distance \(\max \limits _{t\in [0,T]}\Vert \Phi _t-\Psi _t\Vert _\infty \) on [0, T] exceeds \(N^{-\lambda _2}\) decays faster than any inverse power of N as the number N of particles grows to infinity. Here the distance \(\Vert \Phi _t-\Psi _t\Vert _\infty \) is measured by

Theorem 1.2

For any \(T>0\), assume that trajectories \(\Phi _t=(X_t,V_t)\), \(\Psi _t=({{\overline{X}}}_t,{{\overline{V}}}_t)\) satisfy (12) and (24) respectively with the initial data \(\Phi _0=\Psi _0\), which is i.i.d. sharing the common density \(f_0\) that satisfies Assumption 1.1. Then for any \(\alpha >0\) and \(0<\lambda _2<\frac{1}{3}\), there exist some \(0<\lambda _1<\frac{\lambda _2}{3}\) and an \(N_0\in {\mathbb {N}}\), both depending only on \(\alpha \), T and \(C_{f_0}\), such that for \(N\ge N_0\), the following estimate holds with the cut-off index \(\delta \in \left[ \frac{1}{3},\min \left\{ \frac{\lambda _1+3\lambda _2+1}{6},\frac{1-\lambda _2}{2}\right\} \right) \)

where \(\Vert \Phi _t-\Psi _t\Vert _\infty \) is defined in (25).

Remark 1.4

In particular, for any \(\frac{1}{63}\le \varepsilon <\frac{1}{36}\), choosing \(\lambda _2=\frac{1}{3}-\varepsilon \) and \(\lambda _1=\frac{1}{9}-\varepsilon \), we have a convergence rate \(N^{-\frac{1}{3}+\varepsilon }\) with a cut-off size of \(N^{-\delta }\) \((\frac{1}{3}\le \delta <\frac{19}{54}-\frac{2\varepsilon }{3})\). In other words, the cut-off parameter \(\delta \) can be chosen very close to \(\frac{19}{54}\) and in particular larger than \(\frac{1}{3}\), which is a significant improvement over previous results in the literature.
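The exponents quoted in this remark can be checked directly against the constraints of Theorem 1.2. The following computation is only a sanity check of the arithmetic, using the choices \(\lambda _2=\frac{1}{3}-\varepsilon \) and \(\lambda _1=\frac{1}{9}-\varepsilon \) stated above:

$$\begin{aligned} \lambda _1=\frac{1}{9}-\varepsilon&<\frac{\lambda _2}{3}=\frac{1}{9}-\frac{\varepsilon }{3},\\ \frac{\lambda _1+3\lambda _2+1}{6}&=\frac{\frac{1}{9}-\varepsilon +1-3\varepsilon +1}{6}=\frac{19}{54}-\frac{2\varepsilon }{3},\\ \frac{1-\lambda _2}{2}&=\frac{1}{3}+\frac{\varepsilon }{2}. \end{aligned}$$

The first of the two upper bounds is the smaller one exactly when \(\frac{1}{54}\le \frac{7\varepsilon }{6}\), i.e. \(\varepsilon \ge \frac{1}{63}\), and the interval \(\left[ \frac{1}{3},\frac{19}{54}-\frac{2\varepsilon }{3}\right) \) is non-empty exactly when \(\varepsilon <\frac{1}{36}\), which matches the stated range of \(\varepsilon \).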

Strategy of the proof

Before we explain our strategy in more detail, we would like to explain the novelty of this paper, namely the use of the Brownian motion. Of course Brownian motion helps with the solution theory of nonlinear PDEs. Existence, both local and global, as well as uniqueness are typically easier to prove or hold with more generality, given that the PDE includes a term which smooths the density. In this case the smoothing comes from the heat kernel, which is due to the Brownian motion on the microscopic level. This paper, however, focuses on the derivation of the effective description from the microscopic dynamics. We cite the respective results on the existence of classical solutions with the properties we need, which is done in Theorem 1.1. For the proof we refer to the literature.

However, in addition to stronger results on the solution theory, there is also a direct advantage for the proof of validity of the effective description from the microscopic dynamics, which plays an important part in the technicalities of this paper. The argument is, on the heuristic level, implemented in the following way: The whole proof is based on controlling the growth of the difference between the trajectory of the many-body system and the trajectory of the mean-field system, which starts with the same initial configuration but assumes that the particles follow the flow generated by the mean-field interaction. This distance is measured in the maximum norm, so it gives control of the deviation of each single particle from its mean-field trajectory. We use Gronwall’s inequality, i.e. assuming that this distance has a certain value, we control the growth of this distance. Under this assumption we are blind to many details of our system. Having information on the distance for each particle, for example, one does not get a good control of the clustering of particles. This is because the density in our system is high, and the worst case scenario can still lead to large clusters assuming that the estimate on the distance of the true positions of the particles from the mean-field trajectory is much larger than the intermediate distance of particles. Without using the effect of Brownian motion in our proof we would now have to assume the worst case, i.e. that the particles form the largest possible clusters. Of course, given the Brownian motion, this is not very probable. Therefore the idea is to show that the worst clusters have only small probability using a law of large numbers argument based on the randomness we get from the Brownian motion.

Note that for other arguments the randomness coming from the initial condition is helpful. Thus we will distinguish between the two in the proof below. In more detail: the strategy is to obtain a Gronwall-type inequality for \(\max \limits _{t\in [0,T]}\Vert \Phi _t-\Psi _t\Vert _\infty \). Notice that

where \(K^N(X_t)\) and \({{\overline{K}}}^N({{\overline{X}}}_t)\) are defined as

One can compute

If the force \(k^N\) is Lipschitz continuous with a Lipschitz constant independent of N, the desired convergence follows easily [9, 16]. However the force considered here becomes singular as \(N\rightarrow \infty \), hence it does not satisfy a uniform Lipschitz bound.

The first term in (27) is already a sufficient bound in view of Gronwall’s Lemma.

\(\bullet \) By the Law of Large Numbers, carried out in detail for our purpose here in Proposition 3.1, we show for any \(T>0\)

where, for convenience, we use the notation \(a\preceq b\) to denote \(a\le b\) except for an event with probability approaching zero. This direct error estimate can be seen as the consistency of the two evolutions with high probability.

\(\bullet \) In Proposition 3.2 and Lemma 3.4, we show that the propagation of errors, coming from the second term in (27), is stable. This stability is important to be able to close the Gronwall argument. We show that for any \(T>0\)

holds under the condition that

Here it is crucial to ensure the constant \(\lambda _3\) satisfies \(2\delta -1\le -\lambda _3<-\lambda _2<0\).

To get this improvement of the cut-off parameter compared to previous results in the literature, we make use of the mixing caused by the Brownian motion. To this end we split the potential \(K^N:=K_1^N+K_2^N\), where \(K_2^N\) is chosen to have a wider cut-off of order \(N^{-\lambda _2}>N^{-\delta }\). The less singular part \(K_2^N\) is controlled in the usual manner [4, 28, 29, 39] (see estimate (116)):

Thus we are left with the force \(K_1^N\). We shall first estimate the number of particles that will be present in the support of \(K_1^N\). Since the latter is small, this number will always be very small compared to N.

Under the condition (30) we cannot track the particles of the Newtonian time evolution with an accuracy better than \(N^{-\lambda _2}\). Thus, without using the Brownian motion, we have to assume the worst case scenario, in which all particles give the maximal possible contribution to the force, i.e. sit close to the edge of the cut-off region, and all forces add up, i.e. all particles sit on top of each other.

But the Brownian motion in our system will lead to mixing. For a short time interval the effect of mixing will be much larger than the effect of the correlations coming from the pair interaction, and we can make use of the independence of the Brownian motions. This mixing, which happens on a larger spatial scale than the range of the potential, causes the particles to be distributed roughly equally over the support of the interaction, resulting in a cancellation of the leading order of \(K_1^N\). It follows for the more singular part \(K_1^N\) that there exists some constant \(\lambda _3\) satisfying \(2\delta -1\le -\lambda _3<-\lambda _2<0\) such that

This is mainly carried out in Sect. 5 (the proof of Lemma 3.3).

\(\bullet \) Combining consistency (28) and stability (29), we conclude that

where \(-\lambda _3<-\lambda _2<0\). Gronwall’s inequality implies

which verifies the condition (30). Hence it completes our proof.
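As a reminder of how such an argument closes, here is the integral form of Gronwall's inequality in a generic setting. The names \(u\), \(A_N\) and \(B\) are hypothetical placeholders and are not the quantities of (33) and (34); in particular, the constants in the actual proof may carry additional N-dependence that this sketch ignores. For a continuous function \(u\ge 0\) and constants \(A_N, B\ge 0\),

$$\begin{aligned} u(t)\le A_N+B\int _0^t u(s)\,ds \ \text { for all } t\in [0,T] \quad \Longrightarrow \quad u(t)\le A_N e^{Bt} \ \text { for all } t\in [0,T]. \end{aligned}$$

If, for illustration, \(A_N\le CN^{-\lambda _3}\) with B independent of N, then \(u(T)\le Ce^{BT}N^{-\lambda _3}\le N^{-\lambda _2}\) as soon as \(N^{\lambda _3-\lambda _2}\ge Ce^{BT}\), which holds for all sufficiently large N because \(\lambda _3>\lambda _2\). This is the mechanism by which a bound of order \(N^{-\lambda _3}\) can recover an a priori assumption of order \(N^{-\lambda _2}\).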

To quantify the convergence of probability measures, we give a brief introduction on the topology of the p-Wasserstein space. In the context of kinetic equations, it was first introduced by Dobrushin [16]. Consider the following probability space with finite p-th moment:

We denote the Monge-Kantorovich-Wasserstein distance in \({\mathcal {P}}_p({\mathbb {R}}^{d})\) as follows

where \(\Lambda (\mu ,~\nu )\) is the set of joint probability measures on \({{\mathbb {R}}^{d}}\times {{\mathbb {R}}^{d}}\) with marginals \(\mu \) and \(\nu \) respectively and (X, Y) are all possible couples of random variables with \(\mu \) and \(\nu \) as respective laws. For notational simplicity, the notation for a probability measure and its probability density is often abused. So if \(\mu ,\nu \) have densities \(\rho _1,\rho _2\) respectively, we also denote the distance as \(W_p^p(\rho _1,\rho _2)\). For further details, we refer the reader to the book of Villani [60].
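To make the definition concrete, the following minimal sketch computes \(W_p\) between two empirical measures in the simplest possible setting: dimension one, with the same number of equally weighted atoms. In that special case the optimal coupling is known to pair the sorted samples, so no optimization is needed. The function name `wasserstein_1d` is hypothetical, and the one-dimensional restriction is an assumption made purely for illustration; the paper works in \({\mathbb {R}}^6\), where no such closed form is available.

```python
def wasserstein_1d(xs, ys, p=1):
    """W_p distance between two empirical measures on the real line.

    Assumes both samples have the same number of atoms, each with
    weight 1/n. In one dimension the optimal coupling matches the
    i-th smallest atom of xs with the i-th smallest atom of ys.
    """
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("samples must be non-empty and of equal size")
    if p < 1:
        raise ValueError("p must be at least 1")
    xs_sorted = sorted(xs)
    ys_sorted = sorted(ys)
    # Average transport cost |x - y|^p under the sorted (monotone) coupling.
    cost = sum(abs(x - y) ** p for x, y in zip(xs_sorted, ys_sorted)) / len(xs)
    return cost ** (1.0 / p)


if __name__ == "__main__":
    # Shifting every atom by 1 moves the measure by distance 1.
    print(wasserstein_1d([0.0, 1.0], [1.0, 2.0], p=2))
```

The distance depends only on the two measures, not on the labelling of the atoms, which is why permuting a sample leaves the result unchanged.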

Following the same argument as [39, Corollary 4.3], Theorem 1.2 implies molecular chaos in the following sense:

Corollary 1.1

For any \(T>0\), let \(F_0^N:=\otimes ^N f_0\) and \(F_t^N\) be the N-particle distribution evolving with the microscopic flow (12) starting from \(F_0^N\). Then the k-particle marginal

converges weakly to \(\otimes ^kf_t\) as \(N\rightarrow \infty \) for all \(k\in {\mathbb {N}}\), where \(f_t\) is the unique solution of the VPFP equations (3) with \(f_t|_{t=0}= f_0\). More precisely, under the assumptions of Theorem 1.2, for any \(\alpha >0\), there exist constants \(C>0\) and \(N_0>0\) depending only on \(\alpha \), T and \(C_{f_0}\), such that for \(N\ge N_0\), the following estimate holds

where \(\lambda _2\) is used in Theorem 1.2.

Another result from Theorem 1.2 is the derivation of the macroscopic mean-field VPFP equations (3) from the microscopic random particle system (12). We define the empirical measure associated to the microscopic N-particle systems (12) and (24) respectively as

The following theorem shows that under additional moment control assumptions on \(f_0\), the empirical measure \(\mu _\Phi (t)\) converges to the solution of VPFP equations (3) in \(W_p\) distance with high probability.

Theorem 1.3

Under the same assumption as in Theorem 1.2, let \(f_t\) be the unique solution to the VPFP equations (3) with the initial data satisfying Assumption 1.1 and \(\mu _\Phi (t)\) be the empirical measure defined in (37) with \(\Phi _t\) being the particle flow solving (12). Let \(p\in [1,\infty )\) and assume that there exists \(m>2p\) such that \(\iint _{{\mathbb {R}}^6}|x|^mf_0(x,v)dxdv<+\infty \). Then for any \(T>0\) and \(\kappa <\min \{\frac{1}{6},\frac{1}{2p},\delta \}\), there exists a constant \(C_1\) depending only on T and \(C_{f_0}\) and constants \(C_2\), \(C_3\) depending only on m, p, \(\kappa \), such that for all \(N\ge e^{\left( \frac{C_1}{1-3\lambda _2}\right) ^2}\) it holds that

where \(\delta \) and \(\lambda _2\) are as in Theorem 1.2.
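The threshold on N in Theorem 1.3 can be rewritten in a more transparent form. The following is an observation about the algebra only, assuming \(C_1>0\) and \(N>1\); how such a factor arises in the proof is not shown in this section. Since \(1-3\lambda _2>0\),

$$\begin{aligned} N\ge e^{\left( \frac{C_1}{1-3\lambda _2}\right) ^2} \ \Longleftrightarrow \ \sqrt{\log N}\ge \frac{C_1}{1-3\lambda _2} \ \Longleftrightarrow \ C_1\sqrt{\log N}\le (1-3\lambda _2)\log N \ \Longleftrightarrow \ e^{C_1\sqrt{\log N}}\le N^{1-3\lambda _2}. \end{aligned}$$

In other words, the condition says that a sub-polynomial factor \(e^{C_1\sqrt{\log N}}\) is dominated by the power \(N^{1-3\lambda _2}\).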

This theorem provides a derivation of the VPFP equations from an interacting N-particle system, bridging the gap between the microscopic descriptions in terms of agent based models and macroscopic or hydrodynamic descriptions for the particle probability density.

2 Preliminaries

In this section we collect the technical lemmas that are used in the proofs of the main theorems. Throughout this manuscript, generic constants will be denoted by C (independent of N), even if they are different from line to line. We use \(\Vert \cdot \Vert _p\) for the \(L^p\) (\(1\le p\le \infty \)) norm of a function. Moreover if \(v=(v_1,\ldots ,v_N)\) is a vector, then \(\Vert v\Vert _\infty :=\max \limits _{i=1,\ldots ,N}|v_i|\).

2.1 Local Lipschitz Bound

First let us recall some estimates of the regularized kernel \(k^N\) defined in (11):

Lemma 2.1

(Regularity of \(k^N\))

(i) \(k^N(0)=0\), \(k^N(x)=k(x)\), for any \(|x|\ge N^{-\delta }\) and \(|k^N(x)|\le |k(x)|\), for any \(x\in {\mathbb {R}}^3\);

(ii) \(|\partial ^\beta k^N(x)|\le CN^{(2+|\beta |)\delta },\text{ for } \text{ any } x\in {\mathbb {R}}^3\);

(iii) \(\Vert k^N\Vert _{2}\le CN^{\frac{\delta }{2}}.\)

The estimate (i) has been proved in [63, Lemma 2.1] and (ii) follows from [2, Lemma 5.1]. As for (iii), it is a direct result of Young’s inequality.

Next we define a cut-off function \(\ell ^N\), which will provide the local Lipschitz bound for \(k^N\).

Definition 2.1

Let

and \(L^N: {\mathbb {R}}^{3N}\rightarrow {\mathbb {R}}^N\) be defined by \((L^N(X_t))_i:=\frac{1}{N-1}\sum \limits _{i\ne j}\ell ^N(x_i^t-x_j^t)\). Furthermore, we define \({{\overline{L}}}^N({{\overline{X}}}_t)\) by \(( \overline{L}^N({{\overline{X}}}_t))_i:=\int _{{\mathbb {R}}^3} \ell ^N(\overline{x}_i^t-x)\rho ^N(x,t)dx\).

We summarize our first observation of \(k^N\) and \(\ell ^N\) in the following lemma:

Lemma 2.2

There is a constant \(C>0\) independent of N such that for all \(x,y\in {\mathbb {R}}^3\) with \(|x-y|\le N^{-\lambda _2}\gg N^{-\delta }\) \((\lambda _2<\delta )\) the following holds:

where \(k^N\) is the regularization of the Coulomb kernel (2) and \(\ell ^N\) satisfies Definition 2.1.

Proof

Let us first consider the case \(|y|<2 N^ {-\lambda _2}\). It follows from the bound from Lemma 2.1 and the decrease of \(\ell ^ N\) that

where we used \(2N^{-\lambda _2}>6N^ {-\delta }\), thus \(\ell ^ N( 2N^ {-\lambda _2})=27N^{3\lambda _2}\).

Next we consider the case \(|y|\ge 2 N^ {-\lambda _2}\). It follows that \(|x|\ge N^ {-\lambda _2}\) and thus by Lemma 2.1 (i)

where in the last step we used \(|x|\ge (|y|-N^ {-\lambda _2})\ge \frac{|y|}{2}\) for \(|y|\ge 2N^ {-\lambda _2}\). Collecting (40) and (41) finishes the proof. \(\square \)
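The value \(\ell ^N(2N^{-\lambda _2})=27N^{3\lambda _2}\) used in the first case can be checked numerically. The following minimal sketch assumes that the cut-off in (39) has the radial form \(\ell ^N(x)=6^3/|x|^3\) for \(|x|\ge 6N^{-\delta }\) and \(\ell ^N(x)=N^{3\delta }\) otherwise. This form is an assumption inferred from the value quoted in the proof, and the function name `ell_N` as well as the sample parameters are hypothetical.

```python
import math


def ell_N(r: float, N: float, delta: float) -> float:
    """Assumed radial profile of the cut-off ell^N (hypothetical form of (39))."""
    threshold = 6.0 * N ** (-delta)
    if r >= threshold:
        # Outer region: decays like 6^3 / r^3.
        return 6.0 ** 3 / r ** 3
    # Inner region: constant value, which matches the outer branch at r = threshold.
    return N ** (3.0 * delta)


# Illustrative parameters with lambda_2 < delta.
N, delta, lambda_2 = 1.0e6, 1.0 / 3.0, 0.1
r = 2.0 * N ** (-lambda_2)

# The proof uses 2 N^{-lambda_2} > 6 N^{-delta}, so r lies in the outer region.
in_outer_region = r > 6.0 * N ** (-delta)
value = ell_N(r, N, delta)
expected = 27.0 * N ** (3.0 * lambda_2)
```

Under this assumed form, the two branches agree at \(|x|=6N^{-\delta }\), so \(\ell ^N\) is continuous and non-increasing in \(|x|\).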

Recall the notations

and we have the local Lipschitz continuity of \(K^N\):

Lemma 2.3

If \(\Vert X_t-{{\overline{X}}}_t\Vert _\infty \le 2N^{-\delta }\), then it holds that

for some \(C>0\) independent of N.

Proof

For any \(\xi \in {\mathbb {R}}^3\) with \(|\xi |<4N^{-\delta }\), we claim that

where \(\ell ^N(x)\) is defined in (39). Indeed, for \(|x|<6N^{-\delta }\), estimate (44) holds due to the fact that \(\Vert \nabla k^N\Vert _\infty \le N^{3\delta }\). For \(|x|\ge 6N^{-\delta }\), there exists \(s\in [0,1]\) such that

where

The right hand side of the above expression takes its largest value when \(s=1\) and

Since \(|\xi |<4N^{-\delta }\) and \(|x|\ge 6N^{-\delta }\), it follows that \(\frac{|\xi |}{|x|}<\frac{2}{3}\). Therefore, we get

Applying claim (44) one has

which leads to (43). \(\square \)

The following observations of \(k^N\) and \(\ell ^N\) turn out to be very helpful in the sequel:

Lemma 2.4

Let \(\ell ^N(x)\) be defined in Definition 2.1 and \(\rho \in W^{1,1}\cap W^{1,\infty } ({\mathbb {R}}^3)\). Then there exists a constant \(C>0\) independent of N such that

and

Proof

We only prove one of the estimates above, since all the estimates can be obtained through the same procedure. One can estimate

We estimate the first term

where B(r) denotes the ball with radius r in \({\mathbb {R}}^3\). The second term is bounded by

It is easy to compute the last term

Collecting estimates (49), (50) and (51), one has

\(\square \)
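The logarithmic factor that appears later in bounds such as \(\Vert {{\overline{L}}}^N({{\overline{X}}}_{t})\Vert _\infty \le C\log (N)\) can be made plausible by integrating \(\ell ^N\) over a ball. The sketch below again assumes the hypothetical form \(\ell ^N(x)=6^3/|x|^3\) for \(|x|\ge 6N^{-\delta }\) and \(N^{3\delta }\) otherwise, and evaluates \(\int _{B(R)}\ell ^N(x)dx\) in closed form. It illustrates the growth rate only and is not the estimate of Lemma 2.4 itself.

```python
import math


def ell_ball_integral(N: float, delta: float, R: float = 1.0) -> float:
    """Integral of the assumed cut-off ell^N over the ball B(R) in R^3."""
    r0 = 6.0 * N ** (-delta)
    if R <= r0:
        # The whole ball lies in the region where ell^N = N^{3 delta}.
        return 4.0 * math.pi / 3.0 * N ** (3.0 * delta) * R ** 3
    # Inner part: N^{3 delta} * r0^3 / 3 = 6^3 / 3 = 72, independent of N.
    inner = 72.0
    # Outer part: integral of (6^3 / r^3) * r^2 dr from r0 to R.
    outer = 6.0 ** 3 * math.log(R / r0)
    return 4.0 * math.pi * (inner + outer)


delta = 1.0 / 3.0
small = ell_ball_integral(1.0e6, delta)
large = ell_ball_integral(1.0e12, delta)
# Squaring N adds a fixed multiple of log(N): the integral grows logarithmically.
growth = large - small
```

Here `growth` equals \(4\pi \cdot 6^3\cdot \delta \log (10^6)\), i.e. the integral increases linearly in \(\log (N)\) rather than as a power of N.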

2.2 Law of Large Numbers

Also, we need the following concentration inequality to provide us the probability bounds of random variables:

Lemma 2.5

Let \(Z_1,\ldots ,Z_N\) be i.i.d. random variables with \({\mathbb {E}}[Z_i]=0,\) \({\mathbb {E}}[Z_i^2]\le g(N)\) and \(|Z_i|\le C\sqrt{Ng(N)}\). Then for any \(\alpha >0\), the sample mean \({\bar{Z}}=\frac{1}{N}\sum _{i=1}^{N}Z_i\) satisfies

where \(C_\alpha \) depends only on C and \(\alpha \).

The proof can be found in [22, Lemma 1]; it is a direct consequence of Taylor's expansion and Markov's inequality.
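The qualitative content of such a concentration inequality can be illustrated by a small Monte Carlo experiment. The sketch below does not reproduce the precise bound of Lemma 2.5; it assumes, purely for illustration, that the \(Z_i\) are uniform on \([-1,1]\) (centered and bounded) and estimates how often the sample mean exceeds a fixed threshold. The function name, the threshold and the seed are hypothetical choices.

```python
import random


def tail_frequency(N: int, threshold: float, trials: int = 200, seed: int = 0) -> float:
    """Empirical frequency of |sample mean| > threshold for N i.i.d. uniform[-1, 1] draws."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(trials):
        mean = sum(rng.uniform(-1.0, 1.0) for _ in range(N)) / N
        if abs(mean) > threshold:
            exceed += 1
    return exceed / trials


# The standard deviation of the sample mean is sqrt(1 / (3 N)).
freq_small = tail_frequency(100, 0.05)      # threshold is below one standard deviation
freq_large = tail_frequency(10_000, 0.05)   # threshold is more than eight standard deviations
```

For the larger sample size the threshold is so many standard deviations away that no exceedance is observed, in line with the rapid decay in N that the lemma quantifies.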

Recall the notation

We can introduce the following version of the Law of Large Numbers:

Lemma 2.6

At any fixed time \(t\in [0,T]\), suppose that \({{\overline{X}}}_t\) satisfies the mean-field dynamics (24), \(K^N\) and \({{\overline{K}}}^N\) are defined in (42) and (54) respectively, \(L^N\) and \({{\overline{L}}}^N\) are introduced in Definition 2.1. For any \(\alpha >0\) and \(\frac{1}{3}\le \delta <1\), there exists a constant \(C_{1,\alpha }>0\) depending only on \(\alpha \), T and \(C_{f_0}\) such that

and

Proof

We can prove this lemma by using Lemma 2.5.

Due to the exchangeability of the particles, we are ready to bound

where

Since \({{\overline{x}}}_1^t\) and \({{\overline{x}}}_j^t\) are independent when \(j\ne 1\) and \(k^N(0)=0\), let us consider \({{\overline{x}}}_1^t\) as given and denote \(\mathbb {E'}[\cdot ]={\mathbb {E}}[\cdot |{{\overline{x}}}_1^t]\). It is easy to show that \({\mathbb {E}}'[Z_j]=0\) since

To use Lemma 2.5, we need a bound for the variance

Since it follows from Lemma 2.4 that

it suffices to bound

and

where we have used \(\Vert k^N\Vert _2\le CN^{\frac{\delta }{2}}\) in Lemma 2.1 (iii). Hence one has

So the hypotheses of Lemma 2.5 are satisfied with \(g(N)=CN^{4\delta -1}\). In addition, it follows from (ii) in Lemma 2.1 that \(|Z_j|\le CN^{2\delta }\le C\sqrt{Ng(N)}\). Hence, using Lemma 2.5, we have the probability bound

Similarly, the same bound also holds for all other indices \(i=2,\ldots ,N\), which leads to

Let \(C_{1,\alpha }\) be the constant \(C(\alpha ,T,C_{f_0}) \) in (65), then we conclude (55).

To prove (56), we follow the same procedure as above

where

It is easy to show that \({\mathbb {E}}'[Z_j]=0\). To use Lemma 2.5, we need a bound for the variance. One computes that

and

where we have used the estimates of \(\ell ^N\) in Lemma 2.4. Hence one has

So the hypotheses of Lemma 2.5 are satisfied with \(g(N)=CN^{6\delta -1}\). In addition, it follows from Definition 2.1 that \(|Z_j|\le CN^{3\delta }\le C\sqrt{Ng(N)}\). Hence, we have the probability bound

by Lemma 2.5, which leads to

3 Proof of Theorem 1.2

We carry out the proof following the idea in [28, 29], namely that consistency and stability imply convergence. This corresponds, at least in principle, to Lax's equivalence theorem for numerical algorithms, which states that stability and consistency of an algorithm imply its convergence.

3.1 Consistency

In order to obtain the consistency error for the entire time interval, we divide [0, T] into \(M+1\) subintervals with length \(\Delta \tau =N^{-\frac{\gamma }{3}}\) for some \(\gamma >4\) and \(\tau _k=k\Delta \tau \), \(k=0,\ldots ,M+1\). The choice of \(\gamma \) will be clear from the discussion below. Here the choice of \(\Delta \tau \) is only for the purpose of proving consistency and it can be chosen sufficiently small. Note that it is different from \(\Delta t\) in the proof of stability in the next subsection.

First, we establish the following lemma on the traveling distance of \({{\overline{X}}}_t\) in a short time interval \([\tau _k,\tau _{k+1}]\):

Lemma 3.1

Assume that \(({{\overline{X}}}_t,{{\overline{V}}}_t)\) satisfies the mean-field dynamics (24). For \(\gamma >4\) it holds

where \(C_B\) depends only on T and \(C_{f_0}\).

Proof

Notice that for \(t\in [\tau _k,\tau _{k+1}]\)

where

The estimate of \(I_1^k(t)\) follows from Lemma 2.4

So we have

To estimate \(I_2^k(t)\), recall a basic property of Brownian motion [20, Chap. 1.2]:

which leads to

where we choose \(b=N^{-\frac{1}{3}}\).

Since \(\max \limits _{t\in [\tau _k,\tau _{k+1}]}\Vert I_2^k(t)\Vert _\infty \le \Delta \tau \sqrt{2\sigma } \max \limits _{t\in [\tau _k,\tau _{k+1}]}\Vert B(t)-B(\tau _k)\Vert _\infty \), it follows from (78) that

which leads to

where we used the fact that \(n\le \frac{T}{\Delta \tau }=TN^{\frac{\gamma }{3}}\).

Lastly, we prove the estimate of \(I_3^k(t)\). It is obvious that

and it follows from (77) that

Moreover, it follows from the assumption in Theorem 1.1b) that the distribution \(f_0^v(v)\) of \(V_0\) has compact support:

Then one has

It follows from (73) that

then it yields

which leads to

Then it follows from (76), (80) and (87) that

for \(\gamma >4\), which completes the proof of (72). \(\square \)

Now we can prove the consistency error for the entire time interval [0, T].

Proposition 3.1

(Consistency) For any \(T>0\), let \(({{\overline{X}}}_t,{{\overline{V}}}_t)\) satisfy the mean-field dynamics (24) with initial density \(f_0(x,v)\), \(K^N\) and \({{\overline{K}}}^N\) be defined in (42) and (54) respectively. For any \(\alpha >0\) and \(\frac{1}{3}\le \delta <1\), there exists a constant \(C_{2,\alpha }>0\) depending only on \(\alpha \), T and \(C_{f_0}\) such that

and

Proof

Denote the events:

and

where \(C_{1,\alpha } \) and \(C_B\) are the constants from Lemma 2.6 and Lemma 3.1 respectively. According to Lemma 2.6 and Lemma 3.1, one has

for any \(\alpha >0\) and \(\gamma >4\).

Furthermore, we denote

then one has

by Lemma 2.6. Also, under the event \({\mathcal {B}}_{\tau _k}\), it holds that

where we have used \(\Vert {{\overline{L}}}^N({{\overline{X}}}_{\tau _k})\Vert _\infty \le C\log (N)\) from Lemma 2.4.

For all \(t\in [\tau _k,\tau _{k+1}]\), under the event \({\mathcal {B}}_{\tau _k}\cap {\mathcal {C}}_{\tau _k}\cap \mathcal {{{\overline{H}}}}\), we obtain

due to the fact that \(3\delta +1<4<\gamma \). In the second inequality we have used the local Lipschitz bound of \(K^N\)

under the event \(\mathcal {{{\overline{H}}}}\) (see Lemma 2.3). To bound the third term \(\left\| {{\overline{K}}}^N({{\overline{X}}}_{\tau _k})-{{\overline{K}}}^N({{\overline{X}}}_t)\right\| _\infty \), we used the uniform control of \(\max \limits _{\tau _k\le t \le \tau _{k+1}}\Vert \partial _t\rho ^N\Vert _{L^\infty ({\mathbb {R}}^3)}\) in (22). Indeed,

In the third inequality we have used (90) and (95). This yields that

holds under the event \(\bigcap \limits _{k=0}^{M}{\mathcal {B}}_{\tau _k}\cap {\mathcal {C}}_{\tau _k}\cap \mathcal {{{\overline{H}}}}\). Therefore it follows from (92) and (94) that

Let \(C_{2,\alpha '}\) denote the constant \(C(\alpha , T, C_{f_0})\) in (100). Since \(\alpha >0\) is arbitrary and so is \(\alpha '\), (88) holds true. The proof of (89) can be done similarly. \(\square \)
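The passage from a bound at each grid time \(\tau _k\) to a bound on the whole interval, and the reason why the exponent may change from \(\alpha \) to \(\alpha '\), can be sketched as a union bound. The sketch below assumes that each of the at most \(TN^{\frac{\gamma }{3}}+1\) grid events fails with probability at most \(N^{-\alpha }\); that this is how (100) is obtained is an assumption, and the function name and sample values are hypothetical.

```python
import math


def effective_exponent(N: float, alpha: float, gamma: float, T: float) -> float:
    """Exponent a such that (T * N^{gamma/3} + 1) * N^{-alpha} = N^{-a}."""
    grid_points = T * N ** (gamma / 3.0) + 1.0
    # Work with logarithms so that very small probabilities do not underflow.
    log_bound = math.log(grid_points) - alpha * math.log(N)
    return -log_bound / math.log(N)


# Illustrative values: gamma > 4 as in the text, alpha chosen freely.
exponent = effective_exponent(N=1.0e9, alpha=10.0, gamma=4.5, T=1.0)
```

With these values the exponent is close to \(\alpha -\frac{\gamma }{3}=8.5\). Since \(\alpha \) is arbitrary, the loss of \(\frac{\gamma }{3}\) in the exponent is harmless.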

3.2 Stability

In this subsection we obtain the stability result.

Definition 3.1

Let \({\mathcal {A}}_T\) be the event given by

Proposition 3.2

(Stability) For any \(T>0\), assume that the trajectories \(\Phi _t=(X_t,V_t)\), \(\Psi _t=({{\overline{X}}}_t,{{\overline{V}}}_t)\) satisfy (12) and (24) respectively with the initial data \(\Phi _0=\Psi _0\) which is i.i.d. sharing the common density \(f_0\) satisfying Assumption 1.1. Let \(K^N\) be introduced in (42). For any \(0<\lambda _2<\frac{1}{3}\), \(0<\lambda _1<\frac{\lambda _2}{3}\) and \(\frac{1}{3}\le \delta <1\), we denote the event:

Then for any \(\alpha >0\), there exist some \(C_{3,\alpha }>0\) and an \(N_0\in {\mathbb {N}}\) depending only on \(\alpha \), T and \(C_{f_0}\) such that

for all \(N\ge N_0\).

Remark 3.1

This proposition is one of the crucial statements in our paper, which later leads to Lemma 3.4. Proving propagation of chaos for systems like the one we consider under the assumptions of Lipschitz-continuous forces is standard, as explained in the introduction. The forces we consider are more singular. However our techniques allow us to show that the Lipschitz condition encoded in the definition of \({\mathcal {S}}\) holds typically, i.e. with probability close to one. In this sense, Proposition 3.2 is only helpful if we find an argument that \({\mathcal {A}}_T\) holds. But as long as we have good estimates on the difference of the forces and thus the growth of \(\max \limits _{t\in [0,T]} \sqrt{\log (N)}\left\| X_t-{{\overline{X}}}_t\right\| _\infty +\left\| V_t-{{\overline{V}}}_t\right\| _\infty \), we are in fact able to control \({\mathcal {A}}_T\) (see argument in (154)).

Proof

Let \(\alpha >0\). First, we write \({\mathcal {S}}_T(\Lambda )\) as the intersection of non-overlapping sets \(\{{\mathcal {S}}_n(\Lambda )\}_{n=0}^{M'}\), where

with \(\Delta t:=t_{n+1}-t_n=N^{-\lambda _1}\), then \({\mathcal {S}}_T(\Lambda )=\bigcap \limits _{n=0}^{M'}{\mathcal {S}}_n(\Lambda )\). Note that here the choice of \(\Delta t\) is for the purpose of proving stability and it is different from \(\Delta \tau \) in the proof of consistency.

To prove this proposition, we split the interaction force \(k^N\) into \(k^N=k_1^N+k_2^N\), where \(k_2^N\) is the result of choosing a wider cut-off of order \(N^{-\lambda _2}>N^{-\delta }\) in the force kernel k and

which means that for \(k_2^N\) and \(\ell _2^N\) we choose \(\delta =\lambda _2\) in (11) and (39) respectively.

Following the approach in [10], we introduce the following auxiliary trajectory

We consider the above auxiliary trajectory with two different initial phases. For any \(1\le n\le M'\) and \(t\in [t_n,t_{n+1}]\), we consider the auxiliary trajectory starting from the initial phase

where \((x_i^{t_{n-1}}, v_i^{t_{n-1}})\) satisfies (12) at time \(t_{n-1}\). However when \(n=0\), i.e. \(t\in [0,t_1]\), the initial phase of the auxiliary trajectory is chosen to be \(({\widetilde{x}}_i^{0}, {\widetilde{v}}_i^{0})=(x_i^{0}, v_i^{0})\), which has the distribution \(f_0\). Moreover in the latter case the distribution of \(({\widetilde{x}}_i^{t}, {\widetilde{v}}_i^{t})\) is exactly \(f_t^N\), which solves the regularized VPFP equations (13) with the initial data \(f_0\).

For later reference let us estimate the difference \(\Vert {{\overline{X}}}_t -{\widetilde{X}}_t\Vert _\infty \) and \(\Vert {{\overline{V}}}_t -{\widetilde{V}}_t\Vert _\infty \). Using the equations of these trajectories, we have for \(t\in [t_n,t_{n+1}],\)

and

where C depends only on T and \(C_{f_0}\). Summarizing, we get

Using Gronwall’s inequality it follows that

under the event \({\mathcal {A}}_T\) defined in (101).

Then for any \(t\in [t_n,t_{n+1}]\), one splits the error

First, let us compute \({\mathcal {I}}_1\):

where we have used the local Lipschitz bound of \(K_2^N\) under the event \({\mathcal {A}}_T\) (see Lemma 2.3). Furthermore, we denote

Since Proposition 3.1 also holds for the case \(\lambda _2<\frac{1}{3}\), one has

Under the event \({\mathcal {B}}_2\), it holds that

since \(\lambda _2<\frac{1}{3}\), where \(\Vert {{\overline{L}}}_2^N({{\overline{X}}}_{t})\Vert _\infty \le C\log (N)\) follows from Lemma 2.4. Hence, one has

under event \({\mathcal {A}}_T\cap {\mathcal {B}}_2\).

To estimate \({\mathcal {I}}_2\), notice that by triangle inequality and (110) one has

under the event \({\mathcal {A}}_T\), which leads to

Here the bound \(\frac{\Vert \nabla K_1^N(X_t)\Vert _\infty }{\Vert L^N({{\overline{X}}}_t)\Vert _\infty }\le CN^{3(\delta -\lambda _2)}\) uses Lemma 2.2 since

And a similar estimate leads to \(\frac{\Vert \nabla K_1^N({\widetilde{X}}_t)\Vert _\infty }{\Vert L^N({{\overline{X}}}_t)\Vert _\infty }\le CN^{3(\delta -\lambda _2)}\).

We denote the event

It has been proved in Proposition 3.1 that

Then under the event \({\mathcal {B}}_3\) it follows that

since \(\Vert {{\overline{L}}}^N({{\overline{X}}}_{t})\Vert _\infty \le C\log (N)\) and \(\frac{1}{3}\le \delta <1\). Thus, we have

under the event \({\mathcal {A}}_T\cap {\mathcal {B}}_3\).

The estimate of \({\mathcal {I}}_3\) is a result of Lemma 3.2. Indeed, we denote the event

so by Lemma 3.2 one has that for any \(0\le n \le M'\)

Furthermore, it holds that

under the event \({\mathcal {G}}_n\), where we have used the fact that \(\Vert k_1^N\Vert _1\le CN^{-\lambda _2}\). Indeed, it is easy to compute that

Collecting (116), (125) and (128) yields that

under the event \({\mathcal {B}}_2\cap {\mathcal {B}}_3\cap {\mathcal {A}}_T\cap {\mathcal {G}}_n\), where C depends on \(\alpha \), T and \(C_{f_0}\). To distinguish it from other constants we will denote this C by \(C_{3,\alpha }\). This implies \({\mathcal {B}}_2\cap {\mathcal {B}}_3\cap {\mathcal {A}}_T\cap {\mathcal {G}}_n\subseteq {{\mathcal {S}}}_n(C_{3,\alpha })\), which yields that

It follows that

where we used the estimates in (114), (123) and (127). Here \(\alpha \) is arbitrary and so is \(\alpha '\). \(\square \)

Lemma 3.2

Consider two trajectories \(({\widetilde{X}}_t,{\widetilde{V}}_t)\), \(({{\overline{X}}}_t,{{\overline{V}}}_t)\) on \(t\in [t_n,t_{n+1}]\) satisfying (106)-(107) and (24) respectively. When \(1\le n\le M'\), the two different initial phases are chosen to be \((X_{t_{n-1}},V_{t_{n-1}})\) and \(({{\overline{X}}}_{t_{n-1}},{{\overline{V}}}_{t_{n-1}})\) at time \(t=t_{n-1}\), and when \(n=0\) the two different initial phases are chosen to be \((X_{0},V_{0})\) and \(({{\overline{X}}}_{0},{{\overline{V}}}_{0})\) at time \(t=0\). Then for any \(\alpha >0\), there exists a \(C_{4,\alpha }>0\) depending only on \(\alpha \), T and \(C_{f_0}\) such that for N sufficiently large it holds that

where we require \(t_{n+1}-t_n=N^{-\lambda _1}\) with \(0<\lambda _1<\frac{\lambda _2}{3}\) and \(0<\lambda _2<\frac{1}{3}\). Here

where \(k_1^N\) is defined in (105).

Lemma 3.2 is used in the proof of Proposition 3.2. It follows from the following estimate of the term in (131) at any fixed time \(t\in [t_n,t_{n+1}]\), a statement which will later be generalized to hold for the maximum over \(t\in [t_n,t_{n+1}]\).

Lemma 3.3

Under the same assumptions as in Lemma 3.2, for any \(\alpha >0\), there exists \(C_{5,\alpha }>0\) depending only on \(\alpha \), T and \(C_{f_0}\) such that for N sufficiently large it holds that for any fixed time \(t\in [t_n,t_{n+1}]\)

The proof of Lemma 3.3 is carried out in Sect. 5. The novel technique in the proof uses the fact that \(k_1^N\) has a support with the (small) radius \(N^{-\lambda _2}\). This means that in order to contribute to the interaction, \({\widetilde{x}}_j^t\) (or \({{\overline{x}}}_j^t\)) has to get close enough (less than \(N^{-\lambda _2}\)) to \({\widetilde{x}}_i^t\) (or \({\overline{x}}_i^t\)). Due to the effect of Brownian motion we get mixing of the positions of the particles over the whole support of \(k_1^N\). Using a Law of Large Numbers argument one can show that the leading order of the interaction can in good approximation be replaced by the respective expectation value. Due to symmetry of \(k_1^N\) this expectation value is zero. Significant fluctuations of the interaction \(k_1^N\) have very small probability.

The proof of Lemma 3.2

We follow a procedure similar to the one in the proof of Proposition 3.1. We divide \([t_n,t_{n+1}]\) into \(M+1\) subintervals with length \(\Delta \tau =N^{-\frac{\gamma }{3}}\) for some \(\gamma >4\) and \(\tau _k=k\Delta \tau \), \(k=0,\ldots ,M+1\). Recall the event \(\mathcal {{{\overline{H}}}}\) as in (90) and denote the event

It follows from Lemma 3.1 that

for any \(\gamma >4\). Furthermore we denote the event

in (132), then it follows from Lemma 3.3 that

For all \(t\in [\tau _k,\tau _{k+1}]\), under the event \({\mathcal {G}}_{\tau _k}\cap \mathcal {{{\overline{H}}}}\cap \mathcal {{\widetilde{H}}}\), we obtain

when \(\gamma >4\) is sufficiently large. This yields that under the event \(\bigcap _{k=0}^M{\mathcal {G}}_{\tau _k}\cap \mathcal {{{\overline{H}}}}\cap \mathcal {{\widetilde{H}}}\) it holds that

Therefore it follows from (134) and (136) that

Let \(C_{4,\alpha '}\) denote the constant \(C(\alpha , T, C_{f_0})\) in (137). Since \(\alpha >0\) is arbitrary and so is \(\alpha '\), (131) holds true. This completes the proof of Lemma 3.2. \(\square \)

3.3 Convergence and the Proof of Theorem 1.2

In this section, we achieve the convergence by using the consistency from Proposition 3.1 and the stability from Proposition 3.2. To do this, we first prove the following lemma.

Lemma 3.4

Under the same assumptions as in Proposition 3.2, for any \(\alpha >0\) assume that

and let \(C_{3,\alpha }\) be the constant given in Proposition 3.2. Then it holds that

for N large enough.

Proof

We apply Proposition 3.2 and obtain

for N large enough. \(\square \)

We now return to the proof of Theorem 1.2.

Proof of Theorem 1.2

We shall also use the quantity e(t) defined as

Using the fact that \(\frac{d\Vert x\Vert _{\infty }}{dt}\le \Vert \frac{dx}{dt}\Vert _{\infty }\), one has for all \(t\in (0,T]\)

Recall the event \({\mathcal {S}}_{T}(C_{3,\alpha })\) defined in (102)

and denote

Then under the event \({\mathcal {S}}_{T}(C_{3,\alpha })\cap {\mathcal {C}}_{T}\) it follows that

where in the second inequality we used the fact that

Here we denote

Notice that for

one has \(-\lambda _3<-\lambda _2\). In other words, we obtain that for \(-\lambda _3<-\lambda _2\), there exists some \(N_0\in {\mathbb {N}}\) such that for all \(N\ge N_0\) it holds that

which leads to

by Gronwall’s Lemma. Hence we have

According to Lemma 3.4, assuming that for any \(\alpha >0\)

where \({\mathcal {A}}_{T}=\left\{ \max \limits _{t\in [0,T]} e(t)\le N^{-\lambda _2}\right\} \) implies that

Combining this with the consistency in Proposition 3.1, i.e. \({\mathbb {P}}\left( {\mathcal {C}}_{T}^c\right) \le N^{-\alpha }\), and with (150), we obtain

It remains to verify the assumption (151). Indeed since \(-\lambda _3<-\lambda _2\), and \( e^{C\sqrt{\log (N)}T}\), \(\log ^2(N)\) are asymptotically bounded by any positive power of N, we can find an \(N_0\in {\mathbb {N}}\) depending only on C and T such that for any \(N\ge N_0\)

Hence it holds that

where, as above, for sufficiently large N the exponents \(\alpha \) and \(\alpha '\) can be any positive real numbers. This means that \(\max \limits _{t\in [0,T]}e(t)\) can hardly reach \(N^{-\lambda _2}\), which verifies the assumption (151). Summarizing, we have proven that for any \(\alpha >0\), there exists some \(N_0\in {\mathbb {N}}\) such that

for all \(N\ge N_0\), where C depends only on \(\alpha ,T,\) and \(C_{f_0}\). This leads to Theorem 1.2. \(\square \)

4 Proof of Theorem 1.3

In order to prove the error estimate between \(f_t\) and \(\mu _\Phi (t)\), let us split the error into three parts

Theorem 1.3 is proven once we obtain the respective error estimates of those three parts.

Proof of Theorem 1.3

\(\bullet \) The first term \(W_p(f_t,f_t^N)\). The convergence of this term is a deterministic result: solutions of the regularized VPFP equations (13) approximate solutions of the original VPFP equations (3) as the width of the cut-off goes to zero. It follows from [12, Lemma 3.2] that

where \(p\in [1,\infty )\), \(N>3\) and \(C_1\) depends only on T and \(C_{f_0}\). The proof is inspired by the method of Loeper [45]. Note that here we cannot follow the method in [39] directly since the supports of \(f^N\) and f are not compact in our present case.

\(\bullet \) The second term \(W_p(f_t^N,\mu _\Psi (t))\). This term concerns the sampling of the mean-field dynamics by discrete particle trajectories. The convergence rate has been proved in [39, Corollary 9.4] by using the concentration estimate of Fournier and Guillin [18]. We summarize the result as follows: let \(p\in [1,\infty )\), \(\kappa <\min \{\delta ,\frac{1}{6},\frac{1}{2p}\}\) and \(N>3\). Assume that there exists \(m>2p\) such that

Then there exist constants \(C_2\) and \(C_3\) such that it holds

\(\bullet \) The third term \(W_p(\mu _\Psi (t),\mu _\Phi (t))\). The convergence of this term is a direct result of Theorem 1.2. Indeed, it follows from [39, Lemma 5.2] that for all \(p\in [1,\infty ]\)

Then we choose \(\alpha =\frac{m}{2p}-1\) in Theorem 1.2 so that

\(\bullet \) Convergence of \(W_p(f_t,\mu _\Phi (t))\). Collecting estimates (156), (157) and (159) and choosing \(\kappa <\min \{\delta ,\frac{1}{6},\frac{1}{2p}\}\), it follows that

where \(C_5\) depends only on T and \(C_{f_0}\), and \(C_6\), \(C_7\) depend only on m, p, \(\kappa \). We can simplify this result by demanding \(N\ge e^{\left( \frac{2C_5}{1-3\lambda _2}\right) ^2}\), which yields \(N^{1-3\lambda _2}\ge (1+\sqrt{\log (N)})e^{C_5\sqrt{\log (N)}}\). Hence we conclude that

\(\square \)
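The simplification in the last step, namely that \(N\ge e^{\left( \frac{2C_5}{1-3\lambda _2}\right) ^2}\) yields \(N^{1-3\lambda _2}\ge (1+\sqrt{\log (N)})e^{C_5\sqrt{\log (N)}}\), can be checked numerically in logarithmic form. The sketch below is an illustration only: the values \(C_5=1\) and \(\lambda _2=0.3\) are hypothetical, and the function names are not taken from the paper.

```python
import math


def threshold_log_N(C5: float, lambda_2: float) -> float:
    """log(N) at the threshold N = exp((2 C5 / (1 - 3 lambda_2))^2)."""
    return (2.0 * C5 / (1.0 - 3.0 * lambda_2)) ** 2


def inequality_holds(log_N: float, C5: float, lambda_2: float) -> bool:
    """Check N^{1 - 3 lambda_2} >= (1 + sqrt(log N)) * exp(C5 sqrt(log N)) after taking logs."""
    root = math.sqrt(log_N)
    lhs = (1.0 - 3.0 * lambda_2) * log_N
    rhs = math.log1p(root) + C5 * root
    return lhs >= rhs


C5, lambda_2 = 1.0, 0.3  # illustrative values with 0 < lambda_2 < 1/3
log_N_star = threshold_log_N(C5, lambda_2)
at_threshold = inequality_holds(log_N_star, C5, lambda_2)
beyond_threshold = inequality_holds(10.0 * log_N_star, C5, lambda_2)
below_threshold = inequality_holds(100.0, C5, lambda_2)
```

For these sample values the inequality holds at and beyond the threshold and fails for \(\log (N)=100\), which shows that a lower bound on N is indeed needed.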

5 The Proof of Lemma 3.3

In this section, we present the proof of Lemma 3.3, which bounds the distance between \(K_1^{N}({\widetilde{X}}_t)\) and \(K_1^{N}({{\overline{X}}}_t)\) (\(t\in [t_n,t_{n+1}]\)), where \(({\widetilde{X}}_t,{\widetilde{V}}_t)\), \(({{\overline{X}}}_t,{{\overline{V}}}_t)\) satisfy (106)–(107) and (24) respectively with two different initial phases \((X_{t_{n-1}},V_{t_{n-1}})\) and \(({{\overline{X}}}_{t_{n-1}},{{\overline{V}}}_{t_{n-1}})\) at time \(t=t_{n-1}\) when \(1\le n\le M'\), or \((X_{0},V_{0})\) and \(({{\overline{X}}}_{0},{{\overline{V}}}_{0})\) at time \(t=0\) when \(n=0\). To do this, we introduce the following stochastic process: For time \(0\le s \le t\) and \(a:=(a_x,a_v)\in {\mathbb {R}}^{6N}\), let \(Z^{a,N}_{t,s}:=(Z^{a,N}_{x,t,s},Z^{a,N}_{v,t,s})\) be the process starting at time s at the position \((a_x,a_v)\) and evolving from time s up to time t according to the mean-field force \({{\overline{K}}}^N\):

and

Note that here \((Z^{a,i,N}_{x,t,s},Z^{a,i,N}_{v,t,s})\), \(i=1,\ldots ,N\) are independent. Furthermore \((Z^{a,N}_{x,t,s},Z^{a,N}_{v,t,s})\) has the strong Feller property (see [21] Definition (A)), implying in particular that it has a transition probability density \(u^{a,N}_{t,s}\) which is given by the product \(u^{a,N}_{t,s}:=\prod _{i=1}^N u^{a,i,N}_{t,s}\). Hence each term \(u^{a,i,N}_{t,s}\) is the transition probability density of \((Z^{a,i,N}_{x,t,s},Z^{a,i,N}_{v,t,s})\) and is also the solution to the linearized equation for \(t>s\):

where \(\rho ^N=\int _{{\mathbb {R}}^3}f^N(t,x,v)dv\), and \(f^N\) solves the regularized VPFP equations (13) with initial condition \(f_0\).

Consider now the processes \(Z^{a,N}_{t,s}\) and \(Z^{b,N}_{t,s}\) for two different starting points \(a,b\in {\mathbb {R}}^{6N}\). It is intuitively clear that the probability densities \(u^{a,i,N}_{t,s}\) and \(u^{b,i,N}_{t,s}\) are just shifts of each other. The next lemma gives an estimate for the distance between any two densities in terms of the distance between the starting points a and b and the elapsed time \(t-s\). The proof is carried out in Appendix A.

Lemma 5.1

There exists a positive constant C depending only on \(C_{f_0}\) and T such that for each \(N \in {\mathbb {N}}\), any starting points \(a,b \in {\mathbb {R}}^{6 N}\) and any time \(0 < t \leqslant T\), the following estimates for the transition probability densities \(u_{t, s}^{a,i,N}\) resp. \(u_{t, s}^{b,i,N}\) of the processes \(Z_{t, s}^{a,i,N}\) resp. \(Z_{t, s}^{b,i,N}\) given by (162) hold for \(t-s<\min \{1,T-s\}\):

(i) \( \Vert u_{t, s}^{a,i,N} \Vert _{\infty ,1} \leqslant C \left( (t - s)^{-\frac{9}{2}}+1\right) \),

(ii) \(\Vert u_{t, s}^{a,i,N} - u_{t, s}^{b,i,N} \Vert _{\infty ,1} \leqslant C | a - b|\left( (t - s)^{- 6}+1\right) .\)

The norm \(\Vert \cdot \Vert _{p,q}\) denotes the p-norm in the x and q-norm in the v-variable, i.e. for any \(f:{\mathbb {R}}^3\times {\mathbb {R}}^3\rightarrow {\mathbb {R}} \)

To this end one assumes \(\Delta t=t_{n+1}-t_n=N^{-\lambda _1}\). Next we define for \(t\in [t_n,t_{n+1}]\) the random sets

and

Here \(M_{t_n}^t\) is at time \(t_n\) the set of indices of those particles \(x_j^{t_n}\) which are in the ball of radius \(N^{-\lambda _2}+ \log (N) \Delta t^{\frac{3}{2}} \) around \(x_1^{t_n}+(t-t_n)(v^{t_n}_1- v^{t_n}_j)\), and \({{\overline{M}}}_{t_n}^t\) is an intermediate set introduced to help to control \(M_{t_n}^t\). Note that under the event \({\mathcal {A}}_T\), we have \(M_{t_n}^t\subseteq {{\overline{M}}}_{t_n}^t\).

We also define random sets for \(t\in [t_n,t_{n+1}]\)

and

where \(C_*\) will be defined later. Here \({\mathcal {S}}_{t_n}^t\) indicates the event where the number of particles inside the set \(M_{t_n}^t\) is smaller than \(2C_*N\left( 3N^{-\lambda _2}+ \log (N) \Delta t^{\frac{3}{2}} \right) ^2 \), and the event \(\overline{{\mathcal {S}}}_{t_n}^t\) is introduced to help estimate \({\mathbb {P}}({\mathcal {S}}_{t_n}^t)\).

Our next lemma provides the probability estimate of the event where particle \({\widetilde{x}}_{j}^t\) (or \({{\overline{x}}}_{j}^t\)) is close to \({\widetilde{x}}_{1}^t\) (or \({{\overline{x}}}_{1}^t\)) (distance smaller than \(N^{-\lambda _2}\)) during a short time interval \(t-t_n\), which contributes to the interaction of \(k_1^N\) defined in (105), since the support of \(k_1^N\) has radius \(N^{-\lambda _2}\).

Lemma 5.2

Let \(({\widetilde{x}}_{j}^t,{\widetilde{v}}_{j}^t)\) satisfy (106) and (107) on \(t\in [t_n,t_{n+1}]\) and the random set \(M_{t_n}^t\) satisfy (166), then for any \(\alpha >0\), there exists some constant \(N_0>0\) depending only on \(\alpha \), T and \(C_{f_0}\) such that for all \(N\ge N_0\) it holds

This means that the event that some particle index j outside \(M_{t_n}^t\) satisfies \(\left| {\widetilde{x}}_{1}^t-{\widetilde{x}}_{j}^t\right| <N^{-\lambda _2}\) for some \(t\in [t_n,t_{n+1}]\) (i.e. \({\widetilde{x}}_{j}^t\) contributes to the interaction of \(k_1^N\)) has probability less than \(N^ {-\alpha }\). Here \({\mathbb {P}}\) is understood to be taken on the initial condition \({\widetilde{x}}_{j}^{t_n}\).

Proof

Let (1, j) be fixed and \(a_1^t:=(a^t_{1,x},a^t_{1,v}),b_j^t:=(b^t_{j,x},b^t_{j,v})\in {\mathbb {R}}^6\) satisfy the stochastic differential equations

with the initial data \(a_1^{t_n}=0\) and \(b_j^{t_n}=0\). Here \(B_j^t\) is the same as in (106). It follows from the evolution Eq. (106) that

and

where

which is bounded by \(\Vert {{\overline{k}}}^N\Vert _\infty \le C(\Vert \rho ^N\Vert _1+\Vert \rho ^N\Vert _\infty )\) according to Lemma 2.4. Integrating twice we get for any \(s\ge t_n\)

and

And by the same argument one has

Since \({{\overline{k}}}^N({\widetilde{x}}^\tau _j)\) is bounded by \(\Vert {{\overline{k}}}^N\Vert _\infty \le C(\Vert \rho ^N\Vert _1+\Vert \rho ^N\Vert _\infty )\) according to Lemma 2.4, it follows that there is a constant \(0<C<\infty \) depending only on \(\Vert {{\overline{k}}}^N\Vert _\infty \) such that

For \(j\in (M_{t_n}^t)^c\) for some \(t\in [t_n,t_{n+1}]\), i.e.

together with \(\min \limits _{t\in [t_n,t_{n+1}]}\max \limits _{j\in ( M^ t_{t_n})^c}\left\{ \left| {\widetilde{x}}_{1}^t-{\widetilde{x}}_{j}^t\right| \right\} <N^{-\lambda _2}\), (170) and (171) imply

Hence

where we used \(a_x^ t= \displaystyle \int _{t_n}^ta_v^sds\) and \(b_x^ t=\int _{t_n}^tb_v^sds\) in the second inequality. In the same way we can argue that

Due to independence the difference \(c_{j,v}^t=(c_{j,1}^t,c_{j,2}^t,c_{j,3}^t)=a_{1,v}^t-b_{j,v}^t\) is itself a Wiener process [62] since

Splitting up this Wiener process into its three spatial components we get

where in the last equality we used the reflection principle based on the Markov property [40].

Recall that the time evolutions of \(a_{1,v}^ t\) and \(b_{j,v}^t\) are standard Brownian motions, i.e. the density is a Gaussian with standard deviation \(\sigma _t=\sigma (t-t_n)^{\frac{1}{2}}\). Due to the independence of \(a^t_{1,v}\) and \(b^t_{j,v}\), \(c_{j,1}^t\) is also normally distributed with standard deviation of order \((t-t_n)^{\frac{1}{2}}\). Hence for N sufficiently large, following from (175), it holds that

and

With (172) and (173) the lemma follows. \(\square \)
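The role of the factor \(\log (N)\) in the radius \(N^{-\lambda _2}+\log (N)\Delta t^{\frac{3}{2}}\) can be illustrated with the reflection principle. For a standard one-dimensional Brownian motion W one has \({\mathbb {P}}(\max _{0\le s\le t}W_s\ge b)=2{\mathbb {P}}(W_t\ge b)\), which equals \(\mathrm{erfc}(b/\sqrt{2t})\). The sketch below assumes unit diffusion coefficient (the constant \(\sigma \) of the paper would only rescale b) and takes \(b=\log (N)\sqrt{t}\); the function name and the sample values are hypothetical.

```python
import math


def max_exceed_probability(b: float, t: float) -> float:
    """P(max_{0 <= s <= t} W_s >= b) for a standard Brownian motion W and b >= 0."""
    # Reflection principle: 2 * P(W_t >= b) = erfc(b / sqrt(2 t)).
    return math.erfc(b / math.sqrt(2.0 * t))


N = 1.0e6
t = N ** (-0.05)                 # a short time interval, playing the role of Delta t
b = math.log(N) * math.sqrt(t)   # log(N) standard deviations
p = max_exceed_probability(b, t)
```

Since the ratio \(b/\sqrt{t}=\log (N)\) does not depend on t, the tail behaves like \(e^{-\log ^2(N)/2}\), which decays faster than any fixed power \(N^{-\alpha }\).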

Now we have all the estimates needed for the proof of Lemma 3.3.

Proof of Lemma 3.3

We show that under the event \({\mathcal {A}}_T\) defined in (101), for any \(\alpha >0\) there exists a \(C_\alpha \) depending only on \(\alpha \), T and \(C_{f_0}\) such that at any fixed time \(t\in [t_n,t_{n+1}]\)

This is done under the event \({\mathcal {A}}_T\) in three steps:

(1) We prove that for any \(t\in [t_n,t_{n+1}]\) the number of particles inside \(M_{t_n}^t\) is larger than

$$\begin{aligned} M_*:= 2C_*N\left( 3N^{-\lambda _2}+ \log (N) \Delta t^{3/2}\right) ^2 \end{aligned}$$(177)

with probability less than \(N^{-\alpha }\). Note that \(M_*\) is used as a bound in the definition of (168) and (169). For any \(t\in [t_n,t_{n+1}]\) we prove that

$$\begin{aligned} {\mathbb {P}}\left( \text{ card } (M_{t_n}^t)> M_*\right) ={\mathbb {P}}(({\mathcal {S}}_{t_n}^t)^c)\le {\mathbb {P}}((\overline{{\mathcal {S}}}_{t_n}^t)^c)\le N^{-\alpha }. \end{aligned}$$(178)

(2) We prove that at any fixed time \(t\in [t_n,t_{n+1}]\), particles outside \(M_{t_n}^t\) contribute to the interaction of \(k_1^N\) with probability less than \(N^{-\alpha }\), namely

$$\begin{aligned}&{\mathbb {P}}\bigg (\left| \frac{1}{N-1}\sum _{j\in (M_{t_n}^t)^c}\left( k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)- k_1^N({{\overline{x}}}_1^t-{{\overline{x}}}_j^t)\right) \right| > 0\bigg )\le N^{-\alpha }. \end{aligned}$$(179)

(3) According to step (2) above, at any fixed time \(t\in [t_n,t_{n+1}]\), particles outside \(M_{t_n}^t\) do not contribute to the interaction of \(k_1^N\) with high probability, so we only consider particles that are inside \(M_{t_n}^t\). From step (1) above we already know that the number of particles inside \(M_{t_n}^t\) exceeds \(M_*\) only with low probability. To prove (176), we therefore only need to prove

$$\begin{aligned} {\mathbb {P}}\bigg ( {\mathcal {X}}(M_{t_n}^t)\cap \left\{ \text{ card } (M_{t_n}^t)\le M_*\right\} \bigg )\le N^{-\alpha }\, \end{aligned}$$(180)

at any fixed time \(t\in [t_n,t_{n+1}]\), where the event \({\mathcal {X}}(M_{t_n}^t)\) is defined by

$$\begin{aligned} {\mathcal {X}}(M_{t_n}^t):=&\bigg \{\bigg |\frac{1}{N-1}\sum _{j\in M_{t_n}^t}\left( k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)- k_1^N({{\overline{x}}}_1^t-{{\overline{x}}}_j^t)\right) \bigg |\nonumber \\&\ge C_{\alpha }N^{2\delta -1}\log (N) +C_\alpha \log ^2(N)N^{3\lambda _1-\lambda _2} \Vert k_1^N\Vert _1\bigg \}. \end{aligned}$$(181)

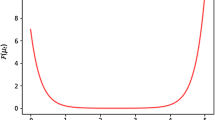

Illustration of the sets \(M_{t_n}^t\) and \({\overline{M}}_{t_n}\) under the assumption that \({\mathcal {A}}_T\) holds: the set \(M_{t_n}^t\) contains all indices of particles with respect to X which are in the ball of radius \(r=N^{-\lambda _2}+ \log (N) (\Delta t)^{3/2}\) around \(x_1\). In the figure this is the ball with solid lines and \(M_{t_n}^t=\{1,3\}\). The set \({{\overline{M}}}_{t_n}^t\) contains all indices of particles with respect to \({{\overline{X}}}\) which are in the ball of radius \(R=3N^{-\lambda _2}+ \log (N) (\Delta t)^{3/2}\) around \({{\overline{x}}}_1\). In the figure this is the ball with dashed lines and \({\overline{M}}_{t_n}^t=\{1,3,4,6\}\). Since on the set \({\mathcal {A}}_T\) the distance d of the particles \(x_1\) and \({{\overline{x}}}_1\) cannot be larger than \(N^ {-\lambda _2}\), it follows that, given that the event \({\mathcal {A}}_T\) holds, a particle \({{\overline{x}}}_j\) is in the solid ball only if the particle \( x_j\) is in the ball with dashed lines, i.e. with radius \(R=3N^{-\lambda _2}+ \log (N) (\Delta t)^{3/2}\) around \(x_1\) (see for example particles \(x_3\) and \({{\overline{x}}}_3\)). Thus \(M_{t_n}^t\subseteq {\overline{M}}_{t_n}\). Controlling \(M_{t_n}^t\) by \({\overline{M}}_{t_n}\) will be helpful to estimate the number of particles inside these sets. The \({\overline{x}}_j\) are distributed independently, and the probability of finding any of these \({{\overline{x}}}_j\) inside the solid ball is small due to the small volume of the ball. This helps to estimate the number of particles in the set \({\overline{M}}_{t_n}\) (see Step 1). Particles outside the ball, i.e. indices not in \({\overline{M}}_{t_n}\) do not contribute to the interaction \(k_1\). 
This comes from the fact that, in order to come close enough to \(x_1\) to interact, they would have to travel a long distance during the short time interval \((t-t_n)\), namely the distance \(\log (N) (\Delta t)^{3/2}\) (recall that the support of \(k_1\) has radius \(N^{-\lambda _2}\)). Due to the Brownian motion this is possible, of course, but the probability of travelling that far is smaller than any inverse power of N. This argument is worked out in Step 2. The main contribution thus comes from Step 3. Knowing that the number of particles in \(M_{t_n}^t\) is quite small helps to estimate this term.

\(\bullet \textit{Step 1:}\) To prove the first part of (178), note that on the event \({{\mathcal {A}}}_T\) defined in (101) and assuming that \(t\in [t_n,t_{n+1}]\)

implies

Hence \(M_{t_n}^t\subseteq {{\overline{M}}}_{t_n}^t\) and thus for any \(R>0\), \(\text{ card } ({{\overline{M}}}_{t_n}^t)<R\) implies that \(\text{ card } (M_{t_n }^t)\le \text{ card } ({{\overline{M}}}_{t_n }^t)<R\), consequently \({\mathcal {S}}_{t_n}^t\supseteq \overline{{\mathcal {S}}}_{t_n}^t\), i.e. \(({\mathcal {S}}^ t_{t_n})^c\subseteq (\overline{{\mathcal {S}}}^t_{t_n})^c\).
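The inclusion can be traced back to the triangle inequality. The following is only a sketch under simplifying assumptions taken from the figure caption rather than from the precise definitions (168) and (169): \(M_{t_n}^t\) collects the indices j with \(|x_1-x_j|\le r\), \({{\overline{M}}}_{t_n}^t\) collects those with \(|{{\overline{x}}}_1-{{\overline{x}}}_j|\le R\), and on \({\mathcal {A}}_T\) each pair satisfies \(|x_i-{{\overline{x}}}_i|\le N^{-\lambda _2}\). With \(r=N^{-\lambda _2}+\log (N)(\Delta t)^{3/2}\) and \(R=3N^{-\lambda _2}+\log (N)(\Delta t)^{3/2}\), any \(j\in M_{t_n}^t\) then satisfies

$$\begin{aligned} |{{\overline{x}}}_1-{{\overline{x}}}_j|\le |{{\overline{x}}}_1-x_1|+|x_1-x_j|+|x_j-{{\overline{x}}}_j|\le r+2N^{-\lambda _2}=R, \end{aligned}$$

so that \(j\in {{\overline{M}}}_{t_n}^t\). The extra \(2N^{-\lambda _2}\) in the larger radius is exactly the room needed for the two displacements between the exact and the mean-field particles.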

The second part of (178) is trivial. For the third part we use the independence of the \({{\overline{x}}}\)-particles. Note that the law of \(({{\overline{x}}}^j_{t_n}, {{\overline{v}}}^j_{t_n})\) has a density \(f^N(x,v,t_n)\). For any \(j\in \left\{ 2,\ldots ,N\right\} \) the probability to find \(j\in {{\overline{M}}}_{t_n}^t\) for any \(t\in [t_n,t_{n+1}]\) is given by

where the center \(\Xi ^t\) of the ball is given by \(\Xi ^t={{\overline{x}}}_1^{t_n}+(t-t_n)({{\overline{v}}}_1^{t_n}- v)\), and the radius of the ball is given by \(R=3N^{-\lambda _2}+ \log (N) \Delta t^{3/2} \).

Define

which then satisfies the following transport equation

Then one has

where the center \(\Xi _0^t\) of the ball is given by \(\Xi _0^t={{\overline{x}}}_1^{t_n}+(t-t_n){{\overline{v}}}_1^{t_n}\), in particular the integration area is independent of v. It follows that the probability of finding \(j\in {{\overline{M}}}_{t_n}^t\) for any \(t\in [t_n,t_{n+1}]\) is equivalent to

Next, we compute for \(0<s\le \Delta t\)

where we have chosen

It follows that

which leads to

because of (20), where \(C_2\) depends only on T, and \(C_{f_0}\). It follows from (186) that

where we define \(C_*:=C_2(\frac{4}{3}\pi ) ^{\frac{2}{3}}\), which depends only on T and \(C_{f_0}\).

The probability of finding k particles inside the set \({{\overline{M}}}_{t_n}^t\) is thus bounded from above by the binomial probability mass function with parameter p at position k, i.e. for any natural number \(0\le A\le N\) and any \(t\in [t_n,t_{n+1}]\)

Binomially distributed random variables have mean Np and standard deviation \(\sqrt{Np(1-p)}<\sqrt{Np}\), and the probability to find more than \(Np+ a \sqrt{Np}\) particles in the set \({{\overline{M}}}_{t_n}^t\) is exponentially small in a, i.e. there is a sufficiently large N for any \(\alpha >0\) and any \(t\in [t_n,t_{n+1}]\) such that

This follows from the central limit theorem: for sufficiently large N the binomial distribution is well approximated by a normal distribution. Since \(p\ge C N^{-3\lambda _2}\), we get that \(\sqrt{Np}>C N^ {\frac{1}{2}(1-3\lambda _2)}\) \((\lambda _2<1/3)\). Hence the probability of finding more than \(2Np=Np+\sqrt{Np}\sqrt{Np}\) (i.e. \(a=\sqrt{Np}>C N^ {\frac{1}{2}(1-3\lambda _2)}\)) particles in the set \({{\overline{M}}}_{t_n}^t\) is smaller than any inverse power of N, i.e. there is a \(C_\alpha \) for any \(\alpha >0\) and any \(t\in [t_n,t_{n+1}]\) such that

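The claim that a binomial variable rarely exceeds twice its mean can be checked numerically. The sketch below compares the exact tail \({\mathbb {P}}(X\ge 2Np)\) with the multiplicative Chernoff bound \(\exp (-Np/3)\). Note that the Chernoff bound is a swapped-in, non-asymptotic substitute for the central limit argument of the text, and that the function names and the sample values of N and p are assumptions chosen for illustration.

```python
from math import exp, lgamma, log


def binomial_tail(n: int, p: float, k_min: int) -> float:
    """P(X >= k_min) for X ~ Binomial(n, p) with 0 < p < 1, summed via log-pmf."""
    total = 0.0
    for k in range(max(k_min, 0), n + 1):
        log_pmf = (
            lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
            + k * log(p) + (n - k) * log(1.0 - p)
        )
        total += exp(log_pmf)  # underflows harmlessly to 0.0 for tiny terms
    return total


def chernoff_bound_double_mean(n: int, p: float) -> float:
    """Chernoff bound exp(-n*p/3) for P(X >= 2*n*p), X ~ Binomial(n, p)."""
    return exp(-n * p / 3.0)


# Illustrative values only: mean N*p = 100, threshold 2*N*p = 200.
N, p = 2000, 0.05
tail = binomial_tail(N, p, 200)
bound = chernoff_bound_double_mean(N, p)
```

Since \(Np\) grows like a positive power of N when \(\lambda _2<1/3\), a bound of the form \(\exp (-Np/3)\) decays faster than any inverse power of N, in line with the statement above.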
\(\bullet \textit{Step 2:}\) For (179) it is sufficient to show that for any \(\alpha >0\) there is a sufficiently large N such that for some \(j\in (M_{t_n}^t)^c\)

The total probability we have to control in (179) is at most N times this value. The key to proving this is Lemma 5.2. To have an interaction \(k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)\ne 0\) for all \(t\in [t_n,t_{n+1}]\) the distance between particle 1 and particle j has to be reduced to a value smaller than \(N^ {-\lambda _2}\). Due to the Brownian motion, this is possible, but suppressed. Due to the fast decay of the Gaussian it is very unlikely that \(k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)\ne 0\). The probability is smaller than any inverse power of N (see Lemma 5.2). The same holds true for \(k_1^N({{\overline{x}}}_1^t-{{\overline{x}}}_j^t)\).

In more detail: due to the cut-off \(N^{-\lambda _2}\) we introduced for \(k_1^N\)

where we used the fact that \(({{\overline{M}}}^ t_{t_n})^c\subseteq ( M^ t_{t_n})^c\) in the last inequality. With Lemma 5.2 we get the bound for (179).
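The phrase "smaller than any polynomial in N" can be made concrete with a Gaussian tail. The sketch below assumes, for illustration only, that the relevant event requires a standard normal variable to deviate by \(\log (N)\) standard deviations; the precise statement is Lemma 5.2, which is not reproduced here, and the function name is hypothetical.

```python
from math import erfc, log, sqrt


def gaussian_tail_at_log_n(n: float) -> float:
    """P(|Z| >= log n) for a standard normal Z, via the complementary error function."""
    return erfc(log(n) / sqrt(2.0))


# The tail is bounded by exp(-log(n)**2 / 2) = n**(-log(n) / 2),
# so it drops below n**(-alpha) once log(n) exceeds 2 * alpha.
alpha = 5.0
small_n, large_n = 100.0, 1.0e6
tail_small = gaussian_tail_at_log_n(small_n)
tail_large = gaussian_tail_at_log_n(large_n)
```

For \(\alpha =5\) the tail is still larger than \(N^{-\alpha }\) at \(N=100\) but far smaller at \(N=10^6\), which illustrates why such statements hold only for N sufficiently large.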

\(\bullet \textit{Step 3:}\) To get (180) we prove that for any natural number

one has

where the event \({\mathcal {X}}(M_{t_n}^t)\) is defined in (181). This can be recast without relabeling j as

Lemma 5.3

Let \(Z_1,\cdots ,Z_M\) be independent random variables with \({\mathbb {E}}[|Z_i|]\le CM^{-2}\) and \(|Z_i|\le C\) for any \(i\in \{1,\cdots ,M\}\). Then for any \(\alpha >0\), it holds that

where \(C_\alpha \) depends only on C and \(\alpha \).

Proof

We first split the random variables \(Z_i=Z_i^a+Z_i^b\) such that \(Z_i^a\) and \(Z_i^b\) are sequences of independent random variables with

This can be achieved by defining

and \(Z_i^b=Z_i-Z_i^a\). Here we choose \(\gamma \) such that \({\mathbb {P}}(|Z_i^a|>0)= M^{-1}\). Applying Markov’s inequality, one computes

This implies that \(\gamma \le CM^{-1}\).

For the sum of \(Z_i^b\) we get the trivial bound

Thus the lemma follows if we can show that

where \(C_\alpha \) has been changed.

Let

Since \(|Z_i|\le C\), one has

Then it follows that

Noticing that \(X_i\) are i.i.d. Bernoulli random variables with \({\mathbb {P}}(X_i=1)={\mathbb {P}}(|Z_i^a|>0)= M^{-1}\), we get

where \(a=\frac{C_\alpha }{C}\ln (M)\). Notice the decay property of the factorial

Thus one chooses M large enough and concludes (196), which proves the lemma. \(\square \)
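The factorial decay used in this proof can be verified exactly for small M. The sketch below computes the tail of a sum of M independent Bernoulli variables with success probability \(M^{-1}\) in rational arithmetic and compares it with \(1/a!\), which follows from the union bound \(\binom{M}{a}M^{-a}\le 1/a!\). The function name and the tested ranges are assumptions for illustration; the displayed estimates of the proof itself are not reproduced.

```python
from fractions import Fraction
from math import comb, factorial


def bernoulli_sum_tail(m: int, a: int) -> Fraction:
    """Exact P(X_1 + ... + X_m >= a) for i.i.d. Bernoulli(1/m) variables X_i."""
    p = Fraction(1, m)
    return sum(
        (comb(m, k) * p**k * (1 - p) ** (m - k) for k in range(a, m + 1)),
        Fraction(0),
    )


def factorial_bound(a: int) -> Fraction:
    """Union bound over a-element index sets: C(m, a) * m**(-a) <= 1 / a!."""
    return Fraction(1, factorial(a))
```

With a of order \(\ln (M)\), the bound \(1/a!\) decays faster than any inverse power of M, which is the type of decay needed to conclude.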

Using the lemma above, now we proceed to prove (193). Define

It follows that \(|Z_j|\) is bounded and

where we use the fact \(\Vert u_{t,t_{n-1}}^{a,N}\Vert _{\infty ,1}\le CN^{\frac{9}{2}\lambda _1}\) \((\Delta t\le t-t_{n-1}\le 2\Delta t)\) from (i) in Lemma 5.1, \(\Vert f_t^N\Vert _{\infty ,1}\le C_{f_0}\) and \(\Vert k_1^N\Vert _1\le N^{-\lambda _2}\) from (129). Using Lemma 5.3 with \(M=N^{\delta +\frac{\lambda _2}{2}-\frac{9}{4}\lambda _1}\) one obtains

which leads to (193) for \(M=N^{\delta +\frac{\lambda _2}{2}-\frac{9}{4}\lambda _1}\). It is obvious that

for any \(M\le N^{\delta +\frac{\lambda _2}{2}-\frac{9}{4}\lambda _1}\). Thus one concludes (193) holds for the case \(M\le N^{\delta +\frac{\lambda _2}{2}-\frac{9}{4}\lambda _1}\).

For the remaining M we note that

due to the fact that \(0<\lambda _1<\frac{2}{3}\lambda _2\). Thus we are left to prove (193) for the case

This can be done by Lemma 2.5, which we repeat below for easier reference:

Lemma 2.5 Let \(Z_1,\ldots ,Z_{M}\) be i.i.d. random variables with \({\mathbb {E}}[Z_i]=0,\) \({\mathbb {E}}[Z_i^2]\le g(M)\) and \(|Z_i|\le C\sqrt{M g(M)}\). Then for any \(\alpha >0\), the sample mean \({\bar{Z}}=\frac{1}{M}\sum _{i=1}^{M}Z_i\) satisfies

where \(C_\alpha \) depends only on C and \(\alpha \).

For any fixed \(t\in [t_n,t_{n+1}]\) we choose \(Z_j^t:=\frac{M}{N-1} k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)-\frac{M}{N-1} {\mathbb {E}}[k_1^N({\widetilde{x}}_1^t-{\widetilde{x}}_j^t)]\) and \(g(M):=CM N^{4\delta -2}\), where \(N^{\delta +\frac{\lambda _2}{2}-\frac{9}{4}\lambda _1}<M\le 4C_*N \log ^2 (N)N^{-3\lambda _1}\). Then following the same argument as in (63), the condition

is satisfied. We can also deduce that

Applying Lemma 2.5 we obtain at any fixed time \(t\in [t_n,t_{n+1}]\)

and similarly

It is left to control the difference

where M satisfies (202). This can be done by using Lemma 5.1. For any \(t\in [t_n,t_{n+1}]\), when \(1\le n\le M'\) we write \(a=({\widetilde{X}}_{t_{n-1}},{\widetilde{V}}_{t_{n-1}})=(X_{t_{n-1}},V_{t_{n-1}})\) and \(b=({{\overline{X}}}_{t_{n-1}},{{\overline{V}}}_{t_{n-1}})\). Then it follows that

where \(\rho _{t,t_{n-1}}^{a,1,N}(x_1)=\int _{{\mathbb {R}}^3} u_{t,t_{n-1}}^{a,1,N}(x_1,v_1)dv_1\). Here we have used the fact that when \(1\le n\le M'\)

by Lemma 5.1 since \(N^{-\lambda _1}\le t-t_{n-1}\le 2N^{-\lambda _1}\). When \(n=1\), since \(a=({\widetilde{X}}_{0},{\widetilde{V}}_{0})=(X_{0},V_{0})=({{\overline{X}}}_{0},{{\overline{V}}}_{0})=b\), one has

Collecting (204), (205) and (206) we get (193) for M satisfying (202), which finishes the proof of (193) for any M. Hence we conclude (180).

\(\bullet \textit{Step 4:}\) Now we prove (176). To see this, we split the summation \(\sum _{j\ne 1}^N\) into two parts: the part where \(j\in M _{t_n}^t\) and the part where \(j\in (M _{t_n}^t )^c\)

where \({\mathcal {X}}(M_{t_n}^t)\) is defined in (181) and

For the part in the event \({\mathcal {X}}((M_{t_n}^t)^c)\) where \(j\in (M _{t_n}^t )^c\), it follows from (179) that

Thus we have

Next we split the summation \(\sum _{j\in M _{t_n}^t }\) in the event \({\mathcal {X}}(M_{t_n}^t)\) (181) into two cases: the case where \( \text{ card } (M_{t_n}^t)\le M_*\) and the case where \( \text{ card } (M_{t_n}^t)> M_*\). Here \(M_*\) is defined in (177).

where in the last inequality we used (180).

According to (178), for any \(t\in [t_n,t_{n+1}]\) one has

which leads to

Therefore it follows from (211) that

Together with (210), it implies

Finally, since the particles are exchangeable, the same result holds when \(({\widetilde{x}}_1^t,{{\overline{x}}}_1^t)\) in (215) is replaced by \(({\widetilde{x}}_i^t,{{\overline{x}}}_i^t)\), \(i=2,\ldots , N\), which completes the proof of Lemma 3.3. \(\square \)
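The case distinction on \(\text{ card } (M_{t_n}^t)\) in Step 4 rests on an elementary splitting, sketched here in generic notation (the events A and B are placeholders introduced for illustration, not symbols of the paper). For any two events A and B,

$$\begin{aligned} {\mathbb {P}}(A)\le {\mathbb {P}}(A\cap B)+{\mathbb {P}}(B^c). \end{aligned}$$

Taking \(A={\mathcal {X}}(M_{t_n}^t)\) and \(B=\left\{ \text{ card } (M_{t_n}^t)\le M_*\right\} \), the first term on the right is the quantity bounded in (180) and the second is the one bounded in (178), so each contributes at most \(N^{-\alpha }\).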

Change history

10 December 2020

Open Access funding enabled and organized by Projekt DEAL.

References

Aarseth, S.J.: Gravitational N-body simulations: tools and algorithms. Cambridge University Press, Cambridge (2003)