Abstract

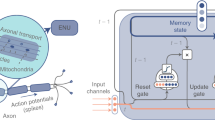

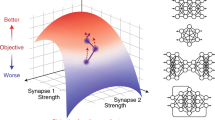

A key feature of intelligent behaviour is the ability to learn abstract strategies that scale and transfer to unfamiliar problems. An abstract strategy solves every sample from a problem class, no matter its representation or complexity, much like an algorithm in computer science. Neural networks are powerful models for processing sensory data, discovering hidden patterns and learning complex functions, but they struggle to learn such iterative, sequential or hierarchical algorithmic strategies. Extending neural networks with external memories has increased their capacity to learn such strategies, but these models remain sensitive to variations in the data, struggle to learn scalable and transferable solutions, and require massive amounts of training data. We present the neural Harvard computer (NHC), a memory-augmented network-based architecture that employs abstraction by decoupling algorithmic operations from data manipulations, realized by splitting the information flow into separate modules. This abstraction mechanism, combined with evolutionary training, enables the learning of robust and scalable algorithmic solutions. On a diverse set of 11 algorithms of varying complexity, we show that the NHC reliably learns algorithmic solutions with strong generalization and abstraction, generalizes perfectly and scales to arbitrary task configurations and complexities far beyond those seen during training, and is independent of the data representation and the task domain.
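The abstract credits evolutionary training as one ingredient of the architecture's robustness. As a rough illustration of what gradient-free training of this kind involves, the following is a minimal sketch of a generic evolution strategy with antithetic (mirrored) Gaussian sampling, in the style of Salimans et al. in the reference list. It assumes the network's weights are flattened into one real-valued vector scored by a scalar fitness to be maximised. It is not the NHC's actual training procedure, and `evolution_strategy`, `sigma`, `lr` and the toy quadratic fitness are hypothetical names chosen for illustration.

```python
import random


def evolution_strategy(fitness, dim, iterations=300, pop_size=20,
                       sigma=0.1, lr=0.05, seed=0):
    """Maximise `fitness` over a flat parameter vector with a basic
    evolution strategy using antithetic (mirrored) Gaussian perturbations.

    Hypothetical helper for illustration; not the NHC training code.
    """
    rng = random.Random(seed)
    theta = [0.0] * dim
    n_pairs = pop_size // 2
    for _ in range(iterations):
        grad = [0.0] * dim
        for _ in range(n_pairs):
            eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            plus = [t + sigma * e for t, e in zip(theta, eps)]
            minus = [t - sigma * e for t, e in zip(theta, eps)]
            # Mirrored pair: the fitness difference weights the shared noise.
            diff = fitness(plus) - fitness(minus)
            for i in range(dim):
                grad[i] += diff * eps[i]
        # Monte Carlo estimate of the gradient of the Gaussian-smoothed fitness.
        theta = [t + lr * g / (2.0 * sigma * n_pairs)
                 for t, g in zip(theta, grad)]
    return theta


# Toy usage: recover a hidden target vector from fitness evaluations only.
target = [0.5, -0.3, 1.0]


def toy_fitness(params):
    return -sum((p - t) ** 2 for p, t in zip(params, target))


best = evolution_strategy(toy_fitness, dim=len(target))
print([round(b, 3) for b in best])
```

Because the loop only ever queries fitness values, it applies unchanged when the fitness is non-differentiable, for example a count of exactly solved task instances.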

Data availability

Data are generated online during training, and the methods that generate them are provided in the source code.

Code availability

The source code of the NHC is available via Code Ocean at https://doi.org/10.24433/CO.6921369.v1 (ref. 52).

References

Taylor, M. E. & Stone, P. Transfer learning for reinforcement learning domains: a survey. J. Mach. Learn. Res. 10, 1633–1685 (2009).

Silver, D. L., Yang, Q. & Li, L. Lifelong machine learning systems: beyond learning algorithms. In 2013 AAAI Spring Symposium: Lifelong Machine Learning Vol. 13, 49–55 (AAAI, 2013).

Weiss, K., Khoshgoftaar, T. M. & Wang, D. A survey of transfer learning. J. Big Data 3, 9 (2016).

Parisi, G. I., Kemker, R., Part, J. L., Kanan, C. & Wermter, S. Continual lifelong learning with neural networks: a review. Neural Networks 113, 54–71 (2019).

Schmidhuber, J. Deep learning in neural networks: an overview. Neural Networks 61, 85–117 (2015).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Fawaz, H. I., Forestier, G., Weber, J., Idoumghar, L. & Muller, P.-A. Deep learning for time series classification: a review. Data Min. Knowl. Discov. 33, 917–963 (2019).

Botvinick, M. et al. Reinforcement learning, fast and slow. Trends Cogn. Sci. 23, 408–422 (2019).

Liu, L. et al. Deep learning for generic object detection: a survey. Int. J. Comput. Vis. 128, 261–318 (2020).

Tenenbaum, J. B., Kemp, C., Griffiths, T. L. & Goodman, N. D. How to grow a mind: statistics, structure, and abstraction. Science 331, 1279–1285 (2011).

Lake, B. M., Ullman, T. D., Tenenbaum, J. B. & Gershman, S. J. Building machines that learn and think like people. Behav. Brain Sci. 40, e253 (2017).

Konidaris, G. On the necessity of abstraction. Curr. Opin. Behav. Sci. 29, 1–7 (2019).

Cormen, T. H., Leiserson, C. E., Rivest, R. L. & Stein, C. Introduction to Algorithms (MIT Press, 2009).

Das, S., Giles, C. L. & Sun, G.-Z. Learning context-free grammars: capabilities and limitations of a recurrent neural network with an external stack memory. In Proc. 14th Annual Conference of the Cognitive Science Society 791–795 (The Cognitive Science Society, 1992).

Mozer, M. C. & Das, S. A connectionist symbol manipulator that discovers the structure of context-free languages. In Advances in Neural Information Processing Systems 863–870 (1993).

Zeng, Z., Goodman, R. M. & Smyth, P. Discrete recurrent neural networks for grammatical inference. IEEE Trans. Neural Netw. 5, 320–330 (1994).

Graves, A., Wayne, G. & Danihelka, I. Neural Turing machines. Preprint at https://arxiv.org/abs/1410.5401 (2014).

Joulin, A. & Mikolov, T. Inferring algorithmic patterns with stack-augmented recurrent nets. In Advances in Neural Information Processing Systems 190–198 (2015).

Graves, A. et al. Hybrid computing using a neural network with dynamic external memory. Nature 538, 471–476 (2016).

Neelakantan, A., Le, Q. V. & Sutskever, I. Neural programmer: inducing latent programs with gradient descent. In International Conference on Learning Representations (2016).

Kaiser, Ł. & Sutskever, I. Neural GPUs learn algorithms. In International Conference on Learning Representations (2016).

Zaremba, W., Mikolov, T., Joulin, A. & Fergus, R. Learning simple algorithms from examples. In International Conference on Machine Learning 421–429 (2016).

Greve, R. B., Jacobsen, E. J. & Risi, S. Evolving neural Turing machines for reward-based learning. In Proceedings of the Genetic and Evolutionary Computation Conference 2016 117–124 (ACM, 2016).

Trask, A. et al. Neural arithmetic logic units. In Advances in Neural Information Processing Systems 8035–8044 (2018).

Madsen, A. & Johansen, A. R. Neural arithmetic units. In International Conference on Learning Representations (2020).

Le, H., Tran, T. & Venkatesh, S. Neural stored-program memory. In International Conference on Learning Representations (2020).

Reed, S. & De Freitas, N. Neural programmer-interpreters. In International Conference on Learning Representations (2016).

Kurach, K., Andrychowicz, M. & Sutskever, I. Neural random-access machines. In International Conference on Learning Representations (2016).

Cai, J., Shin, R. & Song, D. Making neural programming architectures generalize via recursion. In International Conference on Learning Representations (2017).

Dong, H. et al. Neural logic machines. In International Conference on Learning Representations (2019).

Veličković, P., Ying, R., Padovano, M., Hadsell, R. & Blundell, C. Neural execution of graph algorithms. In International Conference on Learning Representations (2020).

Sukhbaatar, S., Szlam, A., Weston, J. & Fergus, R. End-to-end memory networks. In Advances in Neural Information Processing Systems 2440–2448 (2015).

Weston, J., Chopra, S. & Bordes, A. Memory networks. In International Conference on Learning Representations (2015).

Grefenstette, E., Hermann, K. M., Suleyman, M. & Blunsom, P. Learning to transduce with unbounded memory. In Advances in Neural Information Processing Systems 1828–1836 (2015).

Kumar, A. et al. Ask me anything: dynamic memory networks for natural language processing. In International Conference on Machine Learning 1378–1387 (2016).

Wayne, G. et al. Unsupervised predictive memory in a goal-directed agent. Preprint at https://arxiv.org/abs/1803.10760 (2018).

Merrild, J., Rasmussen, M. A. & Risi, S. HyperNTM: evolving scalable neural Turing machines through HyperNEAT. In International Conference on the Applications of Evolutionary Computation 750–766 (Springer, 2018).

Khadka, S., Chung, J. J. & Tumer, K. Neuroevolution of a modular memory-augmented neural network for deep memory problems. Evol. Comput. 27, 639–664 (2019).

Bengio, Y., Louradour, J., Collobert, R. & Weston, J. Curriculum learning. In International Conference on Machine Learning 41–48 (ACM, 2009).

Wierstra, D. et al. Natural evolution strategies. J. Mach. Learn. Res. 15, 949–980 (2014).

Tanneberg, D., Rueckert, E. & Peters, J. Learning algorithmic solutions to symbolic planning tasks with a neural computer architecture. Preprint at https://arxiv.org/abs/1911.00926 (2019).

Oudeyer, P.-Y. & Kaplan, F. What is intrinsic motivation? A typology of computational approaches. Front. Neurorobotics 1, 6 (2009).

Baldassarre, G. & Mirolli, M. Intrinsically motivated learning systems: an overview. In Intrinsically Motivated Learning in Natural and Artificial Systems 1–14 (Springer, 2013).

Mania, H., Guy, A. & Recht, B. Simple random search of static linear policies is competitive for reinforcement learning. In Advances in Neural Information Processing Systems 1803–1812 (2018).

Stanley, K. O. & Miikkulainen, R. Evolving neural networks through augmenting topologies. Evol. Comput. 10, 99–127 (2002).

Salimans, T., Ho, J., Chen, X., Sidor, S. & Sutskever, I. Evolution strategies as a scalable alternative to reinforcement learning. Preprint at https://arxiv.org/abs/1703.03864 (2017).

Krogh, A. & Hertz, J. A. A simple weight decay can improve generalization. In Advances in Neural Information Processing Systems 950–957 (1992).

Conti, E. et al. Improving exploration in evolution strategies for deep reinforcement learning via a population of novelty-seeking agents. In Advances in Neural Information Processing Systems 5027–5038 (2018).

Freund, Y. & Schapire, R. E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 55, 119–139 (1997).

Lin, L.-J. Self-improving reactive agents based on reinforcement learning, planning and teaching. Mach. Learn. 8, 293–321 (1992).

Tanneberg, D. The Neural Harvard Computer (Code Ocean, accessed 25 September 2020); https://doi.org/10.24433/CO.6921369.v1.

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement nos. 713010 (GOAL-Robots) and 640554 (SKILLS4ROBOTS), and from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under no. 430054590. This research was supported by NVIDIA. We thank K. O’Regan for inspiring discussions on defining algorithmic solutions.

Author information

Contributions

D.T. conceived the project, designed and implemented the model, conducted the experiments and analysis, and created the graphics. D.T., E.R. and J.P. wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks Greg Wayne and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Learning curves comparison.

Shown are the mean and the standard error of the fitness during learning over 15 runs. Note the log-scale of the x-axis. Solved X in the legend indicates the median solved level. The full NHC is the only model that successfully learns all algorithms reliably. More details on these evaluations are given in Table 1.
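The curves in this figure summarise 15 independent runs by their mean and standard error at each point of training, and the legend reports a median over runs. A minimal sketch of that aggregation follows, assuming each run is logged as an equally long list of fitness values and that the standard error is the sample standard deviation divided by the square root of the number of runs. The function names and the three-run example data are hypothetical, not taken from the paper.

```python
import statistics


def mean_and_standard_error(values):
    """Sample mean and standard error of the mean, s / sqrt(n)."""
    n = len(values)
    mean = statistics.fmean(values)
    # statistics.stdev uses the n - 1 (sample) denominator.
    sem = statistics.stdev(values) / n ** 0.5
    return mean, sem


def summarise_learning_curves(runs):
    """Per-step (mean, standard error) across equally long fitness curves."""
    return [mean_and_standard_error(step) for step in zip(*runs)]


# Hypothetical fitness traces from three runs, three logged steps each.
runs = [
    [0.0, 0.5, 1.0],
    [0.2, 0.7, 1.0],
    [0.1, 0.6, 1.0],
]
curve = summarise_learning_curves(runs)

# Hypothetical highest curriculum level solved by each run.
levels_solved = [12, 9, 15]
median_level = statistics.median(levels_solved)  # the "Solved X" statistic
```

The median is a natural summary for the solved level because a single run that stalls early, or one that runs unusually far, does not shift it the way it would shift a mean.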

Supplementary information

Supplementary Information including Technical Details.

About this article

Cite this article

Tanneberg, D., Rueckert, E. & Peters, J. Evolutionary training and abstraction yields algorithmic generalization of neural computers. Nat Mach Intell 2, 753–763 (2020). https://doi.org/10.1038/s42256-020-00255-1

DOI: https://doi.org/10.1038/s42256-020-00255-1