Abstract

The expectation functionals that arise in risk-neutral bi-level stochastic linear models with random lower-level right-hand side are known to be continuously differentiable if the underlying probability measure has a Lebesgue density. We show that the gradient may fail to be locally Lipschitz continuous under this assumption. Our main result provides sufficient conditions for Lipschitz continuity of the gradient of the expectation functional and paves the way for a second-order optimality condition in terms of generalized Hessians. Moreover, we study geometric properties of regions of strong stability and derive representation results, which may facilitate the computation of gradients.


1 Introduction

We study bi-level stochastic linear programs with random right-hand side in the lower-level constraint system. The sequential nature of bi-level programming motivates a setting where the leader decides nonanticipatorily, while the follower can observe the realization of the randomness. A discussion of the related literature is provided in the recent paper [1]. A central result of [1] states that evaluating the leader’s random outcome by taking the expectation leads to a continuously differentiable functional if the underlying probability measure is absolutely continuous w.r.t. the Lebesgue measure. This allows one to formulate first-order necessary optimality conditions for the risk-neutral model. The main result of the present work provides sufficient conditions, namely boundedness of the support and uniform boundedness of the Lebesgue density of the underlying probability measure, that ensure Lipschitz continuity of the gradient of the expectation functional. Moreover, we show that the assumptions of [1] are too weak to guarantee even local Lipschitz continuity of the gradient. By the main result, second-order necessary and sufficient optimality conditions can be formulated in terms of generalized Hessians. As part of the preparatory work for the proof of the main result, we show in particular that any region of strong stability in the sense of [1, Definition 4.1] is a finite union of polyhedral cones. This representation is of independent interest, as it may facilitate the calculation or estimation of gradients of the expectation functional and thus benefit gradient descent-based approaches. The paper is organized as follows: The model and related results of [1] are discussed in Sect. 2, while the main result and a variant with weaker assumptions are formulated in Sect. 3. Sections 4 and 5 are dedicated to geometric properties of regions of strong stability and of related projections that appear in the representation of the gradient. Results of these sections play an important role in the proof of the main result, which is given in Sect. 6. A second-order sufficient optimality condition is formulated in Sect. 7. The paper concludes with a brief discussion of the results and an outlook in Sect. 8.

2 Model and Notation

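Consider the optimistic formulation of a parametric bi-level linear program

\[ \min _x \left\lbrace c^\top x + \min _y \lbrace q^\top y \; :\; y \in \varPsi (x,z) \rbrace \; :\; x \in X \right\rbrace \qquad (1) \]

where \(z \in {\mathbb {R}}^s\) is a parameter and the data comprise a nonempty polyhedron \(X \subseteq {\mathbb {R}}^n\), vectors \(c \in {\mathbb {R}}^n\), \(q \in {\mathbb {R}}^m\) and the lower-level optimal solution set mapping \(\varPsi : {\mathbb {R}}^n \times {\mathbb {R}}^s \rightrightarrows {\mathbb {R}}^m\) defined by

\[ \varPsi (x,z) := \mathop {\mathrm {Argmin}}_{y \in {\mathbb {R}}^m} \lbrace d^\top y \; :\; Ay \le Tx + z \rbrace \]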
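with \(A \in {\mathbb {R}}^{s \times m}\), \(T \in {\mathbb {R}}^{s \times n}\) and \(d \in {\mathbb {R}}^m\). By [1, Lemma 2.1], the extended real-valued mapping \(f: {\mathbb {R}}^n \times {\mathbb {R}}^s \rightarrow \overline{{\mathbb {R}}} := {\mathbb {R}} \cup \lbrace \pm \infty \rbrace \) given by

\[ f(x,z) := c^\top x + \min _y \lbrace q^\top y \; :\; y \in \varPsi (x,z) \rbrace \]

is real-valued and Lipschitz continuous on the polyhedron

\[ F := \lbrace (x,z) \in {\mathbb {R}}^n \times {\mathbb {R}}^s \; :\; \exists y \in {\mathbb {R}}^m :\; Ay \le Tx + z \rbrace \]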
if \(\mathrm {dom} \; f\) is nonempty. Let \(Z: \Omega \rightarrow {\mathbb {R}}^s\) be a random vector on some probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\) and denote the induced Borel probability measure by \(\mu _Z = {\mathbb {P}} \circ Z^{-1} \in {\mathcal {P}}({\mathbb {R}}^s)\). Furthermore, we introduce the set

\[ F_Z := \lbrace x \in {\mathbb {R}}^n \; :\; (x, Z(\omega )) \in F \text{ for } {\mathbb {P}}\text{-almost all } \omega \in \Omega \rbrace . \]

If \(\mathrm {dom} \; f\) is nonempty and we impose the moment condition

\[ \mu _Z \in {\mathcal {M}}^1_s := \left\lbrace \mu \in {\mathcal {P}}({\mathbb {R}}^s) \; :\; \int _{{\mathbb {R}}^s} \Vert z \Vert \, \mu (dz) < \infty \right\rbrace , \]

the mapping \({\mathbb {F}}: F_Z \rightarrow L^1(\Omega , {\mathcal {F}}, {\mathbb {P}})\) given by \({\mathbb {F}}(x) := f(x,Z(\cdot ))\) is well defined and Lipschitz continuous by [1, Lemma 2.4]. In a situation where the parameter z in (1) is given by a realization of the random vector Z that the follower can observe while the leader has to decide x nonanticipatorily, the upper-level outcome can be modeled by \({\mathbb {F}}(x)\). If we assume \(X \subseteq F_Z\) and the leader’s decision is based on the expectation, we obtain the risk-neutral stochastic program

\[ \min _x \lbrace {\mathbb {E}}[{\mathbb {F}}(x)] \; :\; x \in X \rbrace . \qquad (2) \]
The following is shown in [1, Theorem 3.1, Corollary 4.7]:

Theorem 2.1

Assume \(\mathrm {dom} \; f \ne \emptyset \) and that \(\mu _Z \in {\mathcal {M}}^1_s\) is absolutely continuous w.r.t. the Lebesgue measure. Then, the mapping \({\mathcal {Q}}_{\mathbb {E}}: F_Z \rightarrow {\mathbb {R}}\) defined by \({\mathcal {Q}}_{\mathbb {E}}(x) = {\mathbb {E}}[{\mathbb {F}}(x)]\) is well defined, Lipschitz continuous and continuously differentiable at any \(x_0 \in \mathrm {int} \; F_Z\).

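We shall discuss some key ideas of the proof and introduce the relevant notation. Set

\[ {\hat{q}} := (q, -q, 0), \quad {\hat{d}} := (d, -d, 0), \quad {\hat{A}} := (A, -A, I_s). \]

Then f admits the representation

\[ f(x,z) = c^\top x + \min _{{\hat{y}}} \left\lbrace {\hat{q}}^\top {\hat{y}} \; :\; {\hat{y}} \in \mathop {\mathrm {Argmin}}_{y'} \lbrace {\hat{d}}^\top y' \; :\; {\hat{A}} y' = Tx + z, \; y' \ge 0 \rbrace \right\rbrace . \qquad (3) \]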
Remark 2.1

The subsequent analysis does not depend on the specific structure of \({\hat{q}}, {\hat{d}}\) and \({\hat{A}}\) and applies whenever (3) holds with some matrix \({\hat{A}}\) satisfying \(\mathrm {rank} \; {\hat{A}} = s\).

As the rows of \({\hat{A}}\) are linearly independent, the set

\[ {\mathcal {A}} := \lbrace {\hat{A}}_B \in {\mathbb {R}}^{s \times s} \; :\; {\hat{A}}_B \text{ is formed by } s \text{ columns of } {\hat{A}} \text{ and nonsingular} \rbrace \]

of lower-level base matrices is nonempty. A base matrix \({\hat{A}}_B \in {\mathcal {A}}\) is optimal for the lower-level problem for a given (x, z) if it is feasible, i.e., \({\hat{A}}_B^{-1}(Tx+z) \ge 0\), and the associated reduced cost vector \({\hat{d}}_N^\top - {\hat{d}}_B^\top {\hat{A}}_B^{-1} {\hat{A}}_N\) is nonnegative, where N denotes the indices of the columns of \({\hat{A}}\) that do not belong to B. Furthermore, for any optimal base matrix \({\hat{A}}_{B'} \in {\mathcal {A}}\), there exists a feasible base matrix \({\hat{A}}_B \in {\mathcal {A}}\) satisfying

Set

and assume \(\mathrm {dom} \; f \ne \emptyset \). Then

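holds for any \((x,z) \in F\). A key concept is the region of strong stability associated with a base matrix \({\hat{A}}_{B} \in {\mathcal {A}}^*\) given by the set

\[ {\mathcal {S}}({\hat{A}}_B) := \lbrace (x,z) \in F \; :\; {\hat{A}}_B^{-1}(Tx+z) \ge 0, \; c^\top x + {\hat{q}}_B^\top {\hat{A}}_B^{-1}(Tx+z) = f(x,z) \rbrace , \]

on which f coincides with the affine linear mapping

\[ (x,z) \mapsto c^\top x + {\hat{q}}_B^\top {\hat{A}}_B^{-1}(Tx+z). \]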
Under the assumptions of Theorem 2.1, we have

and the gradient of \({\mathcal {Q}}_{\mathbb {E}}\) admits the representation

where \(D := \lbrace {\hat{q}}_B^\top {\hat{A}}_B^{-1} T \; :\; {\hat{A}}_B \in {\mathcal {A}}^*\rbrace \), and the set-valued aggregation mappings \({\mathcal {W}}, \overline{{\mathcal {W}}}: {\mathbb {R}}^n \times D \rightrightarrows {\mathbb {R}}^s\) are given by

respectively (cf. [1, Theorem 4.3, Corollary 4.7]). Continuity of \(\nabla {\mathcal {Q}}_{{\mathbb {E}}}\) follows from the fact that outer semicontinuity of \(\overline{{\mathcal {W}}}\) and

imply continuity of the weight functional \(M_\varDelta : {\mathbb {R}}^n \rightarrow {\mathbb {R}}\),

for any \(\varDelta \in D\).
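For small instances, the objects introduced in this section can be explored by brute force. The following Python sketch is an illustration only, not a method from [1]. It enumerates the base matrices of a given \({\hat{A}}\) and keeps those with a nonnegative reduced cost vector. Reading \({\mathcal {A}}^*\) as exactly this set is an assumption, because its defining display is not reproduced above. The sketch then evaluates f as \(c^\top x\) plus the minimum of \({\hat{q}}_B^\top {\hat{A}}_B^{-1}(Tx+z)\) over the feasible bases in this set, and tests whether a single point lies in a region of strong stability. All function names and the tolerance tol are hypothetical. Enumerating all s-element column subsets is only viable for tiny matrices.

```python
import itertools
import numpy as np

def base_matrices(A_hat, tol=1e-12):
    """Column index lists B (|B| = s) for which A_hat[:, B] is nonsingular."""
    s, k = A_hat.shape
    return [list(B) for B in itertools.combinations(range(k), s)
            if abs(np.linalg.det(A_hat[:, list(B)])) > tol]

def optimal_bases(A_hat, d_hat, tol=1e-9):
    """Bases whose lower-level reduced cost vector d_N^T - d_B^T A_B^{-1} A_N is nonnegative."""
    k = A_hat.shape[1]
    result = []
    for B in base_matrices(A_hat):
        N = [j for j in range(k) if j not in B]
        dual = np.linalg.solve(A_hat[:, B].T, d_hat[B])    # A_B^{-T} d_B
        if np.all(d_hat[N] - dual @ A_hat[:, N] >= -tol):
            result.append(B)
    return result

def f_value(x, z, c, q_hat, d_hat, A_hat, T, tol=1e-9):
    """c^T x + min of q_B^T A_B^{-1}(Tx + z) over feasible bases with nonnegative reduced costs."""
    r = T @ x + z
    best, best_B = np.inf, None
    for B in optimal_bases(A_hat, d_hat, tol):
        y_B = np.linalg.solve(A_hat[:, B], r)
        if np.all(y_B >= -tol):                  # feasible, hence lower-level optimal
            val = q_hat[B] @ y_B
            if val < best - tol:
                best, best_B = val, B
    return c @ x + best, best_B                  # best = inf signals that no such base exists

def in_region(B, x, z, c, q_hat, d_hat, A_hat, T, tol=1e-9):
    """Pointwise membership test for the region of strong stability of the base B."""
    if B not in optimal_bases(A_hat, d_hat, tol):
        return False
    y_B = np.linalg.solve(A_hat[:, B], T @ x + z)
    fx, _ = f_value(x, z, c, q_hat, d_hat, A_hat, T, tol)
    return bool(np.all(y_B >= -tol)) and abs(c @ x + q_hat[B] @ y_B - fx) <= tol
```

Consider the data \({\hat{A}} = (1, 1)\), \({\hat{d}} = 0\), \({\hat{q}} = (1,2)^\top \), T = 1 and c = 0. At x = 1, z = 1, the value f(x,z) = 2 is attained by the first base only. At x = 1, z = -1, both bases attain f(x,z) = 0, so this point lies in both regions of strong stability. Such regions may therefore overlap on their boundaries.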

3 Main Result

We shall first show that the assumptions of Theorem 2.1 are too weak to guarantee even local Lipschitz continuity of \(\nabla {\mathcal {Q}}_{\mathbb {E}}\).

Example 3.1

Consider the case where

The feasible set of the lower-level problem is compact for all parameters in the polyhedral cone \(F = \lbrace (x,z) \in {\mathbb {R}} \times {\mathbb {R}}^2 \; :\; z_1 \ge 0, \; x + z_2 \ge 0 \rbrace \), which implies that \(\mathrm {dom} \; f\) coincides with F for any \({\hat{q}} \in {\mathbb {R}}^4\). As the lower-level objective function is constant, any feasible base matrix is optimal for the lower-level problem. Denote the elements of \({\mathcal {A}} = {\mathcal {A}}^*\) by \({\hat{A}}_1, \ldots , {\hat{A}}_6\), and let

be the set of parameters for which \({\hat{A}}_i\) is feasible for the lower-level problem. A straightforward calculation shows that we have

and

Set \({\hat{q}} = (0,0,-5,-3)^\top \), and let \({\hat{q}}_i\) denote the part of the upper-level objective function that is associated with \({\hat{A}}_i\). We have

and a straightforward calculation yields

Let the density \(\delta _Z: {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\) of Z be given by

and set \(c=0\). We have \(\mathrm {supp} \; \mu _Z \subset \overline{{\mathcal {W}}}(0,-6)\), and it is easy to see that

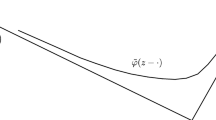

hold true whenever \(x \in ]0,1]\) (Fig. 1).

In Fig. 1, the darker square depicts the intersection of \(\mathrm {supp} \; \mu _Z\) and \(\overline{{\mathcal {W}}}(\frac{1}{4}, -2)\), while the lighter square is \(\overline{{\mathcal {W}}}(\frac{1}{4}, -6) \cap \mathrm {supp} \; \mu _Z\). The distance between the dotted lines is \(x = \frac{1}{4}\)

Thus, \(\mathrm {supp} \; \mu _Z \subset \overline{{\mathcal {W}}}(x,-6) \cup \overline{{\mathcal {W}}}(x,-2)\) holds for any \(x \in [0,1]\), and a simple calculation shows that

is not locally Lipschitz continuous at \(x=0\).

Our main result provides the following sufficient conditions for Lipschitz continuity of \(\nabla {\mathcal {Q}}_{\mathbb {E}}\):

Theorem 3.1

Assume \(\mathrm {dom} \; f \ne \emptyset \) and let \(\mu _Z\) be absolutely continuous w.r.t. the Lebesgue measure and have a bounded support as well as a uniformly bounded density. Then, \({\mathcal {Q}}_{\mathbb {E}}\) is differentiable on \(\mathrm {int} \; F_Z\) with Lipschitz continuous gradient.

Note that the density in Example 3.1 is unbounded. The proof of Theorem 3.1 requires some preliminary work and will be given in Sect. 6. If the support of \(\mu _Z\) is unbounded, we still obtain a weaker estimate for the gradients:

Theorem 3.2

Assume \(\mathrm {dom} \; f \ne \emptyset \) and let \(\mu _Z\) be absolutely continuous w.r.t. the Lebesgue measure and have a uniformly bounded density. Then, \({\mathcal {Q}}_{\mathbb {E}}\) is differentiable on \(\mathrm {int} \; F_Z\) and for any \(\epsilon > 0\) there exists a constant \(L(\epsilon ) > 0\) such that

holds for all \(x,x' \in \mathrm {int} \; F_Z\).

4 On the Geometry of Regions of Strong Stability

In view of (4) and (5), the gradient \(\nabla {\mathcal {Q}}_{{\mathbb {E}}}(x)\) is given by a weighted sum of the probabilities of the sets \({\mathcal {W}}(x,\varDelta )\) or \(\overline{{\mathcal {W}}}(x,\varDelta )\) for \(\varDelta \in D\). As these sets are defined using regions of strong stability, we shall first study properties of the sets \({\mathcal {S}}({\hat{A}}_B)\) with \({\hat{A}}_{B} \in {\mathcal {A}}^*\).

Remark 4.1

Example 3.1 shows that regions of strong stability are not convex in general.

Proposition 4.1

Assume \(\mathrm {dom} \; f \ne \emptyset \). Then

\[ {\mathcal {S}}({\hat{A}}_B) = {\mathcal {S}}({\hat{A}}_B) + \ker \; (T, I_s) \]
holds for any \({\hat{A}}_B \in {\mathcal {A}}^*\).

Proof

The above result immediately follows from the fact that the quantities involved in the definition of \({\mathcal {S}}({\hat{A}}_B)\) only depend on \(Tx + z\). \(\square \)

Corollary 4.1

Assume \(\mathrm {dom} \; f \ne \emptyset \) and \(n \ge 1\). Then no region of strong stability has an extreme point.

Proof

Let (x, z) be an arbitrary point of some region of strong stability \({\mathcal {S}}({\hat{A}}_B)\). As \(n \ge 1\), the n-dimensional kernel of \((T,I_s)\) contains some nonzero element \((x_0,z_0)\), and we have \((x-x_0, z-z_0), (x+x_0, z+z_0) \in {\mathcal {S}}({\hat{A}}_B)\) by Proposition 4.1. Thus, \((x,z) = \frac{1}{2}(x-x_0, z-z_0) + \frac{1}{2}(x+x_0, z+z_0)\) is not an extreme point of \({\mathcal {S}}({\hat{A}}_B)\). \(\square \)
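The kernel used in this proof can be written down explicitly. Since \((T, I_s)(x_0, -Tx_0) = Tx_0 - Tx_0 = 0\), the columns of the block matrix obtained by stacking \(I_n\) on top of \(-T\) form a basis of \(\ker (T, I_s)\), which therefore has dimension n. The short NumPy sketch below checks this numerically for an arbitrarily chosen T; the helper name kernel_basis is hypothetical. It also confirms that shifting (x, z) along the kernel leaves \(Tx + z\) unchanged. This is the only quantity entering the definition of a region of strong stability apart from \(c^\top x\), and the difference \(f(x,z) - c^\top x\) depends only on \(Tx + z\) as well.

```python
import numpy as np

def kernel_basis(T):
    """Basis of ker (T, I_s): the columns of [I_n; -T], i.e. the vectors (x0, -T x0)."""
    s, n = T.shape
    return np.vstack([np.eye(n), -T])

T = np.array([[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]])      # arbitrary example with s = 3, n = 2
n = T.shape[1]
K = kernel_basis(T)
M = np.hstack([T, np.eye(T.shape[0])])                   # the matrix (T, I_s)
print(np.allclose(M @ K, 0.0), np.linalg.matrix_rank(K))  # True 2

x, z = np.array([0.5, -1.0]), np.array([1.0, 0.0, 2.0])
x0, z0 = K[:n, 0], K[n:, 0]                              # first kernel element (x0, z0)
print(np.allclose(T @ (x + x0) + (z + z0), T @ x + z))   # True
```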

Our main result on the structure of \({\mathcal {S}}({\hat{A}}_B)\) is the following:

Theorem 4.1

Assume \(\mathrm {dom} \; f \ne \emptyset \), then any region of strong stability is a union of at most \((s+1)^{|{\mathcal {A}}^*|}\) polyhedral cones and at most \((s+1)^{|{\mathcal {A}}^*| - 1}\) of these cones have a nonempty interior. Moreover, the multifunction \({\mathcal {S}}: {\mathcal {A}}^*\rightrightarrows {\mathbb {R}}^n \times {\mathbb {R}}^s\) is polyhedral, i.e., \(\mathrm {gph} \; {\mathcal {S}}\) is a finite union of polyhedra.

Before turning to the proof of Theorem 4.1, we establish the following auxiliary result:

Lemma 4.1

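Let \({\mathcal {W}} := \lbrace \xi \in {\mathbb {R}}^k \; :\; V \xi < 0 \rbrace \) with \(V \in {\mathbb {R}}^{l \times k}\) be nonempty. Then

\[ \mathrm {cl} \; {\mathcal {W}} = \overline{{\mathcal {W}}} := \lbrace \xi \in {\mathbb {R}}^k \; :\; V \xi \le 0 \rbrace . \]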
Proof

The inclusion \(\mathrm {cl} \; {\mathcal {W}} \subseteq \overline{{\mathcal {W}}}\) is trivial. Moreover, for any \(\xi _0 \in {\mathcal {W}} = \mathrm {int} \; \overline{{\mathcal {W}}}\) and \(\xi \in \overline{{\mathcal {W}}}\) the line segment principle (cf. [6, Lemma 2.1.6]) implies \([\xi _0, \xi ) \subseteq {\mathcal {W}}\) and thus \(\xi \in \mathrm {cl} \; {\mathcal {W}}\). \(\square \)
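For the polyhedral sets of Lemma 4.1, the key step can also be verified by a direct computation that does not rely on the general line segment principle. Take \(\xi _0 \in {\mathcal {W}}\), \(\xi \in \overline{{\mathcal {W}}}\) and \(t \in [0,1)\). Then every component of the right-hand side below is the sum of a negative and a nonpositive number.

```latex
V\bigl(\xi_0 + t(\xi - \xi_0)\bigr) = (1-t)\,V\xi_0 + t\,V\xi < 0
\quad\text{for all } t \in [0,1),
\qquad\text{hence}\qquad
\xi = \lim_{t \uparrow 1}\bigl(\xi_0 + t(\xi - \xi_0)\bigr) \in \mathrm{cl}\;\mathcal{W}.
```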

We are now ready to prove Theorem 4.1.

Proof

(Proof of Theorem 4.1) Denote the elements of the finite set \({\mathcal {A}}^*\) by \({\hat{A}}_1, \dots , {\hat{A}}_l\) and the associated parts of the objective function by \({\hat{q}}_1, \dots , {\hat{q}}_l\). Fix any index \(i \in \lbrace 1, \ldots , l \rbrace \); then for any \((x,z) \in F\) satisfying \({\hat{A}}_i^{-1}(Tx + z) \ge 0\), the constraint \(c^\top x + {\hat{q}}_i^\top {\hat{A}}_i^{-1}(Tx + z) = f(x,z)\) in the definition of \({\mathcal {S}}({\hat{A}}_i)\) can be reformulated as

Introducing the sets

with indices \(j =1, \ldots , l\) and \(k =1, \ldots , s\) and using the fact that \({\mathcal {S}}({\hat{A}}_i)\) is closed by the Lipschitz continuity of f, we obtain the representation

As \(\varTheta _i \; \cap \; \bigcap _{j=1,\ldots , l, \; \alpha _j = 0} \Gamma _{ij0}\) is convex and closed, while \(\bigcap _{j=1,\ldots , l, \; \alpha _j \ne 0} \Gamma _{ij\alpha _j}\) is convex and open, [6, Proposition 2.1.10] yields

The sets \(\varTheta _i \; \cap \; \bigcap _{j=1,\ldots , l, \; \alpha _j = 0} \Gamma _{ij0}\) are obviously polyhedral cones, and Lemma 4.1 implies

Moreover, for any \(\alpha _i \in \lbrace 1, \ldots , s \rbrace \) we have \(e_{\alpha _i}^\top {\hat{A}}_i^{-1} \ne 0\) and thus

The second part of the theorem is an immediate consequence of the finiteness of \({\mathcal {A}}^*\). \(\square \)

Corollary 4.2

Assume \(\mathrm {dom} \; f \ne \emptyset \). Then any region of strong stability is star-shaped with respect to the origin and contains the n-dimensional kernel of \((T,I_s)\).

Proof

Star-shapedness is an immediate consequence of Theorem 4.1: as a union of polyhedral cones, any region of strong stability contains the line segment from the origin to each of its points. The second statement directly follows from Proposition 4.1. \(\square \)

Two-stage stochastic programming can be understood as the special case of bi-level stochastic programming where the objectives of leader and follower coincide. In this case, any region of strong stability is a polyhedral cone and thus convex:

Proposition 4.2

Assume \(\mathrm {dom} \; f \ne \emptyset \) and \({\hat{q}} = \alpha {\hat{d}}\) for some \(\alpha > 0\). Then, any region of strong stability is a polyhedral cone.

Proof

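We shall use the notation of the proof of Theorem 4.1 and denote the part of \({\hat{d}}\) associated with \({\hat{A}}_i\) by \({\hat{d}}_i\). Fix any \((x,z) \in F\) and consider any base matrices \({\hat{A}}_i, {\hat{A}}_j \in {\mathcal {A}}^*\) that are feasible and thus optimal for the lower-level problem. As

\[ {\hat{q}}_i^\top {\hat{A}}_i^{-1}(Tx+z) = \alpha \, {\hat{d}}_i^\top {\hat{A}}_i^{-1}(Tx+z) = \alpha \, {\hat{d}}_j^\top {\hat{A}}_j^{-1}(Tx+z) = {\hat{q}}_j^\top {\hat{A}}_j^{-1}(Tx+z), \]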
both base matrices are also optimal with respect to the upper-level objective function. Thus, \({\mathcal {S}}({\hat{A}}_i)\) coincides with the polyhedral cone \(\varTheta _i\). \(\square \)

Remark 4.2

As \({\hat{d}} = (0,0,0,0)^\top \) holds in Example 3.1, whose regions of strong stability are not convex (Remark 4.1), we see that the assumption \({\hat{q}} = \alpha {\hat{d}}\) for some \(\alpha > 0\) in Proposition 4.2 cannot be replaced by the weaker condition that \(\lbrace {\hat{q}}, {\hat{d}} \rbrace \) is linearly dependent.

5 Properties of the Aggregation Mappings

We shall now study the aggregation mappings \({\mathcal {W}}\) and \(\overline{{\mathcal {W}}}\) defined in Sect. 2. The following result is the counterpart of Theorem 4.1:

Theorem 5.1

Assume \(\mathrm {dom} \; f \ne \emptyset \). Then the multifunction \(\overline{{\mathcal {W}}}\) is polyhedral. Moreover, \(\overline{{\mathcal {W}}}(x,\varDelta )\) is a finite union of polyhedra for any \((x, \varDelta ) \in {\mathbb {R}}^n \times D\).

The proof of Theorem 5.1 will be based on the following auxiliary result:

Lemma 5.1

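Let \(C_1, \ldots , C_l \subseteq {\mathbb {R}}^k\) be closed and convex. Then,

\[ \mathrm {cl} \; \mathrm {int} \bigcup _{i=1}^{l} C_i = \bigcup _{i \in \lbrace 1, \ldots , l \rbrace :\; \mathrm {int} \; C_i \ne \emptyset } C_i . \]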
Proof

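As the sets \(C_1, \ldots , C_l\) are closed and convex and the union of the interiors is contained in the interior of the union, we have

\[ \mathrm {cl} \; \mathrm {int} \bigcup _{i=1}^{l} C_i \supseteq \mathrm {cl} \bigcup _{i=1}^{l} \mathrm {int} \; C_i = \bigcup _{i=1}^{l} \mathrm {cl} \; \mathrm {int} \; C_i = \bigcup _{i \in \lbrace 1, \ldots , l \rbrace :\; \mathrm {int} \; C_i \ne \emptyset } C_i , \]

where the first equality is due to the fact that the closure of a finite union equals the union of the closures and the second equality is a direct consequence of the line segment principle. Thus,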

For the reverse inclusion, suppose that there is some

By definition, there are sequences \(\lbrace x_n \rbrace _{n \in {\mathbb {N}}} \subset {\mathbb {R}}^k\) and \(\lbrace \epsilon _n \rbrace _{n \in {\mathbb {N}}} \subset {\mathbb {R}}_{>0}\) satisfying \(x_n \rightarrow x\) and \(B_{\epsilon _n}(x_n) \subseteq \bigcup _{i=1, \ldots , l} C_i\) for all \(n \in {\mathbb {N}}\). As \(\bigcup _{i=1, \ldots , l: \; \mathrm {int} \; C_i \ne \emptyset } C_i\) is closed, there exists some \(N \in {\mathbb {N}}\) such that \(x_n \notin \bigcup _{i=1, \ldots , l: \; \mathrm {int} \; C_i \ne \emptyset } C_i\) for all \(n \ge N\). Together with the previous considerations, the strong separation theorem (cf. [9, Theorem 11.4]) yields the existence of some \(\delta _N \in (0,\epsilon _N]\) such that

As any \(C_i\) with \(\mathrm {int} \; C_i = \emptyset \) is contained in an affine subspace of dimension strictly smaller than k (cf. [2, Section 2.5.2]), we obtain the contradiction

Thus,

which completes the proof. \(\square \)
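A simple example in \({\mathbb {R}}^2\) shows why the sets with empty interior drop out in Lemma 5.1. Take the square \(C_1 = [0,1]^2\) and the segment \(C_2 = [1,2] \times \lbrace 0 \rbrace \), so that \(\mathrm {int} \; C_2 = \emptyset \). No point of \(C_1 \cup C_2\) on the boundary of the square, and no point of the segment, is an interior point of the union, and hence

```latex
\mathrm{int}\,(C_1 \cup C_2) = (0,1)^2,
\qquad
\mathrm{cl}\;\mathrm{int}\,(C_1 \cup C_2) = [0,1]^2 = C_1
= \bigcup_{i \in \{1,2\}:\; \mathrm{int}\, C_i \neq \emptyset} C_i .
```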

Corollary 5.1

Let \(C \subseteq {\mathbb {R}}^k\) be a finite union of polyhedra (polyhedral cones). Then, \(\mathrm {cl} \; \mathrm {int} \; C\) is a finite union of polyhedra (polyhedral cones).

Proof

The above statement is an immediate consequence of Lemma 5.1. \(\square \)

Proof

(Proof of Theorem 5.1) As D is finite, it is sufficient to consider the multifunctions \(\overline{{\mathcal {W}}}(\cdot , \varDelta ): {\mathbb {R}}^n \rightrightarrows {\mathbb {R}}^s\) for fixed \(\varDelta \in D\). We have

which is a finite union of polyhedra by Corollary 5.1. Similarly, \(\overline{{\mathcal {W}}}(x,\varDelta )\) admits the representation

By Theorem 4.1 and Corollary 5.1, the set

is the intersection of a finite union of polyhedral cones and the affine subspace \(\lbrace (x',z') \in {\mathbb {R}}^n \times {\mathbb {R}}^s \; :\; x' = x \rbrace \) and thus a finite union of polyhedra for any \(x \in {\mathbb {R}}^n\) and any \({\hat{A}}_B \in {\mathcal {A}}^*\). \(\square \)

The following result on \({\mathcal {W}}\) is a simple consequence of the fact that the constraint system describing a region of strong stability only imposes conditions on \((Tx+z)\).

Proposition 5.1

Assume \(\mathrm {dom} \; f \ne \emptyset \). Then

\[ {\mathcal {W}}(x',\varDelta ) = {\mathcal {W}}(x,\varDelta ) + T(x - x') \]
holds for any \(x,x' \in {\mathbb {R}}^n\) and \(\varDelta \in D\).

Proof

Fix any \(x, x' \in {\mathbb {R}}^n\), \(z \in {\mathbb {R}}^s\) and set \(z' = z + T(x-x')\). Then \(Tx + z = Tx' + z'\) and thus

\[ f(x,z) - c^\top x = f(x',z') - c^\top x' . \]
Similarly, for any \({\hat{A}}_B \in {\mathcal {A}}^*\), \((x,z) \in {\mathcal {S}}({\hat{A}}_B)\) holds if and only if

1. there exists some \(y \in {\mathbb {R}}^m\) such that \(Ay \le Tx + z = Tx' + z'\),

2. \({\hat{A}}_B^{-1}(Tx' + z') = {\hat{A}}_B^{-1}(Tx + z) \ge 0\) and

3. \({\hat{q}}_B^\top {\hat{A}}_B^{-1}(Tx' + z') = {\hat{q}}_B^\top {\hat{A}}_B^{-1}(Tx + z) = f(x,z) - c^\top x = f(x',z') - c^\top x',\)

i.e., if and only if \((x',z') \in {\mathcal {S}}({\hat{A}}_B)\). We conclude that

holds for any \(\varDelta \in D\), which completes the proof. \(\square \)

6 Proof of the Main Result

We are finally ready to prove Theorem 3.1 based on the results of Sects. 4 and 5 as well as the following two auxiliary results:

Lemma 6.1

Assume \(\mathrm {dom} \; f \ne \emptyset \), and let \(\mu _Z \in {\mathcal {P}}({\mathbb {R}}^s)\) be absolutely continuous w.r.t. the Lebesgue measure. Then

holds for any \(x\in {\mathbb {R}}^n\), \(\varDelta \in D\) and \(t \in {\mathbb {R}}^s\).

Proof

By the arguments used in the proof of [1, Lemma 4.2], we have

where \({\mathcal {N}}_x \subset {\mathbb {R}}^s\) is contained in a finite union of hyperplanes. Consequently,

and the above statement is a direct consequence of the fact that the Lebesgue measure of \({\mathcal {N}}_x\) equals zero. \(\square \)

Lemma 6.2

Assume \(\mathrm {dom} \; f \ne \emptyset \) and let \(\mu _Z\) be absolutely continuous w.r.t. the Lebesgue measure and have a bounded support as well as a uniformly bounded density. Then, the weight functional \(M_\varDelta \) is Lipschitz continuous on \(\mathrm {int} \; F_Z\) for any \(\varDelta \in D\).

Proof

By definition of \({\mathcal {W}}(x,\varDelta )\), Proposition 5.1 and Lemma 6.1,

holds for any fixed \(\varDelta \in D\). As both

are contained in

and there exists a finite upper bound \(\alpha \in {\mathbb {R}}\) for the Lebesgue density of \(\mu _Z\), we have

where \(\lambda ^s\) denotes the s-dimensional Lebesgue measure. By Theorem 5.1, the boundary of \(\overline{{\mathcal {W}}}(x,\varDelta )\) is contained in a finite union of lower-dimensional polyhedra. Let \({\mathbb {H}}_x\) denote a set of such polyhedra with minimal cardinality. It is a straightforward conclusion from the proofs of Theorem 4.1, Theorem 5.1 and Lemma 5.1 that the cardinality of \({\mathbb {H}}_x\) can be bounded by a constant \(K \in {\mathbb {N}}\) that does not depend on x. Moreover, as any \(H \in {\mathbb {H}}_x\) is contained in some hyperplane, the \((s-1)\)-dimensional Lebesgue measure of \(H \cap \mathrm {supp} \; \mu _Z\) is at most \(\mathrm {diam}(\mathrm {supp} \; \mu _Z)^{s-1}\). Thus,

by Cavalieri’s principle, which completes the proof. \(\square \)

Proof

(Proof of Theorem 3.1) Continuous differentiability on \(\mathrm {int} \; F_Z\) is a direct consequence of [1, Corollary 4.7]. Fix any \(x, x' \in \mathrm {int} \; F_Z\); then (4) and Lemma 6.2 yield

and thus the desired Lipschitz continuity. \(\square \)

Proof

(Proof of Theorem 3.2) Fix any \(\kappa > 0\). As \(\mu _Z\) is tight by [3, Theorem 1.3], there exists a compact set \(C(\kappa ) \subset {\mathbb {R}}^s\) such that \(\mu _Z[{\mathbb {R}}^s \setminus C(\kappa )] < \kappa \). Combining this with the estimate from the first part of the proof of Lemma 6.2 and using the same notation established therein, we see that

holds for any \(\varDelta \in D\). Thus,

We therefore have

and choosing \(\kappa = \frac{\epsilon }{2|D|}\) yields the desired estimate. \(\square \)

Remark 6.1

The constant \(L(\epsilon )\) derived in the proof of Theorem 3.2 depends on \(\epsilon \). If the support of \(\mu _Z\) is unbounded, we have \(L(\epsilon ) \rightarrow \infty \) as \(\epsilon \downarrow 0\).

7 A Sufficient Second-Order Optimality Condition

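Under the conditions of Theorem 3.1, \(\nabla {\mathcal {Q}}_{{\mathbb {E}}}\) is Lipschitz continuous on \(\mathrm {int} \; F_Z\) and thus differentiable almost everywhere on \(\mathrm {int} \; F_Z\) by Rademacher’s theorem. Let \({\mathcal {D}} \subseteq \mathrm {int} \; F_Z\) denote the set of points at which \(\nabla {\mathcal {Q}}_{{\mathbb {E}}}\) is differentiable. Then the generalized Hessian of \({\mathcal {Q}}_{\mathbb {E}}\) at some \(x \in \mathrm {int} \; F_Z\), i.e., Clarke’s generalized Jacobian of \(\nabla {\mathcal {Q}}_{{\mathbb {E}}}\) at x, is the nonempty, convex and compact set

\[ \partial ^2 {\mathcal {Q}}_{\mathbb {E}}(x) := \mathrm {conv} \left\lbrace H \in {\mathbb {R}}^{n \times n} \; :\; \exists \lbrace x_k \rbrace _{k \in {\mathbb {N}}} \subseteq {\mathcal {D}} \text{ with } x_k \rightarrow x \text{ and } \nabla ^2 {\mathcal {Q}}_{\mathbb {E}}(x_k) \rightarrow H \right\rbrace . \]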
We have \(\partial ^2 {\mathcal {Q}}_{\mathbb {E}}(x) = \lbrace \nabla ^2 {\mathcal {Q}}_{\mathbb {E}}(x) \rbrace \) whenever \(\nabla {\mathcal {Q}}_{\mathbb {E}}\) is continuously differentiable on a neighborhood of x.
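The following one-dimensional example is chosen for illustration and does not come from the bi-level model. It shows that the generalized Hessian of a \(C^{1,1}\) function need not be a singleton. For \(g(x) = \frac{1}{2}\max \lbrace x, 0\rbrace ^2\) on \({\mathbb {R}}\), the derivative \(g'(x) = \max \lbrace x, 0 \rbrace \) is Lipschitz continuous with constant 1 and differentiable everywhere except at 0. Moreover,

```latex
g''(x) = \begin{cases} 0, & x < 0,\\ 1, & x > 0, \end{cases}
\qquad
\partial^2 g(0) = \mathrm{conv}\,\{0, 1\} = [0,1],
\qquad
\partial^2 g(x) = \{ g''(x) \} \ \text{for } x \neq 0 .
```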

Let the feasible set of (2) be given by \(X = \lbrace x \in {\mathbb {R}}^n \; :\; Bx \le b \rbrace \) with some \(B \in {\mathbb {R}}^{k \times n}\) and \(b \in {\mathbb {R}}^k\). The following second-order sufficient condition is based on [7]:

Theorem 7.1

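Assume \(\mathrm {dom} \; f \ne \emptyset \), \(X \subseteq \mathrm {int} \; F_Z\) and let \(\mu _Z\) be absolutely continuous w.r.t. the Lebesgue measure and have a bounded support as well as a uniformly bounded density. Moreover, let \(({\bar{x}},{\bar{u}})\) be a KKT point of (2), i.e.,

\[ \nabla {\mathcal {Q}}_{\mathbb {E}}({\bar{x}}) + B^\top {\bar{u}} = 0, \quad B{\bar{x}} \le b, \quad {\bar{u}} \ge 0, \quad {\bar{u}}^\top (B{\bar{x}} - b) = 0, \]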
and assume that any \(H \in \partial ^2 {\mathcal {Q}}_{\mathbb {E}}({\bar{x}})\) is positive definite on

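Then, \({\bar{x}}\) is a strict local minimizer of order 2 of (2), i.e., there exist a neighborhood U of \({\bar{x}}\) and a constant \(L > 0\) such that

\[ {\mathcal {Q}}_{\mathbb {E}}(x) \ge {\mathcal {Q}}_{\mathbb {E}}({\bar{x}}) + L \, \Vert x - {\bar{x}} \Vert ^2 \]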
holds for any \(x \in X \cap U\).

Proof

This is a straightforward conclusion from [7, Theorem 1]. \(\square \)
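Checking the assumption of Theorem 7.1 numerically requires access to \(\partial ^2 {\mathcal {Q}}_{\mathbb {E}}({\bar{x}})\), which is rarely available in closed form. The following Python sketch only covers a simplified situation and rests on three assumptions. First, the set in Theorem 7.1 is taken to be the usual critical cone. Second, strict complementarity holds (\({\bar{u}}_i > 0\) for every active constraint), so that this cone reduces to the subspace \(\lbrace v \; :\; B_I v = 0 \rbrace \), where \(B_I\) collects the active rows of B. Third, a finite list of matrices is supplied whose convex hull is, or approximates, the generalized Hessian. Positive definiteness on a subspace is preserved under convex combinations, so it then suffices to test the supplied matrices. The function names are hypothetical.

```python
import numpy as np

def null_space(M, tol=1e-10):
    """Orthonormal basis (as columns) of {v : M v = 0}, computed via the SVD."""
    n = M.shape[1]
    if M.shape[0] == 0:
        return np.eye(n)
    _, sv, Vt = np.linalg.svd(M)            # full_matrices=True, so Vt is n x n
    rank = int(np.sum(sv > tol))
    return Vt[rank:].T

def second_order_check(hessians, B, b, x_bar, u_bar, tol=1e-9):
    """Test positive definiteness of each H on {v : B_I v = 0}, assuming strict complementarity."""
    active = np.abs(B @ x_bar - b) <= tol
    if np.any(u_bar[active] <= tol):
        raise ValueError("this sketch assumes strict complementarity")
    basis = null_space(B[active])
    if basis.shape[1] == 0:
        return True                          # the critical subspace is {0}
    for H in hessians:
        H_sym = 0.5 * (H + H.T)              # guard against round-off asymmetry
        if np.linalg.eigvalsh(basis.T @ H_sym @ basis).min() <= tol:
            return False
    return True
```

With \(B = (1, 0)\), \(b = 0\), \({\bar{x}} = 0\) and \({\bar{u}} = 1\), the critical subspace is spanned by the second unit vector, so only the (2,2) entries of the supplied matrices matter.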

Remark 7.1

There are various other approaches to optimization problems with data in the class \(C^{1,1}\), which consists of differentiable functions with locally Lipschitz continuous gradients. For instance, second-order optimality conditions can also be formulated in terms of second-order Dini (cf. [5, Section 4.4]) or Riemann (cf. [8]) derivatives.

8 Conclusions

We have derived sufficient conditions for Lipschitz continuity of the gradient of the expectation functional arising from a bi-level stochastic linear program with random right-hand side in the lower-level constraint system. Invoking the structure of the upper-level constraints, we used this result to formulate a second-order sufficient optimality condition for the risk-neutral bi-level stochastic program in terms of the generalized Hessian of \({\mathcal {Q}}_{\mathbb {E}}\). Moreover, the main result on the geometry of regions of strong stability and its counterpart for the aggregation mapping \(\overline{{\mathcal {W}}}\) may facilitate the computation or sample-based estimation of gradients of the expectation functional, which may in turn benefit gradient descent-based methods. As any region of strong stability is a finite union of polyhedral cones, a promising approach is to employ spherical radial decomposition techniques to calculate \(\nabla {\mathcal {Q}}_{\mathbb {E}}\) (cf. [4, Chapter 4]). The details are beyond the scope of this paper and will be addressed in future research.
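To illustrate what a simple sample-based gradient estimate might look like, the following Python sketch draws realizations of Z and determines, for each sample, a lower-level optimal base that is best for the upper level. It uses brute-force enumeration of base matrices and is not the spherical radial decomposition approach mentioned above. The sketch assumes that, for \(\mu _Z\)-almost every z, the partial gradient of f with respect to x equals \(c + \varDelta ^\top \) with \(\varDelta = {\hat{q}}_B^\top {\hat{A}}_B^{-1} T\) for such a base. Averaging over samples then gives a Monte Carlo approximation of the weighted-sum representation of \(\nabla {\mathcal {Q}}_{\mathbb {E}}(x)\) from Sect. 2. The sampler argument and all function names are hypothetical. Two bases with different \(\varDelta \) can attain the same value only on a finite union of hyperplanes in z, a \(\mu _Z\)-null set under absolute continuity, so ties are broken arbitrarily.

```python
import itertools
import numpy as np

def optimistic_base(r, q_hat, d_hat, A_hat, tol=1e-9):
    """Among feasible bases with nonnegative reduced costs at r = Tx + z, return one minimizing q_B^T A_B^{-1} r."""
    s, k = A_hat.shape
    best, best_B = np.inf, None
    for B in map(list, itertools.combinations(range(k), s)):
        A_B = A_hat[:, B]
        if abs(np.linalg.det(A_B)) <= tol:
            continue                                    # not a base matrix
        N = [j for j in range(k) if j not in B]
        reduced = d_hat[N] - np.linalg.solve(A_B.T, d_hat[B]) @ A_hat[:, N]
        y_B = np.linalg.solve(A_B, r)
        if np.all(reduced >= -tol) and np.all(y_B >= -tol):
            val = q_hat[B] @ y_B
            if val < best:                              # ties broken by enumeration order
                best, best_B = val, B
    return best_B

def estimate_gradient(x, c, q_hat, d_hat, A_hat, T, sampler, n_samples=10_000, seed=0):
    """Monte Carlo estimate: c plus the sample mean of Delta^T, Delta = q_B^T A_B^{-1} T."""
    rng = np.random.default_rng(seed)
    total = np.zeros(T.shape[1])
    for _ in range(n_samples):
        z = sampler(rng)
        B = optimistic_base(T @ x + z, q_hat, d_hat, A_hat)
        if B is None:
            raise ValueError("no feasible base with nonnegative reduced costs for this sample")
        total += np.linalg.solve(A_hat[:, B].T, q_hat[B]) @ T
    return c + total / n_samples
```

Consider the one-dimensional test case \({\hat{A}} = (1,1)\), \({\hat{d}} = 0\), \({\hat{q}} = (1,2)^\top \), T = 3 and c = 0, with Z uniform on [0,1]. Here the first base is selected for every sample, and the estimate at x = 0.5 equals 3. This matches the exact gradient of \({\mathcal {Q}}_{\mathbb {E}}(x) = 3x + {\mathbb {E}}[Z]\).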

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

[1] Burtscheidt, J., Claus, M., Dempe, S.: Risk-averse models in bilevel stochastic linear programming. SIAM J. Optim. 30(1), 377–406 (2020)

[2] Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

[3] Billingsley, P.: Convergence of Probability Measures. Wiley Series in Probability and Statistics, 2nd edn. Wiley, New York (1999)

[4] Genz, A., Bretz, F.: Computation of Multivariate Normal and \(t\) Probabilities. Lecture Notes in Statistics, vol. 195. Springer, Heidelberg (2009)

[5] Ginchev, I., La Torre, D., Rocca, M.: \(C^{k,1}\) functions, characterization, Taylor’s formula and optimization: a survey. Real Anal. Exch. 35(2), 311–342 (2009/2010)

[6] Hiriart-Urruty, J.-B., Lemaréchal, C.: Fundamentals of Convex Analysis. Springer, Berlin (2001)

[7] Klatte, D., Tammer, K.: On second-order sufficient optimality conditions for \(C^{1,1}\)-optimization problems. Optimization 19(2), 169–179 (1988)

[8] La Torre, D., Rocca, M.: \(C^{1,1}\) functions and optimality conditions. J. Concr. Appl. Math. 3(1), 41–54 (2005)

[9] Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Acknowledgements

The author thanks the Deutsche Forschungsgemeinschaft for its support via the Collaborative Research Center TRR 154.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Additional information

Communicated by René Henrion.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Claus, M. A Second-Order Sufficient Optimality Condition for Risk-Neutral Bi-level Stochastic Linear Programs. J Optim Theory Appl 188, 243–259 (2021). https://doi.org/10.1007/s10957-020-01775-x


Keywords

- Bi-level stochastic linear programming

- Risk-neutral model

- Second-order optimality conditions

- Lipschitz gradients