Abstract

Till August 17, 2020, COVID-19 has caused 21.59 million confirmed cases in more than 227 countries and territories, and 26 naval ships. Chest CT is an effective way to detect COVID-19. This study proposed a novel deep learning model that can diagnose COVID-19 on chest CT more accurately and swiftly. Based on traditional deep convolutional neural network (DCNN) model, we proposed three improvements: (i) We introduced stochastic pooling to replace average pooling and max pooling; (ii) We combined conv layer with batch normalization layer and obtained the conv block (CB); (iii) We combined dropout layer with fully connected layer and obtained the fully connected block (FCB). Our algorithm achieved a sensitivity of 93.28% ± 1.50%, a specificity of 94.00% ± 1.56%, and an accuracy of 93.64% ± 1.42%, in identifying COVID-19 from normal subjects. We proved using stochastic pooling yields better performance than average pooling and max pooling. We compared different structure configurations and proved our 3CB + 2FCB yields the best performance. The proposed model is effective in detecting COVID-19 based on chest CT images.

Similar content being viewed by others

1 Introduction

The coronavirus pandemic is an ongoing global pandemic disease, which is also called COVID-19. World Health Organization (WHO) declared the COVID-19 as a public health crisis of global concern on 30/01/2020, and as a pandemic on 11/03/2020 [1]. Till August 17, 2020, COVID-19 has caused 21.59 million confirmed cases and 773.6 thousand death tolls.

Recommended preventive measures are composed of mouth covering when coughing, hand washing, social distancing, face masks in public, suspect isolation, etc. From the viewpoint of countries, lockdown, travel restriction, facility closure, workplace control, contact tracing, testing capacity increase are all effective preventive measures.

Reverse transcription polymerase chain reaction (RT-PCR) [2] and real-time RT-PCR [3] are one of the standard diagnosis methods from a nasopharyngeal swab. Chest computed tomography (CCT) is another effective diagnosis tool for COVID-19 diagnosis. Compared to polymerase chain reaction (PCR), CCT is quicker and more sensitive [4]. The main biomarkers differentiating COVID-19 from healthy people are the asymmetric peripheral ground-glass opacities (GGOs) without pleural effusions [5]. Manual interpretation by radiologists is tedious and easy to be influenced by fatigue, emotion, and other factors. A smart diagnosis system via computer vision and artificial intelligence can benefit patients, radiologists, and hospitals.

Traditional artificial intelligence (AI) and modern deep learning (DL) methods have achieved excellent results in analyzing medical images, e.g., Lu [6] proposed a radial-basis-function neural network (RBFNN) to detect pathological brains. Yang [7] presented a kernel-based extreme learning classifier (K-ELM) to create a novel pathological brain detection system. Their method was robust and effective. Lu [8] proposed a novel extreme learning machine trained by the bat algorithm (ELM-BA) approach. Jiang [9] used a six-layer convolutional neural network to recognize sign language fingerspelling. Their method is abbreviated as 6L-CNN-F, here F means fingerspelling. Szegedy et al. [10] presented the GoogLeNet. Yu and Wang [11] suggested the use of ResNet18 for mammogram abnormality detection. Two references provide systematic reviews of machine learning techniques in detecting COVID-19 [12, 13]. Besides, there are some successful applications in other industrial and academic fields using traditional AIs [14,15,16,17,18].

This study used deep convolutional neural network (DCNN) as the backbone. To make our algorithm effective in detecting COVID-19, we proposed three improvements, (i) We introduced stochastic pooling (SP) to replace traditional average pooling and maximum pooling methods; (ii) We created conv block (CB) by combining conv layer and batch normalization, and (iii) we created fully connected block (FCB) by combining dropout layer and fully connected layer.

Those three improvements help enrich the performance of the basic DCNN, and we name our proposed algorithm as “5-layer DCNN with stochastic pooling for COVID-19 (5L-DCNN-SP-C) algorithm.” Sections 2, 3, 4, and 5 present the dataset, methodology, results, and conclusions, respectively.

2 Dataset

We enrolled 142 COVID-19 subjects and 142 healthy controls (HCs) from local hospitals. CCT was performed on all subjects, and three-dimensional volumetric images were obtained. Slice level selection (SLS) method was used: For COVID-19 pneumonia patients, the slice showing the largest size and number of lesions was selected. For healthy controls, any level of the image can be selected. Use this slice level selection method, we extract 320 images (resolution: 1024 × 1024) from both COVID-19 patients and HC subjects, respectively. The demographics of our image set are offered in Table 1. Table 2 shows the abbreviation list for easy reading.

3 Methodology

3.1 Preprocessing

Let us set the original CCT image set to be \({S}_{1}\), which is composed of n CCT images as

First, we compress the three-channel color image to gray image, and get the grayscale image set \({S}_{2}\) as

Second, the histogram stretching (HS) method was firstly employed to increase the image’s contrast. For i-th image \({s}_{2}(i)\), the new histogram stretched image \({s}_{3}(i)\) was obtained as

where \(1 \le \alpha \le 1024,1 \le \beta \le 1024\). Here, \(\left( {\alpha , \beta } \right)\) means coordinates of pixel of the image \(s_{2} \left( i \right)\), and \(\varepsilon_{2}^{\min } \left( i \right)\) means the minimum value of CCT image \(s_{2} \left( i \right)\). \(\varepsilon_{2}^{\max } \left( i \right)\) means the maximum value of image \(s_{2} \left( i \right)\).

In all, we get the histogram stretched dataset \(S_{3}\) as

Third, we crop the images to remove the texts at the margin area, and the checkup bed at the bottom area. Thus, we get the cropped dataset \(S_{4}\) as

where C represents crop operation, and the parameter vector \([\mathrm{top},\mathrm{bottom},\mathrm{left},\mathrm{right}]\) means to the range to be removed from top, bottom, left, and right directions. In our study, we set \(\mathrm{top}=\mathrm{bottom}=\mathrm{left}=\mathrm{right}=150\).

Fourth, we downsampled the image \(s_{4} \left( i \right)\) to size of \(\left[ {\varpi ,\varpi } \right]\), and we now get the resized image set \(S_{5}\) as

where  means downsampling operation. \(\varpi = 128\) in this study. Figure 1 shows the above four preprocessing steps.

means downsampling operation. \(\varpi = 128\) in this study. Figure 1 shows the above four preprocessing steps.

Table 3 compares the size and storage per image at every preprocessing step. We can see here after the five-step preprocessing procedure, each image will only cost about 0.52% of its original storage. The byte compression ratio (BCR) was calculated as: \({\text{BCR}} = {\text{byte}}\left( {s_{5} } \right) \div {\text{byte}}\left( {s_{1} } \right) = 65,536 \div 12,582,912 = 0.52\%\).

Figure 2 shows two samples of our collected and preprocessed dataset \({S}_{5}\), from which we can clearly observe the clinical biomarkers of COVID-19. Cui et al. [19] reported the preliminary CT findings of COVID-19 in their publication. Tuncer et al. [20] used chest CT images, and then developed a local binary pattern and iterative ReliefF algorithm. There are more open publications that show it is feasible to develop effective AI systems based on CCT images.

3.2 Basics of DCNN

Deep convolutional neural network (DCNN) is a king of new artificial neural network. Its main feature is to use multiple layers to build a deep neural network. Generally, DCNN is composed of conv layers (CLs), pooling layers (PLs), and fully connected layers (FCLs) [21,22,23,24,25]. Figure 3 presents a simplistic instance consisting of 2 CLs, 2 PLs, and 2 FCLs. On the right part of Fig. 3, The blue rectangle means FCL block, and red rectangle means the softmax function. DCNNs could reach better performances than old-dated AI methods, because they learn the feature from the data during the training procedure. There is no need to consume much time in feature engineering.

The essential operation in DCNN is convolution. The CL performed 2D convolution along the width and height directions. Note that the weights in CNN are initialized with random, and then learnt from data itself by network training. Figure 4 illustrates the pipeline of input feature maps passing across a CL. Assume there is an input matrix, \(J\) kernels (\(K_{1} ,K_{2} , \ldots ,K_{j} , \ldots ,K_{J}\)), and an output O, with theirs sizes \({\mathbb{S}}\) defined as

where \(\left( {W, X, C} \right)\) represent the size of height, width, and channels of the matrix, respectively. Subscript I, K, and O represent input, kernel, and output, respectively. J denotes total number of filters. Note that

which means the channel of input \(C_{I}\) should equal the channel of kernel \(C_{K}\), and the channel of output \(C_{O}\) should equal the number of filters J.

Assume those filters move with padding of B and stride of A, we can get their relationship by simple math as:

where \( {\lfloor }.{ \rfloor }\) represents the floor function. Afterward, CL’s outputs are hurled into a nonlinear activation function (NLAF) \(\sigma\), that usually selects the rectified linear unit (ReLU) function.

ReLU is preferred to traditional NLAFs such as hyperbolic tangent (HT) and sigmoid (SM) function

3.3 Improvement 1: Use SP to replace MP and AP

The activation maps (AMs) after each block within DCNN are usually too large, i.e., the size of their width, length, and channels are too large to handle, which will cause (i) overfitting of the training and (ii) large computational costs.

Pooling layer (PL) is a form of nonlinear downsampling (NLDS) method to solve above issue. Further, PL can provide invariance-to-translation property to the AMs. For a \(2 \times 2\) region, suppose the pixels within the region \(\overline{\varphi }\) are

The average pooling (AP) calculates the mean value in the region \(\overline{\varphi }\). Assume the output value after NLDS is z, we can have

where \(\left| {\overline{\varphi }} \right|\) means the number of elements of region \(\overline{\varphi }\). Here, \(\left| {\overline{\varphi }} \right| = 4\) if we use a \(2 \times 2{ }\) NLDS pooling. Using Fig. 5 as an example, and assuming the region \(\dot{\varphi }\) at 2nd row 1st column of the input AM, I is chosen, i.e., \(\dot{\varphi } = I\left( {row = 2,col = 1} \right)\); thus, we have \(\begin{array}{*{20}c} {z_{{\dot{\varphi }}}^{AP} = {\text{average}}\left( {\dot{\varphi }} \right) = \left( {4 + 4 + 3 + 9} \right) \div 4 = 20 \div 4 = 5} \\ \end{array}\).

The max pooling (MP) operates on the region \({\overline{{\varphi }}}\) and selects the max value. Note that both AP and MP work on every slice separately.

In Fig. 5, \(\begin{array}{*{20}c} {z_{{\dot{\varphi }}}^{MP} = \max \left( {\dot{\varphi }} \right) = \max \left( {4 + 4 + 3 + 9} \right) = 9} \\ \end{array}\).

In practice, scholars observed that the AP did not work well, because all pixels in the region \(\overline{\varphi }\) are within the arguments of the NLDS function; hence, it could down-weight (DW) intense activation owing to numerous near-zero pixels. For example, in our region \(\dot{\varphi }\), the strongest value \(9\mathop{\to}\limits^{{\rm DM}}5\). On the other hand, MP deciphers above DW problem; however, it simply overfits the training set and causes the lack-of-generalization (LoG) problem.

The stochastic pooling (SP) was introduced to conquer the DW, overfitting, and LoG problems caused by MP and AP. Instead of computing the average or the max, the output of the SP \(z^{{{\text{SP}}}}\) is calculated via sampling from a multinomial distribution generated from the activations of each region \(\overline{\varphi }\). Three steps of SP are depicted below:

-

(1)

Estimate the probability \(\theta_{i,j} \in {\Theta }\) of each entry \(\left\{ {\varphi_{i,j} ,i,j = 1,2} \right\}\) within the region \(\overline{\varphi }\).

$$ \theta_{i,j} = \frac{{\varphi_{i,j} }}{{{\text{sum}}\left( {\overline{\varphi }} \right)}},\quad i,j = 1,2 $$(17a)$$ \mathop \sum \limits_{i,j = 1}^{2} \theta_{i,j} = 1 $$(17b)in which, (i, j) is the element index of region \(\overline{\varphi }\). In matrix format, equation (17a) can be rewritten as

$$ \Theta = \overline{\varphi }/\sum \left( {\overline{\varphi }} \right). $$(18) -

(2)

Select a location \(\beta\) within \(\overline{\varphi }\) in accordance with the probability \({ }\left\{ {\theta_{i,j} } \right\}\).

$$ \beta \sim {\text{Prob}}\left( {\theta_{1,1} ,\theta_{1,2} , \theta_{2,1} , \theta_{2,2} } \right). $$(19) -

(3)

The output is the value at location \(\beta\).

$$ z_{{\overline{\varphi }}}^{{{\text{SP}}}} = \varphi_{\beta } $$(20)

Use the region \(\dot{\varphi }\) in Fig. 5 as example, SP first calculates the probability map (PM),

Using the probability map, we randomly select the position \(\beta = \left( {2,2} \right)\) associates with probability of \(\theta_{2,2} \left( {\dot{\varphi }} \right) = 0.45\). Thus, the SP output of \(\dot{\varphi }\) is \(z_{{\dot{\varphi }}}^{{\rm SP}} = \dot{\varphi }_{\beta } = \dot{\varphi }_{{\left( {2,2} \right)}} = 9\). In all, SP uses non-maximal activations from the region \(\overline{\varphi }\), instead of outputting the greatest value.

3.4 Improvement 2: batch normalization transform

The motivation of batch normalization transform (BNT) is the so-called internal covariant shift (ICS), which means the effect of randomness of the distribution of inputs to internal DCNN layers during training. The phenomenon of ICS will worsen the DCNN’s performance.

This study introduced BNT to normalize those internal layer’s inputs \(A = \left\{ {a_{i} } \right\}\) over every mini-batch (suppose its size is m), in order to guarantee the batch normalized output \(B = \left\{ {b_{i} } \right\}\) have a uniform distribution. Mathematically, BNT is to learn a function from

The empirical mean \(\mu\) and empirical variance \(\sigma^{2}\) over training set \({\text{A}}\) can be calculated as

The input \(a_{i} \in A\) was first normalized to

where \(\Delta\) in denominator in Eq. (25) is to enhance the numerical stability. The value of \(\Delta\) is a small constant. \(\Delta = 10^{ - 5}\) in this study. Now  has zero-mean and unit-variance characteristics. In order to have a more expressive deep neural network [26], a transformation is usually carried out as

has zero-mean and unit-variance characteristics. In order to have a more expressive deep neural network [26], a transformation is usually carried out as

where the parameters \({\mathfrak{C}}\) and \({\mathfrak{D}}\) are two learnable parameters during training. The transformed output \(b_{i} \in B\) is then passed to the next layer and the normalized  remains internal to current layer.

remains internal to current layer.

In the inference stage, we do not have mini-batch anymore. So instead of calculating empirical mean and empirical variance, we will calculate population mean \(\underline{\mu }\) and population variance \(\underline{{\sigma^{2} }}\), and we have the output \(\underline{{b_{i} }}\) at inference stage as

We proposed to use convolution block (CB) to be one of the building blocks of our DCNN. The CB consists of one conv layer and one batch normalization layer.

3.5 Improvement 3: fully connected block

In traditional DCNN, the fully connected layer (FCL) serves the role of classifier. We plan to replace FCL with fully connected block (FCB), which will include one dropout layer (DOL) and one FCL layer. Srivastava et al. [27] proposed the concept of dropout neurons (DON) and DOL by randomly drop neurons and set to zero their neighboring weights \(s\) from the DCNN during training.

The neuron’s incoming and outgoing connections are freezing, after it is dropped out. Figure 6 illustrates the illustration of neurons in DOL. The selections of dropout are random with a retention probability (\(\theta_{rp}\)).

where \(\theta_{rp} = 0.5\), and \(\tilde{s} \) means the weights of dropped out neurons.

During inference, we run the entire DCNN without dropout, but the weights of FCLs of FCBs are downscaled (viz., multiplied) by \(\theta_{rp}\).

Figure 7 shows a toy DCNN example with four FCL layers. Suppose we have \(N\left( k \right)\) neurons at k-th layer, and assume \(N\left( 1 \right) = 12\), \(N\left( 2 \right) = 10\), \(N\left( 3 \right) = 8\), \(N\left( 4 \right) = 4\). Thus, we have in total \(\mathop \sum \nolimits_{k = 1}^{4} N\left( k \right) = 34\) nodes. Suppose we do not consider incoming and outgoing weights, and do not consider the number of biases, the size of learnable weights \(S^{b} \left( {i,j} \right)\) as number of weights between layer \(i\) and layer \(j\) before dropout, roughly calculating, can be written as \(S^{b} \left( {1,2} \right) = 12 \times 10 = 120\), \(S^{b} \left( {2,3} \right) = 10 \times 8 = 80\), \(S^{b} \left( {3,4} \right) = 8 \times 4 = 32\). In total, we have the total number of learnable weights before dropout as \(S^{b} = \mathop \sum \nolimits_{k = 1}^{3} S^{b} \left( {k,k + 1} \right) = 232\). Using \(\theta_{rp} = 0.5\), the size of learnable weights after dropout between layer \(i\) and layer \(j\) is symbolized as \({S}^{a}(i,j)\), and we can calculate the total number of learnable weights as \( S^{a} = \mathop\sum\nolimits_{k = 1}^{3} S^{a} \left( {k,k + 1} \right) = S^{a} \left( {1,2} \right) + S^{a} \left( {2,3} \right) + S^{a} \left( {3,4} \right) = 30 + 20 + 8 = 58 \).

The compression ratio of learnable weights (CRLW), roughly, can be calculated by \(58/232 = 0.25\), which is the squared value of retention probability \(\theta_{rp}\).

where \(S^{a}\) and \(S^{b}\) means the number of learnable weights after and before dropout, respectively.

3.6 Proposed DCNN and its Implementation

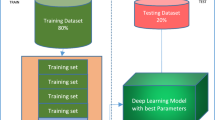

We create a new five-layer DCNN with stochastic pooling for COVID-19 detection (5L-DCNN-SP-C) with three CBs and two FCBs. The structure of proposed 5L-DCNN-SP-C is shown in Fig. 8, where SP is added after each activation map. The reason why set three CBs and two FCBs are by manual trial-and-error method. In the experiment, we will compare this setting (3 CBs + 2 FCBs) against other setting.

The hyperparameters of each layer/block of proposed 5L-DCNN-SP-C are listed in Table 4, where \(\left( {\alpha \beta \times \beta /\gamma } \right)\) means \(\alpha\) filters with size of \(\beta \times \beta\), followed by pooling layer with pooling size of \(\gamma\). Meanwhile, W and B represent the size of weight matrix and bias vector, respectively. The last column in Table 4 shows the activation map (AM).

Ten runs of tenfold cross-validation were employed. Suppose confusion matrix \({\mathbb{C}}\) is defined as

where \(\left( {c_{11} ,c_{12} ,c_{21} ,c_{22} } \right)\) represent TP, FN, FP, and TN, respectively. k is the index of trial (in each trial, onefold was used as test, and all the other folds were used as training), and r is the index of run.

Note that \({\mathbb{C}}\) will be calculated based on each test fold, and summarized across all 10 trials. Then, we get the

Now we can calculate six indicators \(\vec{\eta }\left( r \right)\) based on the confusion matrix over r-th run \({\mathbb{C}}\left( r \right)\).

where \(\eta_{1}\) is sensitivity, \(\eta_{2}\) is specificity, \(\eta_{3}\) is precision, and \(\eta_{4}\) is accuracy. Ignoring variable r, we have:

\(\eta_{5}\) is F1 score.

and \(\eta_{6}\) is Matthews correlation coefficient (MCC)

The mean and standard deviation (SD) of all six measures \(\vec{\eta }\) will be calculated over all ten runs.

where \(1 \le m \le 6\) represents the index of measures.

4 Experiments, results, and discussion

4.1 Pooling method comparison

The results of 10 runs \(\vec{\eta }\) of SP were compared against AP and MP. We compared three pooling methods on test set. The results of all three pooling methods are listed in Table 5. For AP, it obtains \(\eta_{1} = 91.22 \pm { }1.35\), \(\eta_{2} = 90.47 \pm { }1.27\), \(\eta_{3} = 90.55 \pm { }1.19\), \(\eta_{4} = 90.84 \pm { }1.05\), \(\eta_{5} = 90.88 \pm { }1.06\), and \(\eta_{6} = 81.70 \pm { }2.10\). The results of AP are the worst of all three pooling methods. MP obtains better results than AP. The six measures of MP are \(\eta_{1} = 92.38 \pm { }1.04\), \(\eta_{2} = 92.75 \pm { }0.92\), \(\eta_{3} = 92.73 \pm { }0.89\)., \(\eta_{4} = 92.56 \pm { }0.81\), \(\eta_{5} = 92.55 \pm { }0.82\), and \(\eta_{6} = 85.13 \pm { }1.61\). Finally, SP obtains the greatest performances on all six measures. The six measures of SP are as follows: \(\eta_{1} = 93.28 \pm { }1.50\), \(\eta_{2} = 94.00 \pm { }1.56\), \(\eta_{3} = 93.96 \pm { }1.54\), \(\eta_{4} = 93.64 \pm { }1.42\), \(\eta_{5} = 93.62 \pm { }1.42\), and \(\eta_{6} = 87.29 \pm { }2.83\). For the ease of clear view, Fig. 9 presents the error bar plot of comparison of all three pooling methods.

4.2 Structure comparison

We set the number of CBs as \({\upgamma }_{{{\text{CB}}}}\) and the number of FCB as \({\upgamma }_{{{\text{FCB}}}}\). We set \({\upgamma }_{{{\text{CB}}}} = 3\) and \({\upgamma }_{{{\text{FCB}}}} = 2\) by trial-and-error method. Suppose we all use SP, and we create five different structure configurations (SC) setting as in Table 6. The results of cognate performances on test set \(\vec{\eta }\) are shown in Table 7, where we can observe the SC-V performs the best results, which corresponds to our optimal SC setting: \({\upgamma }_{{{\text{CB}}}} = 3\) and \({\upgamma }_{{{\text{FCB}}}} = 2\)

4.3 Comparison to State-of-the-art approaches

We compare our method “5L-DCNN-SP-C” with other COVID-19 classification approaches: RBFNN [6], K-ELM [7], ELM-BA [8], 6L-CNN-F [9], GoogLeNet [10], ResNet-18 [11]. The results \(\vec{\eta }\) on ten runs over test set are presented in Table 8. It is easily observed that our proposed 5L-DCNN-SP-C smashes all the other six comparison baseline methods in all indicators. Particularly, 6L-CNN-F [9] also used convolutional neural network method, and they used more layers (6 layers) than layers used in our model (5 layers).

The reason why our five-layer model is better than that six-layer model [9] is threefold: (i) We choose SP to improve the performance of our deep learning model; (ii) We fine-tune the hyperparameters (such as \({\upgamma }_{{{\text{CB}}}}\), \({\upgamma }_{{{\text{FCB}}}}\), number of filters at each CB, number of neurons at each FCB); (iii) Our model was particularly designed for detecting COVID-19, while the 6L-CNN-F [9] was designed for fingerspelling recognition. In the future, we shall try to use clustering techniques [28, 29] to help improve the performance. Figure 10 shows the comparison bar plot of all seven methods.

5 Conclusion

This study proposed a novel 5L-DCNN-SP-C framework, that combines deep convolutional neural network and stochastic pooling for COVID-19 diagnosis. We added batch normalization transform and dropout layers, and proposed two new blocks (convolution block and fully connected block). In our test, we proved three CBs and two FCBs structure can give the best performance.

There are several shortcomings of our method: (i) The dataset is somewhat small. We shall seek to collect more datasets. (ii) Some new network technologies would be tried in our future studies, such as the recent transfer learning pretrained models.

References

Hadi, A.G., Kadhom, M., Hairunisa, N., Yousif, E., Mohammed, S.A.: A review on COVID-19: origin, spread, symptoms, treatment, and prevention. Biointerface Res. Appl. Chem. 10, 7234–7242 (2020)

Tsuchida, T., Fujitani, S., Yamasaki, Y., Kunishima, H., Matsuda, T.: Development of a protective device for RT-PCR testing SARS-CoV-2 in COVID-19 patients. Infect. Control Hosp. Epidemiol. 41, 975–976 (2020)

Penarrubia, L., Ruiz, M., Porco, R., Rao, S.N., Juanola-Falgarona, M., Manissero, D., et al.: Multiple assays in a real-time RT-PCR SARS-CoV-2 panel can mitigate the risk of loss of sensitivity by new genomic variants during the COVID-19 outbreak. Int. J. Infect. Dis. 97, 225–229 (2020)

Ai, T., Yang, Z., Hou, H., Zhan, C., Chen, C., Lv, W. et al.: Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology, Article ID: 200642, (2020)

Li, Y., Xia, L.: Coronavirus disease 2019 (COVID-19): role of chest CT in diagnosis and management. AJR Am. J. Roentgenol. 214, 1280–1286 (2020)

Lu, Z.: A pathological brain detection system based on radial basis function neural network. J. Med. Imaging Health Inform. 6, 1218–1222 (2016)

Yang, J.: A pathological brain detection system based on kernel based ELM. Multimed. Tools Appl. 77, 3715–3728 (2018)

Lu, S.: A pathological brain detection system based on extreme learning machine optimized by bat algorithm. CNS Neurol. Disord. Drug. Targets 16, 23–29 (2017)

Jiang, X.: Chinese sign language fingerspelling recognition via six-layer convolutional neural network with leaky rectified linear units for therapy and rehabilitation. J. Med. Imaging Health Inform. 9, 2031–2038 (2019)

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D. et al.: Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 1–9.

Yu, X., Wang, S.-H.: Abnormality diagnosis in mammograms by transfer learning based on ResNet18. Fundam. Inform. 168, 219–230 (2019)

Albahri, A.S., Hamid, R.A., Alwan, J.K., Al-qays, Z.T., Zaidan, A.A., Zaidan, B.B. et al.: Role of biological data mining and machine learning techniques in detecting and diagnosing the novel coronavirus (COVID-19): a systematic review. J. Med. Syst. 44, 11, Article ID: 122, (2020).

De Felice, F., Polimeni, A.: Coronavirus Disease (COVID-19): A Machine Learning Bibliometric Analysis. In Vivo 34, 1613–1617 (2020)

Liu, A.J.: Tea category identification using computer vision and generalized eigenvalue proximal SVM. Fundam. Inform. 151, 325–339 (2017)

Zhan, T.M., Chen, Y.: Multiple sclerosis detection based on biorthogonal wavelet transform, RBF kernel principal component analysis, and logistic regression. IEEE Access 4, 7567–7576 (2016)

Du, S.: Multi-objective path finding in stochastic networks using a biogeography-based optimization method. Simulation 92, 637–647 (2016)

Atangana, A.: Application of stationary wavelet entropy in pathological brain detection. Multimed. Tools Appl. 77, 3701–3714 (2018)

Pan, H., Zhang, C., Tian, Y.: RGB-D image-based detection of stairs, pedestrian crosswalks and traffic signs. J. Vis. Commun. Image Represent. 25, 263–272 (2014)

Cui, N., Zou, X.G., Xu, L.: Preliminary CT findings of coronavirus disease 2019 (COVID-19). Clin. Imaging 65, 124–132 (2020)

Tuncer, T., Dogan, S., Ozyurt, F.: An automated residual exemplar local binary pattern and iterative ReliefF based COVID-19 detection method using chest X-ray image. Chemom. Intell. Lab. Syst. 203, 11, Article ID: 104054, (2020)

Hong, J.: Classification of cerebral microbleeds based on fully-optimized convolutional neural network. Multimed. Tools Appl. 79, 15151–15169 (2020)

Hong, J.: Sensorineural hearing loss identification via nine-layer convolutional neural network with batch normalization and dropout. Multimed. Tools Appl. 79, 15135–15150 (2020)

Wang, S., Chen, Y.: Fruit category classification via an eight-layer convolutional neural network with parametric rectified linear unit and dropout technique. Multimed. Tools Appl. 79, 15117–15133 (2020)

Sui, Y.X.: Classification of Alzheimer's disease based on eight-layer convolutional neural network with leaky rectified linear unit and max pooling. J. Med. Syst. vol. 42, Article ID: 85, (2018)

Jiang, Y.Y.: Cerebral micro-bleed detection based on the convolution neural network with rank based average pooling. IEEE Access 5, 16576–16583 (2017)

Garbin, C., Zhu, X.Q., Marques, O.: Dropout versus batch normalization: an empirical study of their impact to deep learning. Multimed. Tools Appl. 79, 12777–12815 (2020)

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958 (2014)

Chen, Y., Zhou, L., Pei, S., Yu, Z., Chen, Y., Liu, X., et al.: KNN-BLOCK DBSCAN: fast clustering for large-scale data. IEEE Trans. Syst. Man Cybern. Syst. (2019). https://doi.org/10.1109/TSMC.2019.2956527

Chen, Y.W., Hu, X.L., Fan, W.T., Shen, L.L., Zhang, Z., Liu, X. et al.: Fast density peak clustering for large scale data based on kNN. Knowl. Based Syst. 187, 7. Article ID: 104824

Acknowledgement

This paper is partially supported by Natural Science Foundation of China (61602250); Henan Key Research and Development Project (182102310629); Guangxi Key Laboratory of Trusted Software (kx201901); Fundamental Research Funds for the Central Universities (CDLS-2020-03); Key Laboratory of Child Development and Learning Science (Southeast University), Ministry of Education; Royal Society International Exchanges Cost Share Award, UK (RP202G0230); Medical Research Council Confidence in Concept Award, UK (MC_PC_17171); Hope Foundation for Cancer Research, UK (RM60G0680); and British Heart Foundation Accelerator Award, UK.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zhang, YD., Satapathy, S.C., Liu, S. et al. A five-layer deep convolutional neural network with stochastic pooling for chest CT-based COVID-19 diagnosis. Machine Vision and Applications 32, 14 (2021). https://doi.org/10.1007/s00138-020-01128-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00138-020-01128-8