Abstract

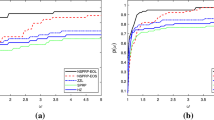

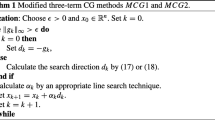

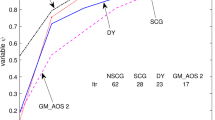

Recently, Peyghami et al. (Optim Methods Softw 30(4):843–863, 2015) proposed a modified secant equation that applies a new update of Yabe and Takano's rule as an adaptive version of the conjugate gradient parameter. Using this modified secant equation, we propose a new modified descent spectral nonlinear conjugate gradient method. The proposed method has two major features: higher-order accuracy in approximating the second-order curvature information of the objective function, and the sufficient descent condition. Global convergence of the proposed method is proved for uniformly convex functions and for general functions. Numerical experiments are carried out on a set of test functions from the CUTEr collection, and the results are compared with those of some well-known methods.
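To make the terms "spectral conjugate gradient" and "sufficient descent" concrete, the following is a minimal sketch of a generic spectral conjugate gradient iteration. It is not the method proposed in the paper, whose formulas are not given in the abstract. It assumes the classical Barzilai–Borwein spectral scaling and a search direction in the style of Birgin and Martínez (2001), combined with Armijo backtracking and a steepest-descent restart whenever a sufficient descent test fails. All function names, constants, and the test problem are hypothetical choices made for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def spectral_cg(f: Callable[[Vector], float],
                grad: Callable[[Vector], Vector],
                x0: Sequence[float],
                tol: float = 1e-6,
                max_iter: int = 1000) -> Vector:
    """Generic spectral CG sketch (illustrative, not the paper's method)."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]  # start with steepest descent
    for _ in range(max_iter):
        gg = dot(g, g)
        if gg ** 0.5 <= tol:
            break
        gd = dot(g, d)
        # Assumed safeguard: if g^T d <= -c * ||g||^2 fails (c = 1e-8 here),
        # restart with the steepest-descent direction.
        if gd > -1e-8 * gg:
            d = [-gi for gi in g]
            gd = -gg
        # Armijo backtracking line search (at most 60 halvings).
        t, fx = 1.0, f(x)
        x_new = x
        for _halving in range(60):
            x_new = [xi + t * di for xi, di in zip(x, d)]
            if f(x_new) <= fx + 1e-4 * t * gd:
                break
            t *= 0.5
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]  # step s_k
        y = [a - b for a, b in zip(g_new, g)]  # gradient change y_k
        sy, ss = dot(s, y), dot(s, s)
        if sy > 1e-12 * ss:
            theta = ss / sy  # Barzilai-Borwein spectral parameter
            beta = dot([theta * yi - si for yi, si in zip(y, s)], g_new) / sy
            d = [-theta * gi + beta * si for gi, si in zip(g_new, s)]
        else:
            d = [-gi for gi in g_new]  # curvature too small: restart
        x, g = x_new, g_new
    return x


def quad(x: Vector) -> float:
    """Hypothetical test problem: a strictly convex quadratic, minimum at (1, -2)."""
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def quad_grad(x: Vector) -> Vector:
    """Gradient of the hypothetical test problem."""
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


solution = spectral_cg(quad, quad_grad, [0.0, 0.0])
```

The restart test is the simplest way to enforce a descent property in a sketch. Methods such as the one summarized in the abstract instead build the sufficient descent condition into the direction formula itself, so no restart is needed.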

References

Andrei N (2007a) A scaled BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Appl Math Lett 20(6):645–650

Andrei N (2007b) Scaled conjugate gradient algorithms for unconstrained optimization. Comput Optim Appl 38(3):401–416

Andrei N (2007c) Scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Optim Methods Softw 22(4):561–571

Andrei N (2008) A scaled nonlinear conjugate gradient algorithm for unconstrained optimization. Optimization 57(4):549–570

Andrei N (2010) Accelerated scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Eur J Oper Res 204(3):410–420

Babaie-Kafaki S (2014) Two modified scaled nonlinear conjugate gradient methods. J Comput Appl Math 261:172–182

Babaie-Kafaki S, Ghanbari R, Mahdavi-Amiri N (2010) Two new conjugate gradient methods based on modified secant equations. J Comput Appl Math 234(5):1374–1386

Barzilai J, Borwein JM (1988) Two-point step size gradient methods. IMA J Numer Anal 8(1):141–148

Birgin EG, Martínez JM (2001) A spectral conjugate gradient method for unconstrained optimization. Appl Math Optim 43(2):117–128

Bojari S, Eslahchi MR (2019) Two families of scaled three-term conjugate gradient methods with sufficient descent property for nonconvex optimization. Numer Algorithms 83(3):901–933

Bongartz I, Conn A, Gould N, Toint P (1995) CUTE: constrained and unconstrained testing environment. ACM Trans Math Softw 21:123–160

Dai YH, Liao LZ (2001) New conjugacy conditions and related nonlinear conjugate gradient methods. Appl Math Optim 43:87–101

Dai YH, Han J, Liu G, Sun D, Yin H, Yuan Y (2000) Convergence properties of nonlinear conjugate gradient methods. SIAM J Optim 10(2):345–358

Dai Y, Yuan J, Yuan YX (2002) Modified two-point stepsize gradient methods for unconstrained optimization. Comput Optim Appl 22(1):103–109

Dolan ED, Moré JJ (2001) Benchmarking optimization software with performance profiles. CoRR arXiv:cs.MS/0102001

Faramarzi P, Amini K (2019) A modified spectral conjugate gradient method with global convergence. J Optim Theory Appl 182(2):667–690

Ford J, Moghrabi I (1994) Multi-step quasi-Newton methods for optimization. J Comput Appl Math 50(1):305–323

Gilbert JC, Nocedal J (1992) Global convergence properties of conjugate gradient methods for optimization. SIAM J Optim 2(1):21–42

Jian J, Chen Q, Jiang X, Zeng Y, Yin J (2017) A new spectral conjugate gradient method for large-scale unconstrained optimization. Optim Methods Softw 32(3):503–515

Li DH, Fukushima M (2001) A modified BFGS method and its global convergence in nonconvex minimization. J Comput Appl Math 129(1):15–35

Li G, Tang C, Wei Z (2007) New conjugacy condition and related new conjugate gradient methods for unconstrained optimization. J Comput Appl Math 202(2):523–539

Livieris IE, Pintelas P (2013) A new class of spectral conjugate gradient methods based on a modified secant equation for unconstrained optimization. J Comput Appl Math 239:396–405

Perry A (1978) A modified conjugate gradient algorithm. Oper Res 26(6):1073–1078

Peyghami MR, Ahmadzadeh H, Fazli A (2015) A new class of efficient and globally convergent conjugate gradient methods in the Dai–Liao family. Optim Methods Softw 30(4):843–863

Raydan M (1997) The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J Optim 7(1):26–33

Shanno DF (1978) Conjugate gradient methods with inexact searches. Math Oper Res 3(3):244–256

Sun W, Yuan Y (2006) Optimization theory and methods: nonlinear programming. Springer, New York

Wei Z, Yu G, Yuan G, Lian Z (2004) The superlinear convergence of a modified BFGS-type method for unconstrained optimization. Comput Optim Appl 29:315–332

Wei Z, Li G, Qi L (2006) New quasi-Newton methods for unconstrained optimization problems. Appl Math Comput 175(2):1156–1188

Wolfe P (1969) Convergence conditions for ascent methods. SIAM Rev 11(2):226–235

Yabe H, Takano M (2004) Global convergence properties of nonlinear conjugate gradient methods with modified secant condition. Comput Optim Appl 28(2):203–225

Yu G, Guan L, Chen W (2008) Spectral conjugate gradient methods with sufficient descent property for large-scale unconstrained optimization. Optim Methods Softw 23(2):275–293

Zhang J, Xu C (2001) Properties and numerical performance of quasi-Newton methods with modified quasi-Newton equations. J Comput Appl Math 137(2):269–278

Zhang JZ, Deng NY, Chen LH (1999) New quasi-Newton equation and related methods for unconstrained optimization. J Optim Theory Appl 102(1):147–167

Zhang L, Zhou W, Li D (2007) Some descent three-term conjugate gradient methods and their global convergence. Optim Methods Softw 22(4):697–711. https://doi.org/10.1080/10556780701223293

Cite this article

Nezhadhosein, S. A Modified Descent Spectral Conjugate Gradient Method for Unconstrained Optimization. Iran J Sci Technol Trans Sci 45, 209–220 (2021). https://doi.org/10.1007/s40995-020-01012-0

DOI: https://doi.org/10.1007/s40995-020-01012-0