Abstract

We present the details of the photometric and astrometric calibration of the Pan-STARRS1 3π Survey. The photometric goals were to reduce the systematic effects introduced by the camera and detectors, and to place all of the observations onto a photometric system with consistent zero-points over the entire area surveyed, the ≈30,000 deg² north of δ = −30°. Using external comparisons, we demonstrate that the resulting photometric system is consistent across the sky to between 7 and 12.4 mmag depending on the filter. For bright stars, the systematic error floor for individual measurements is (σg, σr, σi, σz, σy) = (14, 14, 15, 15, 18) mmag. The astrometric calibration compensates for similar systematic effects so that positions, proper motions, and parallaxes are reliable as well. The bright-star systematic error floor for individual astrometric measurements is 16 mas. The Pan-STARRS Data Release 2 (DR2) astrometric system is tied to the Gaia DR1 coordinate frame with a systematic uncertainty of ∼5 mas.


1. Introduction

From 2010 May through 2014 March, the Pan-STARRS Science Consortium used the 1.8 m Pan-STARRS1 telescope to perform a set of wide-field science surveys. These surveys were designed to address a range of science goals, including the search for hazardous asteroids, the study of the formation and architecture of the Milky Way galaxy, and the search for Type Ia supernovae to measure the history of the expansion of the universe. The majority of the time (56%) was spent on surveying the three-quarters of the sky north of −30° decl. with the gP1, rP1, iP1, zP1, and yP1 filters in the so-called 3π Survey. Another ∼25% of the time was concentrated on repeated deep observations of 10 specific fields in the Medium-Deep Survey. The rest of the time was used for several other surveys, including a search for potentially hazardous asteroids in our solar system. The details of the telescope, surveys, and resulting science publications are described by Chambers et al. (2016).

The wide-field Pan-STARRS1 telescope consists of a 1.8 m diameter f/4.4 primary mirror with an 0.9 m secondary, producing a 33 field of view (Hodapp et al. 2004). The optical design yields low distortion and minimal vignetting even at the edges of the illuminated region. The optics, in combination with the natural seeing, result in generally good image quality: the median image quality for the 3π Survey is FWHM = (131, 119, 111, 107, 102) for ( ,

,  ,

,  ,

,  ,

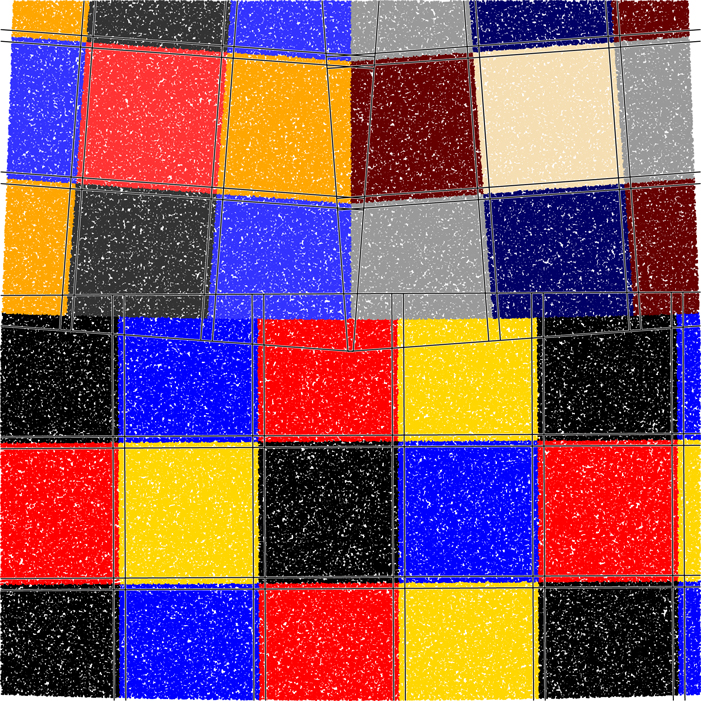

,  ), with a floor of ∼07. The Pan-STARRS1 camera (Tonry & Onaka 2009) is a mosaic of 60 edge-abutted 4800 × 4800 pixel back-illuminated CCID58 Orthogonal Transfer Arrays manufactured by Lincoln Laboratory (Tonry et al. 2006, 2008). The CCDs have 10 μm pixels subtending 0258 and are 70 μm thick. The detectors are read out using a StarGrasp CCD controller, with a readout time of 7 s for a full unbinned image (Onaka et al. 2008). The active, usable pixels cover ∼80% of the field of view. Figure 1 illustrates the physical layout of the devices in the camera with respect to the parity of the sky.

), with a floor of ∼07. The Pan-STARRS1 camera (Tonry & Onaka 2009) is a mosaic of 60 edge-abutted 4800 × 4800 pixel back-illuminated CCID58 Orthogonal Transfer Arrays manufactured by Lincoln Laboratory (Tonry et al. 2006, 2008). The CCDs have 10 μm pixels subtending 0258 and are 70 μm thick. The detectors are read out using a StarGrasp CCD controller, with a readout time of 7 s for a full unbinned image (Onaka et al. 2008). The active, usable pixels cover ∼80% of the field of view. Figure 1 illustrates the physical layout of the devices in the camera with respect to the parity of the sky.

Figure 1. Diagram illustrating the layout of OTA devices in GPC1. The blue dots mark the locations of the amplifiers for xy00 cells in each chip. When cells are mosaicked to a single pixel grid, the pixel in this corner is at chip coordinate (0, 0). The figure illustrates the orientation of the OTA devices relative to the parity of the sky. An exposure taken with north at the top of the field of view will have east to the left when the OTA devices are mosaicked as shown. Note that the devices OTA0Y—OTA3Y are rotated by 180° relative to the other half of the camera. The labeling of the nonexistent corner OTAs is provided to orient the focal plane.


Nightly observations are conducted remotely from the Advanced Technology Research Center in Kula, the main facility of the University of Hawaii's Institute for Astronomy operations on Maui. During the Pan-STARRS1 Science Survey, images obtained by the Pan-STARRS1 system were stored first on computers at the summit, then copied with low latency via the internet to the dedicated data analysis cluster located at the Maui High Performance Computing Center in Kihei, Maui.

Pan-STARRS produced its first large-scale public data release, Data Release 1 (DR1), on 2016 December 16. DR1 contains the results of the third full reduction of the Pan-STARRS 3π Survey archival data, identified as PV3. Previous reductions (PV0, PV1, and PV2; see Magnier et al. 2020a) were used internally for pipeline optimization and the development of the initial photometric and astrometric reference catalog. The products from these reductions were not publicly released but have been used to produce a wide range of scientific papers from the Pan-STARRS1 Science Consortium members (Chambers et al. 2016). DR1 contained only average information resulting from the many individual images obtained by the 3π Survey observations. A second data release, DR2, was made available on 2019 January 28. DR2 provides measurements from all of the individual exposures and includes an improved calibration of the PV3 processing of that data set.

This is the fifth in a series of seven papers describing the Pan-STARRS1 Surveys, the data reduction techniques and the resulting data products. This paper (Paper V) describes the final calibration process and the resulting photometric and astrometric quality.

Chambers et al. (2016, Paper I) provides an overview of the Pan-STARRS System, the design and execution of the Surveys, the resulting image and catalog data products, a discussion of the overall data quality and basic characteristics, and a brief summary of important results.

Magnier et al. (2020a, Paper II) describes how the various data processing stages are organized and implemented in the Image Processing Pipeline (IPP), including details of the processing database, which is a critical element in the IPP infrastructure.

Waters et al. (2020, Paper III) describes the details of the pixel processing algorithms, including detrending, warping, and adding (to create stacked images) and subtracting (to create difference images), and the resulting image products and their properties.

Magnier et al. (2020b, Paper IV) describes the details of the source detection and photometry, including point-spread-function (PSF) and extended source fitting models, and the techniques for "forced" photometry measurements.

Flewelling et al. (2020, Paper VI) describes the details of the resulting catalog data and its organization in the Pan-STARRS database.

M. Huber et al. (2020, in preparation, Paper VII) describes the Medium-Deep Survey in detail, including the unique issues and data products specific to that survey. The Medium-Deep Survey is not part of DR1 or DR2 and will be made available in a future data release.

The Pan-STARRS1 filters and photometric system have already been described in detail in Tonry et al. (2012).

2. Pan-STARRS1 Data Analysis

Images obtained by Pan-STARRS1 are automatically processed in real time by the Pan-STARRS1 IPP (see Paper II). The real-time analysis is aimed at feeding the discovery pipelines of the asteroid search and supernova search teams. The data obtained for the Pan-STARRS1 Science Survey have also been used in three additional complete reprocessings of the data: Processing Versions 1, 2, and 3 (PV1, PV2, and PV3). The real-time processing of the data is considered "PV0." Except as otherwise noted, this article describes the calibration of the PV3 analysis of the data. Between DR1 and DR2, improvements were made to the calibration of both the photometry and astrometry, as described in this article.

The pipeline data processing steps are described in detail in Papers II, III, and IV. In summary, individual images are detrended: nonlinearity and bias corrections are applied, a dark current model is subtracted, and flat-field corrections are applied. The yP1-band images are also corrected for fringing: a master fringe pattern is scaled to match the observed fringing and subtracted. Mask and variance image arrays are generated with the detrend analysis and carried forward at each stage of the IPP processing. Source detection and photometry are performed for each chip independently. As discussed below, preliminary astrometric and photometric calibrations are performed for all chips in a single exposure in a single analysis. We refer to these measurements as the "chip" photometry and astrometry products.

Chip images are geometrically transformed based on the astrometric solution into a set of predefined pixel grids covering the sky, called skycells. These transformed images are called the warp images. Sets of warps for a given part of the sky and in the same filter may be added together to generate deeper "stack" images. PSF-matched difference images are generated from combinations of warps and stacks; the details of the difference images and their calibration are outside of the scope of this article.

Astronomical objects are detected and characterized in the stack images. The details of the analysis of the sources in the stack images are discussed in Paper IV, but in brief, these include PSF photometry, along with a range of measurements driven by the goals of understanding the galaxies in the images. Because of the significant mask fraction of the GPC1 focal plane and the varying image quality both within and between exposures, the effective PSF of the Pan-STARRS1 Survey (PS1) stack images (often including more than 10 input exposures taken in different conditions) is highly variable. The PSF varies significantly on scales as small as a few to tens of pixels, making accurate PSF modeling essentially infeasible. The PSF photometry of sources in the stack images is thus degraded significantly compared to the quality of the photometry measured for the individual chip images.

To recover most of the photometric quality of the individual chip images while also exploiting the depth afforded by the stacks, the PV3 analysis makes use of forced photometry on the individual warp images. PSF photometry is measured on the warp images for all sources that are detected in the stack images. The positions determined in the stack images are used in the warp images, but the PSF model is determined for each warp independently based on brighter stars in the warp image. The only free parameter for each object is the flux, which may be insignificant or even negative for sources that are near the faint limit of the stack detections. When the fluxes from the individual warp images are averaged, a reliable measurement of the faint source flux is determined. This analysis is described in detail in Paper IV.
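With position and PSF fixed, forced photometry reduces to a one-parameter linear fit per warp. The sketch below assumes a unit-sum PSF stamp already evaluated at the stack position, independent Gaussian pixel noise with known variance, and an inverse-variance weighted mean to combine warps. The function names are hypothetical, and Paper IV describes the actual PV3 procedure.

```python
import numpy as np

def forced_psf_flux(image, variance, psf):
    """Weighted least-squares flux for a PSF of fixed position and shape.

    image, variance, psf: same-shape arrays (a stamp centred on the stack
    position); psf is assumed normalised to unit sum. The flux may come out
    negative for faint sources, which is kept rather than discarded.
    """
    w = 1.0 / variance
    denom = np.sum(w * psf * psf)
    flux = np.sum(w * psf * image) / denom
    return flux, np.sqrt(1.0 / denom)

def average_forced_flux(fluxes, errors):
    """Inverse-variance weighted mean of the per-warp forced fluxes."""
    fluxes = np.asarray(fluxes, dtype=float)
    w = 1.0 / np.asarray(errors, dtype=float) ** 2
    mean = np.sum(w * fluxes) / np.sum(w)
    return mean, np.sqrt(1.0 / np.sum(w))
```

Because each per-warp estimate is linear in the pixel data, insignificant or negative values still carry information; it is the average over many warps that yields a significant faint-source flux.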

The data products from the chip photometry, stack photometry, and forced-warp photometry analysis stages are ingested into the internal calibration database called the Desktop Virtual Observatory, or DVO (see Section 4 in Paper II) and used for photometric and astrometric calibrations. In this article, we discuss the photometric calibration of the individual exposures, the stacks, and the warp images. We also discuss the astrometric calibration of the individual exposures and the stack images.

3. Pipeline Calibration

3.1. Overview

As images are processed by the data analysis system, every exposure is calibrated individually with respect to a photometric and astrometric reference database. The goal of this calibration step is to generate a preliminary astrometric calibration, to be used by the warping analysis to determine the geometric transformation of the pixels, and a preliminary photometric transformation, to be used by the stacking analysis to ensure the warps are combined using consistent flux units.

The program used for the pipeline calibration, psastro, loads the measurements of the chip detections from their individual output catalog files. It uses the header information populated at the telescope to determine an initial astrometric calibration guess based on the boresight R.A., decl., and position angle reported by the telescope and camera subsystems. Using the initial guess, psastro loads astrometric and photometric data from the reference database.

3.2. Reference Catalogs

During the course of the PS1SC Survey, several reference databases were used. For the first 20 months of the survey, psastro used a reference catalog with synthetic PS1 gP1, rP1, iP1, zP1, yP1 photometry generated by the Pan-STARRS IPP team based on combined photometry from Tycho (B, V), USNO (red, blue, IR; Monet et al. 2003), and Two Micron All Sky Survey (2MASS) J, H, K (Skrutskie et al. 2006). The astrometry in the database was from 2MASS (Skrutskie et al. 2006). After 2012 May, a reference catalog generated from internal recalibration of the PV0 analysis of PS1 photometry and astrometry was used.

Coordinates and calibrated magnitudes of stars from the reference database are loaded by psastro. A model for the positions of the 60 chips in the focal plane is used to determine the expected astrometry for each chip based on the boresight coordinates and position angle reported in the header. Reference stars are selected from the full field of view of the GPC1 camera, padded by an additional 25% to ensure a match can be determined even in the presence of substantial errors in the boresight coordinates. It is important to choose an appropriate set of reference stars: if too few are selected, the chance of finding a match between the reference and observed stars is diminished. In addition, because stars are loaded in brightness order, a selection that is too small is likely to contain only stars that are saturated in the GPC1 images. On the other hand, if too many reference stars are chosen, there is a higher chance of a false-positive match, especially as many of the reference stars may not be detected in the GPC1 image. The selection of the reference stars includes a limit on the brightest and faintest magnitudes of the stars selected.
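The reference-star selection can be sketched as a cone search around the boresight, followed by bright and faint magnitude cuts and brightness ordering. This is a minimal sketch, assuming the GPC1 footprint is approximated by a circle, that the 25% padding is applied to its radius, and that the magnitude limits and the maximum number of stars are supplied by the caller; `select_reference_stars` is a hypothetical helper, not part of psastro.

```python
import numpy as np

def select_reference_stars(ra, dec, mag, ra0, dec0, field_radius_deg,
                           mag_bright, mag_faint, max_stars, pad=0.25):
    """Return indices of reference stars for one exposure, brightest first.

    All angles are in degrees. The field is approximated as a circle of
    radius field_radius_deg around the boresight (ra0, dec0), enlarged by
    the fractional padding `pad` (an assumption about how padding is applied).
    """
    ra, dec, mag = (np.asarray(a, dtype=float) for a in (ra, dec, mag))
    r1, d1, r0, d0 = map(np.radians, (ra, dec, ra0, dec0))
    # great-circle separation from the boresight
    cos_sep = np.sin(d1) * np.sin(d0) + np.cos(d1) * np.cos(d0) * np.cos(r1 - r0)
    sep = np.degrees(np.arccos(np.clip(cos_sep, -1.0, 1.0)))
    keep = ((sep <= field_radius_deg * (1.0 + pad))
            & (mag >= mag_bright)      # drop stars likely saturated in GPC1
            & (mag <= mag_faint))      # drop stars unlikely to be detected
    idx = np.flatnonzero(keep)
    idx = idx[np.argsort(mag[idx], kind="stable")]  # brightness order
    return idx[:max_stars]
```

A radius of roughly half the camera field diameter is a natural starting value for `field_radius_deg`; the bright and faint limits bracket the unsaturated, reliably detected range.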

The astrometric analysis is necessarily performed first; after the astrometry is determined, an automatic byproduct is a reliable match between reference and observed stars, allowing a comparison of the magnitudes to determine the photometric calibration.

3.3. Astrometric Models

Three somewhat distinct astrometric models are employed within the IPP at different stages. The simplest model is defined independently for each chip: a simple TAN projection as described by Calabretta & Greisen (2002) is used to relate sky coordinates to a Cartesian tangent-plane coordinate system. A pair of low-order polynomials is used to relate the chip pixel coordinates to this tangent-plane coordinate system. The transforming polynomials are of the form:

$$P = \sum_{i,j} C^{P}_{i,j} X^{i} Y^{j}, \qquad Q = \sum_{i,j} C^{Q}_{i,j} X^{i} Y^{j},$$

where P, Q are the tangent-plane coordinates, X, Y are the coordinates on the 60 GPC1 chips, and $C^{P}_{i,j}$, $C^{Q}_{i,j}$ are the polynomial coefficients for each order i, j. In the psastro analysis, $i + j \le N_{\rm order}$, where the order of the fit, $N_{\rm order}$, may be 1 to 3, under the restriction that sufficient stars are needed to constrain the order.
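Because the single-chip model is linear in its coefficients, fitting it is an ordinary least-squares problem over the terms with i + j ≤ N_order. A minimal NumPy sketch follows; the function names are hypothetical, and the matching and outlier clipping that psastro wraps around the fit (Section 3.5) are omitted.

```python
import numpy as np

def poly_terms(x, y, order):
    """Design matrix with columns x**i * y**j for all i + j <= order."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    exps = [(i, j) for i in range(order + 1) for j in range(order + 1 - i)]
    return np.column_stack([x**i * y**j for i, j in exps]), exps

def fit_chip_to_tangent(X, Y, P, Q, order):
    """Least-squares fit of P(X, Y) and Q(X, Y) for one chip."""
    A, exps = poly_terms(X, Y, order)
    if len(X) < len(exps):
        raise ValueError("not enough stars to constrain this order")
    cP, *_ = np.linalg.lstsq(A, np.asarray(P, float), rcond=None)
    cQ, *_ = np.linalg.lstsq(A, np.asarray(Q, float), rcond=None)
    return dict(zip(exps, cP)), dict(zip(exps, cQ))

def apply_poly(coeffs, x, y):
    """Evaluate sum_{i,j} C_ij x**i y**j for a coefficient dict."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    return sum(c * x**i * y**j for (i, j), c in coeffs.items())
```

For third-order fits, rescaling X, Y to a unit range before building the design matrix improves numerical conditioning.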

A second form of astrometry model, which yields a somewhat higher accuracy, consists of a set of connected solutions for all chips in a single exposure. This model also uses a TAN projection to relate the sky coordinates to a locally Cartesian tangent-plane coordinate system. A set of polynomials is then used to relate the tangent-plane coordinates to a "focal plane" coordinate system, L, M:

$$P = \sum_{i,j} C^{P}_{i,j} L^{i} M^{j}, \qquad Q = \sum_{i,j} C^{Q}_{i,j} L^{i} M^{j}.$$

This set of polynomials accounts for effects such as optical distortion in the camera and distortions due to changing atmospheric refraction across the field of the camera. Because these effects are smooth across the field of the camera, a single pair of polynomials can be used for each exposure. As in the chip analysis above, the psastro code restricts the exponents with the rule $i + j \le N_{\rm order}$, where the order of the fit, $N_{\rm order}$, may be 1 to 3, under the restriction that sufficient stars are needed to constrain the order. For each chip, a second set of polynomials describes the transformation from the chip coordinate systems to the focal coordinate system:

$$L = \sum_{i,j} C^{L}_{i,j} X^{i} Y^{j}, \qquad M = \sum_{i,j} C^{M}_{i,j} X^{i} Y^{j}.$$
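Evaluating the two-level model amounts to composing the per-chip polynomial (chip to focal plane), the exposure-wide polynomial (focal plane to tangent plane), and the TAN deprojection. The sketch assumes that P, Q are standard coordinates in degrees, with P increasing toward east and Q toward north, about a tangent point (ra0, dec0); these conventions and the function names are illustrative assumptions, while the inverse gnomonic formulas are the standard ones.

```python
import numpy as np

def eval_poly(coeffs, u, v):
    """Evaluate sum_{i,j} c_ij u**i v**j; coeffs maps (i, j) -> c_ij."""
    return sum(c * u**i * v**j for (i, j), c in coeffs.items())

def chip_to_sky(X, Y, chip_L, chip_M, fp_P, fp_Q, ra0, dec0):
    """Chip pixels -> focal plane (L, M) -> tangent plane (P, Q) -> sky.

    chip_L, chip_M: per-chip coefficient dicts; fp_P, fp_Q: exposure-wide
    coefficient dicts. Returns (ra, dec) in degrees.
    """
    L = eval_poly(chip_L, X, Y)
    M = eval_poly(chip_M, X, Y)
    xi = np.radians(eval_poly(fp_P, L, M))
    eta = np.radians(eval_poly(fp_Q, L, M))
    a0, d0 = np.radians(ra0), np.radians(dec0)
    # inverse gnomonic (TAN) projection about (a0, d0)
    denom = np.cos(d0) - eta * np.sin(d0)
    ra = a0 + np.arctan2(xi, denom)
    dec = np.arctan2(np.sin(d0) + eta * np.cos(d0), np.hypot(xi, denom))
    return np.degrees(ra) % 360.0, np.degrees(dec)
```

The split mirrors the text: the chip polynomials change only when the focal-plane geometry changes, whereas the focal-plane polynomial absorbs the exposure-dependent optical and refraction distortions.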

A third form of the astrometry model is used in the context of the calibration determined within the DVO database system. We retain the two levels of transformations (chip → focal plane → tangent plane), but the relationship between the chip and focal plane is represented by only the linear terms in the polynomial, supplemented by a coarse grid of displacements, δL, δM, sampled across the coordinate range of the chip. This displacement grid may have a resolution of up to 6 × 6 samples across the chip. The displacement for a specific chip coordinate value is determined via bilinear interpolation between the nearest sample points. Thus, the chip to focal-plane transformation may be written as

$$L = C^{L}_{0,0} + C^{L}_{1,0} X + C^{L}_{0,1} Y + \delta L(X, Y), \qquad M = C^{M}_{0,0} + C^{M}_{1,0} X + C^{M}_{0,1} Y + \delta M(X, Y).$$
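The DVO form of the chip-to-focal-plane mapping keeps only the linear terms and adds bilinearly interpolated displacements δL, δM. The sketch below assumes the grid nodes are evenly spaced from 0 to the chip size (4800 pixels) inclusive and clamps points outside that range; the node placement actually used in DVO is not stated here, and the helper names are hypothetical.

```python
import numpy as np

def bilinear_displacement(grid, x, y, size_x=4800.0, size_y=4800.0):
    """Interpolate a coarse displacement grid at chip coordinates (x, y).

    grid[j, i] holds the displacement at node (i, j); nodes are assumed to be
    spaced evenly from 0 to size_x (size_y) inclusive, with at least 2 x 2
    nodes. Points outside the node range are clamped to the edge cells.
    """
    ny, nx = grid.shape
    fx = np.clip(np.asarray(x, float) / size_x * (nx - 1), 0.0, nx - 1)
    fy = np.clip(np.asarray(y, float) / size_y * (ny - 1), 0.0, ny - 1)
    i0 = np.minimum(np.floor(fx).astype(int), nx - 2)  # lower-left node
    j0 = np.minimum(np.floor(fy).astype(int), ny - 2)
    tx, ty = fx - i0, fy - j0                          # fractional offsets
    return ((1 - tx) * (1 - ty) * grid[j0, i0] + tx * (1 - ty) * grid[j0, i0 + 1]
            + (1 - tx) * ty * grid[j0 + 1, i0] + tx * ty * grid[j0 + 1, i0 + 1])

def chip_to_focal(x, y, lin_L, lin_M, grid_L, grid_M):
    """Linear terms (c00, c10, c01) plus gridded displacements dL, dM."""
    L = lin_L[0] + lin_L[1] * x + lin_L[2] * y + bilinear_displacement(grid_L, x, y)
    M = lin_M[0] + lin_M[1] * x + lin_M[2] * y + bilinear_displacement(grid_M, x, y)
    return L, M
```

A 6 × 6 grid adds 36 free values per coordinate per chip, enough to follow structure on scales of a fifth of a chip without the ringing that a high-order polynomial can introduce.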

These high-order transformations are required for the individual chips to follow small-scale distortions due to the optics (stable from exposure to exposure) as well as those due to the atmosphere (which change over time). The spatial scale on which the astrometric deviations due to the atmosphere vary is related to the isoplanatic patch size. We note that, in the typical conditions at the Pan-STARRS1 site, if the seeing is due to low-lying atmospheric layers, the isoplanatic patch scale will be at most a few arcminutes (Beckers 1988) and smaller when the seeing comes from higher altitudes.

We also note that, in our detailed astrometric analysis within the database system, we perform an initial correction for several systematic effects, including the color-dependent correction due to differential chromatic refraction. The corrected chip positions are the inputs to the equations above (see Section 6.1).

3.4. Cross-correlation Search

The first step of the analysis is to attempt to find the match between the reference stars and the detected objects. psastro uses 2D cross-correlation to search for the match. The guess astrometry calibration is used to define a predicted set of $X_{\rm ref}$, $Y_{\rm ref}$ values for the reference catalog stars. For all possible pairs between the two lists, the values of

$$\Delta X = X_{\rm obs} - X_{\rm ref}, \qquad \Delta Y = Y_{\rm obs} - Y_{\rm ref}$$

are generated. The ΔX, ΔY values are accumulated in a 2D histogram with a sampling of 50 pixels, and the peak pixel is identified. If the astrometry guess were perfect, this peak pixel would be expected to lie at (0, 0) and contain all of the matched stars. However, the astrometric guess may be wrong in several ways. An error in the constant terms of the transformation, $C^{P}_{0,0}$ and $C^{Q}_{0,0}$, shifts the peak to another pixel, from which the corrections to these terms can easily be determined. An error in the plate scale or a rotation will smear out the peak pixel, potentially across many pixels in the 2D histogram.
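A minimal sketch of the offset search: every observed and predicted reference position is differenced, the offsets are binned at 50 pixels, and the peak bin is refined by averaging the pairs that fall in it. The ±1000 pixel search window and the refinement by averaging are assumptions for illustration, and `histogram_offset` is a hypothetical name.

```python
import numpy as np

def histogram_offset(x_obs, y_obs, x_ref, y_ref, bin_size=50.0, max_offset=1000.0):
    """Find the dominant (dX, dY) shift between two star lists.

    Every observed/reference pair contributes dX = x_obs - x_ref and
    dY = y_obs - y_ref to a 2D histogram with bin_size-pixel bins.
    Returns the mean offset of the pairs in the peak bin and their number.
    """
    dx = np.subtract.outer(np.asarray(x_obs, float), np.asarray(x_ref, float)).ravel()
    dy = np.subtract.outer(np.asarray(y_obs, float), np.asarray(y_ref, float)).ravel()
    edges = np.arange(-max_offset, max_offset + bin_size, bin_size)
    hist, xe, ye = np.histogram2d(dx, dy, bins=[edges, edges])
    ix, iy = np.unravel_index(np.argmax(hist), hist.shape)
    in_peak = ((dx >= xe[ix]) & (dx < xe[ix + 1])
               & (dy >= ye[iy]) & (dy < ye[iy + 1]))
    # refine the coarse bin position with the mean offset of its pairs
    return dx[in_peak].mean(), dy[in_peak].mean(), int(in_peak.sum())
```

Unmatched pairs are spread thinly over the whole histogram, so even a few tens of true matches stand out clearly in a single bin when scale and rotation are close to correct.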

To find a good match in the face of plate scale and rotation errors, the cross-correlation analysis above is performed for a series of trials in which the scale and rotation are perturbed from the nominal value by a small amount. For each trial, the peak pixel is found and a figure of merit is measured. The figure of merit is computed from $\sigma^{2}_{\Delta X, \Delta Y}$, the second moment of ΔX, ΔY for the star pairs associated with the peak pixel, and $N_p$, the number of star pairs in the peak; it is thus most sensitive to a narrow distribution with many matched pairs. For the PS1 exposures, rotation offsets of (−1.0°, −0.5°, 0.0°, 0.5°, 1.0°) and plate scales of (+1%, 0, −1%) of the nominal plate scale are tested. The best match among these 15 cross-correlation tests is selected and used to generate a better astrometry guess for the chip.
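The 15-trial search can be sketched by rotating and scaling the predicted reference positions about the chip center before each histogram. The figure of merit used here, σ²/N_p² (smaller is better), is a hypothetical stand-in chosen only because it rewards narrow peaks with many pairs; it is not the published psastro definition. Rotating about the chip center (2400, 2400) is likewise an assumption.

```python
import numpy as np
from itertools import product

ROTATIONS_DEG = (-1.0, -0.5, 0.0, 0.5, 1.0)
SCALES = (1.01, 1.00, 0.99)

def peak_pairs(dx, dy, bin_size=50.0, max_offset=1000.0):
    """Boolean mask of the pairs falling in the peak bin of the 2D histogram."""
    edges = np.arange(-max_offset, max_offset + bin_size, bin_size)
    hist, xe, ye = np.histogram2d(dx, dy, bins=[edges, edges])
    ix, iy = np.unravel_index(np.argmax(hist), hist.shape)
    return (dx >= xe[ix]) & (dx < xe[ix + 1]) & (dy >= ye[iy]) & (dy < ye[iy + 1])

def best_trial(x_obs, y_obs, x_ref, y_ref, center=(2400.0, 2400.0)):
    """Return (fom, rotation_deg, scale, n_pairs) of the best of 15 trials."""
    x_obs, y_obs = np.asarray(x_obs, float), np.asarray(y_obs, float)
    u = np.asarray(x_ref, float) - center[0]
    v = np.asarray(y_ref, float) - center[1]
    best = None
    for rot, scale in product(ROTATIONS_DEG, SCALES):
        t = np.radians(rot)
        xr = center[0] + scale * (np.cos(t) * u - np.sin(t) * v)
        yr = center[1] + scale * (np.sin(t) * u + np.cos(t) * v)
        dx = np.subtract.outer(x_obs, xr).ravel()
        dy = np.subtract.outer(y_obs, yr).ravel()
        sel = peak_pairs(dx, dy)
        n = int(sel.sum())
        if n < 2:
            continue
        sigma2 = dx[sel].var() + dy[sel].var()  # second moment about the mean
        fom = sigma2 / n**2                     # hypothetical stand-in metric
        if best is None or fom < best[0]:
            best = (fom, rot, scale, n)
    return best
```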

3.5. Pipeline Astrometric Calibration

The astrometry solution from the cross-correlation step above is again used to select matches between the reference stars and observed stars in the image. The matching radius starts off quite large, and a series of fits is performed to generate the transformation between chip and tangent-plane coordinates. Three clipping iterations are performed, with outliers >3σ rejected on each pass, where σ is determined from the distribution of the residuals in each dimension (X, Y) independently. After each fit cycle, the matches are redetermined using a smaller radius and the fit is repeated.
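The match, fit, and clip cycle can be sketched as follows. For simplicity, the detections are projected into the tangent plane and matched there, so σ is measured from the P, Q residuals rather than in chip X, Y as psastro does; the brute-force matcher, the σ floor, the first-order model, and all names are assumptions.

```python
import numpy as np

def design(x, y, order):
    """Columns x**i * y**j for i + j <= order, ordered (0,0), (0,1), ..., (order,0)."""
    return np.column_stack([x**i * y**j for i in range(order + 1)
                            for j in range(order + 1 - i)])

def match(p, q, p_ref, q_ref, radius):
    """Index of the nearest reference star within `radius`, or -1 if none."""
    d2 = (p[:, None] - p_ref[None, :])**2 + (q[:, None] - q_ref[None, :])**2
    j = np.argmin(d2, axis=1)
    ok = d2[np.arange(len(p)), j] <= radius**2
    return np.where(ok, j, -1)

def clipped_fit(A, P, Q, n_clip=3, nsigma=3.0, floor=1e-9):
    """Three fit passes; after each, reject > nsigma outliers per dimension."""
    keep = np.ones(len(P), bool)
    for _ in range(n_clip):
        cP, *_ = np.linalg.lstsq(A[keep], P[keep], rcond=None)
        cQ, *_ = np.linalg.lstsq(A[keep], Q[keep], rcond=None)
        rP, rQ = P - A @ cP, Q - A @ cQ
        sP = max(rP[keep].std(), floor)
        sQ = max(rQ[keep].std(), floor)
        keep = (np.abs(rP) <= nsigma * sP) & (np.abs(rQ) <= nsigma * sQ)
    return cP, cQ

def iterative_astrometry(X, Y, P_ref, Q_ref, guess, radii, order=1):
    """Re-match with a shrinking radius, refitting after each match."""
    cP, cQ = guess
    A = design(np.asarray(X, float), np.asarray(Y, float), order)
    for radius in radii:
        m = match(A @ cP, A @ cQ, P_ref, Q_ref, radius)
        use = m >= 0
        cP, cQ = clipped_fit(A[use], P_ref[m[use]], Q_ref[m[use]])
    return cP, cQ
```

Shrinking the radius only after a fit has improved the model keeps the early, loose matches from locking in false pairs while still tolerating a poor initial guess.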

The astrometry solutions from the independent chip fits are used to generate a single model for the camera-wide distortion terms. The goal is to determine the two-stage fit (chip → focal plane → tangent plane). There are a number of degenerate terms between these two levels of transformation, most obviously between the parameters that define the constant offset from chip to focal plane ($C^{L}_{0,0}$, $C^{M}_{0,0}$) and those that define the offset from focal plane to tangent plane ($C^{P}_{0,0}$, $C^{Q}_{0,0}$). We fix ($C^{P}_{0,0}$, $C^{Q}_{0,0}$) = (0, 0) to remove this degeneracy.

The initial fit of the astrometry for each chip follows the distortion introduced by the camera: the apparent plate scale for each chip is the combination of the plate scale at the optical axis of the camera, modified by the local average distortion. To isolate the effect of distortion, we choose a single common plate scale for the set of chips and redefine the chip → sky calibrations as a set of chip → focal-plane transformations using that common pixel scale. We can now compare the observed focal-plane coordinates, derived from the chip coordinates, and the tangent-plane coordinates, derived from the projection of the reference coordinates. One caveat is that the chip reference coordinates are also degenerate with the fitted distortion. To avoid being sensitive to the exact positions of the chips at this stage, we measure the local gradient between the focal-plane and tangent-plane coordinate systems. We then fit the gradient with a polynomial of order 1 less than the polynomial desired for the distortion fit. The coefficients of the gradient fit are then used to determine the coefficients for the polynomials representing the distortion.
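The gradient approach works because a constant error in a chip's offset leaves the local gradient measured within that chip unchanged; only the location at which the gradient is sampled shifts slightly. The sketch below is a variant of the procedure in the text: instead of fitting order N−1 polynomials to the gradients and then converting their coefficients, it solves for the distortion coefficients a_ij (1 ≤ i + j ≤ N) directly from the stacked gradient equations in one linear solve. Only P is shown (Q is handled identically), and the function name is hypothetical.

```python
import numpy as np

def fit_distortion_from_gradients(L, M, dPdL, dPdM, order):
    """Recover a_ij (1 <= i + j <= order) of P(L, M) = sum a_ij L**i M**j
    from the local gradients dP/dL and dP/dM sampled at (L, M).

    The constant a_00 is not constrained by gradients; it is degenerate with
    the chip offsets and is left to a later stage of the fit.
    """
    L, M = np.asarray(L, float), np.asarray(M, float)
    exps = [(i, j) for i in range(order + 1)
            for j in range(order + 1 - i) if i + j >= 1]
    # d/dL of L**i M**j is i L**(i-1) M**j; d/dM is j L**i M**(j-1)
    AL = np.column_stack([i * L**max(i - 1, 0) * M**j for i, j in exps])
    AM = np.column_stack([j * L**i * M**max(j - 1, 0) for i, j in exps])
    A = np.vstack([AL, AM])
    b = np.concatenate([np.asarray(dPdL, float), np.asarray(dPdM, float)])
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    return dict(zip(exps, coeffs))
```

Stacking both gradient components in one system enforces that they come from a single scalar polynomial, which a pair of independent order N−1 gradient fits does not guarantee.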

Once the common distortion coming from the optics and atmosphere has been modeled, psastro determines polynomial transformations from the 60 chips to the focal-plane coordinate system. At this stage, five iterations of the chip fits are performed. Before each iteration, the reference stars and detected objects are matched using the current best set of transformations. These fits start with low order (1) and a large matching radius. As the iterations proceed, the radius is reduced and the order is allowed to increase, up to third order for the final iterations.

3.6. Pipeline Photometric Calibration

After the astrometric calibration is determined, the photometric calibration is performed by psastro. When the reference stars are loaded, the apparent magnitude in the filter of interest is also loaded. Stars for which the reference magnitude is brighter than (gP1, rP1, iP1, zP1, yP1) = (19, 19, 18.5, 18.5, 17.5) are used to determine the zero-points by comparison with the instrumental magnitudes. For the PV3 analysis, an outlier-rejecting median is used to measure the zero-point. For early versions of the pipeline analysis, when the reference catalog used synthetic magnitudes, it was necessary to search for the blue edge of the distribution: the synthetic magnitudes poorly predicted the magnitudes of stars in the presence of significant extinction or for very red stars, making the blue edge somewhat more reliable as a reference than the mean. Once the calibration was based on a reference catalog generated from Pan-STARRS1 photometry, this method was no longer needed. Note that we do not fit for the airmass slope in this analysis: the nominal airmass slope is used for each filter, and any deviation from the nominal value is effectively folded into the observed zero-point. The zero-point may be measured separately for each chip or as a single value for the entire exposure; the latter option was used for the PV3 analysis.
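A sketch of an exposure-level zero-point with a fixed nominal airmass slope. It assumes the convention m_cal = m_inst + ZP − k·X, with k the nominal extinction coefficient and X the airmass, and uses a 3σ-clipped median with σ estimated from the median absolute deviation; the clipping details and function names are assumptions, not the psastro implementation. The returned triple is analogous to the ZPT_OBS, ZPT_ERR, and ZPT_NREF keywords of Section 3.7.

```python
import numpy as np

def clipped_median(values, nsigma=3.0, max_iter=10):
    """Median with iterative outlier rejection; sigma from the scaled MAD."""
    v = np.asarray(values, float)
    keep = np.ones(v.size, bool)
    for _ in range(max_iter):
        med = np.median(v[keep])
        sigma = 1.4826 * np.median(np.abs(v[keep] - med))
        new_keep = np.abs(v - med) <= nsigma * sigma if sigma > 0 else keep
        if np.array_equal(new_keep, keep):
            break
        keep = new_keep
    return np.median(v[keep]), v[keep].std(), int(keep.sum())

def exposure_zero_point(m_inst, m_ref, airmass, k_nominal, ref_bright_limit):
    """Zero-point from stars whose reference magnitude is brighter than the limit.

    The nominal airmass term is applied, not fitted, so any deviation from
    k_nominal ends up folded into the returned zero-point.
    """
    m_inst, m_ref = np.asarray(m_inst, float), np.asarray(m_ref, float)
    use = m_ref < ref_bright_limit
    offsets = m_ref[use] - m_inst[use] + k_nominal * airmass
    return clipped_median(offsets)
```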

3.7. Outputs

The calibrations determined by psastro are saved as part of the header information in the output FITS tables. For each exposure, a single multi-extension FITS table is written. In these files, the measurements from each chip are written as a separate FITS table. A second FITS extension for each chip is used to store the header information from the original chip image. The original chip header is modified so that the extension corresponds to an image with no pixel data: NAXIS is set to 0, even though NAXIS1 and NAXIS2 are retained with the original dimensions of the chip. A pixel-less primary header unit (PHU) is generated with a summary of some of the important and common chip-level keywords (e.g., DATE-OBS). The astrometric transformation information for each chip is saved in the corresponding header using standard (and some nonstandard) WCS keywords. For the two-level astrometric model, the PHU header carries the astrometric transformation related to the projection and the camera-wide distortions. Photometric calibrations are written as a set of keywords to individual chip headers and, if the calibration is performed at the exposure level, to the PHU. The photometry calibration keywords are:

1. ZPT_REF: the nominal zero-point for this filter
2. ZPT_OBS: the measured zero-point for this chip/exposure
3. ZPT_ERR: the standard deviation of ZPT_OBS
4. ZPT_NREF: the number of stars used to measure ZPT_OBS
5. ZPT_MIN: minimum reference magnitude included in analysis
6. ZPT_MAX: maximum reference magnitude included in analysis

The keyword ZPT_OBS is used to set the initial zero-point when the data from the exposure are loaded into the DVO database.

4. Calibration Database

Data from the GPC1 chip images, the stack images, and the warp images are loaded into the DVO calibration database using the real-time analysis astrometric calibration to guide the association of detections into objects. After the full PV3 DVO database was constructed, including all of the chip, stack, and warp detections, several external catalogs were merged into the database. First, the complete 2MASS Point Source Catalog (PSC) was loaded into a stand-alone DVO database, which was then merged into the PV3 master database. Next, the DVO database of synthetic photometry in the PS1 bands (see Section 3.2) was merged in. Next, the full Tycho database was added, followed by the AllWISE database. After the release of Gaia DR1 in 2016 September (Gaia Collaboration et al. 2016), we generated a DVO database of the Gaia positional and photometric information and merged that into the master PV3 3π DVO database.

The master DVO database is used to perform the full photometric and astrometric calibration of the PS1 data. During these analysis steps, a wide variety of conditions are noted for individual measurements, for the objects (either as a whole or for specific filters) and for the images. A set of bit-valued flags is used in the database to record these conditions. Table 1 lists the flags specific to individual measurements. These values are stored in the DVO database in the field Measure.dbFlags and exposed in the public database (PSPS; Paper VI) in the fields Detection.infoFlag3, StackObjectThin.XinfoFlag3 (where X is one of grizy), and ForcedWarpMeasurement.FinfoFlag3. Table 2 lists the flags that are set for each filter for individual objects in the database. These values are recorded in the DVO database field SecFilt.flags and are exposed in PSPS in the fields MeanObject.XFlags and StackObjectThin.XinfoFlag4, where X in both cases is one of grizy. Table 3 lists the flags specific to an object as a whole. These values are stored in the DVO database field Average.flags and are exposed in PSPS in the field MeanObject.objInfoFlag. Table 4 lists the flags raised for images. These flags are stored in the DVO database field Image.flags and are exposed in PSPS in the field ImageMeta.qaFlags. The types of conditions that are recorded by these bits range from information about the presence of external measurements (e.g., 2MASS or WISE) to determinations of good- or bad-quality measurements for astrometry or photometry. In the sections below, the flag values in these tables are described where appropriate. Note that some of the listed bits are either ephemeral (used internal to specific programs) or are not relevant to the current DR2 analysis and reserved for future use.

Table 1. Per-measurement Flag Bit Values

| Bit Name | Bit Value | Description |
|---|---|---|
| ID_MEAS_NOCAL | 0x00000001 | detection ignored for this analysis (photcode, time range); internal only |
| ID_MEAS_POOR_PHOTOM | 0x00000002 | detection is photometry outlier (not used for PV3) |
| ID_MEAS_SKIP_PHOTOM | 0x00000004 | detection was ignored for photometry measurement (not used for PV3) |
| ID_MEAS_AREA | 0x00000008 | detection near image edge (not used for PV3) |
| ID_MEAS_POOR_ASTROM | 0x00000010 | detection is astrometry outlier |
| ID_MEAS_SKIP_ASTROM | 0x00000020 | detection was not used for image calibration (not reported for PV3) |
| ID_MEAS_USED_OBJ | 0x00000040 | detection was used during update objects |
| ID_MEAS_USED_CHIP | 0x00000080 | detection was used during update chips (not saved for PV3) |
| ID_MEAS_BLEND_MEAS | 0x00000100 | detection is within the radius of multiple objects (not used for PV3) |
| ID_MEAS_BLEND_OBJ | 0x00000200 | multiple detections within the radius of object (not used for PV3) |
| ID_MEAS_WARP_USED | 0x00000400 | measurement used to find mean warp photometry |
| ID_MEAS_UNMASKED_ASTRO | 0x00000800 | measurement was not masked in final astrometry fit |
| ID_MEAS_BLEND_MEAS_X | 0x00001000 | detection is within the radius of multiple objects across catalogs (not used for PV3) |
| ID_MEAS_ARTIFACT | 0x00002000 | detection is thought to be non-astronomical (not used for PV3) |
| ID_MEAS_SYNTH_MAG | 0x00004000 | magnitude is synthetic (not used for DR2) |
| ID_MEAS_PHOTOM_UBERCAL | 0x00008000 | externally supplied zero-point from ubercal analysis |
| ID_MEAS_STACK_PRIMARY | 0x00010000 | this stack measurement is in the primary skycell |
| ID_MEAS_STACK_PHOT_SRC | 0x00020000 | this measurement supplied the stack photometry |
| ID_MEAS_ICRF_QSO | 0x00040000 | this measurement is an ICRF reference position (not used for PV3) |
| ID_MEAS_IMAGE_EPOCH | 0x00080000 | this measurement is registered to the image epoch (not used for PV3) |
| ID_MEAS_PHOTOM_PSF | 0x00100000 | this measurement is used for the mean PSF mag |
| ID_MEAS_PHOTOM_APER | 0x00200000 | this measurement is used for the mean ap mag |
| ID_MEAS_PHOTOM_KRON | 0x00400000 | this measurement is used for the mean Kron mag |
| ID_MEAS_MASKED_PSF | 0x01000000 | this measurement is masked based on IRLS weights for mean PSF mag |
| ID_MEAS_MASKED_APER | 0x02000000 | this measurement is masked based on IRLS weights for mean ap mag |
| ID_MEAS_MASKED_KRON | 0x04000000 | this measurement is masked based on IRLS weights for mean Kron mag |
| ID_MEAS_OBJECT_HAS_2MASS | 0x10000000 | measurement comes from an object with 2MASS data |
| ID_MEAS_OBJECT_HAS_GAIA | 0x20000000 | measurement comes from an object with Gaia data |
| ID_MEAS_OBJECT_HAS_TYCHO | 0x40000000 | measurement comes from an object with Tycho data |

Note. These DVO flags correspond to the PSPS flags DetectionFlags3 (Paper VI, Table 18), but without the leading ID_MEAS_.
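Because each condition occupies its own bit, a stored Measure.dbFlags value (or the corresponding PSPS column) can be decoded with a bitwise AND. The sketch below uses a subset of Table 1 with the values copied from the table; the function name is hypothetical. Stripping the ID_MEAS_ prefix yields the PSPS names noted above, and any bits that are not in the supplied table are returned separately rather than silently dropped.

```python
from typing import Dict, List, Tuple

# A subset of Table 1 (DVO Measure.dbFlags); values copied from the table.
MEAS_FLAGS: Dict[str, int] = {
    "ID_MEAS_NOCAL": 0x00000001,
    "ID_MEAS_POOR_ASTROM": 0x00000010,
    "ID_MEAS_USED_OBJ": 0x00000040,
    "ID_MEAS_WARP_USED": 0x00000400,
    "ID_MEAS_UNMASKED_ASTRO": 0x00000800,
    "ID_MEAS_PHOTOM_UBERCAL": 0x00008000,
    "ID_MEAS_STACK_PRIMARY": 0x00010000,
    "ID_MEAS_STACK_PHOT_SRC": 0x00020000,
    "ID_MEAS_PHOTOM_PSF": 0x00100000,
    "ID_MEAS_PHOTOM_APER": 0x00200000,
    "ID_MEAS_PHOTOM_KRON": 0x00400000,
    "ID_MEAS_MASKED_PSF": 0x01000000,
    "ID_MEAS_MASKED_APER": 0x02000000,
    "ID_MEAS_MASKED_KRON": 0x04000000,
    "ID_MEAS_OBJECT_HAS_2MASS": 0x10000000,
    "ID_MEAS_OBJECT_HAS_GAIA": 0x20000000,
    "ID_MEAS_OBJECT_HAS_TYCHO": 0x40000000,
}


def decode_flags(value: int, table: Dict[str, int], prefix: str = "") -> Tuple[List[str], int]:
    """Return the names of the bits set in `value` and any bits not listed in `table`.

    If `prefix` is given it is stripped from each name, which turns the DVO
    names into the PSPS names (e.g. ID_MEAS_PHOTOM_PSF -> PHOTOM_PSF).
    """
    names: List[str] = []
    known = 0
    for name, bit in table.items():
        known |= bit
        if value & bit:
            names.append(name[len(prefix):] if prefix and name.startswith(prefix) else name)
    return names, value & ~known  # leftover bits not described by `table`


flags = 0x00008000 | 0x00100000 | 0x20000000
print(decode_flags(flags, MEAS_FLAGS, prefix="ID_MEAS_"))
# (['PHOTOM_UBERCAL', 'PHOTOM_PSF', 'OBJECT_HAS_GAIA'], 0)
```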


Table 2. Relphot Per-filter Info Flag Bit Values

| Bit Name | Bit Value | Description |
|---|---|---|
| ID_SECF_STAR_FEW | 0x00000001 | Used within relphot: skip star (not reported for PV3) |
| ID_SECF_STAR_POOR | 0x00000002 | Used within relphot: skip star (not reported for PV3) |
| ID_SECF_USE_SYNTH | 0x00000004 | Synthetic photometry used in average measurement (not used in PV3) |
| ID_SECF_USE_UBERCAL | 0x00000008 | Ubercal photometry used in average measurement |
| ID_SECF_HAS_PS1 | 0x00000010 | PS1 photometry used in average measurement |
| ID_SECF_HAS_PS1_STACK | 0x00000020 | PS1 stack photometry exists |
| ID_SECF_HAS_TYCHO | 0x00000040 | Tycho photometry used for synth mags (not used in PV3) |
| ID_SECF_FIX_SYNTH | 0x00000080 | Synth mags repaired with zpt map (not used in PV3) |
| ID_SECF_RANK_0 | 0x00000100 | Average magnitude uses rank 0 values |
| ID_SECF_RANK_1 | 0x00000200 | Average magnitude uses rank 1 values |
| ID_SECF_RANK_2 | 0x00000400 | Average magnitude uses rank 2 values |
| ID_SECF_RANK_3 | 0x00000800 | Average magnitude uses rank 3 values |
| ID_SECF_RANK_4 | 0x00001000 | Average magnitude uses rank 4 values |
| ID_SECF_OBJ_EXT_PSPS | 0x00002000 | In PSPS, ID_SECF_OBJ_EXT is saved here so that it fits within 16 bits |
| ID_SECF_STACK_PRIMARY | 0x00004000 | PS1 stack photometry includes a primary skycell |
| ID_SECF_STACK_BESTDET | 0x00008000 | PS1 stack best measurement is a detection (not forced) |
| ID_SECF_STACK_PRIMDET | 0x00010000 | PS1 stack primary measurement is a detection (not forced) |
| ID_SECF_STACK_PRIMARY_MULTIPLE | 0x00020000 | PS1 stack object has multiple primary measurements |
| ID_SECF_HAS_SDSS | 0x00100000 | This photcode has SDSS photometry (not used for PV3) |
| ID_SECF_HAS_HSC | 0x00200000 | This photcode has HSC photometry (not used for PV3) |
| ID_SECF_HAS_CFH | 0x00400000 | This photcode has CFH photometry (not used for PV3) |
| ID_SECF_HAS_DES | 0x00800000 | This photcode has DES photometry (not used for PV3) |
| ID_SECF_OBJ_EXT | 0x01000000 | Extended in this band |

Note. These DVO flags correspond to the PSPS flags ObjectFilterFlags (Paper VI, Table 13), but without the leading ID_.


Table 3. Per-object Flag Bit Values

| Bit Name | Bit Value | Description |
|---|---|---|
| ID_OBJ_FEW | 0x00000001 | used within relphot: skip star (not reported for PV3) |
| ID_OBJ_POOR | 0x00000002 | used within relphot: skip star (not reported for PV3) |
| ID_OBJ_ICRF_QSO | 0x00000004 | object IDed with known ICRF quasar (not used for PV3) |
| ID_OBJ_HERN_QSO_P60 | 0x00000008 | identified as likely QSO (Hernitschek et al. 2016), PQSO ≥ 0.60 |
| ID_OBJ_HERN_QSO_P05 | 0x00000010 | identified as possible QSO (Hernitschek et al. 2016), PQSO ≥ 0.05 |
| ID_OBJ_HERN_RRL_P60 | 0x00000020 | identified as likely RR Lyrae (Hernitschek et al. 2016), PRRLyra ≥ 0.60 |
| ID_OBJ_HERN_RRL_P05 | 0x00000040 | identified as possible RR Lyrae (Hernitschek et al. 2016), PRRLyra ≥ 0.05 |
| ID_OBJ_HERN_VARIABLE | 0x00000080 | identified as a variable by Hernitschek et al. (2016) |
| ID_OBJ_TRANSIENT | 0x00000100 | identified as a nonperiodic (stationary) transient (not used for PV3) |
| ID_OBJ_HAS_SOLSYS_DET | 0x00000200 | identified with a known solar system object (asteroid or other) |
| ID_OBJ_MOST_SOLSYS_DET | 0x00000400 | most detections from a known solar system object |
| ID_OBJ_LARGE_PM | 0x00000800 | star with a large proper motion (not used for PV3) |
| ID_OBJ_RAW_AVE | 0x00001000 | simple weighted-average position was used (no IRLS fitting) |
| ID_OBJ_FIT_AVE | 0x00002000 | average position was fitted |
| ID_OBJ_FIT_PM | 0x00004000 | proper-motion model was fitted |
| ID_OBJ_FIT_PAR | 0x00008000 | full parallax and proper-motion model was fitted |
| ID_OBJ_USE_AVE | 0x00010000 | average position used (no proper motion or parallax) |
| ID_OBJ_USE_PM | 0x00020000 | proper-motion fit used (no parallax) |
| ID_OBJ_USE_PAR | 0x00040000 | full fit with proper motion and parallax |
| ID_OBJ_NO_MEAN_ASTROM | 0x00080000 | mean astrometry could not be measured |
| ID_OBJ_STACK_FOR_MEAN | 0x00100000 | stack position used for mean astrometry |
| ID_OBJ_MEAN_FOR_STACK | 0x00200000 | mean astrometry could not be measured |
| ID_OBJ_BAD_PM | 0x00400000 | failure to measure proper-motion model |
| ID_OBJ_EXT | 0x00800000 | extended in Pan-STARRS data |
| ID_OBJ_EXT_ALT | 0x01000000 | extended in external data (2MASS) |
| ID_OBJ_GOOD | 0x02000000 | good-quality measurement in Pan-STARRS data |
| ID_OBJ_GOOD_ALT | 0x04000000 | good-quality measurement in external data (2MASS) |
| ID_OBJ_GOOD_STACK | 0x08000000 | good-quality object in the stack (>1 good stack) |
| ID_OBJ_BEST_STACK | 0x10000000 | the primary stack measurements are the "best" measurements |
| ID_OBJ_SUSPECT_STACK | 0x20000000 | suspect object in the stack (>1 good or suspect stack, <2 good) |
| ID_OBJ_BAD_STACK | 0x40000000 | poor-quality object in the stack (<1 good stack) |

Note. These DVO flags correspond to the PSPS flags ObjectInfoFlags (Paper VI, Table 11), but without the leading ID_OBJ_.


Table 4. Per-image Flag Bit Values

| Bit Name | Bit Value | Description |
|---|---|---|
| ID_IMAGE_NEW | 0x00000000 | no calibrations yet attempted |
| ID_IMAGE_PHOTOM_NOCAL | 0x00000001 | user-set value used within relphot: ignore |
| ID_IMAGE_PHOTOM_POOR | 0x00000002 | relphot says image is bad (dMcal > limit) |
| ID_IMAGE_PHOTOM_SKIP | 0x00000004 | user-set value: assert that this image has bad photometry |
| ID_IMAGE_PHOTOM_FEW | 0x00000008 | currently too few measurements for photometry |
| ID_IMAGE_ASTROM_NOCAL | 0x00000010 | user-set value used within relastro: ignore |
| ID_IMAGE_ASTROM_POOR | 0x00000020 | relastro says image is bad (dR, dD > limit) |
| ID_IMAGE_ASTROM_FAIL | 0x00000040 | relastro fit diverged, fit not applied |
| ID_IMAGE_ASTROM_SKIP | 0x00000080 | user-set value: assert that this image has bad astrometry |
| ID_IMAGE_ASTROM_FEW | 0x00000100 | currently too few measurements for astrometry |
| ID_IMAGE_PHOTOM_UBERCAL | 0x00000200 | externally supplied photometry zero-point from ubercal analysis |
| ID_IMAGE_ASTROM_GMM | 0x00000400 | image was fitted to positions corrected by the galaxy motion model |

Note. These DVO flags correspond to the PSPS flags ImageFlags (Paper VI, Table 14), but without the leading ID_IMAGE_.
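One detail of Table 4 is easy to trip over: ID_IMAGE_NEW has the value 0, so it cannot be tested with a bitwise AND and must be checked by equality. The sketch below shows this, together with a hypothetical "photometry usable" test that rejects an image if any of the photometry problem bits is set; that selection rule is only an illustration, not a criterion stated for the PV3 analysis. Constant values are copied from Table 4.

```python
# Values copied from Table 4 (DVO Image.flags).
ID_IMAGE_NEW = 0x00000000
ID_IMAGE_PHOTOM_NOCAL = 0x00000001
ID_IMAGE_PHOTOM_POOR = 0x00000002
ID_IMAGE_PHOTOM_SKIP = 0x00000004
ID_IMAGE_PHOTOM_FEW = 0x00000008
ID_IMAGE_PHOTOM_UBERCAL = 0x00000200

# Hypothetical selection: any photometry-related problem bit disqualifies an image.
PHOTOM_PROBLEMS = (ID_IMAGE_PHOTOM_NOCAL | ID_IMAGE_PHOTOM_POOR
                   | ID_IMAGE_PHOTOM_SKIP | ID_IMAGE_PHOTOM_FEW)


def is_new(flags: int) -> bool:
    # ID_IMAGE_NEW is zero, so `flags & ID_IMAGE_NEW` is always 0: compare instead.
    return flags == ID_IMAGE_NEW


def photometry_ok(flags: int) -> bool:
    return (flags & PHOTOM_PROBLEMS) == 0


def is_ubercal(flags: int) -> bool:
    return bool(flags & ID_IMAGE_PHOTOM_UBERCAL)


print(is_new(0), is_ubercal(0x200), photometry_ok(0x202))  # True True False
```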


5. Photometry Calibration

5.1. Ubercal Analysis

The photometric calibration of the DVO database starts with the "ubercal" analysis technique described by Schlafly et al. (2012). This analysis is performed by the group at Harvard, which loads data from the raw detection files into its instance of the Large Survey Database (LSD; Jurić 2011), a system similar to DVO that is used to manage the detections and determine the calibrations.

In this first stage, the goal is to determine an initial, highly reliable set of exposure zero-points free of confounding systematic error sources. To this end, only photometric nights are selected, and all other exposures are ignored. Each night is allowed a single fitted zero-point (corresponding to the sum zpref + Mcal below) and a single fitted value of the airmass extinction coefficient (Kλ) per filter. The zero-points and extinction terms are determined by least-squares minimization, using repeated measurements of the same stars on different nights to tie the nights together. This analysis relies on the chemical and thermodynamic stability of the atmosphere during a photometric night, which keeps the zero-point and extinction slope stable throughout the night. Flat-field corrections are also determined as part of the minimization. In the original (PV1) ubercal analysis, Schlafly et al. (2012) determined flat-field corrections for 2 × 2 subregions of each chip in the camera and for four distinct time periods ("seasons"), ranging from as short as 1 month to nearly 15 months. A later analysis (PV2) used an 8 × 8 grid of flat-field corrections to good effect.
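The structure of this fit can be illustrated with a toy version. The sketch below assumes the model m_inst + a_n − k_n ζ = m_s for a measurement of star s on night n at airmass ζ (one possible sign convention), omits the flat-field terms, and solves the normal equations directly with a small dense solver; it is not the LSD-based implementation used by the Harvard group, and all names are hypothetical. One night's zero-point must be held fixed, because repeated measurements only constrain zero-point differences between nights.

```python
from typing import List, Sequence, Tuple

# (night index, star index, airmass, instrumental magnitude)
Obs = Tuple[int, int, float, float]


def solve_dense(mat: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve mat @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(mat)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("singular normal matrix: the fit is under-constrained")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_nights(obs: Sequence[Obs], n_nights: int, n_stars: int, zp_anchor: float):
    """Least-squares nightly zero-points a_n, extinctions k_n, and star mags m_s.

    Assumed model: m_inst + a_n - k_n * airmass = m_s. a_0 is held at zp_anchor.
    """
    i_k = n_nights - 1      # index of k_0 in the parameter vector
    i_m = 2 * n_nights - 1  # index of m_0
    n_par = i_m + n_stars
    ata = [[0.0] * n_par for _ in range(n_par)]
    atb = [0.0] * n_par
    for night, star, z, m_inst in obs:
        row = {i_k + night: -z, i_m + star: -1.0}  # sparse design-matrix row
        rhs = -m_inst
        if night == 0:
            rhs -= zp_anchor  # the fixed a_0 moves to the right-hand side
        else:
            row[night - 1] = 1.0
        for i, ci in row.items():  # accumulate A^T A and A^T b
            atb[i] += ci * rhs
            for j, cj in row.items():
                ata[i][j] += ci * cj
    x = solve_dense(ata, atb)
    return [zp_anchor] + x[:i_k], x[i_k:i_m], x[i_m:]


# Noiseless toy data: 3 nights, 4 stars, two airmasses per star per night.
a_true, k_true = [24.50, 24.45, 24.52], [0.15, 0.12, 0.18]
m_true = [15.0, 16.2, 14.7, 17.1]
obs = [(n, s, z, m_true[s] - a_true[n] + k_true[n] * z)
       for n in range(3) for s in range(4)
       for z in (1.0 + 0.1 * s, 1.5 + 0.2 * n + 0.05 * s)]
zps, ks, mags = fit_nights(obs, 3, 4, zp_anchor=24.50)
```

Re-running the fit with zp_anchor=24.60 shifts every nightly zero-point and every stellar magnitude by 0.10 mag while leaving the extinction coefficients unchanged; this is the degeneracy that requires the external constraint on the overall zero-point discussed below.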

The ubercal analysis was rerun for PV3 by the Harvard group. For PV3, because of time pressure, we chose to use only a 2 × 2 grid per chip in the ubercal fit and to leave higher-frequency structure to the later analysis. A fifth flat-field season, consisting of nearly the last 2 yr of data, was also included for PV3. In retrospect, as we show below, the data from the latter part of the survey would probably benefit from additional flat-field seasons.

By excluding nonphotometric data and fitting only two parameters for each night, the ubercal solution is robust and rigid. It is not subject to unexpected drifts, nor is it sensitive to the vagaries of the data set. The ubercal analysis is also especially aided by the inclusion of multiple Medium-Deep field observations every night, which help to tie down overall variations of the system throughput and act as internal standard star fields. Schlafly et al. (2012) show that the resulting photometric system has zero-points consistent with those determined using SDSS as an external reference, with standard deviations of (8.0, 7.0, 9.0, 10.7, 12.4) mmag in (g, r, i, z, y). Internal comparisons show the zero-points of individual exposures to be consistent with the ubercal solution with a standard deviation of 5 mmag. The former is an upper limit on the overall system zero-point stability, as it includes errors from the SDSS zero-points, while the latter is likely a lower limit. As we discuss below, this zero-point consistency is confirmed by our additional external comparison.

The overall zero-point for each filter is not naturally determined by the ubercal analysis; an external constraint on the overall photometric system is required for each filter. Schlafly et al. (2012) used photometry of the MD09 Medium-Deep field to match the photometry measured by Tonry et al. (2012) on the reference photometric night of MJD 55744 (UT 02 July 2011). Scolnic et al. (2014, 2015) have reexamined the photometry of Calspec standards (Bohlin 1996) as observed by PS1. Scolnic et al. (2014) reject two of the seven stars used by Tonry et al. (2012) and add photometry of five additional stars. Scolnic et al. (2015) further reject measurements of Calspec standards obtained close to the center of the camera field of view, where the PSF size and shape change very rapidly. This analysis modifies the overall system zero-points by 20–35 mmag compared with the system determined by Schlafly et al. (2012). We note that this correction to the overall system zero-point is large compared with the relative zero-point consistency noted by Schlafly et al. (2012) because the absolute zero-points are not independently constrained by the ubercal analysis.

5.2. Apply Zero-points

The ubercal analysis above results in a table of zero-points for all exposures considered to be photometric, along with a set of low-resolution flat-field corrections. It is now necessary to use this information to determine zero-points for the remaining exposures and to improve the resolution of the flat-field correction. This analysis is done within the IPP DVO database system.

The ubercal zero-points and the flat-field correction data are loaded into the PV3 DVO database using the program setphot. This program converts the reported zero-point and flat-field values to the DVO internal representation in which the zero-point of each image is split into three main components:

where zpref and Kλ are static values for each filter representing, respectively, the nominal reference zero-point and the slope of the trend with respect to airmass (ζ). These static values are listed in Table 5. When setphot was run, these static zero-points were adjusted by the Calspec offsets (the differences between the raw and Calspec zero-point columns of Table 5), based on the analysis of Calspec standards by Scolnic et al. (2015). These offsets bring the photometric system defined by the ubercal analysis into alignment with Scolnic et al. (2015). The value Mcal is the offset needed by each exposure to match the ubercal value or to bring the non-ubercal exposures into agreement with the rest of the exposures, as discussed below. The flat-field information is encoded in a table of flat-field offsets as a function of time, filter, and camera position. Each image that is part of the ubercal subset is marked with a bit in the field Image.flags: ID_IMAGE_PHOTOM_UBERCAL = 0x00000200.

Table 5. PS1/GPC1 Zero-points and Coefficients

| Filter | Zero-point (Raw) | Zero-point (Calspec) | Airmass Slope |
|---|---|---|---|
| g | 24.563 | 24.583 | 0.147 |
| r | 24.750 | 24.783 | 0.085 |
| i | 24.611 | 24.635 | 0.044 |
| z | 24.240 | 24.278 | 0.033 |
| y | 23.320 | 23.331 | 0.073 |


When setphot applies the ubercal information to the image tables, it also updates the individual measurements associated with those images. In the DVO database schema, the normalized instrumental magnitude, minst = −2.5 log10 (DN/sec) is stored for each measurement, with an arbitrary (but fixed) constant offset of 25 to place the modified instrumental magnitudes into approximately the correct range. Associated with each measurement are two correction magnitudes: Mcal and Mflat, along with the airmass for the measurement, calculated using the altitude of the individual detection as determined from the R.A., decl., the observatory latitude, and the sidereal time. For a camera with the field of view of the PS1 GPC1, the airmass may vary significantly within the field of view, especially at low elevations. In the worst cases, at the celestial pole, the airmass within a single exposure may span a range of 2.56–2.93. The complete calibrated ("relative") magnitude is determined from the stored database values as
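The per-detection airmass can be sketched as follows. The altitude follows from the standard spherical-astronomy relation sin(alt) = sin(δ) sin(φ) + cos(δ) cos(φ) cos(H), with hour angle H = LST − R.A. and site latitude φ; the conversion to airmass uses the plane-parallel approximation sec(z) = 1/sin(alt), which is an assumption here because the airmass formula used by DVO is not stated (refined formulas differ from it at low altitude). The latitude is an approximate value for Haleakala, and the function names are hypothetical.

```python
import math

HALEAKALA_LAT_DEG = 20.7  # approximate PS1 site latitude (assumed value)


def altitude_deg(ra_deg: float, dec_deg: float, lat_deg: float, lst_deg: float) -> float:
    """Altitude of a source from its R.A./decl., the site latitude, and the local sidereal time."""
    ha = math.radians(lst_deg - ra_deg)  # hour angle
    dec, lat = math.radians(dec_deg), math.radians(lat_deg)
    sin_alt = math.sin(dec) * math.sin(lat) + math.cos(dec) * math.cos(lat) * math.cos(ha)
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_alt))))  # clamp rounding error


def airmass(alt_deg: float) -> float:
    """Plane-parallel airmass sec(z) = 1 / sin(alt) (an assumed approximation)."""
    if alt_deg <= 0.0:
        raise ValueError("source is below the horizon")
    return 1.0 / math.sin(math.radians(alt_deg))


# At the celestial pole the altitude equals the site latitude at any sidereal time.
pole = airmass(altitude_deg(0.0, 90.0, HALEAKALA_LAT_DEG, 123.0))
zenith = airmass(altitude_deg(50.0, HALEAKALA_LAT_DEG, HALEAKALA_LAT_DEG, 50.0))
```

Because each detection is evaluated at its own R.A. and decl., detections near opposite edges of a low-elevation exposure receive different airmasses, which is the within-exposure spread described above.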

The calibration offsets, Mcal and Mflat, represent the per-exposure zero-point correction and the slowly changing flat-field correction, respectively. These two values are kept separate so that the flat-field corrections may be determined and applied independently of the time-resolved zero-point variations. Note that the above corrections are applied to each type of measurement stored in the database: PSF, aperture, and Kron. The calibration math is the same regardless of the kind of magnitude being measured (see, however, Section 5.3.2 for the difference in the stack calibration). Also note that, for the moment, this discussion applies only to the chip measurements; below we discuss the implications for the stack and warp measurements.

When the ubercal zero-points and flat-field data are loaded, setphot updates the Mcal values for all measurements that have been derived from the ubercal images. These measurements are also marked in the field Measure.dbFlags with the bit ID_MEAS_PHOTOM_UBERCAL = 0x00008000. At this stage, setphot also updates the values of Mflat for all GPC1 measurements in the appropriate filters.

5.3. Relphot Analysis

Relative photometry is used to determine the zero-points of the exposures that were not included in the ubercal analysis. The relative photometry analysis has been described in the past by Magnier et al. (2013). We review that analysis here, along with specific updates for PV3.

As described above, the instrumental magnitude and the calibrated magnitude are related by additive magnitude offsets, which account for effects such as the instrumental variations and atmospheric attenuation (Equation 12). From the collection of measurements, we can generate an average magnitude for a single star (or other object):

We find that the color difference of the different chips can be ignored and set the color-trend slope to 0.0. Note that we only use a single mean airmass extinction term for all exposures—the difference between the mean and the specific value for a given night is taken up as an additional element of the atmospheric attenuation.

We write a global χ² equation, which we attempt to minimize by finding the best mean magnitudes for all objects and the best Mcal offset for each exposure:

If everything were fitted at once and allowed to float, this system of equations would have Nexposures + Nstars ∼ 2 × 10⁵ + N × 10⁹ unknowns. We solve the system of equations by iteration, first solving for the best set of mean magnitudes under the assumption of no clouds, then solving for the cloud extinction implied by the differences from these mean magnitudes. Even with 1–2 magnitudes of extinction, the offsets converge to the millimagnitude level within eight iterations.

Only high-quality measurements are used in the relative photometry analysis of the exposure zero-points. We use only the brighter objects, limiting the density to a maximum of 4000 objects per square degree (lower in areas where we have more observations). When limiting the density, we prefer objects that are brighter (but not saturated) and those with the most measurements (to ensure better coverage of the available images).

There are a few classes of outliers that we need to be careful to detect and avoid. First, any single measurement may be deviant for a number of reasons (e.g., it lands in a bad region of the detector, or it is contaminated by a diffraction spike or other optical artifact). We attempt to exclude these poor measurements in advance by rejecting measurements that the photometry analysis has flagged as suspicious. We reject detections that are excessively masked; these include detections that are too close to other bright objects, diffraction spikes, ghost images, or the detector edges. However, these rejections do not catch all cases of bad measurements.

After the initial iterations, we also perform outlier rejections based on the consistency of the measurements. For each star, we use a two-pass outlier clipping process. We first define a robust median and sigma from the inner 50% of the measurements. Measurements that are more than 5σ from this median value are rejected, and the mean and standard deviation (weighted by the inverse error) are recalculated. We then reject detections that are more than 3σ from the recalculated mean.
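A minimal sketch of this two-pass clipping for a single star follows. Two details are assumptions not fixed by the text: the robust sigma is derived from the interquartile range (the span of the inner 50%) using the Gaussian conversion IQR ≈ 1.349σ, and "weighted by the inverse error" is read literally as weights of 1/σ (inverse-variance weights would be the common alternative). The function name and the minimum-sample policy are hypothetical.

```python
import math
import statistics
from typing import List, Sequence

IQR_TO_SIGMA = 1.349  # interquartile range of a unit Gaussian (assumed conversion)


def clip_star_measurements(mags: Sequence[float], errs: Sequence[float],
                           first_nsigma: float = 5.0,
                           second_nsigma: float = 3.0) -> List[bool]:
    """Two-pass clipping for one star; returns True for measurements that are kept."""
    if len(mags) < 4:
        return [True] * len(mags)  # too few points for quartiles (assumed policy)
    # Pass 1: robust center and width from the inner 50% of the measurements.
    q1, median, q3 = statistics.quantiles(mags, n=4, method="inclusive")
    robust_sigma = (q3 - q1) / IQR_TO_SIGMA
    keep = [abs(m - median) <= first_nsigma * robust_sigma for m in mags]
    # Pass 2: mean and scatter of the survivors, weighted by the inverse error.
    w = [1.0 / e if k else 0.0 for e, k in zip(errs, keep)]
    wsum = sum(w)
    if wsum == 0.0:
        return keep
    mean = sum(wi * m for wi, m in zip(w, mags)) / wsum
    sigma = math.sqrt(sum(wi * (m - mean) ** 2 for wi, m in zip(w, mags)) / wsum)
    if sigma == 0.0:
        return keep
    return [k and abs(m - mean) <= second_nsigma * sigma for k, m in zip(keep, mags)]


mags = [15.00, 15.01, 14.99, 15.02, 14.98, 15.00, 16.50]
print(clip_star_measurements(mags, [0.01] * len(mags)))
# [True, True, True, True, True, True, False]
```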

Suspicious (e.g., variable or otherwise poorly measured) stars are also excluded from the analysis. We exclude stars with reduced χ² values greater than 20.0 or twice the median, whichever is larger. We also exclude stars whose standard deviation (of the measurements used for the mean) is greater than 0.005 mag or twice the median standard deviation, whichever is larger.

Similarly, we exclude images with more than 2 magnitudes of extinction, or for which the standard deviation of the zero-points is more than 0.075 mag or twice the median value, whichever is greater. These cuts are somewhat conservative, to limit us to only good measurements. The images and stars rejected above are not used to calculate the system of zero-points and mean magnitudes. These cuts are updated several times as the iterations proceed. After the iterations have completed, the images that have been rejected are calibrated based on their overlaps with other images.
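The "fixed floor or twice the median, whichever is larger" rule used in these star and image cuts can be written compactly. In the sketch below the thresholds are those quoted in the text; the function names are hypothetical, the medians are supplied by the caller (recomputed as the cuts are updated during the iterations), and extinction is passed as a positive number of magnitudes so that no sign convention for Mcal is assumed.

```python
def above_floor_or_median(value: float, floor: float, median: float,
                          factor: float = 2.0) -> bool:
    """True if value exceeds the larger of a fixed floor and factor x the median."""
    return value > max(floor, factor * median)


def reject_star(chi2_red: float, std_mag: float,
                median_chi2: float, median_std: float) -> bool:
    # Reduced chi^2 above max(20.0, 2 x median), or scatter above max(0.005 mag, 2 x median).
    return (above_floor_or_median(chi2_red, 20.0, median_chi2)
            or above_floor_or_median(std_mag, 0.005, median_std))


def reject_image(extinction_mag: float, zp_std_mag: float, median_zp_std: float) -> bool:
    # More than 2 mag of extinction, or zero-point scatter above max(0.075 mag, 2 x median).
    return extinction_mag > 2.0 or above_floor_or_median(zp_std_mag, 0.075, median_zp_std)


print(reject_star(30.0, 0.004, 16.0, 0.001))  # False: the threshold is 2 x 16 = 32, not 20
```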

We note that the goal of these rejections is to avoid biasing the zero-points by including clearly inconsistent or poor-quality measurements. The criteria have been chosen by inspection of the data set to avoid rejecting too many valid measurements, but the specific numbers are admittedly ad hoc. However, as long as the exclusions do not bias the results, the exact choices are not critical. The only exclusion we make that is not symmetric with respect to the average values is the choice to reject images with substantial extinction. However, we believe this choice is justified because we know real images with clouds will often have significant extinction variations across the field and will thus be poorly represented by a single-exposure zero-point.

We overweight the ubercal measurements in order to tie the relative photometry system to the ubercal zero-points. Ubercal images and measurements from those images are not allowed to float in the relative photometry analysis. Detections from the ubercal images are assigned weights of 10× their default (inverse-variance) weight. The factor of 10, while somewhat arbitrary, is chosen to ensure that the ubercal data dominate the result unless they represent much less than 10% of the measurements. Because most areas of the sky have at least a few epochs of ubercal data per filter, the non-ubercal data drive the results only in rare regions. The calculation of the formal error on the mean magnitudes propagates this additional weight, so that the errors on the ubercal observations dominate where they are present:
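The iteration described in this section, including the ubercal overweighting, can be sketched in a few lines. This toy version assumes that each measurement magnitude already includes zpref, the airmass term, and Mflat, so the model is simply m + Mcal = M_star; it omits outlier clipping, star and image rejection, and the parallel region hosts. All names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (exposure id, star id, magnitude before M_cal, measurement error)
Measurement = Tuple[str, int, float, float]

UBERCAL_WEIGHT = 10.0  # extra weight for ubercal detections, as in the text


def _star_means(meas: List[Measurement], mcal: Dict[str, float],
                fixed: Dict[str, float]) -> Dict[int, float]:
    """Weighted mean magnitude of each star given the current exposure offsets."""
    num: Dict[int, float] = defaultdict(float)
    den: Dict[int, float] = defaultdict(float)
    for exp, star, mag, err in meas:
        w = 1.0 / err**2
        if exp in fixed:
            w *= UBERCAL_WEIGHT
        num[star] += w * (mag + mcal[exp])
        den[star] += w
    return {s: num[s] / den[s] for s in num}


def relphot(meas: List[Measurement], ubercal_mcal: Dict[str, float], n_iter: int = 8):
    """Alternate between mean star magnitudes and per-exposure M_cal offsets.

    Exposures listed in `ubercal_mcal` keep their offsets; every other exposure
    starts at M_cal = 0 ("no clouds") and is refit on each iteration.
    """
    mcal: Dict[str, float] = defaultdict(float, ubercal_mcal)
    for _ in range(n_iter):
        means = _star_means(meas, mcal, ubercal_mcal)
        num: Dict[str, float] = defaultdict(float)
        den: Dict[str, float] = defaultdict(float)
        for exp, star, mag, err in meas:
            if exp in ubercal_mcal:
                continue  # ubercal exposures do not float
            w = 1.0 / err**2
            num[exp] += w * (means[star] - mag)
            den[exp] += w
        for exp in num:
            mcal[exp] = num[exp] / den[exp]
    return dict(mcal), _star_means(meas, mcal, ubercal_mcal)


# One ubercal exposure "U" and two cloudy exposures (true M_cal = 0.5 and 1.2 mag).
meas = [("U", 0, 15.0, 0.02), ("U", 1, 16.0, 0.02), ("U", 2, 17.0, 0.02),
        ("C1", 0, 14.5, 0.02), ("C1", 1, 15.5, 0.02),
        ("C2", 1, 14.8, 0.02), ("C2", 2, 15.8, 0.02)]
offsets, means = relphot(meas, {"U": 0.0}, n_iter=20)
```

In this toy setup the large ubercal weight makes each star's mean nearly insensitive to the cloudy exposures, so the floating offsets converge quickly toward their input values.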

The calculation of the relative photometry zero-points is performed for the entire 3π data set in a single, highly parallelized analysis. The measurement and object data in the DVO database are distributed across a large number of computers in the IPP cluster: for PV3, 100 parallel hosts are used. These machines by design control data from a large number of unconnected small patches on the sky, with the goal of speeding queries for arbitrary regions of the sky. As a result, this parallelization is entirely inappropriate as the basis of the relative photometry analysis. For the relative photometry calculation (and later for relative astrometry calculation), the sky is divided into a number of large, contiguous regions, each bounded by lines of constant R.A. and decl., 73 regions in the case of the PV3 analysis. A separate computer, called a "region host," is responsible for each of these regions: that computer is responsible for calculating the mean magnitudes of the objects that land within its region and for determining the exposure zero-points for exposures for which the center of the exposure lands in the region of responsibility.
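The ownership rule can be illustrated with a hypothetical layout. The actual boundaries of the 73 PV3 regions are not given here, so the decl. band edges and the cos(decl.) scaling of R.A. slices in the sketch below are assumptions chosen only to make regions of roughly similar area. A position (an exposure center or an object) maps to exactly one region index, which identifies the region host responsible for it.

```python
import bisect
import math
from typing import List, Sequence


def band_slices(dec_edges: Sequence[float], base_slices: int) -> List[int]:
    """Number of R.A. slices per decl. band, scaled by cos(decl.) to even out area."""
    mids = [(lo + hi) / 2.0 for lo, hi in zip(dec_edges[:-1], dec_edges[1:])]
    return [max(1, round(base_slices * math.cos(math.radians(m)))) for m in mids]


def region_of(ra_deg: float, dec_deg: float,
              dec_edges: Sequence[float], slices: Sequence[int]) -> int:
    """Index of the region that owns a position (exposure center or object)."""
    if not dec_edges[0] <= dec_deg <= dec_edges[-1]:
        raise ValueError("position outside the surveyed decl. range")
    # The top edge (decl. = 90) is folded into the last band.
    band = min(bisect.bisect_right(dec_edges, dec_deg) - 1, len(slices) - 1)
    n_ra = slices[band]
    ra_slice = min(int((ra_deg % 360.0) / (360.0 / n_ra)), n_ra - 1)
    return sum(slices[:band]) + ra_slice


DEC_EDGES = [-30.0, 0.0, 30.0, 60.0, 90.0]  # hypothetical band edges
SLICES = band_slices(DEC_EDGES, 24)         # [23, 23, 17, 6] for this layout
host = region_of(359.999, 89.9, DEC_EDGES, SLICES)
```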

The iterations described above (calculate mean magnitudes, calculate zero-points, calculate new measurements) are performed on each of the 73 region hosts in parallel. However, between certain iteration steps, the region hosts must share some information. After mean object magnitudes are calculated, the region hosts must share the object magnitudes for the objects that are observed by exposures controlled by neighboring region hosts. After image calibrations have been determined by each region host, the image calibrations must be shared with the neighboring region hosts so measurement values associated with objects owned by a neighboring region host may be updated.

The complete workflow of the all-sky relative photometry analysis starts with an instance of the program running on a master computer. This machine loads the image database table and assigns the images to the 73 region hosts. A process is then launched on each of the region hosts, which is responsible for managing the image calibration analysis on that host. These processes in turn request the photometry information (objects and measurements) from the 100 parallel DVO partition machines. In practice, the processes on the region hosts are launched in series by the master process to avoid overloading the DVO partition machines with requests for photometry data from all region hosts at once. Once all of the photometry has been loaded, the region hosts perform their iterations, sharing the data that they need to share with their neighbors and blocking while they wait for the data they need to receive from their neighbors. The management of this stage is performed by communication between the region hosts. At the end of the iterations, the region hosts write out their final image calibrations. The master machine then loads the full set of image calibrations and applies these calibrations back to all measurements in the database, updating the mean photometry as part of this process. The calculations for this last step are performed in parallel on the DVO partition machines.

With the above software, we are able to perform the entire relphot analysis for the full 3π region at once, avoiding any possible edge effects. The region host machines have internal memory ranging from 96 to 192 GB. Regions are drawn, and the maximum allowed density was chosen, to match the memory usage to the memory available on each machine. A total of 9.8 TB of RAM was available for the analysis, allowing for up to 6000 objects per square degree in the analysis.

5.3.1. Photometric Flat Field

For PV3, the relphot analysis was performed twice. The first pass used only the flat-field corrections determined by the ubercal analysis, with a resolution of 2 × 2 flat-field values for each GPC1 chip (cells of ≈2400 pixels on a side) and five separate flat-field "seasons." However, we knew from prior studies that there were significant flat-field structures on smaller scales. We used the data in DVO after the initial relphot calibration to measure the flat-field residual with much finer resolution: 124 × 124 flat-field values for each GPC1 chip (40 × 40 pixels per point). For this analysis, we did not use the entire database, but instead extracted relatively bright but unsaturated measurements (instrumental magnitudes between −10.5 and −14.5) for stars with at least eight measurements, including three used to measure the average photometry in the corresponding filter. These measurements were extracted from a collection of 10 sky regions, in both low and high stellar density areas, covering a total of ∼5800 square degrees of sky. Unlike the lower-resolution photometric flat fields determined in the ubercal analysis, the photometric flat fields calculated in this analysis are static in time; they supplement the flats from the ubercal analysis. A total of 1.95 billion measurements were extracted for this analysis.
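A minimal sketch of how the two flat-field layers might be looked up for a detection at chip pixel (x, y) follows. The 2 × 2 seasonal ubercal grid and the static 124 × 124 grid of 40-pixel cells come from the text; the chip dimensions are assumed approximate GPC1 values, the table layouts and function names are hypothetical, and summing the two layers into a single Mflat is an assumption based on both being magnitude offsets.

```python
from typing import Sequence, Tuple

FINE_CELLS = 124   # high-resolution flat: 124 x 124 values per chip
FINE_PIX = 40      # 40 x 40 pixels per high-resolution value
COARSE_CELLS = 2   # ubercal flat: 2 x 2 values per chip, per season
CHIP_NX, CHIP_NY = 4846, 4868  # assumed GPC1 chip size in pixels


def fine_cell(x: float, y: float) -> Tuple[int, int]:
    """High-resolution flat-field cell (ix, iy) containing chip pixel (x, y)."""
    return (min(int(x) // FINE_PIX, FINE_CELLS - 1),
            min(int(y) // FINE_PIX, FINE_CELLS - 1))


def coarse_cell(x: float, y: float) -> Tuple[int, int]:
    """Ubercal 2 x 2 cell (ix, iy) containing chip pixel (x, y)."""
    return (min(int(x * COARSE_CELLS / CHIP_NX), COARSE_CELLS - 1),
            min(int(y * COARSE_CELLS / CHIP_NY), COARSE_CELLS - 1))


def m_flat(x: float, y: float, season: int,
           coarse: Sequence[Sequence[Sequence[float]]],
           fine: Sequence[Sequence[float]]) -> float:
    """Seasonal ubercal correction plus static fine correction, in magnitudes.

    coarse[season][iy][ix] and fine[iy][ix] are hypothetical lookup tables.
    """
    cx, cy = coarse_cell(x, y)
    fx, fy = fine_cell(x, y)
    return coarse[season][cy][cx] + fine[fy][fx]


coarse = [[[0.0, 0.01], [0.02, 0.03]] for _ in range(5)]  # five seasons
fine = [[0.001] * FINE_CELLS for _ in range(FINE_CELLS)]
correction = m_flat(2500.0, 100.0, 4, coarse, fine)       # 0.01 + 0.001
```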

We then used setphot to apply this new flat-field correction, as well as the ubercal flat-field corrections, to the data in the database. At this point, we reran the entire relphot analysis to determine zero-points and to set the average magnitudes.

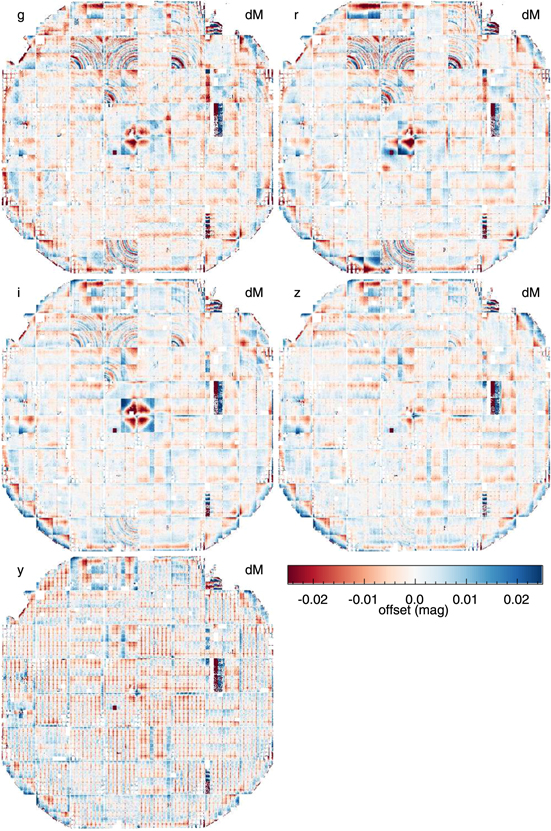

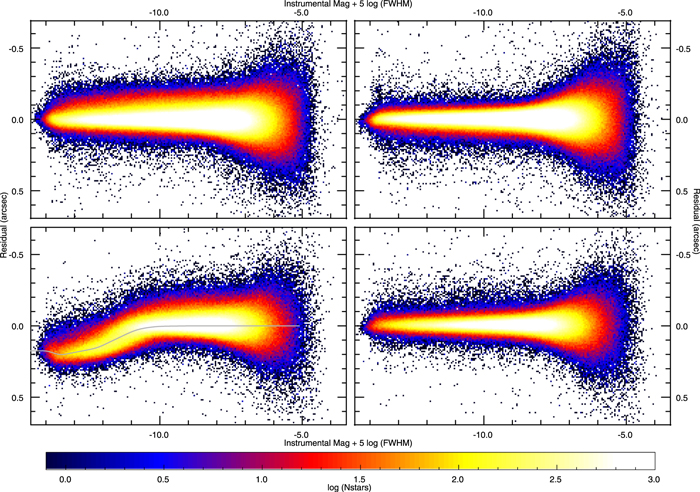

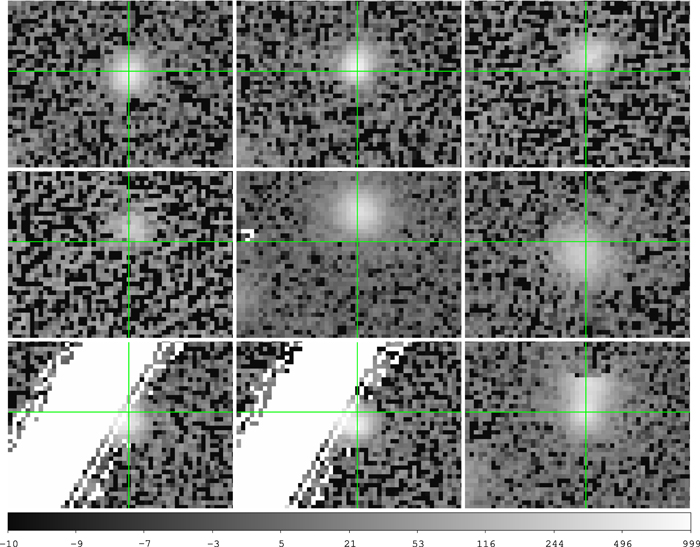

Figure 2 shows the high-resolution photometric flat-field corrections applied to the measurements in the DVO database. These flat fields make low-level corrections of up to ≈0.03 magnitudes. Several features of interest are apparent in these images.

Figure 2. High-resolution flat-field correction images for the five filters grizy. These images are shown in standard camera orientation with OTA00 in the lower-left corner and OTA07 in the upper-right corner. Fine "tree-ring" structures are visible in several chips, especially in the bluer bands. The effect of the central "tent" on the photometry, presumably due to the rapidly varying PSF in this region, may also be seen.

First, at the center of the camera is an important structure caused by the telescope optics, which we call the "tent." In this portion of the focal plane, the image quality degrades very quickly. The photometry is systematically biased because the PSF model cannot follow the real changes in the PSF shape on these small scales. As is evident in the image, the effect is such that the flux measured using a PSF model is systematically low, as expected if the PSF model is too small.

The square outline surrounding the "tent" is due to the 2 × 2 sampling per chip used for the ubercal flat-field corrections. The imprint of the ubercal flat field is visible throughout this high-resolution flat field: in regions where the underlying flat-field structure follows a smooth gradient across a chip, the ubercal flat field partly corrects the structure, leaving behind a sawtooth residual. The high-resolution flat field corrects the residual structures well.
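A one-dimensional toy model shows where the sawtooth comes from. It assumes, purely for illustration, that each coarse flat-field value equals the mean of a linear gradient over its half of the chip; the real ubercal values are fitted rather than computed this way, and the 0.02 mag slope is an arbitrary choice.

```python
def sawtooth_residual(n_pix=4800, slope_mag=0.02, n_cells=2):
    """Residual left when a linear flat-field gradient is corrected by
    n_cells piecewise-constant values along one chip axis (toy model)."""
    cell = n_pix // n_cells
    # True flat-field structure: a smooth ramp of slope_mag across the chip.
    true_flat = [slope_mag * x / n_pix for x in range(n_pix)]
    residual = []
    for c in range(n_cells):
        block = true_flat[c * cell:(c + 1) * cell]
        coarse_value = sum(block) / len(block)  # assumed coarse correction: the cell mean
        residual.extend(v - coarse_value for v in block)
    return residual


r = sawtooth_residual()
# Within each cell the residual ramps up by the full in-cell gradient,
# then drops back at the cell boundary (pixel 2400): a sawtooth.
print(round(r[2399], 4), round(r[2400], 4))
```

A finer grid (more cells per axis) shrinks the sawtooth amplitude in proportion, which is why the 40-pixel sampling removes it.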

Especially notable in the bluer filters is a pattern of quarter circles centered on the corners of the chips. These patterns resemble the "tree rings" reported by the Dark Energy Survey team (Plazas et al. 2014), which they attributed to the lateral migration of electrons in the detectors, driven by electric fields arising from dopant variations. Unlike the tree-ring features discussed by those authors, the strong features observed in the GPC1 photometry are caused not by lateral electric fields but by variations in the vertical electron diffusion rate, due to electric-field variations perpendicular to the plane of the detector. This effect is discussed in detail by Magnier et al. (2018). The photometric features arise from low-level changes in the PSF size, which we attribute to the variable charge diffusion.

Other features include some poorly responding cells (e.g., in OTA14) and effects at the edges of chips, possibly where the PSF model fails to follow the changes in the PSF.

5.3.2. Stack and Warp Photometric Calibration

For stacks and warps, the image calibrations were determined after the relative photometry had been performed on the individual chips. Each stack and each warp was tied via relative photometry to the average magnitudes from the chip photometry, as described below. No flat-field corrections were applied in this case. For the stacks, such a correction is not possible after the stack has been generated, because multiple chip coordinates contribute to each stack pixel. For the warps, it is in principle possible to map back to the corresponding chip, but that information was not available in the DVO database, so the appropriate flat-field correction for a given warp could not be determined at this time. This missing correction is one of several effects that degrade the warp photometry relative to the chip photometry at the bright end.

For the stack calibration, we calculate two separate zero-points: one for photometry tied to the PSF model and a second for the aperture-like measurements (total aperture magnitudes, Kron magnitudes, and circular fixed-radius aperture magnitudes). This split is needed because the stack PSF photometry is of limited quality, owing to the highly variable PSF in the stacks, whereas aperture magnitudes are not significantly affected by the PSF variations. We therefore tie the stack PSF magnitudes to the average chip PSF magnitudes, while the aperture-like magnitudes are tied by equating the stack Kron magnitudes to the average chip Kron magnitudes.
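The two-zero-point scheme can be sketched as below. This is a hypothetical illustration, not the relphot implementation: the dictionary keys are invented names, and taking the median of the per-star offsets is an assumed estimator (the text does not state which statistic is used).

```python
from statistics import median


def stack_zero_points(matches):
    """Return (zp_psf, zp_aper) for one stack from stars matched to DVO averages.

    Each match is a dict with hypothetical keys:
      chip_psf, chip_kron: average calibrated chip magnitudes
      stack_psf_inst, stack_kron_inst: instrumental stack magnitudes
    """
    zp_psf = median([m["chip_psf"] - m["stack_psf_inst"] for m in matches])
    zp_aper = median([m["chip_kron"] - m["stack_kron_inst"] for m in matches])
    return zp_psf, zp_aper


def calibrate_stack_mags(inst_mags, zp_psf, zp_aper):
    """Apply the PSF zero-point to 'psf' and the Kron-derived zero-point to
    every other (aperture-like) magnitude, e.g. 'kron' or fixed apertures."""
    return {name: m + (zp_psf if name == "psf" else zp_aper)
            for name, m in inst_mags.items()}
```

The design point mirrors the text: only the Kron magnitudes are used to derive the aperture zero-point, but that single offset is then shared by all aperture-like magnitudes of the stack.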

5.4. Object Photometry

Once the image photometric calibrations (zero-points and flat-field corrections) have been determined and applied to the measurements from each image, we can calculate the best average photometry for each object. For the chip photometry, we calculate average magnitudes; for the forced-warp photometry, we average the fluxes and report both the average fluxes and the equivalent average magnitudes. Because a chip detection requires a signal-to-noise ratio of at least 5, the bias introduced by averaging magnitudes is small. Forced-warp measurements, by contrast, can have low signal-to-noise ratios and even negative fluxes, for which a magnitude is undefined, so their fluxes must be averaged.
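The reason for averaging fluxes can be shown with a few lines of Python. This sketch uses unweighted means and an arbitrary zero-point of 0 for clarity; the actual averages are weighted and computed with the IRLS procedure described below.

```python
import math


def average_flux_and_magnitude(fluxes, zero_point=0.0):
    """Unweighted mean flux and its equivalent magnitude (None if mean <= 0)."""
    mean_flux = sum(fluxes) / len(fluxes)
    mag = zero_point - 2.5 * math.log10(mean_flux) if mean_flux > 0 else None
    return mean_flux, mag


def average_of_magnitudes(fluxes, zero_point=0.0):
    """Mean of per-measurement magnitudes; raises ValueError for any flux <= 0."""
    return sum(zero_point - 2.5 * math.log10(f) for f in fluxes) / len(fluxes)


low_sn = [2.0, -0.5, 1.5, 1.0]            # forced-warp-like fluxes, one negative
print(average_flux_and_magnitude(low_sn))  # (1.0, 0.0)
```

Calling `average_of_magnitudes(low_sn)` fails outright, and even for all-positive noisy fluxes the mean of the magnitudes is biased faint relative to the magnitude of the mean flux, because −2.5 log₁₀ is convex; at high signal-to-noise ratio this bias becomes negligible.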

The first challenge is to select which measurements to use in the calculation of the average photometry. For the 3π Survey data, a single object may have anywhere from zero to roughly 20 measurements in a given filter. Not all measurements are of equal value, but we need a process that assigns an average photometry value in all cases (and a way for the user to recognize average values that should be treated with care). As discussed in more detail below, we have defined a triage process to select the "best" set of measurements available in each filter for each object. Once the set of measurements to be used in the analysis is determined, we use the iteratively reweighted least-squares (IRLS) technique (see, e.g., Green 1984) to determine the average photometry given the possible presence of non-Gaussian outliers even within the best subset of measurements.

5.4.1. Selection of Measurements

To choose the measurements that will be used in the analysis, we give each measurement a rank value based on a variety of tests of the quality of the measurement, with lower values being better quality. In the description below, the ranking values are defined as follows:

- rank 0: perfect measurement (no quality concerns)

- rank 1: PSF "perfect pixel" quality factor (PSF_QF_PERFECT) < 0.85. PSF_QF_PERFECT measures the PSF-weighted fraction of pixels that are not masked (see Paper IV).

- rank 2: photometry analysis flag field (photFlags) has one of the "poor-quality" bits raised. These bits are listed below; OR-ed together, they have the hexadecimal value 0xe0440130.

  - (a) PM_SOURCE_MODE_POOR = 0x00000010: fit succeeded, but with low signal-to-noise ratio or high chi-square

  - (b) PM_SOURCE_MODE_PAIR = 0x00000020: source fitted with a double PSF

  - (c) PM_SOURCE_MODE_BLEND = 0x00000100: source is a blend with other sources

  - (d) PM_SOURCE_MODE_BELOW_MOMENTS_SN = 0x00040000: moments not measured due to low signal-to-noise ratio

  - (e) PM_SOURCE_MODE_BLEND_FIT = 0x00400000: source was fitted as a blended object

  - (f) PM_SOURCE_MODE_ON_SPIKE = 0x20000000: peak lands on diffraction spike

  - (g) PM_SOURCE_MODE_ON_GHOST = 0x40000000: peak lands on ghost or glint

  - (h) PM_SOURCE_MODE_OFF_CHIP = 0x80000000: peak lands off edge of chip

- rank 3: poor measurement as defined by relphot. This may result from restricting measurements to an allowed region of the detector or from outlier clipping. In the 3π PV3 calibration, these tests were not applied.

- rank 4: PSF quality factor (PSF_QF) < 0.85. PSF_QF measures the PSF-weighted fraction of pixels that are not masked as "bad," but may be "suspect." Bad pixels are blank, highly nonlinear, or nonresponsive; suspect pixels include those on ghosts, diffraction spikes, bright-star bleeds, and the mildly saturated cores of bright stars. Suspect pixels may have some use in measuring a flux, but with caution (see Papers II and III).

- rank 5: photometric calibration of the GPC1 exposure is determined by relphot to be poor. This occurs if too few stars are available for the calibration (fewer than 10 selected stars, or selected stars accounting for <5% of all stars in the exposure). An exposure may also be identified as poor if its zero-point deviates excessively (>2 mag from the nominal value) or if the standard deviation of its calibration residuals is more than twice the median standard deviation for all exposures.

- rank 6: photometry analysis flag field (photFlags) has one of the "bad-quality" bits raised. These bits are listed below; OR-ed together, they have the hexadecimal value 0x1003bc88.

  - (a) PM_SOURCE_MODE_FAIL = 0x00000008: nonlinear fit failed (nonconverge, off-edge, run to zero)

  - (b) PM_SOURCE_MODE_SATSTAR = 0x00000080: source model peak is above saturation

  - (c) PM_SOURCE_MODE_BADPSF = 0x00000400: failed to get good estimate of object's PSF

  - (d) PM_SOURCE_MODE_DEFECT = 0x00000800: source is thought to be a defect

  - (e) PM_SOURCE_MODE_SATURATED = 0x00001000: source is thought to be saturated pixels (bleed trail)

  - (f) PM_SOURCE_MODE_CR_LIMIT = 0x00002000: source has crNsigma above limit

  - (g) PM_SOURCE_MODE_MOMENTS_FAILURE = 0x00008000: could not measure the moments

  - (h) PM_SOURCE_MODE_SKY_FAILURE = 0x00010000: could not measure the local sky

  - (i) PM_SOURCE_MODE_SKYVAR_FAILURE = 0x00020000: could not measure the local sky variance

  - (j) PM_SOURCE_MODE_SIZE_SKIPPED = 0x10000000: size could not be determined

- rank 7: measurement is from an invalid time period or photometry code. This rank level was not used in the 3π PV3 calibration: measurements were not restricted on the basis of the time of the observation, and only GPC1 measurements were explicitly included.

- rank 8: instrumental magnitude out of range. This rank level was not used in the 3π PV3 calibration.
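The two hexadecimal masks quoted above are simply the bitwise OR of the listed flags. The short check below (a sketch; the dictionary and helper names are ours, while the flag names and values are taken from the list) confirms this and shows how a photFlags value would be tested against each group.

```python
from functools import reduce
from operator import or_

POOR_BITS = {  # rank 2 "poor-quality" bits
    "PM_SOURCE_MODE_POOR": 0x00000010,
    "PM_SOURCE_MODE_PAIR": 0x00000020,
    "PM_SOURCE_MODE_BLEND": 0x00000100,
    "PM_SOURCE_MODE_BELOW_MOMENTS_SN": 0x00040000,
    "PM_SOURCE_MODE_BLEND_FIT": 0x00400000,
    "PM_SOURCE_MODE_ON_SPIKE": 0x20000000,
    "PM_SOURCE_MODE_ON_GHOST": 0x40000000,
    "PM_SOURCE_MODE_OFF_CHIP": 0x80000000,
}
BAD_BITS = {  # rank 6 "bad-quality" bits
    "PM_SOURCE_MODE_FAIL": 0x00000008,
    "PM_SOURCE_MODE_SATSTAR": 0x00000080,
    "PM_SOURCE_MODE_BADPSF": 0x00000400,
    "PM_SOURCE_MODE_DEFECT": 0x00000800,
    "PM_SOURCE_MODE_SATURATED": 0x00001000,
    "PM_SOURCE_MODE_CR_LIMIT": 0x00002000,
    "PM_SOURCE_MODE_MOMENTS_FAILURE": 0x00008000,
    "PM_SOURCE_MODE_SKY_FAILURE": 0x00010000,
    "PM_SOURCE_MODE_SKYVAR_FAILURE": 0x00020000,
    "PM_SOURCE_MODE_SIZE_SKIPPED": 0x10000000,
}

POOR_MASK = reduce(or_, POOR_BITS.values())
BAD_MASK = reduce(or_, BAD_BITS.values())


def has_poor_bits(phot_flags: int) -> bool:
    return bool(phot_flags & POOR_MASK)


def has_bad_bits(phot_flags: int) -> bool:
    return bool(phot_flags & BAD_MASK)


print(hex(POOR_MASK), hex(BAD_MASK))  # 0xe0440130 0x1003bc88
```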

Rank values are assigned exclusively, starting from the highest value: if a measurement satisfies the rule for, e.g., rank 6, it is not tested for ranks 5 and lower. After all measurements have been assigned a rank, the set of all measurements sharing the lowest rank present is selected for the average photometry analysis. If the selected measurements have a rank from 0 through 4, a per-filter mask bit identifying that rank is raised. These bits are called ID_SECF_RANK_0 through ID_SECF_RANK_4 (see Table 2). This selection is performed independently for the PSF, Kron, and seeing-matched total aperture magnitudes. All measurements retained to determine the average value are marked with a bit flag, ID_MEAS_PHOTOM_PSF, ID_MEAS_PHOTOM_KRON, or ID_MEAS_PHOTOM_APER, depending on which average magnitude is being calculated.
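The triage logic can be sketched as follows. This is a simplified illustration, not the DVO code: the `Measurement` fields (in particular the hypothetical `exposure_poor` boolean standing in for the relphot exposure assessment) are assumptions, ranks 3, 7, and 8 are omitted because they were not applied in the 3π PV3 calibration, and the returned flag is just the bit's name, since its numerical value is given in Table 2 rather than here.

```python
from dataclasses import dataclass

POOR_MASK = 0xE0440130  # OR of the rank 2 "poor-quality" photFlags bits
BAD_MASK = 0x1003BC88   # OR of the rank 6 "bad-quality" photFlags bits


@dataclass
class Measurement:
    phot_flags: int
    psf_qf: float
    psf_qf_perfect: float
    exposure_poor: bool  # hypothetical: relphot flagged the exposure calibration as poor


def rank(m: Measurement) -> int:
    """Assign a rank, testing from the highest (worst) rank downward."""
    if m.phot_flags & BAD_MASK:
        return 6
    if m.exposure_poor:
        return 5
    if m.psf_qf < 0.85:
        return 4
    # Rank 3 (relphot region/outlier tests) was not applied in 3pi PV3.
    if m.phot_flags & POOR_MASK:
        return 2
    if m.psf_qf_perfect < 0.85:
        return 1
    return 0


def select_best(measurements):
    """Return (lowest rank present, measurements with that rank, flag name or None)."""
    if not measurements:
        return None, [], None
    ranks = [rank(m) for m in measurements]
    best = min(ranks)
    chosen = [m for m, r in zip(measurements, ranks) if r == best]
    flag = f"ID_SECF_RANK_{best}" if best <= 4 else None
    return best, chosen, flag
```

Because the worst tests run first, a measurement with both a bad-quality bit and a low PSF_QF lands in rank 6, never rank 4; the object is then averaged over whichever rank class is best populated, rather than being discarded when no rank-0 measurements exist.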

5.4.2. Iteratively Reweighted Least-squares Fitting

With an automatic process applied to hundreds of millions of objects, it is important for the analysis to provide a measurement of the photometry of each object that is robust against failures or other outliers. We would like to calculate an average magnitude for each filter under the assumption that the flux of the star is constant and all measurements are drawn from that population. However, even after rejecting bad measurements based on the quality information above, individual measurements may still be deviant. The Pan-STARRS1 detections have a relatively high rate of non-Gaussian outliers, partly because of the wide range of instrumental features affecting the data (see Paper III). We have used IRLS fitting to reduce the sensitivity of the fits to outlier measurements.

We have also used bootstrap resampling to determine confidence limits on our fits given the observed collection of photometry measurements. Here, the model being fit is trivial: the photometry measurements are drawn from a population with a constant underlying value. The discussion below applies both to the average of the chip photometry magnitudes and to the average of the forced-warp photometry fluxes. This technique is used to calculate the average magnitudes for all three types of photometry stored in the DVO database: PSF, Kron, and seeing-matched total aperture photometry.
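A percentile bootstrap for a constant-value model can be sketched as follows. The text does not specify the number of resamples, the statistic recomputed on each resample, or how the confidence limits are read off; the choices below (1000 resamples, a Poisson-weighted mean, and a central 68% percentile interval) are assumptions made for illustration.

```python
import random


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def bootstrap_interval(values, sigmas, n_boot=1000, level=0.68, seed=1):
    """Percentile bootstrap interval for the Poisson-weighted mean (sketch)."""
    rng = random.Random(seed)
    n = len(values)
    weights = [s ** -2 for s in sigmas]
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample with replacement
        estimates.append(weighted_mean([values[i] for i in idx],
                                       [weights[i] for i in idx]))
    estimates.sort()
    lo = estimates[int((1.0 - level) / 2.0 * n_boot)]
    hi = estimates[int((1.0 + level) / 2.0 * n_boot) - 1]
    return lo, hi


lo, hi = bootstrap_interval([15.00, 15.02, 14.98, 15.01, 14.99], [0.02] * 5)
```

Because the interval comes from the scatter of the actual measurements rather than from the quoted Poisson errors alone, it widens automatically when a star's measurements disagree more than their error bars suggest.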

Iteratively reweighted least-squares fitting describes a class of parameter estimation techniques in which weights are modified compared to those derived from the standard error in order to improve the speed of convergence or the robustness to deviant measurements. Broad reviews of these techniques can be found in Green (1984) and Street et al. (1988). In our implementation, the IRLS analysis starts with an ordinary least-squares fit, using the weights for each measurement as determined from Poisson statistics. Because our model is a constant flux, this step is equivalent to calculating a simple weighted average.
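For a constant model, the ordinary least-squares step indeed reduces to the Poisson-weighted mean. In the notation used in this section (measurements Fi, errors σ, weights ω = σ⁻², average Fo), minimizing χ² gives the result directly; the last relation is the standard uncertainty of a weighted mean and is included only for completeness.

```latex
\begin{aligned}
\chi^2(F) &= \sum_i \omega_i \,(F_i - F)^2, \qquad \omega_i = \sigma_i^{-2},\\
\frac{d\chi^2}{dF} &= -2 \sum_i \omega_i \,(F_i - F) = 0
\;\Longrightarrow\;
F_o = \frac{\sum_i \omega_i F_i}{\sum_i \omega_i},
\qquad
\sigma_{F_o}^2 = \frac{1}{\sum_i \omega_i}.
\end{aligned}
```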

Next, the deviations from the average value for each photometry measurement are calculated. The deviation, normalized by the Poisson error, is used to modify the standard weight. We use a Cauchy function to define a new weight:

using

where Fo is the average magnitude (or flux for forced-warp photometry), Fi is the measured magnitude (or flux), σ is the standard Poisson-based error on the photometry measurement, and ω is the ordinary Poisson weight (σ⁻²). With this modified weight, a measurement that differs substantially from the model is strongly down-weighted, while its weight approaches the standard weight when the model and the observed photometry agree well. The procedure is thus similar in spirit to sigma clipping, but it reduces the impact of outliers continuously rather than rigidly accepting or rejecting them.
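The contrast with sigma clipping can be made concrete. The weight equation itself is not reproduced in this text, so the sketch assumes the standard Cauchy form ω′ = ω / (1 + x²) with x = (Fo − Fi)/σ, which matches the verbal description but may differ from the authors' equation (for example, by a scale constant). The 3σ clipping threshold is likewise only an illustrative choice.

```python
def cauchy_weight(f_obs, f_model, sigma):
    """Assumed Cauchy-modified weight: omega / (1 + x**2), x = (Fo - Fi) / sigma."""
    x = (f_model - f_obs) / sigma
    omega = sigma ** -2  # ordinary Poisson weight
    return omega / (1.0 + x * x)


def hard_clip_weight(f_obs, f_model, sigma, n_sigma=3.0):
    """Sigma clipping for comparison: full weight inside n_sigma, zero outside."""
    x = (f_model - f_obs) / sigma
    return sigma ** -2 if abs(x) <= n_sigma else 0.0


# A measurement 3.5 sigma from the model: clipping drops it entirely,
# while the Cauchy weight keeps a small, smoothly reduced contribution.
print(hard_clip_weight(11.75, 10.0, 0.5), cauchy_weight(11.75, 10.0, 0.5))
```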

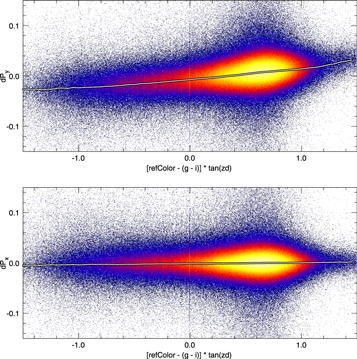

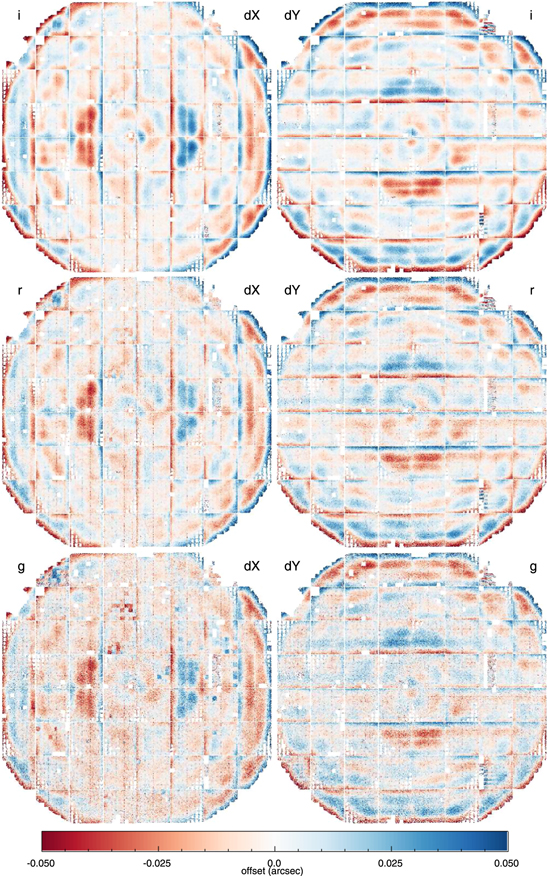

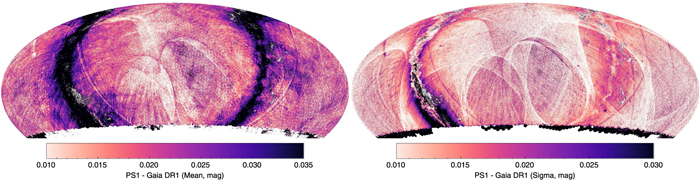

The weighted-average photometry is recalculated with these modified weights. The modified weights are then recomputed from the new average, and the weighted average is calculated again. In each iteration, the weighted-average photometry values are compared to the values from the previous iteration. If they have changed by less than 10⁻⁶, or if the fractional change is below a tolerance of 10⁻⁴, the iterations are halted and the last weighted-average values are used. If convergence is not reached within 10 iterations, the process is halted in any case, and a flag is raised for the object to note that IRLS did not converge.
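Putting the steps together, the iteration might look like the sketch below. The ordinary weighted-mean start, the 10⁻⁶ absolute and 10⁻⁴ fractional tolerances, and the 10-iteration cap come from the text; the Cauchy weight form ω / (1 + x²) is an assumption (the equation is not reproduced here), and the same function would apply to forced-warp fluxes as well as to chip magnitudes.

```python
import math


def irls_average(values, sigmas, max_iter=10, abs_tol=1e-6, frac_tol=1e-4):
    """Robust average of magnitudes (or fluxes) via Cauchy-reweighted IRLS.

    Returns (average, converged). The weight form omega / (1 + x**2), with
    x = (average - value) / sigma, is an assumption.
    """
    omega = [s ** -2 for s in sigmas]  # ordinary Poisson weights
    # Ordinary least squares for a constant model: the Poisson-weighted mean.
    avg = sum(w * v for w, v in zip(omega, values)) / sum(omega)
    for _ in range(max_iter):
        # Down-weight each measurement by its normalized deviation from the current average.
        weights = [w / (1.0 + ((avg - v) / s) ** 2)
                   for w, v, s in zip(omega, values, sigmas)]
        new_avg = sum(w * v for w, v in zip(weights, values)) / sum(weights)
        change = abs(new_avg - avg)
        frac_change = change / abs(avg) if avg != 0 else math.inf
        avg = new_avg
        if change < abs_tol or frac_change < frac_tol:
            return avg, True
    return avg, False  # caller raises the "IRLS did not converge" flag


vals = [15.00, 15.01, 14.99, 15.00, 17.0]  # one strong outlier
avg, ok = irls_average(vals, [0.02] * 5)
```

In this example the plain weighted mean is pulled to about 15.4 by the single outlier, whereas the reweighted average settles close to 15.0 within a few iterations.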