Abstract

Over 3 billion astronomical sources have been detected in the more than 22 million orthogonal transfer CCD images obtained as part of the Pan-STARRS1 3π survey. Over 85 billion instances of those sources have been automatically detected and characterized by the Pan-STARRS Image Processing Pipeline photometry software, psphot. This fast, automatic, and reliable software was developed for the Pan-STARRS project but is easily adaptable to images from other telescopes. We describe the analysis of the astronomical sources by psphot in general as well as for the specific case of the third processing version used for the first two public releases of the Pan-STARRS 3π Survey data.


1. Introduction

The 1.8 m Pan-STARRS1 telescope (PS1) is located on the summit of Haleakala on the Hawaiian island of Maui. The wide-field optical design of the telescope (Hodapp et al. 2004) produces a 3.3° field of view with low distortion and minimal vignetting even at the edges of the illuminated region. The optics and natural seeing combine to yield good image quality: 75% of the images have FWHM values less than (1.51″, 1.39″, 1.34″, 1.27″, 1.21″) for (g, r, i, z, y), with a floor of ∼0.7″.

The Pan-STARRS1 camera (Tonry & Onaka 2009), known as GPC1, consists of a mosaic of 60 back-illuminated CCDs manufactured by Lincoln Laboratory. The CCDs each consist of an 8 × 8 grid of 590 × 598 pixel readout regions, yielding an effective 4846 × 4868 detector. Initial performance assessments are presented in Onaka et al. (2008). Routine observations are conducted remotely from the Advanced Technology Research Center in Kula, the main facility of the University of Hawaii's Institute for Astronomy (IfA) operations on Maui. The Pan-STARRS1 filters and photometric system have already been described in detail in Tonry et al. (2012).

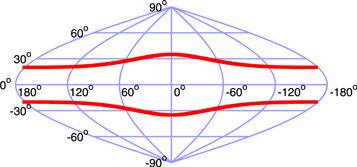

For nearly 4 yr, from 2010 May through 2014 March, this telescope was used to perform a collection of astronomical surveys under the aegis of the Pan-STARRS Science Consortium. The majority of the time (56%) was spent on surveying the three-quarters of the sky north of −30° decl. with the g, r, i, z, y filters in the so-called 3π Survey. Another portion of the time was concentrated on repeated deep observations of 10 specific fields in the Medium-Deep Survey. The rest of the time was used for several other surveys, including a search for potentially hazardous asteroids in our solar system. The details of the telescope, surveys, and resulting science publications are described by Chambers et al. (2016).

Since 2014 March, PS1 has been rededicated to a mission of searching for hazardous asteroids, funded by the NASA NEO Program. Additional partners collaborate with the Pan-STARRS team to harvest transient sources such as supernovae and gravitational wave counterparts. A second Pan-STARRS telescope (PS2; Chambers et al. 2016; K. C. Chambers et al. 2020, in preparation), generally matching the PS1 design (Morgan et al. 2012), has since been constructed and has been producing science results since early 2018.

Pan-STARRS produced its first large-scale public data release, Data Release 1 (DR1), on 2016 December 16. DR1 contains the results of the third full reduction of the Pan-STARRS 3π Survey archival data, identified as PV3. Previous reductions (PV0, PV1, and PV2; see Magnier et al. 2020a) were used internally for pipeline optimization and the development of the initial photometric and astrometric reference catalog (Magnier et al. 2020b). The products from these reductions were not publicly released but have been used to produce a wide range of scientific papers from the Pan-STARRS1 Science Consortium members (Chambers et al. 2016). DR1 contained only average information resulting from the many individual images obtained by the 3π Survey observations. A second data release, DR2, was made available 2019 January 28. DR2 provides measurements from all of the individual exposures and includes improved astrometric calibration as well as improvements to the photometric calibration of the stack and "forced-warp" measurements from the PV3 processing of that data set.

The Pan-STARRS public data releases are hosted by the Barbara A. Mikulski Archive for Space Telescopes (MAST) at the Space Telescope Science Institute. MAST provides access to the image data products and a hierarchical database of measurements using a system developed specifically for the Pan-STARRS data set. Development of this database system was the product of a collaboration between the Pan-STARRS Project and Alex Szalay's database development group at The Johns Hopkins University (JHU; Heasley 2008). The resulting system, called the Published Science Products Subsystem, or PSPS (Heasley et al. 2006), was initially used within the Pan-STARRS Science Consortium for large-scale data access. A duplicate PSPS installation was created at MAST for the DR1 and DR2 public releases.

This is the fourth in a series of seven papers describing the Pan-STARRS1 Surveys, the data reduction techniques, and the resulting data products. This paper (Paper IV) describes the details of the source detection and photometry, including point-spread-function (PSF) and extended source model fitting, and the techniques for "forced" photometry measurements. The same analysis software, called psphot, is used for individual images, image stacks, and difference images. The software described here was used with a single consistent set of parameters for the complete PV3 analysis, used for both DR1 and DR2. The software was also used for the analysis of the Medium-Deep Survey data, though with a different software version and some modifications of the analysis parameters to better suit the longer exposures. This program as well as the rest of the Pan-STARRS Image Processing Pipeline (IPP) software suite is available for download from http://ipp.ifa.hawaii.edu.

Chambers et al. (2016, Paper I) provide an overview of the Pan-STARRS System, the design and execution of the Surveys, the resulting image and catalog data products, a discussion of the overall data quality and basic characteristics, and a brief summary of important results.

Magnier et al. (2020a, Paper II) describe how the various data processing stages are organized and implemented in the Image Processing Pipeline (IPP), including details of the processing database, which is a critical element in the IPP infrastructure.

Waters et al. (2020, Paper III) describe the details of the pixel processing algorithms, including detrending, warping, and adding (to create stacked images) and subtracting (to create difference images), and the resulting image products and their properties.

Magnier et al. (2020b, Paper V) describe the final calibration process and the resulting photometric and astrometric quality.

Flewelling et al. (2020, Paper VI) describe the details of the resulting catalog data and its organization in the Pan-STARRS database system, PSPS.

M. Huber et al. (2020, in preparation) describe the Medium-Deep Survey in detail, including the unique issues and data products specific to that survey. The Medium-Deep Survey is not part of DR1 or DR2 and will be made available in a future data release.

In this article, we use the following typefaces to distinguish different concepts:

1. Small caps for the analysis stages.

2. Italics for database tables and columns.

3. Fixed-width font for program names, variables, and miscellaneous constants.

The last category includes a number of configuration parameters used to define the psphot analysis. In those cases, unless the values used for the PV3 analysis are explicitly discussed, we include the PV3 value immediately after the configuration variable name in parentheses.

2. Background

The photometric and astrometric precision goals for the Pan-STARRS1 surveys were quite stringent. The astrometric goals were relative astrometric accuracy of 10 mas and absolute astrometric accuracy of 100 mas with respect to the ICRS reference stars. For photometry, the goal was 10 mmag accuracy within the internal photometric system across the sky, though the tie to an absolute standard was not required to meet this standard.

An additional constraint on the Pan-STARRS analysis system comes from the high data rate. PS1 typically produces ∼500 exposures per night, corresponding to ∼750 billion pixels of imaging data. The images range from high galactic latitudes to the Galactic bulge, so large numbers of measurable stars can be expected in much of the data. The combination of the high precision goals of the astrometric and photometric measurements and the high data rate (and a finite computing budget) means that detecting, classifying, and measuring the astronomical sources in the image data stream in a timely fashion is a significant challenge.
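As a rough cross-check of the quoted data rate, the nightly pixel volume can be estimated from the detector geometry given in Section 1. This is only a back-of-the-envelope sketch: it assumes that all 60 CCDs are read out at the effective 4846 × 4868 format for every exposure, and the variable names are illustrative.

```python
# Back-of-the-envelope estimate of the PS1 nightly pixel volume.
# Assumption: every exposure reads all 60 CCDs at the effective format.
N_CCDS = 60
CCD_WIDTH, CCD_HEIGHT = 4846, 4868   # effective detector size in pixels
EXPOSURES_PER_NIGHT = 500            # "~500" in the text

pixels_per_exposure = N_CCDS * CCD_WIDTH * CCD_HEIGHT
pixels_per_night = pixels_per_exposure * EXPOSURES_PER_NIGHT

print(f"{pixels_per_exposure / 1e9:.2f} Gpix per exposure")
print(f"{pixels_per_night / 1e9:.0f} Gpix per night")
```

Under these assumptions the estimate is about 1.4 billion pixels per exposure and roughly 700 billion per night, which is of the same order as the ∼750 billion quoted above given the looseness of "∼500 exposures."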

In order to achieve these ambitious goals, the source detection, classification, and measurement process must be both precise and efficient. Not only is it necessary to make a careful measurement of the flux of individual sources, it is also critical to characterize the image PSF and its variations across the field and from image to image. Because comparisons between images must be reliable, the measurements must be stable for both photometry and astrometry.

A variety of astronomical software packages perform the basic source detection, measurement, and classification tasks needed by the Pan-STARRS IPP. Each of these programs has its own advantages and disadvantages. Below we discuss some of the most widely used of these other packages, highlighting the features of the programs that are particularly desirable and noting aspects of the programs that are problematic for the IPP.

1. DoPhot: analytical fitted model with aperture corrections. Pros: well-tested, stable code. Cons: limited range of models, algorithm converges slowly to a PSF model, limited tests of PSF validity, inflexible code base, Fortran (Schechter et al. 1993).

2. DAOPhot: pixel-map PSF model with analytical component. Pros: well-tested, high-quality photometry. Cons: difficult to use in an automated fashion; unclear whether it handles 2D variations well (Stetson 1987).

3. SExtractor: pure aperture measurement with rudimentary source subtraction. Pros: fast, widely used, easy to automate. Cons: poor source separation in crowded regions; PSF modeling was only in beta and not widely used at the time (Bertin & Arnouts 1996).

4. galfit: detailed galaxy modeling. Not a multisource PSF analysis tool. Cons: does not provide a PSF model, not easily automated, very detailed modeling results in very slow processing, only a galaxy analysis program (Peng et al. 2002).

5. SDSS photo. Cons: tightly integrated into the SDSS software environment (Lupton et al. 2001).

When the IPP development was starting, the existing photometry packages either did not meet the accuracy requirements or required too much human intervention to be considered for the needs of PS1. In the case of the SDSS photo tool, the software was judged to be too tightly integrated into the architecture of SDSS to be easily reintegrated into the Pan-STARRS pipeline. A new photometry analysis package was developed using lessons learned from the existing photometry systems. In the process, the source analysis software was written using the data analysis C-code library written for the IPP, psLib (Magnier et al. 2020a). Components of the photometry code were integrated into the IPP's midlevel astronomy data analysis toolkit called psModules (Magnier et al. 2020a). The resulting software, "psphot," can be used either as a standalone C program or as a set of library functions that may be integrated into other programs.

Several variants of psphot have been used in the PS1 PV3 analysis. The main variant of psphot operates on a single image or a group of related images representing the data read from the multiple chips of a mosaic camera from a single exposure. The images are expected to have already been detrended so that pixel values are linearly related to the flux. The gain may be specified by the configuration system or a variance image may be supplied. A mask may also be supplied to mark good, bad, and suspect pixels. This variant of psphot can be called as a standalone program, also named psphot. In standard IPP operations, this variant is used as a library call within the analysis program ppImage during the chip analysis stage.

In the standard IPP analysis, the initial stage of processing is performed in parallel on each of the individual CCDs in the camera. This so-called chip-stage analysis includes the detrending of the CCD image (instrumental signature removal) as well as the detection and analysis of sources in the image using the basic version of psphot. The next stage of the analysis, the camera stage, consists of photometric and astrometric calibration.

After the calibrations are available, the detrended CCD images from an entire exposure are geometrically transformed to a common pixel grid in the warp stage of the pipeline. The resulting warped images are generated on a predefined tessellation of the sky that starts with projection centers spaced roughly 4° across the sky. Around each of these projection centers, a large regular pixel grid is defined and then subdivided along pixel boundaries into smaller units that are well matched to the memory footprint of our processing computers. These smaller images, called "skycells," are defined with 1' of overlap with their neighbors so that any modest-sized object can be analyzed entirely on a single pixel grid. Note that the term skycell is used to describe the particular subdivision of the sky. A typical exposure from the GPC1 camera generates warp images on roughly 70 skycells. We refer to the specific warped images from an exposure as "warps."

Multiple warps for the same skycell are combined together in the stack stage of the IPP by coadding the flux to generate a deep "stack" image. Alternatively, one warp may be subtracted from another warp of the same skycell, or a stack image may be subtracted from a warp image, or indeed from another stack. These subtraction operations are used to detect moving and transient objects within the IPP. Different variants of psphot are used for the source detection and analysis for each of these different analysis stages.

The variant called psphotStack accepts a set of images, each representing the same patch of sky (with pixels aligned) in a different filter. This version was used in the IPP for the analysis of the deep "stacks" produced by the IPP stack stage. Nominally, the full grizy filter set was used for the analysis of the PS1 PV3 stack images, though where insufficient data were available in a given filter, a subset of these filters was processed as a group. As discussed in detail below, the psphotStack analysis includes the capability of measuring forced PSF photometry in some filter images based on the position of sources detected in the other filters. It also includes an option to convolve the set of images to a single, common PSF size across the filters for the purpose of fixed-aperture photometry.

Another variant of psphot used in the PV3 analysis is called psphotFullForce. In this variant, a set of images all representing the same coaligned pixels are processed together, with the positions of sources to be analyzed loaded from a supplied file. In this variant of the analysis, sources are not discovered—only the supplied sources are considered. PSF models are determined for each exposure, and the forced PSF photometry is measured for all sources. A subset of sources may also be used to measure forced galaxy shape parameters. As described below, a grid of galaxy models is fitted based on the supplied guess model.

3. psphot Design Goals

The top-level design goals of psphot are to detect and determine the instrumental positions and fluxes of astronomical sources in the images. For extended sources, the goals also include the measurement of a variety of morphological information, including galaxy model parameters and nonparametric measurements of the sizes and profiles of the galaxies to aid in classification and for weak lensing analysis. For trailed asteroids, the goal also includes the measurement of the length and direction of the trail.

Beyond these basic elements, psphot has a number of design goals that we believe will help make it usable in a wide range of circumstances. The critical astronomy-driven measurement goals of the Pan-STARRS project, which drive the design of psphot, are the photometric accuracy goal (10 mmag) and the relative astrometric accuracy goal (10 mas) for bright stars for which the photon shot noise is small compared to the systematic errors.

For psphot, the photometry accuracy goal implies that the measured photometry of stellar sources must be substantially better than this 10 mmag goal as the photometry error per image is combined with an error in the flat-field calibration and an error in measuring the atmospheric effects. We have set a goal for psphot of 3 mmag photometric consistency for bright stars between pairs of images obtained in photometric conditions at the same pointing, i.e., to remove sensitivity to flat-field errors. This goal splits the difference between the three main contributors and still allows some leeway. This goal must be met for well-sampled images and images with only modest undersampling.
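To illustrate why a 3 mmag per-image goal leaves leeway under the 10 mmag requirement, the following sketch combines three contributors (per-image photometry, flat-field calibration, and atmospheric effects). The equal 3 mmag allocation to each contributor and the two combination rules (linear sum as a worst case, quadrature sum for independent errors) are assumptions made for illustration, not the project's actual error budget.

```python
import math

GOAL_MMAG = 10.0

# Hypothetical equal allocation to the three main contributors.
contributions_mmag = {"psphot": 3.0, "flat-field": 3.0, "atmosphere": 3.0}

# Worst case: errors add linearly.
linear_total = sum(contributions_mmag.values())

# Independent errors: add in quadrature.
quadrature_total = math.sqrt(sum(v ** 2 for v in contributions_mmag.values()))

print(f"linear sum:     {linear_total:.1f} mmag")
print(f"quadrature sum: {quadrature_total:.2f} mmag")
print(f"goal:           {GOAL_MMAG:.1f} mmag")
```

With these assumed numbers, both the linear sum (9 mmag) and the quadrature sum (about 5.2 mmag) stay below the 10 mmag goal.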

The relative astrometric calibration depends on the consistency of the individual measurements. The measurements from psphot must be sufficiently representative of the true source position to enable astrometric calibration at the 10 mas level. The error in the individual measurements will be folded together with the errors introduced by the optical system, the effects of seeing, and the available reference catalogs. We have set a goal for psphot of 5 mas consistency between the true source position and the measured position given reasonable PSF variations under simulations. This level must be reached for images with 250 mas pixels, implying psphot must introduce measurement errors less than 1/50 of a pixel. The choice of 32 bit floating point data values for the source centroids places a numerical limit of 1 × 10⁻⁷ on the accuracy of a pixel relative to the size of a chip (because a single data value is used for X or Y). For the 4800² pixel GPC chips, this yields a limit of about 0.25 mas.
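The numbers in this paragraph can be checked with a short calculation. The sketch below converts the 5 mas goal into pixels and estimates the spacing between adjacent 32 bit floating point values near the edge of a chip. It assumes IEEE 754 single precision (a 24 bit significand) and the 250 mas pixel scale quoted above; the helper `float32_spacing` is introduced here for illustration only.

```python
import math

PIXEL_SCALE_MAS = 250.0   # pixel scale quoted in the text
GOAL_MAS = 5.0            # psphot astrometric consistency goal
CHIP_SIZE_PIX = 4800.0    # approximate chip dimension in pixels

# The 5 mas goal expressed as a fraction of a pixel (1/50).
goal_in_pixels = GOAL_MAS / PIXEL_SCALE_MAS

def float32_spacing(x):
    """Gap between adjacent IEEE 754 binary32 values near x (normal range)."""
    exponent = math.floor(math.log2(abs(x)))
    return 2.0 ** (exponent - 23)

# Coordinate resolution at the far edge of the chip.
spacing_pix = float32_spacing(CHIP_SIZE_PIX)
spacing_mas = spacing_pix * PIXEL_SCALE_MAS
relative = spacing_pix / CHIP_SIZE_PIX

print(f"goal: {goal_in_pixels:.3f} pixel")
print(f"float32 spacing at x={CHIP_SIZE_PIX:.0f}: {spacing_pix:.3e} pixel = {spacing_mas:.3f} mas")
print(f"relative to chip size: {relative:.2e}")
```

Under these assumptions the relative resolution is about 1 × 10⁻⁷ and the coordinate spacing is a fraction of a milliarcsecond, the same order as the limit quoted above and well below the 5 mas goal.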

The design goals for psphot are chosen to make the program flexible, general, and able to meet the unknown usage cases future projects may require:

1. Flexible PSF model. Different image sources require different ways of representing the PSF. Ideally, both analytical and pixel-based versions should be possible.

2. PSF spatial variation. Most images result in some spatial PSF variations at a certain level. The PSF representation should naturally incorporate 2D variations.

3. Flexible non-PSF models. psphot must be able to represent PSF-like sources as well as non-PSF sources (e.g., galaxies). It must be easy to add new source models as interesting representations of sources are invented.

4. Clean code base. psphot should incorporate a high degree of abstraction and encapsulation so that changes to the code structure can be performed without pulling the code apart and starting from scratch.

5. PSF validity tests. psphot should include the ability to choose different types of PSF models for different situations, or to provide the user with methods for assessing the different PSF models.

6. Careful systematic corrections. psphot must carefully measure and correct for the photometric and astrometric trends introduced by using analytical PSF models.

7. User configurable. psphot should allow users to change the options easily and to allow different approaches to the analysis.

The success of the psphot implementation in meeting the photometry and astrometry design requirements is demonstrated by the achieved accuracy for the Pan-STARRS 3π Survey data. For a survey like the Pan-STARRS1 3π Survey to achieve photometry and astrometry accuracy at the level of our goals, the measurement of the astronomical detections must be precise, the detrending and calibration processes must correct for a wide variety of systematic effects, and the observations must be performed in such a way that the data can be calibrated well. These other aspects of the process are discussed in detail elsewhere (Papers I, III, and V). In the end, the goals were largely achieved for the Pan-STARRS1 3π Survey. As reported in Paper V, the resulting photometric system is consistent across the sky to between 7 and 12.4 mmag, depending on the filter; the systematic error floor for individual photometry measurements is also given there. The bright-star systematic error floor for individual astrometric measurements is 16 mas, and the Pan-STARRS Data Release 2 (DR2) astrometric system is tied to the Gaia DR1 (Lindegren et al. 2016) coordinate frame with a systematic uncertainty of ∼5 mas.

4. Basic Analysis

4.1. Overview

The basic psphot analysis is divided into several major stages, as listed below.

1. Image preparation. Load data, characterize the image background, load or construct variance and mask images.

2. Initial source detection. Smooth, find peaks, measure basic properties with focus on the point sources to measure the PSF.

3. PSF determination. Select PSF candidates, perform model fits, build PSF model from fits, select best PSF model class.

4. Bright-source analysis. Fit sources with PSFs, determine PSF validity, subtract PSF-like sources, fit non-PSF model(s), select best model class, subtract model.

5. Faint source analysis. Detect low-level sources, measure properties (aperture or PSF).

6. Aperture corrections. Measure the curve of growth, spatial aperture variations, and background-error corrections.

7. Output. Write out sources in selected format, write out difference image, variance image, etc., as selected.

In addition to this basic sequence, additional analysis steps may be performed. An "extended source" analysis mode is available to measure photometry and morphology of galaxies and other resolved sources. Forced photometry may be performed for both point-like and extended sources. A special mode is available for the photometry of sources detected in difference images. These different modes are discussed in their own sections below.

Table 1 lists the types of analyses performed by psphot, specifying which of the psphot use cases performs the given analysis. The table also provides a reference to the section of this paper in which the analysis is described. Not all analyses are relevant to all sources in all images. The table identifies those cases where the analyses are applied to only a subset of all sources.

Table 1. Measurements Performed by psphot, and Whether Performed in Each of the 4 IPP Analysis Stages

| Measurement | CHIP | STACK | FORCED WARP | DIFF | Section | Details |
|---|---|---|---|---|---|---|
| Background Subtraction | Y | Y | Y | N ᵃ | 4.3 | N/A |
| Peaks | Y | Y | N | Y | 4.4.1 | All detections |
| Footprints | Y | Y | N | Y | 4.4.2 | All detections |
| Moments | Y | Y | Y | Y | 4.4.3 | All detections |
| PSF Model | Y | Y | Y | N ᵇ | 4.5 | Selected bright stars |
| Bright-star Profile | Y | Y | N | Y | 4.6.1 | Saturated stars |
| Radial Profiles v1 | Y | Y | N | Y | 4.6.3 | All detections |
| Kron Fluxes | Y | Y | Y | Y | 4.6.4 | All detections |
| Source-size Tests | Y | Y | N | Y | 4.6.5 | All detections |
| Nonlinear PSF Fits | Y | Y | N | N | 4.6.6 | S/N > 20 |
| Unconvolved Galaxy Model | Y | Y | N | N | 4.6.8 | S/N > 20, extended |
| Unconvolved Streak Model | N | N | N | Y | 4.6.8 | S/N > 20, extended |
| Linear PSF Fits | Y | Y | Y | Y | 4.7 | All detections |
| Radial Profiles v2 | Y | Y | N | Y | 5.1 | Gal. latitude cut |
| Petrosian Fluxes | N | Y | Y | N | 5.2 | Gal. latitude cut |
| Convolved Galaxy Models | N | Y | N | N | 5.3 | Gal. latitude cut, mag cut |
| Fixed-aperture Photometry | N | Y | Y | N | 5.4 | All detections |
| Convolved, Fixed Apertures | N | Y | N | N | 5.4 | All detections |
| Aperture Corrections | Y | Y | Y | N | 4.8 | All detections |
| Forced PSF Fluxes | N | N | Y | N | 6 | All detections |
| Forced Galaxy Models | N | N | Y | N | 6.2 | Requires stack galaxy models |
| Lensing Parameters | N | Y | Y | N | 6.3 | All detections |

Notes. The analysis is described in this article in the listed sections.

ᵃ Background subtraction is performed by ppSub before calling psphot. ᵇ PSF modeling is performed by ppSub on the input warps before calling psphot.

psphot is highly configurable. Users may choose via the configuration system which of the above analyses are performed. This is useful for testing but also allows for specialized use cases. For example, the PSF model may already be available from external information, in which case the PSF modeling stage can be skipped.

Ultimately, all measurements of individual astronomical sources from psphot are reported in one of the tables in the PSPS database. As discussed in detail in Paper VI, measurements from individual exposures are available from the Detection table. Measurements of objects in the stacked images are stored in one of several Stack... tables, while the "forced" measurements from individual warp images are stored in tables beginning with ForcedWarp.

4.2. Informational and Warning Bit Flags

During the psphot analysis, a wide variety of conditions are identified by the analysis software. As part of the output data for each detected source, two fields are provided that encode these conditions as bit values in two 32 bit integers. The following two tables list the individual bit values in these two fields. These informational and warning bits are described in more detail later in this article.

Table 2 lists the flags recorded in the output field FLAGS. When data from psphot are loaded into a DVO database (Magnier et al. 2020b), these values are stored in the field Measure.photFlags and exposed in the public database (PSPS; Flewelling et al. 2020) in the fields Detection.infoFlag, StackObjectThin.XinfoFlag (where X is one of grizy), and ForcedWarpMeasurement.FinfoFlag. Table 3 lists the flags recorded in the output field FLAGS2. When data from psphot are loaded into a DVO database (Magnier et al. 2020b), these values are stored in the field Measure.photFlags2, and they are exposed in PSPS in the fields Detection.infoFlag2, StackObjectThin.XinfoFlag2 (where X is one of grizy), and ForcedWarpMeasurement.FinfoFlag2.

Table 2. Detection Flag Values #1 Reported by psphot

| Flag Name | Flag Value | Description |
|---|---|---|
| PM_SOURCE_MODE_PSFMODEL | 0x00000001 | Source fitted with a PSF model (linear or nonlinear) |
| PM_SOURCE_MODE_EXTMODEL | 0x00000002 | Source fitted with an extended source model |
| PM_SOURCE_MODE_FITTED | 0x00000004 | Source fitted with nonlinear model (PSF or EXT; good or bad) |
| PM_SOURCE_MODE_FAIL | 0x00000008 | Fit (nonlinear) failed (nonconverge, off-edge, run to zero) |
| PM_SOURCE_MODE_POOR | 0x00000010 | Fit succeeds, but low-S/N or high chi-square |
| PM_SOURCE_MODE_PAIR | 0x00000020 | Source fitted with a double PSF |
| PM_SOURCE_MODE_PSFSTAR | 0x00000040 | Source used to define PSF model |
| PM_SOURCE_MODE_SATSTAR | 0x00000080 | Source model peak is above saturation |
| PM_SOURCE_MODE_BLEND | 0x00000100 | Source is a blend with other sources ᵃ |
| PM_SOURCE_MODE_EXTERNAL | 0x00000200 | Source based on supplied input position |
| PM_SOURCE_MODE_BADPSF | 0x00000400 | Failed to get good estimate of object's PSF |
| PM_SOURCE_MODE_DEFECT | 0x00000800 | Source is thought to be a defect |
| PM_SOURCE_MODE_SATURATED | 0x00001000 | Source is thought to be saturated pixels (bleed trail) |
| PM_SOURCE_MODE_CR_LIMIT | 0x00002000 | Source has crNsigma above limit |
| PM_SOURCE_MODE_EXT_LIMIT | 0x00004000 | Source has extNsigma above limit |
| PM_SOURCE_MODE_MOMENTS_FAILURE | 0x00008000 | Could not measure the moments |
| PM_SOURCE_MODE_SKY_FAILURE | 0x00010000 | Could not measure the local sky |
| PM_SOURCE_MODE_SKYVAR_FAILURE | 0x00020000 | Could not measure the local sky variance |
| PM_SOURCE_MODE_BELOW_MOMENTS_SN | 0x00040000 | Moments not measured due to low S/N ᵃ |
| PM_SOURCE_MODE_BIG_RADIUS | 0x00100000 | Poor moments for small radius, try large radius |
| PM_SOURCE_MODE_AP_MAGS | 0x00200000 | Source has an aperture magnitude |
| PM_SOURCE_MODE_BLEND_FIT | 0x00400000 | Source was fitted as a blend |
| PM_SOURCE_MODE_EXTENDED_FIT | 0x00800000 | Full extended fit was used |
| PM_SOURCE_MODE_EXTENDED_STATS | 0x01000000 | Extended aperture stats calculated |
| PM_SOURCE_MODE_LINEAR_FIT | 0x02000000 | Source fitted with the linear fit |
| PM_SOURCE_MODE_NONLINEAR_FIT | 0x04000000 | Source fitted with the nonlinear fit |
| PM_SOURCE_MODE_RADIAL_FLUX | 0x08000000 | Radial flux measurements calculated |
| PM_SOURCE_MODE_SIZE_SKIPPED | 0x10000000 | Size could not be determined ᵃ |
| PM_SOURCE_MODE_ON_SPIKE | 0x20000000 | Peak lands on diffraction spike |
| PM_SOURCE_MODE_ON_GHOST | 0x40000000 | Peak lands on ghost or glint |
| PM_SOURCE_MODE_OFF_CHIP | 0x80000000 | Peak lands off edge of chip |

Notes. These are saved in output catalogs as the field FLAGS, in the DVO database as Measure.photFlags, and in the public database as Detection.infoFlag, StackObjectThin.XinfoFlag (where X is one of grizy), and ForcedWarpMeasurement.FinfoFlag.

ᵃ Not used for DR1 or DR2.
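Because each flag occupies a single bit, a FLAGS value can be decoded with bitwise operations. The sketch below uses a subset of the names and values from Table 2; the helper functions `decode_flags` and `has_any`, and the particular set of flags chosen for rejection, are hypothetical illustrations and not part of psphot or the PSPS interface. The masking with `0xFFFFFFFF` guards against the field having been stored as a signed 32 bit integer, which is an assumption about how a client might receive the value.

```python
# Subset of Table 2 (names and values copied from the table).
PHOT_FLAGS = {
    "PM_SOURCE_MODE_PSFMODEL": 0x00000001,
    "PM_SOURCE_MODE_EXTMODEL": 0x00000002,
    "PM_SOURCE_MODE_FITTED": 0x00000004,
    "PM_SOURCE_MODE_FAIL": 0x00000008,
    "PM_SOURCE_MODE_POOR": 0x00000010,
    "PM_SOURCE_MODE_SATSTAR": 0x00000080,
    "PM_SOURCE_MODE_DEFECT": 0x00000800,
    "PM_SOURCE_MODE_SATURATED": 0x00001000,
    "PM_SOURCE_MODE_LINEAR_FIT": 0x02000000,
    "PM_SOURCE_MODE_OFF_CHIP": 0x80000000,
}

def decode_flags(value, table=PHOT_FLAGS):
    """Return the names of all flags in `table` whose bit is set in `value`."""
    value &= 0xFFFFFFFF  # treat a signed 32 bit value as unsigned
    return [name for name, bit in table.items() if value & bit]

def has_any(value, names, table=PHOT_FLAGS):
    """True if any of the named flags is set in `value`."""
    mask = 0
    for name in names:
        mask |= table[name]
    return bool((value & 0xFFFFFFFF) & mask)

# Hypothetical rejection list chosen only for this example.
REJECT = ["PM_SOURCE_MODE_FAIL", "PM_SOURCE_MODE_DEFECT", "PM_SOURCE_MODE_SATURATED"]

example = 0x02000001
print(decode_flags(example))     # ['PM_SOURCE_MODE_PSFMODEL', 'PM_SOURCE_MODE_LINEAR_FIT']
print(has_any(example, REJECT))  # False
```

The same approach applies to the FLAGS2 field with the values from Table 3.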

Table 3. Detection Flag Values #2 Reported by psphot

| Flag Name | Flag Value | Description |

|---|---|---|

| PM_SOURCE_MODE2_DIFF_WITH_SINGLE | 0x00000001 | Diff source matched to a single positive detection |

| PM_SOURCE_MODE2_DIFF_WITH_DOUBLE | 0x00000002 | Diff source matched to positive detections in both images |

| PM_SOURCE_MODE2_MATCHED | 0x00000004 | Source generated based on another image |

| PM_SOURCE_MODE2_ON_SPIKE | 0x00000008 |

of (PSF-weighted) pixels land on diffraction spike of (PSF-weighted) pixels land on diffraction spike |

| PM_SOURCE_MODE2_ON_STARCORE | 0x00000010 |

of (PSF-weighted) pixels land on star core of (PSF-weighted) pixels land on star core |

| PM_SOURCE_MODE2_ON_BURNTOOL | 0x00000020 |

of (PSF-weighted) pixels land on burntool of (PSF-weighted) pixels land on burntool |

| PM_SOURCE_MODE2_ON_CONVPOOR | 0x00000040 |

of (PSF-weighted) pixels land on convpoor of (PSF-weighted) pixels land on convpoor |

| PM_SOURCE_MODE2_PASS1_SRC | 0x00000080 | Source detected in first pass analysis |

| PM_SOURCE_MODE2_HAS_BRIGHTER_NEIGHBOR | 0x00000100 | Peak is not the brightest in its footprint |

| PM_SOURCE_MODE2_BRIGHT_NEIGHBOR_1 | 0x00000200 |

|

| PM_SOURCE_MODE2_BRIGHT_NEIGHBOR_10 | 0x00000400 |

|

| PM_SOURCE_MODE2_DIFF_SELF_MATCH | 0x00000800 | Positive detection match is probably this source |

| PM_SOURCE_MODE2_SATSTAR_PROFILE | 0x00001000 | Saturated source is modeled with a radial profile |

| PM_SOURCE_MODE2_ECONTOUR_FEW_PTS | 0x00002000 | Too few points to measure the elliptical contour |

| PM_SOURCE_MODE2_RADBIN_NAN_CENTER | 0x00004000 | Radial bins failed with too many NaN center bin |

| PM_SOURCE_MODE2_PETRO_NAN_CENTER | 0x00008000 | Petrosian radial bins failed with too many NaN center bin a |

| PM_SOURCE_MODE2_PETRO_NO_PROFILE | 0x00010000 | Petrosian not built because radial bins missing |

| PM_SOURCE_MODE2_PETRO_INSIG_RATIO | 0x00020000 | Insignificant measurement of Petrosian ratio |

| PM_SOURCE_MODE2_PETRO_RATIO_ZEROBIN | 0x00040000 | Petrosian ratio in the zeroth bin (likely bad) |

| PM_SOURCE_MODE2_EXT_FITS_RUN | 0x00080000 | We attempted to run extended fits on this source |

| PM_SOURCE_MODE2_EXT_FITS_FAIL | 0x00100000 | At least one of the model fits failed |

| PM_SOURCE_MODE2_EXT_FITS_RETRY | 0x00200000 | Trailed asteroid model fit was retried with new window |

| PM_SOURCE_MODE2_EXT_FITS_NONE | 0x00400000 | All of the model fits failed |

Notes. These are saved in output catalogs as the field FLAGS2, in the DVO database as Measure.photFlags2, and in the public database as Detection.infoFlag2, StackObjectThin.XinfoFlag2 (where X is one of grizy), and ForcedWarpMeasurement.FinfoFlag2.

a Not used for DR1 or DR2.
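Because each flag occupies a single bit, a catalog FLAGS2 value can be decoded with bitwise tests. The following is a minimal Python sketch rather than psphot code: it copies only a handful of the values from Table 3, and the helper name `decode_flags2` is hypothetical.

```python
# A few FLAGS2 bit values copied from Table 3 (not the full list).
FLAGS2 = {
    "PM_SOURCE_MODE2_ON_SPIKE": 0x00000008,
    "PM_SOURCE_MODE2_ON_STARCORE": 0x00000010,
    "PM_SOURCE_MODE2_PASS1_SRC": 0x00000080,
    "PM_SOURCE_MODE2_HAS_BRIGHTER_NEIGHBOR": 0x00000100,
    "PM_SOURCE_MODE2_EXT_FITS_FAIL": 0x00100000,
}


def decode_flags2(value):
    """Return the names of the listed flags whose bit is set in `value`."""
    return [name for name, bit in FLAGS2.items() if value & bit]
```

The same pattern applies to the FLAGS field with the values of the first flag table.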

4.3. Image Preparation

The first step is to prepare the image for detection of the astronomical sources. We need three separate images: the measured flux (signal image), the corresponding variance image, and a mask defining which pixels are valid and which should be ignored. The signal and variance images are represented internally as 32 bit floating point values. The variance and mask images may either be provided by the user, or they may be automatically generated from the input image, based on configuration-defined values for the image gain, read noise, saturation, and so forth. Within the IPP analysis, we normally use images that are equivalent to the digital numbers (scaled by the detrend images), but as long as the variance image is constructed in a consistent fashion, psphot can use images in electron, calibrated flux units, or other conventions (though this would require some tuning of configuration parameters). For the function-call form of the program, the flux image is provided in the API, and references to the mask and variance are provided in the configuration information. As in the standalone C program, the variance and mask may be constructed automatically by psphot.

The mask is represented as a 16 bit integer image in which a value of 0 represents a valid pixel. Each of the 16 bits defines a different reason a pixel should be ignored, as listed in Table 4. This allows us to optionally respect or ignore the mask depending on the circumstance. For example, in some cases, we ignore saturated pixels completely, while in other circumstances, it may be useful to know the flux value of the saturated pixel. In addition, the mask pixels are used to define the pixels available during a model fit; those which should be ignored for that specific fit are "marked" by setting a special bit (MARK = 0x8000). The initial mask, if not supplied by the user or library calls, is constructed by default from the image by applying three rules: (1) Pixels that are above a specified saturation level are marked as saturated. The level is specified by the camera format keyword CELL.SATURATION, which may specify a value or define a header keyword which in turn specifies the value in the image header. In the case of PS1 PV3, the header keyword MAXLIN specifies the saturation level for each chip (see Waters et al. 2020). (2) Pixels that are below a user-defined value (CELL.BAD = 0 for PV3) are considered unresponsive and masked as dead. (3) Pixels that lie outside of a user-defined coordinate window are considered nondata pixels (e.g., overscan) and are marked as invalid. The window is set by the psphot recipe keywords XMIN, XMAX, YMIN, and YMAX; these were all set to 0 for PS1 PV3, for which invalid pixels were instead specified with a supplied mask image (see Waters et al. 2020).
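The three default rules can be sketched in a few lines of Python with NumPy. This is an illustration under stated assumptions, not the psphot implementation: the function name and arguments are hypothetical, and the choice of which Table 4 bit each rule sets (SAT for saturated, LOW for dead, BLANK for nondata) is our guess.

```python
import numpy as np

# Bit values from Table 4; the mapping of rules to bits is an assumption.
MASK_SAT = 0x0020    # rule 1: saturated pixels
MASK_LOW = 0x0040    # rule 2: unresponsive ("dead") pixels
MASK_BLANK = 0x0008  # rule 3: nondata pixels outside the window


def build_default_mask(image, saturation, bad_level=0.0, window=None):
    """Build a 16 bit mask from an image; 0 marks a valid pixel.

    window is (xmin, xmax, ymin, ymax) in pixels, or None for no window.
    """
    mask = np.zeros(image.shape, dtype=np.uint16)
    mask[image > saturation] |= MASK_SAT
    mask[image < bad_level] |= MASK_LOW
    if window is not None:
        xmin, xmax, ymin, ymax = window
        inside = np.zeros(image.shape, dtype=bool)
        inside[ymin:ymax, xmin:xmax] = True
        mask[~inside] |= MASK_BLANK
    return mask
```

Keeping each reason on its own bit is what lets later steps respect or ignore a particular condition with a single bitwise AND.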

Table 4. Pixel Values for Input GPC1 Mask Images Used by psphot

| Mask Name | Mask Value | Dynamic? | Suspect? | Description |

|---|---|---|---|---|

| DETECTOR | 0x0001 | N | N | A detector defect is present. |

| FLAT | 0x0002 | N | N | The flat-field model does not calibrate the pixel reliably. |

| DARK | 0x0004 | N | N | The dark model does not calibrate the pixel reliably. |

| BLANK | 0x0008 | N | N | The pixel does not contain valid data. |

| CTE | 0x0010 | N | N | The pixel has poor charge transfer efficiency. |

| SAT | 0x0020 | Y | N | The pixel is saturated. |

| LOW | 0x0040 | Y | N | The pixel has a lower value than expected. |

| SUSPECT | 0x0080 | Y | Y | The pixel is suspected of being bad. a |

| BURNTOOL | 0x0080 | Y | Y | The pixel contains a burntool-repaired streak. |

| CR | 0x0100 | Y | N | A cosmic ray is present. |

| SPIKE | 0x0200 | Y | Y | A diffraction spike is present. |

| GHOST | 0x0400 | Y | Y | An optical ghost is present. |

| STREAK | 0x0800 | Y | Y | A streak is present. |

| STARCORE | 0x1000 | Y | Y | A bright star core is present. |

| CONV.BAD | 0x2000 | Y | N | The pixel is bad after convolution with a bad pixel. |

| CONV.POOR | 0x4000 | Y | Y | The pixel is poor after convolution with a bad pixel. |

| MARK | 0x8000 | X | X | An internal flag for temporarily marking a pixel. |

Notes. The table gives the bit value used to mark the listed effects. Bits marked as "dynamic" are set for each image based on the contents, such as the locations of bright stars. Bits marked as "suspect" represent effects that do not definitely affect the photometry, but users should be careful. The mask image headers also list these values.

a The SUSPECT bit is generic and only used if a specific reason cannot be identified. It is overloaded on the same bit as BURNTOOL.

The library functions used by psphot understand two types of masked pixels: "bad" and "suspect." Bad pixels are those that should not be used in any operations, while suspect pixels are those for which the reported signal may be contaminated or biased, but may be usable in some contexts. For example, a pixel with poor charge transfer efficiency is likely to be too untrustworthy to use in any circumstance, while a pixel in which persistence ghosts have been subtracted might be useful for detection or even analysis of brighter sources. Table 4 lists the 16 bit values used for PS1 mask images, along with their description (see Waters et al. 2020 for additional information).
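The distinction between bad and suspect pixels can be expressed as two composite bit patterns built from Table 4. The sketch below is illustrative only: it assumes that every bit not flagged as "suspect" in the table counts as "bad," it leaves out the internal MARK bit, and the function name is hypothetical.

```python
import numpy as np

# Bits grouped by the "Suspect?" column of Table 4 (MARK excluded).
SUSPECT_BITS = 0x0080 | 0x0200 | 0x0400 | 0x0800 | 0x1000 | 0x4000
BAD_BITS = (0x0001 | 0x0002 | 0x0004 | 0x0008 | 0x0010
            | 0x0020 | 0x0040 | 0x0100 | 0x2000)


def classify_pixels(mask):
    """Return boolean arrays (bad, suspect_only) for a 16 bit mask image."""
    mask = np.asarray(mask, dtype=np.uint16)
    bad = (mask & BAD_BITS) != 0
    suspect_only = ((mask & SUSPECT_BITS) != 0) & ~bad
    return bad, suspect_only
```

A detection step might then accept pixels in `suspect_only` while a precise fit excludes them.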

An important point to note is that psphot does not attempt to interpolate or replace bad pixel values in the images before processing. The GPC1 images have quite extensive masking due to both defects and natural gaps between detectors and amplifier regions. On average, roughly 71% of the full usable field of view is covered with valid pixels (see Paper III for more discussion). Any attempt to interpolate bad pixels would be quickly overwhelmed by these extensive regions. Rather than attempt to fill in the bad pixels, we rely in the PS1 PV3 processing on the fact that regions on the sky were observed many times. Thus, it should be noted that model-fitting measurements (which can naturally ignore masked pixels) should generally be more reliable than aperture-like measurements for single exposures. Aperture-like measurements from the stacks do not suffer from this masking issue. See also the discussion of the PSF_QF and PSF_QF_PERFECT parameters for judging the impact of masking on a particular source (Section 4.5.3).

The variance image, if not supplied, is constructed by default from the flux image using the configuration supplied gain and read noise values to calculate the appropriate Poisson statistics for each pixel. The parameters are determined based on the camera format keywords CELL.GAIN and CELL.READNOISE, which in the case of PS1 PV3 refer to the header keywords GAIN and RDNOISE. In this case, the image is assumed to represent the readout from a single detector, with well-defined gain and read-noise characteristics. This assumption is not always valid. For example, if the input flux image is the result of an image stack with a variable number of input measurements per pixel (due to masking and dithering), the variance cannot be calculated from the signal image alone. It is necessary in such a case to supply a variance image that accurately represents the variance as a function of position in the image.
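For a single-detector image, the default variance follows from Poisson statistics plus read noise. The sketch below assumes the image is in digital numbers (DN) with the sky still included, the gain is in electrons per DN, and the read noise is in electrons; these unit conventions and the function name are our assumptions, not taken from the psphot source.

```python
import numpy as np


def build_variance(image_dn, gain, read_noise_e):
    """Per-pixel variance in DN^2 for a single-detector image.

    In electrons the variance is signal_e + read_noise_e**2; dividing by
    gain**2 converts it back to DN^2.
    """
    signal_dn = np.clip(image_dn, 0.0, None)  # negative pixels add no Poisson term
    return signal_dn / gain + (read_noise_e / gain) ** 2
```

For example, a pixel of 100 DN with a gain of 2 and a read noise of 4 electrons has a variance of 100/2 + (4/2)^2 = 54 DN^2.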

Some image processing steps introduce cross-correlation between pixel fluxes. An obvious case is smoothing, but geometric transformations that redistribute fractional flux between neighboring pixels also introduce cross-correlations. In the noise model, it is necessary to track the impact of the cross-correlations on the per-pixel variance. In the general case, this would require a complete covariance image, consisting of the set of cross-correlated pixels for each image pixel. Because a typical smoothing or warping operation may introduce correlation between 25 and 100 neighboring pixels, the size of such a covariance image is prohibitive.

Before sources are detected in the image, a model of the background is subtracted. The image is divided into a grid of background points with a spacing defined by the psphot recipe values BACKGROUND.XBIN, BACKGROUND.YBIN, set to 400 pixels (∼100'') for PV3. Superpixels of size BACKGROUND.XSAMPLE, BACKGROUND.YSAMPLE (2 × 2 for PV3) times larger than this spacing are used to measure the local background for each background grid point, thus oversampling the background spatial variations. In the interest of speed, a subset of IMSTATS_NPIX (10,000 for PV3) randomly selected unmasked pixels in each of these regions is used to determine the background. The background value for each superpixel is determined by fitting a Gaussian distribution to the histogram of pixel values.

If the image were empty of stars and only contained flux from a uniform background sky, we would expect the distribution to be Poisson distributed and in general in a high-enough signal range to be essentially Gaussian. We fit a symmetric Gaussian to all histogram bins within 15% of the peak bin value to determine the mean and standard deviation values for the background.

If, however, the sky is not empty of stars or other sources, and we have correctly masked the large majority of nonresponsive pixels, then we expect the flux distribution of the pixels to be asymmetric with a Gaussian core representing the sky and a tail to the high end representing the pixels with astronomical source flux contributions. We would like to determine the mean of the underlying Gaussian without suffering bias from the stellar flux. We thus perform a second Gaussian fit using an asymmetric subset of the histogram pixels, fitting those histogram bins that are left of the peak but for which the bin value is greater than 25% of the peak bin, or right of the peak but only using those bins for which the bin value is greater than 50% of the peak bin value.
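The two histogram fits can be sketched as follows. This is a simplified illustration, not the psphot code: the Gaussian is fit as a parabola to the logarithm of the bin counts, "within 15% of the peak" is interpreted as bins holding more than 15% of the peak count, and the function names and the `bin_width` argument are hypothetical.

```python
import numpy as np


def fit_gaussian_to_bins(centers, counts):
    """Fit a Gaussian to histogram bins via a weighted parabola in log(counts)."""
    x0 = centers[np.argmax(counts)]
    # log N = c0 + c1*x + c2*x^2, with c2 = -1/(2 sigma^2) and c1 = mean/sigma^2
    c2, c1, _ = np.polyfit(centers - x0, np.log(counts), 2, w=np.sqrt(counts))
    sigma = np.sqrt(-0.5 / c2)
    mean = x0 - 0.5 * c1 / c2
    return mean, sigma


def sky_estimates(pixels, bin_width, sym_frac=0.15, low_frac=0.25, high_frac=0.50):
    """Return ((mean, sigma) symmetric fit, (mean, sigma) asymmetric fit)."""
    edges = np.arange(pixels.min(), pixels.max() + bin_width, bin_width)
    counts, edges = np.histogram(pixels, bins=edges)
    centers = 0.5 * (edges[:-1] + edges[1:])
    ipk = np.argmax(counts)
    peak = counts[ipk]
    idx = np.arange(counts.size)
    sym = counts > sym_frac * peak
    # Keep more of the faint side and less of the bright side of the peak.
    asym = ((idx <= ipk) & (counts > low_frac * peak)) | (
        (idx > ipk) & (counts > high_frac * peak))
    return (fit_gaussian_to_bins(centers[sym], counts[sym]),
            fit_gaussian_to_bins(centers[asym], counts[asym]))
```

Restricting the bright side to bins above half of the peak keeps the tail of source flux out of the fit, which is why the asymmetric fit is expected to be less biased in crowded fields.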

If the fit to the asymmetric lower fraction of the curve is less than the symmetric fit but greater than the above lower bound of the full symmetric fit, then the lower fraction value is kept as the true mean sky value for this superpixel. Table 5 shows a comparison of this technique to several other methods to measure the sky background using simulated data with a range of stellar densities. The stellar density listed in the table is the number of stars per square degree at the 5σ detection limit in the lowest-density image. In our simulations, we find that as the stellar density rises to values typical in the Galactic plane regions, this technique results in a more accurate estimate of the background, though it still overestimates the background compared to the truth.

Table 5. Comparison of Background Measurement Methods

| Density (log stars deg$^{-2}$) | True Sky | Image Mean | Image Median | Gauss Fit | psphot Value |

|---|---|---|---|---|---|

| 4.2 | 202.8 | 203.3 | 202.8 | 202.8 | 202.9 |

| 4.7 | 202.8 | 204.9 | 203.1 | 203.0 | 203.0 |

| 5.2 | 202.8 | 210.6 | 204.0 | 203.5 | 203.5 |

| 5.7 | 202.8 | 233.9 | 207.4 | 205.4 | 205.3 |

| 6.2 | 202.8 | 300.9 | 219.7 | 211.2 | 210.6 |

| 6.7 | 202.8 | 534.6 | 286.2 | 242.8 | 233.9 |

Note. Backgrounds were measured for simulated images with the given stellar density (at the low-density detection threshold) and known background level. The psphot technique is less biased at high stellar densities.


Bilinear interpolation is used to generate a full-resolution image from the grid of background points, and this image is then subtracted from the science image. The background image and the background standard deviation image are kept in memory from which the values of SKY and SKY_SIGMA are calculated for each source in the output catalog. For more details of the background subtraction, see the discussion in Section 3.11 of Waters et al. (2020).
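Bilinear interpolation from a coarse grid to the full image is separable, so it can be done as two passes of 1D linear interpolation. The sketch below is a minimal illustration; the function name and the convention that `xs` and `ys` hold the pixel coordinates of the grid points are assumptions. Pixels outside the grid take the nearest edge value, which is a property of `np.interp` and not necessarily how psphot extrapolates.

```python
import numpy as np


def expand_background(grid, xs, ys, shape):
    """Bilinearly interpolate grid[j, i], sampled at (xs[i], ys[j]), to `shape`."""
    ny, nx = shape
    x = np.arange(nx)
    y = np.arange(ny)
    # Pass 1: interpolate each grid row along x.
    rows = np.array([np.interp(x, xs, g) for g in grid])
    # Pass 2: interpolate each full-resolution column along y.
    return np.array([np.interp(y, ys, rows[:, i]) for i in range(nx)]).T
```

The science image would then be corrected with `image - expand_background(...)`, and the same routine can expand the grid of background standard deviations.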

Because the subtraction of the sky model suppresses larger-scale structures, features such as large galaxies that are comparable to the superpixel size are adversely affected by the subtraction. Photometry for galaxies larger than ∼30'' is unreliable as a result. The superpixel size used for the sky model in the PV3 analysis was chosen as a compromise between the need to follow bright features with small spatial scales and the desire to measure photometry of galaxies of sizes up to at least 30''. Features that we wished to suppress include both astronomical sources, such as bright nebulosity and the wings of bright stars, and non-astronomical sources, such as moonlight and other scattered-light sources. In some contexts, we have used a finer spacing for the background model, such as in the dedicated analysis of the photometry of the Andromeda galaxy, where we are only interested in stellar sources, and the analysis is otherwise badly affected by the background from this galaxy.

4.4. Initial Source Detection

4.4.1. Peak Detection

The initial source detection step is focused on finding and identifying the brighter point sources. The goals are twofold: (1) to select sources that can be used to model the PSF and (2) to subtract the brighter sources so that fainter sources may be found throughout the image.

The sources are initially detected by finding the location of local peaks in the image. The flux and variance images are smoothed with a small circularly symmetric kernel using a two-pass 1D Gaussian. The smoothed flux and variance images are combined to generate a significance image in signal-to-noise units, including correction for the covariance, if known. At this stage, the goal is only to detect the brighter sources, above a user-defined signal-to-noise ratio (S/N) limit (PEAKS_NSIGMA_LIMIT = 20.0 for PV3). A maximum of PEAKS_NMAX (5000 for PV3) peaks is found at this stage.
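One conventional way to build such a significance image is sketched below. It is an assumption about the details rather than a description of psphot: the flux is smoothed with the kernel, the variance is propagated with the squared kernel (valid for uncorrelated pixel noise), and no covariance correction is applied. All function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(sigma, nsigma=3.0):
    """Normalized 1D Gaussian kernel truncated at nsigma * sigma."""
    half = int(np.ceil(nsigma * sigma))
    x = np.arange(-half, half + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def smooth_separable(image, k):
    """Two-pass smoothing: convolve every row with k, then every column."""
    tmp = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, image)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, tmp)


def significance_image(flux, variance, sigma):
    """Smoothed flux divided by the propagated noise of the smoothed flux."""
    k = gaussian_kernel_1d(sigma)
    smoothed_flux = smooth_separable(flux, k)
    # Var(sum k_i f_i) = sum k_i^2 var_i; the squared 2D kernel is also separable.
    smoothed_var = smooth_separable(variance, k ** 2)
    return smoothed_flux / np.sqrt(smoothed_var)
```

Pixels of this image above the PEAKS_NSIGMA_LIMIT threshold are the candidates for the peak search.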

For an image with a Gaussian PSF of the same size, this method would represent the optimal detection algorithm, equivalent to a matched filter. At this stage, our goal is simply to detect the brighter sources, so the exact size and shape of the PSF are not critical. The detection efficiency for the brighter sources is not strongly dependent on the form of this smoothing function. Instead, our goal with the smoothing kernel is to reduce our sensitivity to pixel-to-pixel fluctuations in the location of the peak of the sources in the image.

The local peaks in the smoothed image are found by first detecting local peaks in each row. For each peak, the neighboring pixels are then examined, and the peak is accepted or rejected depending on a set of simple rules. The rules are defined so that we choose a unique set of peaks that are not immediately adjacent to other peaks. First, any peak that is greater than all eight neighboring pixels is kept. Any peak that is lower than any of the eight neighboring pixels is rejected. Any peak that has the same value as any of the other eight pixels is kept if the pixel X and Y coordinates are greater than or equal to the other equal-value pixels. This last rule means that a flat-topped region will result in peaks at the maximum X and Y corners of the region.
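The acceptance rules, including the tie-break for flat-topped regions, can be illustrated with a direct scan of the eight neighbors. This sketch skips the row-by-row preselection and ignores pixels on the image border; both simplifications, and the function name, are ours.

```python
import numpy as np


def find_peaks(sig, threshold):
    """Return (x, y) of unique local peaks in a significance image."""
    peaks = []
    ny, nx = sig.shape
    for y in range(1, ny - 1):
        for x in range(1, nx - 1):
            v = sig[y, x]
            if v < threshold:
                continue
            keep = True
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    if dx == 0 and dy == 0:
                        continue
                    n = sig[y + dy, x + dx]
                    # Reject if a neighbor is higher, or is equal and lies at
                    # a larger X or Y coordinate (tie goes to the max corner).
                    if n > v or (n == v and (dx > 0 or dy > 0)):
                        keep = False
            if keep:
                peaks.append((x, y))
    return peaks
```

For a 2 × 2 block of equal values, only the pixel at the maximum X and Y corner survives.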

We use the 9 pixels that include the source peak to fit for the position and position errors. We model the peak of the sources as a 2D quadratic polynomial and use a very simple biquadratic fit to these pixels. We use the following function to describe the peak:

$$f(x, y) = C_{00} + C_{10} x + C_{01} y + C_{11} x y + C_{20} x^2 + C_{02} y^2,$$

and write the chi-square equation:

$$\chi^2 = \sum_i \frac{\left[F_i - f(x_i, y_i)\right]^2}{\sigma_i^2},$$

where $F_i$ is the flux in pixel $i$ and $\sigma_i$ is its error. By approximating the error per pixel as the Poisson error on just the peak, pulling that term out of the above equation, and recognizing that the pixel offsets $x_i$ and $y_i$ in the 3 × 3 grid centered on the peak pixel take only the values 0 and ±1, we can greatly simplify the chi-square equation to a square matrix equation with the following values:

$$\begin{pmatrix} 9 & 6 & 6 \\ 6 & 6 & 4 \\ 6 & 4 & 6 \end{pmatrix} \begin{pmatrix} C_{00} \\ C_{20} \\ C_{02} \end{pmatrix} = \begin{pmatrix} \sum_i F_i \\ \sum_i x_i^2 F_i \\ \sum_i y_i^2 F_i \end{pmatrix}, \qquad 6\,C_{10} = \sum_i x_i F_i, \quad 6\,C_{01} = \sum_i y_i F_i, \quad 4\,C_{11} = \sum_i x_i y_i F_i.$$

Inverting the 3 × 3 matrix terms for $C_{00}$, $C_{20}$, and $C_{02}$, the location of the peak is determined from the extremum of the biquadratic function above and is given by

$$x_0 = \frac{C_{11} C_{01} - 2 C_{02} C_{10}}{4 C_{20} C_{02} - C_{11}^2}, \qquad y_0 = \frac{C_{11} C_{10} - 2 C_{20} C_{01}}{4 C_{20} C_{02} - C_{11}^2}.$$

The resulting peak position, $(x_0, y_0)$, is used as the default starting coordinate for the source. Later in the psphot analysis, improved measurements of the source positions are calculated as discussed below.
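A biquadratic fit to the 3 × 3 pixels around a peak has a closed-form least-squares solution, because the pixel offsets only take the values −1, 0, and +1. The sketch below assumes the six-term polynomial $f = C_{00} + C_{10}x + C_{01}y + C_{11}xy + C_{20}x^2 + C_{02}y^2$ with equal weights on all nine pixels; the function name is hypothetical and the position errors are not computed.

```python
import numpy as np


def fit_peak_3x3(stamp):
    """Sub-pixel peak offset (x0, y0) from a 3x3 stamp centered on the peak.

    stamp[j, i] is the flux at offset x = i - 1, y = j - 1.
    """
    F = np.asarray(stamp, dtype=float)
    y, x = np.mgrid[-1:2, -1:2]
    # The odd-order terms decouple: sum x^2 = sum y^2 = 6, sum x^2 y^2 = 4.
    c10 = (x * F).sum() / 6.0
    c01 = (y * F).sum() / 6.0
    c11 = (x * y * F).sum() / 4.0
    # The even-order terms share a 3x3 system for (C00, C20, C02).
    A = np.array([[9.0, 6.0, 6.0], [6.0, 6.0, 4.0], [6.0, 4.0, 6.0]])
    b = np.array([F.sum(), (x * x * F).sum(), (y * y * F).sum()])
    _, c20, c02 = np.linalg.solve(A, b)
    # Set the gradient of f to zero and solve the 2x2 linear system.
    det = 4.0 * c20 * c02 - c11 ** 2
    x0 = (c11 * c01 - 2.0 * c02 * c10) / det
    y0 = (c11 * c10 - 2.0 * c20 * c01) / det
    return x0, y0
```

The returned offsets are added to the integer coordinates of the peak pixel to obtain the starting position of the source.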

4.4.2. Footprints

The peaks detected in the image may correspond to real sources, but they may also correspond to noise fluctuations, especially in the wings of bright stars. psphot attempts to identify peaks that may be formally significant but are not locally significant. It first generates a set of "footprints," contiguous collections of pixels in the smoothed significance image above the detection threshold (PEAKS_NSIGMA_LIMIT). These regions are grown by a small amount to avoid errors on rough edges—an image of the footprints is convolved with a disk of radius FOOTPRINT_GROW_RADIUS (3 pixels for PV3). Peaks are assigned to the footprints in which they are contained (note by construction all peaks must be located in a footprint because the peaks must be above the detection threshold).
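Footprint construction amounts to thresholding, growing, and labeling connected regions. The sketch below is an illustration under assumptions: it grows the thresholded image with a disk before labeling (so footprints that touch after growing merge), it uses 8-connectivity, and the function name is hypothetical.

```python
import numpy as np


def find_footprints(sig, threshold, grow_radius=3):
    """Label contiguous regions above threshold after growing by a disk.

    Returns (labels, n): an integer label image (0 = no footprint) and the
    number of footprints.
    """
    above = sig > threshold
    ny, nx = above.shape
    grown = np.zeros_like(above)
    ys, xs = np.nonzero(above)
    r = int(grow_radius)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dx * dx + dy * dy <= grow_radius ** 2:
                yy, xx = ys + dy, xs + dx
                ok = (yy >= 0) & (yy < ny) & (xx >= 0) & (xx < nx)
                grown[yy[ok], xx[ok]] = True
    labels = np.zeros(above.shape, dtype=int)
    n = 0
    for y0, x0 in zip(*np.nonzero(grown)):
        if labels[y0, x0]:
            continue
        n += 1
        labels[y0, x0] = n
        stack = [(y0, x0)]
        while stack:  # flood fill over the 8 neighbors
            y, x = stack.pop()
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if (0 <= yy < ny and 0 <= xx < nx
                            and grown[yy, xx] and not labels[yy, xx]):
                        labels[yy, xx] = n
                        stack.append((yy, xx))
    return labels, n
```

Each peak is then assigned to the footprint `labels[y_peak, x_peak]`.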

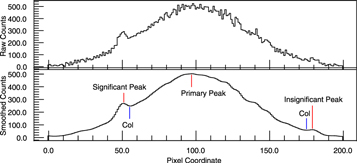

For any peak that is not the brightest peak in that footprint, it is possible to reach the brightest peak by following a sequence of the highest valued pixels between the two peaks. The lowest pixel along this (potentially meandering) path is the key col for this peak (as used in topographic descriptions of a mountain). If the key col for a given peak is less than FOOTPRINT_CULL_NSIGMA_DELTA (4.0 for PV3) sigmas below the peak of interest, the peak is considered to be locally insignificant and removed from the list of possible detections (see Figure 1). If more than one such path is possible, the path with the highest key col is used for this test. In the vicinity of a saturated star, the rule is somewhat more aggressive as the flat-topped or structured saturated top of a bright star may appear as multiple peaks with highly significant cols between them. However, this is an artifact of the proximity to saturation. Sources for which the peak is greater than 50% of the saturation value require the col to also be a fixed fraction (5%) of the saturation below the peak to avoid being marked as locally insignificant.

Figure 1. Illustration of peak finding and culling peaks within a footprint. Insignificant peaks within the footprint of a brighter peak are ignored in further processing. Note that this 1D illustration is representative of the full 2D path that may be followed from one peak to the next.
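The culling rule is easiest to see in one dimension, where there is only one path between two peaks and the key col is simply the minimum between them. The sketch below covers only this 1D case; it omits the 2D path search and the extra rule near saturation, and the function name is hypothetical.

```python
import numpy as np


def cull_peaks_1d(sig, peaks, nsigma_delta=4.0):
    """Keep peaks whose key col lies at least nsigma_delta below the peak.

    sig is a 1D significance profile (S/N units) across one footprint and
    peaks is a list of indices of the local peaks in that footprint.
    """
    brightest = max(peaks, key=lambda i: sig[i])
    kept = [brightest]
    for p in peaks:
        if p == brightest:
            continue
        lo, hi = sorted((p, brightest))
        col = sig[lo:hi + 1].min()  # lowest point on the path to the brightest peak
        if sig[p] - col >= nsigma_delta:
            kept.append(p)
    return sorted(kept)
```

In the test profile used to check this sketch, a secondary peak only 2 sigma above its col is dropped, while one 12 sigma above its col is retained.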

Sometimes, it is useful to know if a source has a near neighbor that may be affecting the photometry. Three flag bits are used to identify such possible situations. Peaks that are not the brightest peak within a single footprint have the flag bit PM_SOURCE_MODE2_HAS_BRIGHTER_NEIGHBOR set. This is a fairly common situation. We also define the following ratio to compare the flux of the bright source to the flux of a neighbor scaled by intervening area: $F_n / (r^2 F_s)$, where $F_n$ is the flux of the brightest neighbor in the footprint, $F_s$ is the flux of the source of interest, and $r$ is the separation between the two sources. If $F_n / (r^2 F_s) > 1$, the flag bit PM_SOURCE_MODE2_BRIGHT_NEIGHBOR_1 is set. If $F_n / (r^2 F_s) > 10$, the flag bit PM_SOURCE_MODE2_BRIGHT_NEIGHBOR_10 is set.
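The three neighbor flags can be sketched as follows. The form of the ratio, $F_n / (r^2 F_s)$ with the separation $r$ in pixels, and the thresholds of 1 and 10 are assumptions inferred from the flag names and the surrounding description; the function name is hypothetical, and the bit values are those listed in Table 3.

```python
# Bit values from Table 3.
HAS_BRIGHTER_NEIGHBOR = 0x00000100
BRIGHT_NEIGHBOR_1 = 0x00000200
BRIGHT_NEIGHBOR_10 = 0x00000400


def neighbor_flags(flux_source, flux_neighbor, separation, is_brightest):
    """Return the FLAGS2 bits describing the brightest neighbor in the footprint."""
    flags = 0
    if not is_brightest:
        flags |= HAS_BRIGHTER_NEIGHBOR
    # Neighbor flux relative to the source, scaled by the intervening area.
    ratio = flux_neighbor / (separation ** 2 * flux_source)
    if ratio > 1.0:
        flags |= BRIGHT_NEIGHBOR_1
    if ratio > 10.0:
        flags |= BRIGHT_NEIGHBOR_10
    return flags
```

Under these assumptions, a neighbor 500 times brighter at a separation of 5 pixels gives a ratio of 20 and sets all three bits.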

4.4.3. Centroid and Higher-order Moments

Once a collection of peaks has been identified, a number of basic properties of the sources related to the first, second, and higher moments are measured. These moments can be used for a crude classification of the sources. As discussed below, the second moments are used to select candidate stellar sources to be used in modeling the PSF and to identify "cosmic rays" and extended sources. The radial moment is used in the measurement of the Kron magnitudes (Kron 1980). The higher-order moments are provided primarily for image quality diagnostics.

In order to measure the moments, it is necessary to define an appropriate aperture in which the moments are measured. We also apply a "window function," down-weighting the pixels by a Gaussian, centered on the object, with size $\sigma_w$ chosen to be large compared to the PSF size, $\sigma_{\rm PSF}$. This window function reduces the noise of the measurement of the moments by suppressing the noisy pixels at high radial distance as well as by reducing the contaminating effects of neighboring stars. The choice of $\sigma_w$ and the aperture is an iterative process: for a given value of $\sigma_w$, the PSF stars will have a measured value of the PSF size, $\sigma_{\rm obs}$, which is different from the true value due to the effect of the window function. The measured value of the PSF size will be biased high or low depending on both the signal-to-noise of the source and the size of the window function compared to the true PSF size.

These effects are illustrated in Figure 2 using simulated data. An image was generated with a PSF model matching the radial profile of the PS1 PSF model, with a size corresponding to an FWHM of 1.4''. For bright stars, as the window function size $\sigma_w$ is increased, the measured FWHM rises from an initially underestimated value to meet the truth value. For faint stars, the measured value of the FWHM is initially underestimated as well. However, as the value of $\sigma_w$ increases, the measured FWHM for faint stars rises and then overshoots the truth value, while the scatter increases. Thus, for large values of $\sigma_w$, the result is both a poorly estimated FWHM for the image and a trend with the S/N of the star. We attempt to minimize the scatter and trends with instrumental magnitude at the cost of overall bias.

Figure 2. Example of the biases encountered when measuring the second moments. A simulated image was generated using the PS1 PSF profile. Panels (a)–(e) each correspond to a different value of the window size $\sigma_w$, with the window FWHM values as marked. The solid red line is the true FWHM of the PSF used to generate the stars (1.4'' in all cases). The blue solid line is the FWHM of the window function. The gray dots are the FWHM derived from the measured second moments for stars in the image. The median of this distribution (for stars passing a magnitude cut) is listed as "obs." The ratio of the median FWHM to the FWHM of the window function is listed as "ratio," while the ratio of the median FWHM to the true stellar FWHM is listed as "bias." The dotted blue line is the target (65% of the window function). In this example, we would choose $\sigma_w$ between 0.5'' and 0.8'' (FWHM between 2.64'' and 3.52''), so the dotted blue line would match the bright end of the gray dots. See discussion in the text for the choice of target window.

In a real image, we do not know the true value of the PSF size. If we simply choose a very large window function and rely on bright stars, our estimate of the PSF size will be quite noisy. Compounding this problem are the two additional facts that (1) we do not know which are the real stars (as opposed to bright galaxies or possible image artifacts) and (2) the brighter stars are themselves subject to additional biases due to saturation and other nonlinear effects (cf. the "brighter-fatter" effect; Antilogus et al. 2014; Gruen et al. 2015). To make a robust choice for the window size $\sigma_w$, we choose a value such that the measured PSF size, $\sigma_{\rm obs}$, is 65% of $\sigma_w$. The resulting second-moment values are biased somewhat low (∼75% of the truth value for the PS1 PSF profile) but are relatively unbiased as a function of brightness.

To choose the value of the window size $\sigma_w$, we try a sequence of values spanning a range guaranteed to contain any reasonable seeing values. The values are specified in the psphot recipe as PSF.SIGMA.VALUES and have the following values for PS1 PV3: (1, 2, 3, 4.5, 6, 9, 12, 18) pixels ∼ (0.26, 0.51, 0.77, 1.15, 1.54, 2.3, 3.1, 4.6)''. For each of these $\sigma_w$ values, we then select candidate PSF stars based on the distribution of the measured second moments in the two principal directions (see Section 4.5.2, below). For each test value of $\sigma_w$, we determine the ratio $\sigma_{\rm obs}/\sigma_w$, i.e., the ratio of the observed PSF size to the window size. We interpolate to find a value of $\sigma_w$ for which this ratio is expected to be 0.65. We use an aperture with a radius scaled to $\sigma_w$ to select the pixels for the measurement of the moments.
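The interpolation step can be sketched as a search for the pair of trial window sizes that brackets the target ratio. This is a minimal illustration assuming linear interpolation between adjacent trial values; the function name and arguments are hypothetical, and the observed sizes would come from the candidate PSF stars measured at each trial window.

```python
import numpy as np


def choose_window_sigma(test_sigmas, observed_sizes, target=0.65):
    """Interpolate the window size at which observed/window equals `target`.

    Returns None if no adjacent pair of trial values brackets the target.
    """
    s = np.asarray(test_sigmas, dtype=float)
    ratio = np.asarray(observed_sizes, dtype=float) / s
    for i in range(len(s) - 1):
        r0, r1 = ratio[i], ratio[i + 1]
        if (r0 - target) * (r1 - target) <= 0.0 and r0 != r1:
            t = (target - r0) / (r1 - r0)
            return s[i] + t * (s[i + 1] - s[i])
    return None
```

With the PV3 trial values, the spacing is coarse enough that a second, finer pass around the returned value could be used if more precision were needed.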

Once the window size $\sigma_w$ has been determined, the moments are measured as window-weighted sums over the pixels, where $f_i$ is the flux in a pixel; $s_i$ is the local sky value for that pixel; $w_i$ is the value of the window function for the pixel; the window-weighted sum of the source flux, $\sum_i (f_i - s_i)\, w_i$, is used to renormalize the moments; and $r_i$ is the radius of a pixel, $r_i = \sqrt{x_i^2 + y_i^2}$, with $x_i$ and $y_i$ measured from the source center. The sums are performed over all (unmasked) pixels in the aperture. For the centroid calculation (the first moments), the peak coordinate (see Section 4.4.1) is used to define the aperture and the window function; for higher-order moments, the centroid is used to center the window function.
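A sketch of the centroid and second-moment measurement is given below. It is an illustration under assumptions, not the psphot definitions: the window is taken to be $w_i = \exp(-r_i^2 / 2\sigma_w^2)$, each moment is a sum of $(f_i - s_i)\, w_i$ times powers of the pixel offsets divided by $\sum_i (f_i - s_i)\, w_i$, the second moments are taken about the centroid, and the function names and the `radius` argument are hypothetical.

```python
import numpy as np


def window_weighted_flux(image, sky, mask, xc, yc, sigma_w, radius):
    """Sky-subtracted flux times a Gaussian window, zero outside the aperture."""
    yy, xx = np.indices(image.shape)
    r2 = (xx - xc) ** 2 + (yy - yc) ** 2
    window = np.exp(-0.5 * r2 / sigma_w ** 2)
    use = (r2 <= radius ** 2) & (mask == 0)
    return np.where(use, (image - sky) * window, 0.0), xx, yy


def measure_moments(image, sky, mask, x_peak, y_peak, sigma_w, radius):
    """Return the centroid and the second moments about the centroid."""
    # Centroid: aperture and window centered on the peak coordinate.
    fw, xx, yy = window_weighted_flux(image, sky, mask, x_peak, y_peak,
                                      sigma_w, radius)
    xc = (xx * fw).sum() / fw.sum()
    yc = (yy * fw).sum() / fw.sum()
    # Second moments: window re-centered on the centroid.
    fw, xx, yy = window_weighted_flux(image, sky, mask, xc, yc, sigma_w, radius)
    norm = fw.sum()
    dx, dy = xx - xc, yy - yc
    return {
        "x": xc,
        "y": yc,
        "Mxx": (dx * dx * fw).sum() / norm,
        "Mxy": (dx * dy * fw).sum() / norm,
        "Myy": (dy * dy * fw).sum() / norm,
    }
```

For a Gaussian source of size 2 pixels and a window of size 6 pixels, this returns a second moment near 3.6 rather than the true value of 4, which illustrates the low bias introduced by the window.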

The motivation for measuring these higher-order moments was to select exposures with image quality problems. For example, trefoil caused by errors in the collimation and alignment can in principle be detected with the third-order moments. In our experience, these statistics can be used to select some images with such problems, but we have not been able to use these values to exclude poor images from the data processing. If we were to reject images based on these moments, we would reject too many images with image quality issues that are not so poor as to preclude a useful analysis. A future machine-learning-based analysis starting with these moments might potentially provide a better rejection statistic, but such work is beyond the scope of this article.

For sources with peak flux above the saturation limit, the moments are generally poorly measured if the aperture defined by the window size $\sigma_w$ is used. For these sources, the quality of the measurement is compromised by the saturation. However, it is still useful to estimate the first and second moments of the source in order to allow a crude measurement of the brightness from the wings of the source. In this case, a larger aperture, three times the standard aperture, is used to make a crude estimate. For such sources, the flag bit PM_SOURCE_MODE_BIG_RADIUS is set, and the source is ignored in all analyses below except for the analysis applied to very bright stars (Section 4.6.1).

If the measured centroid coordinates ( ) differ from the peak coordinates by a large amount (1.5 ), then the peak is identified as being of poor quality and is skipped in further analyses; the flag bit PM_SOURCE_MOMENTS_FAILURE is set for such sources. In such a case, it is likely that the "peak" was identified in a region of flat flux distribution or many saturated or edge pixels. During the analysis of the moments, the background ("sky") model is also examined at the location of each source. The value of the background and the variance of the background are recorded for each source. In some cases, the sky model or the variance is not well defined at the location of a specific source (e.g., due to an extrapolation failure). In these cases, the flag bit PM_SOURCE_SKY_FAILURE or PM_SOURCE_SKYVAR_FAILURE is set as appropriate, and the measurement of the moments is skipped.
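The flagging logic described above can be sketched as follows. This is a minimal illustration, not the psphot implementation: only the flag names come from the text, while the bit values, the function name `moment_flags`, the use of a Euclidean centroid-to-peak distance, and the convention that an undefined sky value is passed as `None` are all assumptions.

```python
import math

# Hypothetical bit values; only the flag names are taken from the text.
PM_SOURCE_MOMENTS_FAILURE = 1 << 0
PM_SOURCE_SKY_FAILURE = 1 << 1
PM_SOURCE_SKYVAR_FAILURE = 1 << 2


def moment_flags(peak, centroid, max_offset, sky, sky_var):
    """Return the flag bits raised for one source.

    peak, centroid: (x, y) positions.
    max_offset: largest accepted centroid-to-peak distance, in the same
        units as the positions (the threshold is left to the caller).
    sky, sky_var: background value and variance at the source position,
        or None where the model is undefined (assumed convention).
    """
    flags = 0
    if sky is None:
        flags |= PM_SOURCE_SKY_FAILURE
    if sky_var is None:
        flags |= PM_SOURCE_SKYVAR_FAILURE
    if flags:
        # The moments are not measured when the sky model is unusable.
        return flags
    offset = math.hypot(centroid[0] - peak[0], centroid[1] - peak[1])
    if offset > max_offset:
        flags |= PM_SOURCE_MOMENTS_FAILURE
    return flags
```

A source whose flags are nonzero would then be excluded from the later analysis stages.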

In addition to the moments above, the first and half-radial moments, Mr and Mh as defined below, are calculated:

Note that the window function is not applied in the calculation of these moments.
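As an illustration of these two quantities, the sketch below assumes the conventional flux-weighted forms, i.e. the sum of r f divided by the sum of f for Mr, and the sum of sqrt(r) f divided by the sum of f for Mh, with no window function applied. The function name and the pixel-list input format are hypothetical.

```python
import math


def radial_moments(pixels, x0, y0):
    """Return (Mr, Mh) for a source centered at (x0, y0).

    pixels: iterable of (x, y, flux) with the sky already subtracted.
    Assumes flux-weighted means of r and sqrt(r); no window is applied.
    """
    sum_f = 0.0
    sum_rf = 0.0
    sum_hf = 0.0
    for x, y, f in pixels:
        r = math.hypot(x - x0, y - y0)
        sum_f += f
        sum_rf += r * f
        sum_hf += math.sqrt(r) * f
    if sum_f <= 0.0:
        raise ValueError("total flux must be positive")
    return sum_rf / sum_f, sum_hf / sum_f
```

For two pixels of equal flux at radii 1 and 4, this gives Mr = 2.5 and Mh = 1.5.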

With the first radial moment, we can calculate a preliminary Kron radius and magnitude. Kron magnitudes are provided as an option for galaxy photometry. In addition, the comparison of Kron and PSF magnitudes is useful as a star–galaxy separator. The Kron radius (Kron 1980) is defined to be 2.5× the first radial moment. The Kron flux is the sum of (sky-subtracted) pixel fluxes within the Kron radius. We also calculate the flux in two related annular apertures: the Kron inner flux is the sum of pixel values for the annulus  , while the Kron outer flux is the sum of pixel values for  . The first radial moment is limited at the low and high ends by  , where  is the first radial moment of the PSF stars, or  if that cannot be determined.  is set to the size of the moment's aperture,  . These Kron measurements are performed for all sources with a valid set of moments. At this stage, the Kron parameters are preliminary, as the aperture has been chosen as a fixed size relative to the size of the PSF. At a later stage, higher-quality Kron parameters appropriate to galaxies are measured with more care paid to the exact aperture used (Section 4.6.4).
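A minimal sketch of the preliminary Kron measurement follows. The factor of 2.5 is from the text; the function name, the whole-pixel inclusion rule (a pixel counts if its center lies within the radius), and the caller-supplied limits `r_min` and `r_max` on the first radial moment are assumptions made for illustration.

```python
import math


def kron_flux(pixels, x0, y0, m_r, r_min, r_max, scale=2.5):
    """Return (Kron radius, Kron flux) for a source at (x0, y0).

    pixels: iterable of (x, y, flux), sky-subtracted.
    m_r: first radial moment; clamped to [r_min, r_max] before use.
    """
    m_r = min(max(m_r, r_min), r_max)
    r_kron = scale * m_r
    # Sum every pixel whose center falls inside the Kron radius.
    flux = sum(f for x, y, f in pixels
               if math.hypot(x - x0, y - y0) <= r_kron)
    return r_kron, flux
```

The inner and outer annular fluxes could be computed the same way by replacing the single radius test with a test on a pair of bounding radii.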

4.5. PSF Determination

4.5.1. PSF Model versus Source Model

The PSF of an image describes the shape of all unresolved sources in the image. In a typical wide-field image, the shape of unresolved sources varies as a function of position in the image. The full PSF thus needs to include a model with parameters that vary across the image.

The PSF used by psphot consists of an analytical function combined with a pixelized representation of the residual differences between the analytical model and the true PSF. Both the shape parameters of the analytical model and the pixelized residual differences are allowed to vary in two dimensions across the images.

Within psphot, several analytical models may be used to describe the smooth portion of the PSF, but all share a few common characteristics. As an example, a simple model consists of a 2D elliptical Gaussian:

Here, the model parameters consist of the centroid coordinates ( ), the elliptical shape parameters ( ), the model normalization (Io), and the local value of the background (S).
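To make the roles of these parameters concrete, the sketch below evaluates one common parameterization of a 2D elliptical Gaussian on top of a constant background. The specific form of the exponent (two widths plus a cross term) and all argument names are assumptions for illustration and may differ from the convention used in psphot.

```python
import math


def elliptical_gaussian(x, y, x0, y0, sx, sy, sxy, i0, sky):
    """Evaluate i0 * exp(-z) + sky at pixel position (x, y).

    x0, y0: centroid; sx, sy, sxy: shape terms (assumed convention);
    i0: normalization; sky: local background level.
    """
    dx = x - x0
    dy = y - y0
    z = dx * dx / (2.0 * sx * sx) + dy * dy / (2.0 * sy * sy) + sxy * dx * dy
    return i0 * math.exp(-z) + sky
```

In this form the shape terms would be supplied by the PSF model at the source position, while the centroid and normalization remain free for each source.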

A specific source will have a particular set of values for the model parameters, some of which depend on the PSF model and the position of the source in the image, while the rest are unique to the individual source. For the case of the elliptical Gaussian model, the PSF parameters would be the shape terms ( ) while the independent parameters would be the centroid, normalization, and local sky values ( ), though as noted below (Section 4.6.6), in practice we do not allow the sky to be fitted independently as we subtract the background model. Thus, the shape parameters are each a function of the source centroid coordinates:

psphot represents the variation in the PSF parameters as a function of position in the image in one of two ways, specified by the configuration. The first option is to use a 2D polynomial that is fitted to the measured parameter values across the image. The second option is to use a grid of values that are measured for sources within a subregion of the image. In the latter case, the value at a specific coordinate in the image is determined via bilinear interpolation between the nearest grid points. The order of the polynomial or the sampling size of the grid is dynamically determined depending on the number of available PSF stars. In the case of the PV3 analysis, the grid of values was used, with a maximum of 6 × 6 samples per GPC1 chip image (grid cells of size ∼3.4′). For the earlier PV2 analysis, the maximum grid sampling was 3 × 3 per GPC1 chip image (grid cells of size ∼6.9′). For the PV1 analysis, the polynomial representation was used, with up to third-order terms. The higher-order representation was used for PV3 in order to follow some of the observed PSF variations in the images.
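The grid option can be illustrated with a short bilinear interpolation routine. This is a sketch under stated assumptions: grid values are taken to sit at the centers of equal-sized cells, positions outside the outermost cell centers reuse the edge values, and the function name and argument layout are hypothetical.

```python
def bilinear(grid, x, y, width, height):
    """Interpolate a PSF parameter at image position (x, y).

    grid[j][i]: value at the center of cell (i, j) of an image that is
    width by height pixels. Edge handling (clamping) is an assumption.
    """
    ny, nx = len(grid), len(grid[0])
    # Convert image coordinates to fractional grid-index coordinates.
    gx = min(max(x / width * nx - 0.5, 0.0), nx - 1.0)
    gy = min(max(y / height * ny - 0.5, 0.0), ny - 1.0)
    # Lower-left grid point of the enclosing cell of four samples.
    i0 = min(int(gx), nx - 2) if nx > 1 else 0
    j0 = min(int(gy), ny - 2) if ny > 1 else 0
    i1 = min(i0 + 1, nx - 1)
    j1 = min(j0 + 1, ny - 1)
    tx, ty = gx - i0, gy - j0
    low = grid[j0][i0] * (1.0 - tx) + grid[j0][i1] * tx
    high = grid[j1][i0] * (1.0 - tx) + grid[j1][i1] * tx
    return low * (1.0 - ty) + high * ty
```

With a 2 × 2 grid `[[1, 3], [5, 7]]` on a 100 × 100 pixel image, the value at the image center (50, 50) is 4, the mean of the four samples.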

Figure 3 illustrates the 2D variations in the PSF shapes seen in PS1 data. This figure shows the FWHM and e1 and e2 polarizations of the stars as a function of position in four exposures. For images with good image quality, variations of the PSF shape due to the optical aberrations can be seen. The optical aberrations vary as the active collimation and alignment are adjusted and as the focus changes. These aberrations are coupled to the piston of the chips, which have been adjusted to crudely follow the focal surface (Chambers et al. 2016). During regular operations, images with large PSFs are usually caused by the atmosphere (seeing) or by telescope tracking errors, both of which result in common shapes across the field of the camera. In the figure, the top panel shows the circularization of the PSF due to the atmosphere washing out the lower-level variations caused by the optics.

Figure 3. Examples of 2D PSF variations. Each row represents an exposure. The leftmost column shows the distribution of FWHM across the camera; the median value in arcseconds is given in the inset. The middle column gives the e1 polarization measured from second moments (see Section 6.3), while the right column gives the e2 polarization.

Several analytical functions that are likely candidates to describe the smooth portion of the PSF are available in psphot:

- 1. Gaussian:
- 2. Pseudo-Gaussian: [PGAUSS]
- 3. Variable Power Law: [RGAUSS]
- 4. Steep Power Law: [QGAUSS]
- 5. PS1 Power Law: [PS1_V1]

The Pseudo-Gaussian is a Taylor expansion of the Gaussian and is used by Dophot (Schechter et al. 1993). The latter profiles are similar to the Moffat profile form (Moffat 1969; Buonanno et al. 1983), with small differences. For these PSF models, the functions are evaluated at the pixel center. Unlike some galaxy model representations (see Section 5.3), the first derivatives of these functions approach zero as the radius approaches zero, so subpixel integration is not necessary. A user may choose to try more than one analytical function for a given image. As discussed below (Section 4.5.3), psphot can automatically choose the best model based on the quality of the PSF fits.
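The relationship between these profile families can be sketched as functions of a single quadratic radial coordinate z (so that the Gaussian is exp(-z)). The pseudo-Gaussian below assumes the third-order truncation of the Taylor series of exp(z) in the denominator. The generic power-law form, with a free exponent `alpha` and linear coefficient `kappa`, is only a Moffat-like placeholder: the specific exponents used by RGAUSS, QGAUSS, and PS1_V1 are not assumed here.

```python
import math


def gauss(z):
    """Gaussian profile, unit normalization."""
    return math.exp(-z)


def pgauss(z):
    """Pseudo-Gaussian: 1 / (truncated Taylor series of exp(z))."""
    return 1.0 / (1.0 + z + z * z / 2.0 + z ** 3 / 6.0)


def power_law(z, alpha, kappa=1.0):
    """Illustrative Moffat-like profile; alpha and kappa are placeholders."""
    return 1.0 / (1.0 + kappa * z + z ** alpha)
```

Near the core (small z) the pseudo-Gaussian tracks the Gaussian closely, while at large z it falls off as a power law rather than exponentially, which is the property that makes this family useful for profiles with extended wings.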

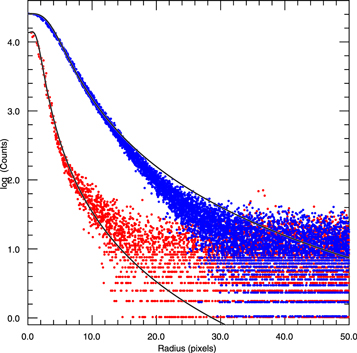

For the PS1 GPC1 analysis, we used the PS1_V1 model, which we found by experimentation to match well to the observed profiles generated by PS1. Figure 4 shows example radial profiles for moderately bright stars in fairly good (0.9″) and poor (2.2″) seeing. Using a fixed power-law exponent results in somewhat faster profile fitting compared to the variable power-law exponent model.

Figure 4. Radial profiles of stellar images from PS1. These two profiles illustrate the radial trend of the PS1 PSFs for a star with FWHM 0.9″ (red) and 2.2″ (blue). The red and blue points are individual pixel values. The black line shows the PSF model with radial trend of the form  . The models use a 1D average of the 2D analytical portion of the PSF models fitted to these specific stars in their standard analysis.

The analytical models in psphot are written with a high degree of code abstraction, making it relatively easy to add different analytical models to the software. The same portion of code used to describe the analytical portion of the PSF sources is also used for galaxy models.

Once the smooth component of the PSF has been fitted with an analytical model, a pixel representation of the residuals is generated. This representation is constructed as an image of the expected residuals for any position in the image. The value of each pixel in the image model is determined from 2D fits to the measured residuals of the PSF stars.

The residual model is calculated using the residuals for all PSF stars. The residuals (and their errors) for each star are renormalized by the flux of the star to put them on a consistent flux scale. For each PSF star, all pixels within a user-specified radius (PSF.RESIDUALS.RADIUS=9) are selected for the measurement. For a given pixel in the model, the value is calculated from the four closest pixels in the PSF stars via bilinear interpolation. Pixels may be used in this analysis if their S/N exceeds a user-defined limit. For the PV3 3π analysis, we allowed all pixels within the user-specified radius, not limiting on the basis of the S/N.

Pixels for a given star that are more than a number of sigmas (PSF.RESIDUALS.NSIGMA=3.0) deviant from the median value of the pixels from all stars are rejected.

If no spatial variation is allowed, the mean or median value is calculated for the model pixel based on the user-specified mean statistic (PSF.RESIDUALS.STATISTIC=ROBUST_MEDIAN).
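For the case without spatial variation, the rejection and averaging steps for a single model pixel can be sketched as below. This is an illustration only: a plain median stands in for the ROBUST_MEDIAN statistic, the rejection compares each star's deviation with its own error, and the function name is hypothetical.

```python
import statistics


def stack_residual(values, errors, nsigma=3.0):
    """Combine the residuals of all PSF stars at one model pixel.

    values, errors: flux-normalized residuals and their errors, one
    entry per star. Entries deviating from the median of all stars by
    more than nsigma times their own error are rejected.
    """
    med = statistics.median(values)
    kept = [v for v, e in zip(values, errors) if abs(v - med) <= nsigma * e]
    # Fall back to the unclipped median if every entry was rejected.
    return statistics.median(kept) if kept else med
```

Applying this function to every pixel within the residual radius would yield a single residual image that applies across the whole chip.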

If spatial variation is requested, then the pixel values are fitted to a linear model:

where $R[(x_{\mathrm{mod}}, y_{\mathrm{mod}})][(x_{\mathrm{ccd}}, y_{\mathrm{ccd}})]$ is the value of the residual for model pixel $(x_{\mathrm{mod}}, y_{\mathrm{mod}})$ for a star with centroid at image pixel $(x_{\mathrm{ccd}}, y_{\mathrm{ccd}})$. The parameters  are the elements of the 2D linear fit for each pixel $(x_{\mathrm{mod}}, y_{\mathrm{mod}})$ in the model.
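A linear model of this kind can be fitted independently for each model pixel. The sketch below assumes the form r0 + rx * x_ccd + ry * y_ccd and an unweighted least-squares fit solved through the normal equations; the coefficient names, the function names, and the absence of error weighting are assumptions rather than a description of the psphot code.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(samples):
    """Fit residual = r0 + rx * x + ry * y for one model pixel.

    samples: list of (x_ccd, y_ccd, residual), one entry per PSF star.
    Returns (r0, rx, ry) from an unweighted least-squares fit.
    """
    n = float(len(samples))
    sx = sum(x for x, y, r in samples)
    sy = sum(y for x, y, r in samples)
    sxx = sum(x * x for x, y, r in samples)
    sxy = sum(x * y for x, y, r in samples)
    syy = sum(y * y for x, y, r in samples)
    sr = sum(r for x, y, r in samples)
    sxr = sum(x * r for x, y, r in samples)
    syr = sum(y * r for x, y, r in samples)
    a = [[n, sx, sy], [sx, sxx, sxy], [sy, sxy, syy]]
    b = [sr, sxr, syr]
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("star positions do not constrain a plane")
    coeffs = []
    for k in range(3):
        # Cramer's rule: replace column k of the matrix with b.
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)
```

The residual expected at any source position is then obtained by evaluating the fitted plane of each model pixel at that source's centroid.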

4.5.2. Candidate PSF Source Selection

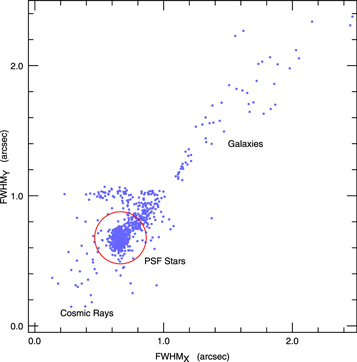

The first stage of determining the PSF model for an image is to identify a collection of sources in the image that are likely to be unresolved (i.e., stars). psphot uses the source sizes as estimated from the second moments to make the initial guess at a collection of unresolved sources. At this point, the program has measured the second-order moments for all sources identified by their peaks, as well as an approximate S/N, above the bright threshold. All sources with an S/N greater than a user-defined parameter (PSF_SN_LIM = 20.0 for PS1 PV3) are selected by psphot, though sources that have more than a certain number of saturated pixels are excluded at this stage. The program then examines the 2D plane of  in search of a concentrated clump of sources (see Figure 5). To do this, it constructs an artificial image with pixels representing the value of  , using  as the size of a pixel in this artificial image. The binned  plane is then examined to find a significant peak. Unless the image is extremely sparse, such a peak will be well defined and should represent the sources that are all very similar in shape. Other sources in the image will tend to land in very different locations, failing to produce a single peak. To avoid detecting a peak from the unresolved cosmic rays, sources that have second moments very close to 0 are ignored. For these sources, the flag bit PM_SOURCE_MODE_DEFECT is set.
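The clump search can be illustrated with a simple 2D histogram. This is a sketch only: the choice of which pair of size measurements forms the plane, the bin size, the cutoff `min_moment` below which a source is treated as a defect, and the rule that a source is dropped when either measurement falls below that cutoff are all assumptions, and the function name is hypothetical.

```python
from collections import Counter


def find_clump(moments, bin_size, min_moment=1e-3):
    """Locate the most populated bin in a 2D plane of source sizes.

    moments: iterable of (mx, my) size measurements, one per source.
    Returns ((center_x, center_y), count) or None if nothing is usable.
    """
    counts = Counter()
    for mx, my in moments:
        if min(mx, my) < min_moment:
            continue  # near-zero size: likely a cosmic ray or defect
        counts[(int(mx // bin_size), int(my // bin_size))] += 1
    if not counts:
        return None
    (ix, iy), n = counts.most_common(1)[0]
    return ((ix + 0.5) * bin_size, (iy + 0.5) * bin_size), n
```

Sources falling in or near the returned bin would then form the candidate list of unresolved sources, while a low count in the best bin signals an image too sparse for a reliable selection.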