Abstract

In light of recent advances in technology, there has been growing interest in virtual reality (VR) simulations for training purposes in a range of high-performance environments, from sport to nuclear decommissioning. For a VR simulation to elicit effective transfer of training to the real-world, it must provide a sufficient level of validity, that is, it must be representative of the real-world skill. In order to develop the most effective simulations, assessments of validity should be carried out prior to implementing simulations in training. The aim of this work was to test elements of the physical fidelity, psychological fidelity and construct validity of a VR golf putting simulation. Self-report measures of task load and presence in the simulation were taken following real and simulated golf putting to assess psychological and physical fidelity. The performance of novice and expert golfers in the simulation was also compared as an initial test of construct validity. Participants reported a high degree of presence in the simulation, and there was little difference between real and virtual putting in terms of task demands. Experts performed significantly better in the simulation than novices (p = .001, d = 1.23), and there was a significant relationship between performance on the real and virtual tasks (r = .46, p = .004). The results indicated that the simulation exhibited an acceptable degree of construct validity and psychological fidelity. However, some differences between the real and virtual tasks emerged, suggesting further validation work is required.

1 Introduction

The use of immersive training is a growing area of interest in many sectors. Virtual reality (VR) training has already been applied to sporting skills (Gray 2019; Neumann et al. 2018), as well as surgery (Gurusamy et al. 2008) and rehabilitation (Adamovich et al. 2009). This often involves the generation of an immersive computer-simulation which the user can interact with and manipulate (Burdea and Coiffet 2003; Wann and Mon-Williams 1996). Consumer grade VR equipment that delivers such interactive and immersive environments is becoming increasingly affordable and portable, thus providing new possibilities for training. For these training approaches to enable effective transfer to real-world performance, simulations must be representative of the real skills that they intend to train and accurately represent real performance (Bright et al. 2012; Gray 2019). Therefore, more rigorous testing of the validity and fidelity of environments is required before they can be widely adopted in sports training (Harris et al. 2020). Here, we explore an evidence-based approach to simulation validation by testing the fidelity and validity of a golf putting simulator.

In the sporting domain, the existing (but sparse) research has largely supported the effectiveness of VR training for visuomotor skills such as baseball batting, darts and table tennis (Gray 2017; Lammfromm and Gopher 2011; Tirp et al. 2015; Todorov et al. 1997). However, it remains unclear which facets of a simulation determine training effectiveness. Fidelity and validity refer to particular qualities of the simulation that contribute to training success (Gray 2019). Fidelity refers to how well a simulation reconstructs the real-world environment, in terms of both its appearance and the emotional states, cognitions and behaviours it elicits from its users. Meanwhile, validity broadly relates to accurate measurement or reproduction of real task performance. This is not to say the simulation is the same, but that it captures key features of the task (Stoffregen et al. 2003). While a high degree of physical fidelity (how a simulation looks, feels and sounds; see Note 1) is useful for immersing the user and achieving engagement (Ijsselsteijn et al. 2004), skills can still be learned in environments with low physical fidelity. For instance, positive real-world transfer has been observed for juggling and table tennis with low fidelity 2D environments (Lammfromm and Gopher 2011; Todorov et al. 1997). Gray (2019) suggests that, with regard to training, psychological fidelity (the extent to which the environment replicates the perceptual-cognitive demands of the real task) is more important than physical fidelity. High psychological fidelity means athletes are more likely to utilise similar perceptual information to control their actions, display similar gaze behaviour, and experience a similar level of cognitive demand to the real-world task. These additional elements are likely to facilitate transfer (Gray 2019; Harris et al. 2020). Therefore, in order to develop more effective simulations, more attention must be paid to testing a range of validity metrics.

First, a valid simulation, which is likely to produce positive real-world transfer, should have good physical fidelity to provide an immersive experience and elicit user engagement (Bowman and McMahan 2007; Ijsselsteijn et al. 2004). Second, it should display construct validity, that is, it should provide a good representation of the task it intends to train, and accurately reflect performance. For instance, for a simulation to be a valid representation of a sporting skill, individuals with real-world expertise should also be experts in the simulation. This kind of expert versus novice discrimination has been widely used for validating surgical simulations (Bright et al. 2012; Sweet et al. 2004), as it indicates whether the simulation has captured some important features of task performance. Finally, the perceptual-cognitive demands of the task should also resemble the real task (i.e. psychological fidelity). Ideally, measures such as gaze behaviour and mental effort should be employed to compare the psychological demands of the virtual and real tasks. Additional types of fidelity that could contribute to successful design include ergonomic/biomechanical and affective/emotional fidelity (see summary of types of fidelity in Table 1).

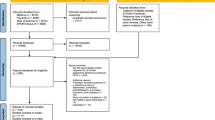

Consequently, we aimed to assess the validity of a golf putting simulation in a variety of ways. Following the recommendations of a recent framework for the testing and validation of simulated environments (Harris et al. 2020), we aimed to assess a number of elements of fidelity and validity of the VR environment. Firstly, participants were asked to report feelings of presence (see Note 2) to ensure that the simulation was of sufficiently high physical fidelity to immerse the user in the task (Slater 2003). Secondly, as in medical simulation validation, we assessed construct validity by comparing the performance of novices and experts in the simulation (Bright et al. 2012; Sweet et al. 2004). If real-world expertise corresponds to expertise in the simulation, it would provide initial evidence that the simulation is a good representation of the real task. It was expected that expert golfers would exhibit significantly better performance in both real and simulated putting, and that there would be a correspondence between real-world and VR performance. Thirdly, to address aspects of psychological fidelity, that is, the perceptual and cognitive demands of the simulation, we explored whether the cognitive demands of the simulation were similar to those of the real-world task using a self-report measure of task load (the SIM-TLX; Harris et al. 2019b). Additionally, we compared perceived distance to the hole between real and VR tasks. Concerns have been raised that stereoscopic viewing in head-mounted displays can influence the performance of visuomotor skills due to the conflict between cues to depth (Bingham et al. 2001; Harris et al. 2019a). Additionally, some early three degrees of freedom VR systems induced a perception of ‘flatness’ (Willemsen et al. 2008). As processing of distance and depth information is important for performing an aiming skill such as golf putting, we assessed whether distance was perceived to be similar between the real and VR tasks.

2 Methods

2.1 Participants

Thirty-six participants took part in the study: 18 expert amateur golfers (11 male, mean age = 29.2 years, SD = 13.7) and 18 novice golfers (10 male, mean age = 21.9 years, SD = 1.8). Expert golfers were recruited from three competitive golf teams (University of Exeter Golf Club, Exeter Golf and Country Club, and Devon Golf men’s first team). All expert golfers were of a high level and held active category one handicaps (≤ 5.0), with an average handicap of 1.7 (SD = 2.5). Novice golfers were recruited from undergraduate sport science students at the University of Exeter. ‘Novice golfers’ were defined as individuals with no official golf handicaps or prior formal golf putting experience (as in Moore et al. 2012). Participants were provided with details of the study before attending testing, and gave written consent before testing began. Ethical approval was obtained from the University Ethics Committee prior to data collection.

2.2 Task and materials

2.2.1 VR golf task

The VR golf task (see Fig. 1) was developed using the gaming engine Unity 2018.2.10.f1 and the Unity Experiment Framework (Brookes et al. 2019). The simulation was presented using an HTC Vive HMD (HTC Inc., Taoyuan City, Taiwan), and a 3xs laptop (Scan Computers, Bolton, UK) with an i7 processor and GeForce GTX 1080 graphics card (NVIDIA Inc., Santa Clara, CA). A Vive tracker sensor was attached to the head of a real putter to allow tracking and create the VR putter. Auditory feedback (which sounded like a club striking a ball) was provided on contact with the ball, but no additional haptic feedback was provided. The ball was matched in size and weight to a real golf ball. Participants putted from 10 ft (3.05 m) to a target the same size and shape (diameter 10.80 cm) as a standard golf hole. The game incorporated background audio (wind in the trees and bird noises) to simulate the real world and enhance immersion.

2.2.2 Real-world golf task

Real-world putts were taken from 10 ft (3.05 m) to a target the size of a regulation hole (diameter 10.80 cm) on an indoor artificial putting green. As in the simulation, the hole was covered so it remained visible but the ball would not drop in. Participants used a standard putter (Cleveland Classic Collection HB 1 putter), and regulation size (4.27 cm diameter) white golf balls.

2.3 Measures

2.3.1 Presence questionnaire

Expert participants were asked to rate their sense of presence—the feeling that they were really in the virtual world—using questions from the Presence Questionnaire (Slater et al. 1995). These questions relate to the experience of being in the virtual environment, such as ‘To what extent were there times during the experience when the virtual environment was the reality for you?’ There are three questions in total and responses are given on a seven-point Likert scale.

2.3.2 Putting performance

Putting performance in both the real world and VR was operationalized as radial error of the ball from the hole (i.e. the two-dimensional Euclidean distance between the top of the ball and the edge of the hole; in cm), as in Walters-Symons et al. (2018). In the real-world condition, the distance was measured with a tape measure following each attempt; in the VR condition, it was recorded automatically by the simulation. If the ball landed on top of the hole, a score of zero was recorded. On real-world trials where the ball hit the boundary of the putting green (90 cm behind the hole), the largest possible error was recorded (90 cm) (as in Moore et al. 2012).
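The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the coordinate convention (positions in cm on the plane of the green) and the reading of "top of the ball" as the ball's centre point viewed from above are ours, not the authors' implementation.

```python
import math

HOLE_DIAMETER_CM = 10.80  # target diameter given in the task description
MAX_ERROR_CM = 90.0       # error recorded when the ball hits the green boundary


def radial_error(ball_xy, hole_xy=(0.0, 0.0), hit_boundary=False):
    """Radial error (cm) for a single putt.

    Assumption: error is the planar distance from the ball's centre to the
    nearest edge of the hole, and is zero when the ball rests over the hole.
    """
    if hit_boundary:
        # Real-world trials that reached the boundary get the largest error.
        return MAX_ERROR_CM
    centre_distance = math.hypot(ball_xy[0] - hole_xy[0],
                                 ball_xy[1] - hole_xy[1])
    # Subtract the hole radius to measure to the edge, never below zero.
    return max(0.0, centre_distance - HOLE_DIAMETER_CM / 2)
```

For example, a ball resting 50 cm from the centre of the hole would score 50 minus the 5.4 cm hole radius, whereas any ball resting within 5.4 cm of the centre would score zero.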

2.3.3 Perceived distance

Following both VR and real-world baseline putts participants were asked to make a verbal estimate, in feet, of the distance between the hole and the putting location. The estimate was recorded by the experimenter.

2.3.4 Simulation task load index (SIM-TLX)

The SIM-TLX (Harris et al. 2019b) assesses workload during simulated tasks using a two-part evaluation. Following task completion, participants rated the level of demand they had experienced on nine bipolar scales, reflecting different workload dimensions: mental demands; physical demands; temporal demands; frustration; task complexity; situational stress; distractions; perceptual strain; and task control. Ratings were made on a visual analogue scale anchored between ‘very low’ and ‘very high’ (see supplementary materials). Participants also rated the relative importance of each workload dimension for the task, by making a series of 36 pair-wise comparisons between dimensions (one for each pairing of the nine dimensions). A workload score for each dimension is calculated as the product of its visual analogue rating and its weighting. An overall workload score is obtained from the sum of all nine dimension scores (cf. the NASA-TLX; Hart and Staveland 1988).
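The two-part scoring can be made concrete with a short Python sketch. It rests on assumptions that the text does not spell out: a dimension's weighting is taken to be the number of pair-wise comparisons in which it was chosen as more important (as in the NASA-TLX), and no further rescaling is applied. Function names and the data layout are hypothetical.

```python
from itertools import combinations

DIMENSIONS = [
    "mental demands", "physical demands", "temporal demands",
    "frustration", "task complexity", "situational stress",
    "distractions", "perceptual strain", "task control",
]


def weights_from_choices(choices):
    """Count how often each dimension was chosen as the more important one.

    `choices` maps every unordered pair (a, b), in the order produced by
    itertools.combinations(DIMENSIONS, 2), to the dimension that was picked.
    """
    weights = {d: 0 for d in DIMENSIONS}
    for pair in combinations(DIMENSIONS, 2):
        winner = choices[pair]
        if winner not in pair:
            raise ValueError(f"{winner!r} is not a member of {pair!r}")
        weights[winner] += 1
    return weights


def sim_tlx_scores(ratings, weights):
    """Return (per-dimension scores, overall score).

    Each dimension score is rating x weighting; the overall score is the
    sum across dimensions, following the description in the text.
    """
    per_dimension = {d: ratings[d] * weights[d] for d in DIMENSIONS}
    return per_dimension, sum(per_dimension.values())
```

Under this scheme a dimension that is never chosen in the comparisons contributes nothing to the overall score, however high its raw rating.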

2.4 Procedure

Participants attended the lab on one occasion for approximately 20 min. Participants were given just 3 familiarisation putts on each of the real and VR tasks to avoid any learning effects. They then completed 10 real-world putts and 10 putts in VR, which was done in a counterbalanced order to account for order effects. Participants were instructed to land the ball as close to the ‘hole’ as possible. After each of the baseline assessments, participants were asked to estimate the distance to the hole. Following the VR putts, experts also answered the Presence Questionnaire and completed the SIM-TLX.

2.5 Statistical analysis

Statistical analysis was performed in JASP (v0.9.2; JASP team 2018). Data were checked for homogeneity of variance (Levene’s test), and skewness and kurtosis. Performance data exceeding 3 standard deviations from the mean were excluded (three data points for VR putting and zero data points for real-world putting). A 2 (Group: Expert vs Novice) × 2 (Task: real-world vs VR) mixed ANOVA was used to compare both putting accuracy and distance estimation for novice and expert groups between VR and real-world tasks. A MANOVA was run on overall TLX questionnaire scores, with follow-up t-tests to examine individual scales. Cohen’s d effect sizes were calculated for all t-tests, and partial eta squared for all F-tests. All data are available through the Open Science Framework (https://osf.io/dchgz/).
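As an illustration of two of the steps above, the following Python sketch applies a 3 SD exclusion rule and computes Cohen's d for two independent groups. The analysis itself was run in JASP; this sketch assumes the sample standard deviation for the exclusion rule and the pooled-SD form of d, neither of which is specified in the text, and the function names are hypothetical.

```python
import statistics


def exclude_outliers(values, n_sd=3.0):
    """Drop values lying more than n_sd sample SDs from the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) <= n_sd * sd]


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    pooled_sd = (((n_a - 1) * var_a + (n_b - 1) * var_b)
                 / (n_a + n_b - 2)) ** 0.5
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd
```

Note that with a sample SD a single point can only exceed 3 SDs from the mean when there are at least 11 observations, so the rule is only meaningful for reasonably sized sets of data points.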

3 Results

3.1 Presence

Overall scores on the Presence Questionnaire (completed by expert golfers only) could potentially range from 0 to 21 (21 indicating the highest level of presence). The mean score during VR putting was 12.7 (SD = 4.5), above the mid-way point of the scale, indicating a good level of presence in the VR environment. The distribution plot below (Fig. 2) shows, however, that there was a high degree of variation across participants.

3.2 Putting performance

To compare group differences in real-world and VR task performance, a 2 (task) × 2 (group) ANOVA was run on radial error scores. A main effect of task indicated that radial error scores were significantly higher in the VR task, F(1,34) = 25.29, p < .001, ηp² = .43. There was also a main effect of group, with experts displaying lower radial errors, F(1,34) = 33.06, p < .001, ηp² = .49. There was no interaction effect, F(1,34) = 0.25, p = .62, ηp² = .01. As expected, Bonferroni-Holm corrected t-tests showed that, compared to novice golfers (M = 51.6 cm, SD = 50.9), expert golfers (M = 25.5 cm, SD = 23.4) displayed significantly lower radial errors when putting in the real world, t(34) = 6.84, p < .001, d = 2.28. Similarly, compared to novice golfers (M = 74.2 cm, SD = 29.3), expert golfers (M = 44.1 cm, SD = 18.5) displayed significantly lower radial errors when putting in VR, t(34) = 3.68, p = .001, d = 1.23 (Fig. 3). Overall there was a significant positive relationship between performance on the real and virtual tasks, r(35) = .46, p = .004.
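The partial eta squared values reported for these F-tests can be checked against the F statistics and their degrees of freedom using the standard identity: partial eta squared = (F × df_effect) / (F × df_effect + df_error). A minimal Python sketch follows; the function name is illustrative and is not part of the authors' JASP analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    effect_term = f_value * df_effect
    return effect_term / (effect_term + df_error)


# Main effects of task and group on radial error, both F(1,34).
task_effect = partial_eta_squared(25.29, 1, 34)
group_effect = partial_eta_squared(33.06, 1, 34)
print(round(task_effect, 2), round(group_effect, 2))  # prints 0.43 0.49
```

The same identity applies to the remaining F-tests in the Results, which makes it a quick consistency check on reported effect sizes.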

3.3 Perceived putting distance

A 2 (group) × 2 (task) mixed ANOVA showed that there was no difference in perceived putting distance between real-world and VR tasks, F(1,34) = 0.07, p = .79, ηp² = .002. There was, however, a significant effect of group, F(1,34) = 16.97, p < .001, ηp² = .330, with novices estimating the hole to be closer than their more experienced golfing counterparts (see Fig. 4). There was no interaction effect, F(1,34) = 0.18, p = .67, ηp² = .005.

3.4 Task load

A one-way multivariate ANOVA was run to test whether there was an overall difference in task load (as measured by the SIM-TLX) between real and virtual putting. There was an overall effect of group, F(1,34) = 2.75, p = .02, Pillai’s trace = 0.49. Follow-up t-tests, with Bonferroni-Holm correction, revealed that the only dimension of task load that significantly differed between VR and real-world conditions was perceptual strain (p = .02), which was higher in VR. All other comparisons were non-significant (p’s > .24). Figure 5 suggests that situational stress and time demands were also somewhat higher in the VR condition.
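For readers unfamiliar with the Bonferroni-Holm procedure used for the follow-up tests, the sketch below computes Holm-adjusted p-values in Python. It is a generic implementation of the step-down method, not the authors' code (JASP applies the correction internally), and the example p-values in the comment are invented for illustration only.

```python
def holm_adjust(p_values):
    """Return Holm (step-down Bonferroni) adjusted p-values.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    adjusted values are made non-decreasing in rank order and capped at 1.
    Results are returned in the same order as the input.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p-values for three follow-up comparisons.
adjusted = holm_adjust([0.01, 0.04, 0.03])
```

A comparison is then declared significant when its adjusted p-value falls below the chosen alpha level, which is less conservative than multiplying every p-value by the total number of tests.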

4 Discussion

Despite previous work extolling the benefits of training sporting skills in VR (Gray 2017; Lammfromm and Gopher 2011; Tirp et al. 2015), methods for testing the validity of environments prior to their use are not well established (Harris et al. 2020). For the field of VR training to progress, rigorous approaches to simulation validation are required (e.g. Bright et al. 2012; Sweet et al. 2004; Tirp et al. 2015). Consequently, we aimed to apply an evidence-based method in testing the validity and fidelity of a golf putting simulator.

Overall, evaluation of the simulation indicated a fairly good correspondence between the real and virtual tasks. Firstly, expert users reported a moderate to high level of presence in the environment, relative to previous use of this scale (Usoh et al. 2000), suggesting that the physical fidelity was sufficient to immerse users in the task. Presence is unlikely to be a major determinant of training efficacy but is an important first step for user engagement (Bowman and McMahan 2007; Ijsselsteijn et al. 2004).

Secondly, the construct validity of the simulation was supported, as expert golfers outperformed novices in both the real and virtual tasks, displaying significantly lower radial errors. We also found an overall positive correlation between real and virtual performance. The radial errors of both groups were, however, larger in VR, as was the variance in performance (see Fig. 3). Additionally, the correlation between real and VR performance was only moderate (r = .46). This highlights that, although the simulation showed a certain level of construct validity, there remain important differences between the two tasks. An additional finding, which further supports the construct validity of the simulation, was that expert golfers were more accurate at estimating putting distance in both tasks. It has previously been demonstrated that golfers are more accurate at estimating egocentric distance (Durgin and Li 2011) and Fig. 4 illustrates that while the expert group were accurate across both conditions, admittedly with some variability, novices consistently underestimated distance. Underestimation of distance has previously been reported as a general effect in head-mounted VR, although this effect does seem attenuated in newer systems (Interrante et al. 2006). A similar degree of underestimation by novices was seen in both real and VR tasks in the present work.

With regard to assessing psychological fidelity, there was no difference in perceived putting distance between the two tasks. As distance estimation plays an important role in the golf putt, and perceptual information can be distorted in VR (Willemsen et al. 2008), this is an encouraging result. One possibility to consider, however, is that participants could have assumed that distances were similar and aligned their second estimate with their first. Additionally, self-report scales were used to assess whether the demands of the VR task differed from the real task. After accounting for multiple comparisons, only the degree of perceptual strain was significantly different between the tasks. Perceptual difficulties in HMDs are not uncommon; users can experience discomfort as a result of rendering lag or multisensory conflict during locomotion, which may preclude their use by some individuals (Cobb et al. 1999). Overall, these assessments largely supported the psychological fidelity of the VR task.

5 Conclusions

In this study, we aimed to adopt an evidence-based approach to testing the validity of a VR simulation of a sporting skill. Assessment of construct validity clearly indicated that the putting simulation was sufficiently representative of the real task to distinguish novice from expert performers. Additionally, the simulation created a good degree of presence in the virtual environment. Assessments of task load indicated some differences from the real task, but overall the simulation was a fair representation of real putting. Nevertheless, this does not mean that the simulation is fully validated, and further work is still required, such as assessing biomechanical fidelity through comparisons of body and putter movement to determine whether the simulation elicits realistic putting mechanics. Future work may seek to investigate additional sources of fidelity, such as affective and biomechanical fidelity (e.g. Bideau et al. 2003, 2004) and their relationship with transfer of learning. Additionally, greater fidelity in terms of self-visualization may contribute to more embodiment and presence in the simulation and could influence the acquisition of sporting skills (Slater et al. 2009).

In general, caution is still required in the use of VR for training sporting skills. The close coupling of sensory inputs with motor responses (needed for sporting expertise) may be challenging to accurately simulate with current VR technologies, which often provide limited haptic feedback and unusual cues to depth (see Harris et al. 2019a for a review). However, studies such as this one serve to illustrate that VR simulations may be sufficiently representative—for some skills and for some purposes—to allow the study and/or training of sporting skills.

Notes

1. The term face validity is sometimes used to refer to the physical accuracy of a simulation, particularly in the surgical training literature (e.g. Bright et al. 2012).

2. Presence refers to the psychological response of feeling ‘in the simulation’. Presence is linked to immersion, which is the objective level of sensory fidelity provided by the VR system (Slater 2003), but the terms are sometimes used interchangeably.

References

Adamovich SV, Fluet GG, Tunik E, Merians AS (2009) Sensorimotor training in virtual reality: a review. NeuroRehabilitation 25:29–44. https://doi.org/10.3233/NRE-2009-0497

Bideau B, Kulpa R, Ménardais S, Fradet L, Multon F, Delamarche P, Arnaldi B (2003) Real handball goalkeeper vs virtual handball thrower. Presence Teleoper Virtual Environ 12(4):411–421

Bideau B, Multon F, Kulpa R, Fradet L, Arnaldi B (2004) Virtual reality applied to sports: do handball goalkeepers react realistically to simulated synthetic opponents? In: Proceedings of the 2004 ACM SIGGRAPH international conference on Virtual Reality continuum and its applications in industry, pp 210–216

Bingham GP, Bradley A, Bailey M, Vinner R (2001) Accommodation, occlusion, and disparity matching are used to guide reaching: a comparison of actual versus virtual environments. J Exp Psychol Hum Percept Perform 27(6):1314–1334. https://doi.org/10.1037/0096-1523.27.6.1314

Bowman DA, McMahan RP (2007) Virtual reality: how much immersion is enough? Computer 40:36–43. https://doi.org/10.1109/MC.2007.257

Bright E, Vine S, Wilson MR, Masters RSW, McGrath JS (2012) Face validity, construct validity and training benefits of a virtual reality TURP simulator. Int J Surg 10:163–166. https://doi.org/10.1016/j.ijsu.2012.02.012

Brookes J, Warburton M, Alghadier M, Mon-Williams M, Mushtaq F (2019) Studying human behavior with virtual reality: the unity experiment framework. Behav Res Methods. https://doi.org/10.3758/s13428-019-01242-0

Burdea GC, Coiffet P (2003) Virtual reality technology. Wiley, New York

Cobb SVG, Nichols S, Ramsey A, Wilson JR (1999) Virtual reality-induced symptoms and effects (VRISE). Presence Teleoper Virtual Environ 8:169–186. https://doi.org/10.1162/105474699566152

Durgin FH, Li Z (2011) Perceptual scale expansion: an efficient angular coding strategy for locomotor space. Atten Percept Psychophys 73:1856–1870. https://doi.org/10.3758/s13414-011-0143-5

Gray R (2017) Transfer of training from virtual to real baseball batting. Front Psychol. https://doi.org/10.3389/fpsyg.2017.02183

Gray R (2019) Virtual environments and their role in developing perceptual-cognitive skills in sports. In: Williams AM, Jackson RC (eds) Anticipation and decision making in sport. Taylor & Francis, New York

Gurusamy K, Aggarwal R, Palanivelu L, Davidson BR (2008) Systematic review of randomized controlled trials on the effectiveness of virtual reality training for laparoscopic surgery. Br J Surg 95:1088–1097. https://doi.org/10.1002/bjs.6344

Harris DJ, Buckingham G, Wilson MR, Vine SJ (2019a) Virtually the same? How impaired sensory information in virtual reality may disrupt vision for action. Exp Brain Res 237:2761–2766. https://doi.org/10.1007/s00221-019-05642-8

Harris DJ, Wilson MR, Vine SJ (2019b) Development and validation of a simulation workload measure: the Simulation Task Load Index (SIM-TLX). Virtual Real. https://doi.org/10.1007/s10055-019-00422-9

Harris DJ, Bird JM, Smart AP, Wilson MR, Vine SJ (2020) A framework for the testing and validation of simulated environments in experimentation and training. Front Psychol 11:605. https://doi.org/10.3389/fpsyg.2020.00605

Hart SG, Staveland LE (1988) Development of NASA-TLX (task load index): results of empirical and theoretical research. In: Advances in psychology, Elsevier, Amsterdam, pp 139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

Ijsselsteijn WA, Bonants R, Westerink J, deJager M (2004) Effects of immersion and a virtual coach on motivation and presence in a home fitness application. In: Proceedings of virtual reality design and evaluation workshop, pp 22–23

Interrante V, Ries B, Anderson L (2006) Distance perception in immersive virtual environments, revisited. In: IEEE virtual reality conference (VR 2006), pp 3–10. https://doi.org/10.1109/VR.2006.52

JASP team (2018) JASP (version 0.9)[computer software]. University of Amsterdam, Amsterdam

Lammfromm R, Gopher D (2011) Transfer of skill from a virtual reality trainer to real juggling. BIO Web Conf 1:00054. https://doi.org/10.1051/bioconf/20110100054

Moore LJ, Vine SJ, Cooke A, Ring C, Wilson MR (2012) Quiet eye training expedites motor learning and aids performance under heightened anxiety: the roles of response programming and external attention. Psychophysiology 49:1005–1015. https://doi.org/10.1111/j.1469-8986.2012.01379.x

Neumann DL, Moffitt RL, Thomas PR, Loveday K, Watling DP, Lombard CL, Antonova S, Tremeer MA (2018) A systematic review of the application of interactive virtual reality to sport. Virtual Real 22:183–198. https://doi.org/10.1007/s10055-017-0320-5

Slater M (2003) A note on presence terminology. Presence-Connect 3:1–5

Slater M, Usoh M, Steed A (1995) Taking steps: the influence of a walking technique on presence in virtual reality. ACM Trans Comput Hum Interact 2:201–219. https://doi.org/10.1145/210079.210084

Slater M, Pérez Marcos D, Ehrsson H, Sanchez-Vives MV (2009) Inducing illusory ownership of a virtual body. Front Neurosci 3:29

Stoffregen TA, Bardy BG, Smart LJ, Pagulayan R (2003) On the nature and evaluation of fidelity in virtual environments. In: Hettinger LJ, Haas MW (eds) Virtual and adaptive environments: applications, implications and human performance issues. Lawrence Erlbaum Associates, Mahwah, pp 111–128. https://doi.org/10.1201/9781410608888.ch6

Sweet R, Kowalewski T, Oppenheimer P, Weghorst S, Satava R (2004) Face, content and construct validity of the university of Washington virtual reality transurethral prostate resection trainer. J Urol 172:1953–1957. https://doi.org/10.1097/01.ju.0000141298.06350.4c

Tirp J, Steingröver C, Wattie N, Baker J, Schorer J (2015) Virtual realities as optimal learning environments in sport: a transfer study of virtual and real dart throwing. Psychol Test Assess Model 57:13

Todorov E, Shadmehr R, Bizzi E (1997) Augmented feedback presented in a virtual environment accelerates learning of a difficult motor task. J Mot Behav 29:147–158. https://doi.org/10.1080/00222899709600829

Usoh M, Catena E, Arman S, Slater M (2000) Using presence questionnaires in reality. Presence Teleoper Virtual Environ 9:497–503. https://doi.org/10.1162/105474600566989

Walters-Symons R, Wilson M, Klostermann A, Vine S (2018) Examining the response programming function of the Quiet Eye: Do tougher shots need a quieter eye? Cogn Process 19:47–52. https://doi.org/10.1007/s10339-017-0841-6

Wann J, Mon-Williams M (1996) What does virtual reality NEED? Human factors issues in the design of three-dimensional computer environments. Int J Hum Comput Stud 44:829–847. https://doi.org/10.1006/ijhc.1996.0035

Willemsen P, Gooch AA, Thompson WB, Creem-Regehr SH (2008) Effects of stereo viewing conditions on distance perception in virtual environments. Presence Teleoper Virtual Environ 17:91–101. https://doi.org/10.1162/pres.17.1.91

Funding

This work was supported by a Royal Academy of Engineering UKIC Fellowship awarded to D Harris. F.M and M.M-W were supported by Fellowships from the Alan Turing Institute and a Research Grant from the EPSRC (EP/R031193/1).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Harris, D.J., Buckingham, G., Wilson, M.R. et al. Exploring sensorimotor performance and user experience within a virtual reality golf putting simulator. Virtual Reality 25, 647–654 (2021). https://doi.org/10.1007/s10055-020-00480-4

DOI: https://doi.org/10.1007/s10055-020-00480-4