Abstract

Non-convex optimization with local search heuristics has been widely used in machine learning, achieving many state-of-the-art results. It has become increasingly important to understand why these heuristics work for NP-hard problems on typical data. The landscape of many objective functions in learning has been conjectured to have the geometric property that “all local optima are (approximately) global optima”, and thus they can be solved efficiently by local search algorithms. However, establishing such a property can be very difficult. In this paper, we analyze the optimization landscape of the random over-complete tensor decomposition problem, which has many applications in unsupervised learning, especially in learning latent variable models. In practice, it can be efficiently solved by gradient ascent on a non-convex objective. We show that for any small constant \(\varepsilon > 0\), among the set of points with function values \((1+\varepsilon )\)-factor larger than the expectation of the function, all the local maxima are approximate global maxima. Previously, the best-known result only characterized the geometry in small neighborhoods around the true components. Our result implies that even with an initialization that is barely better than a random guess, the gradient ascent algorithm is guaranteed to solve this problem. However, achieving such an initialization with random guesses would still require a super-polynomial number of attempts. Our main technique uses the Kac–Rice formula and random matrix theory. To the best of our knowledge, this is the first time the Kac–Rice formula has been successfully applied to counting the number of local optima of a highly structured random polynomial with dependent coefficients.


Notes

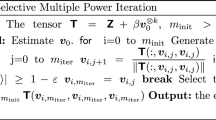

The power method in this case is equivalent to setting \(x^{t+1} = \frac{\nabla f(x^t)}{\Vert \nabla f(x^t)\Vert }\), or \(x^{t+1} = \frac{\sum _{i=1}^n \langle {a_i,x^t}\rangle ^3 a_i}{\Vert \sum _{i=1}^n \langle {a_i,x^t}\rangle ^3 a_i\Vert }\) in our model. It is exactly equivalent to gradient ascent with a properly chosen finite learning rate.
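The equivalence in the last sentence can be made explicit. Assuming \(f(x)=\sum _i \langle a_i,x\rangle ^4\), which is consistent with the update above, f is homogeneous of degree 4, so Euler's identity gives \(\langle \nabla f(x),x\rangle = 4f(x) > 0\) for generic x. Hence the Riemannian gradient step \(x + \eta (\mathrm{Id}-xx^{\top })\nabla f(x)\) with \(\eta = 1/\langle \nabla f(x),x\rangle \) equals \(\nabla f(x)/\langle \nabla f(x),x\rangle \), which normalizes to the power-method update. The Python sketch below checks this numerically on random data; the helper names and the choice of normalization as the retraction are illustrative assumptions, not code from the paper.

```python
import math
import random


def f_and_grad(A, x):
    """f(x) = sum_i <a_i, x>^4 and its Euclidean gradient 4 * sum_i <a_i, x>^3 a_i."""
    f, grad = 0.0, [0.0] * len(x)
    for a in A:
        s = sum(ak * xk for ak, xk in zip(a, x))
        f += s ** 4
        for k, ak in enumerate(a):
            grad[k] += 4.0 * s ** 3 * ak
    return f, grad


def normalize(v):
    norm = math.sqrt(sum(t * t for t in v))
    return [t / norm for t in v]


def power_step(A, x):
    """Power-method update: normalize sum_i <a_i, x>^3 a_i (the factor 4 cancels)."""
    _, g = f_and_grad(A, x)
    return normalize(g)


def riemannian_ascent_step(A, x):
    """Riemannian gradient step with eta = 1 / <grad f(x), x>, then retract by normalizing."""
    _, g = f_and_grad(A, x)
    gx = sum(gk * xk for gk, xk in zip(g, x))          # equals 4 f(x) by Euler's identity
    riem = [gk - gx * xk for gk, xk in zip(g, x)]      # P_x grad f = grad f - <grad f, x> x
    eta = 1.0 / gx
    return normalize([xk + eta * rk for xk, rk in zip(x, riem)])


if __name__ == "__main__":
    rng = random.Random(0)
    d, n = 5, 12
    A = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    x = normalize([rng.gauss(0.0, 1.0) for _ in range(d)])
    print(power_step(A, x))
    print(riemannian_ascent_step(A, x))  # same vector up to rounding
```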

By stochastic gradient descent we mean the algorithm analyzed in [4]. To obtain a global maximum in polynomial time (polynomial in \(\log (1/\varepsilon )\) for \(\varepsilon \) precision), one also needs to modify stochastic gradient descent slightly: run SGD until it reaches 1/d accuracy, and then switch to gradient descent. Since the problem is locally strongly convex, the local convergence is linear.

We omit the long list of regularity conditions here for simplicity; see [40, Theorem 12.1.1] for details. The most important regularity condition requires that f be a Morse function with probability 1. This is implied by Lemma 2 below, whose proof is the same as the proof of Lemma 3.1 in Auffinger et al. [42].

References

Ge, R., Ma, T.: On the optimization landscape of tensor decompositions. In: Advances in Neural Information Processing Systems, pp. 3653–3663 (2017)

Dauphin, Y.N., Pascanu, R., Gulcehre, C., Cho, K., Ganguli, S., Bengio, Y.: Identifying and attacking the saddle point problem in high-dimensional non-convex optimization. In: Advances in Neural Information Processing Systems, pp. 2933–2941 (2014)

Choromanska, A., Henaff, M., Mathieu, M., Arous, G.B., LeCun, Y.: The loss surfaces of multilayer networks. In: AISTATS (2015)

Ge, R., Huang, F., Jin, C., Yuan, Y.: Escaping from saddle points—online stochastic gradient for tensor decomposition. In: Proceedings of The 28th Conference on Learning Theory, pp. 797–842 (2015)

Lee, J.D., Simchowitz, M., Jordan, M.I., Recht, B.: Gradient descent only converges to minimizers. In: Proceedings of the 29th Conference on Learning Theory, COLT 2016, New York, USA, June 23–26, 2016, pp. 1246–1257 (2016)

Nesterov, Y., Polyak, B.T.: Cubic regularization of Newton method and its global performance. Math. Program. 108(1), 177–205 (2006)

Sun, J., Qu, Q., Wright, J.: When are nonconvex problems not scary? Preprint arXiv:1510.06096 (2015)

Bandeira, A.S., Boumal, N., Voroninski, V.: On the low-rank approach for semidefinite programs arising in synchronization and community detection. In: Conference on Learning Theory, pp. 361–382 (2016)

Bhojanapalli, S., Neyshabur, B., Srebro, N.: Global optimality of local search for low rank matrix recovery. In: Advances in Neural Information Processing Systems, pp. 3873–3881 (2016)

Kawaguchi, K.: Deep Learning without Poor Local Minima. ArXiv e-prints (2016)

Ge, R., Lee, J.D., Ma, T.: Matrix completion has no spurious local minimum. In: Advances in Neural Information Processing Systems, pp. 2973–2981 (2016)

Hardt, M., Ma, T.: Identity matters in deep learning. In: ICLR (2017)

Hardt, M., Ma, T., Recht, B.: Gradient descent learns linear dynamical systems. J. Mach. Learn. Res. 19(1), 1025–1068 (2018)

Chang, J.T.: Full reconstruction of Markov models on evolutionary trees: identifiability and consistency. Math. Biosci. 137, 51–73 (1996)

Mossel, E., Roch, S.: Learning nonsingular phylogenies and hidden Markov models. Ann. Appl. Probab. 16(2), 583–614 (2006)

Hsu, D., Kakade, S.M., Zhang, T.: A spectral algorithm for learning hidden Markov models. J. Comput. Syst. Sci. 78(5), 1460–1480 (2012)

Anandkumar, A., Hsu, D., Kakade, S.M.: A method of moments for mixture models and hidden Markov models. In: COLT (2012)

Anandkumar, A., Foster, D.P., Hsu, D., Kakade, S.M., Liu, Y.-K.: A spectral algorithm for latent Dirichlet allocation. In: Advances in Neural Information Processing Systems 25 (2012)

Hsu, D., Kakade, S.M.: Learning mixtures of spherical Gaussians: moment methods and spectral decompositions. In: Fourth Innovations in Theoretical Computer Science (2013)

Comon, P., Luciani, X., De Almeida, A.: Tensor decompositions, alternating least squares and other tales. J. Chemom. 23(7–8), 393–405 (2009)

Kolda, T.G., Mayo, J.R.: Shifted power method for computing tensor eigenpairs. SIAM J. Matrix Anal. Appl. 32(4), 1095–1124 (2011)

Ge, R., Lee, J.D., Ma, T.: Learning one-hidden-layer neural networks with landscape design. In: International Conference on Learning Representations (2018)

Janzamin, M., Sedghi, H., Anandkumar, A.: Beating the perils of non-convexity: guaranteed training of neural networks using tensor methods. ArXiv e-prints (2015)

Cohen, N., Shashua, A.: Convolutional rectifier networks as generalized tensor decompositions. In: International Conference on Machine Learning, pp. 955–963 (2016)

Arora, S., Ge, R., Ma, T., Moitra, A.: Simple, efficient and neural algorithms for sparse coding. In: Proceedings of The 28th Conference on Learning Theory (2015)

De Lathauwer, L., Castaing, J., Cardoso, J.-F.: Fourth-order cumulant-based blind identification of underdetermined mixtures. IEEE Trans. Signal Process. 55(6), 2965–2973 (2007)

Bhaskara, A., Charikar, M., Moitra, A., Vijayaraghavan, A.: Smoothed analysis of tensor decompositions. In: Proceedings of the 46th Annual ACM Symposium on Theory of Computing, pp. 594–603. ACM (2014)

Goyal, N., Vempala, S., Xiao, Y.: Fourier PCA and robust tensor decomposition. In: Proceedings of the Forty-Sixth Annual ACM Symposium on Theory of Computing, pp. 584–593 (2014)

Barak, B., Kelner, J.A., Steurer, D.: Dictionary learning and tensor decomposition via the sum-of-squares method. In: Proceedings of the Forty-Seventh Annual ACM on Symposium on Theory of Computing, STOC 2015, Portland, OR, USA, June 14–17, 2015, pp. 143–151 (2015)

Ge, R., Ma, T.: Decomposing overcomplete 3rd order tensors using sum-of-squares algorithms. Preprint arXiv:1504.05287 (2015)

Hopkins, S.B., Schramm, T., Shi, J., Steurer, D.: Fast spectral algorithms from sum-of-squares proofs: tensor decomposition and planted sparse vectors. In: Proceedings of the 48th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2016, Cambridge, MA, USA, June 18–21, 2016, pp. 178–191 (2016)

Ma, T., Shi, J., Steurer, D.: Polynomial-time tensor decompositions with sum-of-squares. In: FOCS 2016 (2016)

Håstad, J.: Tensor rank is NP-complete. J. Algorithms 11(4), 644–654 (1990)

Hillar, C.J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM (JACM) 60(6), 45 (2013)

Ma, T., Shi, J., Steurer, D.: Polynomial-time tensor decompositions with sum-of-squares. In: 2016 IEEE 57th Annual Symposium on Foundations of Computer Science (FOCS), pp. 438–446. IEEE (2016)

Anandkumar, A., Ge, R., Janzamin, M.: Learning overcomplete latent variable models through tensor methods. In: Proceedings of the Conference on Learning Theory (COLT), Paris, France (2015)

Cartwright, D., Sturmfels, B.: The number of eigenvalues of a tensor. Linear Algebra Appl. 438(2), 942–952 (2013)

Abo, H., Seigal, A., Sturmfels, B.: Eigenconfigurations of tensors. Algebraic Geom. Methods Discrete Math. 685, 1–25 (2017)

Anandkumar, A., Ge, R.: Analyzing tensor power method dynamics in overcomplete regime. JMLR (2016)

Adler, R.J., Taylor, J.E.: Random Fields and Geometry. Springer, Berlin (2009)

Auffinger, A., Arous, G.B., Černý, J.: Random matrices and complexity of spin glasses. Commun. Pure Appl. Math. 66(2), 165–201 (2013)

Auffinger, A., Arous, G.B., et al.: Complexity of random smooth functions on the high-dimensional sphere. Ann. Probab. 41(6), 4214–4247 (2013)

Boumal, N., Absil, P.-A., Cartis, C.: Global rates of convergence for nonconvex optimization on manifolds. IMA J. Numerical Anal. 39(1), 1–33 (2019)

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2007)

Wikipedia: Schauder fixed point theorem. Wikipedia, The Free Encyclopedia. Accessed 26 May 2016

Wainwright, M.: Basic tail and concentration bounds (2015)

Hsu, D., Kakade, S.M., Zhang, T.: A tail inequality for quadratic forms of subgaussian random vectors. Electron. Commun. Probab. 17(52), 1–6 (2012)

Laurent, B., Massart, P.: Adaptive estimation of a quadratic functional by model selection. Ann. Stat. 28(5), 1302–1338 (2000)

Wikipedia: Incomplete gamma function. Wikipedia, The Free Encyclopedia. Accessed 13 September 2016

Weisstein, E.W.: Incomplete gamma function. From MathWorld, A Wolfram Web Resource (2016)

Candes, E.J., Tao, T.: Decoding by linear programming. IEEE Trans. Inf. Theory 51(12), 4203–4215 (2005)

Candes, E.J.: The restricted isometry property and its implications for compressed sensing. Comptes Rendus Mathematique 346(9), 589–592 (2008)

Ledoux, M., Talagrand, M.: Probability in Banach Spaces: Isoperimetry and Processes. Springer, Berlin (2013)

Rudelson, M., Vershynin, R.: Non-asymptotic theory of random matrices: extreme singular values. In: Proceedings of the International Congress of Mathematicians 2010 (ICM 2010) (In 4 Volumes) Vol. I: Plenary Lectures and Ceremonies Vols. II–IV: Invited Lectures, pp. 1576–1602. World Scientific (2010)

Baraniuk, R., Davenport, M., DeVore, R., Wakin, M.: A simple proof of the restricted isometry property for random matrices. Constr. Approx. 28(3), 253–263 (2008)

Acknowledgements

This work was done in part while the authors were hosted by the Simons Institute. We are indebted to Ramon van Handel for very useful comments on random matrix theory. We thank Nicolas Boumal and Daniel Stern for helpful discussions. This research was supported by NSF, Office of Naval Research, and the Simons Foundation.


Additional information


A shorter version of this paper has appeared in NIPS 2017 [1].


Appendices

A Brief introduction to manifold optimization

Let \({\mathcal {M}}\) be a Riemannian manifold. For every \(x\in {\mathcal {M}}\), let \(T_x {\mathcal {M}}\) be the tangent space to \({\mathcal {M}}\) at x, and let \(P_x\) denote the projection onto the tangent space \(T_x {\mathcal {M}}\). Let \(\mathrm{grad}\ f(x)\in T_x {\mathcal {M}}\) be the gradient of f at x on \({\mathcal {M}}\) and \(\mathrm{Hess}\ f(x)\) be the Riemannian Hessian. Note that \(\mathrm{Hess}\ f(x)\) is a linear mapping from \(T_x {\mathcal {M}}\) to itself.

In this paper, we work with \({\mathcal {M}}=S^{d-1}\), which we view as a submanifold of \(\mathbb {R}^d\). We also view f as the restriction of a smooth function \(\bar{f}\) to the manifold \({\mathcal {M}}\). In this case, \(T_x {\mathcal {M}}= \{z\in \mathbb {R}^d: z^{\top } x = 0\}\) and \(P_x = \mathrm{Id}- xx^{\top }\). The gradient can be computed as
\[\mathrm{grad}\ f(x) = P_x \nabla \bar{f}(x),\]

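where \(\nabla \) is the usual gradient in the ambient space \(\mathbb {R}^d\). Moreover, the Riemannian Hessian is given by
\[\mathrm{Hess}\ f(x) = P_x \nabla ^2 \bar{f}(x) P_x - \left( x^{\top }\nabla \bar{f}(x)\right) P_x .\]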

We refer readers to the book [44] for the derivation of these formulae and the precise definitions of the gradient and Hessian on manifolds.
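As a numerical sanity check of the sphere formulas \(\mathrm{grad}\ f(x) = P_x\nabla \bar{f}(x)\) and \(\mathrm{Hess}\ f(x) = P_x\nabla ^2\bar{f}(x)P_x - (x^{\top }\nabla \bar{f}(x))P_x\) (the standard expressions for \(S^{d-1}\), see [44]), the Python sketch below takes the illustrative choice \(\bar{f}(x)=\sum _i\langle a_i,x\rangle ^4\) and compares them with finite differences along the great circle \(\gamma (t)=\cos (t)\,x+\sin (t)\,z\) for a unit tangent vector z. Because \(\gamma \) is a geodesic with \(\gamma '(0)=z\) and \(\gamma ''(0)=-x\), one expects \((f\circ \gamma )'(0)=\langle \mathrm{grad}\ f(x),z\rangle \) and \((f\circ \gamma )''(0)=z^{\top }\mathrm{Hess}\ f(x)\,z\). All function names are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def fbar(A, x):
    """Illustrative ambient function fbar(x) = sum_i <a_i, x>^4."""
    return sum(dot(a, x) ** 4 for a in A)


def ambient_grad_hess(A, x):
    """Euclidean gradient and Hessian of fbar."""
    d = len(x)
    g = [0.0] * d
    H = [[0.0] * d for _ in range(d)]
    for a in A:
        s = dot(a, x)
        for j in range(d):
            g[j] += 4.0 * s ** 3 * a[j]
            for k in range(d):
                H[j][k] += 12.0 * s ** 2 * a[j] * a[k]
    return g, H


def riemannian_directional(A, x, z):
    """<grad f(x), z> and z^T Hess f(x) z on the sphere, for unit z orthogonal to x."""
    g, H = ambient_grad_hess(A, x)
    gx = dot(g, x)
    grad = [gj - gx * xj for gj, xj in zip(g, x)]        # P_x grad fbar
    Hz = [dot(row, z) for row in H]
    # P_x z = z and ||z|| = 1, so z^T (P H P - <x, g> P) z = z^T H z - <x, g>
    return dot(grad, z), dot(z, Hz) - gx


def finite_differences(A, x, z, h=1e-4):
    """Central differences of t -> fbar(cos t x + sin t z) at t = 0."""
    def phi(t):
        return fbar(A, [math.cos(t) * xj + math.sin(t) * zj for xj, zj in zip(x, z)])
    first = (phi(h) - phi(-h)) / (2 * h)
    second = (phi(h) - 2 * phi(0.0) + phi(-h)) / h ** 2
    return first, second


def random_point_and_tangent(d, rng):
    """A random unit x and a random unit z in the tangent space at x."""
    x = [rng.gauss(0.0, 1.0) for _ in range(d)]
    nx = math.sqrt(dot(x, x))
    x = [t / nx for t in x]
    w = [rng.gauss(0.0, 1.0) for _ in range(d)]
    wx = dot(w, x)
    w = [wj - wx * xj for wj, xj in zip(w, x)]           # project onto T_x S^{d-1}
    nw = math.sqrt(dot(w, w))
    return x, [t / nw for t in w]
```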

B Toolbox

In this section we collect most of the probabilistic statements together. Most of them follow from basic (but sometimes tedious) calculation and calculus. We provide the full proofs for completeness.

B.1 Sub-exponential random variables

In this subsection we give the formal definition of sub-exponential random variables, which are used heavily in our analysis, and summarize their properties.

Definition 1

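(cf. [46, Definition 2.2]) A random variable X with mean \(\mu = {\mathbb {E}}\left[ X\right] \) is sub-exponential if there are non-negative parameters \((\nu ,b)\) such that
\[{\mathbb {E}}\left[ e^{\lambda (X-\mu )}\right] \le e^{\nu ^2\lambda ^2/2} \quad \text {for all } |\lambda | < \frac{1}{b}.\]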

The following lemma gives a sufficient condition for X being a sub-exponential random variable.

Lemma 17

Suppose a random variable X satisfies, for any \(t\ge 0\),

Then X is a sub-exponential random variable with parameter \((\nu ,b)\) satisfying \(\nu \lesssim p\) and \(b\lesssim q\).

The following lemma gives the converse direction of Lemma 17: it controls the tail of a sub-exponential random variable.

Lemma 18

([46, Proposition 2.2]) Suppose X is a sub-exponential random variable with mean 0 and parameters \((\nu ,b)\). Then,

A sum of independent sub-exponential random variables remains sub-exponential (with different parameters).

Lemma 19

(cf. [46]) Suppose independent random variables \(X_1,\dots ,X_n\) are sub-exponential with parameters \((\nu _1,b_1),\dots , (\nu _n,b_n)\), respectively. Then \(X_1+\dots +X_n\) is a sub-exponential random variable with parameters \((\nu ^*,b^*)\), where \(\nu ^* =\sqrt{\sum _{k\in [n]} \nu _k^2}\) and \(b^* = \max _k b_k\).
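To make Definition 1 and Lemmas 18 and 19 concrete, the Python sketch below uses the standard example from [46]: for \(Z\sim \mathcal {N}(0,1)\), \(X=Z^2-1\) satisfies \({\mathbb {E}}[e^{\lambda X}] = e^{-\lambda }/\sqrt{1-2\lambda } \le e^{4\lambda ^2/2}\) for \(|\lambda |<1/4\), so it is sub-exponential with \((\nu ,b)=(2,4)\). By Lemma 19, \(\chi ^2_n - n\) is then sub-exponential with \((2\sqrt{n},4)\). The tail function assumes the one-sided form of [46, Proposition 2.2], namely \(\Pr [X-\mu \ge t]\le e^{-t^2/(2\nu ^2)}\) for \(0\le t\le \nu ^2/b\) and \(e^{-t/(2b)}\) otherwise, and the code compares it with the exact \(\chi ^2_n\) tail for even n. The function names are hypothetical.

```python
import math

NU1, B1 = 2.0, 4.0  # (nu, b) for Z^2 - 1 with Z ~ N(0, 1), the example from [46]


def mgf_centered_chi2_1(lam):
    """E[exp(lam * (Z^2 - 1))] = e^{-lam} / sqrt(1 - 2 lam), finite for lam < 1/2."""
    return math.exp(-lam) / math.sqrt(1.0 - 2.0 * lam)


def satisfies_definition_1(lam, nu=NU1, b=B1):
    """Check the MGF condition of Definition 1 at one lam with |lam| < 1/b."""
    assert abs(lam) < 1.0 / b
    return mgf_centered_chi2_1(lam) <= math.exp(nu * nu * lam * lam / 2.0)


def sum_params(params):
    """Lemma 19: independent summands combine to (sqrt(sum nu_k^2), max b_k)."""
    return math.sqrt(sum(nu * nu for nu, _ in params)), max(b for _, b in params)


def subexp_upper_tail(t, nu, b):
    """Assumed one-sided form of [46, Prop. 2.2] for P[X - mu >= t], t >= 0."""
    if t <= nu * nu / b:
        return math.exp(-t * t / (2.0 * nu * nu))
    return math.exp(-t / (2.0 * b))


def chi2_tail_even(n, x):
    """Exact P[chi^2_n >= x] for even n: e^{-x/2} * sum_{j < n/2} (x/2)^j / j!."""
    assert n > 0 and n % 2 == 0
    half, term, total = x / 2.0, 1.0, 1.0
    for j in range(1, n // 2):
        term *= half / j
        total += term
    return math.exp(-half) * total


if __name__ == "__main__":
    nu, b = sum_params([(NU1, B1)] * 10)  # chi^2_10 - 10
    for t in (1.0, 5.0, 10.0, 30.0):
        print(t, chi2_tail_even(10, 10 + t), subexp_upper_tail(t, nu, b))
```

The exact tail stays below the sub-exponential bound in both regimes (Gaussian-like for \(t\le n\), exponential beyond).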

The following lemma, combined with Lemma 19, shows that certain sums of powers of Gaussian random variables are sub-exponential.

Lemma 20

Let \(x\sim \mathcal {N}(0,1)\) and \(z = (x^4 -3x^2){\mathbf {1}}({|x|\le \tau ^{1/4}})\), where \(\tau > C\) for a sufficiently large constant C. Then z is a sub-exponential random variable with parameters \((\nu ,b) \) satisfying \(\nu = \sqrt{42}\) and \(b=(4+o_{\tau }(1))\sqrt{\tau }\).

Proof

We verify that z satisfies the Bernstein condition

For \(k = 2\), we have that \({\mathbb {E}}\left[ z^2\right] \le {\mathbb {E}}\left[ x^{8}-6x^6+9x^4\right] = 105-90+27 = 42 = \frac{1}{2} \cdot 2! \cdot \nu ^2\). For any k with \((1-o_{\tau }(1))\frac{e\sqrt{\tau }}{4}\ge k \ge 3\), we have

On the other hand, when \(k\ge (1-o_{\tau }(1))\frac{e\sqrt{\tau }}{4}\), we have

\(\square \)
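The \(k=2\) step only needs the Gaussian moments \({\mathbb {E}}[x^{2m}]=(2m-1)!!\). The Python sketch below (hypothetical helpers, not from the paper) evaluates \({\mathbb {E}}[(x^4-3x^2)^2] = 105-6\cdot 15+9\cdot 3 = 42\) exactly, confirms \({\mathbb {E}}[x^4-3x^2]=0\), and uses Simpson's rule to check that the truncated second moment stays below this value and approaches it as \(\tau \) grows.

```python
import math


def gaussian_even_moment(k):
    """E[x^k] = (k - 1)!! for even k and x ~ N(0, 1)."""
    assert k % 2 == 0
    out = 1
    for j in range(k - 1, 0, -2):
        out *= j
    return out


def poly_moment(coeffs):
    """E[sum_k c_k x^k] for x ~ N(0, 1); odd powers have mean zero."""
    return sum(c * gaussian_even_moment(k) for k, c in coeffs.items() if k % 2 == 0)


def truncated_second_moment(tau, steps=20000):
    """Simpson's rule for E[(x^4 - 3x^2)^2 1(|x| <= tau^(1/4))]."""
    s = tau ** 0.25
    h = 2 * s / steps

    def integrand(x):
        return (x ** 4 - 3 * x ** 2) ** 2 * math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

    total = integrand(-s) + integrand(s)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(-s + i * h)
    return total * h / 3


if __name__ == "__main__":
    print(poly_moment({8: 1, 6: -6, 4: 9}))   # untruncated E[(x^4 - 3x^2)^2]
    print(truncated_second_moment(256.0))      # truncation at |x| <= 4
```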

B.2 Norm of Gaussian random variables

The following lemma shows that the squared norm of a Gaussian random vector has a sub-exponential upper tail and a sub-Gaussian lower tail.

Lemma 21

([47, Proposition 1.1]) Let \(A\in \mathbb {R}^{n\times n}\) be a matrix and \(\Sigma = A^{\top }A\). Let \(x\sim \mathcal {N}(0,\mathrm{Id}_n)\). Then for all \(t \ge 0\),

Moreover, \(\Vert Ax\Vert ^2\) is a sub-exponential random variable with parameter \((\nu ,b)\) with \(\nu \lesssim \sqrt{\mathrm{tr}(\Sigma ^2)}\) and \(b\lesssim \Vert \Sigma \Vert \).

This is a slight strengthening of [47, Proposition 1.1] that adds the lower tail, and the strengthening is simple. The key step in [47, Proposition 1.1] is to work in the eigenbasis of \(\Sigma \) and apply [48, Lemma 1]; the lower tail can be bounded in exactly the same way using the lower-tail guarantee of [48, Lemma 1]. The second statement follows directly from Lemma 17.

The following simple helper lemma states that the norm of the product of a Gaussian matrix and a fixed vector has a sub-Gaussian lower tail.

Lemma 22

Let \(v\in \mathbb {R}^m\) be a fixed vector and \(z_1,\dots , z_m\in \mathbb {R}^{n}\) be independent Gaussian random vectors with mean \(\mu \in \mathbb {R}^n\) and covariance \(\Sigma \). Then \(X = [z_1,\dots , z_m]v\) is a Gaussian random vector with mean \((v^{\top }{\mathbf {1}})\mu \) and covariance \(\Vert v\Vert ^2 \Sigma \).

Moreover, we have that for any \(t \ge 0\),

Proof

The mean and covariance of X follow from a direct computation. Towards establishing (B.3), let \(\bar{\mu } =\mu /\Vert {\mu }\Vert \) and define \(X' = (\mathrm{Id}- \bar{\mu } \bar{\mu }^{\top })X\). Then \(\Vert {X}\Vert \ge \Vert {X'}\Vert \), and \(X'\) is a Gaussian random vector with mean 0 and covariance \(\Sigma ' = (\mathrm{Id}- \bar{\mu }\bar{\mu }^{\top })\Sigma (\mathrm{Id}- \bar{\mu } \bar{\mu }^{\top })\). Then by Lemma 21, we have that

Finally, we observe that \(\mathrm{tr}(\Sigma )\ge \mathrm{tr}(\Sigma ')\ge \mathrm{tr}(\Sigma )-\Vert {\Sigma }\Vert \); together with the inequality above, this completes the proof. \(\square \)
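The trace sandwich in the last step follows from \(\mathrm{tr}(\Sigma ') = \mathrm{tr}(\Sigma P) = \mathrm{tr}(\Sigma ) - \bar{\mu }^{\top }\Sigma \bar{\mu }\) for the projection \(P=\mathrm{Id}-\bar{\mu }\bar{\mu }^{\top }\), together with \(0\le \bar{\mu }^{\top }\Sigma \bar{\mu }\le \Vert \Sigma \Vert \) for PSD \(\Sigma \). A small Python check on fixed matrices follows; \(\Vert \Sigma \Vert \) is estimated by power iteration, which is an implementation choice of this sketch, and the helper names are hypothetical.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace(M):
    return sum(M[i][i] for i in range(len(M)))


def complement_projector(mu):
    """P = Id - mu_bar mu_bar^T with mu_bar = mu / ||mu||; also returns mu_bar."""
    norm = math.sqrt(sum(m * m for m in mu))
    mb = [m / norm for m in mu]
    d = len(mu)
    P = [[(1.0 if i == j else 0.0) - mb[i] * mb[j] for j in range(d)] for i in range(d)]
    return P, mb


def top_eigenvalue_psd(S, iters=2000):
    """Power iteration (Rayleigh quotient) estimate of ||S|| for a symmetric PSD matrix S."""
    v = [1.0 + 0.1 * i for i in range(len(S))]
    for _ in range(iters):
        w = [sum(S[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
        norm = math.sqrt(sum(t * t for t in w))
        v = [t / norm for t in w]
    Sv = [sum(S[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
    return sum(a * b for a, b in zip(v, Sv))


def trace_sandwich(Sigma, mu):
    """Return (tr Sigma, tr Sigma', tr Sigma - ||Sigma||) with Sigma' = P Sigma P."""
    P, _ = complement_projector(mu)
    Sigma_prime = matmul(matmul(P, Sigma), P)
    return trace(Sigma), trace(Sigma_prime), trace(Sigma) - top_eigenvalue_psd(Sigma)
```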

B.3 Moments of sub-exponential random variables

In this subsection, we give several Lemmas regarding the estimation of the moments of (truncated) sub-exponential random variables.

We start with a reduction lemma stating that the moments of a sub-exponential random variable can be controlled by the moments of a Gaussian random variable and an exponential random variable.

Lemma 23

Suppose X is a sub-exponential random variable with mean 0 and parameters \((\nu ,b)\). Let \(X_1\sim {\mathcal {N}}(0,\nu ^2)\) and let \(X_2\) be an exponential random variable with mean 2b. Let \(p\ge 1\) be an integer and \(s \ge 0\). Then,

Proof

Let p(x) be the density of X and let \(G(x) = \Pr [X\ge x]\). By Lemma 18 we have \(G(x)\le e^{-x^2/(2\nu ^2)}+e^{-x/(2b)}\). Then we have

\(\square \)

Next we bound the p-th moments of a sub-exponential random variable with zero mean.

Lemma 24

Let p be an even integer and let X be a sub-exponential random variable with mean 0 and parameters \((\nu ,b)\). Then we have that

Proof

Let \(X_1\sim {\mathcal {N}}(0,\nu ^2)\) and let \(X_2\) be an exponential random variable with mean 2b. By Lemma 23, we have that

For the negative part we obtain a similar bound, \({\mathbb {E}}\left[ (-X)^p{\mathbf {1}}({X\le 0})\right] \lesssim \nu ^p p!! +(2b)^p p!\). Together, these complete the proof. \(\square \)
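The two reference moments behind this bound are standard: for even p, \({\mathbb {E}}[X_1^p]=\nu ^p(p-1)!!\le \nu ^p p!!\), and \({\mathbb {E}}[X_2^p]=(2b)^p p!\) for an exponential variable with mean 2b. The Python sketch below checks both closed forms by Simpson's rule on a finite range (assumed wide enough that the neglected tails are negligible); the helper names are hypothetical.

```python
import math


def double_factorial(k):
    out = 1
    while k > 1:
        out *= k
        k -= 2
    return out


def simpson(g, a, b, steps=20000):
    """Composite Simpson's rule on [a, b] with an even number of steps."""
    h = (b - a) / steps
    total = g(a) + g(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def gaussian_moment_numeric(p, nu):
    """E[X_1^p] for X_1 ~ N(0, nu^2), integrating over [-40 nu, 40 nu]."""
    c = 1.0 / (nu * math.sqrt(2 * math.pi))
    return simpson(lambda x: x ** p * c * math.exp(-x * x / (2 * nu * nu)), -40 * nu, 40 * nu)


def exponential_moment_numeric(p, b):
    """E[X_2^p] for X_2 exponential with mean m = 2b, integrating over [0, 200 m]."""
    m = 2 * b
    return simpson(lambda x: x ** p * math.exp(-x / m) / m, 0.0, 200 * m)


def lemma24_reference(p, nu, b):
    """nu^p p!! + (2b)^p p!, the shape of the bound in the proof of Lemma 24."""
    return nu ** p * double_factorial(p) + (2 * b) ** p * math.factorial(p)
```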

The lemma above implies a bound on the moments of a sub-exponential random variable with arbitrary mean via Hölder's inequality.

Lemma 25

Let X be a sub-exponential random variable with mean \(\mu \) and parameters \((\nu ,b)\). Let p be an integer. Then for any \(\varepsilon \in (0,1)\) we have,

Proof

Write \(X = \mu + Z\) where Z has mean 0. Then for any \(\varepsilon \in (0,1)\), by Hölder's inequality,

\(\square \)

The next few lemmas bound the moments of a truncated sub-exponential random variable. We use Lemma 23 to reduce the estimates to the Gaussian and exponential cases, so we first bound the moments of a truncated Gaussian.

Claim 7

Suppose \(X\sim \mathcal {N}(0,1)\). Then, as \(p\rightarrow \infty \), for \(s \ge \sqrt{p}\) we have \({\mathbb {E}}\left[ |X|^p{\mathbf {1}}({|X|\ge s})\right] \le (1+o_p(1))^p \cdot p \cdot e^{-s^2/2}s^p\).

Proof

We assume p is odd for simplicity; the even case follows straightforwardly from the odd case. Let \(\Gamma (\cdot ,\cdot )\) denote the upper incomplete Gamma function. We have

\(\square \)

The next Claim estimates the moments of a truncated exponential random variable.

Claim 8

Suppose X has exponential distribution with mean 1 (that is, the density is \(p(x) = e^{-x}\)). Then, as \(p\rightarrow \infty \), for any \(s\ge (1+\varepsilon )p\) and \(\varepsilon \in (0,1)\), we have \({\mathbb {E}}\left[ X^p {\mathbf {1}}({X\ge s})\right] \lesssim e^{-s}s^p/\varepsilon \)

Proof

We have that

\(\square \)
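For integer p the truncated moment has a closed form, \({\mathbb {E}}[X^p{\mathbf {1}}(X\ge s)] = \Gamma (p+1,s) = e^{-s}\sum _{j=0}^{p}\frac{p!}{j!}s^j = e^{-s}s^p\sum _{i=0}^{p}\frac{p!}{(p-i)!\,s^{i}}\). Bounding \(p!/(p-i)!\le p^i\) and summing the geometric series gives \(e^{-s}s^p/(1-p/s)\le \frac{1+\varepsilon }{\varepsilon }e^{-s}s^p\) whenever \(s\ge (1+\varepsilon )p\), for every integer p and not only asymptotically. The Python sketch below checks this explicit constant numerically; the function names are hypothetical.

```python
import math


def truncated_exp_moment(p, s):
    """E[X^p 1(X >= s)] = Gamma(p + 1, s) = e^{-s} sum_{j=0}^{p} (p!/j!) s^j, X ~ Exp(1)."""
    return math.exp(-s) * sum(math.factorial(p) / math.factorial(j) * s ** j for j in range(p + 1))


def claim8_explicit_bound(p, s, eps):
    """(1 + eps)/eps * e^{-s} s^p, valid for every integer p >= 0 once s >= (1 + eps) p."""
    assert 0 < eps < 1 and s >= (1 + eps) * p
    return (1 + eps) / eps * math.exp(-s) * s ** p


if __name__ == "__main__":
    p, eps = 20, 0.5
    s = (1 + eps) * p
    print(truncated_exp_moment(p, s), claim8_explicit_bound(p, s, eps))
```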

Combining the two claims above with Lemma 23, we obtain the following bound for sub-exponential random variables.

Lemma 26

Suppose X is a sub-exponential random variable with mean \(\mu \) and parameters \((\nu ,b)\). Then, for integer \(p\ge 2\), real numbers \(\eta ,\varepsilon \in (0,1)\) and \(s\ge \max \{\nu \sqrt{p}, 2bp(1+\eta )\}\), we have

where \(\zeta _p \rightarrow 0\) as \(p\rightarrow \infty \).

Proof

Write \(X = \mu + Z\) where Z has mean 0. Then for any \(\varepsilon \in (0,1)\), by Hölder's inequality,

Therefore it remains to bound \({\mathbb {E}}\left[ |Z|^p\right] \). Let p(z) be the density of Z. Since Z is sub-exponential, Lemma 18 gives, for \(z\ge 0\), \(\Pr [Z\ge z] \le \max \{e^{-z^2/(2\nu ^2)}, e^{-z/(2b)}\} \le e^{-z^2/(2\nu ^2)}+e^{-z/(2b)}\). Let \(Z_1\sim {\mathcal {N}}(0,\nu ^2)\) and let \(Z_2\) be an exponential random variable with mean 2b. Then by Lemma 23, we have

Combining the inequality above with Eq. (B.5) completes the proof. \(\square \)

Finally, the following is a helper claim that is used in the proof of Claim 8.

Claim 9

For any \(\mu \in \mathbb {R}\) and \(\beta > 0\), we have that

Proof

Let F(x) denote the RHS of (B.6). We differentiate F(x) and obtain that

Therefore, the claim follows by integrating both sides of the equation above. \(\square \)

B.4 Auxiliary Lemmas

Lemma 27

Let \(\bar{q}\) be a unit vector in \(\mathbb {R}^n\), \(w\in \mathbb {R}^n\), and \(z \sim \mathcal {N}(0,\sigma ^2\cdot (\mathrm{Id}_n-\bar{q}\bar{q}^{\top }))\). Then \(\Vert {z\odot w}\Vert ^{2}\) is a sub-exponential random variable with parameters \((\nu ,b)\) satisfying \(\nu \lesssim \sigma ^2\Vert w\Vert _4^2\) and \(b\lesssim \sigma ^2\Vert w\Vert _{\infty }^2\). Moreover, it has mean \(\sigma ^2(\Vert w\Vert ^2 - \langle {\bar{q}^{\odot 2},w^{\odot 2}}\rangle )\).

Proof

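Note that \(z\odot w = {{\,\mathrm{diag}\,}}(w)z\) is a Gaussian random vector with covariance \(\sigma ^2 {{\,\mathrm{diag}\,}}(w) P_{\bar{q}} {{\,\mathrm{diag}\,}}(w)\), which has the same nonzero eigenvalues as \(\Sigma = \sigma ^2 P_{\bar{q}} {{\,\mathrm{diag}\,}}(w^{\odot 2})P_{\bar{q}}\) (the two matrices are \(BB^{\top }\) and \(B^{\top }B\) for \(B = \sigma {{\,\mathrm{diag}\,}}(w)P_{\bar{q}}\)). Hence \(\Vert z\odot w\Vert ^2\) has the same distribution as \(\Vert \Sigma ^{1/2}x\Vert ^2\), where \(x\sim \mathcal {N}(0,\mathrm{Id}_n)\). Then by Lemma 21, \(\Vert \Sigma ^{1/2}x\Vert ^2\) is a sub-exponential random variable with parameters \((\nu ,b)\) satisfying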

Here, in the second and fourth lines, we used the fact that \(\mathrm{tr}(PA)\le \mathrm{tr}(A)\) for any symmetric PSD matrix A and any orthogonal projection matrix P.

Moreover, we have \(b\lesssim \Vert \Sigma \Vert \le \sigma ^2\max _k w_k^2 \). Finally, we have the mean of \(\Vert z\odot w\Vert ^2\) is \(\mathrm{tr}(\Sigma ) =\sigma ^2(\Vert w\Vert ^2 - \langle {\bar{q}^{\odot 2},w^{\odot 2}}\rangle )\), which completes the proof. \(\square \)
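The mean formula reduces to a trace identity: with \(P=\mathrm{Id}_n-\bar{q}\bar{q}^{\top }\) and \(D={{\,\mathrm{diag}\,}}(w^{\odot 2})\), \(\mathrm{tr}(\sigma ^2PDP)=\sigma ^2\mathrm{tr}(DP)=\sigma ^2\sum _k w_k^2(1-\bar{q}_k^2)\). The Python sketch below checks this on small examples, together with the fact that the covariance of \(z\odot w\), namely \(\sigma ^2{{\,\mathrm{diag}\,}}(w)P{{\,\mathrm{diag}\,}}(w)\), has the same trace; the helper names are hypothetical.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace(M):
    return sum(M[i][i] for i in range(len(M)))


def diag(v):
    return [[v[i] if i == j else 0.0 for j in range(len(v))] for i in range(len(v))]


def lemma27_traces(w, q, sigma):
    """Return (closed-form mean, tr(sigma^2 P D P), tr(sigma^2 diag(w) P diag(w)))."""
    norm = math.sqrt(sum(t * t for t in q))
    qb = [t / norm for t in q]
    n = len(w)
    P = [[(1.0 if i == j else 0.0) - qb[i] * qb[j] for j in range(n)] for i in range(n)]
    s2 = sigma * sigma
    closed = s2 * (sum(t * t for t in w) - sum((qi * qi) * (wi * wi) for qi, wi in zip(qb, w)))
    PDP = matmul(matmul(P, diag([t * t for t in w])), P)
    DPD = matmul(matmul(diag(w), P), diag(w))
    return closed, s2 * trace(PDP), s2 * trace(DPD)
```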

Claim 10

(folklore) We have that \(e\left( \frac{n}{e}\right) ^n \le n!\le e\left( \frac{n+1}{e}\right) ^{n+1}\). It follows that

Claim 11

For any \(\varepsilon \in (0,1)\) and \(A,B\in \mathbb {R}\), we have \((A+B)^d \le \max \{(1+\varepsilon )^d|A|^d, (1/\varepsilon +1)^d |B|^d\}\)
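Both claims are elementary: Claim 10 follows from \(\int _1^n\log t\,dt\le \log n!\le \int _1^{n+1}\log t\,dt\), and Claim 11 from splitting into the cases \(|B|\le \varepsilon |A|\) and \(|B|>\varepsilon |A|\). The Python sketch below checks both numerically (Claim 10 in log space to avoid overflow); it is a sanity check only, and the function names are hypothetical.

```python
import math
from itertools import product


def claim10_holds(n, tol=1e-12):
    """log(e (n/e)^n) <= log n! <= log(e ((n+1)/e)^(n+1))."""
    log_fact = math.lgamma(n + 1)
    lower = 1 + n * math.log(n) - n
    upper = 1 + (n + 1) * math.log(n + 1) - (n + 1)
    return lower <= log_fact + tol and log_fact <= upper + tol


def claim11_holds(A, B, d, eps, rel_tol=1e-12):
    """(A + B)^d <= max{(1 + eps)^d |A|^d, (1/eps + 1)^d |B|^d}."""
    lhs = (A + B) ** d
    rhs = max((1 + eps) ** d * abs(A) ** d, (1 / eps + 1) ** d * abs(B) ** d)
    return lhs <= rhs * (1 + rel_tol) + rel_tol
```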

Claim 12

For any vectors a, b, and any \(\varepsilon >0\), we have \(\Vert a+b\Vert _6^6 \le (1+\varepsilon ) \Vert a\Vert _6^6 + O(1/\varepsilon ^5) \Vert b\Vert _6^6\).

Proof

Since \(\Vert \cdot \Vert _6^6\) is the sum of the sixth powers of the coordinates, it suffices to prove the inequality when a, b are real numbers instead of vectors. For numbers a, b, \((a+b)^6 = a^6 +6a^5b+ 15a^4b^2 + 20a^3b^3+15a^2b^4+6ab^5+b^6\). Each of the five intermediate terms \(a^ib^j\) (\(i+j = 6\)), including its binomial coefficient, can be bounded by \(\varepsilon /56 \cdot a^6 +O(1/\varepsilon ^5) b^6\), and therefore \((a+b)^6 \le (1+\varepsilon ) a^6 + O(1/\varepsilon ^5)b^6\). \(\square \)

Lemma 28

For any vector \(z \in \mathbb {R}^n\), and any partition of [n] into two subsets S and L, and any \(\eta \in (0,1)\), we have that,

Proof

By the Cauchy-Schwarz inequality, we have that

Therefore, we obtain that

\(\square \)

B.5 Restricted isometry property and corollaries

In this section we describe the Restricted Isometry Property (RIP), which was introduced in [51]. It is the crucial tool we use in Sect. 5.

Definition 2

(RIP property) Suppose \(A \in \mathbb {R}^{d\times n}\) is a matrix whose columns have unit norm. We say that A satisfies the \((k, \delta )\)-RIP property if, for any subset S of columns of size at most k, letting \(A_S\) be the \(d\times |S|\) matrix with columns in S, we have

In our case the random components \(a_i\) do not have unit norm; abusing notation, we say A is RIP if the column-normalized matrix satisfies the RIP property.

Intuitively, the RIP condition means that the norm of Ax is very close to \(\Vert x\Vert \) whenever x has at most k nonzero entries. From [] we know that a random matrix is RIP with high probability:

Theorem 6

([52]) For any constant \(\delta > 0\), when \(k = d/(\Delta \log n)\) for a large enough constant \(\Delta \), with high probability a random Gaussian matrix is \((k,\delta )\)-RIP.

In our analysis, we mostly use the RIP property to show any given vector x cannot have large correlation with many components \(a_i\)’s.
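For \(k=2\) the RIP constant has a closed form. Assuming Definition 2 takes the common squared form \((1-\delta )\Vert x\Vert ^2\le \Vert A_Sx\Vert ^2\le (1+\delta )\Vert x\Vert ^2\), a pair \(S=\{i,j\}\) of normalized columns gives \(A_S^{\top }A_S=\begin{pmatrix}1&c\\ c&1\end{pmatrix}\) with \(c=\langle \bar{a}_i,\bar{a}_j\rangle \) and eigenvalues \(1\pm c\), so the smallest valid \(\delta \) is the largest \(|c|\) over pairs: a small \(\delta \) means no two normalized components are strongly aligned. The Python sketch below computes this and cross-checks it by scanning unit vectors \(x=(\cos \theta ,\sin \theta )\); the names are hypothetical.

```python
import math
import random
from itertools import combinations


def normalize_columns(cols):
    out = []
    for c in cols:
        norm = math.sqrt(sum(t * t for t in c))
        out.append([t / norm for t in c])
    return out


def rip_delta_k2(cols):
    """Smallest delta with (1-delta)||x||^2 <= ||A_S x||^2 <= (1+delta)||x||^2 for |S| <= 2."""
    unit = normalize_columns(cols)
    return max(abs(sum(a * b for a, b in zip(u, v))) for u, v in combinations(unit, 2))


def rip_delta_k2_scan(cols, steps=720):
    """Brute force: max | ||cos(t) u + sin(t) v||^2 - 1 | over a grid of angles and all pairs."""
    unit = normalize_columns(cols)
    worst = 0.0
    for u, v in combinations(unit, 2):
        for k in range(steps):
            t = 2 * math.pi * k / steps
            y = [math.cos(t) * a + math.sin(t) * b for a, b in zip(u, v)]
            worst = max(worst, abs(sum(e * e for e in y) - 1.0))
    return worst
```

Each column is passed as a list of its d entries; the scan grid contains \(\theta =\pi /4\), where \(\Vert A_Sx\Vert ^2-1 = c\sin 2\theta \) attains \(|c|\).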

B.6 Concentration inequalities

We first show that if we only consider the components with small correlation with x, their contribution to the function value cannot be much larger than 3n.

Lemma 29

With high probability over \(a_i\)’s, for any unit vector x, for any threshold \(\tau > 1\)

Proof

Let \(X_i = {\mathbf {1}}({|\langle {a_i,x}\rangle | \le \tau }) \langle {a_i,x}\rangle ^4 -{\mathbb {E}}[{\mathbf {1}}({|\langle {a_i,x}\rangle | \le \tau }) \langle {a_i,x}\rangle ^4]\). Notice that \({\mathbb {E}}[{\mathbf {1}}({|\langle {a_i,x}\rangle | \le \tau }) \langle {a_i,x}\rangle ^4] \le {\mathbb {E}}[\langle {a_i,x}\rangle ^4] = 3\). Clearly \({\mathbb {E}}[X_i] = 0\) and \(X_i \le \tau ^4\). We can apply Bernstein’s inequality, and we know for any x,

When \(t \ge C((\sqrt{nd}+d\tau ^4)\log d)\) for a large enough constant C, the probability is smaller than \(\exp (-C'd\log d)\) for a large constant \(C'\), so we can take a union bound over all vectors in an \(\varepsilon \)-net with \(\varepsilon = 1/d^3\). This remains true if we set the threshold to \(2\tau \).

For any x not in the \(\varepsilon \)-net, let \(x'\) be its closest neighbor in the \(\varepsilon \)-net. When the \(a_i\)’s have norm bounded by \(O(\sqrt{d})\) (which happens with high probability), \(|\langle {x',a_i}\rangle | \le |\langle {x,a_i}\rangle | + \Vert x-x'\Vert \Vert a_i\Vert \le |\langle {x,a_i}\rangle | + O(d^{-5/2})\), so whenever \(|\langle {x,a_i}\rangle |\le \tau \) we also have \(|\langle {x',a_i}\rangle |\le 2\tau \). Therefore the sum for x cannot be much larger than the sum for \(x'\) with threshold \(2\tau \). \(\square \)
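For a fixed unit x and \(a_i\sim \mathcal {N}(0,\mathrm{Id}_d)\) (the assumption used in this sketch), each \(\langle a_i,x\rangle \) is a standard Gaussian g, and integrating by parts twice gives \({\mathbb {E}}[g^4{\mathbf {1}}(|g|\le \tau )] = 3\Pr [|g|\le \tau ] - 2\phi (\tau )(\tau ^3+3\tau ) < 3\), where \(\phi \) is the standard normal density; this is why the truncated mean is at most 3. The Python sketch below compares this closed form with a seeded Monte Carlo sum over n components, using \({\mathbb {E}}[X_i^2]\le {\mathbb {E}}[g^8]=105\) to set a generous tolerance. It only illustrates a single fixed x; the uniform statement over all x needs the \(\varepsilon \)-net argument above. Names are hypothetical.

```python
import math
import random


def std_normal_pdf(t):
    return math.exp(-t * t / 2) / math.sqrt(2 * math.pi)


def truncated_fourth_moment(tau):
    """E[g^4 1(|g| <= tau)] for g ~ N(0, 1), by integrating by parts twice."""
    prob = math.erf(tau / math.sqrt(2))               # P(|g| <= tau)
    return 3 * prob - 2 * std_normal_pdf(tau) * (tau ** 3 + 3 * tau)


def truncated_fourth_power_sum(n, tau, rng):
    """Monte Carlo version of sum_i 1(|<a_i,x>| <= tau) <a_i,x>^4 for one fixed unit x.

    Assumes a_i ~ N(0, Id_d), so each projection <a_i, x> is N(0, 1) and only
    the projections need to be sampled.
    """
    total = 0.0
    for _ in range(n):
        g = rng.gauss(0.0, 1.0)
        if abs(g) <= tau:
            total += g ** 4
    return total
```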

Using very similar techniques, we can also show that the contribution of these small components to the gradient is small.

Lemma 30

With high probability over \(a_i\)’s, for any unit vector x, for any threshold \(\tau > 1\)

Proof

Let \(v = \sum _{i=1}^n {\mathbf {1}}({|\langle {a_i,x}\rangle | \le \tau }) \langle {a_i,x}\rangle ^3 a_i\). First notice that the inner product \(\langle {v,x}\rangle \) is exactly the sum we bounded in Lemma 29. Therefore we only need to bound the norm of the component of v orthogonal to x. Since the \(a_i\)’s are Gaussian, \(\langle {a_i,x}\rangle \) and \(P_x a_i\) are independent. So we can apply the vector Bernstein inequality, and we know

By the same \(\varepsilon \)-net argument as Lemma 29 we can get the desired bound. \(\square \)

Finally we prove similar conditions for the tensor T applied to the vector x twice.

Lemma 31

With high probability over \(a_i\)’s, for any unit vector x, for any threshold \(\tau > 1\)

Proof

Let \(H = \sum _{i=1}^n {\mathbf {1}}({|\langle {a_i,x}\rangle | \le \tau }) \langle {a_i,x}\rangle ^2 a_i a_i^{\top }\). First notice that the quadratic form \(x^{\top } H x\) is exactly the sum we bounded in Lemma 29. We will bound the spectral norm in the directions orthogonal to x and use the fact that H is PSD, so \(\Vert H\Vert \le 2(x^{\top } H x + \Vert P_x H P_x\Vert )\) (this is essentially the fact that \((a+b)^2 \le 2a^2+2b^2\)). Since the \(a_i\)’s are Gaussian, \(\langle {a_i,x}\rangle \) and \(P_x a_i\) are independent. So we can apply the matrix Bernstein inequality, and since \({\mathbb {E}}[P_x a_i a_i^{\top } P_x] = P_x\), we know

By the same \(\varepsilon \)-net argument as Lemma 29 we can get the desired bound. \(\square \)
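The step \(\Vert H\Vert \le 2(x^{\top }Hx+\Vert P_xHP_x\Vert )\) holds for any PSD H: writing a unit vector as \(u=\alpha x+\beta y\) with unit \(y\perp x\) and \(H=BB^{\top }\), we get \(u^{\top }Hu=\Vert B^{\top }u\Vert ^2\le 2\alpha ^2x^{\top }Hx+2\beta ^2y^{\top }Hy\), and \(y^{\top }Hy\le \Vert P_xHP_x\Vert \). The Python sketch below checks it on matrices built like H in the proof, from seeded Gaussian vectors; spectral norms are estimated by power iteration, and all names are hypothetical.

```python
import math
import random


def matvec(M, v):
    return [sum(m * t for m, t in zip(row, v)) for row in M]


def spectral_norm_psd(M, iters=3000, seed=0):
    """Power iteration (Rayleigh quotient) estimate of ||M|| for symmetric PSD M."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(len(M))]
    for _ in range(iters):
        w = matvec(M, v)
        norm = math.sqrt(sum(t * t for t in w))
        if norm == 0.0:
            return 0.0
        v = [t / norm for t in w]
    return sum(a * b for a, b in zip(v, matvec(M, v)))


def truncated_H(A, x, tau):
    """H = sum_i 1(|<a_i,x>| <= tau) <a_i,x>^2 a_i a_i^T."""
    d = len(x)
    H = [[0.0] * d for _ in range(d)]
    for a in A:
        s = sum(ak * xk for ak, xk in zip(a, x))
        if abs(s) <= tau:
            for j in range(d):
                for k in range(d):
                    H[j][k] += s * s * a[j] * a[k]
    return H


def lemma31_sides(H, x):
    """Return (||H||, 2 (x^T H x + ||P_x H P_x||)) for a unit vector x."""
    d = len(x)
    P = [[(1.0 if i == j else 0.0) - x[i] * x[j] for j in range(d)] for i in range(d)]
    PHP = [[sum(P[i][a] * H[a][b] * P[b][j] for a in range(d) for b in range(d))
            for j in range(d)] for i in range(d)]
    xHx = sum(a * b for a, b in zip(x, matvec(H, x)))
    return spectral_norm_psd(H), 2 * (xHx + spectral_norm_psd(PHP))
```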

C Missing proofs in Section 3

Proof of Lemma 4

Let \(A = [a_1,\dots , a_n]\). First, by standard random matrix theory (see, e.g., [53, Section 3.3] or [54, Theorem 2.6]), with high probability \(\left\Vert {A}\right\Vert \le \sqrt{n}+1.1\sqrt{d}\). Therefore, with high probability \(\sum \langle {a_i,x}\rangle ^2 \le \left\Vert {A}\right\Vert ^2 \le n + 3\sqrt{nd}\). By the RIP property of the random matrix A (see [55, Theorem 5.2] or Theorem 6), Eq. (3.4) holds with high probability.

Finally, using a straightforward \(\varepsilon \)-net argument and union bound, we have that for every unit vector x, \(\sum _{i=1}^{n}\langle {a_i,x}\rangle ^4\ge 15n- O(\sqrt{nd}\log d)\). Therefore, when \(1/\delta ^2 \cdot d\log ^2 d \le n\), Eq. (3.5) also holds. \(\square \)
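The step from the operator-norm bound to \(n+3\sqrt{nd}\) uses \(\sum _i\langle a_i,x\rangle ^2=\Vert A^{\top }x\Vert ^2\le \Vert A\Vert ^2\le (\sqrt{n}+1.1\sqrt{d})^2 = n+2.2\sqrt{nd}+1.21d\), which is at most \(n+3\sqrt{nd}\) exactly when \(1.21d\le 0.8\sqrt{nd}\), i.e., when \(n\ge (1.21/0.8)^2d\approx 2.29d\). A small Python check of this arithmetic follows; the names are hypothetical.

```python
import math

# (sqrt(n) + 1.1 sqrt(d))^2 <= n + 3 sqrt(n d)  holds exactly when  n >= RATIO * d
RATIO = (1.21 / 0.8) ** 2  # about 2.2877


def squared_norm_bound(n, d):
    return (math.sqrt(n) + 1.1 * math.sqrt(d)) ** 2


def target_bound(n, d):
    return n + 3 * math.sqrt(n * d)
```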


Cite this article

Ge, R., Ma, T. On the optimization landscape of tensor decompositions. Math. Program. 193, 713–759 (2022). https://doi.org/10.1007/s10107-020-01579-x
