Abstract

Recommender systems are tools that support online users by pointing them to potential items of interest in situations of information overload. In recent years, the class of session-based recommendation algorithms has received increasing attention in the research literature. These algorithms base their recommendations solely on the observed interactions with the user in an ongoing session and do not require the existence of long-term preference profiles. Most recently, a number of deep learning-based (“neural”) approaches to session-based recommendations have been proposed. However, previous research indicates that today’s complex neural recommendation methods are not always better than comparably simple algorithms in terms of prediction accuracy. With this work, our goal is to shed light on the state of the art in the area of session-based recommendation and on the progress that has been made with neural approaches. For this purpose, we compare twelve algorithmic approaches, among them six recent neural methods, under identical conditions on various datasets. We find that the progress in terms of prediction accuracy that is achieved with neural methods is still limited. In most cases, our experiments show that simple heuristic methods based on nearest-neighbors schemes are preferable to conceptually and computationally more complex methods. Observations from a user study furthermore indicate that recommendations based on heuristic methods were also well accepted by the study participants. To support future progress and reproducibility in this area, we publicly share the session-rec evaluation framework that was used in our research.


1 Introduction

Recommender systems (RS) are software applications that help users in situations of information overload, and they have become a common feature on many modern online services. Collaborative filtering (CF) techniques, which are based on behavioral data collected from larger user communities, are among the most successful technical approaches in practice. Historically, these approaches have mostly relied on the assumption that information about the longer-term preferences of individual users is available, e.g., in the form of a user–item rating matrix (Resnick et al. 1994). In many real-world applications, however, such longer-term information is not available, because users are not logged in or because they are first-time users. In such cases, techniques that leverage behavioral patterns in a community can still be applied (Jannach and Zanker 2019). The difference is that, instead of long-term preference profiles, only the observed interactions with the user in the ongoing session can be used to adapt the recommendations to the assumed needs, preferences, or intents of the user. Such a setting is usually termed a session-based recommendation problem (Quadrana et al. 2018).

Interestingly, research on session-based recommendation was very scarce for many years despite the high practical relevance of the problem setting. Only in recent years has academic interest in the topic increased (Wang et al. 2019), which is at least partially due to the recent availability of public datasets, in particular from the e-commerce domain. This increased interest in session-based recommendations coincides with the recent boom of deep learning (neural) methods in various application areas. Accordingly, it is not surprising that several neural session-based recommendation approaches have been proposed in recent years, with gru4rec being one of the pioneering and most cited works in this context (Hidasi et al. 2016a).

From the perspective of the evaluation of session-based algorithms, the research community had, at the time when the first neural techniques were proposed, not yet reached the level of maturity that exists for problem setups based on the traditional user–item rating matrix. This led to challenges concerning both the question of what represents the state of the art in terms of algorithms and the question of which evaluation protocol to use when time-ordered user interaction logs are the input instead of a rating matrix. Partly due to this unclear situation, it soon turned out that in some cases comparably simple non-neural techniques, in particular ones based on nearest-neighbors approaches, can lead to very competitive or even better results than neural techniques (Jannach and Ludewig 2017; Ludewig and Jannach 2018). Besides being competitive in terms of accuracy, such simpler approaches often have the advantage that their recommendations are more transparent and can more easily be explained to users. Furthermore, these simpler methods can often be updated online when new data become available, without requiring expensive model retraining.

However, during the last few years after the publication of gru4rec, we have mostly observed new proposals of complex models. With this work, our aim is to assess the progress that has been made in the last few years in a reproducible way. To that end, we have conducted an extensive set of experiments in which we compared twelve session-based recommendation techniques under identical conditions on a number of datasets. Among the examined techniques, there are six recent neural approaches, which were published at highly ranked publication outlets such as KDD, AAAI, or SIGIR after the publication of the first version of gru4rec in 2015.

The main outcome of our offline experiments is that the progress that is achieved with neural approaches to session-based recommendation is still limited. In most experiment configurations, one of the simple techniques outperforms all the neural approaches. In some cases, we also could not confirm that a more recently proposed neural method consistently outperforms the much earlier gru4rec method. Generally, our analyses point to certain underlying methodological issues, which were also observed in other areas of applied machine learning. Similar observations regarding the competitiveness of established and often simpler approaches were made before, e.g., for the domains of information retrieval, time-series forecasting, and recommender systems (Yang et al. 2019; Ferrari Dacrema et al. 2019b; Makridakis et al. 2018; Armstrong et al. 2009), and it is important to note that these phenomena are not tied to deep learning approaches.

To help overcome some of these problems for the domain of session-based recommendation, we share our evaluation framework session-rec online. The framework not only includes the algorithms that are compared in this paper; it also supports different evaluation procedures, implements a number of metrics, and provides pointers to the public datasets that were used in our experiments.

Since offline experiments cannot inform us about the quality of recommendations as perceived by users, we have furthermore conducted a user study. In this study, we compared heuristic methods with a neural approach and with the recommendations produced by a commercial system (Spotify) in the context of an online radio station. The main outcome of this study is that heuristic methods also lead to recommendations (playlists in this case) that are well accepted by users. The study furthermore sheds some light on the importance of other quality factors in this particular domain, specifically the capability of an algorithm to help users discover new items.

The paper is organized as follows. Next, in Sect. 2, we provide an overview of the algorithms that were used in our experiments. Section 3 describes our offline evaluation methodology in more detail, and Sect. 4 presents the outcomes of the experiments. In Sect. 5, we report the results of our user study. Finally, we summarize our findings and their implications in Sect. 7.

2 Algorithms

Algorithms of various types have been proposed over the years for session-based recommendation problems. A detailed overview of the more general family of sequence-aware recommender systems, of which session-based ones are a part, can be found in Quadrana et al. (2018). In the context of this work, we limit ourselves to a brief summary of parts of the historical development and of how we selected algorithms for inclusion in our evaluations.

2.1 Historical development and algorithm selection

Nowadays, different forms of session-based recommendations can be found in practical applications. The recommendation of related items for a given reference object can, for example, be seen as a basic and very typical form of session-based recommendations in practice. In such settings, the selection of the recommendations is usually based solely on the very last item viewed by the user. Common examples are the recommendation of additional articles on news Web sites or recommendations of the form “Customers who bought … also bought” on e-commerce sites. Another common application scenario is the creation of automated playlists, e.g., on YouTube, Spotify, or Last.fm. Here, the system creates a virtually endless list of next-item recommendations based on some seed item and additional observations, e.g., skips or likes, while the media is played. These application domains (Web page and news recommendation, e-commerce, music playlists) also represent the main driving scenarios in academic research.

For the recommendation of Web pages to visit, Mobasher et al. proposed one of the earliest session-based approaches, based on frequent pattern mining, in 2002 (Mobasher et al. 2002). In 2005, Shani et al. (2005) investigated the use of an MDP-based (Markov Decision Process) approach for session-based recommendations in e-commerce and also demonstrated its value from a business perspective. Alternative technical approaches based on Markov processes were later proposed for the news domain (Garcin et al. 2012, 2013).

An early approach to music playlist generation was proposed in 2005 (Ragno et al. 2005), where the selection of items was based on the similarity with a seed song. The music domain was, however, also very important for collaborative approaches. In 2012, the authors of Hariri et al. (2012) used a session-based nearest-neighbors technique as part of their approach for playlist generation. This nearest-neighbors method and improved versions thereof later turned out to be highly competitive with today’s neural methods (Ludewig and Jannach 2018). More complex methods were also proposed for the music domain, e.g., an approach from 2012 based on Latent Markov Embeddings (Chen et al. 2012).

Some novel technical proposals in the years 2014 and 2015 were based on a non-public e-commerce dataset from a European fashion retailer and either used Markov processes and side information (Tavakol and Brefeld 2014) or a simple re-ranking scheme based on short-term intents (Jannach et al. 2015). More importantly, however, in 2015 the ACM RecSys conference hosted a challenge in which the task was to predict whether a consumer will make a purchase in a given session and, if so, which item will be purchased. An industrial partner released a corresponding dataset (YOOCHOOSE), which is very frequently used today for benchmarking session-based algorithms. Technically, the winning team used a two-stage classification approach and invested considerable effort in feature engineering to make accurate predictions (Romov and Sokolov 2015).

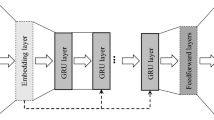

In late 2015, Hidasi et al. (2016a) then published what is probably the first deep learning-based method for session-based recommendation, called gru4rec, which was continuously improved later on, e.g., in Hidasi and Karatzoglou (2018) or Tan et al. (2016). In their work, they also used the YOOCHOOSE dataset for evaluation, although with a slightly different optimization goal, i.e., to predict the immediate next item click event. As one of their baselines, they used an item-based nearest-neighbors technique and found that their neural method is significantly better than this technique in terms of prediction accuracy. The proposal of their method and the booming interest in neural approaches subsequently led to a still ongoing wave of new proposals that apply deep learning approaches to session-based recommendation problems.

In the present work, we consider a selection of algorithms that reflects these historical developments. We consider basic algorithms based on item co-occurrences, sequential patterns and Markov processes as well as methods that implement session-based nearest-neighbors techniques. Looking at neural approaches, we benchmark the latest versions of gru4rec as well as five other methods that were published later and which state that they outperform at least the initial version of gru4rec to a significant extent.

Regarding the selected neural approaches, we limit ourselves to methods that do not use side information about the items in order to make our work easily reproducible and not dependent on such metadata. Another constraint for inclusion in our comparison is that the work was published at a major conference, i.e., one that is rated A or A* according to the Australian CORE scheme. Finally, while in theory algorithms should be reproducible based on the technical descriptions in the paper, there are usually many small implementation details that can influence the outcome of the measurement. Therefore, like in Ferrari Dacrema et al. (2019b), we only considered approaches where the source code was available and could be integrated in our evaluation framework with reasonable effort.

2.2 Considered algorithms

In total, we considered 12 algorithms in our comparison. Table 1 provides an overview of the non-neural methods. Table 2 correspondingly shows the neural methods considered in our analysis, ordered by their publication date.

Except for the ct method, the non-neural methods from Table 1 are conceptually very simple or almost trivial. As mentioned above, this can lead to a number of potential practical advantages compared to more complex models, e.g., regarding online updates and explainability. In terms of computational costs, the time needed to “train” the simple methods is often low, as this phase often reduces to counting item co-occurrences in the training data or to preparing some in-memory data structures. To make the nearest-neighbors technique scalable, we implemented the internal data structures and data sampling strategies proposed in Jannach and Ludewig (2017). Specifically, we pre-process the training data to build fast in-memory look-up tables. These tables can then be used to almost immediately retrieve a set of potentially relevant neighbor sessions in the training data given an item in the test session. Furthermore, to speed up processing times, we sample only a subset (e.g., 1000) of the most recent training sessions when we look for neighbors, as this proved effective in several application domains. Overall, ct was the only non-neural method for which we encountered scalability issues, in the form of memory consumption and prediction time, when the set of recommendable items is huge.
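The look-up tables and the recency-based sampling described above can be illustrated with a minimal session-based kNN sketch in Python. It assumes cosine similarity between binary session vectors and a sum-of-similarities item score, which are common choices for sknn-style methods but not necessarily the exact variants evaluated here; the class `SessionKNN` and its parameters `k` and `sample_size` are hypothetical names, not the session-rec API.

```python
from collections import defaultdict


class SessionKNN:
    """Hypothetical session-based kNN sketch; not the session-rec implementation."""

    def __init__(self, k=100, sample_size=1000):
        self.k = k                      # number of neighbor sessions used for scoring
        self.sample_size = sample_size  # only the most recent candidate sessions are kept
        self.item_to_sessions = defaultdict(set)  # look-up table: item -> sessions containing it
        self.session_items = {}         # session id -> set of items in that session
        self.session_time = {}          # session id -> timestamp of the session

    def fit(self, sessions):
        """sessions: dict session_id -> (list of item ids, timestamp)."""
        # "Training" only builds the in-memory look-up tables; nothing is learned.
        for sid, (items, ts) in sessions.items():
            self.session_items[sid] = set(items)
            self.session_time[sid] = ts
            for item in items:
                self.item_to_sessions[item].add(sid)

    def _candidates(self, current):
        # Retrieve all training sessions sharing at least one item with the current session ...
        cands = set()
        for item in current:
            cands |= self.item_to_sessions.get(item, set())
        # ... and keep only the sample_size most recent ones.
        return sorted(cands, key=lambda s: self.session_time[s], reverse=True)[: self.sample_size]

    def recommend(self, current_items, n=20):
        current = set(current_items)
        sims = []
        for sid in self._candidates(current):
            other = self.session_items[sid]
            # Cosine similarity of the binary item vectors of both sessions.
            sim = len(current & other) / (len(current) * len(other)) ** 0.5
            sims.append((sim, sid))
        neighbors = sorted(sims, reverse=True)[: self.k]
        # Score each item by the summed similarity of the neighbors that contain it.
        scores = defaultdict(float)
        for sim, sid in neighbors:
            for item in self.session_items[sid]:
                scores[item] += sim
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return [item for item, _ in ranked[:n]]
```

Setting `sample_size=1` in this sketch restricts the neighbors to the single most recent matching session, which shows how the sampling trades recall of neighbors for speed.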

Regarding alternative non-neural approaches, note that in our previous evaluation (Ludewig and Jannach 2018), only one neural method but several other machine learning approaches were benchmarked. We do not include these alternative machine learning methods (iknn, fpmc, mc, smf, bpr-mf, fism, fossil) in our present analysis, because the findings in Ludewig and Jannach (2018) showed that they either are generally not competitive or only lead to competitive results in a few special cases.

The development over time regarding the neural approaches is summarized in Table 3. The table also indicates which baselines were used in the original papers. The analysis shows that gru4rec was considered as a baseline in all papers. Most papers refer to the original gru4rec publication from 2016 or an early improved version that was proposed shortly afterward (which we term gru4rec+ here, see Tan et al. 2016). Most papers, however, do not refer to the improved version (gru4rec2) discussed in Hidasi and Karatzoglou (2018). Since the public code for gru4rec was constantly updated, we nevertheless assume that the authors ran benchmarks against the updated versions. narm, as one of the earlier neural techniques, is the only neural method other than gru4rec that is considered quite frequently as a baseline by more recent works.

The analysis of the used baselines furthermore showed that only one of the more recent papers proposing a neural method, csrm (Wang et al. 2019), considers session-based nearest-neighbors techniques as a baseline, even though their competitiveness was documented in a publication at the ACM Recommender Systems conference in 2017 (Jannach and Ludewig 2017). The authors of csrm, however, only consider the original proposal and not the improved versions from 2018 (Ludewig and Jannach 2018). The only other papers in our analysis that consider session-based nearest-neighbors techniques as baselines propose non-neural techniques (ct and stan). The paper proposing stan is furthermore an exception in that it considers quite a number of neural approaches (gru4rec2, stamp, narm, sr-gnn) in its comparison.

3 Evaluation methodology

We benchmarked all methods under the same conditions, using the evaluation framework that we share online to ensure reproducibility of our results.

3.1 Datasets

For our evaluation, we considered eight datasets from two domains, e-commerce and music. Six of them are public and several of them were previously used to benchmark session-based recommendation algorithms. Table 4 briefly describes the datasets.

We pre-processed the original datasets so that all sessions with only one interaction were removed. As done in previous works, we also removed items that appeared fewer than five times in the dataset. Multiple interactions with the same item in one session were kept in the data. While the repeated recommendation of an item does not lead to item discovery, such recommendations can still be helpful from a user’s perspective, e.g., as reminders (Ren et al. 2019; Lerche et al. 2016; Jannach et al. 2017). Furthermore, we use an evaluation procedure where we run repeated measurements on several subsets (splits) of the original data; see Sect. 3.2. The average characteristics of the subsets for each dataset are shown in Table 5. We share all datasets except ZALANDO and 8TRACKS online.
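The filtering rules above can be sketched as follows. The helper name `preprocess` and its parameters are hypothetical; repeated interactions within a session are kept and counted toward item support, as described in the text. The final re-application of the length filter is an assumption, since removing rare items can shrink a session back to a single interaction.

```python
from collections import Counter


def preprocess(sessions, min_item_support=5, min_session_length=2):
    """Filter a dict session_id -> list of item ids (hypothetical helper)."""
    # 1) Drop sessions with only one interaction.
    sessions = {s: items for s, items in sessions.items() if len(items) >= min_session_length}
    # 2) Drop items occurring fewer than min_item_support times; repeats within a session count.
    support = Counter(item for items in sessions.values() for item in items)
    sessions = {
        s: [item for item in items if support[item] >= min_item_support]
        for s, items in sessions.items()
    }
    # 3) Assumption: re-apply the length filter, since step 2 can shorten sessions.
    return {s: items for s, items in sessions.items() if len(items) >= min_session_length}
```

For example, with `min_item_support=3`, the input `{"a": [1, 1, 2], "b": [1, 1, 1], "c": [3], "d": [2, 4]}` reduces to `{"a": [1, 1], "b": [1, 1, 1]}`.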

3.2 Evaluation procedure and metrics

3.2.1 Data and splitting approach

We apply the following procedure to create train–test splits. Since most datasets consist of time-ordered events, usual cross-validation procedures with the randomized allocation of events across data splits cannot be applied. Several authors only use a single time-ordered training–testing split for their measurements. This, however, can lead to undesired random effects. We therefore rely on a protocol where we create five non-overlapping and contiguous subsets (splits) of the datasets. As done in previous works, we use the last n days of each split for evaluation (testing) and the other days for training the models. The reported measurements correspond to the averaged results obtained for each split. The playlist datasets (AOTM and 8TRACKS) are exceptions here as they do not have timestamps. For these datasets, we therefore randomly generated timestamps, which allows us to use the same procedure as for the other datasets. Note that during the evaluation, we only considered items in the test split that appeared at least once in the training data.
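The splitting protocol can be sketched in Python as below. The function `sliding_window_splits` is a hypothetical helper; assigning each session to a window by the timestamp of its last event, using equal-width windows, and discarding test sessions with fewer than two remaining events are assumptions made for the sketch rather than details stated in the text.

```python
from datetime import timedelta


def sliding_window_splits(sessions, num_splits=5, test_days=1):
    """sessions: dict session_id -> time-ordered list of (item_id, datetime).

    Returns num_splits (train, test) pairs from contiguous, non-overlapping windows.
    """
    # Assumption: a session belongs to the window that contains its last event.
    end_time = {s: events[-1][1] for s, events in sessions.items()}
    start, stop = min(end_time.values()), max(end_time.values())
    width = (stop - start) / num_splits
    splits = []
    for k in range(num_splits):
        last = k == num_splits - 1
        lo = start + k * width
        hi = stop if last else start + (k + 1) * width
        window = [s for s, t in end_time.items() if lo <= t < hi or (last and t == hi)]
        # The last test_days days of the window are used for testing, the rest for training.
        test_start = hi - timedelta(days=test_days)
        train = {s: sessions[s] for s in window if end_time[s] < test_start}
        known = {item for events in train.values() for item, _ in events}
        test = {}
        for s in window:
            if end_time[s] >= test_start:
                # Only items seen at least once in the training data are evaluated.
                kept = [(item, t) for item, t in sessions[s] if item in known]
                if len(kept) >= 2:  # assumption: need a prefix and a next item
                    test[s] = kept
        splits.append((train, test))
    return splits
```

The per-split measurements would then be averaged, as described above.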

3.2.2 Hyperparameter optimization

Proper hyperparameter tuning is essential when comparing machine learning approaches. We therefore tuned all hyperparameters for all methods and datasets in a systematic way, using MRR@20 as the optimization target, as done in previous works. Technically, we created subsets from the training data for validation. The size of the validation set was chosen so that it covered the same number of days that was used in the final test set. We applied a random hyperparameter optimization approach with 100 iterations, as done in Hidasi and Karatzoglou (2018), Liu et al. (2018) and Li et al. (2017). Since narm and csrm have a smaller set of hyperparameters, we only performed 50 iterations for these methods. Since the tuning process was particularly time-consuming for sr-gnn and nextitnet, we also had to limit the number of iterations to 50 for sr-gnn on the ZALANDO dataset and for nextitnet on the RSC15 dataset. The final hyperparameter values for each method and dataset can be found online, along with a description of the investigated ranges.
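A random hyperparameter search of this kind can be sketched as follows. The `evaluate` callable (returning, e.g., MRR@20 on the validation subset) and the discrete search `space` are hypothetical inputs; sampling each parameter independently and uniformly from a list of candidates is an assumption, since the investigated ranges are only described online.

```python
import random


def random_search(evaluate, space, n_iter=100, seed=0):
    """Random hyperparameter search (sketch).

    evaluate: hypothetical callable params -> validation score (e.g., MRR@20).
    space: dict parameter name -> list of candidate values.
    """
    rng = random.Random(seed)  # fixed seed for reproducible sampling
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        # Draw each hyperparameter independently and uniformly from its candidates.
        params = {name: rng.choice(values) for name, values in space.items()}
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

Reducing `n_iter` to 50, as was done for some methods, simply lowers the number of sampled configurations.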

3.2.3 Accuracy measures

For each session in the test set, we incrementally reveal the events of the session one after the other, as proposed in Hidasi et al. (2016a). The task of the recommendation algorithm is to generate a prediction for the next event(s) in the session in the form of a ranked list of items. Standard accuracy measures from information retrieval can then be applied to the resulting list. The measurement can be done in two different ways.

-

As in Hidasi et al. (2016a) and other works, we can measure if the immediate next item is part of the resulting list and at which position it is ranked. The corresponding measures are the Hit Rate and the Mean Reciprocal Rank.

-

In typical information retrieval scenarios, however, one is usually not interested in getting a single item right (e.g., the first search result), but in having as many predictions as possible right in a longer list that is displayed to the user. This also applies to session-based recommendation scenarios, as usually more than one recommendation is displayed, e.g., on music and e-commerce sites. Therefore, we measure Precision and Recall in the usual way, by comparing the objects of the returned list with the entire remaining session, assuming that not only the immediate next item is relevant for the user. In addition to Precision and Recall, we also report the Mean Average Precision metric.
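Both measurement variants can be sketched for a single test session as below. `recommend(prefix, n)` is a hypothetical callable returning a ranked list without duplicates. Treating the remaining session as a set of unique items and normalizing Average Precision by `min(|remaining|, cutoff)` are assumptions; the session-rec framework may define these details differently.

```python
def evaluate_session(recommend, session, cutoff=20):
    """Incrementally reveal a session and average the measures over all steps (sketch)."""
    steps = len(session) - 1
    if steps < 1:
        raise ValueError("session needs at least two events")
    totals = {"HR": 0.0, "MRR": 0.0, "P": 0.0, "R": 0.0, "MAP": 0.0}
    for pos in range(1, len(session)):
        prefix, nxt, remaining = session[:pos], session[pos], set(session[pos:])
        recs = recommend(prefix, cutoff)[:cutoff]
        # Variant 1: is the immediate next item in the list, and at which rank?
        if nxt in recs:
            totals["HR"] += 1
            totals["MRR"] += 1.0 / (recs.index(nxt) + 1)
        # Variant 2: compare the list with the entire remaining session.
        hits, ap = 0, 0.0
        for rank, item in enumerate(recs, start=1):
            if item in remaining:
                hits += 1
                ap += hits / rank  # precision at each rank where a hit occurs
        totals["P"] += hits / cutoff
        totals["R"] += hits / len(remaining)
        totals["MAP"] += ap / min(len(remaining), cutoff)
    return {name: value / steps for name, value in totals.items()}
```

For instance, a recommender that always returns `["b", "c", "x"]` scores HR 1.0, MRR 0.75, Precision 0.5, Recall 1.0, and MAP 0.75 on the session `["a", "b", "c"]` with a cutoff of 3.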

The most common cutoff threshold in the literature is 20, probably because this was the threshold chosen by the authors of gru4rec (Hidasi et al. 2016a). We have made measurements for alternative list lengths as well, but only report the results for a list length of 20 in this paper. We report additional results for cutoff thresholds of 5 and 10 in an online appendix.

3.2.4 Coverage and popularity

Depending on the application domain, factors other than prediction accuracy might be relevant as well, including coverage, novelty, diversity, or serendipity (Shani and Gunawardana 2011). Since we do not have information about item characteristics, we focus on questions of coverage and novelty in this work.

With coverage, we here refer to what is sometimes called “aggregate diversity” (Adomavicius and Kwon 2012). Specifically, we measure the fraction of catalog items that appear in at least one top-n list presented to the users in the test set. To some extent, this coverage measure also reflects the level of context adaptation, i.e., whether an algorithm tends to recommend the same set of items to everyone or varies the recommendations specifically for a given session.
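The coverage measure reduces to a set union over all top-n lists. In the following sketch, the function name `coverage` and its arguments are hypothetical.

```python
def coverage(rec_lists, catalog):
    """Fraction of catalog items that appear in at least one top-n list (sketch)."""
    shown = set()
    for recs in rec_lists:
        shown.update(recs)  # collect every item that was ever recommended
    catalog = set(catalog)
    return len(shown & catalog) / len(catalog)
```

An algorithm that recommends the same list to every session keeps `shown` small and therefore obtains low coverage.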

We approximate the novelty level of an algorithm by measuring how popular the recommended items are on average. The underlying assumption is that recommending more unpopular items leads to higher novelty and discovery effects. Algorithms that mostly focus on the recommendation of popular items might be undesirable from a business perspective, e.g., when the goal is to leverage the potential of the long tail in e-commerce settings. Technically, we measure the popularity level of an algorithm as follows. First, we compute min-max normalized popularity values of each item in the training set. Then, during evaluation, we compute the popularity level of an algorithm by determining the average popularity value of the items that appear in its top-n recommendation lists. Higher values correspondingly mean that an algorithm has a tendency to recommend rather popular items.
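The popularity level can be sketched as follows, using interaction counts in the training sessions as the raw popularity. Averaging within each list first and then across lists, and assigning a value of 0 to items unseen in training, are assumptions. The helper name `popularity_level` is hypothetical.

```python
from collections import Counter


def popularity_level(train_sessions, rec_lists):
    """Average min-max normalized popularity of recommended items (sketch)."""
    counts = Counter(item for items in train_sessions for item in items)
    lo, hi = min(counts.values()), max(counts.values())
    span = (hi - lo) or 1  # avoid division by zero if all items are equally popular
    norm = {item: (c - lo) / span for item, c in counts.items()}
    # Assumption: average within each list first, then across all lists.
    per_list = [sum(norm.get(i, 0.0) for i in recs) / len(recs) for recs in rec_lists if recs]
    return sum(per_list) / len(per_list)
```

With training sessions `[[1, 1, 2], [1, 3]]`, item 1 receives the normalized value 1.0 and items 2 and 3 receive 0.0, so the lists `[[1, 2], [3]]` yield a popularity level of 0.25.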

3.2.5 Running times

Complex neural models can require substantial computational resources for training. Training a “model”, i.e., calculating the statistics, for co-occurrence-based approaches like sr or ar can, in contrast, be done very efficiently. For nearest-neighbors-based approaches, no model is actually learned at all. Instead, some of our nearest-neighbors implementations need some time to create internal data structures that allow for efficient recommendation at prediction time. In this paper, we report running times for selected datasets from both domains.
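Why "training" co-occurrence methods is cheap can be seen from a sketch of the underlying statistics. In this sketch, ar counts how often two items co-occur in a session, and sr counts how often one item follows another. The `1/distance` weight and the `max_steps` window for sr are assumptions, not necessarily the exact configuration used in the experiments, and all function names are hypothetical.

```python
from collections import defaultdict


def train_ar(sessions):
    """Association rules: count co-occurrences of item pairs within a session."""
    rules = defaultdict(lambda: defaultdict(float))
    for items in sessions:
        for i, a in enumerate(items):
            for j, b in enumerate(items):
                if i != j and a != b:
                    rules[a][b] += 1
    return rules


def train_sr(sessions, max_steps=10):
    """Sequential rules: b follows a, weighted by 1/distance (assumed weighting)."""
    rules = defaultdict(lambda: defaultdict(float))
    for items in sessions:
        for i, a in enumerate(items):
            for j in range(i + 1, min(i + 1 + max_steps, len(items))):
                if items[j] != a:
                    rules[a][items[j]] += 1.0 / (j - i)
    return rules


def recommend_next(rules, last_item, n=20):
    """Rank items by their rule score given the last item of the session."""
    scores = rules.get(last_item, {})
    return [b for b, _ in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))][:n]
```

Training amounts to a single pass over the sessions, and the resulting tables can be updated incrementally when new sessions arrive.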

We executed all experiments on the same physical machine. The running times for the neural methods were determined using a GPU; the non-neural methods used a CPU. Ideally, running times would be compared on the same hardware. However, since the running times of the neural methods are much longer even when a GPU is used, we can assume that the true difference in computational complexity is even larger than our measurements show.

3.2.6 Stability with respect to new data

In some application domains, e.g., news recommendation or e-commerce, new user–item interaction data can come in at a high rate. Since retraining the models to accommodate the new data can be costly, a desirable characteristic of an algorithm can be that the performance of the model does not degrade too quickly before retraining happens. In other words, it is desirable that the models do not overfit too much to the training data.

To investigate this particular form of model stability, we proceeded as follows. First, we trained a model on the training data \(T_0\) of a given train–test split. Then, we made measurements using two different protocols, which we term retraining and no-retraining, respectively.

-

In the retraining configuration, we first evaluated the model that was trained on \(T_0\) using the data of the first day of the test set. Then, we added this first day of the test set to \(T_0\) and retrained the model on this extended dataset, which we name \(T_1\). Then, we continued with the evaluation with the data from the second day of the test data, using the model trained on \(T_1\). This process of adding more data to the training set, retraining the full model, and evaluating on the next day of the test set was done for all days of the test set except the last one.

-

In the no-retraining configuration, we also evaluated the performance day by day on the test data, but did not retrain the models, i.e., we used the model trained on \(T_0\) for all days in the test data.

To enable a fair comparison in both configurations, we only considered items in the evaluation phase that appeared at least once in the original training data \(T_0\).
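The two protocols can be sketched as a single loop with a `retrain` switch. In this sketch, `train_fn(sessions) -> model` and `eval_fn(model, day_sessions, known_items) -> accuracy` are hypothetical callables supplied by the caller. The relative drop with respect to the first test day is one possible way to quantify the stability indicator discussed next, not necessarily the exact computation used in the experiments.

```python
def day_by_day(train_fn, eval_fn, train_sessions, test_days, retrain):
    """Evaluate day by day, with or without retraining (sketch)."""
    # Items of the original training data T_0; eval_fn should ignore all other items.
    known_items = {item for session in train_sessions for item in session}
    data = list(train_sessions)
    model = train_fn(data)  # model trained on T_0
    results = []
    for k, day in enumerate(test_days):
        results.append(eval_fn(model, day, known_items))
        # Retraining: T_{k+1} = T_k plus test day k, except after the last day.
        if retrain and k < len(test_days) - 1:
            data = data + list(day)
            model = train_fn(data)
    return results


def relative_drop(results):
    """Accuracy drop on each day relative to the first test day (assumes results[0] > 0)."""
    return [(results[0] - r) / results[0] for r in results]
```

With `retrain=False`, the same model trained on \(T_0\) is used for all test days, which corresponds to the no-retraining configuration.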

Note that the absolute accuracy values for a given test day depend on the characteristics of the recorded data on that day. In some cases, the accuracy for the second test day can therefore even be higher than for the first test day, even without retraining. An exact comparison of absolute values is therefore not very meaningful. However, we consider the relative accuracy drop when using the initial model trained on \(T_0\) for a number of consecutive days as an indicator of the generalizability or stability of the learned models, provided that the investigated algorithms start from a comparable accuracy level.

4 Results

In this section, we report the results of our offline evaluation. We will first focus on accuracy, then look at alternative quality measures, and finally discuss aspects of scalability and the stability of different models over time.

4.1 Accuracy results

4.1.1 E-commerce datasets

Table 6 shows the results for the e-commerce datasets, ordered by the values obtained for the MAP@20 metric. The non-neural models are marked with full circles, while the neural ones can be identified by empty ones. The highest value across all techniques is printed in bold; the highest value obtained by the other family of algorithms (neural or non-neural) is underlined. Stars indicate significant differences (\(p < 0.05\)) according to a Kruskal–Wallis test across all models and a Wilcoxon signed-rank test between the best-performing techniques from each category. The results for the individual datasets can be summarized as follows.

-

On the RETAIL dataset, the nearest-neighbors methods consistently lead to the highest accuracy results on all the accuracy measures. Among the complex models, the best results were obtained by gru4rec on all the measures except for MRR, where sr-gnn led to the best value. The results for narm and gru4rec are almost identical on most measures.

-

The results for the DIGI dataset are comparable, with the neighborhood methods leading to the best accuracy results. gru4rec is again the best method across the complex models on all the measures.

-

For the ZALANDO dataset, the neighborhood methods dominate all accuracy measures except the MRR. Here, gru4rec is marginally better than the simple sr method. Among the complex models, gru4rec achieves the best HR value, and the recent sr-gnn method is the best one on the other accuracy measures.

-

Only for the RSC15 dataset can we observe that a neural method (narm) is able to slightly outperform our best simple baseline vstan in terms of MAP, Precision, and Recall. Interestingly, however, narm is one of the earlier neural methods in this comparison. The best Hit Rate is achieved by vstan, and the best MRR by sr-gnn. The differences between the best neural and non-neural methods are often tiny, in most cases around or below 1%.

Looking at the results across the different datasets, we can make the following additional observations.

-

Across all e-commerce datasets, the vstan method proposed in this paper is, for most measures, the best neighborhood-based method. This suggests that it is reasonable to include it as a baseline in future performance comparisons.

-

The ranking of the neural methods varies largely across the datasets and does not follow the order in which the methods were proposed. As for the non-neural methods, the specific ranking therefore seems to depend strongly on the dataset characteristics. This makes it particularly difficult to judge the progress that is made when only one or two datasets are used for the evaluation.

-

The results for the RSC15 dataset are generally different from the other results. Specifically, we found that some neural methods (narm, sr-gnn, nextitnet) are competitive and sometimes even slightly outperform our baselines. Moreover, stamp and nextitnet are usually not among the top performers, but work well for this dataset. Unlike for other e-commerce datasets, ct works particularly well for this dataset in terms of the MRR. Given these observations, it seems that the RSC15 dataset has some unique characteristics that are different from the other e-commerce datasets. Therefore, it seems advisable to consider multiple datasets with different characteristics in future evaluations.

-

We did not include measurements for nextitnet, one of the most recent methods, for some of the datasets (ZALANDO, 30MU, 8TRACKS, NOWP), because our machines ran out of memory (> 32 GB). These datasets were either comparably large or had longer sessions on average.

4.1.2 Music domain

In Table 7, we present the results for the music datasets. In general, the observations are in line with what we observed for the e-commerce domain regarding the competitiveness of the simple methods.

-

Across all datasets except 8TRACKS, the nearest-neighbors methods are consistently favorable in terms of Precision, Recall, MAP, and Hit Rate, while the ct method leads to the best MRR. Moreover, the simple sr technique often yields very good MRR values.

-

For the 8TRACKS dataset, the best Recall, MAP, and Hit Rate values are again achieved by neighborhood methods. The best Precision and MRR values, however, are achieved by a neural method (narm).

-

Again, no consistent ranking of the algorithms can be found across the datasets. In particular, the neural approaches take largely varying positions in the rankings across the datasets. Generally, narm seems to be a technique which performs consistently well on most datasets and measures.

4.2 Coverage and popularity

Tables 6 and 7 also contain information about the coverage and the popularity bias of the individual algorithms. Section 3.2 describes how these numbers were calculated. From the results, we can identify the following trends regarding individual algorithms and the different algorithm families.
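Sect. 3.2 gives the exact definitions, which are not repeated here. The sketch below illustrates one plausible reading under stated assumptions: coverage as the share of catalog items that appear in at least one top-k list, and popularity bias as the average, over all lists, of the recommended items' training-set interaction counts divided by the count of the most popular item. Both the normalization and the function name are assumptions chosen for illustration.

```python
from collections import Counter
from typing import Iterable, Sequence, Tuple


def coverage_and_popularity(
    recommendation_lists: Sequence[Sequence[int]],
    training_events: Iterable[int],
    catalog: Iterable[int],
    k: int = 20,
) -> Tuple[float, float]:
    """Return (coverage@k, popularity@k) under the assumptions stated above."""
    counts = Counter(training_events)  # item -> number of interactions in training data
    max_count = max(counts.values(), default=0)
    catalog_set = set(catalog)
    recommended = set()  # distinct items shown in at least one top-k list
    list_popularity = []  # mean normalized popularity of each top-k list
    for recs in recommendation_lists:
        top_k = list(recs[:k])
        recommended.update(top_k)
        if top_k and max_count > 0:
            # Counter returns 0 for items never seen in training.
            list_popularity.append(
                sum(counts[item] / max_count for item in top_k) / len(top_k)
            )
    coverage = len(recommended & catalog_set) / len(catalog_set) if catalog_set else 0.0
    popularity = sum(list_popularity) / len(list_popularity) if list_popularity else 0.0
    return coverage, popularity
```

Under this reading, a method with a strong popularity bias, such as ct, would obtain values close to 1 on the popularity measure. A method that spreads its recommendations over many less frequent items, as gru4rec tends to do, would score lower on popularity and higher on coverage.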

4.2.1 Popularity bias

-

The ct method is very different from all other methods in terms of its popularity bias, which is much higher than for any other method.

-

The gru4rec method, on the other hand, is the method that almost consistently recommends the most unpopular (or: novel) items to the users.

-

The neighborhood-based methods are often in the middle. There are, however, also neural methods, in particular sr-gnn, that seem to have a similar or sometimes even stronger popularity bias than the nearest-neighbors approaches. Our experiments therefore do not confirm the assumption that nearest-neighbors methods generally focus more on popular items than neural methods.

4.2.2 Coverage

-

In terms of coverage, we found that gru4rec often leads to the highest values.

-

The coverage of the neighborhood-based methods varies quite a lot, depending on the specific algorithm variant. In some configurations, their coverage is almost as high as for gru4rec, while in others the coverage can be low.

-

The coverage values of the other neural methods also do not show a clear ranking, and they are often in the range of the neighborhood-based methods and sometimes even very low.

4.3 Scalability

We present selected results regarding the running times of the algorithms for two e-commerce datasets and one music dataset in Table 8. The reported times were measured for training and predicting for one data split. The numbers reported for predicting correspond to the average time needed to generate a recommendation for a session beginning in the test set. For this measurement, we used a workstation computer with an Intel Core i7-4790K processor and an Nvidia GeForce GTX 1080 Ti graphics card (Cuda 10.1/CuDNN 7.5).
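A minimal Python sketch of such a timing protocol follows. It assumes a hypothetical model object with `fit` and `predict_next` methods (these names are illustrative and are not the session-rec API): training is timed once per split, and the prediction time is averaged over all next-item predictions in the test sessions. The toy `PopularityModel` is only a stand-in so that the example runs.

```python
import time
from collections import Counter
from typing import List, Sequence, Tuple


class PopularityModel:
    """Toy stand-in: recommends the globally most frequent training items."""

    def fit(self, sessions: Sequence[Sequence[int]]) -> None:
        counts = Counter(item for session in sessions for item in session)
        self.ranking: List[int] = [item for item, _ in counts.most_common()]

    def predict_next(self, session_prefix: Sequence[int], k: int = 20) -> List[int]:
        return self.ranking[:k]


def measure_times(model, train_sessions, test_sessions, k: int = 20) -> Tuple[float, float]:
    """Return (training seconds, mean seconds per next-item prediction)."""
    start = time.perf_counter()
    model.fit(train_sessions)
    train_seconds = time.perf_counter() - start

    durations: List[float] = []
    for session in test_sessions:
        # Predict after each revealed event, i.e., for every prefix of length >= 1.
        for t in range(1, len(session)):
            start = time.perf_counter()
            model.predict_next(session[:t], k)
            durations.append(time.perf_counter() - start)
    mean_prediction = sum(durations) / len(durations) if durations else 0.0
    return train_seconds, mean_prediction
```

Wall-clock measurements of this kind depend on the hardware and on whether a GPU is used, which is why the hardware configuration is reported alongside the numbers.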

The results generally show that, as expected, the computational complexity of neural methods is much higher than that of the non-neural approaches. In some cases, researchers therefore use only a subset of the original data, e.g., of the RSC15 dataset. Several algorithms, both neural ones and the ct method, exhibit major scalability issues when the number of recommendable items increases. Furthermore, the nextitnet method consumes a lot of memory for some datasets, as mentioned above, which leads to out-of-memory errors.

In some cases, like for ct or sr-gnn, not only the training times increase but also the prediction times. Prediction times in particular, however, can be subject to strict constraints in production settings. The prediction times for the nearest-neighbors methods are often slightly higher than those measured for methods like gru4rec, but they usually lie within the time constraints of real-time recommendation (e.g., about 30 ms for one prediction on the ZALANDO dataset).

Since datasets in real-world environments can be even larger, this raises questions regarding the practicability of some of the approaches. In general, even in cases where a complex neural method slightly outperforms a simpler one in an offline evaluation, it remains open whether it is worth the effort to put such a complex method into production. For the ZALANDO dataset, for example, the best neural method (sr-gnn) needs several orders of magnitude more time to train (Footnote 8) than the best non-neural method, vstan, which also needs only half the time for recommending.

A final interesting observation is that there can be a large spread, i.e., in the range of an order of magnitude and more, between the running times of the neural methods. For example, the methods that use convolution (nextitnet) or graph structures (sr-gnn) often need much more time than other techniques like gru4rec or narm. A detailed theoretical analysis of the computational complexity of the different algorithms and their underlying architectures is, however, beyond the scope of our present work, which compares the effectiveness and efficiency in an empirical way.

4.4 Stability with respect to new data

We report the stability results for the examined neural and non-neural algorithms in Table 9. Given the computational requirements for this simulation-based analysis, which requires multiple full retraining phases, we selected one of the smaller datasets for each domain in this analysis, DIGI and NOWP.

We used two months of training data and 10 days of test data for both datasets. The reported values show by how much (in percent) the accuracy of each algorithm degrades when it is not retrained daily, averaged across the test days.
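Assuming that the degradation is measured relative to a model that is retrained before each test day, the reported percentages could be computed as in the following sketch. The exact protocol is defined in the experimental setup and may differ in detail. The inputs are per-day accuracy values (e.g., HR or MRR) for the retrained and the non-retrained model, and the function name is hypothetical.

```python
from typing import Sequence


def average_degradation(retrained: Sequence[float], not_retrained: Sequence[float]) -> float:
    """Mean relative accuracy drop (in percent) across test days.

    retrained[d]: accuracy on day d of a model retrained with all data up to day d.
    not_retrained[d]: accuracy on day d of the model trained once before the test period.
    """
    if len(retrained) != len(not_retrained) or not retrained:
        raise ValueError("expected one non-empty value pair per test day")
    drops = [
        # Relative drop for one day; days without any retrained accuracy count as 0 %.
        100.0 * (fresh - stale) / fresh if fresh > 0 else 0.0
        for fresh, stale in zip(retrained, not_retrained)
    ]
    return sum(drops) / len(drops)
```

For instance, illustrative daily values of 0.50 and 0.40 with retraining versus 0.45 and 0.20 without give drops of 10 % and 50 %, i.e., an average degradation of 30 %.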

We can see from the results that the drop in accuracy without retraining can vary a lot across datasets (domains). For the DIGI dataset, the decrease in performance ranges between 0 and 10 percent across the different algorithms and performance measures. The NOWP dataset from the music domain seems to be more short-lived, with recent trends that have to be taken into account. Here, the decrease in performance ranges from about 15 to 50 percent in terms of HR and from about 15 to 85 percent in terms of MRR (Footnote 9).

Looking at the detailed results, we see that in both families of algorithms, i.e., neural and non-neural ones, some algorithms are much more stable than others when new data are added to a given dataset. For the non-neural approaches, we see that nearest-neighbor approaches are generally more stable than the other baseline techniques based on association rules or context trees.

Among the neural methods, narm is the most stable one on the DIGI dataset, but often falls behind the other deep learning methods on the NOWP dataset (Footnote 10). On this latter dataset, the csrm method leads to the most stable results. In general, however, no clear pattern across the datasets can be found regarding the performance of the neural methods when new data come in and no retraining is done.

Overall, given that the computational costs of training complex models can be high, it can be advisable to look at the stability of algorithms with respect to new data when choosing a method for production. According to our analysis, there can be strong differences across the algorithms. Furthermore, the nearest-neighbors methods appear to be quite stable in this comparison.

5 Observations from a user study

Offline evaluations, while predominant in the literature, can have certain limitations, in particular when it comes to the question of how users perceive the quality of the provided recommendations. We therefore conducted a controlled experiment in which we compared different algorithmic approaches for session-based recommendation in the context of an online radio station. In the following sections, we report the main insights of this experiment. While the study did not include all algorithms from our offline analysis, we consider it helpful for obtaining a more comprehensive picture of the performance of session-based recommenders. More details about the study can be found in Ludewig and Jannach (2019).

5.1 Research questions and study setup

5.1.1 Research questions

Our offline analysis indicated that simple methods are often more competitive than the more complex ones. Our main research question therefore was how users perceive the recommendations generated by such simple methods in different dimensions, in particular compared to recommendations by a complex method. Furthermore, we were interested in how users perceive the recommendations of a commercial music streaming service, in our case Spotify, in the same situation.

5.1.2 Study setup

An online music listening application in the form of an “automated radio station” was developed for the purpose of the study. Similar to existing commercial services, users of the application could select a track they like (called a “seed track”), based on which the application created a playlist of subsequent tracks.

The users could then listen to an excerpt of the next track and were asked to provide feedback about it, as shown in Fig. 1. Specifically, they were asked (1) whether they already knew the track, (2) to what extent the track matched the previously played track, and (3) to what extent they liked the track (independent of the playlist). In addition, the participants could press a “like” button before skipping to the next track. After such a like action, the list of upcoming tracks was updated, and users were given a visual hint that this update took place. The playlist was updated in this way for all methods including Spotify, i.e., in that case we retrieved a new playlist through the Spotify API after each like statement.

Once the participants had listened to and rated at least 15 tracks, they were forwarded to a post-task questionnaire. In this questionnaire, we asked the participants 11 questions about how they perceived the service; see also Pu et al. (2011). Specifically, the participants answered the questions using seven-point Likert scale items, ranging from “completely disagree” to “completely agree”. The questions, which include a twelfth question as an attention check, are listed in Table 10. In the table, we group the questions according to the quality dimensions they refer to, inspired by Pu et al. (2011). This grouping was not visible to the participants in the online study.

The study itself was based on a between-subjects design, where the treatments for each user group correspond to different algorithmic approaches to generate the recommendations. We included algorithms from different families in our study.

-

ar: Association rules of length two, as described in Sect. 2. We included this method as a simple baseline.

-

cagh: Another relatively simple baseline, which recommends the greatest hits of artists similar to those liked in the current session. This music-specific method is often competitive in offline evaluations as well; see Bonnin and Jannach (2014).

-

sknn: The basic nearest-neighbors method described above. We took the simple variant as a representative for the family of such approaches, as it performed particularly well in the ACM RecSys 2018 challenge (Ludewig et al. 2018).

-

gru4rec: The RNN-based approach discussed above, used as a representative for neural methods. narm would have been a stable alternative, but did not scale well for the dataset used.

-

spotify: Recommendations in this treatment group were retrieved in real time from the Spotify API.
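To make the ar baseline concrete, the following sketch assumes that rules of length two are obtained by counting how often two distinct items co-occur in the same session, and that candidates are ranked by their co-occurrence with the last item only. This matches the later observation that ar considers only the very last played track. Counting each pair at most once per session is a simplification chosen here; Sect. 2 describes the variant actually used, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import permutations
from typing import Dict, List, Sequence

Rules = Dict[int, Dict[int, int]]


def train_pair_rules(sessions: Sequence[Sequence[int]]) -> Rules:
    """Count, for every ordered pair (a, b), the sessions that contain both items."""
    rules: Rules = defaultdict(lambda: defaultdict(int))
    for session in sessions:
        distinct = list(dict.fromkeys(session))  # keep first occurrences, drop repeats
        for a, b in permutations(distinct, 2):
            rules[a][b] += 1
    return rules


def recommend_ar(rules: Rules, session: Sequence[int], k: int = 10) -> List[int]:
    """Rank candidates only by their co-occurrence count with the last item."""
    if not session:
        return []
    candidates = rules.get(session[-1], {})
    # Highest count first; ties broken by item id for a deterministic order.
    ranked = sorted(candidates.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:k]]
```

For the sessions [[1, 2, 3], [1, 2], [2, 4]] and a current session ending in item 2, item 1 (two co-occurrences) is ranked before items 3 and 4 (one each). Because everything before the last item is ignored, such a method can recommend popular tracks that fit poorly with the rest of the playlist.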

We optimized and trained all models on the Million Playlist Dataset (MPD) (Footnote 11) provided by Spotify. We then recruited study participants using Amazon’s Mechanical Turk crowdsourcing platform. After excluding participants who did not pass the attention checks, we ended up with N=250 participants, i.e., 50 for each treatment group, for whom we were confident that they provided reliable feedback.

Most of the recruited participants (almost 80%) were US-based. The most typical age range was between 25 and 34, with more than 50% of the participants falling into this category. On average, the participants considered themselves to be music enthusiasts, with an average response of 5.75 (on the seven-point scale) to a corresponding survey question. As usual, the participants received compensation for their efforts through the crowdsourcing platform.

5.2 User study outcomes

The main observations can be summarized as follows.

5.2.1 Feedback on the listening experience

Regarding the feedback collected during the listening sessions, we observed the following.

-

Number of Likes: There were significant differences in the number of likes across the treatment groups. Recommendations by the simple ar method received the highest number of likes (6.48), followed by sknn (5.63), cagh (5.38), gru4rec (5.36), and spotify (4.48).

-

Popularity of Tracks: We found a clear correlation (r = 0.89) between the general popularity of a track in the MPD dataset and the number of likes in the study. The ar and cagh methods recommended, on average, the most popular tracks. The recommendations by spotify and gru4rec were more oriented toward tracks with lower popularity.

-

Track Familiarity: There were also clear differences in terms of how many of the recommended tracks were already known to the users. The cagh (10.83 %) and sknn (10.13 %) methods recommended the largest share of known tracks. The ar method, even though it recommended very popular tracks, led to noticeably fewer familiar recommendations (8.61 %). gru4rec was somewhere in the middle (9.30 %), and spotify recommended the most novel tracks to users (7.00 %).

-

Suitability of Track Continuations: The continuations created by sknn and cagh were perceived to be the most suitable ones. The differences between sknn and ar, gru4rec, and spotify were significant. The recommendations made by the ar method were considered to match the playlist the least. This is not too surprising, because the ar method only considers the very last played track for the recommendation of subsequent tracks.

-

Individual Track Ratings: The differences in the individual track ratings are generally small and not significant. Interestingly, the playlist-independent ratings for tracks recommended by the ar method were the lowest ones, even though these recommendations received the highest number of likes. An analysis of the rating distribution shows that the ar method often produces very bad recommendations, with a mode value of 1 on the 1–7 rating scale.
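The correlation between track popularity and likes is reported as r, which we read as a Pearson correlation coefficient (an assumption; the exact statistic is not restated in this section). A dependency-free sketch of the coefficient follows; the sample values in the test are illustrative and are not the study data.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation and spread around the means (the common 1/n factors cancel).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant samples")
    return cov / math.sqrt(var_x * var_y)
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient. A value close to 1, as observed in the study, means that tracks that are popular in the MPD also tend to collect more likes, which is one reason why the number of likes alone can favor popularity-oriented methods such as ar.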

5.2.2 Post-task questionnaire

The detailed statistics of the answers to the post-task questionnaire are shown in Table 11. The analysis of the data revealed the following aspects:

-

Q1: The radio station based on sknn was significantly more liked than the stations that used gru4rec, ar, and spotify.

-

Q2: All radio stations matched the users’ general taste quite well, with median values between 5 and 6 on a seven-point scale. Only the station based on the ar method received a significantly lower rating than the others.

-

Q3: The sknn method was found to perform significantly better than ar and gru4rec with respect to identifying tracks that musically match the seed track.

-

Q4: The adaptation of the playlist based on the like statements was considered good for all radio stations. Again, the feedback for the ar method was significantly lower than for the other methods.

-

Q5 and Q6: No significant differences were found regarding the surprise level of the different recommendation strategies.

-

Q7: Regarding the capability of recommending unknown tracks that the users liked, the recommendations by spotify were perceived to be much better than those of the other methods, with significant differences compared to all of them.

-

Q9 to Q12: The best performing methods in terms of the intention to reuse and the intention to recommend the radio station to others were sknn, cagh, and spotify. gru4rec and ar were slightly worse, sometimes with differences that were statistically significant.

Overall, the study confirmed that methods like sknn not only perform well in an offline evaluation but are also able to generate recommendations that users perceive well in different dimensions. The study also revealed a number of additional insights.

First, we found that optimizing for like statements can be misleading. The ar method received the highest number of likes, but was consistently worse than other techniques in almost all other dimensions. This was apparently caused by the fact that the ar method made a number of bad recommendations; see also Chau et al. (2013) for an analysis of the effects of bad recommendations in the music domain.

Second, discovery support turned out to be an important factor in this particular application domain. While the recommendations of spotify were slightly less appreciated than those by sknn, we found no difference in terms of the users’ intention to reuse the system or to recommend it to friends. We hypothesize that the better discovery support of spotify’s recommendations was an important factor for this phenomenon. This observation points to the importance of considering multiple potential quality factors when comparing systems.

6 Research limitations

Our work does not come without limitations, both regarding the offline evaluations and the user study.

6.1 Potential data biases

One general problem of offline evaluations based on historical data is that we often know very little about the circumstances and environment in which the data were collected. For the e-commerce datasets, for example, what we see as interactions in the log can be at least partially the result of the recommender system that was in place during the time of data collection, or it can simply be the result of how certain items or categories were promoted in the online shop. For the music datasets, and in particular for data obtained from Last.fm (30MU), it might be that the logs to some extent reflect what the Last.fm radio station functionality was playing automatically given a seed track. Well-performing algorithms, i.e., those that predict the next items in the log accurately, might therefore be the ones that are able to “reconstruct” the logic of an existing recommender in some ways. The results of such a biased offline evaluation might therefore not fully reflect the effectiveness of a system.

Over the years, a number of approaches were proposed to deal with such problems, e.g., by using evaluation measures that take biases into account or by trying to “de-bias” the datasets (Steck 2010; Carraro and Bridge 2019). In particular, in the context of reinforcement learning and bandit-based approaches, a number of research proposals were made for unbiased offline evaluation protocols to obtain more realistic performance estimates from log data; see Li et al. (2011) for an early work. The analysis or consideration of such biases was, however, not within the scope of this work, which aimed at comparing different existing algorithms using standard evaluation protocols. While the outcomes of these analyses (and of the original works) may therefore suffer from potential biases, the conducted user study provided us with strong indications that the generated recommendations were also liked by users.

6.2 Empirical nature of the work

Generally, our work, like the papers that proposed the analyzed neural models, is mainly empirical in terms of the research approach. Algorithmic papers that propose new models in many cases do not start with a theoretical model, but presumably more often with an intuition of what kind of signals there could be in the data. In case performance increases are found when using a model that is designed to capture these signals, a common approach is to use ablation studies to determine, again empirically, to what extent certain parts of the network architecture contribute to the overall performance. In the context of our comparative work, in contrast, it would be interesting to understand why even computationally very complex models do not consistently perform better than simpler models. One possible explanation is that some underlying assumptions do not hold for the majority of the datasets. In some domains, for example, the sequential ordering of the events, as captured by RNNs, might not be very important. Another problem could lie in a certain tendency of the complex models to overfit, even when the hyperparameters are optimized on a held-out validation set. A detailed analytical investigation of why each of the six complex models in our comparison does not perform consistently better than the simpler ones, however, lies beyond the scope of the present work and is left for future work.

6.3 User study limitations

Finally, the user study discussed in Sect. 5, like most studies of that type, has certain limitations as well. Typical issues that also apply to our study are questions related to the representativeness of the user population. Furthermore, while we developed a realistic and fully functional online radio station, the setting remains artificial, and users were paid for their participation. The attention checks and the statistics of how users interacted with the system, however, make us confident that the majority of the participants completed the task with care and that the results are reliable. Another potential limitation of our study design is that we used a single item for each of the investigated quality dimensions in the post-task questionnaire. Since we mainly used established questions from the literature, e.g., from Pu et al. (2011), we consider the associated risks to be low.

7 Conclusions and ways forward

Our work reveals that despite a continuous stream of papers proposing new neural approaches for session-based recommendation, the progress in the field still seems limited. According to our evaluations, today’s deep learning techniques in many cases do not outperform much simpler heuristic methods. Overall, this indicates that there is still huge potential for more effective neural recommendation methods in this area in the future.

In a related analysis of deep learning techniques for recommender systems (Ferrari Dacrema et al. 2019a, b), the authors found that different factors contribute to what they call phantom progress. A first problem is related to the reproducibility of the reported results: they found that the code was made available to other researchers for less than a third of the investigated papers. The problem also exists to some extent for session-based recommendation approaches. To further increase the level of reproducibility, we share our evaluation framework publicly, so that other researchers can easily benchmark their own methods against a comprehensive set of neural and non-neural approaches on different datasets.

By sharing our evaluation framework, we also hope to address other methodological and procedural issues mentioned in Ferrari Dacrema et al. (2019b) that can make the comparison of algorithms unreliable or inconclusive. Regarding methodological issues, we found, for example, works that determined the optimal number of training epochs on the test set and furthermore determined the best Hit Rate and MRR values across different optimization epochs. Regarding procedural issues, we found that while researchers seemingly rely on the same datasets as previous works, they sometimes apply different data pre-processing strategies. Furthermore, the choice of the baselines can make the results inconclusive. Most investigated works do not consider the sknn method and its variants as a baseline. Some works only compare variants of one method and include a non-neural, but not necessarily strong, additional baseline. In many cases, little is also said about the optimization of the hyperparameters of the baselines. The session-rec framework used in our evaluation should help to avoid these problems, as it contains all the code for data pre-processing, evaluation, and hyperparameter optimization. Such frameworks are generally important to ensure the replicability and reproducibility of research results (Çoba and Zanker 2017). Furthermore, sharing the framework allows other researchers to inspect exactly how the algorithms are implemented and evaluated. This is important because no de facto standards exist in the literature, which can lead to inconclusive and inconsistent results (Said and Bellogín 2014).
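One way to avoid tuning the number of epochs on the test set is a temporal three-way split, in which the test set consists of the last days of the log and a validation window directly precedes it. The sketch below assumes that sessions are given as (start timestamp in seconds, item list) pairs; the function names are hypothetical and do not reflect the session-rec API. The number of epochs is chosen on validation scores only, and the test set is evaluated once with the final configuration.

```python
from typing import List, Sequence, Tuple

SECONDS_PER_DAY = 86_400
Session = Tuple[float, List[int]]  # (start timestamp in seconds, items)


def split_by_days(
    sessions: Sequence[Session], test_days: int, validation_days: int
) -> Tuple[List[Session], List[Session], List[Session]]:
    """Split sessions into train / validation / test by session start time.

    Test: sessions starting within the last `test_days` days of the log.
    Validation: sessions starting within the `validation_days` days before that.
    Train: all earlier sessions. Both windows are half-open intervals (start, end].
    """
    if not sessions:
        return [], [], []
    end = max(ts for ts, _ in sessions)
    test_start = end - test_days * SECONDS_PER_DAY
    valid_start = test_start - validation_days * SECONDS_PER_DAY
    train = [s for s in sessions if s[0] <= valid_start]
    valid = [s for s in sessions if valid_start < s[0] <= test_start]
    test = [s for s in sessions if s[0] > test_start]
    return train, valid, test


def best_epoch(validation_scores: Sequence[float]) -> int:
    """Return the 1-based epoch with the highest validation score (first one on ties)."""
    if not validation_scores:
        raise ValueError("no validation scores given")
    return max(range(len(validation_scores)), key=validation_scores.__getitem__) + 1
```

Keeping the split purely temporal mirrors the practice of testing on the most recent days of each dataset, and selecting the epoch count on the validation window ensures that no information from the test days influences the final model.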

Moreover, from a methodological perspective, our analyses indicated that optimizing solely for accuracy can be insufficient for session-based recommendation scenarios as well. Depending on the application domain, other quality factors such as coverage, diversity, or novelty should be considered besides efficiency, because they can be crucial for the adoption and success of the recommendation service. Given the insights from our controlled experiment, we furthermore argue that more user studies and field tests are necessary to understand the characteristics of successful recommendations in a given application domain.

Looking at future directions, in particular methods that leverage side information about users and items seem to represent a promising way forward; see de Souza Pereira Moreira et al. (2018, 2019), Huang et al. (2018), Hidasi et al. (2016b). In Hidasi et al. (2016b), the authors for example use a parallel RNN architecture to incorporate image and text information in the session modeling process. In de Souza Pereira Moreira et al. (2018, 2019), both item information and user context information are combined in a neural architecture for news recommendation. Huang et al. (2018), finally, combine RNNs with Key-Value memory networks to build a hybrid system that integrates information about item attributes in the sequential recommendation process.

A main challenge when trying to analyze and compare such methods under identical conditions, as was the goal of our present work, is that these works rely on largely different and often specific datasets, e.g., containing image information, or are optimized for a specific problem setting, e.g., cold-start situations in the news domain. An important direction for future work therefore lies in analyzing to what extent the benefits of such hybrid architectures generalize beyond individual application domains.

Notes

Compared to our previous work presented in Ludewig and Jannach (2018) and Ludewig et al. (2019), our present analysis includes considerably more recent deep learning techniques and baseline approaches. We also provide the outcomes of additional measurements regarding the scalability and stability of different algorithms. Finally, we also contrast the outcomes of the offline experiments with the findings obtained in a user study (Ludewig and Jannach 2019).

iknn: Item-based kNN (Hidasi et al. 2016a), fpmc: Factorized Personalized Markov Chains (Rendle et al. 2010), mc: Markov Chains (Norris 1997), smf: Session-based Matrix Factorization (Ludewig and Jannach 2018), bpr-mf: Bayesian Personalized Ranking (Rendle et al. 2009), fism: Factored Item Similarity Models (Kabbur et al. 2013), fossil: FactOrized Sequential Prediction with Item SImilarity ModeLs (He and McAuley 2016).

The number of days used for testing (n) was determined based on the characteristics of the dataset. We, for example, used the last day for the RSC15 dataset, two for RETAIL, five for the music datasets, and seven for DIGI to ensure that train–test splits are comparable.

Note that the revealed items from a session can be used by an algorithm for the subsequent predictions, but the revealed interactions are not added to the training data.

We also optimized the hyperparameters on a subset of \(T_0\) that was used as a validation set. The hyperparameters were kept constant for the remaining measurements.

The training time for sr-gnn is 10,000 times higher than for vstan.

Generally, comparing the numbers across the datasets is not meaningful due to their different characteristics.

The experiments for nextitnet could not be completed on this dataset because the method’s resource requirements exceeded our computing capacities.

References

Adomavicius, G., Kwon, Y.O.: Improving aggregate recommendation diversity using ranking-based techniques. IEEE Trans. Knowl. Data Eng. 24(5), 896–911 (2012)

Armstrong, T.G., Moffat, A., Webber, W., Zobel, J.: Improvements that don’t add up: Ad-hoc retrieval results since 1998. In: Proceedings of the 18th ACM Conference on Information and Knowledge Management, CIKM ’09, pp. 601–610 (2009)

Bonnin, G., Jannach, D.: Automated generation of music playlists: survey and experiments. ACM Comput. Surv. 47(2), 26:1–26:35 (2014)

Carraro, D., Bridge, D.: Debiased offline evaluation of recommender systems: a weighted-sampling approach (extended abstract). In: Proceedings of the ACM RecSys 2019 Workshop on Reinforcement and Robust Estimators for Recommendation (REVEAL ’19) (2019)

Chau, P.Y.K., Ho, S.Y., Ho, K.K.W., Yao, Y.: Examining the effects of malfunctioning personalized services on online users’ distrust and behaviors. Decis. Support Syst. 56, 180–191 (2013)

Chen, S., Moore, J.L., Turnbull, D., Joachims, T.: Playlist prediction via metric embedding. In: Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’12, pp. 714–722 (2012)

Cho, K., van Merrienboer, B., Bahdanau, D., Bengio, Y.: On the properties of neural machine translation: encoder–decoder approaches. CoRR, abs/1409.1259 (2014)

Çoba, L., Zanker, M.: Replication and reproduction in recommender systems research—evidence from a case-study with the rrecsys library. In: 30th International Conference on Industrial Engineering and Other Applications of Applied Intelligent Systems, IEA/AIE ’17, pp. 305–314 (2017)

de Souza Pereira Moreira, G., Ferreira, F., da Cunha, A.M.: News session-based recommendations using deep neural networks. In: Proceedings of the 3rd Workshop on Deep Learning for Recommender Systems, DLRS ’18, pp. 15–23 (2018)

de Souza Pereira Moreira, G., Jannach, D., da Cunha, A.M.: Contextual hybrid session-based news recommendation with recurrent neural networks. IEEE Access 7 (2019)

Ferrari Dacrema, M., Boglio, S., Cremonesi, P., Jannach, D.: A troubling analysis of reproducibility and progress in recommender systems research, CoRR, abs/2004.00646 (2019a)

Ferrari Dacrema, M., Cremonesi, P., Jannach, D.: Are we really making much progress? A worrying analysis of recent neural recommendation approaches. In: Proceedings of the 13th ACM Conference on Recommender Systems, RecSys ’19, pp. 101–109 (2019b)

Garcin, F., Dimitrakakis, C., Faltings, B.: Personalized news recommendation with context trees. In: Proceedings of the 7th ACM Conference on Recommender Systems, RecSys ’13, pp. 105–112 (2013)

Garcin, F., Zhou, K., Faltings, B., Schickel, V.: Personalized news recommendation based on collaborative filtering. In: Proceedings of the 2012 IEEE/WIC/ACM International Joint Conferences on Web Intelligence and Intelligent Agent Technology, WI-IAT ’12, pp. 437–441 (2012)

Garg, D., Gupta, P., Malhotra, P., Vig, L., Shroff, G.: Sequence and time aware neighborhood for session-based recommendations: Stan. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’19, pp. 1069–1072 (2019)

Hariri, N., Mobasher, B., Burke, R.: Context-aware music recommendation based on latent topic sequential patterns. In: Proceedings of the Sixth ACM Conference on Recommender Systems, RecSys ’12, pp. 131–131 (2012)

Hariri, N., Mobasher, B., Burke, R.: Adapting to user preference changes in interactive recommendation. In: Proceedings of the 24th International Conference on Artificial Intelligence, IJCAI ’15, pp. 4268–4274. AAAI (2015)

He, R., McAuley, J.: Fusing similarity models with Markov chains for sparse sequential recommendation. CoRR, abs/1609.09152 (2016)

Hidasi, B., Karatzoglou, A.: Recurrent neural networks with top-k gains for session-based recommendations. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, CIKM ’18, pp. 843–852 (2018)

Hidasi, B., Karatzoglou, A., Baltrunas, L., Tikk, D.: Session-based recommendations with recurrent neural networks. In: Proceedings International Conference on Learning Representations, ICLR ’16 (2016)

Hidasi, B., Quadrana, M., Karatzoglou, A., Tikk, D.: Parallel recurrent neural network architectures for feature-rich session-based recommendations. In: Proceedings of the 10th ACM Conference on Recommender Systems, RecSys ’16, pp. 241–248 (2016)

Huang, J., Zhao, W.X., Dou, H., Wen, J.-R., Chang, E.Y.: Improving sequential recommendation with knowledge-enhanced memory networks. In: The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR ’18, pp. 505–514 (2018)

Jannach, D., Lerche, L., Jugovac, M.: Adaptation and evaluation of recommendations for short-term shopping goals. In: Proceedings of the 9th ACM Conference on Recommender Systems, RecSys ’15, pp. 211–218 (2015)

Jannach, D., Ludewig, M.: When recurrent neural networks meet the neighborhood for session-based recommendation. In: Proceedings of the 11th ACM Conference on Recommender Systems, RecSys ’17, pp. 306–310 (2017)

Jannach, D., Ludewig, M., Lerche, L.: Session-based item recommendation in e-commerce: on short-term intents, reminders, trends, and discounts. User-Model. User-Adapted Interact. 27(3–5), 351–392 (2017)

Jannach, D., Zanker, M.: Collaborative filtering: matrix completion and session-based recommendation tasks. In: Collaborative Recommendations: Algorithms, Practical Challenges and Applications, pp. 1–38 (2019)

Kabbur, S., Ning, X., Karypis, G.: FISM: factored item similarity models for top-n recommender systems. In: KDD ’13, pp. 659–667 (2013)

Lerche, L., Jannach, D., Ludewig, M.: On the value of reminders within e-commerce recommendations. In: UMAP ’16, pp. 27–35 (2016)

Li, J., Ren, P., Chen, Z., Ren, Z., Lian, T., Ma, J.: Neural attentive session-based recommendation. In: Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, CIKM ’17, pp. 1419–1428 (2017)

Li, L., Chu, W., Langford, J., Wang, X.: Unbiased offline evaluation of contextual-bandit-based news article recommendation algorithms. In: Proceedings of the Fourth ACM International Conference on Web Search and Data Mining, WSDM ’11, pp. 297–306 (2011)

Liu, Q., Zeng, Y., Mokhosi, R., Zhang, H.: STAMP: short-term attention/memory priority model for session-based recommendation. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’18, pp. 1831–1839 (2018)

Ludewig, M., Jannach, D.: Evaluation of session-based recommendation algorithms. User-Model. User-Adapted Interact. 28(4–5), 331–390 (2018)

Ludewig, M., Jannach, D.: User-centric evaluation of session-based recommendations for an automated radio station. In: Proceedings of the 13th ACM Conference on Recommender Systems, RecSys ’19, pp. 516–520 (2019)

Ludewig, M., Kamehkhosh, I., Landia, N., Jannach, D.: Effective nearest-neighbor music recommendations. In: Proceedings of the ACM Recommender Systems Challenge 2018, RecSys Challenge ’18, pp. 3:1–3:6 (2018)

Ludewig, M., Mauro, N., Latifi, S., Jannach, D.: Performance comparison of neural and non-neural approaches to session-based recommendation. In: Proceedings of the 13th ACM Conference on Recommender Systems, RecSys ’19, pp. 462–466 (2019)

Makridakis, S., Spiliotis, E., Assimakopoulos, V.: Statistical and machine learning forecasting methods: concerns and ways forward. PLoS ONE 13(3) (2018)

Mi, F., Faltings, B.: Context tree for adaptive session-based recommendation. CoRR, abs/1806.03733 (2018)

Mobasher, B., Dai, H., Luo, T., Nakagawa, M.: Using sequential and non-sequential patterns in predictive web usage mining tasks. In: Proceedings of IEEE International Conference on Data Mining, ICDM ’02, pp. 669–672 (2002)

Norris, J.R.: Markov Chains. Cambridge University Press, Cambridge (1997)

Pu, P., Chen, L., Hu, R.: A user-centric evaluation framework for recommender systems. In: Proceedings of the 5th ACM Conference on Recommender Systems, RecSys ’11, pp. 157–164 (2011)

Quadrana, M., Cremonesi, P., Jannach, D.: Sequence-aware recommender systems. ACM Comput. Surv. 51(4), 66:1–66:36 (2018)

Ragno, R., Burges, C.J.C., Herley, C.: Inferring similarity between music objects with application to playlist generation. In: Proceedings of the 7th ACM SIGMM International Workshop on Multimedia Information Retrieval, MIR ’05, pp. 73–80 (2005)

Ren, P., Chen, Z., Li, J., Ren, Z., Ma, J., de Rijke, M.: RepeatNet: a repeat aware neural recommendation machine for session-based recommendation. In: The Thirty-Third AAAI Conference on Artificial Intelligence, AAAI ’19, pp. 4806–4813 (2019)

Rendle, S., Freudenthaler, C., Gantner, Z., Schmidt-Thieme, L.: BPR: Bayesian personalized ranking from implicit feedback. In: UAI ’09, pp. 452–461 (2009)

Rendle, S., Freudenthaler, C., Schmidt-Thieme, L.: Factorizing personalized Markov chains for next-basket recommendation. In: WWW ’10, pp. 811–820 (2010)

Resnick, P., Iacovou, N., Suchak, M., Bergstrom, P., Riedl, J.: GroupLens: an open architecture for collaborative filtering of netnews. In: Proceedings of the 1994 ACM Conference on Computer Supported Cooperative Work, CSCW ’94, pp. 175–186 (1994)

Romov, P., Sokolov, E.: RecSys Challenge 2015: ensemble learning with categorical features. In: Proceedings of the 2015 International ACM Recommender Systems Challenge, RecSys ’15 Challenge, pp. 1:1–1:4 (2015)

Said, A., Bellogín, A.: Comparative recommender system evaluation: benchmarking recommendation frameworks. In: Proceedings of the 8th ACM Conference on Recommender Systems, RecSys ’14, pp. 129–136 (2014)

Shani, G., Gunawardana, A.: Evaluating recommendation systems. In: Recommender Systems Handbook, pp. 257–297 (2011)

Shani, G., Heckerman, D., Brafman, R.I.: An MDP-based recommender system. J. Mach. Learn. Res. 6, 1265–1295 (2005)

Steck, H.: Training and testing of recommender systems on data missing not at random. In: Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’10, pp. 713–722 (2010)

Tan, Y.K., Xu, X., Liu, Y.: Improved recurrent neural networks for session-based recommendations. In: Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, DLRS ’16, pp. 17–22 (2016)

Tavakol, M., Brefeld, U.: Factored MDPs for detecting topics of user sessions. In: Proceedings of the 8th ACM Conference on Recommender Systems, RecSys ’14, pp. 33–40 (2014)

Wang, M., Ren, P., Mei, L., Chen, Z., Ma, J., de Rijke, M.: A collaborative session-based recommendation approach with parallel memory modules. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’19, pp. 345–354 (2019)

Wang, S., Cao, L., Wang, Y.: A survey on session-based recommender systems. CoRR, abs/1902.04864 (2019)

Wu, S., Tang, Y., Zhu, Y., Wang, L., Xie, X., Tan, T.: Session-based recommendation with graph neural networks. In: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI ’19, pp. 346–353 (2019)

Yang, W., Lu, K., Yang, P., Lin, J.: Critically examining the neural hype: weak baselines and the additivity of effectiveness gains from neural ranking models. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’19, pp. 1129–1132 (2019)

Yuan, F., Karatzoglou, A., Arapakis, I., Jose, J.M., He, X.: A simple convolutional generative network for next item recommendation. In: Proceedings of the 12th ACM International Conference on Web Search and Data Mining, WSDM ’19, pp. 582–590 (2019)

Acknowledgements

We thank Liliana Ardissono for her valuable feedback on the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

This work combines and significantly extends our own previous work published in Ludewig and Jannach (2019) and Ludewig et al. (2019). This paper or a similar version is not currently under review by a journal or conference. This paper is void of plagiarism or self-plagiarism as defined by the Committee on Publication Ethics and Springer Guidelines. A preprint version of this work is available at https://arxiv.org/abs/1910.12781.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ludewig, M., Mauro, N., Latifi, S. et al. Empirical analysis of session-based recommendation algorithms. User Model User-Adap Inter 31, 149–181 (2021). https://doi.org/10.1007/s11257-020-09277-1

DOI: https://doi.org/10.1007/s11257-020-09277-1