Abstract

Spatial and temporal patterns of snow cover extent (SCE) and snow water equivalent (SWE) over the terrestrial Arctic are analyzed based on multiple observational datasets and an ensemble of models from phase 5 of the Coupled Model Intercomparison Project (CMIP5) during 1979–2005. For evaluation of the historical simulations of the CMIP5 ensemble, we used two reanalysis products, one satellite-observed product and an ensemble of different datasets. The CMIP5 models tend to significantly underestimate the observed SCE in spring but are in better agreement with observations in autumn; overall, the observed annual SCE cycle is well captured by the CMIP5 ensemble. In contrast, for SWE, the annual cycle is significantly biased, especially over North America, where some models retain snow even in summer, in disagreement with observations. The snow margin position (SMP) in the CMIP5 historical simulations is in better agreement with observations in spring than in autumn, when close agreement across the CMIP5 models is only found in central Siberia. Historical experiments from most CMIP5 models show negative pan-Arctic trends in SCE and SWE. These trends are, however, considerably weaker (and less statistically significant) than those reported from observations. Most CMIP5 models can more accurately capture the trend pattern of SCE than that of SWE, which shows quantitative and qualitative differences with the observed trends over Eurasia. Our results demonstrate the importance of using multiple data sources for the evaluation of snow characteristics in climate models. Further developments should focus on the improvement of both dataset quality and snow representation in climate models, especially ESM-SnowMIP.

1 Introduction

Snow is a critical component of the Arctic climate system. Over northern Eurasia and North America, the duration of snow cover ranges from 7 to 10 months per year (Brown et al. 2017), with the maximum snow extent covering over 40% of the Northern Hemisphere land area (approximately \( 47 \times 10^{6} \;{\text{km}}^{2} \)) each year (Robinson and Frei 2000; Lemke et al. 2007). Snow affects a variety of high-latitude climate processes and feedbacks (Cohen and Rind 1991; Groisman et al. 1994). The high reflectivity (albedo being 0.8–0.9 for dry snow) and low thermal conductivity of snow have a cooling effect and modulate snow-albedo feedback (Qu and Hall 2007; Fletcher et al. 2015; Wegmann et al. 2018). The contribution of terrestrial snow to the Earth’s radiation budget at the top of the atmosphere through snow-albedo feedback is closely comparable to that of sea ice (Flanner et al. 2011; Singh et al. 2015). Snow also insulates the underlying soil, preventing large energy losses and, notably, limiting ice growth and the development of seasonal permafrost (Lawrence and Slater 2009; Gouttevin et al. 2012; Koven et al. 2013; Slater et al. 2017). As a natural water reservoir, snow plays a critical role in the high-latitude hydrological cycle, including evaporation and runoff (Groisman et al. 2017). The runoff generated by snow in the Arctic drainage basin contributes up to 75% of the total annual flow in the Northwest Territories in Canada (Woo and Marsh 1978). Mankin et al. (2015) found that 68 Northern Hemisphere river basins supplying water to approximately 2 billion people are expected to be impacted by a 67% decrease in the snow supply during this century.

Snow is also one of the most variable components of the climate system (Gutzler and Rosen 1992; Henderson et al. 2018). With the Arctic warming twice as fast as the global rate (e.g., Serreze and Barry 2011), the Arctic snow cover duration is declining by 2–4 days per decade (Wang et al. 2013; Brown et al. 2017), with snow melting starting earlier in spring (Cayan et al. 2001; Stewart 2009). However, the snow response to increasing temperature and precipitation is quite complex and depends regionally on the climate regime and elevation (Brown and Mote 2009; Hernández-Henríquez et al. 2015).

The pattern of snow cover duration trends is not homogeneous in all Arctic sectors (Choi et al. 2010; Peng et al. 2013; Brown et al. 2017). Barichivich et al. (2013) stated that the Eurasian Arctic experienced larger reductions in the snow-covered season (12.6 days) than the North American Arctic region (6.2 days) during 1982–2011. Integrated over the Arctic, the snow cover extent (SCE) has considerably decreased in recent decades (e.g., Rupp et al. 2013; Kunkel et al. 2016; Hori et al. 2017), and there is also evidence from multiple data sources that the maximum snow accumulation is decreasing, with the strongest decline identified in spring (Brown 2000). However, trend magnitudes vary spatially in both the Eurasian and Canadian sectors (Bulygina et al. 2011; Atkinson et al. 2006). With increasing temperatures and atmospheric moisture in the midlatitudes, snowfall is projected to decrease partly due to the transition of solid-state precipitation to liquid-state precipitation (Räisänen 2008). The pan-Arctic tendencies in SCE and the duration of the snow period in the Arctic are projected to continue in the 21st century (Collins et al. 2013). However, the manifestation of these large-scale tendencies on the regional scale with different trends in various regions suggests that natural climate variability also contributes to the changes in snow characteristics (Mudryk et al. 2014).

State-of-the-art terrestrial snow-cover models differ in complexity, ranging from those explicitly accounting for detailed snow stratigraphy (e.g., CROCUS, Brun et al. 1997; Vionnet et al. 2012) to models of intermediate complexity with 2–3 layers and to highly simplified configurations with zero-layer (combined with soil) or single-layer snow models (e.g., Slater et al. 2001). Earth system models (ESMs) usually employ zero- and single-layer configurations, which are computationally efficient but involve many limitations (Bokhorst et al. 2016; Krinner et al. 2018). Generally, snow water equivalent (SWE) is a prognostic variable (ES-DOC, https://es-doc.org) resulting from the balance between the snowfall rate, evaporation and snowmelt rate (Manabe 1969; Thackeray et al. 2016). However, snow cover fraction or extent is diagnosed from SWE through different parameterizations (Wu and Wu 2004) and is also mediated by various conservation properties (e.g., water discharge-storage), coupled with the atmosphere and land cover types, which depend essentially on characteristics of the land model integrated in the global climate model (GCM). For example, to diagnose SCE, the ISBA surface model (“Interaction Sol-Biosphère-Atmosphère”) component of CNRM-CM5, uses an asymptotic function of SWE (Douville et al. 1995), whereas the CLM 4 (“Community Land Model”) land model from CCSM4 uses a hyperbolic tangent approximation (Xu and Dirmeyer 2013).

Several studies have evaluated snow characteristics (SWE, SCE and snowfall) in climate models from phase 5 of the Coupled Model Intercomparison Project (CMIP5) (Brutel-Vuilmet et al. 2013; Kapnick and Delworth 2013; Terzago et al. 2014; Connolly et al. 2019). The main finding is the underestimation of the observed decreasing trend in spring SCE in the Northern Hemisphere over 1979–2015 (Derksen and Brown 2012) by CMIP5 models, which is typically explained by the underestimation of the boreal temperature in models (Brutel-Vuilmet et al. 2013). Likewise, the spread in snow-albedo feedback (SAF) was not reduced in CMIP5 with respect to CMIP3, likely due to a broad spread in the approaches used to analyze vegetation in models (Qu and Hall 2013). The association of the structural differences in the snowpack in models with a spread in vegetation and albedo parametrizations was recently confirmed by Thackeray et al. (2018). Notably, models displaying the largest bias in SAF also show clear structural and parametric biases in the representation of snow characteristics. In most works, snow regimes in autumn have received less attention than those in spring.

In this respect, the representation of snow-associated feedbacks in climate models, especially during the shoulder seasons (when Arctic snow cover exhibits the strongest variability), is of special importance. During the offset season, downwelling shortwave and longwave radiation enable snow melt, and during the onset season, temperatures are sufficiently cold to favor solid precipitation and snow accumulation (Sicart et al. 2006). In spring, the SAF is stronger as snow cover starts to age and recede due to increasing temperature and insolation (Qu and Hall 2013; Thackeray et al. 2016). While variations in snow characteristics are smaller in autumn than in spring, they also experience changes that are linked to atmospheric circulation (Henderson et al. 2018). Observational (Cohen et al. 2007) and modeling (Peings et al. 2012) studies established a link between SCE anomalies over Eurasia and the winter phase of the Arctic Oscillation (AO) and the North Atlantic Oscillation (NAO). However, Gastineau et al. (2017), using an ensemble of CMIP5 models, demonstrated that this relationship is simulated by only four models and is largely underestimated by the majority of the CMIP5 ensemble. Douville et al. (2017) questioned the robustness of the snow-NAO relationship and argued for the importance of eastward phases of the Quasi-Biennial Oscillation (QBO) in modulating snow cover variability. There are also reports about other snow-associated large-scale teleconnections. For instance, positive (negative) SCE winter-to-spring anomalies in Eurasia may be followed by negative (positive) anomalies in rainfall during the Indian summer monsoon (Prabhu et al. 2017; Senan et al. 2016). We note, however, that this snow-related teleconnection is quite controversial (Peings and Douville 2010; Zhang et al. 2019).

In summary, there is a need for a comprehensive evaluation of snow characteristics in climate models using available observations over the last decades to demonstrate which Arctic snow features are most robust across different models and which are not well represented. Here, we focus on the representation of Arctic terrestrial snow (SCE and SWE) during the onset (October–November) and melting (March–April) seasons in the CMIP5 model ensemble. The paper is organized as follows. Section 2 briefly describes the snow data sources (CMIP5 models, reanalyses and observations) and the methodology of comparison. An evaluation of the long-term climatology of snow characteristics and annual cycles is presented in Sect. 3. The characteristics of climate variability in SCE and SWE are evaluated in Sect. 4. Section 5 summarizes the results and suggests some possible lines of future research.

2 Data and methods

2.1 Models and observational data sets

We use historical simulations of 16 CMIP5 models (Table 1) and focus on the representation of SCE and SWE in these models. For each model, we analyzed the model ensemble mean derived from all available ensemble realizations for a given model. For some assessments, we considered the multimodel mean derived as the average across all ensemble means for selected CMIP5 models. For validation of SCE and SWE in the CMIP5 models, we used two reanalyses (NOAA 20th Century Reanalysis and NCEP/CFSR), a satellite dataset from Rutgers University and a dataset combined from satellite observations, reanalyses and a snow product (CanSISE). Details of these datasets are given in Table 2.
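The two-step averaging described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names and the toy SCE values are hypothetical. It shows that averaging the per-model ensemble means gives every model equal weight, regardless of how many realizations it provides.

```python
import numpy as np

def model_ensemble_mean(members):
    """Average all realizations of one model; members has shape (n_members, ...)."""
    return np.mean(np.asarray(members, dtype=float), axis=0)

def multimodel_mean(ensemble_means):
    """Average the per-model ensemble means, so each model counts once."""
    return np.mean(np.stack(ensemble_means), axis=0)

# Hypothetical toy data: SCE (%) at two grid cells
model_a = [[80.0, 40.0], [90.0, 60.0], [100.0, 50.0]]  # three realizations
model_b = [[60.0, 20.0]]                               # one realization

means = [model_ensemble_mean(model_a), model_ensemble_mean(model_b)]
mmm = multimodel_mean(means)

# Pooling all four realizations instead would favor model_a
pooled = np.mean(np.asarray(model_a + model_b), axis=0)
```

With these toy values the multimodel mean differs from the pooled mean, which is why the order of averaging matters when models contribute different numbers of realizations.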

NOAA-CIRES 20th Century Reanalysis version 2 (‘NOAAV2c’) (Compo et al. 2011) covers the period from 1871 to 2012, with an output temporal and spatial resolution of 3 h and \({2.0^{\circ } \times 1.75^{\circ }}\), respectively. The National Centers for Environmental Prediction Climate Forecast System Reanalysis (‘NCEP/CFSR’) (Saha et al. 2010) covers the period from 1979 to 2010 and has a 6-hourly temporal resolution and \({0.5^{\circ }\times 0.5^{\circ }}\) spatial resolution. From these two products, we used SCE only. The Northern Hemisphere weekly SCE Climate Data Record from Rutgers University (‘NOAA CDR’) is freely available from the NOAA (National Oceanic and Atmospheric Administration) National Centers for Environmental Information (NCEI) and represents the longest satellite-based record of snow cover, covering the period from 1966 to the present on an \({89 \times 89}\) Cartesian grid with a resolution of 190.6 km at \({60^{\circ }}\)N latitude. The data are binary, with ‘1’ indicating grid cells with at least 50% snow cover and ‘0’ indicating cells considered to be snow free (Robinson and Frei 2000). CanSISE Observation-Based Ensemble Version 2 of the Northern Hemisphere Terrestrial Snow Water Equivalent (Mudryk and Derksen 2017) (‘CanSISE’) is a terrestrial SWE dataset based on passive microwave observations and ground-based weather stations, two reanalyses (ERA-Interim/Land, Balsamo et al. (2015) and MERRA, Rienecker et al. (2011)), the Crocus snowpack model (Brun et al. 2013) and the GLDAS product (Global Land Data Assimilation System Version 2) (Rodell et al. 2004; Rodell and Beaudoing 2013). This dataset has a daily temporal resolution and \({1.0^{\circ }}\) spatial resolution and covers the period from 1981 to 2010.

2.2 Preprocessing and methods

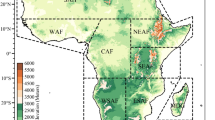

We analyzed the period from 1979 to 2005 for SCE and from 1981 to 2005 for SWE. For intercomparison, all models, reanalyses and observational datasets (except for NOAA CDR) have been regridded onto a \({1.0^{\circ } \times 1.0^{\circ }}\) lat-lon grid using bilinear interpolation. NOAA CDR was used on its original grid. We defined the terrestrial Arctic as the region north of \({50^{\circ }{\text{N}}}\). For large-scale averages applied in some analyses, we separately considered the North American sector and the Eurasian sector, which were separated by \({180^{\circ }{\text{E}}}\) (Fig. 1). Being focused on terrestrial snow, we analyzed the grid cells with a land fraction of at least 50% and with a permanent ice fraction of less than 15%. We applied to all analyzed products the land-ice mask of the GISS-E2-R model, which is the most restrictive mask, especially over coastal areas of North America. This ensures effective elimination of ocean regions and ice cells among all models.
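The masking criteria above (north of 50°N, land fraction of at least 50%, permanent ice fraction below 15%) can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and toy grid are hypothetical, the 50°N boundary is treated as inclusive, and the cosine-of-latitude weighting used for the regional mean is a common choice on regular lat-lon grids rather than something the text specifies.

```python
import numpy as np

def terrestrial_arctic_mask(lat, land_frac, ice_frac,
                            lat_min=50.0, land_min=0.5, ice_max=0.15):
    """Boolean (lat, lon) mask: cells at or north of lat_min with
    land fraction >= land_min and permanent ice fraction < ice_max."""
    lat2d = np.asarray(lat, dtype=float)[:, None]
    land = np.asarray(land_frac, dtype=float)
    ice = np.asarray(ice_frac, dtype=float)
    return (lat2d >= lat_min) & (land >= land_min) & (ice < ice_max)

def area_weighted_mean(field, mask, lat):
    """cos(latitude)-weighted mean over masked cells (assumed weighting)."""
    field = np.asarray(field, dtype=float)
    weights = np.cos(np.deg2rad(np.asarray(lat, dtype=float)))[:, None]
    weights = np.where(mask, weights, 0.0)  # broadcasts to (lat, lon)
    return float(np.sum(field * weights) / np.sum(weights))

# Hypothetical 3 x 2 grid
lat = [45.0, 55.0, 65.0]
land_frac = [[1.0, 1.0], [0.6, 0.4], [1.0, 1.0]]
ice_frac = [[0.0, 0.0], [0.0, 0.0], [0.2, 0.1]]
sce = [[10.0, 10.0], [20.0, 30.0], [40.0, 50.0]]

mask = terrestrial_arctic_mask(lat, land_frac, ice_frac)
mean_sce = area_weighted_mean(sce, mask, lat)
```

Applying one common mask to every product, as the text does with the GISS-E2-R mask, amounts to computing `mask` once and reusing it for all fields.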

To produce monthly time series of snow characteristics, all reanalyses and CanSISE products were averaged over calendar months. In the NOAA CDR dataset, each weekly binary chart is attributed to the fifth day of the chart week, which was found to be the most representative day of the week regarding snow chart boundaries (Robinson et al. 1993). Monthly means are then obtained by averaging all weekly charts attributed to a particular month. For instance, the chart week from 30 October to 5 November, whose fifth day is 3 November, is attributed to November. This procedure introduces some uncertainty, which is, however, small, especially given that for many estimates, we used 2-monthly averaging periods.
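The attribution of weekly charts to months can be sketched as follows. This is a minimal illustration, assuming each chart is identified by the first day of its chart week; the function names and toy charts are hypothetical and do not come from the NOAA CDR tooling.

```python
from collections import defaultdict
from datetime import date, timedelta

import numpy as np

def chart_month(week_start):
    """(year, month) of the fifth day of the chart week starting on week_start."""
    fifth_day = week_start + timedelta(days=4)
    return fifth_day.year, fifth_day.month

def monthly_means(week_starts, charts):
    """Average binary weekly charts grouped by the month of their fifth day.
    Returns {(year, month): mean field}, i.e. the fraction of charts with snow."""
    groups = defaultdict(list)
    for start, chart in zip(week_starts, charts):
        groups[chart_month(start)].append(np.asarray(chart, dtype=float))
    return {key: np.mean(fields, axis=0) for key, fields in groups.items()}

# Hypothetical example: three weekly charts over two grid cells
starts = [date(2004, 1, 20), date(2004, 1, 27), date(2004, 2, 3)]
charts = [[1, 0], [1, 1], [0, 0]]
sce_monthly = monthly_means(starts, charts)
```

A week that straddles two months goes entirely to the month containing its fifth day, so a week starting on 28 January 2004 would be counted in February.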

We compute the snow margin position (SMP), which is defined as the 50% SCE contour. For both reanalyses, NOAAV2c and NCEP/CFSR, the SMP is computed directly by considering the contour of 50% SCE of the March–April and October–November climatologies. The NOAA CDR data are not included in this analysis due to the incompatibility between grid projections, as noted above. To achieve comparability between CMIP5 model data and reanalyses, we assigned binary values in each model, with ‘1’ assigned to all grid cells with SCE \({\ge }\) 50% and ‘0’ assigned to the remaining grid cells. To construct the binary field for models providing ensemble members, we first applied the conversion of SCE to the binary form for each ensemble member and then set the grid cell to ‘1’ if more than half of the ensemble members for a particular model report ‘1’; otherwise, the grid cell in the ensemble averaged model time series was set to ‘0’. By doing so, for each season, we obtain 16 binary fields, one for each CMIP5 model. To further evaluate the SMP among CMIP5 models, we summed the number of grid cells with a value of ‘1’ in each season over the model ensemble. For example, a value of ‘4’ in a grid cell implies that 4 out of 16 models display SCE \({\ge }\) 50%. If this grid cell is within the contour of the snow margin in the reanalyses, the SMP in these 4 models is considered to be in agreement with the reanalyses.

Taylor diagrams (Taylor 2001), which provide an informative graphical summary of the extent to which model patterns match observations in terms of correlation, centered root-mean-square (RMS) difference and the amplitude of variability quantified by standard deviations (STD), were built using standard Python routines. RMS errors applied to the analysis of model consistency with data were computed using a standard procedure by taking the square root of the mean of squared residuals.
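The snow-margin binarization and model count described above can be sketched as follows. This is a minimal illustration: the function names and toy values are hypothetical, and a tie between ensemble members is resolved to ‘0’, following the "more than half" wording.

```python
import numpy as np

def binarize(sce, threshold=50.0):
    """1 where SCE (%) is at or above the threshold, else 0."""
    return (np.asarray(sce, dtype=float) >= threshold).astype(int)

def model_binary_field(member_sce, threshold=50.0):
    """Majority vote over ensemble members; member_sce has shape (n_members, ...).
    A cell is 1 only if more than half of the members are at or above the threshold."""
    votes = binarize(member_sce, threshold)
    return (2 * votes.sum(axis=0) > votes.shape[0]).astype(int)

def model_count(per_model_members, threshold=50.0):
    """Number of models whose binary field is 1 in each grid cell."""
    fields = [model_binary_field(m, threshold) for m in per_model_members]
    return np.sum(np.stack(fields), axis=0)

# Hypothetical seasonal-mean SCE (%) at three grid cells
model_1 = [[60, 40, 55], [70, 45, 30], [20, 80, 10]]  # three members
model_2 = [[50, 10, 90]]                              # one member
model_3 = [[55, 60, 0], [45, 70, 0]]                  # two members (tie in cell 0)

count = model_count([model_1, model_2, model_3])
```

In the paper's setting the count ranges from 0 to 16, and it is the quantity shown by the color bar of the snow margin figure.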

3 Evaluation of long-term climatology of snow characteristics

3.1 Snow cover extent

The climatology of SCE in the NCEP/CFSR reanalysis (Fig. 2) is used here as a reference for evaluation of SCE in CMIP5 models. The October–November climatology in NCEP/CFSR (Fig. 2a) shows the maximum SCE (80–100%) over northern Alaska, the Canadian Arctic Archipelago and northeastern Eurasia. Over North America, high values of SCE are observed in the Brooks mountain range, in the Mackenzie Mountains and over the Canadian Arctic Archipelago. Over Eurasia, the maximum SCE is observed over northeastern Siberia with maximum values of 90–100% over the Verkhoyansk, Tcherski and Koryak mountain ranges.

In March–April (Fig. 2b), complete snow cover (100%) is observed over northeastern Eurasia and northern Canada and Alaska. Note that the areas covered by more than 80% snow match the areas of continuous permafrost well (Brown et al. 1997). In the southern part of Western Eurasia, snow has almost melted, with the SCE being approximately 15%, whereas in Scandinavia, SCE values of 80–100% are observed north of \({60^{\circ }{\text{N}}}\). Over North America, the decrease in SCE from north to south is more pronounced than over Eurasia in both seasons.

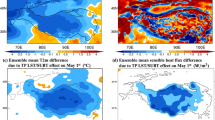

Fig. 4 Same as in Fig. 3 but for March–April

We now turn to the evaluation of differences in climatology between the ensemble of CMIP5 models and the NCEP/CFSR reanalysis (Figs. 3, 4). In October–November, 7 of the 16 models (bcc-csm1-1, CSIRO-Mk3-6-0 and the MIROC and MPI versions; Fig. 3a, e, i, j, k, l, m) show a general underestimation of SCE of up to 60% over the whole terrestrial Arctic. In contrast, the models CCSM4, inmcm4, MRI-CGCM3 and NorESM1-M/ME (Fig. 3c, h, n, o, p) demonstrate general overestimation of SCE over North America and eastern Eurasia by 50–60%. GISS-E2-H/R (Fig. 3f, g) exhibits overestimation in western Eurasia and eastern North America by 30% and underestimation in the coastal regions of the East Siberian Sea and Bering Sea by 25%. The smallest differences are observed for CanESM2 and CNRM-CM5 (Fig. 3b, d), and they show good agreement with NCEP/CFSR, especially in central Siberia.

Analysis of differences in March–April SCE between selected CMIP5 models and the NCEP/CFSR reanalysis identifies three groups of models. Compared to NCEP/CFSR, the first group (bcc-csm1-1, CSIRO-Mk3-6-0 and both versions of MPI-ESM-LR/P, Fig. 4a, e, l, m) generally underestimates snow cover by almost 50%, with the largest differences observed in the southern part of western Eurasia. The second group (CanESM2, MIROC-ESM/-CHEM and MRI-CGCM3; Fig. 4b, i, j, n) broadly overestimates SCE, with the largest differences observed in the Rocky Mountains (almost 60% by CanESM2). These four models show a zonal underestimation of 35% along the southern edge of western Eurasia (Fig. 4b, i, j), although the underestimation of MRI-CGCM3 is smaller at 15% (Fig. 4n). The third group (CNRM-CM5, GISS-E2-H/R and MIROC5; Fig. 4d, f, g, k) exhibits a dipole structure of differences, with underestimation of SCE in the north and overestimation in the south, both being within 30%. The model inmcm4 (Fig. 4h) shows overestimation by 15–40% in the southern part of eastern Eurasia and local underestimation by less than 30% in western Canada. Similar patterns are shown by CCSM4 (Fig. 4c) and NorESM1-M/-ME (Fig. 4o, p), with underestimation by up to 30% in western Eurasia and eastern Canada and overestimation by 15% in southeastern Eurasia and western Canada. The smallest differences are observed in both versions of NorESM1-M/ME (Fig. 4o, p) and range within \({\pm }\) 35% in southern Eurasia and in the Rocky Mountains.

The differences between the CMIP5 models and the NOAAV2c reanalysis (not shown) are very similar to those found for NCEP/CFSR in terms of spatial patterns. However, NOAAV2c tends to slightly overestimate the SCE climatology of NCEP/CFSR (Fig. S1). Therefore, when models have shown a positive (negative) bias compared to NCEP/CFSR, these differences are smaller (larger) in magnitude and less (more) significant when using NOAAV2c as a reference.

3.2 Snow water equivalent

The climatology of Arctic SWE in the melting (March–April) and onset (October–November) seasons over 1981–2005 derived from the CanSISE ensemble product is shown in Fig. 5. In October–November (Fig. 5a), maximum values of up to 100 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) are observed east of the Yenisei River. All over the Arctic, the SWE is approximately 20 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\), with higher values of up to 60 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) over the Verkhoyansk, Tcherski and Koryak mountain ranges. In March–April (Fig. 5b), SWE values are higher than in October–November. The areas with high SWE include the Kamchatka Peninsula and the region east of the Yenisei River, where the SWE reaches 300 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\). High values of up to 210 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) are observed over the Ural and Kolyma Mountains and eastern Canada. The remaining part of the terrestrial Arctic is characterized by SWE values of approximately 50 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\).

White areas in Fig. 5 correspond to the land-ice mask of the CanSISE ensemble product visible over the western coast of Canada and Alaska and over Scandinavia. This mask is originally from the MERRA reanalysis of Rienecker et al. (2011), which considers a grid cell masked when the land-ice fraction is \({>}\) 0 (L. Mudryk, personal communication). As mentioned in Sect. 2, this mask was not used for the analysis; instead, the land-ice mask from GISS-E2-R was used.

We now turn to evaluate the difference in SWE climatology between the CMIP5 ensemble and the CanSISE product (Figs. 6, 7). The October–November differences between CanSISE and modeled SWE (Fig. 6) are smaller than the March–April differences (by almost a factor of 3; Fig. 7), with the strongest overestimation found for NorESM1-M and NorESM1-ME (Fig. 6o, p) and reaching 80 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) over far eastern Eurasia. In March–April, CSIRO-Mk3-6-0 and MPI-ESM-LR/P show a broad underestimation of SWE, which is especially strong in Eurasia. In March–April, there is an overall overestimation of SWE across nearly all CMIP5 models compared to the CanSISE ensemble product, with differences ranging from 40 to 120 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) over most of northern Eurasia and North America. Local underestimation, with differences as low as \(-120\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\), is observed in the majority of models in the delta of the Yenisei River and is especially pronounced in GISS-E2-H/R, MIROC-ESM/-CHEM and MIROC5 (Fig. 7f, g, i, j, k). This pattern likely results from the lack of capability of large-scale GCMs to capture this local effect. The pattern of underestimation of SWE over western Eurasia is evident in the CCSM4, CSIRO-Mk3-6-0, MPI-ESM-LR/P and NorESM1-M models. Among these, CSIRO-Mk3-6-0 demonstrates a pan-Arctic pattern of underestimation with differences of approximately 26 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) (Table 4). The relative differences are larger in March–April than in October–November, which suggests that the discrepancies in the SWE representation are more related to the melting process, i.e., SWE changes in March–April are more sensitive to temperature changes.

3.3 Annual cycle

We turn now to the comparative analysis of the annual cycle of snow characteristics in CMIP5 models, reanalyses and observations for different Arctic regions. Regional climatological monthly means were obtained for the period 1979–2005 for SCE and for 1981–2005 for SWE. Note that we applied a model-specific land-ice mask to each CMIP5 model and the GISS-E2-R mask to the reanalyses (NCEP/CFSR, NOAAV2c) and CanSISE product. For the NOAA CDR dataset (which has a binary land mask but does not provide an ice mask), we applied the July SCE climatology (1979–2005) to generate a virtual ice fraction mask. Thus, we masked the grid cells with more than 85% SCE, which is equivalent to a perennial SCE (ice fraction) of less than 15% as we had done with CMIP5.

Fig. 8 Annual cycle of snow cover extent (top row; 1979–2005) and of snow water equivalent (bottom row; 1981–2005) for the Arctic (left column; a, d), Eurasia (center column; b, e) and North America (right column; c, f) of the ensemble mean of the CMIP5 selection compared to the reanalyses (NCEP/CFSR and NOAAV2c) and NOAA CDR for SCE and to the CanSISE product for SWE

For the whole Arctic (Fig. 8a), the annual cycle of SCE in both NCEP/CFSR and NOAAV2c is in good agreement with the NOAA CDR, with the largest differences not exceeding 10%. Between both reanalyses, the highest differences are only approximately 10% in May, and they are observed in Eurasia. Compared to the NOAA CDR, the multimodel mean generally reproduces the annual cycle, with the largest discrepancies of up to 25% in October and November.

The majority of the CMIP5 models tend to underestimate SCE throughout the year, most notably in autumn and winter (October–February). This underestimation is most distinct in North America (Fig. 8c), while some models (CCSM4, MRI-CGCM3 and NorESM1-M/ME) match the NOAA CDR quite closely over Eurasia (Fig. 8b), with differences within 10%. The largest discrepancies with respect to observational data (close to 40%) are identified in the MIROC versions during the months of October and November and over both subdomains (Fig. 8b, c). When melting starts, discrepancies across the models are also evident. Considering the March-June period, MPI-ESM-LR/P, CSIRO-Mk3-6-0, bcc-csm1-1 and inmcm4 tend to underestimate SCE by up to 30% in May, mainly in Eurasia (Fig. 8b), and report complete snow melt in June. In contrast, the other models maintain some snow cover until July. This underestimation is mostly visible over North America, with values of up to 40% in MPI-ESM-LR (Fig. 8c). Other models (CanESM2, CNRM-CM5 and MRI-CGCM3) tend to slightly overestimate SCE by up to 10% in May primarily due to the Eurasia pattern (Fig. 8b). During the May–August period, compared to NOAA CDR, both versions of NorESM1-M/ME show an overestimation of SCE by up to 15%, implying that these two models are not capable of completely melting snow in summer, especially over Eurasia (Fig. 8b).

Compared to SCE, the annual cycle of SWE (Fig. 8d–f) is characterized by a relatively stronger spread among the models and larger deviations from observations. There is a definite overestimation of SWE in the CMIP5 models with respect to the CanSISE product. The multimodel mean shows a positive bias throughout the annual cycle, with the largest overestimation during March–May, when the difference for the whole Arctic reaches 40 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) (Fig. 8d), with values of 65 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) in North America (Fig. 8f) and 30 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) in Eurasia (Fig. 8e). Considering the whole Arctic (Fig. 8d), the largest differences are observed during the melting season, from March to June, reaching 20–30 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\). Among CMIP5 models, the greatest SWE overestimation is in excess of 100 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) and occurs in April for MRI-CGCM3 (Fig. 8d). The differences are larger over North America (Fig. 8f) than over Eurasia (Fig. 8e).

Notably, there are three models (CSIRO-Mk3-6-0 and both versions of MPI-ESM-LR/P) that match the annual cycle of the CanSISE product fairly well for the whole Arctic and exhibit differences in SWE from \(-10\) to \(+20\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\). For the period from August to September, when CanSISE is characterized by complete snow melt in the Arctic (Fig. 8d), some models (MIROC5 and both versions of NorESM1-M/ME) still show values of up to 50 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) over North America (Fig. 8f). During the snow season (January to May), nearly all models display large overestimations over all subdomains. The only exception is one group of models (MPI-ESM-LR/P, CSIRO-Mk3-6-0 and NorESM1-ME) that shows a negative bias over Eurasia (Fig. 8e).

3.4 Snow margin position

We analyze the SMP for the onset (October–November) and melting (March–April) seasons for the CMIP5 ensemble and the two reanalyses. Both reanalyses are in better agreement with each other in March–April (Fig. 9b) than in October–November (Fig. 9a). In March–April, the snow margins in both products match each other almost perfectly. However, in October–November, the reanalyses exhibit large spatial discrepancies with a deviation between the SMPs of approximately 10\(^\circ \) latitude. These discrepancies are more pronounced over North America than over Eurasia. This is due to a less intense onset of SCE in NOAAV2c than in NCEP/CFSR, which is consistent with the faster rate of increase in SCE in NCEP/CFSR than in NOAAV2c in October–November (Fig. 8c).

Fig. 9 Snow margin position (SMP), defined as the contour line of 50% snow cover fraction averaged over 1979–2005, in the NCEP/CFSR and NOAAV2c reanalyses (purple and pink lines, respectively) for October–November (a) and March–April (b). The color bar shows the number of CMIP5 models that display an ensemble seasonal mean snow cover fraction equal to or greater than 50%

The SMP also shows better agreement between the models and the reanalyses during March–April than in October–November. This suggests that the spatial discrepancies in the SMP are likely due to differences in the starting time of the melting season among the CMIP5 models. The mismatch is accentuated in southwestern Siberia, with only 3–4 models being consistent with the reanalyses. The SMP over North America is more accurately represented, with 10–12 models being in close agreement with the reanalyses. In central and northern Eurasia, over Scandinavia, Canada and Alaska, all models match the reanalyses reasonably well (Fig. 9b). However, in October–November (Fig. 9a), the agreement between the model datasets and the reanalyses becomes less evident. Only three of the 16 models feature SMPs close to that of NCEP/CFSR, whereas the SMPs of 10 other models are closer to that of NOAAV2c. A reasonably good agreement across all datasets is identified only over the Siberian Plateau, the Verkhoyansk mountainous region and northern Canada. The worst agreement in the SMP is observed in the southern part of North America and the region west of Lake Baikal. Some disagreements observed in coastal areas are associated with different land-ice masks.

3.5 Summary of evaluation of climatologies

Analysis of the CMIP5 ensemble spread in the estimates of SCE and SWE is summarized in Tables 3 and 4, which present the long-term means over the terrestrial Arctic. During October–November, the multimodel mean (68% SCE) is in general agreement with observations, closely matching the NOAA CDR and overestimating the NCEP/CFSR and NOAAV2c by approximately 15%. Among CMIP5 models, CCSM4, inmcm4, NorESM1-M/ME, and MRI-CGCM3 tend to overestimate the observed SCE by approximately 20–25%, with the remaining models showing SCE values close to those of the observations. In March–April, there is good agreement between the reference datasets (NCEP/CFSR and NOAAV2c), which deviate from each other by less than 10%; however, in October–November, differences may amount to 15%. In March–April, the observed SCE is approximately 80–85%, implying that almost all the terrestrial Arctic is covered in snow (as in Fig. 9b). The multimodel CMIP5 mean strongly underestimates the observed SCE in March–April by approximately 30%. There is a group of models (bcc-csm1-1, CSIRO-Mk3-6-0, inmcm4, and MPI-ESM-LR/P) with March–April SCE values ranging from 30 to 45%. The remaining models yield March–April SCE values of 60–70%, which are closer to the observed values but are nevertheless strongly negatively biased. For SWE in October–November, the observed CanSISE mean is 10 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) smaller than the multimodel mean (Table 4). The best agreement with observations is demonstrated by both versions of MPI-ESM-LR/P and CSIRO-Mk3-6-0. The NorESM1-M model is an obvious outlier and yields SWE values that are nearly twice as high as the observations. In March–April, the observed CanSISE mean is approximately 60% smaller than the multimodel mean. The spread in model SWE in March–April is quite large and ranges from a minimum of 33 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) (CSIRO-Mk3-6-0) to a maximum of approximately 148 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\) (MRI-CGCM3). Only three models (inmcm4 and both versions of MPI-ESM-LR/P) are in relatively good agreement with the observations.

4 Evaluation of trends and interannual variability in snow characteristics

We turn now to the evaluation of the past variability in SCE and SWE over the terrestrial Arctic in the CMIP5 model historical runs for the onset (October–November) and melting (March–April) seasons during 1979–2005 (for SCE) and 1981–2005 (for SWE).

4.1 Interannual variability of snow characteristics

Extending the assessment presented above (Sect. 3.5), the analysis of seasonal time series of SCE and SWE (Fig. 10) sheds some light on the origins of the differences revealed by Tables 3 and 4. The disagreement among the observed SCE datasets in October–November is especially evident after 1995, when the NOAA CDR shows an upward tendency, whereas the NCEP/CFSR and NOAAV2c both show a decrease in SCE (Fig. 10a). This may also affect trend estimates, which are considered below.

Time series of snow cover (top row; 1979–2005) and snow water equivalent (bottom row; 1981–2005) averaged over the terrestrial Arctic in October–November (a, c) and in March–April (b, d). Individual CMIP5 models are shown as colored dotted lines, the multimodel mean as a thick black line, the SCE reanalyses (NCEP/CFSR and NOAAV2c) in grey, and the NOAA CDR (for SCE) and the CanSISE ensemble (for SWE) with black squares

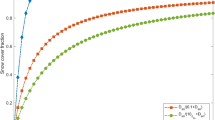

Taylor diagrams used in the evaluation of the time series of snow cover (top row; 1979–2005) and snow water equivalent (bottom row; 1981–2005) over the Arctic in October–November (a, c) and in March–April (b, d) in CMIP5 models (letters a–p). For SCE (a, b), the NCEP/CFSR reanalysis (Q) and the NOAAV2c reanalysis (R) are evaluated against the satellite-based NOAA CDR (black star). For SWE (c, d), the CanSISE ensemble product is used as the reference (black star). The radial distance from the origin indicates the standard deviation, the centered root-mean-square (RMS) difference is the distance to the reference point (star on the x-axis) and the azimuthal position gives the correlation coefficient R. The RMS difference is computed after the overall bias between model and observations has been removed

Figure 11 shows Taylor diagrams (Taylor 2001) built for the seasonal time series. In October–November (Fig. 11a), the magnitude of interannual variability in the NCEP/CFSR reanalysis is close to the observational reference (4.8% and 4.5%, respectively). By contrast, the NOAAV2c reanalysis and the majority of models exhibit standard deviations (STDs) that are considerably smaller than in observations. In March–April (Fig. 11b), the discrepancies with the NOAA CDR are smaller than those in October–November, with the centered RMS difference being smaller for all models and reanalyses. The characteristics of interannual variability in NCEP/CFSR, NOAAV2c and NOAA CDR SCE are in agreement with each other, with STDs ranging from 2.4 to 2.9% (Fig. 11b). However, only NOAAV2c shows a positive and significant correlation with NOAA CDR. Most of the CMIP5 models show much smaller STDs than the observations and practically no correlation with observations on the interannual scale, except for bcc-csm1-1 and MIROC5, which show weak and marginally significant correlations of 0.4.
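The three quantities displayed on a Taylor diagram can be illustrated with a minimal sketch. The function name `taylor_statistics` and the input values below are hypothetical and are not taken from the datasets analyzed here; the divide-by-n normalization is an assumption. The sketch computes the standard deviations, the correlation coefficient and the centered RMS difference, and then evaluates the law-of-cosines relation that allows all three to be drawn on a single diagram.

```python
import math


def taylor_statistics(model, reference):
    """Return (std_model, std_reference, correlation, centered_rms)."""
    n = len(model)
    if n != len(reference) or n < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_m = sum(model) / n
    mean_r = sum(reference) / n
    # Anomalies: subtracting each mean removes the overall bias.
    anom_m = [x - mean_m for x in model]
    anom_r = [x - mean_r for x in reference]
    # Divide-by-n statistics (an assumption of this sketch).
    std_m = math.sqrt(sum(a * a for a in anom_m) / n)
    std_r = math.sqrt(sum(a * a for a in anom_r) / n)
    cov = sum(a * b for a, b in zip(anom_m, anom_r)) / n
    corr = cov / (std_m * std_r)
    crms = math.sqrt(sum((a - b) ** 2 for a, b in zip(anom_m, anom_r)) / n)
    return std_m, std_r, corr, crms


# Hypothetical seasonal-mean SCE values (%), for illustration only.
reference = [70.0, 74.0, 66.0, 72.0, 68.0, 75.0]
model = [80.0, 82.0, 79.0, 81.0, 80.5, 82.5]

std_m, std_r, corr, crms = taylor_statistics(model, reference)

# Law-of-cosines relation underlying the geometry of the diagram.
identity = math.sqrt(std_m**2 + std_r**2 - 2.0 * std_m * std_r * corr)

print(f"STD model={std_m:.2f}, STD ref={std_r:.2f}, R={corr:.2f}, cRMS={crms:.2f}")
```

Because the means are removed first, adding a constant offset to the model series leaves all three statistics unchanged, which is why the diagram carries no information about the mean bias discussed in Sect. 3.5.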

In October–November (Fig. 11c), the majority of the CMIP5 models underestimate the magnitude of the interannual variability in SWE reported by the observations. The exceptions are inmcm4, NorESM1-ME and all MIROC versions, which are considered outliers with respect to STD (more than 4 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)) and are not shown in Fig. 11c. The March–April SWE in the CMIP5 models is characterized by a large spread (Fig. 11d). The observed interannual variability in SWE (4.45 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)) is well captured by CCSM4, MIROC-ESM and MPI-ESM-P. For the majority of models, the centered RMS differences are approximately 6 \({\text{kg}}\cdot{ {\text{m}}^{-2}}\). The MIROC5, inmcm4 and NorESM1-ME models are characterized by the highest RMS values and STDs. The lowest magnitude of interannual variability, found in CSIRO-Mk3-6-0, is likely due to the number of ensemble members averaged (10 members, Table 1).
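The damping effect of ensemble averaging on interannual variability can be illustrated with a small synthetic sketch. All values below are hypothetical, and the members are assumed to contain independent Gaussian year-to-year noise, which is a simplification. Under that assumption, the STD of a 10-member mean is expected to be close to the single-member STD divided by \(\sqrt{10}\).

```python
import math
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

N_YEARS = 25     # e.g. the 1981-2005 SWE period
N_MEMBERS = 10   # ensemble size quoted in the text for CSIRO-Mk3-6-0
SIGMA = 4.0      # hypothetical interannual STD of one member (kg m-2)

# Each member: a common mean plus independent year-to-year noise.
members = [
    [50.0 + random.gauss(0.0, SIGMA) for _ in range(N_YEARS)]
    for _ in range(N_MEMBERS)
]

# Ensemble mean for each year.
ensemble_mean = [
    sum(member[year] for member in members) / N_MEMBERS
    for year in range(N_YEARS)
]

member_std = statistics.mean([statistics.pstdev(m) for m in members])
ensemble_std = statistics.pstdev(ensemble_mean)
expected_std = SIGMA / math.sqrt(N_MEMBERS)

print(f"mean STD of single members: {member_std:.2f}")
print(f"STD of ensemble mean:       {ensemble_std:.2f}")
print(f"sigma / sqrt(N) estimate:   {expected_std:.2f}")
```

This is one reason why the STD of an ensemble-mean series is not directly comparable with that of a single observed realization.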

All CMIP5 models display low correlation with the observational reference dataset for both SCE and SWE. This is not surprising considering the fundamental limitations of GCMs in reproducing interannual variability (Taylor et al. 2012). Thus, the simulated temporal evolution in the models may miss the impact of unforced internal variations such as El Niño, the NAO and the Atlantic Multidecadal Oscillation (AMO). As a result, the interannual variability correlates significantly with observations only by chance. The Northern Hemisphere temperature (crucial for snow melting in March–April) and precipitation (the origin of snow during October–November) are dominated to a large extent by internal climate variability, such as the NAO (Hurrell et al. 2003), and the associated positioning of cyclone tracks (Gulev et al. 2002; Popova 2007; Tilinina et al. 2013; Webster et al. 2019).

4.2 Seasonal trend analysis

We estimate SCE and SWE trends in CMIP5 models from the individual ensemble members of each model, from the ensemble mean of each model and from the multimodel ensemble mean. In total, we analyze 73 individual model realizations provided by 16 models (Table 1) and compare them with estimates based upon observations.
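A trend estimate of this kind can be sketched as follows, assuming an ordinary least-squares fit and a two-sided Student's t-test on the slope; the fitting method is an assumption of this sketch and is not specified here. The function name `linear_trend`, the synthetic series and the constant `T_CRIT_90_DF25` (the tabulated two-sided 90% critical value for 25 degrees of freedom) are illustrative.

```python
import math


def linear_trend(years, values):
    """Least-squares trend per decade, its standard error and the t statistic."""
    n = len(years)
    if n != len(values) or n < 3:
        raise ValueError("need at least 3 paired samples")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares with n - 2 degrees of freedom.
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, values))
    stderr = math.sqrt(sse / (n - 2) / sxx)
    t_stat = slope / stderr
    return 10.0 * slope, 10.0 * stderr, t_stat


# Two-sided 90% critical value of Student's t for 27 - 2 = 25 degrees of freedom.
T_CRIT_90_DF25 = 1.708

# Synthetic SCE series (%): -1 %/decade plus alternating +/-1 noise.
years = list(range(1979, 2006))
values = [
    70.0 - 0.1 * (y - 1979) + (1.0 if (y - 1979) % 2 == 0 else -1.0)
    for y in years
]

trend, trend_se, t_stat = linear_trend(years, values)
significant = abs(t_stat) > T_CRIT_90_DF25

print(f"trend = {trend:+.2f} +/- {trend_se:.2f} %/decade, significant: {significant}")
```

The same function could be applied to each realization, to each single-model ensemble mean and to the multimodel mean; for the 25-year SWE period the degrees of freedom, and hence the critical value, would differ.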

Trends of snow cover (top row; 1979–2005) and snow water equivalent (bottom row; 1981–2005) over the terrestrial Arctic in October–November (a, c) and March–April (b, d). Trends are computed for each individual model realization (circle), the ensemble mean of each model (diamond) and the multimodel mean (black square). Shaded regions indicate the standard error of the trend in the observational reference dataset when it is statistically significant at the 90% confidence level (t-test). Filled markers indicate statistical significance at the 90% confidence level (t-test)

In October–November (Fig. 12a, Table 3), the NOAA CDR displays a trend of \(+3.28 \pm 0.91\%\)/decade and is thus a clear outlier with respect to the other reference datasets. The positive October trend estimated by the NOAA CDR in the recent period has already been reported by Brown and Derksen (2013) and Estilow et al. (2015); however, there is mounting evidence that this trend is misrepresented by GCMs and reanalysis products (Allchin and Déry 2019). NCEP/CFSR and NOAAV2c show SCE trends of \(-1.61 \pm 1.15\%\)/decade and \(-0.76 \pm 0.59\%\)/decade, respectively, but they lack statistical significance. Consequently, we consider NCEP/CFSR as a reference for model evaluation since it displays a p-value slightly lower than that of NOAAV2c (0.17 and 0.21, respectively). The multimodel mean shows an October–November SCE trend of \(-0.72 \pm 0.12\%\)/decade, which falls in the range given by the NCEP/CFSR estimates. We also note generally weaker SCE trends in models with respect to those from NCEP/CFSR. Thirteen of the 16 models are within the observed SCE trend range. Considering individual ensemble members, 37 estimates fall into the range implied by observations; thus, approximately 50% of individual model ensemble members present trends that are qualitatively consistent with observations. The largest spread across ensemble members is observed for bcc-csm1-1, CanESM2 and both versions of MPI-ESM-LR/P.
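The consistency check used above can be written out as simple arithmetic. The sketch assumes that the "range given by observations" is the observed trend plus or minus its standard error; this interpretation, and the helper name `within_range`, are assumptions. The numbers are those quoted in the text.

```python
def within_range(trend, ref_trend, ref_stderr):
    """True if `trend` lies inside ref_trend +/- ref_stderr."""
    return ref_trend - ref_stderr <= trend <= ref_trend + ref_stderr


# October-November SCE trends (%/decade) quoted in the text.
multimodel_mean = -0.72
ncep_cfsr = (-1.61, 1.15)   # trend, standard error
noaa_cdr = (3.28, 0.91)

in_ncep_range = within_range(multimodel_mean, *ncep_cfsr)
in_cdr_range = within_range(multimodel_mean, *noaa_cdr)

# Share of individual realizations inside the observed range (37 of 73).
member_fraction = 37 / 73

print(f"inside NCEP/CFSR range: {in_ncep_range}")
print(f"inside NOAA CDR range:  {in_cdr_range}")
print(f"members inside range:   {100 * member_fraction:.1f}%")
```

With these values the NCEP/CFSR interval spans roughly −2.76 to −0.46%/decade, so the multimodel mean lies inside it, whereas it lies far outside the NOAA CDR interval.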

NCEP/CFSR shows an insignificant increase in March–April SCE, disagreeing with NOAA CDR and NOAAV2c, which consistently display significant negative trends of \(-1.21 \pm 0.58\%\)/decade and \(-1.26 \pm 0.68\%\)/decade, respectively (Fig. 12b, Table 3). The multimodel mean, with a value of \(-1.10 \pm 0.13\%\)/decade, falls in the range of estimates given by NOAA CDR and NOAAV2c. However, five models (inmcm4, MIROC-ESM-CHEM, MPI-ESM-LR, MRI-CGCM3 and NorESM1-ME) report SCE trends outside of the observed range, although the deviations are statistically significant in only two of them (MIROC-ESM-CHEM and MPI-ESM-LR) (Table 3). Among the individual ensemble members, 39 of 73 (more than 50%) report significant trends consistent with observations. Remarkably, some models (bcc-csm1-1, CNRM-CM5, MIROC5 and NorESM1-M) show a rather large spread in trend estimates among their ensemble members.

In October–November (Fig. 12c, Table 4), most CMIP5 models show stronger negative trends in SWE than the CanSISE product, which displays a weak and insignificant SWE trend of \(-0.63 \pm 0.76\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)/decade, while the multimodel mean trend is \(-1.65 \pm 0.18\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)/decade. Six of the 16 CMIP5 models (CanESM2, CSIRO-Mk3-6-0, GISS-E2-H/R and MPI-ESM-LR/P) fall inside the range given by observations; however, only two of them present significant trends (GISS-E2-H and MPI-ESM-LR). With the exception of one member of CSIRO-Mk3-6-0, none of the individual model members simulates a significant SWE trend inside the range given by the CanSISE product.

In March–April (Fig. 12d), SWE in the CanSISE product shows an insignificant downward tendency for the whole Arctic (\(-1.09\pm 1.25\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)/decade). The multimodel mean, however, shows a strong and significant negative trend in SWE of \(-2.85 \pm 0.34\) \({\text{kg}}\cdot{ {\text{m}}^{-2}}\)/decade. Five models (bcc-csm1-1, CSIRO-Mk3-6-0, GISS-E2-R, MPI-ESM-LR and MRI-CGCM3) report trends in March–April SWE falling in the range given by observations, but only two of them are statistically significant (CSIRO-Mk3-6-0 and GISS-E2-R). Among the 73 individual ensemble members, only 5 show significant SWE trends that fall within the observed range (all belonging to the CSIRO-Mk3-6-0 model). Thus, less than 7% of ensemble simulations are in agreement with the observed SWE trend in March–April. The individual realizations of bcc-csm1-1, CNRM-CM5, MIROC5 and MRI-CGCM3 have the largest spread of trend estimates. Generally, the spread of individual members is smaller for October–November than for March–April, except for the MIROC5 and MRI-CGCM3 models.

Left column: number of CMIP5 models displaying a positive/negative SCE trend in October–November (a) and in March–April (c) during 1979–2005. Only SCE trends statistically significant at the 80% level (Student's t-test) are counted. Right column: spatial pattern of the SCE trend (%/decade) from the NCEP/CFSR reanalysis in October–November (b) and March–April (d) during 1979–2005. Hatched regions indicate SCE trends statistically significant at the 90% level (Student's t-test)

Left column: number of CMIP5 models displaying a positive/negative SWE trend in October–November (a) and March–April (c) during 1981–2005. Only SWE trends statistically significant at the 80% level (Student's t-test) are counted. Right column: spatial pattern of the SWE trend (\({\text{kg}}\cdot{ {\text{m}}^{-2}}\)/decade) from the CanSISE ensemble product in October–November (b) and March–April (d) during 1981–2005. Hatched regions indicate SWE trends statistically significant at the 90% level (Student's t-test)

The spatial patterns of linear trends in individual models are extremely noisy, with trends of different signs coexisting in close proximity. Figures 13a, c and 14a, c quantify the degree of consensus across the 16 models [based on 14 snow depth (SND) models] in terms of the linear trends in SCE and SWE. For each grid cell, we compute the maximum number of models agreeing on the sign of trend and on its significance. For instance, if 8 models in a particular cell show significant positive trends, 5 show significant negative trends and 3 show insignificant trends, this grid cell has a “\(+8\)” value. If the numbers of models with positive and negative trends are equal, the grid cell is set to zero. For this analysis, we used the 80% significance level (t-test) to estimate the statistical significance of individual trends.
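The per-cell consensus rule described above can be sketched as follows. The function name `consensus` and the convention of returning a negative count when significant negative trends dominate are assumptions made for illustration; the example reproduces the case given in the text.

```python
def consensus(trends, significant):
    """Signed count of models agreeing on a significant trend sign in one cell.

    Returns +N if N models with significant positive trends outnumber those
    with significant negative trends, -N in the opposite case, and 0 on a tie.
    """
    positive = sum(1 for t, s in zip(trends, significant) if s and t > 0)
    negative = sum(1 for t, s in zip(trends, significant) if s and t < 0)
    if positive > negative:
        return positive
    if negative > positive:
        return -negative
    return 0


# Example from the text: 8 significant positive, 5 significant negative
# and 3 insignificant trends among 16 models (trend values are hypothetical).
trends = [1.0] * 8 + [-1.0] * 5 + [0.2, -0.1, 0.3]
significant = [True] * 13 + [False] * 3

cell_value = consensus(trends, significant)
tie_value = consensus([1.0, -1.0], [True, True])

print(cell_value, tie_value)
```

Applying the function to every grid cell yields maps of the kind shown in the left columns of Figs. 13 and 14.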

Figure 13b shows the NCEP/CFSR trend pattern in SCE in October–November. NCEP/CFSR shows decreasing trends over eastern Europe and north of the Eurasian dry land band (EDLB, Groisman et al. 2018) in Siberia. Over North America, negative trends in SCE (−8 to −12%/decade) dominate over most regions except the western mountainous regions (Fig. 13b). Locally, positive trends (up to +12%/decade) are observed over northeastern Eurasia in the Chersky Mountain Range. The model ensemble exhibits negative trends dominating over positive ones practically everywhere (Fig. 13a). This coincides with NCEP/CFSR over eastern Europe and north of the EDLB in Siberia but not over northeastern Eurasia and western North America.

In March–April (Fig. 13d), NCEP/CFSR exhibits a significant positive trend over the eastern part of Siberia, with a maximum of +12%/decade observed east of Lake Baikal. Positive SCE trends of +10 to +12%/decade are also observed over the mountainous regions in the western part of North America. Most of eastern Europe is characterized by significant negative trends, amounting to −12%/decade over eastern Scandinavia and south of the Ural Mountains. The best agreement between CMIP5 models and observations is found over western Eurasia, where 8–12 models (out of 16) display significant negative trends. Over northeastern Eurasia, 4–8 models exhibit positive trends, in agreement with NCEP/CFSR. The North American pattern of negative trends is captured by 4–8 CMIP5 models; however, the CMIP5 ensemble does not capture the pattern of positive trends over the Rocky Mountains and over the Laurentian Plateau (Fig. 13c).

A similar analysis is carried out for SWE during the period 1981–2005 (Fig. 14). The CanSISE product shows very few locations with statistically significant trends in both seasons. In October–November (Fig. 14b), SWE in the CanSISE product shows negative trends over northwestern Canada and the southern part of eastern Eurasia and locally positive trends over northern Eurasia along the coast of the Arctic Ocean. In March–April (Fig. 14d), a statistically significant decrease in SWE is observed in northern Eurasia along the Arctic Ocean coast, and a local increase is identified over the southern part of western Siberia. In October–November (Fig. 14a), the model ensemble reports negative trends nearly everywhere, with approximately 8 models showing downward SWE changes in North America and western Eurasia. Eastern Eurasia is characterized by little consensus among the CMIP5 models, with estimates showing mostly insignificant trends and fewer than 4 of the 16 models showing statistically significant trends. In March–April, most models report primarily negative trends in SWE over eastern Eurasia and primarily positive tendencies over northern Siberia (Fig. 14c).

5 Summary and conclusion

We analyzed the representation of SCE (1979–2005) and SWE (1981–2005) in the Arctic during the onset (October–November) and melting season (March–April) in the historical runs of CMIP5 climate models on the basis of two reanalysis products, one satellite-observed product and an ensemble of different datasets. For SCE, we identified three groups of CMIP5 models: those broadly overestimating the observed SCE (up to 60% regionally), those underestimating the observed climatological SCE (with the greatest differences of 40–50%) and those overestimating the SCE over the northern regions and underestimating the SCE over the southern regions. Differences are especially remarkable in March–April but are also pronounced during October–November. For SWE, we identified a pan-Arctic overestimation in most CMIP5 models except for CSIRO-Mk3-6-0 and MPI-ESM-LR/P, which show either general underestimation or an east–west (underestimation–overestimation) pattern over Eurasia. Again, these patterns are most pronounced during March–April, when positive biases may be on the same order as the mean values. The observed annual cycle of SCE is relatively well captured by CMIP5 models, mainly in autumn, but during the spring–summer season, most models tend to significantly underestimate the observed SCE, especially over North America. At the same time, the annual cycle of SWE is largely positively biased with respect to observations in most CMIP5 models, indicating persistent snow during summer months, thus contradicting observations.

The SMP is better captured by CMIP5 models in March–April, with the best agreement found over eastern Eurasia and western North America. In October–November, the CMIP5 ensemble shows a considerable northward shift in the SMP, and overall agreement occurs only in central Siberia; elsewhere, only 4–6 of the 16 models match the location of the observed snow margin.

We found that the magnitudes of interannual variability in SCE and SWE are significantly underestimated in most CMIP5 models compared to observations. With respect to pan-Arctic interdecadal trends, most CMIP5 models show weakly negative (but in many cases statistically significant) trends in both SCE (in March–April) and SWE (in both seasons), whereas the observational datasets show mostly insignificant trends in pan-Arctic snow characteristics. Regionally, the CMIP5 ensemble captures the observed trend pattern in SCE relatively well, but trends in SWE show no consistency with the observed regional trend patterns over either continent.

In agreement with previous studies (Brutel-Vuilmet et al. 2013; Mudryk et al. 2014), we found that the SCE annual cycle is relatively well captured by CMIP5 models compared to the three different reference datasets used. Nevertheless, the significant overestimation and the large spread of snow mass over the Northern Hemisphere reported by Roesch (2006) in the previous generation of models (CMIP3) has not been significantly reduced in CMIP5. Regional differences in snow cover duration in autumn (Peng et al. 2013; Brown et al. 2017; Liston and Hiemstra 2011) are likely the cause of the spatial differences in the position of the snow margin in the onset season in both reanalyses and models.

In March–April, we have found that SCE discrepancies in CMIP5 models are observed over the southern part of the Arctic, where temperature changes are substantial and may cause the snow to recede. This is consistent with the inability of CMIP5 models to capture the sensitivity of snow cover to temperature changes (Fletcher et al. 2012; Brutel-Vuilmet et al. 2013; Thackeray et al. 2016). Conversely, during the onset season, snow changes are less dependent on temperature variations. In fact, snow onset is highly coupled with variability in regional precipitation and surface temperature, as both are needed to initiate snow cover (Mudryk et al. 2017; Ye 2019). The accumulation and persistence of snow are both linked to modes of variability in the atmosphere, such as the NAO and AO (Bamzai 2003; Cohen et al. 2012; Liu et al. 2012). However, these modes of variability are not always accurately reproduced in the CMIP5 ensemble (Ning and Bradley 2016; Davini and Cagnazzo 2014), which may result in a poor representation of snow-atmosphere coupling (e.g., Furtado et al. 2015; Gastineau et al. 2017). We hypothesize that the October-November mismatches and the strong overestimation of SWE during the entire snow season may be linked to an inadequate representation of atmospheric internal variability and snow-atmosphere coupling. This is consistent with the relatively low interannual variability found in CMIP5 models (Fig. 11) and the internal limitations of CMIP5 models (Taylor et al. 2012).

An important lesson from our work, in which multiple datasets were used to evaluate climate variability in CMIP5 model experiments, is that the use of multiple data sources is strongly recommended for model evaluation of snow characteristics. We have found that in both the March–April and October–November seasons, CMIP5 snow characteristics display negative but weaker-than-observed trends, consistent with previous studies at the Northern Hemisphere scale (Brutel-Vuilmet et al. 2013; Mudryk et al. 2014; Connolly et al. 2019). Additionally, we confirm that, compared to the other data sources, the October–November trend of the NOAA CDR dataset (\(+3.28 \pm 0.91\%\)/decade) is an outlier, as previously reported by Brown and Derksen (2013) and Estilow et al. (2015). The discrepancies found in SCE trends among the reference datasets underline the importance of a multidataset approach to evaluating snow characteristics in models. Because evaluation is sensitive to the choice of the reference dataset (e.g., Gómez-Navarro et al. 2012), the use of a single reference dataset may result in biased conclusions. Analysis of multiple datasets (Mudryk et al. 2015) or an ensemble product (CanSISE, Mudryk and Derksen 2017) helps to overcome the limitations of individual datasets and provides a better assessment of the climate state.

Overall, we can conclude that there is still a lack of confidence in climate model simulations of snow in the Arctic, partially due to high spatial and temporal variability in snow characteristics but also due to model skill limitations. This raises serious concerns about the robustness of future projections of snow in climate models (Hinzman et al. 2013). Polar amplification (Déry and Brown 2007; Hernández-Henríquez et al. 2015) enhances the snow-albedo feedback (SAF) over northern latitudes and higher elevations and has already surpassed climate projections over the 2008–2012 period (Derksen and Brown 2012). In the context of a warmer and wetter Arctic (Screen and Simmonds 2013; Bintanja and Selten 2014; Dufour et al. 2016), the impact of snow on surface albedo (Thackeray et al. 2018), circulation patterns (Rydzik and Desai 2014), permafrost (Biskaborn et al. 2019) and water resources (Mankin et al. 2015) should be considered a high priority. In this respect, more targeted simulations with ESM-SnowMIP (Krinner et al. 2018) and with a new generation of models (Eyring et al. 2016) are highly desirable for enriching our knowledge of snow-climate interactions and for providing improved future projections of snow characteristics under polar amplification.

Further development of this work will focus on the analysis of CMIP6 simulations, many of which provide a larger number of ensemble members and, in a few models, daily data on snow-associated parameters. This will allow a comparative assessment of the progress achieved by CMIP6 relative to CMIP5 in the representation of snow parameters in historical simulations and will form the foundation for the evaluation of climate projections in both CMIP5 and CMIP6. This assessment will concentrate on the analysis of the new snow parameterizations developed in many CMIP6 configurations (Eyring et al. 2016; Krinner et al. 2018). On the observational side, given new reference periods in historical simulations in CMIP6, new comparisons should include the extensive use of the Arctic System Reanalysis (ASRv2, Bromwich et al. 2018), potentially in conjunction with experiments with regional configurations developed under Arctic-CORDEX (http://climate-cryosphere.org/activities/targeted/polar-cordex/arctic, Koenigk et al. 2015) performed on rotated polar grids with up to 10 regional climate models.

References

Allchin MI, Déry SJ (2019) Shifting spatial and temporal patterns in the onset of seasonally snow-dominated conditions in the northern hemisphere, 1972–2017. J Climate 32(16):4981–5001

Arora V, Scinocca J, Boer G, Christian J, Denman K, Flato G, Kharin V, Lee W, Merryfield W (2011) Carbon emission limits required to satisfy future representative concentration pathways of greenhouse gases. Geophys Res Lett 38(L05805)

Atkinson D, Brown R, Alt B, Agnew T, Bourgeois J, Burgess M, Duguay C, Henry G, Jeffers S, Koerner R et al (2006) Canadian cryospheric response to an anomalous warm summer: a synthesis of the climate change action fund project “The state of the arctic cryosphere during the extreme warm summer of 1998”. Atmos Ocean 44(4):347–375

Balsamo G, Albergel C, Beljaars A, Boussetta S, Brun E, Cloke H, Dee D, Dutra E, Muñoz-Sabater J, Pappenberger F et al (2015) ERA-interim/land: a global land surface reanalysis data set. Hydrol Earth Syst Sci 19(1):389–407

Bamzai A (2003) Relationship between snow cover variability and Arctic Oscillation index on a hierarchy of time scales. Int J Climatol 23(2):131–142

Barichivich J, Briffa KR, Myneni RB, Osborn TJ, Melvin TM, Ciais P, Piao S, Tucker C (2013) Large-scale variations in the vegetation growing season and annual cycle of atmospheric CO2 at high northern latitudes from 1950 to 2011. Glob Change Biol 19(10):3167–3183

Bentsen M, Bethke I, Debernard JB, Iversen T, Kirkevåg A, Seland Ø, Drange H, Roelandt C, Seierstad IA, Hoose C, Kristjánsson JE (2013) The Norwegian Earth System Model, NorESM1-M part 1: description and basic evaluation of the physical climate. Geosci Model Dev 6(3):687–720. https://doi.org/10.5194/gmd-6-687-2013

Bintanja R, Selten F (2014) Future increases in Arctic precipitation linked to local evaporation and sea-ice retreat. Nature 509(7501):479–482

Biskaborn BK, Smith SL, Noetzli J, Matthes H, Vieira G, Streletskiy DA, Schoeneich P, Romanovsky VE, Lewkowicz AG, Abramov A et al (2019) Permafrost is warming at a global scale. Nat Commun 10(1):264

Bokhorst S, Pedersen SH, Brucker L, Anisimov O, Bjerke JW, Brown RD, Ehrich D, Essery RLH, Heilig A, Ingvander S, Johansson C, Johansson M, Jónsdóttir IS, Inga N, Luojus K, Macelloni G, Mariash H, McLennan D, Rosqvist GN, Sato A, Savela H, Schneebeli M, Sokolov A, Sokratov SA, Terzago S, Vikhamar-Schuler D, Williamson S, Qiu Y, Callaghan TV (2016) Changing Arctic snow cover: a review of recent developments and assessment of future needs for observations, modelling, and impacts. Ambio 45(5):516–537. https://doi.org/10.1007/s13280-016-0770-0

Bromwich D, Wilson A, Bai L, Liu Z, Barlage M, Shih CF, Maldonado S, Hines K, Wang SH, Woollen J et al (2018) The Arctic System Reanalysis, version 2. Bull Am Meteorol Soc 99(4):805–828

Brown RD (2000) Northern hemisphere snow cover variability and change, 1915–97. J Climate 13(13):2339–2355

Brown R, Derksen C (2013) Is Eurasian October snow cover extent increasing? Environ Res Lett 8(2):024006

Brown RD, Mote PW (2009) The response of northern hemisphere snow cover to a changing climate. J Climate 22(8):2124–2145. https://doi.org/10.1175/2008JCLI2665.1

Brown J, Ferrians O Jr, Heginbottom J, Melnikov E (1997) Circum-Arctic map of permafrost and ground-ice conditions. US Geological Survey, Reston

Brown R, Schuler DV, Bulygina O, Derksen C, Luojus K, Mudryk L, Wang L, Yang D (2017) Arctic terrestrial snow cover. In: AMAP 2017 snow, water, ice and permafrost in the Arctic (SWIPA), p 269

Brun E, Martin E, Spiridonov V (1997) Coupling a multi-layered snow model with a GCM. Ann Glaciol 25:66–72

Brun E, Vionnet V, Boone A, Decharme B, Peings Y, Valette R, Karbou F, Morin S (2013) Simulation of northern Eurasian local snow depth, mass, and density using a detailed snowpack model and meteorological reanalyses. J Hydrometeorol 14(1):203–219. https://doi.org/10.1175/jhm-d-12-012.1

Brutel-Vuilmet C, Ménégoz M, Krinner G (2013) An analysis of present and future seasonal northern hemisphere land snow cover simulated by CMIP5 coupled climate models. Cryosphere 7(1):67–80. https://doi.org/10.5194/tc-7-67-2013

Bulygina O, Groisman PY, Razuvaev V, Korshunova N (2011) Changes in snow cover characteristics over Northern Eurasia since 1966. Environ Res Lett 6(4):045204

Cayan DR, Kammerdiener SA, Dettinger MD, Caprio JM, Peterson DH (2001) Changes in the onset of spring in the western United States. Bull Am Meteorol Soc 82(3):399–416

Choi G, Robinson DA, Kang S (2010) Changing northern hemisphere snow seasons. J Climate 23(19):5305–5310

Cohen J, Rind D (1991) The effect of snow cover on the climate. J Climate 4(7):689–706

Cohen J, Barlow M, Kushner PJ, Saito K (2007) Stratosphere-troposphere coupling and links with Eurasian land surface variability. J Climate 20(21):5335–5343

Cohen JL, Furtado JC, Barlow MA, Alexeev VA, Cherry JE (2012) Arctic warming, increasing snow cover and widespread boreal winter cooling. Environ Res Lett 7(1):014007

Collier M, Jeffrey SJ, Rotstayn LD, Wong K, Dravitzki S, Moseneder C, Hamalainen C, Syktus J, Suppiah R, Antony J et al (2011) The CSIRO-Mk3.6.0 atmosphere-ocean GCM: participation in CMIP5 and data publication. In: International congress on modelling and simulation (MODSIM)

Collins M, Knutti R, Arblaster J, Dufresne JL, Fichefet T, Friedlingstein P, Gao X, Gutowski WJ, Johns T, Krinner G et al (2013) Long-term climate change: projections, commitments and irreversibility. In: Climate change 2013-the physical science basis: contribution of working group I to the fifth assessment report of the intergovernmental panel on climate change. Cambridge University Press, pp 1029–1136

Compo GP, Whitaker JS, Sardeshmukh PD, Matsui N, Allan RJ, Yin X, Gleason BE, Vose RS, Rutledge G, Bessemoulin P, Brönnimann S, Brunet M, Crouthamel RI, Grant AN, Groisman PY, Jones PD, Kruk MC, Kruger AC, Marshall GJ, Maugeri M, Mok HY, Nordli Ø, Ross TF, Trigo RM, Wang XL, Woodruff SD, Worley SJ (2011) The twentieth century reanalysis project. Q J R Meteorol Soc 137(654):1–28. https://doi.org/10.1002/qj.776

Connolly R, Connolly M, Soon W, Legates DR, Cionco RG, Velasco Herrera V et al (2019) Northern hemisphere snow-cover trends (1967–2018): a comparison between climate models and observations. Geosciences 9(3):135

Davini P, Cagnazzo C (2014) On the misinterpretation of the North Atlantic Oscillation in CMIP5 models. Climate Dyn 43(5–6):1497–1511

Derksen C, Brown R (2012) Spring snow cover extent reductions in the 2008–2012 period exceeding climate model projections. Geophys Res Lett 39(L19504)

Déry SJ, Brown RD (2007) Recent northern hemisphere snow cover extent trends and implications for the snow-albedo feedback. Geophys Res Lett. https://doi.org/10.1029/2007GL031474

Douville H, Royer JF, Mahfouf JF (1995) A new snow parameterization for the Météo-France climate model. Climate Dyn 12(1):21–35

Douville H, Peings Y, Saint-Martin D (2017) Snow-(N)AO relationship revisited over the whole twentieth century. Geophys Res Lett 44(1):569–577. https://doi.org/10.1002/2016gl071584

Dufour A, Zolina O, Gulev SK (2016) Atmospheric moisture transport to the Arctic: assessment of reanalyses and analysis of transport components. J Climate 29(14):5061–5081

Estilow TW, Young AH, Robinson DA (2015) A long-term Northern Hemisphere snow cover extent data record for climate studies and monitoring. Earth Syst Sci Data 7(1):137–142

Eyring V, Bony S, Meehl GA, Senior CA, Stevens B, Stouffer RJ, Taylor KE (2016) Overview of the coupled model intercomparison project phase 6 (CMIP6) experimental design and organization. Geosci Model Dev 9(5):1937–1958. https://doi.org/10.5194/gmd-9-1937-2016

Flanner MG, Shell KM, Barlage M, Perovich DK, Tschudi M (2011) Radiative forcing and albedo feedback from the Northern Hemisphere cryosphere between 1979 and 2008. Nat Geosci 4(3):151–155

Fletcher CG, Zhao H, Kushner PJ, Fernandes R (2012) Using models and satellite observations to evaluate the strength of snow albedo feedback. J Geophys Res Atmos 117(D11)

Fletcher CG, Thackeray CW, Burgers TM (2015) Evaluating biases in simulated snow albedo feedback in two generations of climate models. J Geophys Res Atmos 120(1):12–26

Furtado JC, Cohen JL, Butler AH, Riddle EE, Kumar A (2015) Eurasian snow cover variability and links to winter climate in the CMIP5 models. Climate Dyn 45(9–10):2591–2605

Gastineau G, García-Serrano J, Frankignoul C (2017) The influence of autumnal Eurasian snow cover on climate and its link with Arctic Sea Ice cover. J Climate 30(19):7599–7619. https://doi.org/10.1175/JCLI-D-16-0623.1

Gent PR, Danabasoglu G, Donner LJ, Holland MM, Hunke EC, Jayne SR, Lawrence DM, Neale RB, Rasch PJ, Vertenstein M, Worley PH, Yang ZL, Zhang M (2011) The community climate system model version 4. J Climate 24(19):4973–4991. https://doi.org/10.1175/2011JCLI4083.1

Giorgetta MA, Jungclaus J, Reick CH, Legutke S, Bader J, Böttinger M, Brovkin V, Crueger T, Esch M, Fieg K et al (2013) Climate and carbon cycle changes from 1850 to 2100 in MPI-ESM simulations for the Coupled Model Intercomparison Project phase 5. J Adv Model Earth Syst 5(3):572–597

Gómez-Navarro J, Montávez J, Jerez S, Jiménez-Guerrero P, Zorita E (2012) What is the role of the observational dataset in the evaluation and scoring of climate models? Geophys Res Lett 39(24)

Gouttevin I, Menegoz M, Dominé F, Krinner G, Koven C, Ciais P, Tarnocai C, Boike J (2012) How the insulating properties of snow affect soil carbon distribution in the continental pan-Arctic area. J Geophys Res Biogeosci 117(G2)

Groisman PY, Karl TR, Knight RW, Stenchikov GL (1994) Changes of snow cover, temperature, and radiative heat balance over the Northern Hemisphere. J Climate 7(11):1633–1656

Groisman P, Shugart H, Kicklighter D, Henebry G, Tchebakova N, Maksyutov S, Monier E, Gutman G, Gulev S, Qi J et al (2017) Northern Eurasia future initiative (NEFI): facing the challenges and pathways of global change in the twenty-first century. Prog Earth Planet Sci 4(1):41

Groisman P, Bulygina O, Henebry G, Speranskaya N, Shiklomanov A, Chen Y, Tchebakova N, Parfenova E, Tilinina N, Zolina O et al (2018) Dryland belt of Northern Eurasia: contemporary environmental changes and their consequences. Environ Res Lett 13(11):115008

Gulev SK, Jung T, Ruprecht E (2002) Climatology and interannual variability in the intensity of synoptic-scale processes in the North Atlantic from the NCEP-NCAR reanalysis data. J Climate 15(8):809–828

Gutzler DS, Rosen RD (1992) Interannual variability of wintertime snow cover across the Northern Hemisphere. J Climate 5(12):1441–1447

Henderson GR, Peings Y, Furtado JC, Kushner PJ (2018) Snow-atmosphere coupling in the Northern Hemisphere. Nat Climate Change 8(11):954–963

Hernández-Henríquez MA, Déry SJ, Derksen C (2015) Polar amplification and elevation-dependence in trends of Northern Hemisphere snow cover extent, 1971–2014. Environ Res Lett 10(4):044010

Hinzman LD, Deal CJ, McGuire AD, Mernild SH, Polyakov IV, Walsh JE (2013) Trajectory of the Arctic as an integrated system. Ecol Appl 23(8):1837–1868

Hori M, Sugiura K, Kobayashi K, Aoki T, Tanikawa T, Kuchiki K, Niwano M, Enomoto H (2017) A 38-year (1978–2015) Northern Hemisphere daily snow cover extent product derived using consistent objective criteria from satellite-borne optical sensors. Remote Sens Environ 191:402–418

Hurrell JW, Kushnir Y, Ottersen G, Visbeck M (2003) An overview of the North Atlantic oscillation. Geophys Monogr Am Geophys Union 134:1–36

Kapnick SB, Delworth TL (2013) Controls of global snow under a changed climate. J Climate 26(15):5537–5562. https://doi.org/10.1175/JCLI-D-12-00528.1

Koenigk T, Berg P, Döscher R (2015) Arctic climate change in an ensemble of regional CORDEX simulations. Polar Res 34(1):24603

Koven CD, Riley WJ, Stern A (2013) Analysis of permafrost thermal dynamics and response to climate change in the CMIP5 earth system models. J Climate 26(6):1877–1900. https://doi.org/10.1175/jcli-d-12-00228.1

Krinner G, Derksen C, Essery R, Flanner M, Hagemann S, Clark M, Hall A, Rott H, Brutel-Vuilmet C, Kim H et al (2018) ESM-SnowMIP: assessing snow models and quantifying snow-related climate feedbacks. Geosci Model Dev 11:5027–5049

Kunkel KE, Robinson DA, Champion S, Yin X, Estilow T, Frankson RM (2016) Trends and extremes in Northern Hemisphere snow characteristics. Curr Climate Change Rep 2(2):65–73. https://doi.org/10.1007/s40641-016-0036-8

Lawrence DM, Slater AG (2009) The contribution of snow condition trends to future ground climate. Climate Dyn 34(7–8):969–981. https://doi.org/10.1007/s00382-009-0537-4

Lemke P, Ren J, Alley R, Allison I, Carrasco J, Flato G, Fujii Y, Kaser G, Mote P, Thomas R et al (2007) Observations: changes in snow, ice and frozen ground. In: Climate change 2007: the physical science basis. Contribution of working group I to the fourth assessment report of the intergovernmental panel on climate change. Cambridge University Press, Cambridge, pp 337–383

Liston GE, Hiemstra CA (2011) The changing cryosphere: pan-Arctic snow trends (1979–2009). J Climate 24(21):5691–5712

Liu J, Curry JA, Wang H, Song M, Horton RM (2012) Impact of declining Arctic sea ice on winter snowfall. Proc Natl Acad Sci 109(11):4074–4079

Manabe S (1969) Climate and the ocean circulation. Mon Weather Rev 97(11):739–774

Mankin JS, Viviroli D, Singh D, Hoekstra AY, Diffenbaugh NS (2015) The potential for snow to supply human water demand in the present and future. Environ Res Lett 10(11):114016

Mudryk LR, Derksen C (2017) CanSISE observation-based ensemble of Northern Hemisphere terrestrial snow water equivalent, version 2. Bull Am Meteorol Soc. https://doi.org/10.5067/96ltniikJ7vd

Mudryk L, Kushner P, Derksen C (2014) Interpreting observed Northern Hemisphere snow trends with large ensembles of climate simulations. Climate Dyn 43(1–2):345–359

Mudryk L, Derksen C, Kushner P, Brown R (2015) Characterization of Northern Hemisphere snow water equivalent datasets, 1981–2010. J Climate 28(20):8037–8051

Mudryk LR, Kushner PJ, Derksen C, Thackeray C (2017) Snow cover response to temperature in observational and climate model ensembles. Geophys Res Lett 44(2):919–926. https://doi.org/10.1002/2016gl071789

Ning L, Bradley RS (2016) NAO and PNA influences on winter temperature and precipitation over the eastern United States in CMIP5 GCMs. Climate Dyn 46(3–4):1257–1276

Peings Y, Douville H (2010) Influence of the Eurasian snow cover on the Indian summer monsoon variability in observed climatologies and CMIP3 simulations. Climate Dyn 34(5):643–660

Peings Y, Saint-Martin D, Douville H (2012) A numerical sensitivity study of the influence of Siberian snow on the northern annular mode. J Climate 25(2):592–607

Peng S, Piao S, Ciais P, Friedlingstein P, Zhou L, Wang T (2013) Change in snow phenology and its potential feedback to temperature in the Northern Hemisphere over the last three decades. Environ Res Lett 8(1):014008

Popova V (2007) Winter snow depth variability over northern Eurasia in relation to recent atmospheric circulation changes. Int J Climatol 27(13):1721–1733

Prabhu A, Oh J, Kim IW, Kripalani R, Mitra A, Pandithurai G (2017) Summer monsoon rainfall variability over North East regions of India and its association with Eurasian snow, Atlantic sea surface temperature and Arctic Oscillation. Climate Dyn 49(7–8):2545–2556

Qu X, Hall A (2007) What controls the strength of snow-albedo feedback? J Climate 20(15):3971–3981. https://doi.org/10.1175/jcli4186.1

Qu X, Hall A (2013) On the persistent spread in snow-albedo feedback. Climate Dyn 42(1–2):69–81. https://doi.org/10.1007/s00382-013-1774-0

Räisänen J (2008) Warmer climate: less or more snow? Climate Dyn 30(2–3):307–319

Rienecker MM, Suarez MJ, Gelaro R, Todling R, Bacmeister J, Liu E, Bosilovich MG, Schubert SD, Takacs L, Kim GK et al (2011) MERRA: NASA’s modern-era retrospective analysis for research and applications. J Climate 24(14):3624–3648

Robinson DA, Frei A (2000) Seasonal variability of Northern Hemisphere snow extent using visible satellite data. Prof Geogr 52(2):307–315

Robinson DA, Dewey KF, Heim RR Jr (1993) Global snow cover monitoring: an update. Bull Am Meteorol Soc 74(9):1689–1696

Rodell M, Houser PR, Jambor U, Gottschalck J, Mitchell K, Meng CJ, Arsenault K, Cosgrove B, Radakovich J, Bosilovich M, Entin JK, Walker JP, Lohmann D, Toll D (2004) The global land data assimilation system. Bull Am Meteorol Soc 85(3):381–394. https://doi.org/10.1175/BAMS-85-3-381

Rodell M, Beaudoing HK (2013) GLDAS Noah Land Surface Model L4 Monthly 0.25 \(\times\) 0.25 Degree Version 2.0. Goddard Earth Sciences Data and Information Services Center, Greenbelt

Roesch A (2006) Evaluation of surface albedo and snow cover in AR4 coupled climate models. J Geophys Res Atmos 111(D15)

Rupp DE, Mote PW, Bindoff NL, Stott PA, Robinson DA (2013) Detection and attribution of observed changes in Northern Hemisphere spring snow cover. J Climate 26(18):6904–6914. https://doi.org/10.1175/jcli-d-12-00563.1

Rydzik M, Desai AR (2014) Relationship between snow extent and midlatitude disturbance centers. J Climate 27(8):2971–2982

Saha S, Moorthi S, Pan HL, Wu X, Wang J, Nadiga S, Tripp P, Kistler R, Woollen J, Behringer D, Liu H, Stokes D, Grumbine R, Gayno G, Wang J, Hou YT, Chuang H, Juang HMH, Sela J, Iredell M, Treadon R, Kleist D, Delst PV, Keyser D, Derber J, Ek M, Meng J, Wei H, Yang R, Lord S, van den Dool H, Kumar A, Wang W, Long C, Chelliah M, Xue Y, Huang B, Schemm JK, Ebisuzaki W, Lin R, Xie P, Chen M, Zhou S, Higgins W, Zou CZ, Liu Q, Chen Y, Han Y, Cucurull L, Reynolds RW, Rutledge G, Goldberg M (2010) The NCEP climate forecast system reanalysis. Bull Am Meteorol Soc 91(8):1015–1058. https://doi.org/10.1175/2010bams3001.1

Schmidt GA, Ruedy R, Hansen JE, Aleinov I, Bell N, Bauer M, Bauer S, Cairns B, Canuto V, Cheng Y, Del Genio A, Faluvegi G, Friend AD, Hall TM, Hu Y, Kelley M, Kiang NY, Koch D, Lacis AA, Lerner J, Lo KK, Miller RL, Nazarenko L, Oinas V, Perlwitz JP, Perlwitz J, Rind D, Romanou A, Russell GL, Sato M, Shindell DT, Stone PH, Sun S, Tausnev N, Thresher D, Yao MS (2006) Present day atmospheric simulations using GISS ModelE: comparison to in-situ, satellite and reanalysis data. J Climate 19:153–192. https://doi.org/10.1175/JCLI3612.1

Screen JA, Simmonds I (2013) Exploring links between Arctic amplification and mid-latitude weather. Geophys Res Lett 40(5):959–964

Senan R, Orsolini YJ, Weisheimer A, Vitart F, Balsamo G, Stockdale TN, Dutra E, Doblas-Reyes FJ, Basang D (2016) Impact of springtime Himalayan-Tibetan Plateau snowpack on the onset of the Indian summer monsoon in coupled seasonal forecasts. Climate Dyn 47(9–10):2709–2725

Serreze MC, Barry RG (2011) Processes and impacts of Arctic amplification: a research synthesis. Glob Planet Change 77(1–2):85–96

Sicart JE, Pomeroy J, Essery R, Bewley D (2006) Incoming longwave radiation to melting snow: observations, sensitivity and estimation in northern environments. Hydrol Process 20(17):3697–3708

Singh D, Flanner M, Perket J (2015) The global land shortwave cryosphere radiative effect during the MODIS era. Cryosphere 9(6)

Slater AG, Schlosser CA, Desborough C, Pitman A, Henderson-Sellers A, Robock A, Vinnikov KY, Entin J, Mitchell K, Chen F et al (2001) The representation of snow in land surface schemes: results from PILPS 2(d). J Hydrometeorol 2(1):7–25

Slater AG, Lawrence DM, Koven CD (2017) Process-level model evaluation: a snow and heat transfer metric. Cryosphere 11(2):989–996

Stewart IT (2009) Changes in snowpack and snowmelt runoff for key mountain regions. Hydrol Process 23(1):78–94

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res Atmos 106(D7):7183–7192

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93(4):485–498

Terzago S, von Hardenberg J, Palazzi E, Provenzale A (2014) Snowpack changes in the Hindu Kush-Karakoram-Himalaya from CMIP5 global climate models. J Hydrometeorol 15(6):2293–2313

Thackeray CW, Fletcher CG, Mudryk LR, Derksen C (2016) Quantifying the uncertainty in historical and future simulations of Northern Hemisphere spring snow cover. J Climate 29(23):8647–8663. https://doi.org/10.1175/jcli-d-16-0341.1

Thackeray CW, Qu X, Hall A (2018) Why do models produce spread in snow albedo feedback? Geophys Res Lett 45(12):6223–6231

Tilinina N, Gulev SK, Rudeva I, Koltermann P (2013) Comparing cyclone life cycle characteristics and their interannual variability in different reanalyses. J Climate 26(17):6419–6438

Vionnet V, Brun E, Morin S, Boone A, Faroux S, Le Moigne P, Martin E, Willemet J (2012) The detailed snowpack scheme Crocus and its implementation in SURFEX v7.2. Geosci Model Dev 5:773–791

Voldoire A, Sanchez-Gomez E, Salas y Mélia D, Decharme B, Cassou C, Sénési S, Valcke S, Beau I, Alias A, Chevallier M, Déqué M, Deshayes J, Douville H, Fernandez E, Madec G, Maisonnave E, Moine MP, Planton S, Saint-Martin D, Szopa S, Tyteca S, Alkama R, Belamari S, Braun A, Coquart L, Chauvin F (2012) The CNRM-CM5.1 global climate model: description and basic evaluation. Climate Dyn 40(9–10):2091–2121. https://doi.org/10.1007/s00382-011-1259-y

Volodin E, Dianskii A, Gusev A (2010) Simulating present-day climate with the INMCM4.0 coupled model of the atmospheric and oceanic general circulations. Izvestiya Atmos Ocean Phys 46:414–431. https://doi.org/10.1134/S000143381004002X

Wang L, Derksen C, Brown R, Markus T (2013) Recent changes in pan Arctic melt onset from satellite passive microwave measurements. Geophys Res Lett 40(3):522–528

Watanabe M, Suzuki T, O’ishi R, Komuro Y, Watanabe S, Emori S, Takemura T, Chikira M, Ogura T, Sekiguchi M et al (2010) Improved climate simulation by MIROC5: mean states, variability, and climate sensitivity. J Climate 23(23):6312–6335

Watanabe S, Hajima T, Sudo K, Nagashima T, Takemura T, Okajima H, Nozawa T, Kawase H, Abe M, Yokohata T, Ise T, Sato H, Kato E, Takata K, Emori S, Kawamiya M (2011) MIROC-ESM 2010: model description and basic results of CMIP5-20c3m experiments. Geosci Model Dev 4(4):845–872. https://doi.org/10.5194/gmd-4-845-2011

Webster MA, Parker C, Boisvert L, Kwok R (2019) The role of cyclone activity in snow accumulation on Arctic sea ice. Nat Commun 10(1):1–12