Abstract

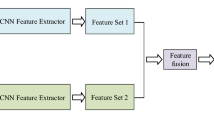

Advances in remote sensing technology enable the acquisition of very high-resolution (VHR) images, and classifying VHR scenes has become a challenging problem. In this paper, we propose a model for VHR scene classification. First, convolutional neural networks (CNNs) with pre-trained weights are used as deep feature extractors to extract global and local CNN features from the original VHR images. Second, a spectral residual-based saliency detection algorithm is used to compute a saliency map. Saliency features are then extracted from this map with CNNs, which makes the representation more robust, especially for images that contain salient objects. Third, rather than using the raw deep features, we represent each VHR scene with fused features: discriminant correlation analysis (DCA) is used to fuse the global and local CNN features with the saliency features. DCA is more suitable and cost-effective than traditional fusion techniques. Finally, we propose an enhanced multilayer perceptron to classify the fused representation. Experiments on four widely used datasets (UC-Merced, WHU-RS, Aerial Image, and NWPU-RESISC45) show that the proposed model outperforms state-of-the-art scene classification models.
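The saliency step builds on the spectral residual method of Hou and Zhang (2007): take the log amplitude spectrum of the image, subtract its locally averaged version to obtain the "spectral residual", and transform back with the original phase. The following is a minimal NumPy/SciPy sketch of that method, not the authors' code. It assumes a single-channel image that has already been downscaled (Hou and Zhang work at roughly 64 pixels in width). The 3 × 3 mean filter and Gaussian σ = 2.5 are assumed defaults rather than settings reported in this paper, and the function name `spectral_residual_saliency` is hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, uniform_filter


def spectral_residual_saliency(gray, avg_size=3, sigma=2.5):
    """Spectral residual saliency map of a 2-D grayscale image, scaled to [0, 1].

    Hypothetical helper; avg_size and sigma are assumed defaults.
    """
    img = np.asarray(gray, dtype=np.float64)
    spectrum = np.fft.fft2(img)
    log_amplitude = np.log(np.abs(spectrum) + 1e-8)  # L(f); epsilon avoids log(0)
    phase = np.angle(spectrum)  # P(f)
    # Spectral residual R(f) = L(f) - h_n * L(f), with h_n an n x n averaging filter.
    residual = log_amplitude - uniform_filter(log_amplitude, size=avg_size, mode="nearest")
    # Back to the spatial domain with the original phase; the squared magnitude is the raw map.
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    saliency = gaussian_filter(saliency, sigma=sigma)  # Gaussian smoothing g(x)
    # Min-max normalize so the map can be handled like an ordinary image downstream.
    saliency -= saliency.min()
    peak = saliency.max()
    return saliency / peak if peak > 0 else saliency


# Example: a 64 x 64 noisy image with a brighter square in the middle.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
image[24:40, 24:40] += 2.0
saliency_map = spectral_residual_saliency(image)
```

To obtain saliency features, a map like this could be replicated to three channels and passed through the same pretrained CNN. The abstract does not say how the authors prepare the map before feature extraction, so that step is left open here.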
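DCA (Haghighat et al., 2016) proceeds in three stages. First, it transforms each feature set so that its between-class scatter matrix becomes the identity. Second, it diagonalizes the between-set covariance of the two transformed sets with an SVD. Finally, it fuses the results by concatenation or summation. The NumPy sketch below follows that outline and is not the implementation used in this paper. It rests on several assumptions: features are stored column-wise as (dimension × samples) arrays, both sets are mean-centered first, and eigenvalues below a relative tolerance of 1e-6 are treated as zero. The names `dca_fuse`, `_between_class_whitener`, `global_feats`, and `saliency_feats` are hypothetical, and the example inputs are random stand-ins for real CNN features.

```python
import numpy as np


def _between_class_whitener(feats, labels, tol=1e-6):
    """Return W_b (p x r) with W_b.T @ S_b @ W_b = I, for features laid out as (p, n)."""
    overall_mean = feats.mean(axis=1)
    # Phi_b has one column per class, sqrt(n_i) * (class mean - overall mean), so S_b = Phi_b @ Phi_b.T.
    phi = np.column_stack([
        np.sqrt(np.sum(labels == c)) * (feats[:, labels == c].mean(axis=1) - overall_mean)
        for c in np.unique(labels)
    ])
    # Eigen-decompose the small c x c matrix Phi_b.T @ Phi_b instead of the p x p matrix S_b.
    eig_vals, eig_vecs = np.linalg.eigh(phi.T @ phi)
    keep = eig_vals > tol * eig_vals.max()  # drop (near-)zero eigenvalues; at most c - 1 remain
    eig_vals, eig_vecs = eig_vals[keep], eig_vecs[:, keep]
    order = np.argsort(eig_vals)[::-1]  # most significant eigenvectors first
    eig_vals, eig_vecs = eig_vals[order], eig_vecs[:, order]
    # Map to unit-length eigenvectors of S_b, then scale by Lambda^{-1/2} to unitize S_b.
    sb_vecs = phi @ eig_vecs
    sb_vecs /= np.linalg.norm(sb_vecs, axis=0)
    return sb_vecs / np.sqrt(eig_vals)


def dca_fuse(x, y, labels, mode="concat"):
    """Fuse x (p x n) and y (q x n) with discriminant correlation analysis.

    Returns (fused, xs, ys): xs and ys are the r x n transformed sets; fused is
    their concatenation (2r x n) or their sum (r x n).
    """
    labels = np.asarray(labels)
    # Assumption: mean-center each set so X' Y'^T is a proper between-set covariance.
    x = x - x.mean(axis=1, keepdims=True)
    y = y - y.mean(axis=1, keepdims=True)
    wbx = _between_class_whitener(x, labels)
    wby = _between_class_whitener(y, labels)
    r = min(wbx.shape[1], wby.shape[1])  # both sets must share the same dimension r
    xp = wbx[:, :r].T @ x  # (r, n) with unit between-class scatter
    yp = wby[:, :r].T @ y
    # Diagonalize the between-set covariance S'_xy = X' Y'^T = U Sigma V^T.
    u, s, vt = np.linalg.svd(xp @ yp.T)
    wcx = u / np.sqrt(s)  # U Sigma^{-1/2}
    wcy = vt.T / np.sqrt(s)  # V Sigma^{-1/2}
    xs, ys = wcx.T @ xp, wcy.T @ yp
    if mode == "concat":
        fused = np.vstack([xs, ys])
    elif mode == "sum":
        fused = xs + ys
    else:
        raise ValueError("mode must be 'concat' or 'sum'")
    return fused, xs, ys


# Example with random stand-ins: 4 classes, 15 samples each.
rng = np.random.default_rng(1)
labels = np.repeat(np.arange(4), 15)
global_feats = rng.normal(size=(20, 60)) + np.repeat(rng.normal(size=(20, 4)), 15, axis=1)
saliency_feats = rng.normal(size=(15, 60)) + np.repeat(rng.normal(size=(15, 4)), 15, axis=1)
fused, xs, ys = dca_fuse(global_feats, saliency_feats, labels)
```

After the transform, `xs @ ys.T` equals the r × r identity up to rounding. Each transformed feature in one set is therefore correlated only with its counterpart in the other set. Because r is at most c − 1 for c classes, the fused vector is much shorter than the raw CNN features. This is one plausible reading of the "cost-effective" claim above.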

About this article

Cite this article

Shawky, O.A., Hagag, A., El-Dahshan, ES.A. et al. A very high-resolution scene classification model using transfer deep CNNs based on saliency features. SIViP 15, 817–825 (2021). https://doi.org/10.1007/s11760-020-01801-5

DOI: https://doi.org/10.1007/s11760-020-01801-5