Development of Automated Sleep Stage Classification System Using Multivariate Projection-Based Fixed Boundary Empirical Wavelet Transform and Entropy Features Extracted from Multichannel EEG Signals

Abstract

1. Introduction

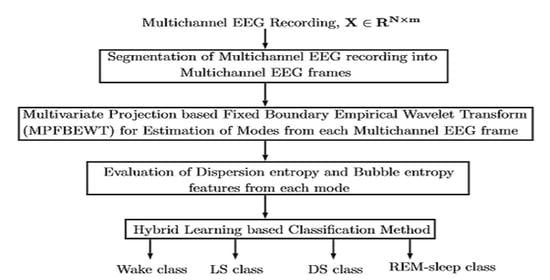

2. Multi-Channel EEG Database

3. Method

3.1. EEG Frame Evaluation

3.2. Multivariate Fixed Boundary-Based EWT Filter Bank

Algorithm 1: Evaluation of modes obtained from a multi-channel electroencephalogram (EEG) signal using the multivariate projection-based fixed boundary empirical wavelet transform (MPFBEWT) filter bank.

3.3. Entropy Features Extraction

3.4. Hybrid Learning based Classifier

Algorithm 2: Hybrid learning classifier algorithm for the classification of sleep stages.

4. Results and Discussion

- (i) We obtained higher classification performance than the spectral and time–frequency-based entropy features of EEG signals.

- (ii) The extracted multi-scale bubble entropy (BE) and dispersion entropy (DE) features are discriminative and yielded high classification accuracy.

- (iii) The proposed information-theoretic approach is simple and computationally less intensive.

- (iv) The developed hybrid learning model was evaluated on five sleep stage classification strategies.

- (v) We obtained a robust model, validated with both 10-fold cross-validation (CV) and hold-out strategies.
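To make the DE feature named in item (ii) concrete, the following is a minimal sketch of dispersion entropy as it is commonly defined (normal-CDF mapping to classes, then Shannon entropy of the dispersion patterns). It is not the authors' implementation: the parameter names `m` (embedding dimension), `c` (number of classes) and `d` (delay), their default values, and the two test signals are assumptions chosen for illustration only.

```python
import math
import random
from collections import Counter


def dispersion_entropy(x, m=2, c=3, d=1, normalize=True):
    """Dispersion entropy of a 1-D sequence (illustrative sketch, assumed defaults)."""
    x = [float(v) for v in x]
    n_patterns = len(x) - (m - 1) * d
    if n_patterns <= 0:
        raise ValueError("signal too short for the chosen m and d")
    mu = sum(x) / len(x)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in x) / len(x))
    if sigma == 0.0:
        return 0.0  # a constant signal produces a single pattern
    # Map each sample to (0, 1) with the normal CDF, then to a class in 1..c.
    classes = []
    for v in x:
        y = 0.5 * (1.0 + math.erf((v - mu) / (sigma * math.sqrt(2.0))))
        z = int(round(c * y + 0.5))
        classes.append(min(max(z, 1), c))
    # Count dispersion patterns of length m taken with delay d.
    counts = Counter(
        tuple(classes[i + j * d] for j in range(m)) for i in range(n_patterns)
    )
    # Shannon entropy of the pattern distribution.
    h = -sum((k / n_patterns) * math.log(k / n_patterns) for k in counts.values())
    return h / math.log(c ** m) if normalize else h


# Hypothetical test signals: a slow sine wave and Gaussian noise.
rng = random.Random(0)
sine = [math.sin(2.0 * math.pi * i / 200.0) for i in range(2000)]
noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]
de_sine = dispersion_entropy(sine)
de_noise = dispersion_entropy(noise)
```

With the normalized form, a regular signal such as the sine wave should score lower than the noise, which is the property that makes the measure usable as a complexity feature.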

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Tripathy, R.; Gajbhiye, P.; Acharya, U.R. Automated sleep apnea detection from cardio-pulmonary signal using bivariate fast and adaptive EMD coupled with cross time-frequency analysis. Comput. Biol. Med. 2020, 120, 103769.

2. Singh, H.; Tripathy, R.K.; Pachori, R.B. Detection of sleep apnea from heart beat interval and ECG derived respiration signals using sliding mode singular spectrum analysis. Digit. Signal Process. 2020, 104, 102796.

3. Tripathy, R.; Acharya, U.R. Use of features from RR-time series and EEG signals for automated classification of sleep stages in deep neural network framework. Biocybern. Biomed. Eng. 2018, 38, 890–902.

4. Šušmáková, K.; Krakovská, A. Discrimination ability of individual measures used in sleep stages classification. Artif. Intell. Med. 2008, 44, 261–277.

5. Müller, B.; Gäbelein, W.D.; Schulz, H. A taxonomic analysis of sleep stages. Sleep 2006, 29, 967–974.

6. Jurysta, F.; Van De Borne, P.; Migeotte, P.F.; Dumont, M.; Lanquart, J.P.; Degaute, J.P.; Linkowski, P. A study of the dynamic interactions between sleep EEG and heart rate variability in healthy young men. Clin. Neurophysiol. 2003, 114, 2146–2155.

7. Acharya, R.; Faust, O.; Kannathal, N.; Chua, T.; Laxminarayan, S. Non-linear analysis of EEG signals at various sleep stages. Comput. Methods Programs Biomed. 2005, 80, 37–45.

8. Brunner, D.P.; Dijk, D.J.; Tobler, I.; Borbély, A.A. Effect of partial sleep deprivation on sleep stages and EEG power spectra: Evidence for non-REM and REM sleep homeostasis. Electroencephalogr. Clin. Neurophysiol. 1990, 75, 492–499.

9. Rodríguez-Sotelo, J.L.; Osorio-Forero, A.; Jiménez-Rodríguez, A.; Cuesta-Frau, D.; Cirugeda-Roldán, E.; Peluffo, D. Automatic sleep stages classification using EEG entropy features and unsupervised pattern analysis techniques. Entropy 2014, 16, 6573–6589.

10. Collop, N.A. Scoring variability between polysomnography technologists in different sleep laboratories. Sleep Med. 2002, 3, 43–47.

11. Tripathy, R. Application of intrinsic band function technique for automated detection of sleep apnea using HRV and EDR signals. Biocybern. Biomed. Eng. 2018, 38, 136–144.

12. Moser, D.; Anderer, P.; Gruber, G.; Parapatics, S.; Loretz, E.; Boeck, M.; Kloesch, G.; Heller, E.; Schmidt, A.; Danker-Hopfe, H.; et al. Sleep classification according to AASM and Rechtschaffen & Kales: Effects on sleep scoring parameters. Sleep 2009, 32, 139–149.

13. Koley, B.; Dey, D. An ensemble system for automatic sleep stage classification using single channel EEG signal. Comput. Biol. Med. 2012, 42, 1186–1195.

14. Huang, C.S.; Lin, C.L.; Yang, W.Y.; Ko, L.W.; Liu, S.Y.; Lin, C.T. Applying the fuzzy c-means based dimension reduction to improve the sleep classification system. In Proceedings of the 2013 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Hyderabad, India, 7–10 July 2013; pp. 1–5.

15. Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

16. Hassan, A.R.; Bhuiyan, M.I.H. Computer-aided sleep staging using complete ensemble empirical mode decomposition with adaptive noise and bootstrap aggregating. Biomed. Signal Process. Control 2016, 24, 1–10.

17. Michielli, N.; Acharya, U.R.; Molinari, F. Cascaded LSTM recurrent neural network for automated sleep stage classification using single-channel EEG signals. Comput. Biol. Med. 2019, 106, 71–81.

18. Aboalayon, K.A.I.; Faezipour, M.; Almuhammadi, W.S.; Moslehpour, S. Sleep stage classification using EEG signal analysis: A comprehensive survey and new investigation. Entropy 2016, 18, 272.

19. Boostani, R.; Karimzadeh, F.; Nami, M. A comparative review on sleep stage classification methods in patients and healthy individuals. Comput. Methods Programs Biomed. 2017, 140, 77–91.

20. Song, I.; Ji, Y.; Cho, B.; Ku, J.; Chee, Y.; Lee, J.; Lee, S.; Kim, I.Y.; Kim, S.I. Multifractal analysis of sleep EEG dynamics in humans. In Proceedings of the 2007 3rd International IEEE/EMBS Conference on Neural Engineering, Kohala Coast, HI, USA, 2–5 May 2007; pp. 546–549.

21. Fraiwan, L.; Lweesy, K.; Khasawneh, N.; Wenz, H.; Dickhaus, H. Automated sleep stage identification system based on time–frequency analysis of a single EEG channel and random forest classifier. Comput. Methods Programs Biomed. 2012, 108, 10–19.

22. Tsinalis, O.; Matthews, P.M.; Guo, Y.; Zafeiriou, S. Automatic sleep stage scoring with single-channel EEG using convolutional neural networks. arXiv 2016, arXiv:1610.01683.

23. Lajnef, T.; Chaibi, S.; Ruby, P.; Aguera, P.E.; Eichenlaub, J.B.; Samet, M.; Kachouri, A.; Jerbi, K. Learning machines and sleeping brains: Automatic sleep stage classification using decision-tree multi-class support vector machines. J. Neurosci. Methods 2015, 250, 94–105.

24. Andreotti, F.; Phan, H.; Cooray, N.; Lo, C.; Hu, M.T.; De Vos, M. Multichannel sleep stage classification and transfer learning using convolutional neural networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 171–174.

25. Jain, S.; Panda, R.; Tripathy, R.K. Multivariate sliding mode singular spectrum analysis for the decomposition of multisensor timeseries. IEEE Sens. Lett. 2020.

26. Park, C.; Looney, D.; ur Rehman, N.; Ahrabian, A.; Mandic, D.P. Classification of motor imagery BCI using multivariate empirical mode decomposition. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 21, 10–22.

27. Bhattacharyya, A.; Pachori, R.B. A multivariate approach for patient-specific EEG seizure detection using empirical wavelet transform. IEEE Trans. Biomed. Eng. 2017, 64, 2003–2015.

28. Gilles, J. Empirical wavelet transform. IEEE Trans. Signal Process. 2013, 61, 3999–4010.

29. Rehman, N.; Mandic, D.P. Multivariate empirical mode decomposition. Proc. R. Soc. A Math. Phys. Eng. Sci. 2010, 466, 1291–1302.

30. Thirumalaisamy, M.R.; Ansell, P.J. Fast and adaptive empirical mode decomposition for multidimensional, multivariate signals. IEEE Signal Process. Lett. 2018, 25, 1550–1554.

31. Kannathal, N.; Choo, M.L.; Acharya, U.R.; Sadasivan, P. Entropies for detection of epilepsy in EEG. Comput. Methods Programs Biomed. 2005, 80, 187–194.

32. Chen, T.; Ju, S.; Yuan, X.; Elhoseny, M.; Ren, F.; Fan, M.; Chen, Z. Emotion recognition using empirical mode decomposition and approximation entropy. Comput. Electr. Eng. 2018, 72, 383–392.

33. Manis, G.; Aktaruzzaman, M.; Sassi, R. Bubble entropy: An entropy almost free of parameters. IEEE Trans. Biomed. Eng. 2017, 64, 2711–2718.

34. Rostaghi, M.; Azami, H. Dispersion entropy: A measure for time-series analysis. IEEE Signal Process. Lett. 2016, 23, 610–614.

35. Tripathy, R.K.; Paternina, M.R.; Arrieta, J.G.; Zamora-Méndez, A.; Naik, G.R. Automated detection of congestive heart failure from electrocardiogram signal using Stockwell transform and hybrid classification scheme. Comput. Methods Programs Biomed. 2019, 173, 53–65.

36. Ghosh, S.K.; Ponnalagu, R.; Tripathy, R.; Acharya, U.R. Automated detection of heart valve diseases using chirplet transform and multiclass composite classifier with PCG signals. Comput. Biol. Med. 2020, 118, 103632.

37. Terzano, M.G.; Parrino, L.; Sherieri, A.; Chervin, R.; Chokroverty, S.; Guilleminault, C.; Hirshkowitz, M.; Mahowald, M.; Moldofsky, H.; Rosa, A.; et al. Atlas, rules, and recording techniques for the scoring of cyclic alternating pattern (CAP) in human sleep. Sleep Med. 2001, 2, 537–553.

38. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

39. Hu, Y.; Li, F.; Li, H.; Liu, C. An enhanced empirical wavelet transform for noisy and non-stationary signal processing. Digit. Signal Process. 2017, 60, 220–229.

40. Panda, R.; Jain, S.; Tripathy, R.; Acharya, U.R. Detection of shockable ventricular cardiac arrhythmias from ECG signals using FFREWT filter-bank and deep convolutional network. Comput. Biol. Med. 2020, 124, 103939.

41. Gajbhiye, P.; Tripathy, R.K.; Pachori, R.B. Elimination of ocular artifacts from single channel EEG signals using FBSE-EWT based rhythms. IEEE Sens. J. 2019, 20, 3687–3696.

42. Cajochen, C.; Dijk, D.J. Electroencephalographic activity during wakefulness, rapid eye movement and non-rapid eye movement sleep in humans: Comparison of their circadian and homeostatic modulation. Sleep Biol. Rhythms 2003, 1, 85–95.

43. Amthor, F. Neuroscience for Dummies; John Wiley & Sons: Hoboken, NJ, USA, 2016.

44. White, J.; Drinnan, M.; Smithson, A.; Griffiths, C.; Gibson, G. Respiratory muscle activity during rapid eye movement (REM) sleep in patients with chronic obstructive pulmonary disease. Thorax 1995, 50, 376–382.

45. Cai, T.T.; Wang, L. Orthogonal matching pursuit for sparse signal recovery with noise. IEEE Trans. Inf. Theory 2011, 57, 4680–4688.

46. Chapman, R.M.; Armington, J.C.; Bragdon, H.R. A quantitative survey of kappa and alpha EEG activity. Electroencephalogr. Clin. Neurophysiol. 1962, 14, 858–868.

47. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

48. Deng, X.; Liu, Q.; Deng, Y.; Mahadevan, S. An improved method to construct basic probability assignment based on the confusion matrix for classification problem. Inf. Sci. 2016, 340, 250–261.

49. Cuevas, A.; Febrero, M.; Fraiman, R. An anova test for functional data. Comput. Stat. Data Anal. 2004, 47, 111–122.

50. Cuesta-Frau, D. Slope Entropy: A New Time Series Complexity Estimator Based on Both Symbolic Patterns and Amplitude Information. Entropy 2019, 21, 1167.

51. Li, P.; Liu, C.; Li, K.; Zheng, D.; Liu, C.; Hou, Y. Assessing the complexity of short-term heartbeat interval series by distribution entropy. Med. Biol. Eng. Comput. 2015, 53, 77–87.

52. Tripathy, R.K.; Deb, S.; Dandapat, S. Analysis of physiological signals using state space correlation entropy. Healthc. Technol. Lett. 2017, 4, 30–33.

53. Viswabhargav, C.S.; Tripathy, R.; Acharya, U.R. Automated detection of sleep apnea using sparse residual entropy features with various dictionaries extracted from heart rate and EDR signals. Comput. Biol. Med. 2019, 108, 20–30.

| Classes | Wake | S1-Sleep | S2-Sleep | S3-Sleep | S4-Sleep | REM | Total |
|---|---|---|---|---|---|---|---|
| Number of Frames | 2613 | 1537 | 4955 | 2707 | 2601 | 2947 | 17360 |
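The result tables in this article report class sets such as Wake/NREM/REM and W/LS/DS/REM, which implies that the six annotated stages are merged for the coarser classification strategies. The sketch below shows how the frame counts from the table above would combine under one common grouping. The groupings themselves (for example LS = S1 + S2, DS = S3 + S4, and a two-class sleep versus wake split) are assumptions based on conventional sleep staging practice, not definitions taken from the article.

```python
# Frame counts per annotated stage, copied from the table above.
FRAME_COUNTS = {
    "Wake": 2613, "S1": 1537, "S2": 4955,
    "S3": 2707, "S4": 2601, "REM": 2947,
}

# Assumed (hypothetical) groupings for the 2- to 6-class strategies.
GROUPINGS = {
    2: {"Wake": ["Wake"], "Sleep": ["S1", "S2", "S3", "S4", "REM"]},
    3: {"Wake": ["Wake"], "NREM": ["S1", "S2", "S3", "S4"], "REM": ["REM"]},
    4: {"Wake": ["Wake"], "LS": ["S1", "S2"], "DS": ["S3", "S4"], "REM": ["REM"]},
    5: {"Wake": ["Wake"], "S1": ["S1"], "S2": ["S2"],
        "S3": ["S3", "S4"], "REM": ["REM"]},
    6: {stage: [stage] for stage in FRAME_COUNTS},
}


def merged_counts(counts, grouping):
    """Sum the per-stage frame counts inside each merged class."""
    return {label: sum(counts[s] for s in stages)
            for label, stages in grouping.items()}


MERGED = {n: merged_counts(FRAME_COUNTS, g) for n, g in GROUPINGS.items()}

for n_classes, counts in MERGED.items():
    total = sum(counts.values())
    shares = {k: round(100.0 * v / total, 1) for k, v in counts.items()}
    print(n_classes, counts, shares)
```

Under these assumed groupings the class balance changes noticeably between strategies, which is one reason a chance-corrected measure such as the kappa score is reported alongside accuracy.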

| True/Predicted | W | LS | DS | REM |
|---|---|---|---|---|
| W | | | | |
| LS | | | | |
| DS | | | | |
| REM | | | | |
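The overall accuracy and kappa score reported in the tables of this article can both be derived from a confusion matrix of this shape (rows are true classes, columns are predicted classes). The following is a minimal sketch of that computation using Cohen's kappa. The function name and the example matrix are hypothetical: the numbers are invented for illustration and are not results from the article.

```python
def confusion_metrics(cm):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    cm[i][j] is the number of frames of true class i predicted as class j.
    Kappa is undefined when the chance agreement equals 1 (single-class data).
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    # Observed agreement: fraction of frames on the diagonal.
    observed = sum(cm[i][i] for i in range(k)) / total
    # Chance agreement from the row (true) and column (predicted) marginals.
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical 4-class matrix in the order W, LS, DS, REM (invented numbers).
example_cm = [
    [80, 10, 2, 8],
    [12, 150, 20, 18],
    [1, 25, 120, 4],
    [9, 14, 3, 74],
]
accuracy, kappa = confusion_metrics(example_cm)
```

Because kappa subtracts the agreement expected by chance, it is always lower than the raw accuracy whenever the classifier is imperfect, which matches the pattern of accuracy and kappa pairs in the parameter table later in the article.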

| Cross-Validation | Accuracy (%) | Sensitivity (%) | Specificity (%) | Kappa Score |
|---|---|---|---|---|
| Hold-out CV | | | | |
| 10-fold CV | | | | |
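The two validation strategies named in the table rows can be sketched as index splits over the 17360 frames. This is an illustrative outline only: the shuffling, the seed, and in particular the 30% hold-out fraction are assumptions, since the split proportions are not stated in this part of the article.

```python
import random


def holdout_split(n, test_fraction=0.3, seed=0):
    """Shuffle indices 0..n-1 and split once into (train, test)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = int(round(n * test_fraction))
    return idx[n_test:], idx[:n_test]


def kfold_splits(n, k=10, seed=0):
    """Yield (train, test) index lists; every index is tested exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


N_FRAMES = 17360  # total number of frames in the database table
train_idx, test_idx = holdout_split(N_FRAMES)
fold_sizes = [len(test) for _, test in kfold_splits(N_FRAMES)]
```

A per-fold metric would then be averaged over the ten test folds, whereas the hold-out strategy yields a single estimate from one split.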

| Cross-Validation | Overall Accuracy (%) | Accuracy of Wake (%) | Accuracy of NREM (%) | Accuracy of REM (%) | Kappa Score |
|---|---|---|---|---|---|
| Hold-out CV | | | | | |
| 10-fold CV | | | | | |

| Cross-Validation | Overall Accuracy (%) | Accuracy of Wake (%) | Accuracy of LS (%) | Accuracy of DS (%) | Accuracy of REM (%) | Kappa Score |
|---|---|---|---|---|---|---|
| Hold-out CV | | | | | | |
| 10-fold CV | | | | | | |

| Cross-Validation | Overall Accuracy (%) | Accuracy of Wake (%) | Accuracy of S1-Sleep (%) | Accuracy of S2-Sleep (%) | Accuracy of S3-Sleep (%) | Accuracy of REM (%) | Kappa Score |
|---|---|---|---|---|---|---|---|
| Hold-out CV | | | | | | | |
| 10-fold CV | | | | | | | |

| Cross-Validation | Overall Accuracy (%) | Accuracy of Wake (%) | Accuracy of S1-Sleep (%) | Accuracy of S2-Sleep (%) | Accuracy of S3-Sleep (%) | Accuracy of S4-Sleep (%) | Accuracy of REM (%) | Kappa Score |
|---|---|---|---|---|---|---|---|---|
| Hold-out CV | | | | | | | | |
| 10-fold CV | | | | | | | | |

| L | a | d | Validation Set Overall Accuracy (%) | Validation Set Kappa Score | Test Set Overall Accuracy (%) | Test Set Kappa Score |
|---|---|---|---|---|---|---|
| 10 | 2 | 1 | 72.18 | 0.631 | 72.72 | 0.637 |
| 10 | 3 | 2 | 70.77 | 0.619 | 71.34 | 0.622 |
| 8 | 2 | 1 | 71.62 | 0.630 | 71.77 | 0.631 |
| 8 | 3 | 2 | 69.90 | 0.608 | 69.70 | 0.605 |
| 5 | 2 | 1 | 59.48 | 0.466 | 62.77 | 0.510 |
| 5 | 3 | 2 | 51.30 | 0.372 | 48.31 | 0.336 |

| Sparsity Level | Nearest Neighbors (nn) | Validation Set Overall Accuracy (%) | Test Set Overall Accuracy (%) |
|---|---|---|---|
| 2 | 1 | 37.96 | 39.78 |
| 4 | 2 | 56.11 | 56.87 |
| 6 | 3 | 60.67 | 62.08 |
| 8 | 4 | 65.38 | 65.97 |
| 10 | 5 | 65.62 | 68.04 |
| 12 | 6 | 69.38 | 70.11 |
| 14 | 7 | 69.70 | 71.01 |
| 16 | 8 | 70.12 | 70.97 |
| 18 | 9 | 70.18 | 72.48 |
| 20 | 10 | 72.18 | 72.72 |
| 22 | 11 | 71.54 | 71.66 |

| Feature Extraction Methods | Classifier Used | Overall Accuracy (%) |
|---|---|---|
| Spectral features evaluated from different rhythms of multi-channel EEG signals [14] | MSVM | 68.24 |
| Different non-linear features extracted from multi-channel EEG signals [9] | Unsupervised learning (J-means clustering) | 57.40 |
| Time domain and spectral features extracted from multi-channel EEG signals [23] | DSVM | 74.80 |
| Learnable features evaluated from multi-channel EEG signals in convolution layer stages [24] | Transfer learning using CNN | 67.70 |
| Renyi entropy features computed from the time-frequency representation of single-channel EEG signals [21] | Random forest | 73.21 |
| Multi-scale DE and BE features extracted from multi-channel EEG signals (proposed work) | Hybrid learning | 73.88 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tripathy, R.K.; Ghosh, S.K.; Gajbhiye, P.; Acharya, U.R. Development of Automated Sleep Stage Classification System Using Multivariate Projection-Based Fixed Boundary Empirical Wavelet Transform and Entropy Features Extracted from Multichannel EEG Signals. Entropy 2020, 22, 1141. https://doi.org/10.3390/e22101141
