Abstract

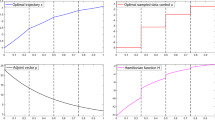

In the present paper we derive a Pontryagin maximum principle for general nonlinear optimal sampled-data control problems in the presence of running inequality state constraints. We obtain, in particular, a nonpositive averaged Hamiltonian gradient condition associated with an adjoint vector being a function of bounded variation. It is well known that theoretical and numerical difficulties may arise due to the possible pathological behavior of the adjoint vector (jumps and a singular part lying on parts of the optimal trajectory in contact with the boundary of the restricted state space). However, in our case with sampled-data controls, we prove that, under certain general hypotheses, the optimal trajectory activates the running inequality state constraints at most at the sampling times. Due to this so-called bouncing trajectory phenomenon, the adjoint vector experiences jumps at most at the sampling times (and thus finitely many jumps, at precisely known instants) and its singular part vanishes. Taking advantage of this information, we are able to implement an indirect numerical method which we use to solve three simple examples.

Notes

The terminology indirect numerical method is used in contrast with direct numerical method, which consists of a full discretization of the optimal control problem, resulting in a constrained finite-dimensional optimization problem that can be solved numerically with various standard optimization algorithms and techniques.

In particular we have opted for the use of the Ekeland variational principle in view of generalizations to the general time scale setting in further research works.

References

Ackermann, J.E.: Sampled-Data Control Systems: Analysis and Synthesis, Robust System Design. Springer, Berlin (1985)

Aronna, M.S., Bonnans, J.F., Goh, B.S.: Second order analysis of control-affine problems with scalar state constraint. Math. Program. 160(1–2, Ser. A), 115–147 (2016)

Åström, K.J.: On the choice of sampling rates in optimal linear systems. IBM Res. Eng. Stud. (1963)

Åström, K.J., Wittenmark, B.: Computer-Controlled Systems. Prentice Hall, Upper Saddle River (1997)

Bachman, G., Narici, L.: Functional Analysis. Dover Publications Inc., Mineola (2000). (Reprint of the 1966 original)

Bakir, T., Bonnard, B., Bourdin, L., Rouot, J.: Pontryagin-type conditions for optimal muscular force response to functional electrical stimulations. J. Optim. Theory Appl. 184(2), 581–602 (2020)

Bettiol, P., Frankowska, H.: Normality of the maximum principle for nonconvex constrained Bolza problems. J. Differ. Equ. 243(2), 256–269 (2007)

Bettiol, P., Frankowska, H.: Hölder continuity of adjoint states and optimal controls for state constrained problems. Appl. Math. Optim. 57(1), 125–147 (2008)

Bini, E., Buttazzo, G.M.: The optimal sampling pattern for linear control systems. IEEE Trans. Automat. Control 59(1), 78–90 (2014)

Boltyanskii, V.G.: Optimal Control of Discrete Systems. Wiley, New York (1978)

Bonnans, J.F., de la Vega, C.: Optimal control of state constrained integral equations. Set Valued Var. Anal. 18(3–4), 307–326 (2010)

Bonnans, J.F., de la Vega, C., Dupuis, X.: First- and second-order optimality conditions for optimal control problems of state constrained integral equations. J. Optim. Theory Appl. 159(1), 1–40 (2013)

Bonnans, J.F., Hermant, A.: No-gap second-order optimality conditions for optimal control problems with a single state constraint and control. Math. Program. 117(1–2, Ser. B), 21–50 (2009)

Bonnard, B., Faubourg, L., Launay, G., Trélat, E.: Optimal control with state constraints and the space shuttle re-entry problem. J. Dyn. Control Syst. 9(2), 155–199 (2003)

Bourdin, L.: Note on Pontryagin maximum principle with running state constraints and smooth dynamics: proof based on the Ekeland variational principle. Research notes—available on HAL (2016)

Bourdin, L., Dhar, G.: Continuity/constancy of the Hamiltonian function in a Pontryagin maximum principle for optimal sampled-data control problems with free sampling times. Math. Control Signals Syst. 31(4), 503–544 (2019)

Bourdin, L., Trélat, E.: Pontryagin maximum principle for finite dimensional nonlinear optimal control problems on time scales. SIAM J. Control Optim. 51(5), 3781–3813 (2013)

Bourdin, L., Trélat, E.: Pontryagin maximum principle for optimal sampled-data control problems. In: 16th IFAC Workshop on Control Applications of Optimization CAO’2015 (2015)

Bourdin, L., Trélat, E.: Optimal sampled-data control, and generalizations on time scales. Math. Control Relat. Fields 6(1), 53–94 (2016)

Bourdin, L., Trélat, E.: Linear-quadratic optimal sampled-data control problems: convergence result and Riccati theory. Autom. J. IFAC 79, 273–281 (2017)

Bressan, A., Piccoli, B.: Introduction to the Mathematical Theory of Control, volume 2 of AIMS Series on Applied Mathematics. American Institute of Mathematical Sciences (AIMS), Springfield (2007)

Burk, F.E.: A Garden of Integrals, volume 31 of the Dolciani Mathematical Expositions. Mathematical Association of America, Washington (2007)

Carothers, N.L.: Real Analysis. Cambridge University Press, Cambridge (2000)

Cesari, L.: Optimization—Theory and Applications, volume 17 of Applications of Mathematics (New York). Springer, New York (1983). (Problems with ordinary differential equations)

Cho, D.I., Abad, P.L., Parlar, M.: Optimal production and maintenance decisions when a system experience age-dependent deterioration. Optim. Control Appl. Methods 14(3), 153–167 (1993)

Clarke, F.H.: The generalized problem of Bolza. SIAM J. Control Optim. 14(4), 682–699 (1976)

Clarke, F.H.: Optimization and Nonsmooth Analysis, volume 5 of Classics in Applied Mathematics, 2nd edn. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1990)

Coddington, E.A., Levinson, N.: Theory of Ordinary Differential Equations. McGraw-Hill Book Company Inc, New York (1955)

Cots, O.: Geometric and numerical methods for a state constrained minimum time control problem of an electric vehicle. ESAIM Control Optim. Calc. Var. 23(4), 1715–1749 (2017)

Cots, O., Gergaud, J., Goubinat, D.: Direct and indirect methods in optimal control with state constraints and the climbing trajectory of an aircraft. Optim. Control Appl. Methods 39(1), 281–301 (2018)

Dmitruk, A.V.: On the development of Pontryagin’s maximum principle in the works of A. Ya. Dubovitskii and A. A. Milyutin. Control Cybern. 38(4A), 923–957 (2009)

Dmitruk, A.V., Kaganovich, A.M.: Maximum principle for optimal control problems with intermediate constraints. Comput. Math. Model. 22(2), 180–215 (2011). Translation of Nelineĭnaya Din. Upr. No. 6(2008), 101–136

Dmitruk, A.V., Osmolovskii, N.P.: Necessary conditions for a weak minimum in optimal control problems with integral equations subject to state and mixed constraints. SIAM J. Control Optim. 52(6), 3437–3462 (2014)

Dmitruk, A.V., Osmolovskii, N.P.: Necessary conditions for a weak minimum in a general optimal control problem with integral equations on a variable time interval. Math. Control Relat. Fields 7(4), 507–535 (2017)

Dmitruk, A.V., Osmolovskii, N.P.: A general Lagrange multipliers theorem and related questions. In: Control Systems and Mathematical Methods in Economics, volume 687 of Lecture Notes in Economics and Mathematical Systems, pp. 165–194. Springer, Cham (2018)

Dmitruk, A.V., Osmolovskii, N.P.: Proof of the maximum principle for a problem with state constraints by the V-change of time variable. Discrete Contin. Dyn. Syst. Ser. B 24(5), 2189–2204 (2019)

Dubovitskii, A.Y., Milyutin, A.A.: Extremum problems in the presence of restrictions. USSR Comput. Math. Math. Phys. 5(3), 1–80 (1965)

Ekeland, I.: On the variational principle. J. Math. Anal. Appl. 47, 324–353 (1974)

Evans, L.C.: An introduction to mathematical optimal control theory. Version 0.2, Lecture notes

Fadali, M.S., Visioli, A.: Digital Control Engineering: Analysis and Design. Elsevier, New York (2013)

Faraut, J.: Calcul intégral (L3M1). EDP Sciences, Les Ulis (2012)

Gamkrelidze, R.V.: Optimal control processes for bounded phase coordinates. Izv. Akad. Nauk SSSR. Ser. Mat. 24, 315–356 (1960)

Girsanov, I.V.: Lectures on Mathematical Theory of Extremum Problems. Springer, Berlin (1972). Edited by B. T. Poljak, Translated from the Russian by D. Louvish, Lecture Notes in Economics and Mathematical Systems, vol. 67

Grasse, K.A., Sussmann, H.J.: Global controllability by nice controls. In: Nonlinear Controllability and Optimal Control, volume 133 of Monographs and Textbooks in Pure and Applied Mathematics, pp. 33–79. Dekker, New York (1990)

Grüne, L., Pannek, J.: Nonlinear Model Predictive Control. Communications and Control Engineering Series, 2nd edn. Springer, Cham (2017). (Theory and algorithms)

Halkin, H.: A maximum principle of the Pontryagin type for systems described by nonlinear difference equations. SIAM J. Control 4(1), 90–111 (1966)

Hartl, R.F., Sethi, S.P., Vickson, R.G.: A survey of the maximum principles for optimal control problems with state constraints. SIAM Rev. 37(2), 181–218 (1995)

Hestenes, M.R.: Calculus of Variations and Optimal Control Theory. Robert E. Krieger Publishing Co., Inc., Huntington (1980). Corrected reprint of the 1966 original

Hiriart-Urruty, J.B.: Les mathématiques du mieux faire, vol. 2: La commande optimale pour les débutants. Collection Opuscules (2008)

Holtzman, J.M., Halkin, H.: Discretional convexity and the maximum principle for discrete systems. SIAM J. Control 4(2), 263–275 (1966)

Ioffe, A.D., Tihomirov, V.M.: Theory of Extremal Problems, volume 6 of Studies in Mathematics and its Applications. North-Holland Publishing Co., Amsterdam (1979). (Translated from the Russian by Karol Makowski)

Jacobson, D.H., Lele, M.M., Speyer, J.L.: New necessary conditions of optimality for control problems with state-variable inequality constraints. J. Math. Anal. Appl. 35, 255–284 (1971)

Kim, N., Rousseau, A., Lee, D.: A jump condition of PMP-based control for PHEVs. J. Power Sources 196(23), 10380–10386 (2011)

Landau, I.D., Zito, G.: Digital Control Systems: Design: Identification and Implementation. Springer, Berlin (2006)

Lee, E.B., Markus, L.: Foundations of Optimal Control Theory, 2nd edn. Robert E. Krieger Publishing Co., Inc, Melbourne (1986)

Li, X., Yong, J.: Optimal Control Theory for Infinite-Dimensional Systems. Systems and Control: Foundations and Applications. Birkhäuser Boston Inc, Boston (1995)

Limaye, B.V.: Functional Analysis, second edn. New Age International Publishers Limited, New Delhi (1996)

Malanowski, K.: On normality of Lagrange multipliers for state constrained optimal control problems. Optimization 52(1), 75–91 (2003)

Maurer, H.: On optimal control problems with bounded state variables and control appearing linearly. SIAM J. Control Optim. 15(3), 345–362 (1977)

Maurer, H., Kim, J.R., Vossen, G.: On a state-constrained control problem in optimal production and maintenance. In: Optimal Control and Dynamic Games, pp. 289–308. Springer (2005)

Milyutin, A.A.: Extremum problems in the presence of constraints. Ph.D. thesis, Doctoral Dissertation, Institute of Applied Mathematics, Moscow (1966)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation. I, volume 330 of Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences]. Springer, Berlin (2006). (Basic theory)

Pontryagin, L.S., Boltyanskii, V.G., Gamkrelidze, R.V., Mishchenko, E.F.: The Mathematical Theory of Optimal Processes. Wiley, New York (1962)

Puchkova, A., Rehbock, V., Teo, K.L.: Closed-form solutions of a fishery harvesting model with state constraint. Optim. Control Appl. Methods 35(4), 395–411 (2014)

Rampazzo, F., Vinter, R.B.: A theorem on existence of neighbouring trajectories satisfying a state constraint, with applications to optimal control. IMA J. Math. Control Inf. 16(4), 335–351 (1999)

Robbins, H.: Junction phenomena for optimal control with state-variable inequality constraints of third order. J. Optim. Theory Appl. 31(1), 85–99 (1980)

Rockafellar, R.T.: State constraints in convex control problems of Bolza. SIAM J. Control 10, 691–715 (1972)

Santina, M.S., Stubberud, A.R.: Basics of sampling and quantization. In: Handbook of Networked and Embedded Control Systems, Control Engineering, pp. 45–69. Birkhäuser, Boston (2005)

Sethi, S.P., Thompson, G.L.: Optimal Control Theory, 2nd edn. Kluwer Academic Publishers, Boston (2000). (Applications to management science and economics)

Trélat, E.: Contrôle optimal: théorie & applications. Vuibert, Paris (2005)

van Keulen, T., Gillot, J., de Jager, B., Steinbuch, M.: Solution for state constrained optimal control problems applied to power split control for hybrid vehicles. Automat. J. IFAC 50(1), 187–192 (2014)

Van Reeven, V., Hofman, T., Willems, F., Huisman, R., Steinbuch, M.: Optimal control of engine warmup in hybrid vehicles. Oil Gas Sci. Technol. 71(1), 14 (2016)

Vinter, R.: Optimal Control. Modern Birkhäuser Classics. Birkhäuser, Boston (2010). (Paperback reprint of the 2000 edition)

Volz, R.A., Kazda, L.F.: Design of a digital controller for a tracking telescope. IEEE Trans. Automat. Control AC–12(4), 359–367 (1966)

Wheeden, R.L., Zygmund, A.: Measure and Integral. Pure and Applied Mathematics (Boca Raton), 2nd edn. CRC Press, Boca Raton (2015). (An introduction to real analysis)


Appendices

Appendix A: Preliminaries for the proof of Theorem 3.1

This appendix is devoted to some preliminaries required for the proof of Theorem 3.1 given in Appendix B. In Sect. A.1 we recall some facts on renorming Banach spaces and on the regularity of distance functions. Sect. A.2 is concerned with the sensitivity analysis of the state equation in Problem (\(\mathrm {OSCP}\)). Then, in Sect. A.3, we recall some facts on Stieltjes integration and on Fubini formulas. Finally Sect. A.4 is devoted to Duhamel formulas for the Cauchy-Stieltjes Problems (FCSP) and (BCSP).

A.1 About renorming Banach spaces and regularity of distance functions

Let \((Y, \Vert \cdot \Vert )\) be a normed space. We recall that the dual space of \((Y,\Vert \cdot \Vert )\), which we denote by \(Y^*:={\mathcal {L}}((Y,\Vert \cdot \Vert ),{\mathbb {R}})\), is the space of linear continuous forms on \((Y,\Vert \cdot \Vert )\). We recall that \(Y^*\) can be endowed with the dual norm \(\Vert \cdot \Vert ^{*}\) defined by

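$$\begin{aligned} \Vert y^* \Vert ^{*} := \sup _{y \in Y, \; \Vert y \Vert \le 1} \vert \langle y^* , y \rangle _{Y^* \times Y} \vert \end{aligned}$$

for all \(y^* \in Y^*\). In this situation we write \((Y^*,\Vert \cdot \Vert ^*):=\mathrm {dual}(Y,\Vert \cdot \Vert )\). We recall the following proposition on renorming separable Banach spaces.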

Proposition A.1

Let \((Y,\Vert \cdot \Vert )\) be a separable Banach space and let \((Y^*, \Vert \cdot \Vert ^*)=\mathrm {dual}(Y,\Vert \cdot \Vert )\). Then there exists a norm \(\mathrm {N}\) on Y equivalent to \(\Vert \cdot \Vert \) such that:

(i) \(\mathrm {N}^*\) is equivalent to \(\Vert \cdot \Vert ^*\);

(ii) \(\mathrm {N}^*\) is strictly convex;

where \((Y^*,\mathrm {N}^*)=\mathrm {dual}(Y,\mathrm {N})\).

Proof

We refer to [56, Theorem 2.18, p. 42] or to [15, Proposition 4, p. 16] for a complete proof. \(\square \)

Let \(F : Y \rightarrow {\mathbb {R}}\) be a convex function. Recall that the subdifferential of F at a point \(y \in Y\) is defined to be the set

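$$\begin{aligned} \partial F(y) := \{ y^* \in Y^* \mid \langle y^* , y'-y \rangle _{Y^* \times Y} \le F(y')-F(y) \text { for all } y' \in Y \} . \end{aligned}$$

We recall that a function \(F : Y \rightarrow {\mathbb {R}}\) is said to be strictly Hadamard-differentiable at a point \(y \in Y\) with the strict Hadamard derivative \(DF ( y ) \in Y^*\) if

$$\begin{aligned} \lim _{y' \rightarrow y, \; t \rightarrow 0^+} \; \sup _{z \in \mathrm {K}} \left| \frac{F(y'+tz)-F(y')}{t} - \langle DF(y) , z \rangle _{Y^* \times Y} \right| = 0 \end{aligned}$$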

for every compact set \(\mathrm {K} \subset Y\). We refer to [62, pp. 312–313] for more details. Finally we denote by \(\mathrm {d_S} : Y \rightarrow {\mathbb {R}}\) the distance function to a nonempty subset \(\mathrm {S} \subset Y\) defined by \(\mathrm {d_S}(y):=\inf _{y' \in \mathrm {S}}\Vert y-y' \Vert \) for all \(y \in Y\), and by \(\mathrm {d^2_S} : Y \rightarrow {\mathbb {R}}\) the squared distance function defined by \(\mathrm {d^2_S}(y) := \mathrm {d_S} (y)^2\) for all \(y \in Y\). We recall the following proposition on the regularity of distance functions.

Proposition A.2

Let \((Y, \Vert \cdot \Vert )\) be a normed space. Let \(\mathrm {S}\subset Y\) be a nonempty closed convex subset and let us assume that \(\Vert \cdot \Vert ^*\) is strictly convex, where \((Y^*,\Vert \cdot \Vert ^*):=\mathrm {dual}(Y,\Vert \cdot \Vert )\). Then it holds that:

(i) \(\mathrm {d_S}\) is convex and 1-Lipschitz continuous;

(ii) \(\mathrm {d_S}\) is strictly Hadamard-differentiable on \(Y {\setminus }\mathrm {S}\) with \(\Vert D\mathrm {d_S}(y)\Vert ^*=1\) and \(\partial \mathrm {d_S}(y)=\{D\mathrm {d_S}(y)\}\) for all \(y\in Y{\setminus } \mathrm {S}\);

(iii) \(\mathrm {d^2_S}\) is strictly Hadamard-differentiable on \(Y {\setminus }\mathrm {S}\) with \(D\mathrm {d^2_S} (y)=2\mathrm {d_S}(y)D \mathrm {d_S}(y)\) for all \(y\in Y{\setminus }\mathrm {S}\);

(iv) \(\mathrm {d^2_S}\) is Fréchet-differentiable on \(\mathrm {S}\) with \(D\mathrm {d^2_S}(y)=0_{Y^*}\) for all \(y\in \mathrm {S}\).

Proof

The proof of (i) is a standard result. We refer to [62, Theorem 3.54, p. 313] and [15, Appendix B.2] for the proof of (ii). The proofs of (iii) and (iv) are straightforward. \(\square \)
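The statements of Proposition A.2 can be checked numerically in a much simpler setting than the one used in the paper. The sketch below is only an illustration under stated assumptions: it takes \(Y = {\mathbb {R}}^q\) with the Euclidean norm (whose dual norm is again Euclidean, hence strictly convex) and \(\mathrm {S} = ({\mathbb {R}}_-)^q\), for which \(\mathrm {d_S}(y)\) is the norm of the positive part of y. The function names are hypothetical and do not come from the paper.

```python
import math

def dist_S(y):
    """Euclidean distance from y to S = (R_-)^q: the norm of the positive part of y."""
    return math.sqrt(sum(max(c, 0.0) ** 2 for c in y))

def grad_dist_S(y):
    """Gradient of d_S at a point outside S, namely y^+ / ||y^+||."""
    d = dist_S(y)
    if d == 0.0:
        raise ValueError("this formula is only used outside S")
    return [max(c, 0.0) / d for c in y]

def grad_sq_dist_S(y):
    """Gradient of d_S^2, equal to 2 * d_S * grad d_S = 2 * y^+ (and zero on S)."""
    return [2.0 * max(c, 0.0) for c in y]

def finite_diff(F, y, h=1e-6):
    """Central finite-difference approximation of the gradient of F at y."""
    grad = []
    for i in range(len(y)):
        y_plus, y_minus = list(y), list(y)
        y_plus[i] += h
        y_minus[i] -= h
        grad.append((F(y_plus) - F(y_minus)) / (2.0 * h))
    return grad

y = [1.5, -2.0, 0.5]                      # a point outside S
g = grad_dist_S(y)                        # has Euclidean norm 1, as in item (ii)
g_fd = finite_diff(dist_S, y)             # should be close to g
g2_fd = finite_diff(lambda v: dist_S(v) ** 2, y)  # should be close to 2 * d_S * g
```

In this finite-dimensional analogue, items (ii) to (iv) reduce to elementary calculus. The renorming of Proposition A.1 is what makes the same conclusions available in the infinite-dimensional space used in the proof.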

A.2 About sensitivity analysis of the state equation in Problem (\(\mathrm {OSCP}\))

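For all \(u\in \mathrm {L}^\infty _m \) we consider the Cauchy problem (\(\mathrm {CP}_u\)) given by

$$\begin{aligned} {\left\{ \begin{array}{ll} {\dot{x}}(t) = f(x(t),u(t),t) & \text { for a.e. } t \in [0,T], \\ x(0)=x_0. \end{array}\right. } \end{aligned}$$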

Before proceeding to the sensitivity analysis of the Cauchy problem (\(\mathrm {CP}_u\)) with respect to the control u, we first recall some definitions and results from the classical Cauchy–Lipschitz (or Picard-Lindelöf) theory (see e.g., [28]).

Definition A.1

Let \(u\in \mathrm {L}^\infty _m \). A (local) solution to the Cauchy problem (\(\mathrm {CP}_u\)) is a couple (x, I) such that:

(i) I is an interval such that \(\{ 0 \} \varsubsetneq I \subset [0,T]\);

(ii) \(x \in \mathrm {AC}([0,T'],{\mathbb {R}}^n)\), with \({\dot{x}}(t) = f(x(t),u(t),t)\) for a.e. \(t \in [0,T']\), for all \(T' \in I\);

(iii) \(x(0)=x_0\).

Let \((x_1,I_1)\) and \((x_2,I_2)\) be two (local) solutions to the Cauchy problem (\(\mathrm {CP}_u\)). We say that \((x_2,I_2)\) is an extension (resp. strict extension) to \((x_1,I_1)\) if \(I_1\subset I_2\) (resp. \(I_1 \varsubsetneq I_2\)) and \(x_2(t) = x_1(t)\) for all \(t \in I_1\). A maximal solution to the Cauchy problem (\(\mathrm {CP}_u\)) is a (local) solution that does not admit any strict extension. Finally a global solution to the Cauchy problem (\(\mathrm {CP}_u\)) is a solution (x, I) such that \(I=[0,T]\).

Proposition A.3

For all \(u\in \mathrm {L}^\infty _m\), the Cauchy problem (\(\mathrm {CP}_u\)) admits a unique maximal solution, denoted by \((x(\cdot ,u),I(u))\), which is an extension to any other local solution.

We now introduce the notion of controls admissible for globality.

Definition A.2

A control \(u\in \mathrm {L}^\infty _m \) is said to be admissible for globality if the corresponding maximal solution \((x(\cdot ,u),I(u))\) is global, that is, if \(I(u) = [0,T]\). In what follows we denote by \(\mathcal {AG}\subset \mathrm {L}^\infty _m \) the set of all controls admissible for globality.

Remark A.1

Using the standard combination of the Gronwall lemma with the blow-up theorem for nonglobal solutions in ordinary differential equations theory, we can establish the following sufficient condition. Given a control \(u\in \mathrm {L}^\infty _m \), if there exist a nonnegative coercive mapping \(\Theta : {\mathbb {R}}^n \rightarrow {\mathbb {R}}_+\) of class \(\mathrm {C}^1\) with two nonnegative constants \(c_1\), \(c_2 \ge 0\) such that \(\langle f(x,u(t),t) , \nabla \Theta ( x ) \rangle _{{\mathbb {R}}^n} \le c_1 \Theta (x) + c_2\) for all \( x \in {\mathbb {R}}^n\) and for a.e. \(t \in [0,T]\), then \(u \in \mathcal {AG}\). This sufficient condition covers, not only some typical situations for which \(\mathcal {AG} = \mathrm {L}^\infty _m \) (such as global Lipschitz dynamics, or more generally dynamics with a sublinear growth, taking \(\Theta (x) := \Vert x \Vert _{{\mathbb {R}}^n}^2\) for all \(x \in {\mathbb {R}}^n\)), but also some dynamics with polynomial growth for which \(\mathcal {AG} \subsetneq \mathrm {L}^\infty _m \). As an illustration, take the scalar case \(n=m=1\) and the dynamics \(f(x,u,t):=x-ux^3\) for all \((x,u,t) \in {\mathbb {R}}\times {\mathbb {R}}\times [0,T]\). In that example, if a scalar control \(u \in \mathrm {L}^\infty _m\) takes only nonnegative values on [0, T], by considering \(\Theta (x) := x^2\) for all \(x \in {\mathbb {R}}\), we prove that \(u \in \mathcal {AG}\).
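The computation behind the illustration in Remark A.1 can be written out explicitly. This is a worked check only; the initial condition \(x_0 \ne 0\) and the constant control \(u \equiv -1\) used in the second part are chosen here for illustration and do not come from the paper. For a control u with nonnegative values and \(\Theta (x) := x^2\), one has

$$\begin{aligned} \langle f(x,u(t),t) , \nabla \Theta (x) \rangle _{{\mathbb {R}}} = 2x \, (x-u(t)x^3) = 2x^2-2u(t)x^4 \le 2 \, \Theta (x), \end{aligned}$$

so the sufficient condition holds with \(c_1 = 2\) and \(c_2 = 0\). Conversely, for \(u \equiv -1\), the equation \({\dot{x}} = x+x^3\) with \(x(0)=x_0 \ne 0\) can be solved through the change of unknown \(y := x^{-2}\), which satisfies \({\dot{y}} = -2y-2\). This gives

$$\begin{aligned} x(t)^{-2} = (x_0^{-2}+1) \, e^{-2t}-1, \qquad t^* := \frac{1}{2} \ln (1+x_0^{-2}), \end{aligned}$$

and the solution blows up as \(t \rightarrow t^*\). Hence \(u \equiv -1\) does not belong to \(\mathcal {AG}\) as soon as \(T \ge t^*\), which is consistent with \(\mathcal {AG} \subsetneq \mathrm {L}^\infty _m\) for this dynamics.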

In the following lemma we state a continuous dependence result for the trajectory \(x(\cdot ,u)\) with respect to the control u. In particular we prove that \(\mathcal {AG}\) is open.

Lemma A.1

For all \(u\in \mathcal {AG}\), there exists \(\varepsilon _u>0\) such that \(\overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\subset \mathcal {AG}\), where \(\overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\) stands for the standard closed ball in \(\mathrm {L}^\infty _m\) centered at u and of radius \(\varepsilon _u\). Moreover the map

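$$\begin{aligned} u' \in (\overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u),\Vert \cdot \Vert _{\mathrm {L}^\infty _m}) \longmapsto x(\cdot ,u') \in (\mathrm {C}_n,\Vert \cdot \Vert _\infty ) \end{aligned}$$

is Lipschitz continuous.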

Proof

This proof is standard and essentially based on the classical Gronwall lemma. We refer to [17, Lemmas 1 and 3, pp. 3795–3797], [19, Lemmas 4.3 and 4.5, pp. 73–74] (in the general framework of time scale calculus) or to [15, Propositions 1 and 2, pp. 4–5] (in a more classical framework, closer to the present considerations) for similar statements with detailed proofs. \(\square \)

Remark A.2

Let \(u\in \mathcal {AG}\) and \(\varepsilon _u>0\) as given in Lemma A.1. Let \(u' \in \overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\) and \((u_k)_{k\in {\mathbb {N}}}\) be a sequence in \(\overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\) converging to \(u'\) in \(\mathrm {L}^\infty _m\). From Lemma A.1, we deduce that the sequence \((x(\cdot ,u_k))_{k\in {\mathbb {N}}}\) uniformly converges to \(x(\cdot ,u')\) over [0, T].

In the next proposition we state a differentiability result for the trajectory \(x(\cdot ,u)\) with respect to a convex \(\mathrm {L}^\infty \)-perturbation of the control u.

Proposition A.4

Let \(u\in \mathcal {AG}\) and let \(z \in \mathrm {L}^\infty _m\). We consider the convex \(\mathrm {L}^\infty \)-perturbation of u given by

$$\begin{aligned} u_z(\cdot ,\rho ) := u+\rho (z-u) \end{aligned}$$

for all \(\rho \in [0,1]\). Then:

(i) there exists \(0<\rho _0\le 1\) such that \(u_z(\cdot ,\rho )\in \mathcal {AG}\) for all \(\rho \in [0,\rho _0]\);

(ii) the map

$$\begin{aligned} \rho \in ([0,\rho _0],\vert \cdot \vert )\longmapsto x(\cdot ,u_z(\cdot ,\rho ))\in (\mathrm {C}_n,\Vert \cdot \Vert _\infty ) \end{aligned}$$

is differentiable at \(\rho =0\) and its derivative is equal to the variation vector \(w_z(\cdot ,u) \in \mathrm {AC}_n\), which is the unique solution (that is global) to the linearized Cauchy problem given by

$$\begin{aligned} {\left\{ \begin{array}{ll} {\dot{w}}(t) = \partial _1 f(x(t,u),u(t),t)\times w(t)+\partial _2 f(x(t,u),u(t),t)\times (z(t)-u(t)) \\ \qquad \text { for a.e. }t \in [0,T], \\ w(0)=0_{{\mathbb {R}}^n}. \end{array}\right. } \end{aligned}$$

Proof

This proof is standard and essentially based on the classical Gronwall lemma. We refer to [17, Lemma 4 and Proposition 1, pp. 3797–3798] for a similar statement with detailed proof. \(\square \)
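Proposition A.4 can be illustrated numerically by comparing the variation vector with a difference quotient. The sketch below is not taken from the paper: it assumes the scalar dynamics \(f(x,u,t) = x-ux^3\) of Remark A.1, piecewise constant controls on a uniform sampling grid with nonnegative values (so that globality holds), and a hand-written Runge-Kutta scheme. All function names and numerical values are hypothetical.

```python
def f(x, u):
    return x - u * x**3            # dynamics of Remark A.1 (no explicit time dependence)

def df_dx(x, u):
    return 1.0 - 3.0 * u * x**2    # partial derivative with respect to the state

def df_du(x, u):
    return -x**3                   # partial derivative with respect to the control

def rk4(rhs, y0, controls, T, substeps=200):
    """Integrate y' = rhs(y, c), with c frozen on each of len(controls) equal intervals."""
    h = T / (len(controls) * substeps)
    y = list(y0)
    path = [tuple(y)]
    for c in controls:
        for _ in range(substeps):
            k1 = rhs(y, c)
            k2 = rhs([a + 0.5 * h * b for a, b in zip(y, k1)], c)
            k3 = rhs([a + 0.5 * h * b for a, b in zip(y, k2)], c)
            k4 = rhs([a + h * b for a, b in zip(y, k3)], c)
            y = [a + h / 6.0 * (p + 2 * q + 2 * r + s)
                 for a, p, q, r, s in zip(y, k1, k2, k3, k4)]
            path.append(tuple(y))
    return path

def trajectory(u, x0, T):
    """Approximate x(., u) on the integration grid."""
    return [p[0] for p in rk4(lambda y, c: [f(y[0], c)], [x0], u, T)]

def variation(u, z, x0, T):
    """Approximate w_z(., u) by integrating the state and linearized equations together."""
    def rhs(y, c):
        x, w = y
        uc, zc = c
        return [f(x, uc), df_dx(x, uc) * w + df_du(x, uc) * (zc - uc)]
    return [p[1] for p in rk4(rhs, [x0, 0.0], list(zip(u, z)), T)]

def difference_quotient(u, z, x0, T, rho):
    """(x(., u + rho (z - u)) - x(., u)) / rho on the integration grid."""
    u_rho = [a + rho * (b - a) for a, b in zip(u, z)]
    x = trajectory(u, x0, T)
    x_rho = trajectory(u_rho, x0, T)
    return [(b - a) / rho for a, b in zip(x, x_rho)]

u = [0.5, 1.0, 0.2, 0.8]   # nominal sampled-data control (illustrative values)
z = [1.0, 0.0, 0.6, 0.3]   # perturbation direction (illustrative values)
w = variation(u, z, 1.0, 1.0)
q = difference_quotient(u, z, 1.0, 1.0, 1e-5)
```

For small \(\rho \), the two lists `w` and `q` should agree up to an error of order \(\rho \), uniformly over the grid, which is the discrete counterpart of differentiability in \((\mathrm {C}_n,\Vert \cdot \Vert _\infty )\).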

We conclude this section with a technical lemma on the convergence of variation vectors, which is required in the proof of our main result.

Lemma A.2

Let \(u\in \mathcal {AG}\) and \(\varepsilon _u>0\) as in Lemma A.1. Let \(z \in \mathrm {L}^\infty _m\). Let \(u' \in \overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\) and \((u_k)_{k\in {\mathbb {N}}}\) be a sequence in \(\overline{\mathrm {B}}_{\mathrm {L}^\infty _m}(u,\varepsilon _u)\) converging to \(u'\) in \(\mathrm {L}^\infty _m\). Then the sequence \((w_z(\cdot ,u_k))_{k\in {\mathbb {N}}}\) uniformly converges to \(w_z(\cdot ,u')\) over [0, T].

Proof

This proof is standard and essentially based on the classical Gronwall lemma. We refer to [19, Lemmas 4.8 and 4.9, pp. 77–78] for a similar statement with detailed proof. \(\square \)

A.3 About Stieltjes integration and Fubini formulas

In this section we recall some notions on Stieltjes integration and some Fubini formulas. We refer to standard references and books such as [5, 22, 23, 41, 75] for more details. We also refer to [15, Appendix C] and references therein. In the sequel we write \(\mathrm {C}^+_n:=\mathrm {C}([0,T],({\mathbb {R}}_{+})^n)\) where \({\mathbb {R}}_{+}:=[0,+\infty )\). We denote by \(\mathrm {C}^*_n\) the dual space of \(\mathrm {C}_n\) (see Sect. A.1 for some details on dual spaces). We first recall the following Riesz representation theorem (see [57, Theorem 14.5, pp. 245–246] or [15, Proposition 7, p. 19]).

Proposition A.5

\(\text {(Riesz representation theorem).}\) Let \(\psi ^* \in \mathrm {C}^*_1\). Then there exists a unique \(\eta \in \mathrm {NBV}_1\) such that:

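$$\begin{aligned} \langle \psi ^* , \psi \rangle _{\mathrm {C}^*_1\times \mathrm {C}_1} = \int _0^T \psi (\tau ) \, d\eta (\tau ) \end{aligned}$$

for all \(\psi \in \mathrm {C}_1\). Moreover it holds that: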

(i) \(\langle \psi ^* , \psi \rangle _{\mathrm {C}^*_1\times \mathrm {C}_1}\ge 0\) for all \(\psi \in \mathrm {C}^{+}_1\) if and only if \(\eta \) is monotonically increasing on [0, T];

(ii) \(\psi ^* = 0_{\mathrm {C}^*_1}\) if and only if \(\eta =0_{\mathrm {NBV}_1}\).

Recall that if \(\eta \in \mathrm {NBV}_1\) is monotonically increasing on [0, T], then \(\eta \) induces a finite nonnegative Borel measure \(d\eta \) on [0, T] by defining \(d\eta (\{ 0 \}) := \eta (0^+)\) and \(d\eta ((a,b]):=\eta (b)-\eta (a)\) for all semiopen intervals \((a,b] \subset [0,T]\) and by using the Carathéodory extension theorem. Furthermore, for all \(\psi \in \mathrm {C}_1\), the Riemann–Stieltjes integral \(\int _a^b \psi (\tau ) d\eta (\tau )\) coincides with the Lebesgue–Stieltjes integral \(\int _{(a,b]} \psi (\tau ) d\eta (\tau )\) for all \(0 \le a \le b \le T\). We refer to [41, p. 83] and [75, p. 288] for more details. Consequently the Fubini formula

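$$\begin{aligned} \int _0^T \int _0^\tau \Psi (\tau ,s) \, ds \, d\eta (\tau ) = \int _0^T \int _s^T \Psi (\tau ,s) \, d\eta (\tau ) \, ds \end{aligned}\tag{4}$$

holds for all \(\Psi \in \mathrm {L}^\infty ([0,T]^2,{\mathbb {R}})\) such that \(\Psi \) is continuous in its first variable.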
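To make the measure \(d\eta \) concrete, consider a pure-jump function \(\eta \), that is, a right-continuous step function with finitely many jumps. In that case the Lebesgue–Stieltjes integral over (a, b] is a finite weighted sum of values of \(\psi \) at the jump times, and Riemann–Stieltjes sums converge to it for continuous \(\psi \). The following sketch illustrates this coincidence; the jump times, jump sizes, integrand and function names are hypothetical choices made for illustration.

```python
import math

def eta_step(t, jump_times, jump_sizes):
    """Right-continuous step function: sum of the jumps located at times <= t."""
    return sum(a for s, a in zip(jump_times, jump_sizes) if s <= t)

def riemann_stieltjes(psi, eta, a, b, n=20000):
    """Riemann-Stieltjes sum of psi against eta on a uniform partition of [a, b],
    using the right endpoint of each subinterval as tag."""
    total = 0.0
    prev = eta(a)
    for k in range(1, n + 1):
        t = b if k == n else a + (b - a) * k / n   # hit b exactly on the last node
        cur = eta(t)
        total += psi(t) * (cur - prev)
        prev = cur
    return total

def jump_sum(psi, jump_times, jump_sizes, a, b):
    """Lebesgue-Stieltjes integral of psi over (a, b] for the pure-jump eta above."""
    return sum(w * psi(s) for s, w in zip(jump_times, jump_sizes) if a < s <= b)

times, sizes = [0.25, 0.5, 0.75], [1.0, 0.5, 2.0]
eta = lambda t: eta_step(t, times, sizes)
approx = riemann_stieltjes(math.cos, eta, 0.0, 1.0)
exact = jump_sum(math.cos, times, sizes, 0.0, 1.0)
```

With nonnegative jump sizes, `eta` is monotonically increasing, which is the case covered by the measure construction recalled above.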

We now introduce some notations for Riemann–Stieltjes integrals with respect to vectorial functions of bounded variation. We denote by

for all \(\psi =(\psi _j)_{j=1,\ldots ,q}\in \mathrm {C}_{q}\) and all \(\eta = (\eta _j)_{j=1,\ldots ,q}\in \mathrm {BV}_{q}\). Moreover we denote by

and

for all \(\psi =(\psi _r)_{r=1,\ldots ,n}\in \mathrm {C}_{n}\), all \( \eta = (\eta _j)_{j=1,\ldots ,q}\in \mathrm {BV}_{q}\) and all continuous matrices \(M=(m_{r j})_{r j} : [0,T] \rightarrow {\mathbb {R}}^{n \times q}\). In particular one can easily prove that, if \(\psi \in {\mathbb {R}}^{n}\) (i.e. \(\psi \in \mathrm {C}_{n}\) constant), then

for all \( \eta = (\eta _j)_{j=1,\ldots ,q}\in \mathrm {BV}_{q}\) and all continuous matrices \(M=(m_{r j})_{r j} : [0,T] \rightarrow {\mathbb {R}}^{n \times q}\).

Finally, from the Fubini formula (4) and the above notations, one can easily deduce that the Fubini formula

holds for all \(\Psi \in \mathrm {L}^\infty ([0,T]^2,{\mathbb {R}}^{q})\) being continuous in its first variable and for all \( \eta = (\eta _j)_{j=1,\ldots ,q}\in \mathrm {NBV}_{q}\) such that \(\eta _j\) is monotonically increasing on [0, T] for each \(j=1,\ldots ,q\).

A.4 About Problems (FCSP) and (BCSP) and Duhamel formulas

Let us consider the framework and the notations introduced in Sect. 2.2. Our aim in this section is to provide Duhamel formulas for the solutions to Problems (FCSP) and (BCSP). To this aim, we recall that the state-transition matrix \(\Phi (\cdot ,\cdot ) : [0,T]^2 \rightarrow {\mathbb {R}}^{n \times n}\) associated with \(A\in \mathrm {L}^\infty ([0,T],{\mathbb {R}}^{n \times n})\) is defined as follows. For all \(s\in [0,T]\), \(\Phi (\cdot ,s)\) is the unique solution (that is global) to the linear forward/backward Cauchy problem given by

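$$\begin{aligned} {\left\{ \begin{array}{ll} {\dot{\Phi }}(t,s) = A(t)\times \Phi (t,s) & \text { for a.e. } t \in [0,T], \\ \Phi (s,s)=\mathrm {I}_n, \end{array}\right. } \end{aligned}$$

where \(\mathrm {I}_n\) stands for the identity matrix of \({\mathbb {R}}^{n \times n}\).

The equalities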

both hold for all \((t,s)\in [0,T]^2\). From these two equalities and the Fubini formulas from Sect. A.3, one can easily derive the following proposition. We also refer to [15, Appendix D] for some details.

Proposition A.6

[Duhamel formulas] The solutions to (FCSP) and (BCSP) are given by

and

for all \(t\in [0,T]\), where \(\Phi (\cdot ,\cdot )\) stands for the state-transition matrix associated with A.
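The state-transition matrix appearing in the Duhamel formulas can be approximated numerically. The sketch below assumes the usual definition, namely that \(\Phi (\cdot ,s)\) solves \({\dot{\Phi }}(t,s) = A(t) \times \Phi (t,s)\) with \(\Phi (s,s)\) equal to the identity matrix. It also assumes that A can be evaluated pointwise (for instance A continuous), which is stronger than the \(\mathrm {L}^\infty \) framework of the paper. The function names are hypothetical.

```python
import math

def mat_mul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q))) for j in range(len(Q[0]))]
            for i in range(len(P))]

def axpy(P, K, c):
    """Return P + c * K entrywise."""
    return [[p + c * k for p, k in zip(row_p, row_k)] for row_p, row_k in zip(P, K)]

def transition_matrix(A, t, s, steps=2000):
    """Approximate Phi(t, s) with a Runge-Kutta scheme started from the identity at time s.
    The step is negative when t < s, which covers the backward problem."""
    n = len(A(s))
    Phi = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    h = (t - s) / steps
    tau = s
    for _ in range(steps):
        k1 = mat_mul(A(tau), Phi)
        k2 = mat_mul(A(tau + h / 2), axpy(Phi, k1, h / 2))
        k3 = mat_mul(A(tau + h / 2), axpy(Phi, k2, h / 2))
        k4 = mat_mul(A(tau + h), axpy(Phi, k3, h))
        incr = axpy(axpy(axpy(k1, k2, 2.0), k3, 2.0), k4, 1.0)
        Phi = axpy(Phi, incr, h / 6)
        tau += h
    return Phi

rotation = lambda t: [[0.0, 1.0], [-1.0, 0.0]]   # constant A, Phi(t, s) is a rotation
P = transition_matrix(rotation, 1.0, 0.2)         # forward in time
Q = transition_matrix(rotation, 0.2, 1.0)         # backward in time
```

For this constant matrix A, \(\Phi (t,s)\) is the rotation of angle \(t-s\), and the product of `P` and `Q` should be close to the identity matrix.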

Appendix B: Proof of Theorem 3.1

This appendix is dedicated to the detailed proof of Theorem 3.1. Section B.1 deals with the case \(L = 0\) (the case \(L\ne 0\) is treated in Sect. B.2 with a simple change of variable). In Sect. B.1.1 the Ekeland variational principle is applied on an appropriate penalized functional in order to derive a crucial inequality (see Inequality (9)). In Sect. B.1.2 we conclude the proof of Theorem 3.1 by introducing the adjoint vector p.

We first remark that the running inequality state constraints in Problem (\(\mathrm {OSCP}\)) can be written as \({\mathfrak {h}}(x)\in \mathrm {S}\) where:

- \({\mathfrak {h}}: \mathrm {C}_n\rightarrow \mathrm {C}_q\) is defined as \({\mathfrak {h}}(x) := h(x,\cdot )\) for all \(x \in \mathrm {C}_n\). Note that \({\mathfrak {h}}\) is of class \(\mathrm {C}^1\) with \(D{\mathfrak {h}}(x)(x')=\partial _1 h (x,\cdot ) \times x'\) for all x, \(x' \in \mathrm {C}_n\);

- \(\mathrm {S}:=\mathrm {C}([0,T],({\mathbb {R}}_-)^q)\) where \({\mathbb {R}}_- := (-\infty ,0]\). We emphasize that \(\mathrm {S} \subset \mathrm {C}_q\) is a nonempty closed convex cone of \(\mathrm {C}_q\) with a nonempty interior.

Recall that \((\mathrm {C}_q,\Vert \cdot \Vert _\infty )\) is a separable Banach space. Applying Proposition A.1, we endow \(\mathrm {C}_q\) with an equivalent norm \(\Vert \cdot \Vert _{\mathrm {C}_q}\) such that the associated dual norm \(\Vert \cdot \Vert _{\mathrm {C}^*_q}\) is strictly convex. We denote by \(\mathrm {d_S} : \mathrm {C}_q \rightarrow {\mathbb {R}}\) the 1-Lipschitz continuous distance function to \(\mathrm {S}\) (see Sect. A.1). Then, from Proposition A.2, we know that \(\mathrm {d_S}\) and \(\mathrm {d^2_S}\) are strictly Hadamard-differentiable on \(\mathrm {C}_q {\setminus } \mathrm {S}\) with \(D\mathrm {d^2_S}(x)=2\mathrm {d_S}(x)D\mathrm {d_S}(x)\) and \(\Vert D\mathrm {d_S}(x) \Vert _{\mathrm {C}_q^*} = 1\) for all \(x\in \mathrm {C}_q {\setminus } \mathrm {S}\), and that \(\mathrm {d^2_S}\) is Fréchet-differentiable on \(\mathrm {S}\) with \(D\mathrm {d^2_S}(x)=0_{\mathrm {C}_q^*}\) for all \(x\in \mathrm {S}\).

B.1 The case \(L=0\)

In the whole section we will assume that \(L=0\) in Problem (\(\mathrm {OSCP}\)) (see Sect. B.2 for the case \(L \ne 0\)). Let \((x,u)\in \mathrm {AC}_n\times \mathrm {PC}^{\mathbb {T}}_m\) be a solution to Problem (\(\mathrm {OSCP}\)). Following the notation introduced in Sect. A.2, it holds that \(u \in \mathcal {AG}\) and that \(x=x(\cdot ,u)\). In what follows we will also consider the positive real number \(\varepsilon _{u} > 0\) given in Lemma A.1.

B.1.1 Application of the Ekeland variational principle

Let us recall a simplified version (but sufficient for our purposes) of the Ekeland variational principle (see [38]).

Proposition B.1

(Ekeland variational principle) Let \((\mathrm {E},\mathrm {d_E})\) be a complete metric space. Let \({\mathcal {J}}: \mathrm {E} \rightarrow {\mathbb {R}}_+\) be a continuous nonnegative map. Let \(\varepsilon >0\) and \(e \in \mathrm {E}\) be such that \({\mathcal {J}}(e) = \varepsilon \). Then there exists \(e_\varepsilon \in \mathrm {E}\) such that \(\mathrm {d_E}(e_\varepsilon ,e)\le \sqrt{\varepsilon }\), and \(-\sqrt{\varepsilon } \; \mathrm {d_E}(e',e_\varepsilon )\le {\mathcal {J}}(e')-{\mathcal {J}}(e_\varepsilon )\) for every \(e' \in \mathrm {E}\).
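The two conclusions of Proposition B.1 can be observed on a finite metric space, where a point \(e_\varepsilon \) can be found by a simple descent procedure. The sketch below is only an illustration and is not the construction used in the proof: starting from e, it moves to a point \(e'\) as long as \({\mathcal {J}}(e') < {\mathcal {J}}(\text {current}) - \sqrt{\varepsilon } \, \mathrm {d_E}(e',\text {current})\). Since \({\mathcal {J}}\) decreases strictly at each move, the procedure stops on a finite set, and summing the decrease inequalities along the path bounds the total distance travelled by \(\sqrt{\varepsilon }\). The grid, the functional and the function names are hypothetical.

```python
import math

def ekeland_point(points, J, dist, e):
    """Return a point e_eps of the finite set `points` satisfying both conclusions
    of the Ekeland variational principle, with eps := J(e) > 0."""
    eps = J(e)
    if eps <= 0:
        raise ValueError("J(e) must be positive")
    s = math.sqrt(eps)
    current = e
    while True:
        # Candidates violating the second conclusion at the current point.
        better = [p for p in points if J(p) < J(current) - s * dist(p, current)]
        if not better:
            return current
        current = min(better, key=J)   # any candidate works; take the best one

points = [i / 100 for i in range(-200, 201)]                     # finite grid in [-2, 2]
J = lambda p: (p - 0.3) ** 2 + 0.01 * abs(math.sin(20 * p))      # nonnegative functional
dist = lambda a, b: abs(a - b)
e = 0.0
e_eps = ekeland_point(points, J, dist, e)
```

Here \(\varepsilon = {\mathcal {J}}(e) = 0.09\), so the returned point lies within distance 0.3 of e and no point of the grid improves on it by more than \(\sqrt{\varepsilon }\) times the distance.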

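We introduce the set

\[ \mathrm {E}_u := \left\{ u' \in \mathrm {PC}^{\mathbb {T}}_m \text{ with values in } \mathrm {U} \; \mid \; \Vert u'-u \Vert _{\mathrm {L}^\infty _m} \le \varepsilon _u \right\}. \]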

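From the closedness assumption on \(\mathrm {U}\), one can easily prove that \((\mathrm {E}_u,\Vert \cdot \Vert _{\mathrm {L}^\infty _m})\) is a complete metric set. Let us choose a sequence \((\varepsilon _k)_{k\in {\mathbb {N}}}\) such that \(0<\sqrt{\varepsilon _k}<\varepsilon _u\) for all \(k\in {\mathbb {N}}\) and satisfying \(\lim _{k\rightarrow \infty }\varepsilon _k=0\). We introduce the penalized functional

\[ {\mathcal {J}}_k : \mathrm {E}_u \rightarrow {\mathbb {R}}^+, \quad u' \mapsto \sqrt{ \Big ( \big ( g(x(T,u')) - g(x(T)) + \varepsilon _k \big )^+ \Big )^2 + \mathrm {d}^2_\mathrm {S} \big ( {\mathfrak {h}}(x(\cdot ,u')) \big ) } \]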

for all \(k\in {\mathbb {N}}\). From Lemma A.1, note that \({\mathcal {J}}_k\) is well defined for all \(k\in {\mathbb {N}}\). Also, from Lemma A.1 and from the continuity of \(g\), \({\mathfrak {h}}\) and \(\mathrm {d}^2_\mathrm {S}\) (see Proposition A.2), it follows that \({\mathcal {J}}_k\) is continuous for all \(k\in {\mathbb {N}}\). Note that \({\mathcal {J}}_k\) is nonnegative and, since the constraint \({\mathfrak {h}}( x) \in \mathrm {S}\) is satisfied, it holds that \({\mathcal {J}}_k(u)=\varepsilon _k\) for all \(k\in {\mathbb {N}}\). Therefore, from the Ekeland variational principle (see Proposition B.1), we conclude that there exists a sequence \((u_k)_{k\in {\mathbb {N}}} \subset \mathrm {E}_u\) such that

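\[ \Vert u_k-u \Vert _{\mathrm {L}^\infty _m} \le \sqrt{\varepsilon _k} \tag{5} \]

and

\[ -\sqrt{\varepsilon _k} \, \Vert u'-u_k \Vert _{\mathrm {L}^\infty _m} \le {\mathcal {J}}_k(u')-{\mathcal {J}}_k(u_k) \tag{6} \]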

for all \(u'\in \mathrm {E}_u\) and all \(k\in {\mathbb {N}}\). In particular, Inequality (5) shows that the sequence \((u_k)_{k \in {\mathbb {N}}}\) converges to \(u\) in \(\mathrm {L}^\infty _m\). From the optimality of the couple \((x,u)\), note that \({\mathcal {J}}_k(u')>0\) for all \(u'\in \mathrm {E}_u\) and all \(k\in {\mathbb {N}}\). We can thus define the couple \((\lambda _k,\psi ^*_k) \in {\mathbb {R}} \times \mathrm {C}^*_q\) as

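\[ \lambda _k := \frac{\big ( g(x(T,u_k)) - g(x(T)) + \varepsilon _k \big )^+}{{\mathcal {J}}_k(u_k)} \]

and

\[ \psi ^*_k := \begin{cases} \dfrac{\mathrm {d_S}({\mathfrak {h}}(x(\cdot ,u_k)))}{{\mathcal {J}}_k(u_k)} \, D\mathrm {d_S}({\mathfrak {h}}(x(\cdot ,u_k))) & \text{if } {\mathfrak {h}}(x(\cdot ,u_k)) \notin \mathrm {S}, \\ 0_{\mathrm {C}^*_q} & \text{if } {\mathfrak {h}}(x(\cdot ,u_k)) \in \mathrm {S}, \end{cases} \]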

for all \(k \in {\mathbb {N}}\). From Proposition A.2 it holds that \(|\lambda _k|^2+\Vert \psi ^*_k \Vert ^2_{\mathrm {C}^*_q}=1\) for all \(k\in {\mathbb {N}}\). As a consequence, we can extract subsequences (which we do not relabel) such that \((\lambda _k)_{k\in {\mathbb {N}}}\) converges to some \(\lambda \ge 0\) and \((\psi ^*_k)_{k\in {\mathbb {N}}}\) weakly\(^*\) converges to some \(\psi ^* \in \mathrm {C}^*_q\). In particular it holds that \(|\lambda |^2+\Vert \psi ^* \Vert ^2_{\mathrm {C}^*_q} \le 1\). At this step note that we cannot ensure that the couple \((\lambda ,\psi ^*)\) is not trivial. The nontriviality is guaranteed by the next proposition.

Proposition B.2

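The couple \((\lambda ,\psi ^*)\in {\mathbb {R}}\times \mathrm {C}^*_q\) is nontrivial and it holds that

\[ \langle \psi ^*, \psi - {\mathfrak {h}}(x) \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \le 0 \tag{7} \]

for all \(\psi \in \mathrm {S}\).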

Proof

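Let \(k\in {\mathbb {N}}\) be fixed. From Proposition A.2, if \({\mathfrak {h}}(x(\cdot ,u_k))\notin \mathrm {S}\), then \(D\mathrm {d_S}({\mathfrak {h}}(x(\cdot ,u_k)) ) \in \partial \mathrm {d_S} ({\mathfrak {h}}(x(\cdot ,u_k)) )\). Hence, if \({\mathfrak {h}}(x(\cdot ,u_k))\notin \mathrm {S}\), it holds that

\[ \langle D\mathrm {d_S}({\mathfrak {h}}(x(\cdot ,u_k))), \psi - {\mathfrak {h}}(x(\cdot ,u_k)) \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \le 0 \]

for all \(\psi \in \mathrm {S}\). As a consequence, in both cases \({\mathfrak {h}}(x(\cdot ,u_k))\in \mathrm {S}\) and \({\mathfrak {h}}(x(\cdot ,u_k))\notin \mathrm {S}\), it holds that

\[ \langle \psi ^*_k, \psi - {\mathfrak {h}}(x(\cdot ,u_k)) \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \le 0 \tag{8} \]

for all \(\psi \in \mathrm {S}\). Using Lemma A.1 and taking the limit as \(k\) tends to \(+\infty \), we get Inequality (7). Now let us prove that the couple \((\lambda ,\psi ^*)\in {\mathbb {R}}\times \mathrm {C}^*_q\) is nontrivial. Since \(\mathrm {S}\) has a nonempty interior, there exist \(\xi \in \mathrm {S}\) and \(\delta >0\) such that \(\xi +\delta \psi \in \mathrm {S}\) for all \(\psi \in \overline{\mathrm {B}}_{\mathrm {C}_q}(0_{\mathrm {C}_q},1)\). Hence we obtain from Inequality (8) that

\[ \delta \, \langle \psi ^*_k, \psi \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \le \langle \psi ^*_k, {\mathfrak {h}}(x(\cdot ,u_k)) - \xi \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \]

for all \(\psi \in \overline{\mathrm {B}}_{\mathrm {C}_q} (0_{\mathrm {C}_q},1)\) and all \(k \in {\mathbb {N}}\). We deduce that

\[ \delta \, \Vert \psi ^*_k \Vert _{\mathrm {C}^*_q} = \delta \sqrt{1-|\lambda _k|^2} \le \langle \psi ^*_k, {\mathfrak {h}}(x(\cdot ,u_k)) - \xi \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \]

for all \(k \in {\mathbb {N}}\). Using Lemma A.1 and taking the limit as \(k\) tends to \(+\infty \), we obtain that

\[ \delta \sqrt{1-|\lambda |^2} \le \langle \psi ^*, {\mathfrak {h}}(x) - \xi \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q}. \]

Since \(\delta >0\), the last inequality implies that the couple \((\lambda ,\psi ^*)\) is nontrivial, which completes the proof. \(\square \)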

Finally, in the next result, we use Inequality (6) with convex \(\mathrm {L}^\infty \)-perturbations of the control \(u_k\) in order to establish a crucial inequality.

Proposition B.3

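The inequality

\[ \lambda \, \langle \nabla g(x(T)), w_z(T,u) \rangle _{{\mathbb {R}}^n} + \langle \psi ^*, \partial _1 h(x,\cdot ) \, w_z(\cdot ,u) \rangle _{\mathrm {C}^*_q \times \mathrm {C}_q} \ge 0 \tag{9} \]

holds for all \(z \in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\), where \(w_z(\cdot ,u)\) is the variation vector defined in Proposition A.4.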

Proof

Let \(z \in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\). We fix \(k \in {\mathbb {N}}\). Since \(\mathrm {U}\) is convex, it is clear that the convex \(\mathrm {L}^\infty \)-perturbation of the control \(u_k\) associated with \(z\), defined by \(u_{k,z}(t,\rho ):= u_k(t) +\rho (z(t)-u_k(t))\) for all \(t\in [0,T]\) and all \(0\le \rho \le 1\), belongs to \(\mathrm {PC}^{\mathbb {T}}_m\) and takes values in \(\mathrm {U}\). Furthermore it holds that \(\Vert u_{k,z}(\cdot ,\rho )-u \Vert _{\mathrm {L}^\infty _m} \le \rho \Vert z-u_k \Vert _{\mathrm {L}^\infty _m}+\Vert u_k-u \Vert _{\mathrm {L}^\infty _m} \le \rho \Vert z-u_k \Vert _{\mathrm {L}^\infty _m} + \sqrt{\varepsilon _k}\). Since \(\sqrt{\varepsilon _k} < \varepsilon _u\), we deduce that \(u_{k,z}(\cdot ,\rho ) \in \mathrm {E}_u\) for small enough \(\rho >0\). From Inequality (6) we get that

for small enough \(\rho >0\). From Proposition A.4 and from strict Hadamard-differentiability of \(\mathrm {d}_\mathrm {S}^2\) over \(\mathrm {C}_q {\setminus } \mathrm {S}\) and Fréchet-differentiability of \(\mathrm {d}_\mathrm {S}^2\) over \(\mathrm {S}\) (see Proposition A.2), taking the limit as \(\rho \) tends to 0, we get that

with the convention that the second term on the right-hand side is zero if \({\mathfrak {h}}(x(\cdot ,u_k))\in \mathrm {S}\). Using the definition of \(\lambda _k\) and \(\psi ^*_k\), we deduce that

We take the limit of this inequality as k tends to \(+\infty \). From the smoothness of g and h and from Lemmas A.1 and A.2, Inequality (9) is proved. \(\square \)
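The convex \(\mathrm {L}^\infty \)-perturbation used in the proof above can be illustrated on sampled-data controls stored as one value per sampling interval. The sketch below assumes a scalar control, the hypothetical constraint set \(\mathrm {U}=[-1,1]\) and made-up values for \(u\), \(u_k\) and \(z\). It checks that the perturbed control stays in \(\mathrm {U}\) and satisfies the estimate \(\Vert u_{k,z}(\cdot ,\rho )-u \Vert _{\mathrm {L}^\infty _m} \le \rho \Vert z-u_k \Vert _{\mathrm {L}^\infty _m}+\Vert u_k-u \Vert _{\mathrm {L}^\infty _m}\).

```python
def perturb(u_k, z, rho):
    """Convex perturbation u_k + rho * (z - u_k), interval by interval."""
    return [a + rho * (b - a) for a, b in zip(u_k, z)]


def linf(v, w):
    """L-infinity distance between two piecewise-constant controls."""
    return max(abs(a - b) for a, b in zip(v, w))


# Hypothetical data: N = 4 sampling intervals, U = [-1, 1].
u = [0.5, -1.0, 1.0, 0.0]       # reference (optimal) control
u_k = [0.45, -1.0, 0.95, 0.05]  # nearby control produced by the Ekeland step
z = [-1.0, 1.0, 0.0, 1.0]       # arbitrary control with values in U
rho = 0.1

u_kz = perturb(u_k, z, rho)
# Convexity of U keeps the perturbed control admissible.
stays_in_U = all(-1.0 <= v <= 1.0 for v in u_kz)
# Triangle-inequality estimate from the proof.
bound = rho * linf(z, u_k) + linf(u_k, u)
within_bound = linf(u_kz, u) <= bound + 1e-12
```

Since the perturbation acts on each sampling interval separately, the perturbed control is again piecewise constant on the same partition, which is why it remains in \(\mathrm {PC}^{\mathbb {T}}_m\).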

B.1.2 Introduction of the adjoint vector

We can now conclude the proof of Theorem 3.1 (in the case \(L=0\)) by introducing the adjoint vector p. We refer to Sects. 2.2, A.3 and A.4 for notations and background concerning Stieltjes integrations and linear Cauchy–Stieltjes problems.

Introduction of the nontrivial couple \((p^0,\eta )\) and complementary slackness condition. We introduce \(p^0 := -\lambda \le 0\) and we write \(\psi ^*=(\psi ^*_j)_{j=1,\ldots ,q}\) where \(\psi ^*_j\in \mathrm {C}^*_1\) for every \(j=1,\ldots ,q\). From the Riesz representation theorem (see Proposition A.5), there exists a unique \(\eta _j \in \mathrm {NBV}_1\) such that

for all \(\psi \in \mathrm {C}_1\) and all \(j=1,\ldots ,q\). Furthermore \(\psi ^*_j=0_{\mathrm {C}^*_1}\) if and only if \(\eta _j=0_{\mathrm {NBV}_1}\). Thus it follows from Proposition B.2 that the couple \((p^0,\eta )\) is not trivial, where \(\eta := (\eta _j)_{j=1,\ldots ,q} \in \mathrm {NBV}_q\). Moreover, from Inequality (7) (and the fact that \(\mathrm {S}\) is a cone containing \({\mathfrak {h}}(x)\)), one can easily deduce that \(\langle \psi ^*_j, {\mathfrak {h}}_j(x)\rangle _{\mathrm {C}^*_1 \times \mathrm {C}_1}=0\), that is,

for all \(j=1,\ldots ,q\). Finally one can similarly deduce from Inequality (7) that \(\langle \psi ^*_j, \psi \rangle _{\mathrm {C}^*_1 \times \mathrm {C}_1} \ge 0\) for all \(\psi \in \mathrm {C}^+_1\) and all \(j=1,\ldots ,q\). From Proposition A.5, it follows that \(\eta _j\) is monotonically increasing on [0, T] for all \(j=1,\ldots ,q\).

Adjoint equation. We define the adjoint vector \(p\in \mathrm {BV}_n\) as the unique solution to the backward linear Cauchy–Stieltjes problem given by

From the Duhamel formula for backward linear Cauchy–Stieltjes problems (see Proposition A.6) and using notations introduced in Sect. A.3, it holds that

for all \(t\in [0,T]\), where \(\Phi (\cdot ,\cdot ):[0,T]^2 \rightarrow {\mathbb {R}}^{n \times n}\) stands for the state-transition matrix associated with \(\partial _1 f(x,u,\cdot )\in \mathrm {L}^\infty ([0,T],{\mathbb {R}}^{n \times n})\).

Nonpositive averaged Hamiltonian gradient condition. From Inequality (9) and using notations introduced in Sect. A.3, it holds that

for all \(z \in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\). From the definition of the variation vector \(w_z(\cdot ,u)\) and the classical Duhamel formula for standard forward linear Cauchy problems, it holds that

for all \(\tau \in [0,T]\). Substituting this expression into the previous inequality and using the last Fubini formula given in Sect. A.3, it follows that

for all \(z \in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\). Finally, grouping like terms, we exactly obtain

for all \(z\in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\). For all \(i=0,\ldots ,N-1\) and all \(v \in \mathrm {U}\), let us consider \(z_{i,v} \in \mathrm {PC}^{\mathbb {T}}_m\) with values in \(\mathrm {U}\) as

for all \(s \in [0,T]\). Substituting z by \(z_{i,v}\) in the above inequality and from the definition of the Hamiltonian H, we exactly get that

for all \(v \in \mathrm {U}\) and all \(i=0,\ldots ,N-1\). The proof of Theorem 3.1 is complete (in the case \(L=0\)).
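As a hedged illustration of how such a condition can be tested numerically, the sketch below assumes that the nonpositive averaged Hamiltonian gradient condition reads \(\langle \int _{t_i}^{t_{i+1}} \partial _2 H \, ds , v-u_i \rangle \le 0\) for all \(v \in \mathrm {U}\), in the scalar case with the hypothetical constraint set \(\mathrm {U}=[a,b]\). The function `dH_du` stands for the map \(s \mapsto \partial _2 H\) along the trajectory and is supplied by the user. All names are illustrative.

```python
def averaged_gradient(dH_du, t_left, t_right, n=1000):
    """Midpoint-rule approximation of the integral of dH_du over [t_left, t_right]."""
    h = (t_right - t_left) / n
    return sum(dH_du(t_left + (j + 0.5) * h) for j in range(n)) * h


def satisfies_condition(G, u_i, a, b, tol=1e-9):
    """Check G * (v - u_i) <= 0 for all v in [a, b].

    The expression is linear in v, so testing the endpoints a and b suffices.
    """
    return G * (a - u_i) <= tol and G * (b - u_i) <= tol


# Hypothetical gradients on the sampling interval [0, 1] with U = [-1, 1].
G_zero = averaged_gradient(lambda s: s - 0.5, 0.0, 1.0)  # averages to zero
G_pos = averaged_gradient(lambda s: 1.0, 0.0, 1.0)       # strictly positive

interior_ok = satisfies_condition(G_zero, 0.3, -1.0, 1.0)
upper_bound_ok = satisfies_condition(G_pos, 1.0, -1.0, 1.0)
interior_fails = not satisfies_condition(G_pos, 0.0, -1.0, 1.0)
```

Under these assumptions, an interior control value is compatible only with a vanishing averaged gradient, while a positive averaged gradient forces the control to the upper bound of \(\mathrm {U}\).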

B.2 The case \(L \ne 0\)

In the previous section we have proved Theorem 3.1 in the case \(L = 0\) (without Lagrange cost). This section is dedicated to the case \(L \ne 0\). Let \((x,u)\in \mathrm {AC}_n\times \mathrm {PC}^{\mathbb {T}}_m\) be a solution to Problem (\(\mathrm {OSCP}\)). Let us introduce

\[ X(t) := \int _0^t L(x(s),u(s),s) \, ds \]

for all \(t \in [0,T]\). We see that the augmented couple \(((x,X),u)\in \mathrm {AC}_{n+1}\times \mathrm {PC}^{\mathbb {T}}_m\) is a solution to the augmented optimal sampled-data control problem with running inequality state constraints of Mayer form given by

where \({\tilde{g}}: {\mathbb {R}}^{n+1} \rightarrow {\mathbb {R}}\) is defined by \({\tilde{g}}(x_1,X_1):=g(x_1)+X_1\) for all \((x_1,X_1)\in {\mathbb {R}}^{n+1}\) and where \({\tilde{h}} : {\mathbb {R}}^{n+1} \times [0,T] \rightarrow {\mathbb {R}}^q\) is defined by \({\tilde{h}}((x_1, X_1),t) := h(x_1,t)\) for all \((x_1,X_1)\in {\mathbb {R}}^{n+1}\) and all \(t\in [0,T]\). Note that Problem (\(\mathrm {OSCP}_{aug}\)) satisfies all of the assumptions of Theorem 3.1 and is without Lagrange cost. We introduce the augmented Hamiltonian \({\tilde{H}} : {\mathbb {R}}^{n+1} \times {\mathbb {R}}^m \times {\mathbb {R}}^{n+1} \times [0,T] \rightarrow {\mathbb {R}}\) defined as

\[ {\tilde{H}}((x,X),u,(p,P),t) := \langle p, f(x,u,t) \rangle _{{\mathbb {R}}^n} + P \, L(x,u,t) \]

for all \(((x,X),u,(p,P),t)\in {\mathbb {R}}^{n+1} \times {\mathbb {R}}^m \times {\mathbb {R}}^{n+1} \times [0,T] \). Applying Theorem 3.1 (without Lagrange cost, proved in the previous section), we deduce the existence of a nontrivial couple \((p^0,\eta )\), where \(p^0\le 0\) and \(\eta =(\eta _j)_{j=1,\ldots ,q}\in \mathrm {NBV}_q\), such that all conclusions of Theorem 3.1 are satisfied. In particular, the adjoint vector \((p,P) \in \mathrm {BV}_{n+1}\) satisfies the backward linear Cauchy–Stieltjes problem given by

We deduce that \(P(T)=p^0\) and \(dP=0\) over [0, T]. Thus \(P(t)=p^0\) for all \(t \in [0,T]\), and we obtain that \(p \in \mathrm {BV}_n\) satisfies the backward linear Cauchy–Stieltjes problem

The rest of the proof is straightforward from all the necessary conditions obtained from the version of Theorem 3.1 without Lagrange cost.
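The reduction to Mayer form can be sketched numerically: the augmented state \(X\) integrates the Lagrange cost along the trajectory, so that the Bolza cost equals \({\tilde{g}}(x(T),X(T))=g(x(T))+X(T)\). The example below is a minimal explicit Euler discretization in the scalar case. The dynamics `f`, the running cost `L`, the terminal cost `g` and the control are hypothetical choices made only for illustration.

```python
def simulate(f, L, x0, u, T, n=1000):
    """Explicit Euler for x' = f(x, u, t) and the augmented state X' = L(x, u, t)."""
    h = T / n
    x, X = x0, 0.0  # X(0) = 0, so X(T) accumulates the Lagrange cost
    for j in range(n):
        t = j * h
        # Both right-hand sides are evaluated at the current (old) state x.
        x, X = x + h * f(x, u(t), t), X + h * L(x, u(t), t)
    return x, X


# Hypothetical problem: x' = u, L = x, g = x^2, constant control u = 1 on [0, 1].
f = lambda x, u, t: u
L = lambda x, u, t: x
g = lambda x: x ** 2
control = lambda t: 1.0

x_T, X_T = simulate(f, L, 0.0, control, 1.0)
mayer_cost = g(x_T) + X_T  # augmented terminal cost g~(x(T), X(T))
```

For this data the exact trajectory is \(x(t)=t\), so the Lagrange cost is \(1/2\) and the Bolza cost is \(3/2\); the Euler values of `X_T` and `mayer_cost` approximate these up to the discretization error.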

Cite this article

Bourdin, L., Dhar, G. Optimal sampled-data controls with running inequality state constraints: Pontryagin maximum principle and bouncing trajectory phenomenon. Math. Program. 191, 907–951 (2022). https://doi.org/10.1007/s10107-020-01574-2

DOI: https://doi.org/10.1007/s10107-020-01574-2

Keywords

- Optimal control

- Sampled-data control

- Pontryagin maximum principle

- State constraints

- Ekeland variational principle

- Indirect numerical method

- Shooting method