Abstract

Software-as-a-service applications are experiencing immense growth as their comparatively low cost makes them an important alternative to traditional software. Following the initial adoption phase, vendors are now concerned with the continued usage of their software. To analyze the influence of different measures to improve continued usage over time, a longitudinal study approach using data from a SaaS vendor was implemented. Employing a linear mixed model, the study finds several measures to have a positive effect on a software’s usage penetration. In addition to these activation measures performed by the SaaS vendor, software as well as client characteristics were also examined, but did not display significant estimates. The findings emphasize the need for proactive activation initiatives to raise usage penetration. More generally, the study contributes novel insights into the scarcely researched field of influencing factors on SaaS usage continuance.

1 Introduction

With the continuing trend toward IT industrialization, public cloud services constitute an evolution of business by allowing for a new opportunity to shape the relationship between IT service customers and vendors (Van der Meulen and Pettey 2008). Cloud computing can be categorized into three broad service categories, namely infrastructure-as-a-service (IaaS), platform-as-a-service (PaaS), and software-as-a-service (SaaS). According to Gartner (2016), these cloud services were forecast to grow by 26.4 % in 2017, resulting in a total market size of $ 89.8 billion worldwide. SaaS solutions make up the largest share among the three service categories, with a forecasted market size of $ 46.3 billion for 2017.

For software-as-a-service applications, customers do not pay to own the software but instead only pay to access and use it. In turn, the SaaS provider hosts and operates the application (Cisco 2009). This business model offers various advantages to its customers, such as reduced IT dependence and costs and more flexibility, as a company is able to scale the SaaS solution quickly as business conditions change (Waters 2005). The SaaS business model is also advantageous for its vendors. Compared to traditional software vendors, SaaS solution providers typically operate in close connection with their clients, which leads to greater knowledge about customers and their requirements. As clients pay on a regular basis in exchange for continued access to the application, SaaS companies possess a predictable recurring revenue stream (Cloud Strategies 2013). However, SaaS vendors also critically depend on the renewal of subscriptions, making them highly sensitive to their clients’ constant software usage. Consequently, large parts of the SaaS vendor’s tasks can be compared to traditional customer relationship management. If the respective software solution demonstrates low or declining usage within a company, a renewal of the contract after the initial period of agreement becomes unlikely. Therefore, adequately managing usage continuance intention, which is defined as the decision a user makes to use an application beyond the initial adoption (Ratten 2016), is key to the success of a SaaS business. Monitoring and forecasting usage constitutes the foundation for strategic decision makers in SaaS companies to make informed business decisions.

Research on SaaS in later phases of the software lifecycle, such as the usage continuance, is sparse (Walther et al. 2015). The existing literature stream on continued SaaS use primarily explores the influence of service quality, trust, and satisfaction on continuance use intention in SaaS (Benlian et al. 2010; 2011; Yang and Chou 2015). Only a few studies address additional influencing factors by applying a socio-technical approach (Walther et al. 2015) or including assumptions from social cognitive theory (Ratten 2016). Furthermore, current studies share the limitation of using cross-sectional data. The present work examines determinants of continued SaaS use while implementing a longitudinal study approach. The data employed is not sampled by interviewing SaaS clients but rather the SaaS vendor itself. This approach enables a new perspective on the matter and allows for a focus on how the specific SaaS solution as well as a client’s characteristics influence usage continuance. Moreover, the present work is the first to examine the effect of activation measures performed by SaaS vendors on their software’s usage. The resulting research hypotheses center on exactly these three aspects, i.e. the software characteristics, the client characteristics, and the activation measures. To examine the research hypotheses, a linear mixed model is built to fit the underlying data and the effect of predictors on the software’s usage is determined. The final model is employed to predict usage figures in an out-of-time validation setting.

2 Related Work

In order to comprehend the existing body of knowledge on SaaS usage continuance, a literature review using the search terms ‘SaaS’, ‘Software-as-a-Service’, ‘continuance’, and ‘post-adoption’ was conducted. Although the term ‘post-adoption’ technically refers to behaviors that follow initial acceptance, it is often used as a synonym for continuance (Karahanna et al. 1999). The review revealed a steadily expanding body of literature exploring the drivers of SaaS adoption, specifically focusing on the circumstances under which companies introduce SaaS (Walther et al. 2015). Factors influencing the adoption decision process are examined for not only SaaS applications (Wu 2011a; 2011b), but likewise for cloud computing solutions in general (Sharma et al. 2016). In the cloud computing context, an additional stream of literature explores adoption issues related to change management (El-Gazzar et al. 2016; Wang et al. 2016). However, as SaaS is a relatively new phenomenon, research on later phases of the software lifecycle, such as the usage continuance, is sparse (Walther et al. 2015). Table 1 presents the reviewed studies and their focus.

The literature was categorized into different research goals by analyzing each paper’s focus on different SaaS performance measures. Then, the methodology of every study was evaluated, including the type and source of data used and the statistical model applied to the data. Research on customer behavior in similar fields has repeatedly shown that client characteristics have a strong impact on repurchase behavior and satisfaction (Mittal and Kamakura 2001; Ranaweera et al. 2005). Therefore, the literature was also analyzed for the inclusion of client characteristics in the models. Finally, it was analyzed whether vendor activation measures were considered as effects on usage continuance, as they can have a significant effect in other research areas (Kang et al. 2006). As a result of the literature review, the subsequent section gives an overview of research relevant for this study. The identified gaps in previous work are then used to refine the research objectives in Section 3.

2.1 Continuance Use Intention in Software-as-a-Service

A large share of the literature on usage continuance in software-as-a-service strives to explain the role of service quality, trust, and satisfaction for continued SaaS use (Benlian et al. 2010; 2011; Yang and Chou 2015). Benlian et al. (2011) developed a SaaS measure to capture service quality evaluations in SaaS solutions. In doing so, they validated already established service quality dimensions (i.e. rapport, responsiveness, reliability, and features) and identified two new factors, namely security and flexibility. Yang and Chou (2015) explored the effects of service quality on trust, which in turn was hypothesized to affect a SaaS client’s post-adoption intention. They focused on three types of service quality (client orientation, client response, and environment), which proved to have a positive influence on trust in the service quality as well as trust in the provider. Moreover, both types of trust displayed a positive effect on post-adoption intention. Further research is concerned with the relationship between service quality and trust, which in turn are influencing factors of SaaS satisfaction (Chou and Chiang 2013; Pan and Mitchell 2015). However, in contrast to the present study, none of these studies explicitly integrates usage continuance into its research model.

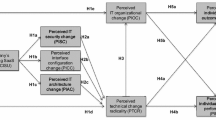

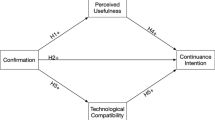

In addition to service quality, Walther et al. (2015) examined continuance intention of cloud-based enterprise systems, which constitute a specific form of SaaS, by taking a broader set of variables into account. Following a socio-technical approach, they included three factors of continuance forces (system quality, information quality, and net benefits) and two sources of continuance inertia (technical integration and system investment) in their research model. The results showed that system quality had the highest positive effect on continuance intention, followed by system investment. In turn, information quality was the only variable that did not show a significant effect. Also, a recent study by Ratten (2016) explored continuance use intention for cloud computing solutions in general by building upon social cognitive theory. The analysis of survey data from managers of technology firms showed personal attitude to be the most important factor for continuance use of cloud computing.

The focus of the present work lies on the identification of factors influencing continuous use intention in SaaS applications as opposed to modeling churn rates of SaaS customers. Nevertheless, it should be mentioned that an interesting stream of literature evolved to analyze and predict actual churn rates in SaaS (Coussement and van den Poel 2008; Frank and Pittges 2009; Sukow and Grant 2013).

2.2 Identified Research Gaps

The review of related work identified an informative body of literature related to usage continuance in the software-as-a-service industry. However, previous studies suffer from several limitations with regard to their methodology as well as the analyzed influencing factors.

First, all empirical studies on usage continuance presented above collected their data from a single respondent within several organizations (Benlian et al. 2010; 2011; Yang and Chou 2015; Walther et al. 2015; Ratten 2016). To be exact, they sent out survey questionnaires to IT managers or key decision makers of firms that had recently adopted a SaaS application or, as in the case of the study by Ratten (2016), a general cloud computing solution. As existing analyses are based on self-reported data collected from a single source, Benlian et al. (2010) and Walther et al. (2015) express concerns about the study methodology. It can be argued that the results represent individual views rather than a shared opinion within a company, which might lead to single-respondent bias as well as social desirability bias (Walther et al. 2015). Further, existing findings must be interpreted considering the limitations of cross-sectional studies. As data was only sampled once rather than over time, not all possibilities to adequately understand temporal relationships and to measure actual behavior are exhausted (Benlian et al. 2010; Walther et al. 2015). A research gap therefore lies in the acquisition of longitudinal data, as well as of data that does not stem from single respondents only, to overcome the restrictions of prior studies.

In addition to existing limitations in methodology, research gaps exist with regard to the factors examined to influence usage continuance in software-as-a-service. Current literature mainly focuses on the influence of service quality and the related concepts of trust and satisfaction on continued SaaS use (Benlian et al. 2010; 2011; Yang and Chou 2015). Although Walther and Eymann (2012) and Ratten (2016) introduced additional factors into the analysis, e.g. system investment and personal attitudes, so far no study has examined the role of a client’s characteristics in usage continuance in SaaS. Client characteristics include constructs such as service age and client involvement, which might impact usage patterns and the degree to which continued usage can be attained. Further, Walther et al. (2015) suggest the inclusion of ‘hard data’ describing the implemented SaaS solution to further reduce common method variance. Finally, only Goode et al. (2015) provide an analysis of a SaaS vendor’s options to actively influence usage. However, they focus on security and not on activation measures.

3 Hypothesis Development

This work uses SaaS vendor interview panel data to examine how software and client characteristics influence continuance use intention in SaaS. Furthermore, the influence of vendor activation measures is considered as no previous work has included either client characteristics or vendor activation measures. An analysis on two levels is undertaken. First, the aim is to adequately characterize each client’s pattern of change over time in order to describe individual usage trajectory. This within-individual change over time shall be captured and examined. Second, it is intended to examine the association between predictor variables and the patterns of change to assess differences between clients. These interindividual differences are of equivalent importance. In general, the goal is to detect heterogeneity in change across clients and determine the relationship between predictors and the shape of each client’s individual trajectory. Overall, usage shall be examined according to a client’s software solution, a client’s characteristics, as well as the activation measures that were undertaken by the SaaS provider to increase usage.

3.1 Research Hypotheses

Thirteen distinct hypotheses were developed on the basis of the literature review and identified research objectives. Table 2 summarizes the derived hypotheses.

The hypotheses being tested can be divided into three broader categories, namely software characteristics, client characteristics, and activation measures. The outline of the present section is guided by this classification.

3.1.1 Software Characteristics

The first set of hypotheses is concerned with characteristics of the implemented software solution. Clients of a SaaS solution naturally do not access the exact same software solution but rather have their own system in place holding different features. These distinct characteristics are expected to influence a client’s software usage.

First, every client contributes a different amount of content to populate the SaaS solution before its launch. Afterwards, during the software’s utilization period, every client ensures the continuous integration of additional content to a different extent. As a result, the amount of a client’s software content varies greatly. Trocchia and Janda (2003) show that a higher amount of content on retail websites is generally seen as positive. Therefore, SaaS solutions containing more content are expected to provide a higher added value to their users and thus are hypothesized to entail a higher usage penetration. Usage penetration refers to the share of users within a company using a SaaS solution within a given time period.

Hypothesis 1: The more content is available to users in the software, the higher the software’s usage penetration.

In addition to the amount of content available to the users of a SaaS solution, the content’s quality is likewise of interest. As Walther et al. (2015) were not able to find significant evidence of information quality to influence continuance intention, the present study aims at validating this finding. Therefore, hypothesis two states that higher levels of content quality lead to higher incentives to access the software and therefore increase usage penetration.

Hypothesis 2: The better the quality of a client’s software content, the higher the software’s usage penetration.

Apart from a software’s content, access barriers to the software might affect its utilization. The required entry of credentials at every login, as well as the resulting trouble if these credentials are forgotten, can discourage users from logging in to the SaaS solution. Hope and Zhang (2015) show that the implementation of a single sign-on (SSO) mechanism increases the likelihood of using and recommending software. Therefore, the third hypothesis can be formulated as follows.

Hypothesis 3: Clients who have implemented single sign-on to access their SaaS solution hold a higher usage penetration.

Furthermore, it is assumed that the look and feel of a software design affects a user’s decision to log in to the SaaS solution (Benlian et al. 2011). Therefore, an improved software design is proposed to have a positive impact on usage penetration.

Hypothesis 4: Clients using an improved and more contemporary software design hold a higher usage penetration.

In the study of Benlian et al. (2010) the variable feature, defined as the degree of the SaaS application’s functionalities to meet the client’s business requirements, was shown to have a significant effect on SaaS service quality, which in turn significantly influenced SaaS continuance intention. To follow up on these findings, the last hypothesis related to the software’s characteristics is concerned with the implemented modules within a client’s software solution. Modules differ with regard to their required interaction with the user. Process-oriented modules depict real-world processes within the SaaS system and therefore require the interaction of involved users on a regular basis. Hence, having implemented such a module is hypothesized to positively influence usage.

Hypothesis 5: Clients who have implemented a process-oriented module (as opposed to only knowledge management modules) hold a higher usage penetration.

3.1.2 Client Characteristics

The second set of hypotheses is related to the distinct features characterizing a SaaS client. First, the period of time a client has been using the SaaS solution is expected to have a negative influence on its usage. This is due to the fact that users tend to initially log in to the software after its launch but then do not perceive it as useful or find it difficult to include the use of the new system in their daily routine, leading to an overall low adoption (Wallace and Sheetz 2014). Therefore, it might become more difficult to keep employees using the software once the initial launch promotion has faded.

Hypothesis 6: The longer a client has been using the SaaS solution, the smaller the software’s usage penetration.

Further, SaaS clients differ with respect to their motivation to establish the software solution internally and incentivize employees to deploy the software in a long-term perspective. However, client involvement is crucial for software adoption and satisfaction (Baroudi et al. 1986). Therefore, it is hypothesized that clients demonstrating a greater involvement possess an increased usage penetration.

Hypothesis 7: The greater a client’s involvement in managing his software-as-a-service solution, the higher the software’s usage penetration.

In addition to the client’s age and involvement, characteristics of the relationship between the SaaS client and vendor are hypothesized to affect usage. Clients differ with regard to their type of internal management structure. Some clients are managed on a centralized basis, with one headquarters deciding for the other regions of the world how the SaaS solution should be adopted. In contrast, there are clients for which every region is solely responsible for making decisions about the SaaS implementation and ongoing procedures. El-Gazzar et al. (2016) show that complex hierarchical organizations in particular had difficulties adopting new software. As strategic decisions and activation measures on the side of the SaaS client strongly influence the software’s usage, having only one authority on the client’s side to manage the SaaS solution is expected to result in a decrease in complexity and hence in a higher usage penetration.

Hypothesis 8: Clients that can be managed on a centralized basis hold a higher usage penetration.

Additionally, clients differ with regard to their SaaS contact persons. Similar to the type of management, having numerous contact persons makes the coordination between the SaaS client and vendor cumbersome. Hence, the number of contact persons is expected to negatively influence usage penetration.

Hypothesis 9: The fewer persons are in charge of taking care of the SaaS solution on the client’s side, the higher the software’s usage penetration.

The last dimension of the relationship between the SaaS client and provider is the demand for counseling, i.e. the strength and intensity of the relationship between both parties. The study of Palos-Sanchez et al. (2017) concludes that communication has a considerable impact on perceived ease of use. It is therefore expected that the extent to which a SaaS provider is able to communicate with, advise, and influence its client has a positive impact on software usage.

Hypothesis 10: The higher a client’s demand for counseling through the SaaS consultant (via phone and email), the higher the software’s usage penetration.

3.1.3 Activation Measures

The remaining hypotheses examine the options SaaS vendors possess to increase usage penetration of a client’s software solution. The impact of activation strategies has been explored in different contexts (Hibbard 2009; Leimeister et al. 2009). A variety of tools is available to activate clients and consumers, in the digital space in particular. First of all, it is expected that a banner placed on the client’s intranet and redirecting to the client’s SaaS solution has an impact on the software’s usage. Therefore, it is hypothesized that the placement of a banner has a direct and positive effect on usage penetration.

Hypothesis 11: The presence of a banner redirecting to a client’s SaaS solution increases a client’s usage penetration.

The next hypotheses are concerned with the performance of communication and education measures. For one, SaaS vendors usually possess the ability to send out newsletters to the software’s users. These newsletters contain clickable links to animate users to access the SaaS solution and remind users of its existence. In their work, Merisavo and Raulas (2004) show that newsletters and e-mail marketing have a strong impact on website visits and brand loyalty. Therefore, newsletters are proposed to also have a positive impact on SaaS usage.

Hypothesis 12: Sending out newsletters increases a client’s usage penetration.

Furthermore, SaaS vendors occasionally conduct trainings for new and already existing users. These trainings might constitute onboarding sessions for new users or simply refresher trainings for an existing user base. Benlian et al. (2010) include trainings in their rapport variable and show that it has relatively low impact on usage intention and satisfaction. For usage penetration, trainings are predicted to have some influence on a software’s usage. This leads to the final hypothesis.

Hypothesis 13: Performing trainings increases a client’s usage penetration.

The research gaps identified in the literature review as well as the resulting research hypotheses form the basis for the study methodology described in the following section.

4 Methodology

In order to test the hypotheses, a combination of a qualitative and quantitative approach was undertaken. The following chapter discusses the steps of this research, including the modeling of longitudinal data, the model choice, effects of fixed and random factors and the variables in the final data set.

4.1 Longitudinal Study Design

This study uses a longitudinal design as opposed to the cross-sectional designs of previous papers. As longitudinal data provides repeated measurements of the same units, one is able to control for unknown or unmeasured determinants of the dependent variable that are constant over time and therefore for omitted variable bias (Andreß et al. 2013). It is also easier to assess whether changes of the independent variable precede changes of the dependent one or vice versa. Further, longitudinal studies allow for the analysis of intra-individual change across time. Finally, longitudinal data can be used to examine measurement error and assess the reliability of a variable by comparing several measurements of the respective variable over time. This method, called test-retest reliability, is easily applied to longitudinal data (Andreß et al. 2013).

Generalized linear mixed models (GLMMs) are employed to estimate the effect of change in the independent variables on a particular participant, given their individual characteristics. In order to fit and analyze the linear mixed models, the R package ‘lme4’ of Bates et al. (2015) was applied.

4.1.1 Types of Factors and their Associated Effects in a LMM

Equation 1 depicts a general linear mixed model in matrix notation, where $y_i$ represents the outcome variable for subject $i$, with $i = 1,\dots,N$.

$$y_i = X_{it}\beta + Z_{it}\gamma_i + \epsilon_i \qquad (1)$$

$X_{it}$ is a matrix of the fixed effects predictor variables including time, with $t = 1,\dots,T$, and $\beta$ is a vector of the fixed effects regression coefficients. $Z_{it}$ is the design matrix for the random effects and can be read as the random complement to the fixed $X_{it}$. Further, $\gamma_i$ is a vector of the random effects that can be interpreted as the random complement to the fixed $\beta$. Finally, $\epsilon_i$ is a vector of the errors representing the part of $y_i$ that is not explained by the model.

Categorical variables for which all levels that are of interest in the study have been included are defined as fixed factors (West et al. 2007). These might include variables such as age group, gender, or treatment method. In contrast, random factors are categorical variables with levels that have only been sampled from the population of levels being studied. Not all possible levels of the random factor are present in the data set, although the study aims at making inferences about the entire population of levels (West et al. 2007).

Fixed and random factors both possess related effects on the dependent variable. Fixed effects describe the relationships between the predictor variables, i.e. the fixed factors and the continuous covariates, and the dependent variable for an entire population of units of analysis. Random effects, on the other hand, are random values linked to the levels of a random factor. These values are specific to a given level of a random factor and usually represent random deviations from the relationships described by fixed effects (West et al. 2007). Random effects can enter the linear mixed model either as random intercepts, describing random deviations for a given subject from the model’s overall fixed intercept, or as random slopes, describing random deviations for a given subject from the overall fixed effects in the model (West et al. 2007).
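To make the distinction between fixed effects, random intercepts, and random slopes more tangible, the following Python sketch simulates trajectories from a model with one time covariate, in which each subject deviates randomly from a shared intercept and a shared slope. The function name `simulate_client` and all parameter values are illustrative assumptions and are not taken from the study, which fits its models with the R package lme4.

```python
import random

def simulate_client(beta0, beta1, sd_intercept, sd_slope, sd_error, n_weeks, rng):
    """Simulate y_t = (beta0 + g0) + (beta1 + g1) * t + e_t for one subject."""
    g0 = rng.gauss(0.0, sd_intercept)  # random intercept: subject-specific shift of the level
    g1 = rng.gauss(0.0, sd_slope)      # random slope: subject-specific shift of the time trend
    return [
        (beta0 + g0) + (beta1 + g1) * t + rng.gauss(0.0, sd_error)
        for t in range(1, n_weeks + 1)
    ]

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical values: 15 subjects observed over 52 weeks.
# beta0 and beta1 are the fixed effects shared by all subjects.
panel = {
    client_id: simulate_client(0.30, 0.002, 0.10, 0.001, 0.02, 52, rng)
    for client_id in range(1, 16)
}
```

Setting `sd_intercept` and `sd_slope` to zero removes all between-subject heterogeneity, so that every trajectory follows the same fixed line apart from the error term.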

4.2 Study and Variable Description

The raw data was contributed by Market Logic Software AG. Market Logic offers numerous plug-and-play modules, among others in the areas of knowledge management, analytics, as well as marketing and research management. Market Logic’s SaaS solution is deployed by marketing and research teams of global clients stemming from the FMCG, healthcare, retail, high-tech, finance, and communications sectors (Market Logic Software 2017). The data sample comprises information on 17 clients for a period of 65 weeks. This data set includes missing values for some clients, as not all of them had been launched at the beginning of the time frame under investigation. Further, these clients were selected on the basis of the timespan since the software solution had been launched in their company, i.e. a major selection criterion was the amount of data that was already available for the proposed analysis. As Market Logic is a rather young corporation, only a few clients have been actively using the software for a longer time frame. Hence, the employed data sample is limited in this regard.

Table 3 describes the variables of the final data set, their type, and their source. The first column lists the corresponding hypothesis for every variable. Two hypotheses consist of multiple indicators; all other hypotheses are based on single indicators. For this kind of modeling we took inspiration from Posey et al. (2014). The first two variables are identifying variables for the data and therefore not part of the model. The remaining variables are split into three major sources. System export variables have been extracted from system export files, which were dumped a single time to retroactively construct the longitudinal data. All system export variables are obtained by counting and normalizing occurrences, e.g. for r.project the number of active projects at the specific point in time. These observed variables are self-developed indicators for the first construct. All interview variables have been taken from structured interviews conducted with the respective account managers at Market Logic. The respective questionnaire can be found in the online Appendix A. Time-varying variables were collected with the help of retrospective questions to obtain information on the past 65 weeks. The categorical variables newsletter and training include the levels “No, Content, Other” and “No, new, existing”, respectively. In addition to these time-varying variables, further static variables were retrieved during the interviews, for which scaling and other details can be obtained from the questionnaire (Appendix A). Finally, the dependent variable usage.penetration, i.e. the weekly usage penetration of a client’s SaaS solution, was captured. A percentage rather than an absolute user count was employed to ensure comparability across clients. The monitoring and reporting tool provided information on the weekly login data of the software’s users. The dependent variable is calculated by dividing a client’s weekly user count by the client’s potential weekly user base.

Overall, all variables referenced in Table 3 except for id, t and usage.penetration are used as independent variables, forming the present dataset together with the dependent variable usage.penetration.
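The calculation of the dependent variable can be illustrated with a short Python sketch. The function name, the handling of missing values via `None`, and the numbers are assumptions made for illustration only; they do not reproduce the vendor’s monitoring tool or its data.

```python
def usage_penetration(weekly_users, potential_users):
    """Share of the potential user base that logged in during one week."""
    if weekly_users is None or potential_users is None:
        return None  # e.g. the client had not yet been launched in that week
    if potential_users <= 0:
        raise ValueError("potential user base must be positive")
    return weekly_users / potential_users

# Hypothetical client: not launched in week 1, then 150 potential users.
weekly_users = [None, 12, 30, 45]
potential_users = [None, 150, 150, 150]
series = [usage_penetration(u, p) for u, p in zip(weekly_users, potential_users)]
```

Because the result is a share rather than a count, a client with 45 of 150 users active and a client with 450 of 1500 users active receive the same value, which is the comparability argument made above.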

5 Descriptive Analysis

The following section reports the results of an exploratory analysis of the data. As out-of-time validation will be used, only data from the first year, i.e. the first 52 weeks, are utilized to build the final model. Further, as two of the 17 clients had not yet been launched in the first 52 weeks, the subsequent dataset only involves 15 clients. The data of the additional two clients will be used at a later point to validate the final model. The present panel is characterized by the number of points in time measured exceeding the number of observed clients. This characteristic is sometimes also referred to as a type II panel (Andreß et al. 2013).

Graphical data analysis is a necessary component of good research methodology (Locascio and Atri 2011). As the underlying data set entails a longitudinal design, it is natural to explore this data on change. Hence, it is analyzed how each client changes over time. The following analysis investigates how a single client changes over time and whether different clients change in similar or different ways. Further, this visual display of the longitudinal data set can aid in the identification of outliers, reveal unexpected relationships, and help explain ensuing statistical results (Long 2012).

Figure 1 depicts, for each client, the weekly values of the dependent variable usage.penetration (black line) over the first 52 weeks of the observation period. The figure also shows the result of an OLS regression of the dependent variable using the week number as input (blue line) and a corresponding 95 % confidence interval (grey-shaded area). Fig. 1 thus displays the individual-level curves of ascertained scores for all 15 clients across the first 52 periods. Inspection of Fig. 1 provides information about the extent of individual variability, which is essential for suggesting the number of random effects in the later model (Long 2012).

The single values of clients 4, 5 and 8 are high and volatile in comparison to other clients, especially at the beginning of the period. The volatility results from all three clients having just been launched at the beginning of the observed timespan. Hence, up to this point the user base was still small, inducing high percentage changes. As the values seem to be consistent with the overall data set, the extreme values of clients 4, 5 and 8 will not be treated as outliers. One of the most important relationships to plot for longitudinal data on multiple subjects is the trend of the dependent variable over time and by subject (Bates 2010). Hence, in addition to the individual-level curves, Fig. 1 displays the fitted ordinary least squares (OLS) curves with the 95 % confidence interval superimposed on the trajectory of each client.

5.1 Conditioning on Predictors

Comparing the exploratory OLS-fitted curves with the observed data points allows for the evaluation of how well the chosen linear change model fits each client’s change curve. For some clients (e.g. 10 and 14) the linear change model fits well, as their observed and fitted values nearly coincide and the 95 % confidence interval is narrow. For other clients (e.g. 4 and 5) the observed and fitted values are more disparate. This is due to the extreme values as well as the rather volatile shape of the curves. Despite substantial variation in the quality of exploratory model fit, Fig. 1 supports the assumption that a linear model is adequate for modeling the underlying data, as for the majority of clients the observed curves deviate only slightly from the fitted straight line.

When analyzing interindividual differences, it becomes apparent that both fitted intercepts and slopes vary, reflecting the heterogeneity in trajectories. To explain these differences, individual characteristics conditional on predictors need to be observed. Information gained from such graphs can then be used to specify fixed effects for the final model (Long 2012). The variables quality, sso, and process, which characterize the software of a client, are potential candidates to be included in the linear mixed model as fixed effects. For the variables characterizing the client itself, only age and involve show promising patterns that indicate a potentially significant effect on usage penetration. Finally, the plots of all three variables related to activation measures performed by SaaS vendors indicate an effect on usage penetration. For the sake of brevity, the evaluation is demonstrated on two figures. To exemplify the analysis of boxplots, Fig. 2 is evaluated. Client consultants were asked to rate the quality of the content that is available to users in the software. Figure 2 displays four boxplots of the usage penetration scores conditional on the level of quality of the software’s content.

Although the differences in spread of the single boxplots impede a comparison, one might conclude by examining the medians that the underlying data displays a rather bell-shaped behavior. Hence, the model selection process should likewise consider a quadratic term of quality to test for significant effects on the dependent variable. In addition to boxplots, conditional plots were used to evaluate variable correlation. Figure 3 depicts the conditional plot of the continuous variable age. As hypothesized, the smoothing line displays a descending, almost exponential trend. The plot indicates that usage penetration is high but volatile during the first year, while it becomes more stable and smaller over time. An exception is the extreme values originating from one client. Hence, the plot suggests including the variable age in the final model while accounting for specific clients through a random intercept effect. All hypotheses were evaluated using conditional plots or boxplots.

A summary of the results obtained through the exploratory analysis is presented in Table 4. The overview lists all 13 previously formulated hypotheses together with their related variables. Further, it states the graphically observed influence of each of the 19 independent variables on usage penetration based on the conditional plots. For clients using single sign-on (SSO), usage penetration is indeed higher than for clients with no SSO, as the interquartile ranges of both boxplots only overlap slightly. Boxplots of the variable process show that clients having implemented a process-oriented module hold a higher usage penetration than clients only utilizing the software’s knowledge management modules. Therefore, both sso and process appear to have a positive effect on usage penetration. The boxplots conditional on the variable involve indicate that a higher degree of involvement is related to higher usage penetration values. However, similar to quality, it might be concluded that the underlying data displays a u-shaped behavior. Hence, the model selection process should consider a quadratic term. All three activation measures show a positive effect in their conditional boxplots, with higher medians when the activation measure is utilized. The exception is trainings for new users, which could, however, be explained by the low number of observations for this case. All remaining variables showed little to no trend in conditional plots or differences between categories in boxplots. Plots used for this evaluation can be found in the online Appendix C.

5.2 Model Building

This section illustrates the fitting of a linear mixed model. First, it is briefly described how the linear mixed model was chosen. When the number of fixed and random effects is not known in advance, the prevailing approach to model building is the step-up strategy (Ryoo 2011). In addition to the step-up strategy, the likelihood ratio test (LRT) was used for model selection. It is the most commonly used test in regular hypothesis testing settings due to its desirable theoretical properties and the fact that it is easy to construct (Zhang and Lin 2008). Linear mixed models are suitable for the analysis of longitudinal data when one is interested in modeling the effects of time and other predictors on a continuous dependent variable. Further, these models make it possible to investigate the amount of between-subject variance in the effects of the predictors across the subjects of the study (West et al. 2007). Hence, models will be considered that allow the client-specific coefficients describing individual time trajectories to vary randomly. The objective of fitting a model to the data set is to answer questions about the process which generated these data. Consequently, the model built in this section aims at being a useful approximation of the underlying process, resulting in a small number of parameters whose values can be interpreted as answers to the research questions (Diggle et al. 2013).

5.2.1 Model Selection Process

Nineteen independent variables related to the formulated hypotheses are treated as fixed factors in the current analysis. Only the variables id and t are considered for the random effects part of the model. As the variable id represents only a sample of clients and the variable t an exemplary time fragment, neither variable is of direct interest, but their underlying effects on the dependent variable are still crucial to include in the final model.

Intercept-Only Model

The starting model constitutes an intercept-only model. This is the simplest model that can be considered since it only comprises a random effect associated with the intercept of each client. As the exploratory analysis showed, usage penetration values depend strongly on the client they belong to. Therefore, it is sensible to allow the intercept term to vary across clients. The first model, i.e. the intercept-only model, is fitted in R using the lmer() function of the ‘lme4’ package.
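
As a hedged illustration, a random-intercept (intercept-only) model of the kind described above is commonly written as shown below. The symbols are assumptions introduced here and are not taken from the paper's own equations: y_{it} denotes the usage penetration of client i in week t, \beta_0 the overall intercept, b_{0i} the client-specific deviation from it, and \varepsilon_{it} the residual.

```latex
y_{it} = \beta_0 + b_{0i} + \varepsilon_{it},
\qquad b_{0i} \sim \mathcal{N}(0, \sigma_b^2),
\qquad \varepsilon_{it} \sim \mathcal{N}(0, \sigma^2)
```

In lme4 formula syntax, a model of this form would typically be specified as `usage.penetration ~ 1 + (1 | id)`; whether the authors used exactly this call is not stated in the text.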

For visualization purposes, the fit of model 1 is displayed in Fig. 4. The graphs look similar to the mean curves of each client. However, model 1 is different from a means-only model in that it captures the between-subjects variability by incorporating a random effects part (Ryoo 2011). Hence, the green curve in Fig. 4 is simply modeled by the random intercept effect of each client.

Model with Fixed and Random Effects

The selection of the fixed-effects part of the linear mixed model results in the model of Equation 3.

Using client as a random intercept effect significantly improves the model’s fit. Therefore, the intercept term of the final model is allowed to vary across clients and thereby the between-client variation is modeled. The variable t constitutes the second random factor that is evaluated as a random effect in the model. As t is a time-varying variable, it is sensible to include it not as a random intercept but rather as a random slope effect. This tests whether clients not only have different initial usage penetration but also vary in their trajectories over time. The final model allows a client to have not only its own intercept but also its own slope. Hence, the linear mixed effects model takes on the form of Eq. 4.
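
For orientation, the generic textbook form of a linear mixed model with a client-specific random intercept and a random slope for time is given below. This is a sketch under assumed notation and not a reproduction of the paper's Eq. 3 or Eq. 4: x_{kit} stands for the k-th fixed-effect predictor of client i in week t, \beta_k for its coefficient, and b_{0i}, b_{1i} for the client-specific deviations in intercept and slope.

```latex
y_{it} = \beta_0 + \sum_{k} \beta_k x_{kit} + b_{0i} + b_{1i}\, t + \varepsilon_{it},
\qquad
\begin{pmatrix} b_{0i} \\ b_{1i} \end{pmatrix} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma}),
\qquad \varepsilon_{it} \sim \mathcal{N}(0, \sigma^2)
```

In lme4 syntax, a random intercept and a random slope for t per client are typically requested with a term such as `(1 + t | id)`.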

6 Results

6.1 Hypothesis Testing

Testing the main hypotheses using linear mixed models requires an approach to obtain approximate p-values of the fixed effect estimates. To that end, fixed factors were successively removed from the final linear mixed model. The LRT was utilized for the comparison of reference and nested models. The results listed in Table 5 show that the variables banner, newsletter, and training are highly significant with p-values of < 0.001. However, the inclusion of the random factor t caused a drop in significance for the fixed factor age. Therefore, the effect of this factor can only be accepted at a marginal significance level of 10 % with the p-value being 0.0619. In order to validate these results, p-values of the fixed effect parameters were also calculated based on an approximate F-test. For this, both Satterthwaite’s and Kenward-Roger’s approximations for the degrees of freedom were utilized. To perform this task in R, the package ‘lmerTest’ was chosen (Kuznetsova et al. 2016). The results of both Kenward-Roger’s and Satterthwaite’s approximations are also listed in Table 5. Both approximations result in the same significance levels. Moreover, the results were identical to those obtained from the likelihood ratio test. Hence, only the activation measure variables, namely banner, newsletter, and training, display a highly significant effect on usage penetration, whereas the variable age can only be reported as significant at a 10 % α-level. Furthermore, to obtain the p-values of individual factor levels, the Kenward-Roger test was used again and the results can also be found in Table 5.
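
The likelihood ratio comparison of a reference model and a nested model can be sketched as follows. This is a minimal Python illustration (the study used R) restricted to the case where the two models differ by exactly one parameter; the function name and the log-likelihood values are hypothetical. Factors with several levels, such as newsletter or training, would require more degrees of freedom than this sketch handles.

```python
import math


def lrt_p_value_df1(loglik_reduced, loglik_full):
    """Likelihood ratio test for nested models differing by ONE parameter.

    Both log-likelihoods are assumed to come from maximum likelihood fits
    of the reduced (nested) and the full (reference) model.
    Returns (test statistic, approximate p-value).
    """
    statistic = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    # Chi-square survival function with 1 degree of freedom:
    # P(X > s) = erfc(sqrt(s / 2))
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical log-likelihoods, chosen so that the statistic equals the
# 5 % critical value of the chi-square distribution with 1 df.
stat, p = lrt_p_value_df1(-101.920729410347, -100.0)
```

A small p-value indicates that dropping the factor significantly worsens the fit, which is the logic behind successively removing fixed factors from the final model.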

The results reveal that the levels of the fixed factors newsletter and training possess different significance levels. The p-value of newsletters not related to the SaaS software’s content is greater, although still corresponding to a significance level of 5 %. The p-value for trainings conducted for an existing user base, however, can only be accepted at an α-level of 10 % with a p-value of 0.08. Therefore, the intercept term as well as the fixed effects, except for age and training/existing, display a significant effect on usage penetration. Although the result is only significant at an α-level of 10 %, the estimate of the fixed effect age suggests, as hypothesized, that the maturity of a client’s software solution has a negative effect on usage penetration. In particular, every additional week following the initial launch of the software decreases usage penetration by 0.04 %. The estimate therefore indicates that usage penetration decreases on average by about 2 % per year. The results also suggest that activating a banner on a SaaS client’s intranet elevates usage penetration by 5.18 %. All other measures listed in Table 5 also contribute positively at a significant level.
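
The step from the weekly age estimate to the yearly figure is a simple linear extrapolation, sketched below in Python. The weekly value is the one reported in the text; treating the effect as additive over 52 weeks is an assumption of this sketch.

```python
WEEKS_PER_YEAR = 52

# Weekly change in usage penetration attributed to age, as reported in the text.
weekly_age_effect = -0.04


def annual_effect(weekly_effect, weeks=WEEKS_PER_YEAR):
    """Extrapolate a constant weekly effect linearly over a number of weeks."""
    return weekly_effect * weeks


yearly_age_effect = annual_effect(weekly_age_effect)  # roughly -2.08
```

The result of about -2.08 matches the "about 2 % per year" stated above.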

Overall, apart from hypothesis 6, hypotheses one through ten cannot be supported by the available data. Consequently, a significant effect of neither software nor client characteristics on usage penetration could be identified in this study. Only age, with a p-value of 0.06, can be accepted at a marginal significance level of 10 %. As all three variables testing the effect of activation measures on usage penetration showed a significant effect, there is support for hypotheses eleven, twelve, and thirteen.

6.2 Predictive Accuracy

To avoid over-fitting, the final linear mixed model is utilized in an out-of-time validation to forecast future usage penetration values. As was explained above, only data for the first 52 weeks that included observations from 15 clients were examined. In order to test the predictive accuracy of the final model, it is applied to the remaining data of the given period. First, the model attempts to predict future usage penetration values of the previously discussed 15 clients. Subsequently, the model strives to predict usage penetration for two newly launched and previously unseen clients whose data were not part of the model building process.
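
The validation design described above can be sketched as a three-way split of the panel. The following Python snippet is an illustration only: the record layout (dicts with keys `id`, `t`, `usage`), the function name, and the toy values are assumptions, and the held-out clients are passed explicitly rather than inferred from the data.

```python
def split_out_of_time(records, held_out_ids, train_weeks=52):
    """Split panel records into training, out-of-time, and unseen-client sets.

    records:      list of dicts with keys 'id', 't', 'usage' (assumed layout)
    held_out_ids: set of client ids excluded from model building
    train_weeks:  last week that belongs to the training period
    """
    train, future_known, unseen = [], [], []
    for record in records:
        if record["id"] in held_out_ids:
            unseen.append(record)        # clients never used for fitting
        elif record["t"] <= train_weeks:
            train.append(record)         # first-year data of known clients
        else:
            future_known.append(record)  # later weeks of known clients
    return train, future_known, unseen


# Toy records (not from the study).
toy_records = [
    {"id": 1, "t": 52, "usage": 12.0},
    {"id": 1, "t": 53, "usage": 11.5},
    {"id": 16, "t": 50, "usage": 30.0},
    {"id": 16, "t": 60, "usage": 25.0},
]
train, future_known, unseen = split_out_of_time(toy_records, held_out_ids={16, 17})
```

Keeping the held-out clients entirely out of `train` is what makes the second check a test of generalization to new clients rather than to new weeks.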

The most basic predictive check is a visual comparison of the observed data to a replication under the model built (Gelman and Hill 2009). Therefore, in order to estimate how accurately the model predicts novel observations, Fig. 5 displays the predicted usage penetration curves based on the final model for the weeks 53 through 65. Apart from some exceptions (e.g. client 5), the model seems to predict the usage penetration levels of each client quite well. Further, for some clients (e.g. clients 9 and 12) the model is even able to correctly predict small increases and drops in usage penetration.

In addition, predicted and actual responses are compared by plotting the predicted versus the actual usage penetration values. The respective graph can be found in the online Appendix D. Predictions are close to the actual values except for usage penetration values of about 20 %, where predictions deviate more strongly. The root mean squared error (RMSE) of the prediction is 5.23, as opposed to the RMSE of the final model on the training data, which was 4.36. However, given the plotted graphs as well as the RMSE values, it can still be concluded that the model adequately predicts usage penetration values for clients that were already part of the training data set.
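
For reference, the root mean squared error used above can be computed as in the following minimal Python sketch. The toy values are invented for illustration and are unrelated to the study's data.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equally long sequences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Toy example: errors of -1, 0 and 2 give a mean squared error of 5/3.
toy_actual = [10.0, 12.0, 14.0]
toy_predicted = [11.0, 12.0, 12.0]
toy_rmse = rmse(toy_actual, toy_predicted)
```

Because the RMSE is expressed in the units of the dependent variable, the values 4.36 and 5.23 can be compared directly.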

Furthermore, the model’s predictive accuracy is measured by predicting usage penetration values of clients that have not been part of the training data set, i.e. that were not included in building the final linear mixed model. Two clients within the collected data set had only been launched in weeks 44 and 50, so that data for the first year under investigation was not sufficiently available. Therefore, the final model is now used to predict the usage penetration values of these previously unseen clients. The respective graph that plots the predicted usage penetration curves based on the final model can be found in Fig. 6. For client 17 the model is able to predict the average level of usage penetration values, whereas it fails to model the correct level for client 16. For both clients, no increases and drops in usage penetration are modeled.

The predicted versus the actual values are likewise plotted for the previously unseen clients and the graph can again be found in the online Appendix D. This plot also indicates that the model fails to predict usage penetration values for previously unseen clients. The RMSE for the previously unseen clients is considerably higher with a value of 13.93. This constitutes an increase of almost 220% as compared to the RMSE of the final model on the training data.
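
The relative increase quoted above follows directly from the reported RMSE values, as the short Python check below shows. Only the three RMSE figures come from the text; the helper name is introduced here for illustration.

```python
def relative_increase_pct(baseline, value):
    """Percentage increase of value relative to baseline."""
    return (value - baseline) / baseline * 100.0


rmse_training = 4.36      # final model on the training data
rmse_known_future = 5.23  # known clients, weeks after the training period
rmse_unseen = 13.93       # previously unseen clients

increase_unseen = relative_increase_pct(rmse_training, rmse_unseen)
increase_known = relative_increase_pct(rmse_training, rmse_known_future)
```

Here `increase_unseen` is about 219.5, in line with the "almost 220 %" stated above, while `increase_known` is only about 20.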

Overall, it can be concluded that the final model possesses the ability to predict future usage penetration values of clients whose former data have been used in the model building process. However, the final model fails to predict usage penetration values of clients within their initial post-launch phase whose data were not part of the model building process. The inability of the model to generalize to these new clients suggests that the connection between covariates and the dependent, usage penetration, is different shortly after onboarding a new client. Considering a longer client history during estimation, it is plausible that the model is geared toward more mature clients and fails to predict usage penetration patterns of new clients well if these clients behave differently.

7 Discussion

The preceding section aimed at modeling usage penetration values of SaaS clients by applying a linear mixed model. Whereas existing literature on continued SaaS use mainly focuses on service quality, trust, and satisfaction, the independent variables in focus of the present study were related to characteristics of the SaaS solution and the SaaS client, as well as activation measures taken by the SaaS provider. Despite the importance of the differences between clients, client-specific characteristics such as degree of involvement, management structure, or counseling demand did not reveal a significant effect on usage penetration. As a result, hypotheses related to a client’s characteristics could not be confirmed in the present study. Only the age of a client showed a slight trend towards significance but could not be validated at an α-level of 5 %. However, this result has to be examined with caution given the approximation of the user base for calculating the dependent variable. Obsolete users might accrue over time, leading to a bias in the underlying user base count and therefore to a decrease in usage penetration. This has to be taken into account during statistical inference. Given this issue and the high p-value of age, performing inference related to the client’s software age is not recommended. Similar to client characteristics, software characteristics such as amount of content, quality of content and availability of SSO also had no significant effect on usage penetration in the specified model. However, the present study does find that performing activation measures has a strong and statistically significant impact on usage penetration. In particular, activating a banner on the client’s intranet home page has the greatest influence on the dependent variable. Using a banner positively influenced usage penetration by 5.2 %, which is likely due to a banner unobtrusively promoting the SaaS solution and creating awareness as well as redirecting the user directly to the platform. It therefore represents a favorable way to ensure continued use intention in software-as-a-service. Newsletters and trainings have a smaller effect on usage penetration, but still influence it by + 1.8 % to + 2.9 %. Content newsletters exhibit a greater significance than other newsletters, yet this might be due to their more frequent occurrence in the data set. Furthermore, not all clients allow newsletters to be sent out to their employees. Therefore, newsletters as an activation measure to increase usage are only practicable for some clients. Out of all activation measure variables, training existing users displays the smallest effect on usage penetration and is only marginally significant at an α-level of 10 %. A reason for the low level of significance of trainings for existing users might be the smaller number of occurrences in the data set. However, the smaller size of the effect on usage penetration seems reasonable, as these trainings not only serve the purpose of refreshing knowledge about the software for occasional users but also train power users on new functionalities. A summary of all hypotheses and the respective results can be found in Table 6.

The suitability of the model specification was examined by analyzing residuals and calculating fit and error metrics such as R² and RMSE. Additional information is available in Appendix B. Overall, some violations of model assumptions are observed for the employed data. However, simulation results from Jacqmin-Gadda et al. (2007) suggest that these violations did not impede the final model. The between-client variation, displayed by the variance of the random intercept term id, accounts for the greatest part of variation in the model. This points out that a specific client, i.e. the level of the variable id, influences the respective usage penetration values to a great extent. As a result, the final model proved appropriate for forecasting out-of-sample usage penetration values of clients whose data have been included in the model building process. However, it performed worse in predicting usage penetration values of new clients. Finally, to answer the formulated research question, the present work concludes on the basis of the data analyzed that characteristics of the software as well as the client do not influence continuance use intention in software-as-a-service. However, SaaS vendors are able to increase weekly software usage penetration by around 2 % to 5 % through the execution of activation measures, i.e. intranet banners, newsletters, and trainings.

7.1 Implications for Practice

These results provide insights to SaaS account managers by displaying the effect of activation measures on software usage. This enables managers to perform usage triggers specifically as needed. Since usage figures are frequently used as performance indicators and constitute the foundation of bonus payments, they are of considerable importance to SaaS account managers. Hence, it is especially valuable to obtain a concrete measure of the influence an account manager has on usage through the execution of activation measures. The insights generated in the course of the present work are likewise of relevance to the senior management of SaaS companies. As the management typically specifies the usage goals of every client team, it is advantageous to gain a better understanding of the factors influencing a client’s usage in order to assess usage potentials and allow for better informed decisions. Further, for both parties it is a valuable insight of the study that clients vary strongly from one another. Hence, when evaluating a client’s performance it is important to take the respective circumstances into account. Finally, problems with the generalization of usage penetration prediction models to new clients were observed in the study. Corresponding results suggest that new clients need treatment different from that of mature clients. This treatment could consist of using specific forecasting models for new clients, which might incorporate a substantial amount of expert judgement to address the lack of data for these clients. One may also consider routinely updating usage penetration prediction models once auxiliary data from new clients becomes available.

7.2 Implications for Research

Results also provide some implications for research on SaaS. In particular, this work showed that by focusing on usage penetration, researchers can get a more holistic view of the customer lifecycle. Therefore, future studies should consider usage penetration as target variable as it can be observed before churn happens, therefore including trends, and it provides objective information on use as opposed to the more subjective intention and satisfaction. Another important takeaway for researchers is that tools that traditionally stem from customer relationship management (CRM) research strongly impact the SaaS lifecycle. Therefore, CRM research and methods should be considered when developing studies, especially as considerable effort is spent by SaaS vendors on tools such as activation measures.

8 Limitations and Future Research

The present study could successfully overcome limitations of former research methodologies by implementing a longitudinal study approach and collecting data directly from a SaaS provider rather than questioning key decision makers at SaaS implementing firms. However, this type of method likewise possesses drawbacks such as memory error (Bernard et al. 1984). Even though the present research design limited the amount of retrospective questions to areas that could be answered with the help of documentation, some records might be incomplete or erroneous. In particular, when providing input for the variables newsletter and training, account managers struggled to retrieve the exact dates when the specific activation measures were performed. Further, the variables quality, involve, mgmt.central, contact, phone, and email could only be retrieved in a static manner, i.e. they lack information on change over time. This constitutes a limitation to the current study as it is only an approximation to assume that the values of these variables were constant over time. The discussion on the variables related to a client’s characteristics raises the question of the appropriateness of the variables captured. Either the variables were not suitable to characterize a client in the given context or the lack of variation in time for some variables might have influenced the non-significance. In addition, as clients vary considerably, a greater sample size might have contributed to the significance of certain fixed factors. The same holds true for the characteristics that define a client’s SaaS solution. It is for future research to validate whether the lack of significance is due to the analyzed sample or whether a software’s characteristics in the SaaS context do not influence its usage significantly. Based on this study’s findings, future research is encouraged to further investigate the effect of client- and software-specific characteristics on continued SaaS use.

In particular, studies involving time-varying variables on a client’s characteristics and analyses involving a greater sample size should yield valuable insights for SaaS providers. Besides, in line with previous research, the current study recommends the analysis of relevant data sub-samples to add practical insights into the most important drivers of SaaS usage continuance. Future studies should therefore consider the examination of different industries, firm sizes, or user groups and their related effects on continued SaaS use. Testing survival models to capture when a client’s usage penetration exceeds or falls below a previously defined threshold could also be an interesting avenue for future research and may help to address the challenges related to predicting the development of usage penetration for new clients that were observed in the study.

9 Conclusion

The present study explored the role of software characteristics, client characteristics, and activation measures in the context of usage continuance in software-as-a-service. By implementing a longitudinal study approach and employing data collected from a SaaS provider, this work was able to contribute to the existing literature. With the help of a linear mixed model it could be shown that activating a banner on the client’s intranet home page, sending out a newsletter, and conducting a training each have a positive effect on usage penetration. Empirical evidence was insufficient to judge the effect of software and client characteristics on usage penetration. In conclusion, with the SaaS business model gaining more and more relevance for software vendors, analyzing influencing factors on continued software usage will continue to be important. Especially as SaaS companies grow, they are confronted with the challenge of customer churn. Hence, establishing dedicated client controlling and risk reporting is key for a successful SaaS business. Part of this process involves the identification of factors influencing a software’s usage to assist the prevention of customer churn at the earliest stage possible.

References

Andreß, H.-J., Golsch, K., & Schmidt, A.W. (2013). Applied panel data analysis for economic and social surveys. Berlin: Springer.

Baroudi, J.J., Olson, M.H., & Ives, B. (1986). An empirical study of the impact of user involvement on system usage and information satisfaction. Communications of the ACM, 29(3), 232–238.

Bates, D.M. (2010). Lme4: Mixed-effects modeling with R. New York: Springer.

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67(1).

Benlian, A., Koufaris, M., & Hess, T. (2010). The Role of SAAS service quality for continued SAAS use: Empirical insights from SAAS using firms. ICIS 2010 Proceedings.

Benlian, A., Koufaris, M., & Hess, T. (2011). Service quality in software-as-a-service: developing the SaaS-Qual measure and examining its role in usage continuance. Journal of Management Information Systems, 28(3), 85–126.

Bernard, H.R., Killworth, P., Kronenfeld, D., & Sailer, L. (1984). The problem of informant accuracy: the validity of retrospective data. Annual Review of Anthropology, 13(1), 495–517.

Chou, S.-W., & Chiang, C.-H. (2013). Understanding the formation of software-as-a-service (SaaS) satisfaction from the perspective of service quality. Decision Support Systems, 56, 148–155.

Cisco. (2009). The cisco powered network cloud: an exciting managed services opportunity. Corporate White Paper, Cisco AG.

Cloud Strategies. (2013). Why software vendors are going SaaS. available at: https://cloudstrategies.biz/going-to-saas/.

Coussement, K., & van den Poel, D. (2008). Churn prediction in subscription services: an application of support vector machines while comparing two parameter-selection techniques. Expert Systems with Applications, 34(1), 313–327.

Diggle, P., Heagerty, P.J., Liang, K.-Y., & Zeger, S.L. (2013). Analysis of longitudinal data, volume 25 of Oxford statistical science series, 2nd edn. Oxford: Oxford University Press.

El-Gazzar, R., Hustad, E., & Olsen, D.H. (2016). Understanding cloud computing adoption issues: A Delphi study approach. Journal of Systems and Software, 118, 64–84.

Frank, B., & Pittges, J. (2009). Analyzing customer churn in the software as a service (SaaS) industry. Southeastern InfORMS Conference Proceedings, 2009, 481–488.

Gartner. (2016). Forecast analysis: public cloud services, worldwide, 4Q16 Update. available at: https://www.gartner.com/newsroom/id/3616417.

Gelman, A., & Hill, J. (2009). Data analysis using regression and multilevel/hierarchical models. Analytical methods for social research. 11. printing edition. Cambridge: Cambridge University Press.

Goode, S., Lin, C., Tsai, J.C., & Jiang, J.J. (2015). Rethinking the role of security in client satisfaction with software-as-a-service (saas) providers. Decision Support Systems, 70, 73–85.

Hibbard, J.H. (2009). Using systematic measurement to target consumer activation strategies. Medical Care Research and Review, 66(1_suppl), 9S–27S.

Hope, P., & Zhang, X. (2015). Examining user satisfaction with single sign-on and computer application roaming within emergency departments. Health informatics journal, 21(2), 107–119.

Jacqmin-Gadda, H., Sibillot, S., Proust, C., Molina, J.-M., & Thiébaut, R. (2007). Robustness of the linear mixed model to misspecified error distribution. Computational Statistics & Data Analysis, 51(10), 5142–5154.

Kang, H., Hahn, M., Fortin, D.R., Hyun, Y.J., & Eom, Y. (2006). Effects of perceived behavioral control on the consumer usage intention of e-coupons. Psychology & Marketing, 23(10), 841–864.

Karahanna, E., Straub, D.W., & Chervany, N.L. (1999). Information technology adoption across time: a cross-sectional comparison of pre-adoption and post-adoption beliefs. MIS Quarterly, 23(2), 183.

Kuznetsova, A., Brockhoff, P.B., & Christensen, R.H.B. (2016). LmerTest: tests in linear mixed effects models: R package version 2.0-33. available at: https://CRAN.R-project.org/package=lmerTest.

Leimeister, J.M., Huber, M., Bretschneider, U., & Krcmar, H. (2009). Leveraging crowdsourcing: activation-supporting components for it-based ideas competition. Journal of management information systems, 26(1), 197–224.

Locascio, J.J., & Atri, A. (2011). An overview of longitudinal data analysis methods for neurological research. Dementia and Geriatric Cognitive Disorders EXTRA, 1(1), 330–357.

Long, J.D. (2012). Longitudinal data analysis for the behavioral sciences using R. Los Angeles: SAGE.

Market Logic Software. (2017). About market logic. available at: https://www.marketlogicsoftware.com/about/.

Merisavo, M., & Raulas, M. (2004). The impact of e-mail marketing on brand loyalty. Journal of Product & Brand Management, 13(7), 498–505.

Mittal, V., & Kamakura, W.A. (2001). Satisfaction, repurchase intent, and repurchase behavior: Investigating the moderating effect of customer characteristics. Journal of Marketing Research, 38(1), 131–142.

Palos-Sanchez, P.R., Arenas-Marquez, F.J., & Aguayo-Camacho, M. (2017). Cloud computing (SaaS) adoption as a strategic technology: results of an empirical study. Mobile Information Systems, 2017.

Pan, W., & Mitchell, G. (2015). Software as a service (SaaS) quality management and service level agreement. INFuture2015, 225–234.

Posey, C., Roberts, T., Lowry, P.B., & Bennett, B. (2014). Multiple indicators and multiple causes (MIMIC) models as a mixed-modelling technique: a tutorial and an annotated example. Communications of the Association for Information Systems, 36(11).

Ranaweera, C., McDougall, G., & Bansal, H. (2005). A model of online customer behavior during the initial transaction: Moderating effects of customer characteristics. Marketing Theory, 5(1), 51–74.

Ratten, V. (2016). Continuance use intention of cloud computing: Innovativeness and creativity perspectives. Journal of Business Research, 69(5), 1737–1740.

Ryoo, J.H. (2011). Model selection with the linear mixed model for longitudinal data. Multivariate Behavioral Research, 46(4), 598–624.

Sharma, S.K., Al-Badi, A.H., Govindaluri, S.M., & Al-Kharusi, M.H. (2016). Predicting motivators of cloud computing adoption: A developing country perspective. Computers in Human Behavior, 62, 61–69.

Sukow, A.E.R., & Grant, R. (2013). Forecasting and the role of churn in software-as-a-service business models. iBusiness, 5(1), 49–57.

Trocchia, P.J., & Janda, S. (2003). How do consumers evaluate internet retail service quality? Journal of Services Marketing, 17(3), 243–253.

Van der Meulen, R., & Pettey, C. (2008). Gartner says cloud computing will be as influential as E-business: special report examines the realities and risks of cloud computing. available at: https://www.gartner.com/newsroom/id/707508.

Wallace, L.G., & Sheetz, S.D. (2014). The adoption of software measures: a technology acceptance model (TAM) perspective. Information & Management, 51(2), 249–259.

Walther, S., & Eymann, T. (2012). The role of confirmation on IS continuance intention in the context of On-Demand enterprise systems in the Post-Acceptance phase. AMCIS 2012 proceedings, Paper 2.

Walther, S., Sarker, S., Urbach, N., Sedera, D., Eymann, T., & Otto, B. (2015). Exploring organizational level continuance of cloud-based enterprise systems. ECIS 2015 Proceedings.

Wang, C., Wood, L.C., Abdul-Rahman, H., & Lee, Y.T. (2016). When traditional information technology project managers encounter the cloud: Opportunities and dilemmas in the transition to cloud services. International Journal of Project Management, 34(3), 371–388.

Waters, B. (2005). Software as a service: a look at the customer benefits. Journal of Digital Asset Management, 1(1), 32–39.

West, B.T., Welch, K.B., & Galecki, A.T. (2007). Linear mixed models: a practical guide using statistical software. Boca Raton: Chapman & Hall/CRC.

Wu, W.-W. (2011a). Developing an explorative model for SaaS adoption. Expert Systems with Applications, 38(12), 15057–15064.

Wu, W.-W. (2011b). Mining significant factors affecting the adoption of SaaS using the rough set approach. Journal of Systems and Software, 84(3), 435–441.

Yang, C.-C., & Chou, S.-W. (2015). Understanding the success of software-as-a-service (SaaS): the perspective of post-adoption use. PACIS 2015 Proceedings.

Zhang, D., & Lin, X. (2008). Variance component testing in generalized linear mixed models for longitudinal/clustered data and other related topics. In Dunson, D.B. (Ed.) Random effect and latent variable model selection, volume 192 of Lecture Notes in Statistics (pp. 19–36). New York: Springer.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Appendices

Appendix

This appendix supplies additional material for Usage Continuance in Software-as-a-Service. Appendix A contains the questionnaires used in the data acquisition process. Appendix B provides further plots on model diagnostics. Appendix C includes the remaining plots from the study's Chapter 5.1, Conditioning on predictors. Finally, Appendix D gives further insight into the model's predictive performance.

Appendix A: Questionnaire

Appendix B: Model Diagnostics

Appendix C: Graphs Conditioning on Predictors

Appendix D: Predictive Accuracy Graphs

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Baumann, E., Kern, J. & Lessmann, S. Usage Continuance in Software-as-a-Service. Inf Syst Front 24, 149–176 (2022). https://doi.org/10.1007/s10796-020-10065-w

DOI: https://doi.org/10.1007/s10796-020-10065-w