Abstract

Approximations of the Dirac delta distribution are commonly used to create sequences of smooth functions approximating nonsmooth (generalized) functions, via convolution. In this work we show a-priori rates of convergence of this approximation process in standard Sobolev norms, with minimal regularity assumptions on the approximation of the Dirac delta distribution. The application of these estimates to the numerical solution of elliptic problems with singularly supported forcing terms allows us to provide sharp \(H^1\) and \(L^2\) error estimates for the corresponding regularized problem. As an application, we show how finite element approximations of a regularized immersed interface method results in the same rates of convergence of its non-regularized counterpart, provided that the support of the Dirac delta approximation is set to a multiple of the mesh size, at a fraction of the implementation complexity. Numerical experiments are provided to support our theories.

Similar content being viewed by others

1 Introduction

Singular source terms are often used in partial differential equations (PDE) to model interface problems, phase transitions, or fluid-structure interaction problems. The immersed boundary method (IBM, [29]) is a good example of a model problem where a Dirac delta distribution supported on an immersed fiber or surface is used to capture complex dynamics that are happening on possibly moving interfaces. Similar forcing terms can be used, for example, to model fictitious boundaries in the domain [13], or to couple solids and fluids across non-matching discretizations [17].

When the discretization scheme is based on variational principles, singular sources may be incorporated exactly in the numerical scheme by the definition of their action on test functions [3, 4, 17]. However, if the discretization scheme is based on finite differences [29] or finite volumes [27], this procedure is cumbersome, and a typical alternative is to regularize the source term by taking its convolution with an approximation of the Dirac delta distribution, or by modifying the differential operators themselves to incorporate the knowledge about the interface [22].

Several works are dedicated to the design of good Dirac approximations [19, 34] to use in this regularization process, and their convergence properties are well known in the literature of immersed boundary methods [14, 21].

When the source term is a single Dirac distribution, [19] proved convergence in both the weak-\(*\) topology of distributions, as well as in a weighted Sobolev norm, provided that certain momentum conditions are satisfied.

In many applications, however, the Dirac distribution is not used directly as a source term, but only in formal convolutions to represent the coupling between overlapping domains. In this context, the resulting source term may or may not be singular, according to the co-dimension of the immersed domain itself. To fix the ideas, consider a source term of the kind

The above formalism is used in immersed methods to represent a (possibly singular) coupling between B and \(\varOmega \). Here \(\delta \) is a d-dimensional Dirac distribution. If the co-dimension of the immersed domain B is zero, then the above forcing term reduces to

where \(\chi _B\) is the indicator function of B, owing to the distributional definition of \(\delta \). However, if the co-dimension of B is greater than zero, the integration over B does not exhaust the singularity of the Dirac distribution: the resulting F is still singular, and it should be interpreted as the distribution whose effect on smooth functions \(\varphi \) is given by:

In the three dimensional case, assuming that \(f\in L^2(B)\), the regularity of F goes from being \(L^2(\varOmega )\) when the co-dimension of B is zero, to a negative Sobolev space which cannot be smoother than \(H^{-1/2}(\varOmega )\), \(H^{-1}(\varOmega )\), and \(H^{-3/2}(\varOmega )\) when B is a Lipschitz surface, Lipschitz curve, or point, respectively.

In all cases, a regularization of F is possible by convolution with an approximate (possibly smooth) Dirac function. In most of the literature that exploits this technique from the numerical point of view, pointwise convergence, truncation, and Taylor expansions are used to argue that high order convergence can be achieved in \(L^p(\varOmega )\) norms for the numerical approximation of the regularized problem, provided that some specific conditions are met in the construction of the approximate Dirac function [23, 24, 28]. Convergence of the regularized solution to the exact solution of the original problem is usually left aside, with the notable exceptions of the work by [19], where only the degenerate case of B being a single point is considered, and the work by [30], where suboptimal estimates in \(W^{-1, p}(\varOmega )\) norm for some \(p\in [1,\tfrac{d}{d-1})\) are provided for finite element discretizations of the immersed boundary method applied to the Stokes problem.

Even though the mollification (also known as regularization) technique is widely used in the mathematical analysis community, very little is known about the the speed at which regularized functions converge to their non-regularized counterparts in standard Sobolev norms, when non-smooth approximations of the Dirac delta distribution are considered.

In this work we provide convergence results in standard Sobolev norms that mimic closely the a-priori estimates available in finite element analysis, and we investigate the performance of the finite element method using regularized forcing data, in the energy and \(L^2(\varOmega )\) norms, for a class of singular source terms commonly used in interface problems, fluid structure interaction problems, and fictitious boundary methods.

We start by providing an a priori framework in standard Sobolev spaces for unbounded domains, to study the convergence of regularized functions to their non-regularized counterparts, with minimum requirements on the regularization kernel.

We extend this framework to bounded domains, assuming that the support of the forcing data is away from the physical domain (see the definition in (14)), and we study the convergence speed of elliptic problems with regularized forcing terms to their non-regularized counterparts, with minimum requirements on the support of the forcing term. For compactly supported kernels, in Theorem 5, we provide sharp convergence estimates in the energy norm in terms of powers of the radius of the support. We also provide an \(L^2(\varOmega )\) error estimate by following a duality argument (or Aubin–Nitsche trick) and using the \(H^2\) interior regularity of a dual problem; we refer to Theorem 6 for more details. We note that although we only consider Dirichlet boundary conditions in this paper, the convergence results that we derive for the regularized problem can be also applied to elliptic problems with other boundary conditions; see Remark 2.

As an application, we investigate how the regularization affects the total error of the finite element approximation of an interface problem via immersed methods, and we show that all the estimates we obtain are sharp. Even though a regularization in this case is not necessary when using the finite element method [3], the biggest advantage of using regularization comes from the fact that its numerical implementation is trivial; see Remark 6. Theorems 7 and 8 show that the regularization does not affect the overall performance of the finite element approximation in both energy and \(L^2(\varOmega )\) norms when choosing the regularization parameter to be a multiple of the maximal size of the quasi-uniform subdivision of \(\varOmega \).

The rest of the paper is organized as follows. We first introduce some notations in Sect. 2, and provide general results in both bounded and unbouned domains in Sect. 3. These results are applied to a general model elliptic problem in Sect. 4, where we use the results of Sect. 3 to derive sharp error estimates in energy and \(L^2(\varOmega )\) norms. An application to immersed methods is provided in Sect. 5, where we apply the theory to a finite element approximation of an interface problem. Finally we discuss the numerical implementation of the singular forcing data (with and without regularization) and validate our findings via numerical simulations in Sect. 6.

2 Notations and preliminaries

In what follows, we set \(\varOmega \) to be a bounded Lipschitz domain in \({\mathbb {R}^d}\) with \(d=2\) or 3. We write \(A\preceq B\) when \(A\le cB\) for some constant c independent of regularization and discretization parameters (mentioned in Sect. 1) in A and B. We indicate \(A\sim B\) when both \(A\preceq B\), and \(B\preceq A\). For \(a\in \mathbb {R}\), we denote with \(a^-\) (or \(a^+\)) any real number strictly smaller (or greater) than a.

For \(x\in {\mathbb {R}^d}\), we use |x| to indicate the euclidean norm of x, and given a normed space X, we denote by \(X'\) and \(\langle \cdot ,\cdot \rangle _{X',X}\) its dual space and the duality pairing, respectively. We also denote by \(\Vert \cdot \Vert _X\) and \(\Vert \cdot \Vert _{X'}\) the norm of X and the operator norm of \(X'\), i.e.,

2.1 Sobolev spaces

For \(s\in {\mathbb {N}}\) and \(p>1\), we denote by \(W^{s,p}(\varOmega )\), \(H^s(\varOmega )\) and \(L^2(\varOmega )\) the usual Sobolev spaces. For convention we set \(H^0(\varOmega )=L^2(\varOmega )\) and denote with \((\cdot ,\cdot )_\varOmega \) the \(L^2(\varOmega )\) inner product. We denote with \(|\cdot |_{H^s(\varOmega )}\) the usual semi-norm of \(H^s(\varOmega )\), and we set \(|\cdot |_{H^0(\varOmega )}:=\Vert \cdot \Vert _{L^2(\varOmega )}\). Let \({H^1_0(\varOmega )}\) be the set of functions in \(H^1(\varOmega )\) vanishing on \(\partial \varOmega \). It is well known that \({H^1_0(\varOmega )}\) is the closure of the space of infinitely differentiable functions with compact support in \({\overline{\varOmega }}\) (denoted by \(C^\infty _c({\overline{\varOmega }})\) or \({\mathcal {D}}(\varOmega )\)) with respect to the norm of \(H^1(\varOmega )\). For \(s\in (0,1)\), \(H^s(\varOmega )\) denotes the space of functions whose Sobolev–Slobodeckij norm

is finite. Similarly, for \(s\in (1,2)\), the norm of \(H^s(\varOmega )\) is

It is well known that for \(s\in (0,2)\), \(H^s(\varOmega )=[L^2(\varOmega ),H^2(\varOmega )]_s\), where \([X,Y]_s\) denotes the interpolation space between X and Y using the real method. For \(s\in [0,2]\), we denote \(H^{-s}(\varOmega )=H^s(\varOmega )'\).

2.2 Distributions

We indicate with \({\mathcal {D}}({\mathbb {R}^d})\) or \({\mathcal {D}}(\varOmega )\) the spaces of smooth functions with compact support in \({\mathbb {R}^d}\) or in \(\varOmega \), i.e., \({\mathcal {D}}({\mathbb {R}^d}) := C^{\infty }_c({\mathbb {R}^d})\) and \({\mathcal {D}}(\varOmega )=C^{\infty }_c(\overline{\varOmega })\), and with \({\mathcal {D}}'({\mathbb {R}^d})\) and \({\mathcal {D}}'(\varOmega )\) their dual spaces (also known as the spaces of distributions). The d-dimensional Dirac delta distribution centered in x is defined as the linear functional \(\delta _x\) in \({\mathcal {D}}'\) such that

where A is either \({\mathbb {R}^d}\) or \(\varOmega \).

3 Regularization

In the mathematical literature, mollifiers were introduced by Sobolev [33], and formalized by Friedrichs [12], to provide an effective way of regularizing non smooth or singular functions by performing the convolution with a smooth and compactly supported function that integrates to one (the kernel, or mollifier).

The outcome of the procedure is a function that has the same regularity property of the kernel, normally taken to be \(C^\infty \). In this section we relax the requirements of the kernel, and we provide some basic results that can be obtained by regularizing with approximated Dirac distributions that are not necessarily \(C^\infty \). In particular, we consider functions \(\psi \) that satisfy the following assumptions:

Assumption 1

Given \(k \in {\mathbb {N}}\), let \(\psi (x)\) in \(L^\infty ({\mathbb {R}^d})\) be such that

-

1.

Compact support

\(\psi (x)\) is compactly supported, with support \(supp (\psi )\) contained in \(B_{r_0}\) (the ball centered in zero with radius \(r_0\)) for some \(r_0>0\);

-

2.

k-th order moments condition

$$\begin{aligned} \int _{\mathbb {R}^d}y^\alpha _i\psi (x-y)\, \mathrm{d}y = x^\alpha _i \qquad i=1\ldots d, \quad 0\le \alpha \le k, \quad {\text { for all }}x \in {\mathbb {R}^d}; \end{aligned}$$(1)

Lemma 1

(Convergence to \(\delta \)) A function \(\psi \) that satisfies Assumption 1 for some \(k\ge 0\), defines a one-parameter family of Dirac delta approximations, i.e., define

then

where \(\delta (x)\) is the Dirac delta distribution and the limit must be understood in the space of Schwartz distributions.

Notice that Assumption 1.2 with \(k=0\) implies that both \(\psi \) and \({\delta ^\varepsilon }\) have unit integral. Moreover, we make no additional assumptions on the global regularity of \(\psi \), except from requiring it to be in \(L^\infty ({\mathbb {R}^d})\).

Any function \(\psi \) that satisfies Assumption 1 defines a one-parameter family of Dirac delta approximations \({\delta ^\varepsilon }\), through which it is possible to define a regularization in both \({\mathbb {R}^d}\) and \(\varOmega \):

Definition 1

(Regularization) For a function \(v\in L^1(A)\) we define its regularization \({v^\varepsilon }(x)\) in the domain A (either \(\varOmega \) or \({\mathbb {R}^d}\)) through the mollifier \(\psi \) by

where \({\delta ^\varepsilon }\) is defined as in Eq. (2), i.e.,

and \(\psi \) satisfies Assumption 1 for some \(k\ge 0\).

For functionals F in negative Sobolev spaces, say \(F\in H^{-s}(A)\), with \(s\ge 0\), we define its regularization \(F^\varepsilon \) by the action of F on \(v^\varepsilon \) with \(v\in H^s(A)\), i.e.,

Lemma 2

(\(L^1\) growth control) A Dirac approximation \({\delta ^\varepsilon }\) constructed from a function \(\psi \) that satisfies Assumption 1 (irrespective of \(k\ge 0\)), also satisfies the following polynomial growth condition:

where the hidden constant depends on m, d and the choice of \(\psi \).

Proof

By considering the change of variable \(x = \xi \varepsilon \), we observe that

\(\square \)

We shall consider two common methods for choosing \(\psi (x)\). In the first case we choose a one dimensional function \(\psi _\rho \in L^\infty (\mathbb {R})\) so that \(\psi _\rho \) is supported in [0, 1], and we define the function \(\psi \) as

where \(I_d\) is a scaling factor, chosen so that \(\psi (x)\) integrates to one. With this choice, \(\psi \) satisfies Assumption 1 with k at least equal to one (i.e., it satisfies the first moments conditions).

The second construction method is usually referred to as tensor product construction. We start from a \(L^\infty (\mathbb {R})\) function \(\psi _{1d}\) that satisfies Assumption 1 for some k in dimension one. Then we define \(\psi (x)\) in dimension d by

The tensor product approximation \(\psi (x)\) satisfies Assumption 1 with \(r_0=\sqrt{d}\) and the same k of \(\psi _{1d}\). In particular \(k=0\) if \(\psi _{1d}\) is not symmetric, and k is equal to at least one if \(\psi _{1d}\) is symmetric. We refer to [19, Section 3] for an in depth discussion on other possible choices of Dirac approximation classes \(\psi (x)\) with possibly higher order moment conditions.

3.1 Unbounded domains

We begin by providing some results that follow from an application of Young’s inequality for convolutions:

Lemma 3

(Young’s inequality for convolutions [35]) Given \(f,g\in L^2({\mathbb {R}^d})\) and \(h\in L^1({\mathbb {R}^d})\),

Lemma 4

For \(0\le s \le k+1\), let \(v\in H^s({\mathbb {R}^d})\), and let \({v^\varepsilon }\) be defined by Definition 1. Then there holds

Proof

We start by considering \(v\in C^\infty _c({\mathbb {R}^d})\), and the case where s is integer, and \(1 \le s \le k+1\). By Taylor expansion, it is possible to expand v(y) around an arbitrary point x in a polynomial part \(p_x\) and a residual part \(r_x\), i.e.:

We start by considering \(v\in C^\infty _c({\mathbb {R}^d})\), and the case where s is integer, and \(1 \le s \le k+1\). By Taylor expansion, it is possible to expand v(y) around an arbitrary point x in a polynomial part \(p_x\) and a residual part \(r_x\), i.e.:

Here \(\alpha \) and \(\beta \) are multi-indices. If we regularize v, since the regularization is a linear operator, we obtain:

where in the last equality follows from Assumption 1.2 so that \(p_x^\varepsilon = p_x\) for any polynomial of order up to k.

Given any \(\theta \in L^2({\mathbb {R}^d})\), we deduce

Applying the definition of \(r_x(y)\), Fubini’s theorem, and by the change of variable \(\xi =x+ t(y-x)\) for a fixed \(x\in {\mathbb {R}^d}\), and \(t\in (0,1)\), we have:

Applying Lemmas 3 and 2 we get

To show (8) for \(s=0\), we note that the regularization operator is \(L^2\)-stable, i.e., for any \(\theta \in L^2({\mathbb {R}^d})\),

To show (8) for \(s=0\), we note that the regularization operator is \(L^2\)-stable, i.e., for any \(\theta \in L^2({\mathbb {R}^d})\),

Here we applied again Young’s inequality (Lemma 3) together with Lemma 2 for \(m=0\). Hence, \(\Vert {v^\varepsilon }\Vert _{L^2({\mathbb {R}^d})}\preceq \Vert v\Vert _{L^2({\mathbb {R}^d})}\), and \(\Vert v-{v^\varepsilon }\Vert _{L^2({\mathbb {R}^d})}\preceq \Vert v\Vert _{L^2({\mathbb {R}^d})}\) follows from the triangle inequality.

Taking the sup over \(\theta \) with unit \(L^2({\mathbb {R}^d})\) norm, and applying interpolation estimates between \(s=0\) and \(s=k+1\), the proof is complete by a density argument.

Taking the sup over \(\theta \) with unit \(L^2({\mathbb {R}^d})\) norm, and applying interpolation estimates between \(s=0\) and \(s=k+1\), the proof is complete by a density argument.

\(\square \)

The above lemma immediately implies the following convergence result in \({\mathbb {R}^d}\):

Theorem 2

(Regularization estimates in \({\mathbb {R}^d}\)) Let \(F\in H^m({\mathbb {R}^d})\), \(m \in [-k-1,0]\). For \(-k-1\le s \le m\le 0\), there holds

Moreover, let \(v\in H^m({\mathbb {R}^d})\), \(m \in [0,k+1]\). For \(s\in [-k-1,m]\) so that \(m-s \le k+1\), there holds

Here we identify v in the negative Sobolev space by the duality pairing: \(\langle v,\cdot \rangle = (v,\cdot )_{L^2({\mathbb {R}^d})}\).

Proof

Let us show the desired estimates in three steps.

For \(v\in C^\infty _c({\mathbb {R}^d})\), the definition of the weak derivative of \(D^\alpha {\delta ^\varepsilon }\) for \(|\alpha |\le k+1\) yields that for \(x\in {\mathbb {R}^d}\),

For \(v\in C^\infty _c({\mathbb {R}^d})\), the definition of the weak derivative of \(D^\alpha {\delta ^\varepsilon }\) for \(|\alpha |\le k+1\) yields that for \(x\in {\mathbb {R}^d}\),

Hence, we apply Lemma 4 to \((\sum _{|\alpha |=k+1} D^\alpha v)^\varepsilon \) with \(s=0\) to get

Interpolating the estimates between (8) and (11) implies (10) for \(0\le s \le m \le k+1\).

For \(F\in H^{m}({\mathbb {R}^d})\) with \(-k-1\le m\le 0\), we have

For \(F\in H^{m}({\mathbb {R}^d})\) with \(-k-1\le m\le 0\), we have

Similarly, for \(F\in H^{m}({\mathbb {R}^d})\) with \(-k-1\le m\le 0\),

So the first assertion follows from the interpolation between (12) and (13).

For \(v\in H^m({\mathbb {R}^d})\), \(m \in [0,k+1]\), interpolating the result (\(s\le 0\)) between \(\Vert v-v^\varepsilon \Vert _{H^{s}({\mathbb {R}^d})} \preceq \varepsilon ^{-s}\Vert v\Vert _{L^2({\mathbb {R}^d})}\) and \(\Vert v-v^\varepsilon \Vert _{L^{2}({\mathbb {R}^d})} \preceq \varepsilon ^{m}\Vert v\Vert _{H^m({\mathbb {R}^d})}\) concludes the proof of the second desired estimate, with \(m-s\le k+1\). \(\square \)

For \(v\in H^m({\mathbb {R}^d})\), \(m \in [0,k+1]\), interpolating the result (\(s\le 0\)) between \(\Vert v-v^\varepsilon \Vert _{H^{s}({\mathbb {R}^d})} \preceq \varepsilon ^{-s}\Vert v\Vert _{L^2({\mathbb {R}^d})}\) and \(\Vert v-v^\varepsilon \Vert _{L^{2}({\mathbb {R}^d})} \preceq \varepsilon ^{m}\Vert v\Vert _{H^m({\mathbb {R}^d})}\) concludes the proof of the second desired estimate, with \(m-s\le k+1\). \(\square \)

3.2 Bounded domains

The generalization of the previous results in bounded domains is non-trivial, due to the presence of boundaries. We start by providing some results that work well when restricting v to a region which is strictly contained in \(\varOmega \). Let this region be defined by a Lipschitz domain \(\omega \subset \varOmega \), and assume that there exists a positive constant \(c_0\) such that

The following result relies on the interior regularity of v. More precisely, according to (14), we assume that the regularization parameter satisfies \(\varepsilon \le \varepsilon _0\le c_0\) for some fixed \(\varepsilon _0\), and we set

Assuming that \(v\in H^s({\omega ^{\varepsilon _0}})\cap L^1(\varOmega )\) with \(s\in [0,k+1]\), we next provide similar error estimates compared with the unbounded case in the previous section. We note that results below are instrumental to our error estimates for the numerical approximation of the model problems in the next section.

Lemma 5

(\(L^2(\omega )\) estimate) For \(0\le s \le k+1\), and \(\varepsilon \le \varepsilon _0\le c_0\), let \(v\in L^1(\varOmega )\cap H^s(\omega ^{\varepsilon _0})\), and let \({v^\varepsilon }\) be defined as in Definition 1. Then there holds

Proof

We denote by \({\widetilde{\cdot }}\) the zero extension from \(\omega \), \({\omega ^{\varepsilon _0}}\) or \(\varOmega \) to \({\mathbb {R}^d}\). Since \(\varepsilon <{{\,\mathrm{dist}\,}}(\partial \omega ,\partial \varOmega )\), for any \(x\in \omega \), \({B_\varepsilon }(x)\subset \varOmega \) and

For any \(\theta \in L^2(\omega )\) and \(v\in C_0^\infty ({\overline{{\omega ^{\varepsilon _0}}}})\), we follow the proof of Lemma 4 to get

Here we apply again Assumption 1.2 for the last equality above. When it comes to the last inequality in (17), we note that for a fixed \(x\in \omega \) and for any \(y\in {\omega ^{\varepsilon _0}}\), the change of variable \(\xi = t(y-x)+x \) belongs to \({\omega ^{\varepsilon _0}}\) for any \(t\in (0,1)\). Hence we proceed following the proof of Lemma 4, Step 1 and apply Lemma 3 again to conclude the proof for a positive integer s. Replacing v and \(\theta \) with \({\widetilde{v}}\) and \({\widetilde{\theta }}\) in the Proof of Lemma 4, Step 2, we obtain (16) with \(s=0\). The assertion for any \(s\in [0,k+1]\) follows from the interpolation between \(s=0\) and \(s=k+1\). \(\square \)

Corollary 1

(\(H^s(\omega )\) estimate) For \(0\le s\le m \le k+1\), and \(\varepsilon _0\le c_0\), let \(v\in L^1(\varOmega )\cap H^m({\omega ^{\varepsilon _0}})\), and let \({v^\varepsilon }\) be defined by Definition 1. Then there exists \(\varepsilon _1 > 0\) small enough so that for \(\varepsilon < \varepsilon _1\),

Proof

Let m be a positive integer. Integration by parts yields that for \(v\in C^\infty _c({\overline{{\omega ^{\varepsilon _0}}}})\) with \(x\in \omega ^{\varepsilon _{0}/2}\) and for \(\varepsilon < \varepsilon _0/2\),

Repeating the above argument shows that given a multi-index \(\beta \) so that \(|\beta |=m\), \(D^\beta {v^\varepsilon }(x) = {(D^\beta v)^\varepsilon (x)}\) for \(x\in \omega ^{\varepsilon _0/2^m}\) when \(\varepsilon < \varepsilon _0/2^m\). We then apply (16) in Lemma 5 with \(s=0\) to \((\sum _{|\beta |\le m} D^\beta v)^\varepsilon \) and a density argument to obtain that

Interpolating the above estimate with (16) yields the desired estimate. \(\square \)

Given a functional \(F\in H^s(\varOmega )\) with \(-k-1\le s\le 0\), we say \(\text {supp}_m(F)\subseteq \omega \) for some \(m\in [s,0]\) if \(F \in H^{m}(\omega )\) for some \(s\in [-k-1,m]\) so that

Lemma 6

Let \(F\in H^{s}(\varOmega )\) with \(-k-1\le s\le 0\), and \(supp _m(F)\subseteq \omega \) for some \(m\in [s,0]\). Then, there holds

Proof

The proof is identical to the Proof of Theorem 2, replacing the application of Lemma 4 with that of Lemma 5 and Corollary 1. \(\square \)

The above results are summarized in the following theorem:

Theorem 3

(Regularization estimates in \(\varOmega \)) Let \(\omega \) be such that (14) holds, i.e.

Define the extension of \(\omega \) as in (15), i.e.,

and let \(\varepsilon \le \varepsilon _0\le c_0\). For \(0\le s \le m \le k+1\) where k is the order of the moments conditions satisfied by \({\delta ^\varepsilon }\) as in Assumption 1.2, we have:

-

If \(v\in H^m(\omega ^{\varepsilon _0}) \cap L^1(\varOmega )\), then

$$\begin{aligned} \Vert v-v^\varepsilon \Vert _{H^s(\omega )} \preceq \varepsilon ^{m-s} \Vert v\Vert _{H^m(\omega ^{\varepsilon _0})}. \end{aligned}$$ -

If \(F \in H^{-m}(\varOmega )\) and \(supp _{-s}(F) \subseteq \omega \), then

$$\begin{aligned} \Vert F-{F^\varepsilon }\Vert _{H^{-m}(\varOmega )} \preceq \varepsilon ^{m-s} \Vert F\Vert _{H^{-s}(\omega )}. \end{aligned}$$

4 Model problem

We are now in a position to apply the results of Theorem 3 to a model elliptic problem. Let A(x) be a symmetric \(d\times d\) matrix. We assume that all entries of A(x) are in \(C^1({\overline{\varOmega }})\), uniformly bounded, and that A(x) is positive definite, i.e., there exist positive constants \(a_0,a_1\) satisfying

We also set c(x) to be a nonnegative function in \(C^{0,1}({\overline{\varOmega }})\). We now define the forcing data for our model problem. We set \(F\in H^{-1}(\varOmega )\). We assume that for some \(s\in (0,\tfrac{1}{2}]\), \(\text {supp}_{s-1}(F)\subset \omega \).

Based on the above definitions, our model problem reads: find the distribution u satisfying

To approximate Problem (20) using the finite element method, we shall consider its variational formulation: find \(u\in V:={H^1_0(\varOmega )}\) satisfying

where

4.1 Regularity

Our error estimates rely on standard regularity results for elliptic problems: given \(g\in V'\), let \(T : V' \rightarrow V\) be the solution operator satisfying

We first note that if \(g\in L^2(\varOmega )\), we identify \(\langle g,\cdot \rangle _{V',V}\) with \((g,\cdot )_\varOmega \) and hence Tg has the \(H^2\) interior regularity, i.e., given a subset K such that \({\overline{K}}\subset \varOmega \), \(Tg\in H^2(K)\) and

where the hiding constant depends on K and \(\varOmega \); we refer to [11, Theorem 1 of Section 6.1] and [7, Theorem 5.33] for a standard proof. The following assumption provides the regularity of Tg up to the boundary

Assumption 4

(elliptic regularity) There exists \(r\in (0,1]\) and a positive constant \(C_r\) satisfying

As an example, consider the case where \(\varOmega \) is a polytope, A(x) is the identity matrix, and \(c(x)=0\), i.e., \({\mathcal {A}}\) becomes the Dirichlet form

Based the regularity results provided by [6], r in Assumption 4 is between \(\tfrac{1}{2}\) and 1 and can be decided by the shape of \(\varOmega \). Assumption 4 also implies that the solution u in (21) belongs to \(H^{1+\min \{s,r\}}(\varOmega )\cap {H^1_0(\varOmega )}\).

4.2 Analysis of a regularized problem

Now we are ready to define a regularized problem of (21): find \({\texttt {u}^\varepsilon }\in V\) satisfying

Remark 1

Notice that we denote with \({\texttt {u}^\varepsilon }\) the solution to Problem 25, and in this case the superscript \(\varepsilon \) does not denote a regularization (hence the different font used for \({\texttt {u}^\varepsilon }\)).

In order to bound the error between u and \({\texttt {u}^\varepsilon }\), we use the boundedness and coercivity of \({\mathcal {A}}(\cdot ,\cdot )\), and obtain that

This implies that

Applying Lemma 6 yields

Theorem 5

(\(H^1(\varOmega )\) error estimate) Under the assumptions in Proposition 6, let u and \({\texttt {u}^\varepsilon }\) be the solutions of problem (21) and (25), respectively. Then, there holds

4.3 \(L^2(\varOmega )\) error estimate

We next show the convergence rate for \({\texttt {u}^\varepsilon }\) in \(L^2(\varOmega )\) norm. To this end, we additionally assume that \({\delta ^\varepsilon }\) satisfies the moments conditions in Assumption 1.2 with \(k\ge 1\).

Theorem 6

(\(L^2(\varOmega )\) error estimate) Under the assumptions of Lemma 6, and Assumption 1.2 with \(k\ge 1\), let u and \({\texttt {u}^\varepsilon }\) be the solutions of problems (21) and (25), respectively. For a fixed \(\varepsilon _0<c_0\) and \(\varepsilon < \varepsilon _0/2\), there holds

Proof

We consider the following dual problem: find \(z\in V\) such that

Hence, we choose \(v=u-{\texttt {u}^\varepsilon }\) and obtain that

Due to the interior regularity of z, \(u-{\texttt {u}^\varepsilon }\in {H^1_0(\varOmega )}\subset L^2(\varOmega )\) implies that

We continue to estimate the right hand side of (26) by

where in the second inequality, we invoke Lemma 5 for z. Combing (26) and (27) concludes the proof of the theorem. \(\square \)

Remark 2

We have to point out that the estimates in Theorems 5 and 6 also hold for Problem (20) with other boundary conditions. These error estimates depend on the smoothness of the test function and of the solution for the dual problem in the neighborhood of \(\omega \) while \(\omega \) is away from the boundary. The analysis of the case where \(\omega \) is attached to the boundary \(\partial \varOmega \) is left aside for future investigation.

5 Application to immersed methods

The general results presented in the previous section can be applied immediately to immersed interface and immersed boundary methods [2, 15, 16, 18]. In this section, we consider an interface problem whose variational formulation can be written as in the model problem (21), and we shall consider its finite element approximation using the regularization of the forcing data given by the application of Definition 1 to functions in negative Sobolev spaces.

5.1 An interface problem via immersed methods

Let \(\varGamma \subset \varOmega \) be a closed Lipschitz interface with co-dimension one. We set \(\omega \) be the domain inside \(\varGamma \), and we assume that \(\omega \) satisfies the assumptions introduced in Sect. 2 (see Fig. 1).

Given \(f\in H^{s-1/2}(\varGamma )\) with \(s\in [0,\tfrac{1}{2}]\), we consider the following Poisson problem

For \(x\in \varGamma \), \(\nu (x)\) denotes the normal vector and \(\tfrac{\partial u}{\partial \nu }\) denotes the corresponding normal derivative. We also use the notation \(\llbracket \cdot \rrbracket \) for the jump across \(\varGamma \). More precisely speaking, letting \(u^-=u\big |_{\omega }\) and \(u^+=u\big |_{\varOmega \backslash {\overline{\omega }}}\), the jump across \(\varGamma \) is defined by

The above problem can be reformulated in the entire domain \(\varOmega \) using a singular forcing term that induces the correct jump on the gradient of the solution. (cf. [20]). The variational fomulation of the new problem is provided by (21) where \({\mathcal {A}}\) is the Dirichlet form (24) and

Here \(\delta \) denotes the d-dimensional Dirac delta distribution, and Eq. (29) should be interpreted variationally, i.e.,

We note that for any s in \([0,\tfrac{1}{2})\), \({\mathcal {M}}\) is a bounded map from \(H^{s-1/2}(\varGamma )\) to \(H^{s-1}({\omega ^{\varepsilon _1}})\cap H^{-1}(\varOmega )\) and hence the variational formulation makes sense. In fact, for \(v\in H^1(\varOmega )\),

Here we apply the trace Theorem (e.g. [26, Theorem 1.5.1.2]) for the second inequality. The above estimate together with (30) shows that \(F\in V'\). It also implies that \(F\in H^{s-1}({\omega ^{\varepsilon _1}})\) and hence \(\text {supp}(F)_{s-1}\subseteq \omega \). So F satisfies the setting in Sect. 4 and we can apply the results from Theorems 5 and 6 to the interface problem to get

We note that Fubini’s Theorem yields that

and hence

which is the classical formulation of \({F^\varepsilon }\) that can be found in the literature of the Immersed Boundary Method [29], where \({\delta ^\varepsilon }\) is always taken to be even (i.e., \(k\ge 1\)), and \({F^\varepsilon }\) is introduced as the regularization of f on \(\varGamma \), via the d-dimensional Dirac approximation \({\delta ^\varepsilon }\).

5.2 Finite element approximation of the regularized problem

In this section, we consider a finite element approximation of the regularized problem (25). Assume that \(\varOmega \) is polytope and let \(\{{\mathcal {T}}_h(\varOmega )\}_{h>0}\) be a family of conforming subdivisions of \({\overline{\varOmega }}\) made of simplices with h denoting their maximal size. We assume that \({\mathcal {T}}_h(\varOmega )\) are shape-regular and quasi-uniform in the sense of [5, 10].

Let \({\mathbb {V}}_h\) be the space of continuous piecewise linear functions subordinate to \({\mathcal {T}}_h(\varOmega )\) that vanish on \(\partial \varOmega \). Let \(I_h : H^1_0(\varOmega )\rightarrow {\mathbb {V}}_h\) be the Scott–Zhang interpolation [32] which has the following approximation property

The discrete counterpart of the regularized problem (25) reads: find \({\texttt {u}^\varepsilon _h}\in {\mathbb {V}}_h\) satisfying

For \(v_h\in {\mathbb {V}}_h\), \(\langle {F^\varepsilon },v_h\rangle _{V',V}\) can be computed by using a quadrature formula on \(\varGamma \) and a quadrature formula on \(\tau \in {\mathcal {T}}_h(\varOmega )\). We refer to the next Section for the details of the implementation.

The following theorem shows the error between u and its final approximation \({\texttt {u}^\varepsilon _h}\).

Theorem 7

(\(H^1(\varOmega )\) error estimate) Let u and \({\texttt {u}^\varepsilon _h}\) be the solutions to (21) and (34), respectively. Under Assumptions 4 and 1, we have

Proof

The coercivity of \({\mathcal {A}}(\cdot ,\cdot )\) implies that \({\mathcal {A}}(\cdot ,\cdot )\) is also \({\mathbb {V}}_h\) elliptic. The first Strang’s Lemma (see, e.g. [5, Theorem 4.1.1]) yields

Setting \(v_h=I_h u\) and invoking (33) together with Assumption 4 and Lemma 6, we conclude that

\(\square \)

We next show a \(L^2(\varOmega )\) error estimate between u and \({\texttt {u}^\varepsilon _h}\).

Theorem 8

(\(L^2(\varOmega )\) error estimate) Following the settings from Theorem 7, we additionally assume that \({\delta ^\varepsilon }\) satisfies Assumption 1.2 with \(k\ge 1\). Then we have

Proof

We first provide a bound on the error between \({\texttt {u}^\varepsilon }\) and \({\texttt {u}^\varepsilon _h}\) under the regularity assumption of f. In fact, the triangle inequality together with Theorems 6 and 7 implies that

Next we bound \(\Vert {\texttt {u}^\varepsilon }-{\texttt {u}^\varepsilon _h}\Vert _{L^2(\varOmega )}\) using a duality argument. Let \(\texttt {Z}\in {H^1_0(\varOmega )}\) satisfy that

Hence \(\texttt {Z}\in H^{1+r}(\varOmega )\). Applying Galerkin orthogonality

we obtain that

This, together with the regularity estimate \(\Vert \texttt {Z}\Vert _{H^{1+r}(\varOmega )}\preceq \Vert {\texttt {u}^\varepsilon }-{\texttt {u}^\varepsilon _h}\Vert _{L^2(\varOmega )}\), shows that

The triangle inequality \(\Vert u-{\texttt {u}^\varepsilon _h}\Vert _{L^2(\varOmega )}\le \Vert u-{\texttt {u}^\varepsilon }\Vert _{L^2(\varOmega )}+\Vert {\texttt {u}^\varepsilon }-{\texttt {u}^\varepsilon _h}\Vert _{L^2(\varOmega )}\) together with the above estimate and the \(L^2(\varOmega )\) estimate in Theorem 6 concludes the proof of the theorem. \(\square \)

We end this section with some remarks to further explain the error estimates and also for the numerical experiments in the next section.

Remark 3

(knowing the regularity of the solution) If the regularity of the solution is known, e.g., \(u\in H^{1+\beta }(\varOmega )\) for some \(\beta \in (0,1]\), we can apply the interpolation estimate (33) with \(s=\beta \) in Theorems 7 and 8 to get

and

Remark 4

(choices of \(\varepsilon \)) Usually we can choose \(\varepsilon = ch^q\) for some \(q\in (0,1]\) and for a fixed factor c. Hence, error estimates in the above remark become

6 Numerical illustrations

We implement the linear system of discrete problem (34) using the deal.II finite element Library [1, 25, 31]. Before validating our error estimates given by Theorems 7 and 8 via a series of numerical experiments, we want to make some remarks on the computation of the right hand side vector in discrete linear system.

Remark 5

(Approximation of the surface \(\varGamma \)) We shall compute the right hand side data on an approximation of a \(C^2\) interface \(\varGamma \) using the technology of the surface finite element method for the Laplace–Beltrami problem [8, 9]. Let \(\varGamma _{h_0}\) be a polytope which consists of simplices with co-dimension one, where \(h_0\) denotes the maximal size of the subdivision. All vertices of these simplices lie on \(\varGamma \) and similar to \({\mathcal {T}}_h(\varOmega )\), we assume that this subdivision of \(\varGamma _{h_0}\), denoted by \({\mathcal {T}}_{h_0}(\varGamma )\), is conforming, shape-regular, and quasi-uniform. We compute the forcing data at \(\varGamma _{h_0}\) with \(f^e(x) = f(p(x))\), where \(p(x)=x-d(x)\nabla d(x)\in \varGamma \) and d(x) is the signed distance function for \(\varGamma \). The error analysis for how the finite element approximation of u in (28) is affected by \(\varGamma _{h_0}\) is out of the scope of this paper. In what follows, we assume that \(h_0\) is small enough compared with h so that the error from the interface approximation does not affect the total error of our test problems.

Remark 6

(Comparing with the usual approach) Based on the previous remark and given a shape function \(\phi _h\in {\mathbb {V}}_h\), we can approximate the right hand side data \(\langle {F^\varepsilon },\phi _h\rangle _{V',V}\) using quadrature rules on both \(\tau _1\in {\mathcal {T}}_h(\varOmega )\) and \(\tau _2\in {\mathcal {T}}_{h_0}(\varGamma )\), i.e.,

Here \(\tau _1^\varepsilon \) follows from the definition (15) and \(supp (\phi _h)\) denotes the support of \(\phi _h\). \(\{w_{j_1},q_{j_1}\}_{j_1=1}^{J_{\tau _1}}\) and \(\{w_{j_2},q_{j_2}\}_{j_2=1}^{J_{\tau _2}}\) are pairs of quadrature weights and quadrature points defined on \(\tau _1\in \mathcal T_h(\varOmega )\) and \(\tau _2\in {\mathcal {T}}_{h_0}(\varGamma )\), respectively. On the other hand, letting Q be the collection of quadrature points for all \(\tau _2\in {\mathcal {T}}_{h_0}(\varGamma )\), the finite element method allows one to approximate directly \(\langle F,v_h\rangle _{V',V}\) by

We note that we usually compute \(\phi _h(q_{j_1})\) by using a transformation \(B_{\tau _1}\) mapping from the reference simplex \({\hat{\tau }}\) to \(\tau _1\in {\mathcal {T}}_h(\varOmega )\) and set \(q_{j_1} = B_{\tau _1}{\hat{q}}_{j_1}\) and \(w_{j_1} = {\hat{w}}_{j_1}|\det B_{\tau _1}|\), where \(\{{\hat{w}}_{j_1},{\hat{q}}_{j_1}\}\) is the pair of quadrature weights and quadrature points for \({\hat{\tau }}\). In the implementation, we store the reference shape function \({\hat{\phi _h}}\), the shape values \({\hat{\phi }}_h({\hat{q}}_{j_1})=\phi _h(q_{j_1})\) and \(w_{j_1}\) offline (and similar implementations for \(\tau _2\)) but we have to compute \( \phi _h(q_{j_2})\) online by \({\hat{\phi }}_h(B_{\tau _1}^{-1} q_{j_2})\).

We finally note that searching for \(q_{j_2}\in Q\) and \(\tau _2\in {\mathcal {T}}_{h_0}(\varGamma )\) intersecting with \(\tau _1\) can be accelerated by creating R-trees for \(q_{j_2}\) and bounding boxes of \(\tau _2\), respectively. Then we use the searching algorithms for intersection with the bounding box of \(\tau _1\in {\mathcal {T}}_h(\varOmega )\). In our implementation, we use the searching algorithms from the Boost.Geometry.Index library.

6.1 Tests on the unit square domain with different \({\delta ^\varepsilon }(x)\)

We test the interface problem (28) on the unit square domain but with a non-homogeneous Dirichlet boundary condition, following the examples provided in [18]. We set \(\varOmega =(0,1)^2\) and solve

where \(\varGamma =\partial B_{0.2}({\mathbf {c}})\) with \({\mathbf {c}}=(0.3,0.3)^{^\intercal }\), \(f=\tfrac{1}{0.2}\) and \(g=\ln (|x-{\mathbf {c}}|)\). The analytic solution is

In view of Assumption 4 and Remark 4, \(r=1\), \(s=\tfrac{1}{2}\) and \(u\in H^{3/2^-}(\varOmega )\). Hence setting \(\varepsilon = h\) yields

where \(\#\text {DoFs}\) stands for the number of degree of freedoms and we used the fact that \(h\sim \#\text {DoFs}^{-1/d}\) for quasi-uniform meshes. We shall compute \({\texttt {u}^\varepsilon _h}\) on a sequence of unstructured, quasi-uniform, quadrilateral meshes: we start to compute \(u^\varepsilon _{h_1}\) on a coarse mesh of \(\varOmega \) in Fig. 2. Then we compute the next approximated solution in a higher resolution based on the global refinement of the previous mesh. In the meantime, we also take the global refinement of approximated interface according to Remark 5. The right plot in Fig. 2 shows the approximated solution on the mesh produced from the coarse mesh with six-time global refinement (744,705 degree of freedoms).

In Fig. 3 we report \(L^2(\varOmega )\) and \(H^1(\varOmega )\) errors against \(\#\text {DoFs}\) using the following types of \({\delta ^\varepsilon }(x)\):

-

Radially symmetric \(C^1\): use (5) with \(\psi _\rho (x) = (1+\cos (|\pi x|))\chi _{B_1(0)}(x)/2\), where \(\chi _{B_1(0)}(x)\) is the characteristic function on \(B_1(0)\);

-

Tensor product \(C^1\): use (6) with \(\psi _{1d}(x) = (1+\cos (|\pi x|))\chi _{(-1,1)}(x)/2\);

-

Tensor product \(C^\infty \): use (6) with \(\psi _{1d} (x) = e^{1-1/(1-|x|^2)}\chi _{(-1,1)}(x)\);

-

Tensor product \(L^\infty \): use (6) with \(\psi _{1d} (x) = \tfrac{1}{2}\chi _{(-1,1)}(x)\).

The predicted rates are observed in all four types. Errors in the first three types behave similar while errors for the last type are larger than those in the other cases.

6.2 Tests on a L-shaped domain

Let \(\varOmega \) be the L-shaped domain \((-1,1)^2\backslash ([0,1]\times [-1,0])\) and \(\varGamma =\partial B_{0.2}({\mathbf {c}})\) with \({\mathbf {c}}=(-0.5,-0.5)^{^\intercal }\). We use the polar coordinates \((r(x),\theta (x))\) to define u by

so that \(u\in H^{4/3^-}(\varOmega )\) is the solution to the test problem (35) with \(f=1.5\) on \(\varGamma \) and \(g=u\) on \(\partial \varOmega \). Assumption 4 and Remark 4 imply that \(r=\tfrac{2}{3}\), \(s=\tfrac{1}{2}\) and \(\beta =\tfrac{1}{3}\). So letting \( \varepsilon =h^q, q\in (0,1]\), we should expect that

and

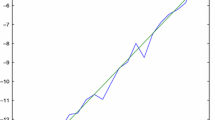

Figure 4 provides the coarse mesh of the numerical test and also the solution on the mesh with six-time global refinement (1,574,913 degrees of freedoms). In Fig. 5 we also report \(L^2(\varOmega )\) and \(H^1(\varOmega )\) errors against \( \#\text {DoFs}\) with \(q=0.2,0.4,0.6,0.8,1\) using the \({\delta ^\varepsilon }(x)\) with the type Tensor product \(C^1\). We again observed the predicted convergence rates from Fig. 5.

\(L^2(\varOmega )\) and \(H^1(\varOmega )\) errors against the number of degree of freedoms (\(\#\)DoFs) using the type Tensor product \(C^1\) for \({\delta ^\varepsilon }\). For each fixed type of \({\delta ^\varepsilon }\) we plot the error decay with the setting \(\varepsilon = h^q\) for \(q=0.2,0.4,0.6,0.8,1\). For each error plot, the last slope of the segment together with the predicted convergence rate are reported

(Left) clip of the coarse mesh (\(0\le x_1\le 0.3\)) of the unit cube \(\varOmega \) together with the subdivision of the interface \(\varGamma \) in red and (right) the approximated solution computed on the three-time global refinement mesh using the regularization with the type Tensor product \(C^1\)

Plots of \(L^2(\varOmega )\) error decay (left) and CPU time for right hand side assembling against the number of degree of freedoms using with or without regularization according to Remark 6. We regularize the forcing data using the type Tensor product C1 with \(\varepsilon =h\)

6.3 Tests on the unit cube

We finally test the interface problem (35) in the three dimensional space by setting \(\varOmega =(0,1)^3\), \(\varGamma =B_{0.2}({\mathbf {c}})\) with \({\mathbf {c}}=(0.3,0.3,0.3)^{^\intercal }\), \(f=1/0.2^2\) and \(g=1/|x-{\mathbf {c}}|\). Then the analytical solution is given by

Figure 6 shows the unstructured coarse mesh of the unit cube as well as the approximated solution on the mesh after the forth-time global refinement (2,324,113 degrees of freedoms). In Fig. 7 we plot the \(L^2(\varOmega )\) error decay by setting \(\varepsilon =h\) and the error decay without using Dirac delta approximations as mentioned in Remark 6. We also plot the CPU time for the computation of the right hand side vector against \(\#\text {DoFs}\) with or without regularization in Fig. 7. We note that the the computer we use has 2.2 GHz Intel Core i7 with 16GB memory. Figure 7 shows that both the computation time for the right hand side assembling and error using the regularization approach are comparable to those using the usual method. We have to point out that according to Remark 6, we cannot evaluate \(B_{\tau _1}^{-1}\) explicitly when the mesh is unstructured and here we use the Newton iteration instead. So if the geometry of each element is simple such as cube, the computation time without regularization can be reduced significantly.

References

Alzetta, G., Arndt, D., Bangerth, W., Boddu, V., Brands, B., Davydov, D., Gassmöller, R., Heister, T., Heltai, L., Kormann, K., Kronbichler, M., Maier, M., Pelteret, J.P., Turcksin, B., Wells, D.: The deal.II library, version 9.0. J. Numer. Math. 26(4), 173–183 (2018)

Alzetta, G., Heltai, L.: Multiscale modeling of fiber reinforced materials via non-matching immersed methods. Comput. Struct. 239, 106334 (2020)

Boffi, D., Gastaldi, L.: A finite element approach for the immersed boundary method. Comput. Struct. 81(8–11), 491–501 (2003)

Boffi, D., Gastaldi, L., Heltai, L., Peskin, C.S.: On the hyper-elastic formulation of the immersed boundary method. Comput. Methods Appl. Mech. Eng. 197(25–28), 2210–2231 (2008)

Ciarlet, P.G.: The finite element method for elliptic problems, Classics in Applied Mathematics, vol. 40. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2002). Reprint of the 1978 original [North-Holland, Amsterdam; MR0520174 (58 #25001)]

Dauge, M.: Elliptic boundary value problems on corner domains. Lecture Notes in Mathematics, vol. 1341. Springer-Verlag, Berlin (1988)

Demengel, F., Demengel, G.: Functional spaces for the theory of elliptic partial differential equations. Universitext. Springer, London; EDP Sciences, Les Ulis (2012). Translated from the 2007 French original by Reinie Erné

Demlow, A.: Higher-order finite element methods and pointwise error estimates for elliptic problems on surfaces. SIAM J. Numer. Anal. 47(2), 805–827 (2009)

Dziuk, G.: Finite elements for the Beltrami operator on arbitrary surfaces. In: Partial Differential Equations and Calculus of Variations, Lecture Notes in Mathematics, vol. 1357, pp. 142–155. Springer, Berlin (1988)

Ern, A., Guermond, J.L.: Theory and Practice of Finite Elements, Applied Mathematical Sciences, vol. 159. Springer-Verlag, New York (2004)

Evans, L.C.: Partial Differential Equations, Graduate Studies in Mathematics, vol. 19, 2nd edn. American Mathematical Society, Providence, RI (2010)

Friedrichs, K.O.: The identity of weak and strong extensions of differential operators. Trans. Am. Math. Soc. 55, 132 (1944)

Glowinski, R., Pan, T.W., Périaux, J.: A fictitious domain method for external incompressible viscous flow modeled by Navier–Stokes equations. Comput. Methods Appl. Mech. Eng. 112(1–4), 133–148 (1994)

Griffith, B.E., Peskin, C.S.: On the order of accuracy of the immersed boundary method: Higher order convergence rates for sufficiently smooth problems. J. Comput. Phys. 208(1), 75–105 (2005)

Heltai, L.: On the stability of the finite element immersed boundary method. Comput. Struct. 86(7–8), 598–617 (2008)

Heltai, L., Caiazzo, A.: Multiscale modeling of vascularized tissues via non-matching immersed methods. Int. J. Numer. Methods Biomed. Eng. 35(12), e3264 (2019)

Heltai, L., Costanzo, F.: Variational implementation of immersed finite element methods. Comput. Methods Appl. Mech. Eng. 229–232, 110–127 (2012)

Heltai, L., Rotundo, N.: Error estimates in weighted sobolev norms for finite element immersed interface methods. Comput. Math. Appl. 78(11), 3586–3604 (2019)

Hosseini, B., Nigam, N., Stockie, J.M.: On regularizations of the Dirac delta distribution. J. Comput. Phys. 305, 423–447 (2016)

Hsiao, G.C., Wendland, W.L.: Boundary integral equations, Applied Mathematical Sciences, vol. 164. Springer-Verlag, Berlin (2008)

Lai, M.C., Peskin, C.S.: An immersed boundary method with formal second-order accuracy and reduced numerical viscosity. J. Comput. Phys. 160(2), 705–719 (2000)

Leveque, R.J., Li, Z.: The immersed interface method for elliptic equations with discontinuous coefficients and singular sources. SIAM J. Numer. Anal. 31(4), 1019–1044 (1994)

Liu, Y., Mori, Y.: Properties of discrete delta functions and local convergence of the immersed boundary method. SIAM J. Numer. Anal. 50(6), 2986–3015 (2012)

Liu, Y., Mori, Y.: \(L^p\) convergence of the immersed boundary method for stationary stokes problems. SIAM J. Numer. Anal. 52(1), 496–514 (2014)

Maier, M., Bardelloni, M., Heltai, L.: Linear operator - a generic, high-level expression syntax for linear algebra. Comput. Math. Appl. 72(1), 1–24 (2016)

McLean, W.: Strongly Elliptic Systems and Boundary Integral Equations. Cambridge University Press, Cambridge (2000)

Mittal, R., Iaccarino, G.: Immersed boundary methods. Ann. Rev. Fluid Mech. 37(1), 239–261 (2005)

Mori, Y.: Convergence proof of the velocity field for a stokes flow immersed boundary method. Commun. Pure Appl. Math. 61(9), 1213–1263 (2008)

Peskin, C.S.: The immersed boundary method. Acta. Numer. 11(1), 479–517 (2002)

Saito, N., Sugitani, Y.: Analysis of the immersed boundary method for a finite element Stokes problem. Numer. Methods Partial Diff. Eqn. 35(1), 181–199 (2019)

Sartori, A., Giuliani, N., Bardelloni, M., Heltai, L.: deal2lkit: A toolkit library for high performance programming in deal.II. SoftwareX 7, 318–327 (2018)

Scott, L.R., Zhang, S.: Finite element interpolation of nonsmooth functions satisfying boundary conditions. Math. Comp. 54(190), 483–493 (1990)

Soboleff, S.: Sur un théorème d’analyse fonctionnelle. Matematicheskii Sbornik 46(3), 471–497 (1938)

Tornberg, A.K., Engquist, B.: Numerical approximations of singular source terms in differential equations. J. Comput. Phys. 200(2), 462–488 (2004)

Young, W.H.: On the multiplication of successions of fourier constants. Proc. Royal Soc. A: Math. Phys. Eng. Sci. 87(596), 331–339 (1912)

Funding

Open access funding provided by Scuola Internazionale Superiore di Studi Avanzati - SISSA within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors would like to thank anonymous referees for their helpful comments and suggestions. LH is partially supported by the Italian Ministry of Instruction, University and Research (MIUR), under the 2017 PRIN project NA-FROM-PDEs MIUR PE1, “Numerical Analysis for Full and Reduced Order Methods for the efficient and accurate solution of complex systems governed by Partial Differential Equations”.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heltai, L., Lei, W. A priori error estimates of regularized elliptic problems. Numer. Math. 146, 571–596 (2020). https://doi.org/10.1007/s00211-020-01152-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-020-01152-w