Abstract

We consider large non-Hermitian real or complex random matrices \(X\) with independent, identically distributed centred entries. We prove that their local eigenvalue statistics near the spectral edge, the unit circle, coincide with those of the Ginibre ensemble, i.e. when the matrix elements of \(X\) are Gaussian. This result is the non-Hermitian counterpart of the universality of the Tracy–Widom distribution at the spectral edges of the Wigner ensemble.


1 Introduction

Following Wigner’s motivation from physics, most universality results on the local eigenvalue statistics for large random matrices concern the Hermitian case. In particular, the celebrated Wigner–Dyson statistics in the bulk spectrum [44], the Tracy–Widom statistics [56, 57] at the spectral edge and the Pearcey statistics [47, 58] at the possible cusps of the eigenvalue density profile all describe eigenvalue statistics of a large Hermitian random matrix. In the last decade there has been spectacular progress in verifying Wigner’s original vision, formalized as the Wigner–Dyson–Mehta conjecture, for Hermitian ensembles of increasing generality, see e.g. [2, 15, 23,24,25,26, 35, 37, 40, 42, 45, 48, 52] for the bulk, [5, 12, 13, 34, 38, 39, 46, 50, 53] for the edge and more recently [17, 22, 33] at the cusps.

Much less is known about the spectral universality for non-Hermitian models. In the simplest case of the Ginibre ensemble, i.e. random matrices with i.i.d. standard Gaussian entries without any symmetry condition, explicit formulas for all correlation functions have been computed first for the complex case [31] and later for the more complicated real case [10, 36, 49] (with special cases solved earlier [20, 21, 43]). Beyond the explicitly computable Ginibre case only the method of four moment matching by Tao and Vu has been available. Their main universality result in [54] states that the local correlation functions of the eigenvalues of a random matrix \(X\) with i.i.d. matrix elements coincide with those of the Ginibre ensemble as long as the first four moments of the common distribution of the entries of \(X\) (almost) match the first four moments of the standard Gaussian. This result holds for both real and complex cases as well as throughout the spectrum, including the edge regime.

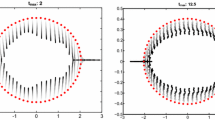

In the current paper we prove edge universality for any \(n\times n\) random matrix \(X\) with centred i.i.d. entries; in particular, in the edge regime we remove the four moment matching condition from [54]. More precisely, under the normalization \({{\,\mathrm{\mathbf {E}}\,}}\left|x_{ab}\right|^2= \frac{1}{n}\), the spectrum of \(X\) converges to the unit disc with a uniform spectral density according to the circular law [6,7,8, 30, 32, 51]. The typical distance between nearest eigenvalues is of order \(n^{-1/2}\). We pick a reference point \(z\) on the boundary of the limiting spectrum, \(\left|z\right|=1\), and rescale the correlation functions around \(z\) on the scale \(n^{-1/2}\) to detect correlations of individual eigenvalues. We show that these rescaled correlation functions converge to those of the Ginibre ensemble as \(n\rightarrow \infty \). This result is the non-Hermitian analogue of the Tracy–Widom edge universality in the Hermitian case. A similar result is expected to hold in the bulk regime, i.e. for any reference point \(\left|z\right|<1\), but our method is currently restricted to the edge.
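A quick simulation illustrates the normalization and the circular law described above. The NumPy sketch below (the helper name `ginibre` is our own, hypothetical choice) samples a complex Ginibre matrix with \({{\,\mathrm{\mathbf {E}}\,}}\left|x_{ab}\right|^2=1/n\). Since the uniform distribution on the unit disc has \({{\,\mathrm{\mathbf {E}}\,}}\left|\sigma \right|^2=1/2\), the empirical mean of \(\left|\sigma _i\right|^2\) should be close to \(1/2\), and the spectral radius should be close to \(1\).

```python
import numpy as np

def ginibre(n, rng, complex_entries=True):
    """Sample an n x n Ginibre matrix normalised so that E|x_ab|^2 = 1/n."""
    if complex_entries:
        # Real and imaginary parts each have variance 1/(2n).
        re = rng.standard_normal((n, n))
        im = rng.standard_normal((n, n))
        return (re + 1j * im) / np.sqrt(2 * n)
    return rng.standard_normal((n, n)) / np.sqrt(n)

rng = np.random.default_rng(seed=0)
n = 500
sigma = np.linalg.eigvals(ginibre(n, rng))

# Circular law: eigenvalues are asymptotically uniform on the unit disc,
# so the empirical mean of |sigma|^2 should be close to 1/2.
mean_abs_sq = float(np.mean(np.abs(sigma) ** 2))
# The spectral radius should be close to 1 (the edge of the limiting spectrum).
spectral_radius = float(np.max(np.abs(sigma)))
print(f"mean |sigma|^2 = {mean_abs_sq:.3f}, spectral radius = {spectral_radius:.3f}")
```

Increasing `n` makes both quantities concentrate further; the edge fluctuations that the paper studies live on the scale \(n^{-1/2}\) around the unit circle.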

Investigating spectral statistics of non-Hermitian random matrices is considerably more challenging than Hermitian ones. We give two fundamental reasons for this: the first one is already present in the proof of the circular law on the global scale. The second one is specific to the most powerful existing method to prove universality of eigenvalue fluctuations.

The first issue is a general one: it is well known that non-Hermitian, especially non-normal, spectral analysis is difficult because, unlike in the Hermitian case, the resolvent \((X-z)^{-1}\) of a non-normal matrix is not an effective tool for studying eigenvalues near \(z\). Indeed, \((X-z)^{-1}\) can be very large even if \(z\) is far from the spectrum, a fact that is closely related to the instability of non-Hermitian eigenvalues under perturbations. The only useful expression to grasp non-Hermitian eigenvalues is Girko’s celebrated formula, see (14) later, expressing linear statistics of eigenvalues of \(X\) in terms of the log-determinant of the symmetrized matrix

$$\begin{aligned} H^z:=\begin{pmatrix} 0 & X-z \\ (X-z)^* & 0 \end{pmatrix}. \qquad (1) \end{aligned}$$

Girko’s formula is much more subtle and harder to analyse than the analogous expression for the Hermitian case involving the boundary value of the resolvent on the real line. In particular, it requires a good lower bound on the smallest singular value of \(X-z\), a notorious difficulty behind the proof of the circular law. Furthermore, any conceivable universality proof would rely on a local version of the circular law as an a priori control. Local laws on the optimal scale assert that the eigenvalue density on a scale \(n^{-1/2+\epsilon }\) is deterministic with high probability, i.e. they are law of large numbers type results and are not sufficiently refined to detect correlations of individual eigenvalues. The proof of the local circular law requires a careful analysis of \(H^z\), which has an additional structural instability due to its block symmetry. A specific estimate, tailored to Girko’s formula, on the trace of the resolvent of \((H^z)^2\) was the main ingredient behind the proof of the local circular law on the optimal scale [14, 16, 59], see also [54] under a three moment matching condition. Very recently the optimal local circular law was even proven for ensembles with inhomogeneous variance profiles in the bulk [3] and at the edge [4]; the latter result also gives an optimal control on the spectral radius. An optimal local law for \(H^z\) in the edge regime had previously not been available, even in the i.i.d. case.

The second major obstacle to proving universality of fluctuations of non-Hermitian eigenvalues is the lack of a good analogue of the Dyson Brownian motion. The essential ingredient behind the strongest universality results in the Hermitian case is the Dyson Brownian motion (DBM) [19], a system of coupled stochastic differential equations (SDE) satisfied by the eigenvalues of a natural stochastic flow of random matrices, see [27] for a pedagogical summary. The corresponding SDE in the non-Hermitian case involves not only eigenvalues but also overlaps of eigenvectors, see e.g. [11, Appendix A]. Since the overlaps themselves are strongly correlated, and proving these correlations is highly nontrivial even in the Ginibre case [11, 29], the analysis of this SDE is currently beyond reach.

Our proof of edge universality circumvents DBM and has two key ingredients. The first main input is an optimal local law for the resolvent of \(H^z\) in both the isotropic and the averaged sense, see (13) later, that allows for a concise and transparent comparison of the joint distribution of several resolvents of \(H^z\) with their Gaussian counterparts by following their evolution under the natural Ornstein–Uhlenbeck (OU) flow. We are able to control this flow for a long time, similarly to an earlier proof of the Tracy–Widom law at the spectral edge of a Hermitian ensemble [41]. Note that the density of eigenvalues of \(H^z\) develops a cusp as \(\left|z\right|\) passes through 1, the spectral radius of \(X\). The optimal local law for very general Hermitian ensembles in the cusp regime has recently been proven [22], strengthening the non-optimal result in [2]. This optimality was essential in the proof of the universality of the Pearcey statistics for both complex Hermitian [22] and real symmetric [17] matrices with a cusp in their density of states. The matrix \(H^z\), however, does not satisfy the key flatness condition required in [22] due to its large zero blocks. A very delicate analysis of the underlying matrix Dyson equation was necessary to overcome the lack of flatness and prove the optimal local law for \(H^z\) in [3, 4].

Our second key input is a lower tail estimate on the lowest singular value of \(X-z\) when \(\left|z\right|\approx 1\). A very mild regularity assumption on the distribution of the matrix elements of \(X\), see (4) later, guarantees that there is no singular value below \(n^{-100}\), say. Cruder bounds guarantee that there cannot be more than \(n^\epsilon \) singular values below \(n^{-3/4}\); note that this natural scaling reflects the cusp at zero in the density of states of \(H^z\). Such information on the possible singular values in the regime \([n^{-100}, n^{-3/4}]\) is sufficient for the optimal local law since it is insensitive to \(n^\epsilon \)-eigenvalues, but for universality every eigenvalue must be accounted for. We therefore need a stronger lower tail bound on the lowest eigenvalue \(\lambda _1\) of \((X-z)(X-z)^*\). With supersymmetric methods we recently proved [18] a precise bound of the form

modulo logarithmic corrections, for the Ginibre ensemble whenever \(\left|z\right|=1 + \mathcal {O}(n^{-1/2})\). Most importantly, (2) controls \(\lambda _1\) on the optimal scale \(n^{-3/2}\) (recall that \(\lambda _1\) is the square of the smallest singular value of \(X-z\)) and thus excludes singular values in the intermediate regime \([n^{-100}, n^{-3/4-\epsilon }]\) that was inaccessible with other methods. We extend this control from the Ginibre ensemble to \(X\) with general i.i.d. entries by a Green function comparison argument, again using the optimal local law for \(H^z\).

1.1 Notations and conventions

We introduce some notation used throughout the paper. We write \(\mathbb {H}\) for the upper half-plane \(\mathbb {H}:=\{z\in \mathbb {C}\vert \mathfrak {I}z>0\}\), and for any \(z\in \mathbb {C}\) we use the notation \({\text {d}}\!{}z:= 2^{-1} \text {i}({\text {d}}\!{}z\wedge {\text {d}}\!{}\overline{z})\) for the two dimensional volume form on \(\mathbb {C}\). For any \(2n\times 2n\) matrix \(A\) we use the notation \(\langle A\rangle := (2n)^{-1}{{\,\mathrm{Tr}\,}}A\) to denote the normalized trace of \(A\). For positive quantities \(f,g\) we write \(f\lesssim g\) and \(f\sim g\) if \(f \le C g\) or \(c g\le f\le Cg\), respectively, for some constants \(c,C>0\) which depend only on the constants appearing in (3). We denote vectors by bold-faced lower case Roman letters \({\varvec{x}}, {\varvec{y}}\in \mathbb {C}^k\), for some \(k\in \mathbb {N}\). Vector and matrix norms, \(\Vert \varvec{x}\Vert \) and \(\Vert A\Vert \), indicate the usual Euclidean norm and the corresponding induced matrix norm. Moreover, for a vector \({\varvec{x}}\in \mathbb {C}^k\), we use the notation \({\text {d}}\!{}{\varvec{x}}:= {\text {d}}\!{}x_1\dots {\text {d}}\!{}x_k\).

We will use the concept of “with very high probability” meaning that for any fixed \(D>0\) the probability of the event is bigger than \(1-n^{-D}\) if \(n\ge n_0(D)\). Moreover, we use the convention that \(\xi >0\) denotes an arbitrarily small constant.

We use the convention that quantities without tilde refer to a general matrix with i.i.d. entries, whilst any quantity with tilde refers to the Ginibre ensemble, e.g. we use \(X\), \(\{\sigma _i\}_{i=1}^n\) to denote a non-Hermitian matrix with i.i.d. entries and its eigenvalues, respectively, and \(\widetilde{X}\), \(\{\widetilde{\sigma }_i\}_{i=1}^n\) to denote their Ginibre counterparts.

2 Model and main results

We consider real or complex i.i.d. matrices \(X\), i.e. matrices whose entries are independent and identically distributed as \(x_{ab} {\mathop {=}\limits ^{d}} n^{-1/2}\chi \) for a random variable \(\chi \). We formulate two assumptions on the random variable \(\chi \):

Assumption (A)

In the real case we assume that \({{\,\mathrm{\mathbf {E}}\,}}\chi =0\) and \({{\,\mathrm{\mathbf {E}}\,}}\chi ^2=1\), while in the complex case we assume \({{\,\mathrm{\mathbf {E}}\,}}\chi ={{\,\mathrm{\mathbf {E}}\,}}\chi ^2=0\) and \({{\,\mathrm{\mathbf {E}}\,}}\left|\chi \right|^2=1\). In addition, we assume the existence of high moments, i.e. that there exist constants \(C_p>0\) for each \(p\in \mathbb {N}\) such that

$$\begin{aligned} {{\,\mathrm{\mathbf {E}}\,}}\left|\chi \right|^p\le C_p. \qquad (3) \end{aligned}$$

Assumption (B)

There exist \(\alpha ,\beta >0\) such that the probability density \(g:\mathbb {F}\rightarrow [0,\infty )\) of the random variable \(\chi \) satisfies

where \(\mathbb {F}=\mathbb {R},\mathbb {C}\) in the real and complex case, respectively.

Remark 1

We remark that we use Assumption (B) only to control the probability of a very small singular value of \(X-z\). Alternatively, one may use the statement

for any \(l\ge 1\), uniformly in \(\left|z\right|\le 2\), that follows directly from [55, Theorem 3.2] without Assumption (B). Using (5) makes Assumption (B) superfluous in the entire paper, albeit at the expense of a quite sophisticated proof.

We denote the eigenvalues of \(X\) by \(\sigma _1,\dots ,\sigma _n\in \mathbb {C}\), and define the \(k\)-point correlation function \(p_k^{(n)}\) of \(X\) implicitly such that

for any smooth compactly supported test function \(F:\mathbb {C}^k \rightarrow \mathbb {C}\), with \(i_j\in \{1,\dots , n\}\) for \(j\in \{1,\dots ,k\}\) all distinct. For the important special case when \(\chi \) follows a standard real or complex Gaussian distribution, we denote the \(k\)-point function of the Ginibre matrix \(X\) by \(p_k^{(n,\text {Gin}(\mathbb {F}))}\) for \(\mathbb {F}=\mathbb {R},\mathbb {C}\). The circular law implies that the \(1\)-point function converges

to the uniform distribution on the unit disk. On the scale \(n^{-1/2}\) of individual eigenvalues the scaling limit of the \(k\)-point function has been explicitly computed in the case of complex and real Ginibre matrices, \(X\sim \text {Gin}(\mathbb {C}),\text {Gin}(\mathbb {R})\), i.e. for any fixed \(z_1,\dots ,z_k,w_1,\dots ,w_k\in \mathbb {C}\) there exist scaling limits \(p_{z_1,\dots ,z_k}^{(\infty )}=p_{z_1,\dots ,z_k}^{(\infty ,\text {Gin}(\mathbb {F}))}\) for \(\mathbb {F}=\mathbb {R},\mathbb {C}\) such that

Remark 2

The \(k\)-point correlation function \(p_{z_1,\dots , z_k}^{(\infty ,\text {Gin}(\mathbb {F}))}\) of the Ginibre ensemble in both the complex and real cases \(\mathbb {F}=\mathbb {C},\mathbb {R}\) is explicitly known; see [31] and [44] for the complex case, and [10, 20, 28] for the real case, where the appearance of \(\sim n^{1/2}\) real eigenvalues causes a singularity in the density. In the complex case \(p_{z_1,\dots , z_k}^{(\infty ,\text {Gin}(\mathbb {C}))}\) is determinantal, i.e. for any \(w_1,\dots , w_k\in \mathbb {C}\) it holds

$$\begin{aligned} p_{z_1,\dots , z_k}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,\dots ,w_k)=\det \left[ K_{z_i,z_j}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_i,w_j)\right] _{i,j=1}^k, \end{aligned}$$

where for any complex numbers \(z_1\), \(z_2\), \(w_1\), \(w_2\) the kernel \(K_{z_1,z_2}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,w_2)\) is defined by

(i) For \(z_1\ne z_2\), \(K_{z_1,z_2}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,w_2)=0\).

(ii) For \(z_1=z_2\) and \(\left|z_1\right|>1\), \(K_{z_1,z_2}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,w_2)=0\).

(iii) For \(z_1=z_2\) and \(\left|z_1\right|<1\),

$$\begin{aligned} K_{z_1,z_2}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,w_2)=\frac{1}{\pi }e^{-\frac{\left|w_1\right|^2}{2} -\frac{\left|w_2\right|^2}{2}+w_1\overline{w_2}}. \end{aligned}$$

(iv) For \(z_1=z_2\) and \(\left|z_1\right|=1\),

$$\begin{aligned} K_{z_1,z_2}^{(\infty ,\text {Gin}(\mathbb {C}))}(w_1,w_2)=\frac{1}{2\pi }\left[ 1+ {{\,\mathrm{erf}\,}}\left( -\sqrt{2}(z_1\overline{w_2}+w_1\overline{z_2})\right) \right] e^{-\frac{\left|w_1\right|^2}{2}-\frac{\left|w_2\right|^2}{2}+w_1\overline{w_2}}, \end{aligned}$$

where

$$\begin{aligned} {{\,\mathrm{erf}\,}}(z):= \frac{2}{\sqrt{\pi }}\int _{\gamma _z} e^{-t^2}\, {\text {d}}\!{}t, \end{aligned}$$

for any \(z\in \mathbb {C}\), with \(\gamma _z\) any contour from \(0\) to \(z\).

For the corresponding much more involved formulas for \(p_k^{(\infty ,\text {Gin}(\mathbb {R}))}\) we refer the reader to [10].
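On the diagonal \(w_1=w_2=w\) the kernel gives the one-point function (the case \(k=1\) of the determinantal formula), and in case (iv) the Gaussian factor equals \(1\) while \(z_1\overline{w}+w\overline{z_1}=2\mathfrak {R}(w\overline{z_1})\) is real. The short sketch below uses only the formulas of cases (iii) and (iv); the function names are our own. It confirms that the edge density interpolates between the bulk value \(1/\pi \) inside the disc and \(0\) outside, and takes exactly half the bulk value at the reference point itself.

```python
import cmath
import math

def edge_density(z, w):
    """Diagonal K_{z,z}(w, w) of the complex Ginibre edge kernel, case (iv), |z| = 1."""
    # For w1 = w2 = w the exponential factor is exp(0) = 1, and
    # z*conj(w) + w*conj(z) = 2 Re(w * conj(z)) is real, so math.erf suffices.
    s = 2.0 * (w * z.conjugate()).real
    return (1.0 + math.erf(-math.sqrt(2.0) * s)) / (2.0 * math.pi)

def bulk_density():
    """Diagonal K_{z,z}(w, w) in case (iii), |z| < 1: constant 1/pi."""
    return 1.0 / math.pi

z = cmath.exp(0.7j)  # an arbitrary point on the unit circle
print(edge_density(z, 0))       # half the bulk density
print(edge_density(z, -3 * z))  # three units inside the disc: close to 1/pi
print(edge_density(z, 3 * z))   # three units outside the disc: close to 0
```

Moving \(w\) tangentially to the circle (so that \(\mathfrak {R}(w\overline{z})=0\)) leaves the density at \(1/(2\pi )\), reflecting the rotation invariance of the Ginibre ensemble.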

Our main result is the universality of \(p_{z_1,\dots ,z_k}^{(\infty ,\text {Gin}(\mathbb {R},\mathbb {C}))}\) at the edge. In particular, we show that the edge-scaling limit of \(p_k^{(n)}\) agrees with the known scaling limit of the corresponding real or complex Ginibre ensemble.

Theorem 1

(Edge universality) Let \(X\) be an i.i.d. \(n\times n\) matrix whose entries satisfy Assumptions (A) and (B). Then, for any fixed integer \(k\ge 1\), and complex spectral parameters \(z_1, \dots , z_k\) such that \(\left|z_j\right|^2=1\), \(j=1,\dots ,k\), and for any compactly supported smooth function \(F:\mathbb {C}^k \rightarrow \mathbb {C}\), we have the bound

where the constant in \(\mathcal {O}(\cdot )\) may depend on \(k\) and the \(C^{2k+1}\) norm of \(F\), and \(c>0\) is a small constant depending on \(k\).

2.1 Proof strategy

For the proof of Theorem 1 it is essential to study the linearized \(2n\times 2n\) matrix \(H^z\) defined in (1) with eigenvalues \(\lambda _1^z\le \dots \le \lambda _{2n}^z\) and resolvent \(G(w)=G^z(w):= (H^z-w)^{-1}\). We note that the block structure of \(H^z\) induces a spectrum symmetric around \(0\), i.e. \(\lambda _i^z=-\lambda _{2n-i+1}^z\) for \(i=1,\dots ,n\). The resolvent becomes approximately deterministic as \(n\rightarrow \infty \) and its limit can be found by solving the simple scalar equation

which is a special case of the matrix Dyson equation (MDE), see e.g. [1]. In the following we may often omit the \(z\)-dependence of \(\widehat{m}^z\), \(G^z(w)\), \(\dots \), in the notation. We note that on the imaginary axis we have \(\widehat{m}(\text {i}\eta )=\text {i}\mathfrak {I}\widehat{m}(\text {i}\eta )\), and in the edge regime \(\left|1-\left|z\right|^2\right|\lesssim n^{-1/2}\) we have the scaling [4, Lemma 3.3]

For \(\eta >0\) we define

where \(M\) should be understood as a \(2n\times 2n\) matrix whose four \(n\times n\) blocks are all multiples of the identity matrix, and we note that [4, Eq. (3.62)]

Throughout the proof we shall make use of the following optimal local law which is a direct consequence of [4, Theorem 5.2] (extending [3, Theorem 5.2] to the edge regime). Compared to [4] we require the local law simultaneously in all the spectral parameters \(z,\eta \) and for \(\eta \) slightly below the fluctuation scale \(n^{-3/4}\). We defer the proofs for both extensions to “Appendix A”.
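The cusp behaviour of the self-consistent density at the edge can be explored numerically. Since the scalar equation (9) is not reproduced in this text, the sketch below assumes it has the form \(-1/\widehat{m}=w+\widehat{m}-\left|z\right|^2/(w+\widehat{m})\) (our assumption, modelled on the Dyson equation for i.i.d. matrices). On the imaginary axis \(\widehat{m}(\text {i}\eta )=\text {i}u\) with \(u>0\), and the equation reduces to \(1/u=\eta +u+\left|z\right|^2/(\eta +u)\), which we solve by bisection (the helper `im_m_hat` is hypothetical). At \(\left|z\right|=1\) the solution behaves like \(\eta ^{1/3}\), the cusp scaling mentioned in the Introduction.

```python
import math

def im_m_hat(eta, z_abs_sq, iters=200):
    """Return u = Im m(i*eta) > 0 for |z|^2 = z_abs_sq <= 1 (hypothetical helper).

    ASSUMPTION: the scalar equation (9) is taken to be
    -1/m = w + m - |z|^2/(w + m); with w = i*eta and m = i*u it becomes
    1/u = eta + u + |z|^2/(eta + u).
    """
    def g(u):
        return 1.0 / u - (eta + u) - z_abs_sq / (eta + u)

    # For |z| <= 1, g is strictly decreasing on (0, inf), tends to +inf as
    # u -> 0+ and satisfies g(1/eta) < 0, so bisection finds the unique root.
    lo, hi = 0.0, 1.0 / eta
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Edge |z| = 1: the density of H^z has a cusp at 0, and Im m(i*eta) ~ eta^(1/3).
for eta in (1e-3, 1e-6, 1e-9):
    u = im_m_hat(eta, 1.0)
    print(f"eta = {eta:.0e}: Im m = {u:.4e}, Im m / eta^(1/3) = {u / eta ** (1 / 3):.4f}")
```

For \(\left|z\right|<1\) the same routine instead returns a value close to \(\sqrt{1-\left|z\right|^2}\) as \(\eta \rightarrow 0\), i.e. a positive density at zero, which is why the edge regime \(\left|z\right|\approx 1\) is the delicate one.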

Proposition 1

(Local law for \(H^z\)) Let \(X\) be an i.i.d. \(n\times n\) matrix whose entries satisfy Assumptions (A) and (B), and let \(H^z\) be as in (1). Then for any deterministic vectors \(\varvec{x},\varvec{y}\) and matrix \(R\) and any \(\xi >0\) the following holds true with very high probability: simultaneously for any \(z\) with \(\left|1-\left|z\right|\right|\lesssim n^{-1/2}\) and all \(\eta \) such that \(n^{-1}\le \eta \le n^{100}\) we have the bounds

For the application of Proposition 1 towards the proof of Theorem 1, only the special case is needed in which \(R\) is the identity matrix and \(\varvec{x},\varvec{y}\) are either the standard basis vectors or the vectors \(\varvec{1}_\pm \) of zeros and ones defined later in (58).

The linearized matrix \(H^z\) can be related to the eigenvalues \(\sigma _i\) of \(X\) via Girko’s Hermitization formula [32, 54]

for rescaled test functions \(f_{z_0}(z):= n f(\sqrt{n}(z-z_0))\), where \(f:\mathbb {C}\rightarrow \mathbb {C}\) is smooth and compactly supported. When using (14), the small-\(\eta \) regime requires additional bounds on the number of small eigenvalues \(\lambda _i^z\) of \(H^z\), or equivalently small singular values of \(X-z\). For very small \(\eta \), say \(\eta \le n^{-100}\), the absence of eigenvalues below \(\eta \) can easily be ensured by Assumption (B). For \(\eta \) just below the critical scale \(n^{-3/4}\), however, we need to prove an additional bound on the number of eigenvalues, as stated below.

Proposition 2

For any \(n^{-1}\le \eta \le n^{-3/4}\) and \(\left|\left|z\right|^2-1\right|\lesssim n^{-1/2}\) we have the bound

on the number of small eigenvalues, for any \(\xi >0\).

We remark that the precise asymptotics of (15) are of no importance for the proof of Theorem 1. Instead it would be sufficient to establish that for any \(\epsilon >0\) there exists \(\delta >0\) such that we have \({{\,\mathrm{\mathbf {E}}\,}}\left|\{i \vert \left|\lambda _i^z\right|\le n^{-3/4-\epsilon }\}\right|\lesssim n^{-\delta }\).
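The equivalence between small eigenvalues of \(H^z\) and small singular values of \(X-z\), as well as the symmetry \(\lambda _i^z=-\lambda _{2n-i+1}^z\), can be checked directly. The NumPy sketch below (the helper `hermitization` is a hypothetical name) builds \(H^z\) as in (1) for a complex Ginibre sample at a point on the unit circle. Its eigenvalues are \(\pm \) the singular values of \(X-z\), so the number of eigenvalues of \(H^z\) in \([-\eta ,\eta ]\) is twice the number of singular values of \(X-z\) in \([0,\eta ]\).

```python
import numpy as np

def hermitization(X, z):
    """Hypothetical helper: the 2n x 2n matrix [[0, X - z], [(X - z)^*, 0]]."""
    n = X.shape[0]
    A = X - z * np.eye(n)
    zero = np.zeros((n, n), dtype=complex)
    return np.block([[zero, A], [A.conj().T, zero]])

rng = np.random.default_rng(seed=1)
n = 200
X = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2 * n)
z = np.exp(0.3j)  # a point on the unit circle, i.e. in the edge regime

lam = np.linalg.eigvalsh(hermitization(X, z))           # eigenvalues in ascending order
s = np.linalg.svd(X - z * np.eye(n), compute_uv=False)  # singular values of X - z

# Symmetry lambda_i = -lambda_{2n-i+1}; |lambda_i| runs through each s_j twice.
symmetric = np.allclose(lam, -lam[::-1])
matches_svd = np.allclose(np.sort(np.abs(lam)), np.sort(np.repeat(s, 2)))

eta = n ** -0.75
n_small = int(np.sum(np.abs(lam) <= eta))  # twice the number of s_j <= eta
print(symmetric, matches_svd, n_small, 2 * int(np.sum(s <= eta)))
```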

The paper is organized as follows: in Sect. 3 we will prove Proposition 2 by a Green function comparison argument, using the analogous bound for the Gaussian case, as recently obtained in [18]. In Sect. 4 we will then present the proof of our main result, Theorem 1, which follows from combining the local law (13), Girko’s Hermitization identity (14), the bound on small singular values (15) and another long-time Green function comparison argument.

3 Estimate on the lower tail of the smallest singular value of \(X-z\)

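The main result of this section is an estimate of the lower tail of the density of the smallest \(\left|\lambda _i^z\right|\) in Proposition 2. For this purpose we introduce the following flow

$$\begin{aligned} {\text {d}}\!{}X_t=-\frac{1}{2}X_t\,{\text {d}}\!{}t+\frac{{\text {d}}\!{}B_t}{\sqrt{n}}, \qquad (16) \end{aligned}$$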

with initial data \(X_0=X\), where \(B_t\) is the real or complex matrix-valued standard Brownian motion, i.e. \(B_t\in \mathbb {R}^{n\times n}\) or \(B_t\in \mathbb {C}^{n\times n}\) according to whether \(X\) is real or complex, where \((b_t)_{ab}\) in the real case, and \(\sqrt{2}\mathfrak {R}[(b_t)_{ab}], \sqrt{2}\mathfrak {I}[(b_t)_{ab}]\) in the complex case, are independent standard real Brownian motions for \(a,b\in [n]\). The flow (16) induces a flow \({\text {d}}\!{}\chi _t=-\chi _t{\text {d}}\!{}t/2+{\text {d}}\!{}b_t\) on the entry distribution \(\chi \) with solution

$$\begin{aligned} \chi _t{\mathop {=}\limits ^{d}} e^{-t/2}\chi +\sqrt{1-e^{-t}}\,g, \qquad (17) \end{aligned}$$

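where \(g {\sim } {\mathcal {N}}(0,1)\) is a standard real or complex Gaussian, independent of \(\chi \), with \({{\,\mathrm{\mathbf {E}}\,}}g^2=0\) in the complex case. By multilinearity of cumulants and the independence of \(\chi \) and \(g\) we find

$$\begin{aligned} \kappa _{i,j}(\chi _t)=e^{-(i+j)t/2}\kappa _{i,j}(\chi )+\left( 1-e^{-t}\right) ^{(i+j)/2}\kappa _{i,j}(g), \qquad (18) \end{aligned}$$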

where \(\kappa _{i,j}(x)\) denotes the joint cumulant of \(i\) copies of \(x\) and \(j\) copies of \(\overline{x}\), in particular \(\kappa _{2,0}(x)=\kappa _{0,2}(x)=\kappa _{1,1}(x)=1\) for \(x=\chi ,g\) in the real case, and \(\kappa _{0,2}(x)=\kappa _{2,0}(x)=0\ne \kappa _{1,1}(x)=1\) for \(x=\chi ,g\) in the complex case.

Thus (17) implies that, in distribution,

$$\begin{aligned} X_t=e^{-t/2}X+\sqrt{1-e^{-t}}\,\widetilde{X}, \qquad (19) \end{aligned}$$

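where \(\widetilde{X}\) is a real or complex Ginibre matrix independent of \(X_0=X\). Then, we define the \(2n\times 2n\) matrix \(H_t=H_t^z\) as in (1) replacing \(X\) by \(X_t\), and its resolvent \(G_t(w)=G_t^z(w):= (H_t-w)^{-1}\), for any \(w\in \mathbb {H}\). We remark that we defined the flow in (16) with initial data \(X\) and not \(H^z\) in order to preserve the shape of the self-consistent density of states of the matrix \(H_t\) along the flow. In particular, by (16) it follows that \(H_t\) is the solution of the flow

$$\begin{aligned} {\text {d}}\!{}H_t=-\frac{1}{2}(H_t+Z)\,{\text {d}}\!{}t+\frac{1}{\sqrt{n}}\begin{pmatrix} 0 & {\text {d}}\!{}B_t \\ {\text {d}}\!{}B_t^* & 0 \end{pmatrix}, \qquad (20) \end{aligned}$$

with

$$\begin{aligned} Z:=\begin{pmatrix} 0 & zI \\ \overline{z}I & 0 \end{pmatrix}, \end{aligned}$$

where \(I\) denotes the \(n \times n\) identity matrix.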
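The effect of the Ornstein–Uhlenbeck flow on the entry distribution can be illustrated by simulation. The sketch below integrates \({\text {d}}\!{}\chi _t=-\chi _t{\text {d}}\!{}t/2+{\text {d}}\!{}b_t\) in the real case with an Euler–Maruyama scheme, starting from a non-Gaussian law; the Rademacher initial law, the step size and the sample size are our own illustrative choices. In line with (17)–(18), the variance stays at \(1\) along the flow, while the fourth cumulant decays like \(e^{-2t}\kappa _4(\chi )\), so higher cumulants are damped exponentially and the entries become Gaussian as \(t\rightarrow \infty \).

```python
import numpy as np

rng = np.random.default_rng(seed=2)
N, dt, t_end = 400_000, 0.01, 1.0

# Illustrative non-Gaussian entry law: Rademacher (+1 or -1 with probability 1/2),
# centred with unit variance and fourth cumulant E chi^4 - 3 = -2.
chi = rng.choice([-1.0, 1.0], size=N)

# Euler-Maruyama steps for d chi_t = -chi_t dt / 2 + d b_t (real case).
for _ in range(round(t_end / dt)):
    chi = chi - 0.5 * chi * dt + np.sqrt(dt) * rng.standard_normal(N)

var = float(np.mean(chi**2))
kappa4 = float(np.mean(chi**4) - 3 * var**2)
print(f"variance {var:.4f} (predicted 1)")
print(f"kappa_4 {kappa4:.4f} (predicted {-2 * np.exp(-2 * t_end):.4f})")
```

The small residual discrepancies come from Monte Carlo noise and the \(\mathcal {O}({\text {d}}\!{}t)\) discretisation error of the Euler–Maruyama scheme.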

Proposition 3

Let \(R_t:= \langle G_t(\text {i}\eta ) \rangle =\text {i}\langle \mathfrak {I}G_t(\text {i}\eta )\rangle \), then for any \(n^{-1}\le \eta \le n^{-3/4}\) it holds that

for any arbitrarily small \(\xi >0\) and any \(0\le t_1< t_2\le +\infty \), with the convention that \(e^{-\infty }=0\).

Proof

Denote \(W_t:=H_t+Z\). By (20) and Itô’s lemma it follows that

where \(\alpha , \beta \in [2n]^2\) are double indices, \(w_\alpha (t)\) are the entries of \(W_t\) and

denotes the joint cumulant of \(w_\alpha ,w_\beta ,\dots \), and \(\partial _\alpha := \partial _{w_\alpha }\). By (18) and the independence of \(\chi \) and \(g\) it follows that \(\kappa _t(\alpha ,\beta )=\kappa _0(\alpha ,\beta )\) for all \(\alpha ,\beta \) and

for \(j>1\), where for a double index \(\alpha =(a,b)\), we use the notation \(\alpha ':= (b,a)\), and \(l,k\) with \(l+k=j+1\) denote the number of double indices among \(\alpha ,\beta _1,\dots ,\beta _j\) which correspond to the upper-right, or respectively lower-left corner of the matrix \(H\). In the sequel the value of \(\kappa _{k,l}(\chi )\) is of no importance, but we note that Assumption (A) ensures the bound \(\left|\kappa _{k,l}(\chi )\right| \lesssim \sum _{j\le k+l} C_j<\infty \) for any \(k,l\), with \(C_j\) being the constants from Assumption (A).

We will use the cumulant expansion that holds for any smooth function \(f\):

where the error term \(\varOmega (K,f)\) goes to zero as the expansion order \(K\) goes to infinity. In our application the error is negligible for, say, \(K=100\), since with each derivative we gain an additional factor of \(n^{-1/2}\) and, due to the independence (24), the sums of any order effectively have only \(n^2\) terms. Applying (25) to (22) with \(f=\partial _\alpha R_t\), the first order term is zero due to the assumption \({{\,\mathrm{\mathbf {E}}\,}}x_\alpha =0\), and the second order term cancels against the second-derivative term in (22), since \(\kappa _t(\alpha ,\beta )=\kappa _0(\alpha ,\beta )\). The third order term is given by

Proof of Eq. (26)

It follows from the resolvent identity that \(\partial _\alpha G=-G\varDelta ^\alpha G\), where \(\varDelta ^\alpha \) is the matrix of all zeros except for a \(1\) in the \(\alpha \)-th entry. Thus, neglecting minuses and irrelevant constant factors, for any fixed \(\alpha \), the sum (26) is given by a sum of terms of the form

Hence, considering all possible choices of \(\gamma _{1},\gamma _2,\gamma _3\) and using independence to conclude that \(\kappa _t(\alpha ,\beta _1,\beta _2)\) can only be non-zero if \(\beta _1,\beta _2\in \{\alpha ,\alpha '\}\) we arrive at

where the sums are taken over \((a,b)\in [2n]^2\setminus ([n]^2\cup [n+1,2n]^2)\) and \(c\in [2n]\), and we dropped the time dependence of \(G=G_t\) for notational convenience.

We estimate the three sums in (27) using that, by (10), (12), it follows

from Proposition 1, and Cauchy-Schwarz estimates by

and similarly

and

This concludes the proof of (26) by choosing \(\xi \) in Proposition 1 accordingly. \(\square \)
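The mechanism that makes the cumulant expansion (25) truncatable, namely that each additional derivative comes with a cumulant carrying an extra factor \(n^{-1/2}\), can be seen in a scalar toy model. The sketch below uses the standard univariate expansion \({{\,\mathrm{\mathbf {E}}\,}}[xf(x)]=\sum _{k=0}^{K}\frac{\kappa _{k+1}(x)}{k!}{{\,\mathrm{\mathbf {E}}\,}}f^{(k)}(x)+\text {error}\) for a single variable \(x=n^{-1/2}\chi \), with a hypothetical two-point law for \(\chi \) and \(f=\sin \); it is not the multivariate expansion used in the proof. All expectations are exact finite sums, so the printed numbers are pure truncation errors, which drop by several orders of magnitude when \(n\) grows from \(10^2\) to \(10^4\).

```python
import math

# Hypothetical entry law chi: two atoms, centred with unit variance and
# asymmetric, so that higher cumulants are generically non-zero.
VALUES = (-1 / math.sqrt(2), math.sqrt(2))
PROBS = (2 / 3, 1 / 3)

def moment(k, scale):
    """E[x^k] for x = scale * chi, computed exactly as a finite sum."""
    return sum(p * (scale * v) ** k for v, p in zip(VALUES, PROBS))

def cumulants(kmax, scale):
    """kappa_1, ..., kappa_kmax of x via the moment-cumulant recursion."""
    mu = [moment(k, scale) for k in range(kmax + 1)]
    kappa = [0.0] * (kmax + 1)
    for m in range(1, kmax + 1):
        kappa[m] = mu[m] - sum(math.comb(m - 1, j - 1) * kappa[j] * mu[m - j] for j in range(1, m))
    return kappa

def expansion_error(n, K):
    """Truncation error of E[x f(x)] ~ sum_{k<=K} kappa_{k+1}/k! E[f^(k)(x)], f = sin, x = chi/sqrt(n)."""
    scale = n ** -0.5
    lhs = sum(p * (scale * v) * math.sin(scale * v) for v, p in zip(VALUES, PROBS))
    kappa = cumulants(K + 1, scale)
    rhs = 0.0
    for k in range(K + 1):
        # The k-th derivative of sin is x -> sin(x + k*pi/2).
        e_fk = sum(p * math.sin(scale * v + k * math.pi / 2) for v, p in zip(VALUES, PROBS))
        rhs += kappa[k + 1] / math.factorial(k) * e_fk
    return abs(lhs - rhs)

for n in (10**2, 10**4):
    print(f"n = {n}: truncation error with K = 3 is {expansion_error(n, K=3):.2e}")
```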

Finally, in the cumulant expansion of (22) we are able to bound the terms of order at least four trivially. Indeed, for the fourth order, the trivial bound is \(e^{-2t}\) since the \(n^3\) from the summation is compensated by the \(n^{-2}\) from the cumulants and the \(n^{-1}\) from the normalization of the trace. Moreover, we can always perform at least two Ward estimates on the first and last \(G\) with respect to the trace index. Thus we can estimate any fourth-order term by \( e^{-2t}(n\eta )^{-2}\le e^{-3t/2}n^{-7/2}\eta ^{-4}\), where we used \(\eta \le n^{-3/4}\), and we note that the power counting for higher order terms is even better. Hence we have shown that \({{\,\mathrm{\mathbf {E}}\,}}\left|{\text {d}}\!{}R_t/{\text {d}}\!{}t\right| \lesssim e^{-3t/2}n^{-7/2}\eta ^{-4} \) and the proof of Proposition 3 is complete after integrating (22) in \(t\) from \(t_1\) to \(t_2\). \(\square \)
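For completeness, the two elementary steps at the end of this proof can be spelled out. The first line below uses only \(\eta \le n^{-3/4}\), i.e. \(n^{3/2}\eta ^2\le 1\), together with \(e^{-2t}\le e^{-3t/2}\) for \(t\ge 0\). The second line reads the derivative bound above as a bound on \(\left|{\text {d}}\!{}\,{{\,\mathrm{\mathbf {E}}\,}}R_t/{\text {d}}\!{}t\right|\) and integrates it. This is a sketch of the final step only; we do not claim it reproduces the displayed bound (21) verbatim, which also involves \(\xi \).

$$\begin{aligned} e^{-2t}(n\eta )^{-2}&=e^{-2t}\,n^{-7/2}\eta ^{-4}\cdot n^{3/2}\eta ^{2}\le e^{-3t/2}\,n^{-7/2}\eta ^{-4},\qquad t\ge 0,\quad \eta \le n^{-3/4},\\ \left|{{\,\mathrm{\mathbf {E}}\,}}R_{t_2}-{{\,\mathrm{\mathbf {E}}\,}}R_{t_1}\right|&\le \int _{t_1}^{t_2}\left|\frac{{\text {d}}\!{}}{{\text {d}}\!{}t}{{\,\mathrm{\mathbf {E}}\,}}R_t\right|{\text {d}}\!{}t\lesssim n^{-7/2}\eta ^{-4}\int _{t_1}^{t_2}e^{-3t/2}\,{\text {d}}\!{}t=\frac{2}{3}\left( e^{-3t_1/2}-e^{-3t_2/2}\right) n^{-7/2}\eta ^{-4}. \end{aligned}$$

With the convention \(e^{-\infty }=0\) the same computation covers \(t_2=+\infty \).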

Let \(\widetilde{X}\) be a real or complex \(n\times n\) Ginibre matrix and let \(\widetilde{H}^z\) be the linearized matrix defined as in (1) replacing \(X\) by \(\widetilde{X}\). Let \(\widetilde{\lambda }_i=\widetilde{\lambda }_i^z\), with \(i\in \{1,\dots , 2n\}\), be the eigenvalues of \(\widetilde{H}^z\). We define the non-negative Hermitian matrix \(\widetilde{Y}=\widetilde{Y}^z:= (\widetilde{X}-z)(\widetilde{X}-z)^*\); then, by [18, Eqs. (13c)–(14)], it follows that for any \(\eta \le n^{-3/4}\) we have

for \(\widetilde{X}\) distributed according to the complex or real Ginibre ensemble, respectively.

Combining (28) and Proposition 3 we now present the proof of Proposition 2.

Proof of Proposition 2

Let \(\lambda _i(t)\), with \(i\in \{1,\dots , 2n\}\), be the eigenvalues of \(H_t\) for any \(t\ge 0\). Note that \(\lambda _i(0)=\lambda _i\), since \(H_0=H^z\). By (21), choosing \(t_1=0\), \(t_2=+\infty \) it follows that

for any \(\xi >0\). Since the distribution of \(H_\infty \) is the same as \(\widetilde{H}^z\) it follows that

and combining (28) with (29), we immediately conclude the bound in (15). \(\square \)
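A deterministic inequality of the kind that turns resolvent bounds into eigenvalue counts is \(\left|\{i:\left|\lambda _i\right|\le \eta \}\right|\le 2\eta \,\mathfrak {I}{{\,\mathrm{Tr}\,}}G(\text {i}\eta )=4n\eta \,\mathfrak {I}\langle G(\text {i}\eta )\rangle \): each eigenvalue with \(\left|\lambda _i\right|\le \eta \) contributes at least \(1/(2\eta )\) to \(\mathfrak {I}{{\,\mathrm{Tr}\,}}G(\text {i}\eta )=\sum _i\eta /(\lambda _i^2+\eta ^2)\). The NumPy sketch below checks it for one complex Ginibre sample at \(z=1\) over the range of \(\eta \) in Proposition 2; it illustrates the type of step behind (29) but is not claimed to reproduce it.

```python
import numpy as np

rng = np.random.default_rng(seed=3)
n = 200
X = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2 * n)
z = 1.0  # reference point on the unit circle

# The eigenvalues of H^z are plus/minus the singular values of X - z.
s = np.linalg.svd(X - z * np.eye(n), compute_uv=False)
lam = np.concatenate([s, -s])

def im_trace_resolvent(lam, eta):
    """Im Tr (H - i*eta)^{-1} = sum_i eta / (lambda_i^2 + eta^2)."""
    return float(np.sum(eta / (lam**2 + eta**2)))

for eta in (n**-1.0, n**-0.875, n**-0.75):
    count = int(np.sum(np.abs(lam) <= eta))
    bound = 2 * eta * im_trace_resolvent(lam, eta)
    print(f"eta = {eta:.2e}: count = {count}, 2*eta*Im Tr G = {bound:.3f}")
```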

4 Edge universality for non-Hermitian random matrices

In this section we prove our main edge universality result, as stated in Theorem 1. In the remainder of this section we may assume without loss of generality that the test function \(F\) is of the form

$$\begin{aligned} F(w_1,\dots ,w_k)=f^{(1)}(w_1)\cdots f^{(k)}(w_k), \qquad (30) \end{aligned}$$

with \(f^{(1)},\dots , f^{(k)}:\mathbb {C}\rightarrow \mathbb {C}\) being smooth and compactly supported functions. Indeed, any smooth function \(F\) can be effectively approximated by its truncated Fourier series (multiplied by a smooth cutoff function of product form); see also [54, Remark 3]. Using the effective decay of the Fourier coefficients of \(F\) controlled by its \(C^{2k+1}\) norm, a standard approximation argument shows that if (8) holds for \(F\) in the product form (30) with an error \(\mathcal {O}( n^{-c(k)})\), then it also holds for a general smooth function with an error \(\mathcal {O}( n^{-c})\), where the implicit constant in \(\mathcal {O}(\cdot )\) depends on \(k\) and on the \(C^{2k+1}\)-norm of \(F\), and the constant \(c>0\) depends on \(k\).

To resolve eigenvalues on their natural scale we consider the rescaling \(f_{z_0}(z):= n f(\sqrt{n}(z-z_0))\) and compare the linear statistics \(n^{-1}\sum _i f_{z_0}(\sigma _i)\) and \(n^{-1}\sum _i f_{z_0}(\widetilde{\sigma }_i)\), with \(\sigma _i,\widetilde{\sigma }_i\) being the eigenvalues of \(X\) and of the comparison Ginibre ensemble \(\widetilde{X}\), respectively. For convenience we may normalize both linear statistics by their deterministic approximation from the local law (13) which, according to (14), is given by

where \(\mathbb {D}\) denotes the unit disk of the complex plane.

Proposition 4

Let \(k\in \mathbb {N}\) and \(z_1, \dots , z_k\in \mathbb {C}\) be such that \(\left|z_j\right|^2=1\) for all \(j\in [k]\), and let \(f^{(1)},\dots ,f^{(k)}\) be smooth compactly supported test functions. Denote the eigenvalues of an i.i.d. matrix \(X\) satisfying Assumptions (A)–(B) and a corresponding real or complex Ginibre matrix \(\widetilde{X}\) by \(\{\sigma _i\}_{i=1}^n\), \(\{\widetilde{\sigma }_i\}_{i=1}^n\). Then we have the bound

for some small constant \(c(k)>0\), where the implicit multiplicative constant in \(\mathcal {O}(\cdot )\) depends on the norms \(\Vert \varDelta f^{(j)}\Vert _1\), \(j=1,2, \ldots , k\).

Proof of Theorem 1

Theorem 1 follows directly from Proposition 4 by the definition of the \(k\)-point correlation function in (6), the inclusion–exclusion principle and the bound

\(\square \)
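The inclusion–exclusion step can be illustrated for \(k=3\): a sum over pairwise distinct indices is a signed combination of products of unrestricted sums. In the notation above one may think of \(a_i=f^{(1)}(\sqrt{n}(\sigma _i-z_1))\) and similarly for \(b_i,c_i\) (an illustrative identification); each term on the right-hand side is then a product of linear eigenvalue statistics, the type of quantity compared in Proposition 4. The Python sketch below checks the identity against brute force on random integer data; the function names are ours.

```python
import random
from itertools import permutations

def distinct_sum_brute(a, b, c):
    """Sum of a[i] * b[j] * c[l] over pairwise distinct indices i, j, l."""
    return sum(a[i] * b[j] * c[l] for i, j, l in permutations(range(len(a)), 3))

def distinct_sum_incl_excl(a, b, c):
    """The same sum written through unrestricted sums (inclusion-exclusion)."""
    ab = sum(x * y for x, y in zip(a, b))
    ac = sum(x * y for x, y in zip(a, c))
    bc = sum(x * y for x, y in zip(b, c))
    abc = sum(x * y * w for x, y, w in zip(a, b, c))
    # All triples, minus those with i = j, i = l or j = l. The full coincidence
    # i = j = l is counted once in the first term and subtracted three times,
    # so it is added back twice.
    return sum(a) * sum(b) * sum(c) - ab * sum(c) - ac * sum(b) - bc * sum(a) + 2 * abc

rng = random.Random(0)
a, b, c = ([rng.randint(-5, 5) for _ in range(8)] for _ in range(3))
print(distinct_sum_brute(a, b, c), distinct_sum_incl_excl(a, b, c))
```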

The remainder of this section is devoted to the proof of Proposition 4. We now fix some \(k\in \mathbb {N}\) and some \(z_1,\dots ,z_k,f^{(1)},\dots ,f^{(k)}\) as in Proposition 4. All subsequent estimates in this section hold uniformly for any \(z\) in an \(n^{-1/2}\)-neighborhood of \(z_1,\dots , z_k\), even if not explicitly stated. In order to prove (32), we use Girko’s formula (14) to write

where

with \(\eta _0:= n^{-3/4-\delta }\), for some small fixed \(\delta >0\), and for some very large \(T>0\), say \(T:= n^{100}\). We define \(\widetilde{I}_1^{(j)}\), \(\widetilde{I}_2^{(j)}\), \(\widetilde{I}_3^{(j)}\), \(\widetilde{I}_4^{(j)}\) analogously for the Ginibre ensemble by replacing \(H^z\) by \(\widetilde{H}^z\) and \(G^z\) by \(\widetilde{G}^z\).

Proof of Proposition 4

The first step in the proof of Proposition 4 is the reduction to a corresponding statement about the \(I_3\)-part in (33), as summarized in the following lemma.

Lemma 1

Let \(k\ge 1\), let \(I_3^{(1)},\dots , I_3^{(k)}\) be the integrals defined in (33), with \(\eta _0=n^{-3/4-\delta }\), for some small fixed \(\delta >0\), and let \(\widetilde{I}_3^{(1)}, \dots , \widetilde{I}_3^{(k)}\) be their Ginibre counterparts, defined as in (33) with \(H^z\) and \(G^z\) replaced by \(\widetilde{H}^z\) and \(\widetilde{G}^z\). Then,

for some small constant \(c_2(k,\delta )>0\).

In order to conclude the proof of Proposition 4, due to Lemma 1, it only remains to prove that

for any fixed \(k\) with some small constant \(c(k)>0\), where we recall the definition of \(I_3\) and the corresponding \(\widetilde{I}_3\) for Ginibre from (33). The proof of (35) is similar to the Green function comparison proof of Proposition 3, but more involved, since we compare products of resolvents and have an additional \(\eta \)-integration. Here we define the observable

where we recall that \(G_t^z(w):= (H^z_t-w)^{-1}\) with \(H^z_t=H_t\) as in (20). \(\square \)

Lemma 2

For any \(n^{-1}\le \eta _0\le n^{-3/4}\) and \(T=n^{100}\) and any small \(\xi >0\) it holds that

uniformly in \(0\le t_1<t_2\le +\infty \) with the convention that \(e^{-\infty }=0\).

Since \(Z_0=\prod _j I_3^{(j)}\) and \(Z_\infty =\prod _j \widetilde{I}_3^{(j)}\), the proof of Proposition 4 follows directly from (35), modulo the proofs of Lemmata 1 and 2, which are given in the next two subsections. \(\square \)

4.1 Proof of Lemma 1

In order to estimate the probability that \(H^z\) has an eigenvalue very close to zero, we use the following proposition, which was proven in [3, Prop. 5.7] by adapting the proof of [9, Lemma 4.12].

Proposition 5

Under Assumption (B) there exists a constant \(C>0\), depending only on \(\alpha \), such that

for all \(u>0\) and \(z\in \mathbb {C}\).

In the following lemma we prove a very high probability bound for \(I_1^{(j)}\), \(I_2^{(j)}\), \(I_3^{(j)}\), \(I_4^{(j)}\). The same bounds hold for \(\widetilde{I}_1^{(j)}\), \(\widetilde{I}_2^{(j)}\), \(\widetilde{I}_3^{(j)}\), \(\widetilde{I}_4^{(j)}\) as well. These bounds were already proven in the bulk regime in [3, Proof of Theorem 2.5]; the proof in the current edge regime is analogous, so we only sketch it for completeness.

Lemma 3

For any \(j\in [k]\) the bounds

hold with very high probability for any \(\xi >0\). The bounds analogous to (39) also hold for \(\widetilde{I}_l^{(j)}\).

Proof

For notational convenience, within this proof we omit the \(j\)-dependence of \(I_l^{(j)}\) and \(f^{(j)}\), as well as the \(z\)-dependence of \(\lambda _i,H,G,M,\widehat{m}\). Using that

we easily estimate \(\left|I_1\right|\) as follows

for any \(\xi >0\) with very high probability owing to the high moment bound (3). By (9) it follows that \(\left|\mathfrak {I}\widehat{m}^z(\text {i}\eta )-(\eta +1)^{-1}\right|\sim \eta ^{-2}\) for large \(\eta \), proving also the bound on \(I_4\) in (39). The bound for \(I_3\) follows immediately from the averaged local law in (13).

For the \(I_2\) estimate we split the \(\eta \)-integral of \(\mathfrak {I}m^z(\text {i}\eta )-\mathfrak {I}\widehat{m}^z(\text {i}\eta )\) in \(I_2\) as follows

where \(l\in \mathbb {N}\) is a large fixed integer. Using (10) we find that the third term in (40) is bounded by \(n^{-1-\delta }\). Choosing \(l\) large enough and using the bound (38), it follows as in [3, Eq. (5.35)] that

with very high probability for any \(\xi >0\). Alternatively, this bound follows from (5) without Assumption (B), circumventing Proposition 5 (see Remark 1). For the second term in (40) we define \(\eta _1:= n^{-3/4+\xi }\) with some very small \(\xi >0\) and, using \(\log (1+x)\le x\), we write

by the averaged local law in (13), and \(\langle \mathfrak {I}M^z(\text {i}\eta _1)\rangle \lesssim \eta _1^{1/3}\) from (10). Here, in passing from the second to the third line of (42), we used that

again by the local law. By redefining \(\xi \), this concludes the high probability bound on \(I_2\) in (39), and thereby the proof of the lemma. \(\square \)
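The role of \(\log (1+x)\le x\), and of the smallest eigenvalue, can be seen on a single pair \(\pm \sigma \) of eigenvalues of \(H^z\). Assuming \(G^z(\text {i}\eta )=(H^z-\text {i}\eta )^{-1}\), such a pair contributes \(\int _0^{\eta _0}\frac{2\eta }{\sigma ^2+\eta ^2}\,\text {d}\eta =\log (1+\eta _0^2/\sigma ^2)\le \eta _0^2/\sigma ^2\) to the small-\(\eta \) integral of \(\mathfrak {I}\,\text {Tr}\,G^z\). The short Python sketch below (hypothetical helper names, illustrative values of \(n\) and \(\delta \)) evaluates both sides: the contribution is negligible when \(\sigma \gg \eta _0\) and of order \(\log (\eta _0/\sigma )\) when \(\sigma \ll \eta _0\), which is why a lower tail bound on the smallest eigenvalue, such as Proposition 5, is needed.

```python
import math

def pair_contribution(sigma, eta0):
    # int_0^{eta0} 2*eta / (sigma^2 + eta^2) d eta for the eigenvalue pair +/- sigma
    return math.log1p((eta0 / sigma) ** 2)

def pair_bound(sigma, eta0):
    # the upper bound obtained from log(1 + x) <= x
    return (eta0 / sigma) ** 2

n, delta = 10**4, 0.05
eta0 = n ** (-0.75 - delta)
for factor in (100.0, 10.0, 1.0, 0.1, 0.01):
    sigma = factor * eta0
    print(f"sigma = {factor} * eta0: contribution {pair_contribution(sigma, eta0):.3e}, "
          f"bound {pair_bound(sigma, eta0):.3e}")
```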

In the following lemma we prove a bound on \(I_2^{(j)}\) that improves on (39) but holds only in expectation. Its main input is the stronger lower tail estimate (15) on \(\lambda _i\) in the regime \(\left|\lambda _i\right|\ge n^{-l}\), which replaces (43).

Lemma 4

Let \(I_2^{(j)}\) be defined in (33), then

for any \(j\in \{1,\dots , k\}\).

Proof

We split the \(\eta \)-integral of \(\mathfrak {I}m^z(\text {i}\eta )-\mathfrak {I}\widehat{m}^z(\text {i}\eta )\) as in (40). The third term on the r.h.s. of (40) is of order \(n^{-1-4\delta /3}\). We then estimate the first term on the r.h.s. of (40) in terms of the smallest (in absolute value) eigenvalue \(\lambda _{n+1}\) as

where in the last inequality we used (38) with \(u=e^{-t} n\). Note that by (15) it follows that

Hence, by (46), using similar computations to (42), we conclude that

The only difference between the proofs of (47) and (42) is that the first term in the first line on the r.h.s. of (42) is now estimated using (46) instead of (43). Finally, choosing \(l\ge \alpha ^{-1} (3+\beta )(1+\alpha )+2\) and combining (45) and (47), we conclude (44). \(\square \)

Equipped with Lemmata 3–4, we now present the proof of Lemma 1.

Proof of Lemma 1

Using the definitions for \(I_1^{(j)}, I_2^{(j)},I_3^{(j)}, I_4^{(j)}\) in (33), and similar definitions for \(\widetilde{I}_1^{(j)}, \widetilde{I}_2^{(j)},\widetilde{I}_3^{(j)}, \widetilde{I}_4^{(j)}\), we conclude that

Then, if \(j_2\ge 1\), by Lemmata 3 and 4, using that \(T=n^{100}\) in the definition of \(I_1^{(j)},\dots , I_4^{(j)}\) in (33), it follows that

for any \(j_1,j_3, j_4 \ge 0\) and a small constant \(c_2(k,\delta )>0\) which only depends on \(k\) and \(\delta \). If, instead, \(j_2=0\), then at least one of \(j_1\) and \(j_4\) is nonzero, since \(0\le j_3\le k-1\) and \(j_1+j_2+j_3+j_4=k\). Assume \(j_1\ge 1\) (the case \(j_4\ge 1\) is completely analogous); then

Since similar bounds hold true for \(\widetilde{I}_1^{(i_1)}, \widetilde{I}_2^{(i_2)}, \widetilde{I}_3^{(i_3)}, \widetilde{I}_4^{(i_4)}\) as well, the above inequalities conclude the proof of (34). \(\square \)
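The case distinction above is purely combinatorial. Assuming each Girko term splits as \(I_1^{(j)}+I_2^{(j)}+I_3^{(j)}+I_4^{(j)}\) as in (33), the product over \(j\in [k]\) expands into \(4^k\) terms, one for each assignment of an index \(l\in \{1,2,3,4\}\) to each factor; the number of assignments with multiplicities \((j_1,j_2,j_3,j_4)\) is a multinomial coefficient. The Python sketch below, with random numbers standing in for the integrals (an illustrative assumption), checks the expansion and that every term other than \(\prod _j I_3^{(j)}\) has \(j_2\ge 1\) or \(j_1+j_4\ge 1\).

```python
import itertools
import math
import random
from collections import Counter

def expand(parts):
    # parts[j] = [I_1, I_2, I_3, I_4] for factor j (list positions 0..3 stand for l = 1..4).
    # Returns {assignment: product of the chosen terms}, one entry per assignment in {0..3}^k.
    k = len(parts)
    return {s: math.prod(parts[j][s[j]] for j in range(k))
            for s in itertools.product(range(4), repeat=k)}

def multinomial(k, js):
    # k! / (j_1! j_2! j_3! j_4!), computed with exact integer division
    out = math.factorial(k)
    for j in js:
        out //= math.factorial(j)
    return out

random.seed(1)
k = 3
parts = [[random.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(k)]  # stand-ins for I_l^{(j)}
terms = expand(parts)
all_I3 = (2,) * k                                   # the assignment I_3, ..., I_3
remainder = sum(v for s, v in terms.items() if s != all_I3)
full = math.prod(sum(p) for p in parts)

# Multiplicity pattern (j_1, j_2, j_3, j_4) of every assignment
patterns = Counter(tuple(s.count(l) for l in range(4)) for s in terms)
for js, count in patterns.items():
    assert count == multinomial(k, js)
    j1, j2, j3, j4 = js
    if j3 <= k - 1:                                 # every term except the pure I_3 product
        assert j2 >= 1 or j1 + j4 >= 1

print(full - terms[all_I3], remainder)
```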

4.2 Proof of Lemma 2

We begin with a lemma generalizing the bound in (39) to derivatives of \(I_3^{(j)}\).

Lemma 5

Assume \(n^{-1}\le \eta _0\le n^{-3/4}\) and fix \(l\ge 0\), \(j\in [k]\) and a double index \(\alpha =(a,b)\) such that \(a\ne b\). Then, for any choice of \(\gamma _i\in \{\alpha ,\alpha '\}\) and any \(\xi >0\) we have the bounds

where \(\partial _\gamma ^l:=\partial _{\gamma _1}\dots \partial _{\gamma _l}\), with very high probability uniformly in \(t\ge 0\).

Proof

We omit the \(t\)- and \(z\)-dependence of \(G_t^z\), \(\widehat{m}^z\) within this proof since all bounds hold uniformly in \(t\ge 0\) and \(\left|z-z_j\right|\lesssim n^{-1/2}\). We also omit the \(\eta \)-argument from these functions, but the \(\eta \)-dependence of all estimates will be indicated explicitly. Note that the case \(l=0\) was already proven in (39). We now consider the remaining cases \(l=1\) and \(l\ge 2\) separately. For notational simplicity we neglect the multiplicative error factors \(n^\xi \) (with arbitrarily small exponents \(\xi >0\)) arising from applications of the local law (13) within the proof. In particular, we will repeatedly use (13) in the form

where we defined the parameter

4.2.1 Case \(l=1\)

This follows directly from

where in the last step we used \(\Vert G(\text {i}T)\Vert \le T^{-1}=n^{-100}\) and (49). Since this bound is uniform in \(z\) we may bound the remaining integral by \( n\Vert \varDelta f^{(j)}\Vert _1 \), proving (48).

4.2.2 Case \(l\ge 2\)

For the case \(l\ge 2\) there are many assignments of \(\gamma _i\)’s to consider, e.g.

but all of them are such that two \(G\)-factors carry the independent summation index \(c\). In the case \(a\equiv b+n\pmod {2n}\) we simply bound all remaining \(G\)-factors by \(1\) using (49) and use a simple Cauchy-Schwarz inequality to obtain

Now it follows from the Ward identity

and the very crude bound \( \left|G_{aa}\right|\lesssim 1 \) from (49) and \(\left|\widehat{m}\right|\lesssim 1\), that

By estimating the remaining \(z\)-integral in (50) by \( n\Vert \varDelta f^{(j)}\Vert _1 \), the claimed bound in (48) for \(a\equiv b+n\pmod {2n}\) follows.
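The Ward identity is what turns the \(c\)-summation of \(\left|G_{ac}\right|^2\) into a single diagonal resolvent entry. Assuming (51) is the standard form \(\sum _c \left|G_{ac}(\text {i}\eta )\right|^2=\mathfrak {I}G_{aa}(\text {i}\eta )/\eta \) for a Hermitian \(H\) and \(G(\text {i}\eta )=(H-\text {i}\eta )^{-1}\) (it follows from \(G-G^*=2\text {i}\eta \, GG^*\)), the NumPy sketch below checks it numerically on the Hermitization of a complex Ginibre-like matrix; the block form of the Hermitization, the matrix size, \(z\) and \(\eta \) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 40
X = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2 * n)
z = 1.0 + 0.0j                                   # a point on the unit circle
A = X - z * np.eye(n)
zero = np.zeros((n, n), dtype=complex)
H = np.block([[zero, A], [A.conj().T, zero]])    # assumed Hermitization of X - z

eta = n ** (-0.75)
G = np.linalg.inv(H - 1j * eta * np.eye(2 * n))  # G(i eta) = (H - i eta)^{-1}

row_sums = np.sum(np.abs(G) ** 2, axis=1)        # sum_c |G_{ac}|^2 for every a
ward_rhs = G.diagonal().imag / eta               # Im G_{aa} / eta
print(np.max(np.abs(row_sums - ward_rhs)))
```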

In the case \(a\not \equiv b+n\pmod {2n}\) we can use (49) to gain a factor of \(\psi \) for some \(G_{ab}\) or \(G_{bb}-\widehat{m}\) in all assignments except for the one in which all but two \(G\)-factors are diagonal, and those \(G_{aa},G_{bb}\)-factors are replaced by \(\widehat{m}\). For example, we would expand

where in all but the first term we gained at least a factor of \(\psi \). Using Cauchy-Schwarz as before we thus have the bound

where, strictly speaking, the second term is present only for even \(l\) and the third only for odd \(l\). For the first term in (52) we again apply the Ward identity (51) and (49) to obtain the bound

For the second and third terms in (52) we use \(\text {i}G^2=G'\), where the prime denotes \(\partial _\eta \), together with integration by parts, the bound \(\left|\widehat{m}'\right|\lesssim \eta ^{-2/3}\) from (12) and (49), to obtain the bounds

and

In the explicit deterministic term we performed an integration and estimated

The claim (48) for \(l\ge 2\) and \(a\not \equiv b+n\pmod {2n}\) now follows from estimating the remaining \(z\)-integral in (52) by \(n\Vert \varDelta f^{(j)}\Vert _1\). \(\square \)
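The identity \(\partial _\eta G(\text {i}\eta )=\text {i}G(\text {i}\eta )^2\), used above for the integration by parts and again in the Appendix, follows from differentiating \((H-\text {i}\eta )^{-1}\). A quick NumPy sanity check with a central finite difference, on an arbitrary Hermitian test matrix (an illustrative choice), might look as follows.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 30
M = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
H = (M + M.conj().T) / (2 * np.sqrt(N))          # Hermitian test matrix

def resolvent(eta):
    # G(i eta) = (H - i eta)^{-1}
    return np.linalg.inv(H - 1j * eta * np.eye(N))

eta, h = 0.1, 1e-5
finite_diff = (resolvent(eta + h) - resolvent(eta - h)) / (2 * h)   # approximates dG/d eta
exact = 1j * resolvent(eta) @ resolvent(eta)                        # i G^2
rel_err = np.linalg.norm(finite_diff - exact) / np.linalg.norm(exact)
print(rel_err)
```

Integrating the identity gives \(\int _a^b G(\text {i}\eta )^2\,\text {d}\eta =-\text {i}\,(G(\text {i}b)-G(\text {i}a))\), which is the form in which it enters the integration by parts.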

Proof of Lemma 2

By (20) and Itô’s lemma it follows that

where we recall the definition of \(\kappa _t\) in (23). The pointwise estimate from Lemma 5 gives a sufficiently strong bound for most terms in the cumulant expansion; the few remaining terms require a more careful computation.

In the cumulant expansion (25) of (53) the second-order terms cancel exactly, and we now separately estimate the third-, fourth- and higher-order terms.
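The exact cancellation of the second-order terms, and the factors \(e^{-lt/2}\) in the bounds below, reflect how cumulants behave along an Ornstein–Uhlenbeck interpolation. As an illustration, assume (as the \(e^{-lt/2}\) factors suggest, although (20) and (24) are not reproduced here) that an entry at time \(t\) is distributed as \(e^{-t/2}x+\sqrt{1-e^{-t}}\,g\), with \(g\) an independent Gaussian of the same variance as \(x\). Then the variance is preserved, while the \(l\)-th cumulant equals \(e^{-lt/2}\kappa _l(x)\) for \(l\ge 3\). The Python sketch below verifies this, up to rounding, for a centred, unit-variance two-point distribution chosen for illustration; the \(n^{-l/2}\) normalization of the entries is omitted.

```python
import math

def gaussian_moment(k):
    # E g^k for a standard real Gaussian g: 0 for odd k, (k - 1)!! for even k
    return 0.0 if k % 2 else float(math.prod(range(k - 1, 0, -2)))

def interpolated_moments(values, probs, a, b, order):
    # Exact moments E[(a*x + b*g)^m], m = 0..order, for a discrete x and an independent g ~ N(0, 1)
    mx = [sum(p * v ** k for v, p in zip(values, probs)) for k in range(order + 1)]
    return [sum(math.comb(m, k) * a ** k * b ** (m - k) * mx[k] * gaussian_moment(m - k)
                for k in range(m + 1))
            for m in range(order + 1)]

def cumulants(mom):
    # Moment-to-cumulant recursion: kappa_m = mu_m - sum_{k=1}^{m-1} C(m-1, k-1) kappa_k mu_{m-k}
    kappa = [0.0] * len(mom)
    for m in range(1, len(mom)):
        kappa[m] = mom[m] - sum(math.comb(m - 1, k - 1) * kappa[k] * mom[m - k]
                                for k in range(1, m))
    return kappa

values, probs = [2.0, -0.5], [0.2, 0.8]          # centred, unit variance, non-Gaussian
t = 0.7
a, b = math.exp(-t / 2), math.sqrt(1 - math.exp(-t))
kappa_0 = cumulants(interpolated_moments(values, probs, 1.0, 0.0, 6))
kappa_t = cumulants(interpolated_moments(values, probs, a, b, 6))
# For l = 2 the two columns differ (the variance is preserved); for l >= 3 they agree.
for l in range(2, 7):
    print(l, kappa_t[l], math.exp(-l * t / 2) * kappa_0[l])
```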

4.2.3 Order three terms

For the third order, when computing \(\partial _\alpha \partial _{\beta _1}\partial _{\beta _2} Z_t\) through the Leibniz rule we have to consider all possible assignments of derivatives \(\partial _\alpha ,\partial _{\beta _1},\partial _{\beta _2}\) to the factors \(I_3^{(1)},\dots ,I_3^{(k)}\). Since the particular functions \(f^{(j)}\) and complex parameters \(z_j\) play no role in the argument, there is no loss in generality in considering only the assignments

for the second and third of which we obtain the bound

using Lemma 5 and the cumulant scaling (24). Note that the condition \(a\ne b\) in the lemma is ensured by the fact that for \(a=b\) the cumulants \(\kappa _t(\alpha ,\beta _1,\dots )\) vanish.

The first term in (54) requires an additional argument. We write out all possible index allocations and claim that we ultimately obtain the same bound as for the other two terms in (54), i.e.

where

Proof of Eq. (55)

Compared to the previous bound in Lemma 5 we now exploit the \(a,b\) summation via the isotropic structure of the bound in the local law (59). We have the simple bounds

as a consequence of the Ward identity (51) and using (13) and (10). For the first term in (56) we can thus use (57) and (51) to obtain

For the second term in (56) we split \(G_{bb}=\widehat{m}+ \mathcal {O}(\psi )\) and bound it by

where \(e_a\) denotes the \(a\)-th standard basis vector,

are vectors of \(n\) ones and zeros, respectively, of norm \(\Vert \varvec{1}_\pm \Vert =\sqrt{n}\) and \(s(a):=-\) for \(a\le n\), and \(s(a):=+\) for \(a>n\). Here in the second step we used a Cauchy-Schwarz inequality for the \(a\)-summation in both integrals after estimating the \(G^2\)-terms using (57). Finally, for the third term in (56) we split both \(G_{aa}=\widehat{m}+\mathcal {O}(\psi )\) and \(G_{bb}=\widehat{m}+\mathcal {O}(\psi )\) to estimate

using (57). In the last integral we used that \(\left|\widehat{m}\right|\lesssim (1+\eta )^{-1}\) to ensure integrability in the large-\(\eta \) regime. Inserting these estimates for the three terms in (56) into (55) and estimating the remaining integral by \(n\Vert \varDelta f^{(1)}\Vert _1\) completes the proof of (55). \(\square \)

4.2.4 Order four terms

For the fourth-order terms, the Leibniz rule requires us to consider the assignments

for all of which we obtain a bound of

4.2.5 Higher order terms

For terms of order at least \(5\), there is no need for an additional gain from any of the factors \(I_3^{(j)}\); we simply bound all of them, and their derivatives, by \(n^\xi \) using Lemma 5. This results in a bound of \(n^{\xi -(l-4)/2} e^{-l t/2} \prod _j \Vert \varDelta f^{(j)}\Vert _1 \) for the terms of order \(l\).
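For orientation, the \(t\)-integration of this order-\(l\) bound is elementary: for \(l\ge 5\),

\[ \int _0^\infty n^{\xi -(l-4)/2}\, e^{-lt/2}\,\text {d}t=\frac{2}{l}\, n^{\xi -(l-4)/2}\le \frac{2}{5}\, n^{\xi -1/2}, \]

so, after multiplication by \(\prod _j \Vert \varDelta f^{(j)}\Vert _1\), each term of order \(l\ge 5\) contributes at most a constant times \(n^{\xi -1/2}\prod _j \Vert \varDelta f^{(j)}\Vert _1\) after integration in \(t\).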

By combining the estimates on the terms of order three, four and higher order derivatives, and integrating in \(t\) we obtain the bound (37). This completes the proof of Lemma 2. \(\square \)

Appendix A: Extension of the local law

Proof of Proposition 1

The statement for fixed \(z,\eta \) follows directly from [4, Theorem 5.2] if \(\eta \ge \eta _0:= n^{-3/4+\epsilon }\). For \(\eta _1<\eta _0\), using \(\partial _\eta G(\text {i}\eta )=\text {i}G^2(\text {i}\eta )\), we write

and estimate the first term using the local law by \( n^{-1/4+\xi } \). For the second term we bound

from \(\Vert M'\Vert \lesssim (\mathfrak {I}\widehat{m})^{-2}\) and (10), and use monotonicity of \(\eta \mapsto \eta \langle \varvec{x},\mathfrak {I}G(\text {i}\eta )\varvec{x}\rangle \) in the form

After integration we thus obtain a bound of \( \Vert \varvec{x}\Vert \Vert \varvec{y}\Vert n^{4\epsilon /3}/(n\eta _1)\) which proves the first bound in (13). The second, averaged, bound in (13) follows directly from the first one since below the scale \(\eta \le n^{-3/4}\) there is no additional gain from the averaging, as compared to the isotropic bound.
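The monotonicity used above is a consequence of the spectral decomposition: if \(H=\sum _i \lambda _i \varvec{u}_i\varvec{u}_i^*\), then \(\eta \langle \varvec{x},\mathfrak {I}G(\text {i}\eta )\varvec{x}\rangle =\sum _i \left|\langle \varvec{u}_i,\varvec{x}\rangle \right|^2 \eta ^2/(\lambda _i^2+\eta ^2)\), and every summand is non-decreasing in \(\eta \). In particular, \(\langle \varvec{x},\mathfrak {I}G(\text {i}\eta )\varvec{x}\rangle \le (\eta _0/\eta )\langle \varvec{x},\mathfrak {I}G(\text {i}\eta _0)\varvec{x}\rangle \) for \(\eta \le \eta _0\). A short NumPy sketch on an arbitrary Hermitian test matrix and test vector (illustrative choices) checks both the spectral formula and the monotonicity.

```python
import numpy as np

rng = np.random.default_rng(3)
N = 60
M = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
H = (M + M.conj().T) / (2 * np.sqrt(N))          # Hermitian test matrix
x = rng.standard_normal(N) + 1j * rng.standard_normal(N)

lam, U = np.linalg.eigh(H)
weights = np.abs(U.conj().T @ x) ** 2            # |<u_i, x>|^2

def eta_im_form(eta):
    # eta * <x, Im G(i eta) x> via the spectral decomposition
    return np.sum(weights * eta ** 2 / (lam ** 2 + eta ** 2))

def eta_im_form_direct(eta):
    # the same quantity computed from the resolvent G(i eta) = (H - i eta)^{-1}
    G = np.linalg.inv(H - 1j * eta * np.eye(N))
    return eta * np.imag(x.conj() @ G @ x)

etas = np.logspace(-4, 1, 200)
values = np.array([eta_im_form(e) for e in etas])
print(np.all(np.diff(values) >= 0))
```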

In order to conclude the local law simultaneously in all \(z,\eta \) we use a standard grid argument. To do so, we choose a regular grid of \(z\)’s and \(\eta \)’s at a distance of, say, \(n^{-3}\) and use Lipschitz continuity (with Lipschitz constant \(n^2\)) of \((\eta ,z)\mapsto G^z(\text {i}\eta )\) and a union bound over the exceptional events at each grid point. \(\square \)
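The Lipschitz constant can be read off from the resolvent identity. Assuming that \(H^z\) depends on \(z\) only through the off-diagonal blocks \(X-z\) and \((X-z)^*\), so that \(\Vert \partial _{\mathfrak {R}z}H^z\Vert =\Vert \partial _{\mathfrak {I}z}H^z\Vert =1\), and using \(\Vert G^z(\text {i}\eta )\Vert \le \eta ^{-1}\), one has

\[ \Vert \partial _\eta G^z(\text {i}\eta )\Vert =\Vert G^z(\text {i}\eta )^2\Vert \le \eta ^{-2},\qquad \Vert \partial _{\mathfrak {R}z}G^z(\text {i}\eta )\Vert +\Vert \partial _{\mathfrak {I}z}G^z(\text {i}\eta )\Vert \le 2\eta ^{-2}, \]

so for \(\eta \ge n^{-1}\) moving to a neighboring grid point at distance \(n^{-3}\) changes \(G^z(\text {i}\eta )\) in norm by at most a constant times \(n^{2}\cdot n^{-3}=n^{-1}\). Since the grid has only polynomially many points, the union bound preserves the very high probability.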

Notes

The matrix \(\varDelta ^\alpha \) is not to be confused with the Laplacian \(\varDelta f\) in Girko’s formula (14).

References

Ajanki, O.H., Erdős, L., Krüger, T.: Stability of the matrix Dyson equation and random matrices with correlations. Probab. Theory Relat. Fields 173, 293–373 (2019)

Ajanki, O.H., Erdős, L., Krüger, T.: Universality for general Wigner-type matrices. Probab. Theory Relat. Fields 169, 667–727 (2017)

Alt, J., Erdős, L., Krüger, T.: Local inhomogeneous circular law. Ann. Appl. Probab. 28, 148–203 (2018)

Alt, J., Erdős, L., Krüger, T.: Spectral radius of random matrices with independent entries, preprint (2019) arXiv:1907.13631

Alt, J., Erdős, L., Krüger, T., Schröder, D.: Correlated random matrices: band rigidity and edge universality. Ann. Probab. 48, 963–1001 (2020)

Bai, Z.D.: Circular law. Ann. Probab. 25, 494–529 (1997)

Bai, Z.D., Yin, Y.Q.: Limiting behavior of the norm of products of random matrices and two problems of Geman–Hwang. Probab. Theory Relat. Fields 73, 555–569 (1986)

Bordenave, C., Caputo, P., Chafaï, D., Tikhomirov, K.: On the spectral radius of a random matrix: an upper bound without fourth moment. Ann. Probab. 46, 2268–2286 (2018)

Bordenave, C., Chafaï, D.: Around the circular law. Probab. Surv. 9, 1–89 (2012)

Borodin, A., Sinclair, C.D.: The Ginibre ensemble of real random matrices and its scaling limits. Commun. Math. Phys. 291, 177–224 (2009)

Bourgade, P., Dubach, G.: The distribution of overlaps between eigenvectors of Ginibre matrices. Probab. Theory Relat. Fields 177, 397–464 (2020)

Bourgade, P., Erdős, L., Yau, H.-T.: Edge universality of beta ensembles. Commun. Math. Phys. 332, 261–353 (2014)

Bourgade, P., Erdős, L., Yau, H.-T., Yin, J.: Universality for a class of random band matrices. Adv. Theor. Math. Phys. 21, 739–800 (2017)

Bourgade, P., Yau, H.-T., Yin, J.: Local circular law for random matrices. Probab. Theory Relat. Fields 159, 545–595 (2014)

Bourgade, P., Yau, H.-T., Yin, J.: Random band matrices in the delocalized phase, I: Quantum unique ergodicity and universality, preprint (2018) arXiv:1807.01559

Bourgade, P., Yau, H.-T.: The local circular law II: the edge case. Probab. Theory Relat. Fields 159, 619–660 (2014)

Cipolloni, G., Erdős, L., Krüger, T., Schröder, D.: Cusp universality for random matrices, II: the real symmetric case. Pure Appl. Anal. 1, 615–707 (2019)

Cipolloni, G., Erdős, L., Schröder, D.: Optimal lower bound on the least singular value of the shifted Ginibre ensemble, preprint (2019) arXiv:1908.01653

Dyson, F.J.: A Brownian-motion model for the eigenvalues of a random matrix. J. Math. Phys. 3, 1191–1198 (1962)

Edelman, A.: The probability that a random real Gaussian matrix has k real eigenvalues, related distributions, and the circular law. J. Multivar. Anal. 60, 203–232 (1997)

Edelman, A., Kostlan, E., Shub, M.: How many eigenvalues of a random matrix are real? J. Am. Math. Soc. 7, 247–267 (1994)

Erdős, L., Krüger, T., Schröder, D.: Cusp universality for random matrices I: local law and the complex Hermitian case. Commun. Math. Phys. 378, 1203–1278 (2020)

Erdős, L., Krüger, T., Schröder, D.: Random matrices with slow correlation decay. Forum Math. Sigma 7(Paper No. e8), 89 (2019)

Erdős, L., Péché, S., Ramírez, J.A., Schlein, B.: Bulk universality for Wigner matrices. Commun. Pure Appl. Math. 63, 895–925 (2010)

Erdős, L., Schlein, B.: Universality of random matrices and local relaxation flow. Invent. Math. 185, 75–119 (2011)

Erdős, L., Schnelli, K.: Universality for random matrix flows with time-dependent density. Ann. Inst. Henri Poincaré Probab. Stat. 53, 1606–1656 (2017)

Erdős, L., Yau, H.-T.: A Dynamical Approach to Random Matrix Theory. Courant Lecture Notes in Mathematics, vol. 28, p. ix+226. Courant Institute of Mathematical Sciences/American Mathematical Society, New York/Providence (2017)

Forrester, P., Nagao, T.: Eigenvalue statistics of the real Ginibre ensemble. Phys. Rev. Lett. 99, 050603 (2007)

Fyodorov, Y.V.: On statistics of bi-orthogonal eigenvectors in real and complex Ginibre ensembles: combining partial Schur decomposition with supersymmetry. Commun. Math. Phys. 363, 579–603 (2018)

Geman, S.: The spectral radius of large random matrices. Ann. Probab. 14, 1318–1328 (1986)

Ginibre, J.: Statistical ensembles of complex, quaternion, and real matrices. J. Math. Phys. 6, 440–449 (1965)

Girko, V.L.: The circular law. Teor. Veroyatnost. i Primenen. 29, 669–679 (1984)

Hachem, W., Hardy, A., Najim, J.: Large complex correlated Wishart matrices: the Pearcey kernel and expansion at the hard edge. Electron. J. Probab. 21(Paper No. 1), 36 (2016)

Huang, J., Landon, B., Yau, H.-T.: Bulk universality of sparse random matrices. J. Math. Phys. 56(123301), 19 (2015)

Johansson, K.: Universality of the local spacing distribution in certain ensembles of Hermitian Wigner matrices. Commun. Math. Phys. 215, 683–705 (2001)

Kanzieper, E., Akemann, G.: Statistics of real eigenvalues in Ginibre’s ensemble of random real matrices. Phys. Rev. Lett. 95(230201), 4 (2005)

Landon, B.: Convergence of local statistics of Dyson Brownian motion. Commun. Math. Phys. 355, 949–1000 (2017)

Landon, B., Yau, H.-T.: Edge statistics of Dyson Brownian motion, preprint (2017) arXiv:1712.03881

Lee, J.O., Schnelli, K.: Edge universality for deformed Wigner matrices. Rev. Math. Phys. 27(1550018), 94 (2015)

Lee, J.O., Schnelli, K.: Local law and Tracy–Widom limit for sparse random matrices. Probab. Theory Relat. Fields 171, 543–616 (2018)

Lee, J.O., Schnelli, K.: Tracy–Widom distribution for the largest eigenvalue of real sample covariance matrices with general population. Ann. Appl. Probab. 26, 3786–3839 (2016)

Lee, J.O., Schnelli, K., Stetler, B., Yau, H.-T.: Bulk universality for deformed Wigner matrices. Ann. Probab. 44, 2349–2425 (2016)

Lehmann, N., Sommers, H.-J.: Eigenvalue statistics of random real matrices. Phys. Rev. Lett. 67, 941–944 (1991)

Mehta, M.L.: Random Matrices and the Statistical Theory of Energy Levels, p. x+259. Academic Press, New York (1967)

Pastur, L., Shcherbina, M.: Bulk universality and related properties of Hermitian matrix models. J. Stat. Phys. 130, 205–250 (2008)

Pastur, L., Shcherbina, M.: On the edge universality of the local eigenvalue statistics of matrix models. Mat. Fiz. Anal. Geom. 10, 335–365 (2003)

Pearcey, T.: The structure of an electromagnetic field in the neighbourhood of a cusp of a caustic. Philos. Mag. (7) 37, 311–317 (1946)

Sodin, S.: The spectral edge of some random band matrices. Ann. Math. (2) 172, 2223–2251 (2010)

Sommers, H.-J., Wieczorek, W.: General eigenvalue correlations for the real Ginibre ensemble. J. Phys. A 41(405003), 24 (2008)

Soshnikov, A.: Universality at the edge of the spectrum in Wigner random matrices. Commun. Math. Phys. 207, 697–733 (1999)

Tao, T., Vu, V.: Random matrices: the circular law. Commun. Contemp. Math. 10, 261–307 (2008)

Tao, T., Vu, V.: Random matrices: universality of local eigenvalue statistics. Acta Math. 206, 127–204 (2011)

Tao, T., Vu, V.: Random matrices: universality of local eigenvalue statistics up to the edge. Commun. Math. Phys. 298, 549–572 (2010)

Tao, T., Vu, V.: Random matrices: universality of local spectral statistics of non-Hermitian matrices. Ann. Probab. 43, 782–874 (2015)

Tao, T., Vu, V.: Smooth analysis of the condition number and the least singular value. Math. Comput. 79, 2333–2352 (2010)

Tracy, C.A., Widom, H.: Level-spacing distributions and the Airy kernel. Commun. Math. Phys. 159, 151–174 (1994)

Tracy, C.A., Widom, H.: On orthogonal and symplectic matrix ensembles. Commun. Math. Phys. 177, 727–754 (1996)

Tracy, C.A., Widom, H.: The Pearcey process. Commun. Math. Phys. 263, 381–400 (2006)

Yin, J.: The local circular law III: general case. Probab. Theory Relat. Fields 160, 679–732 (2014)

Funding

Open access funding provided by Institute of Science and Technology (IST Austria).


Partially supported by ERC Advanced Grant No. 338804 and the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie Grant Agreement No. 665385.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cipolloni, G., Erdős, L. & Schröder, D. Edge universality for non-Hermitian random matrices. Probab. Theory Relat. Fields 179, 1–28 (2021). https://doi.org/10.1007/s00440-020-01003-7
