Abstract

Thermo-mechanical finite element (FE) models predict the thermal behavior of machine tools and the associated mechanical deviations. However, one disadvantage is their high computational expense, linked to the evaluation of the large systems of differential equations. Therefore, projection-based model order reduction (MOR) methods are required in order to create efficient surrogate models. This paper presents a parametric MOR method for weakly coupled thermo-mechanical FE models of machine tools and other similar mechatronic systems. This work proposes a reduction method, Krylov Modal Subspace (KMS), and a theoretical bound of the reduction error. The developed method addresses the parametric dependency of the convective boundary conditions using the concept of system bilinearization. The reduced-order model reproduces the thermal response of the original FE model in the frequency range of interest for any value of the parameters describing the convective boundary conditions. Additionally, this paper investigates the coupling between the reduced-order thermal system and the mechanical response. A numerical example shows that the reduced-order model captures the response of the original system in the frequency range of interest.

Similar content being viewed by others

1 Introduction

Machine tools, such as milling machines, grinding machines, or lathes, are complex mechatronic systems and key components in the manufacturing process. Increasing the precision of machine tools enables to manufacture more accurate parts required in many engineering applications. Among the different error sources limiting the precision of machine tools, thermally induced deviations are the main contributor to geometric errors in manufactured parts, as stated by Mayr et al. [18]. During the manufacturing process, the temperature distribution of machine tools is inhomogeneous and varies over time, leading to deformations of the structural parts. Internal and external sources are responsible for the time-varying temperature distribution, as summarized by Bryan [13]. Internal sources refer to heat losses at the machine elements during the manufacturing process, such as friction at the bearings. External sources refer to the surroundings of the machine tool, such as fluctuations of the environmental temperature of the workshop.

Virtual prototypes are a great asset to improve the thermo-mechanical behavior of machine tools, as explained by Altintas et al. [1]. They enable to test different design alternatives virtually before a physical prototype is available. Virtual prototypes are numerical models that describe the physical processes leading to errors in manufactured parts. Thermo-mechanical models of machine tools are based on the finite element (FE) discretization of the heat transfer and elasticity equations. However, discretized thermo-mechanical models of machine tools have a large scale due their geometrical complexity, requiring a high computational effort. Many engineering applications use surrogate models, a computationally efficient model reproducing the physical behavior of the high-fidelity model. Among the different techniques to create surrogate models, this work focuses on projection based model order reduction (MOR). MOR projects the original model into a lower dimensional subspace containing the most relevant information of the response of the system. As stated by Benner et al. [8], the main benefit of MOR is that it retains the systems structure enabling the traceability of the dynamical evolution. Additionally, the use of the underlying system structure facilitates the derivation of theoretical error bounds of the surrogate model. In comparison to other surrogate modeling techniques, MOR is an intrusive method, i.e. it needs to access and modify the system matrices.

Several works apply MOR to thermo-mechanical models of machine tools and other mechatronic systems. Herzog et al. [14] presented a thermo-mechanical model of a machine tool column. They concentrated on the problem of optimal sensor placement, i.e. selecting the optimal points to place temperature sensors in order to predict the thermally induced deviations. Finding the optimal location of the temperature sensors is an iterative process with a large amount of model evaluations. Thus, the authors proposed a reduced thermo-mechanical model based on proper orthogonal decomposition (POD). The thermo-mechanical model described the thermal response of a machine tool column to localized heat sources, representing the losses of a drive and friction of the ballscrew nut. Herzog et al. considered that the heat loads had a constant position over time, resulting in a time-invariant optimal sensor placement algorithm. However, relative movements between the machine tool axes lead to moving thermal contacts and heat loads. Therefore, efficient thermo-mechanical models of machine tools need to consider the position dependency of the thermal and mechanical response.

Lang et al. [16] compared two methods to trace the position dependency of the thermal behavior of reduced thermal models of machine tools. In their first approach, Lang et al. divided the trajectory of the path into several segments and calculated for each of them a reduction basis by means of balanced truncation (BT). During the movement of the machine tool, the model switches to the local reduced system that is the closest to the actual position. The second reduction approach focused on a global reduction method, i.e. one projection matrix valid for all the positions of the axes. The proposed reduction method is a parametric iterative rational Krylov algorithm (IRKA), which for each parameter sample selects the optimal expansion points and tangential directions in a \({\mathcal {H}}_2\) optimal sense. Both reduction approaches showed similar accuracy compared to the full model, while the time required for reduction of the parametric IRKA was considerably smaller than for the local basis with BT. However, the amplitude of the thermal response of machine tools decays at higher excitation frequencies. Therefore, MOR approaches capturing the thermal response of the system in frequency range of interest are more efficient. Lang et al. considered the position dependency of the temperature response. However, the stiffness of the machine tool varies at different positions of the axes. Thus, it is required to couple efficiently the temperature distribution to a mechanical model that can reproduce the position-dependent mechanical response.

Convective boundary conditions describe the interaction between the structural parts of the machine tool with the surrounding fluid media. Convective heat exchange is the main driving mechanism of the thermal response of the machine tools. It describes the heat exchange due to fluctuations of the environmental temperature [19, 24] or introduction of cooling fluid into the working space [22]. The heat transfer coefficient (HTC) is the proportionality constant between the convective heat flux and the temperature difference between the fluid and structure. The modification of the conditions of the external fluid flow results in a variation of the HTC. Therefore, efficient thermo-mechanical models need to enable the modification of the HTC. In the context of projection-based MOR, the HTC is a model parameter that needs to be traced after reduction, i.e. parametric MOR techniques are required.

Benner et al. [8] provided a comprehensive review of parametric MOR methods. The parametric reduction approaches are classified into two groups, namely local or global reduction bases. A local reduction basis is constructed evaluating the system response at several samples of the parameter space. In order to switch the value of the parameter, interpolating between reduced systems is required. There are several alternatives in the literature to perform this interpolation, such as interpolating between the locally reduced system matrices [17, 20], between the local subspaces [2], or between the transfer functions [6]. Parametric reduction with local bases provides efficient models valid for several discrete samples of the parameters. However, the convective boundary conditions of thermal models of machine tools vary continuously. Therefore, parametric MOR approaches with global reduction bases are more suitable for the models under investigation. These methods create a single set of projection bases for the values of the parameter space.

Several reduction approaches with global bases rely on bilinearization of the systems equations. These methods express the parametric system in a bilinear form, which is a special type of non-linear systems. Phillips [21] used functional series expansion for the reduction of bilinear systems with rational interpolation. Continuing the work of Phillips et al. [4] generalized the construction of the reduction basis matching a desired number of moments of the bilinear system. Breiten and Damm [10] proposed a reduction method for bilinear systems with Krylov subspace methods including expansion points at different values than zero. Benner and Breiten [7] presented a reduction approach for bilinear systems based on IRKA, named BIRKA. The authors proved that BIRKA is optimal in \({\mathcal {H}}_2\) for parameter-varying systems. Bruns and Benner [11] applied BIRKA to create a heat transfer model of an electrical motor. They investigated the parameters concerning the thermal contacts and convective heat transfer.

This paper proposes a novel parametric MOR reduction approach for thermo-mechanical models of mechatronics systems, such as machine tools. The presented MOR method considers the characteristic behavior of these models in order to build the reduction basis, enabling the possibility to trace the parameters describing the convective boundary conditions. The main contributions of this work can be summarized as:

-

Developing a novel MOR approach which efficiently represents the thermal behavior of the system in the frequency range of interest. Associated to the MOR method, an a-priori error estimator is proposed in order to have an upper bound of the error in the frequency range of interest.

-

Enabling the traceability of the parameters describing the convective boundary conditions, HTC, after reduction. The parametric MOR method ensures that the a-priori error estimator is an upper bound of the reduction error for any value of the HTC.

-

Coupling efficiently the thermal response to the mechanical structural deformations. For any temperature distribution, the reduced model needs to evaluate the thermally induced structural deformations.

2 Formulation of the parametric thermo-mechanical model

Thermo-mechanical models of machine tools describe the temperature distribution and the associated structural deformations. The temperature distribution is a continuous function \(T(t, \varvec{z})\) defined at every point \(\varvec{z}\) of the domain \(\varOmega \) over time. Based on the energy conservation principle, the heat transfer equation is a partial differential equation (PDE) describing the temporal and spatial evolution of the temperature field as

where \(c_p\) is the specific heat capacity, \(\rho \) is the material density, and \(\lambda \) is the thermal conductivity. The definition of the boundary and initial conditions ensure a unique solution of the PDE. The Neumann boundary conditions can be interpreted as a heat flux applied to a surface \(\varGamma _1\). Neumann boundary conditions are defined as

where \({\dot{q}}(t, \varvec{z})\) is the heat flux applied on the boundary \(\varGamma _1\). Convection is represented by Robin boundary conditions. The Robin boundary conditions are defined as

where \(h(t, \varvec{z})\) is the heat transfer coefficient and \(T_{ext}(t, \varvec{z})\) is the external temperature acting on \(\varGamma _2\). The Robin boundary condition represents the convective heat exchange between an external fluid media and the structure or the thermal contact between two parts. Finally, the initial condition is

over the whole domain \(\varOmega \).

The heat transfer equations describe the spatial distribution and temporal evolution of the temperature. However, the thermally induced mechanical deviations are the main output of interest. Therefore, a coupled thermo-mechanical model is required. The thermo-mechanical coupling implies that the temperature field affects the structural deformation due to non-zero thermal expansion coefficients. However, the mechanical work resulting from the deformation of the structure does not alter the temperature field. Thus, a weakly coupled mechanical system is needed. The assumption of weak coupling holds for thermo-mechanical models with small strain rates.

The force balance equation relates in continuum mechanics the stress tensor with the external forces as

where \(\varvec{\sigma }\) is the stress tensor at a point \(\varvec{z}\) in the domain \(\varOmega \) and \(\varvec{f}\) is the external force vector. Small deformations and strains are assumed, and thus a linear elastic model is considered. The stress tensor can be decomposed into an elastic part \(\varvec{\sigma }_e\) and a thermal part \(\varvec{\sigma }_{th}\). Considering the constitutive equation, they can be expressed as

where \(\varvec{\epsilon }\) is the strain tensor, E is the Young modulus, \(\nu \) is the Poisson modulus, \(\varvec{I}\) is the identity tensor, \(\alpha \) is the thermal expansion coefficient, and \(T_{ref}\) is the reference temperature. Under the assumption of small deformations, the strain tensor can be linearized as the gradient of the displacements \(\varvec{u}_s\) as

Numerical methods enable the solution of heat transfer and elasticity equations for general complex domains. The FE method is a well-established numerical method to solve the heat transfer and elasticity equations. Bathe [5] provides a comprehensive review of FE methods. Several commercial and open-source FE simulation packages are available, being extensively used in computer aided engineering (CAE). The FE-discretization interpolates a scalar field inside an element e with the values at the nodes. The interpolation functions are called antsatz functions \(\varvec{n}_e(\varvec{z})\), defined for any point \(\varvec{z}\) inside the domain of the element \(\varOmega ^e\). Considering the interpolation of the temperature field with the ansatz function and the principle of virtual work, the PDE of Eq. (1) to (4) is transformed into a system of ordinary differential equations (ODE) for the element e. Assembling for all the elements of the FE-mesh, the ODE for the whole system can be obtained as

where \(\varvec{C}_{th}\) is the thermal capacity matrix, \(\varvec{K}_{cond}\) is the thermal conductivity matrix, \(\varvec{K}_{conv}\) is the thermal convection matrix, \(\varvec{q}_{ext}\) is the thermal heat input vector, and \(\varvec{x}\) is the temperature state vector. The thermal heat input vector, \(\varvec{q}_{ext}\) , is a vector considering external heat fluxes applied on the boundary \(\varGamma _1\) and the convective heat fluxes applied to the boundary \(\varGamma _2\), according to Eqs. 2 and 3 .

From the different physical parameters describing Eq. (9), the value of the HTC are of special interest for thermo-mechanical models of machine tools. The FE-discretization of the Robin boundary condition affects both the convection matrix \(\varvec{K}_{conv}\) and the heat input vector \(\varvec{q}_{ext}\). Equation (3) defines the HTC, \(h(t, \varvec{z})\), as the proportionality constant between the convective heat flux and the temperature difference between the structure and an external temperature. For the following derivations, it is assumed that the spatial distribution of the HTC does not change over time. Therefore, the HTC can be expressed separating the spatial and temporal dependency as \(h(t, \varvec{z}) = h(t)w(\varvec{z})\). Considering this, the parametric dependency of the convection matrix and heat input vector of an element e can be expressed as

Thermal FE models usually discretize the convective boundary condition into a finite number of boundaries with the same HTC,

where \(n_c\) is the number of independent boundaries. The HTCs for all individual boundaries, \(h_i(t)\), can be arranged in a parameter vector \(\varvec{p} \in {\mathbb {R}}^{n_c \times 1}\). Therefore, the system of ODE of Eq. (9) can be expressed in state space representation as a function of \(\varvec{p}\) as

where \(\varvec{E} \in {\mathbb {R}}^{n \times n}\) is the left-hand side system matrix or mass matrix, \(\varvec{A}(\varvec{p}) \in {\mathbb {R}}^{n \times n}\) is the system matrix, \(\varvec{B}\in {\mathbb {R}}^{n \times m}\) is the input matrix, \(\varvec{x}(t) \in {\mathbb {R}}^{n \times 1}\) is the state vector, and \(\varvec{u}(t)\in {\mathbb {R}}^{m \times 1}\) is the input vector. The input vector of Eq. (13) represents the magnitude of the heat flux, which can be either external or the convective heat fluxes. Considering the assumptions leading to Eq. (10), the parametric dependency of the state space representation of the Eq. (13) can be expressed in an affine form as

where \(\varvec{D}_i\) represents the convection matrix for each boundary \(\varGamma _i\). The temperature output vector of the system \(\varvec{y}_{th}(t)\) is defined as

where \(\varvec{C}_{therm}\in {\mathbb {R}}^{p \times n}\) is the thermal output matrix. The FE discretization of Eq. (5) leads to system of linear equations

where \(\varvec{K}\) is the stiffness matrix, \(\varvec{K}_{th}\) is the thermal coupling matrix, \(\varvec{x}_{ref}\) is the reference temperature vector, \(\varvec{f}_{ext}\) is the external force vector, and \(\varvec{x}_{mech}\) is the displacement state vector. The suffix \(_{mech}\) refers to the mechanical systems. On one hand, the thermal states \(\varvec{x}\) are an input of the mechanical system. On the other hand, external mechanical forces, such as preloads at the machine tool elements, affect the response of the mechanical system. Therefore, instead of defining the mechanical deviations as an output of the system of Eq. (13), a separate state \(\varvec{x}_{mech}\) is defined. Defining a separate state and system matrix has another practical advantage during model development. It enables the creation and validation of a mechanical model of the structure and then extend it in order to consider thermo-mechanical effects. Therefore, having an dedicated mechanical model is beneficial from a practical point of view.

The state space representation of the mechanical system is

where \(\varvec{K}\) is the mechanical system matrix or stiffness matrix, \(\varvec{B}_{ext}\) is the input matrix of the external mechanical forces, and \(\varvec{u}_{ext}(t)\) is the input vector of the external mechanical forces. The product \(\varvec{B}_{ext}\varvec{u}_{ext}(t)\) refers to external mechanical forces or torques applied to the mechanical degrees of freedom (DOF) of the system.

In order to complete the state space representation, an output vector is defined as \(\varvec{y}_{mech}(t)\)

where \(\varvec{C}_{mech}\) is the output matrix. The output of the mechanical model are the displacements at several points of interest, such as the relative deviations between tool and workpiece.

3 Formulation of the reduced-order model

The FE-discretization of thermo-mechanical models of machine tools leads to the system equations describing the thermal transient behavior. In order to increase the computational efficiency of the models, this work proposes to create surrogate models by projection-based MOR. MOR methods construct a subspace containing the most relevant information of the high fidelity model.

Let \({\mathcal {V}}\) be the reduced subspace of dimension \(r \ll n\), where n is the dimension of the original system. Let \(\varvec{V} \in {\mathbb {R}}^{n \times r}\), such that \({\mathcal {V}} = \text {span} (\varvec{V})\). The reduced state of the system \(\varvec{\tilde{x}}(t)\) is defined as

The different MOR approaches propose different methods to construct the reduction basis \(\varvec{V}\). Once the reduction subspace is defined, the thermal system is projected into the reduction subspace substituting Eq. (19) into Eqs. (14) and (15). The system can be expressed as

By enforcing the Petrov–Galerkin condition, a basis \(\varvec{W}\) can be found such that

leading to the projected system matrices \(\varvec{\tilde{E}}\), \(\varvec{\tilde{A}}\), \(\varvec{\tilde{D}}_i\), \(\varvec{\tilde{B}}\), and \(\varvec{\tilde{C}}_{therm}\) and the following reduced system

The dimension of the reduced output is \(\varvec{\tilde{y}}(t)\in {\mathbb {R}}^{p \times 1}\), which is the same as the original thermal output \(\varvec{y}_{th}(t)\) of Eq. 15.

This work considers one-sided projection, i.e. \(\varvec{W} = \varvec{V}\). As explained by Antoulas [3], the main advantage of one-sided projection is that it ensures the preservation of the stability after projection.

4 Krylov Modal Subspace (KMS) reduction

The goal of this work is to create a reduced system that reproduces the thermal response for any value of the parameters describing the convective heat exchange between the structure and the surrounding fluid. Equation (14) presents an affine representation of the parametric dependency, separating the system matrix into two terms. The first step considers the thermal part of the system that does not depend on the parameter. A reduction basis is created such that the reduced system reproduces the response of the system where the values of the parameters are set to a fixed value. This section introduces the MOR approach and an error bound for the error between the original and the reduced-order model. In a second step, the projection basis is extended in order to enable the modification of the HTC after reduction, which is the focus of the next section.

The projection basis needs to capture the most relevant part of the response of the system. The thermal models of mechatronic systems, such as machine tools, have a certain characteristic behavior compared to other general first order systems. Firstly, the amplitude of the thermal response of the system decays at higher excitation frequencies. Therefore, the reduced system only needs to approximate the thermal response of the system in a low frequency range. Secondly, the steady state response is relevant in order to describe the behavior of the system. Thus, the reduced system needs to match the steady state temperature field of the original system for the different input and output combinations.

This work proposes a reduction method that considers the characteristic behavior of thermal models of machine tools to construct the projection basis. On one hand, moment matching Krylov subspace methods approximate the response of the system around an expansion point \(s_e\). The expansion point is placed at a low frequency close to zero in order to match the steady state response of the system. On the other hand, the eigenvectors of the system up to a certain frequency are included in the projection basis. Therefore, the thermal reduced system reproduces the thermal behavior of the system in the frequency range of interest. Spescha [23] introduced the concept of KMS reduction with application in structural dynamics. This paper extends the KMS method to first order systems and presents a theoretical bound of the reduction error.

Let \({\mathcal {V}}_k \subset {\mathbb {R}}^{n} \) be the Krylov subspace with one expansion point \(s_e\), such that

An orthonormal basis \(\varvec{V}_k\) of \({\mathcal {V}}_k\) can be constructed, such that \({\mathcal {V}}_k = \text {span} (\varvec{V}_k)\). The original system of Eq. (13) can be projected into the subspace \({\mathcal {V}}_k\). The reduced system matches the response of the system around the expansion point \(s_e\). If an expansion point \(s_e\) is chosen close to 0, the reduced system matches the steady state response.

Let \({\mathcal {V}}_{\mu } \subset {\mathbb {R}}^{n} \) be the truncated modal subspace. The subspace \({\mathcal {V}}_{\mu }\) is the span of the first \(\mu \) eigenvectors of the system of Eq. (13), defined as

where \(\varvec{\phi }_i\) is an eigenvector associated to the eigenvalue \(\alpha _i\) such that \((s_e\varvec{E} - \varvec{A})\varvec{\phi }_i = \alpha _i \varvec{\phi }_i\). The system matrices \(\varvec{A}\) and \(\varvec{E}\), defined in Eqs. (9), (10), (11), and (13), are negative semi-definite, resulting in all real non-positive eigenvalues. Therefore, the eigenvectors form an orthonormal basis \(\varvec{V}_{\mu }\) of \({\mathcal {V}}_{\mu }\), such that \({\mathcal {V}}_{\mu } = \text {span} (\varvec{V}_{\mu })\). The original system of Eq. (13) can be projected into the subspace \({\mathcal {V}}_{\mu }\). The reduced system approximates the dynamic thermal behavior in a certain frequency range.

The KMS reduction projects the system of Eq. (13) by means of a projection matrix \(\varvec{V}\). The basis \(\varvec{V}\) spans linear subspace \({\mathcal {V}} \subset {\mathbb {R}}^{n} \), i.e. \({\mathcal {V}} = \text {span} (\varvec{V})\), such that

The subspace \({\mathcal {V}}\) captures the information about both the steady state response and the thermal transient behavior of the system. Therefore, this projection basis satisfies the requirement for accurate approximation of the thermal behavior of mechatronic systems.

4.1 A-priori error estimator

The reduced system needs to represent accurately the thermal response of the system in the frequency range of interest, i.e. for all \(\omega \in [0 , \omega _{max}]\). The upper bound of the frequency range of interest, \(\omega _{max}\), determines how many eigenvectors, \(\mu \), are to be included in the KMS reduction basis. Therefore, this work proposes an a-priori error estimator for the KMS method that relates the maximum eigenfrequency, \(\omega _{\mu } = \left|\alpha _{\mu }\right|\), of the KMS basis with the frequency range of interest.

The error estimator presented in this section considers that the input and output matrix of Eqs. (13) and (15) are the same, i.e. \(\varvec{B} = \varvec{C}^T_{therm}\), leading to one-sided projection. For the derivation of the error estimator of the KMS method, the Krylov subspace defined in Eq. (23) has an expansion point \(s_e \in {\mathbb {R}}\) close to zero and a single iteration. Before introducing the error estimator, some definitions and preliminary results are introduced.

Firstly, a suitable error definition is required. Different error values are proposed in the literature, as summarized by Benner et al. [8]. Let \(\varvec{E}(j\omega )\) be the absolute reduction error frequency response function (FRF) defined for each frequency \(\omega \) as

where \(\varvec{H}(j\omega )\) and \(\varvec{\tilde{H}}(j\omega )\) are the FRF of the original and reduced system respectively. \(\varvec{E}(j\omega )\) is a matrix of dimension p (number of outputs) by m (number of inputs). Let \(e_{ij}(j\omega )\) be the relative error for the ith input and jth output combination as

where \(h_{ij}(j\omega )\) is the element in ith row and jth column of \(\varvec{H}(j\omega )\), and \(\tilde{h}_{ij}(j\omega )\) is the element in ith row and jth column of \(\varvec{\tilde{H}}(j\omega )\).

Secondly, some remarks about the subspaces associated to the KMS \({\mathcal {V}}\) are required. The subspace \({\mathcal {V}}_{\nu }\subset {\mathbb {R}}^{n}\) can be defined as the subspace of the remaining modes not included in \({\mathcal {V}}_{\mu }\), i.e.

Due to the properties of the system matrix, the subspace \({\mathcal {V}}_{\mu }\) is the orthogonal complement of \({\mathcal {V}}_{\nu }\), such that \({\mathbb {R}}^{n} = {\mathcal {V}}_{\mu } \oplus {\mathcal {V}}_{\nu }\). Let \(\varvec{\varPhi }\) be a matrix whose columns are the eigenvectors \(\varvec{\phi }_i\) of the system of Eq. (13), normalized to the capacity matrix such that \(\varvec{\varPhi }^T\varvec{E} \varvec{\varPhi } = \varvec{I}\). The system of Eq. (13) and (15) can be expressed in modal coordinates \( \varvec{x} = \varvec{\varPhi }\varvec{x}_m\) as

where \(\varvec{\varOmega } = \text {diag}(\alpha _1, \dots , \alpha _n)\) is a diagonal matrix with the n eigenvalues \(\alpha _k\) of the system. The system matrix can be expressed as block matrix, such that

Let \({\mathcal {V}}_{\nu k} \subset {\mathbb {R}}^{n} \) be the Krylov subspace with one moment around the expansion point \(s_e \in {\mathbb {R}}\) of the original system projected into the subspace \({\mathcal {V}}_{\nu }\), such that

The definition of \({\mathcal {V}}_{\nu k}\) leads to a preliminary result expressed in Fact 1.

Fact 1

The KMS \({\mathcal {V}}\) is the direct sum of \({\mathcal {V}}_{\mu }\) and \({\mathcal {V}}_{\nu k}\).

Proof

The Krylov subspace of the system in modal coordinates Eq. (29) is

As \(s_e\varvec{I}+\varvec{\varOmega }\) is a diagonal matrix, the Krylov subspace of the original system can be expressed as

The \({\mathcal {V}}_{\mu k}\) is a subspace of \({\mathcal {V}}_{\mu }\), i.e. \({\mathcal {V}}_{\mu k} \subset {\mathcal {V}}_{\mu }\). Therefore, \({\mathcal {V}} = {\mathcal {V}}_k +{\mathcal {V}}_{\mu } = {\mathcal {V}}_{\mu k} + {\mathcal {V}}_{\nu k} + {\mathcal {V}}_{\mu } = {\mathcal {V}}_{\nu k} + {\mathcal {V}}_{\mu } \).

In addition, \({\mathcal {V}}_{\nu k}\) is also a subspace of \({\mathcal {V}}_{\nu }\), i.e. \({\mathcal {V}}_{\nu k} \subset {\mathcal {V}}_{\nu }\). Since \({\mathcal {V}}_{\nu } \cap {\mathcal {V}}_{\mu } = \varvec{0}\), it follows that \({\mathcal {V}}_{\nu k} \cap {\mathcal {V}}_{\mu } = \varvec{0}\). This implies that \({\mathcal {V}}_{\nu k}\) is the orthogonal complement of \({\mathcal {V}}_{\mu }\), i.e. \({\mathcal {V}} = {\mathcal {V}}_{\nu k} \oplus {\mathcal {V}}_{\mu }\). \(\square \)

Fact 1 relates the subspaces \({\mathcal {V}}\), \({\mathcal {V}}_{\mu }\), and \({\mathcal {V}}_{\nu k}\), stating that any vector in \({\mathcal {V}}\) can be decomposed uniquely into two terms, one in \({\mathcal {V}}_{\mu }\) and another one in \({\mathcal {V}}_{\nu k}\). This property is useful to separate the error of the reduced system, as shown in Fact 2.

Fact 2

The error \(e_{ij}(j \omega )\) of Eq. (27) can be separated as the product of two terms

being \(e_{\mu _{ij}}(j\omega )\) and \(e_{\nu k_{ij}}(j\omega )\) defined as

where \(\tilde{h}_{\mu _{ij}}(j\omega )\) is the element in ith row and jth column of the FRF of the system projected into \({\mathcal {V}}_{\mu }\), \(\tilde{h}_{\nu _{ij}}(j\omega )\) is the element in ith row and jth column of the FRF of the system projected into \({\mathcal {V}}_{\nu }\), and \(\tilde{h}_{\nu k_{ij}}(j\omega )\) is the element in ith row and jth column of the FRF of the system projected into \({\mathcal {V}}_{\nu k}\).

Proof

The Fact 1 enables to express the FRF of the reduced system as \(\tilde{h}_{ij}(j \omega ) = \tilde{h}_{\mu _{ij}}(j \omega ) + \tilde{h}_{\nu k_{ij}}(j \omega )\). Additionally, the transfer function of the original system can be expressed as \(h_{ij}(j \omega ) = \tilde{h}_{\mu _{ij}}(j \omega ) + \tilde{h}_{\nu _{ij}}(j \omega )\). Substituting in the error definition

Multiplying the previous expression by \(\frac{\tilde{h}_{\nu _{ij}}(j \omega )}{\tilde{h}_{\nu _{ij}}(j \omega )}\), the following is obtained

Substituting \(\tilde{h}_{\nu _{ij}}(j \omega )\) in the numerator by \(h_{ij}(j \omega ) - \tilde{h}_{\mu _{ij}}(j \omega )\) and reorganizing the terms, the error separation of Eq. (37) is obtained as

\(\square \)

The result of Fact 2 enables the separation of the error in two terms. The goal is to find an bound for the error \(e_{ij}(j \omega )\). The first step for an error bound of the KMS method is to determine an upper bound for the term \(e_{\nu k_{ij}}(j \omega )\). The following theorem shows a theoretical error bound for all frequencies of the term \(e_{\nu k_{ij}}(j \omega )\), provided that the input and the output are the same, namely \(i = j\).

Theorem 1

The magnitude of the error \(e_{\nu k_{ii}}(j \omega )\) defined in Eq. (37) is bounded by \(e_{est}(j\omega )\) defined as

for all \(\omega \in [0 , \infty ]\) such that \(\omega \in {\mathbb {R}}\) given that \(\omega _{m} < \omega _{mo+1}\) and that the input and the output are the same, i.e. \(i = j\).

Proof

The FRF of the system projected into \({\mathcal {V}}_{\nu }\), is

where \(\nu = n - \mu \) is the dimension of the subspace \({\mathcal {V}}_\nu \), \(\omega _{k}\) are the absolute value of the eigenvalues of the system, and \(b_k\) corresponds to the kth element of the ith column of \(\varvec{B}_{\nu }\). The subspace \({\mathcal {V}}_{\nu k}\) can be defined according to Eq. (33) as \(\text {range}(\varvec{v})\) where

Projecting the system of Eq. (44) into \({\mathcal {V}}_{\nu k}\), the following system is obtained

where \(\tilde{e}\), \(\tilde{a}\), and \(\tilde{b}\) take the following values

The transfer function of the reduced system \(\tilde{h}_{\nu k}\) is

Firstly, some properties of the error \(e_{\nu k_{ii}}(j \omega )\) need to be discussed. According to Eq. (39), the poles of the transfer function of the real error, \(e_{\nu k_{ii}}(s)\) with \(s \in {\mathbb {C}}\), are the poles of \(\tilde{h}_{\nu k_{ij}}(s)\), the poles \(\tilde{h}_{\nu _{ii}}(s)\), and the zeros of \(\tilde{h}_{\nu _{ii}}(s)\). Given that the system matrices are positive semi-definite, all the poles of \(\tilde{h}_{\nu _{ii}}(s)\) are real. Additionally, the poles of \(\tilde{h}_{\nu _{ii}}(s)\) are real, as it can be seen from Eq. (47). Furthermore, the zeros of the transfer function \(\tilde{h}_{\nu _{ii}}(s)\) are real numbers. This fact can be shown by contradiction. Let \(s = \alpha \pm j\beta \) be zeros of the transfer function \(\tilde{h}_{\nu _{ii}}(s)\), such that \(\beta > 0 \). The zero of the transfer function needs to satisfy that

Given that \(\beta > 0 \) and \(\frac{b_k^2 }{((\alpha +\omega _{k})^2 + \beta ^2)} \ge 0\), the transfer function is only zero for \(\beta = 0\), reaching a contradiction. Therefore, the zeros of transfer function \(\tilde{h}_{\nu _{ii}}(s)\) are real. Thus, the poles of \(e_{\nu k_{ii}}(j \omega )\) are all real. This leads to a smooth FRF response, without any resonance frequency. This property is relevant for the derivation of the error bound.

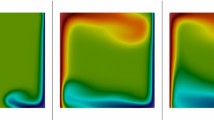

In order to proof that the proposed estimator of Eq. (43) bounds the error for all frequencies, several counterexamples are created, which are illustrated in Fig. 1. Figure 1a shows the case where the magnitude of the error, \(e_{\nu k_{ii}}(j\omega )\), is higher than the magnitude of the estimator, \(e_{est}(j\omega )\), at high frequencies. Figure 1b depicts the case where the poles of the error at lower frequencies than the poles of the estimator. The third counterexample in Fig. 1c illustrates the case where the slope of the error is lower than the slope of the estimator. The slope of the error is related with the number of zeros at low frequency. The error estimator has two zeros at the expansion point \(s_e\). Thus, it needs to be shown that the actual error at least two zeros at the expansion point, which is placed close to zero. Figure 1d shows graphically that if the other three counterexamples are not true, the error estimator is an upper bound of the FRF of the of the real error for the whole frequency range.

Therefore, it needs to be proven that the counterexamples of Fig. 1 are not possible following the next steps:

-

1:

\(\lim \limits _{\omega \rightarrow \infty }|e_{est}(j \omega ) |> \lim \limits _{\omega \rightarrow \infty }|e_{\nu k_{ii}}(j \omega ) |\)

-

2:

All the poles of the actual error \(e_{\nu k_{ii}}(j \omega )\) are at a higher frequency than the ones of the error estimator \(e_{est}(j \omega )\)

-

3:

\(e_{\nu k_{ii}}(j \omega )\) has at least two zeros at \(\omega \) close to zero

The first condition refers to the magnitude of the FRF at infinity, which is associated to the Markov parameters. From the definition of the error estimator \(\lim \limits _{\omega \rightarrow \infty }\left|e_{est}(j\omega ) \right|= 1\). The magnitude of \(e_{\nu k_{ii}}(j \omega )\) at infinity can be expressed as

In order to evaluate the limit of the error, the limit \(\lim \limits _{\omega \rightarrow \infty }\frac{\tilde{h}_{\nu k_{ii}}(j \omega )}{\tilde{h}_{\nu _{ii}}(j \omega )}\) needs to be evaluated.

where \(\frac{\tilde{b}^2}{\tilde{e} }\) can be expressed according to Eq. (47) as

Equation (51) shows that the limit \(\lim \limits _{\omega \rightarrow \infty }\frac{\tilde{h}_{\nu k_{ii}}(j \omega )}{\tilde{h}_{\nu _{ii}}(j \omega )}\) is a positive real number. Therefore, the condition on the magnitude of the error of Eq. (50) can be expressed as

In fact, an upper bound for the term \(\frac{\tilde{b}^2}{\tilde{e} }\) can be found as follows

considering that \(\omega _1 \le \omega _k\) for all \(k \in [1, \nu ]\). The upper bound for the term \(\frac{\tilde{b}^2}{\tilde{e} }\) implies that the condition of Eq. (53) is satisfied. Therefore, the magnitude of the actual error is bounded by 1, as stated in Eq. (51). This completes the first step of the proof, showing that the magnitude of the error estimator is an upper bound of the magnitude of the actual error, \(e_{\nu k_{ii}}(j \omega )\), at infinity.

The second step in this proof is ensuring that the poles of the actual error are at a higher frequency than the ones of the error estimator. Let \(e_{\nu k_{ii}}(s)\) the transfer function of the actual error, where \(s \in {\mathbb {C}}\). The error estimator, \(e_{est}(s)\), defined in Eq. (43) has two poles at \(\omega _m\). The poles of the error \(e_{\nu k_{ii}}(s)\) are the poles of \(\tilde{h}_{\nu k_{ii}}(s)\), the poles \(\tilde{h}_{\nu _{ii}}(s)\), and the zeros of \(\tilde{h}_{\nu _{ii}}(s)\), which need to be at a lower frequency than \(\omega _m\).

The poles of \(\tilde{h}_{\nu _{ii}}(s)\) are \(\omega _1 \dots \omega _\nu \). By definition, these poles are at a higher frequency than \(\omega _m\).

The zeros of \(\tilde{h}_{\nu _{ij}}(s)\) are real numbers, as discussed in the beginning of this proof. Furthermore, it needs to be shown that these zeros are at higher frequencies than \(\omega _m\), which is proven by contradiction. Assume that \(\omega _0\) is a zero of the transfer function, i.e. \(\tilde{h}_{\nu _{ii}}(s=-\omega _0)=0\), such that \(0<\omega _0 <\omega _1\). Substituting this into Eq. (44), the transfer function is

However, given that \(0\le \omega _1 \le \omega _{\nu }\) the all the terms of the summation are strictly positive. Thus, \(\tilde{h}_{\nu _{ii}}(s=-\omega _0)\ne 0\), reaching a contradiction. Therefore, it is shown that the zeros of the transfer function of the reduced system \(\tilde{h}_{\nu _{ii}}(s)\) are at a higher frequency than \(\omega _m\).

The pole \(\tilde{h}_{\nu k_{ii}}(s)\), \(\omega _{\nu k_{ii}}\), can be written as

which can be understood as a weighted summation, where the weight factors are \(\frac{b_{k}^2}{(\omega _{k}+s_e)^2}\). This shows that the poles of the transfer function satisfy \(\omega _1 \le \omega _0 \le \omega _r \). The location of \(\omega _0\) depends on the static gains, \(b_{k}\). The more controllable and observable a mode \(\omega _k \) is, the closer \(\omega _0 \) is to \(\omega _k\). Thus, the pole of the transfer function of the reduced system \(\tilde{h}_{\nu k_{ii}}(s)\) is at a higher frequency than \(\omega _m\). This concludes the second step, ensuring that the poles of the actual error \(e_{\nu k_{ii}}(s)\) are at a higher frequency than the ones of the error estimator.

The third step needs to ensure that the transfer function of the actual error, \(e_{\nu k_{ii}}(s)\), has at least two zeros close to zero. The zeros of the actual error are related with the zeros \(\tilde{h}_{\nu k_{ii}}(s) - \tilde{h}_{\nu _{ii}}(s)\). Due to the properties of moment matching Krylov reduction, the reduced-order system matches at least two moments of the transfer function at the expansion point, \(s_e\), as explained by Antoulas [3]. By choosing the expansion point sufficiently low, the \(\tilde{h}_{\nu k_{ii}}(s) - \tilde{h}_{\nu _{ii}}(s)\) has two zeros close to zero. Therefore, the transfer function \(e_{\nu k_{ii}}(s)\) has also two zeros close to zero.

This proof shows that the error \(e_{\nu k_{ii}}( j \omega )\) has a bounded magnitude at infinity, the poles of the error are a higher frequency than the poles of the estimator, and the error has at least two zeros close to zero frequency. The combination of these three conditions proves that the magnitude of the error \(e_{\nu k_{ii}}( j \omega )\) is bounded by the estimator \(\left|e_{est}(j\omega ) \right|\).

\(\square \)

The result of Theorem 1 proposes an error bound of the term \(e_{\nu k_{ii}}(j \omega )\) of Eq. (37). In order to estimate the reduction error, \(e_{ii}(j \omega ) \), an upper bound of the term \(e_{\mu _{ii}}(j \omega )\) of Eq. (37) is also required.

Fact 3

The magnitude of the error \(e_{\mu _{ii}}(j \omega )\) defined in Eq. (37) is bounded by 1, i.e.

for all \(\omega \in [0 , \infty ]\) such that \(\omega \in {\mathbb {R}}\).

Proof

The FRF of the error \(e_{\mu _{ii}}(j \omega )\) defined in Eq. (38) of Fact 2 can be expressed as

considering that \(\tilde{h}_{\nu _{ii}}(j\omega ) = h_{ii}(j\omega ) -\tilde{h}_{\mu _{ii}}(j\omega )\), as shown in Fact 1. In order to prove this fact, the two extreme conditions are considered. Firstly, the case where all modes in \({\mathcal {V}}_{\nu }\) are not observable and not controllable is analyzed. In this case, the magnitude of \(\tilde{h}_{\nu _{ii}}\) is zero. Thus, the magnitude of the error \( \left|e_{\mu _{ii}}(j \omega ) \right|\) is zero for all frequencies, as all the relevant modes describing the response of the system are contained in \({\mathcal {V}}_{\mu }\). Secondly, it is considered that all modes in \({\mathcal {V}}_{\mu }\) are not observable and not controllable. In this case, the original system and the system projected into \({\mathcal {V}}_{\nu }\) are the same, i.e. \(\tilde{h}_{\nu _{ii}} = h_{ii}(j \omega )\). Thus, the magnitude of the error \( \left|e_{\mu _{ii}}(j \omega ) \right|\) is 1, as none of the relevant modes describing the response of the system are included in \({\mathcal {V}}_{\mu }\). These two cases represent the two extreme conditions. For any other case, some of the modes \({\mathcal {V}}_{\mu }\) and \({\mathcal {V}}_{\nu }\) are observable and controllable, leading to a magnitude of the error between 0 and 1. Therefore, \(\left|e_{\mu _{ii}}(j\omega ) \right|\le 1\) for all frequencies. \(\square \)

The results of Theorem 1 and Fact 3 state that an error bound of \(e_{ii}(j \omega )\) for the KMS reduction error is

Theorem 1 shows a theoretical error bound for the KMS reduction, provided that the same inputs and outputs are considered. This error bound estimates a-priori the error and enables to choose how many modes need to be included in the KMS projection basis. Provided a frequency range of interest \([0, \omega _{max}]\), the error estimator determines that all modes under \(\omega _{m}\) need to be included in the reduction basis so that the magnitude of the error of the reduced-order system, \(\left|e_{ii}(j \omega ) \right|\), does not exceed a certain value \(\epsilon \). Section 7 illustrates the validity of the error estimator with numerical examples for different combinations of the inputs and outputs.

5 Parametric KMS reduction

The KMS method creates a reduction basis that captures the most relevant information of the reduction system in the frequency range of interest. This section presents a method to extend the KMS basis to include the possibility to modify the values of the HTC in the reduced system. The system of Eq. (14) shows the parametric dependency as an affine representation. This affine formulation of the system can be interpreted as bilinear system. The term \(\sum _{i=1}^{n_c}h_i\varvec{D}_i \varvec{x}(t)\) can be understood as an input of the system that depends linearly on the state. Bilinear systems are a special class of non-linear systems that are linear in the input and state, but not jointly linear in the input and state.

Before presenting the reduction approach, several considerations are required on the convection matrices \(\varvec{D}_i\) of Eq. (14). Equation (10) defines the convection matrix of an element e, which is integrated numerically. The evaluation of the integral at the Gauss points leads to off-diagonal terms in \(\varvec{K}^e_{conv}\). This is called consistent convection matrix. However, it is customary to diagonalize this matrix in order to avoid numerical oscillations close to the convective boundary condition, as pointed out by Bruns [12]. Therefore, a diagonal convection matrix \(\varvec{D}_i\) is considered, with zeros for all the DOF not affected by the convective boundary condition i. The diagonal convection matrix \(\varvec{D}_i\) can be understood as a linear map \({\mathcal {D}}_i\), whose range has a dimension equal to the number of nodes in the convective boundary, \(n_{d_i}\).

Another important consideration for the development of the reduction method is determining the dimension of the subspace spanned by \((s_e\varvec{E-\varvec{A}})^{-1}\varvec{D}_i\). The matrix \((s_e\varvec{E-\varvec{A}})^{-1}\) can be understood as a linear transformation, \({\mathcal {A}}\). Given that \((s_e\varvec{E-\varvec{A}})^{-1}\) is invertible, \({\mathcal {A}}\) is a bijective linear transformation, i.e. \(\text {dim}( \text {range} ((s_e\varvec{E-\varvec{A}})^{-1})) = n\). Thus,

The intersection of the subspaces spanned by the several convection matrices also needs to be discussed. In thermo-mechanical models of mechatronic systems, the different convective boundary conditions affect different surfaces. Thus, it can be stated that \(\varGamma _i \cap \varGamma _j = 0 \, \forall i\ne j\). Therefore

This can be extended to the range of \({\mathcal {A}} \circ {\mathcal {D}}_i\) as

Let \(\varvec{V}_{KMS}\) be the KMS reduction basis of the system without parametric convective boundary conditions. The basis \(\varvec{V}_{KMS}\) spans the linear subspace defined in Eq. (25). All the states in the KMS are a linear combination of the columns of \(\varvec{V}_{KMS}\), i.e. \(\varvec{x} \in \text {span}(\varvec{V}_{KMS})\).

In order to consider the consider the state-dependent inputs, \(\varvec{D}_i \varvec{x}\), an iterative approach is applied. The states multiplied by the convection matrix, i.e. \(\varvec{D}_i\varvec{V}_{KMS}\), provide a new set of inputs for the reduction. The matrix \(\varvec{D}_i\varvec{V}_{KMS}\) can be added as further inputs to the Krylov subspace of Eq. (23), providing a new set of vectors \(\varvec{V}^1_{i}\). This set of new basis vectors multiplied by the convection matrix, i.e. \(\varvec{D}_i\varvec{V}^1_{i}\), provide new inputs. They can be added to the Krylov subspace delivering a new set of basis vectors \(\varvec{V}^2_{i}\). This iterative process can be expressed as

where \(n_{me}\) is the number of iterations. The sum of the subspaces spanned by the matrices \(\varvec{V}_{KMS}\), \(\varvec{V}^1_{i}\), \(\dots \), \(\varvec{V}^k_{i}\), \(\dots \), \(\varvec{V}^{n_{me}}_{i}\) is the reduced subspace \({\mathcal {V}}_{i}\)

Comparing the definition of \({\mathcal {V}}_{i}\) with the definition of the Krylov subspace, it can be stated that

For the creation of the subspace \({\mathcal {V}}_{i}\), \(n_{me} < n_{d_i}\) terms are selected. This process of adding new vectors to the reduction basis \(\varvec{V}_{KMS}\) can be understood as a Krylov reduction of the subspace spanned by \((s_e\varvec{E-\varvec{A}})^{-1}\varvec{D}_i\). The reduced subspace can be calculated for all the \(n_c\) convective boundary conditions. Given \({\mathcal {V}}_{i}\) and \({\mathcal {V}}_{j}\) for two different convective boundary conditions, it can be stated that

which comes from the properties of the convection matrices shown in Eq. (62). The reduction subspace \({\mathcal {V}}_{p}\) is the addition of subspaces \({\mathcal {V}}_{i}\) as

where \(\varvec{V}\) is an orthonormal basis of the subspace \({\mathcal {V}}_{p}\).

The KMS reduction considers certain modes of the system, \(\varvec{V}_{\mu }\), in order to form the projection basis. The KMS method selects modes up to a certain frequency. The error estimator ensures that the error to the original system remains below a given tolerance for the frequency range of interest. However, the system matrix changes with the introduction of the convective boundary conditions, as shown in Eq. (14). Thus, the eigenvalues and eigenvectors of the system change. It is required to determine if more modes need to be considered in order to ensure that the error remains bounded below a certain \(\epsilon \) in the frequency range of interest.

In order to investigate this, the Weyl’s Inequality Theorem is presented as a preliminary result. Theorem 2 bounds the eigenvalues of the sum of two matrices \(\varvec{M} + \varvec{N}\) given the eigenvalues of \(\varvec{M}\) and \(\varvec{N}\).

Theorem 2

(Weyl’s Inequality) Let \(\varvec{M}\), \(\varvec{N}\) be symmetric matrices of dimension \({\mathbb {R}}^{n}\) and let \(\varvec{S} = \varvec{M} + \varvec{N}\). Let \(\alpha _1 \ge \alpha _2 \ge \dots \alpha _n\), \(\beta _1 \ge \beta _2 \ge \dots \beta _n\), and \(\gamma _1 \ge \gamma _2 \ge \dots \gamma _n\) be the eigenvalues of the matrices \(\varvec{M}\), \(\varvec{N}\), and \(\varvec{S}\) respectively. Then,

Proof

See Bhatia [9] Chapter 3. \(\square \)

The result of Theorem 2 can be used to analyze the properties of the eigenvalues of the system of Eq. (14) with increasing values of the HTC \(h_i\).

Theorem 3

Let the system matrix be \(\varvec{A}^l_d = \varvec{A} + \sum _{i=1}^{n_c}h^l_i\varvec{D}_i\), where \(h^l_i\ge 0\) is a sample of the ith parameters defining the \(n_c\) convective boundary conditions. Let \(0 \ge \alpha ^l_1 \ge \alpha ^l_2 \ge \dots \alpha ^l_n \) be the eigenvalues of \(\varvec{A}^l_d\). Let the system matrix be \(\varvec{A}^m_d = \varvec{A} + \sum _{i=1}^{n_c}h^l_i\varvec{D}_i\), where \(h^m_i\) is another sample of the i parameters such that \(h^m_i \ge h^l_i\ge 0\). Let \(0 \ge \alpha ^m_1 \ge \alpha ^m_2 \ge \dots \alpha ^m_n \) be the eigenvalues of \(\varvec{A}^m_d\). Then, \(\alpha ^m_j \le \alpha ^l_j\) for \(j =1 \dots n\).

Proof

The system matrices \(\varvec{A}\) and \(\varvec{D}_i\) are negative semi-definite, i.e. all the eigenvalues are real and non-positive. The result of Theorem 2 can be particularized for \(i=j\) as

Additionally, considering a negative semi-definite matrix \(\varvec{N}\) it can be stated that

as \(\beta _{1} \le 0\). Let \(\delta h_i\) be the difference between \(h^m_i\) and \(h^l_i\) for \(i = 1 \dots n_c\), i.e. \(\varDelta h_i = h^m_i - h^l_i\). From the statement of this theorem, \(\varDelta h_i \ge 0\). The system matrices can be expressed as \(\varvec{A}^m_d = \varvec{A}^l_d + \sum _{i=1}^{n_c}\varDelta h_i\varvec{D}_i\). Applying the particularization of the Theorem 2 to negative semi-definite matrices of Eq. (70), the following result is obtained

\(\square \)

The result of Theorem 3 states that the higher the HTC, the more negative the eigenvalues of the system are. The physical interpretation of this theorem is that the higher the convective heat exchange with the surrounding, the faster the time constants of the system are. On the other side, a system with low HTC evacuates less efficiently the heat and thus has slower time constants.

Theorem 3 can be particularized for the case that the initial HTC are equal to zero, i.e. \(h^l_i = 0\) for all \(i = 1 \dots n_c\). This is the case of the first iteration of the bilinearization, as shown in Eq. (63). The eigenvalues of the system without convective boundary conditions are less negative. Thus, the eigenfrequcies of the selected eigenmodes in \(\varvec{V}_{\mu }\) increase in magnitude after including the convection matrices. Therefore, the reduced system with proposed bilinearization remains valid in the frequency range of interest.

The proposed parametric MOR approach extends the KMS projection basis to include the parametric dependency of the HTC. Therefore, the dimension of the reduced system increases with respect to the non-parametric reduced model. The dimension of the parametric model, \(r_{p}\), depends on the dimension of the number of convective boundary conditions, \(n_c\), the number of iteration, \(n_{me}\), and the dimension of the non-parametric reduced system, r, as

6 Thermo-mechanical coupling

The KMS reduction constructs a projection basis that reduces the thermal system of Eq. (14). The thermal model determines the transient behavior of the system. However, the main output of interest of the thermo-mechanical model is the mechanical deformation of the structure. Therefore, a reduction basis for the system of Eq. (17) and (18) needs to be defined.

The mechanical model only considers the quasi-static response of the structure. Therefore, the reduced subspace for the mechanical system can be constructed using a Krylov subspace with one moment and one expansion point at a low frequency. The reduced system matches the static gain of the mechanical system. In order to construct the projection basis, the number of inputs of Eq. (17) needs to be considered. The number of mechanical inputs is equal to the number of possible independent temperature distributions and the number of external loads. In principle, the number of independent temperatures is n, i.e. the dimension of the original thermal system. However, the reduced thermal model determines the temperature distribution. Thus, the possible temperature distributions are limited to a linear combination of the vectors of the reduction basis \(\varvec{V}\). All the possible linearly independent thermal body forces \(\varvec{F}_{th}\) are

Therefore, the state space representation of Eq. (17) can be expressed in terms of the reduced temperature state \(\varvec{\tilde{x}}\) instead of the full state \(\varvec{x}\) as

where the number of mechanical inputs is the order of the thermal reduced system and the external forces. The reduction basis \(\varvec{V_{mech}}\) for the mechanical system can be calculated using the Krylov subspace method as

where the expansion point \(s_e\) is chosen close to zero. The Krylov subspace matches exactly static response of the system of Eq. (74) for the different temperature distributions considered in the thermal system projected by \(\varvec{V}\).

7 Numerical results

This section illustrates the MOR methods developed in this paper with numerical examples. Figure 2 shows the geometry and FE-discretization of a 3-axis milling machine. The thermo-mechanical model represents the thermal response of the machine tool under internal heat sources, such as the heat dissipated in the linear drives, and external effects, such as fluctuations of the environmental temperature.

Figure 3 shows the geometry and FE-mesh of the table of the machine tool, which can move in Y-direction. The simple geometry under consideration leads to an original system with a small number of DOF, i.e. 4157 thermal DOF. The small size of the original system facilitates the evaluation of its thermal response in order to compare it with the reduced system. The convective boundary conditions of the machine tool table are separated into two regions, as shown in Fig. 3. Each of the convective boundary conditions has an independent value of the HTC, namely \(\text {HTC}_{\text {top}}\) and \(\text {HTC}_{\text {bot}}\). The value of the HTC is considered to be homogeneous spatial distribution over the surfaces. Thus, the function descrbing the spatial distribution of the HTC, described in Eq. 10 and 11 as \(w(\varvec{z})\), is set to 1. Additionally, the bottom of the table is exposed to a heat flux, representing the heat losses of a linear drive. Similarly to the convective boundary conditions, the spatial distribution of the heat flux is also homogeneous.

Convective boundary conditions of the thermal model of the table, i.e. Y-axis, of the machine tool of Fig. 2

The a priori error estimator of Eq. (59) facilitates the selection of the parameters for the KMS reductions. Given a maximum error between the reduced and original system, \(\epsilon \), and a frequency range of interest, \(\omega \in [0, \omega _{max}]\), the error estimator provides the value of \(\omega _m\). The reduction basis includes all eigenvectors with eigenfrequencies below the frequency \(\omega _m\), i.e. \(\omega _{\mu } < \omega _m\). For the FE thermal model under consideration, the following parameters are chosen:

-

\(\epsilon = \) 0.05

-

\(\omega _{max} = \) 0.01 rad/s

FRF of the relative error between reduced and original system for different combinations of values of \(\text {HTC}_{\text {top}}\) and \(\text {HTC}_{\text {bot}}\) in \(\frac{\text {W}}{\text {m}^2\text {K}}\). The input is the environmental temperature and the output the mean temperature of the drive. Comparison with the error estimator of Eq. (59)

The maximum frequency of interest, \(\omega _{max}\), determines the frequency range containing the most relevant part of the response to the considered thermal inputs. For the thermal model of Fig. 3, inputs at a higher excitation frequency than 0.01 rad/s have a small effect on the magnitude of the FRF, adding no relevant information to the thermal response of the reduced-order model. The selected maximum frequency of interest leads to a value of \(\omega _m \) is 0.04367 rad/s. This results in including 97 modes in the KMS projection basis \(\varvec{V}_{KMS}\). The KMS reduction requires additionally an expansion point at a low frequency in order to match the steady state response of the system. For this thermal model, the reductions basis \(\varvec{V}_{KMS}\) considers an expansion point \(s_e = 10^{-8}\) rad/s. The bilinear reduction extends the basis \(\varvec{V}_{KMS}\) with the information about the convective boundary conditions. For the investigated thermal model, the number of parametric convective boundary conditions, \(n_c\), is 2. The number of iterations for the bilinear reduction (\(m_d\)) is set to 2. The resulting reduced model has 496 DOF, which is considerably smaller than the dimension of the original system.

FRF of the relative error between reduced and original system for different combinations of inputs (heat flow at the drives and environment) and outputs (mean temperature at drive and table). The values of \(\text {HTC}_{\text {top}}\) and \(\text {HTC}_{\text {bot}}\) are 4 and 8 \(\frac{\text {W}}{\text {m}^2\text {K}}\) respectively. Comparison with the error estimator of Eq. (59)

In order to evaluate the performance of the reduction method, the FRF of the original and reduced systems are compared. Equation 14 describes the dynamical evolution of the thermal system. For this numerical example, a single input is considered, namely the environmental temperature. The output of the FRF is the temperature measured at the drive of the Y-axis, namely \(y(j\omega )\). For the thermo-mechanical model under consideration, the FRF can be expressed according to Eq. 14 as

where the input convective heat fluxes, \(u_1\) and \(u_2\), of each convective boundary condition can be expressed in terms of the external environmental temperature, \(T_{ext}(j\omega )\), and the values of the HTC. The definition of the FRF leads to a single input single output (SISO) system, where the input is the environmental temperature and the output is measured at the drive.

The FRFs are evaluated for different values of the parameters describing the convective boundary conditions. The error between the reduced and the original system is calculated between \(10^{-5}\) and 1 rad/s according to Eq. (27) for the considered system input and output. Figure 4 depicts the relative error between original and the system reduced by means of the parametric KMS method. The error is negligibly small at low frequencies, as the chosen expansion point matches the steady state response. At high frequencies, the error increases reaching values close to 1. The reduced system succeeds in reproducing the thermal response of the system in the frequency range of interest, i.e. up to 0.01 rad/s. Additionally, Fig. 4 shows that the error estimator of Eq. (59) is an upper bound of the relative error for all frequencies.

Figure 4 shows the reduction errors for one single combination of the input, environmental temperature, and output, mean temperature at the drive. Figure 5 extends the evaluation of the relative error of the reduced and original system to other input and output combinations, fixing the values of \(\text {HTC}_{\text {top}}\) and \(\text {HTC}_{\text {bot}}\) to 4 and 8 \(\frac{\text {W}}{\text {m}^2\text {K}}\) respectively. For the considered numerical example, the relative errors for different inputs and output combinations show more variability than the errors for Fig. 4. The proposed error estimator remains an upper bound of the relative error for the FRFs of the reduction error.

In order to study the thermal response of the reduced system, it is interesting to compare the eigenvalues of the reduced and the original systems. Let \(\omega _i\) be the ith eigenvalue of the system of Eq. (14) and let \({\tilde{\omega }}_i\) be the ith eigenvalue of the system projected to the subspace spanned by the projection basis \(\varvec{V}\) of Eq. (67). The relative error for the ith eigenvalue can be defined as

Figure 6 shows the relative error of the first 90 eigenvalues between the original and reduced system. The eigenvalues of the systems lie in the frequency range of interest, ranging from \(7 \times 10^{-5}\) to 0.042 rad/s. The relative errors are evaluated for different combinations of the HTC, considering the same values of the HTC parameters as in Fig. 4. The relative error of the eigenvalues increases at higher mode numbers, as illustrated in Fig. 6. Theoretically, if the values of the HTC are all zero, i.e. if no convective boundary conditions are applied, the KMS reduction basis matches exactly the eigenvalues of the original system. However, including values of the HTC different than zero results in a difference between the reduced and the original eigenvalues due to the bilinearization process. For the thermal FE model under consideration, the maximum relative error between the eigenvalues of the reduced and original system remain below \(2 \times 10 ^{-5}\). Therefore, the reduced system with parametric convective boundary conditions succeeds in approximating the eigenvalues of the original system for different combinations of values of the HTC.

The parametric KMS method creates a reduced thermal model, which reproduces the dynamics of the thermal behavior. However, the thermally induced structural deformation is the output of interest of the thermo-mechanical models of machine tools. In order to evaluate the mechanical response of the model of Fig. 3, the mechanical boundary conditions are defined as follows

-

The structure is fixed at the location of the guide carriages

-

The stiffness value is \(10^{10}\) \(\frac{\text {N}}{\mathrm{m}}\) in all directions

Equation (75) defines the projection basis for the thermo-mechanical system. The expansion point \(s_0\) for the Krylov subspace of the mechanical system is 30 rad/s. Starting from an original mechanical system with 12471 DOF, the coupling to thermal parametric reduced system results in a thermo-mechanical reduced system of dimension 532.

In order to evaluate the accuracy of the reduction, the FRF of the thermo-mechanical the original and reduced system are compared. The thermal input is the fluctuation of the environmental temperature, which corresponds to the thermal input shown in Fig. 4. The mechanical outputs are the linear displacements in X-, Y-, and Z-direction measured at the center of the machine tool table, where the workpiece is placed. The relative error between the two systems are calculated in the frequency range between \(10^{-5}\) and 1 rad/s. Figure 7 shows the FRFs of the relative error between the original and reduced systems for different values of the HTC, considering the displacement in Y-direction as the output of the FRF. Figure 7 illustrates that the resulting relative errors between reduced and original system remain below 0.01 for up to the maximum frequency of interest, 0.01 rad/s. Thus, the thermo-mechanical coupled reduced system reproduces accurately the response of the original system for different values of the HTC.

Figure 8 extends the evaluation to different outputs, namely the deviations in X- , Y-, and Z-direction. The mechanical response of the system is evaluated for a value of the parameters \(\text {HTC}_{\text {top}}\) and \(\text {HTC}_{\text {bot}}\) of 4 and 8 \(\frac{\text {W}}{\text {m}^2\text {K}}\) respectively. Figure 8 illustrates that the relative error of the mechanical response remains below 0.01 for the frequency range of interest. Therefore, this numerical example illustrates that the reduced thermo-mechanical system captures the response of the original system.

8 Conclusions

This work presents a MOR approach to deal with the parametric dependency of the convective boundary conditions in weakly coupled thermo-mechanical models. The KMS method proposes a reduction basis combining the information of the Krylov subspace basis with an expansion point at a low frequency and the thermal eigenmodes. The reduced system reproduces the steady state response of the system as well as the transient behavior up a to a certain frequency of interest. This method is especially suited for thermal models of machine tools and other similar mechatronic systems, which show a decaying amplitude of the response at higher frequencies. This work proposes an upper bound of the magnitude of the reduction error. The error bound provides an a-priori estimation of the KMS reduction error and determines the maximum eigenfrequecy to be included in the reduction basis so that the error remains below a certain value for the frequency range of interest.

Among the different parameters describing thermal models of machine tools, this work focuses on the HTC, which defines the convective heat exchange between the structure and the surrounding fluid media. This paper proposes a parametric MOR method to enable the modification of the value of the HTC after reduction. The KMS reduction basis is extended using the concept of system bilinearization. The numerical results show that the parametrically reduced model reproduces the thermal response of the original system for different values of the HTC. Additionally, the parametric reduction retains the thermal eigenfrequencies of the original model. On one hand, this MOR method facilitates the use of a unique model for different values of the HTC, i.e. a parametric Linear Time Invariant (LTI) system. On the other hand, the MOR method enables the modification of the HTC during the simulation, i.e Linear Time Variant (LVT) system. The latter option is of special practical interest, as the boundary conditions might vary over time.

The main objective of thermal error models of machine tools is the prediction of the thermally induced deviations. Therefore, the model needs to evaluate structural deformations associated to inhomogeneous, time-varying temperature distributions. This work presents a method to couple a reduced thermal and mechanical system. The developed coupling method creates a dedicated reduced mechanical system. On one hand, the mechanical system describes the response to any mechanical input, e.g. preloads or gravity, for any combination of the position of the axes. On the other hand, the mechanical system provides the response to any temperature distribution computed by the reduced system. The numerical results show that the reduced system succeeds in capturing the mechanical response of the original system for several values of the HTC and different outputs. The relative error of the reduction remains below 0.01 for the reduced thermo-mechanical system in the frequency range of interest.

The developed MOR methods are implemented in a simulation software MORe [15], which is designed to facilitate the development of physical models of machine tools. Future work will investigate the theoretical error bounds of the KMS method for arbitrary expansion points as well as generalize the developed error estimator to different input and output combinations. Future research will develop thermo-mechanical models of the whole machine tool assembly with parametric convective boundary conditions. These models will evaluate the thermo-mechanical response of machine tools to varying conditions in the surrounding environment.

References

Altintas Y, Brecher C, Weck M, Witt S (2005) Virtual machine tool. CIRP Ann 54(2):115–138. https://doi.org/10.1016/S0007-8506(07)60022-5

Amsallem D, Farhat C (2008) Interpolation method for adapting reduced-order models and application to aeroelasticity. AIAA J 46:1803–1813. https://doi.org/10.2514/1.35374

Antoulas AC (2005) Approximation of large-scale dynamical systems. Society for Industrial and Applied Mathematics, Philadelphia. https://doi.org/10.1137/1.9780898718713

Bai Z, Skoogh D (2006) A projection method for model reduction of bilinear dynamical systems. Linear Algebra Appl 415(2):406–425. https://doi.org/10.1016/j.laa.2005.04.032 Special Issue on Order Reduction of Large-Scale Systems

Bathe K (1982) Finite element procedures in engineering analysis. Prentice Hall, Upper Saddle River. https://doi.org/10.1002/nag.1610070412

Baur U, Benner P, Greiner A, Korvink J, Lienemann J, Moosmann C (2011) Parameter preserving model order reduction for MEMS applications. Math Comput Model Dyn Syst 17(4):297–317. https://doi.org/10.1080/13873954.2011.547658

Benner P, Breiten T (2012) Interpolation-based \({\cal{h}}_2\)-model reduction of bilinear control systems. SIAM J Matrix Anal Appl 33(3):859–885. https://doi.org/10.1137/110836742

Benner P, Gugercin S, Willcox K (2015) A survey of projection-based model reduction methods for parametric dynamical systems. SIAM Rev 57(4):483–531. https://doi.org/10.1137/130932715

Bhatia R (1997) Matrix analysis, vol 169. Springer, Berlin. https://doi.org/10.1007/978-1-4612-0653-8

Breiten T, Damm T (2010) Krylov subspace methods for model order reduction of bilinear control systems. Syst Control Lett 59(8):443–450. https://doi.org/10.1016/j.sysconle.2010.06.003

Bruns A, Benner P (2015) Parametric model order reduction of thermal models using the bilinear interpolatory rational krylov algorithm. Math Comput Model Dyn Syst 21(2):103–129. https://doi.org/10.1080/13873954.2014.924534

Bruns T (2007) Topology optimization of convection-dominated, steady-state heat transfer problems. Int J Heat Mass Transf 50(15):2859–2873. https://doi.org/10.1016/j.ijheatmasstransfer.2007.01.039

Bryan J (1990) International status of thermal error research (1990). CIRP Ann Manuf Technol 39(2):645–656. https://doi.org/10.1016/S0007-8506(07)63001-7

Herzog R, Riedel I, Uciński D (2018) Optimal sensor placement for joint parameter and state estimation problems in large-scale dynamical systems with applications to thermo-mechanics. Optim Eng 19(3):591–627. https://doi.org/10.1007/s11081-018-9391-8

inspire AG: MORe (2020). https://www.more-simulations.ch/

Lang N, Saak J, Benner P (2014) Model order reduction for systems with moving loads. at-Automatisierungstechnik 62(7):512–522. https://doi.org/10.1515/auto-2014-1095

Lee J, Cho M (2017) An interpolation-based parametric reduced order model combined with component mode synthesis. Comput Methods Appl Mech Eng 319:258–286. https://doi.org/10.1016/j.cma.2017.02.010

Mayr J, Jedrzejewski J, Uhlmann E, Donmez MA, Knapp W, Härtig F, Wendt K, Moriwaki T, Shore P, Schmitt R, Brecher C, Würz T, Wegener K (2012) Thermal issues in machine tools. CIRP Ann Manuf Technol 61(2):771–791. https://doi.org/10.1016/j.cirp.2012.05.008

Mian NS, Fletcher S, Longstaff A, Myers A (2013) Efficient estimation by FEA of machine tool distortion due to environmental temperature perturbations. Precis Eng 37(2):372–379. https://doi.org/10.1016/j.precisioneng.2012.10.006

Panzer H, Mohring J, Eid R, Lohmann B (2010) Parametric model order reduction by matrix interpolation. Automatisierungstechnik 58:475–484. https://doi.org/10.1524/auto.2010.0863

Phillips JR (2003) Projection-based approaches for model reduction of weakly nonlinear, time-varying systems. IEEE Trans Comput Aided Des Integr Circuits Syst 22(2):171–187. https://doi.org/10.1109/TCAD.2002.806605

Shi X, Zhu K, Wang W, Fan L, Gao J (2018) A thermal characteristic analytic model considering cutting fluid thermal effect for gear grinding machine under load. Int J Adv Manuf Technol 99(5):1755–1769. https://doi.org/10.1007/s00170-018-2562-0

Spescha D (2018) Framework for efficient and accurate simulation of the dynamics of machine tools. Ph.D. thesis, TU Clausthal. https://doi.org/10.21268/20181119-132644

Weng L, Gao W, Lv Z, Zhang D, Liu T, Wang Y, Qi X, Tian Y (2018) Influence of external heat sources on volumetric thermal errors of precision machine tools. Int J Adv Manuf Technol 99(1):475–495. https://doi.org/10.1007/s00170-018-2462-3

Acknowledgements

The authors would like to thank the Swiss Innovation Agency (Innosuisse) and the Swiss National Science Foundation (SNSF) for their financial support.

Funding

Open access funding provided by Swiss Federal Institute of Technology Zurich.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hernández-Becerro, P., Spescha, D. & Wegener, K. Model order reduction of thermo-mechanical models with parametric convective boundary conditions: focus on machine tools. Comput Mech 67, 167–184 (2021). https://doi.org/10.1007/s00466-020-01926-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00466-020-01926-x