Abstract

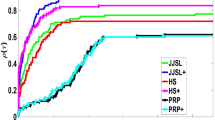

The method of moving asymptotes is an efficient tool for solving structural optimization problems. In this paper, a new scaled three-term conjugate gradient method is proposed by combining the moving asymptote technique with the conjugate gradient method. The scaling parameters are computed using the idea of moving asymptotes. It is proved that the generated search directions always satisfy the sufficient descent condition, independently of the line search. We establish the global convergence of the proposed method under an Armijo-type line search. Numerical results show the efficiency of the new algorithm for solving large-scale unconstrained optimization problems.
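To make the two ingredients named in the abstract concrete (a three-term search direction that is a descent direction regardless of the step size, and an Armijo-type backtracking line search), here is a minimal Python sketch. It is not the scaled method proposed in the paper: the moving-asymptote scaling parameters are not given on this page, so the sketch substitutes the well-known three-term Polak–Ribière–Polyak direction of Zhang, Zhou and Li, for which g_k^T d_k = -||g_k||^2 holds by construction. The function names `dot` and `three_term_cg`, and the parameters `delta`, `rho`, `tol` and `max_iter`, are illustrative assumptions.

```python
# Illustrative sketch only: a generic three-term conjugate gradient iteration
# (three-term PRP form) with Armijo backtracking. The paper's moving-asymptote
# scaling is NOT reproduced here; all names and defaults are assumptions.

def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def three_term_cg(f, grad, x0, tol=1e-6, max_iter=5000, delta=1e-4, rho=0.5):
    """Return the final iterate and the relative gaps |g^T d + ||g||^2| / ||g||^2."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]  # first direction: steepest descent
    gaps = []
    for _ in range(max_iter):
        gnorm2 = dot(g, g)
        if gnorm2 ** 0.5 <= tol:
            break
        gd = dot(g, d)
        # For this direction, g^T d equals -||g||^2 up to rounding error.
        gaps.append(abs(gd + gnorm2) / gnorm2)

        # Armijo backtracking: shrink alpha until sufficient decrease holds
        # (the lower bound on alpha is only a safeguard against endless loops).
        alpha, fx = 1.0, f(x)
        while alpha > 1e-12:
            trial = [xi + alpha * di for xi, di in zip(x, d)]
            if f(trial) <= fx + delta * alpha * gd:
                break
            alpha *= rho

        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        y = [a - b for a, b in zip(g_new, g)]

        # Three-term update: d = -g_new + beta * d - theta * y.
        beta = dot(g_new, y) / gnorm2
        theta = dot(g_new, d) / gnorm2
        d = [-gn + beta * di - theta * yi for gn, di, yi in zip(g_new, d, y)]
        x, g = x_new, g_new
    return x, gaps


if __name__ == "__main__":
    # Small convex quadratic used purely as a demonstration problem.
    f = lambda x: 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)
    grad = lambda x: [x[0], 10.0 * x[1]]
    x_final, gaps = three_term_cg(f, grad, [3.0, -2.0])
    print("final point:", x_final)
    print("largest relative descent gap:", max(gaps))
```

The recorded gaps stay at rounding-error level because the `beta` and `theta` terms cancel exactly in g_k^T d_k, which is what "sufficient descent independent of the line search" means in practice. The scaled method in the paper establishes an analogous property for its own direction, with scaling parameters derived from moving asymptotes.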

Cite this article

Faramarzi, P., Amini, K. A scaled three-term conjugate gradient method for large-scale unconstrained optimization problem. Calcolo 56, 35 (2019). https://doi.org/10.1007/s10092-019-0333-4

DOI: https://doi.org/10.1007/s10092-019-0333-4

Keywords

- Scaling parameter

- Three-term conjugate gradient method

- Moving asymptotes

- Sufficient descent property

- Armijo-type line search