Figure 1.

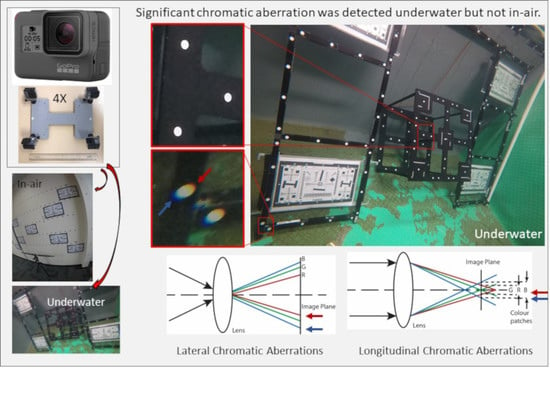

Longitudinal (left) and lateral (right) chromatic aberrations. Figure taken from [11].

Figure 2.

Camera frame with GoPro housings. The ruler length is 30 cm.

Figure 3.

Calibration setup: underwater (left) and in-air (right).

Figure 4.

Comparison of the CMB calculated principal distances for the four different cameras (rows) in the underwater (left column) and the in-air environment (right column). The order in all diagrams is blue band, green band and red band (indicated by colour).

Figure 5.

Comparison of the CMB calculated principal point offsets for the four different cameras (rows) in the underwater (left column) and the in-air environment (right column). The colours indicate the results for the different bands (blue, green, red).

Figure 6.

Comparison of the influence of the CMB calculated radial lens distortion parameters for the four different cameras (rows) in the underwater (left column) and the in-air environment (right column). The colours indicate the results for the different bands (blue, green, red). Black indicates that the bands are so close to each other that the differences cannot be shown. The coloured non-italic numbers indicate the distortion in µm at the maximum radial distance. The italic numbers indicate the distortion in µm considering the scale change from underwater to in-air.

Figure 7.

(Left) lateral CA visible in the underwater image. The centre of the image is to the bottom right. (Right) no CA is visible at points located at the centre of the image.

Figure 8.

Comparison of the influence of the calculated decentering lens distortion parameters for the four different cameras (rows) in the underwater (left column) and the in-air environment (right column). The colours indicate the results for the different bands (blue, green, red). Black indicates that the bands are so close to each other that the differences cannot be shown. The numbers indicate the distortion in µm at the maximum radial distance.

Figure 9.

Comparison of the calculated principal distances (right column) and principal point offsets (left column) for the three different adjustment constraints (rows) for the camera FL calibrated underwater. The colours indicate the results for the different bands (blue, green, red).

Figure 10.

Comparison of the influence of the radial (right column) and decentering (left column) lens distortion parameters for three different adjustment constraints (rows) for the camera FL underwater. The colours indicate the results for the different bands (blue, green, red). The numbers indicate the distortion in µm at the maximum radial distance.

Figure 11.

Comparison of the calculated principal distances for the camera FL underwater (left column) and the camera BL (right column) for all three repeats (rows) using the CMB adjustment. The order in all diagrams is blue band, green band and red band (indicated by colour).

Figure 12.

Comparison of the calculated principal point offsets for the camera FL underwater (left column) and the camera BL (right column) for all three repeats (rows) using the CMB adjustment. The colours indicate the results for the different bands (blue, green, red).

Figure 13.

Comparison of the influence of the calculated radial lens distortion parameters for the camera FL underwater (left column) and the camera BL (right column) for all three repeats (rows) using the CMB adjustment. The colours indicate the results for the different bands (blue, green, red). Black indicates that the bands are so close to each other that the differences cannot be shown. The coloured non-italic numbers indicate the distortion in µm at the maximum radial distance. The italic numbers indicate the distortion in µm considering the scale change from underwater to in-air.

Figure 14.

Comparison of the influence of the calculated decentering lens distortion parameters for the camera FL underwater (left column) and the camera BL (right column) for all three repeats (rows) using the CMB adjustment. The colours indicate the results for the different bands (blue, green, red). The numbers indicate the distortion in µm at the maximum radial distance.

Table 1.

Overview of the adjustment types used (left column), the abbreviations used in this paper (centre column) and a brief explanation of each (right column).

| Adjustment type | Abbreviation | Explanation |
|---|---|---|
| Independent | IDP | No constraints are added. All bands are self-calibrated independently. |
| Combined | CMB | The locations of common object points are constrained to be the same. |
| Exterior Parameter Constraint | EPC | The camera locations and orientations (EOPs) of the different spectral band images are constrained in addition to the CMB constraint. |

Table 2.

Least-squares adjustment results of the independent (IDP) adjustment for all in-air datasets, showing for each dataset the number of images, the number of object points observed, the degrees of freedom (DoF) and the root mean square (RMS) values. Only one repeat of BL-A is shown. All other BL-A repeats achieved similar results.

| In-Air | FL-A (R) | FL-A (G) | FL-A (B) | BL-A (R) | BL-A (G) | BL-A (B) |
|---|---|---|---|---|---|---|
| #images | 10 | 10 | 10 | 10 | 10 | 10 |
| #object pts | 159 | 159 | 159 | 155 | 157 | 158 |
| DoF | 1484 | 1440 | 1430 | 1510 | 1478 | 1455 |
| RMS (µm) | 1.06 | 0.94 | 0.94 | 0.90 | 0.88 | 0.83 |

| In-Air | FR-A (R) | FR-A (G) | FR-A (B) | BR-A (R) | BR-A (G) | BR-A (B) |
|---|---|---|---|---|---|---|
| #images | 10 | 10 | 10 | 10 | 10 | 10 |
| #object pts | 160 | 159 | 158 | 158 | 159 | 158 |
| DoF | 1525 | 1618 | 1501 | 1395 | 1404 | 1451 |
| RMS (µm) | 0.77 | 0.80 | 0.77 | 0.79 | 0.79 | 0.84 |

Table 3.

Least-squares adjustment results of the independent (IDP) adjustment for all underwater datasets, showing for each dataset the number of images, the number of object points observed, the degrees of freedom (DoF) and the RMS values. Only one repeat of FL-W is shown. All other FL-W repeats achieved similar results.

| Water | FL-W (R) | FL-W (G) | FL-W (B) | BL-W (R) | BL-W (G) | BL-W (B) |
|---|---|---|---|---|---|---|
| #images | 15 | 15 | 15 | 14 | 14 | 14 |
| #object pts | 139 | 139 | 139 | 147 | 148 | 144 |
| DoF | 1498 | 1482 | 1460 | 1354 | 1553 | 1323 |
| RMS (µm) | 1.65 | 1.63 | 1.73 | 1.27 | 1.31 | 1.34 |

| Water | FR-W (R) | FR-W (G) | FR-W (B) | BR-W (R) | BR-W (G) | BR-W (B) |
|---|---|---|---|---|---|---|
| #images | 14 | 14 | 14 | 14 | 14 | 14 |
| #object pts | 140 | 136 | 140 | 141 | 140 | 146 |
| DoF | 1631 | 1513 | 1551 | 1560 | 1555 | 1531 |
| RMS (µm) | 1.32 | 1.22 | 1.36 | 1.41 | 1.34 | 1.87 |

Table 4.

Summary of the maximum correlation coefficients (in absolute values) from the 12 in-air and 12 underwater independent self-calibration adjustments.

| Parameter Group | Maximum Correlation (In Air) | Maximum Correlation (In Water) |
|---|---|---|
| Principal point offset and perspective centre coordinates | 0.86 | 0.80 |
| Principal point offset and rotation angles | 0.79 | 0.78 |
| Principal distance and perspective centre coordinates | 0.63 | 0.81 |
| Radial lens distortion coefficients and perspective centre coordinates | 0.09 | 0.28 |
| Radial lens distortion coefficients and principal distance | 0.73 | 0.34 |
| Decentering lens distortion coefficients and rotation angles | 0.40 | 0.77 |

Table 5.

Principal distances and their standard deviations from the CMB adjustments.

| Medium | Camera | Red (mm) | σ Red (µm) | Green (mm) | σ Green (µm) | Blue (mm) | σ Blue (µm) |
|---|---|---|---|---|---|---|---|
| Underwater | BL | 3.633 | 0.8 | 3.631 | 0.8 | 3.627 | 0.8 |
| | BR | 3.569 | 0.9 | 3.567 | 0.9 | 3.563 | 0.9 |
| | FL | 3.569 | 1.0 | 3.568 | 1.0 | 3.565 | 1.0 |
| | FR | 3.570 | 0.8 | 3.570 | 0.9 | 3.566 | 0.8 |
| In-Air | BL | 2.684 | 0.5 | 2.684 | 0.5 | 2.683 | 0.5 |
| | BR | 2.675 | 0.5 | 2.675 | 0.5 | 2.675 | 0.5 |
| | FL | 2.674 | 0.5 | 2.673 | 0.5 | 2.674 | 0.5 |
| | FR | 2.674 | 0.4 | 2.674 | 0.4 | 2.674 | 0.4 |
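
Several captions refer to a scale change from underwater to in-air. As a rough illustration only, the sketch below divides each underwater principal distance in Table 5 by its in-air counterpart. The function name `scale_ratios` and the dictionary layout are hypothetical, and it is an assumption that a plain ratio of principal distances is a reasonable proxy for that scale change; the exact scaling behind the italic numbers in the figures is not stated here.

```python
# Principal distances in mm, copied from Table 5 (CMB adjustments).
underwater = {
    "BL": {"red": 3.633, "green": 3.631, "blue": 3.627},
    "BR": {"red": 3.569, "green": 3.567, "blue": 3.563},
    "FL": {"red": 3.569, "green": 3.568, "blue": 3.565},
    "FR": {"red": 3.570, "green": 3.570, "blue": 3.566},
}
in_air = {
    "BL": {"red": 2.684, "green": 2.684, "blue": 2.683},
    "BR": {"red": 2.675, "green": 2.675, "blue": 2.675},
    "FL": {"red": 2.674, "green": 2.673, "blue": 2.674},
    "FR": {"red": 2.674, "green": 2.674, "blue": 2.674},
}


def scale_ratios(uw, air):
    """Return the underwater / in-air principal distance ratio per camera and band.

    Hypothetical helper: assumes both inputs share the same camera and band keys.
    """
    return {
        cam: {band: uw[cam][band] / air[cam][band] for band in uw[cam]}
        for cam in uw
    }


ratios = scale_ratios(underwater, in_air)
for cam, bands in ratios.items():
    print(cam, {band: round(ratio, 3) for band, ratio in bands.items()})
```

With the Table 5 values, every ratio falls between 1.33 and 1.36, which is close to the refractive index of water (about 1.33).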

Table 6.

Principal point standard deviations from the CMB adjustments.

| Medium | Camera | Red σxp | Red σyp | Green σxp | Green σyp | Blue σxp | Blue σyp |
|---|---|---|---|---|---|---|---|
| Underwater | BL | 1.0 | 1.0 | 0.9 | 1.0 | 1.0 | 1.1 |
| | BR | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |
| | FL | 1.2 | 1.4 | 1.2 | 1.4 | 1.3 | 1.5 |
| | FR | 1.0 | 1.1 | 1.0 | 1.1 | 1.0 | 1.1 |
| In-Air | BL | 0.3 | 0.5 | 0.3 | 0.4 | 0.3 | 0.4 |
| | BR | 0.3 | 0.4 | 0.3 | 0.4 | 0.3 | 0.4 |
| | FL | 0.3 | 0.5 | 0.3 | 0.5 | 0.3 | 0.5 |
| | FR | 0.2 | 0.3 | 0.2 | 0.3 | 0.3 | 0.3 |

Table 7.

EOP differences (min, max, RMS) of all cameras underwater (UW) and in-air when applying the CMB adjustment.

| Medium | Statistic | BL Position (mm) | BL Angles (dd) | BR Position (mm) | BR Angles (dd) | FL Position (mm) | FL Angles (dd) | FR Position (mm) | FR Angles (dd) |
|---|---|---|---|---|---|---|---|---|---|
| UW | Min | −4.1 | −0.090 | −4.4 | −0.129 | −4.1 | −0.113 | −4.3 | −0.024 |
| | Max | 3.8 | 0.079 | 3.5 | 0.139 | 3.2 | 0.035 | 3.9 | 0.076 |
| | RMS | 1.8 | 0.031 | 1.9 | 0.061 | 1.7 | 0.030 | 1.7 | 0.024 |
| Air | Min | −0.2 | −0.019 | −0.2 | −0.016 | −0.3 | −0.029 | −0.2 | −0.018 |
| | Max | 0.2 | 0.038 | 0.3 | 0.010 | 0.4 | 0.024 | 0.3 | 0.006 |
| | RMS | 0.1 | 0.015 | 0.1 | 0.005 | 0.1 | 0.010 | 0.1 | 0.008 |

Table 8.

Mean EOP precision of all cameras underwater and in-air when applying the CMB adjustment.

| Medium | Parameter | BL | BR | FL | FR |
|---|---|---|---|---|---|
| Underwater | Xc (mm) | 0.3 | 0.4 | 0.4 | 0.3 |
| | Yc (mm) | 0.4 | 0.4 | 0.5 | 0.4 |
| | Zc (mm) | 0.3 | 0.4 | 0.4 | 0.4 |
| | omega (dd) | 0.019 | 0.022 | 0.025 | 0.022 |
| | phi (dd) | 0.018 | 0.020 | 0.022 | 0.019 |
| | kappa (dd) | 0.010 | 0.012 | 0.014 | 0.014 |
| Air | Xc (mm) | 0.1 | 0.1 | 0.1 | 0.1 |
| | Yc (mm) | 0.1 | 0.1 | 0.1 | 0.1 |
| | Zc (mm) | 0.1 | 0.1 | 0.1 | 0.1 |
| | omega (dd) | 0.014 | 0.009 | 0.010 | 0.008 |
| | phi (dd) | 0.008 | 0.008 | 0.008 | 0.006 |
| | kappa (dd) | 0.011 | 0.006 | 0.007 | 0.005 |

Table 9.

Mean tie point precision (3D). All results are shown in mm.

| Case | IDP | CMB | EPC |
|---|---|---|---|
| 6EOP | 5.6 | 3.6 | 3.5 |
| 7EOP | 9.2 | 6.0 | 5.8 |

Table 10.

The 6EOP accuracy assessment results. All results are shown in mm.

| Dataset | 3D | IDP (B) | IDP (G) | IDP (R) | CMB | EPC |
|---|---|---|---|---|---|---|
| BL | MEAN | 1.1 | 0.8 | 0.6 | 1.3 | 0.6 |
| | RMS | 1.5 | 1.2 | 1.2 | 1.5 | 0.9 |
| | MIN | 2.7 | 2.0 | 1.7 | 2.3 | 1.5 |
| | MAX | 0.8 | 1.9 | 2.6 | 0.7 | 0.6 |
| BR | MEAN | 4.0 | 0.9 | 0.9 | 1.3 | 1.3 |
| | RMS | 4.9 | 1.5 | 1.4 | 1.5 | 1.5 |
| | MIN | 6.5 | 2.9 | 2.3 | 2.1 | 2.0 |
| | MAX | 2.9 | 1.0 | 0.8 | 2.2 | 2.1 |
| FL1 | MEAN | 6.3 | 6.5 | 9.9 | 7.8 | 7.5 |
| | RMS | 6.9 | 7.0 | 10.6 | 8.3 | 8.0 |
| | MIN | 7.6 | 8.0 | 12.3 | 9.3 | 9.2 |
| | MAX | 6.3 | 6.7 | 7.4 | 7.0 | 6.4 |
| FL2 | MEAN | 12.8 | 7.6 | 8.0 | 10.9 | 8.0 |
| | RMS | 13.0 | 7.7 | 8.2 | 11.0 | 8.1 |
| | MIN | 14.8 | 8.6 | 10.1 | 12.7 | 9.5 |
| | MAX | 12.1 | 7.1 | 9.2 | 9.8 | 7.4 |

Table 11.

The 7EOP accuracy assessment results. All results are shown in mm.

| Dataset | 3D | IDP (B) | IDP (G) | IDP (R) | CMB | EPC |
|---|---|---|---|---|---|---|
| BL | MEAN | 2.7 | 3.6 | 1.5 | 2.8 | 2.4 |
| | RMS | 3.1 | 4.0 | 1.9 | 3.1 | 2.7 |
| | MIN | 2.9 | 3.4 | 1.8 | 2.9 | 2.3 |
| | MAX | 4.0 | 5.7 | 3.8 | 4.0 | 3.4 |
| BR | MEAN | 16.9 | 7.9 | 7.3 | 10.6 | 10.0 |
| | RMS | 17.3 | 8.1 | 7.5 | 10.8 | 10.2 |
| | MIN | 19.5 | 9.6 | 8.7 | 11.2 | 10.6 |
| | MAX | 13.2 | 7.4 | 6.6 | 9.8 | 9.3 |
| FL1 | MEAN | 3.7 | 4.4 | 8.9 | 5.1 | 3.7 |
| | RMS | 5.0 | 5.3 | 11.1 | 6.7 | 5.3 |
| | MIN | 2.2 | 4.7 | 10.1 | 4.4 | 2.9 |
| | MAX | 7.4 | 6.4 | 17.5 | 11.1 | 9.1 |
| FL2 | MEAN | 10.2 | 4.0 | 2.7 | 7.8 | 6.2 |
| | RMS | 11.9 | 5.2 | 3.8 | 9.4 | 7.3 |
| | MIN | 10.7 | 4.9 | 4.5 | 8.7 | 5.8 |
| | MAX | 19.8 | 9.0 | 6.5 | 15.4 | 12.3 |