Abstract

Many models of evolution are implicitly causal processes. Features such as causal feedback between evolutionary variables and evolutionary processes acting at multiple levels, though, mean that conventional causal models miss important phenomena. We develop here a general theoretical framework for analyzing evolutionary processes drawing on recent approaches to causal modeling developed in the machine-learning literature, which have extended Pearls do-calculus to incorporate cyclic causal interactions and multilevel causation. We also develop information-theoretic notions necessary to analyze causal information dynamics in our framework, introducing a causal generalization of the Partial Information Decomposition framework. We show how our causal framework helps to clarify conceptual issues in the contexts of complex trait analysis and cancer genetics, including assigning variation in an observed trait to genetic, epigenetic and environmental sources in the presence of epigenetic and environmental feedback processes, and variation in fitness to mutation processes in cancer using a multilevel causal model respectively, as well as relating causally-induced to observed variation in these variables via information theoretic bounds. In the process, we introduce a general class of multilevel causal evolutionary processes which connect evolutionary processes at multiple levels via coarse-graining relationships. Further, we show how a range of fitness models can be formulated in our framework, as well as a causal analog of Prices equation (generalizing the probabilistic Rice equation), clarifying the relationships between realized/probabilistic fitness and direct/indirect selection. Finally, we consider the potential relevance of our framework to foundational issues in biology and evolution, including supervenience, multilevel selection and individuality. Particularly, we argue that our class of multilevel causal evolutionary processes, in conjunction with a minimum description length principle, provides a conceptual framework in which identification of multiple levels of selection may be reduced to a model selection problem.

Similar content being viewed by others

Introduction

Causality is typically invoked in accounts of evolutionary processes. For instance, for a variant to be subject to direct selection, it is necessary that it has a causal impact on fitness. The role of causality is made explicit in axiomatic accounts of evolution (Rice 2004). Further, the formal framework of Pearl’s ‘do’-calculus (Pearl 2009) has been used explicitly in analyzing Mendelian Randomization (Lawlor et al. 2008), the relationship between kin and multilevel selection (Okasha 2015), and information derived from genetic and epigenetic sources in gene expression (Griffith and Koch 2014b). A number of features of evolutionary processes, however, limit the potential for direct formalization in the ‘do’-calculus framework, which requires causal relationships to be specified by a directed acyclic graph (DAG), and cannot represent causal processes at multiple levels. In contrast, cyclical causal interactions are ubiquitous in natural processes, for instance in regulatory and signaling networks which lead to high levels of epistasis in the genotype-phenotype map (Wagner 2015). Further examples of cyclical causal interactions arise through environmental feedback, both in the generation of traits, leading to an extended genotype-environment-phenotype map (Houle et al. 2010), and across generations in the form of niche construction (Krakauer et al. 2008). Hierarchy is also ubiquitous in evolution, and many phenomena, such as multicellularity and eusociality, seem to require a multilevel selection framework for analysis, implicitly invoking causal processes at multiple levels (Okasha 2006). Such a framework would also seem necessary in analyzing major transitions in evolution (Calcott and Sterelny 2011).

A number of frameworks have been proposed in the machine-learning literature for extending Pearl’s ‘do’-calculus to allow for cyclic causal interactions. These include stochastic models with discrete variables (Itani et al. 2010), and deterministic (Mooij et al. 2013) and stochastic (Rubenstein et al. 2017) models with continuous variables. Further, approaches have been introduced for analyzing causal processes at multiple levels using the ‘do’-calculus (Chalupka et al. 2016; Rubenstein et al. 2017). In Rubenstein et al. (2017), both of these phenomena are related through the notion of a transformation, which is a mapping between causal models which preserves causal structure. Coarse-graining is a particular kind of transformation, special cases of which involve mapping a causal model over micro-level variables into one over macro-level variables, and mapping a directed causal model which is extended across time into a cyclical model which summarizes its possible equilibrium states (subject to interventions).

In addition, the ‘do’-calculus has been combined with information theory in order to define notions of information specifically relevant to causal models, such as information flow (Ay and Polani 2008), effective information (Hoel 2017), causal specificity (Griffith and Koch 2014b) and causal strength (Janzing et al. 2013). Although not explicitly cast in causal terms, there has also been much interest in defining non-negative multivariate decompositions of the mutual information between a dependent variable and a set of independent variables, which may be collectively described as types of Partial Information Decomposition (PID) (Bertschinger et al. 2014; Griffith and Koch 2014b; Williams and Beer 2010). Such definitions, however, can only be applied in causal models with a DAG structure, leaving open the question of how causal information should be defined and decomposed in a system with cyclic interactions.

Motivated by the above, we propose a general causal framework for formulating models of evolutionary processes which allows for cyclic interactions between evolutionary variables and multiple causal levels, drawing on the transformation framework of Rubenstein et al. (2017) as described above. Further, we propose a causal generalization of the Partial Information Decomposition (Causal Information Decomposition, or CID) appropriate for such cyclic causal models, and show that our definition has a number of desirable properties and can be related to previous measures of causal information. We analyze a number of specific evolutionary models within our framework, including first, a model with epigenetics and environmental feedback, and second, a model of multilevel selection which we apply to the particular cases of group selection and selection between mutational processes in cancer. We analyze the CID in the context of both models, and demonstrate the conditions under which bounds can be derived between components of the CID and components of the PID associated with the observed distribution. Finally, we discuss the causal interpretation of Price’s equation and related results in our causal framework. In general, our analysis is intended both to help clarify conceptual issues regarding the role of causation in the models analyzed, as well as to aid in the interpretation of data when the assumptions of these (or similar) models are adopted, via the bounds introduced.

We begin in “Cyclic and multilevel causality in biology” by providing an overview of the issues related to cyclic and multilevel causality in evolution which motivate our approach, and informally introduce the Discrete Causal Network (DCN) and Causal Information Decomposition (CID) frameworks which form the basis of subsequent analyses (full definitions are given in Appendix A). “Causal evolutionary processes” then outlines our general framework for Causal Evolutionary Processes (CEP). “Model 1: Genetics, epigenetics and environmental feedback” and “Model 2: Multilevel selection” analyze specific models of epigenetics with environmental feedback and multilevel selection within this framework respectively, and “Causal interpretation of Price’s equation and related results” provides a causal interpretation of Price’s equation and outlines related information-theoretic results. We then concludes with a discussion (“Discussion”), including the potential relevance of our framework to foundational issues in the philosophy of biology and evolution.

Summary of models. A Shows the relationships between the main models defined in the paper, where the arrows point from larger to smaller model classes, the latter being a special case (subset) of the former (see Appendix A for DCN and DDCN definitions). B Shows a schematic of a Causal Evolutionary Process, showing a population of size \(N=3\) at two time steps (nodes representing all variables associated with an individual at time t). \(\phi\), e, w and \(\pi\) represent phenotype, environment, fitness and parental map respectively, where the latter is also represented explicitly by the dotted arrows, which connect each individual at \(t=1\) to its parent at \(t=0\). C and D illustrate the CTCM* and \(\hbox {MCEP}_{\text {mut-proc}}\) models described in “Model 1: Genetics, epigenetics and environmental feedback” and “Model 2: Multilevel selection” respectively. The first is a model of a complex trait, with genetics (X), epigenetics (Y) and feedback between behavior (Z) and environment (e), while the second is a model of multilevel selection in cancer with genetics (X) and mutational processes (m). Solid arrows here represent the directed graphical structure of the underlying discrete causal network [the Pa relation, which is distinct from the \(\pi\) evolutionary variable in (B)]. Nodes and edges are labeled with variable and kernel names respectively as defined in these models

Cyclic and multilevel causality in biology

We begin by outlining and motivating some of the basic concepts that will be used to develop our framework. We do so here in an informal way: technical definitions and proofs are given in Appendix A.

Cyclic causality. The do-calculus provides a compelling formalization of the mathematical structure of causation (Pearl 2009). However, a requirement of this framework is that causal relationships between variables must form a Directed Acyclic Graph (DAG). A DAG is any graph (a collection of nodes and edges) which contains no directed loops (cycles). For instance, if gene A regulates gene B, and gene B regulates genes C and D (for example, the genes are transcription factors, and regulatory relationships are established via promoter binding), variables corresponding to the expression levels of these genes can be arranged in a graph with no cycles. Performing manipulations on gene B (do-operations) will thus affect the expression of genes C and D but not A. Pearl’s calculus provides exact rules for deducing the distribution of all variables after an intervention, which will always return a valid distribution; formally, the graph is altered by cutting all incoming edges to the manipulated node, and the joint distribution is recalculated using a delta distribution to represent the intervention.

However, such well-ordered networks are the exception rather than the rule in gene regulatory networks (GRNs). For instance, we could add to the above network a feedback interaction by assuming that gene D regulates A. Here, although A causes B’s expression locally, B also causes A’s expression via D. In fact, in this case no harm is done assuming the system has a solution as a whole, since any intervention will either split the cycle (genes A, B and D), or have no effect on it (gene C), and hence all distributions are well defined. Alternatively, though, we could consider starting with the original graph, and in addition let C regulate D and D regulate C. Now, by intervening on B we are not guaranteed to find a solution for every intervention, even if the original system has a solution. Recent work has investigated the extension of Pearl’s calculus to graphs with cycles (where the graph manipulations are identical to the acyclic case), characterizing the situations in which solutions exist, and allowing systems to be defined which have a restricted set of interventions allowed (Itani et al. 2010; Mooij et al. 2013; Rubenstein et al. 2017). A particular example which has a clear solution is a deterministic network governed by linear differential equations: \(\dot{x_i} = f_i(x_1,\ldots ,x_N)\), where the \(x_i\)’s may be gene expression values for instance. Here, ‘solutions’ are taken to be the equilibrium points of the system, and these exist for any intervention provided the original system of equations \(f_1,\ldots f_N\) is contractive, meaning that it maps a given region of state-space to one with a smaller volume with time (Rubenstein et al. 2017). Stochasticity can be added as long as the functions are contractive almost surely.

In these examples, we have considered feedback processes in gene regulatory networks. However, similar feedback processes can occur at many levels. For instance, consider a psychological trait, such as depression. This trait may be ultimately caused by numerous aspects of brain structure and gene expression patterns; however, since we have pharmacological interventions which can control the severity of depression, these can introduce a feedback process from the environment to the molecular layer. In general, the expression of any trait may be subject to environmental feedback in this way.

We now note some features about the above. First, we have phrased both the GRN and the environmental feedback example in terms of a process in time. One way to deal with such cyclic structures is to ‘unroll’ them over time, that is, consider a discretized set of time points, and repeat all variables at each time, while connecting a given variable, say a gene, to its regulatory parents at the previous time-step. This will automatically generate an acyclic causal graph. The cyclic causal system corresponding to this temporal process can be considered to be that formed by the equilibrium distributions (assuming they exist) after certain ‘macro’ interventions are applied, which fix a particular variable to a given value across all times. The cyclic system can thus be considered a coarse-graining of the temporal acyclic system. Not all cyclical causal systems can be formed this way (see Rubenstein et al. 2017), but our emphasis will be on such systems, given their prevalence in biology (although the framework is agnostic to the system’s origins). For convenience, in setting up our framework, we use a ‘Discrete Causal Network’ model (DCN, Appendix A; see also Fig. 1A for the relationships between all models in the paper), which allows cycles and variables which take discrete values, and is parameterized by a set of probability kernels, one for each variable, specifying the conditional distribution of the variable on the values of its graphical parents. We further introduce dynamic-DCN and equilibrium-DCN models (Appendix A, Definition 2.6), corresponding respectively to an underlying temporal process and coarse-grained equilibrium cyclic causal model as discussed above.

Multilevel causality. A further limitation common to straightforward applications of Peal’s do-calculus is its seeming reliance on a single level of causal analysis. For instance, in analyzing the causes of an action, it seems appropriate to identify causes at multiple levels, such as nerves firing, muscles contracting, psychological beliefs, desires and past learning. Indeed, in analyzing group selection using causal graphs, a recent approach has distinguished between causal and supervenient relationships between variables, while maintaining a DAG graphical structure overall (Okasha 2015). Recent approaches have formalized the idea that a causal system can be described at multiple levels, by introducing coarse-graining mappings between causal structures at different scales (Rubenstein et al. 2017). Such approaches capture the relation of supervenience, since multiple fine-grained interventions in one model may be mapped to the same intervention in another provided the causal structure is preserved by the mapping.

The possibility of multiple levels of causation is arguably of central importance in evolution. In particular, we argue that multilevel selection should be seen as a special case of multilevel causality, and introduce a ‘Multilevel Causal Evolutionay Process’ model (MCEP) as a general framework for analyzing such processes. In particular, fitness is treated as a causal variable at each level of the MCEP, allowing coarse-grained fitness to supervene on lower-level fitness values (and other evolutionary variables). A particular case we consider is cancer evolution. As has been recently demonstrated (Alexandrov 2013; Dentro et al. 2020; Temko et al. 2018), tumors not only acquire particular sets of mutations (with positive, negative and neutral effects on growth) over their development, but also acquire prototypical mutational processes. These processes are caused by factors such as disruption of the DNA repair machinery or other cellular mechanisms such as DNA methylation, or environmental effects such as carcinogens, which cause particular mutations to become more prevalent depending on local sequence characteristics or chromosomal position. The fact that such processes introduce bias into the way variation is acquired in the tumor means that they can contribute towards the tumor’s evolution. At a fine-grained level, it is the individual mutations themselves which are responsible for fitness variations among cells, and the mutational processes are simply a source of variation. We show however, that by considering a multilevel model, fitness across larger time-scales can be driven by a combination of individual mutations and mutational processes, potentially even primarily by the latter as suggested by recent results (Dentro et al. 2020; Kumar et al. 2020; Temko et al. 2018).

Causal transformations. The models we develop in response to the above (cyclic and multilevel causation) both rely on the technical apparatus of a transformation between causal systems, as introduced in Rubenstein et al. (2017). In general, this can be thought of as a structure preserving map between causal systems, analogous to a homomorphic map between groups or other algebraic structures. A causal transformation requires that variables and interventions in one causal system are mapped to those in another, while preserving all causal relationships in the first system as seen ‘from the viewpoint’ of the second. Like a group homomorphism, a transformation of causal systems is not necessarily one-to-one or onto, and so the mapping may embed the first causal system in the second, or map many variables in the first onto a single variable in the second. The transformations we consider typically correspond to the latter possibility, and thus can be seen as forms of coarse-graining. However, it is important to stress that transformations are not limited to coarse-graining relationships, and are a general mechanism for relating causal systems. We explicitly define the notion of transformation we need for the special case of discrete causal networks in Appendix A, Definition 2.2.

Information in causal systems. An advantage of framing evolutionary models in explicitly causal terms is that it becomes possible to make distinctions between different ways in which evolutionary variables may interact, which are difficult to make otherwise. For instance, it is common to trace variation in a particular trait (such as height) to genetic and environmental sources. With genetics, the (broadly justified) assumption is made that variation in the trait is in response to genetic variation, and thus intervention is not required to assess the causal impact (having controlled for confounders such as population structure). However, the situation is less clear when variation at other levels such as epigentics (transcriptomics, DNA methylation), or environmental factors are considered in relation to high-level trait variation (height, depression). Here, we would like to be able to trace variation to sources which may be involved in cyclic interactions. In general, interventions may be required to assess the causal impact of one variable on another, but it may also be possible to combine observations and assumptions to infer aspects of the causal structure.

For this reason, we also consider how to define a general notion of causal impact in cyclic causal systems, by generalizing the Partial Information Decomposition framework (PID, Williams and Beer 2010), which cannot handle cyclic interactions, to a Causal Information Decomposition framework (Appendix A, Definition 2.4). We show that bounds may be derived in this framework that potentially allow direct causal relations between variables to be inferred from observational data by observing non-zero unique information, and differences in observed and causal information between variables to be predicted given assumptions about feedback and interference. We provide technical background for these bounds in Appendix A (Theorems 2.10 and 4.3), draw connections with alternative definitions of causal impact and related bounds (Proposition 2.5), and summarize the implications as they apply to models of epigenetics and multilevel selection in the relevant sections (Theorem 4.3 and Proposition 5.5). These results are intended both to motivate the application of models using PID and CID frameworks in analyzing data, and also contextualize the issues underlying existing approaches, even when not couched explicitly in information-theoretic terms.

One application may be in decomposing the genetic, epigenetic and environmental causal factors underlying observed traits, for instance, psychological traits such as depression in the example mentioned above. In this context, methods such as TWAS (Zhu et al. 2016) and related non-linear models (Wang et al. 2018) aim to isolate the genetic component which is causative for a given trait (for the latter, as mediated by gene expression). However, a more complete picture of underlying causation for a given trait would consider the complete causal effect of (say) gene expression on a trait, and not only the component which mediates genetic risk (since therapeutic interventions are not limited to targeting mediated genetic effects). Environmental variables may also play a role, whose effects may be mediated by epigenetic factors, be independently mediated, or be reflective of rather than causal for a trait (for instance, medication effects). The PID provides a guiding framework for partitioning trait-relevant information between genetics, epigenetics and environment (say). However, it cannot distinguish between causal feedback and feedforward influences of variables on the trait, which is our motivation for introducing the CID; our Theorem 4.3 characterizes the discrepancy between these two frameworks, and thus places a limit on how far the PID can fail to estimate the CID, which (as we show) is characterized by the relative strength of feedforward and feedback processes. While precise estimates of the quantities involved may be difficult, it may be possible to provide plausible empirical approximations; for instance, the feedforward causal epigentic component is bounded by mediated genetic effects, and the feedback component from medication effects can be estimated using animal models, as in recent studies of psychotic medications (Benton et al. 2012; Fromer et al. 2016). In “Model 2: Multilevel selection” we also discuss an application in cancer genomics, where we argue that mutation-process specific effects on subclonal fitness may be identified using non-zero mutual information between such processes and growth rate [which can be estimated from sequencing data, as in Salichos et al. (2020)] via Theorem 2.10 and Proposition 5.5.

Deterministic, stochastic and causal models. Finally, we wish to emphasize the intrinsic differences between the mathematical structures underlying deterministic, stochastic (or probabilistic) and causal models. These kinds of models can be seen as strictly nested inside one another: deterministic models are simply stochactic models whose probabilities are all taken to be either 0 or 1, while stochastic models are causal models which are not subject to any interventions (i.e. subject to the null intervention). In this sense, causal models contain strictly more information than stochastic models, since they represent a family of distributions parameterized by all possible interventions, rather than a single distribution. Alternatively, we can say that causal models contain counterfactual as well as probabilistic information. As stressed in axiomatic accounts of evolution (Rice 2004), we view causal structure as intrinsic to the definition of an evolutionary process, and thus causal models as the appropriate mathematical structure for a complete description of such a process. In “Causal interpretation of Price’s equation and related results”, we briefly consider this viewpoint in relation Price’s Equation and a related information-theoretic result based on the Kullback–Leiber divergence (used in information theory as a quasi-distance measure between probability distributions, here the trait distribution at two time-points), discussing their analogues in stochastic and causal models and stressing how the causal viewpoint offers a more complete picture (albeit, one implicit in other modeling frameworks).

Causal evolutionary processes

We begin by introducing a general model of a causal evolutionary process. In its general form, the model is a formalized ‘phenotype-based theory’ of evolution (see Rice 2004), which is agnostic about underlying mechanisms. As argued in Rice (2004) (and as we will be elaborated in subsequent sections), such a perspective naturally embeds traditional population genetics models as a special case, since genotypes may be treated as special kinds of discrete phenotypes, while offering a more general viewpoint. For notational convenience, we introduce all definitions and examples below in the context of an asexual population of constant population size, although the model naturally generalizes to mating populations and varying population sizes. Figure 1B illustrates the model definition below.

Definition 3.1

(Causal Evolutionary Process (CEP)): A CEP is a Discrete Causal Network (DCN) over the variables \(\phi _{nt}\), \(e_{nt}\), \(w_{nt}\), \(\pi\) (all variables discretized, and \(\pi _{nt}\in \{1\ldots N\}\)), representing the phenotype, environment, fitness and parent of the n’th individual in the population at time t respectively, where ‘fitness’ and ‘parent’ are to be understood in a structural sense to be defined, and \(n\in \{1\ldots N\}\), \(t\in \{0\ldots T\}\). We write \(\phi _{t}\), \(e_{t}\), \(w_{t}\), \(\pi _{t}\) for the collective settings of these variables at t, and for convenience use identical notation for names and values taken by random variables. Further, \(\phi _{nt}\) and \(e_{nt}\) may be viewed as a collection of sub-phenotypes and sub-environmental variables, in which case we write \(\phi _{nst}\) and \(e_{nst}\) for the value of sub-phenotype (resp. environment) s of individual n at time t. We set \(Pa(\phi _0)=Pa(e_0)=Pa(\pi _0)=\{\}\) (noting that Pa stands for the ‘graphical parents’ of a variable in the causal graph, while \(\pi _{nt}\) is the evolutionary variable representing the parent of individual n at time t, whose values are indices of individuals at \(t-1\)). For all other variables, we set \(Pa(w_t)=\{\phi _t,e_t\}\), \(Pa(\phi _t)=\{\phi _{t-1},e_t,\pi _t\}\), \(Pa(e_t)=\{e_{t-1},\phi _t,\pi _t\}\), \(Pa(\pi _t)=\{w_{t-1}\}\). A model is specified by defining the following kernel forms, where we use \(\prod\) to denote a ‘kernel product’ (this corresponds to multiplication for directed acyclic causal graphs, but is defined more generally for cyclic graphs as described in Appendix E), \((=)\) for an optional kernel factorization, and set the underlying variables of the DCN to correspond to the lowest-level factorization consistent with the model kernels:

Fitness kernel:

Heritability kernel:

Structure kernel:

For the environmental variables, we have the following alternative kernel forms:

In Eq. 4, we refer to factorizations (a) and (b) as independent and interactive environmental kernels respectively, and (c) as an environmental heritability kernel (represented by the symbol \(\hbar\)). Additionally, kernels must be given over the remaining variables for which \(Pa(.)=\{\}\) to completely specify a CEP model, and \(\bar{s}\) denotes the complement of \(s.\)

The interaction of the fitness and structure kernels (f and i resp.) give rise to different possible fitness models. We summarize some of these possibilities below:

Definition 3.2

(Fitness models): We define the following CEP fitness models:

Classical fitness representation:

Multinomial model (\(\omega _{nt}=w_{nt}/(\sum _n w_{nt})\) denotes normalized fitness):

Moran model:

where \(S_{n^*}\) is the set of all vectors in \(N^N\) containing exactly two entries with the value \(n^*\), and all other values appear at most once.

In the classical fitness representation, the variable \(w_{nt}\) directly represents the number of descendants of individual n at time t in the following generation, and the structure kernel i simply ensures the parent map \(\pi\) is consistent with these values. In the multinomial model, the values \(w_{nt}\) determine the relative fitnesses of individuals at time t and the actual numbers of descendants are determined by multinomial sampling, implemented by i (as in the Wright–Fisher model with selection Felsenstein 2016). In contrast, in the Moran model, the \(w_{nt}\)’s determine the probability that an individual is chosen to reproduce, and i implements the constraint that only one individual reproduces and dies per generation, with the latter being chosen uniformly. Although both Multinomial and Moran models could be represented in the classical fitness representation, this would be at the expense of using a non-factorized form of the f kernel; hence we argue that the representations in Eqs. 6 and 7 are more natural parameterizations of these models (so that, in general, ‘fitness’ is not exclusively interpreted as the number of offspring at time \(t+1\), but rather a set of sufficient statistics for generating the parental map at time \(t+1\)). Further, we note that, as in the case of the Moran model, the time steps t need not correspond to discrete generations.

Finally, we define a transformation between CEPs:

Definition 3.3

(Transformation between CEPs): A transformation between CEPs is defined as a transformation between their underlying DCNs in the sense of Definition 2.2(Appendix A).

In relation to Definition 3.3, we note that a transformation between CEPs need only preserve the causal structure; hence, it may (for instance) map environmental onto phenotypic variables, or a large population onto a small population by merging individuals. All that is necessary is that the resulting causal structure may be interpreted as an evolutionary process in some way. We shall give examples in the following sections of transformations with characteristics such as above.

Model 1: Genetics, epigenetics and environmental feedback

The CEP model as introduced in “Causal evolutionary processes” does not include a model of genetics. However, as noted, the genotype may be regarded as a special type of discrete phenotype, and the process of genetic transmission with mutation can be naturally modeled in the heritability kernel. Here, we describe a type of CEP which includes both genetics and epigenetics, along with potential effects from and impacts on the environment (environmental feedback), for instance via behavior or drugs used in treating diseases. The model thus formalizes a gene-environment-phenotype map (G–E–P map) of the kind described informally in Houle et al. (2010) (for simplicity, we refer to any ‘intermediate phenotype’ as epigenetic, including indicators of cell/tissue state such as the the transcriptome). Our purpose is to provide a general model appropriate for analyzing the causal factors underlying complex traits, such as psychiatric disorders. As we show, using this model, the causal information decomposition (CID) described in “Cyclic and multilevel causality in biology” and Appendix A provides a principled framework for breaking down the variation in a complex trait due to genetic, epigenetic and environmental factors; the model is more general than other models with similar goals, for instance TWAS (see Zhu et al. 2016), since it aims to model both genetic and other causes of a trait, allowing feedback with the environment at the epigenetic level, and uses an information theoretic framework to decompose the variation and hence is appropriate in the context of arbitrary (non-linear) dependencies.

We first define a general CEP model with the above characteristics:

Definition 4.1

(Complex Trait Causal Model (CTCM)): We define a CTCM as a CEP with the following special structure. In terms of phenotypes, we require three sub-phenotypes which we denote X, Y and Z, hence \(\phi _{nt}=\{x_{nt},y_{nt},\) \(z_{nt}\}\), which represent genotype, epigenome (including transcriptome), and observed trait(s) respectively. Environmental variables are referred to collectively as e. Further, we use the factorized form of the heritability kernel in Eq. 2, and require the following special forms for the sub-kernels (using the kernel product notation from Appendix E):

where \(g_1\) and \(g_2\) are referred to collectively as the genotype-phenotype map, and independently as the genetic-epigenetic and epigenetic-observed kernels respectively, while \(h_x\) is referred to as the genetic transmission kernel. Further, the CTCM uses an environmental kernel having either an independent factorization, or an interactive form (Eq. 4(a) and (b) resp.); the latter takes the form:

and \(g_1\), \(g_2\) and \(g_3\) are referred to collectively (when all present) as the genotype-environment-phenotype map. [We note that ‘map’ here and following Eq. 8refers in general to a stochastic map.]

We next define a special class of CTCMs which embed a DDCM over the \(\phi _{nt}\) and \(e_{nt}\) variables at each time-point; hence, we model the genetic, epigenetic, observed trait and environmental interactions by an embedded dynamic causal process. We can then coarse-grain this process to a cyclical CTCM over these variables at equilibrium (see Fig. 1C for a related schematic). For conciseness, the full definition of the CTCM* is given in Appendix B, Definition 4.2.

Definition 4.2

(CTCM with embeded DDCM (CTCM*)): See Appendix B.

The CTCM* model gives us a convenient way of decomposing the variation/information in a trait into components which depend on unique, redundant and synergistic combinations of genetic, epigenetic and environmental factors. Using the CID definition and notation from Appendix A, we propose that, for a population at time \(t>0\), this is achieved by selecting an arbitrary individual n, and calculating the backward causal information decomposition \(CID(S\rightarrow \underline{Z_{nt}})\), where \(S\subset \{X_{nt},Y_{nt},e_{nt}\}\), in the eq-CTCM* associated with the original CTCM* (assuming it exists, and that the structure kernel i is invariant to permutations of the population indices). Since we specify in Definition 4.2 that the embedded DDCM kernels have a self-separability property, Theorem 2.9 implies that this is a strict decomposition of the variation in \(Z_{nt}\), i.e. \(CID(S\rightarrow \underline{Z_{nt}})\le H(Z_{nt})\). Further, if \(g_3\) is an independent rather than an interactive environmental kernel, Theorem 2.10 implies that we can lower-bound the forward-CIDs \(CID(\underline{X_{nt}}\rightarrow Z_{nt})\), \(CID(\underline{e_{nt}}\rightarrow Z_{nt})\), and \(CID(\underline{Y_{nt}}\rightarrow \{Z_{nt},X_{nt},e_{nt}\})\), using the observed unique information between each variable and Z (i.e. the observed unique information is predictive of the consequences with respect to an observed trait of performing manipulations on each variable). We note that the PID of the observed distribution in this case is identical to the backward-CID as above.

In the case that \(g_3\) is an interactive kernel, we have environmental feedback from the observed trait to the epigenetic levels, making it harder to relate the observed phenotype distribution to the proposed causal decomposition. However, we can outline a number of possible relationships. For this purpose, we introduce an alternative representation of a CTCM*. We consider that all transition kernels share a common parameter \(\alpha\) from the self-separable representation, Eq. 28 (with \(K_1\) set to the identity). Since Eq. 28 has the form of a mixture distribution, an equivalent representation of a CTCM* is formed by introducing latent variables, \(C_Y(\tau )\), \(C_Z(\tau )\), \(C_e(\tau )\), which are Bernoulli variables (or collections of Bernoulli variables if Y, Z or e are factorized) with mean \(1-\alpha\). If \(C_V(\tau )=0\), variable \(V\in \{Y,Z,e\}\) does not update at time-step \(\tau\), otherwise V updates according to the \(K_2\) component of the self-separable representation in Eq. 28. If \(\alpha\) is set large enough with respect to the number of variables, we can ensure that with probability \(1-\epsilon\), with \(\epsilon\) arbitrarily small, \(\sum _V C_V(\tau ) \le 1\), i.e. at most one variable updates at a given time-step. Conceptually, we can view an increase in \(\alpha\) as effectively a reduction in the duration of the time-step \(\tau\). We also introduce \(C^*(\tau )\in \{X,Y,Z,e,\emptyset \}\), writing \(C^*(\tau )=V\) when V is the most recent variable for which \(C_V(\tau ^{\prime }<\tau )=1\) assuming V is unique, and \(C^*(\tau )=\emptyset\) when V is not unique. We can then make the following observation (see Appendix B for the proof):

Theorem 4.3

(Backward-CID bounds): For a CTCM* represented as above with latent factors C, and associated eq-CTCM*, where \(S\subset \{X,Y,e\}\), \(V\in \{X,Y,Z,e\}\), \(\overline{(.)}_V\) denotes the mean over values of V, and II is the interaction information (\(II(S;Z;C^*)=I(S;Z|C^*)-I(S;Z)\)), in the limit \(\alpha \rightarrow 1\) we have that:

and similarly:

where all II, CID and PID quantities are evaluated in the eq-CTCM* model (at a given n and t, where \(C^*\) is treated as an additional phenotype). Further, \(Q_V=P_{eq}(\lnot V)K^V_2(V|\lnot V)\) (unrelated to the notation \(Q_{X_A}\) used in Definition 2.4) with \(K^V_2\) the second component of V’s kernel, as in Eq. 28, and we assume Y, Z and e are not factorized. For the case that Y, Z or e are factorized, S and V are subsets and elements of the sets of relevant factorized variables respectively, and Eqs. 10and11hold identically.

Proof. See Appendix B.

Theorem 4.3 shows that we can identify certain situations in which the observed PID components at equilibrium consistently under or over-estimate the equivalent components of the CID. The LHS of Eqs. 10 and 11 each contain two conditions, the first depending on the sign of an Interaction Information (II) term, and the second comparing two CID terms. Broadly, the latter condition implies that if the feed-forward interaction between S and Z is strong compared to any feedback interactions, the PID will tend to underestimate the CID (Eq. 11), and vice-versa if the feedback is stronger (Eq. 10). However, the first condition makes this dependent on the type of feedback interactions present: if these tend to interfere with the feedforward interactions so that the net effect is to reduce the mutual information between S and Z (I(S; Z)), the II term will be positive as in Eq. 11, while non-interfering interactions will tend to increase I(S; Z), potentially leading to a negative II term as in Eq. 10 (note that we use the same sign convention for the interaction information as in Williams and Beer (2010)). Potentially, the situation in Eq. 11 may apply when Z is a disease trait, and S is the transcriptome in a relevant tissue, where the feedforward interaction is strong (the trait is strongly determined by S), and the feedback (in terms of treatment) reduces the severity of the disease by directly interfering with the underlying mechanisms. In contrast, the situation in Eq. 10 will apply if the feedforward effects are weak, and there is not a strong interference with feedback interactions at the level of S (for instance, a disease treatment which targets symptoms in a different tissue, inducing variation in S orthogonal to the causal factors for the disease).

Model 2: Multilevel selection

Multilevel selection has been identified as an important component in a number of evolutionary contexts, such as eusociality in insects (Nowak et al. 2010), bacterial plasmid evolution (Paulsson 2002), and group selection (Traulsen and Nowak 2006). It has also been proposed that multilevel selection is a driving force behind major evolutionary transitions, such as the transition to multicellularity (Calcott and Sterelny 2011; Okasha 2006). Here, we propose a basic definition of a multilevel causal evolutionary process (MCEP) in the framework introduced above, which naturally connects the notion of evolution occurring at multiple levels to coarse-graining transformations in the sense of Defs. 2.2 and 3.3. We then show how two types of multilevel evolutionary process are special cases of our model (group selection, and selection acting on mutational processes in cancer). Our general definition takes the following form:

Definition 5.1

(Multilevel Causal Evolutionary Process (MCEP)): An M-level MCEP is a collection of causal evolutionary processes, \(E_1, E_2,\ldots ,E_M\), such that each pair of adjacent processes forms a 2-level MCEP in the following sense. CEPs E and F form a 2-level CEP iff there exists a transformation from E to F (denoted \((\tau ,\omega )\)) in the sense of Definition 3.3, along with a partial map \(\mu\) of time-points in F to time-points in E (which may depend on \((\phi ,e,w,\pi )\) across all variables in E), and the following conditions apply. (1) We have that \(|F|_{N(t)} \le |E|_{N(\mu (t))} \; \forall t\), and \(|F|_T \le |E|_T\), where we write \(|A|_{N(t)}\) for the ‘actual population size’ of process A at time t, and \(|A|_T\) for the ‘actual number of time-points’ in process A. Each of these may be different from the values of N and T in A, since we will allow a \({{\,\mathrm{null}\,}}\) phenotype value to be declared in each CEP (whose parents are arbitrary, and whose offspring are all \({{\,\mathrm{null}\,}}\)): any individuals having \(\phi _{nt}={{\,\mathrm{null}\,}}\) will not count towards \(|A|_{N(t)}\), and time-points for which all individuals are \({{\,\mathrm{null}\,}}\) do not count towards \(|A|_T\), these being the only time-points excluded from the domain of \(\mu\); further, time-points beyond \(\max _t\{t|\exists t^{\prime } \; \mu (t^{\prime })=t\}\) do not count towards \(|E|_T\). (2) We require at least one of the inequalities in (1) to be strict. (3) We require that for any time-point t in E for which \(\mu (t^{\prime })=t\), the projection of the map \(\tau\) onto \(\phi _{t^{\prime }}\) in F is not independent of \(\phi _{nt}\) in E for any (non-null) individual n (i.e. it does not take the same value for all settings of \(\phi _{nt}\) given a joint setting of all other variables in E), so that no individual’s phenotype is entirely ‘projected out’ at these time-points by \(\tau\).

We now show how the group-selection model of Traulsen and Nowak (2006) can be represented as an MCEP:

Example 5.2

(Group Selection model (\(\hbox {MCEP}_{\text {group}}\))): We fix an N and T for process E. For all individuals in E, \(\phi _{nt}\in \{C,D,{{\,\mathrm{null}\,}}\}\), where C and D represent cooperators and defectors respectively. \(e_{nt}\in \{1\ldots M\}\) represents the group membership of an individual (where M is the maximum number of groups), and fitness \(w_{nt}\) is determined by the expected pay-off for an individual when interacting with other members of the same group according to a fixed game matrix (see Traulsen and Nowak 2006). A maximum group-size is fixed at \(N_G\), such that \(N=N_G M\). The heritability and environmental kernels (h and \(\hbar\)) enforce strict inheritance of phenotype and group membership (with the exception noted below), while structure kernel i implements Moran dynamics (Eq. 7), so long as doing so will not allow a group to exceed \(N_G\); otherwise, with probability \((1-q)\) a random individual from the same group dies, and with probability q the group divides (implemented by the environmental kernel as a random partition) while all members of another uniformly chosen group die. Since the population number may fluctuate below N, \({{\,\mathrm{null}\,}}\) values are used to ‘pad’ the population as required.

For process F, we set the population size to be M and the number of time-steps to be T. We map \(t=1\) in F to the first time-point in E, and subsequent time-points in F to the times at which the 1st, 2nd, 3rd... group divisions occurred in E. The ‘individuals’ in F correspond to the groups in E; hence, we let \(\phi _{nt}=(\nu _n,C_n)\) , where \(\nu _n\) is the number of individuals in group n at time \(\mu (t)\) in E, and \(C_n\) is the proportion of cooperators in group n (we set \(\phi _{nt}={{\,\mathrm{null}\,}}\) if \(\nu _n=0\) ). In F, \(e_{nt}=\{\}\) . We can naturally specify the parental map \(\pi _{nt}\) on F by mapping a group at t to the group at \(t-1\) when the groups they correspond to at \(\mu (t)\) and \(\mu (t-1)\) in E are either the same or split from one another. The fitness model can be specified by letting \(w_{nt}\) be the probability that group n will split first (in the context of all other groups). The structure kernel i then simply needs to implement Moran dynamics, unless the number of groups is less than \(N_G\) , in which case a \({{\,\mathrm{null}\,}}\) group is chosen for replacement in place of uniform sampling. The heritability kernel in F then needs to implement a conditional distribution over \(\phi _t\) corresponding to the joint distribution over the sizes and cooperator prevalences in group at t, given that a particular group from the previous generation divided first (we note that since, in general, dependencies will be induced between the group phenotypes by the intervening dynamics in E, the unfactorized version of the heritability kernel in Eq. 2 must be used). Time-points in F following the last time-point for which \(\mu (t)\) is assigned are padded with \({{\,\mathrm{null}\,}}\) phenotype values (clearly, \(|F|_T<T\) , since at most \(T-1\) group divisions can occur in E).

By construction, the pair of CEPs E and F above form a 2-level MCEP. For the required transformation, we simply take \(\tau\) to map a configuration in E to the configuration in F which consistently represents the sizes and proportions of cooperators in each group a at the times \(\mu (0), \mu (1)\ldots\) by \((\nu _{a0},C_{a0}),\) \((\nu _{a1},C_{a1}),\ldots\). For the mapping \(\omega\) we must be careful to restrict the interventions allowed on E to those which fix all phenotypes and environments at a given time t. With this restriction, these can be mapped many-to-one onto interventions in F which match the induced group characteristics. The first two conditions in Definition 5.1are satisfied by construction, while the third follows since a change in phenotype of a individual in E at a time point \(\mu (t)\) necessarily induces a change in the proportion of cooperators in one group, and hence changes \(\phi _t\) in F.

We note that the \(\hbox {MCEP}_{\text {group}}\) example above illustrates how the division and complexity of interactions between individuals and environment may depend on the level at which an evolutionary process is viewed (as well as the particular representation): In process E, group indices are considered environmental variables, which induce complex inter-dependencies in the fitnesses and heritabilities (phenotypic and environmental) between individuals; however, in process F, the groups are themselves considered individuals with their own properties, and much of the complexity at the underlying level is folded into the heritability of group phenotypes, along with a simpler fitness model.

Before outlining our final example, we introduce a general kind of MCEP over multiple temporal levels:

Definition 5.3

(Regular MCEP with multiple time-scales (\(\hbox {MCEP}_{\text {temp}}\))): An \(\hbox {MCEP}_{\text {temp}}\) is an MCEP over processes E and F with total time-steps \(T_E\) and \(T_F\), where \(T_E=T_F T_S\) (with \(T_S>1\) a ‘temporal scaling factor’), and \(\mu (t) = t\cdot T_S\). The transformation \(\tau\) involves the projection of all phenotype and environmental variables in E onto their values at \(\{\mu (0),\mu (1),\ldots \}\), while the variable \(\pi _{nt}\) in F is set to the ancestor of n at time-step \(\mu (t-1)\) in E. The fitness variable \(w_{nt}\) in F is set to the absolute number of offspring of n at \(t+1\), and a classical fitness model is used as in Eq. 5. In general, the fitness, heritability and environmental kernels in F will need to take unfactorized forms to capture the complex dependencies induced by the low-level dynamics in E. Further, we restrict interventions in E to interventions on the phenotypes and environments of variables at \(\{\mu (0),\mu (1),\ldots \}\), and map these to corresponding interventions in F.

We note that any CEP may be converted to an \(\hbox {MCEP}_{\text {temp}}\) by simply fixing a temporal scaling \(T_S\), setting the original CEP as E, and following the construction above to form F. In this context, the values \(w_{nt}\) represent a limited form of ‘inclusive fitness’ over the period \(\mu (t)\) to \(\mu (t+1)\) in E, with respect to genealogical relatedness relative to a base population at \(\mu (t)\) (see Okasha 2015 for a discussion of genealogical relatedness and genetic similarity based definitions of inclusive fitness, the former corresponding to Hamilton’s formulation). As our final example, we use the above to illuminate the interaction of mutational processes and selection in cancer. As cancers evolve, subclones acquire not only distinct sets of mutations, but also distinct mutational processes governing the random process by which mutations are generated (see Alexandrov 2013). For instance, by disrupting the DNA repair machinery, certain mutations may increase the mutation rate, or make it more likely that specific mutations (e.g. in particular trimer or pentamer contexts) are acquired in the future. Recent evidence has emerged that cancer driver mutations are differentially associated with the presence of particular mutational processes, and that the prevalence of particular mutational processes change in prototypical ways across the development of particular cancers (Dentro et al. 2020; Temko et al. 2018). To analyse the interaction of mutational processes with subclonal fitness, we introduce the following MCEP model (see Fig. 1D for related schematic):

Example 5.4

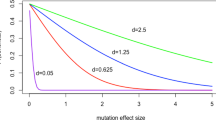

(MCEP with mutational processes (\(\hbox {MCEP}_{\text {mut-proc}}\))): We build an \(\hbox {MCEP}_{\text {mut-proc}}\) model by introducing a CEP model of mutational processes as E, and forming F directly by applying the \(\hbox {MCEP}_{\text {temp}}\) definition in Definition 5.3. For E, we set the phenotype variables as \(\phi _{nt}=\{x_{nt},m_{nt}\}\), where x and m represents the genotype and mutational processes acting in cell n at time t respectively. We use the factorized form of heritability kernel in Eq. 2, setting \(h_{n} = h_x(x_{nt}|x_{(\pi _t(n),t-1)},m_{(\pi _t(n),t-1)})\cdot h_m(m_{nt}|x_{nt},m_{(\pi _t(n),t-1)})\). We note that this incorporates a genetic transmission kernel \(h_x\) which is influenced by the mutational processes operating in the parent cell, and a mutational process kernel \(h_m\) which allows for potential epigenetic inheritance of mutational processes across generations (as well as determination from the genotype). Further, we use a factorized fitness kernel of the form \(f_n(w_{nt}|x_{nt})\); hence we assume that the genotype acts as a ‘common cause’ to the mutation processes and fitness of a given cell, but that the latter two variables are not directly causally linked. The structure kernel can be of arbitrary form, and all environments are empty.

The \(\hbox {MCEP}_{\text {mut-proc}}\) example above illustrates the following points. First, we note that for any individual in the lower-level process E, the mutational processes \(m_{nt}\) are causally indendent of fitness \(w_{nt}\); that is, intervening on \(m_{nt}\) will not affect \(w_{nt}\) (by definition). However, this is no longer the case in the higher-level process F; here, because of the intervening lower-level dynamics, there is a feedback between the mutational processes and fitness in E across multiple time-steps, meaning that \(w_{nt}\) for an individual in F is affected by interventions on both \(x_{nt}\) and \(m_{nt}\). In fact, in F we have the following:

Proposition 5.5

(Unique Information bounds for \(\hbox {MCEP}_{\text {mut-proc}}\)): For an individual n at time t in the high-level component process (F) of an \(\hbox {MCEP}_{\text {mut-proc}}\) as above, we have that \(UI(w_{nt}:x_{nt}\backslash m_{nt}) \le CID(\underline{x_{nt}}\rightarrow w_{nt})\), and \(UI(w_{nt}:m_{nt}\backslash x_{nt}) \le CID(\underline{m_{nt}}\rightarrow w_{nt})+C\), with C defined as in Theorem 2.10. (Appendix A)

The proof of Proposition 5.5 follows directly from Theorem 2.10, along with the \(\hbox {MCEP}_{\text {mut-proc}}\) definition, which implies that \(x_{nt}\) causally influences, but is not influenced by \(m_{nt}\) (in both E and F), and both influence and are not influenced by \(w_{nt}\) (in F). We note that Proposition 5.5 implies that the unique information components of the observed distribution PID over \(x_{nt},m_{nt},w_{nt}\) across multiple generations are informative about the potential contributions of \(x_{nt}\) and \(m_{nt}\) on subclone fitness. Particularly, \(UI(w_{nt}:m_{nt}\backslash x_{nt})>0\) implies that there is a generic impact on fitness from the mutational processes across a particular time-scale, providing a lower-bound up to the additive constant C. Further, we note that while the \(\hbox {MCEP}_{\text {mut-proc}}\) model above postulates no intra-generational feedback of the mutational processes on the genotype (only inter-generational feedback), the analysis above can be elaborated to include such feedback within generations, and can be expected to hold so long as the intra-generational feedback is weaker than that across generations.

Causal interpretation of Price’s equation and related results

We finish by outlining a number of relationships which can be shown to hold in our CEP framework, including Price’s equation an a number of analogous results. First, we can show:

Theorem 6.1

(Price’s Equation with probabilistic and causal analogues (assuming perfect transmission)): In a CEP, with empty environmental contexts and perfect transmission (hence, the heritability kernel factorizes and takes the form \(h_n(\phi _{nt}|\phi _{(\pi _t(n),t-1)})=\delta (\phi _{nt}|\phi _{(\pi _t(n),t-1)})\), where \(\delta (.|a)\) is a delta distribution centered on a), and a classical fitness model as in Definition 3.2, we have:

(a) Price’s Equation:

(b) Probabilistic Price Equation (Rice’s Equation, Calcott and Sterelny 2011; Rice 2004):

(c) Causal Price Equation:

where we write\({\bar{a}}\) for the average of a across individuals in a single, observed population, and \({\hat{a}}\) for the expected average of a across the ensemble of populations modeled by the CEP (following Rice 2004), \(\varOmega _n=(w_n/{\bar{w}}|{\bar{w}}\ne 0)\) is relative fitness (see Rice 2004); \({{\,\mathrm{Cov}\,}}(.|.)\) is the covariance; and the subscripts \({{\,\mathrm{do}\,}}(\phi =\phi _0)\) indicate that a given quantity is evaluated under the distribution after intervening on \(\phi\) (setting \(\phi\) for all individuals at a given time-step).

Proof. For proofs of (a) and (b) see Okasha (2006) and Rice (2004), which can be applied directly since no interventions are specified. For (c), we note that, having applied the operation \({{\,\mathrm{do}\,}}(\phi =\phi _0)\), we produce a derived CEP whose underlying distribution is \(P_{{{\,\mathrm{do}\,}}(\phi =\phi _0)}\). Eq. 14 then follows directly by applying (b) to this derived CEP. \(\square\)

We note that the distinctions between the original, probabilistic and causal versions of Price’s equation in Theorem 6.1 allow us to make fine distinctions corresponding to direct and indirect selection on traits. For instance, although \(\phi +\varDelta {\bar{\phi }}\) and \(\phi +\varDelta {\hat{\phi }}\) will vary with \(\phi\) for any trait which covaries with fitness or expected fitness, for \(\phi _0+\varDelta _{{{\,\mathrm{do}\,}}(\phi =\phi _0)} {\hat{\phi }}\) this will only be the case for traits which have a causal impact on fitness (provided the intervention \(\phi _0\) does not fix all individuals to a single phenotype).

Finally, we note an information-theoretic analogue of the Price equation based on the KL-divergence, which we believe has not been previously observed:

Theorem 6.2

(Analogue of Price’s Equation based on KL-divergences): In a CEP with restrictions and notation as in Theorem 6.1, we have:

where \(P_\phi\) and \(P^{\prime }_\phi\) are the observed (sample-level) distributions across trait \(\phi\) at arbitrary time-points t and \(t+1\) resp., \(KL(A||B)=\sum _i A_i \log (A_i/B_i)\) is the KL-divergence between (possibly unnormalized) distributions A and B, H(.) is the Shannon entropy, and \(\varOmega ^\dagger\) is a vector of relative fitness values for each value of the phenotype.

Proof. From the replicator equation, we have:

where the subscript i ranges across values of the phenotype. The result follows by substituting Eq. 16 into the LHS of Eq. 15 and rearranging:

\(\square\)

Using a similar argument to Theorem 6.1 part (c), we can also state a causal analogue to Eq. 15:

Corollary 6.3

(Causal analogue of Theorem 6.2): Using the notation of 6.2, and writing \({{\,\mathrm{do}\,}}(\phi _0)\) for \({{\,\mathrm{do}\,}}(\phi =\phi _0)\):

Eqs. 15 and 18 are similar in form to the Price equation, since they determine the distance a trait will move (measured using displacement of its mean or KL divergence over the population-level distribution, for the Price equation and KL-analogue respectively) based on the similarity between the distributions of the trait and relative fitness (measured using the covariance or KL divergence respectively). For complex traits and evolutionary dynamics, the KL-analogues may be more informative, since they model the change in the whole trait distribution, as opposed to only its mean. For instance, at an evolutionary fixed point, we require not only that \(\varDelta {\bar{\phi }}=0\) but also \({\widehat{KL}}(P_\phi || P^{\prime }_\phi )=0\) (assuming a large population). We also note the following properties of Eq. 15: Unlike the Price equation, Eq. 15 includes a dependency on the trait’s entropy; further, the KL-‘distance’ moved by a trait’s distribution increases as the (unnormalized) KL distance between trait and fitness distributions increases, or the entropy increases; the KL divergence thus need not go to 0 to reach a fixed point, but may be balanced by the entropy term, which may occur since the unnormalized KL divergence is not strictly positive, although it is bounded below by \(-H(P_\phi )\) since the LHS of Eq. 15 is non-negative (for instance, the uniform distribution on a trait whose values all have equal fitness is a fixed point for which \({\widehat{KL}}(P_\phi ||\varOmega ^\dagger )=-H(P_\phi )\)). Finally, we briefly compare the relationship in Theorem 6.2 with the associations between Price’s equation and information theory that have been drawn in Frank (2009): There, the mean change in a trait associated with Price’s equation is re-expressed in terms of the Fisher Information between the trait and the environment (or an equivalent form involving the Shannon information), and it is shown that Fisher’s Fundamental Theorem (FFT) arises by maximizing the information captured by the population; in contrast, Theorem 6.2 does not rederive Price’s equation or FFT, but rather relates two KL divergences involving analogous quantities to those in the former, leading to a distinct view of trait evolution at the distribution level as discussed.

Discussion

The framework of Causal Evolutionary Processes introduced in this paper provides a principled way to formulate evolutionary models, allowing both for cyclical interactions between evolutionary variables, and the analysis of evolutionary processes at multiple levels. We have developed a technical apparatus appropriate for this analysis in the form of Discrete Causal Networks and the Causal Information Decomposition, and have shown how a diverse range of evolutionary phenomena can be captured in our framework, including complex traits produced by feedback processes acting between epigenetic, behavioral and environmental levels, and multilevel selection models, including the selection of mutational processes in cancer. We have explored the properties of these models and our general framework, showing that under certain circumstances the causal impact of a given variable on another (for instance, a variant’s impact on a trait) can be bounded by observed information-theoretic quantities, and that a number of generalizations of Price’s equation hold in our framework.

Our framework may be extended in various ways. For convenience, we have restricted our attention to discrete models in the above analysis (having both discrete time and discrete evolutionary variables). Our current framework may be formulated in the more general context of Cyclical Structural Causal Models (Bongers et al. 2020) (see Appendix C), allowing for a measure-theoretic analysis including continuous variables and time. Further, we have restricted attention to the case of evolutionary processes with asexual reproduction; generalization to processes involving sexual reproduction is straightforwardly handled by altering the structure of the parental map \(\pi\) so that individuals are mapped to subsets of individuals in the previous generation as opposed to single individuals, while processes such as recombination and assortative mating can be modeled by using particular forms of heritability and structure kernels.

Further, we have not considered the problem of learning the causal structure and kernel forms from data. Methods relying on Mendelian randomization (e.g. Lawlor et al. 2008) can estimate the causal effects of variants on a trait assuming a linear relationship, but in general we may be interested in the causal effects of variables above the genetic level (e.g. epigenetics) and environmental factors on a trait, as well as non-linear models. In general, this is a hard problem, but general methods have been proposed, for instance the multi-level approach of Chalupka et al. (2016), or the information-theoretic approach of Janzing et al. (2013), which may be imported into our framework. Further, we intend the unique information and backward-CID bounds in Theorem 2.10 and 4.3 to be relevant for approximating the causal impacts of variables when certain assumptions are made, and in general these may be seen in the context of a host of bounds which relate various kinds of causal effect to observables (without interventions) under a range of assumptions (see Geiger et al. 2014; Janzing and Schölkopf 2010).

Finally, we intend our framework to be useful in clarifying foundational conceptual issues regarding causation and evolution. For instance, identifying a realized evolutionary process in nature requires providing criteria for identifying ‘individuals’ on which the process acts, and separating these from an ‘environment’; attempts have been made to cast such criteria in information-theoretic terms (see for instance Krakauer et al. 2020), and our framework provides a natural language for expressing such ‘connecting principles’. We may for instance declare that, to a first approximation, a single level evolutionary process requires environmental variables which are independent of an individual’s identity given its generation, acting as a ‘thermal-bath’ to the system; features such as spatial population structure, behavior-environmental feedback and niche construction (leading to more complex forms of heritability kernel) would then be taken as second-order principles which, if strong enough, may disrupt the ‘individuality’ of the entities in the original system (and hence the system’s ‘existence’ qua system). Such considerations may also help sharpen questions regarding multilevel selection, whose role has been called into question in explanations of evolutionary processes (see Okasha 2006, 2015 for a summary of the issues). Potentially, a model such as the multilevel CEP we outline, along with principles concerning which types of kernels are more or less ‘preferred’ at each level (for instance, in terms of description length, where lower-level kernels may inherit structure from kernels at higher-levels), could allow us to perform model selection among MCEPs with different numbers of levels. The problem of identifying multilevel selection can thus be cast in the more general framework of identifying causality at multiple levels, where we may have multple levels of variables which supervene on one another (for instance, see Chalupka et al. 2016; Hoel 2017; Okasha 2015); the existence of selection at multiple levels is the particular case of this problem when the causal relationships are constrained to have a special structure (such as an MCEP). In summary, we believe that consideration of explicit causal models of the kind we have outlined will be useful when approaching both computational and conceptual issues in models of evolution.

References

Alexandrov LB, Nik-Zainal S, Wedge DC, Aparicio SAJR, Behjati S, Biankin AV, Bignell GR, Bolli N, Borg A, Børresen-Dale A-L, Boyault S, Burkhardt B, Butler AP, Caldas C, Davies HR, Desmedt C, Eils R, Eyfjörd JE, Foekens JA, Greaves M, Hosoda F, Hutter B, Ilicic T, Imbeaud S, Imielinski M, Jäger N, Jones DTW, Jones D, Knappskog S, Kool M, Lakhani SR, López-Otín C, Martin S, Munshi NC, Nakamura H, Northcott PA, Pajic M, Papaemmanuil E, Paradiso A, Pearson JV, Puente XS, Raine K, Ramakrishna M, Richardson AL, Richter J, Rosenstiel P, Schlesner M, Schumacher TN, Span PN, Teague JW, Totoki Y, Tutt ANJ, Valdés-Mas R, van Buuren MM, van ‘t Veer L, Vincent-Salomon A, Waddell N, Yates LR, Australian Pancreatic Cancer Genome Initiative, ICGC Breast Cancer Consortium, ICGC MMML-Seq Consortium, ICGC PedBrain, Zucman-Rossi J, Futreal PA, McDermott U, Lichter P, Meyerson M, Grimmond SM, Siebert R, Campo E, Shibata T, Pfister SM, Campbell PJ, Stratton MR (2013) Signatures of mutational processes in human cancer. Nature 500(7463):415

Ay N, Polani D (2008) Information flows in causal networks. Adv Complex Syst 11(01):17–41

Bertschinger N, Rauh J, Olbrich E, Jost J, Ay N (2014) Quantifying unique information. Entropy 16(4):2161–2183

Benton CS, Miller BH, Skwerer S, Suzuki O, Schultz LE, Cameron MD, Marron JS, Pletcher MT, Wiltshire T (2012) Evaluating genetic markers and neurobiochemical analytes for fluoxetine response using a panel of mouse inbred strains. Psychopharmacology 221(2):297–315

Bongers S, Forré P, Peters J, Schölkopf B, Mooij JM (2020) Foundations of structural causal models with cycles and latent variables. arXiv preprint arXiv:1611.06221

Calcott B, Sterelny K (2011) The major transitions in evolution revisited. The MIT Press, Cambridge

Chalupka K, Eberhardt F, Perona P (2016) Multi-level cause-effect systems. In: Artificial intelligence and statistics, pp. 361–369

Dentro SC, Leshchiner I, Haase K, Tarabichi M, Wintersinger J, Deshwar AG, Yu K, Rubanova Y, Macintyre G, Demeulemeester J, Vázquez-García I, Kleinheinz K, Livitz DG, Malikic S, Donmez N, Sengupta S, Anur P, Jolly C, Cmero M, Rosebrock D, Schumacher S, Fan Y, Fittall M, Drews RM, Yao X, Lee J, Schlesner M, Zhu H, Adams DJ, Getz G, Boutros PC, Imielinski M, Beroukhim R, Sahinalp SC, Ji Y, Peifer M, Martincorena I, Markowetz F, Mustonen V, Yuan K, Gerstung M, Spellman PT, Wang W, Morris QD, Wedge DC, Van Loo P, on behalf of the PCAWG Evolution and Heterogeneity Working Group, the PCAWG consortium (2020) Characterizing genetic intra-tumor heterogeneity across 2658 human cancer genomes. bioRxiv, 312041

Felsenstein J (2016) Theoretical evolutionary genetics. Online book at: evolution.genetics.washington.edu/pgbook/pgbook.html

Frank SA (2009) Natural selection maximizes Fisher information. J Evol Biol 22(2):231–244

Fromer M, Roussos P, Sieberts SK, Johnson JS, Kavanagh DH, Perumal TM, Ruderfer DM, Oh EC, Topol A, Shah HR, Klei LL, Kramer R, Pinto D, Gümüş ZH, A Cicek E, Dang KK, Browne A, Lu C, Xie L, Readhead B, Stahl EA, Xiao J, Parvizi M, Hamamsy T, Fullard JF, Wang Y-C, Mahajan MC, Derry JMJ, Dudley JT, Hemby SE, Logsdon BA, Talbot K, Raj T, Bennett DA, De Jager PL, Zhu J, Zhang B, Sullivan PF, Chess A, Purcell SM, Shinobu LA, Mangravite LM, Toyoshiba H, Gur RE, Hahn C-G, Lewis DA, Haroutunian V, Peters MA, Lipska BK, Buxbaum JD, Schadt EE, Hirai K, Roeder K, Brennand KJ, Katsanis N, Domenici E, Devlin B, Sklar P (2016) Gene expression elucidates functional impact of polygenic risk for schizophrenia. Nat Neurosci 19(11):1442

Geiger P, Janzing D, Schölkopf B (2014) Estimating causal effects by bounding confounding. In: Proceedings of the annual conference on uncertainty in artificial intelligence (UAI)

Griffith V, Koch C (2014) Quantifying synergistic mutual information. In: Guided self-organization: inception. Springer, Berlin, pp 159–190

Griffiths PE, Pocheville A, Calcott B, Stotz K, Kim H, Knight R (2015) Measuring causal specificity. Philos Sci 82(4):529–555

Hoel EP (2017) When the map is better than the territory. Entropy 19(5):188

Houle D, Govindaraju DR, Omholt S (2010) Phenomics: the next challenge. Nat Rev Genet 11(12):855

Itani S, Ohannessian M, Sachs K, Nolan GP, Dahleh MA (2010) Structure learning in causal cyclic networks. In: JMLR workshop and conference proceedings, vol. 6, p 165176

Janzing D, Schölkopf B (2010) Causal inference using the algorithmic Markov condition. IEEE Trans Inf Theory 56(10):5168–5194

Janzing D, Balduzzi D, Grosse-Wentrup M, Schölkopf B (2013) Quantifying causal influences. Ann Stat 41(5):2324–2358

Koller D, Friedman N (2009) Probabilistic Graphical Models: Principles and Techniques. MIT Press, Cambridge

Krakauer DC, Page KM, Erwin DH (2008) Diversity, dilemmas, and monopolies of niche construction. Am. Nat. 173(1):26–40

Krakauer D, Bertschinger N, Olbrich E, Flack JC, Ay N (2020) The information theory of individuality. Theory in Biosciences, pp.1-15

Kumar S, Warrell J, Li S, McGillivray PD, Meyerson W, Salichos L, Harmanci A, Martinez-Fundichely A, Chan CWY, Nielsen MM, Lochovsky L, Zhang Y, Li X, Lou S, Pedersen JS, Herrmann C, Getz G, Khurana E, Gerstein MB (2020) Passenger mutations in more than 2500 cancer genomes: overall molecular functional impact and consequences. Cell 180(5):915–927

Lawlor DA, Harbord RM, Sterne JA, Timpson N, Davey Smith G (2008) Mendelian randomization: using genes as instruments for making causal inferences in epidemiology. Stat Med 27(8):1133–1163

Mooij JM, Janzing D, Schölkopf B (2013) From ordinary differential equations to structural causal models: the deterministic case. In: Proceedings of the twenty-ninth conference annual conference on uncertainty in artificial intelligence (UAI), pp 440–448

Nowak MA, Tarnita CE, Wilson EO (2010) The evolution of eusociality. Nature 466(7310):1057

Okasha S (2006) Evolution and the levels of selection. Oxford University Press, Oxford

Okasha S (2015) The relation between kin and multilevel selection: an approach using causal graphs. Br J Philos Sci 67(2):435–470

Paulsson J (2002) Multileveled selection on plasmid replication. Genetics 161(4):1373–1384

Pearl J (2009) Causality. Cambridge University Press, Cambridge

Rauh J, Bertschinger N, Olbrich E, Jost J (2014) Reconsidering unique information: towards a multivariate information decomposition. In: IEEE international symposium on information theory (ISIT), pp 2232–2236

Rice SH (2004) Evolutionary theory: mathematical and conceptual foundations. Sinauer Associates, Sunderland

Rubenstein PK, Weichwald S, Bongers S, Mooij JM, Janzing D, Grosse-Wentrup M, Schölkopf B (2017) Causal consistency of structural equation models. In: Proceedings of the annual conference on uncertainty in artificial intelligence (UAI)

Salichos L, Meyerson W, Warrell J, Gerstein M (2020) Estimating growth patterns and driver effects in tumor evolution from individual samples. Nat Commun 11(1):1–14

Temko D, Tomlinson IP, Severini S, Schuster-Böckler B, Graham TA (2018) The effects of mutational processes and selection on driver mutations across cancer types. Nat Commun 9(1):1857

Tononi G, Sporns O (2003) Measuring information integration. BMC Neurosci 4(1):31

Traulsen A, Nowak MA (2006) Evolution of cooperation by multilevel selection. Proc Natl Acad Sci 103(29):10952–10955

Wagner A (2015) Causal drift, robust signaling, and complex disease. PLoS ONE 10(3):e0118413

Wang D, Liu S, Warrell J, Won H, Shi X, Navarro FCP, Clarke D, Gu M, Emani P, Yang YT, Xu M, Gandal MJ, Lou S, Zhang J, Park JJ, Yan C, Rhie SK, Manakongtreecheep K, Zhou H, Nathan A, Peters M, Mattei E, Fitzgerald D, Brunetti T, Moore J, Jiang Y, Girdhar K, Hoffman GE, Kalayci S, Gumus ZH, Crawford GE, PsychENCODE Consortium, Roussos P, Akbarian S, Jaffe AE, White KP, Weng Z, Sestan N, Geschwind DH, Knowles JA, Gerstein MB (2018) Comprehensive functional genomic resource and integrative model for the human brain. Science 362(6420):eaat8464

Williams PL, Beer RD (2010) Nonnegative decomposition of multivariate information. CoRR, abs/1004.2515

Zhu Z, Zhang F, Hu H, Bakshi A, Robinson MR, Powell JE, Montgomery GW, Goddard ME, Wray NR, Visscher PM, Yang J (2016) Integration of summary data from GWAS and eQTL studies predicts complex trait gene targets. Nat Genet 48(5):481

Acknowledgements

The authors would like to acknowledge funding from NSF award #1660468. M.G. acknowledges support from the A.L. Williams Professorship fund.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Discrete cyclic causal systems and causal information

We present here a technical summary of the basic concepts we need for our framework. In the process, we introduce the Causal Information Decomposition (CID), and summarize a number of its properties. First, we define a model of discrete cyclic causal systems, a Discrete Causal Network (DCN), which we will use as our basic model throughout the paper (see Fig. 1A for a summary of the relationships between the main models of the paper). We focus on discrete models to avoid the need to use differential entropy when defining information theoretic quantities, and leave generalization of our framework to the continuous case for future work.

Definition 2.1

(Discrete Causal Network (DCN)): Let \({\mathcal {X}}=\{X_i\}\) be a set of discrete random variables indexed by \(i\in {\mathbb {I}} =\{1\ldots I\}\), each taking values in the set \({\mathcal {V}}={1\ldots V}\), and \(Pa:{\mathbb {I}}\rightarrow {\mathcal {P}}({\mathbb {I}})\) be a function which returns a set of parents for each index (where \({\mathcal {P}}(.)\) denotes the powerset, and the underlying graph of Pa may contain cycles). Then, a DCN over \({\mathcal {X}}\) consists of a collection of probability kernels \(K_i(X_i=x_i|X_{Pa(i)}=x_{Pa(i)})\) specifying the conditional distribution of each variable on its parents, and a partial ordering \(({\mathcal {I}},\le )\) where \({\mathcal {I}}\) is a subset of all perfect interventions on \({\mathcal {X}}\) with the inherited ordering on interventions (for \(\iota _1, \iota _2 \in {\mathcal {I}}\), \(\iota _1 \le \iota _2\) iff \(\iota _2\) fixes all variables fixed by \(\iota _1\) to matching values, and possibly fixes additional variables). Further, a solution to a DCN is a set of joint distributions \(P_{{{\,\mathrm{do}\,}}(\iota \in {\mathcal {I}})}({\mathcal {X}})\) such that the conditional distributions of all non-intervened variables \(X_i\) on \(X_{Pa(i)}\) match \(K_i\), and the marginals of all other variables are delta distributions at their respective intervened values.

We note that our Definition 2.1 can be viewed as a Causal generalized Bayesian network as introduced in Itani et al. (2010) or a special case of a Structural Causal Model (SCM) as in Bongers et al. (2020), with an additional restriction in each case to a subset of interventions \(({\mathcal {I}},\le )\) (for an equivalent SCM formulation, see Appendix C; also note that for convenience we assume all variables in Definition 2.1 have a common discrete codomain, \({\mathcal {V}}\), which can be assumed without loss of generality, since V may be taken large enought to embed all codomains if they are differently sized). By adding the restriction on the interventions considered, we are able to define a notion of transformation between DCMs, following the notion of transformations between Structural Equation Models in Rubenstein et al. (2017):

Definition 2.2

(Transformations between DCNs): Suppose we have two DCNs over variables \({\mathcal {X}}=\{X_{i\in \{1\ldots I\}={\mathbb {I}}}\}\), \({\mathcal {Y}}=\{Y_{j\in \{1\ldots J\}={\mathbb {J}}}\}\) taking values in \(\{1\ldots V_1\}^I\) and \(\{1\ldots V_2\}^J\) respectively, with kernels \(\{K_i^1\}\) and \(\{K_j^2\}\) (resp.) and intervention posets over \({\mathcal {I}}\) and \({\mathcal {J}}\) (resp.). Let \(\tau\) be a map from \(V_1^I\) to \(V_2^{J^{\prime }}\), where \(J^{\prime }=|A|\) for \(A\subseteq {\mathbb {J}}\), and \(\omega\) be an order preserving surjective map from \({\mathcal {I}}\) to \({\mathcal {J}}\). Then \((\tau ,\omega )\) is a transformation of DCMs iff there exists a pair of solutions for which:

where \(\tau ^{-1}(y)\) is the pre-image of y under \(\tau\).