Abstract

We investigate second-order filtering and fixed-lag smoothing of non-Gaussian input noise for non-Gaussian stochastic discrete-time systems with packet dropouts. We present a novel Kalman-like nonlinear estimation approach for non-Gaussian noise based on the packet dropout probability distribution and the polynomial filtering technique. Using properties of the Kronecker product, we first introduce a second-order polynomial extended system and then analyze the means and variances of the Kronecker powers of the extended system noises. We then use the innovation approach to derive noise estimators in the form of filters and smoothers. A numerical example illustrates that the proposed algorithm is more robust against packet dropouts than conventional linear minimum variance estimation.

1 Introduction

During the last three decades, input noise estimation has become an active field in industry, with a wide range of applications in fault detection, petroleum prospecting, image restoration, speech processing, and so forth [1–6]. A Kalman deconvolution filter for estimating the reflection coefficient sequence in oil exploration was first proposed in [3]. Later on, an optimal frequency-domain deconvolution estimator was derived using the polynomial method [7]. Based on the innovation analysis approach, a unified white noise estimator design approach for the autoregressive moving average innovation model was given in [8]. Recently, deconvolution studies have focused mainly on multisensor systems [9, 10] and systems with packet dropouts [11–13]. Because of the limited communication bandwidth, service capacity, and carrying capacity of networked control systems, packet dropouts inevitably occur during data transmission, leading to performance degradation or even instability of the control systems [14]. If packet dropouts are not detected and handled in a timely manner, they may result in serious losses of life and property. Moreover, the results mentioned above rely on the assumption of Gaussian input noise, whereas non-Gaussian input noise is widespread in numerous important applications [15–19]. Motivated by these observations, we design a novel nonlinear input noise observer for linear stochastic discrete-time systems with packet dropouts.

The estimation theory of non-Gaussian systems has become increasingly influential in many technical fields, and numerous meaningful attempts have therefore been devoted to it [15, 20–23]. In [20], a joint estimator of the non-Gaussian input noise and the system state was presented for discrete-time nonlinear non-Gaussian systems, where the state posterior distribution was computed iteratively by Gaussian sum filtering and the posterior distribution of the noise parameters was calculated by the variational Bayesian method. By selecting a suitable system noise, a more efficient particle estimator was constructed for nonlinear non-Gaussian systems in [21]. Based on a performance index that minimizes a mixed \(l_{1}\) and \(l_{2}\) norm, an adaptive filter was presented for a class of nonlinear non-Gaussian systems in [22], where the tuning factor γ was determined by the projection statistics algorithm. In [23], a Tobit Kalman-like estimator was proposed by converting a system with time-correlated and non-Gaussian noises into a Gaussian system with unknown noise covariances. In [24], a nonlinear estimator (called polynomial filtering) that exploits the Kronecker powers of the system states and measurements was first given for linear non-Gaussian systems, and it was proved to be more accurate than the classical Kalman filter. Later on, the result in [24] was successfully applied to time-varying systems [25], stochastic bilinear systems [26], general nonlinear stochastic systems [27], and multisensor systems with uncertain observations [28]. In this paper, we use polynomial filtering theory to investigate the second-order polynomial estimator design problem for a class of non-Gaussian systems with packet dropouts.

So far, research on estimator design for non-Gaussian systems with packet dropouts has focused mainly on state estimation, whereas input noise estimation has rarely been reported. In this study, we propose a design method for a nonlinear input noise estimator for this kind of system. We formulate a new augmented system from the given system with stochastic packet dropouts by using the original states and observations together with their second-order Kronecker products. Moreover, we derive the stochastic characteristics of the augmented system by employing Kronecker algebra rules. Then we adopt the classical Kalman projection theory to obtain a recursive second-order polynomial non-Gaussian noise estimator. The main contributions of this paper are as follows: (i) to the best of our knowledge, nonlinear (second-order) estimation of input noise for linear stochastic systems with packet dropouts is investigated for the first time; (ii) a recursive analytical solution for the nonlinear (second-order) input noise estimate is presented, which not only enables real-time estimation of the noise signal but also offers more theoretical insight than a purely numerical solution.

The rest of the paper is organized as follows. In Sect. 2, we introduce the linear, non-Gaussian, discrete-time state-space model with packet dropouts and define the second-order minimum variance input noise estimation problem. In Sect. 3, we obtain the second-order least-squares filter and fixed-lag smoother of the input noise from the parameters and stochastic characteristics of a fictitious second-order state-space model. In Sect. 4, we derive a linear recursive input noise estimator using the classical innovation orthogonal projection theory and compare the performance of the linear and second-order noise estimators on an example. Finally, Sect. 5 summarizes the results.

2 Problem statement and preliminaries

Consider the following state-space model with packet dropouts:

where \(i\in \mathbb{N}\) is the discrete-time index, \(x(i)\in \mathbb{R}^{n}\) is the unknown system state, \(y(i)\in \mathbb{R}^{m}\) is the system measurement, \(z(i)\in \mathbb{R}^{q}\) is the noise combination to be estimated, and \(\mathcal{A}(i)\in \mathbb{R}^{n\times n}\), \(\mathcal{H}(i)\in \mathbb{R}^{m\times n}\), \(\mathcal{B}(i)\in \mathbb{R}^{n\times r}\), \(\mathcal{D}(i)\in \mathbb{R}^{m\times r}\), and \(\mathcal{L}(i)\in \mathbb{R}^{q\times r}\) are known real time-varying matrices.
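To make the roles of the matrices concrete, the sketch below simulates a time-invariant instance of the packet dropout model. Because the display of (1) is not reproduced here, the model form is our assumption, inferred from the matrix dimensions above and from the multiplicative dropout description in the conclusions: \(x(i+1)=\mathcal{A}x(i)+\mathcal{B}w(i)\), \(y(i)=\lambda (i)\mathcal{H}x(i)+\mathcal{D}w(i)\), \(z(i)=\mathcal{L}w(i)\). The function name `simulate_dropout_system` and the centred exponential noise are hypothetical choices for illustration only.

```python
import numpy as np

def simulate_dropout_system(A, B, H, D, L, p, x0, w_sampler, N, rng):
    """Simulate N steps of the ASSUMED model
        x(i+1) = A x(i) + B w(i)
        y(i)   = lam(i) H x(i) + D w(i)
        z(i)   = L w(i)
    with lam(i) ~ Bernoulli(p). Matrices are time-invariant here for brevity."""
    x = np.asarray(x0, dtype=float)
    xs, ys, zs, lams = [], [], [], []
    for _ in range(N):
        w = w_sampler(rng)                 # one draw of the non-Gaussian input noise w(i)
        lam = float(rng.random() < p)      # 1 = packet received, 0 = packet dropped
        xs.append(x.copy())
        ys.append(lam * (H @ x) + D @ w)   # a dropped packet carries no state information
        zs.append(L @ w)                   # noise combination to be estimated
        lams.append(lam)
        x = A @ x + B @ w
    return np.array(xs), np.array(ys), np.array(zs), np.array(lams)

# Hypothetical scalar example with skewed, zero-mean noise w(i) = Exp(1) - 1
rng = np.random.default_rng(0)
A, B, H = np.array([[0.8]]), np.array([[1.0]]), np.array([[1.0]])
D, L = np.array([[0.5]]), np.array([[1.0]])
w_sampler = lambda g: g.exponential(1.0, size=1) - 1.0
xs, ys, zs, lams = simulate_dropout_system(A, B, H, D, L, 0.9, np.zeros(1), w_sampler, 500, rng)
```

Whenever \(\lambda(i)=0\), the simulated measurement reduces to \(\mathcal{D}w(i)\), which is what makes the input noise still partially observable during dropouts.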

Assumption 1

The initial state \(x_{0}\in \mathbb{R}^{n}\) is a non-Gaussian random vector, independent of \(\{w(i)\}\), that satisfies

Assumption 2

The noise \(w(i)\in \mathbb{R}^{r}\) is a zero-mean, real, vector-valued non-Gaussian process with finite and known moments up to the fourth order, satisfying

According to Assumption 2 and the stack operation, we have

Assumption 3

The binary-valued (0 or 1) stochastic sequence \(\{\lambda (i)\}\), \(\lambda (i)\in \mathbb{R}\), obeys the following probability distribution:

where the probability \(p(i)\) satisfies \(0\leq p(i)\leq 1\).
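Assumptions 2 and 3 supply the moments from which the statistics of the extended system are built. The sketch below checks numerically, on hypothetical noise (independent centred uniform and Laplace components) and \(p=0.9\), that the mean of \(w\otimes w\) is the stacked second-moment matrix, that the covariance of \(w\otimes w\) involves fourth-order moments (which is why finite fourth-order moments are required), and that a binary \(\lambda(i)\) satisfies \(\lambda^{2}=\lambda\), \(E\{\lambda\}=p\), and \(E\{(\lambda-p)^{2}\}=p(1-p)\). Taking the stack operation `st` to be column stacking is our assumption about the notation.

```python
import numpy as np

def st(M):
    """Stack operation, taken here to be column stacking (vec)."""
    return M.reshape(-1, order="F")

rng = np.random.default_rng(1)
N = 20_000
# Hypothetical zero-mean non-Gaussian noise w(i) in R^2
W = np.column_stack([rng.uniform(-1.0, 1.0, N), rng.laplace(0.0, 1.0, N)])
W2 = np.array([np.kron(w, w) for w in W])            # rows are w(i) ⊗ w(i)

Sigma_hat = W.T @ W / N                              # sample E{w w^T}
mean_W2 = W2.mean(axis=0)                            # sample E{w ⊗ w}; equals st(Sigma_hat)
cov_W2 = W2.T @ W2 / N - np.outer(mean_W2, mean_W2)  # uses fourth-order moments of w

# Packet arrival indicator lambda(i) with P{lambda(i) = 1} = p
p = 0.9
lam = (rng.random(100_000) < p).astype(float)
lam_mean = lam.mean()                                # estimates E{lambda} = p
lam_var = np.mean((lam - p) ** 2)                    # estimates p(1 - p)
```

For the uniform component on \([-1,1]\), the first diagonal entry of `cov_W2` approaches \(E\{w_{1}^{4}\}-(E\{w_{1}^{2}\})^{2}=1/5-1/9=4/45\).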

The second-order non-Gaussian noise estimation problem under investigation is as follows: given \(s\in \mathbb{N}\) and the measurement sequence \(\{y(j)\}_{j=0}^{i+s}\), find a second-order polynomial estimator \(\hat{z}(i|i+s)\) of \(z(i)\) that minimizes the mean-squared estimation error.
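Why a second-order polynomial estimator can beat a linear one is easiest to see in a static scalar toy problem, which is our own illustration and not the recursive estimator developed in this paper: estimate a skewed, zero-mean noise \(w\) from \(y=w+v\) by least-squares projection onto \(\{1,y\}\) (affine estimator) and onto \(\{1,y,y^{2}\}\) (second-order polynomial estimator). Because the second span contains the first, its in-sample mean-squared error can never be larger; for jointly Gaussian \(w\) and \(v\) the \(y^{2}\) coefficient would tend to zero, since the conditional mean is then linear. The noise distributions and the helper `ls_estimate` are hypothetical.

```python
import numpy as np

def ls_estimate(regressors, target):
    """Least-squares projection of target onto the span of the regressor columns."""
    coef, *_ = np.linalg.lstsq(regressors, target, rcond=None)
    return regressors @ coef

rng = np.random.default_rng(3)
N = 50_000
w = rng.exponential(1.0, N) - 1.0        # skewed, zero-mean noise to be estimated (hypothetical)
v = rng.uniform(-1.0, 1.0, N)            # measurement noise (hypothetical)
y = w + v

ones = np.ones(N)
w_lin = ls_estimate(np.column_stack([ones, y]), w)          # sample least-squares affine estimator
w_quad = ls_estimate(np.column_stack([ones, y, y**2]), w)   # second-order polynomial estimator
mse_lin = np.mean((w - w_lin) ** 2)
mse_quad = np.mean((w - w_quad) ** 2)
```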

Remark 1

As in the Kalman filter case, the second-order polynomial estimator \(\hat{z}(i|i+s)\) is a second-order filter when \(s=0\) and a second-order fixed-lag smoother when \(s>0\).

3 Main results

In this section, we first construct a second-order extended stochastic system based on Kronecker algebra and the non-Gaussian noise assumptions. Then we design the second-order non-Gaussian noise filter and smoother using the stochastic properties of the extended system and the projection formula.
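The Kronecker algebra behind the extended system rests mainly on the mixed-product rule \((A\otimes B)(C\otimes D)=(AC)\otimes (BD)\). Writing \(x^{[2]}=x\otimes x\) and assuming the state recursion \(x(i+1)=\mathcal{A}x(i)+\mathcal{B}w(i)\), it gives \(x^{[2]}(i+1)=(\mathcal{A}\otimes \mathcal{A})x^{[2]}(i)+(\mathcal{A}\otimes \mathcal{B})(x(i)\otimes w(i))+(\mathcal{B}\otimes \mathcal{A})(w(i)\otimes x(i))+(\mathcal{B}\otimes \mathcal{B})w^{[2]}(i)\). The sketch below verifies this identity on random matrices. In polynomial filtering, the cross terms and the centred \(w^{[2]}(i)\) are typically collected into the driving noise of the extended system; the exact grouping used in (9) is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(4)
n, r = 3, 2
A = rng.standard_normal((n, n))
B = rng.standard_normal((n, r))
x = rng.standard_normal(n)
w = rng.standard_normal(r)

x_next = A @ x + B @ w
lhs = np.kron(x_next, x_next)                    # x^[2](i+1)
rhs = (np.kron(A, A) @ np.kron(x, x)             # (A ⊗ A) x^[2](i)
       + np.kron(A, B) @ np.kron(x, w)           # cross term (A ⊗ B)(x ⊗ w)
       + np.kron(B, A) @ np.kron(w, x)           # cross term (B ⊗ A)(w ⊗ x)
       + np.kron(B, B) @ np.kron(w, w))          # (B ⊗ B) w^[2](i)
```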

3.1 The second-order extended system

First, the second-order state vector and measurement vector are defined as follows:

By (1), (3), and (4) we arrive at

where

To construct a fictitious second-order state-space model, we further calculate the following quadratic measurement output:

Substituting (1) and (7) into (5), and (1) and (8) into (6), yields the following second-order extended system:

where the system matrix parameters are

and

From the preceding results and Kronecker algebra, we obtain the following conclusion.

Lemma 1

The driving noises \(\{u_{e}(i)\}\) and measurement noises \(\{w_{e}(i)\}\) in (9), \(i\in \mathbb{N}\), satisfy the following conditions:

where the block matrices in (12)–(14) are

Proof

From the hypotheses of state-space model (1) it is known that the additive process \(w(i)\) is independent of \(x_{0}\) and satisfies

From (10) and (11) we can derive the following formulas:

Combining (10) and (12), \(\mathcal{Q}_{11}(i)\) can be given by

Substituting (10) into \(\mathcal{Q}(i)\) and utilizing \(E\{w(i)\cdot x_{0}^{T}\}=0\), we obtain

Moreover, we have

Following similar lines and using the properties of the non-Gaussian noise \(w(i)\), the initial state \(x_{0}\), and the Bernoulli variable \(\lambda (i)\), we obtain the covariance matrices \(\mathcal{R}_{11}(i)\), \(\mathcal{R}_{12}(i)\), \(\mathcal{R}_{22}(i)\), \(\mathcal{S}_{11}(i)\), \(\mathcal{S}_{12}(i)\), \(\mathcal{S}_{21}(i)\), and \(\mathcal{S}_{22}(i)\) directly as (15)–(21). This completes the proof. □
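The properties of \(\lambda(i)\) enter the second-order statistics through the quadratic measurement. Under the assumed measurement form \(y(i)=\lambda (i)\mathcal{H}x(i)+\mathcal{D}w(i)\) (our assumption, since (1) is not displayed) and with \(x^{[2]}=x\otimes x\), \(w^{[2]}=w\otimes w\), the idempotence \(\lambda^{2}=\lambda\) makes \(y^{[2]}(i)\) linear in \(\lambda(i)\): \(y^{[2]}=\lambda (\mathcal{H}\otimes \mathcal{H})x^{[2]}+\lambda (\mathcal{H}\otimes \mathcal{D})(x\otimes w)+\lambda (\mathcal{D}\otimes \mathcal{H})(w\otimes x)+(\mathcal{D}\otimes \mathcal{D})w^{[2]}\). The check below confirms this expansion for both values of \(\lambda\) on random data.

```python
import numpy as np

rng = np.random.default_rng(5)
n, m, r = 2, 2, 2
H = rng.standard_normal((m, n))
D = rng.standard_normal((m, r))
x = rng.standard_normal(n)
w = rng.standard_normal(r)

checks = []
for lam in (0.0, 1.0):                              # packet dropped / packet received
    y = lam * (H @ x) + D @ w                       # assumed measurement form
    y2 = np.kron(y, y)                              # quadratic measurement y^[2]
    # lam**2 == lam for binary lam, so every term is at most linear in lam
    expansion = (lam * np.kron(H, H) @ np.kron(x, x)
                 + lam * np.kron(H, D) @ np.kron(x, w)
                 + lam * np.kron(D, H) @ np.kron(w, x)
                 + np.kron(D, D) @ np.kron(w, w))
    checks.append(np.allclose(y2, expansion))
```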

3.2 The input noise second-order least-squares estimator design

First, we define the innovation \(v_{e}(i)\) and its variance matrix \(\mathcal{R}_{v_{e}}(i)\) by

Let \(\hat{x}_{e}(i|i-1)\) denote the least mean-square one-step predictor of \(x_{e}(i)\) based on the measurements up to time \(i-1\). The time update is then given by

where

and

In addition, the initial conditions of \(\hat{x}_{e}(i|i-1)\) and \(\mathcal{P}(i)\) are given by

which can be easily computed by using (2) in Assumption 1.
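Since the time update (24)–(26) is not reproduced here, the sketch below shows the standard innovation-form one-step predictor for a generic linear model whose driving and measurement noises are correlated, presumably the role of the cross-covariances \(\mathcal{S}(i)\) in Lemma 1. Treating (9) as an instance of this generic model, including a known deterministic input `c` (for instance a noise-mean term), is our assumption, and the function name `predictor_step` is hypothetical.

```python
import numpy as np

def predictor_step(F, H, Q, R, S, c, x_pred, P, y):
    """One step of an innovation-form Kalman one-step predictor for the generic model
        x(i+1) = F x(i) + c(i) + u(i),   y(i) = H x(i) + v(i),
    with Cov(u) = Q, Cov(v) = R, E{u v^T} = S and known deterministic input c(i)."""
    innov = y - H @ x_pred                                  # innovation
    R_innov = H @ P @ H.T + R                               # innovation covariance
    # Gain K = (F P H^T + S) R_innov^{-1}, computed with a linear solve instead of an inverse
    K = np.linalg.solve(R_innov.T, (F @ P @ H.T + S).T).T
    x_next = F @ x_pred + c + K @ innov                     # x_hat(i+1 | i)
    P_next = F @ P @ F.T + Q - K @ R_innov @ K.T            # prediction error covariance
    return x_next, P_next, innov, R_innov, K

# Scalar usage: F = 0.5, H = Q = R = P = 1, S = 0, c = 0, x_hat = 0, y = 2
one = np.eye(1)
x_next, P_next, innov, R_innov, K = predictor_step(
    0.5 * one, one, one, one, 0 * one, np.zeros(1), np.zeros(1), one, np.array([2.0]))
```

In the scalar usage, the innovation covariance is 2, the gain is 0.25, the predicted state is 0.5, and the new error variance is \(0.25+1-0.25\cdot 2\cdot 0.25=1.125\).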

Then we obtain a second-order non-Gaussian noise deconvolution filter.

Theorem 1

For the stochastic packet dropout system (1) under Assumptions 1–3, the second-order filter of the input noise is given by

where \(v_{e}(i)\) is defined by (23), and

and the innovation variance matrix \(\mathcal{R}_{v_{e}}(i)\) is computed by (26).

Proof

By the Kalman projection formula, the deconvolution filter of \(z(i)\) given the measurements from time 0 to time \(i\) can be calculated as

where the derivation of \(E (\mathcal{L}(i)w(i)w_{e,21}^{T}(i) )\) is similar to the calculation process of (22). The proof is complete. □

Before presenting the input noise smoother, we define the covariance

where \(e_{x}(i+j)=x_{e}(i+j)-\hat{x}_{e}(i+j|i+j-1)\).

Theorem 2

For the stochastic packet dropout system (1) under Assumptions 1–3 and given \(s\in \mathbb{N}^{+}\), the second-order fixed-lag smoother of the input noise is given by

where \(v_{e}(i+j)\) (\(0\leq {j}\leq {s}\)) is defined by (23), \(\mathcal{T}(i)\) is calculated by (27), \(\mathcal{R}_{v_{e}}(i+j)\) (\(1\leq {j}\leq {s}\)) is given in (26), and \(\mathcal{R}_{i+j}^{i}\) (\(1\leq {j}\leq {s}\)) is recursively calculated by the following matrix formula:

with

and \(\mathcal{K}(i+j)\) (\(0\leq {j}\leq {s-1}\)) is calculated by (25).

Proof

Since \(z(i)\) is uncorrelated with the linear space \(\mathcal{L}\{v_{e}(0),v_{e}(1),\ldots ,v_{e}(i-1)\}\) spanned by the past innovations, the calculation of the smoother \(\hat{z}(i|i+s)\) reduces to

Substituting (23) into \(E\{z(i)v_{e}^{T}(i+j)\}\) and using the definition in (28), we get the following expression:

Next, we derive an expression for \(e_{x}(i+j+1)\). It follows from (9), (23), and (24) that

Since \(w(i)\), \(u_{e}(i+j)\), and \(w_{e}(i+j)\) are mutually uncorrelated, substituting (33) into (28) yields (30).

Finally, the fixed-lag smoother (29) follows directly by substituting (32) into (31). This completes the proof. □
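The structure of Theorems 1 and 2, a filter term plus a sum of projections onto future innovations with the cross-covariance propagated through the closed-loop matrix, can be sketched for a generic model \(x(k+1)=F(k)x(k)+c(k)+u(k)\), \(y(k)=Hx(k)+v(k)\) driven by an innovation-form predictor with gain \(K(k)\). Here \(T_{i}=E\{z(i)v^{T}(i)\}\) and \(C_{i}=E\{z(i)u^{T}(i)\}\) are supplied by the user, and \(z(i)\) is assumed to be uncorrelated with the past and with the noises at later times. These assumptions, the constant \(H\), and the helper name are ours, so this illustrates the projection argument rather than transcribing (29)–(30).

```python
import numpy as np

def noise_fixed_lag_smoother(T_i, C_i, H, F_seq, K_seq, innov_seq, R_seq, s):
    """Hypothetical helper returning z_hat(i | i+s) for the generic model
        x(k+1) = F(k) x(k) + c(k) + u(k),   y(k) = H x(k) + v(k),
    given T_i = E{z(i) v(i)^T}, C_i = E{z(i) u(i)^T} and predictor quantities
    F_seq[j], K_seq[j], innov_seq[j], R_seq[j] at time k = i + j, j = 0..s."""
    z_hat = T_i @ np.linalg.solve(R_seq[0], innov_seq[0])   # filter term z_hat(i | i)
    Gamma = C_i - T_i @ K_seq[0].T                          # E{z(i) e(i+1)^T}
    for j in range(1, s + 1):
        # E{z(i) innov(i+j)^T} = Gamma H^T, since innov(i+j) = H e(i+j) + v(i+j)
        z_hat = z_hat + Gamma @ H.T @ np.linalg.solve(R_seq[j], innov_seq[j])
        # e(k+1) = (F(k) - K(k) H) e(k) + u(k) - K(k) v(k) propagates the cross-covariance
        Gamma = Gamma @ (F_seq[j] - K_seq[j] @ H).T
    return z_hat

# Scalar usage with made-up predictor quantities for times i and i+1
F_seq = [np.array([[0.8]])] * 2
K_seq = [np.array([[0.4]])] * 2
R_seq = [np.array([[2.0]])] * 2
innov_seq = [np.array([1.0]), np.array([2.0])]
args = (np.array([[0.5]]), np.array([[1.0]]), np.array([[1.0]]), F_seq, K_seq, innov_seq, R_seq)
z_filter = noise_fixed_lag_smoother(*args, s=0)     # 0.5 * 1 / 2 = 0.25
z_smooth = noise_fixed_lag_smoother(*args, s=1)     # 0.25 + (1 - 0.5 * 0.4) * 2 / 2 = 1.05
```

Setting `s=0` recovers the filter term alone, mirroring how the smoother of Theorem 2 extends the filter of Theorem 1.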

Remark 2

With the development of information technology and the Internet of Things, many modern industrial systems are networked control systems, in which random packet dropouts are inevitable. Existing estimator design theory for conventional linear systems does not address such problems. Although a second-order estimator for non-Gaussian noise was proposed in [25], it applies only to conventional linear stochastic non-Gaussian systems and is a special case of the present result: when the probability of the random variable \(\lambda (i)\) in system (1) is \(p(i)=1\), the system considered here reduces to that in [25]. Therefore the conclusions of this paper are more general than those in [25].

4 Linear input noise estimator and numerical example

In this section, we demonstrate the validity of the presented algorithm by a numerical simulation. Note that when the input noise is non-Gaussian, the Kalman-based estimator is still the best linear estimator in the mean-square sense [24], although in general it is no longer the optimal (conditional mean) estimator. To compare the linear input noise estimator with the second-order one, we first derive the linear deconvolution filter of \(z(i)\) by applying Kalman filtering theory as follows.

Define the innovation process

and \(\mathcal{R}_{m}(i)\triangleq E\{m(i)\cdot m^{T}(i)\}\), which can be computed by

where

and

Moreover, applying the projection formula and the innovation process, we have

where

By (1), (34), and (35) we obtain

Thus the linear input noise filter \(\hat{z}(i|i)\) of \(z(i)\) is computed as follows:

and the linear smoother \(\hat{z}(i|i+s)\) (\(s\in \mathbb{N}^{+}\)) of \(z(i)\) is calculated by

where

with

For the numerical example, the system matrices in (1) are chosen as follows:

where the system noise \(w(i)\) and the initial state \(x_{0}\) are uncorrelated, zero-mean, non-Gaussian random variables whose distributions are given in Table 1.

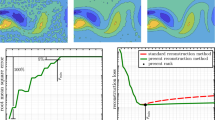

The Bernoulli random variable \(\lambda (i)\) takes the value 1 with probability \(p(i)=0.9\), and its time evolution is shown in Fig. 1. Under the same assumptions, the linear and second-order non-Gaussian noise estimators are compared in this simulation, and their performance is plotted in Figs. 2–5. In this example, the second-order estimators clearly outperform the linear estimators.
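For readers who want to experiment with a comparison of this kind, the following is a sketch of a linear minimum-variance deconvolution filter and fixed-lag smoother under our own assumptions: the measurement form \(y(i)=\lambda (i)\mathcal{H}x(i)+\mathcal{D}w(i)\), white input noise, time-invariant matrices, and a zero-mean initial state. The recursions are our derivation from the projection formula, with innovation \(m(i)=y(i)-p\mathcal{H}\hat{x}(i|i-1)\), and may differ in detail from the linear estimator equations of this section. The scalar system, the centred exponential noise, and \(s=2\) are hypothetical settings, not the example of Table 1, and the second-order estimator itself is not implemented here.

```python
import numpy as np

def linear_dropout_deconvolution(ys, A, B, H, D, L, Q, p, P0, s):
    """Linear filter z_hat(i|i) and fixed-lag smoother z_hat(i|i+s) of z(i) = L w(i)
    for the ASSUMED model x(i+1) = A x + B w, y = lam H x + D w, with E{w w^T} = Q,
    P{lam = 1} = p, and a zero-mean initial state with covariance P0."""
    N, n = len(ys), A.shape[0]
    x_pred, P, X = np.zeros(n), P0.copy(), P0.copy()   # X = E{x x^T} (equals P0 at i = 0)
    Ks, Rs, ms = [], [], []
    for y in ys:
        m = y - p * H @ x_pred                          # innovation m(i)
        # lam - p is zero-mean with variance p(1 - p); it adds p(1 - p) H X H^T
        Rm = p**2 * H @ P @ H.T + p * (1 - p) * H @ X @ H.T + D @ Q @ D.T
        K = np.linalg.solve(Rm.T, (p * A @ P @ H.T + B @ Q @ D.T).T).T
        x_pred = A @ x_pred + K @ m
        P = A @ P @ A.T + B @ Q @ B.T - K @ Rm @ K.T
        X = A @ X @ A.T + B @ Q @ B.T
        Ks.append(K); Rs.append(Rm); ms.append(m)
    z_filt = np.array([L @ Q @ D.T @ np.linalg.solve(Rs[i], ms[i]) for i in range(N)])
    z_smooth = z_filt.copy()                            # the last s samples keep the filter value
    for i in range(N - s):
        Gamma = L @ Q @ (B - Ks[i] @ D).T               # E{z(i) e(i+1)^T}
        for j in range(1, s + 1):
            z_smooth[i] += p * Gamma @ H.T @ np.linalg.solve(Rs[i + j], ms[i + j])
            Gamma = Gamma @ (A - p * Ks[i + j] @ H).T
    return z_filt, z_smooth, np.array(ms), np.array(Rs)

# Hypothetical scalar simulation with skewed input noise and p = 0.9
rng = np.random.default_rng(6)
A, B, H = np.array([[0.8]]), np.array([[1.0]]), np.array([[1.0]])
D, L, Q = np.array([[1.0]]), np.array([[1.0]]), np.array([[1.0]])
p, N = 0.9, 20_000
w = rng.exponential(1.0, (N, 1)) - 1.0          # zero-mean, unit-variance, skewed input noise
lam = (rng.random(N) < p).astype(float)
x, ys = np.zeros(1), np.empty((N, 1))
for i in range(N):
    ys[i] = lam[i] * (H @ x) + D @ w[i]
    x = A @ x + B @ w[i]
z_true = w @ L.T
z_f, z_s, ms, Rs = linear_dropout_deconvolution(ys, A, B, H, D, L, Q, p, np.zeros((1, 1)), s=2)
mse_filter = np.mean((z_true - z_f) ** 2)
mse_smoother = np.mean((z_true - z_s) ** 2)
```

A useful sanity check is that the empirical variance of the innovations matches the predicted \(\mathcal{R}_{m}\); a second-order estimator would additionally use \(y^{[2]}\) and should be compared against these linear baselines.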

5 Conclusions

A novel nonlinear (second-order) input noise estimation algorithm has been proposed for non-Gaussian stochastic systems with packet dropouts, where the packet dropouts are modeled by a multiplicative binary (0 or 1) white stochastic sequence. By defining the original and second-order Kronecker products of the states and measurements of the original system, we introduced a new augmented system, whose stochastic characteristics were analyzed using Kronecker algebra rules. The nonlinear (second-order) input noise estimators were then presented in the form of filters and smoothers, and a numerical simulation illustrated the effectiveness of the proposed algorithm.

Only basic results are currently available for more complex systems, such as the stability analysis of switched impulsive systems [29] and the stochastic stability analysis of nonlinear second-order stochastic differential delay systems [30]. It would therefore be of interest to extend the proposed method to switched impulsive systems and nonlinear stochastic delay systems. Another interesting open problem is the design of \(H_{\infty }\) nonlinear (second-order or higher-order) estimators for systems with packet dropouts.

References

Liu, S., Li, X., Wang, H., Yan, J.: Adaptive fault estimation for T-S fuzzy systems with unmeasurable premise variables. Adv. Differ. Equ. 2018, 105 (2018). https://doi.org/10.1186/s13662-018-1571-5

Yu, X., Hsu, C.S., Bamberger, R.H., Reeves, S.J.: \(H_{\infty }\) deconvolution filter design and its application in image restoration. In: 1995 International Conference on Acoustics, Speech, and Signal Processing, Detroit, USA, pp. 2611–2614 (1995)

Mendel, J.M.: White-noise estimators for seismic data processing in oil exploration. IEEE Trans. Autom. Control 22(5), 694–706 (1977)

Yuan, W., Lin, J., An, W., Wang, Y., Chen, N.: Noise estimation based on time-frequency correlation for speech enhancement. Appl. Acoust. 74(5), 770–781 (2013)

Shi, F., Patton, R.J.: Fault estimation and active fault tolerant control for linear parameter varying descriptor systems. Int. J. Robust Nonlinear Control 25(5), 689–706 (2015)

Zhang, Z., Wu, Y., Zhang, R., Jiang, P., Liu, G., Ahmed, S., Dong, Z.: Novel transformer fault identification optimization method based on mathematical statistics. Mathematics 7, 288 (2019)

Ahlén, A., Sternad, M.: Optimal deconvolution based on polynomial methods. IEEE Trans. Acoust. Speech Signal Process. 37(2), 217–226 (1989)

Deng, Z.-L., Zhang, H.-S., Liu, S.-J., Zhou, L.: Optimal and self-tuning white noise estimators with applications to deconvolution and filtering problems. Automatica 32(2), 199–216 (1996)

Li, Y., Zhao, M., Hao, G., Li, J., Jin, H.: Multisensor distributed information fusion white noise Wiener deconvolution estimator. Int. J. Control. Autom. 8(4), 15–24 (2015)

Liu, W.-Q., Wang, X.-M., Deng, Z.-L.: Robust weighted fusion steady-state white noise deconvolution smoothers for multisensor systems with uncertain noise variances. Signal Process. 122, 98–114 (2016)

Wang, W., Han, C., He, F.: White noise estimation for discrete-time systems with random delay and packet dropout. J. Syst. Sci. Complex. 27(3), 476–493 (2014)

Duan, Z., Song, X., Yan, X.: Deconvolution estimation problem for measurement-delay systems with packet dropping. In: Proceedings of 2016 Chinese Intelligent Systems Conference. Lecture Notes in Electrical Engineering, vol. 404, pp. 321–334. Springer, Singapore (2016)

Li, H., Li, W.: Passive IIR deconvolution of two-dimensional digital systems subject to missing measurements. Neurocomputing 179, 37–43 (2016)

Caballero-Águila, R., Hermoso-Carazo, A., Linares-Pérez, J.: Fusion estimation from multisensor observations with multiplicative noises and correlated random delays in transmission. Mathematics 5, 45 (2017)

Hu, K., Song, A., Wang, W., Zhang, Y., Fan, Z.: Fault detection and estimation for non-Gaussian stochastic systems with time varying delay. Adv. Differ. Equ. 2013, 22 (2013)

Asadi, H., Seyfe, B.: Signal enumeration in Gaussian and non-Gaussian noise using entropy estimation of eigenvalues. Digit. Signal Process. 78, 163–174 (2018)

Li, L., Stetler, L., Cao, Z., Davis, A.: An iterative normal-score ensemble smoother for dealing with non-Gaussianity in data assimilation. J. Hydrol. 567, 759–766 (2018)

Braccesi, C., Cianetti, F., Palmieri, M., Zucca, G.: The importance of dynamic behaviour of vibrating systems on the response in the case of non-Gaussian random excitations. Proc. Struct. Int. 12, 224–238 (2018)

Kulikov, G.Yu., Kulikova, M.V.: Estimation of maneuvering target in the presence of non-Gaussian noise: a coordinated turn case study. Signal Process. 145, 241–257 (2018)

Xu, H., Xie, W., Yuan, H., Duan, K., Liu, W., Wang, Y.: Fixed-point iteration Gaussian sum filtering estimator with unknown time-varying non-Gaussian measurement noise. Signal Process. 153, 132–142 (2018)

Corbetta, M., Sbarufatti, C., Giglio, M., Todd, M.D.: Optimization of nonlinear, non-Gaussian Bayesian filtering for diagnosis and prognosis of monotonic degradation processes. Mech. Syst. Signal Process. 104, 305–322 (2018)

Zhu, B., Chang, L., Xu, J., Zha, F., Li, J.: Huber-based adaptive unscented Kalman filter with non-Gaussian measurement noise. Circuits Syst. Signal Process. 37(9), 3842–3861 (2018)

Geng, H., Wang, Z., Cheng, Y., Alsaadi, F.E., Dobaie, A.M.: State estimation under non-Gaussian Lévy and time-correlated additive sensor noises: a modified Tobit Kalman filtering approach. Signal Process. 154, 120–128 (2019)

Carravetta, F., Germani, A., Raimondi, M.: Polynomial filtering for linear discrete time non-Gaussian systems. SIAM J. Control Optim. 34(5), 1666–1690 (1996)

Zhao, H., Zhang, C.: Non-Gaussian noise quadratic estimation for linear discrete-time time-varying systems. Neurocomputing 174 Part B, 921–927 (2016)

Carravetta, F., Germani, A., Raimondi, M.: Polynomial filtering of discrete-time stochastic linear systems with multiplicative state noise. IEEE Trans. Autom. Control 42(8), 1106–1126 (1997)

Germani, A., Manes, C., Palumbo, P.: Polynomial extended Kalman filter. IEEE Trans. Autom. Control 50(12), 2059–2064 (2005)

Caballero-Águila, R., Hermoso-Carazo, A., Linares-Pérez, J.: Linear and quadratic estimation using uncertain observations from multiple sensors with correlated uncertainty. Signal Process. 91(2), 330–337 (2011)

Slyn’ko, V., Tunç, C.: Stability of abstract linear switched impulsive differential equations. Automatica 107, 433–441 (2019)

Tunç, O., Tunç, C.: On the asymptotic stability of solutions of stochastic differential delay equations of second order. J. Taibah Univ. Sci. 13(1), 875–882 (2019)

Acknowledgements

The authors express their sincere gratitude to the editors and two anonymous referees for the careful reading of the original manuscript and useful comments that helped to improve the presentation of the results and accentuate important details in the text.

Availability of data and materials

Data sharing is not applicable to this paper, as no datasets were generated or analyzed during the current study.

Funding

This work was supported in part by A Project of Shandong Province Higher Educational Science and Technology Program (Grant No. J18KB157), the Development Plan of Young Innovation Team in Colleges and Universities of Shandong Province (Grant No. 2019KJN011), Key Research and Development Program of Shandong Province (Grant No. 2018GGX103054), National Natural Science Foundation of P.R. China (Grant Nos. 61403061 and 61503171), and Natural Science Foundation of Shandong Province (Grant No. ZR2016JL021).

Author information

Contributions

All four authors contributed equally to this work. They all read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, H., Li, Z., Li, B. et al. A study on input noise second-order filtering and smoothing of linear stochastic discrete systems with packet dropouts. Adv Differ Equ 2020, 474 (2020). https://doi.org/10.1186/s13662-020-02903-7

DOI: https://doi.org/10.1186/s13662-020-02903-7