Abstract

In simple instrumental-learning tasks, humans learn to seek gains and to avoid losses equally well. Yet, two effects of valence are observed. First, decisions in loss contexts are slower. Second, loss contexts decrease individuals’ confidence in their choices. Whether these two effects are two manifestations of a single mechanism or whether they can be partially dissociated is unknown. Across six experiments, we attempted to disrupt the valence-induced motor bias by manipulating the mapping between decisions and actions and by imposing constraints on response times (RTs). Our goal was to assess the presence of the valence-induced confidence bias in the absence of the RT bias. We observed both motor and confidence biases despite our disruption attempts, establishing that the effects of valence on motor and metacognitive responses are very robust and replicable. Nonetheless, within- and between-individual inferences reveal that the confidence bias resists the disruption of the RT bias. Therefore, although concomitant in most cases, valence-induced motor and confidence biases seem to be partly dissociable. These results highlight important new mechanistic constraints that should be incorporated into learning models to jointly explain choice, response times, and confidence.


Introduction

In the reinforcement-learning context, reward seeking and punishment avoidance present an intrinsic and fundamental informational asymmetry. In the former situation, accurate choice (i.e., reward maximization) increases the frequency of the reinforcer (the reward). In the latter situation, accurate choice (i.e., successful avoidance) decreases the frequency of the reinforcer (the punishment). Accordingly, most simple incremental “law-of-effect”-like models would predict higher performance in the reward-seeking than in the punishment-avoidance situation. Yet, humans learn to seek reward and to avoid punishment equally well (Fontanesi et al., 2019; Guitart-Masip et al., 2012; Palminteri et al., 2015). This symmetry is not only robustly demonstrated in experimental data, but also nicely explained by context-dependent reinforcement-learning models (Fontanesi et al., 2019; Palminteri et al., 2015), which can be seen as formal computational instantiations of Mowrer’s two-factor theory (Mowrer, 1952). On top of this remarkable symmetry in choice accuracy between gain and loss contexts, two sets of recent studies independently reported that outcome valence asymmetrically affects confidence and response times (RTs). First, learning from punishment increases individuals’ RTs, slowing down the motor execution of the choice (Fontanesi et al., 2019; Jahfari et al., 2019). This robust phenomenon is consistent with a motor Pavlovian bias, which posits that desirable contexts favor motor execution and approach behavior, whereas undesirable contexts hinder them (Boureau and Dayan, 2011; Guitart-Masip et al., 2012).

Second, learning from punishment decreases individuals’ confidence in their choices (Lebreton et al., 2019). Confidence judgments can be defined and operationalized as subjective estimates of the probability of being correct (Fleming and Daw, 2017; Pouget et al., 2016). As such, a confidence judgment is a metacognitive operation, which quantifies the degree to which an individual is aware of his or her success or failure (Fleming and Dolan, 2012; Yeung and Summerfield, 2012). Confidence judgments are thought to be critical for meta-control (the flexible adjustment of behavior), because they are key to monitoring and reevaluating previous decisions (Folke et al., 2016), tracking changes in the environment (Heilbron and Meyniel, 2019; Vinckier et al., 2016), and arbitrating between different strategies (Daw et al., 2005; Donoso et al., 2014). Demonstrations that confidence judgments can be biased by outcome valence in different tasks (Lebreton et al., 2018, 2019) suggest that, similar to instrumental processes, metacognitive processes could be under the influence of Pavlovian processes.

Our goal was to investigate the link between the valence-induced motor and confidence biases. We focused on two research questions: first, are valence-induced motor and confidence biases robust and replicable? Second, can the confidence bias be observed in the absence of the motor bias? Regarding the second question, previous research has yielded conflicting results that generated two opposing predictions. On the one hand, numerous studies documented behavioral and neural dissociations between perceptual, cognitive or motor operations, and confidence or metacognitive judgments (Fleming et al., 2012; Miele et al., 2011; Qiu et al., 2018). Likewise, brain lesions and stimulation protocols have been shown to disrupt confidence ratings and metacognitive abilities without impairing cognitive or motor functions (Fleming et al., 2014, 2015; Rounis et al., 2010), although see also Bor et al. (2017). These dissociations between decision and metacognitive variables suggest that the valence-induced confidence bias could be observed in the absence of a response time bias.

On the other hand, several studies suggest that decision and metacognitive variables are tightly linked—both in perceptual (Geller and Whitman, 1973; Vickers et al., 1985) and value-based tasks (De Martino et al., 2013; Folke et al., 2016; Lebreton et al., 2015). This coupling is notably embedded in many sequential-sampling models which rely on a single mechanism to produce decisions, response times, and confidence judgments (van den Berg et al., 2016; De Martino et al., 2013; Moran et al., 2015; Pleskac and Busemeyer, 2010; Ratcliff and Starns, 2009, 2013; Yu et al., 2015). Beyond this mechanistic hypothesis, it has been recently suggested that people use their own RT as a proxy for stimulus strength and certainty judgments, creating a direct, causal link from RT to confidence (Desender et al., 2017; Kiani et al., 2014). These results could imply that our previously reported effects of valence on confidence (Lebreton et al., 2019) are no more than a spurious consequence of the effect of valence on RTs (Fontanesi et al., 2019; Jahfari et al., 2019). In other words, participants could have simply observed that they were slower in the loss context and used this information to generate lower confidence judgments in these contexts.

To address our research questions, we developed several versions of a probabilistic, instrumental-learning task, where participants have to learn to seek rewards or to avoid losses (Fontanesi et al., 2019; Lebreton et al., 2019; Palminteri et al., 2015). We attempted to cancel the effects of losses on RTs while recording confidence judgments to assess the presence of the valence-induced confidence bias. To this end, we modified the standard mapping between the available options and the way participants could select them, thereby disrupting the link between decision and motor execution of the choice. In another experiment, we also used a different strategy and imposed time pressure on the choice to constrain decision time.

In total, we used two published datasets (Lebreton et al., 2019) and original data collected in four new experiments, in which we manipulated the option-action mapping in several ways (Experiments 3-5) and applied time pressure (Experiment 6). We then tested (1) the robustness of the valence-induced motor and confidence biases, and (2) whether the confidence bias could be observed in the absence of the motor bias. Overall, our results show that response times are longer in loss than in gain contexts in almost all experiments. In other words, the motor bias is highly robust: it survived most of our disruption attempts, despite being severely attenuated. In all datasets, confidence was lower in loss than in gain contexts, indicating that the confidence bias is highly replicable and robust to variations in the size of the motor bias. The confidence bias is also observed in the experiment where the motor bias was absent, suggesting that valence-induced motor and confidence biases are partly dissociable.

Materials and methods

Subjects

All studies were approved by the local Ethics Committee of the Center for Research in Experimental Economics and political Decision-making (CREED) at the University of Amsterdam. All subjects gave informed consent before taking part in the study. Subjects were recruited from the laboratory's participant database (www.creedexperiment.nl). A total of 108 subjects took part in this set of 6 separate experiments (Table 1). They were compensated with a combination of a show-up fee (5€) and additional gains and/or losses depending on their performance during the learning task: Experiment 1 had an exchange rate of 1 (in-game euros = payout euros); Experiments 2-6 had an exchange rate of 0.3 (1 in-game euro = 0.3 payout euros; participants were clearly informed of this exchange rate). In addition, in Experiments 2-6, three trials (one per session) were randomly selected for a potential bonus of 5 euros each, attributed according to the confidence incentivization scheme (see below).
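The compensation rule can be written as a small arithmetic sketch: show-up fee, plus net in-game earnings converted at the experiment's exchange rate, plus 5 euros per confidence bonus won (Experiments 2-6 only). The function name is hypothetical, and it assumes that net earnings are converted linearly with no floor on the total; the text does not specify either detail.

```python
SHOW_UP_FEE_EUR = 5.0
BONUS_EUR = 5.0
# Exchange rate from in-game euros to payout euros, per experiment (from the text).
EXCHANGE_RATE = {1: 1.0, 2: 0.3, 3: 0.3, 4: 0.3, 5: 0.3, 6: 0.3}


def final_payment(experiment: int, in_game_euros: float, bonuses_won: int = 0) -> float:
    """Hypothetical payout: show-up fee + converted task earnings + confidence bonuses.

    Assumption: net in-game earnings (gains minus losses) are converted linearly
    and no floor is applied to the total.
    """
    # Experiment 1 had no incentivized confidence; Exp. 2-6 had one bonus draw per session.
    max_bonuses = 0 if experiment == 1 else 3
    if not 0 <= bonuses_won <= max_bonuses:
        raise ValueError(f"bonuses_won must be between 0 and {max_bonuses}")
    return SHOW_UP_FEE_EUR + EXCHANGE_RATE[experiment] * in_game_euros + BONUS_EUR * bonuses_won


print(final_payment(1, 10.0))     # Exp. 1: 5 + 1.0 * 10
print(final_payment(2, 10.0, 1))  # Exp. 2: 5 + 0.3 * 10 + 5
```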

Power analysis and sample size determination

Power analyses were performed with G*Power 3.1.9.2. The sample size for all experiments was determined before the start of the experiments, based on the main effects of valence (gain – loss) on confidence judgments and RTs in Experiments 1 and 2, reported in Lebreton et al. (2019). For confidence judgments, Cohen’s d was estimated to be: Exp. 1: d = 1.340, t(17) = 5.69, P = 2.67×10⁻⁴; Exp. 2: d = 0.926, t(17) = 3.93, P = 1.08×10⁻³. For a similar within-subject design, a sample of N = 17 subjects is sufficient to reach a power greater than 95% with a two-tailed one-sample t-test.

For RTs, Cohen’s d was estimated to be: Exp. 1: d = 0.858, t(17) = 3.64, P = 2.03×10⁻³; Exp. 2: d = 0.848, t(17) = 3.60, P = 2.22×10⁻³. For a similar within-subject design, a sample of N = 17 subjects is sufficient to reach a power greater than 95% with a two-tailed one-sample t-test.
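The reported effect sizes appear consistent, up to the rounding of t, with the within-subject index d_z = t/√N, taking N = df + 1 = 18. The sketch below assumes that is how d was derived (the text does not say so explicitly). Computing power itself requires the noncentral t distribution (for example `scipy.stats.nct`), which this sketch does not attempt.

```python
import math


def cohens_dz(t_value: float, n_subjects: int) -> float:
    """Within-subject effect size d_z = t / sqrt(N) for a one-sample (or paired) t-test."""
    return t_value / math.sqrt(n_subjects)


# t(17) implies N = 18 participants in each pilot experiment (df = N - 1).
for label, t in [("conf Exp1", 5.69), ("conf Exp2", 3.93), ("RT Exp1", 3.64), ("RT Exp2", 3.60)]:
    print(label, round(cohens_dz(t, 18), 3))
```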

Learning task - general

In this study, we iteratively designed six experiments aiming to investigate the impact of context valence and information on choice accuracy, confidence, and response times in a reinforcement-learning task. All experiments were adapted from the same basic experimental paradigm (see also Fig. 1 and Figure S.1): participants repeatedly faced pairs of abstract symbols probabilistically associated with monetary outcomes (gains or losses), and they had to learn, by trial and error, to choose the most advantageous symbol of each pair (also referred to as a context). Two main factors were orthogonally manipulated (Palminteri et al., 2015): valence (some contexts only provide gains, others only losses) and information (some contexts provide information about the outcomes associated with both the chosen and the unchosen option, i.e., complete information, whereas others only provide information about the chosen option, i.e., partial information). In addition, on each trial, participants reported their confidence in their choice on a graded scale, as the subjective probability of having made a correct choice (Fig. 1). In all experiments but one (Exp. 2-6), these confidence judgments were elicited in an incentive-compatible way (Ducharme and Donnell, 1973; Lebreton et al., 2018, 2019; Schlag et al., 2015).

Experimental design. (A) Behavioral tasks for Experiments 1-6. Successive screens displayed in one trial are shown from left to right, with durations in ms. All tasks are based on the same principle, originally designed for Experiments 1-2 (top line): after a fixation cross, participants are presented with a pair of abstract symbols displayed on a computer screen and have to choose between them. They are then asked to report their confidence in their choice on a numerical scale. Note that Experiment 1 featured a 0-10 scale, and Experiments 2-6 featured a 50-100% scale. The outcome associated with the chosen symbol is then revealed, sometimes paired with the outcome associated with the unchosen symbol, depending on the condition. For Experiments 3-5 (bottom line), options are displayed on a vertical axis. In addition, the response mapping (how the left vs. right arrows map to the upper vs. lower symbols) is only presented after the symbol display, and the response has to be given within one second of the response-mapping screen onset. In Experiments 4-5, a short empty screen is used as a mask between the symbol display and the response mapping. Experiment 6 is similar to Experiment 2 (top line), except that a shorter duration is allowed from the symbol presentation to the choice. Task specificities are indicated below each screen. See also Figure S1 for a complete overview of all 6 experiments. (B) Experiment 1 payoff matrix. (C) Experiments 2-6 payoff matrix.

Results from Experiments 1 and 2 were previously reported in Lebreton et al. (2019): briefly, we found that participants exhibit the same level of choice accuracy in gain and loss contexts but are less confident in loss contexts. In addition, they appeared to be slower to execute their choices in loss contexts. To evaluate the interdependence between the effects of valence on RT and confidence, we successively designed three additional tasks (Fig. 1A and Figure S.1C-E). In those tasks, we modified the response setting to blur the effects of valence on RT, with the goal of assessing the effects of valence on confidence in the absence of an effect on RT. In a sixth task, we imposed strict time pressure on decisions (Fig. 1A and Figure S.1F). All subjects also performed a transfer task (Lebreton et al., 2019; Palminteri et al., 2015). Data from this additional task are not relevant to our main question and are therefore not analyzed in the present manuscript.

Learning task - details

All tasks were implemented using Matlab R2015a® (MathWorks) and the COGENT toolbox (http://www.vislab.ucl.ac.uk/cogent.php). In all experiments, the main learning task was adapted from a probabilistic instrumental-learning task used in a previous study (Palminteri et al., 2015). Participants were first provided with written instructions, which were reformulated orally if necessary. It was explained to them that the aim of the task was to maximize their payoff and that gain seeking and loss avoidance were equally important. In each of the three learning sessions, participants repeatedly faced four pairs of cues taken from the Agathodaimon alphabet. The four cue pairs corresponded to four conditions and were each presented 24 times in a pseudo-randomized and unpredictable order (intermixed design). Of the four conditions, two were gain conditions and two were loss conditions. Within each pair, and depending on the condition, the two cues were associated with two possible outcomes (1€/0€ for the gain and -1€/0€ for the loss conditions in Exp. 1; 1€/0.1€ for the gain and -1€/-0.1€ for the loss conditions in Exp. 2-6) with reciprocal (but independent) probabilities (75%/25% and 25%/75%); see Lebreton et al. (2019) for a detailed rationale.
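The payoff structure can be made concrete by computing the expected value of each symbol. This is a minimal sketch using only the outcomes and probabilities given above; the dictionary keys and function name are illustrative, not taken from the original task code.

```python
# Outcome magnitudes (large, small) per experiment and valence, as described in the text.
OUTCOMES = {
    "exp1": {"gain": (1.0, 0.0), "loss": (-1.0, 0.0)},
    "exp2_6": {"gain": (1.0, 0.1), "loss": (-1.0, -0.1)},
}


def symbol_values(experiment: str, valence: str) -> tuple:
    """Expected value of the better and of the worse symbol in one context."""
    large, small = OUTCOMES[experiment][valence]
    # Gain contexts: the better symbol yields the large gain with p = 0.75.
    # Loss contexts: the better symbol yields the large loss with p = 0.25.
    p_large_better = 0.75 if valence == "gain" else 0.25
    p_large_worse = 1.0 - p_large_better

    def ev(p_large: float) -> float:
        return p_large * large + (1.0 - p_large) * small

    return ev(p_large_better), ev(p_large_worse)


for exp in OUTCOMES:
    for val in ("gain", "loss"):
        better, worse = symbol_values(exp, val)
        print(exp, val, round(better, 3), round(worse, 3), round(better - worse, 3))
```

In Experiments 2-6 this gives 0.775 vs. 0.325 in gain contexts and -0.325 vs. -0.775 in loss contexts, so the better-minus-worse difference (0.45) is the same in both valences; in Experiment 1 it is 0.5 in both.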

Experiments 1, 2, and 6 were very similar (Fig. 1A and Figure S.1A-B & F): on each trial, participants first viewed a central fixation cross (500-1,500 ms). Then, the two cues of a pair were presented on either side of this central cross. The side on which a given cue of a pair was presented (left or right of the central fixation cross) was pseudo-randomized, such that each cue was presented an equal number of times on the left and on the right of the screen. Subjects were required to select between the two cues by pressing the left or right arrow on the computer keyboard, within a 3,000 ms (Exp. 1-2) or 1,000 ms (Exp. 6) time window. After the choice window, a red pointer appeared below the selected cue for 500 ms. Subsequently, participants were asked to indicate how confident they were in their choice. In Experiment 1, confidence ratings were simply given on a rating scale without any additional incentivization. To perform this rating, they could move a cursor, which appeared at a random position, to the left or to the right using the left and right arrows, and validate their final answer with the spacebar. This rating step was self-paced. Finally, an outcome screen displayed the outcome associated with the selected cue, accompanied by the outcome of the unselected cue if the pair was associated with a complete-feedback condition.
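One session's trial list can be sketched as follows: four intermixed pairs, 24 presentations each (96 trials), with each pair's first cue shown on the left in exactly half of its presentations. The condition labels and function name are hypothetical, the plain shuffle stands in for the unspecified pseudo-randomization constraints, and the fixation jitter is assumed to be uniform over the reported 500-1,500 ms range.

```python
import random
from typing import Optional

# Hypothetical labels for the four intermixed conditions (valence x information).
CONDITIONS = ["gain_partial", "gain_complete", "loss_partial", "loss_complete"]


def make_session(n_reps: int = 24, seed: Optional[int] = None) -> list:
    """Sketch of one learning session: each pair shown n_reps times, sides balanced."""
    rng = random.Random(seed)
    trials = []
    for cond in CONDITIONS:
        # Cue A of the pair appears on the left in half of its presentations.
        sides = ["left"] * (n_reps // 2) + ["right"] * (n_reps - n_reps // 2)
        trials.extend({"condition": cond, "cue_a_side": side} for side in sides)
    rng.shuffle(trials)  # intermixed, unpredictable order
    for trial in trials:
        # Fixation jitter, assumed uniform within the reported 500-1,500 ms range.
        trial["fixation_ms"] = rng.randint(500, 1500)
    return trials


session = make_session(seed=0)
print(len(session), session[0])
```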

In Experiment 3, we dissociated the option display from the motor response: symbols were first presented on a vertical axis (2 s). During this period, participants could choose their preferred symbol but did not yet know which button to press to select it. This uncertainty was resolved in the next task phase, in which two horizontal cues indicated which of the left versus right response buttons could be used to select the top versus bottom symbol (Fig. 1A and Figure S.1C). In addition, we imposed a time limit on the response selection (<1 s) to encourage participants to make their decision during the symbol presentation, so that only the execution of an already-made choice took place during the response-mapping screen. In Experiment 4, we added a mask (empty screen, 0.5-1 s) between the symbol presentation and the response mapping (Fig. 1A and Figure S.1D). This further encouraged participants to decide during the symbol presentation in order to reduce task load, because they would then only have to retain the selected location (top vs. bottom) during the mask period. In Experiment 5, we introduced a jitter (variable duration, 2-3 s) in the symbol presentation screen (Fig. 1A and Figure S.1E) to further discourage temporal expectations and motor preparation during the decision period. Finally, Experiment 6 was adapted from Experiment 2, but additionally imposed strict time pressure on the choice, in an attempt to incentivize participants to counteract the slowing induced by losses (Fig. 1A and Figure S.1F).

Matching probability and incentivization

In Experiments 2-6, participants’ confidence reports were incentivized via a matching probability (MP) procedure based on the Becker-DeGroot-Marschak (BDM) auction (Becker et al., 1964). Specifically, participants were asked to report as their confidence judgment their estimated probability (p) of having selected the symbol with the higher average value (i.e., the symbol offering a 75% chance of gain (G75) in the gain conditions, and the symbol offering a 25% chance of loss (L25) in the loss conditions) on a scale between 50% and 100%. A random mechanism, which draws a number (r) in the interval [0.5, 1], then determines whether the subject will be paid an additional bonus of 5 euros, as follows: if p ≥ r, the selection of the correct symbol leads to a bonus payment; if p < r, a lottery determines whether an additional bonus is won. This lottery offers a payout of 5 euros with probability r and 0 with probability 1-r. This procedure has been shown to incentivize participants to report their true confidence, regardless of their risk preferences (Hollard et al., 2016; Karni, 2009). Participants were trained on this lottery mechanism and informed that up to 15 euros (3×5 euros) could be won and added to their final payment via the MP mechanism, applied to one randomly chosen trial at the end of each learning session. Accordingly, the MP mechanism screens were not displayed during the learning sessions.
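Why truthful reporting pays can be checked numerically. This sketch assumes r is drawn uniformly from [0.5, 1] (the text only gives the interval). Under that assumption, a participant whose true probability of being correct is q and who reports p earns 5·(2q(p − 0.5) + 1 − p²) euros in expectation. That expression is maximized at p = q. The function names are illustrative.

```python
import random

BONUS_EUR = 5.0


def mp_trial(p_reported: float, chose_correct: bool, rng: random.Random) -> float:
    """One matching-probability draw, assuming r ~ Uniform[0.5, 1]."""
    r = rng.uniform(0.5, 1.0)
    if p_reported >= r:
        won = chose_correct          # paid if the chosen symbol was the correct one
    else:
        won = rng.random() < r       # lottery paying out with probability r
    return BONUS_EUR if won else 0.0


def expected_bonus(p_reported: float, q_true: float) -> float:
    """Closed-form expected bonus for report p when the true P(correct) is q.

    With r uniform on [0.5, 1] (density 2): E = 5 * (2q(p - 0.5) + (1 - p^2)).
    """
    return BONUS_EUR * (2 * q_true * (p_reported - 0.5) + (1 - p_reported ** 2))


# Grid search over reports 0.50, 0.51, ..., 1.00 for a participant whose true q is 0.8.
best = max((k / 100 for k in range(50, 101)), key=lambda p: expected_bonus(p, 0.8))
print(best)
```

The derivative of the expected bonus with respect to p is 10·(q − p), so over- and under-reporting confidence both lower the expected payoff, and risk attitudes do not enter because the payoff is a single fixed bonus.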

Variables

In all experiments, response time is defined as the time between the onset of the screen conveying the response mapping (Symbol for Exp. 1-2 & 6; Choice for Exp. 3-5; see Fig. 1A and Figure S.1) and the participant's key press. Confidence ratings in Exp. 1 were transformed from their original scale (0-10) to a probability scale (50-100%) using a simple linear mapping: confidence = (50 + 5 × rating)/100.
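The linear mapping sends the endpoints of the Experiment 1 scale (0 and 10) to 0.5 and 1.0, the same range as the 50-100% scale used in Experiments 2-6. A minimal sketch (the function name is illustrative):

```python
def exp1_rating_to_confidence(rating: float) -> float:
    """Map an Experiment 1 rating (0-10) onto the 50-100% scale, as a proportion."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must lie on the 0-10 scale")
    return (50 + 5 * rating) / 100


print([exp1_rating_to_confidence(r) for r in (0, 5, 10)])
```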

Statistics

All statistical analyses were performed using Matlab R2015a. All reported p-values correspond to two-sided tests. We used one-sample t-tests when comparing experimental data to a reference value (e.g., chance: 0.5) and paired t-tests when comparing experimental data from different conditions.
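The two t-tests are closely related: a paired t-test is a one-sample t-test on the within-subject differences against 0. The sketch below computes only the t statistic and degrees of freedom with the standard library. Two-sided p-values require the t distribution, for example via `scipy.stats.ttest_1samp` or `scipy.stats.ttest_rel`. The per-subject values in the usage line are made up for illustration.

```python
import math
import statistics


def one_sample_t(values: list, reference: float = 0.0) -> tuple:
    """t statistic and degrees of freedom for H0: mean(values) == reference."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - reference) / se, n - 1


def paired_t(a: list, b: list) -> tuple:
    """Paired t-test, computed as a one-sample t-test on the differences a - b."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    return one_sample_t([x - y for x, y in zip(a, b)])


# Made-up per-subject accuracies compared with chance (0.5).
print(one_sample_t([0.70, 0.65, 0.80, 0.75], reference=0.5))
```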

Two-way repeated-measures ANOVAs testing for the effects of valence, information, and their interaction were performed at the level of individual experiments. One-way ANOVAs on individual main effects (e.g., individual average accuracy in gains minus losses) were used to test for differences between experiments.
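The between-experiment test can be sketched as a standard one-way ANOVA on per-subject scores grouped by experiment. With 108 participants in 6 experiments this gives df = (5, 102), matching the F statistics reported in the Results. η² is computed as SS_between / SS_total, which is algebraically equal to F·df₁ / (F·df₁ + df₂). The function names are illustrative, and the toy groups in the test are not study data.

```python
import statistics


def one_way_anova(groups: list) -> tuple:
    """Return (F, (df_between, df_within), eta_squared) for a between-groups ANOVA."""
    all_vals = [v for g in groups for v in g]
    grand = statistics.mean(all_vals)
    ss_between = sum(len(g) * (statistics.mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - statistics.mean(g)) ** 2 for g in groups for v in g)
    df_b, df_w = len(groups) - 1, len(all_vals) - len(groups)
    f = (ss_between / df_b) / (ss_within / df_w)
    eta_sq = ss_between / (ss_between + ss_within)
    return f, (df_b, df_w), eta_sq


def eta_sq_from_f(f: float, df_b: int, df_w: int) -> float:
    """Recover eta squared from a reported F statistic and its degrees of freedom."""
    return f * df_b / (f * df_b + df_w)


print(round(eta_sq_from_f(7.98, 5, 102), 2))  # RT valence effect across experiments
```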

Generalized linear mixed-effects (GLME) models included a full subject-level random-effects structure (intercepts and slopes for all predictor variables). The models were estimated using Matlab’s fitglme function, which maximizes the pseudo-likelihood of the observed data under the model (Matlab’s default option). Choice accuracy was modelled using a binomial response distribution (logistic regression), whereas confidence judgments and response times were modelled using a normal response distribution (linear regression). For instance, the mixed-effects model for choice accuracy can be written in Wilkinson-Rogers notation as:

Choice_accuracy ~ 1 + Val. + Inf. + Val. * Inf. + Fix. + Stim. + Mask. + Sess. + (1 + Val. + Inf. + Val. * Inf. + Fix. + Stim. + Mask. + Sess. |Subject),

with Val.: valence; Inf.: information; Fix.: fixation duration (only available in Experiments 4-5); Stim.: stimulus display duration (only available in Experiment 5); Mask.: mask duration (only available in Experiments 4-5); Sess.: session number.

Note that Val. and Inf. are coded as 0/1, but that the interaction term Val*Inf was computed with Val. and Inf. coded as −1/1 and then rescaled to 0/1.
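The interaction coding can be made explicit. Multiplying the −1/1 codes and rescaling to 0/1 yields 1 whenever valence and information take the same code and 0 otherwise. This differs from the product of the raw 0/1 codes, which would be 1 only when both are 1. Which level of each factor is coded 1 is not stated in the text and does not affect this logic. The function name is illustrative.

```python
def interaction_regressor(val: int, inf: int) -> int:
    """Val x Inf regressor: product of -1/1 codes, rescaled back to 0/1."""
    if val not in (0, 1) or inf not in (0, 1):
        raise ValueError("val and inf must be coded 0/1")
    product = (2 * val - 1) * (2 * inf - 1)  # -1/1 codes multiply to -1 or 1
    return (product + 1) // 2                  # rescale: -1 -> 0, 1 -> 1


for val in (0, 1):
    for inf in (0, 1):
        print(val, inf, interaction_regressor(val, inf))
```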

The robust regressions were performed with Matlab’s robustfit function, using default settings. The algorithm uses iteratively reweighted least squares with the bisquare weighting function to decrease the impact of extreme data-points (outliers) on estimated regression coefficients.

Results

First, we evaluated the effects of our manipulations of the display and response settings on average levels of choice accuracy and confidence ratings across experiments, using independent one-way ANOVAs. We found a significant effect of experiment on average accuracy (F(5,102) = 5.72, P = 1.00×10⁻⁴, η2 = 0.21), mostly driven by a drop in accuracy in Experiment 6 (see Table 1 and Figure S. 2), but no effect on average confidence (F(5,102) = 1.50, P = 0.1953, η2 = 0.07; Table 1). We also computed, at the session level (participants underwent 3 separate learning sessions per experiment), the correlation between confidence ratings and RTs. When averaged at the individual level and tested at the population level (one-sample t-test), this measure of the linear relationship between RT and confidence was highly significant in all experiments (Exp. 1-6: all Ps < 0.01; Table 1). These consistently negative and significant correlations across the six experiments indicate that confidence is robustly associated with RT regardless of option-action mapping or time pressure, suggesting a strong link between instrumental and metacognitive processes. Yet, the correlation between confidence and RT was modulated by our experimental manipulations (effect of experiment: F(5, 102) = 9.91, P < 0.001, η2 = 0.32); post-hoc tests revealed that it was significantly altered by each of our experimental manipulations (Exp. 3-6; Figure S. 2).
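The individual-level RT-confidence measure described above can be sketched as follows. A Pearson correlation is computed within each learning session and then averaged across a participant's sessions, as the text describes. The resulting per-participant values would then enter a one-sample t-test against 0 (e.g., `scipy.stats.ttest_1samp`). The input layout and function names are assumptions for illustration.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def individual_rt_confidence_r(sessions):
    """Mean of per-session RT-confidence correlations for one participant.

    sessions: iterable of (rts, confidences) pairs, one per learning session.
    """
    return statistics.mean(pearson_r(rts, conf) for rts, conf in sessions)
```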

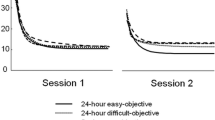

Next, we analyzed the effects of our experimental manipulations (valence and information) on the observed behavioral variables (choice accuracy, confidence, RT), using repeated-measures ANOVAs in each individual study (Fig. 2; Table 2). The parallel analyses of choice accuracy and confidence ratings replicated the results reported by Fontanesi et al. (2019), Lebreton et al. (2019), and Palminteri et al. (2015). Indeed, participants were more accurate in complete-information contexts in five of six experiments (Table 2; main effect of information on accuracy, Exp. 1-5: Ps < 0.05; Exp. 6: P = 0.1570). The effects of information on accuracy did not differ significantly across experiments (Figure S. 3; effect of experiment: F(5, 102) = 0.52, P = 0.7289, η2 = 0.03). In contrast, participants learned equally well in gain and loss contexts, exhibiting similar levels of accuracy in both contexts in all experiments (Table 2; main effect of valence on accuracy, Exp. 1-6: all Ps > 0.3; Figure S. 3; effect of experiment: F(5, 102) = 0.35, P = 0.884, η2 = 0.02).

Behavioral results. Effects of the main manipulations (left: valence; middle: information; right: interaction) on measures of choice behavior (top: performance; middle: confidence; bottom: response times). Analyses are performed independently in the six experiments using repeated-measures ANOVAs. Empty dots with colored edges represent individual data points across experiments; filled diamonds and error bars represent sample mean ± SEM. The horizontal bar indicates a one-way ANOVA testing the effect of experiment on each manipulation (see supplementary materials, Figure S. 3 for details). ~P < 0.1; *P < 0.05; **P < 0.01; ***P < 0.001

Despite similar performance in gain and loss contexts, and despite our attempts to cancel the valence-induced motor bias by manipulating the option-action mapping and imposing time pressure, participants were slower in loss contexts in Experiments 1-4 & 6 (Table 2; main effect of valence on RT: all Ps < 0.01). These results not only replicate those reported by Fontanesi et al. (2019), but also demonstrate the robustness of the valence-induced motor bias to manipulations of the response setup in human instrumental learning. Still, our experimental manipulations significantly reduced the motor bias in Exp. 3-5 (Figure S. 3; effect of experiment: F(5, 102) = 7.98, P < 0.001, η2 = 0.28).

Importantly, despite similar performance in gain and loss contexts, participants were less confident in loss contexts (Table 2; main effect of valence on confidence, Exp. 1-6: all Ps < 0.01), with very similar effect sizes across all experiments (Figure S. 3; effect of experiment: F(5, 102) = 1.26, P = 0.289, η2 = 0.06). These effects were mitigated when more information was available (Table 2; interaction valence × information on confidence: all Ps < 0.05). These results not only replicate those reported in Lebreton et al. (2019) but also demonstrate the robustness of the valence-induced confidence bias.

Overall, the analyses of the data collected in six different versions of our experiment (N = 108) clearly underline the remarkable robustness of the effects of outcome valence on both confidence and RT. Only one experiment (Experiment 5) succeeded in cancelling the valence-induced motor bias. Note that in this experiment, we still observed the confidence bias, as evidenced by a significant main effect of valence on confidence (Table 2; F(1,17) = 16.71, P < 0.001, η2 = 0.15), but no significant effect of valence on RT (F(1,17) = 1.97, P = 0.178, η2 = 0.001). This suggests that the effects of outcome valence on confidence and RT are partly dissociable. In other words, we can observe lower confidence in loss contexts even when RTs are indistinguishable from those in gain contexts.

In order to give a comprehensive overview of the relationship between accuracy, confidence, and RT, and to quantify the effects of the different available predictors on these behavioral measures, we also ran generalized linear mixed-effect regressions. Independent variables included not only valence, information, and their interaction but also the different available timings (e.g., duration of the stimulus or mask display) and a linear trend accounting for the session effects (see Methods for details). These sensitive trial-by-trial analyses replicated the main ANOVA results reported above regarding the effects of valence and information on performance, confidence, and RT (Figure 3; Tables 3, 4, and 5). They also confirmed that, in Experiment 5, no effect of valence can be detected on RT and performance (P = 0.349 and P = 0.620), whereas a robust effect is observed on confidence (P = 0.002).

Generalized linear mixed-effects models. Estimated standardized regression coefficients (t-values) from generalized linear mixed-effects (GLME) models fitted in the different experiments. Top: logistic GLME with performance as the dependent variable. Middle: linear GLME with confidence as the dependent variable. Bottom: linear GLME with RT as the dependent variable. Shaded areas indicate where coefficients are not significantly different from 0 (|t-value| < 1.96; P > 0.05). ~P < 0.1; *P < 0.05; **P < 0.01; ***P < 0.001

We also ran an additional mixed model, which estimated the effects of our experimental factors on confidence while controlling for RTs, i.e., including RTs among the independent variables (Table 6). Importantly, and replicating previous findings (Lebreton et al., 2019), the main effect of valence on confidence remained significant in all experiments (P < 0.001), providing additional evidence that the valence-induced confidence bias is partially dissociable from the valence-induced motor bias.

Because the valence-induced motor bias (i.e., the slowing of RTs in loss compared with gain contexts) was extremely robust to our experimental manipulations aimed at cancelling it, the ANOVAs and regressions above provide only limited evidence on whether a valence-induced decrease in confidence can be observed in the absence of valence-induced RT slowing. In the following paragraphs, we therefore use a different analytical strategy, leveraging inter-individual differences to test this hypothesis. We assessed the link between individual slowing (RT in gain – loss) and individual decreases in confidence (confidence in gain – loss) in our full sample and in each individual study using robust linear regressions (see Methods for details). In those regressions, the intercept and slope coefficients quantify two different but equally important signals. First, the y-intercept represents a theoretical individual who exhibits no effect of valence on RT (RT in gain – loss = 0; Fig. 4A): an intercept significantly different from 0 therefore indicates that a significant effect of valence on confidence can be observed in the absence of an effect on RT. Second, the slope quantifies how the effect of valence on confidence linearly depends on the valence-induced slowing of RT. Both at the population level (i.e., combining data from all 6 experiments) and in each individual study, the intercepts of those regressions were estimated to be significantly positive (all Ps < 0.05; Fig. 4A-B; Table 7). This indicates that valence-induced changes in confidence are detectable when valence-induced changes in RT are absent. Note that at the population level, the slope of the regression was also significantly negative (β = −0.02 ± 0.01, t(106) = −3.75, P < 0.001), indicating that the more participants were slowed down by the loss context relative to the gain context, the less confident they were in their responses.
Therefore, the valence-induced motor and confidence biases are only partially dissociable.
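The intercept logic can be illustrated with an ordinary least-squares version of the inter-individual regression. Here x is each participant's RT effect (gain − loss) and y their confidence effect (gain − loss). The intercept is the predicted confidence effect at x = 0, and its t statistic uses the standard error √(σ²(1/n + x̄²/Sxx)). This is a simplified OLS sketch; the analyses above used robust regressions instead. SciPy's `scipy.stats.linregress` also reports `intercept_stderr` in recent versions. The function name and the toy data in the test are illustrative.

```python
import math
import statistics


def ols_intercept_test(x, y):
    """Return (intercept, slope, t_intercept, df) for the OLS fit y = a + b*x."""
    n = len(x)
    mx, my = statistics.mean(x), statistics.mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in resid) / (n - 2)              # residual variance
    se_intercept = math.sqrt(sigma2 * (1 / n + mx ** 2 / sxx))  # SE of the intercept
    return intercept, slope, intercept / se_intercept, n - 2
```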

Assessing the link between the effects of valence on confidence and response times. (A) Inter-individual correlations between the effects of valence on confidence (Y-axis) and response times (X-axis) across experiments. Dots represent data points from individual participants. Thick lines represent the mean ± 95%CI of the effects of valence on confidence (vertical lines) and response times (horizontal lines). Experiments are indicated by the dot edge and line color. The black shaded area represents the 95%CI of the inter-individual linear regression. Note that potential outliers did not bias the regression, given that simple and robust regressions gave very similar results. (B) Results from inter-individual regressions of the valence-induced confidence difference on the valence-induced RT slowing across different experiments. Top: estimated intercepts of the regressions. Bottom: estimated slopes of the regressions. Diamonds and error bars represent the estimated regression coefficients (β) and their standard errors. *P < 0.05; **P < 0.01; ***P < 0.001

Discussion

The present work investigated the relationship between valence-induced biases affecting two different behavioral outputs: response time and confidence. We confirmed, in six variations of a simple probabilistic reinforcement-learning task, that learning to avoid punishment increased participants’ response times (RTs) and decreased their confidence in their choices, without affecting their actual performance (Fontanesi et al., 2019; Lebreton et al., 2019). The valence-induced bias on RT is currently interpreted as a manifestation of a motor (or instrumental) Pavlovian bias (Boureau and Dayan, 2011; Guitart-Masip et al., 2012). In the associative-learning literature, similar Pavlovian effects, whereby the presentation of reward-associated stimuli can motivate behaviors that have produced rewards in the past, have been described (Mahlberg et al., 2019). One of the most studied of these effects is Pavlovian-Instrumental Transfer (PIT), defined as increased vigor of instrumentally trained responses when these are made in the presence of Pavlovian (i.e., reward-associated) cues (Cartoni et al., 2016; Holmes et al., 2010). Although we did not employ standard PIT procedures in the current studies, which would involve separate Pavlovian and transfer phases (Colwill and Rescorla, 1988; Rescorla and Solomon, 1967; Watson et al., 2014), our findings parallel those from PIT studies by showing faster reaction times in the presence of reward cues, but not punishment cues.

The valence-induced decrease in confidence has been described as a value-to-confidence contamination, potentially generated by an affect-as-information mechanism (Lebreton et al., 2018; Schwarz and Clore, 1983). Some authors have warned about possible confusion between a true confidence bias and a change in metacognitive sensitivity (Fleming and Lau, 2014). Yet, because we previously established in a perceptual task that the outcome-valence manipulation specifically affects confidence bias and not metacognitive sensitivity (Lebreton et al., 2018), we assume that the same experimental manipulation produces similar effects in a reinforcement-learning task.
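To illustrate the distinction raised by Fleming and Lau (2014), the Python sketch below contrasts a confidence bias with metacognitive sensitivity, measured here as the type-2 area under the ROC curve, i.e., the probability that a correct trial receives higher confidence than an error trial. This is one of several sensitivity measures (meta-d' is another) and is not necessarily the measure used in Lebreton et al. (2018); the trials and the helper names are hypothetical. Lowering every rating by the same amount changes mean confidence but leaves the type-2 AUROC unchanged.

```python
def type2_auroc(confidence, correct):
    """Probability that a randomly chosen correct trial carries higher
    confidence than a randomly chosen error trial (ties count as 0.5)."""
    hits = [c for c, ok in zip(confidence, correct) if ok]
    errors = [c for c, ok in zip(confidence, correct) if not ok]
    score = 0.0
    for h in hits:
        for e in errors:
            if h > e:
                score += 1.0
            elif h == e:
                score += 0.5
    return score / (len(hits) * len(errors))


def mean(values):
    return sum(values) / len(values)


# Hypothetical trials: confidence ratings (%) and choice accuracy.
confidence = [90, 80, 70, 85, 60, 55, 75, 72]
correct = [True, True, True, True, False, False, True, False]

# A pure confidence bias: every rating lowered by the same amount.
biased = [c - 10 for c in confidence]

print(mean(confidence), type2_auroc(confidence, correct))
print(mean(biased), type2_auroc(biased, correct))
```

Because a uniform shift preserves every pairwise comparison between correct and error trials, the sensitivity measure is untouched while mean confidence drops by 10 points; a change in sensitivity would instead alter how well ratings separate correct from error trials.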

One of the motivations behind the present study was to rule out a potential alternative explanation of the observed decrease in confidence: participants could derive confidence estimates by monitoring changes in their own response times. Indeed, because it has been suggested that humans can infer confidence levels from observing their RTs (Desender et al., 2017; Kiani et al., 2014), the valence-induced bias on confidence could be spuriously driven by a valence-induced motor bias operating at the level of motor initiation (Boureau and Dayan, 2011; Guitart-Masip et al., 2012). Valence-induced confidence biases would then merely reflect a secondary effect of valence mediated by response-time slowing, rather than a primary metacognitive bias. Crucially, this possibility is not ruled out by previous studies, in which investigations of the effects of affective states on confidence judgments in perceptual or cognitive tasks typically lacked control over RT (Giardini et al., 2008; Koellinger and Treffers, 2015; Massoni, 2014; but see Lebreton et al., 2018). We addressed this issue in the current set of experiments by dissociating decisions from the motor mapping of responses, thereby partially removing the association between RT and confidence.
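The alternative explanation can be made concrete with a toy trial-level simulation in Python. All parameters are hypothetical, and this is not the analysis reported here. Under a pure RT read-out, confidence depends on RT alone, so loss trials are less confident only because they are slower, and the gain minus loss confidence gap should vanish once RT is partialled out. If valence also lowers confidence directly, a gap survives. The sketch residualizes confidence on RT with a single pooled regression, a simplification of regressing confidence on RT and context jointly.

```python
import random


def linear_fit(x, y):
    """Ordinary least-squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return my - b1 * mx, b1


def gain_minus_loss(values, contexts):
    """Mean of `values` in gain trials minus mean in loss trials."""
    gain = [v for v, c in zip(values, contexts) if c == "gain"]
    loss = [v for v, c in zip(values, contexts) if c == "loss"]
    return sum(gain) / len(gain) - sum(loss) / len(loss)


def simulate(direct_effect, seed, n_per_context=1000):
    """Hypothetical participant: loss slows RT by 80 ms; confidence falls with
    RT and, if direct_effect > 0, is also lowered directly in loss trials.
    Returns (raw confidence gap, gap after partialling out RT)."""
    rng = random.Random(seed)
    contexts, rts, confs = [], [], []
    for context in ("gain", "loss"):
        for _ in range(n_per_context):
            is_loss = context == "loss"
            rt = 600.0 + (80.0 if is_loss else 0.0) + rng.gauss(0.0, 100.0)
            conf = 90.0 - 0.05 * (rt - 600.0) + rng.gauss(0.0, 5.0)
            if is_loss:
                conf -= direct_effect
            contexts.append(context)
            rts.append(rt)
            confs.append(conf)
    b0, b1 = linear_fit(rts, confs)
    residuals = [c - (b0 + b1 * r) for r, c in zip(rts, confs)]
    return gain_minus_loss(confs, contexts), gain_minus_loss(residuals, contexts)


raw_h0, resid_h0 = simulate(direct_effect=0.0, seed=7)  # RT read-out only
raw_h1, resid_h1 = simulate(direct_effect=4.0, seed=7)  # plus a direct bias
print(f"RT read-out only: raw {raw_h0:.2f}, RT partialled out {resid_h0:.2f}")
print(f"direct bias:      raw {raw_h1:.2f}, RT partialled out {resid_h1:.2f}")
```

Because RT and context are correlated, the pooled slope absorbs part of a direct valence effect, so the surviving gap is somewhat smaller than the simulated direct effect; a joint regression on RT and context would recover it more fully.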

We analyzed six datasets: two previously published (Exp. 1-2) and four new (Exp. 3-6). The first noticeable result is that, across all six datasets, we systematically replicated previous instrumental-learning results obtained with the same paradigm, with very consistent effect sizes (Palminteri et al., 2015, 2016): participants learned equally well to seek reward and to avoid punishment, and learning performance benefited from complete information (i.e., feedback about the counterfactual outcome). The reliability of the results extended beyond choice behavior: as previously reported (Fontanesi et al., 2019; Lebreton et al., 2019), confidence was lower and RTs were longer in punishment contexts than in reward contexts, confirming the robustness of the valence bias.

The second important result is that the slowing of RTs in loss contexts is extremely resilient: it was still observed, albeit with significantly smaller effect sizes, when the mapping between motor response and option selection was dissociated by our experimental design (Exp. 3-4) and when substantial time pressure was applied to the decision (Exp. 6). This result speaks to the strength and pervasiveness of the valence-induced bias operating at the motor level (Boureau and Dayan, 2011; Guitart-Masip et al., 2012).

Third, and importantly, we still observed a significant valence effect on confidence when the valence effects on RT were dramatically reduced (Exp. 3, 4, and 6) or absent (Exp. 5), indicating that the lower confidence observed in the loss-avoidance context is, at least partly, dissociable from the concomitant slowing of motor responses. This was confirmed by inter-individual difference analyses showing that, in all six experiments, a theoretical subject exhibiting no valence-induced bias in RT would still exhibit a valence-induced bias in confidence. The absence of a significant motor bias in Exp. 5 could reflect the success of the changes to the experimental setup, which were implemented with this specific goal in mind. Yet, it could also be a false negative: the experimental setup might still have failed to cancel the motor bias, and the sampled participants just happened, by chance, not to exhibit it. Regardless of the reason for this null effect, the important point is that in this sample, where we failed to detect a significant effect of valence on reaction times, there was still an effect of valence on confidence. Altogether, these results suggest that the valence-induced bias on confidence reported in human reinforcement learning (Lebreton et al., 2019) is unlikely to be a mere consequence of response-time slowing caused by an aversive motor Pavlovian bias. Our results are consistent with recent findings (Dotan et al., 2018) challenging the notion that humans infer confidence levels purely from observing their own response times, and suggesting that decision reaction times are a consequence rather than a cause of the feeling of confidence (Desender et al., 2017; Kiani et al., 2014).
It is worth noting that in most studies, decision time (i.e., when participants reach a decision) and response time (i.e., when participants indicate their choice) are not experimentally dissociated and are often conflated in the same measure. We delayed the mapping between decisions (in option space) and action selection (in motor space), which gave us effective control over response times. Future studies should investigate whether participants can keep track of an internal measure of decision time, which could influence confidence. Likewise, we cannot claim that our experimental manipulations removed all valence (Pavlovian) effects on motor responses: we only managed to modulate one component of our participants’ response vigor, namely response times (RTs).

In our data, we also observed that confidence ratings and RTs are robustly associated, regardless of the time-pressure manipulation: the negative correlation between confidence and RT was found consistently across all six experiments. This coupling is consistent with predictions from most sequential-sampling models (van den Berg et al., 2016; De Martino et al., 2013; Navajas et al., 2016; Pleskac and Busemeyer, 2007; Ratcliff and Starns, 2009, 2013; Yu et al., 2015), which posit that confidence and RT jointly emerge from a single mechanism of evidence accumulation. Importantly, we still observed robust correlations between confidence and motor RTs when action selection was dissociated from option evaluation. Therefore, the motor execution of a decision might be more important than previously thought in sequential-sampling models of confidence, which mostly focus on decision times.
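One way to see how a single accumulation process can produce a negative confidence-RT correlation is a two-counter accumulator with a balance-of-evidence confidence readout, in the spirit of Vickers' accumulator model (Vickers et al., 1985). This Python sketch is an illustration under assumed parameters, not a full implementation of any of the cited models: RT is counted in samples, and confidence is the difference between the two counters when the first one reaches threshold. The helper names are hypothetical.

```python
import math
import random


def accumulator_trial(rng, mean_evidence, threshold, max_samples=10_000):
    """One trial of a two-counter accumulator.

    Each evidence sample is drawn from N(mean_evidence, 1); positive samples
    add to the counter for the correct option, negative samples (sign-flipped)
    add to the other counter. The first counter to reach `threshold` wins.
    Returns (n_samples, correct, balance_of_evidence).
    """
    correct_total = error_total = 0.0
    n = 0
    while correct_total < threshold and error_total < threshold and n < max_samples:
        x = rng.gauss(mean_evidence, 1.0)
        if x > 0:
            correct_total += x
        else:
            error_total -= x
        n += 1
    return n, correct_total >= threshold, abs(correct_total - error_total)


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(1985)
trials = [accumulator_trial(rng, mean_evidence=0.3, threshold=10.0)
          for _ in range(2000)]
rts = [t[0] for t in trials]
confidence = [t[2] for t in trials]
accuracy = sum(t[1] for t in trials) / len(trials)
r = pearson(rts, confidence)
print(f"accuracy = {accuracy:.2f}, corr(RT, confidence) = {r:.2f}")
```

Both counters only ever increase, so slow trials are those in which the losing counter had more time to grow; the balance at decision, and hence confidence, is then smaller. Converting samples to seconds would require an assumed sampling interval plus a non-decision (motor) time, which would shift RTs without changing this correlation.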

The replicability and robustness of the valence-induced confidence bias suggest that manipulations of valence could prove useful to dissociate fundamental components of decision-making and metacognitive judgment, such as objective uncertainty and subjective confidence (Bang and Fleming, 2018). A dissociation between objective uncertainty and subjective confidence is anticipated by post-decisional and second-order models of confidence (Fleming and Daw, 2017; Pleskac and Busemeyer, 2007), which postulate that confidence is formed after the decision and might therefore be influenced by other internal or external variables (Moran et al., 2015; Navajas et al., 2016; Yu et al., 2015). Our results do not rule out the possibility that RT is used to guide metacognitive judgments of confidence before and after the decision. In fact, the observation that participants who exhibit the strongest valence-induced motor bias also exhibit the strongest confidence bias (significant negative slope(s) in Fig. 4A-B and Table 3) indicates that their reaction times and confidence are linked. Observing one’s RTs could therefore be one of the factors that influence confidence after the decision is made, as posited in second-order models.

In a previous study (Fontanesi et al., 2019), we analyzed the effects of valence on RT in a different dataset collected with a similar experimental design, albeit without confidence judgments. There, using an approach combining reinforcement-learning and diffusion-decision modelling, we reported that valence influences two critical parameters of the response-time model: the non-decision time, which typically represents perceptual and motor processes, and the decision threshold, which indexes response cautiousness. We speculate that this distinction is relevant to interpreting the present results. We propose that the portion of the valence-induced response-time slowing that we were able to cancel through the response-mapping manipulation could be linked to the non-decision-time modulation, whereas the residual, irreducible valence-induced slowing could be linked to increased response cautiousness. Yet, because the response mapping was disrupted in most experiments of the current study, the combined reinforcement-learning and diffusion-decision modelling approach cannot be applied to the present data to test this hypothesis. Further experiments are therefore needed to refine the computational description of valence-induced biases in reinforcement learning and their consequences on performance, confidence and response times.
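The distinction between the two parameters can be illustrated with a forward simulation of a basic drift-diffusion model in Python. The parameter values are arbitrary, and this is not the hierarchical reinforcement-learning diffusion model fitted by Fontanesi et al. (2019); the function name `simulate_ddm` is hypothetical. Increasing the non-decision time shifts every RT by the same amount without changing accuracy, whereas raising the decision threshold slows responses and also increases accuracy.

```python
import math
import random


def simulate_ddm(drift, threshold, non_decision, n_trials=2000, seed=0, dt=0.001):
    """Simulate a drift-diffusion model with bounds at 0 and `threshold`,
    an unbiased start point (threshold / 2), unit diffusion noise, and a
    fixed non-decision time added to every decision time.
    Returns (mean_rt_seconds, proportion_correct)."""
    rng = random.Random(seed)
    noise_sd = math.sqrt(dt)  # Euler-Maruyama step for unit diffusion
    total_rt, n_correct = 0.0, 0
    for _ in range(n_trials):
        x, t = threshold / 2.0, 0.0
        while 0.0 < x < threshold:
            x += drift * dt + noise_sd * rng.gauss(0.0, 1.0)
            t += dt
        total_rt += t + non_decision
        if x >= threshold:  # upper bound = correct response
            n_correct += 1
    return total_rt / n_trials, n_correct / n_trials


baseline = simulate_ddm(drift=1.0, threshold=1.0, non_decision=0.30, seed=1)
slower_motor = simulate_ddm(drift=1.0, threshold=1.0, non_decision=0.40, seed=1)
more_cautious = simulate_ddm(drift=1.0, threshold=1.5, non_decision=0.30, seed=1)
for name, (rt, acc) in [("baseline", baseline), ("longer non-decision", slower_motor),
                        ("higher threshold", more_cautious)]:
    print(f"{name:>20}: mean RT = {rt:.3f} s, accuracy = {acc:.3f}")
```

Because runs with the same seed share identical decision paths, `slower_motor` differs from `baseline` only by the 0.1 s added to each RT, while `more_cautious` trades speed for accuracy.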

Finally, the question arises to what extent incentive-related, confidence, Pavlovian and instrumental processes, which all influence behavior in the current study, are supported by dissociable or overlapping brain systems. Incentives are typically processed by the brain reward system, of which the ventral striatum (VS) and ventromedial prefrontal cortex (vmPFC) are key structures (Bartra et al., 2013; Haber and Knutson, 2009; Pessoa and Engelmann, 2010). The anterior insula is also often involved in incentive processing and seems to preferentially code negative incentive value (Bartra et al., 2013; Engelmann et al., 2015, 2017; Palminteri et al., 2012). This set of neural structures is also involved in the computation of positive (vmPFC, VS) and negative (anterior insula) reward prediction errors (RPEs). RPEs are an essential part of reinforcement-learning models of Pavlovian and instrumental learning: they reflect the difference between observed and expected rewards (or punishments) and are used to update value estimates for future decisions. Unsurprisingly, the brain regions associated with Pavlovian-Instrumental Transfer include these regions associated with processing predominantly appetitive stimuli, i.e., the ventral striatum and ventral prefrontal cortex, but also regions associated with predominantly aversive stimuli, i.e., the amygdala (Cartoni et al., 2016; Holmes et al., 2010; Talmi et al., 2008). Interestingly, recent neuroimaging studies have shown that neural signals in the vmPFC correlate with confidence judgments in a variety of tasks (De Martino et al., 2013; Lebreton et al., 2015; Shapiro and Grafton, 2020). Taken together, there is significant overlap between the neural systems that support incentive processing (VS, vmPFC) and appetitive Pavlovian and instrumental learning (VS), on the one hand, and confidence (vmPFC), on the other.
Note further that the ventral striatum is situated in the basal ganglia and is directly connected with the vmPFC (Haber and Knutson, 2009); it can therefore function as an interface between motor and affective/motivational systems. Regions encoding incentives and learning in the aversive domain, however, do not seem to share the same direct interconnectivity with vmPFC and motor regions (Cerliani et al., 2012). The concurrent representation of key cognitive processes in subregions of the reward system, together with its connectivity profile, makes it a good candidate to explain the valence-induced motor and confidence biases observed in the current study. Note, however, that these are merely neuroanatomical hypotheses based on integrating results from related literatures on reward, reinforcement learning, and PIT. It is therefore essential that future neuroimaging research identify the neurobiological basis of the valence-induced motor and confidence biases that we demonstrated.
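For readers less familiar with the reward prediction errors discussed above, the sketch below shows the standard delta-rule update in Python, with hypothetical outcomes and learning rate. It is a simplified illustration: context-dependent reinforcement-learning models (Palminteri et al., 2015) include additional components not shown here, and the function name `rpe_update` is hypothetical. The optional counterfactual update corresponds to complete feedback, in which the outcome of the unchosen option is also displayed.

```python
def rpe_update(values, chosen, outcome, alpha, counterfactual_outcome=None):
    """Delta-rule update of option values for a two-option choice.

    The reward prediction error (RPE) is the observed outcome minus the
    expected value of the chosen option; that value moves a fraction `alpha`
    of the RPE. With complete feedback, the unchosen option's value is
    updated in the same way from its own prediction error.
    Returns (new_values, rpe_of_chosen_option).
    """
    new_values = list(values)
    rpe = outcome - new_values[chosen]
    new_values[chosen] += alpha * rpe
    if counterfactual_outcome is not None:
        unchosen = 1 - chosen  # assumes exactly two options, indexed 0 and 1
        new_values[unchosen] += alpha * (counterfactual_outcome - new_values[unchosen])
    return new_values, rpe


# Reward context: choosing option 0 and winning 1 point twice.
q, rpe1 = rpe_update([0.0, 0.0], chosen=0, outcome=1.0, alpha=0.5)
q, rpe2 = rpe_update(q, chosen=0, outcome=1.0, alpha=0.5)
print(q, rpe1, rpe2)  # the RPE shrinks as the outcome becomes expected

# Punishment context with complete feedback: choosing option 1 avoids the
# loss, while the forgone option 0 would have cost 1 point.
q_loss, rpe_loss = rpe_update([0.0, 0.0], chosen=1, outcome=0.0, alpha=0.5,
                              counterfactual_outcome=-1.0)
print(q_loss, rpe_loss)
```

In the punishment example the factual RPE is zero, so without the counterfactual outcome nothing would be learned on this trial; the forgone loss is what lowers the value of the worse option.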

Author note

This work was supported by startup funds from the Amsterdam School of Economics, awarded to JBE. JBE and ML are grateful for support from Amsterdam Brain and Cognition (ABC). ML is supported by the Swiss National Fund Ambizione Grant (PZ00P3_174127). SP is supported by an ATIP-Avenir grant (R16069JS), the Programme Emergence(s) de la Ville de Paris, the Fyssen foundation and a Collaborative Research in Computational Neuroscience ANR-NSF grant (ANR-16-NEUC-0004).

Open practices statement

The data and materials for all experiments, as well as code to reproduce all analyses and figures, are made available on figshare: 10.6084/m9.figshare.12555329. None of the experiments was preregistered.

References

Bang, D., and Fleming, S.M. (2018). Distinct encoding of decision confidence in human medial prefrontal cortex. Proceedings of the National Academy of Sciences 115, 6082–6087.

Bartra, O., McGuire, J.T., and Kable, J.W. (2013). The valuation system: A coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. NeuroImage 76, 412–427.

Becker, G.M., Degroot, M.H., and Marschak, J. (1964). Measuring utility by a single-response sequential method. Behavioral Science 9, 226–232.

Bor, D., Schwartzman, D.J., Barrett, A.B., and Seth, A.K. (2017). Theta-burst transcranial magnetic stimulation to the prefrontal or parietal cortex does not impair metacognitive visual awareness. PLoS One 12, e0171793.

Boureau, Y.-L., and Dayan, P. (2011). Opponency Revisited: Competition and Cooperation Between Dopamine and Serotonin. Neuropsychopharmacology 36, 74–97.

Cartoni, E., Balleine, B., and Baldassarre, G. (2016). Appetitive Pavlovian-instrumental Transfer: A review. Neuroscience and Biobehavioral Reviews 71, 829–848.

Cerliani, L., Thomas, R.M., Jbabdi, S., Siero, J.C.W., Nanetti, L., Crippa, A., Gazzola, V., D’Arceuil, H., and Keysers, C. (2012). Probabilistic tractography recovers a rostrocaudal trajectory of connectivity variability in the human insular cortex. Human Brain Mapping 33, 2005–2034.

Colwill, R.M., and Rescorla, R.A. (1988). Associations between the discriminative stimulus and the reinforcer in instrumental learning. Journal of Experimental Psychology: Animal Behavior Processes 14, 155.

Daw, N.D., Niv, Y., and Dayan, P. (2005). Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature Neuroscience 8, 1704–1711.

De Martino, B., Fleming, S.M., Garrett, N., and Dolan, R.J. (2013). Confidence in value-based choice. Nature Neuroscience 16, 105–110.

Desender, K., Van Opstal, F., and Van den Bussche, E. (2017). Subjective experience of difficulty depends on multiple cues. Scientific Reports 7, 44222.

Donoso, M., Collins, A.G.E., and Koechlin, E. (2014). Foundations of human reasoning in the prefrontal cortex. Science 344, 1481–1486.

Dotan, D., Meyniel, F., and Dehaene, S. (2018). On-line confidence monitoring during decision making. Cognition 171, 112–121.

Ducharme, W.M., and Donnell, M.L. (1973). Intrasubject comparison of four response modes for “subjective probability” assessment. Organizational Behavior and Human Performance 10, 108–117.

Engelmann, J.B., Berns, G.S., and Dunlop, B.W. (2017). Hyper-responsivity to losses in the anterior insula during economic choice scales with depression severity. Psychological Medicine 47, 2879.

Engelmann, J.B., Meyer, F., Fehr, E., and Ruff, C.C. (2015). Anticipatory Anxiety Disrupts Neural Valuation during Risky Choice. The Journal of Neuroscience 35, 3085–3099.

Fleming, S.M., and Daw, N.D. (2017). Self-evaluation of decision-making: A general Bayesian framework for metacognitive computation. Psychological Review 124, 91–114.

Fleming, S.M., and Dolan, R.J. (2012). The neural basis of metacognitive ability. Philosophical Transactions of the Royal Society B: Biological Sciences 367, 1338–1349.

Fleming, S.M., Huijgen, J., and Dolan, R.J. (2012). Prefrontal Contributions to Metacognition in Perceptual Decision Making. The Journal of Neuroscience 32, 6117–6125.

Fleming, S.M., and Lau, H.C. (2014). How to measure metacognition. Frontiers in Human Neuroscience 8.

Fleming, S.M., Maniscalco, B., Ko, Y., Amendi, N., Ro, T., and Lau, H. (2015). Action-specific disruption of perceptual confidence. Psychological Science 26, 89–98.

Fleming, S.M., Ryu, J., Golfinos, J.G., and Blackmon, K.E. (2014). Domain-specific impairment in metacognitive accuracy following anterior prefrontal lesions. Brain 137, 2811–2822.

Folke, T., Jacobsen, C., Fleming, S.M., and De Martino, B. (2016). Explicit representation of confidence informs future value-based decisions. Nature Human Behaviour 1, 0002.

Fontanesi, L., Palminteri, S., and Lebreton, M. (2019). Decomposing the effects of context valence and feedback information on speed and accuracy during reinforcement learning: a meta-analytical approach using diffusion decision modeling. Cognitive, Affective, & Behavioral Neuroscience 19, 490–502.

Geller, E.S., and Whitman, C.P. (1973). Confidence in stimulus predictions and choice reaction time. Memory & Cognition 1, 361–368.

Giardini, F., Coricelli, G., Joffily, M., and Sirigu, A. (2008). Overconfidence in Predictions as an Effect of Desirability Bias. In Advances in Decision Making Under Risk and Uncertainty, M. Abdellaoui and J.D. Hey, eds. (Springer Berlin Heidelberg), pp. 163–180.

Guitart-Masip, M., Huys, Q.J.M., Fuentemilla, L., Dayan, P., Duzel, E., and Dolan, R.J. (2012). Go and no-go learning in reward and punishment: Interactions between affect and effect. NeuroImage 62, 154–166.

Haber, S.N., and Knutson, B. (2009). The Reward Circuit: Linking Primate Anatomy and Human Imaging. Neuropsychopharmacology 35, 4–26.

Heilbron, M., and Meyniel, F. (2019). Confidence resets reveal hierarchical adaptive learning in humans. PLoS Computational Biology 15, e1006972.

Hollard, G., Massoni, S., and Vergnaud, J.-C. (2016). In search of good probability assessors: an experimental comparison of elicitation rules for confidence judgments. Theory and Decision 80, 363–387.

Holmes, N.M., Marchand, A.R., and Coutureau, E. (2010). Pavlovian to instrumental transfer: A neurobehavioural perspective. Neuroscience and Biobehavioral Reviews 34, 1277–1295.

Jahfari, S., Ridderinkhof, K.R., Collins, A.G.E., Knapen, T., Waldorp, L.J., and Frank, M.J. (2019). Cross-Task Contributions of Frontobasal Ganglia Circuitry in Response Inhibition and Conflict-Induced Slowing. Cerebral Cortex 29, 1969–1983.

Karni, E. (2009). A Mechanism for Eliciting Probabilities. Econometrica 77, 603–606.

Kiani, R., Corthell, L., and Shadlen, M.N. (2014). Choice Certainty Is Informed by Both Evidence and Decision Time. Neuron 84, 1329–1342.

Koellinger, P., and Treffers, T. (2015). Joy Leads to Overconfidence, and a Simple Countermeasure. PLoS ONE 10, e0143263.

Lebreton, M., Abitbol, R., Daunizeau, J., and Pessiglione, M. (2015). Automatic integration of confidence in the brain valuation signal. Nature Neuroscience 18, 1159–1167.

Lebreton, M., Bacily, K., Palminteri, S., and Engelmann, J. (2019). Contextual influence on confidence judgments in human reinforcement learning. 4, 27.

Lebreton, M., Langdon, S., Slieker, M.J., Nooitgedacht, J.S., Goudriaan, A.E., Denys, D., van Holst, R.J., and Luigjes, J. (2018). Two sides of the same coin: Monetary incentives concurrently improve and bias confidence judgments. Science Advances. 14.

Mahlberg, J., Seabrooke, T., Weidemann, G., Hogarth, L., Mitchell, C.J., and Moustafa, A.A. (2019). Human appetitive Pavlovian-to-instrumental transfer: a goal-directed account. Psychological Research.

Massoni, S. (2014). Emotion as a boost to metacognition: How worry enhances the quality of confidence. Consciousness and Cognition 29, 189–198.

Miele, D.B., Wager, T.D., Mitchell, J.P., and Metcalfe, J. (2011). Dissociating Neural Correlates of Action Monitoring and Metacognition of Agency. Journal of Cognitive Neuroscience 23, 3620–3636.

Moran, R., Teodorescu, A.R., and Usher, M. (2015). Post choice information integration as a causal determinant of confidence: Novel data and a computational account. Cognitive Psychology 78, 99–147.

Mowrer, O.H. (1952). Chapter V: Learning Theory. Review of Educational Research 22, 475–495.

Navajas, J., Bahrami, B., and Latham, P.E. (2016). Post-decisional accounts of biases in confidence. Current Opinion in Behavioral Sciences 11, 55–60.

Palminteri, S., Justo, D., Jauffret, C., Pavlicek, B., Dauta, A., Delmaire, C., Czernecki, V., Karachi, C., Capelle, L., and Durr, A. (2012). Critical roles for anterior insula and dorsal striatum in punishment-based avoidance learning. Neuron 76, 998–1009.

Palminteri, S., Khamassi, M., Joffily, M., and Coricelli, G. (2015). Contextual modulation of value signals in reward and punishment learning. Nature Communications 6.

Palminteri, S., Kilford, E.J., Coricelli, G., and Blakemore, S.-J. (2016). The Computational Development of Reinforcement Learning during Adolescence. PLoS Computational Biology 12, e1004953.

Pessoa, L., and Engelmann, J.B. (2010). Embedding Reward Signals into Perception and Cognition. Frontiers in Neuroscience 4.

Pleskac, T.J., and Busemeyer, J. (2007). A Dynamic and Stochastic Theory of Choice, Response Time, and Confidence. 7.

Pleskac, T.J., and Busemeyer, J.R. (2010). Two-stage dynamic signal detection: A theory of choice, decision time, and confidence. Psychological Review 117, 864–901.

Pouget, A., Drugowitsch, J., and Kepecs, A. (2016). Confidence and certainty: distinct probabilistic quantities for different goals. Nature Neuroscience 19, 366–374.

Qiu, L., Su, J., Ni, Y., Bai, Y., Zhang, X., Li, X., and Wan, X. (2018). The neural system of metacognition accompanying decision-making in the prefrontal cortex. PLoS Biology 16, e2004037.

Ratcliff, R., and Starns, J.J. (2009). Modeling confidence and response time in recognition memory. Psychological Review 116, 59–83.

Ratcliff, R., and Starns, J.J. (2013). Modeling confidence judgments, response times, and multiple choices in decision making: Recognition memory and motion discrimination. Psychological Review 120, 697–719.

Rescorla, R.A., and Solomon, R.L. (1967). Two-process learning theory: Relationships between Pavlovian conditioning and instrumental learning. Psychological Review 74, 151.

Rounis, E., Maniscalco, B., Rothwell, J.C., Passingham, R.E., and Lau, H. (2010). Theta-burst transcranial magnetic stimulation to the prefrontal cortex impairs metacognitive visual awareness. Cognitive Neuroscience 1, 165–175.

Schlag, K.H., Tremewan, J., and van der Weele, J.J. (2015). A penny for your thoughts: a survey of methods for eliciting beliefs. Experimental Economics 18, 457–490.

Schwarz, N., and Clore, G.L. (1983). Mood, misattribution, and judgments of well-being: Informative and directive functions of affective states. Journal of Personality and Social Psychology 45, 513–523.

Shapiro, A.D., and Grafton, S.T. (2020). Subjective value then confidence in human ventromedial prefrontal cortex. PLoS One 15, e0225617.

Talmi, D., Seymour, B., Dayan, P., and Dolan, R.J. (2008). Human Pavlovian–Instrumental Transfer. The Journal of Neuroscience 28, 360–368.

van den Berg, R., Anandalingam, K., Zylberberg, A., Kiani, R., Shadlen, M.N., and Wolpert, D.M. (2016). A common mechanism underlies changes of mind about decisions and confidence. ELife 5, e12192.

Vickers, D., Smith, P., Burt, J., and Brown, M. (1985). Experimental paradigms emphasising state or process limitations: II effects on confidence. Acta Psychologica 59, 163–193.

Vinckier, F., Gaillard, R., Palminteri, S., Rigoux, L., Salvador, A., Fornito, A., Adapa, R., Krebs, M.O., Pessiglione, M., and Fletcher, P.C. (2016). Confidence and psychosis: a neuro-computational account of contingency learning disruption by NMDA blockade. Molecular Psychiatry 21, 946–955.

Watson, P., Wiers, R.W., Hommel, B., and de Wit, S. (2014). Working for food you don’t desire. Cues interfere with goal-directed food-seeking. Appetite 79, 139–148.

Yeung, N., and Summerfield, C. (2012). Metacognition in human decision-making: confidence and error monitoring. Philosophical Transactions of the Royal Society B: Biological Sciences 367, 1310–1321.

Yu, S., Pleskac, T.J., and Zeigenfuse, M.D. (2015). Dynamics of postdecisional processing of confidence. Journal of Experimental Psychology: General 144, 489–510.

Funding

Open access funding provided by University of Geneva.

Author information

Contributions

Designed the study: CCT, ML, SP, and JBE. Collected the data: CCT. Analyzed the data: CCT, ML. Interpreted the results: CCT, ML, and JBE. Drafted the manuscript: ML. Edited and finalized the manuscript: CCT, ML, SP, and JBE.

Ethics declarations

Conflict of interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Jan B. Engelmann and Maël Lebreton share senior authorship.

Electronic supplementary material

ESM 1

(DOCX 955 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ting, CC., Palminteri, S., Engelmann, J.B. et al. Robust valence-induced biases on motor response and confidence in human reinforcement learning. Cogn Affect Behav Neurosci 20, 1184–1199 (2020). https://doi.org/10.3758/s13415-020-00826-0
