Abstract

Reinforcement learning (RL) is a framework of particular importance to psychology, neuroscience and machine learning. Interactions between these fields, as promoted through the common hub of RL, have facilitated paradigm shifts that relate multiple levels of analysis in a single framework (for example, relating dopamine function to a computationally defined RL signal). Recently, more sophisticated RL algorithms have been proposed to better account for human learning, and in particular its oft-documented reliance on two separable systems: a model-based (MB) system and a model-free (MF) system. However, along with many benefits, this dichotomous lens can distort questions, and may contribute to an unnecessarily narrow perspective on learning and decision-making. Here, we outline some of the consequences that come from overconfidently mapping algorithms, such as MB versus MF RL, onto putative cognitive processes. We argue that the field is well positioned to move beyond simplistic dichotomies, and we propose a means of refocusing research questions towards the rich and complex components that comprise learning and decision-making.

References

Roiser, J. P. & Sahakian, B. J. Hot and cold cognition in depression. CNS Spectr. 18, 139–149 (2013).

Dickinson, A. Actions and habits: the development of behavioural autonomy. Philos. Trans. R. Soc. London. B Biol. Sci. 308, 67–78 (1985).

Sloman, S. A. The empirical case for two systems of reasoning. Psychol. Bull. 119, 3 (1996).

Daw, N. D., Gershman, S. J., Seymour, B., Dayan, P. & Dolan, R. J. Model-based influences on humans’ choices and striatal prediction errors. Neuron 69, 1204–1215 (2011).

Stanovich, K. E. & West, R. F. Individual differences in reasoning: implications for the rationality debate? Behav. Brain Sci. 23, 645–665 (2000).

Kahneman, D. & Frederick, S. in Heuristics and Biases: The Psychology of Intuitive Judgment Ch. 2 (eds Gilovich, T., Griffin, D. & Kahneman, D.) 49–81 (Cambridge Univ. Press, 2002).

Daw, N. in Decision Making, Affect, and Learning: Attention and Performance XXIII Ch. 1 (eds Delgado, M. R., Phelps, E. A. and Robbins, T. W.) 1–26 (Oxford Univ. Press, 2011).

Marr, D. & Poggio, T. A computational theory of human stereo vision. Proc. R. Soc. Lond. B. Biol. Sci. 204, 301–328 (1979).

Doll, B. B., Duncan, K. D., Simon, D. A., Shohamy, D. & Daw, N. D. Model-based choices involve prospective neural activity. Nat. Neurosci. 18, 767–772 (2015).

Daw, N. D. Are we of two minds? Nat. Neurosci. 21, 1497–1499 (2018).

Dayan, P. Goal-directed control and its antipodes. Neural Netw. 22, 213–219 (2009).

da Silva, C. F. & Hare, T. A. A note on the analysis of two-stage task results: how changes in task structure affect what model-free and model-based strategies predict about the effects of reward and transition on the stay probability. PLoS ONE 13, e0195328 (2018).

Moran, R., Keramati, M., Dayan, P. & Dolan, R. J. Retrospective model-based inference guides model-free credit assignment. Nat. Commun. 10, 750 (2019).

Akam, T., Costa, R. & Dayan, P. Simple plans or sophisticated habits? State, transition and learning interactions in the two-step task. PLoS Comput. Biol. 11, e1004648 (2015).

Shahar, N. et al. Credit assignment to state-independent task representations and its relationship with model-based decision making. Proc. Natl Acad. Sci. USA 116, 15871–15876 (2019).

Deserno, L. & Hauser, T. U. Beyond a cognitive dichotomy: can multiple decision systems prove useful to distinguish compulsive and impulsive symptom dimensions? Biol. Psychiatry https://doi.org/10.1016/j.biopsych.2020.03.004 (2020).

Schultz, W., Dayan, P. & Montague, P. R. A neural substrate of prediction and reward. Science 275, 1593–1599 (1997).

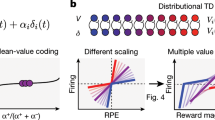

Dabney, W. et al. A distributional code for value in dopamine-based reinforcement learning. Nature 577, 671–675 (2020).

Thorndike, E. L. Animal Intelligence: Experimental Studies (Transaction, 1965).

Bush, R. R. & Mosteller, F. Stochastic models for learning (John Wiley & Sons, Inc. 1955).

Pearce, J. M. & Hall, G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol. Rev. 87, 532–552 (1980).

Rescorla, R. A. & Wagner, A. R. in Classical Conditioning II: Current Research and Theory Ch. 3 (eds Black, A. H. & Prokasy, W. F) 64–99 (Appleton-Century-Crofts, 1972).

Sutton, R. S. & Barto, A. G. Reinforcement learning: An Introduction (MIT Press, 2018).

Montague, P. R., Dayan, P. & Sejnowski, T. J. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 16, 1936–1947 (1996).

Bayer, H. M. & Glimcher, P. W. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron 47, 129–141 (2005).

Morris, G., Nevet, A., Arkadir, D., Vaadia, E. & Bergman, H. Midbrain dopamine neurons encode decisions for future action. Nat. Neurosci. 9, 1057–1063 (2006).

Roesch, M. R., Calu, D. J. & Schoenbaum, G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat. Neurosci. 10, 1615–1624 (2007).

Shen, W., Flajolet, M., Greengard, P. & Surmeier, D. J. Dichotomous dopaminergic control of striatal synaptic plasticity. Science 321, 848–851 (2008).

Steinberg, E. E. et al. A causal link between prediction errors, dopamine neurons and learning. Nat. Neurosci. 16, 966–973 (2013).

Kim, K. M. et al. Optogenetic mimicry of the transient activation of dopamine neurons by natural reward is sufficient for operant reinforcement. PLoS ONE 7, e33612 (2012).

O’Doherty, J. et al. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science 304, 452–454 (2004).

McClure, S. M., Berns, G. S. & Montague, P. R. Temporal prediction errors in a passive learning task activate human striatum. Neuron 38, 339–346 (2003).

Samejima, K., Ueda, Y., Doya, K. & Kimura, M. Representation of action-specific reward values in the striatum. Science 310, 1337–1340 (2005).

Lau, B. & Glimcher, P. W. Value representations in the primate striatum during matching behavior. Neuron 58, 451–463 (2008).

Frank, M. J., Seeberger, L. C. & O’Reilly, R. C. By carrot or by stick: cognitive reinforcement learning in Parkinsonism. Science 306, 1940–1943 (2004).

Frank, M. J., Moustafa, A. A., Haughey, H. M., Curran, T. & Hutchison, K. E. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proc. Natl Acad. Sci. USA 104, 16311–16316 (2007).

Cockburn, J., Collins, A. G. & Frank, M. J. A reinforcement learning mechanism responsible for the valuation of free choice. Neuron 83, 551–557 (2014).

Frank, M. J., O’Reilly, R. C. & Curran, T. When memory fails, intuition reigns: midazolam enhances implicit inference in humans. Psychol. Sci. 17, 700–707 (2006).

Doll, B. B., Hutchison, K. E. & Frank, M. J. Dopaminergic genes predict individual differences in susceptibility to confirmation bias. J. Neurosci. 31, 6188–6198 (2011).

Doll, B. B. et al. Reduced susceptibility to confirmation bias in schizophrenia. Cogn. Affect. Behav. Neurosci. 14, 715–728 (2014).

Berridge, K. C. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology 191, 391–431 (2007).

Hamid, A. A. et al. Mesolimbic dopamine signals the value of work. Nat. Neurosci. 19, 117–126 (2016).

Sharpe, M. J. et al. Dopamine transients are sufficient and necessary for acquisition of model-based associations. Nat. Neurosci. 20, 735–742 (2017).

Tolman, E. C. Cognitive maps in rats and men. Psychol. Rev. 55, 189–208 (1948).

Economides, M., Kurth-Nelson, Z., Lübbert, A., Guitart-Masip, M. & Dolan, R. J. Model-based reasoning in humans becomes automatic with training. PLoS Comput. Biol. 11, e1004463 (2015).

Otto, A. R., Raio, C. M., Chiang, A., Phelps, E. A. & Daw, N. D. Working-memory capacity protects model-based learning from stress. Proc. Natl Acad. Sci. USA 110, 20941–20946 (2013).

Wunderlich, K., Smittenaar, P. & Dolan, R. J. Dopamine enhances model-based over model-free choice behavior. Neuron 75, 418–424 (2012).

Deserno, L. et al. Ventral striatal dopamine reflects behavioral and neural signatures of model-based control during sequential decision making. Proc. Natl Acad. Sci. USA 112, 1595–1600 (2015).

Gillan, C. M., Otto, A. R., Phelps, E. A. & Daw, N. D. Model-based learning protects against forming habits. Cogn. Affect. Behav. Neurosci. 15, 523–536 (2015).

Groman, S. M., Massi, B., Mathias, S. R., Lee, D. & Taylor, J. R. Model-free and model-based influences in addiction-related behaviors. Biol. Psychiatry 85, 936–945 (2019).

Doll, B. B., Simon, D. A. & Daw, N. D. The ubiquity of model-based reinforcement learning. Curr. Opin. Neurobiol. 22, 1075–1081 (2012).

Cushman, F. & Morris, A. Habitual control of goal selection in humans. Proc. Natl Acad. Sci. USA 112, 201506367 (2015).

O’Reilly, R. C. & Frank, M. J. Making working memory work: a computational model of learning in the prefrontal cortex and basal ganglia. Neural Comput. 18, 283–328 (2006).

Collins, A. G. & Frank, M. J. Cognitive control over learning: creating, clustering, and generalizing task-set structure. Psychol. Rev. 120, 190–229 (2013).

Momennejad, I. et al. The successor representation in human reinforcement learning. Nat. Hum. Behav. 1, 680–692 (2017).

Da Silva, C. F. & Hare, T. A. Humans are primarily model-based and not model-free learners in the two-stage task. bioRxiv https://doi.org/10.1101/682922 (2019).

Toyama, A., Katahira, K. & Ohira, H. Biases in estimating the balance between model-free and model-based learning systems due to model misspecification. J. Math. Psychol. 91, 88–102 (2019).

Iigaya, K., Fonseca, M. S., Murakami, M., Mainen, Z. F. & Dayan, P. An effect of serotonergic stimulation on learning rates for rewards apparent after long intertrial intervals. Nat. Commun. 9, 2477 (2018).

Mohr, H. et al. Deterministic response strategies in a trial-and-error learning task. PLoS Comput. Biol. 14, e1006621 (2018).

Hampton, A. N., Bossaerts, P. & O’Doherty, J. P. The role of the ventromedial prefrontal cortex in abstract state-based inference during decision making in humans. J. Neurosci. 26, 8360–8367 (2006).

Boorman, E. D., Behrens, T. E. & Rushworth, M. F. Counterfactual choice and learning in a neural network centered on human lateral frontopolar cortex. PLoS Biol. 9, e1001093 (2011).

Behrens, T. E., Woolrich, M. W., Walton, M. E. & Rushworth, M. F. Learning the value of information in an uncertain world. Nat. Neurosci. 10, 1214–1221 (2007).

Collins, A. G. E. & Koechlin, E. Reasoning, learning, and creativity: frontal lobe function and human decision-making. PLoS Biol. 10, e1001293 (2012).

Gershman, S. J., Norman, K. A. & Niv, Y. Discovering latent causes in reinforcement learning. Curr. Opin. Behav. Sci. 5, 43–50 (2015).

Badre, D., Kayser, A. S. & Esposito, M. D. Article frontal cortex and the discovery of abstract action rules. Neuron 66, 315–326 (2010).

Konovalov, A. & Krajbich, I. Mouse tracking reveals structure knowledge in the absence of model-based choice. Nat. Commun. 11, 1893 (2020).

Gläscher, J., Daw, N., Dayan, P. & O’Doherty, J. P. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron 66, 585–595 (2010).

Huys, Q. J. et al. Interplay of approximate planning strategies. Proc. Natl Acad. Sci. USA 112, 3098–3103 (2015).

Suzuki, S., Cross, L. & O’Doherty, J. P. Elucidating the underlying components of food valuation in the human orbitofrontal cortex. Nat. Neurosci. 20, 1786 (2017).

Badre, D., Doll, B. B., Long, N. M. & Frank, M. J. Rostrolateral prefrontal cortex and individual differences in uncertainty-driven exploration. Neuron 73, 595–607 (2012).

Wilson, R. C., Geana, A., White, J. M., Ludvig, E. A. & Cohen, J. D. Humans use directed and random exploration to solve the explore–exploit dilemma. J. Exp. Psychol. Gen. 143, 2074 (2014).

Otto, A. R., Gershman, S. J., Markman, A. B. & Daw, N. D. The curse of planning: dissecting multiple reinforcement-learning systems by taxing the central executive. Psychol. Sci. 24, 751–761 (2013).

Niv, Y. et al. Reinforcement learning in multidimensional environments relies on attention mechanisms. J. Neurosci. 35, 8145–8157 (2015).

Badre, D. & Frank, M. J. Mechanisms of hierarchical reinforcement learning in cortico-striatal circuits 2: evidence from fMRI. Cereb. Cortex 22, 527–536 (2012).

Collins, A. G. E. Reinforcement learning: bringing together computation and cognition. Curr. Opin. Behav. Sci. 29, 63–68 (2019).

Collins, A. G. in Goal-directed Decision Making (eds Morris, R., Bornstein, A. & Shenhav, A) 105–123 (Elsevier, 2018).

Donoso, M., Collins, A. G. E. & Koechlin, E. Foundations of human reasoning in the prefrontal cortex. Science 344, 1481–1486 (2014).

Wilson, R. C., Takahashi, Y. K., Schoenbaum, G. & Niv, Y. Orbitofrontal cortex as a cognitive map of task space. Neuron 81, 267–278 (2014).

Schuck, N. W., Wilson, R. & Niv, Y. in Goal-directed Decision Making (eds Morris, R., Bornstein, A. & Shenhav, A) 259–278 (Elsevier, 2018).

Ballard, I. C., Wagner, A. D. & McClure, S. M. Hippocampal pattern separation supports reinforcement learning. Nat. Commun. 10, 1073 (2019).

Redish, A. D., Jensen, S., Johnson, A. & Kurth-Nelson, Z. Reconciling reinforcement learning models with behavioral extinction and renewal: implications for addiction, relapse, and problem gambling. Psychol. Rev. 114, 784 (2007).

Bouton, M. E. Context and behavioral processes in extinction. Learn. Mem. 11, 485–494 (2004).

Rescorla, R. A. Spontaneous recovery. Learn. Mem. 11, 501–509 (2004).

O’Reilly, R. C., Frank, M. J., Hazy, T. E. & Watz, B. PVLV: the primary value and learned value Pavlovian learning algorithm. Behav. Neurosci. 121, 31 (2007).

Gershman, S. J., Blei, D. M. & Niv, Y. Context, learning, and extinction. Psychol. Rev. 117, 197–209 (2010).

Wang, J. X. et al. Prefrontal cortex as a meta-reinforcement learning system. Nat. Neurosci. 21, 860–868 (2018).

Iigaya, K. et al. Deviation from the matching law reflects an optimal strategy involving learning over multiple timescales. Nat. Commun. 10, 1466 (2019).

Collins, A. G. E. & Frank, M. J. How much of reinforcement learning is working memory, not reinforcement learning? A behavioral, computational, and neurogenetic analysis. Eur. J. Neurosci. 35, 1024–1035 (2012).

Collins, A. G. E. The tortoise and the hare: interactions between reinforcement learning and working memory. J. Cogn. Neurosci. 30, 1422–1432 (2017).

Viejo, G., Girard, B. B., Procyk, E. & Khamassi, M. Adaptive coordination of working-memory and reinforcement learning in non-human primates performing a trial-and-error problem solving task. Behav. Brain Res. 355, 76–89 (2017).

Poldrack, R. A. et al. Interactive memory systems in the human brain. Nature 414, 546–550 (2001).

Foerde, K. & Shohamy, D. Feedback timing modulates brain systems for learning in humans. J. Neurosci. 31, 13157–13167 (2011).

Bornstein, A. M., Khaw, M. W., Shohamy, D. & Daw, N. D. Reminders of past choices bias decisions for reward in humans. Nat. Commun. 8, 15958 (2017).

Bornstein, A. M. & Norman, K. A. Reinstated episodic context guides sampling-based decisions for reward. Nat. Neurosci. 20, 997–1003 (2017).

Vikbladh, O. M. et al. Hippocampal contributions to model-based planning and spatial memory. Neuron 102, 683–693 (2019).

Decker, J. H., Otto, A. R., Daw, N. D. & Hartley, C. A. From creatures of habit to goal-directed learners: tracking the developmental emergence of model-based reinforcement learning. Psychol. Sci. 27, 848–858 (2016).

Dickinson, A. & Balleine, B. Motivational control of goal-directed action. Anim. Learn. Behav. 22, 1–18 (1994).

Balleine, B. W. & Dickinson, A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37, 407–419 (1998).

Daw, N. D. & Doya, K. The computational neurobiology of learning and reward. Curr. Opin. Neurobiol. 16, 199–204 (2006).

Friedel, E. et al. Devaluation and sequential decisions: linking goal-directed and model-based behavior. Front. Hum. Neurosci. 8, 587 (2014).

de Wit, S. et al. Shifting the balance between goals and habits: five failures in experimental habit induction. J. Exp. Psychol. Gen. 147, 1043–1065 (2018).

Madrigal, R. Hot vs. cold cognitions and consumers’ reactions to sporting event outcomes. J. Consum. Psychol. 18, 304–319 (2008).

Peterson, E. & Welsh, M. C. in Handbook of Executive Functioning (eds Goldstein, S. & Naglieri, J. A.) 45–65 (Springer, 2014).

Barch, D. M. et al. Explicit and implicit reinforcement learning across the psychosis spectrum. J. Abnorm. Psychol. 126, 694–711 (2017).

Taylor, J. A., Krakauer, J. W. & Ivry, R. B. Explicit and implicit contributions to learning in a sensorimotor adaptation task. J. Neurosci. 34, 3023–3032 (2014).

Sloman, S. A. in Heuristics and biases: The psychology of intuitive judgment Ch. 22 (eds Gilovich, T., Griffin, D. & Kahneman D.) 379–396 (Cambridge Univ. Press, 2002).

Evans, J. S. B. T. in In two minds: Dual processes and beyond (eds J. S. B. T. Evans & K. Frankish) p. 33–54 (Oxford Univ. Press, 2009).

Stanovich, K. Rationality and the Reflective Mind (Oxford Univ. Press, 2011).

Dayan, P. The convergence of TD(λ) for general λ. Mach. Learn. 8, 341–362 (1992).

Caplin, A. & Dean, M. Axiomatic methods, dopamine and reward prediction error. Curr. Opin. Neurobiol. 18, 197–202 (2008).

van den Bos, W., Bruckner, R., Nassar, M. R., Mata, R. & Eppinger, B. Computational neuroscience across the lifespan: promises and pitfalls. Dev. Cogn. Neurosci. 33, 42–53 (2018).

Adams, R. A., Huys, Q. J. & Roiser, J. P. Computational psychiatry: towards a mathematically informed understanding of mental illness. J. Neurol. Neurosurg. Psychiatry 87, 53–63 (2016).

Miller, K. J., Shenhav, A. & Ludvig, E. A. Habits without values. Psychol. Rev. 126, 292–311 (2019).

Botvinick, M. M., Niv, Y. & Barto, A. Hierarchically organized behavior and its neural foundations: a reinforcement-learning perspective. Cognition 113, 262–280 (2009).

Konidaris, G. & Barto, A. G. in Advances in Neural Information Processing Systems 22 (eds Bengio, Y., Schuurmans, D., Lafferty, J. D., Williams, C. K. I. & Culotta, A.) 1015–1023 (NIPS, 2009).

Konidaris, G. On the necessity of abstraction. Curr. Opin. Behav. Sci. 29, 1–7 (2019).

Frank, M. J. & Fossella, J. A. Neurogenetics and pharmacology of learning, motivation, and cognition. Neuropsychopharmacology 36, 133–152 (2010).

Collins, A. G. E., Cavanagh, J. F. & Frank, M. J. Human EEG uncovers latent generalizable rule structure during learning. J. Neurosci. 34, 4677–4685 (2014).

Doya, K. What are the computations of the cerebellum, the basal ganglia and the cerebral cortex? Neural Netw. 12, 961–974 (1999).

Fermin, A. S. et al. Model-based action planning involves cortico-cerebellar and basal ganglia networks. Sci. Rep. 6, 31378 (2016).

Gershman, S. J., Markman, A. B. & Otto, A. R. Retrospective revaluation in sequential decision making: a tale of two systems. J. Exp. Psychol. Gen. 143, 182 (2014).

Pfeiffer, B. E. & Foster, D. J. Hippocampal place-cell sequences depict future paths to remembered goals. Nature 497, 74–79 (2013).

Peyrache, A., Khamassi, M., Benchenane, K., Wiener, S. I. & Battaglia, F. P. Replay of rule-learning related neural patterns in the prefrontal cortex during sleep. Nat. Neurosci. 12, 919–926 (2009).

Collins, A. G. E., Albrecht, M. A., Waltz, J. A., Gold, J. M. & Frank, M. J. Interactions among working memory, reinforcement learning, and effort in value-based choice: a new paradigm and selective deficits in schizophrenia. Biol. Psychiatry 82, 431–439 (2017).

Collins, A. G. E., Ciullo, B., Frank, M. J. & Badre, D. Working memory load strengthens reward prediction errors. J. Neurosci. 37, 2700–2716 (2017).

Collins, A. A. G. E. & Frank, M. J. M. Within- and across-trial dynamics of human EEG reveal cooperative interplay between reinforcement learning and working memory. Proc. Natl Acad. Sci. USA 115, 2502–2507 (2018).

Knowlton, B. J., Mangels, J. A. & Squire, L. R. A neostriatal habit learning system in humans. Science 273, 1399–1402 (1996).

Squire, L. R. & Zola, S. M. Structure and function of declarative and nondeclarative memory systems. Proc. Natl Acad. Sci. USA 93, 13515–13522 (1996).

Eichenbaum, H. et al. Memory, Amnesia, and the Hippocampal System (MIT Press, 1993).

Foerde, K. & Shohamy, D. The role of the basal ganglia in learning and memory: insight from Parkinson’s disease. Neurobiol. Learn. Mem. 96, 624–636 (2011).

Wimmer, G. E., Daw, N. D. & Shohamy, D. Generalization of value in reinforcement learning by humans. Eur. J. Neurosci. 35, 1092–1104 (2012).

Wimmer, G. E., Braun, E. K., Daw, N. D. & Shohamy, D. Episodic memory encoding interferes with reward learning and decreases striatal prediction errors. J. Neurosci. 34, 14901–14912 (2014).

Gershman, S. J. The successor representation: its computational logic and neural substrates. J. Neurosci. 38, 7193–7200 (2018).

Kool, W., Cushman, F. A. & Gershman, S. J. in Goal-directed Decision Making Ch. 7 (eds Morris, R. W. & Bornstein, A.) 153–178 (Elsevier, 2018).

Langdon, A. J., Sharpe, M. J., Schoenbaum, G. & Niv, Y. Model-based predictions for dopamine. Curr. Opin. Neurobiol. 49, 1–7 (2018).

Starkweather, C. K., Babayan, B. M., Uchida, N. & Gershman, S. J. Dopamine reward prediction errors reflect hidden-state inference across time. Nat. Neurosci. 20, 581–589 (2017).

Krueger, K. A. & Dayan, P. Flexible shaping: how learning in small steps helps. Cognition 110, 380–394 (2009).

Bhandari, A. & Badre, D. Learning and transfer of working memory gating policies. Cognition 172, 89–100 (2018).

Leong, Y. C. et al. Dynamic interaction between reinforcement learning and attention in multidimensional environments. Neuron 93, 451–463 (2017).

Farashahi, S., Rowe, K., Aslami, Z., Lee, D. & Soltani, A. Feature-based learning improves adaptability without compromising precision. Nat. Commun. 8, 1768 (2017).

Bach, D. R. & Dolan, R. J. Knowing how much you don’t know: a neural organization of uncertainty estimates. Nat. Rev. Neurosci. 13, 572–586 (2012).

Pulcu, E. & Browning, M. The misestimation of uncertainty in affective disorders. Trends Cogn. Sci. 23, 865–875 (2019).

Badre, D., Frank, M. J. & Moore, C. I. Interactionist neuroscience. Neuron 88, 855–860 (2015).

Krakauer, J. W., Ghazanfar, A. A., Gomez-Marin, A., MacIver, M. A. & Poeppel, D. Neuroscience needs behavior: correcting a reductionist bias. Neuron 93, 480–490 (2017).

Doll, B. B., Shohamy, D. & Daw, N. D. Multiple memory systems as substrates for multiple decision systems. Neurobiol. Learn. Mem. 117, 4–13 (2014).

Smittenaar, P., FitzGerald, T. H., Romei, V., Wright, N. D. & Dolan, R. J. Disruption of dorsolateral prefrontal cortex decreases model-based in favor of model-free control in humans. Neuron 80, 914–919 (2013).

Doll, B. B., Bath, K. G., Daw, N. D. & Frank, M. J. Variability in dopamine genes dissociates model-based and model-free reinforcement learning. J. Neurosci. 36, 1211–1222 (2016).

Voon, V. et al. Motivation and value influences in the relative balance of goal-directed and habitual behaviours in obsessive-compulsive disorder. Transl. Psychiatry 5, e670 (2015).

Voon, V., Reiter, A., Sebold, M. & Groman, S. Model-based control in dimensional psychiatry. Biol. Psychiatry 82, 391–400 (2017).

Gillan, C. M., Kosinski, M., Whelan, R., Phelps, E. A. & Daw, N. D. Characterizing a psychiatric symptom dimension related to deficits in goal-directed control. eLife 5, e11305 (2016).

Culbreth, A. J., Westbrook, A., Daw, N. D., Botvinick, M. & Barch, D. M. Reduced model-based decision-making in schizophrenia. J. Abnorm. Psychol. 125, 777–787 (2016).

Patzelt, E. H., Kool, W., Millner, A. J. & Gershman, S. J. Incentives boost model-based control across a range of severity on several psychiatric constructs. Biol. Psychiatry 85, 425–433 (2019).

Skinner, B. F. The Selection of Behavior: The Operant Behaviorism of BF Skinner: Comments and Consequences (CUP Archive, 1988).

Corbit, L. H., Muir, J. L. & Balleine, B. W. Lesions of mediodorsal thalamus and anterior thalamic nuclei produce dissociable effects on instrumental conditioning in rats. Eur. J. Neurosci. 18, 1286–1294 (2003).

Coutureau, E. & Killcross, S. Inactivation of the infralimbic prefrontal cortex reinstates goal-directed responding in overtrained rats. Behav. Brain Res. 146, 167–174 (2003).

Yin, H. H., Knowlton, B. J. & Balleine, B. W. Lesions of dorsolateral striatum preserve outcome expectancy but disrupt habit formation in instrumental learning. Eur. J. Neurosci. 19, 181–189 (2004).

Yin, H. H., Knowlton, B. J. & Balleine, B. W. Inactivation of dorsolateral striatum enhances sensitivity to changes in the action–outcome contingency in instrumental conditioning. Behav. Brain Res. 166, 189–196 (2006).

Ito, M. & Doya, K. Distinct neural representation in the dorsolateral, dorsomedial, and ventral parts of the striatum during fixed-and free-choice tasks. J. Neurosci. 35, 3499–3514 (2015).

Author information

Contributions

The authors contributed equally to all aspects of the article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information

Nature Reviews Neuroscience thanks the anonymous reviewer(s) for their contribution to the peer review of this work.

About this article

Cite this article

Collins, A.G.E., Cockburn, J. Beyond dichotomies in reinforcement learning. Nat Rev Neurosci 21, 576–586 (2020). https://doi.org/10.1038/s41583-020-0355-6

DOI: https://doi.org/10.1038/s41583-020-0355-6
