Abstract

We study the random geometry of first passage percolation on the complete graph equipped with independent and identically distributed positive edge weights. We consider the case where the lower extreme values of the edge weights are highly separated. This model exhibits strong disorder and a crossover between local and global scales. Local neighborhoods are related to invasion percolation that display self-organised criticality. Globally, the edges with relevant edge weights form a barely supercritical Erdős–Rényi random graph that can be described by branching processes. This near-critical behaviour gives rise to optimal paths that are considerably longer than logarithmic in the number of vertices, interpolating between random graph and minimal spanning tree path lengths. Crucial to our approach is the quantification of the extreme-value behavior of small edge weights in terms of a sequence of parameters \((s_n)_{n\ge 1}\) that characterises the different universality classes for first passage percolation on the complete graph. We investigate the case where \(s_n\rightarrow \infty \) with \(s_n=o(n^{1/3})\), which corresponds to the barely supercritical setting. We identify the scaling limit of the weight of the optimal path between two vertices, and we prove that the number of edges in this path obeys a central limit theorem with mean approximately \(s_n\log {(n/s_n^3)}\) and variance \(s_n^2\log {(n/s_n^3)}\). Remarkably, our proof also applies to n-dependent edge weights of the form \(E^{s_n}\), where E is an exponential random variable with mean 1, thus settling a conjecture of Bhamidi et al. (Weak disorder asymptotics in the stochastic meanfield model of distance. Ann Appl Probab 22(1):29–69, 2012). The proof relies on a decomposition of the smallest-weight tree into an initial part following invasion percolation dynamics, and a main part following branching process dynamics. The initial part has been studied in Eckhoff et al. 
(Long paths in first passage percolation on the complete graph I. Local PWIT dynamics. Electron. J. Probab. 25:1–45, 2020. https://doi.org/10.1214/20-EJP484); the current paper focuses on the global branching dynamics.

1 Model and Summary of Results

In this paper, we study first passage percolation on the complete graph equipped with independent and identically distributed positive and continuous edge weights. In contrast to earlier work [11, 12, 16, 20, 27], we consider the case where the extreme values of the edge weights are highly separated.

We start by introducing first passage percolation (FPP). Given a graph \({\mathcal {G}}=(V({\mathcal {G}}),E({\mathcal {G}}))\), let \((Y_e^{\scriptscriptstyle ({\mathcal {G}})})_{e\in E({\mathcal {G}})}\) denote a collection of positive edge weights. Thinking of \(Y_e^{\scriptscriptstyle ({\mathcal {G}})}\) as the cost of crossing an edge e, we can define a metric on \(V({\mathcal {G}})\) by setting

$$\begin{aligned} d_{{\mathcal {G}},Y^{({\mathcal {G}})}}(i,j) = \inf _{\pi :i\rightarrow j} \sum _{e\in \pi } Y_e^{\scriptscriptstyle ({\mathcal {G}})}, \end{aligned}$$(1.1)

where the infimum is over all paths \(\pi \) in \({\mathcal {G}}\) that join i to j, and \(Y^{\scriptscriptstyle ({\mathcal {G}})}\) represents the edge weights \((Y_e^{\scriptscriptstyle ({\mathcal {G}})})_{e\in E({\mathcal {G}})}\). We will always assume that the infimum in (1.1) is attained uniquely, by some (finite) path \(\pi _{i,j}\). We are interested in the situation where the edge weights \(Y_e^{\scriptscriptstyle ({\mathcal {G}})}\) are random, so that \(d_{{\mathcal {G}},Y^{({\mathcal {G}})}}\) is a random metric. In particular, when the graph \({\mathcal {G}}\) is very large, with \(\left| V({\mathcal {G}})\right| =n\) say, we wish to understand the scaling behavior of the following quantities for fixed \(i,j \in V({\mathcal {G}})\):

(a) The distance \(W_n=d_{{\mathcal {G}},Y^{({\mathcal {G}})}}(i,j)\): the total edge cost of the optimal path \(\pi _{i,j}\);

(b) The hopcount \(H_n\): the number of edges in the optimal path \(\pi _{i,j}\);

(c) The topological structure: the shape of the random neighborhood of a point.
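For small n, the quantities in (a) and (b) can be computed directly. The following is a minimal sketch, not the method of this paper: it runs Dijkstra's algorithm on the complete graph and tracks the number of edges along the optimal path. The function name `fpp_complete_graph`, the weight law \(E^s\) with a fixed exponent s, and the seed handling are illustrative assumptions.

```python
import heapq
import random


def fpp_complete_graph(n, s, source=0, target=1, seed=0):
    """Return (W_n, H_n) for FPP on K_n with i.i.d. edge weights E**s.

    E is exponential with mean 1; the exponent s is an illustrative choice.
    """
    rng = random.Random(seed)
    # Symmetric weight matrix: weight[i][j] is the cost of edge {i, j}.
    weight = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            w = rng.expovariate(1.0) ** s
            weight[i][j] = weight[j][i] = w

    dist = [float("inf")] * n   # best known path weight from source
    hops = [0] * n              # number of edges on that best known path
    done = [False] * n
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, v = heapq.heappop(heap)
        if done[v]:
            continue
        done[v] = True
        if v == target:
            break
        for w in range(n):
            if w != v and not done[w]:
                nd = d + weight[v][w]
                if nd < dist[w]:
                    dist[w] = nd
                    hops[w] = hops[v] + 1
                    heapq.heappush(heap, (nd, w))
    return dist[target], hops[target]
```

Calling `fpp_complete_graph(200, 3.0)` returns one sample of the pair (weight, hopcount). The dense weight matrix costs memory of order \(n^2\), so this sketch is only suitable for moderate n.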

In this paper, we consider FPP on the complete graph, which acts as a mean-field model for FPP on finite graphs. In [11], the question was raised what the universality classes are for this model. We bring the discussion substantially further by describing a way to distinguish several universality classes and by identifying the limiting behavior of first passage percolation in one of these classes. The cost regime introduced in (1.1) uses the information from all edges along the path and is known as the weak disorder regime. By contrast, in the strong disorder regime the cost of a path \(\pi \) is given by \(\max _{e \in \pi } Y_e^{\scriptscriptstyle ({\mathcal {G}})}\). We establish a firm connection between the weak and strong disorder regimes in first passage percolation. Interestingly, this connection also establishes a strong relation to invasion percolation (IP) on the Poisson-weighted infinite tree (PWIT), which is the local limit of IP on the complete graph, and also arises in the context of the minimal spanning tree on the complete graph (see e.g. [1]).

Our main interest is in the case \({\mathcal {G}}=K_n\), the complete graph on n vertices \(V(K_n)=[n]:=\left\{ 1,\ldots ,n\right\} \), equipped with independent and identically distributed (i.i.d.) edge weights \((Y_e^{\scriptscriptstyle (K_n)})_{e \in E(K_n)}\). We write Y for a random variable with \(Y\overset{d}{=}Y_e^{\scriptscriptstyle ({\mathcal {G}})}\), and assume that the distribution function \(F_{\scriptscriptstyle Y}\) of Y is continuous. For definiteness, we study the optimal path \(\pi _{1,2}\) between vertices 1 and 2. First, we introduce some general notation:

Notation. All limits in this paper are taken as n tends to infinity unless stated otherwise. A sequence of events \(({\mathcal {A}}_n)_n\) happens with high probability (whp) if \({\mathbb {P}}({\mathcal {A}}_n) \rightarrow 1\). For random variables \((X_n)_n, X\), we write \(X_n {\mathop {\longrightarrow }\limits ^{d}}X\), \(X_n {\mathop {\longrightarrow }\limits ^{\scriptscriptstyle {{\mathbb {P}}}}}X\) and \(X_n {\mathop {\longrightarrow }\limits ^{a.s.}}X\) to denote convergence in distribution, in probability and almost surely, respectively. For real-valued sequences \((a_n)_n\), \((b_n)_n\), we write \(a_n=O(b_n)\) if the sequence \((a_n/b_n)_n\) is bounded; \(a_n=o(b_n)\) if \(a_n/b_n \rightarrow 0\); \(a_n =\Theta (b_n)\) if the sequences \((a_n/b_n)_n\) and \((b_n/a_n)_n\) are both bounded; and \(a_n \sim b_n\) if \(a_n/b_n \rightarrow 1\). Similarly, for sequences \((X_n)_n\), \((Y_n)_n\) of random variables, we write \(X_n=O_{\scriptscriptstyle {{\mathbb {P}}}}(Y_n)\) if the sequence \((X_n/Y_n)_n\) is tight; \(X_n=o_{\scriptscriptstyle {{\mathbb {P}}}}(Y_n)\) if \(X_n/ Y_n {\mathop {\longrightarrow }\limits ^{\scriptscriptstyle {{\mathbb {P}}}}}0\); and \(X_n =\Theta _{{\mathbb {P}}}(Y_n)\) if the sequences \((X_n/Y_n)_n\) and \((Y_n/X_n)_n\) are both tight. We denote by \(\lfloor x\rfloor \) the greatest integer not exceeding x. Moreover, E denotes an exponentially distributed random variable with mean 1. We often need to refer to results from [21], and we will write, e.g., [Part I, Lemma 2.18] for [21, Lemma 2.18].

For a brief overview of notation particular to this paper, see p. 81.

1.1 First Passage Percolation with Regularly-Varying Edge Weights

In this paper, we will consider edge-weight distributions with a heavy tail near 0, in the sense that the distribution function \(F_{\scriptscriptstyle Y}(y)\) decays slowly to 0 as \(y\downarrow 0\). It will prove more convenient to express this notion in terms of the inverse \(F_{\scriptscriptstyle Y}^{-1}(u)\), since we can write

$$\begin{aligned} Y \overset{d}{=}F_{\scriptscriptstyle Y}^{-1}(U), \end{aligned}$$(1.2)

where U is uniformly distributed on [0, 1]. Expressed in terms of \(F_{\scriptscriptstyle Y}^{-1}\), saying that the edge-weight distribution is heavy-tailed near 0 means that \(F_{\scriptscriptstyle Y}^{-1}(u)\) decays rapidly to 0 as \(u\downarrow 0\). We will quantify this notion in terms of the logarithmic derivative of \(F_{\scriptscriptstyle Y}^{-1}\), which will become large as \(u\downarrow 0\).

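In this section, we will assume that

$$\begin{aligned} u\frac{d}{du}\log F_{\scriptscriptstyle Y}^{-1}(u) = u^{-\alpha }L(1/u) \qquad \text {for all } u\in (0,1), \end{aligned}$$(1.3)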

where \(\alpha \ge 0\) and \(t\mapsto L(t)\) is slowly varying as \(t\rightarrow \infty \). That is, for all \(a>0\), \(\lim _{t \rightarrow \infty }L(at)/L(t) = 1\). In other words, we assume that \(u\mapsto u \frac{d}{du}\log F_{\scriptscriptstyle Y}^{-1}(u)= \frac{d}{d(\log u)}\log F_{\scriptscriptstyle Y}^{-1}(u)\) is regularly varying as \(u\downarrow 0\). Recall that a function \({\tilde{L}}: (0,\infty ) \rightarrow (0,\infty )\) is called regularly varying as \(u\downarrow 0\) if \(\lim _{u\downarrow 0}{\tilde{L}}(au)/{\tilde{L}}(u)\) is finite but nonzero for all \(a>0\).

Define a sequence \(s_n\) by setting \(u=1/n\):

$$\begin{aligned} s_n = n^{\alpha }L(n). \end{aligned}$$(1.4)

The asymptotics of the sequence \((s_n)_n\) quantify how heavy-tailed the edge-weight distribution is. For instance, an identically constant sequence, say \(s_n=s\), corresponds to a pure power law \(F_{\scriptscriptstyle Y}(y)=y^{1/s}\), \(F_{\scriptscriptstyle Y}^{-1}(u)=u^s\); larger values of s correspond to heavier-tailed distributions.

In this paper, we are interested in the regime where \(s_n\rightarrow \infty \), which corresponds to a very heavy-tailed distribution function \(F_{\scriptscriptstyle Y}(y)\) that decays to 0 slower than any power of y, as \(y\downarrow 0\).

To describe our scaling results, define

$$\begin{aligned} u_n(x) = F_{\scriptscriptstyle Y}^{-1}(x/n). \end{aligned}$$(1.5)

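Then, for i.i.d. random variables \((Y_i)_{i \in {\mathbb {N}}}\) with distribution function \(F_{\scriptscriptstyle Y}\),

$$\begin{aligned} {\mathbb {P}}\Big ( \min _{i\in [n]} Y_i \le u_n(x) \Big ) \rightarrow 1-{\mathrm e}^{-x}, \qquad x\in (0,\infty ). \end{aligned}$$(1.6)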

In view of (1.6), the family \((u_n(x))_{x\in (0,\infty )}\) are the characteristic values for \(\min _{i\in [n]} Y_i\). See [22] for a detailed discussion of extreme value theory.
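The extreme-value statement around (1.6) can be checked with a few lines of arithmetic. The sketch below assumes that \(u_n(x)=F_{\scriptscriptstyle Y}^{-1}(x/n)\), so that \(F_{\scriptscriptstyle Y}(u_n(x))=x/n\) by continuity of \(F_{\scriptscriptstyle Y}\); under that assumption the probability that the minimum of n i.i.d. weights is at most \(u_n(x)\) equals \(1-(1-x/n)^n\) exactly, whatever the distribution. The function name `prob_min_below` is hypothetical.

```python
import math


def prob_min_below(n, x):
    """Exact value of P(min of n i.i.d. weights <= u_n(x)), assuming F_Y(u_n(x)) = x/n."""
    # Each weight exceeds u_n(x) with probability 1 - x/n, independently.
    return 1.0 - (1.0 - x / n) ** n


def limit_value(x):
    """The limiting value 1 - exp(-x) as n tends to infinity."""
    return 1.0 - math.exp(-x)
```

Comparing `prob_min_below(n, x)` with `limit_value(x)` for increasing n shows the gap shrinking at rate of order 1/n.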

Theorem 1.1

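(Weight and hopcount: regularly-varying logarithmic derivatives) Suppose that the edge weights \((Y_e^{\scriptscriptstyle (K_n)})_{e \in E(K_n)}\) follow an n-independent distribution \(F_{\scriptscriptstyle Y}\) that satisfies (1.3). If the sequence \((s_n)_n\) from (1.4) satisfies \(s_n/\log \log n\rightarrow \infty \) and \(s_n=o(n^{1/3}),\) then there exist sequences \((\lambda _n)_n\) and \((\phi _n)_n\) with \(\phi _n/s_n \rightarrow 1,\) \(\lambda _n u_n(1) \rightarrow {\mathrm e}^{-\gamma },\) where \(\gamma \) is Euler’s constant, such that

$$\begin{aligned} nF_{\scriptscriptstyle Y}\Big ( W_n-\frac{1}{\lambda _n}\log {(n/s_n^3)}\Big ) {\mathop {\longrightarrow }\limits ^{d}}M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)}, \end{aligned}$$(1.7)

$$\begin{aligned} \frac{H_n-\phi _n\log {(n/s_n^3)}}{\sqrt{s_n^2\log {(n/s_n^3)}}} {\mathop {\longrightarrow }\limits ^{d}}Z. \end{aligned}$$(1.8)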

Here Z is standard normal, and \(M^{\scriptscriptstyle (1)}, M^{\scriptscriptstyle (2)}\) are i.i.d. random variables for which \({{\mathbb {P}}}(M^{\scriptscriptstyle (j)}\le x)\) is the survival probability of a Poisson Galton–Watson branching process with mean x.
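The distribution of \(M^{\scriptscriptstyle (j)}\) can be made concrete numerically. It is a standard fact that the survival probability \(\theta (x)\) of a Poisson Galton–Watson process with mean x is 0 for \(x\le 1\) and is the unique positive solution of \(\theta =1-{\mathrm e}^{-x\theta }\) for \(x>1\). The sketch below solves this equation by bisection and also inverts it in closed form, \(x=-\log (1-\theta )/\theta \), which gives a quantile function for M. The function names are hypothetical and the code is an illustration rather than part of the paper's argument.

```python
import math


def survival_probability(x, tol=1e-12):
    """Survival probability of a Poisson(x) Galton-Watson process, i.e. P(M <= x)."""
    if x <= 1.0:
        return 0.0  # critical and subcritical processes die out almost surely
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        # g(mid) = 1 - exp(-x*mid) - mid is positive below the root, negative above it.
        if -math.expm1(-x * mid) - mid > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def quantile_M(u):
    """Value x with survival_probability(x) = u, for u in (0, 1)."""
    return -math.log1p(-u) / u
```

Feeding a uniform random number on (0, 1) into `quantile_M` produces a sample of M by inverse transform; every sample exceeds 1, reflecting that \({{\mathbb {P}}}(M\le 1)=0\).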

Let us discuss the result in Theorem 1.1 in more detail. Under the hypotheses of Theorem 1.1, \(u_n(x)\) varies heavily in x in the sense that \(u_n(x+\delta )/u_n(x)\rightarrow \infty \) for every \(x,\delta >0\). Consequently, the extreme values are widely separated, which is characteristic of the strong disorder regime.

We see in (1.7) that \(W_n-\frac{1}{\lambda _n}\log {(n/s_n^3)}\approx u_n(M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)})\), which means that the weight of the smallest-weight path has a deterministic part \(\frac{1}{\lambda _n}\log {(n/s_n^3)}\), while its random fluctuations are of the same order of magnitude as some of the typical values for the minimal edge weight adjacent to vertices 1 and 2. For \(j \in \left\{ 1,2\right\} \), one can think of \(M^{\scriptscriptstyle (j)}\) as the time needed to “escape” from the local neighborhood of vertex j. The sequences \((\lambda _n)_n\) and \((\phi _n)_n\) will be identified in (3.17)–(3.18), subject to slightly stronger assumptions.

The optimal paths in Theorem 1.1 are long paths because the asymptotic mean of the path length \(H_n\) in (1.8) is of larger order than \(\log n\), the path length that arises in many random graph contexts. See Sect. 2.2 for a comprehensive literature overview. The following example collects some edge-weight distributions that are covered by Theorem 1.1:

Example 1.2

(Examples of weight distributions)

(a) Let \(a,\gamma >0\). Take \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}\exp (-a E^\gamma )\), for which \(\log F_{\scriptscriptstyle Y}^{-1}(u)=-a(\log (1/u))^\gamma \) and

$$\begin{aligned} s_n = a\gamma (\log n)^{\gamma -1}. \end{aligned}$$(1.9)

The hypotheses of Theorem 1.1 are satisfied whenever \(\gamma >1\).

(b) Let \(a,\gamma >0\). Take \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}U^{a(\log (1+\log (1/U)))^\gamma }\), for which \(\log F_{\scriptscriptstyle Y}^{-1}(u)=a\log u (\log (1+\log (1/u)))^\gamma \) and

$$\begin{aligned} s_n = a(\log (1+\log n))^\gamma + a\gamma \frac{\log n}{1+\log n}(\log (1+\log n))^{\gamma -1}. \end{aligned}$$(1.10)

We note that \(s_n\sim a(\log \log n)^\gamma \) as \(n\rightarrow \infty \). The hypotheses of Theorem 1.1 are satisfied whenever \(\gamma >1\). We shall see, however, that the conclusions of Theorem 1.1 also hold when \(0<\gamma \le 1\); see Sect. 2.1 and Lemma 4.8.

(c) Let \(a,\beta >0\). Take \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}\exp (-a U^{-\beta }/\beta )\), for which \(\log F_{\scriptscriptstyle Y}^{-1}(u) = -au^{-\beta } /\beta \) and

$$\begin{aligned} s_n = a n^\beta . \end{aligned}$$(1.11)

The hypotheses of Theorem 1.1 are satisfied when \(0<\beta <1/3\). When \(\beta \ge 1/3\), we conjecture that the hopcount scaling (1.8) fails; see the discussion in Sect. 2.2. An analogue of the weight convergence (1.7) holds in a modified form; see [Part I, Theorem 1.1 and Example 1.4 (c)].
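The closed forms (1.9)–(1.11) can be sanity-checked numerically. The sketch below assumes that \(s_n\) is the logarithmic derivative \(u\frac{d}{du}\log F_{\scriptscriptstyle Y}^{-1}(u)\) evaluated at \(u=1/n\), as described in Sect. 1.1, and approximates it by a central difference in \(\log u\). The helper names, the step size h and the parameter values used in any check are illustrative assumptions.

```python
import math


def s_n_numeric(log_F_inv, n, h=1e-5):
    """Central-difference approximation of d(log F^{-1}) / d(log u) at u = 1/n."""
    t = -math.log(n)  # t = log u at u = 1/n
    return (log_F_inv(math.exp(t + h)) - log_F_inv(math.exp(t - h))) / (2.0 * h)


def example_a(a, gamma):
    """log F^{-1}(u) = -a (log(1/u))^gamma, as in Example 1.2 (a)."""
    return lambda u: -a * math.log(1.0 / u) ** gamma


def example_b(a, gamma):
    """log F^{-1}(u) = a log(u) (log(1 + log(1/u)))^gamma, as in Example 1.2 (b)."""
    return lambda u: a * math.log(u) * math.log(1.0 + math.log(1.0 / u)) ** gamma


def example_c(a, beta):
    """log F^{-1}(u) = -a u^{-beta} / beta, as in Example 1.2 (c)."""
    return lambda u: -a * u ** (-beta) / beta
```

For instance, `s_n_numeric(example_c(2.0, 0.25), 1000)` should agree with \(a n^\beta = 2\cdot 1000^{1/4}\) up to discretisation error.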

Notice that every sequence \((s_n)_n\) of the form \(s_n=n^{\alpha } L(n)\), for \(\alpha \ge 0\) and L slowly varying at infinity, can be obtained from a distribution by taking \(\log F_{\scriptscriptstyle Y}^{-1}(u)=\int u^{-1-\alpha }L(1/u)du\), i.e., the indefinite integral of the function \(u\mapsto u^{-1-\alpha }L(1/u)\). In Sect. 2.1 we will weaken the requirement \(s_n /\log \log n\rightarrow \infty \) to the requirement \(s_n\rightarrow \infty \) subject to an additional regularity assumption.

1.2 First Passage Percolation with n-Dependent Edge Weights

In Theorem 1.1, we started with a fixed edge-weight distribution and extracted a specific sequence \((s_n)_n\). For an essentially arbitrary distribution [subject to the relatively modest regular variation assumption in (1.3)], its FPP properties are fully encoded, at least for the purposes of the conclusions of Theorem 1.1, by the scaling properties of this sequence \((s_n)_n\). Thus, Theorem 1.1 shows the common behaviour of a universality class of edge-weight distributions, and shows that this universality class is described in terms of a sequence of real numbers \((s_n)_n\) and its scaling behaviour.

In this section, we reverse this setup. We take as input a sequence \((s_n)_n\) and consider the n-dependent edge-weight distribution

$$\begin{aligned} Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}E^{s_n}, \end{aligned}$$(1.12)

where E is exponentially distributed with mean 1. (For legibility, our notation will not indicate the implicit dependence of \(Y_e^{\scriptscriptstyle (K_n)}\) on n.) Then the conclusions of Theorem 1.1 hold verbatim:

Theorem 1.3

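(Weight and hopcount: n-dependent edge weights) Let \(Y_e^{\scriptscriptstyle (K_n)}\overset{d}{=}E^{s_n},\) where \((s_n)_n\) is a positive sequence with \(s_n \rightarrow \infty ,\) \(s_n=o(n^{1/3}).\) Then

$$\begin{aligned} n\Big ( W_n-\frac{1}{n^{s_n} \Gamma (1+1/s_n)^{s_n}}\log {(n/s_n^3)}\Big ) ^{1/s_n} {\mathop {\longrightarrow }\limits ^{d}}M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)} \end{aligned}$$(1.13)

and

$$\begin{aligned} \frac{H_n-s_n\log {(n/s_n^3)}}{\sqrt{s_n^2\log {(n/s_n^3)}}} {\mathop {\longrightarrow }\limits ^{d}}Z, \end{aligned}$$(1.14)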

where Z is standard normal and \(M^{\scriptscriptstyle (1)}, M^{\scriptscriptstyle (2)}\) are i.i.d. random variables for which \({{\mathbb {P}}}(M^{\scriptscriptstyle (j)}\le x)\) is the survival probability of a Poisson Galton–Watson branching process with mean x.

We note that Theorem 1.3 resolves a conjecture in [11]. This problem is closely related to the problem of strong disorder on the complete graph, and has attracted considerable attention in the physics literature [18, 23, 33]. The convergence in (1.13) was proved in [Part I, Theorem 1.5 (a)] without the subtraction of the term \(\frac{1}{n^{s_n} \Gamma (1+1/s_n)^{s_n}}\log {(n/s_n^3)}\) in the argument, and under the stronger assumption that \(s_n/\log \log {n}\rightarrow \infty .\)

The edge-weight distribution in Theorem 1.3 allows for a simpler intuitive explanation of (1.7), while the convergence (1.14) verifies the heuristics for the strong disorder regime in [11, Sect. 1.4]. See Remark 4.6 for a discussion of the relation between these two results. As mentioned in Sect. 1.1, strong disorder here refers to the fact that when \(s_n\rightarrow \infty \) the values of the random weights \(E_e^{s_n}\) depend strongly on the disorder \((E_e)_{e\in E({\mathcal {G}})}\), making small values increasingly more, and large values increasingly less, favorable. Mathematically, the elementary limit

$$\begin{aligned} \lim _{s\rightarrow \infty }\left( x_1^s+x_2^s\right) ^{1/s} = x_1\vee x_2 \qquad \text {for } x_1,x_2\ge 0 \end{aligned}$$(1.15)

expresses the convergence of the \(\ell ^s\) norm towards the \(\ell ^\infty \) norm and establishes a relationship between the weak disorder regime and the strong disorder regime of FPP.
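The convergence of the \(\ell ^s\) norm to the \(\ell ^\infty \) norm is easy to observe numerically. The sketch below evaluates \((x_1^s+x_2^s)^{1/s}\) for two non-negative numbers; the function name `ls_norm` is hypothetical, and the maximum is factored out only to avoid floating-point underflow for large s.

```python
def ls_norm(x1, x2, s):
    """Return (x1**s + x2**s)**(1/s) for x1, x2 >= 0 and s > 0."""
    m = max(x1, x2)
    if m == 0.0:
        return 0.0
    # Factor out the maximum so that the powers stay in [0, 1].
    return m * ((x1 / m) ** s + (x2 / m) ** s) ** (1.0 / s)
```

With \(x_1=0.3\) and \(x_2=0.7\), the value at \(s=1\) is the sum of the two weights, while for large s it is essentially the larger weight. This mirrors the passage from the weak disorder cost (a sum along the path) to the strong disorder cost (a maximum along the path).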

Remarkably, a similar argument actually also applies to Theorem 1.1, exemplifying that these settings are in the same universality class. Indeed, Theorem 1.3 shows that n-dependent distributions can be understood in the same framework as the n-independent distributions in Example 1.2. We next explain this comparison and generalize our results further by explaining the universal picture behind them.

2 The Universal Picture

In Sect. 2.1, we generalize the results in Theorems 1.1 and 1.3 to a larger class of edge weights and provide a common language that allows us to prove these results in one go. Having reached this higher level of abstraction, in Sect. 2.2 we embed the results achieved here in the wider picture of universality classes of FPP, and state results and conjectures on how to describe all universality classes and what the scaling behaviour of each of them might be. Links to the relevant literature and existing results are provided. For a short guide to notation, see p. 81.

2.1 Description of the Class of Edge Weights to Which Our Results Apply

In this section, we describe a general framework containing both Theorem 1.1 and Theorem 1.3. This framework, which is formulated in terms of i.i.d. exponential random variables, determines the precise conditions that the edge weights need to satisfy for the results in Theorems 1.1 and 1.3 to apply. Interestingly, due to the parametrization in terms of exponential random variables, this general framework also provides a clear link between the near-critical Erdős–Rényi random graph and our first passage percolation problem where the lower extremes of the edge-weight distribution are highly separated. Finally and conveniently, this framework allows us to prove these theorems simultaneously. In particular, both the n-independent edge weights in Theorem 1.1 and the n-dependent ones in Theorem 1.3 are key examples of the class of edge weights that we will study in this paper.

For fixed n, the edge weights \((Y_e^{\scriptscriptstyle (K_n)})_{e\in E(K_n)}\) are independent for different e. However, there is no requirement that they are independent over n, and in fact in Sect. 5, we will produce \(Y_e^{\scriptscriptstyle (K_n)}\) using a fixed source of randomness not depending on n. Therefore, it will be useful to describe the randomness on the edge weights \(((Y_e^{\scriptscriptstyle (K_n)})_{e\in E(K_n)}:n \in {\mathbb {N}})\) uniformly across the sequence. It will be most useful to give this description in terms of exponential random variables. Fix independent exponential mean 1 variables \((X_e^{\scriptscriptstyle (K_n)})_{e\in E(K_n)}\), and define

$$\begin{aligned} Y_e^{\scriptscriptstyle (K_n)} = g(X_e^{\scriptscriptstyle (K_n)}), \end{aligned}$$(2.1)

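where \(g:(0,\infty )\rightarrow (0,\infty )\) is a strictly increasing function. The relation between g and the distribution function \(F_{\scriptscriptstyle Y}\) is given by

$$\begin{aligned} F_{\scriptscriptstyle Y}(y) = 1-{\mathrm e}^{-g^{-1}(y)} \qquad \text {and}\qquad g(x) = F_{\scriptscriptstyle Y}^{-1}\left( 1-{\mathrm e}^{-x}\right) . \end{aligned}$$(2.2)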

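We define

$$\begin{aligned} f_n(x) = g(x/n) = F_{\scriptscriptstyle Y}^{-1}\left( 1-{\mathrm e}^{-x/n}\right) . \end{aligned}$$(2.3)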

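Let \(Y_1,\dotsc ,Y_n\) be i.i.d. with \(Y_i=g(E_i)\) as in (2.1). Since g is increasing,

$$\begin{aligned} \min _{i\in [n]} Y_i = g\Big ( \min _{i\in [n]} E_i\Big ) \overset{d}{=}g(E/n) = f_n(E). \end{aligned}$$(2.4)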

Because of this convenient relation between the edge weights \(Y_e^{\scriptscriptstyle (K_n)}\) and exponential random variables, we will express our hypotheses about the distribution of the edge weights in terms of conditions on the functions \(f_n(x)\) as \(n\rightarrow \infty \).

Consider first the case \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}E^{s_n}\) from Theorem 1.3. From (2.1), we have \(g(x)=g_n(x)=x^{s_n}\), so that (2.3) yields

$$\begin{aligned} f_n(x) = (x/n)^{s_n} = f_n(1)x^{s_n}. \end{aligned}$$(2.5)

Thus, (2.4)–(2.5) show that the parameter \(s_n\) measures the relative sensitivity of \(\min _{i\in [n]}Y_i\) to fluctuations in the variable E. In general, we will have \(f_n(x)\approx f_n(1)x^{s_n}\) if x is appropriately close to 1 and \(s_n\approx f_n'(1)/f_n(1)\). These observations motivate the following conditions on the functions \((f_n)_n\), which we will use to relate the distributions of the edge weights \(Y_e^{\scriptscriptstyle (K_n)}\), \(n\in {\mathbb {N}}\), to a sequence \((s_n)_n\):

Condition 2.1

(Scaling of \(f_n\)) For every \(x\ge 0,\)

$$\begin{aligned} \frac{f_n(x^{1/s_n})}{f_n(1)} \rightarrow x. \end{aligned}$$(2.6)

Even though we will rely on Condition 2.1 when \(s_n\rightarrow \infty \) and \(s_n=o(n^{1/3})\), we strongly believe that the scaling of the sequence \((s_n)_n\) actually characterises the universality classes, in the sense that the behaviour of \(H_n\) and \(W_n\) is similar for edge weights whose sequences \((s_n)_n\) have similar scaling behaviour, and different for edge weights whose sequences scale differently. We elaborate on this in Sect. 2.2.1, where we identify eight different universality classes and the expected and/or proved results in them.

Condition 2.2

(Density bound for small weights) There exist \(\varepsilon _0>0,\) \(\delta _0\in \left( 0,1\right] \) and \(n_0 \in {\mathbb {N}}\) such that

Condition 2.3

(Density bound for large weights)

(a) For all \(R>1,\) there exist \(\varepsilon >0\) and \(n_0\in {\mathbb {N}}\) such that for every \(1\le x\le R,\) and \(n \ge n_0,\)

$$\begin{aligned} \frac{xf_n'(x)}{f_n(x)}\ge \varepsilon s_n. \end{aligned}$$(2.8)

(b) For all \(C>1,\) there exist \(\varepsilon >0\) and \(n_0\in {\mathbb {N}}\) such that (2.8) holds for every \(n\ge n_0\) and every \(x\ge 1\) satisfying \(f_n(x)\le C f_n(1) \log n.\)

Notice that Condition 2.1 implies that \(f_n(1) \sim u_n(1)\) [recall the definition of \(u_n(x)\) in (1.5)] whenever \(s_n=o(n)\). Indeed, by (2.3) we can write \(u_n(1)=f_n(x_n^{1/s_n})\) for \(x_n = (-n \log (1-1/n))^{s_n}\). Since \(s_n=o(n)\), we have \(x_n=1-o(1)\) and the monotonicity of \(f_n\) implies that \(f_n(x_n^{1/s_n})/f_n(1)\rightarrow 1\). We remark also that (1.6) remains valid if \(u_n(x)\) is replaced by \(f_n(x)\).
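The role of the assumption \(s_n=o(n)\) in the preceding paragraph can be illustrated numerically. Since \(-n\log (1-1/n)=1+\frac{1}{2n}+O(n^{-2})\), the quantity \(x_n=(-n\log (1-1/n))^{s_n}\) behaves like \(\exp (s_n/(2n))\), which tends to 1 exactly when \(s_n/n\rightarrow 0\). The sketch below evaluates \(x_n\) directly; the function name `x_n` and the sample values of n and \(s_n\) are illustrative assumptions.

```python
import math


def x_n(n, s_n):
    """Return (-n * log(1 - 1/n)) ** s_n, using log1p for accuracy at large n."""
    base = -n * math.log1p(-1.0 / n)  # equals 1 + 1/(2n) + O(1/n^2)
    return base ** s_n
```

For \(n=10^6\) and \(s_n=n^{1/3}=100\) the value is within \(10^{-4}\) of 1, whereas for \(s_n=n\) it is close to \({\mathrm e}^{1/2}\), so the approximation \(f_n(1)\sim u_n(1)\) should not be expected without the growth restriction.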

We are now in a position to state our main theorem:

Theorem 2.4

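(Weight and hopcount: general edge weights) Assume that Conditions 2.1–2.3 hold for a positive sequence \((s_n)_n\) with \(s_n\rightarrow \infty \) and \(s_n=o(n^{1/3}).\) Then there exist sequences \((\lambda _n)_n\) and \((\phi _n)_n\) such that \(\phi _n/s_n \rightarrow 1,\) \(\lambda _n f_n(1) \rightarrow {\mathrm e}^{-\gamma },\) where \(\gamma \) is Euler’s constant, and

$$\begin{aligned} f_n^{-1}\Big ( W_n-\frac{1}{\lambda _n}\log {(n/s_n^3)}\Big ) {\mathop {\longrightarrow }\limits ^{d}}M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)}, \end{aligned}$$(2.9)

$$\begin{aligned} \frac{H_n-\phi _n\log {(n/s_n^3)}}{\sqrt{s_n^2\log {(n/s_n^3)}}} {\mathop {\longrightarrow }\limits ^{d}}Z, \end{aligned}$$(2.10)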

where Z is standard normal, and \(M^{\scriptscriptstyle (1)}, M^{\scriptscriptstyle (2)}\) are i.i.d. random variables for which \({{\mathbb {P}}}(M^{\scriptscriptstyle (j)}\le x)\) is the survival probability of a Poisson Galton–Watson branching process with mean x. The convergences in (2.9)–(2.10) hold jointly and the limiting random variables are independent.

The sequences \((\lambda _n)_n\) and \((\phi _n)_n\) are identified in (3.17)–(3.18), subject to the additional Condition 2.6. The proof of Theorem 2.4 is given in Sect. 3.7.

Relation between Theorem 2.4 and Theorems 1.1 and 1.3. Theorems 1.1 and 1.3 follow from Theorem 2.4: in the case \(Y_e^{\scriptscriptstyle (K_n)}\overset{d}{=}E^{s_n}\) from Theorem 1.3, (2.6)–(2.8) hold identically with \(\varepsilon _0=\varepsilon =1\) and we explicitly compute \(\lambda _n=n^{s_n} \Gamma (1+1/s_n)^{s_n}\) and \(\phi _n=s_n\) in Example 6.1. We will prove in Lemma 4.8 that the distributions in Theorem 1.1 satisfy the assumptions of Theorem 2.4. The convergence (1.7) in Theorem 1.1 is equivalent to (2.9) in Theorem 2.4 by the observation that, for any non-negative random variables \((T_n)_n\) and \({\mathcal {M}}\),

$$\begin{aligned} nF_{\scriptscriptstyle Y}(T_n) \rightarrow {\mathcal {M}} \quad \Longleftrightarrow \quad f_n^{-1}(T_n) \rightarrow {\mathcal {M}}, \end{aligned}$$(2.11)

where the convergence is in distribution, in probability or almost surely; see e.g. [Part I, Lemma 5.5] for an example.

The following example describes a generalization of Theorem 1.3:

Example 2.5

Let \((s_n)_n\) be a positive sequence with \(s_n \rightarrow \infty \), \(s_n=o(n^{1/3})\). Let Z be a positive-valued continuous random variable with distribution function G such that \(G'(z)\) exists and is continuous at \(z=0\) with \(G'(0)>0\) [with \(G'(0)\) interpreted as a right-hand derivative]. Take \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}Z^{s_n}\), i.e., \(F_{\scriptscriptstyle Y}(y)=G(y^{1/s_n})\). Then Conditions 2.1–2.3 hold and Theorem 2.4 applies.

For instance, we can take Z to be a uniform distribution on an interval (0, b), for any \(b>0\). We give a proof of this assertion in Lemma 4.8.

Condition 2.3 can be strengthened to the following condition that will be equivalent for our purposes:

Condition 2.6

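(Extended density bound) There exist \(\varepsilon _0>0\) and \(n_0 \in {\mathbb {N}}\) such that

$$\begin{aligned} \frac{xf_n'(x)}{f_n(x)}\ge \varepsilon _0 s_n \qquad \text {for every } x\ge 1,\ n\ge n_0. \end{aligned}$$(2.12)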

Lemma 2.7

It suffices to prove Theorem 2.4 assuming Conditions 2.1, 2.2 and 2.6.

Lemma 2.7, which is proved in Sect. 4.3, reflects the fact that the upper tail of the edge-weight distribution does not substantially influence the first passage percolation problem.

Henceforth, except where otherwise noted, we will assume Conditions 2.1, 2.2 and 2.6. We will reserve the notation \(\varepsilon _0, \delta _0\) for some fixed choice of the constants in Conditions 2.2 and 2.6, with \(\varepsilon _0\) chosen small enough to satisfy both conditions.

2.2 Discussion of Our Results

In this section we discuss our results and state open problems.

2.2.1 The Universality Class in Terms of \(s_n\)

In Sect. 2.1, we have described an edge-weight universality class in terms of \(s_n\). In this paper, we investigate the case where \(s_n\rightarrow \infty \) with \(s_n=o(n^{1/3})\). We conjecture that all universality classes for FPP on the complete graph can be described in terms of the scaling behaviour of the sequence \((s_n)_n\), and below we identify eight universality classes that describe the different scaling behaviours. These eight cases are defined by how fast \(s_n\rightarrow 0\) (four cases), by the case where \(s_n\) converges to a positive and finite constant, and by how fast \(s_n\rightarrow \infty \) (three cases, including the one studied in this paper). We believe that this paper represents a major step forward in this direction in that it describes the scaling behaviour in a large regime of \((s_n)_n\) sequences, and it would be of interest to make this universal picture complete. We next describe the eight scaling regimes of \(s_n\) and the results proved and/or predicted for them.

The regime \(s_n \rightarrow 0\). In view of (2.5), for \(s_n \rightarrow 0\), the first passage percolation problem approximates the graph metric, where the approximation is stronger the faster \(s_n\) tends to zero. We distinguish four different scaling regimes according to how fast \(s_n \rightarrow 0\):

(i) Firstly, \(s_n\log {n}\rightarrow \gamma \in \left[ 0,\infty \right) \): the case that \(Y\overset{d}{=}E^{-\gamma }\) for \(\gamma \in (0,\infty )\) falls into this class with \(s_n=\gamma /\log n\) (see [20, Sect. 1.2]) and was investigated in [16], where it is proved that \(H_n\) is concentrated on at most two values. For the case of n-dependent edge weights \(Y_e^{\scriptscriptstyle (K_n)} \overset{d}{=}E^{s_n}\), it was observed in [20] that when \(s_n \log n\) converges to \(\gamma \) fast enough, the methods of [16] can be imitated and the concentration result for the hopcount continues to hold.

(ii) When \(s_n\log {n}\rightarrow \infty \) but \(s_n^2\log {n}\rightarrow 0\), the variance factor \(s_n^2 \log (n/s_n^3)\) from the central limit theorem (CLT) in (2.10) tends to zero. Since \(H_n\) is integer-valued, it follows that (2.10) must fail in this case. First order asymptotics are investigated in [20], and it is shown that \(H_n/(s_n\log {n}){\mathop {\longrightarrow }\limits ^{\scriptscriptstyle {{\mathbb {P}}}}}1\), \(W_n/(u_n(1) s_n\log {n}){\mathop {\longrightarrow }\limits ^{\scriptscriptstyle {{\mathbb {P}}}}}{\mathrm e}\). It is tempting to conjecture that there exists an integer \(k=k_n\approx s_n\log {n}\) such that \(H_n\in \left\{ k_n,k_n+1\right\} \) whp.

(iii) The regime where \(s_n\log n \rightarrow \infty \) but \(s_n^2\log n\rightarrow \gamma \in (0,\infty )\) corresponds to a critical window between the one- or two-point concentration conjectured in (ii) and the CLT scaling conjectured in (iv). It is natural to expect that \(H_n-\lfloor \phi _n\log n\rfloor \) is tight for an appropriately chosen sequence \(\phi _n\sim s_n\), although the distribution of \(H_n-\lfloor \phi _n \log n\rfloor \) might only have subsequential limits because of integer effects. Moreover, we would expect these subsequential limits in distribution to match with (ii) and (iv) in the limits \(\gamma \rightarrow 0\) or \(\gamma \rightarrow \infty \), respectively.

(iv) When \(s_n \rightarrow 0, s_n^2\log {n}\rightarrow \infty \), we conjecture that the CLT for the hopcount in Theorem 2.4 remains true, and that \(u_n(1)^{-1}W_n-\frac{1}{\lambda _n}\log n\) converges to a Gumbel distribution for a suitable sequence \(\lambda _n\) and \(u_n(1)\). Unlike in the fixed s case, we expect no martingale limit terms to appear in the limiting distribution.
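The arithmetic behind regime (ii) can be made explicit. The sketch below computes the centring \(s_n\log (n/s_n^3)\) and the variance factor \(s_n^2\log (n/s_n^3)\) that appear in the CLT discussed above, taking \(\log n\) as input so that very large n can be handled. The function name `clt_scales` and the sample sequence \(s_n=(\log n)^{-3/4}\), which satisfies \(s_n\log n\rightarrow \infty \) and \(s_n^2\log n\rightarrow 0\), are illustrative assumptions.

```python
import math


def clt_scales(log_n, s_n):
    """Return (s_n * L, s_n**2 * L) with L = log(n / s_n**3) = log_n - 3*log(s_n)."""
    big_l = log_n - 3.0 * math.log(s_n)
    return s_n * big_l, s_n ** 2 * big_l
```

With \(\log n=10^4\) and \(s_n=(\log n)^{-3/4}=10^{-3}\), the centring is about 10 while the variance factor is about 0.01. An integer-valued hopcount cannot have Gaussian fluctuations on a scale tending to zero, which is the obstruction described in (ii). For comparison, \(s_n=10\) and \(\log n=100\) give a variance factor far larger than the centring.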

The fixed s regime. The fixed s regime was investigated in [11] in the case where \(Y\overset{d}{=}E^s\), and describes the boundary case between \(s_n\rightarrow 0\) and \(s_n\rightarrow \infty \). We conjecture that for other sequences of random variables for which Condition 2.1 is valid for some \(s\in (0,\infty )\) the CLT for the hopcount remains valid, while there exist V and \(\lambda (s)\) depending on the distribution Y such that \(u_n(1)^{-1}W_n-\frac{1}{\lambda (s)}\log {(n/s^3)}{\mathop {\longrightarrow }\limits ^{d}}V\). In general, V will not be equal to \((M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)})^s\), see for example [11]. Instead, it is described by a sum of different terms involving Gumbel distributions and the martingale limit of a certain continuous-time branching process depending on the distribution. Our proof is inspired by the methods developed in [11]. The CLT for \(H_n\) in the fixed s regime can be recovered from our proofs; in fact, the reasoning in that case simplifies considerably compared to our more general setup.

The results in [11] match up nicely with ours. Indeed, in [11], it was shown that

where \(\lambda (s)=\Gamma (1+1/s)^s\), \(\Lambda _{1,2}\) is a Gumbel variable so that \({{\mathbb {P}}}(\Lambda _{1,2}\le x)={\mathrm e}^{-{\mathrm e}^{-x}}\) and \(L_s^{\scriptscriptstyle (1)}, L_s^{\scriptscriptstyle (2)}\) are two independent copies of the random variable \(L_s\) with \({{\mathbb {E}}}(L_s)=1\) solving the distributional equation

where \((L_{s,i})_{i\ge 1}\) are i.i.d. copies of \(L_s\) and \((E_i)_{i\ge 1}\) are i.i.d. exponentials with mean 1. We claim that the right-hand side of (2.13) converges to \(M^{\scriptscriptstyle (1)}\vee M^{\scriptscriptstyle (2)}\) as \(s\rightarrow \infty \), where \(M^{\scriptscriptstyle (1)}, M^{\scriptscriptstyle (2)}\) are as in Theorem 2.4. This is equivalent to the statement that \((-\log L_s^{\scriptscriptstyle (j)})^{1/s} {\mathop {\longrightarrow }\limits ^{d}}M^{\scriptscriptstyle (j)}\) as \(s\rightarrow \infty \). Assume that \((-\log L_s^{\scriptscriptstyle (j)})^{1/s}\) converges in distribution to a random variable \({\mathcal {M}}\). Then

and using (2.14) we deduce that \({\mathcal {M}}\) is the solution of the equation

where \(({\mathcal {M}}_i)_{i\ge 1}\) are i.i.d. copies of \({\mathcal {M}}\) independent of \((E_i)_{i\ge 1}\). The unique solution to (2.16) is the random variable with \({{\mathbb {P}}}({\mathcal {M}}\le x)\) being the survival probability of a Poisson Galton–Watson process with mean x, so that \({\mathcal {M}}{\mathop {=}\limits ^{d}}M^{\scriptscriptstyle (1)}\).

The regime \(s_n\rightarrow \infty \). The regime \(s_n\rightarrow \infty \) can be further separated into three cases.

(i) Firstly, the case where \(s_n\rightarrow \infty \) with \(s_n/n^{1/3}\rightarrow 0\) is the main topic of this paper.

(ii) Secondly, the regime where \(s_n/n^{1/3}\rightarrow \gamma \in (0,\infty )\) corresponds to the critical window between the minimal spanning tree case discussed below and the case (i) studied here. It is natural to expect (see also Theorems 1.1 and 1.3) that \(H_n/n^{1/3}\) converges to a non-trivial limit that depends sensitively on \(\gamma \), and that this limit matches up with the cases (i) and (iii), discussed above and below, as \(\gamma \rightarrow 0\) and \(\gamma \rightarrow \infty \), respectively.

(iii) Finally, the regime \(s_n/n^{1/3} \rightarrow \infty \). Several of our methods do not extend to the case where \(s_n/n^{1/3} \rightarrow \infty \); indeed, we conjecture that the CLT in Theorem 2.4 ceases to hold in this regime. In this case, our proof clearly suggests that first passage percolation (FPP) on the complete graph is closely approximated by invasion percolation (IP) on the Poisson-weighted infinite tree (PWIT), studied in [2], whenever \(s_n\rightarrow \infty \), see also [21]. It is tempting to predict that \(H_n/n^{1/3}\) converges to the same limit as the graph distance between two vertices for the minimal spanning tree on the complete graph as identified in [3].

2.2.2 First Passage Percolation on Random Graphs

FPP on random graphs has attracted considerable attention in the past years, and our research was strongly inspired by its studies. In [17], the authors show that for the configuration model with finite-variance degrees (and related graphs) and edge weights with a continuous distribution not depending on n, there exists only a single universality class. Indeed, if we define \(W_n\) and \(H_n\) to be the weight of and the number of edges in the smallest-weight path between two uniform vertices in the graph, then there exist positive, finite constants \(\alpha , \beta , \lambda \) and sequences \((\alpha _n)_n,(\lambda _n)_n\), with \(\alpha _n\rightarrow \alpha \), \(\lambda _n\rightarrow \lambda \), such that \(W_n-(1/\lambda _n)\log {n}\) converges in distribution, while \(H_n\) satisfies a CLT with asymptotic mean \(\alpha _n \log {n}\) and asymptotic variance \(\beta \log {n}\).

Related results for exponential edge weights appear for the Erdős–Rényi random graph in [15], for certain inhomogeneous random graphs in [28] and for the small-world model in [30]. The diameter of the weighted graph is studied in [6], and relations to competition on r-regular graphs are examined in [7]. Finally, the smallest-weight paths with most edges from a single source or between any pair in the graph are investigated in [5].

We conjecture that our results are closely related to FPP on random graphs with infinite-variance degrees. Such graphs, sometimes called scale-free random graphs, have been suggested in the networking community as appropriate models for various real-world networks. See [8, 31] for extended surveys of real-world networks, and [19, 24, 32] for more details on random graph models of such real-world networks. FPP on infinite-variance random graphs with exponential weights was first studied in [13, 14], of which the case of finite-mean degrees studied in [14] is most relevant for our discussion here. There, it was shown that a linear transformation of \(W_n\) converges in distribution, while \(H_n\) satisfies a CLT with asymptotic mean and variance \(\alpha \log {n}\), where \(\alpha \) is a simple function of the power-law exponent of the degree distribution of the configuration model. Since the configuration model with infinite-variance degrees whp contains a complete graph of size a positive power of n, it can be expected that the universality classes on these random graphs are closely related to those on the complete graph \(K_n\). In particular, the strong universality result that holds for finite-variance random graphs fails in the infinite-variance setting, which can easily be seen by observing that for the weight distribution \(1+E\), where E is an exponential random variable, the hopcount \(H_n\) is of order \(\log \log {n}\) (as for the graph distance [26]), rather than \(\log {n}\) as it is for exponential weights. See [9] for two examples proving that strong universality indeed fails in the infinite-variance setting, and [4, 10] for further results. The area has attracted substantial attention through the work of Komjáthy and collaborators, see also [25, 29] for recent work in geometric contexts.

2.2.3 Extremal Functionals for FPP on the Complete Graph

Many more fine results are known for FPP on the complete graph with exponential edge weights. In [27], the weak limits of the rescaled path weight and flooding are determined, where the flooding is the maximal smallest weight between a source and all vertices in the graph. In [12] the same is performed for the diameter of the graph. It would be of interest to investigate the weak limits of the flooding and diameter in our setting.

3 Detailed Results, Overview and Classes of Edge Weights

In this section, we provide an overview of the proof of our main results.

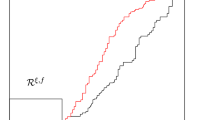

This section is organised as follows. In Sect. 3.1, we explain how FPP clusters can be described in terms of an appropriate exploration process, from one source as well as from two sources. In Sect. 3.2, we discuss how this exploration process can be coupled to first passage percolation on the Poisson-weighted infinite tree (PWIT). In Sect. 3.3, we interpret the FPP dynamics on the PWIT as a continuous-time branching process, and study one- and two-vertex characteristics associated with it. The two-vertex characteristics are needed since we explore from two sources. Due to the near-critical behavior of the involved branching processes, the FPP clusters may run at rather different speeds, and we need to make sure that their sizes are comparable. This is achieved by freezing the fastest growing one, which is explained in detail in Sect. 3.4, both for the time at which this happens as well as the sizes of the FPP cluster at the freezing times. There, we also investigate the collision times between the two exploration processes, which correspond to (near-) shortest paths between the two sources. In Sect. 3.5, we couple FPP on the complete graph from two sources to a continuous-time branching process from which we can retrieve the FPP clusters by a thinning procedure. In Sect. 3.6, we use the explicit distribution of the collision edge (whether thinned or not) to derive its scaling properties, both for the time at which it occurs, as well as the generations of the vertices it consists of. Finally, in Sect. 3.7, we show that the first point of the Cox process that describes the collision edge is with high probability unthinned and complete the proof of our main results.

3.1 FPP Exploration Processes

To understand smallest-weight paths in the complete graph, we study the first passage exploration process from one or two sources. Recall from (1.1) that \(d_{K_n,Y^{\scriptscriptstyle (K_n)}}(i,j)\) denotes the total cost of the optimal path \(\pi _{i,j}\) between vertices i and j.

3.1.1 One-Source Exploration Process

For a vertex \(j\in V(K_n)\), let the one-source smallest-weight tree \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\) be the connected subgraph of \(K_n\) defined by

Note that \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\) is indeed a tree: if two optimal paths \(\pi _{j,k},\pi _{j,k'}\) pass through a common vertex i, both paths must contain \(\pi _{j,i}\) since the minimizers of (1.1) are unique. Moreover, by construction, FPP distances from the source vertex j can be recovered from arrival times in the process \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\):

To visualize the process \((\mathsf{SWT}_t^{\scriptscriptstyle (j)})_{t\ge 0}\), think of the edge weight \(Y_e^{\scriptscriptstyle (K_n)}\) as the time required for fluid to flow across the edge e. Place a source of fluid at j and allow it to spread through the graph. Then \(V(\mathsf{SWT}_t^{\scriptscriptstyle (j)})\) is precisely the set of vertices that have been wetted by time t, while \(E(\mathsf{SWT}_t^{\scriptscriptstyle (j)})\) is the set of edges along which, at any time up to t, fluid has flowed from a wet vertex to a previously dry vertex. Equivalently, an edge is added to \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\) whenever it becomes completely wet, with the additional rule that an edge is not added if it would create a cycle.

Because fluid begins to flow across an edge only after one of its endpoints has been wetted, the age of a vertex—the length of time that a vertex has been wet—determines how far fluid has traveled along the adjoining edges. Given \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\), the future of the exploration process will therefore be influenced by the current ages of vertices in \(\mathsf{SWT}_t^{\scriptscriptstyle (j)}\), and the nature of this effect depends on the probability law of the edge weights \((Y_e^{\scriptscriptstyle (K_n)})_e\). In the sequel, for a subgraph \({\mathcal {G}}=(V({\mathcal {G}}),E({\mathcal {G}}))\) of \(K_n\), we write \({\mathcal {G}}\) instead of \(V({\mathcal {G}})\) for the vertex set when there is no risk of ambiguity.
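The fluid-flow description of the one-source exploration can be turned into a short simulation. The following is a minimal sketch under stated assumptions: vertices are labelled 0, …, n−1 rather than 1, …, n, the edge weights are taken to be \(E^s\) for a fixed exponent s, and the names `make_weights`, `smallest_weight_tree` and `tree_edges_at_time` are hypothetical. The exploration is the classical Dijkstra algorithm, in which a vertex is wetted the first time fluid reaches it and later arrivals at the same vertex are discarded because they would close a cycle.

```python
import heapq
import random


def make_weights(n, s, rng):
    """Symmetric i.i.d. weights Y_e = E^s on K_n, with E exponential of mean 1."""
    Y = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            Y[i][j] = Y[j][i] = rng.expovariate(1.0) ** s
    return Y


def smallest_weight_tree(Y, source):
    """Dijkstra exploration from `source`.

    arrival[i] is the time at which vertex i is wetted (its FPP distance from
    the source); parent[i] is the wet vertex from which fluid first reached i.
    """
    n = len(Y)
    arrival = [None] * n
    parent = [None] * n
    heap = [(0.0, source, None)]
    while heap:
        t, v, p = heapq.heappop(heap)
        if arrival[v] is not None:
            continue  # v is already wet: this edge would create a cycle
        arrival[v], parent[v] = t, p
        for w in range(n):
            if arrival[w] is None:
                heapq.heappush(heap, (t + Y[v][w], w, v))
    return arrival, parent


def tree_edges_at_time(arrival, parent, t):
    """Edges of the smallest-weight tree among vertices wetted by time t."""
    return [(parent[i], i) for i in range(len(arrival))
            if parent[i] is not None and arrival[i] <= t]


if __name__ == "__main__":
    rng = random.Random(0)
    Y = make_weights(10, 3.0, rng)
    arrival, parent = smallest_weight_tree(Y, 0)
    print(tree_edges_at_time(arrival, parent, max(arrival)))
```

The list `arrival` plays the role of the arrival times in the process \((\mathsf{SWT}_t^{\scriptscriptstyle (j)})_{t\ge 0}\), and the age of a vertex i at time t is `t - arrival[i]`.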

3.1.2 Two-Source Exploration Process

Consider now two vertices from \(K_n\), which for simplicity we take to be vertices 1 and 2. The two-source smallest-weight tree \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\) is the subgraph of \(K_n\) defined by

where

In other words, \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\) is the union, over all vertices i within FPP distance t of vertex 1 or vertex 2, of an optimal path, either \(\pi _{1,i}\) or \(\pi _{2,i}\), whichever has smaller weight.

Because the edge weight distribution has no atoms, no two optimal paths have the same length. It follows that, a.s., \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\) is the union of two vertex-disjoint trees for all t. (To see this, suppose vertex i is closer to vertex j than to vertex \(j'\), where \(\left\{ j,j'\right\} =\left\{ 1,2\right\} \). Then, given another vertex \(i'\) and a path \(\pi \) passing from \(i'\) to i to \(j'\), there must be a strictly shorter path passing from \(i'\) to i to j.) We note that

with strict containment for sufficiently large t.

To visualize the process \((\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})_{t\ge 0}\), place sources of fluid at vertices 1 and 2 and allow both fluids to spread through the graph. Then, as before, \(V(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})\) is precisely the set of vertices that have been wetted by time t, while \(E(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})\) is the set of edges along which, at any time up to t, fluid has flowed from a wet vertex to a previously dry vertex. Equivalently, an edge is added to \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\) whenever it becomes completely wet, with the additional rules that an edge is not added if it would create a cycle or if it would connect the two connected components of \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\).

From the process \(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\), we can partially recover FPP distances. Denote by \(T^{\scriptscriptstyle \mathsf{SWT}^{\scriptscriptstyle (1,2)}}(i)=\inf \left\{ t\ge 0:i\in \mathsf{SWT}_t^{\scriptscriptstyle (1,2)}\right\} \) the arrival time of a vertex \(i\in [n]\). Then, for \(j\in \left\{ 1,2\right\} \),

More generally, observing the process \((\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})_{t\ge 0}\) allows us to recover the edge weights \(Y_e^{\scriptscriptstyle (K_n)}\) for all \(e\in \cup _{t\ge 0} E(\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})\). However, in contrast to the one-source case, the FPP distance \(W_n=d_{K_n,Y^{\scriptscriptstyle (K_n)}}(1,2)\) cannot be determined by observing the process \((\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})_{t\ge 0}\). Indeed, if vertices \(i_1,i_2\) satisfy \(i_1\in \mathsf{SWT}_t^{\scriptscriptstyle (1,2;1)}\) and \(i_2\in \mathsf{SWT}_t^{\scriptscriptstyle (1,2;2)}\) for some t, then by construction the edge \(\left\{ i_1,i_2\right\} \) between them will never be added to \(\mathsf{SWT}^{\scriptscriptstyle (1,2)}\) and there is no arrival time from which to determine the edge weight \(Y_{\left\{ i_1,i_2\right\} }^{\scriptscriptstyle (K_n)}\).

The optimal weight \(W_n\) is the minimum value

which is uniquely attained a.s. by our assumptions on the edge weights.

Definition 3.1

(Collision time and edge) The SWT collision time is \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}=\tfrac{1}{2}W_n\). Let \(I_1^{\scriptscriptstyle \mathsf{SWT}},I_2^{\scriptscriptstyle \mathsf{SWT}}\) denote the (a.s. unique) minimizers in (3.7). The edge \(\left\{ I_1^{\scriptscriptstyle \mathsf{SWT}},I_2^{\scriptscriptstyle \mathsf{SWT}}\right\} \) is called the SWT collision edge.

In the fluid flow description above, \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}\) is the time when the fluid from vertex 1 and the fluid from vertex 2 first collide, and this collision takes place inside the collision edge. Note that since fluid flows at rate 1 from both sides simultaneously, the overall distance is given by \(W_n=2T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}\).
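The collision picture can be checked numerically on small instances. The sketch below is illustrative only and rests on assumptions: vertices 0 and 1 play the role of vertices 1 and 2, the weights are \(E^s\), and the minimum defining \(W_n\) is assumed to be taken over pairs \((i_1,i_2)\) with \(i_1\) in the tree of the first source and \(i_2\) in the tree of the second, of the distance to \(i_1\), plus the weight of the edge \(\{i_1,i_2\}\), plus the distance from \(i_2\). The helper names `explore` and `collision` are hypothetical.

```python
import heapq
import random


def make_weights(n, s, rng):
    """Symmetric i.i.d. weights Y_e = E^s on K_n, with E exponential of mean 1."""
    Y = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            Y[i][j] = Y[j][i] = rng.expovariate(1.0) ** s
    return Y


def explore(Y, sources):
    """Grow fluid from all sources at once.

    dist[i] is the time at which i is wetted and label[i] is the source whose
    fluid reached i first, i.e. the closer source.
    """
    n = len(Y)
    dist = [None] * n
    label = [None] * n
    heap = [(0.0, src, src) for src in sources]
    heapq.heapify(heap)
    while heap:
        t, v, src = heapq.heappop(heap)
        if dist[v] is not None:
            continue  # already wet: would create a cycle or join the two trees
        dist[v], label[v] = t, src
        for w in range(n):
            if dist[w] is None:
                heapq.heappush(heap, (t + Y[v][w], w, src))
    return dist, label


def collision(Y, sources=(0, 1)):
    """Return (W, collision time W/2, collision edge, dist, label)."""
    dist, label = explore(Y, sources)
    n = len(Y)
    W, i1, i2 = min(
        (dist[a] + Y[a][b] + dist[b], a, b)
        for a in range(n) if label[a] == sources[0]
        for b in range(n) if label[b] == sources[1]
    )
    return W, W / 2.0, (i1, i2), dist, label


if __name__ == "__main__":
    rng = random.Random(1)
    Y = make_weights(15, 2.0, rng)
    W, t_coll, edge, dist, label = collision(Y)
    # Compare with the FPP distance computed directly from source 0 alone.
    print(W, explore(Y, (0,))[0][1], t_coll, edge)
```

Calling `explore` with a single source gives the one-source distances, which provides an independent check that the minimum over pairs equals the FPP distance between the two sources.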

Proposition 3.2

(Smallest-weight path) The end points of the collision edge are explored before the collision time, \(T^{\scriptscriptstyle \mathsf{SWT}^{(1,2)}}(I_j^{\scriptscriptstyle \mathsf{SWT}}) < T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}\) for \(j\in \left\{ 1,2\right\} .\) The optimal path \(\pi _{1,2}\) from vertex 1 to vertex 2 is the union of the unique path in \(\mathsf{SWT}^{\scriptscriptstyle (1,2;1)}_{T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}}\) from 1 to \(I_1^{\scriptscriptstyle \mathsf{SWT}};\) the collision edge \(\left\{ I_1^{\scriptscriptstyle \mathsf{SWT}},I_2^{\scriptscriptstyle \mathsf{SWT}}\right\} ;\) and the unique path in \(\mathsf{SWT}^{\scriptscriptstyle (1,2;2)}_{T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}}\) from \(I_2^{\scriptscriptstyle \mathsf{SWT}}\) to 2. Furthermore

We will not use Proposition 3.2 and the formula (3.7), which are special cases of Lemma 3.20 and Theorem 3.22. These generalizations deal with a freezing procedure that we will explain below. Note that the conditioning in (3.8) reflects the information about \(Y_{\{i_1,i_2\}}\) gained by knowing that \(i_1\) and \(i_2\) belong to different connected components of \(\mathsf{SWT}^{\scriptscriptstyle (1,2)}_t\): during the period of time when one vertex was explored but not the other, the fluid must not have had time to flow from the earlier-explored vertex to the later-explored vertex.

3.2 Coupling FPP on \(K_n\) to FPP on the Poisson-Weighted Infinite Tree

In this section, we state results that couple FPP on \(K_n\) to FPP on the Poisson-weighted infinite tree (PWIT). We start by explaining the key idea, the coupling of order statistics of exponentials to Poisson processes, in Sect. 3.2.1. We then define the PWIT in Sect. 3.2.2. Finally, we couple FPP on \(K_n\) to FPP on the PWIT, for one source in Sect. 3.2.3 and for two sources in Sect. 3.2.4.

3.2.1 Order Statistics of Exponentials and Poisson Processes

To study the smallest-weight tree from a vertex, say vertex 1, let us consider the time until the first vertex is added. By construction, \(\min _{i\in [n]\setminus \left\{ 1\right\} } Y_{\left\{ 1,i\right\} }^{\scriptscriptstyle (K_n)} \overset{d}{=}f_n\left( \frac{n}{n-1}E\right) \) [cf. (2.4)], where E is an exponential random variable of mean 1. We next extend this to describe the distribution of the order statistics \(Y^{(K_n)}_{(1)}< Y^{(K_n)}_{(2)}< \cdots < Y^{(K_n)}_{(n-1)}\) of the weights of edges from vertex 1 to all other vertices.

By (2.1) and (2.3), we can write \(Y_{\left\{ 1,i\right\} }^{\scriptscriptstyle (K_n)} = f_n(E'_i )\), where \(E'_i=nX_{\left\{ 1,i\right\} }^{\scriptscriptstyle (K_n)}\) are independent, exponential random variables with rate 1/n. We can realize \(E'_i\) as the first point of a Poisson point process \({\mathcal {P}}^{\scriptscriptstyle (i)}\) with rate 1/n, with points \(X^{\scriptscriptstyle (i)}_1<X^{\scriptscriptstyle (i)}_2<\cdots \), chosen independently for different \(i=2,\ldots ,n\). We can also form the Poisson point process \({\mathcal {P}}^{\scriptscriptstyle (1)}\) with rate 1/n, corresponding to \(i=1\), although this Poisson point process is not needed to produce an edge weight. To each point of \({\mathcal {P}}^{\scriptscriptstyle (i)}\), associate the mark i.

Now amalgamate all n Poisson point processes to form a single Poisson point process of intensity 1, with points \(X_1<X_2<\cdots \). Each point \(X_k\) has an associated mark \(M_k\). By properties of Poisson point processes, given the points \(X_1<X_2<\cdots \), the marks \(M_k\) are chosen uniformly at random from [n], different marks being independent.

To complete the construction of the edge weights \(Y^{\scriptscriptstyle (K_n)}_{\left\{ 1,i\right\} }\), we need to recover the first points \(X^{\scriptscriptstyle (i)}_1\), for all \(i=2,\ldots ,n\), from the amalgamated points \(X_1<X_2<\cdots \). Thus we will thin a point \(X_k\) when \(M_k=1\) (since \(i=1\) is not used to form an edge weight) or when \(M_k=M_{k'}\) for some \(k'<k\) (since such a point is not the first point of its corresponding Poisson point process). Then

In the next step, we extend this result to the smallest-weight tree \(\mathsf{SWT}^{\scriptscriptstyle (1)}\) using a relation to FPP on the Poisson-weighted infinite tree.
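The amalgamation and thinning construction of this subsection can be sketched in a few lines. The code below is an illustration under stated assumptions: it generates the intensity-1 process point by point, draws a uniform mark in [n] for each point, and keeps a point only if its mark is not 1 and has not appeared before. The function name `first_points_by_thinning` is hypothetical. The kept points are then distributed as the order statistics of \(n-1\) independent exponentials of mean n, and applying \(f_n\) to them gives the ordered edge weights from vertex 1.

```python
import random


def first_points_by_thinning(n, rng):
    """Thin a rate-1 Poisson process with i.i.d. uniform marks in {1, ..., n}.

    Returns the list of kept (point, mark) pairs, in increasing order of the
    points, once every mark in {2, ..., n} has been seen. Requires n >= 2.
    """
    kept = []
    seen = set()
    x = 0.0
    while len(kept) < n - 1:
        x += rng.expovariate(1.0)  # next point X_k of the intensity-1 process
        mark = rng.randint(1, n)   # mark M_k, uniform on [n]
        if mark == 1 or mark in seen:
            continue               # thinned: mark 1, or not a first point
        seen.add(mark)
        kept.append((x, mark))
    return kept


if __name__ == "__main__":
    rng = random.Random(0)
    for point, mark in first_points_by_thinning(6, rng):
        print(mark, point)
```

As a sanity check, the first kept point is the minimum of \(n-1\) exponentials of mean n and therefore has mean \(n/(n-1)\), in agreement with the factor \(\frac{n}{n-1}\) appearing at the start of this subsection.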

3.2.2 The Poisson-Weighted Infinite Tree

The Poisson-weighted infinite tree is an infinite edge-weighted tree in which every vertex has infinitely many (ordered) children. Before giving the definitions, we recall the Ulam–Harris notation for describing trees.

Define the tree \({\mathcal {T}}^{\scriptscriptstyle (1)}\) as follows. The vertices of \({\mathcal {T}}^{\scriptscriptstyle (1)}\) are given by finite sequences of natural numbers headed by the symbol \(\varnothing _1\), which we write as \(\varnothing _1 j_1 j_2\cdots j_k\). The sequence \(\varnothing _1\) denotes the root vertex of \({\mathcal {T}}^{\scriptscriptstyle (1)}\). We concatenate sequences \(v=\varnothing _1 i_1\cdots i_k\) and \(w=\varnothing _1 j_1\cdots j_m\) to form the sequence \(vw=\varnothing _1 i_1\cdots i_k j_1\cdots j_m\) of length \(\left| vw\right| =\left| v\right| +\left| w\right| =k+m\). Identifying a natural number j with the corresponding sequence of length 1, the \(j^\text {th}\) child of a vertex v is vj, and we say that v is the parent of vj. Write \(p\left( v\right) \) for the (unique) parent of \(v\ne \varnothing _1\), and \(p^{k}\!\left( v\right) \) for the ancestor k generations before, \(k\le \left| v\right| \).

We can place an edge (which we could consider to be directed) between every \(v\ne \varnothing _1\) and its parent; this turns \({\mathcal {T}}^{\scriptscriptstyle (1)}\) into a tree with root \(\varnothing _1\). With a slight abuse of notation, we will use \({\mathcal {T}}^{\scriptscriptstyle (1)}\) to mean both the set of vertices and the associated graph, with the edges given implicitly according to the above discussion, and we will extend this convention to any subset \(\tau \subset {\mathcal {T}}^{\scriptscriptstyle (1)}\). We also write \(\partial \tau =\left\{ v\notin \tau :p\left( v\right) \in \tau \right\} \) for the set of children one generation away from \(\tau \).

To describe the PWIT formally, we associate weights to the edges of \({\mathcal {T}}^{\scriptscriptstyle (1)}\). By construction, we can index these edge weights by non-root vertices, writing the weights as \(X=(X_v)_{v\ne \varnothing _1}\), where the weight \(X_v\) is associated to the edge between v and its parent p(v). We make the convention that \(X_{v0}=0\).

Definition 3.3

(Poisson-weighted infinite tree) The Poisson-weighted infinite tree (PWIT) is the random tree \(({\mathcal {T}}^{\scriptscriptstyle (1)},X)\) for which \(X_{vk}-X_{v(k-1)}\) is exponentially distributed with mean 1, independently for each \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\) and each \(k\in {\mathbb {N}}\). Equivalently, the weights \((X_{v1},X_{v2},\ldots )\) are the (ordered) points of a Poisson point process of intensity 1 on \((0,\infty )\), independently for each v.

Motivated by (3.9), we study FPP on \({\mathcal {T}}^{\scriptscriptstyle (1)}\) with edge weights \((f_n(X_v))_v\):

Definition 3.4

(First passage percolation on the Poisson-weighted infinite tree) For FPP on \({\mathcal {T}}^{\scriptscriptstyle (1)}\) with edge weights \((f_n(X_v))_v\), let the FPP edge weight between \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\setminus \left\{ \varnothing _1\right\} \) and \(p\left( v\right) \) be \(f_n(X_v)\). The FPP distance from \(\varnothing _1\) to \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\) is

and the FPP exploration process \(\mathsf{BP}^{\scriptscriptstyle (1)}=(\mathsf{BP}^{\scriptscriptstyle (1)}_t)_{t\ge 0}\) on \({\mathcal {T}}^{\scriptscriptstyle (1)}\) is defined by \(\mathsf{BP}^{\scriptscriptstyle (1)}_t=\left\{ v\in {\mathcal {T}}^{\scriptscriptstyle (1)}:T_v\le t\right\} \).

Note that the FPP edge weights \((f_n(X_{vk}))_{k\in {\mathbb {N}}}\) are themselves the points of a Poisson point process on \((0,\infty )\), independently for each \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\). The intensity measure of this Poisson point process, which we denote by \(\mu _n\), is the image of Lebesgue measure on \((0,\infty )\) under \(f_n\). Since \(f_n\) is strictly increasing by assumption, \(\mu _n\) has no atoms and we may abbreviate \(\mu _n(\left( a,b\right] )\) as \(\mu _n(a,b)\) for simplicity. Thus \(\mu _n\) is characterized by

for any measurable function \(h:\left[ 0,\infty \right) \rightarrow \left[ 0,\infty \right) \).

Clearly, and as suggested by the notation, the FPP exploration process \(\mathsf{BP}\) is a continuous-time branching process:

Proposition 3.5

(FPP on PWIT is CTBP) The process \(\mathsf{BP}^{\scriptscriptstyle (1)}\) is a continuous-time branching process (CTBP), started from a single individual \(\varnothing _1,\) where the ages at childbearing of an individual form a Poisson point process with intensity \(\mu _n,\) independently for each individual. The time \(T_v\) is the birth time \(T_v=\inf \left\{ t\ge 0:v\in \mathsf{BP}^{\scriptscriptstyle (1)}_t\right\} \) of the individual \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}.\)
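The branching-process description lends itself to a lazy simulation in which the PWIT is never built in full. The following is a minimal sketch under stated assumptions: vertices are represented as tuples of child indices with the empty tuple as the root, `f` is any strictly increasing function standing in for \(f_n\), and the names `explore_pwit` and `max_vertices` are hypothetical. Because `f` is increasing, the children of a vertex are born in order, so it suffices to keep only the next unborn child of each born vertex in the priority queue.

```python
import heapq
import random


def explore_pwit(f, t_max, rng, max_vertices=10_000):
    """Birth times {v: T_v} of the PWIT vertices with T_v <= t_max.

    The edge to the k-th child of v has FPP weight f(X_vk), where the X_vk are
    the points of a rate-1 Poisson process, independently for each v.
    """
    births = {(): 0.0}
    heap = []

    def schedule_next_child(parent, k, x_prev):
        x = x_prev + rng.expovariate(1.0)  # X_vk = X_v(k-1) + Exp(1)
        heapq.heappush(heap, (births[parent] + f(x), parent, k, x))

    schedule_next_child((), 1, 0.0)
    while heap and len(births) < max_vertices:
        t, parent, k, x = heapq.heappop(heap)
        if t > t_max:
            break  # every later birth is at least as late as this one
        child = parent + (k,)
        births[child] = t
        schedule_next_child(child, 1, 0.0)     # first child of the newborn
        schedule_next_child(parent, k + 1, x)  # next sibling of the newborn
    return births


if __name__ == "__main__":
    rng = random.Random(2)
    births = explore_pwit(lambda x: x ** 2, 2.0, rng)
    for v, t in sorted(births.items(), key=lambda item: item[1]):
        print(v, t)
```

The generation \(\left| v\right| \) of a vertex is `len(v)` in this representation, and the set of keys of `births` with value at most t is \(\mathsf{BP}^{\scriptscriptstyle (1)}_t\).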

3.2.3 Coupling One-Source Exploration to the PWIT

Similar to the analysis of the weights of the edges containing vertex 1, we now introduce a thinning procedure that allows us to couple \(\mathsf{BP}^{\scriptscriptstyle (1)}\) and \(\mathsf{SWT}^{\scriptscriptstyle (1)}\). Define \(M_{\varnothing _1}=1\) and to each other \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\setminus \left\{ \varnothing _1\right\} \) associate a mark \(M_v\) chosen independently and uniformly from [n].

Definition 3.6

(Thinning—one CTBP) The vertex \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\setminus \left\{ \varnothing _1\right\} \) is thinned if it has an ancestor \(v_0=p^{k}\!\left( v\right) \) (possibly v itself) such that \(M_{v_0}=M_w\) for some unthinned vertex \(w\in {\mathcal {T}}^{\scriptscriptstyle (1)}\) with \(T_w<T_{v_0}\).

This definition also appears as [Part I, Definition 2.8]. As explained there, this definition is not circular since whether or not a vertex v is thinned can be assessed recursively in terms of earlier-born vertices. Write \({\widetilde{\mathsf{BP}}}_t^{\scriptscriptstyle (1)}\) for the subgraph of \(\mathsf{BP}_t^{\scriptscriptstyle (1)}\) consisting of unthinned vertices.

Definition 3.7

Given a subset \(\tau \subset {\mathcal {T}}^{\scriptscriptstyle (1)}\) and marks \(M=(M_v :v \in \tau )\) with \(M_v\in [n]\), define \(\pi _M(\tau )\) to be the subgraph of \(K_n\) induced by the mapping \(\tau \rightarrow [n]\), \(v\mapsto M_v\). That is, \(\pi _M(\tau )\) has vertex set \(\left\{ M_v:v\in \tau \right\} \), with an edge between \(M_v\) and \(M_{p\left( v\right) }\) whenever \(v,p\left( v\right) \in \tau \).

Note that if the marks \((M_v)_{v\in \tau }\) are distinct then \(\pi _M(\tau )\) and \(\tau \) are isomorphic graphs.

The following theorem, taken from [Part I, Theorem 2.10] and proved in [Part I, Sect. 3.3], establishes a close connection between FPP on \(K_n\) and FPP on the PWIT with edge weights \((f_n(X_v))_{v\in \tau }\):

Theorem 3.8

(Coupling to FPP on PWIT—one source) The law of \((\mathsf{SWT}_t^{\scriptscriptstyle (1)})_{t\ge 0}\) is the same as the law of \(\Bigl ( \pi _M\bigl ( {\widetilde{\mathsf{BP}}}_t^{\scriptscriptstyle (1)} \bigr ) \Bigr )_{t\ge 0}\).
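The thinning procedure and the map \(\pi _M\) can be combined into one exploration. The sketch below is illustrative and rests on assumptions: marks are drawn at birth (which has the same law as assigning them in advance, since they are independent and uniform), descendants of thinned vertices are never generated because they would be thinned as well, and unthinned vertices are identified with their marks. The name `thinned_exploration` is hypothetical, and the example choice \(f(x)=(x/n)^s\) corresponds to weights \(E^s\) under the convention that \(f_n\) is applied to exponentials of mean n.

```python
import heapq
import random


def thinned_exploration(n, f, rng):
    """Explore the PWIT in birth order, keeping only unthinned vertices.

    Returns (arrival, parent_mark), both keyed by marks in {1, ..., n}:
    arrival[m] is the birth time of the unthinned vertex with mark m and
    parent_mark[m] is the mark of its parent (None for the root, mark 1).
    """
    arrival = {1: 0.0}
    parent_mark = {1: None}
    heap = []

    def schedule(birth_parent, mark_of_parent, x_prev):
        x = x_prev + rng.expovariate(1.0)
        heapq.heappush(heap, (birth_parent + f(x), x, birth_parent, mark_of_parent))

    schedule(0.0, 1, 0.0)
    while len(arrival) < n:
        t, x, birth_parent, mark_of_parent = heapq.heappop(heap)
        schedule(birth_parent, mark_of_parent, x)  # the next sibling always exists
        mark = rng.randint(1, n)
        if mark in arrival:
            continue  # thinned: an earlier unthinned vertex carries this mark
        arrival[mark] = t
        parent_mark[mark] = mark_of_parent
        schedule(t, mark, 0.0)  # start the children of the new unthinned vertex
    return arrival, parent_mark


if __name__ == "__main__":
    rng = random.Random(3)
    n, s = 8, 2.0
    arrival, parent_mark = thinned_exploration(n, lambda x: (x / n) ** s, rng)
    for m in sorted(arrival, key=arrival.get):
        print(m, parent_mark[m], arrival[m])
```

The pairs `(parent_mark[m], m)` form a spanning tree of \(K_n\), which according to Theorem 3.8 should have the law of the smallest-weight tree from vertex 1 once all vertices have been explored.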

Theorem 3.8 is based on an explicit coupling between the edge weights \((Y_e^{\scriptscriptstyle (K_n)})_e\) on \(K_n\) and \((X_v)_v\) on \({\mathcal {T}}^{\scriptscriptstyle (1)}\). We will describe a related coupling in Sect. 3.5. A general form of those couplings is given in Sect. 5.

3.2.4 Coupling Two-Source Exploration to the PWIT

Let \({\mathcal {T}}^{\scriptscriptstyle (1,2)}\) be the disjoint union of two independent copies \(({\mathcal {T}}^{\scriptscriptstyle (j)},X^{\scriptscriptstyle (j)})\), \(j \in \left\{ 1,2\right\} \), of the PWIT. We shall assume that the copies \({\mathcal {T}}^{\scriptscriptstyle (j)}\) are vertex-disjoint, with roots \(\varnothing _j\), so that we can unambiguously write \(X_v\) instead of \(X^{\scriptscriptstyle (j)}_v\) for \(v\in {\mathcal {T}}^{\scriptscriptstyle (j)}\), \(v\ne \varnothing _j\). We set \(M_{\varnothing _j}=j\) for \(j=1,2\), and otherwise the notation introduced for \({\mathcal {T}}^{\scriptscriptstyle (1)}\) is used on \({\mathcal {T}}^{\scriptscriptstyle (1,2)}\), verbatim. For example, for any subset \(\tau \subseteq {\mathcal {T}}^{\scriptscriptstyle (1,2)}\), we write \(\partial \tau =\left\{ v \not \in \tau :p\left( v\right) \in \tau \right\} \) for the boundary vertices of \(\tau \), and we define the subgraph \(\pi _M(\tau )\) for \(\tau \subset {\mathcal {T}}^{\scriptscriptstyle (1,2)}\) just as in Definition 3.7.

As in Proposition 3.5, the two-source FPP exploration process on \({\mathcal {T}}^{\scriptscriptstyle (1,2)}\) with edge weights \((f_n(X_v))_v\) starting from \(\varnothing _1\) and \(\varnothing _2\) is equivalent to the union \(\mathsf{BP}=\mathsf{BP}^{\scriptscriptstyle (1)} \cup \mathsf{BP}^{\scriptscriptstyle (2)}\) of two CTBPs. (In the fluid-flow formulation, the additional rule—an edge is not explored if it would join the connected components containing the two sources—does not apply.)

Definition 3.9

(Thinning—two CTBPs) The vertex \(v\in {\mathcal {T}}^{\scriptscriptstyle (1,2)}\setminus \left\{ \varnothing _1,\varnothing _2\right\} \) is thinned if it has an ancestor \(v_0=p^{k}\!\left( v\right) \) (possibly v itself) such that \(M_{v_0}=M_w\) for some unthinned vertex \(w\in {\mathcal {T}}^{\scriptscriptstyle (1,2)}\) with \(T_w<T_{v_0}\).

Note that this two-CTBP thinning rule is applied simultaneously across both trees: for instance, a vertex \(v\in {\mathcal {T}}^{\scriptscriptstyle (1)}\) can be thinned due to an unthinned vertex \(w\in {\mathcal {T}}^{\scriptscriptstyle (2)}\). Henceforth we will be concerned with the two-CTBP version of thinning. Write \({\widetilde{\mathsf{BP}}}_t\) for the subgraph of \(\mathsf{BP}_t=\mathsf{BP}_t^{\scriptscriptstyle (1)}\cup \mathsf{BP}_t^{\scriptscriptstyle (2)}\) consisting of unthinned vertices.

The following theorem is a special case of Theorem 3.26:

Theorem 3.10

(Coupling to FPP on PWIT—two sources) The law of \((\mathsf{SWT}_t^{\scriptscriptstyle (1,2)})_{t\ge 0}\) is the same as the law of \(\Bigl ( \pi _M\bigl ( {\widetilde{\mathsf{BP}}}_t \bigr ) \Bigr )_{t\ge 0}\).

We will not use Theorem 3.10, but instead rely on its generalization Theorem 3.26, since, in our setting, \(\mathsf{BP}^{\scriptscriptstyle (1)}\) and \(\mathsf{BP}^{\scriptscriptstyle (2)}\) can grow at rather different speeds. We will counteract this imbalance by an appropriate freezing procedure, as explained in more detail later on. Theorem 3.26 generalizes Theorem 3.10 to include this freezing.

We next state an equality in law for the collision time and collision edge. As a preliminary step, note that (3.8) can be rewritten in terms of the measure \(\mu _n\) as

By a Cox process with random intensity measure Z (with respect to a \(\sigma \)-algebra \({\mathscr {F}}\)) we mean a random point measure \({\mathcal {P}}\) such that Z is \({\mathscr {F}}\)-measurable and, conditionally on \({\mathscr {F}}\), \({\mathcal {P}}\) has the distribution of a Poisson point process with intensity measure Z. For notational convenience, given a sequence of intensity measures \(Z_n\) on \({\mathbb {R}}\times {\mathcal {X}}\), for some measurable space \({\mathcal {X}}\), we write \(Z_{n,t}\) for the measures on \({\mathcal {X}}\) defined by \(Z_{n,t}(\cdot )=Z_n(\left( -\infty , t\right] \times \cdot )\).

Thus (3.12) states that \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}\) has the law of the first point of a Cox process on \({\mathbb {R}}\), where the intensity measure is given by a sum over \(\mathsf{SWT}^{\scriptscriptstyle (1,2;1)}\times \mathsf{SWT}^{\scriptscriptstyle (1,2;2)}\) (see also [11, Proposition 2.3]). Using Theorem 3.10, we can lift this equality in law to apply to the collision time and collision edge.

Theorem 3.11

(Cox process for collision edge) Let \({\mathcal {P}}_n^{\scriptscriptstyle \mathsf{SWT}}\) be a Cox process on \(\left[ 0,\infty \right) \times {\mathcal {T}}^{\scriptscriptstyle (1)} \times {\mathcal {T}}^{\scriptscriptstyle (2)}\) (with respect to the \(\sigma \)-algebra generated by \(\mathsf{BP}\) and \((M_v)_{v\in {\mathcal {T}}^{\scriptscriptstyle (1,2)}})\) with random intensity \(Z_n^{\scriptscriptstyle \mathsf{SWT}}=(Z_{n,t}^{\scriptscriptstyle \mathsf{SWT}})_{t\ge 0}\) defined by

for all \(t\ge 0\). Let \((T_\mathrm{coll}^{\scriptscriptstyle {\mathcal {P}}_n,\mathsf{SWT}},V_\mathrm{coll}^{\scriptscriptstyle (1,\mathsf{SWT})},V_\mathrm{coll}^{\scriptscriptstyle (2,\mathsf{SWT})})\) denote the first point of \({\mathcal {P}}_n^{\scriptscriptstyle \mathsf{SWT}}\) for which \(V_\mathrm{coll}^{\scriptscriptstyle (1,\mathsf{SWT})}\) and \(V_\mathrm{coll}^{\scriptscriptstyle (2,\mathsf{SWT})}\) are unthinned. Then the law of \((T_\mathrm{coll}^{\scriptscriptstyle {\mathcal {P}}_n,\mathsf{SWT}},\pi _M({\widetilde{\mathsf{BP}}}_{T_\mathrm{coll}^{\scriptscriptstyle {\mathcal {P}}_n,\mathsf{SWT}}}){,}M_{V_\mathrm{coll}^{\scriptscriptstyle (1{,}\mathsf{SWT})}}{,}M_{V_\mathrm{coll}^{\scriptscriptstyle (2{,}\mathsf{SWT})}})\) is the same as the joint law of \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}=\tfrac{1}{2}W_n;\) the smallest-weight tree \(\mathsf{SWT}_{T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}}\) at the time \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}};\) and the endpoints \(I_1^{\scriptscriptstyle \mathsf{SWT}},I_2^{\scriptscriptstyle \mathsf{SWT}}\) of the SWT collision edge. In particular, the hopcount \(H_n\) has the same distribution as \(\left| V_\mathrm{coll}^{\scriptscriptstyle (1,\mathsf{SWT})}\right| +\left| V_\mathrm{coll}^{\scriptscriptstyle (2,\mathsf{SWT})}\right| +1\).

3.3 FPP on the PWIT as a CTBP

In this section, we relate FPP on the PWIT to a continuous-time branching process (CTBP). In Sect. 3.3.1, we investigate the exploration from one vertex and describe this in terms of one-vertex characteristics. In Sect. 3.3.2, we extend this to the exploration from two vertices and relate this to two-vertex characteristics of CTBPs, which will be crucial for analysing smallest-weight paths in FPP on \(K_n\), which we explore from two sources.

3.3.1 FPP on the PWIT as a CTBP: One-Vertex Characteristics

In this section, we analyze the CTBP \(\mathsf{BP}^{\scriptscriptstyle (1)}\) introduced in Sect. 3.2. Notice that \((\mathsf{BP}_t^{\scriptscriptstyle (1)})_{t\ge 0}\) depends on n through its offspring distribution. We have to understand the coupled double asymptotics of n and t tending to infinity simultaneously.

Recall that we write \(\left| v\right| \) for the generation of v (i.e., its graph distance from the root in the genealogical tree). To count particles in \(\mathsf{BP}_t^{\scriptscriptstyle (1)}\), we use a non-random characteristic \(\chi :\left[ 0,\infty \right) \rightarrow \left[ 0,\infty \right) \). Following [11], define the generation-weighted vertex characteristic by
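Assuming the standard form of [11], in which each vertex \(v\) alive at time t contributes the score \(\chi (t-T_v)\) of its current age, weighted by \(a^{\left| v\right| }\), this definition reads

\[
z_t^\chi (a)=\sum _{v\in \mathsf{BP}_t^{\scriptscriptstyle (1)}} a^{\left| v\right| }\,\chi (t-T_v),
\]

where \(T_v\) denotes the birth time of v. This is a reconstruction under the stated assumption; it is consistent with the convention \(\chi (t)=z_t^\chi (a)=0\) for \(t<0\).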

We make the convention that \(\chi (t)=z_t^\chi (a)=0\) for \(t<0\). For characteristics \(\chi \), \(\eta \) and for \(a,b,t,u\ge 0\), write
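Assuming that \(m\) and \(M\) denote the first moment and the mixed second moment of the generation-weighted characteristics, which is how they are used in Theorem 3.13 and in Sect. 3.4.1, the notation is

\[
m_t^\chi (a)={\mathbb {E}}\left( z_t^\chi (a)\right) ,\qquad M_{t,u}^{\chi ,\eta }(a,b)={\mathbb {E}}\left( z_t^\chi (a)\,z_u^\eta (b)\right) .
\]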

Let \({\hat{\mu }}_n(\lambda )=\int {\mathrm e}^{-\lambda y}d\mu _n(y)\) denote the Laplace transform of \(\mu _n\). For \(a>0\), define \(\lambda _n(a)>0\) by
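Assuming the usual CTBP convention, relation (3.16) is the Malthusian-parameter equation with an extra factor \(a\) per generation:

\[
a\,{\hat{\mu }}_n(\lambda _n(a))=1.
\]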

whenever (3.16) has a unique solution. The parameters \(\lambda _n\) and \(\phi _n\) in Theorem 2.4 are given by

The asymptotics of \(\lambda _n\) and \(\phi _n\) stated in Theorem 2.4 is the content of the following lemma:

Lemma 3.12

(Asymptotics of BP-parameters) As \(n\rightarrow \infty ,\) \(\phi _n/s_n \rightarrow 1\) and \(\lambda _n f_n(1) \rightarrow {\mathrm e}^{-\gamma },\) where \(\gamma \) is Euler’s constant.

Lemma 3.12 is proved in Sect. 6.3.

Typically, \(z_t^\chi (a)\) grows exponentially in t at rate \(\lambda _n(a)\). Therefore, we write
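Assuming the natural normalisation that divides out this exponential growth, the rescaled quantities are

\[
{\bar{z}}_t^\chi (a)={\mathrm e}^{-\lambda _n(a)t}z_t^\chi (a),\qquad {\bar{m}}_t^\chi (a)={\mathrm e}^{-\lambda _n(a)t}m_t^\chi (a),\qquad {\bar{M}}_{t,u}^{\chi ,\eta }(a,b)={\mathrm e}^{-\lambda _n(a)t-\lambda _n(b)u}M_{t,u}^{\chi ,\eta }(a,b).
\]

This is a reconstruction rather than a quotation; it agrees with the uniform bounds on \({\bar{m}}\) and \({\bar{M}}\) in Theorem 3.13 and with the growth rates in Corollary 3.14.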

In the following theorem, we investigate the asymptotics of such generation-weighted one-vertex characteristics:

Theorem 3.13

(Asymptotics of one-vertex characteristics) Given \(\varepsilon >0\) and a compact subset \(A\subset (0,2),\) there is a constant \(K<\infty \) such that for n sufficiently large, uniformly for \(a,b\in A,\) for \(\chi \) and \(\eta \) bounded, non-negative, non-decreasing functions, and for \(\lambda _n(1)[t\wedge u] \ge K,\)

Moreover, there is a constant \(K'<\infty \) independent of \(\varepsilon \) such that \({\bar{m}}_t^\chi (a^{1/s_n}) \le K' \left\| \chi \right\| _\infty s_n\) and \({\bar{M}}_{t,u}^{\chi ,\eta }(a^{1/s_n},b^{1/s_n}) \le K' \left\| \chi \right\| _\infty \left\| \eta \right\| _\infty s_n^3\) for all n sufficiently large, uniformly over \(u,t\ge 0\) and \(a,b\in A\).

Corollary 3.14

(Asymptotics of mean and variance of population size) The population size \(\left| \mathsf{BP}^{\scriptscriptstyle (1)}_t\right| \) satisfies \({\mathbb {E}}(\left| \mathsf{BP}^{\scriptscriptstyle (1)}_t\right| )\sim s_n {\mathrm e}^{\lambda _n(1)t}\) and \({{\,\mathrm{Var}\,}}(\left| \mathsf{BP}^{\scriptscriptstyle (1)}_t\right| )\sim s_n^3 {\mathrm e}^{2\lambda _n(1)t}/\log 2\) in the limit as \(\lambda _n(1)t\rightarrow \infty ,\) \(n\rightarrow \infty \).

Theorem 3.13 is proved in Sect. 6.4. Generally, we will be interested in characteristics \(\chi =\chi _n\) for which \(\chi _n\left( \lambda _n(1)^{-1}\cdot \right) \) converges as \(n\rightarrow \infty \), so that the integral in (3.20) acts as a limiting value. In particular, Corollary 3.14 is the special case \(\chi ={\mathbb {1}}_{\left[ 0,\infty \right) }\), \(a=1\).

Since \(s_n\rightarrow \infty \), Theorem 3.13 and Corollary 3.14 show that the variance of \({\bar{z}}^\chi _t(a^{1/s_n})\) exceeds the square of its mean by a factor of order \(s_n\). This suggests that \(\left| \mathsf{BP}^{\scriptscriptstyle (1)}_t\right| \) is typically of order 1 when \(\lambda _n(1)t\) is of order 1 [i.e., when t is of order \(f_n(1)\), see Lemma 3.12], but has probability of order \(1/s_n\) of being of order \(s_n^2\). See also [Part I, Proposition 2.17], which confirms this behavior.
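The heuristic can be checked on a two-point caricature. This is an illustrative assumption only, not a statement about the actual law of the population size: let \(X\) equal \(s_n^2\) with probability \(1/s_n\) and \(0\) otherwise. Then

\[
{\mathbb {E}}(X)=s_n^2\cdot \frac{1}{s_n}=s_n,\qquad {\mathbb {E}}(X^2)=s_n^4\cdot \frac{1}{s_n}=s_n^3,\qquad \frac{{{\,\mathrm{Var}\,}}(X)}{{\mathbb {E}}(X)^2}=\frac{s_n^3-s_n^2}{s_n^2}=s_n-1,
\]

which reproduces the prefactors \(s_n\) and \(s_n^3\) of Corollary 3.14 up to constants. A rare event of probability \(1/s_n\) thus carries the whole mean and second moment.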

3.3.2 FPP on the PWIT as a CTBP: Two-Vertex Characteristics

Theorem 3.11 expresses the collision time \(T_\mathrm{coll}^{\scriptscriptstyle \mathsf{SWT}}\) as the first point of a Cox process whose cumulative intensity is given by a double sum over two branching processes. To study such intensities, we introduce generation-weighted two-vertex characteristics. Let \(\chi \) be a non-random, non-negative function on \(\left[ 0,\infty \right) ^2\) and recall that \(T_v=\inf \left\{ t \ge 0:v \in \mathsf{BP}_t\right\} \) denotes the birth time of vertex v and \(\left| v\right| \) the generation of v. The generation-weighted two-vertex characteristic is given by
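Assuming that the definition mirrors the one-vertex case, with the sum running over pairs of vertices taken from the two branching processes (written here as \(\mathsf{BP}^{\scriptscriptstyle (1)}\) and \(\mathsf{BP}^{\scriptscriptstyle (2)}\)), it takes the form

\[
z_{t_1,t_2}^\chi (\vec {a})=\sum _{v_1\in \mathsf{BP}_{t_1}^{\scriptscriptstyle (1)}}\ \sum _{v_2\in \mathsf{BP}_{t_2}^{\scriptscriptstyle (2)}} a_1^{\left| v_1\right| }a_2^{\left| v_2\right| }\,\chi (t_1-T_{v_1},t_2-T_{v_2}).
\]

This display is a reconstruction under that assumption, not a quotation.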

for all \(t_1,t_2,a_1,a_2 \ge 0\), where we use vector notation \(\vec {a}=(a_1,a_2)\), \(\vec {t}=(t_1,t_2)\), and so on. We make the convention that \(\chi (t_1,t_2)=z_{t_1,t_2}^\chi (\vec {a})=0\) for \(t_1\wedge t_2<0\). As in (3.19), we rescale and write

In (3.13), the cumulative intensity \(Z_{n,t}^{\scriptscriptstyle \mathsf{SWT}}\) can be expressed in terms of a two-vertex characteristic. If we define

then the total cumulative intensity is given by

We will use the parameters \(a_1,a_2\) to compute moment generating functions corresponding to \(Z_{n,t}^{\scriptscriptstyle \mathsf{SWT}}\).

The characteristic \(\chi _n\) will prove difficult to control directly, because its values fluctuate significantly in size: for instance, \(\chi _n\left( \tfrac{1}{2}f_n(1),\tfrac{1}{2}f_n(1)\right) =1\) whereas \(\chi _n\left( \tfrac{1}{2}f_n(1),f_n(1)\right) =O(1/s_n)\). Therefore, for \(K\in (0,\infty )\), we define the truncated measure

and again write \(\mu _n^{\scriptscriptstyle (K)}(\left( a,b\right] )=\mu _n^{\scriptscriptstyle (K)}(a,b)\) to shorten notation. For convenience, we will always assume that n is large enough that \(s_n\ge K\). By analogy with (3.24), define

By construction, the total mass of \(\mu _n^{\scriptscriptstyle (K)}\) is \(2K/s_n\), so that \(s_n \chi _n^{\scriptscriptstyle (K)}\) is uniformly bounded.

The following results identify the asymptotic behavior of \({\bar{z}}_{t_1,t_2}^{\chi ^{\scriptscriptstyle (K)}_n}(\vec {a})\) and show that, for \(K\rightarrow \infty \), the contribution due to \(\chi _n-\chi ^{\scriptscriptstyle (K)}_n\) becomes negligible. These results are formulated in Theorem 3.15, which investigates the truncated two-vertex characteristic, and Theorem 3.16, which studies the effect of truncation:

Theorem 3.15

(Convergence of truncated two-vertex characteristic) For every \(\varepsilon >0\) and every compact subset \(A\subset (0,2),\) there exists a constant \(K_0<\infty \) such that for every \(K \ge K_0\) there are constants \(K'<\infty \) and \(n_0 \in {\mathbb {N}}\) such that for all \(n \ge n_0,\) \(a_1,a_2,b_1,b_2\in A\) and \(\lambda _n(1)[t_1\wedge t_2\wedge u_1\wedge u_2] \ge K',\)

and

where \(\zeta :(0,\infty )\rightarrow {\mathbb {R}}\) is the continuous function defined by

Moreover, for every \(K<\infty \) there are constants \(K''<\infty \) and \(n_0'\in {\mathbb {N}}\) such that for all \(n \ge n_0',\) \(t_1,t_2,u_1,u_2\ge 0\) and \(a_1,a_2,b_1,b_2\in A,\) \({\bar{m}}_{\vec {t}}^{\chi _n^{\scriptscriptstyle (K)}}(\vec {a}^{1/s_n}) \le K'' s_n\) and \({\bar{M}}_{\vec {t},\vec {u}}^{\chi _n^{\scriptscriptstyle (K)},\chi _n^{\scriptscriptstyle (K)}}(\vec {a}^{1/s_n},\vec {b}^{1/s_n})\le K'' s_n^4.\)

The exponents in Theorem 3.15 can be understood as follows. By Theorem 3.13, the first and second moments of a bounded one-vertex characteristic are of order \(s_n\) and \(s_n^3\), respectively. Therefore, for two-vertex characteristics, one can expect \(s_n^2\) and \(s_n^6\). Since \(\chi _n^{\scriptscriptstyle (K)}=\frac{1}{s_n}s_n\chi _n^{\scriptscriptstyle (K)}\) appears once in the first and twice in the second moment, we arrive at \(s_n\) and \(s_n^4\), respectively.
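As bookkeeping (a heuristic count of powers of \(s_n\), not a proof), each factor of \(\chi _n^{\scriptscriptstyle (K)}\) contributes \(1/s_n\) relative to a bounded characteristic, so that

\[
\underbrace{s_n^2}_{\text {two-vertex first moment}}\cdot \frac{1}{s_n}=s_n,\qquad \underbrace{s_n^6}_{\text {two-vertex second moment}}\cdot \left( \frac{1}{s_n}\right) ^2=s_n^4.
\]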

Theorem 3.16

(The effect of truncation) For every \(K>0,\) \({\bar{m}}_{\vec {t}}^{\chi _n-\chi _n^{\scriptscriptstyle (K)}}(\vec {1})=O(s_n),\) uniformly over \(t_1,t_2\). Furthermore, given \(\varepsilon >0,\) there exists \(K<\infty \) such that, for all n sufficiently large, \({\bar{m}}_{\vec {t}}^{\chi _n-\chi _n^{\scriptscriptstyle (K)}}(\vec {1})\le \varepsilon s_n\) whenever \(\lambda _n(1)[t_1\wedge t_2]\ge K.\)

Theorems 3.15 and 3.16 are proved in Sect. 7.

3.4 CTBP Growth and the Need for Freezing: Medium Time Scales

Theorem 3.11 shows how to analyze the weight \(W_n\) and hopcount \(H_n\) in terms of a Cox process driven by two (n-dependent) branching processes. In this section, we describe how this analysis works when the branching processes grow normally (i.e., exponentially with a fixed prefactor). The CTBP scaling results from Sect. 3.3 show, however, that the class of edge weights we consider gives rise to a more complicated scaling, with n-dependent prefactors that diverge to infinity. As we will explain, this causes a direct analysis to break down, and we define an appropriate freezing mechanism to overcome this obstacle. In Sect. 3.4.1, we explain what we mean by freezing and why we need it. In Sect. 3.4.2, we explain how FPP from two sources can be frozen, and later unfrozen, so that CTBP asymptotics can be used and collision times between the two FPP clusters can be analyzed.

3.4.1 Frozen FPP Exploration Process

Under reasonable hypotheses, a fixed CTBP grows exponentially under all measures of size. More precisely, there will be a single constant \(\lambda \) such that \(m^\eta _t(1)\sim A_\eta {\mathrm e}^{\lambda t}\) and \(M^{\eta ,\eta }_{t,t}(1,1)\sim B_\eta {\mathrm e}^{2\lambda t}\) for constants \(A_\eta ,B_\eta \), over a wide class of one-vertex characteristics \(\eta :\left[ 0,\infty \right) \rightarrow {\mathbb {R}}\), including the choice \(\eta =1\) that encodes the population size. Similarly, if \(\chi \) is a two-vertex characteristic, we can expect that \(m^\chi _{t,t}(\vec {1})\sim C_\chi {\mathrm e}^{2\lambda t}\) and \(M^\chi _{(t,t),(t,t)}\sim D_\chi {\mathrm e}^{4\lambda t}\). Taking \(\left| Z_{n,t}\right| =\tfrac{1}{n}z^\chi _{t,t}(1)\) as in (3.25), we would then expect the first point of the Cox process from Theorem 3.11 to appear at times t for which \({\mathrm e}^{2\lambda t}\approx n\). For such t, we have \({\mathrm e}^{\lambda t}\approx \sqrt{n}\), so that each branching process has of order \(\sqrt{n}\) individuals and a typical individual is of order \(\log n\) generations away from the root.

If these asymptotics hold, then a typical vertex v alive at such times t is unthinned whp. Indeed, for each of the \(\approx \log n\) ancestors, there are at most \(\approx \sqrt{n}\) other vertices that might have the same mark. Each pair of vertices has probability 1/n of having the same mark, leading to an upper bound of \(\approx \frac{\sqrt{n}\log n}{n}\) on the probability that v is thinned.
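To see how quickly this heuristic bound decays, the following minimal sketch evaluates \(\sqrt{n}\log n/n\) for a few values of n. The function name `thinning_bound` is hypothetical, and the symbols \(\approx \) in the text are replaced by equalities purely for illustration.

```python
import math


def thinning_bound(n: int) -> float:
    """Heuristic bound sqrt(n) * log(n) / n on the thinning probability.

    Assumes, as in the heuristic above, about log(n) ancestors, about
    sqrt(n) competing vertices per ancestor, and probability 1/n that
    a given pair of vertices shares a mark.
    """
    ancestors = math.log(n)        # generations between v and the root
    competitors = math.sqrt(n)     # other vertices alive at such times
    pair_probability = 1.0 / n     # chance that two vertices share a mark
    return ancestors * competitors * pair_probability


if __name__ == "__main__":
    for n in (10**4, 10**6, 10**8):
        print(n, thinning_bound(n))
```

The bound equals \(\log n/\sqrt{n}\), which is roughly 0.09 for \(n=10^4\) and roughly 0.002 for \(n=10^8\), so it tends to zero, although only polynomially fast in n.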

In particular, the first point of \({\mathcal {P}}^{\scriptscriptstyle \mathsf{SWT}}_n\) coincides whp with \((T_\mathrm{coll}^{\scriptscriptstyle ({\mathcal {P}}_n,\mathsf{SWT})},V_\mathrm{coll}^{\scriptscriptstyle (1,\mathsf{SWT})},V_\mathrm{coll}^{\scriptscriptstyle (2,\mathsf{SWT})})\), the first unthinned point of \({\mathcal {P}}^{\scriptscriptstyle \mathsf{SWT}}_n\). Using Theorem 3.11, it is therefore possible to derive asymptotics of \(W_n\) and \(H_n\) by analysing the first point of \({\mathcal {P}}^{\scriptscriptstyle \mathsf{SWT}}_n\), which in turn can be done by a first- and second-moment analysis of the intensity measure \(Z_{n,t}^{\scriptscriptstyle \mathsf{SWT}}\).

In the setting of this paper, however, this analysis breaks down. The branching processes now themselves depend on n, and their behaviour becomes irregular when n is large. One-vertex characteristics satisfy \(m^\eta _t(1) \sim A'_\eta s_n {\mathrm e}^{\lambda _n(1) t}\) and \(M^{\eta ,\eta }_{t,t}(1,1) \sim B'_\eta s_n^3 {\mathrm e}^{2\lambda _n(1) t}\). The mismatch of prefactors, \(s_n\) versus \(s_n^3\), suggests that the branching process has probability of order \(1/s_n\) of growing to size \(s_n^2\) in a time of order \(1/\lambda _n(1)\approx f_n(1)\), and that this unlikely event is important to the long-run growth of the branching process.

We can balance the mismatched first and second moments by aggregating \(s_n\) independent copies of the branching process. The sum of \(s_n\) independent copies of \(z^\eta _t(1)\) will have mean of order \(A'_\eta s_n^2 {\mathrm e}^{\lambda _n(1)t}\) and second moment of order \(B''_\eta s_n^4 {\mathrm e}^{2\lambda _n(1)t}\), where now the second moment is on the order of the square of the mean. [With proper attention to correlations, it is also possible to show that the two-vertex characteristics \(z^{\chi _n}_{t,t}(1)\), summed over two groups of \(s_n\) independent branching processes each, will have mean of order \(C' s_n^3 {\mathrm e}^{2\lambda _n(1)t}\) and second moment \(D' s_n^6 {\mathrm e}^{4\lambda _n(1)t}\).] This balancing makes a first- and second-moment analysis possible.
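The balancing can be made explicit by a short computation, treating \(s_n\) as an integer for simplicity. Let \(X_1,\dotsc ,X_{s_n}\) be independent copies of \(z^\eta _t(1)\) and \(S=\sum _{i=1}^{s_n}X_i\). Writing \(m=m^\eta _t(1)\) and \(M=M^{\eta ,\eta }_{t,t}(1,1)\), independence gives

\[
{\mathbb {E}}(S)=s_n m\sim A'_\eta s_n^2{\mathrm e}^{\lambda _n(1)t},\qquad {\mathbb {E}}(S^2)=s_n M+s_n(s_n-1)m^2\sim \left( B'_\eta +(A'_\eta )^2\right) s_n^4{\mathrm e}^{2\lambda _n(1)t}.
\]

Under the one-vertex asymptotics stated above, both the diagonal term \(s_n M\) and the off-diagonal term \(s_n(s_n-1)m^2\) are of order \(s_n^4{\mathrm e}^{2\lambda _n(1)t}\), so \({\mathbb {E}}(S^2)\) is comparable to \({\mathbb {E}}(S)^2\). In this sketch the constant is \(B''_\eta =B'_\eta +(A'_\eta )^2\).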

To achieve the same effect starting from two branching processes, wait until each branching process is large enough that it has of order \(s_n\) new children in time of order 1. Then the collection of all individuals born after that time (and their descendants) will again have balanced first and second moments. However, as we will see, the time when each branching process becomes large enough is highly variable. In particular, by the time the slower-growing of the two branching processes is large enough, the faster-growing branching process will have become much too large. For this reason, we will need to freeze the faster-growing branching process to allow the other to catch up. In the following sections we explain how freezing affects the FPP exploration process, the coupling to the PWIT, the Cox process representation for the optimal path, and the effect of thinning. We now first explain precisely how we freeze our two branching processes.