A Practical EEG-Based Human-Machine Interface to Online Control an Upper-Limb Assist Robot

- Shien-Ming Wu School of Intelligent Engineering, South China University of Technology, Guangzhou, China

Background and Objective: Electroencephalography (EEG) can be used to control machines according to human intention, which is especially valuable for paralyzed people during rehabilitation exercises or daily activities. Some effort has been made in this direction, but existing methods are not yet practical enough for online use. To improve practicality, this study proposes an efficient control method based on P300, a specific EEG component. Moreover, we developed an upper-limb assist robot system with this method to verify it, with the aim of genuinely helping paralyzed people.

Methods: We chose P300, which is widely available and readily accepted by users, to obtain the user's intention. Preprocessing and spatial enhancement were first applied to the raw EEG data. Then, three approaches (linear discriminant analysis, support vector machine, and multilayer perceptron) were compared in detail to build an efficient P300 detector, whose output was used as a command to control the assist robot.

Results: The proposed method achieved an accuracy of 94.43% in the offline test with data from eight participants. In the online test it showed sufficient reliability and robustness, with an accuracy of 80.83% and an information transfer rate of 15.42 bits/min. Furthermore, the extended test indicated that the method generalizes well and can be used in more complex application scenarios.

Conclusion: The results show that the proposed method has great potential to help paralyzed people easily control an assist robot to perform a range of tasks.

Introduction

Electroencephalography (EEG) is a meaningful way to explore the workings of the brain, which is made up of neurons (Noctor et al., 2001). Because the transfer of information between neurons produces changes in electrical potential, EEG can also reflect human intention under different physiological conditions (Schomer and da Silva, 2012). High-resolution EEG (Mitzdorf, 1985) is quickly becoming a powerful tool for human-machine interfaces (HMIs), with which people can operate external equipment through internal intention instead of their own limbs (Gao et al., 2003). This technology plays a vital role in freeing disabled people from having to rely on others all the time. Moreover, robots controlled by an EEG-based HMI can assist paralyzed people with neuromuscular disorders such as stroke or amyotrophic lateral sclerosis in rehabilitation training (Prasad et al., 2010; Ang et al., 2015). A large body of evidence shows that EEG-based assist robots effectively promote patients' recovery (Bhattacharyya et al., 2014; Chaudhary et al., 2016; Alia et al., 2017; Monge-Pereira et al., 2017; Cervera et al., 2018), essentially by helping reconstruct the neural circuit between the brain and the muscles, compared with the traditional method of repetitive motion (Dobkin, 2004; Belagaje, 2017).

Many control methods for assist robots have emerged, but challenges remain, such as user fatigue, excessive pre-training, and poor online performance (Tariq et al., 2018), which raises the question of how assist robots can be made more practical. Steady-state visually evoked potentials (SSVEPs) have been adopted to extract effective components from EEG, which contains a large amount of cluttered information. An SSVEP-controlled assist robot has been developed to perform pick-and-place tasks (Chen et al., 2019), in which four targets flickering at 30, 31, 32, and 33 Hz are shown to the user to trigger different commands. Similarly, SSVEPs have been used to control functional electrical stimulation for rehabilitation (Han-Pang, 2015). This paradigm offers a good information transfer rate (ITR) and accuracy (Vialatte et al., 2010; Chen et al., 2019), but it is restricted by the limited number of control commands (Norcia et al., 2015; Zhao et al., 2015), and the constant flickering easily causes fatigue, which is inappropriate for people undergoing rehabilitation (Duszyk et al., 2014). Other research has turned to motor imagery (MI) because it closely links brain commands to body movement. An MI system for post-stroke rehabilitation exercises (Wang et al., 2009) controls a robot that drives the arm while subjects imagine their hands moving. In another work, left, right, up, and down movements of a robot arm are driven by imagining movement of the left hand, the right hand, and both hands, and by relaxing both hands, respectively (Meng et al., 2016). Nevertheless, MI is not practical enough: imagined movements vary considerably between individuals and are not well defined, so participants need extra pre-training and accuracy is lower (Dahm and Rieger, 2016).

Event-related potentials (ERPs), brain voltage fluctuations in response to specific stimuli such as images or sounds (Sams et al., 1985; Picton et al., 2000), provide a more straightforward means of controlling an assist robot. A lower-limb prosthesis based on P300, the peak observed about 300 ms (250–500 ms) after a specific event (Picton, 1992; Polich, 2007), has been developed to help people walk (Duvinage et al., 2012); four letters are used as stimuli to represent low-, medium-, and high-speed states and a stop state. As one of the most easily observed ERPs, P300 is also used for spelling: the rows and columns of a 6 × 6 alphabet matrix flash repeatedly while the subject focuses on a target letter, which elicits the P300 response (Velasco-Álvarez et al., 2019). We therefore chose P300, because it can be observed reliably in most people and is less prone to causing fatigue.

Researchers have tried several methods to detect P300 (Raksha et al., 2018; Tal and Friedman, 2019; de Arancibia et al., 2020), but there is no consensus yet on which method is acceptable for practical online control of assist robots, given the strict requirements for accuracy and stability. Many studies have reported impressive performance (Cecotti and Graser, 2011), but only in offline experiments (Kobayashi and Sato, 2017; Mao et al., 2019; Kundu and Ari, 2020) or on BCI competition data (Kundu and Ari, 2018; Arican and Polat, 2019; Ramele et al., 2019). However, offline results cannot safely be transferred directly to real life, because complex objective factors such as impedance (Ferree et al., 2001) and specificity (Chowdhury et al., 2015) may cause serious differences. Some researchers have also conducted online experiments, but many of them focus on new frameworks or user interfaces while overlooking the performance of the detection method itself (Achanccaray et al., 2019; Lu et al., 2019; Mao et al., 2019).

Considering these issues, we propose a highly practical control system for assist robots with high accuracy and good ITR, selected after comparing three P300 detection methods offline and online. With this method, we built a complete upper-limb assist robot to verify the feasibility and effectiveness of the system, as well as an exploratory mobile robot, controlled jointly with computer vision, to confirm its robustness and generalizability.

The main contributions of this research are as follows: (i) a convenient and efficient EEG-based method for steadily controlling assist robots to help disabled people in practice was proposed; (ii) with this method, we developed a safe robot that can assist users in performing up to 36 preset upper-limb movements such as rehabilitation exercises; (iii) both offline and online tests were performed to verify the practicability of the finalized system, with detailed comparisons of three classifiers as the intention detector; and (iv) a novel assistive mobile robot under shared control of our method and computer vision provides a reference for future research.

The rest of this paper is organized as follows. Section Materials and Methods introduces data collection, the framework of our control method, the theoretical details of the three compared methods, the composition of the upper-limb assist robot, and the extended test. The experiments and results are presented in section Results. Finally, the study is discussed in section Discussion and concluded in section Conclusions.

Materials and Methods

Data Acquisition

Eight healthy volunteers (six males and two females) aged 19 to 28 years participated in the experiments. None of them had prior experience with EEG experiments, and all gave informed consent beforehand. All procedures were approved by the Ethics Committee of Guangzhou First People's Hospital. Each participant completed the experiment in less than 2 h. EEG signals were recorded from 64 scalp electrodes with a BrainCap MR (Brain Products GmbH) and amplified with a BrainAmp MR (Brain Products GmbH), with all impedances kept at approximately 20 kΩ.

The P300 speller paradigm (Farwell and Donchin, 1988), a 6 × 6 matrix of 26 letters and 10 digits, was used for stimulation. Each participant watched a 15.6" LCD monitor 0.7 m away that displayed the character matrix and focused on one character. Characters flashed a whole row or column at a time, and each of the 6 rows and 6 columns flashed once per repetition, giving 12 flashes per repetition. Twelve repetitions were used to recognize one character. In the training portion of the experiment, the rows and columns flashed in random order with a flash duration of 100 ms and a no-flash duration of 50 ms, giving an interstimulus interval (ISI) of 150 ms. The inter-repetition delay was set to 0.5 s and the inter-character delay to 5 s. Fifteen characters were collected to train the classifiers, which took each participant 476.5 s. Because only the row and the column containing the attended character are targets, each repetition yields 2 target and 10 no-target samples. The resulting participant-specific dataset, which reflects that participant's own recording conditions, contains 360 target samples and 1,800 no-target samples, 2,160 samples in total.
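The sample counts and session length above follow directly from the stimulus timing. The sketch below reproduces them; it assumes (our inference, not stated in the text) that no inter-repetition delay follows the last repetition of a character and no inter-character delay follows the last character, which yields exactly 476.5 s. The function names are hypothetical.

```python
def character_duration(reps=12, flashes_per_rep=12, isi=0.150, inter_rep=0.5):
    """Seconds needed to present one character: every flash, plus the pauses
    between consecutive repetitions (assumed: none after the last repetition)."""
    return reps * flashes_per_rep * isi + (reps - 1) * inter_rep


def session_duration(n_chars=15, inter_char=5.0, **timing):
    """Total training time; inter-character delays occur only between characters."""
    return n_chars * character_duration(**timing) + (n_chars - 1) * inter_char


def sample_counts(n_chars=15, reps=12, n_rows=6, n_cols=6):
    """Each repetition flashes every row and column once. Only the row and the
    column containing the attended character are Target flashes (2 per repetition)."""
    total = n_chars * reps * (n_rows + n_cols)
    targets = n_chars * reps * 2
    return targets, total - targets, total


print(round(session_duration(), 3))  # 476.5
print(sample_counts())               # (360, 1800, 2160)
```

The same helper also gives the per-character time for the faster online settings described later (ISI 75 ms, 0.25 s between repetitions): `character_duration(isi=0.075, inter_rep=0.25)` is 13.55 s under the same assumption.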

Method

The schematic diagram of the proposed method is shown in Figure 1. It consists of four parts: the user module, which presents stimuli to the participant and acquires the EEG signal; the signal processing part, which performs pre-processing and feature enhancement; the classifier, which detects P300 in continuous EEG; and the execution module, which controls the robot. In the short training phase, the acquired data were pre-processed, and a spatial filter was used to enhance the features; the processed data were then used to train the P300 classifier. In the online test phase, the data were classified to obtain the user's intention, which served as the control command for the assist robot.
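The text does not detail how per-flash classifier outputs become a selected character and then a command. A common approach in the row/column paradigm, assumed here, is to sum the scores of each row and each column over all repetitions and pick the highest-scoring row and column. The matrix layout (A to Z, then 0 to 9) and the command table are hypothetical; only the example of "O" triggering orange grasping appears in this paper.

```python
import numpy as np

# Hypothetical 6 x 6 layout: the 26 letters followed by the digits 0-9.
SYMBOLS = [chr(ord("A") + i) for i in range(26)] + [str(d) for d in range(10)]
MATRIX = np.array(SYMBOLS).reshape(6, 6)

# Hypothetical command table; characters without an entry trigger no motion.
COMMANDS = {"O": "grasp_orange"}


def decode_character(scores, flash_ids):
    """scores: one classifier output per flash (higher = more P300-like).
    flash_ids: which line flashed, 0-5 for rows and 6-11 for columns."""
    totals = np.zeros(12)
    # Accumulate the evidence for each row and column over all repetitions.
    np.add.at(totals, np.asarray(flash_ids), np.asarray(scores, dtype=float))
    row = int(np.argmax(totals[:6]))
    col = int(np.argmax(totals[6:]))
    return str(MATRIX[row, col])


def to_command(char):
    """Map a decoded character to a robot command, or None if unassigned."""
    return COMMANDS.get(char)
```

For example, with 12 repetitions of all 12 flashes and P300-like scores on row 2 and column 2 (flash ids 2 and 8), the decoder returns "O" and `to_command` yields `"grasp_orange"`.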

Pre-processing and Data Enhancement

First, the signals, sampled at 500 Hz, were temporally filtered with a fourth-order Butterworth band-pass filter with a lower cut-off frequency of 1 Hz and an upper cut-off frequency of 20 Hz. Then, the signals were down-sampled by a factor of four (to 125 Hz) to reduce the computational cost. Next, the signal was divided into 500 ms epochs, comprising a 250 ms window to preserve the P300 wave and a 250 ms interval to avoid overlap.
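A minimal offline sketch of these three steps with SciPy. Zero-phase filtering (`sosfiltfilt`) is our assumption; an online system would need a causal filter such as `sosfilt`. Note that SciPy's `butter(4, ..., btype="bandpass")` produces an eighth-order overall band-pass filter, so whether "fourth-order" means N = 4 or the overall order is ambiguous. At 125 Hz a 500 ms epoch is 62.5 samples; the sketch truncates it to 62.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 500       # original sampling rate in Hz (from the text)
DOWN = 4       # down-sampling factor (from the text)
EPOCH_S = 0.5  # epoch length in seconds (from the text)


def preprocess(raw, onsets_s, fs=FS, down=DOWN, epoch_s=EPOCH_S):
    """raw: (n_channels, n_samples) EEG; onsets_s: stimulus onset times in seconds.
    Returns epochs shaped (n_events, n_channels, n_epoch_samples)."""
    # Butterworth band-pass 1-20 Hz. With btype="bandpass", N=4 gives an overall
    # order of 8 in SciPy; zero-phase filtering is an offline-only assumption.
    sos = butter(4, [1.0, 20.0], btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, np.asarray(raw, dtype=float), axis=-1)
    # Keeping every 4th sample is acceptable here: the 20 Hz upper edge lies far
    # below the new Nyquist frequency (125 Hz / 2 = 62.5 Hz).
    ds = filtered[:, ::down]
    fs_ds = fs / down
    n_epoch = int(epoch_s * fs_ds)  # 62 samples (62.5 truncated)
    starts = np.round(np.asarray(onsets_s, dtype=float) * fs_ds).astype(int)
    starts = starts[starts + n_epoch <= ds.shape[1]]  # drop epochs running past the end
    return np.stack([ds[:, s:s + n_epoch] for s in starts])
```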

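After the above steps, a spatial filter based on the xDAWN algorithm (Rivet et al., 2009) was used to enhance the evoked potentials in the data, that is, to improve the signal-to-signal-plus-noise ratio (SSNR). The preprocessed EEG is modeled as:

$$\mathbf{EEG}_{pp} = \mathbf{D}_1\mathbf{A}_1 + \mathbf{D}_2\mathbf{A}_2 + \mathbf{N}$$

where EEGpp is the matrix of the preprocessed EEG signal (time samples × channels). A1 and A2 are the responses of the two states, with and without an evoked potential. D1 and D2 are Toeplitz matrices whose first columns are zero except at the stimulus onsets of the corresponding state. N is the remaining noise. A1 can be factorized as $\mathbf{A}_1 = \mathbf{a}_1\mathbf{W}_1^{\top}$, where a1 is the temporal distribution and W1 is the spatial distribution. The SSNR can then be defined as:

$$\mathrm{SSNR}(\mathbf{U}) = \frac{E_1}{E_E} = \frac{\mathbb{E}\left[\operatorname{tr}\left(\mathbf{U}^{\top}\mathbf{A}_1^{\top}\mathbf{D}_1^{\top}\mathbf{D}_1\mathbf{A}_1\mathbf{U}\right)\right]}{\mathbb{E}\left[\operatorname{tr}\left(\mathbf{U}^{\top}\mathbf{EEG}_{pp}^{\top}\mathbf{EEG}_{pp}\mathbf{U}\right)\right]}$$

where U is a spatial filter, and E1 and EE are the expectations of the filtered evoked-response energy and of the filtered total signal energy, respectively. Assuming that A1 is uncorrelated with the other terms, the SSNR can be rewritten as:

$$\mathrm{SSNR}(\mathbf{U}) = \frac{E_1}{E_1 + E_N}$$

where $E_N = \mathbb{E}\left[\operatorname{tr}\left(\mathbf{U}^{\top}(\mathbf{D}_2\mathbf{A}_2 + \mathbf{N})^{\top}(\mathbf{D}_2\mathbf{A}_2 + \mathbf{N})\mathbf{U}\right)\right]$ is the expectation of the filtered energy of the remaining terms. The spatial filter is obtained by maximizing the SSNR. A1 is first estimated by least squares with $\mathbf{D} = [\mathbf{D}_1\ \mathbf{D}_2]$:

$$\begin{bmatrix}\hat{\mathbf{A}}_1 \\ \hat{\mathbf{A}}_2\end{bmatrix} = \left(\mathbf{D}^{\top}\mathbf{D}\right)^{-1}\mathbf{D}^{\top}\mathbf{EEG}_{pp}$$

and the estimated spatial filter is then:

$$\hat{\mathbf{U}} = \arg\max_{\mathbf{U}} \frac{\operatorname{tr}\left(\mathbf{U}^{\top}\hat{\mathbf{A}}_1^{\top}\mathbf{D}_1^{\top}\mathbf{D}_1\hat{\mathbf{A}}_1\mathbf{U}\right)}{\operatorname{tr}\left(\mathbf{U}^{\top}\mathbf{EEG}_{pp}^{\top}\mathbf{EEG}_{pp}\mathbf{U}\right)}$$

The final output after pre-processing and spatial enhancement is:

$$\mathbf{EEG}_{p} = \mathbf{EEG}_{pp}\hat{\mathbf{U}}$$

where EEGp is the matrix of the processed EEG signal. Û keeps three spatial filters, so the signal space is compressed to three dimensions, which makes the classifiers in the next stage substantially more efficient.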
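A simplified xDAWN sketch, for illustration only. It estimates the evoked response by averaging Target epochs instead of using the Toeplitz least-squares estimate (so overlap between neighbouring epochs is not corrected), and it takes the top generalized eigenvectors of the pair (evoked-response covariance, total-data covariance), each of which maximizes the ratio u^T S1 u / u^T SX u. Function names are hypothetical; this is not the authors' OpenViBE/MATLAB implementation.

```python
import numpy as np
from scipy.linalg import eigh


def xdawn_filters(epochs, labels, n_filters=3):
    """epochs: (n_epochs, n_channels, n_times); labels: 1 = Target, 0 = No Target.
    Returns U with shape (n_channels, n_filters), best filter first."""
    X = np.asarray(epochs, dtype=float)
    y = np.asarray(labels)
    # Simplification: estimate the evoked response A1 by averaging Target epochs.
    A1 = X[y == 1].mean(axis=0)                   # (n_channels, n_times)
    signal_cov = A1 @ A1.T                        # energy of the evoked response
    concat = np.concatenate(list(X), axis=1)      # (n_channels, n_epochs * n_times)
    data_cov = concat @ concat.T / X.shape[0]     # signal-plus-noise energy per epoch
    # Generalized eigenvectors maximize u^T signal_cov u / u^T data_cov u.
    evals, evecs = eigh(signal_cov, data_cov)     # eigenvalues in ascending order
    return evecs[:, np.argsort(evals)[::-1][:n_filters]]


def apply_filters(epochs, U):
    """Project every epoch onto the spatial filters: (n_epochs, n_filters, n_times)."""
    return np.einsum("ck,nct->nkt", U, np.asarray(epochs, dtype=float))
```

With 64 channels and the default `n_filters=3`, each epoch shrinks from 64 to 3 rows, which matches the three-dimensional output described above.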

Owing to the good performance of xDAWN, channel selection no longer seriously affected the experiments. All 64 channels were used to provide sufficient information, since the compressed three-dimensional output keeps the computational load on the classifier low. Moreover, impedances of approximately 20 kΩ were acceptable, which cut down the preparation time before the experiment.

Classifiers

EEG has a complex structure and is easily affected by slight changes in human thought. Therefore, a highly efficient and robust classifier is essential for P300 detection. Several classification methods have been used for P300 detection, such as linear discriminant analysis (LDA), random forest (RF), and convolutional neural networks (Cecotti and Graser, 2011; Duvinage et al., 2012; Akram et al., 2015; Hong and Khan, 2017). Good results have been achieved on some datasets, but testing with real-time data remains insufficient. Many external factors affect the signal quality of non-invasive EEG, so it remains unclear which classifier is most suitable for online control of an assist robot. In addition, long training on large amounts of data with a complex classifier is unacceptable in rehabilitation scenarios. Classifier design is therefore a central problem in our work.

To obtain a method that detects the P300 signal accurately and quickly, we used three types of classifiers, support vector machine (SVM), LDA, and multilayer perceptron (MLP), to classify the data into two classes: Target, meaning a P300 signal was detected, and No Target, meaning no P300 signal was detected.

For every participant, only the data of 15 characters, acquired as described in section Data Acquisition, were used as the training set. Five-fold cross-validation was used to check for overfitting. The structures and parameter settings of the three classifiers are explained in detail below.
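A minimal scikit-learn sketch of this comparison protocol, assuming the features are the flattened xDAWN outputs of each epoch (3 filters × time samples). The SVM kernel and C, the MLP layer sizes, feature standardization, and stratified shuffling of the folds are our assumptions, not settings reported in the paper.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_classifiers(features, labels, seed=0):
    """features: (n_samples, n_features); labels: 1 = Target, 0 = No Target.
    Returns {name: (mean accuracy, std accuracy)} over 5 folds."""
    models = {
        "LDA": LinearDiscriminantAnalysis(),
        # Hypothetical settings: RBF kernel with C=1.0.
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0)),
        # Two tanh hidden layers as in the text; the sizes (32, 16) are hypothetical.
        "MLP": make_pipeline(
            StandardScaler(),
            MLPClassifier(hidden_layer_sizes=(32, 16), activation="tanh",
                          max_iter=500, random_state=seed),
        ),
    }
    # Five folds; each fold serves once as test data, the other four for training.
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    results = {}
    for name, model in models.items():
        scores = cross_val_score(model, features, labels, cv=cv, scoring="accuracy")
        results[name] = (float(np.mean(scores)), float(np.std(scores)))
    return results
```

Stratified folds keep the 1:5 ratio of Target to No Target samples in every fold, which matters with only 360 Target samples per participant.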

LDA Classifier

Linear discriminant analysis is a classification algorithm that performs well on EEG signals (Xu et al., 2019) despite its simple structure. It looks for a projection vector that preserves as much of the information discriminating class Target from class No Target as possible, that is, one that makes samples of the same class more aggregated and samples of different classes more separated. LDA was used to verify whether a cost-effective linear classifier could work well on EEG signals; the low input dimension also made the dimensionality-reduction mapping smoother. For better classification results, the following criterion was used:

$$J(\mathbf{V}_{EEG}) = \frac{\mathbf{V}_{EEG}^{\top}\mathbf{S}_b\mathbf{V}_{EEG}}{\mathbf{V}_{EEG}^{\top}\mathbf{S}_w\mathbf{V}_{EEG}}$$

where VEEG is the vector that projects the output of the EEG signal processing part onto a low-dimensional sample space for discrimination; Sb is the between-class scatter, representing the distance between the class means; and Sw is the within-class scatter, representing the variance within each class. These two scatters push Target away from No Target while keeping each class compact. The VEEG that maximizes J(VEEG) was found by setting the partial derivative to zero:

$$\frac{\partial J(\mathbf{V}_{EEG})}{\partial \mathbf{V}_{EEG}} = 0 \;\Longrightarrow\; \mathbf{S}_b\mathbf{V}_{EEG} = J(\mathbf{V}_{EEG})\,\mathbf{S}_w\mathbf{V}_{EEG}$$

Solving the characteristic equation $\det\left(\mathbf{S}_b - J\,\mathbf{S}_w\right) = 0$ yields the values of J(VEEG); the VEEG associated with the largest value can then be used directly for classification.
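A NumPy sketch of the two-class Fisher solution. For two classes the between-class scatter Sb = (m1 - m0)(m1 - m0)^T has rank one, so the direction maximizing J(v) = v^T Sb v / v^T Sw v is proportional to Sw^{-1}(m1 - m0), and no explicit eigen-decomposition is needed. The midpoint decision threshold is our own simple assumption; the paper does not state how the threshold was set.

```python
import numpy as np


def _scatters(X, y):
    """Between-class (rank-one) and within-class scatter matrices for two classes."""
    X1, X0 = X[y == 1], X[y == 0]
    d = X1.mean(axis=0) - X0.mean(axis=0)
    Sb = np.outer(d, d)
    Sw = sum((Xc - Xc.mean(axis=0)).T @ (Xc - Xc.mean(axis=0)) for Xc in (X1, X0))
    return Sb, Sw, d


def fisher_criterion(v, X, y):
    """J(v) = (v^T Sb v) / (v^T Sw v)."""
    Sb, Sw, _ = _scatters(np.asarray(X, dtype=float), np.asarray(y))
    return (v @ Sb @ v) / (v @ Sw @ v)


def fit_lda(X, y):
    """Return the unit projection vector maximizing J and a decision threshold."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    _, Sw, d = _scatters(X, y)
    # Sb v is always parallel to d, so the largest-J solution of Sb v = J Sw v
    # is v proportional to Sw^{-1} d.
    v = np.linalg.solve(Sw, d)
    v /= np.linalg.norm(v)
    # Hypothetical threshold: midpoint of the projected class means.
    thr = 0.5 * (X[y == 1].mean(axis=0) @ v + X[y == 0].mean(axis=0) @ v)
    return v, thr


def predict_lda(X, v, thr):
    """1 = Target (projection above threshold), 0 = No Target."""
    return (np.asarray(X, dtype=float) @ v > thr).astype(int)
```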

SVM Classifier

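Support vector machine is a supervised machine learning method used for classification or regression. Like LDA, it separates the two classes with a hyperplane, but the processed EEG signal is first mapped to a high-dimensional feature space. Because EEG signals differ markedly between individuals, SVM is particularly well suited: it needs few parameters, its convex training problem has no local optima, and it has low generalization error.

The training data are given as $\{(\mathbf{EEG}_i, L_i)\}_{i=1}^{M}$, in which EEGi is a processed EEG signal segment and Li ∈ {−1, 1} is the class label. The hyperplane is determined by maximizing the separation margin between class Target and class No Target while minimizing the classification error. For separable data this can be expressed mathematically as:

$$\min_{\mathbf{w},\,b}\ \frac{1}{2}\lVert\mathbf{w}\rVert^{2} \quad \text{s.t.}\quad L_i\left(\mathbf{w}^{\top}\varphi(\mathbf{EEG}_i) + b\right) \ge 1,\quad i = 1,\dots,M$$

where w is the weight vector, b is the bias, and φ(·) is the mapping function. Allowing classification errors, the problem becomes:

$$\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \frac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_{i=1}^{M}\xi_i \quad \text{s.t.}\quad L_i\left(\mathbf{w}^{\top}\varphi(\mathbf{EEG}_i) + b\right) \ge 1 - \xi_i,\quad \xi_i \ge 0$$

where ξi ≥ 0 is the slack variable representing the magnitude of the error and C > 0 is the penalty parameter. Further, the dual representation obtained with the Lagrange multiplier method is:

$$\max_{\mathbf{a}}\ \sum_{n=1}^{M} a_n - \frac{1}{2}\sum_{n=1}^{M}\sum_{m=1}^{M} a_n a_m L_n L_m\,\varphi(\mathbf{EEG}_n)^{\top}\varphi(\mathbf{EEG}_m) \quad \text{s.t.}\quad \sum_{n=1}^{M} a_n L_n = 0,\quad 0 \le a_n \le C$$

where the multipliers satisfy an ≥ 0 (and are bounded above by C). A non-linear kernel $k(\mathbf{EEG}_n, \mathbf{EEG}_m)$ was used in the classifier in place of the inner product $\varphi(\mathbf{EEG}_n)^{\top}\varphi(\mathbf{EEG}_m)$, which makes it more robust in the linearly inseparable case and distinguishes it from the linear LDA method. The decision is then made with the hyperplane:

$$f(\mathbf{EEG}) = \operatorname{sign}\left(\sum_{n=1}^{M} a_n L_n\,k(\mathbf{EEG}_n, \mathbf{EEG}) + b\right)$$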
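The dual form means a trained SVM decides with f(x) = sum_n a_n L_n k(x_n, x) + b over the support vectors only. The sketch below checks this against scikit-learn's `SVC`, whose `dual_coef_` holds a_n L_n and `intercept_` holds b. It assumes an RBF kernel with a hypothetical width `gamma = 0.2`, because the kernel used in the paper is not recoverable from the text.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.svm import SVC


def dual_decision(clf, X, gamma):
    """f(x) = sum_n a_n L_n k(x_n, x) + b for a fitted binary RBF SVC."""
    K = rbf_kernel(np.asarray(X, dtype=float), clf.support_vectors_, gamma=gamma)
    return K @ clf.dual_coef_.ravel() + clf.intercept_[0]


rng = np.random.default_rng(2)
X = rng.standard_normal((200, 6))
y = (X[:, 0] * X[:, 1] > 0).astype(int)   # XOR-like labels: not linearly separable
gamma = 0.2                               # hypothetical kernel width
clf = SVC(kernel="rbf", C=1.0, gamma=gamma).fit(X, y)
X_new = rng.standard_normal((5, 6))
print(np.sign(dual_decision(clf, X_new, gamma)))  # +1 -> clf.classes_[1], -1 -> classes_[0]
```

The XOR-like labels illustrate why the non-linear kernel matters: no single hyperplane in the original space separates them, which is the linearly inseparable case mentioned above.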

MLP Classifier

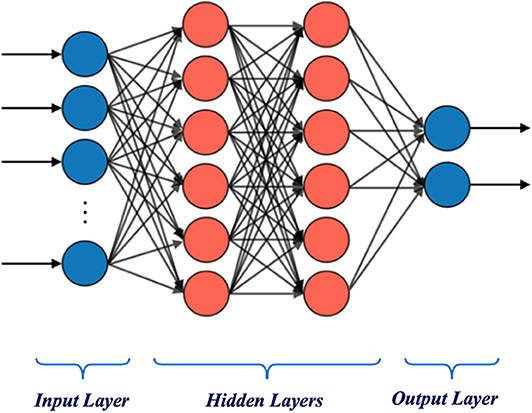

Recently, deep learning based on neural networks has achieved remarkable performance on some classification problems (Lecun et al., 2015). However, very deep networks trained on small datasets are prone to problems such as overfitting. MLP, a classic model with most of the characteristics of neural networks, was therefore chosen for comparison. As shown in Figure 2, the MLP consists of three parts, with layers of neurons that each perform a linear weighting. Similar to the step-by-step transfer of information in the brain, the processed EEG data enter at the input layer, a few abstract features are extracted in the two hidden layers, and these features are integrated by the output layer to obtain the final classification result.

The tanh function was used as the activation function of the neurons to introduce non-linearity after each linear operation:

$$\mathbf{F}_{EEG}^{(l+1)} = \tanh\left(\mathbf{W}^{(l)}\mathbf{F}_{EEG}^{(l)} + \mathbf{b}^{(l)}\right), \qquad \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$$

where FEEG indicates the abstract features of EEG in different layers, and W(l) and b(l) are the weights and biases of layer l. During training, the weights and biases were updated by gradient descent, with gradients computed by backpropagation. Through this iterative optimization, the network learned to classify each sample as Target or No Target.
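To make the layer-by-layer computation concrete, the sketch below recomputes a fitted scikit-learn `MLPClassifier` by hand: two tanh hidden layers, each a linear weighting followed by tanh, then a single logistic output unit giving P(Target). scikit-learn uses the row-vector form F @ W + b, the transpose of the column form W F + b. The layer sizes (16, 8) and the synthetic data are hypothetical; the paper does not report its MLP dimensions here.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier


def mlp_forward(clf, X):
    """Recompute P(Target | x) from a fitted binary MLPClassifier with tanh units."""
    F = np.asarray(X, dtype=float)
    for W, b in zip(clf.coefs_[:-1], clf.intercepts_[:-1]):
        F = np.tanh(F @ W + b)                    # hidden layer: weighting, then tanh
    z = F @ clf.coefs_[-1] + clf.intercepts_[-1]  # single output unit for two classes
    return 1.0 / (1.0 + np.exp(-z.ravel()))       # logistic output activation


rng = np.random.default_rng(3)
X = rng.standard_normal((200, 10))
y = (X[:, :3].sum(axis=1) > 0).astype(int)
# Two tanh hidden layers as in the text; the sizes (16, 8) are hypothetical.
clf = MLPClassifier(hidden_layer_sizes=(16, 8), activation="tanh",
                    max_iter=300, random_state=0).fit(X, y)
```

Training itself (`fit`) performs the gradient-descent and backpropagation updates; the hand-written forward pass only reproduces the prediction step.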

Upper-Limb Assist Robot System and Practical Test

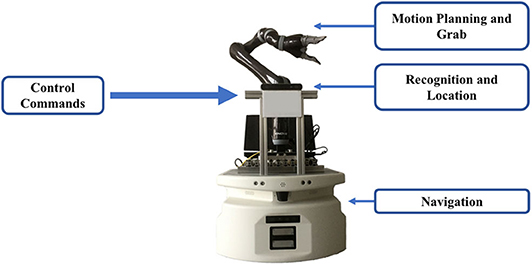

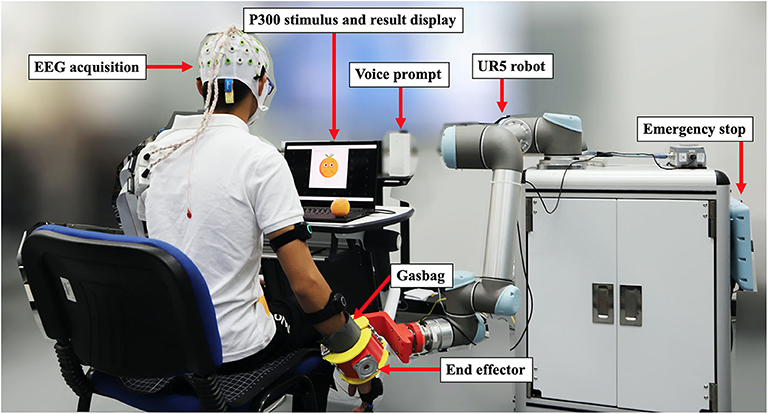

We built an integrated control system to verify the actual performance of our method, together with an upper-limb assist robot that can be used in practice (Figure 3). We hope to use it to replace the repetitive mechanical rehabilitation commonly used in hospitals, which yields only moderate results while requiring substantial manpower. For example, when the participant keeps focusing on the letter “O,” the system detects it, and the screen displays the corresponding picture together with a voice prompt reminding the user that the assistive movement of grasping an orange is about to be performed. The robot then helps the participant pick up the orange in front of him and put it down in another place. Different rehabilitation actions could also be programmed for each of the 36 characters. In addition, the number and form of the P300 stimuli, such as vivid pictures instead of characters, could be customized according to actual needs.

Figure 3. The upper-limb assist robot controlled by our method for testing. Written informed consent was obtained from the individual for the publication of this image.

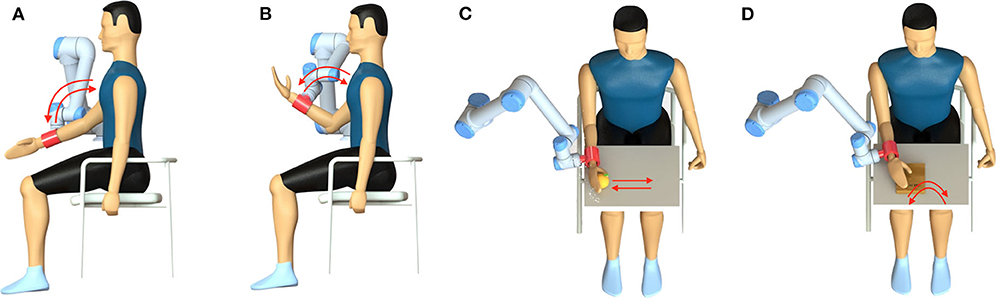

The control module was a laptop with an Intel Core i7-8750H processor and 16 GB of RAM. After acquisition and amplification, the EEG signal was transmitted to this laptop. We used OpenViBE (Renard et al., 2010) and MATLAB R2018b for data collection, signal processing, and building the user interface. After P300 detection, the participant's intention was converted into a control command and sent to the execution module over TCP. The execution module mainly consisted of a UR5 robot (Universal Robots) that performed the preset movements and a 3D-printed end effector with a gasbag that assisted the user in doing tasks by driving the user's upper limb. As shown in Figure 4, we preset four tasks involving the shoulder and elbow muscle groups: shoulder flexion (Figure 4A), elbow flexion (Figure 4B), orange grasping (Figure 4C), and book turning (Figure 4D), each triggered by focusing on the corresponding character. Because up to 36 movements can be preset, the assist robot can support a wide range of tasks.

Figure 4. Four preset tasks of the upper-limb assist robot. (A) Shoulder flexion; (B) elbow flexion; (C) orange grasping; (D) book turning.

Safety requires particular attention, and the safety mechanism of our assist robot covered several aspects. An emergency stop button on the control panel allowed the operator to stop the robot at any time. In addition, the force limit of the UR5 was set to 80 N, so if an unexpected movement occurs, the robot stops automatically on meeting even the small resistance generated by the user, without harming the user. Moreover, the preset movements were recorded manually for each new user according to that user's individual condition.

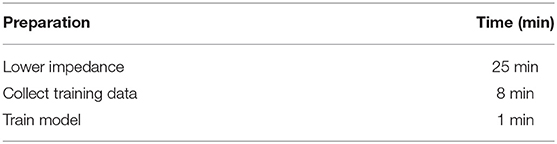

In practical use, thanks to the good performance of our control strategy, the impedance does not need to be reduced to an excessively low level, so an operator familiar with the process can finish preparation within a short time. Based on the results of the pre-experiment, we reduced the ISI to 75 ms, consisting of a flash duration of 50 ms and a no-flash duration of 25 ms, and reduced the inter-repetition delay to 0.25 s. This shortened the time needed to detect each character and made more efficient use of the P300 signal.

Extended Test

Having built a control system with an upper-limb assist robot, we hoped the control strategy could be applied in a wide range of scenarios. Therefore, we developed a novel mobile robot controlled by our HMI together with computer vision for further verification.

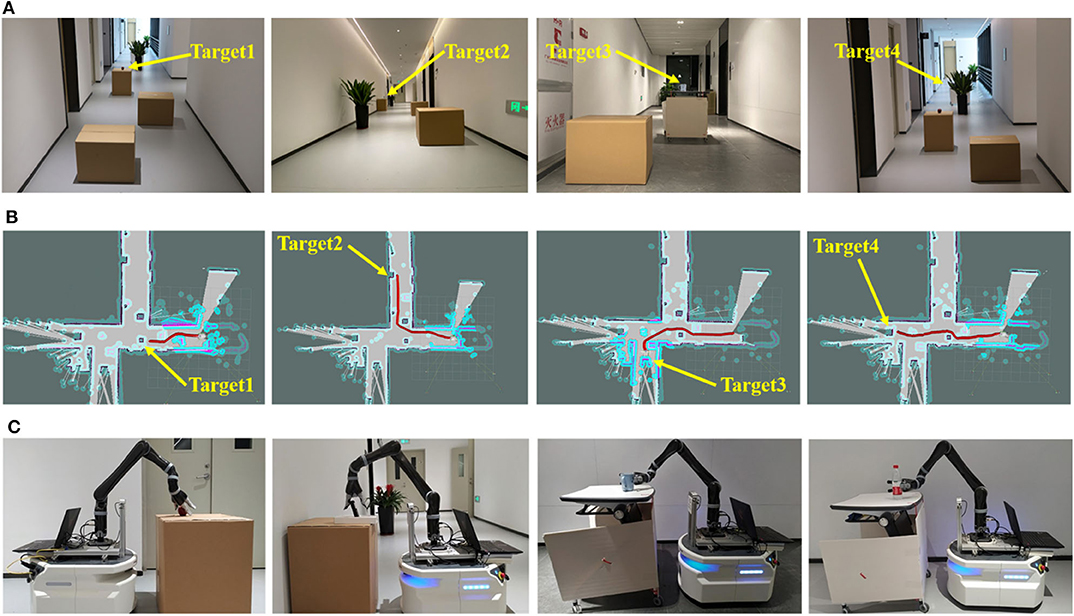

As in the previous section, the control system was used to obtain the user's intention and to control the mobile robot to go to another place, grasp an item, and return it to the user. The mobile robot shown in Figure 5 has three parts: a mobile platform with radar for navigation, a stereo camera (Stereolabs Inc.) for recognition, and a robot arm (Kinova Inc.) for grasping. As shown in Figure 6A, we selected an area in which an apple, a mobile phone, a cup, and a bottle were placed at four positions as the four targets to be grasped. Some cartons were placed randomly as obstacles.

Figure 6. Scenarios of four targets for mobile robot testing. (A) actual scene; (B) route planning; (C) grasp motion.

In actual use, we first built a map of the environment and assigned four characters to the four targets. When the control system detected the participant's intention, the command was transmitted wirelessly to the mobile robot. The mobile platform then used the A* algorithm to plan a global route that avoided the obstacles and reached the target (Figure 6B). Near the target, the stereo camera used a computer vision method (Redmon and Farhadi, 2017) to detect the target and calculate its three-dimensional coordinates, with which the robot arm grasped the target (Figure 6C). Finally, the mobile robot returned to the participant's position.
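The A* planner itself is not described in the paper. For readers unfamiliar with it, the sketch below shows grid-based A* on a hypothetical occupancy grid ("#" marks an obstacle such as a carton) with 4-connected unit-cost moves and a Manhattan-distance heuristic, which never overestimates the remaining cost and therefore yields a shortest path. The real platform planned on its own map, whose representation is not given.

```python
import heapq


def astar(grid, start, goal):
    """A* on a grid of strings ('#' = obstacle), 4-connected moves of cost 1.
    start, goal: (row, col). Returns the list of cells on a shortest path, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:      # walk back through the parents
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > g[node]:
            continue                     # stale entry: a cheaper route was found
        r, c = node
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = node
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None
```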

This part is an imperfect, exploratory attempt. We used it to further test the practicality and robustness of our control method, and the results suggest high application value. We do not describe the implementation in detail because the robot and computer vision technologies we used are quite mature.

Results

The 64-channel EEG data of eight healthy participants with no prior experience of P300 experiments were used to test our method. In each experiment, we collected data for 15 characters, 2,160 samples in total, for training and offline testing, followed by nine groups of 10 characters each for online testing. The linear LDA, the non-linear SVM, and the neural network MLP were compared in three experimental groups to identify an effective method for the HMI.

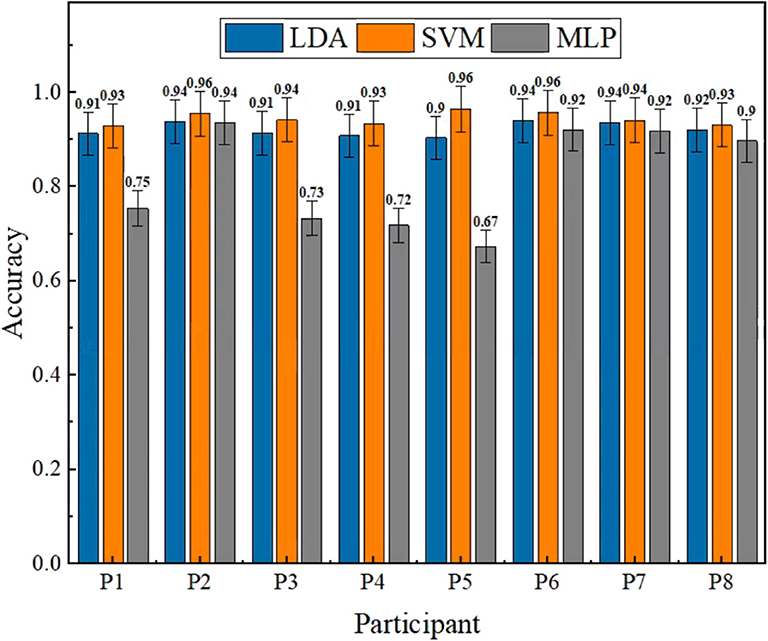

After the ISI and the number of repetitions were selected, accuracy was used as the primary criterion to guarantee the reliability of the entire system. The training results of the three methods for each participant are presented in Figure 7, with average accuracies and standard deviations of 92.15 ± 1.42, 94.43 ± 1.32, and 81.87 ± 10.9% for LDA, SVM, and MLP, respectively. LDA and SVM were consistently high and stable. The results of MLP were unstable and significantly lower than those of LDA (p < 0.05) and SVM (p < 0.05), although it achieved good accuracy in some cases.

Figure 7. Offline results of three methods in eight different participants. The average accuracies with standard deviations of LDA, SVM, and MLP are 92.15 ± 1.42, 94.43 ± 1.32, and 81.87 ± 10.9%, respectively. The results of MLP are significantly lower than LDA (p < 0.05) and SVM (p < 0.05).

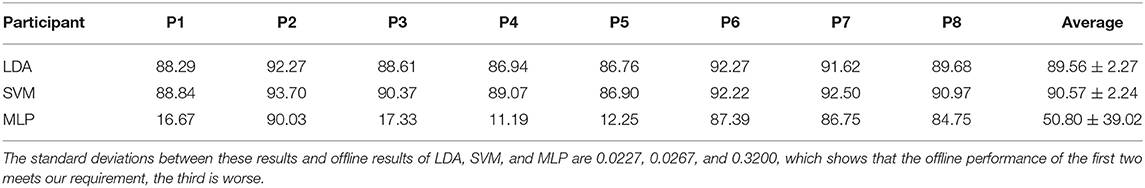

Five-fold cross-validation was used to check for overfitting and to confirm the validity of our offline test, because EEG is noisy and the limited amount of data we used could reduce the detection ability of the method. The data of each participant were randomly divided into five equal parts. Each part was used once as the test data with the other four as the training data, and the five results were combined into the final accuracy. As shown in Table 1, the cross-validation accuracies of LDA and SVM remain stable with low standard deviation. However, MLP still shows large differences between participants and sometimes performs rather poorly. We also used the standard deviation of the classification accuracy and the cross-validation accuracy to evaluate the performance of the three classifiers; the results for LDA, SVM, and MLP are 0.0227, 0.0267, and 0.3200, respectively. The first two meet our requirements, while the third is considerably worse.

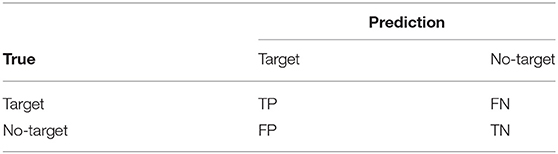

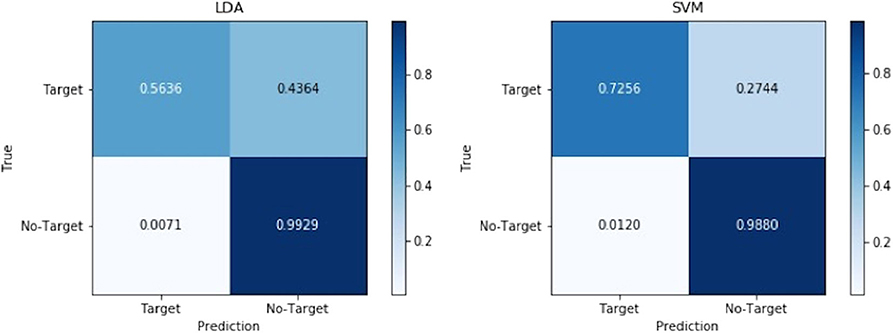

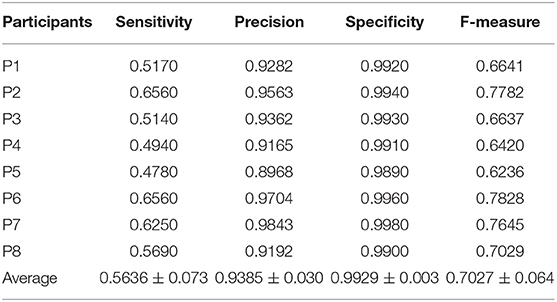

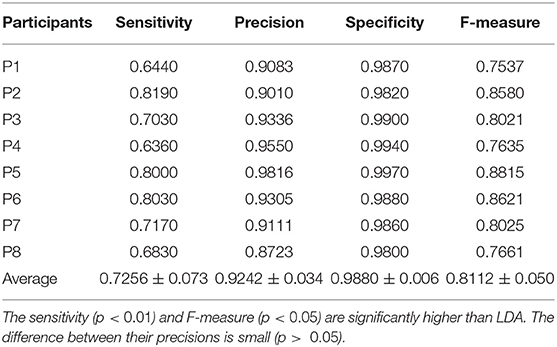

Then the confusion matrices shown in Table 2 were used to compare LDA and SVM and further confirm the reliability of the finalized control method. Signals from target stimuli are referred to as positive samples, and signals from no-target stimuli as negative samples. TP is the number of positive samples correctly predicted; FN is the number of positive samples incorrectly predicted as negative; FP is the number of negative samples incorrectly predicted as positive; and TN is the number of negative samples correctly predicted. For ease of inspection, the normalized confusion matrices reflecting the overall performance of LDA and SVM are given in Figure 8.

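We used four further criteria derived from the confusion matrix, namely sensitivity, precision, specificity, and F-measure, to evaluate the performance of the method:

$$\text{Sensitivity} = \frac{TP}{TP + FN},\qquad \text{Precision} = \frac{TP}{TP + FP},\qquad \text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{F-measure} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$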

Sensitivity and specificity measure the ability to predict positive and negative samples correctly, respectively. Precision is the proportion of samples predicted as positive that are actually positive. Sensitivity and precision can conflict, so we also use the F-measure, which combines both. The results of LDA and SVM for each participant are shown in Tables 3, 4, respectively. The sensitivity of SVM is significantly higher than that of LDA (p < 0.01). The difference in precision is small (p > 0.05), but the F-measure of SVM is clearly higher (p < 0.05). Both classifiers achieve very high specificity.
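A small sketch of how the four criteria follow from the confusion-matrix counts, with 1 = Target as the positive class. The counts in the example call are made up for illustration and are not results from this study; the sketch also assumes every denominator is non-zero.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return (TP, FN, FP, TN) with 1 = Target (positive) and 0 = No Target."""
    t, p = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((t == 1) & (p == 1)))
    fn = int(np.sum((t == 1) & (p == 0)))
    fp = int(np.sum((t == 0) & (p == 1)))
    tn = int(np.sum((t == 0) & (p == 0)))
    return tp, fn, fp, tn


def criteria(tp, fn, fp, tn):
    sensitivity = tp / (tp + fn)   # share of Target samples detected
    precision = tp / (tp + fp)     # share of predicted Targets that are real
    specificity = tn / (tn + fp)   # share of No Target samples correctly rejected
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "precision": precision,
            "specificity": specificity, "f_measure": f_measure}


# Made-up counts for illustration only (not results from the paper).
print(criteria(tp=300, fn=60, fp=90, tn=1710))
```

With these made-up counts the F-measure is exactly 0.8, between the precision (about 0.77) and the sensitivity (about 0.83); specificity stays high (0.95) because No Target samples dominate.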

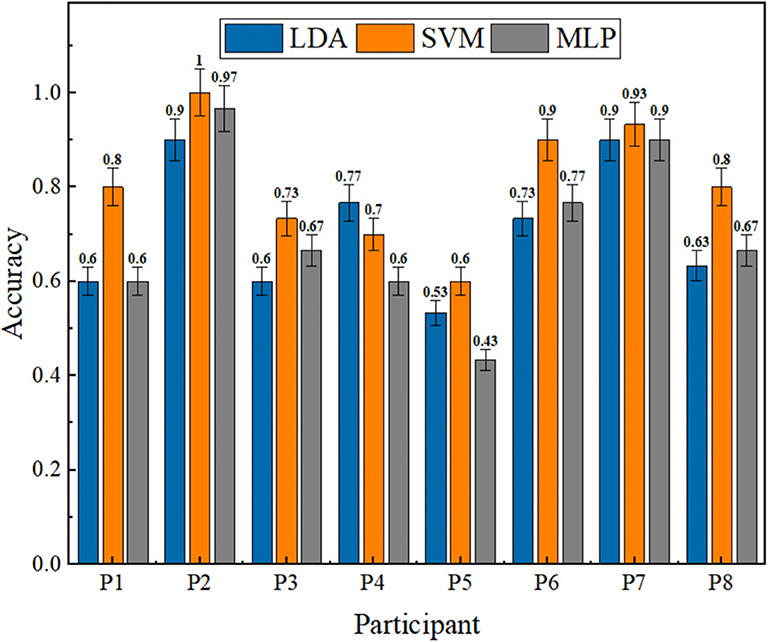

Online tests with 30 characters in three groups per classifier were also conducted for each participant to test the actual performance of the method. As shown in Figure 9, the accuracies of LDA, SVM, and MLP are 70.83±14.00, 80.83±13.18, and 70.00±17.28%, respectively. The online results are lower and less stable than the offline results, possibly because of differing external factors and changes in the participants' brain states during the task. The trends are similar to those of the offline test, with SVM showing clear superiority and robustness over LDA and MLP.

Figure 9. Online results of the three methods in eight different participants. The average accuracies with standard deviations of LDA, SVM, and MLP are 70.83±14.00, 80.83±13.18, and 70.00±17.28%.

Discussion

This paper aims to design a practical HMI that helps disabled people control an assist robot to perform daily movements or rehabilitation exercises on their own. An EEG-based method is proposed with an effective spatial filter followed by a robust P300 classifier to obtain the user's intention and control the robot.

Because the characteristics of the EEG signal are still not well understood, three representative classifiers were chosen: LDA and SVM, which are linear and non-linear, respectively, and MLP, which has neural network properties. The classifier parameters, determined in a pre-experiment, were kept the same across all experiments to ensure the generality of the method. In both offline and online results, LDA and SVM remained stable, but MLP varied widely across participants. Although neural networks have achieved excellent results in many classification tasks, they depend heavily on data size and hyperparameter fine-tuning. If the structure is simple, the network may not capture all the features; if it is too complicated, it may take too much time and still suffer from individual specificity, which is unacceptable in this control scenario. The metrics derived from the confusion matrix further confirm that the overall performance of SVM is preferable.

Our finalized method achieved an offline accuracy of 94.43% and an online accuracy of 80.83%. In addition, the extended test with the mobile robot reached an online accuracy of 81.67%. The reliability and stability of our method make it more likely to be used in practice. Another valuable result is the very high specificity: our control system rarely detects an intention when the user has none, which could otherwise cause sudden movement of the assist robot. This property greatly improves the safety of users.

For the practical application of EEG in rehabilitation, time consumption is a problem that cannot be ignored. As shown in Table 5, the experiment requires only approximately half an hour of preparation, which is very convenient for users, especially people with mobility impairments and their caregivers. Moreover, the ITR, which is based on the information theory introduced by Shannon (1948), is used to quantitatively evaluate efficiency and speed. This metric, commonly used for evaluating control systems, is defined as:

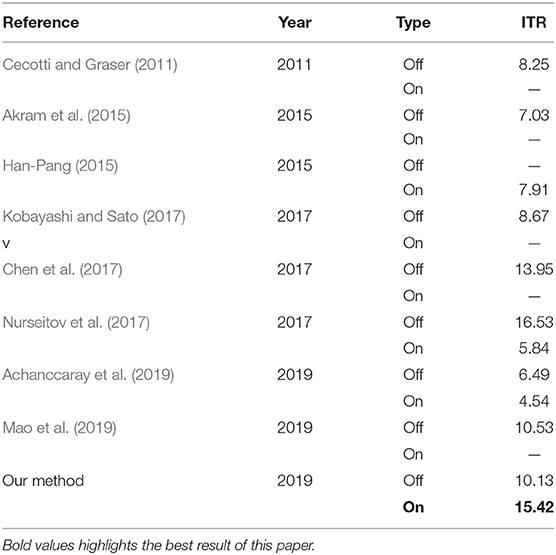

where P is the probability of correctly recognizing a command (character), N is the number of classes, and T is the time needed to detect a command. Table 6 compares previous research using the P300 signal with our method. Here, N is 36, which allows our method to meet practical needs for versatility. In this case, however, balancing accuracy against detection time becomes difficult. To ensure stability and safety, the ISI is set to 150 ms during training to help participants adapt more easily, and to 75 ms during online testing and actual use for faster speed. The comparison shows that although the offline performance is only average because of the conservative setting of T, the online performance has a significant advantage. The method proposed by Cecotti et al. uses a massive dataset to train a convolutional neural network with great potential (Cecotti and Graser, 2011), but, as our experiments suggest, such networks may be seriously unstable in online use.
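A sketch of the ITR computation, assuming the standard BCI form: bits per selection B = log2 N + P log2 P + (1 - P) log2((1 - P)/(N - 1)), and ITR = B × 60 / T in bits/min. The timing model for T (all 144 flashes at the 75 ms ISI plus eleven 0.25 s pauses between repetitions, giving 13.55 s) is our inference, not stated in the text; with P = 0.8083 and N = 36 it reproduces the reported online ITR of about 15.42 bits/min.

```python
import math


def bits_per_selection(p, n):
    """Bits conveyed per selection for accuracy p among n equally likely classes."""
    bits = math.log2(n)
    if p > 0:
        bits += p * math.log2(p)
    if p < 1:
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits


def itr_bits_per_min(p, n, t_seconds):
    """Information transfer rate in bits/min for one selection every t_seconds."""
    return bits_per_selection(p, n) * 60.0 / t_seconds


def selection_time(reps=12, flashes_per_rep=12, isi=0.075, inter_rep=0.25):
    # Assumed timing model: all flashes plus (reps - 1) inter-repetition pauses.
    return reps * flashes_per_rep * isi + (reps - 1) * inter_rep


T = selection_time()                              # 13.55 s under the assumption
print(round(itr_bits_per_min(0.8083, 36, T), 2))  # 15.42
```

At chance level (P = 1/36) the formula gives 0 bits, and at P = 1 it gives log2 36 ≈ 5.17 bits per selection, so accuracy and T trade off directly in the ITR.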

Our method shows significant improvement over several notable P300-based studies, including studies not limited to assist robots. The experimental tests, statistical inference, and comparison with other methods show that this work provides not only a high-performance method but also a practical, easy-to-use system for the target groups.

There are still some limitations. Some parameters of the method are not examined in detail here, because they were selected through a series of pilot experiments as the values giving the best overall performance among those we considered. In addition, the number of repetitions used to detect a character was set to a moderate value so that the method would be robust for any user, which made T somewhat long. Moreover, owing to the inherent characteristics of the P300, the ITR is lower than that of some SSVEP-based methods. Nevertheless, SSVEP is less acceptable in some application scenarios, particularly those that do not need to be very fast but require greater stability and comfort. Another shortcoming is that we did not compare our method in depth with recent high-performing P300 detection methods based on deep learning (Ditthapron et al., 2019). However, we believe that our method, built on classical machine learning, also performs well and has a lower computational cost, which makes it easier for developers to implement in practice. In future work, we will explore deep learning to improve our online control system.
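The trade-off between the number of repetitions and T described above can be sketched for a row/column P300 speller. This sketch assumes a hypothetical 6 × 6 matrix (36 items, matching N = 36) in which each repetition flashes the 6 rows and 6 columns once, and a classifier (for example LDA, SVM, or MLP) outputs one score per flash. Averaging scores over more repetitions suppresses background EEG noise but lengthens T linearly. The names `select_character` and `selection_time`, and the simple timing model based on the stimulus onset asynchrony (SOA), are illustrative assumptions, not this study's implementation.

```python
import numpy as np


def select_character(scores, n_rows=6, n_cols=6):
    """Return (row, col) of the attended cell in a hypothetical row/column speller.

    scores: shape (n_repetitions, n_rows + n_cols), one classifier score per
    flash (row flashes first, then column flashes); higher = more P300-like.
    """
    scores = np.asarray(scores, dtype=float)
    if scores.ndim != 2 or scores.shape[1] != n_rows + n_cols:
        raise ValueError("expected shape (n_repetitions, n_rows + n_cols)")
    # Averaging over repetitions reduces the influence of background EEG.
    mean_scores = scores.mean(axis=0)
    row = int(np.argmax(mean_scores[:n_rows]))
    col = int(np.argmax(mean_scores[n_rows:]))
    return row, col


def selection_time(n_repetitions, n_flashes, soa_s, pause_s=0.0):
    """Hypothetical timing model: T grows linearly with repetitions.

    soa_s: stimulus onset asynchrony in seconds (flash duration + ISI).
    pause_s: any fixed gap between consecutive selections.
    """
    return n_repetitions * n_flashes * soa_s + pause_s


# Usage with simulated scores; the target at row 2, column 4 is hypothetical.
rng = np.random.default_rng(0)
scores = rng.normal(0.0, 1.0, size=(5, 12))
scores[:, 2] += 2.0       # row 2 flashes evoke a P300
scores[:, 6 + 4] += 2.0   # column 4 flashes evoke a P300
print(select_character(scores))
print(selection_time(5, 12, 0.25))  # -> 15.0 (seconds for 5 repetitions)
```

Fewer repetitions shorten T and can raise the ITR, but each average then rests on fewer noisy epochs, so accuracy tends to drop; a moderate repetition count trades some speed for robustness across users.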

Conclusions

In this paper, a practical EEG-based human-machine interface is proposed for the online control of assist robots by disabled people. We developed a high-performance method that uses the P300 component to detect the user's intentions for control. Offline and online tests showed that the method achieves good accuracy and ITR and is effective and practical enough for real-life use. Moreover, based on this control method, we developed an upper-limb assist robot that helps users perform activities such as grasping and turning the pages of a book; it includes safety measures and a user-friendly interactive program, and it offers a useful reference for future work. In addition, a novel mobile robot controlled by our method combined with computer vision demonstrates the method's robustness and generalizability. In future work, we will consider testing the upper-limb assist robot with stroke patients performing therapeutic exercises.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

This study was carried out in accordance with the recommendations of the SCUT Research Ethics Guidelines and Researcher's Handbook of the Ethics Board of the Medical School, South China University of Technology. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was reviewed and approved by the Ethics Board of the Medical School, South China University of Technology.

Author Contributions

YS, SC, and LX contributed to the conception and design of the study. YS, LY, and GL carried out the experiments, data processing, and assist robot design. YS wrote the first draft of the manuscript. SC, LY, GL, WW, and LX wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported in part by the National Natural Science Foundation of China (Grant No. 51575188), National Key R&D Program of China (Grant No. 2018YFB1306201), Research Foundation of Guangdong Province (Grant Nos. 2016A030313492 and 2019A050505001), and Guangzhou Research Foundation (Grant No. 201903010028).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Achanccaray, D., Chau, J. M., Pirca, J., Sepulveda, F., and Hayashibe, M. (2019). “Assistive robot arm controlled by a P300-based brain machine interface for daily activities,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER) (San Francisco, CA: IEEE), 1171–1174.

Akram, F., Han, S. M., and Kim, T. S. (2015). An efficient word typing P300-BCI system using a modified T9 interface and random forest classifier. Comput. Biol. Med. 56, 30–36. doi: 10.1016/j.compbiomed.2014.10.021

Alia, C., Spalletti, C., Lai, S., Panarese, A., Lamola, G., Bertolucci, F., et al. (2017). Neuroplastic changes following brain ischemia and their contribution to stroke recovery: novel approaches in neurorehabilitation. Front. Cell. Neurosci. 11:76. doi: 10.3389/fncel.2017.00076

Ang, K. K., Chua, K. S. G., Phua, K. S., Wang, C., Chin, Z. Y., Kuah, C. W. K., et al. (2015). A randomized controlled trial of EEG-based motor imagery brain-computer interface robotic rehabilitation for stroke. Clin. EEG Neurosci. 46, 310–320. doi: 10.1177/1550059414522229

Arican, M., and Polat, K. (2019). Pairwise and variance based signal compression algorithm (PVBSC) in the P300 based speller systems using EEG signals. Comput. Methods Programs Biomed. 176, 149–157. doi: 10.1016/j.cmpb.2019.05.011

Belagaje, S. R. (2017). Stroke rehabilitation. Contin. Lifelong Learn. Neurol. 23, 238–253. doi: 10.1212/CON.0000000000000423

Bhattacharyya, S., Konar, A., and Tibarewala, D. N. (2014). Motor imagery, P300 and error-related EEG-based robot arm movement control for rehabilitation purpose. Med. Biol. Eng. Comput. 52, 1007–1017. doi: 10.1007/s11517-014-1204-4

Cecotti, H., and Graser, A. (2011). Convolutional neural networks for P300 detection with application to brain-computer interfaces. IEEE Trans. Pattern Anal. Mach. Intell. 33, 433–445. doi: 10.1109/TPAMI.2010.125

Cervera, M. A., Soekadar, S. R., Ushiba, J., Millán, J. del R., Liu, M., et al. (2018). Brain-computer interfaces for post-stroke motor rehabilitation: a meta-analysis. Ann. Clin. Transl. Neurol. 5, 651–663. doi: 10.1002/acn3.544

Chaudhary, U., Birbaumer, N., and Ramos-Murguialday, A. (2016). Brain-computer interfaces for communication and rehabilitation. Nat. Rev. Neurol. 12, 513–525. doi: 10.1038/nrneurol.2016.113

Chen, X., Zhao, B., Wang, Y., and Gao, X. (2019). Combination of high-frequency SSVEP-based BCI and computer vision for controlling a robotic arm. J. Neural Eng. 16:026012. doi: 10.1088/1741-2552/aaf594

Chen, Y., Ke, Y., Meng, G., Jiang, J., Qi, H., Jiao, X., et al. (2017). Enhancing performance of P300-Speller under mental workload by incorporating dual-task data during classifier training. Comput. Methods Programs Biomed. 152, 35–43. doi: 10.1016/j.cmpb.2017.09.002

Chowdhury, A. H., Chowdhury, R. N., Khan, S. U., Ghose, S. K., Wazib, A., Alam, I., et al. (2015). Sensitivity and specificity of electroencephalography (EEG) among patients referred to an electrophysiology lab in Bangladesh. J. Dhaka Med. Coll. 23, 215–222. doi: 10.3329/jdmc.v23i2.25394

Dahm, S. F., and Rieger, M. (2016). Cognitive constraints on motor imagery. Psychol. Res. 80, 235–247. doi: 10.1007/s00426-015-0656-y

de Arancibia, L., Sánchez-González, P., Gómez, E. J., Hernando, M. E., and Oropesa, I. (2020). Linear vs Nonlinear Classification of Social Joint Attention in Autism Using VR P300-based Brain Computer Interfaces. Coimbra: Springer International Publishing, 1869–1874. doi: 10.1007/978-3-030-31635-8_227

Ditthapron, A., Banluesombatkul, N., Ketrat, S., Chuangsuwanich, E., and Wilaiprasitporn, T. (2019). Universal joint feature extraction for P300 EEG classification using multi-task autoencoder. IEEE Access 7, 68415–68428. doi: 10.1109/ACCESS.2019.2919143

Dobkin, B. H. (2004). Strategies for stroke rehabilitation. Lancet Neurol. 3, 528–536. doi: 10.1016/S1474-4422(04)00851-8

Duszyk, A., Bierzynska, M., Radzikowska, Z., Milanowski, P., Kuś, R., Suffczynski, P., et al. (2014). Towards an optimization of stimulus parameters for brain-computer interfaces based on steady state visual evoked potentials. PLoS ONE 9:e112099. doi: 10.1371/journal.pone.0112099

Duvinage, M., Castermans, T., Jimenez-Fabian, R., Hoellinger, T., De Saedeleer, C., Petieau, M., et al. (2012). “A five-state P300-based foot lifter orthosis: proof of concept,” in 2012 ISSNIP Biosignals and Biorobotics Conference: Biosignals and Robotics for Better and Safer Living (BRC) (Manaus: IEEE), 1–6. doi: 10.1109/BRC.2012.6222193

Farwell, L. A., and Donchin, E. (1988). Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523. doi: 10.1016/0013-4694(88)90149-6

Ferree, T. C., Luu, P., Russell, G. S., and Tucker, D. M. (2001). Scalp electrode impedance, infection risk, and EEG data quality. Clin. Neurophysiol. 112, 536–544. doi: 10.1016/S1388-2457(00)00533-2

Gao, X., Xu, D., Cheng, M., and Gao, S. (2003). A BCI-based environmental controller for the motion-disabled. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 137–140. doi: 10.1109/TNSRE.2003.814449

Han-Pang, H. (2015). Development of a P300 brain–machine interface and design of an elastic mechanism for a rehabilitation robot. Int. J. Autom. Smart Technol. 5, 91–100. doi: 10.5875/ausmt.v5i2.518

Hong, K.-S., and Khan, M. J. (2017). Hybrid brain-computer interface techniques for improved classification accuracy and increased number of commands: a review. Front. Neurorobot. 11:35. doi: 10.3389/fnbot.2017.00035

Kobayashi, N., and Sato, K. (2017). “P300-based control for assistive robot for habitat,” in 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE) (Las Vegas, NV: IEEE), 1–5. doi: 10.1109/GCCE.2017.8229318

Kundu, S., and Ari, S. (2018). P300 detection with brain–computer interface application using PCA and ensemble of weighted SVMs. IETE J. Res. 64, 406–414. doi: 10.1080/03772063.2017.1355271

Kundu, S., and Ari, S. (2020). MsCNN: a deep learning framework for P300-based brain–computer interface speller. IEEE Trans. Med. Robot. Bionics 2, 86–93. doi: 10.1109/TMRB.2019.2959559

Lecun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Lu, Z., Li, Q., Gao, N., Yang, J., and Bai, O. (2019). A novel audiovisual P300-speller paradigm based on cross-modal spatial and semantic congruence. Front. Neurosci. 13:1040. doi: 10.3389/fnins.2019.01040

Mao, X., Li, W., Lei, C., Jin, J., Duan, F., and Chen, S. (2019). A brain–robot interaction system by fusing human and machine intelligence. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 533–542. doi: 10.1109/TNSRE.2019.2897323

Meng, J., Zhang, S., Bekyo, A., Olsoe, J., Baxter, B., and He, B. (2016). Noninvasive electroencephalogram based control of a robotic arm for reach and grasp tasks. Sci. Rep. 6:38565. doi: 10.1038/srep38565

Mitzdorf, U. (1985). Current source-density method and application in cat cerebral cortex: investigation of evoked potentials and EEG phenomena. Physiol. Rev. 65, 37–100. doi: 10.1152/physrev.1985.65.1.37

Monge-Pereira, E., Ibañez-Pereda, J., Alguacil-Diego, I. M., Serrano, J. I., Spottorno-Rubio, M. P., and Molina-Rueda, F. (2017). Use of electroencephalography brain-computer interface systems as a rehabilitative approach for upper limb function after a stroke: a systematic review. PM&R 9, 918–932. doi: 10.1016/j.pmrj.2017.04.016

Noctor, S. C., Flint, A. C., Weissman, T. A., Dammerman, R. S., and Kriegstein, A. R. (2001). Neurons derived from radial glial cells establish radial units in neocortex. Nature 409, 714–720. doi: 10.1038/35055553

Norcia, A. M., Gregory Appelbaum, L., Ales, J. M., Cottereau, B. R., and Rossion, B. (2015). The steady-state visual evoked potential in vision research: a review. J. Vis. 15:4. doi: 10.1167/15.6.4

Nurseitov, D., Serekov, A., Shintemirov, A., and Abibullaev, B. (2017). “Design and evaluation of a P300-ERP based BCI system for real-time control of a mobile robot,” in 5th International Winter Conference on Brain-Computer Interface, BCI (IEEE), 115–120. doi: 10.1109/IWW-BCI.2017.7858177

Picton, T. W. (1992). The P300 wave of the human event-related potential. J. Clin. Neurophysiol. 9, 456–479. doi: 10.1097/00004691-199210000-00002

Picton, T. W., Bentin, S., Berg, P., Donchin, E., Hillyard, S. A., Johnson, R., et al. (2000). Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology 37, 127–152. doi: 10.1111/1469-8986.3720127

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Prasad, G., Herman, P., Coyle, D., McDonough, S., and Crosbie, J. (2010). Applying a brain-computer interface to support motor imagery practice in people with stroke for upper limb recovery: a feasibility study. J. Neuroeng. Rehabil. 7:60. doi: 10.1186/1743-0003-7-60

Raksha, N., Srihari, S., Joshi, S. P., and Krupa, B. N. (2018). “Stepwise and quadratic discriminant analysis of P300 signals for controlling a robot,” in 2018 International Conference on Networking, Embedded and Wireless Systems, ICNEWS 2018 - Proceedings (Karnataka: IEEE), 1–4.

Ramele, R., Villar, A. J., and Santos, J. M. (2019). Histogram of gradient orientations of signal plots applied to P300 detection. Front. Comput. Neurosci. 13:43. doi: 10.3389/fncom.2019.00043

Redmon, J., and Farhadi, A. (2017). “YOLO9000: better, faster, stronger,” in Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017 (Honolulu, HI: IEEE), 6517–6525.

Renard, Y., Lotte, F., Gibert, G., Congedo, M., Maby, E., Delannoy, V., et al. (2010). OpenViBE: An open-source software platform to design, test, and use brain-computer interfaces in real and virtual environments. Presence Teleoperators Virtual Environ. 19, 35–53. doi: 10.1162/pres.19.1.35

Rivet, B., Souloumiac, A., Attina, V., and Gibert, G. (2009). xDAWN algorithm to enhance evoked potentials: application to brain-computer interface. IEEE Trans. Biomed. Eng. 56, 2035–2043. doi: 10.1109/TBME.2009.2012869

Sams, M., Paavilainen, P., Alho, K., and Näätänen, R. (1985). Auditory frequency discrimination and event-related potentials. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials 62, 437–448. doi: 10.1016/0168-5597(85)90054-1

Schomer, D. L., and da Silva, F. H. L. (2012). Niedermeyer's Electroencephalography: Basic Principles, Clinical Applications, and Related Fields: 6th Edn. Oxford: Oxford University Press.

Shannon, C. E. (1948). A mathematical theory of communication. Bell Syst. Tech. J. 27, 379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x

Tal, O., and Friedman, D. (2019). Recurrent Neural Networks for P300-based BCI. Available Online at: http://arxiv.org/abs/1901.10798 (accessed January 30, 2019).

Tariq, M., Trivailo, P. M., and Simic, M. (2018). EEG-based BCI control schemes for lower-limb assistive-robots. Front. Hum. Neurosci. 12:312. doi: 10.3389/fnhum.2018.00312

Velasco-Álvarez, F., Sancha-Ros, S., García-Garaluz, E., Fernández-Rodríguez, Á., Medina-Juliá, M. T., and Ron-Angevin, R. (2019). UMA-BCI speller: an easily configurable P300 speller tool for end users. Comput. Methods Programs Biomed. 172, 127–138. doi: 10.1016/j.cmpb.2019.02.015

Vialatte, F. B., Maurice, M., Dauwels, J., and Cichocki, A. (2010). Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog. Neurobiol. 90, 418–438. doi: 10.1016/j.pneurobio.2009.11.005

Wang, C., Kok, S. P., Kai, K. A., Guan, C., Zhang, H., Lin, R., et al. (2009). “A feasibility study of non-invasive motor-imagery BCI-based robotic rehabilitation for stroke patients,” in 2009 4th International IEEE/EMBS Conference on Neural Engineering, NER '09 (Antalya), 271–274.

Xu, Y., Ding, C., Shu, X., Gui, K., Bezsudnova, Y., Sheng, X., et al. (2019). Shared control of a robotic arm using non-invasive brain–computer interface and computer vision guidance. Rob. Auton. Syst. 115, 121–129. doi: 10.1016/j.robot.2019.02.014

Keywords: EEG, human-machine interface, assist robot, online control, practicability

Citation: Song Y, Cai S, Yang L, Li G, Wu W and Xie L (2020) A Practical EEG-Based Human-Machine Interface to Online Control an Upper-Limb Assist Robot. Front. Neurorobot. 14:32. doi: 10.3389/fnbot.2020.00032

Received: 25 February 2020; Accepted: 06 May 2020;

Published: 10 July 2020.

Edited by:

Francesca Cordella, Campus Bio-Medico University, Italy

Reviewed by:

Theerawit Wilaiprasitporn, Vidyasirimedhi Institute of Science and Technology, Thailand

Giacinto Barresi, Italian Institute of Technology (IIT), Italy

Copyright © 2020 Song, Cai, Yang, Li, Wu and Xie. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Longhan Xie, melhxie@scut.edu.cn
