Abstract

Knowledge on the spatio-temporal distribution of invasive plant species is vital to maintain biodiversity in grasslands which are threatened by the invasion of such plants and to evaluate the effect of control activities conducted. Manual digitising of aerial images with field verification is the standard method to create maps of the invasive Lupinus polyphyllus Lindl. (Lupine) in semi-natural grasslands of the UNESCO biosphere reserve “Rhön”. As the standard method is labour-intensive, a workflow was developed to map lupine coverage using an unmanned aerial vehicle (UAV)-borne remote sensing (RS) along with object-based image analysis (OBIA). UAV-borne red, green, blue and thermal imaging, as well as photogrammetric canopy height modelling (CHM) were applied. Images were segmented by unsupervised parameter optimisation into image objects representing lupine plants and grass vegetation. Image objects obtained were classified using random forest classification modelling based on objects’ attributes. The classification model was employed to create lupine distribution maps of test areas, and predicted data were compared with manually digitised lupine coverage maps. The classification models yielded a mean prediction accuracy of 89%. The maximum difference in lupine area between classified and digitised lupine maps was 5%. Moreover, the pixel-wise map comparison showed that 88% of all pixels matched between classified and digitised maps. Our results indicated that lupine coverage mapping using UAV-borne RS data and OBIA provides similar results as the standard manual digitising method and, thus, offers a valuable tool to map invasive lupine on grasslands.

Zusammenfassung

Kartierung der invasiven Lupinus Polyphyllus Lindl. auf naturnahem Grünland mit UAV-gestützten Bildern und objektbasierter Bildanalyse. Die Kenntnis der raum-zeitlichen Verteilung invasiver Pflanzenarten ist unerlässlich a) für die Exhaltung der Artenvielfalt in Grünland, das durch das Eindringen solcher Pflanzen bedroht ist, und b) für die Kontrolle der Wirksamkeit von Gegenmaßnahmen. Die manuelle Digitalisierung von Luftbildern mit Feldvergleich ist bisher die Standardmethode dardmethode zur Erstellung von Karten der invasiven Lupinus polyphyllus Lindl. (Lupine) in naturnahen Magerrasen des UNESCO-Biosphärenreservats "Rhön". Da dieses Verfahren arbeitsaufwändig ist, wurde eine neue Methode zur Kartierung der Verbreitung der Lupine auf Basis von UAV-gestützter Fernerkundung (RS) und objektbasierter Bildanalyse (OBIA) entwickelt. Dabei kommen rgb-Bilder, Thermalbilder und Oberflächenmodelle der Vegetation zum Einsatz. Die Bilder wurden durch eine parameteroptimierte, unüberwachte Segmentierung so zerlegt, dass die Segmente die Objektklassen „Lupine“ und „Gras“ repräsentieren. Die Segmente wurden dann anhand der Objektattribute mit einer Random-Forest- Klassifizierung einzeln den Klassen „Lupine“ und „Gras“ zugeordnet. Eine aus dem Klassifikationsmodell abgeleitete Karte zur Lupinenverteilung wurde mit einer manuell digitalisierten Karte der Lupinenverteilung verglichen. Dabei zeigte sich eine mittlere Vorhersagegenauigkeit (prediction accuracy) von 89 %. Der größte Unterschied zwischen klassifizierter und digitalisierter Lupinenfläche betrug 5%. Darüber hinaus zeigte der pixelweise Vergleich, dass 88% aller klassifizierten Pixel mit den digitalisierten Pixeln übereinstimmten. Unsere Ergebnisse zeigen, dass die Kartierung der Lupinenverteilung mit UAV-basierten RS-Daten und OBIA ähnliche Ergebnisse liefert wie die bisherige manuelle Digitalisierung und damit ein wertvolles Werkzeug zur Kartierung invasiver Lupinen im Grünland darstellt.

Similar content being viewed by others

1 Introduction

Biological invasion is threatening to biodiversity in many ecosystems in the world. The invasion by alien plant species is considered as one of the significant drivers for loss of biodiversity and ecosystem functionality. The leading cause for the introduction of alien plant species is human activities. After a new plant species is introduced to the ecosystem, depending on the adaptation capability of the plant, it obtains a naturalisation status which is the ability to self-sustain without human involvement (Pyšek and Richardson 2011). At present, there are 3749 naturalised alien plant species in Europe, and 37.4% out of them occur in grassland habitats (Lambdon et al. 2008).

Processes and dynamics of invasion of plant species are complex (Courchamp et al. 2017). However, knowledge on the spatial, temporal distribution of invasive plant species in a given habitat is critical to understand invasion pattern (Müllerová et al. 2017), and maps showing invasive plants’ distribution are helpful for effective control activities. Aerial image digitising verified with field survey is the standard method to create invasive plant species distribution maps. Such maps are time-consuming and labour-intensive to produce due to aerial image acquisition, manual digitising, and field verification steps.

Classification of remotely sensed images to map invasive species is a well-adopted technology for many invasive species in different parts of the world and various ecosystems (Royimani et al. 2018). In grasslands, invasive woody (Mirik et al. 2013) and shrubby (Laliberte et al. 2004; Ishii and Washitani 2013) species mapping has shown excellent results with satellite and airborne image classification. Meanwhile, using an unmanned aerial vehicle (UAV)-borne remotely sensed (RS) imaging was increasingly applied for invasive species mapping in the recent years in flood plains and coastal regions (de Sá et al. 2018; Martin et al. 2018; Abeysinghe et al. 2019; Jianhui et al. 2019). Cost-effectiveness, high spatial and temporal resolution and the increasing availability of various miniature sensors (e.g. RGB cameras, spectral sensors, thermal cameras) are the main advantages of the UAV-borne imaging compared to satellite RS data (Michez et al. 2016). Apart from the spectral or thermal information, UAV-borne RS data can also provide 3D point cloud data, which can be employed to derive canopy height models (CHM) for grassland canopies (Grüner et al. 2019; Wijesingha et al. 2019).

UAV-borne RS images are very high spatial resolution images (less than 50 cm), where one object in the real world is represented by many pixels. Object-based image analysis (OBIA) can better be employed when pixels are considerably smaller than the object to be identified (Blaschke 2010). Several studies indicated that OBIA provides substantial advantages for mapping invasive plant species based on UAV-borne RS data (Michez et al. 2016; Martin et al. 2018; Abeysinghe et al. 2019). Typical OBIA employs rules derived from the object’s attributes to classify an object, which requires expert knowledge and limits the transferability of the classification model (Belgiu et al. 2014). Contrary, machine learning classification algorithms (e.g. random forest, support vector machine) have shown their capability in OBIA procedures, as they do not require prior knowledge of the objects (Grippa et al. 2017).

Lupinus polyphyllus Lindl. (hereafter referred to as lupine) is on the list of the most 150 widespread alien plant species in Europe (Lambdon et al. 2008). The lupine plant is about 50–150 cm tall and contains 1–2 cm broad leaflets. Lupine flowers are up to 80 cm long in a single terminal, and they are in shades of blue, pink and white (Fremstad 2010). Lupine is native to the western parts of North America and has been recorded in many habitats in Europe, such as grasslands in Germany, Lithuania and at road verges as well as in ruderal areas in Scandinavia (Fremstad 2010). The UNESCO biosphere reserve Rhön in Germany covers a total area of 2433 km2 and mainly consists of low mountain semi-natural grasslands (e.g. NATURA habitat types 6520—mountain hay meadows, and 6230—species-rich Nardus grasslands) (Biosphärenreservat Rhön 2019). In the last few decades, lupine invaded significant parts of the grasslands in the biosphere reserve and substantially changed the habitat functionality mainly through the ability to fix atmospheric nitrogen, which transforms the low-growing, open and species-rich grasslands into dense and productive, but species-poor dominance stands (Otte and Maul 2005; Klinger et al. 2019). Individual lupine plants are controlled manually at early stages of invasion to prevent a massive invasion by lupine, and grassland mowing is allowed after 1st July to maintain a broad diversity of insects and ground breeding birds (Biosphärenreservat Rhön 2019).

The knowledge of the spatial distribution of lupine in the grasslands is vital to conduct control activities and to monitor their efficacy. The first lupine distribution map of the region was created in 1998 using manually digitised aerial photographs at a 1:2.500 scale (Otte and Maul 2005). The latest map of the spatial distribution was generated in 2016 using 20 cm digital ortho-mosaics from aerial photographs (Klinger et al. 2019). Those maps were useful to monitor changes in the lupine distribution in the long term. The interval between the two maps of 18 years is partly due to the time- and labour-demanding processes involved. Therefore, a repeatable, transferable methodology is needed, that produces lupine distribution maps at different spatial and temporal scales to monitor the lupine distribution and to assess the benefit of control activities.

According to Skowronek et al. (2018), RS-based mapping of the spatial distribution of Lupine was not successfully implemented until now. In order to fill this gap, we propose an approach to map invasive lupine in grassland, which is based on UAV-borne RS data and OBIA. We hypothesise that the proposed procedure could categorise lupine and non-lupine vegetation in grasslands with the same precision as the standard digitising method. This study presents an operational workflow to create maps of lupine cover and compares lupine distribution maps from the developed workflow with manually digitised lupine coverage maps.

2 Materials and Methods

2.1 Study Area

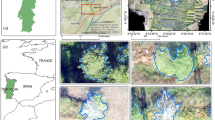

The study was carried out in two grassland fields at UNESCO biosphere reserve Rhön in Germany, which were invaded by lupine (Figs. 1a, 1b, 2). One field was classified as a former mountain hay meadow (hereafter referred to as G1), and the other was an old Nardus stricta grassland (hereafter referred to as G2). In both fields, rectangle plots of 1500 m2 (50 m by 30 m) were chosen as study areas, and 15 small plots of 64m2 (8 m by 8 m) were established within a grid (Fig. 1c, 1d). Three cutting dates (12th June, 26th June, 09th July, hereafter referred to as D1, D2, and D3, respectively) were randomly assigned to 5 replicated plots (Fig. 1c, 1d). At each date, plots were mowed at a stubble height of 5 cm, and biomass was removed from the field.

2.2 Data Collection

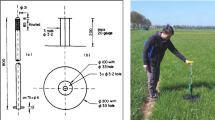

At each sampling date in each grassland field, UAV-borne images were acquired. A DJI-Phantom IV quadcopter (DJI, China) with an inbuilt off-the-shelf camera (FC330) was employed to obtain UAV-borne RGB images. The camera (FC330) captures a 12-megapixel image in red (R), green (G), and blue (B) bands. The UAV was flown at 20 m flying height, and it resulted in 0.009 m ground sampling distance. The UAV flight mission was designed using Pix4D capture app for Android (App version 4.4.0, Pix4D, Switzerland). The UAV was flown as double grid mission (two perpendicular missions), and the camera was triggered automatically to capture nadir-looking images based on the image overlap configuration (80% both forward and side overlap). All the flight sessions were conducted between 12:00 and 14:00. Before each flight session, nine black and white 1 m2 ground control points were distributed over the study area. Just after the UAV flights, the position of each ground control point was measured using a Leica RTK GNSS (Leica Geosystems GmbH, Germany) with 2 cm 3D coordinate precision. Additional UAV-borne RGB image was taken on 16 August 2019, when the whole fields were mowed.

A FLIR Vue Pro R (FLIR Systems Incorporation, USA) thermal camera was attached to the UAV parallel to the RGB camera. The camera has a 19 mm lens, and it has a spectral sensitivity between 7500 and 13,500 nm. With a single UAV flight, both thermal and RGB images were captured simultaneously. The thermal camera captures images as a radiometric JPEG which contains radiometrically calibrated temperature data. The thermal image has 640 by 512 pixels (FLIR 2016). The thermal camera was triggered every second throughout the whole UAV mission. Before each thermal data collection, metadata related to the thermal camera were collected using the FLIR UAS 2 app (App version 2.2.4, FLIR Systems Incorporation, USA), such as distance to the target (20 m), relative humidity, air temperature, and emissivity (0.98). All the metadata were saved in each captured image’s EXIF data.

A total of six UAV-borne RGB and six thermal datasets were collected. Hereafter, each dataset is labelled according to cutting date and grassland type (DiGj: where i = 1, 2, 3 and j = 1, 2). In each dataset, maturity stages of grasslands were different due to mowing activities in 64 m2 small plots. Maturity stage was lowest (V0) in the D1 dataset and was the same for all 30 small plots. At the 2nd cutting date (D2), 20 small plots out of 30 were covered by 2 weeks older vegetation (V2 weeks), while 10 small plots (which were cut at D1) had vegetation which was regrown for 2 weeks (VR2 weeks). The D3 dataset was composed of 10 plots with undisturbed vegetation (V4 weeks) which was 4 weeks older than V0, 10 plots of VR2weeks, and further 10 plots with vegetation regrown for 4 weeks (VR4 weeks) after D1.

2.3 Object-Based Image Analysis

2.3.1 Canopy Height Model and Point Density

Each collected dataset was processed separately, and the same procedure was applied, as explained below. The UAV-borne RGB images and coordinates of ground control points were processed with the Agisoft PhotoScan Professional version 1.4.4 software (Agisoft LLC, Russia). The software applied structure from motion (SFM) technique to align multi-view overlapping images and to build a dense 3D point cloud. The procedure of point cloud generation and canopy height computation was adopted from Wijesingha et al. (2019), and further details of the process can be found there.

The point density (PD) raster was created from the dense point cloud by binning into a raster with 2 cm. The PD raster contained point count under a cell area (4 cm2). The digital terrain model (reference plane) was generated using the August RGB images with a cell size raster of 5 cm. The z values of 3D point cloud and the digital terrain model based on x, y locations were subtracted to generate a point cloud with canopy height. The point cloud with canopy height was binned into the 2 cm cell size raster, where each cell contained mean canopy height value and hereafter it was considered as canopy height model (CHM) raster.

2.3.2 RGB Ortho-Mosaic

RGB ortho-mosaic was obtained after further processing of the dense point cloud in PhotoScan software. The output RGB ortho-mosaic was geo-referenced with a 1 cm spatial resolution. The RGB ortho-mosaic was converted into hue (H), intensity (I), and saturation (S) colour model using GRASS GIS and hereafter it was considered as HIS ortho-mosaic (Gonzalez and Woods 2008; GRASS Development Team 2017).

2.3.3 Thermal Digital Ortho-mosaic

The single JPEG thermal image contained 8-bit digital numbers. Following workflow and equations were adapted from Turner et al. (2017) to convert digital numbers to temperature values. The conversion workflow was conducted with EXIFtools and R programming language (Phil Harvey 2016; Dunnington and Harvey 2019; R Core Team 2019). A raw thermal TIFF image was exported from the JPEG image. Metadata of image were extracted from the JPEG EXIF header for each image. Based on the metadata and raw TIFF image values, the image with temperature was computed and exported as a TIFF file. The exported TIFF image contained a calibrated temperature value in degree Celsius (°C). Like RGB ortho-mosaic generation, thermal ortho-mosaic with 2 cm spatial resolution was generated using calibrated thermal images.

2.3.4 Spectral Shape Index and Texture Images

A spectral shape index (SSI) (Eq. 1) based on RGB image values was computed, and it showed excellent results for isolation of shadows within the vegetation (Chen et al. 2009). Moreover, two texture features (second-order statistics texture namely angular second moment (ASM)—uniformity, and inverse difference moment (IDM)—homogeneity) (Haralick 1979) from both intensity image and thermal image were computed.

where R, G, and B are red, green, and blue values, respectively.

2.3.5 Segmentation

Segmentation and classification are the two main steps in OBIA (Silver et al. 2019). The segmentation is the first step and by definition “it divides an image or any raster or point data into spatially continuous, disjoint and homogeneous regions, referred to as segments or image objects” (Blaschke et al. 2014). The quality of segmentation depends on the balance between intersegment homogeneity and heterogeneity (Espindola et al. 2006). Variance and spatial autocorrelation (Moran’s I) between segments are utilised as measures to evaluate intersegment homogeneity and heterogeneity, respectively. The segmentation threshold (also referred to as scale) can control the balance between intersegment variance and spatial autocorrelation. Therefore, finding the optimum threshold is essential to produce segments which are matching to the real-world objects (Espindola et al. 2006). Johnson et al. (2015) established an F measure to identify the quality of the segmentation result for a given threshold value. The F measure is based on variance and spatial autocorrelation and calculated using Eq. 2 (Johnson et al. 2015). A weight value (a) must be defined in the F measure, with 0.5 is half weighting, and 2 is double weighting. The higher the F measure, the higher the quality of the segmentation.

where MInorm is the normalised Moran’s I value, Vnorm is the normalised variance value, a is the alpha weight, and F is the F measure.

Espindola et al. (2006) introduced the Unsupervised Parameter Optimisation (USPO) procedure to identify the optimum threshold value for the given image from a range of threshold values based on one of the quality measures mentioned above. The USPO procedure was implemented as an add-on tool called i.segment.uspo in GRASS GIS software (Lennert and GRASS Development Team 2019a). The CHM raster, PD raster and hue image from HIS ortho-mosaic were used in the segmentation process (Fig. 3). According to Georganos et al. (2018), finding the optimum threshold values for different local image regions gives superior results compared to the use of a single threshold for the whole image. Hence, the image was divided into sixteen small zones (15 zones overlapping with the 64 m2 plots for each study plot and one zone for the paths between the plots). Specific local threshold (ranging from 0.01 to 0.15) was determined for each region based on the F measure. The alpha value in the F measure calculation was kept at 0.5. Python Jupyter Notebook codes from Grippa (2018) were adopted and modified according to this study for automating the segmentation process using i.segment.uspo for each zone. The segmentation procedure was applied separately for each study plot and sampling date. A total of six different segmented rasters were created according to six datasets.

2.3.6 Attribute Calculation for Segmented Image Objects

The segmented raster was vectorised, and each segmented object was created as a polygon. Four geometric attributes [area (A), perimeter (P), fractional dimension (FD) (Eq. 3), and circle compactness (CC) (Eq. 4)] for the segmented objects were calculated. Based on all raster data (RGB image, HIS image, CHM raster, PD raster, thermal image, SSI image, and texture raster), the mean and standard deviation values for each polygon were computed as image-based attributes. Attribute calculation was done using i. segment.stats add-on in GRASS GIS (Lennert and GRASS Development Team 2019b). In total, 32 attributes (4 geometric and 28 image-based) were generated (Table 1).

2.3.7 Classification Model

Ten percent of the segmented objects (3698 out of a total of 81,704 objects) were manually labelled as either lupine (L) or non-lupine (NL) based on visual observation using the RGB ortho-mosaics. In each dataset, the number of L and NL labels was very similar (L = 1892 and NL = 1806), and labelled objects were spatially randomised. The labelled objects with attributes were utilised to develop a supervised classification model.

Classification model training and testing were conducted using R statistical software (R Core Team 2019). The random forest (RF) machine learning classification algorithm was employed to build a classification model using the mlr package in R software (Bischl et al. 2016). The RF has proven its efficiency for image–object classification using objects’ attribute data (Belgiu and Drăgu 2016). The RF algorithm utilises both decision trees and bagging (Breiman 2001). The decision trees are created from a subset of the training samples with replacement (known as bagging). Based on the average outcome from the decision trees, the sample is assigned to a majority class (Belgiu and Drăgu 2016).

A total of six RF classification models were built, and in each model, five datasets were employed to train the model, while the remaining dataset was used to test the model (Table 2). All the attributes (32) were employed as an input of the model alone with objects’ labels. Two hyperparameters, namely mtry (number of selected variables in each split) and node size (number of observations in a terminal node) (Probst et al. 2019) were tuned in the model training phase using random search. The model was trained with repeated spatial cross-validation resampling (five-folds and two repeats) to classify objects. The spatial cross-validation reduces the effect of spatial autocorrelation for model accuracy (Brenning 2012). Under the spatial cross-validation, the resampling is done based on the location of the observations. The location was based on the centroid of the objects. The trained model employed to predict objects’ labels of the holdout dataset. According to predicted labels and actual labels, the model performance was evaluated by calculating overall accuracy (OA), true-positive-rate (TPR), and false-positive-rate (FPR) (Eqs. 5, 6, and 7, respectively).

where TP is true positive, TN is true negative, FP is false positive, and FN is false negative.

2.4 Lupine Coverage Mapping

A single RF classification model (Mall) was trained using all labelled objects from the six datasets. Based on predicted labels from Mall, a lupine coverage map was generated (hereafter referred to as classification-based lupine coverage map). Additionally, the importance of the objects’ attributes to the Mall classification model was assessed based on the mean decrease Gini value. It is based on “the total decrease in node impurities from splitting on the variable, averaged over all trees” (Liaw and Wiener 2002). The higher mean decreased value indicated higher importance of the particular feature in the RF model.

A reference lupine coverage map for each dataset was created by digitising each RGB ortho-mosaic and was compared to the lupine area from the classification-based lupine coverage map. A relative number of no-difference pixels from two maps were computed as a measure for map accuracy (MA) (Eq. 8). Additionally, the pixel-wise correlation coefficient (PCC) was calculated. Each 64 m2 plot was divided into four equal areas of 16m2 each, and the relationship between relative digitised lupine area (LA) and MA of subdivided plots was analysed to understand the MA at different levels of LA.

3 Results

3.1 Image Segmentation

Ortho-mosaics from RGB and thermal camera images were created in this study as well as SSI, and hue images were computed from the RGB ortho-mosaic. RGB images were processed with SFM technique to generate CHM raster and PD raster. Exemplary images and raster from D1 of G1 are shown in Fig. 4.

The CHM raster, PD raster, and hue image were utilised to create image objects. The optimum threshold values for different image regions were determined using USPO. As shown in Fig. 5, three image regions with different vegetation maturity obtained distinct optimum threshold values. Example of segmented image is also shown in Fig. 6.

Course of (a) variance, (b) spatial autocorrelation, and (c) F measure values for different threshold values in three different image regions where V4weeks: vegetation four weeks older than 12th June vegetation, VR4weeks: regrown vegetation 4 weeks after mowing, and VR2weeks: regrown vegetation 2 weeks after mowing

3.2 Classification Model Training and Testing

Six classification models were trained while holding out one dataset at each time. The model results are summarised in Table 3. Based on the all performance measures in model testing phase, model M12 (model tested with D1G2 data) obtained the lowest performances (OA = 78.2%) and model M32 (model tested with D3G2 data) achieved the highest values (OA = 97.2%). Although model M12 accurately classified all lupine objects (100% TPR), it also categorised nearly half of the non-lupine objects as lupine objects (47.3% FPR). Additionally, models that tested with D1 data (M11 and M12) obtained slightly lower performances compared to other models.

3.3 Final Classification Model and Important Attributes

After testing six classification models with the different spatial–temporal dataset, the complete classification model (Mall) was trained using all available data (3698 objects) with spatial cross-validation. The Mall model achieved 94.2% training accuracy.

The variable importance of the Mall model based on mean decrease Gini value is shown in Fig. 7. Attributes related to CHM, SSI and object’s geometry (area and perimeter) were the top most essential attributes in the model. Texture features from both thermal and intensity images were the least essential prediction variables to the Mall model.

Important object’s attributes for the Mall classification model (complete model) based on mean decrease Gini values. (FD fractional dimension, CC circle compactness, SD: standard deviation, SSI spectral shape index, ASM angular second moment, IDM inverse difference moment, CHM canopy height model, PD point density)

3.4 Lupine Coverage Maps

Based on visual observation between digitised lupine map and classified lupine map, both maps showed similar visual representation. Figure 8 illustrates lupine coverage maps from both digitising and classification for three sampling dates (D1, D2, D3) in G1 field. However, the area-based comparison showed maximum ± 5% of the change in total lupine coverage (Table 4).

The classification-based lupine coverage was assessed against reference lupine coverage map using comparing pixels in two raster maps. From comparison results, map accuracy relative to the reference map and PCC was computed (Table 4). According to raster comparison results, D1 dataset obtained the lowest MA (80.4%, 80.9%) and PCC (0.40, 0.50) values in G1 and G2 fields, respectively. The MA and PCC values tended to increase with reducing lupine coverage. However, comparison results for G2 dataset always indicated slightly better results than G1.

Relationship between the relative LA and MA indicated a negative exponential trend (Fig. 9). The correlation coefficient between relative LA and MA was −0.88, and trend line had 0.80 goodness of fit. Regardless of the vegetation maturity, the explained relationship was valid. Until LA reached 25%, it showed a strong relationship with MA, but over 25% LA, the MA values were scattered around the regression curve.

4 Discussion

Invasion by lupine endangers biodiversity and ecosystem functionality (Otte and Maul 2005; Klinger et al. 2019). The spatial and temporal distribution of lupine is essential to understand the invasive pattern, to plan appropriate management strategies and to monitor the impact of control actions. While RS was utilised to map several invasive plant species (e.g. Impatiens glandulifera, Spartina anglica, Solidago canadensis), invasion patterns of lupine were not examined until now (Skowronek et al. 2018). This study aimed to develop an operational workflow to map the spatial distribution of invasive lupine in grasslands using UAV-borne imageries and OBIA.

OBIA has shown its effectiveness to work with very high-resolution (< 1 m spatial resolution) images, where several pixels represent one object rather than classifying each pixel separately. While OBIA allowed taking advantage of RGB images only, a pixel-based classification approach would have demanded spectral signatures. Segmentation is the critical step in the OBIA. The first step of the proposed workflow was to segment collected UAV-borne images into image objects that represent either lupine or non-lupine (i.e. mainly grass) plants. USPO based area-specific threshold values benefitted for obtaining good object delineation (Fig. 5). However, USPO for a multitude of image areas leads to an increased computational time corresponding to the size of the areas and the spatial resolution of the images (Georganos et al. 2018).

Apart from optimal threshold identification, different combinations of various raster types were tested (data not shown). Visual assessment of the segments obtained suggested that the combined raster with CHM, PD and hue data provided the best segmentation results. This is comprehensible, as the canopy height of lupine plants is usually taller than the surrounding grass vegetation (Otte and Maul 2005), also resulting in higher point densities at the edges of the lupine plant than in the plants’ centres. Therefore, CHM and PD raster data significantly contributed to the delineation of objects, and the contour-like pattern can be seen from segmented raster (Fig. 6). CHM data have been utilised recently as classification variable for invasive species mapping (Jones et al. 2011; Lehmann et al. 2017), but this is the first study where CHM data were employed to delineate objects in invasive species mapping. Additionally, hue data derived from RGB images characterised the degree of pureness of the colour compared to the primary colours (Gonzalez and Woods, 2008) also assisted in defining object boundaries.

Random forest classification models with 32 attributes as predictors were trained and tested based on different datasets. The model M12 obtained the highest TPR but got the lowest FPR and tended to over-classify non-lupine objects as lupine objects. As the M12 model was tested with datasets containing only young lupine plants, small objects that were not lupine may have been overestimated. This may also be true for the M11 model, which had a similarly low accuracy compared to the other models. Overall, the performance of the six models showed high model stability and robustness across time and space, which indicate that the models could be transferred easily to other grassland sites of varying maturity. As demonstrated with previous studies (Belgiu and Drăgu 2016), our results confirmed that RF modelling creates robust algorithms to use object classification in OBIA for vegetation mapping.

Several attributes related to plant structure or architecture as well as colour were essential predictors in the Mall model. The height difference between lupine plants and grass vegetation contributed to the classification of segmented objects. It points at a prominent advantage of UAV-borne RS, which allows the separation of two plant types by CHM attributes. This was also proven by other studies that utilised CHM from UAV-borne RS data to map invasive species mapping (i.e. Phragmites australis in estuaries by Abeysinghe et al. (2019) and Fallopia spp. in floodplains by Martin et al. (2018)).

Segment’s area and perimeter were further vital geometric features in the final classification model, whereas fractional dimensions and circle compactness were not useful. A closer look at the segmented objects shows that area (average values were 0.04 m2 and 0.17 m2 for L and NL objects, respectively) and perimeter (average values were 1.6 m and 3.5 m for L and NL objects, respectively) of lupine objects were substantially smaller compared to non-lupine objects, irrespective of the lupine coverage (Fig. 10). The automated process segmented lupine objects always in relatively small areas even when large parts of the area were covered by lupine. Our findings confirm results from other studies, where area and perimeter were essential variables in discrimination models, e.g. species mapping in arid areas as demonstrated by Silver et al. (2019).

Distribution of the values of the six most significant predictors in the classification model for Lupine (L), and non-Lupine (NL) category. (a) mean canopy height model values, (b) standard deviation values of spectral shape index, (c) area values, (d) standard deviation values of canopy height model, (e) perimeter values, and (f) mean hue values

To reduce the computation complexity, only two texture parameters (angular second moment, inverse difference moment) were computed out of many existing parameters. Surprisingly, all four texture attributes (intensity-based and thermal-based) were ineffective in our study. Previous studies (Chabot et al. 2018; Silver et al. 2019) with OBIA have proven that textural information was useful. However, they utilised many more texture attributes than employed in our study. Hence, the accuracy of our models might be increased by including further texture parameters.

Corresponding to the intense green colour of lupine leaves and pronounced red and blue shades of flowers, SSI values computed from RGB intensities indicated higher values in leafy areas and lower values in regions, where flowering lupines dominated. Consequently, an increased cover of lupine plants at different maturity stages resulted in increased standard deviations of SSI, due to a broader distribution of the SSI values (Fig. 10). This may explain why the variation of standard deviation values among objects supported the categorisation of lupine and non-lupine objects.

As lupine plants contain higher water contents compared to grasses (Hensgen and Wachendorf 2016), lupine-containing areas in thermal images showed lower temperatures compared to the surrounding grass area (Fig. 4b). Additionally, the bushy structure of lupine plants creates shaded areas in the surrounding, which may have further decreased temperature. Surprisingly, temperature-related attributes (temperature or texture attributes from thermal image) did not become significant predictors in the classification models. Evidently, other predictors were of superior relevance than the temperature attributes. However, this leads to reduced costs for sensors and platforms as well as model complexity and computing time.

As relative LA increased, the MA of the lupine coverage maps that generated from our workflow decreased (Fig. 9). The negative relationship was valid for both undisturbed vegetation of different maturity and regrown vegetation after mowing. With an increasing lupine area, the classification procedure tends to over-estimate lupine coverage due to difficulty to separate lupine and grass vegetation. In general, early detection of invasive plant species and rapid action are critical to control invasive species (Cock and Wittenberg 2001). Similarly, for lupine management activities, ecologists prefer to act in regions with lower lupine coverage, as at this stage of invasion, eradication and containment are easier than in high lupine coverage regions. Since maps with lower lupine coverage were accurate, ecologists can identify regions with relatively small lupine coverage and precisely locate single lupine plants for eradication.

Though it could be shown that the proposed method performed as well as the standard digitising method, it may be criticised that vegetation mapping based on UAV-borne RS data is challenging to scale up (e.g. Chabot et al. 2018). In this study, one UAV flight took 20 min (including ground preparation and flight time) to collect data of approximately 0.4 hectares (without thermal sensor). The conduction of additional flight sessions, as well as expected advance in sensor and platform technology, may lead to an increased data acquisition area in the future. The proposed method can be considered cost-efficient, as it only requires a standard UAV-mounted RGB camera, and as most of the utilised software is free and open-source (GRASS GIS, R, QGIS), except the Agisoft PhotoScan software, which could be replaced with available free software namely open drone map (Open Drone Map 2019).

Our proposed classification approach can easily be applied in other comparable environments, as the model was trained with heterogeneous datasets from commonly occurring grassland vegetation at different stages of maturity. The spatial lupine coverage maps that were created can be utilised (i) to identify the distribution of lupine in grasslands, (ii) to estimate the size and degree of lupine invasion by comparing maps generated in different years, and (iii) to evaluate the effectiveness of lupine control. As suggested by Kattenborn et al. (2019), UAV-borne lupine coverage maps can further be employed for the creation of field samples to train and test satellite image-based models for invasive lupine mapping in larger areas.

5 Conclusion

Gaining knowledge on the spatio-temporal distribution of lupine is vital to maintain biodiversity in grasslands which are threatened by the invasion of this plant. We successfully developed a workflow that can accurately map lupine coverage in a grassland using UAV-borne RS and OBIA. In our proposed workflow, we developed a robust RF classification model that can classify lupine and non-lupine image objects. The resulting maps showed a ± 5% discrepancy in the lupine area compared to the standard digitising method. Moreover, the classification model can be transferred to other regions, and thereby overcomes limitations of the standard way of lupine mapping. Finally, the developed procedure can be adopted for mapping other invasive species.

References

Abeysinghe T, Milas AS, Arend K et al (2019) Mapping invasive Phragmites australis in the old woman creek estuary using UAV remote sensing and machine learning classifiers. Remote Sens 11:23. https://doi.org/10.3390/rs11111380

Belgiu M, Drăgu L (2016) Random forest in remote sensing: a review of applications and future directions. ISPRS J Photogramm Remote Sens 114:24–31. https://doi.org/10.1016/j.isprsjprs.2016.01.011

Belgiu M, Drǎguţ L, Strobl J (2014) Quantitative evaluation of variations in rule-based classifications of land cover in urban neighbourhoods using WorldView-2 imagery. ISPRS J Photogramm Remote Sens 87:205–215. https://doi.org/10.1016/j.isprsjprs.2013.11.007

Biosphärenreservat Rhön (2019) Biosphärenreservat Rhön. https://biosphaerenreservat-rhoen.de/. Accessed 8 Oct 2019

Bischl B, Lang M, Kotthoff L et al (2016) Mlr: machine learning in R. J Mach Learn Res 17:1–5

Blaschke T (2010) Object based image analysis for remote sensing. ISPRS J Photogramm Remote Sens 65:2–16. https://doi.org/10.1016/j.isprsjprs.2009.06.004

Blaschke T, Hay GJ, Kelly M et al (2014) Geographic object-based image analysis—towards a new paradigm. ISPRS J Photogramm Remote Sens 87:180–191. https://doi.org/10.1016/j.isprsjprs.2013.09.014

Breiman L (2001) Random forests. Mach Learn 45:5–32

Brenning A (2012) Spatial cross-validation and bootstrap for the assessment of prediction rules in remote sensing: the R package sperrorest. Int Geosci Remote Sens Symp 5372–5375. https://doi.org/10.1109/IGARSS.2012.6352393

Chabot D, Dillon C, Shemrock A et al (2018) An object-based image analysis workflow for monitoring shallow-water aquatic vegetation in multispectral drone imagery. ISPRS Int J Geo-Information 7:1–15. https://doi.org/10.3390/ijgi7080294

Chen Y, Su W, Li J, Sun Z (2009) Hierarchical object oriented classification using very high resolution imagery and LIDAR data over urban areas. Adv Sp Res 43:1101–1110. https://doi.org/10.1016/j.asr.2008.11.008

Cock MJW, Wittenberg R (2001) Early detection. In: Cock MJW, Wittenberg R (eds) Invasive alien species: a toolkit of best prevention and management practices. CAB International, Wallingford, pp 101–123

Courchamp F, Fournier A, Bellard C et al (2017) Invasion biology: specific problems and possible solutions. Trends Ecol Evol 32:13–22. https://doi.org/10.1016/j.tree.2016.11.001

de Sá NC, Castro P, Carvalho S et al (2018) Mapping the flowering of an invasive plant using unmanned aerial vehicles: Is there potential for biocontrol monitoring? Front Plant Sci 9:1–13. https://doi.org/10.3389/fpls.2018.00293

Dunnington D, Harvey P (2019) exifr: EXIF Image Data in R

Espindola GM, Camara G, Reis IA et al (2006) Parameter selection for region-growing image segmentation algorithms using spatial autocorrelation. Int J Remote Sens 27:3035–3040. https://doi.org/10.1080/01431160600617194

FLIR (2016) FLIR Vue Pro and Vue Pro R User Guide

Fremstad E (2010) NOBANIS—Invasive alien species fact sheet. In: Online Database Eur. Netw. Invasive Alien Species—NOBANIS. https://www.nobanis.org/. Accessed 7 Oct 2019

Georganos S, Grippa T, Lennert M, et al (2018) Scale matters: Spatially partitioned unsupervised segmentation parameter optimisation for large and heterogeneous satellite images. Remote Sens 10:. https://doi.org/10.3390/rs10091440

Gonzalez RC, Woods RE (2008) Color image processing. In: Gonzalez RC, Woods RE (eds) Digital image processing, Third Edit. Pearson Education, New Jersy, pp 401–414

GRASS Development Team (2017) Geographic resources analysis support system (GRASS GIS) software. Version 7:2

Grippa T (2018) Opensource OBIA processing chain

Grippa T, Lennert M, Beaumont B et al (2017) An open-source semi-automated processing chain for urban object-based classification. Remote Sens 9:1–20. https://doi.org/10.3390/rs9040358

Grüner E, Astor T, Wachendorf M (2019) Biomass prediction of heterogeneous temperate grasslands using an SfM approach based on UAV imaging. Agronomy 9:54. https://doi.org/10.3390/agronomy9020054

Haralick RM (1979) Statistical and structural approaches to texture. Proc IEEE 67:786–804. https://doi.org/10.1109/PROC.1979.11328

Hensgen F, Wachendorf M (2016) The effect of the invasive plant species Lupinus polyphyllus Lindl. On energy recovery parameters of semi-natural grassland biomass. Sustain 8:1–14. https://doi.org/10.3390/su8100998

Ishii J, Washitani I (2013) Early detection of the invasive alien plant Solidago altissima in moist tall grassland using hyperspectral imagery. Int J Remote Sens 34:5926–5936. https://doi.org/10.1080/01431161.2013.799790

Jianhui L, Dingquan L, Gui Z, et al (2019) Study on extraction of foreign invasive species Mikania micrantha based on unmanned aerial vehicle (UAV) hyperspectral remote sensing. In: Fifth symposium on novel optoelectronic detection technology and application. p 53

Johnson BA, Bragais M, Endo I et al (2015) Image segmentation parameter optimisation considering within-and between-segment heterogeneity at multiple scale levels: Test case for mapping residential areas using landsat imagery. ISPRS Int J Geo-Information 4:2292–2305. https://doi.org/10.3390/ijgi4042292

Jones D, Pike S, Thomas M, Murphy D (2011) Object-based image analysis for detection of Japanese Knotweed s.l. taxa (polygonaceae) in Wales (UK). Remote Sens 3:319–342. https://doi.org/10.3390/rs3020319

Kattenborn T, Lopatin J, Förster M et al (2019) UAV data as alternative to field sampling to map woody invasive species based on combined Sentinel-1 and Sentinel-2 data. Remote Sens Environ 227:61–73. https://doi.org/10.1016/j.rse.2019.03.025

Klinger YP, Harvolk-scho S, Otte A, Ludewig K (2019) Applying landscape structure analysis to assess the spatio-temporal distribution of an invasive legume in the Rho UNESCO. Biosphere Reserve 21:2735–2749. https://doi.org/10.1007/s10530-019-02012-x

Laliberte AS, Rango A, Havstad KM et al (2004) Object-oriented image analysis for mapping shrub encroachment from 1937 to 2003 in southern New Mexico. Remote Sens Environ 93:198–210. https://doi.org/10.1016/j.rse.2004.07.011

Lambdon PW, Pyšek P, Basnou C et al (2008) Alien flora of Europe: species diversity, temporal trends, geographical patterns and research needs. Preslia 80:101–149

Lehmann JRKK, Prinz T, Ziller SR et al (2017) Open-source processing and analysis of aerial imagery acquired with a low-cost unmanned aerial system to support invasive plant management. Front Environ Sci 5:1–16. https://doi.org/10.3389/fenvs.2017.00044

Lennert M, GRASS Development Team (2019a) Addon i.segment.uspo. Geographic resources analysis support system (GRASS) software, version 7.6. https://grass.osgeo.org/grass76/manuals/addons/i.segment.uspo.html. Accessed 16 Oct 2019

Lennert M, GRASS Development Team (2019b) Addon i.segment.stats. Geographic resources analysis support system (GRASS) software, version 7.6. https://grass.osgeo.org/grass76/manuals/addons/i.segment.stats.html. Accessed 18 Oct 2019

Liaw A, Wiener M (2002) Classification and regression by randomForest. R J 2:18–22. https://doi.org/10.1177/154405910408300516

Martin FM, Müllerová J, Borgniet L et al (2018) Using single- and multi-date UAV and satellite imagery to accurately monitor invasive knotweed species. Remote Sens 10:1–15. https://doi.org/10.3390/rs10101662

Michez A, Piégay H, Jonathan L et al (2016) Mapping of riparian invasive species with supervised classification of unmanned aerial system ( UAS ) imagery. Int J Appl Earth Obs Geoinf 44:88–94. https://doi.org/10.1016/j.jag.2015.06.014

Mirik M, Chaudhuri S, Surber B et al (2013) Detection of two intermixed invasive woody species using color infrared aerial imagery and the support vector machine classifier. J Appl Remote Sens 7:073588. https://doi.org/10.1117/1.jrs.7.073588

Müllerová J, Brůna J, Bartaloš T et al (2017) Timing is important: Unmanned aircraft vs. Satellite imagery in plant invasion monitoring. Front Plant Sci 8:1–13. https://doi.org/10.3389/fpls.2017.00887

Open Drone Map (2019) Open Drone Map—Open Source Toolkit for processing Civilian Drone Imagery

Otte A, Maul P (2005) Verbreitungsschwerpunkte und strukturelle Einnischung der Stauden-Lupine (Lupinus polyphyllus Lindl.) in Bergwiesen der Rhön. Tuexenia 25:151–182

Phil Harvey (2016) ExifTool

Probst P, Wright MN, Boulesteix AL (2019) Hyperparameters and tuning strategies for random forest. Wiley Interdiscip Rev Data Min Knowl Discov 9:1–19. https://doi.org/10.1002/widm.1301

Pyšek P, Richardson DM (2011) Invasive plants. Ecol Eng 2011:2011–2020

R Core Team (2019) R: A Language and environment for statistical computing

Royimani L, Mutanga O, Odindi J et al (2018) Advancements in satellite remote sensing for mapping and monitoring of alien invasive plant species (AIPs). Phys Chem Earth. https://doi.org/10.1016/j.pce.2018.12.004

Silver M, Tiwari A, Karnieli A (2019) Identifying vegetation in arid regions using object-based image analysis with RGB-only aerial imagery. Remote Sens 11:1–26. https://doi.org/10.3390/rs11192308

Skowronek S, Stenzel S, Feilhauer H (2018) Detecting invasive species from above—How can we make use of remote sensing data to map invasive plant species in Germany? Natur und Landschaft 434–438

Turner D, Lucieer A, Parkes S (2017) Thermal Infrared remote sensing with a FLIR Vue Pro-R. In: UAS4RS 2017. Tasmania

Wijesingha J, Moeckel T, Hensgen F, Wachendorf M (2019) Evaluation of 3D point cloud-based models for the prediction of grassland biomass. Int J Appl Earth Obs Geoinf 78:352–359. https://doi.org/10.1016/J.JAG.2018.10.006

Acknowledgement

Open Access funding provided by Projekt DEAL. This research was supported by the German Federal Environmental Foundation (Deutsche Bundesstiftung Umwelt—DBU). The authors would like to thank Frank Hensgen, Felix Voll, and Oliver Becker for their help in the field data collection, and to Estefania Duque Perez for helping with image digitising. We are also grateful to the government of Bavaria for permission to conduct our measurements in a nature conservation area.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wijesingha, J., Astor, T., Schulze-Brüninghoff, D. et al. Mapping Invasive Lupinus polyphyllus Lindl. in Semi-natural Grasslands Using Object-Based Image Analysis of UAV-borne Images. PFG 88, 391–406 (2020). https://doi.org/10.1007/s41064-020-00121-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41064-020-00121-0