Abstract

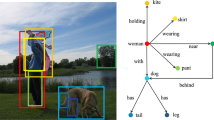

Relations between objects drive our understanding of images. Modelling them poses several challenges due to the combinatorial nature of the problem and the complex structure of natural language. This paper tackles the task of predicting relationships in the form of (subject, relation, object) triplets from still images. To address these challenges, we propose a framework for learning relation prototypes that aims to capture the complex nature of relation distributions. Concurrently, a network is trained to define a space in which relationship triplets with similar spatial layouts, interacting objects and relations are clustered together. Finally, the network is compared to two models that explicitly tackle the problem of synonymy among relations. Two well-known scene-graph labelling benchmarks are used for training and testing: VRD and Visual Genome. Predicting relations based on distance to prototypes significantly increases the diversity of predicted relations, improving the average relation recall from 40.3% to 41.7% on VRD and from 31.3% to 35.4% on Visual Genome.
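To make the idea of "prediction based on distance to prototype" concrete, here is a minimal sketch under stated assumptions; it is not the authors' implementation. The abstract does not say how prototypes are built or which distance is used, so the sketch assumes that triplet embeddings have already been produced by the trained network, that each relation gets up to `k` prototypes obtained with plain k-means, and that distances are Euclidean. It also assumes "average relation recall" means recall averaged over relation classes. All function names (`kmeans`, `build_prototypes`, `predict`, `mean_per_relation_recall`) and the toy data are hypothetical.

```python
import math
import random
from collections import defaultdict


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of equal-length vectors.

    Assumption: k-means is used here only as an illustrative way to get
    several prototypes per relation.
    """
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, min(k, len(points)))]
    for _ in range(iters):
        clusters = [[] for _ in centers]
        for p in points:
            # Assign each point to its nearest current center.
            j = min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
            clusters[j].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the previous center if a cluster becomes empty
                centers[j] = [sum(xs) / len(members) for xs in zip(*members)]
    return centers


def build_prototypes(embeddings, labels, k=2):
    """Group embeddings by relation label and fit up to k prototypes per relation."""
    by_relation = defaultdict(list)
    for emb, rel in zip(embeddings, labels):
        by_relation[rel].append(emb)
    return {rel: kmeans(pts, k) for rel, pts in by_relation.items()}


def predict(embedding, prototypes):
    """Return the relation whose closest prototype is nearest to the embedding."""
    return min(
        prototypes,
        key=lambda rel: min(math.dist(embedding, p) for p in prototypes[rel]),
    )


def mean_per_relation_recall(y_true, y_pred):
    """Recall averaged over relation classes, so rare relations weigh as much as frequent ones."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        hits[t] += int(t == p)
    return sum(hits[r] / totals[r] for r in totals) / len(totals)


# Toy 2-D "triplet embeddings": "on" has two separate modes, "near" has one.
emb = [(0.0, 0.0), (0.2, 0.1), (10.0, 0.0), (10.1, 0.2), (5.0, 5.0), (5.2, 4.9)]
rel = ["on", "on", "on", "on", "near", "near"]
protos = build_prototypes(emb, rel, k=2)
print(predict((9.8, 0.1), protos))  # on
```

Using several prototypes per relation lets a multi-modal relation keep one cluster per mode; with `k=1` the single "on" prototype in this toy data would be the mean (5.075, 0.075), which lies between both modes. The class-averaged recall illustrates why diversity matters: a model that always predicts the frequent relation scores 0.5 on `["on", "on", "on", "near"]` even though its plain accuracy is 0.75.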






About this article

Cite this article

Plesse, F., Ginsca, A., Delezoide, B. et al. Modelling relations with prototypes for visual relation detection. Multimed Tools Appl 80, 22465–22486 (2021). https://doi.org/10.1007/s11042-020-09001-6


DOI: https://doi.org/10.1007/s11042-020-09001-6