Abstract

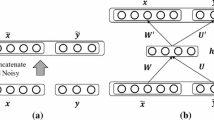

We introduce the hyper autoencoder architecture, in which a secondary network, the hypernetwork, generates the weights of the encoder and decoder layers of the primary autoencoder. The hyper autoencoder uses a one-layer linear hypernetwork that takes a single embedding vector as input and predicts all weights of the autoencoder. The hypernetwork is smaller and, as such, acts as a regularizer. Like the vanilla autoencoder, the hyper autoencoder can be used for unsupervised or semi-supervised learning. In this study, we also present a semi-supervised model that combines convolutional neural networks and autoencoders with the hypernetwork. Our experiments on five image datasets (MNIST, Fashion MNIST, LFW, STL-10 and CelebA) show that the hyper autoencoder performs well on both unsupervised and semi-supervised learning problems.
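The weight-generation idea described above can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the single hidden layer, the tanh activation, the layer sizes, the initialization, and all names (`init_hyper_autoencoder`, `generate_weights`, `reconstruct`) are assumptions chosen for illustration. The only elements taken from the abstract are that a one-layer linear hypernetwork maps one embedding vector to all weights of the autoencoder.

```python
import numpy as np

def init_hyper_autoencoder(n_in, n_hidden, n_embed, seed=0):
    """Create the trainable quantities: embedding z and linear hypernetwork (H, c).

    Sizes and initialization are illustrative assumptions, not the paper's setup.
    """
    rng = np.random.default_rng(seed)
    # Total number of autoencoder parameters the hypernetwork must emit:
    # encoder weights + encoder bias + decoder weights + decoder bias.
    n_weights = n_in * n_hidden + n_hidden + n_hidden * n_in + n_in
    return {
        "z": rng.normal(size=n_embed),                           # embedding vector
        "H": rng.normal(scale=0.01, size=(n_weights, n_embed)),  # hypernetwork weights
        "c": np.zeros(n_weights),                                # hypernetwork bias
        "shape": (n_in, n_hidden),
    }

def generate_weights(params):
    """One-layer linear hypernetwork: theta = H z + c, split into layer weights."""
    n_in, n_hidden = params["shape"]
    theta = params["H"] @ params["z"] + params["c"]
    sizes = [n_in * n_hidden, n_hidden, n_hidden * n_in, n_in]
    w_enc, b_enc, w_dec, b_dec = np.split(theta, np.cumsum(sizes)[:-1])
    return (w_enc.reshape(n_in, n_hidden), b_enc,
            w_dec.reshape(n_hidden, n_in), b_dec)

def reconstruct(params, x):
    """Forward pass of the primary autoencoder using the generated weights."""
    w_enc, b_enc, w_dec, b_dec = generate_weights(params)
    hidden = np.tanh(x @ w_enc + b_enc)   # encoder (tanh is an assumption)
    return hidden @ w_dec + b_dec         # linear decoder

params = init_hyper_autoencoder(n_in=8, n_hidden=3, n_embed=4)
batch = np.ones((5, 8))
reconstruction = reconstruct(params, batch)
```

In a sketch like this, training would update `z`, `H` and `c` by backpropagating the reconstruction error through `generate_weights`; the encoder and decoder weights are never stored as free parameters.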

Acknowledgements

I would like to thank Ethem Alpaydın for his guidance and helpful suggestions throughout this work.

Cite this article

Soydaner, D. Hyper Autoencoders. Neural Process Lett 52, 1395–1413 (2020). https://doi.org/10.1007/s11063-020-10310-y

DOI: https://doi.org/10.1007/s11063-020-10310-y