Abstract

The uniformization and hyperbolization transformations formulated by Bonk et al. in “Uniformizing Gromov Hyperbolic Spaces”, Astérisque, vol 270 (2001), dealt with geometric properties of metric spaces. In this paper we consider metric measure spaces and construct a parallel transformation of measures under the uniformization and hyperbolization procedures. We show that if a locally compact roughly starlike Gromov hyperbolic space is equipped with a measure that is uniformly locally doubling and supports a uniformly local p-Poincaré inequality, then the transformed measure is globally doubling and supports a global p-Poincaré inequality on the corresponding uniformized space. In the opposite direction, we show that such global properties on bounded locally compact uniform spaces yield similar uniformly local properties for the transformed measures on the corresponding hyperbolized spaces. We use the above results on uniformization of measures to characterize when a Gromov hyperbolic space, equipped with a uniformly locally doubling measure supporting a uniformly local p-Poincaré inequality, carries nonconstant globally defined p-harmonic functions with finite p-energy. We also study some geometric properties of Gromov hyperbolic and uniform spaces. While the Cartesian product of two Gromov hyperbolic spaces need not be Gromov hyperbolic, we construct an indirect product of such spaces that does result in a Gromov hyperbolic space. This is done by first showing that the Cartesian product of two bounded uniform domains is a uniform domain.


1 Introduction

Studies of metric space geometry usually consider two types of synthetic (i.e. axiomatic) negative curvature conditions: Alexandrov curvature (known as CAT(\(-1\)) spaces) and Gromov hyperbolicity. While the Alexandrov condition governs both small and large scale behavior of triangles, the Gromov hyperbolicity governs only the large scale behavior. As such, the Gromov hyperbolicity was eminently suited to the study of hyperbolic groups, see e.g. Gromov [24], Coornaert–Delzant–Papadopoulos [20] and Ghys–de la Harpe [23], while Bridson–Haefliger [17] gives an excellent overview of both notions of curvature.

Since the ground-breaking work of Gromov [24], the notion of Gromov hyperbolicity has found applications in other parts of metric space analysis as well. In [14, Theorem 1.1], Bonk, Heinonen and Koskela gave a link between quasiisometry classes of locally compact roughly starlike Gromov hyperbolic spaces and quasisimilarity classes of locally compact bounded uniform spaces. In Buyalo–Schroeder [19] it was shown that every complete bounded doubling metric space is the visual boundary of a Gromov hyperbolic space, see also Bonk–Saksman [15].

While none of the above mentioned studies, involving Gromov hyperbolic spaces and uniform domains, considered how measures transform on such spaces (see also e.g. Buckley–Herron–Xie [18] and Herron–Shanmugalingam–Xie [31]), analytic studies on metric spaces require measures as well. Although [15] does consider function spaces on certain Gromov hyperbolic spaces, called hyperbolic fillings, these function spaces are associated with just the counting measure on the vertices of such hyperbolic fillings and so do not lend themselves to more general Gromov hyperbolic spaces. Similar studies were undertaken in Bonk–Saksman–Soto [16] and Björn–Björn–Gill–Shanmugalingam [8].

In this paper we seek to remedy this gap in the literature on analysis in Gromov hyperbolic spaces. Thus the primary focus of this paper is to construct transformations of measures under the uniformization and hyperbolization procedures, and to demonstrate how analytic properties of the measure are preserved by them. This does not seem to have been considered elsewhere. The analytic properties of interest here are the doubling property and the Poincaré inequality, assumed either globally on the uniform spaces, or uniformly locally (i.e. for balls up to some fixed radius) on the Gromov hyperbolic spaces. As trees are the quintessential models of Gromov hyperbolic spaces, the results in this paper are motivated in part by the results in [8].

The following is our main result, combining Theorems 4.9 and 6.2. Here, \(z_0\in X\) is a fixed uniformization center and \(\varepsilon _0(\delta )\) is as in Bonk–Heinonen–Koskela [14], see later sections for relevant definitions.

Theorem 1.1

Assume that (X, d) is a locally compact roughly starlike Gromov \(\delta \)-hyperbolic space equipped with a measure \(\mu \) which is doubling on X for balls of radii at most \(R_0\), with a doubling constant \(C_d\). Let \(X_\varepsilon =(X,d_\varepsilon )\) be the uniformization of X given for \(0<\varepsilon \le \varepsilon _0(\delta )\) by

$$\begin{aligned} d_\varepsilon (x,y)=\inf _\gamma \int _\gamma e^{-\varepsilon d(\cdot ,z_0)}\,\mathrm{{d}}s, \end{aligned}$$
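with the infimum taken over all rectifiable curves \(\gamma \) in X joining x to y. Also let

$$\begin{aligned} \beta >\frac{17\log C_d}{3R_0} \quad \text {and} \quad \mathrm{{d}}\mu _\beta =e^{-\beta d(\cdot ,z_0)}\,\mathrm{{d}}\mu . \end{aligned}$$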
Then the following are true:

(a) \(\mu _\beta \) is globally doubling both on \(X_\varepsilon \) and its completion \(\overline{X}_\varepsilon \).

(b) If \(\mu \) supports a p-Poincaré inequality for balls of radii at most \(R_0\), then \(\mu _\beta \) supports a global p-Poincaré inequality both on \(X_\varepsilon \) and \(\overline{X}_\varepsilon \).

Along the way, we also show that if the assumptions hold with some value of \(R_0\) then they hold for any value of \(R_0\) at the cost of enlarging \(C_d\), see Proposition 3.2 and Theorem 5.3.

We also obtain the following corresponding result for the hyperbolization procedure, see Propositions 7.3 and 7.4.

Theorem 1.2

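Let \((\Omega ,d)\) be a locally compact bounded uniform space, equipped with a globally doubling measure \(\mu \). Let k be the quasihyperbolic metric on \(\Omega \), given by

$$\begin{aligned} k(x,y)=\inf _\gamma \int _\gamma \frac{\mathrm{{d}}s}{d_\Omega (\gamma (s))}, \end{aligned}$$ (1.1)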
where \(d_\Omega (x)={{\,\mathrm{dist}\,}}(x,\partial \Omega )\) and the infimum is taken over all rectifiable curves \(\gamma \) in \(\Omega \) connecting x to y. For \(\alpha >0\) we equip the corresponding Gromov hyperbolic space \((\Omega ,k)\) with the measure \(\mu ^\alpha \) given by \(\mathrm{{d}}\mu ^\alpha =d_\Omega (\,\cdot \,)^{-\alpha }\,\mathrm{{d}}\mu \). Let \(R_0 >0\).

Then the following are true:

(a) \(\mu ^\alpha \) is doubling on \((\Omega ,k)\) for balls of radii at most \(R_0\).

(b) If \(\mu \) supports a global p-Poincaré inequality, then \(\mu ^\alpha \) supports a p-Poincaré inequality for balls of radii at most \(R_0\).

We use Theorem 1.1 to study potential theory on locally compact roughly starlike Gromov hyperbolic spaces, equipped with a uniformly locally doubling measure supporting a uniformly local p-Poincaré inequality. In particular, we characterize when the finite-energy Liouville theorem holds on such spaces, i.e. when there exist no nonconstant globally defined p-harmonic functions with finite p-energy. The characterization is given in terms of the nonexistence of two disjoint compact sets of positive p-capacity in the boundary of the uniformized space, see Theorem 10.5. This characterization complements our results in [12].

As already mentioned, an in-depth study of locally compact roughly starlike Gromov hyperbolic spaces, as well as links between them and bounded locally compact uniform domains, was undertaken in the seminal work Bonk–Heinonen–Koskela [14]. They showed [14, the discussion before Proposition 4.5] that the operations of uniformization and hyperbolization are mutually opposite:

- A uniformization followed by a hyperbolization takes a given locally compact roughly starlike Gromov hyperbolic space X to a roughly starlike Gromov hyperbolic space which is biLipschitz equivalent to X, see [14, Proposition 4.37]. (Note that in [14] “quasiisometric” means biLipschitz.)

- A hyperbolization of a bounded locally compact uniform space \(\Omega \), followed by a uniformization, returns a bounded uniform space which is quasisimilar to \(\Omega \), see [14, Proposition 4.28].

Here, a homeomorphism \(\Phi :X\rightarrow Y\) between two noncomplete metric spaces \((X,d_X)\) and \((Y,d_Y)\) is quasisimilar if it is \(C_x\)-biLipschitz on every ball \(B(x,c_0 {{\,\mathrm{dist}\,}}(x,\partial X))\), for some \(0<c_0<1\) independent of x, and there exists a homeomorphism \(\eta :[0,\infty )\rightarrow [0,\infty )\) such that for each distinct triple of points \(x,y,z\in X\),

$$\begin{aligned} \frac{d_Y(\Phi (x),\Phi (y))}{d_Y(\Phi (x),\Phi (z))} \le \eta \biggl (\frac{d_X(x,y)}{d_X(x,z)}\biggr ). \end{aligned}$$
It was also shown in [14, Theorem 4.36] that two roughly starlike Gromov hyperbolic spaces are biLipschitz equivalent if and only if any two of their uniformizations are quasisimilar.

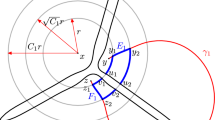

We continue the study of Gromov hyperbolic spaces in this spirit by considering pairs of Gromov hyperbolic spaces in Sect. 8. Note that the Cartesian product of two Gromov hyperbolic spaces need not be Gromov hyperbolic, as demonstrated by \({\mathbf {R}}\times {\mathbf {R}}\), which is not a Gromov hyperbolic space even though \({\mathbf {R}}\) is. On the other hand, in Sect. 8 we obtain the following result, see Proposition 8.3 for a more precise result.

Proposition 1.3

Let \((\Omega ,d)\) and \((\Omega ',d')\) be two bounded uniform spaces. Then \(\Omega \times \Omega '\) is a bounded uniform space with respect to the metric

We use this, together with the results of [14], to construct an indirect product \(X\times _\varepsilon Y\) of two Gromov hyperbolic spaces which is also Gromov hyperbolic, see Sect. 8. In this section we also study properties of such product hyperbolic spaces. For a fixed Gromov \(\delta \)-hyperbolic space X, there is a whole family of uniformizations \(X_\varepsilon \), one for each \(0<\varepsilon \le \varepsilon _0(\delta )\). As mentioned above, \(X_{\varepsilon }\) is quasisimilar to \(X_{\varepsilon '}\) when \(0<\varepsilon ,\varepsilon '\le \varepsilon _0(\delta )\).

On the other hand, we show in Proposition 8.5 that the canonical identity mapping between \(X\times _\varepsilon Y\) and \(X\times _{\varepsilon '} Y\) is never biLipschitz if \(\varepsilon \ne \varepsilon '\), and it is possible that the two indirect products are not even quasiisometric. Here, a map \(\Phi :Z\rightarrow W\) between metric spaces \((Z,d_Z)\) and \((W,d_W)\) is a quasiisometry (also called, perhaps more accurately, rough quasiisometry as in [14] and [8]) if there are \(C>0\) and \(L\ge 1\) such that the C-neighborhood of \(\Phi (Z)\) contains W and for all \(z,z'\in Z\),

$$\begin{aligned} \frac{d_Z(z,z')}{L}-C \le d_W(\Phi (z),\Phi (z')) \le L d_Z(z,z')+C. \end{aligned}$$
It is not difficult to show that visual boundaries of quasiisometric locally compact roughly starlike Gromov hyperbolic spaces are quasisymmetric, see e.g. Bridson–Haefliger [17, Theorem 3.22]. We take advantage of this to show the quasiisometric nonequivalence of two indirect products of the hyperbolic disk and \({\mathbf {R}}\), see Example 8.7.

The broad organization of the paper is as follows. Background definitions and preliminary results are given in Sects. 2 and 3, while the definition of Poincaré inequalities is given in Sect. 5. The main aims in Sects. 4 and 6 are to deduce parts (a) and (b), respectively, of Theorem 1.1. The dual transformation of hyperbolization, via the quasihyperbolic metric (1.1), is discussed in Sect. 7, where also Theorem 1.2 is shown. The above sections fulfill the main goal of this paper, and form a basis for comparing the potential theories on Gromov hyperbolic spaces and on uniform spaces.

The remaining sections are devoted to applications of the results obtained in the preceding sections. In Sect. 8 we construct and study the indirect product, providing a family of new Gromov hyperbolic spaces from a pair of Gromov hyperbolic spaces. The subsequent sections are devoted to the impact of uniformization and hyperbolization procedures on nonlinear potential theory. In Sect. 9 we discuss Newton–Sobolev spaces and p-harmonic functions, and then in Sect. 10 we show that under certain natural conditions, the class of p-harmonic functions is preserved under the uniformization and hyperbolization procedures. In this final section, we also characterize which Gromov hyperbolic spaces with bounded geometry support the finite-energy Liouville theorem for p-harmonic functions.

In the beginning of each section, we list the standing assumptions for that section in italicized text; in Sects. 2 and 4 these assumptions are given a little later.

2 Gromov Hyperbolic Spaces

A curve is a continuous mapping from an interval. Unless stated otherwise, we will only consider curves which are defined on compact intervals. We denote the length of a curve \(\gamma \) by \(l_\gamma =l(\gamma )\), and a curve is rectifiable if it has finite length. Rectifiable curves can be parametrized by arc length \(\mathrm{{d}}s\).

A metric space \(X=(X,d)\) is L-quasiconvex if for each \(x,y\in X\) there is a curve \(\gamma \) with end points x and y and length \(l_\gamma \le L d(x,y)\). X is a geodesic space if it is 1-quasiconvex, and \(\gamma \) is then a geodesic from x to y. We will consider a related metric, called the inner metric, given by

$$\begin{aligned} d_{{{\,\mathrm{in}\,}}}(x,y)=\inf _\gamma l_\gamma , \end{aligned}$$ (2.1)
where the infimum is taken over all curves \(\gamma \) from x to y. If (X, d) is quasiconvex, then d and \(d_{{{\,\mathrm{in}\,}}}\) are biLipschitz equivalent metrics on X. The space X is a length space if \(d_{{{\,\mathrm{in}\,}}}(x,y)=d(x,y)\) for all \(x,y\in X\). By Lemma 4.43 in [5], arc length is the same with respect to d and \(d_{{{\,\mathrm{in}\,}}}\), and thus \((X,d_{{{\,\mathrm{in}\,}}})\) is a length space. A metric space is proper if all closed bounded sets are compact. A proper length space is necessarily a geodesic space, by Ascoli’s theorem or the Hopf–Rinow theorem below. To avoid pathological situations, all metric spaces in this paper are assumed to contain at least two points.

We denote balls in X by \(B(x,r)=\{y \in X: d(y,x) <r\}\) and the scaled concentric ball by \(\lambda B(x,r)=B(x,\lambda r)\). In metric spaces it can happen that balls with different centers and/or radii denote the same set. We will however adopt the convention that a ball comes with a predetermined center and radius. Similarly, when we say that \(x \in \gamma \) we mean that \(x=\gamma (t)\) for some t. If \(\gamma \) is noninjective, this t may not be unique, but we are always implicitly referring to a specific such t.

Theorem 2.1

(Hopf–Rinow theorem) If X is a complete locally compact length space, then it is proper and geodesic.

This version is a generalization of the original theorem, see e.g. Gromov [25, p. 9] for a proof.

Definition 2.2

A complete unbounded geodesic metric space X is Gromov hyperbolic if there is a hyperbolicity constant \(\delta \ge 0\) such that whenever [x, y], [y, z] and [z, x] are geodesics in X, every point \(w\in [x,y]\) lies within a distance \(\delta \) of \([y,z]\cup [z,x]\).

The ideal Gromov hyperbolic space is a metric tree, which is Gromov hyperbolic with \(\delta =0\). A metric tree is a tree where each edge is considered to be a geodesic of unit length.
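
For contrast, the thin-triangle condition of Definition 2.2 fails in the plane, as mentioned in the introduction. The following is a minimal sketch, assuming that \({\mathbf {R}}\times {\mathbf {R}}\) carries the Euclidean metric (so that geodesics are line segments); the points x, y, z and w are introduced here only for illustration. For \(n>0\) let

$$\begin{aligned} x=(0,0),\quad y=(n,0),\quad z=(0,n),\quad w=\bigl (\tfrac{n}{2},\tfrac{n}{2}\bigr )\in [y,z]. \end{aligned}$$

Then

$$\begin{aligned} {{\,\mathrm{dist}\,}}(w,[x,y]\cup [z,x])=\tfrac{n}{2}, \end{aligned}$$

which is unbounded as \(n\rightarrow \infty \), so no single \(\delta \) can serve for all geodesic triangles.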

Definition 2.3

An unbounded metric space X is roughly starlike if there are some \(z_0\in X\) and \(M>0\) such that whenever \(x\in X\) there is a geodesic ray \(\gamma \) in X, starting from \(z_0\), such that \({{\,\mathrm{dist}\,}}(x,\gamma )\le M\). A geodesic ray is a curve \(\gamma :[0,\infty ) \rightarrow X\) with infinite length such that \(\gamma |_{[0,t]}\) is a geodesic for each \(t > 0\).

If X is a roughly starlike Gromov hyperbolic space, then the roughly starlike condition holds for every choice of \(z_0\), although M may change.

Definition 2.4

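A nonempty open set \(\Omega \varsubsetneq X\) in a metric space X is an A-uniform domain, with \(A\ge 1\), if for every pair \(x,y\in \Omega \) there is a rectifiable arc length parametrized curve \(\gamma : [0,l_\gamma ] \rightarrow \Omega \) with \(\gamma (0)=x\) and \(\gamma (l_\gamma )=y\) such that \(l_\gamma \le A d(x,y)\) and

$$\begin{aligned} d_\Omega (\gamma (t)) \ge \frac{1}{A} \min \{t, l_\gamma -t\} \quad \text {for } 0\le t\le l_\gamma , \end{aligned}$$

where

$$\begin{aligned} d_\Omega (z)={{\,\mathrm{dist}\,}}(z,X\setminus \Omega ), \quad z\in \Omega . \end{aligned}$$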
The curve \(\gamma \) is said to be an A-uniform curve. A noncomplete metric space \((\Omega ,d)\) is A-uniform if it is an A-uniform domain in its completion.

A ball B(x, r) in a uniform space \(\Omega \) is a subWhitney ball if \(r \le c_0 d_\Omega (x)\), where \(0<c_0<1\) is a predetermined constant. We will primarily use \(c_0=\frac{1}{2}\).

The completion of a locally compact uniform space is always proper, by Proposition 2.20 in Bonk–Heinonen–Koskela [14]. Unlike the definition used in [14], we do not require uniform spaces to be locally compact.

It follows directly from the definition that an A-uniform space is A-quasiconvex. One might ask whether the uniformity assumption in Proposition 2.20 in [14] can be replaced by a quasiconvexity assumption, i.e. whether the completion of a locally compact quasiconvex space is always proper. The following example shows that this can fail even if the original space is geodesic, so the uniformity assumption in Proposition 2.20 in [14] is essential.

Example 2.5

Let

equipped with the \(\ell ^1\)-metric. Then X is a bounded locally compact geodesic space which is not totally bounded, and thus has a nonproper completion.

We assume from now on that X is a locally compact roughly starlike Gromov \(\delta \)-hyperbolic space. We also fix a point \(z_0 \in X\) and let M be the constant in the roughly starlike condition with respect to \(z_0\).

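By the Hopf–Rinow Theorem 2.1, X is proper. The point \(z_0\) will serve as a center for the uniformization \(X_\varepsilon \) of X. Following Bonk–Heinonen–Koskela [14], we define

$$\begin{aligned} (x|y)_{z_0}:=\tfrac{1}{2}[d(x,z_0)+d(y,z_0)-d(x,y)], \quad x,y\in X, \end{aligned}$$

and, for a fixed \(\varepsilon >0\), the uniformized metric \(d_\varepsilon \) on X as

$$\begin{aligned} d_\varepsilon (x,y)=\inf _\gamma \int _\gamma \rho _\varepsilon \,\mathrm{{d}}s, \quad \text {where } \rho _\varepsilon (x)=e^{-\varepsilon d(x,z_0)} \end{aligned}$$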
and the infimum is taken over all rectifiable curves \(\gamma \) in X joining x to y. Note that if \(\gamma \) is a compact curve in X, then \(\rho _\varepsilon \) is bounded from above and away from 0 on \(\gamma \), and in particular \(\gamma \) is rectifiable with respect to \(d_\varepsilon \) if and only if it is rectifiable with respect to d.

The set X, equipped with the metric \(d_\varepsilon \), is denoted by \(X_\varepsilon \). We let \(\overline{X}_\varepsilon \) be the completion of \(X_\varepsilon \), and let \(\partial _\varepsilon X=\overline{X}_\varepsilon \setminus X_\varepsilon \). When writing e.g. \(B_\varepsilon \), \({{\,\mathrm{diam}\,}}_\varepsilon \) and \({{\,\mathrm{dist}\,}}_\varepsilon \) the \(\varepsilon \) indicates that these notions are taken with respect to \((\overline{X}_\varepsilon ,d_\varepsilon )\). The length of the curve \(\gamma \) with respect to \(d_\varepsilon \) is denoted by \(l_\varepsilon (\gamma )\), and arc length \(\mathrm{{d}}s_\varepsilon \) with respect to \(d_\varepsilon \) satisfies

$$\begin{aligned} \mathrm{{d}}s_\varepsilon =\rho _\varepsilon \,\mathrm{{d}}s. \end{aligned}$$

It follows that \(X_\varepsilon \) is a length space, and thus also \(\overline{X}_\varepsilon \) is a length space. By a direct calculation (or [14, (4.3)]), \({{\,\mathrm{diam}\,}}_\varepsilon \overline{X}_\varepsilon ={{\,\mathrm{diam}\,}}_\varepsilon X_\varepsilon \le 2/\varepsilon \). Note that as a set, \(\partial _\varepsilon X\) is independent of \(\varepsilon \) and depends only on the Gromov hyperbolic structure of X, see e.g. [14, Sect. 3]. The notation adopted in [14] is \(\partial _G X\).
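
A direct calculation of the kind referred to above bounds the \(d_\varepsilon \)-diameter of X by \(2/\varepsilon \). It can be sketched as follows, assuming (as in [14]) that \(\rho _\varepsilon (x)=e^{-\varepsilon d(x,z_0)}\) and that \(d_\varepsilon (x,y)\) is the infimum of \(\int _\gamma \rho _\varepsilon \,\mathrm{{d}}s\) over rectifiable curves \(\gamma \) from x to y. Given \(x\in X\), let \(\gamma \) be a d-geodesic from \(z_0\) to x, parametrized by arc length, so that \(d(\gamma (t),z_0)=t\). Then

$$\begin{aligned} d_\varepsilon (x,z_0) \le \int _0^{d(x,z_0)} e^{-\varepsilon t}\,\mathrm{{d}}t = \frac{1-e^{-\varepsilon d(x,z_0)}}{\varepsilon } < \frac{1}{\varepsilon }, \end{aligned}$$

and the triangle inequality gives \(d_\varepsilon (x,y)<2/\varepsilon \) for all \(x,y\in X\).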

The following important theorem is due to Bonk–Heinonen–Koskela [14].

Theorem 2.6

There is a constant \(\varepsilon _0=\varepsilon _0(\delta )>0\) only depending on \(\delta \) such that if \(0 < \varepsilon \le \varepsilon _0(\delta )\), then \(X_\varepsilon \) is an A-uniform space for some A depending only on \(\delta \), and \(\overline{X}_\varepsilon \) is a compact geodesic space.

If \(\delta =0\), then \(\varepsilon _0(0)\) can be chosen arbitrarily large.

In the proof below we recall the relevant references from [14] and specify the dependence on \(\delta \).

Proof

By Proposition 4.5 in [14] there is \(\varepsilon _0(\delta )>0\) such that if \(0< \varepsilon \le \varepsilon _0(\delta )\), then \(X_\varepsilon \) is an A-uniform space for some A depending only on \(\delta \). As \(X_\varepsilon \) is bounded, it follows from Proposition 2.20 in [14] that \(\overline{X}_\varepsilon \) is a compact length space, which by Ascoli’s theorem or the Hopf–Rinow Theorem 2.1 is geodesic.

The bound \(\varepsilon _0(\delta )\) in Proposition 4.5 in [14] is only needed for the Gehring–Hayman lemma to be true, see [14, Theorem 5.1]. If \(\delta =0\), then any curve from x to y contains the unique geodesic [x, y] as a subcurve. From this the Gehring–Hayman lemma follows directly without any bound on \(\varepsilon \). Note that in this case it also follows that a curve in \(X=X_\varepsilon \) is simultaneously a geodesic with respect to d and \(d_\varepsilon \). \(\square \)

We recall, for further reference, the following key estimates from [14].

Lemma 2.7

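([14, Lemma 4.10]) There exists a constant \(C(\delta )\ge 1\) such that for every \(0<\varepsilon \le \varepsilon _0=\varepsilon _0(\delta )\) and all \(x,y\in X\),

$$\begin{aligned} \frac{1}{C(\delta )} d_\varepsilon (x,y) \le \frac{\exp (-\varepsilon (x|y)_{z_0})}{\varepsilon } \min \{1,\varepsilon d(x,y)\} \le C(\delta ) d_\varepsilon (x,y). \end{aligned}$$ (2.2)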
Lemma 2.8

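([14, Lemma 4.16]) Let \(\varepsilon >0\). If \(x\in X\), then

$$\begin{aligned} \frac{e^{-\varepsilon d(x,z_0)}}{e\varepsilon } \le d_\varepsilon (x):={{\,\mathrm{dist}\,}}_\varepsilon (x,\partial _\varepsilon X) \le C_0 \frac{e^{-\varepsilon d(x,z_0)}}{\varepsilon }, \end{aligned}$$ (2.3)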
where \(C_0=2e^{\varepsilon M}-1\). In particular, \(\varepsilon d_\varepsilon (x) \simeq \rho _\varepsilon (x)\), and \(x\rightarrow \partial _\varepsilon X\) with respect to \(d_\varepsilon \) if and only if \(d(x,z_0)\rightarrow \infty \).

Note that one may choose \(C_0 = 2e^{\varepsilon _0 M}-1\) for it to be independent of \(\varepsilon \), provided that \(0<\varepsilon \le \varepsilon _0\).
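
In the simplest case the comparison \(\varepsilon d_\varepsilon (x)\simeq \rho _\varepsilon (x)\) is an identity. As an illustrative sketch, assume that \(X={\mathbf {R}}\), \(z_0=0\) and \(\rho _\varepsilon (x)=e^{-\varepsilon |x|}\), with \(d_\varepsilon (x)\) denoting the \(d_\varepsilon \)-distance from x to \(\partial _\varepsilon X\). Example 4.2 below identifies \(X_\varepsilon \) with the interval \((-1/\varepsilon ,1/\varepsilon )\) via \(d_\varepsilon (x,0)=(1-e^{-\varepsilon |x|})/\varepsilon \), so

$$\begin{aligned} d_\varepsilon (x)=\frac{1}{\varepsilon }-\frac{1-e^{-\varepsilon |x|}}{\varepsilon } =\frac{e^{-\varepsilon |x|}}{\varepsilon }=\frac{\rho _\varepsilon (x)}{\varepsilon }. \end{aligned}$$

In particular, \(d_\varepsilon (x)\rightarrow 0\) exactly when \(|x|\rightarrow \infty \), in line with the last statement of Lemma 2.8.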

Corollary 2.9

Assume that \(0 < \varepsilon \le \varepsilon _0(\delta )\). Let \(x,y\in X\). If \(\varepsilon d(x,y)\ge 1\) then

where the comparison constants depend only on \(\delta \), M and \(\varepsilon _0\).

Proof

Since \(\varepsilon d(x,y)\ge 1\), (2.2) can be written as

where the comparison constants depend only on \(\delta \). Moreover, (2.3) gives

with comparison constants depending only on M and \(\varepsilon _0\). Dividing (2.4) by the last two formulas, and using the definition of \((x|y)_{z_0}\) concludes the proof. \(\square \)

We now wish to show that subWhitney balls in the uniformization \(X_\varepsilon \) are contained in balls of a fixed radius with respect to the Gromov hyperbolic metric d of X.

Theorem 2.10

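For all \(0<\varepsilon \le \varepsilon _0(\delta )\), \(x\in X\) and \(0<r\le \tfrac{1}{2} d_\varepsilon (x)\), we have

$$\begin{aligned} B\biggl (x,\frac{C_1r}{\rho _\varepsilon (x)}\biggr ) \subset B_\varepsilon (x,r) \subset B\biggl (x,\frac{C_2r}{\rho _\varepsilon (x)}\biggr ), \end{aligned}$$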
where \(C_1=e^{-(1+\varepsilon M)}\) and \(C_2=2e(2e^{\varepsilon M}-1)\). If \(d_\varepsilon (x,y)< C_1d_\varepsilon (x)/2C_2\), then

Remark 2.11

As in Lemma 2.8, the constants \(C_1\) and \(C_2\) obtained for \(\varepsilon _0\) will do for \(\varepsilon <\varepsilon _0\) as well. The proof also shows that the condition \(0<r\le \tfrac{1}{2} d_\varepsilon (x)\) can be replaced by \(0<r\le c_0 d_\varepsilon (x)\) for any fixed \(0<c_0<1\), but then \(C_1\) and \(C_2\) also depend on \(c_0\) and get progressively worse as \(c_0\) approaches 1.

Proof

Assume that \(y\in B(x,C_1r/\rho _\varepsilon (x))\) and let \(\gamma \) be a d-geodesic from x to y. The assumption \(r\le \tfrac{1}{2} d_\varepsilon (x)\) and (2.3) then imply that for all \(z\in \gamma \),

The triangle inequality then yields \(d(z,z_0)\ge d(x,z_0)-d(x,z) \ge d(x,z_0)-1/\varepsilon e\) and hence

From this and (2.5) it readily follows that

To see the other inclusion, assume that \(d_\varepsilon (x,y)<r \le \tfrac{1}{2} d_\varepsilon (x)\) and let \(\gamma _\varepsilon \) be a geodesic curve in \(\overline{X}_\varepsilon \) connecting x to y. Then for all \(z\in \gamma _\varepsilon \), we have by the triangle inequality that

in particular \(\gamma _\varepsilon \subset X_\varepsilon \). It now follows from (2.3) that

where \(C_2\) is as in the statement of the theorem. This implies that

and hence \(B_\varepsilon (x,r) \subset B(x,C_2r/\rho _\varepsilon (x))\).

Finally, if \(d_\varepsilon (x,y)<C_1d_\varepsilon (x)/2C_2\), then from the last inclusion above we see that \(y\in B(x,C_1s/\rho _\varepsilon (x))\) with \(s=\tfrac{1}{2}d_\varepsilon (x)\). Therefore we can apply (2.6) and (2.7) to obtain the last claim of the theorem. \(\square \)

In this paper, the letter C will denote various positive constants whose values may change even within a line. We write \(Y \lesssim Z\) if there is an implicit constant \(C>0\) such that \(Y \le CZ\), and analogously \(Y \gtrsim Z\) if \(Z \lesssim Y\). We also use the notation \(Y \simeq Z\) to mean \(Y \lesssim Z \lesssim Y\). We will point out how the comparison constants depend on various other constants related to the metric measure spaces under study.

3 Doubling Property

In the rest of this paper, we will continue to assume that X is a locally compact roughly starlike Gromov hyperbolic space. For general definitions and some results, we will assume that Y is a metric space equipped with a Borel measure \(\nu \).

Just as for X, we will denote the metric on Y by d, and balls in Y by B(x, r), but it should always be clear from the context in which space these concepts are taken.

Definition 3.1

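A Borel measure \(\nu \), defined on a metric space Y, is globally doubling if there is a doubling constant \(C_d\) such that

$$\begin{aligned} 0<\nu (B(x,2r))\le C_d \nu (B(x,r))<\infty \end{aligned}$$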
whenever \(x\in Y\) and \(r>0\). If this holds only for balls of radii \(\le R_0\), then we say that \(\nu \) is doubling for balls of radii at most \(R_0\), and also that \(\nu \) is uniformly locally doubling.

The following result shows that the last condition is independent of \(R_0\), provided that Y is quasiconvex. Without assuming quasiconvexity this is not true as shown by Example 3.3 below.

Proposition 3.2

Assume that Y is L-quasiconvex and that \(\nu \) is doubling on Y for balls of radii at most \(R_0\), with a doubling constant \(C_d\). Let \(R_1>0\). Then \(\nu \) is doubling on Y for balls of radii at most \(R_1\) with a doubling constant depending only on \(R_1/R_0\), L and \(C_d\).

Example 3.3

Let \(X=([0,\infty ) \times \{0,1\}) \cup (\{0\} \times [0,1])\) equipped with the Euclidean distance and the measure \(\mathrm{{d}}\mu =w\, d{\mathcal {L}}^1\), where \({\mathcal {L}}^1\) is the Lebesgue measure and

Then X is a connected nonquasiconvex space and \(\mu \) is doubling for balls of radii at most \(R_0\) if and only if \(R_0 \le \frac{1}{2}\). This shows that the quasiconvexity assumption in Proposition 3.2 cannot be dropped.

Before proving Proposition 3.2 we deduce the following lemmas. In particular, Lemma 3.5 covers Proposition 3.2 under the extra assumption that Y is a length space, but with better control of the doubling constant than what is possible in general quasiconvex spaces.

Lemma 3.4

Assume that \(\nu \) is doubling on Y for balls of radii at most \(R_0\), with a doubling constant \(C_d\). Then every ball B of radius \(r\le \tfrac{7}{4}R_0\) can be covered by at most \(C_d^7\) balls with centers in B and radius \(\frac{1}{7} r\).

Proof

Find a maximal pairwise disjoint collection of balls \(B_j\) with centers in B and radii \(\tfrac{1}{14}r\). Note that for each j,

The doubling property then implies that

From this and the pairwise disjointness of all \(B_j\) we thus obtain

i.e. there are at most \(C_d^7\) such balls. As the balls \(2B_j\) cover B, we are done. \(\square \)

Lemma 3.5

Assume that Y is a length space and that \(\nu \) is doubling on Y for balls of radii at most \(R_0\), with a doubling constant \(C_d\). Let n be a positive integer. Then the following are true:

(a) If \(x,x'\in Y\), \(0<r\le R_0\) and \(d(x,x')< nr\), then

$$\begin{aligned} \nu (B(x',r)) \le C_d^n \nu (B(x,r)). \end{aligned}$$

(b) Every ball B of radius nr, with \(r\le \tfrac{1}{4} R_0\), can be covered by at most \(C_d^{7(n+4)/6}\) balls of radius r, \(n=1,2, \ldots \).

In particular, for any \(R_1>0\), \(\nu \) is doubling on Y for balls of radii at most \(R_1\) with a doubling constant depending only on \(R_1/R_0\) and \(C_d\).

Proof

(a) Connect x and \(x'\) by a curve of length \(l_\gamma < nr\). Along this curve, we can find balls \(B_j\) of radius r, \(j=0,1,\ldots ,n\), such that \(B_0=B(x,r)\), \(B_n=B(x',r)\) and \(B_j\subset 2B_{j-1}\). An iteration of the doubling property gives the desired estimate.

(b) Assume that \(\varphi (n)\) is the smallest number such that each ball B(x, nr) is covered by \(\varphi (n)\) balls \(B_j\) of radius r. As Y is a length space, the balls \(7B_j\) cover \(B(x,(n+6)r)\). Using Lemma 3.4, each \(7B_j\) can in turn be covered by at most \(C_d^7\) balls of radius r, which implies that \(\varphi (n+6)\le C_d^{7} \varphi (n)\). Since \(\varphi (1)=1\) and \(\varphi \) is nondecreasing, the statement follows by induction. \(\square \)
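
The induction at the end of part (b) can be spelled out as follows. This is only a sketch of one way to organize it, using \(C_d\ge 1\) (automatic when balls have positive finite measure) and the three facts from the proof: \(\varphi (1)=1\), \(\varphi \) is nondecreasing, and \(\varphi (n+6)\le C_d^7\varphi (n)\).

$$\begin{aligned} \varphi (1)&=1\le C_d^{35/6},\\ \varphi (n)&\le \varphi (7)\le C_d^7\varphi (1)=C_d^7\le C_d^{7(n+4)/6} \quad \text {for } 2\le n\le 7,\\ \varphi (n+6)&\le C_d^7\varphi (n)\le C_d^{7+7(n+4)/6}=C_d^{7((n+6)+4)/6} \quad \text {whenever } \varphi (n)\le C_d^{7(n+4)/6}. \end{aligned}$$

The first two lines cover \(1\le n\le 7\), and the third line propagates the bound from n to \(n+6\), which reaches every \(n\ge 8\).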

Proof of Proposition 3.2

We will use the inner metric \(d_{{{\,\mathrm{in}\,}}}\), defined in (2.1), and denote balls with respect to \(d_{{{\,\mathrm{in}\,}}}\) by \(B_{{{\,\mathrm{in}\,}}}\). It follows from the inclusions

$$\begin{aligned} B_{{{\,\mathrm{in}\,}}}(x,r)\subset B(x,r)\subset B_{{{\,\mathrm{in}\,}}}(x,Lr), \end{aligned}$$ (3.1)

together with a repeated use of the doubling property for metric balls, that \(\nu \) is doubling for inner balls of radii at most \(R_0\). As \((Y,d_{{{\,\mathrm{in}\,}}})\) is a length space, it thus follows from Lemma 3.5 that \(\nu \) is doubling for inner balls of radii at most \(LR_1\). Hence, using the inclusions (3.1) again, \(\nu \) is doubling for metric balls of radii at most \(R_1\). \(\square \)
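
The repeated use of the doubling property in the first step of this proof can be made explicit. The following is a sketch, assuming that the inclusions (3.1) read \(B_{{{\,\mathrm{in}\,}}}(x,r)\subset B(x,r)\subset B_{{{\,\mathrm{in}\,}}}(x,Lr)\) (which follows from \(d\le d_{{{\,\mathrm{in}\,}}}\le Ld\)); the integer k is introduced here for illustration and is chosen so that \(k\ge 1\) and \(2^{k-1}\ge L\). For \(0<r\le R_0\),

$$\begin{aligned} \nu (B_{{{\,\mathrm{in}\,}}}(x,2r)) \le \nu (B(x,2r)) \le C_d^{k}\,\nu (B(x,2^{1-k}r)) \le C_d^{k}\,\nu (B_{{{\,\mathrm{in}\,}}}(x,2^{1-k}Lr)) \le C_d^{k}\,\nu (B_{{{\,\mathrm{in}\,}}}(x,r)). \end{aligned}$$

The second inequality applies the doubling property k times, to metric balls of radii \(r, r/2, \ldots , 2^{1-k}r\), all of which are at most \(R_0\). The resulting constant \(C_d^k\) depends only on L and \(C_d\).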

4 The Measure \(\mu _\beta \) is Globally Doubling on \(X_\varepsilon \)

Standing assumptions for this section will be given after Example 4.3.

Given a uniformly locally doubling measure \(\mu \) on the Gromov hyperbolic space X, we wish to obtain a globally doubling measure on its uniformization \(X_\varepsilon \). We do so as follows.

Definition 4.1

Assume that X is a locally compact roughly starlike Gromov hyperbolic space equipped with a Borel measure \(\mu \), and that \(z_0 \in X\).

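Fix \(\beta >0\), and set \(\mu _\beta \) to be the measure on \(X=X_\varepsilon \) given by

$$\begin{aligned} \mathrm{{d}}\mu _\beta =\rho _\beta \,\mathrm{{d}}\mu , \quad \text {where } \rho _\beta (x)=e^{-\beta d(x,z_0)}. \end{aligned}$$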
We also extend this measure to \(\overline{X}_\varepsilon \) by letting \(\mu _\beta (\partial _\varepsilon X)=0\).

Our aim in this section is to show that \(\mu _\beta \) is a globally doubling measure on \(\overline{X}_\varepsilon \), under suitable assumptions (see Theorem 4.9).

Bonk–Heinonen–Koskela [14, Theorem 1.1] showed that there is a kind of duality between Gromov hyperbolic spaces and bounded uniform domains, see the introduction for further details. Here we also equip these spaces with measures. The following examples illustrate what happens in a simple case.

Example 4.2

The Euclidean real line \(X={\mathbf {R}}\) is Gromov hyperbolic, because it is a metric tree. Since \(\delta =0\), any \(\varepsilon >0\) is allowed in the uniformization process, by Theorem 2.6. Setting \(z_0=0\), we now determine what \(X_\varepsilon \) is. For \(x,y\in {\mathbf {R}}\), the uniformized metric is given by

$$\begin{aligned} d_\varepsilon (x,y)=\biggl |\int _x^y e^{-\varepsilon |t|}\,\mathrm{{d}}t\biggr |. \end{aligned}$$

With \(y=0\) we get \(d_\varepsilon (x,0)=(1-e^{-\varepsilon |x|})/\varepsilon \). Hence the map \(\Phi :X_\varepsilon \rightarrow (-1/\varepsilon ,1/\varepsilon )\) given by

$$\begin{aligned} \Phi (x)={{\,\mathrm{sgn}\,}}(x)\,\frac{1-e^{-\varepsilon |x|}}{\varepsilon } \end{aligned}$$
is an isometry, identifying \(X_\varepsilon \) with the open interval \((-1/\varepsilon ,1/\varepsilon )\).

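However, when X is equipped with the Lebesgue measure \({\mathcal {L}}^1\), the measure \(\mu _\beta \) is not the Lebesgue measure on \((-1/\varepsilon ,1/\varepsilon )\). To determine \(\mu _\beta \), note that it is absolutely continuous with respect to the Lebesgue measure \({\mathcal {L}}^1\) on the interval \((-1/\varepsilon ,1/\varepsilon )\). So we compute the Radon–Nikodym derivative of \(\mu _\beta \) with respect to \({\mathcal {L}}^1\). By symmetry, it suffices to consider \(x>0\). Then

$$\begin{aligned} \mathrm{{d}}\mu _\beta (x)=e^{-\beta x}\,\mathrm{{d}}x=e^{(\varepsilon -\beta )x}\,\mathrm{{d}}\Phi (x). \end{aligned}$$

Substituting \(\Phi (x)=z\) in the above, we get

$$\begin{aligned} \mathrm{{d}}\mu _\beta (z)=(1-\varepsilon z)^{\beta /\varepsilon -1}\,\mathrm{{d}}z=(\varepsilon d_\varepsilon (z))^{\beta /\varepsilon -1}\,\mathrm{{d}}z, \end{aligned}$$ (4.1)

where \(d_\varepsilon (z)=1/\varepsilon -z\) is the distance from \(\Phi (x)=z\ge 0\) to the boundary \(\{\pm 1/\varepsilon \}\) of \(\Phi (X_\varepsilon )\).

Similarly, if \(X={\mathbf {R}}\) is equipped with a weighted measure

$$\begin{aligned} \mathrm{{d}}\mu (x)=w(x)\,\mathrm{{d}}x, \end{aligned}$$

then as in (4.1),

$$\begin{aligned} \mathrm{{d}}\mu _\beta (z)=w(\Phi ^{-1}(z))(\varepsilon d_\varepsilon (z))^{\beta /\varepsilon -1}\,\mathrm{{d}}z. \end{aligned}$$ (4.2)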
The following example reverses the procedure in Example 4.2.

Example 4.3

The interval \(X=(-1,1)\) is a uniform domain and so, by Theorem 3.6 in Bonk–Heinonen–Koskela [14], it becomes a Gromov hyperbolic space when equipped with the quasihyperbolic metric k. For \(0\le y<z<1\), the quasihyperbolic metric is given by

$$\begin{aligned} k(y,z)=\int _y^z \frac{\mathrm{{d}}t}{1-t}=\log \frac{1-y}{1-z}, \end{aligned}$$

cf. Sect. 7. With \(z_0=0\), by symmetry, we have \(k(z,z_0)=\log (1/(1-|z|))\) for \(z \in X\). Hence we consider the map \(\Psi :(-1,1)\rightarrow {\mathbf {R}}\) given by

$$\begin{aligned} \Psi (z)=\mathrm{sgn}(z)\log \frac{1}{1-|z|} \end{aligned}$$

and see that \(\Psi \) is an isometry between the Gromov hyperbolic space (X, k) and the Euclidean line \({\mathbf {R}}\). By Example 4.2 with \(\varepsilon =1\), the uniformization of \({\mathbf {R}}\) gives back the Euclidean interval \((-1,1)\).
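The round trip between hyperbolization and uniformization in this example can be illustrated numerically. The sketch below assumes \(d_\Omega (t)=1-|t|\) on \((-1,1)\) and takes \(\Psi (z)=\mathrm{sgn}(z)\log (1/(1-|z|))\), which is the form suggested by \(k(z,z_0)=\log (1/(1-|z|))\). The function names are hypothetical.

```python
import math

def psi(z):
    """Map (-1, 1) -> R with |psi(z)| = log(1/(1-|z|)), the k-distance to z_0 = 0."""
    return math.copysign(math.log(1.0 / (1.0 - abs(z))), z)

def phi1(x):
    """Uniformization map of Example 4.2 with eps = 1."""
    return math.copysign(1.0 - math.exp(-abs(x)), x)

def k_interval(y, z, n=20000):
    """Quasihyperbolic distance in (-1, 1): midpoint rule for the integral of dt/(1-|t|)."""
    lo, hi = min(y, z), max(y, z)
    h = (hi - lo) / n
    return h * sum(1.0 / (1.0 - abs(lo + (j + 0.5) * h)) for j in range(n))
```

Here `k_interval(y, z)` should match `abs(psi(z) - psi(y))` up to quadrature error, and `phi1(psi(z))` should return `z`, reflecting that \(\Phi =\Psi ^{-1}\) when \(\varepsilon =1\).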

We wish to find a measure \(\mu \) on \((X,k)={\mathbf {R}}\) such that the weighted measure \(\mu _\beta \) given by Definition 4.1 becomes the Lebesgue measure on \((-1,1)\). In view of (4.2) with \(\varepsilon =1\) and \(\Phi =\Psi ^{-1}\), \(\mu \) is given by \(\mathrm{{d}}\mu (x)=w(x)\,\mathrm{d}{\mathcal {L}}^1(x)\), where

$$\begin{aligned} w(x)=e^{(\beta -1)|x|}. \end{aligned}$$

In the rest of this section, we assume that X is a locally compact roughly starlike Gromov \(\delta \)-hyperbolic space equipped with a measure \(\mu \) which is doubling on X for balls of radii at most \(R_0\), with a doubling constant \(C_d\). We also fix a point \(z_0 \in X\), let M be the constant in the roughly starlike condition with respect to \(z_0\), and assume that

$$\begin{aligned} 0<\varepsilon \le \varepsilon _0(\delta ) \quad \text {and} \quad \beta >\beta _0:=\frac{17\log C_d}{3R_0}. \end{aligned} \tag{4.3}$$

Finally, we let \(X_\varepsilon \) be the uniformization of X with uniformization center \(z_0\).

In specific cases one may want to consider how to optimally choose \(R_0\), and the corresponding \(C_d\), in the formula for \(\beta _0\). The factor \(\frac{17}{3}\) comes from various estimates leading up to the proof of Proposition 4.7, and is not likely to be optimal. The following example shows however that it is not too far from optimal and that it cannot be replaced by any constant \(<1\).

Example 4.4

Let X be the infinite regular K-ary metric tree, equipped with the Lebesgue measure \(\mu \), as in Björn–Björn–Gill–Shanmugalingam [8, Sect. 3]. Since it is a tree, any \(\varepsilon >0\) is allowed for uniformization.

If \(C_d(R)\) is the optimal doubling constant for radii \(\le R\), then a straightforward calculation shows that

and thus we are allowed, in this paper, to use any

In this specific case, it was shown in [8, Corollary 3.9] that \(\mu _\beta \) is globally doubling and supports a global 1-Poincaré inequality on \(X_\varepsilon \) whenever \(\beta > \log K\). For \(\beta \le \log K\), \(\mu _\beta (X_\varepsilon )= \infty \) and \(\mu _\beta \) cannot possibly be globally doubling on the bounded space \(X_\varepsilon \).
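The threshold \(\beta =\log K\) can be seen from a direct computation of the total mass. The sketch below rests on a modelling assumption that is not spelled out in the text: the root has K children, every other vertex has K children, and all edges have unit length, so that \(K^n\) edges join generation \(n-1\) to generation n. Under that assumption \(\mu _\beta (X_\varepsilon )\) is a geometric series with ratio \(Ke^{-\beta }\).

```python
import math

def edge_layer_mass(K, beta, n):
    """mu_beta-mass of the K**n unit edges joining generation n-1 to generation n."""
    return K ** n * (math.exp(-beta * (n - 1)) - math.exp(-beta * n)) / beta

def partial_mass(K, beta, generations):
    """mu_beta-mass of the ball of radius `generations` around the root."""
    return sum(edge_layer_mass(K, beta, n) for n in range(1, generations + 1))

def total_mass(K, beta):
    """Closed form of the geometric series; finite only when K*exp(-beta) < 1."""
    q = K * math.exp(-beta)
    if q >= 1.0:
        return math.inf
    return (math.exp(beta) - 1.0) / beta * q / (1.0 - q)
```

For \(\beta >\log K\) the partial sums converge to `total_mass(K, beta)`, while for \(\beta =\log K\) every layer contributes the same mass \((K-1)/\log K\), so the partial sums grow linearly in the number of generations.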

The following lemma gives us an estimate of \(\mu _\beta (B)\) for subWhitney balls B.

Lemma 4.5

Let \(x\in X\) and \(0<r\le \tfrac{1}{2} d_\varepsilon (x)\). Then

with comparison constants depending only on M, \(\varepsilon \), \(C_d\), \(R_0\) and \(\beta \).

Proof

By Lemma 2.8, we have for all \(y\in B_\varepsilon (x,r)\),

Moreover, Theorem 2.10 implies that

This yields

and similarly, \(\mu _\beta (B_\varepsilon (x,r)) \gtrsim \rho _\beta (x) \mu (B(x,C_1 r/\rho _\varepsilon (x)))\). Finally, Lemma 3.5 shows that the last two balls in X have measure comparable to \(\mu (B(x,r/\rho _\varepsilon (x)))\), which concludes the proof. \(\square \)
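To illustrate the estimate, one can test it on the real line from Example 4.2 with \(\mu ={\mathcal {L}}^1\). The sketch below assumes that the lemma reads \(\mu _\beta (B_\varepsilon (x,r))\simeq \rho _\beta (x)\mu (B(x,r/\rho _\varepsilon (x)))\), as the last step of the proof suggests. It works in the coordinate \(z=\Phi (x)\), where \(\rho _\varepsilon (x)=1-\varepsilon |z|\). The grid and the function names are illustrative.

```python
import math

def cumulative(z, eps, beta):
    """Signed mu_beta-mass between 0 and z in the coordinate z = Phi(x) of Example 4.2."""
    return math.copysign((1.0 - (1.0 - eps * abs(z)) ** (beta / eps)) / beta, z)

def ratio(z, r, eps, beta):
    """mu_beta(B_eps(x, r)) divided by rho_beta(x) * mu(B(x, r / rho_eps(x)))."""
    rho_eps = 1.0 - eps * abs(z)          # equals exp(-eps * |x|)
    rho_beta = rho_eps ** (beta / eps)    # equals exp(-beta * |x|)
    ball_mass = cumulative(z + r, eps, beta) - cumulative(z - r, eps, beta)
    return ball_mass / (rho_beta * 2.0 * r / rho_eps)

def worst_ratios(eps, beta, steps=200):
    """Smallest and largest ratio over a grid of subWhitney balls, r <= d_eps(x) / 2."""
    values = []
    for i in range(-steps + 1, steps):
        z = i / (steps * eps)
        d = 1.0 / eps - abs(z)
        for j in range(1, 11):
            values.append(ratio(z, 0.05 * j * d, eps, beta))
    return min(values), max(values)
```

On a subWhitney ball the weight \(\rho _\varepsilon \) varies by at most a factor between \(\tfrac{1}{2}\) and \(\tfrac{3}{2}\), so in this model case the ratio stays between \(2^{-|\beta /\varepsilon -1|}\) and \(2^{|\beta /\varepsilon -1|}\), uniformly in x and r.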

Remark 4.6

Lemma 4.5 implies that if \(\mu _\beta \) is globally doubling on \(X_\varepsilon \) then \(\mu \) is uniformly locally doubling on X, i.e. the converse of Theorem 1.1 (a) holds. Indeed, if \(0<r\le \frac{1}{4e\varepsilon }\) and \(x\in X\), then \(2r\rho _\varepsilon (x) \le \tfrac{1}{2} d_\varepsilon (x)\), by (2.3). Lemma 4.5, with r replaced by \(2r\rho _\varepsilon (x)\) and \(r\rho _\varepsilon (x)\), respectively, then gives

Similar arguments, combined with the arguments in the proof of Lemma 6.1, show that if \(\mu _\beta \) also supports a global p-Poincaré inequality on \(X_\varepsilon \) or \({\overline{X}}_\varepsilon \), then \(\mu \) supports a uniformly local p-Poincaré inequality on X, i.e. the converse of Theorem 1.1 (b) holds.

We shall now estimate \(\mu _\beta (B)\) for balls B centered at \(\partial _\varepsilon X\) in terms of the (essentially) largest Whitney ball contained in B. The existence of such balls is given by Lemma 4.8 below.

Proposition 4.7

Let \(\xi \in \partial _\varepsilon X\) and \(0<r\le 2{{\,\mathrm{diam}\,}}_\varepsilon X_\varepsilon \). Assume that \(a_0>0\) and \(z \in X\) are such that \(B_\varepsilon (z,a_0r)\subset B_\varepsilon (\xi ,r)\) and \(d_\varepsilon (z)\ge 2a_0 r\). Then,

where the comparison constants depend only on \(\delta \), M, \(\varepsilon \), \(C_d\), \(R_0\), \(\beta \) and \(a_0\).

Proof

For \(n=1,2,\ldots \) , define the boundary layers

Corollary 2.9 implies that for every \(x\in A_n\), either \(\varepsilon d(x,z)<1\) or

and hence \(\varepsilon d(x,z) < n+C\), where C depends only on \(\delta \), M, \(\varepsilon \) and \(a_0\).

Using Lemma 3.5 (b), we can thus cover each layer \(A_n\subset B(z,(n+C)/\varepsilon )\) by \(N_n\lesssim C_d^{14n/3\varepsilon R_0}\) balls \(B_{n,j}\) with centers in \(B(z,(n+C)/\varepsilon )\) and radius \(R_0\). Since X is geodesic, Lemma 3.5 (a) implies that each of these balls satisfies

Moreover, as in (4.4) we see that \(\rho _\beta (z) = \rho _\varepsilon (z)^{\beta /\varepsilon } \simeq (\varepsilon d_\varepsilon (z))^{\beta /\varepsilon } \simeq (\varepsilon r)^{\beta /\varepsilon }\) and

It thus follows that

and hence for \(\beta >\beta _0=17(\log C_d)/3R_0\),

Since \(a_0 r\le \tfrac{1}{2} d_\varepsilon (z)\), Lemma 4.5 implies that

By (2.3) we see that

and hence, by the doubling property for \(\mu \) on X,

\(\square \)

The following lemma shows how to pick z and \(a_0\) in Proposition 4.7.

Lemma 4.8

Let \(0<a_0< a:=\min \{\tfrac{1}{8},\frac{1}{6A}\}\), where \(A=A(\delta )\) is as in Theorem 2.6. Then for every \(x\in {\overline{X}}_\varepsilon \) and every \(0<r\le 2{{\,\mathrm{diam}\,}}_\varepsilon X_\varepsilon \) we can find a ball \(B_\varepsilon (z,a_0 r) \subset B_\varepsilon (x,r)\) such that \(d_\varepsilon (z)\ge 2a_0r\).

Proof

First, assume that \(x\in X_\varepsilon \). By Theorem 2.6, there is an A-uniform curve \(\gamma \) from x to \(z_0\), parametrized by arc length \(\mathrm{{d}}s_\varepsilon \). If \(l_\varepsilon (\gamma ) \ge \tfrac{2}{3}r\) then for \(z=\gamma (\tfrac{1}{3}r)\) we have

Thus, any \(a_0\le \frac{1}{6A}\) will do in this case. If \(l_\varepsilon (\gamma ) < \tfrac{2}{3}r \), then letting \(z=z_0\) yields

and for \(a_0 \le \tfrac{1}{8}\),

This proves the lemma for \(x\in X_\varepsilon \). For \(x\in \partial _\varepsilon X\) and any \(0<a_0<a\), choose \(r'=a_0r/a\) and \(x'\in X_\varepsilon \) sufficiently close to x so that, with the corresponding z,

\(\square \)
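The construction in the proof can be traced in the simplest case, the interval \((-1/\varepsilon ,1/\varepsilon )\) from Example 4.2. This is a sketch under the assumption that the uniform curve from x to \(z_0=0\) is the straight segment, so that \(A=1\) and \(a=\tfrac{1}{8}\). The function names are hypothetical.

```python
import math

def whitney_center(x, r):
    """Pick z as in the proof: move r/3 from x towards z_0 = 0, or take z = z_0
    when the segment from x to z_0 is shorter than 2r/3."""
    if abs(x) >= 2.0 * r / 3.0:
        return x - math.copysign(r / 3.0, x)
    return 0.0

def is_corkscrew(x, r, z, a0, eps):
    """Check B(z, a0*r) inside B(x, r) and d_eps(z) >= 2*a0*r in (-1/eps, 1/eps)."""
    dist_to_boundary = 1.0 / eps - abs(z)
    inside = abs(z - x) + a0 * r <= r
    return inside and dist_to_boundary >= 2.0 * a0 * r
```

With any \(a_0<\tfrac{1}{8}\), every center x in the interval and every radius \(0<r\le 4/\varepsilon \) (twice the diameter) should pass the check.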

Lemma 4.5 and Proposition 4.7 can be summarized in the following result, which roughly says that in \((X_\varepsilon ,\mu _\beta )\), the measure of every ball is comparable to the measure of the (essentially) largest Whitney ball contained in it.

Theorem 4.9

The measure \(\mu _\beta \) is globally doubling on \({\overline{X}}_\varepsilon \).

Moreover, with \(a_0\) and z provided by Lemma 4.8, we have for every \(x\in {\overline{X}}_\varepsilon \) and \(0<r\le 2{{\,\mathrm{diam}\,}}_\varepsilon X_\varepsilon \),

where the comparison constants depend only on \(\delta \), M, \(\varepsilon \), \(C_d\), \(R_0\), \(\beta \) and \(a_0\).

It follows directly that \(\mu _\beta \) is globally doubling also on \(X_\varepsilon \). The optimal doubling constants are the same, by Proposition 3.3 in Björn–Björn [7].

Proof

We start by proving the measure estimate (4.6). As \(a_0 r\le \tfrac{1}{2} d_\varepsilon (z)\), Lemma 4.5 applied to \(B_\varepsilon (z,a_0 r)\) implies that

If \(0<r\le \tfrac{1}{2} d_\varepsilon (x)\) then by (4.4), (4.5) and Lemma 2.8,

Lemma 3.5 then implies that

and another application of Lemma 4.5, this time to \(B_\varepsilon (x,r)\), proves (4.6) in this case.

If \(r\ge \tfrac{1}{2} d_\varepsilon (x)\) then \(B_\varepsilon (x,r) \subset B_\varepsilon (\xi ,3r)\) for some \(\xi \in \partial _\varepsilon X\). Proposition 4.7 (with \(a_0\) replaced by \(\frac{1}{3} a_0\)) then implies

which together with (4.7) proves (4.6) also in this case.

To conclude the doubling property, use the Whitney ball \(B_\varepsilon (z,a_0 r)\) for both \(B_\varepsilon (x,r)\) and \(B_\varepsilon (x,2r)\), with constants \(a_0\) and \(a'_0=\frac{1}{2} a_0\), respectively. Since

we have by (4.6), first used with \(a_0'\) and then with \(a_0\),

\(\square \)

We conclude this section with an estimate of the lower and upper dimensions for the measure \(\mu _\beta \) at \(\partial _\varepsilon X\).

Lemma 4.10

Let

Then for all \(\xi \in \partial _\varepsilon X\) and all \(0<r\le r'\le 2{{\,\mathrm{diam}\,}}_\varepsilon X_\varepsilon \),

with comparison constants depending only on \(\delta \), M, \(\varepsilon \), \(C_d\), \(R_0\), \(\beta \) and the constant \(a_0\) from Theorem 2.6.

Note that  , because \(\beta > \beta _0\), where \(\beta _0\) is as in (4.3).


Proof

Proposition 4.7 and Lemma 4.8 imply that there are \(z,z' \in X\) such that

where

From Corollary 2.9 we conclude that if \(\varepsilon d(z,z') \ge 1\) then

and hence \(d(z,z') \le \frac{1}{\varepsilon }(C+\log (r'/r))\) holds regardless of the value of \(d(z,z')\). Lemma 3.5 (a) with \(n = \lceil d(z,z')/R_0 \rceil \) (the smallest integer \(\ge d(z,z')/R_0\)) then implies that

which together with (4.8) proves the first inequality in the lemma. The second inequality follows similarly by interchanging z and \(z'\) in (4.9). \(\square \)

5 Upper Gradients and Poincaré Inequalities

We assume in this section that \(1 \le p<\infty \) and that \(Y=(Y,d,\nu )\) is a metric space equipped with a complete Borel measure \(\nu \) such that \(0<\nu (B)<\infty \) for all balls \(B \subset Y\).

We follow Heinonen and Koskela [29] in introducing upper gradients as follows (in [29] they are referred to as very weak gradients).

Definition 5.1

A Borel function \(g:Y \rightarrow [0,\infty ]\) is an upper gradient of an extended real-valued function u on Y if for all arc length parametrized curves \(\gamma : [0,l_{\gamma }] \rightarrow Y\),

$$\begin{aligned} |u(\gamma (0))-u(\gamma (l_\gamma ))|\le \int _\gamma g\,\mathrm{{d}}s, \end{aligned} \tag{5.1}$$

where we follow the convention that the left-hand side is considered to be \(\infty \) whenever at least one of the terms therein is \(\pm \infty \). If g is a nonnegative measurable function on Y and if (5.1) holds for p-almost every curve (see below), then g is a p-weak upper gradient of u.

We say that a property holds for p-almost every curve if it fails only for a curve family \(\Gamma \) with zero p-modulus, i.e. there is a Borel function \(0\le \rho \in L^p(Y)\) such that \(\int _\gamma \rho \,\mathrm{{d}}s=\infty \) for every curve \(\gamma \in \Gamma \). The p-weak upper gradients were introduced in Koskela–MacManus [34]. It was also shown therein that if \(g \in L^p_{\mathrm{loc}}(Y)\) is a p-weak upper gradient of u, then one can find a sequence \(\{g_j\}_{j=1}^\infty \) of upper gradients of u such that \(\Vert g_j-g\Vert _{L^p(Y)} \rightarrow 0\).

If u has an upper gradient in \(L^p_{\mathrm{loc}}(Y)\), then it has a minimal p-weak upper gradient \(g_u \in L^p_{\mathrm{loc}}(Y)\) in the sense that for every p-weak upper gradient \(g \in L^p_{\mathrm{loc}}(Y)\) of u we have \(g_u \le g\) a.e., see Shanmugalingam [37] (or [5] or [30]). The minimal p-weak upper gradient is well-defined up to a set of measure zero.

Definition 5.2

Y (or \(\nu \)) supports a global p-Poincaré inequality if there exist constants \(\lambda \ge 1\) (called dilation) and \(C_{\mathrm{PI}}>0\) such that for all balls \(B \subset Y\), all bounded measurable functions u on Y and all upper gradients g of u,

where \(u_B:=\frac{1}{\nu (B)}\int _B u\,\mathrm{{d}}\nu \).

If this holds only for balls B of radii \(\le R_0\), then we say that \(\nu \) supports a p-Poincaré inequality for balls of radii at most \(R_0\), and also that Y (or \(\nu \)) supports a uniformly local p-Poincaré inequality.
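As a concrete instance, the Euclidean line with the Lebesgue measure supports a global p-Poincaré inequality with dilation 1. The sketch below is an illustration only. It assumes that the inequality is normalized as \(\frac{1}{\nu (B)}\int _B |u-u_B|\,\mathrm{{d}}\nu \le C_{\mathrm{PI}} r_B \bigl (\frac{1}{\nu (\lambda B)}\int _{\lambda B} g^p\,\mathrm{{d}}\nu \bigr )^{1/p}\) with \(r_B\) the radius of B, takes \(g=|u'|\) as upper gradient, and uses the non-optimal constant \(C_{\mathrm{PI}}=2\), which follows from \(|u(x)-u(y)|\le \int _B |u'|\,\mathrm{{d}}t\) for \(x,y\in B\).

```python
import math

def average(f, a, b, n=4000):
    """Mean value of f over [a, b] with respect to Lebesgue measure (midpoint rule)."""
    h = (b - a) / n
    return sum(f(a + (k + 0.5) * h) for k in range(n)) / n

def poincare_sides(u, du, center, radius, p=1.0):
    """Return (lhs, rhs) of the assumed p-Poincare inequality on the ball
    B = (center - radius, center + radius), with g = |u'|, dilation 1, C_PI = 2."""
    a, b = center - radius, center + radius
    u_b = average(u, a, b)
    lhs = average(lambda t: abs(u(t) - u_b), a, b)
    rhs = 2.0 * radius * average(lambda t: abs(du(t)) ** p, a, b) ** (1.0 / p)
    return lhs, rhs
```

For \(u(t)=\sin 3t\), calling `poincare_sides(lambda t: math.sin(3*t), lambda t: 3*math.cos(3*t), c, r, p)` should return a left-hand side that does not exceed the right-hand side for every center, radius and \(p\ge 1\). The case \(p>1\) follows from \(p=1\) by Hölder's inequality.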

Multiplying bounded measurable functions by suitable cut-off functions and truncating integrable functions shows that one may replace “bounded measurable” by “integrable” in the definition. On the other hand, the proofs of [30, Lemma 8.1.5 and Theorem 8.1.53] show that (5.2) can equivalently be required for all (not necessarily bounded) measurable functions u on \(\lambda B\) and all upper (or p-weak upper) gradients g of u. See also [5, Proposition 4.13], [30, Theorem 8.1.49], Hajłasz–Koskela [26, Theorem 3.2], Heinonen–Koskela [29, Lemma 5.15] and Keith [32, Theorem 2] for further equivalent versions.

Theorem 5.3

Assume that \(\nu \) is doubling and supports a p-Poincaré inequality, both properties holding for balls of radii at most \(R_0\). Also assume that Y is L-quasiconvex and that \(R_1 >0\).

Then \(\nu \) supports a p-Poincaré inequality, with dilation constant L, for balls of radii at most \(R_1\).

The proof below can be easily adapted to show that the same is true for so-called (q, p)-Poincaré inequalities. The following examples show that the quasiconvexity assumption cannot be dropped even if one assumes that \(\nu \) is globally doubling, and that one cannot replace L in the conclusion by the dilation constant in the assumed p-Poincaré inequality, nor any fixed multiple of it.

Example 5.4

Let \(X=([0,\infty ) \times \{0,1\}) \cup (\{0\} \times [0,1])\) equipped with the Euclidean distance and the Lebesgue measure \({\mathcal {L}}^1\). Then X is a connected nonquasiconvex space and \({\mathcal {L}}^1\) is globally doubling on X. However, \({\mathcal {L}}^1\) supports a p-Poincaré inequality on X, \(p \ge 1\), for balls of radii at most \(R_0\) if and only if \(R_0 \le 1\). In this case one can choose the dilation constant \(\lambda =1\). This shows that the quasiconvexity assumption in Theorem 5.3 cannot be dropped.

Example 5.5

For \(a \ge 1\), let \(X=([0,a] \times \{0,1\}) \cup (\{0\} \times [0,1])\), equipped with the Euclidean distance and the Lebesgue measure \({\mathcal {L}}^1\). Then X is a connected \((2a+1)\)-quasiconvex space and \({\mathcal {L}}^1\) is globally doubling on X. In this case, \({\mathcal {L}}^1\) supports a p-Poincaré inequality on X, \(p \ge 1\), for balls of radii at most \(R_0\) for any \(R_0>0\), with the optimal dilation

This shows that the dilation constant L in the conclusion of Theorem 5.3 cannot in general be replaced by the dilation constant in the p-Poincaré inequality assumed for balls \(\le R_0\), nor any fixed multiple of it.

Proof of Theorem 5.3

The arguments are similar to the proof of Theorem 4.4 in Björn–Björn [6]. Let \(C_d\), \(C_{\mathrm{PI}}\) and \(\lambda \) be the constants in the doubling property and the p-Poincaré inequality for balls of radii \(\le R_0\). Let B be a ball of radius \(r_B\le \tfrac{5}{2} LR_1=:R_2\). We can assume that \(r_B>R_0\).

First, note that the conclusions in the first paragraph of the proof in [6] with \(B_0=B\), \(\sigma =L\), \(r'=R_0/\lambda \) and \(\mu \) replaced by \(\nu \), follow directly from our assumptions, without appealing to Lemma 4.7 or Proposition 4.8 in [6]. This, together with the use of Lemma 3.4, explains why there is no need to assume properness here.

By Lemma 3.5, \(\nu \) is doubling for balls of radii \(\le 7LR_2\), with doubling constant \(C_d'\), depending only on \(C_d\) and \(LR_2/R_0\). Hence, using Lemma 3.4, we can cover B by at most \((C_d')^{7\lceil \log _7 (R_2/r')\rceil }\) balls \(B'_j\) with radius \(r'\). Their centers can then be connected by L-quasiconvex curves. As in the proof of [6, Theorem 4.4], we then construct along these curves a chain \(\{B_j\}_{j=1}^N\) of balls of radius \(r'\), covering B and with a uniform bound on N. It follows that the constant \(C''\) in the proof of [6, Theorem 4.4] only depends on \(C_d\), \(C_{\mathrm{PI}}\), \(\lambda \), L and \(R_2/R_0\). Thus we conclude from the last but one displayed formula in the proof of [6, Theorem 4.4] (with \(B_0=B\)) that \(\nu \) supports a p-Poincaré inequality for balls of radii at most \(R_2\), with dilation 2L.

That we can replace 2L by L now follows from [6, Theorem 5.1], provided that we decrease the bound on the radii to \(R_1\). \(\square \)

6 Poincaré Inequality on \(X_\varepsilon \)

In this section, we assume that X is a locally compact roughly starlike Gromov \(\delta \)-hyperbolic space equipped with a Borel measure \(\mu \). We also fix a point \(z_0 \in X\), let M be the constant in the roughly starlike condition with respect to \(z_0\), and assume that

Finally, we let \(X_\varepsilon \) be the uniformization of X with uniformization center \(z_0\).

The following lemma shows that the p-Poincaré inequality holds for \(\mu _\beta \) on sufficiently small subWhitney balls in \(X_\varepsilon \). Recall that \(\beta _0= (17\log C_d)/3R_0\) as in (4.3).

Lemma 6.1

Assume that \(\mu \) is doubling, with constant \(C_d\), and supports a p-Poincaré inequality, with constants \(C_{\mathrm{PI}}\) and \(\lambda \), both properties holding for balls of radii at most \(R_0\). Let \(\beta > \beta _0\).

Then there exists \(c_0>0\), depending only on \(\delta \), M, \(\varepsilon \), \(R_0\) and \(\lambda \), such that for all \(x\in X_\varepsilon \) and all \(0<r\le c_0 d_\varepsilon (x)\), the p-Poincaré inequality for \(\mu _\beta \) holds on \(B_\varepsilon =B_\varepsilon (x,r)\), i.e. for all bounded measurable functions u and upper gradients \(g_\varepsilon \) of u on \(X_\varepsilon \) we have

where \(\tau =C_2\lambda /C_1\), with \(C_1\) and \(C_2\) from Theorem 2.10, and C depends only on \(\delta \), M, \(\varepsilon \), \(C_d\), \(R_0\), \(\beta \), \(\lambda \) and \(C_{\mathrm{PI}}\).

Proof

Theorem 2.10 shows that if \(c_0\le C_1/2C_2\lambda \) then

Moreover, as in (4.4) we have for all \(y\in \tau B_\varepsilon \),

Hence, by Theorem 4.9, all the balls in (6.1) have comparable \(\mu _\beta \)-measures, as well as comparable \(\mu \)-measures.

Let u be a bounded measurable function on \(X_\varepsilon \), or equivalently on X, and let \(g_\varepsilon \) be an upper gradient of u on \(X_\varepsilon \). Since the arc length parametrization \(\mathrm{{d}}s_\varepsilon \) with respect to \(d_\varepsilon \) satisfies \(\mathrm{{d}}s_\varepsilon = \rho _\varepsilon \,\mathrm{{d}}s\), we conclude that for all compact rectifiable curves \(\gamma \) in \(X_\varepsilon \),

and thus \(g:= g_\varepsilon \rho _\varepsilon \) is an upper gradient of u on X. (Note that a compact curve in X is rectifiable with respect to d if and only if it is rectifiable with respect to \(d_\varepsilon \).) If \(c_0\le R_0\varepsilon /C_2 (2e^{\varepsilon _0M}-1)\), then by Lemma 2.8,

and thus the p-Poincaré inequality holds on B. Using (6.2) we then obtain

Finally, a standard argument based on the triangle inequality makes it possible to replace \(u_{B,\mu }\) on the left-hand side by \(u_{B_\varepsilon ,\mu _\beta }\). \(\square \)

Bonk–Heinonen–Koskela [14, Sect. 6] proved that if \(\Omega \) is a locally compact uniform space equipped with a measure \(\mu \) such that \((\Omega ,\mu )\) is uniformly Q-Loewner in subWhitney balls, then \(\Omega \) is globally Q-Loewner, where \(Q>1\). If \(\mu \) is locally doubling with \(\mu (B(x,r)) \gtrsim r^Q\) whenever B(x, r) is a subWhitney ball, then the local Q-Loewner condition is equivalent to an analogous local Q-Poincaré inequality, see [29, Theorems 5.7 and 5.9].

We have shown above that the measure \(\mu _\beta \) on the uniformized space \(X_\varepsilon \) is globally doubling and supports a p-Poincaré inequality for subWhitney balls. Following the philosophy of [14, Theorem 6.4], the next theorem demonstrates that the p-Poincaré inequality is actually global on \(X_\varepsilon \).

Theorem 6.2

Assume that \(\mu \) is doubling and supports a p-Poincaré inequality on X, both properties holding for balls of radii at most \(R_0\). Let \(\beta > \beta _0\) and \(\lambda >1\).

Then \(\mu _\beta \) is globally doubling and supports a global p-Poincaré inequality on \({\overline{X}}_\varepsilon \) with dilation 1, and on \(X_\varepsilon \) with dilation \(\lambda \).

If \(X_\varepsilon \) happens to be geodesic, then it follows from the proof below that we can choose the dilation constant \(\lambda =1\) also on \(X_\varepsilon \).

Proof

The global doubling property follows from Theorem 4.9, both on \(X_\varepsilon \) and \({\overline{X}}_\varepsilon \). Since \(X_\varepsilon \) is a length space and Lemma 6.1 shows that the p-Poincaré inequality on \(X_\varepsilon \) holds for subWhitney balls, the global p-Poincaré inequality on \(X_\varepsilon \), with dilation \(\lambda >1\), follows from the following proposition. Moreover, as \({\overline{X}}_\varepsilon \) is geodesic, the global p-Poincaré inequality on \({\overline{X}}_\varepsilon \), with dilation 1, also follows from the following proposition. \(\square \)

Proposition 6.3

Let \((\Omega ,d)\) be a bounded A-uniform space equipped with a globally doubling measure \(\nu \), which supports a p-Poincaré inequality for all subWhitney balls corresponding to some fixed \(0<c_0<1\). Assume that \(\Omega \) is L-quasiconvex. Then \(\nu \) supports a global p-Poincaré inequality on \(\Omega \) with dilation L.

If moreover the completion \({\overline{\Omega }}\) is \(L'\)-quasiconvex, then \(\nu \), extended by \(\nu (\partial \Omega )=0\), supports a global p-Poincaré inequality on \({\overline{\Omega }}\) with dilation \(L'\).

Recall that \(\Omega \) is always A-quasiconvex by the A-uniformity condition, but that L may be smaller than A. Also, \({\overline{\Omega }}\) is always L-quasiconvex, but it is possible to have \(L'<L\).

Proof

Let \(x_0\in \Omega \), \(0<r\le 2{{\,\mathrm{diam}\,}}\Omega \) and \(B_0=B(x_0,r)\) be fixed. The balls in this proof are with respect to \(\Omega \). It is well known, and easily shown using the arguments in the proof of Lemma 4.8, that uniform spaces satisfy the corkscrew condition, i.e. there exists \(a_0\) (independent of \(x_0\) and r) and z such that \(d_\Omega (z)\ge 2a_0r\) and \(B(z,a_0r)\subset B_0\), cf. Björn–Shanmugalingam [13, Lemma 4.2]. With \(c_0\) as in the assumptions of the proposition, let

$$\begin{aligned} r_0=\frac{a_0 c_0 r}{8A}. \end{aligned}$$

Since \(\Omega \) is A-uniform, [13, Lemma 4.3] with \(\rho _0=r_0\) and \(\sigma =1/c_0\) provides us for every \(x\in B_0\) with a chain

$$\begin{aligned} \mathcal{B}_x=\{B_{i,j}=B(x_{i,j},r_i): i=0,1,\ldots ,\ j=0,1,\ldots ,m_i\}, \quad \text {where } r_i=2^{-i}r_0, \end{aligned}$$

of balls connecting the ball \(B_{0,0} := B(z,r_0)\) to x as follows:

(a) For all i and j we have \(m_i \le Ar/r_0 = 8A^2/a_0 c_0\),

$$\begin{aligned} 4r_i \le c_0 d_\Omega (x_{i,j}) \quad \text {and} \quad d(x_{i,j},x) \le 2^{-i}A d(x,z) < 2^{-i}Ar. \end{aligned}$$

(b) For large i, we have \(m_i=0\) and the balls \({B_{i,0}}\) are centered at x.

(c) The balls are ordered lexicographically, i.e. \({B_{i,j}}\) comes before \(B_{i',j'}\) if and only if \(i<i'\), or \(i=i'\) and \(j<j'\). If \(B^*\) denotes the immediate successor of \(B\in \mathcal{B}_x\) then \(B \cap B^*\) is nonempty.

Let u be a bounded measurable function on \(\Omega \) and g be an upper gradient of u in \(\Omega \). If \(x\in B_0\) is a Lebesgue point of u then

where \(u_{B} = u_{B,\nu }\) and similarly for other balls. Moreover, \(B^*\subset 3B\) and

As \(3r_i \le c_0 d_\Omega (x_{i,j})\) and the radii of B and \(B^*\) differ by at most a factor 2, an application of the p-Poincaré inequality on 3B shows that

where \(r_B\) is the radius of B and \(\lambda \) is the dilation constant in the assumed p-Poincaré inequality for subWhitney balls. The difference \(|u_{3B} - u_{B}|\) is estimated in the same way. Hence, inserting these estimates into (6.4),

We now wish to estimate the measure of level sets of the function \(x\mapsto |u(x)-u_{B_{0,0}}|\) in \(B_0\). Assume that \(|u(x)-u_{B_{0,0}}| \ge t\) and write \(t= C_\alpha N t \sum _{i=0}^{\infty } 2^{-i\alpha }\), where \(\alpha \in (0,1)\) will be chosen later, and \(N\le 1+Ar/r_0 = 1+8A^2/a_0 c_0 \) is the maximal number of balls in \(\mathcal{B}_x\) with the same radius. Then

Hence, there exists \(B_x = B(x_{i,j},r_i) \in \mathcal{B}_x\) such that

We have \(2^{-i} = r_i/r_0 = 8 Ar_i/a_0 c_0 r\), and inserting this into the last inequality yields

As \(\nu \) is globally doubling, there exists \(s>0\) independent of \(B_x\) such that

see e.g. [5, Lemma 3.3] or [30, (3.4.9)]. Hence

and choosing \(\alpha \in (0,1)\) so that \(\theta := 1- (1-\alpha )p/s \in (0,1)\), we obtain

Let \(E_t = \{x \in B_0: |u(x)-u_{B_{0,0}}| \ge t\}\) and \(F_t\) be the set of all points in \(E_t\) which are Lebesgue points of u. The global doubling property of \(\nu \) guarantees that a.e. x is a Lebesgue point of u, see Heinonen [27, Theorem 1.8]. By the above, for every \(x\in F_t\) there exists \(B_x \in \mathcal{B}_x\) satisfying (6.5). Note also that by construction of the chain, we have \(x\in B'_x:=8(a_0 c_0)^{-1} A^2 B_x\). The balls \(\{B'_x\}_{x\in F_t}\) therefore cover \(F_t\). The 5-covering lemma (Theorem 1.2 in Heinonen [27]) provides us with a pairwise disjoint collection \(\{\lambda {B'_{x_i}}\}_{i=1}^{\infty }\) such that the union of all balls \(5\lambda {B'_{x_i}}\) covers \(F_t\). Then the balls \(3\lambda {B_{x_i}}\subset \lambda {B'_{x_i}}\) are also pairwise disjoint and the global doubling property of \(\nu \), together with (6.5), yields

where \(\Lambda \) depends only on A, \(\lambda \), \(a_0\) and \(c_0\). Lemma 4.22 in Heinonen [27], which can be proved using the Cavalieri principle, now implies that

and a standard argument based on the triangle inequality allows us to replace \(u_{B_{0,0}}\) by \(u_{B_0}\).

Since \(\Omega \) is L-quasiconvex, it follows from [5, Theorem 4.39] that the dilation \(\Lambda \) in the obtained global p-Poincaré inequality can be replaced by L.

Finally, by Proposition 7.1 in Aikawa–Shanmugalingam [1] (or the proof above applied within \({\overline{\Omega }}\) and with \(x_0 \in {\overline{\Omega }}\)), \(\nu \) supports a global p-Poincaré inequality, where, again using [5, Theorem 4.39], the dilation constant can be chosen to be \(L'\). \(\square \)

7 Hyperbolization

We assume in this section that \((\Omega ,d)\) is a noncomplete L-quasiconvex space which is open in its completion \({\overline{\Omega }}\), and let \(\partial \Omega \) be its boundary within \({\overline{\Omega }}\).

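We define the quasihyperbolic metric on \(\Omega \) by

$$\begin{aligned} k(x,y)=\inf _\gamma \int _\gamma \frac{\mathrm{{d}}s}{d_\Omega (\gamma (s))}, \end{aligned}$$

where \(d_\Omega (x)={{\,\mathrm{dist}\,}}(x,\partial \Omega )\), \(\mathrm{{d}}s\) is the arc length parametrization of \(\gamma \), and the infimum is taken over all rectifiable curves in \(\Omega \) connecting x to y. It follows that \((\Omega ,k)\) is a length space. Balls with respect to the quasihyperbolic metric k will be denoted by \(B_k\).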

Even though our main interest is in hyperbolizing uniform spaces, the quasihyperbolic metric makes sense in greater generality. In fact, the results in this section hold also if we let \(\Omega \varsubsetneq Y\) be an L-quasiconvex open subset of a (not necessarily complete) metric space Y and the quasihyperbolic metric k is defined using \(d_\Omega (x)={{\,\mathrm{dist}\,}}(x,Y \setminus \Omega )\).

If \(\Omega \) is a locally compact uniform space, then Theorem 3.6 in Bonk–Heinonen–Koskela [14] shows that the space \((\Omega ,k)\) is a proper geodesic Gromov hyperbolic space. Moreover, if \(\Omega \) is bounded, then \((\Omega ,k)\) is roughly starlike.

As described in the introduction, the operations of uniformization and hyperbolization are mutually opposite, by Bonk–Heinonen–Koskela [14, the discussion before Proposition 4.5].

Lemma 7.1

Let \(x,y \in \Omega \). Then the following are true:

Moreover,

If \(\Omega \) is A-uniform it is possible to get an upper bound similar to the one in (7.1) also when \(d(x,y) \le \frac{1}{2} d_\Omega (x)\), albeit with a little more complicated expression for the constant. As we will not need such an estimate, we leave it to the interested reader to deduce such a bound.

Proof

Without loss of generality we assume that \(x \ne y\).

Assume first that \(d(x,y) \le d_\Omega (x)\). Let \(\gamma :[0,l_\gamma ] \rightarrow \Omega \) be a curve from x to y. All curves in this proof will be arc length parametrized rectifiable curves in \(\Omega \). Then \(l_\gamma \ge d(x,y)\) and

Taking infimum over all such \(\gamma \) shows that \(k(x,y) \ge d(x,y)/2d_\Omega (x)\).

Suppose next that \(d(x,y) \ge d_\Omega (x)\). Let \(\gamma :[0,l_\gamma ] \rightarrow \Omega \) be a curve from x to y. Then \(l_\gamma \ge d(x,y) \ge d_\Omega (x)\) and

Taking infimum over all such \(\gamma \) shows that \(k(x,y) \ge \tfrac{1}{2}\).

Assume finally that \(d(x,y) \le d_\Omega (x)/2L\). As \(\Omega \) is L-quasiconvex, there is a curve \(\gamma :[0,l_\gamma ] \rightarrow \Omega \) from x to y with length \(l_\gamma \le L d(x,y) \le \frac{1}{2} d_\Omega (x)\). Then

The ball inclusions now follow directly from this. \(\square \)
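The three estimates used in the proof can be tested on the interval \(\Omega =(-1,1)\), where \(L=1\), \(d_\Omega (t)=1-|t|\) and k is explicit by Example 4.3. This is a sketch rather than a restatement of the lemma: the two lower bounds are the ones obtained in the proof, while the upper bound \(k(x,y)\le 2Ld(x,y)/d_\Omega (x)\) for \(d(x,y)\le d_\Omega (x)/2L\) is an assumption about what the final step of the proof yields.

```python
import math

def k_dist(x, y):
    """Quasihyperbolic distance in Omega = (-1, 1), where d_Omega(t) = 1 - |t|."""
    psi = lambda t: math.copysign(-math.log(1.0 - abs(t)), t)
    return abs(psi(x) - psi(y))

def check_lemma_bounds(x, y, L=1.0):
    """Test the three estimates for one pair of points; L = 1 on an interval."""
    d, d_omega = abs(x - y), 1.0 - abs(x)
    k = k_dist(x, y)
    tol = 1e-12
    if d <= d_omega and k < d / (2.0 * d_omega) - tol:
        return False  # lower bound for nearby points fails
    if d >= d_omega and k < 0.5 - tol:
        return False  # lower bound for distant points fails
    if d <= d_omega / (2.0 * L) and k > 2.0 * L * d / d_omega + tol:
        return False  # assumed upper bound fails
    return True
```

Sampling pairs of points in \((-1,1)\) and calling `check_lemma_bounds(x, y)` should return `True` in every case.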

We shall now equip \((\Omega ,k)\) with a measure determined by the original measure \(\mu \) on \(\Omega \). As before, for the results in this section it will be enough to assume that \(\Omega \) is quasiconvex.

Definition 7.2

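Let \(\Omega \) be equipped with a Borel measure \(\mu \). For measurable \(A\subset \Omega \) and \(\alpha >0\), let

$$\begin{aligned} \mu ^\alpha (A)=\int _A \frac{\mathrm{{d}}\mu (x)}{d_\Omega (x)^\alpha }. \end{aligned}$$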

Proposition 7.3

Assume that \(\mu \) is globally doubling in \((\Omega ,d)\) with doubling constant \(C_\mu \). Then \(\mu ^\alpha \) is doubling for \(B_k\)-balls of radii at most \(R_0=\frac{1}{8}\), with doubling constant \(C_d=4^\alpha C_\mu ^m\), where \(m=\lceil \log _2 8L \rceil \).

Moreover, if \(R_1 >0\), then \(\mu ^\alpha \) is doubling for \(B_k\)-balls of radii at most \(R_1\).

Proof

Let \(x \in \Omega \), \(r \le \frac{1}{8}\), \(B_k=B_k(x,r)\) and \(B=B(x,r d_\Omega (x))\). By Lemma 7.1,

and hence, again using Lemma 7.1,

As \((\Omega ,k)\) is a length space, Lemma 3.5 shows that \(\mu ^\alpha \) is doubling for \(B_k\)-balls of radii at most \(R_1\) for any \(R_1>0\). \(\square \)
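The doubling constant in the proposition can be compared with an explicit case. The sketch below takes \(\Omega =(-1,1)\) with the Lebesgue measure, so that \(C_\mu =2\) and \(L=1\), and assumes that \(\mathrm{{d}}\mu ^\alpha =d_\Omega ^{-\alpha }\,\mathrm{{d}}\mu \). In the coordinate \(s=\Psi (t)\) of Example 4.3, quasihyperbolic balls are intervals and \(\mu ^\alpha \) has density \(e^{(\alpha -1)|s|}\). The function names are illustrative.

```python
import math

def doubling_constant(alpha, c_mu, L):
    """C_d = 4**alpha * C_mu**m with m = ceil(log2(8L)), as stated in Proposition 7.3."""
    m = math.ceil(math.log2(8.0 * L))
    return 4.0 ** alpha * c_mu ** m

def mu_alpha_k_ball(s0, r, alpha):
    """mu^alpha-mass of the k-ball of radius r centered at s0 (in the coordinate s)."""
    def cumulative(s):
        c = alpha - 1.0
        if abs(c) < 1e-12:
            return s  # density is identically 1 when alpha = 1
        return math.copysign(math.expm1(c * abs(s)) / c, s)
    return cumulative(s0 + r) - cumulative(s0 - r)
```

For radii \(r\le \tfrac{1}{8}\) the ratio `mu_alpha_k_ball(s0, 2*r, alpha) / mu_alpha_k_ball(s0, r, alpha)` is at most \(2e^{3|\alpha -1|/8}\) in this example, well below `doubling_constant(alpha, 2.0, 1.0)`. This is consistent with the proposition, and it shows that the stated constant is far from sharp here.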

Proposition 7.4

Assume that \((\Omega ,d)\) is equipped with a globally doubling measure \(\mu \) supporting a global p-Poincaré inequality with dilation \(\lambda \) and \(p \ge 1\). Let \(\alpha >0\) and \(R_1>0\). Then \((\Omega ,k)\), equipped with the measure \(\mu ^\alpha \), supports a p-Poincaré inequality for balls of radii at most \(R_1\) with dilation L and the other Poincaré constant depending only on L, \(R_1\) and the global doubling and Poincaré constants.

Proof

Let u be a bounded measurable function on \(\Omega \) and \(\hat{g}\) be an upper gradient of u with respect to k. Since the arc length parametrization \(\mathrm{{d}}s_k\) with respect to k satisfies

we conclude that

and thus \(g(z):= \hat{g}(z)/d_\Omega (z)\) is an upper gradient of u with respect to d, see the proof of Lemma 6.1 for further details.

Next, let \(x\in \Omega \), \(0<r\le R_0:=1/8\lambda L\), \(B_k=B_k(x,r)\) and \(B=B(x,2r d_\Omega (x))\). We see, by Lemma 7.1, that

where all the above balls have comparable \(\mu \)-measures, as well as comparable \(\mu ^\alpha \)-measures. Note that \(d_\Omega (z) \simeq d_\Omega (x)\) for all \(z \in 4\lambda L B_k\). Thus,

A standard argument based on the triangle inequality makes it possible to replace \(u_{B,\mu }\) on the left-hand side by \(u_{B_k,\mu ^\alpha }\), and thus \((\Omega ,k)\), equipped with \(\mu ^\alpha \), supports a p-Poincaré inequality for balls of radii \(\le R_0\), with dilation \(4\lambda L\). The conclusion now follows from Theorem 5.3. \(\square \)

Remark 7.5

Let X be a Gromov hyperbolic space, equipped with a measure \(\mu \), and consider its uniformization \(X_\varepsilon \), together with the measure \(\mu _\beta \), \(\beta >0\), as in Definition 4.1. With \(\alpha =\beta /\varepsilon \), it is then easily verified that the pull-back to X of the measure \((\mu _\beta )^\alpha \), defined on the hyperbolization \((X_\varepsilon ,k)\) of \(X_\varepsilon \), is comparable to the original measure \(\mu \).
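A sketch of this verification, under assumptions that are not restated in this section: that Definition 4.1 reads \(\mathrm{{d}}\mu _\beta = \rho _\beta \,\mathrm{{d}}\mu \) with \(\rho _\beta (x)=e^{-\beta d(x,z_0)}\) for the uniformization center \(z_0\), that \(\mu ^\alpha \) has density \(d_\Omega ^{-\alpha }\) with respect to \(\mu \), and that the distance \(d_\varepsilon (x)\) from x to the boundary of \(X_\varepsilon \) satisfies \(d_\varepsilon (x)\simeq \rho _\varepsilon (x)/\varepsilon \). Then, with \(\alpha =\beta /\varepsilon \),

$$\begin{aligned} \mathrm{{d}}(\mu _\beta )^\alpha = d_\varepsilon ^{-\alpha } \rho _\beta \,\mathrm{{d}}\mu \simeq \biggl (\frac{\rho _\varepsilon }{\varepsilon }\biggr )^{-\beta /\varepsilon } \rho _\beta \,\mathrm{{d}}\mu = \varepsilon ^{\alpha } e^{\beta d(x,z_0)} e^{-\beta d(x,z_0)} \,\mathrm{{d}}\mu = \varepsilon ^{\alpha } \,\mathrm{{d}}\mu , \end{aligned}$$

so the exponential weights cancel exactly when \(\alpha =\beta /\varepsilon \), and the comparison constants depend only on \(\varepsilon \), \(\alpha \) and the constants in \(d_\varepsilon \simeq \rho _\varepsilon /\varepsilon \).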

8 An Indirect Product of Gromov Hyperbolic Spaces

We assume in this section that X and Y are two locally compact roughly starlike Gromov \(\delta \)-hyperbolic spaces. We fix two points \(z_X \in X\) and \(z_Y \in Y\), and let M be a common constant for the roughly starlike conditions with respect to \(z_X\) and \(z_Y\). We also assume that \(0 < \varepsilon \le \varepsilon _0(\delta )\) and that \(z_X\) and \(z_Y\) serve as centers for the uniformizations \(X_\varepsilon \) and \(Y_\varepsilon \).

In general, the Cartesian product \(X\times Y\) of two Gromov hyperbolic spaces X and Y need not be Gromov hyperbolic; for example, \({\mathbf {R}}\times {\mathbf {R}}\) is not Gromov hyperbolic. In this section, we shall construct an indirect product metric on \(X\times Y\) that does give us a Gromov hyperbolic space, namely we set \(X\times _\varepsilon Y\) to be the Gromov hyperbolic space \((X_\varepsilon \times Y_\varepsilon ,k)\). To do so, we first need to show that the Cartesian product of two uniform spaces, equipped with the sum of their metrics, is a uniform domain. This can be proved using Theorems 1 and 2 in Gehring–Osgood [22] together with Proposition 2.14 in Bonk–Heinonen–Koskela [14], but this would result in a highly nonoptimal uniformity constant. We instead give a more self-contained proof that also yields a better estimate of the uniformity constant for the Cartesian product.
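To see concretely why \({\mathbf {R}}\times {\mathbf {R}}\) fails to be Gromov hyperbolic, one can use the following sketch. It assumes the Euclidean metric on the product (the text does not fix one) and the thin-triangle formulation of hyperbolicity, in which each side of a geodesic triangle lies in the \(\delta \)-neighborhood of the union of the other two sides. Consider the triangle with vertices (0, 0), (n, 0) and (0, n), and let \(p=(\tfrac{n}{2},\tfrac{n}{2})\) be the midpoint of the side from (n, 0) to (0, n). Then

$$\begin{aligned} {{\,\mathrm{dist}\,}}\bigl (p,[(0,0),(n,0)]\cup [(0,0),(0,n)]\bigr ) = \frac{n}{2}, \end{aligned}$$

so this triangle is not \(\delta \)-thin once \(n>2\delta \), and no single \(\delta \) works for all n.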

Example 8.1

Recall that the uniformization \({\mathbf {R}}_\varepsilon \) of the hyperbolic 1-dimensional space \({\mathbf {R}}\) is isometric to \((-\tfrac{1}{\varepsilon },\tfrac{1}{\varepsilon })\), see Example 4.2. Hence, for all \(\varepsilon >0\), \({\mathbf {R}}_\varepsilon \times {\mathbf {R}}_\varepsilon \) is a planar square region, which is biLipschitz equivalent to the planar disk. Thus also its hyperbolization \({\mathbf {R}}\times _\varepsilon {\mathbf {R}}\) is biLipschitz equivalent to the hyperbolic disk, which is the model 2-dimensional hyperbolic space.
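The isometry can be checked by a direct computation. This is a sketch assuming that the uniformization uses the density \(\rho _\varepsilon (t)=e^{-\varepsilon |t|}\) with center 0. Since the only curves in \({\mathbf {R}}\) are intervals, for \(t\in {\mathbf {R}}\) we get

$$\begin{aligned} d_\varepsilon (0,t) = \int _0^{|t|} e^{-\varepsilon s}\,\mathrm{{d}}s = \frac{1-e^{-\varepsilon |t|}}{\varepsilon } \nearrow \frac{1}{\varepsilon } \quad \text {as } |t|\rightarrow \infty . \end{aligned}$$

Thus the map sending \(t\ge 0\) to \(d_\varepsilon (0,t)\) and \(t<0\) to \(-d_\varepsilon (0,t)\) is an isometry of \({\mathbf {R}}_\varepsilon \) onto \((-\tfrac{1}{\varepsilon },\tfrac{1}{\varepsilon })\).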

Lemma 8.2

Let \((\Omega ,d)\) be a bounded A-uniform space. Then for every pair of points \(x,y\in \Omega \) and for every L with \(d(x,y)\le L \le {{\,\mathrm{diam}\,}}\Omega \), there exists a curve \(\gamma \subset \Omega \) of length

connecting x to y and such that for all \(z\in \gamma \),

where \(\gamma _{x,z}\) and \(\gamma _{z,y}\) are the subcurves of \(\gamma \) from x to z and from z to y, respectively.

Proof

Choose \(x_0\in \Omega \) such that \(d_\Omega (x_0) \ge \tfrac{4}{5} \sup _{z\in \Omega } d_\Omega (z)\). Then for all \(z\in \Omega \), with \(\gamma _{z,x_0}\) being an A-uniform curve from z to \(x_0\), and \(z'\) its midpoint,

Hence \({{\,\mathrm{diam}\,}}\Omega \le 5A d_\Omega (x_0)\). Now, let \(x,y\in \Omega \) and L be as in the statement of the lemma. Let \(\gamma _{x,x_0}\) be an A-uniform curve from x to \(x_0\). We shall distinguish two cases:

1. If \(L\le 5Al(\gamma _{x,x_0})\) then let \(\hat{\gamma }_x\) be the restriction of \(\gamma _{x,x_0}\) to [0, L/10A] and \(\hat{x}=\gamma _{x,x_0}(L/10A)\) be its new endpoint.

2. If \(L\ge 5Al(\gamma _{x,x_0})\) then let \(\gamma _x\) be the restriction of \(\gamma _{x,x_0}\) to \([0,\tfrac{1}{2} l(\gamma _{x,x_0})]\) and \(\hat{x}=\gamma _{x,x_0}(\tfrac{1}{2} l(\gamma _{x,x_0}))\) be its new endpoint. Note that

$$\begin{aligned} d_\Omega (\hat{x}) \ge d_\Omega (x_0) - \frac{l(\gamma _{x,x_0})}{2} \ge \frac{{{\,\mathrm{diam}\,}}\Omega }{5A} - \frac{L}{10A} \ge \frac{L}{10A}. \end{aligned}$$

Choose a curve \(\gamma '\) of length L/10A, which starts and ends at \(\hat{x}\). Then for all \(z\in \gamma '\),

$$\begin{aligned} d_\Omega (z) \ge d_\Omega (\hat{x}) - \frac{L}{20A} \ge \frac{L}{20A}. \end{aligned}$$

Thus, concatenating \(\gamma '\) to \(\gamma _x\) we obtain a curve \(\hat{\gamma }_x\) from x to \(\hat{x}\) of length

$$\begin{aligned} \frac{L}{10A} \le l(\hat{\gamma }_x) \le \frac{L}{5A} \end{aligned}\tag{8.2}$$

and such that for all \(z\in \hat{\gamma }_x\),

$$\begin{aligned} d_\Omega (z) \ge \frac{1}{\max \{4,A\}} l(\hat{\gamma }_{x,z}) \ge \frac{1}{4A} l(\hat{\gamma }_{x,z}), \end{aligned}\tag{8.3}$$

where \(\hat{\gamma }_{x,z}\) is the part of \(\hat{\gamma }_x\) from x to z.

The curve \(\hat{\gamma }_x\), obtained in case 1, clearly satisfies (8.2) and (8.3) as well.
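Two facts used without computation in this construction can be unpacked as follows. The sketch assumes the usual definition of an A-uniform curve \(\gamma \) from a to b, namely \(l(\gamma )\le A\,d(a,b)\) and \(d_\Omega (w)\ge \tfrac{1}{A}\min \{l(\gamma _{a,w}),l(\gamma _{w,b})\}\) for all \(w\in \gamma \). For the diameter bound, the midpoint \(z'\) of \(\gamma _{z,x_0}\) gives

$$\begin{aligned} d(z,x_0) \le l(\gamma _{z,x_0}) \le 2A\,d_\Omega (z') \le 2A \sup _{w\in \Omega } d_\Omega (w) \le \frac{5A}{2}\,d_\Omega (x_0), \end{aligned}$$

and the triangle inequality through \(x_0\) yields \({{\,\mathrm{diam}\,}}\Omega \le 5A d_\Omega (x_0)\). In case 1, the curve \(\hat{\gamma }_x\) has length \(L/10A \le \tfrac{1}{2} l(\gamma _{x,x_0})\), so (8.2) holds trivially. Moreover, for \(z\in \hat{\gamma }_x\) the subcurve of \(\gamma _{x,x_0}\) from x to z is the shorter of the two, whence

$$\begin{aligned} d_\Omega (z) \ge \frac{1}{A}\,l(\hat{\gamma }_{x,z}) \ge \frac{1}{\max \{4,A\}}\,l(\hat{\gamma }_{x,z}), \end{aligned}$$

which is (8.3).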

A similar construction, using an A-uniform curve from y to \(x_0\), provides us with a curve \(\hat{\gamma }_y\) from y to \(\hat{y}\), satisfying (8.2) and (8.3) with x replaced by y.

Now, let \(\tilde{\gamma }\) be a uniform curve from \(\hat{x}\) to \(\hat{y}\) and let \(\gamma \) be the concatenation of \(\hat{\gamma }_x\) with \(\tilde{\gamma }\) and \(\hat{\gamma }_y\) (reversed). Since \(d(\hat{x},\hat{y}) \le d(x,y) + 2L/5A \le (1+2/5A)L\), we see that

and the right-hand side inequality in (8.1) holds, while the left-hand side follows from (8.2).
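As a hedged check of the upper length bound, assume that \(\tilde{\gamma }\) is A-uniform (the text only says uniform), so that \(l(\tilde{\gamma })\le A\,d(\hat{x},\hat{y})\). Then (8.2), applied to both \(\hat{\gamma }_x\) and \(\hat{\gamma }_y\), gives

$$\begin{aligned} l(\gamma ) = l(\hat{\gamma }_x) + l(\tilde{\gamma }) + l(\hat{\gamma }_y) \le \frac{2L}{5A} + A\biggl (1+\frac{2}{5A}\biggr )L = \biggl (A+\frac{2}{5}+\frac{2}{5A}\biggr )L \le (A+1)L, \end{aligned}$$

where the last step uses \(A\ge 1\). The lower bounds in (8.2) similarly give \(l(\gamma )\ge l(\hat{\gamma }_x)+l(\hat{\gamma }_y)\ge L/5A\).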

To prove the second property, in view of (8.3), it suffices to consider \(z\in \tilde{\gamma }\). Without loss of generality, assume that the part \(\tilde{\gamma }_{\hat{x},z}\) of \(\tilde{\gamma }\) from \(\hat{x}\) to z has length at most \(\tfrac{1}{2} l(\tilde{\gamma })\). Note that (8.3), applied to the choice \(z=\hat{x}\), gives

Again, we distinguish two cases.

1. If \(\tfrac{1}{2} d_\Omega (\hat{x})\ge l(\tilde{\gamma }_{\hat{x},z})\) then by (8.4),

and hence we obtain that

where \(\gamma _{x,z}\) is the part of \(\gamma \) from x to z.

2. On the other hand, if \(\tfrac{1}{2} d_\Omega (\hat{x})\le l(\tilde{\gamma }_{\hat{x},z})\) then by (8.4) again,

We conclude that

\(\square \)

Proposition 8.3

Let \((\Omega ,d)\) and \((\Omega ',d')\) be two bounded uniform spaces, with diameters and uniformity constants D, \(D'\), A and \(A'\), respectively. Then \(\widetilde{\Omega }=\Omega \times \Omega '\) is also a bounded uniform space with respect to the metric

$$\begin{aligned} \tilde{d}((x,x'),(y,y')) = d(x,y) + d'(x',y'), \end{aligned}\tag{8.5}$$

with uniformity constant

Proof

The boundedness is clear. Let \(\tilde{x}=(x,x')\) and \(\tilde{y}=(y,y')\) be two distinct points in \(\widetilde{\Omega }\), and let

Note that

We use Lemma 8.2 to find curves \(\gamma \subset \Omega \) and \(\gamma '\subset \Omega '\), connecting x to y and \(x'\) to \(y'\), respectively, of lengths

and such that for all \(z\in \gamma \),

where \(\gamma _{x,z}\) and \(\gamma _{z,y}\) are the parts of \(\gamma \) from x to z and from z to y, respectively; similar statements holding true for \(z'\in \gamma '\) and \(A'\). Note that \(\Lambda >0\) since \(\tilde{x}\ne \tilde{y}\). Hence \(L,L'>0\) and, by (8.7), the curves \(\gamma \) and \(\gamma '\) are nonconstant.

Next, assuming that \(\gamma \) and \(\gamma '\) are arc length parametrized, we show that the curve

is an \(\tilde{A}\)-uniform curve in \(\widetilde{\Omega }\) connecting \(\tilde{x}\) to \(\tilde{y}\). To see this, note that we have by the definition (8.5) of \(\tilde{d}\) that for all \(0\le s\le t \le 1\), using (8.7) and then (8.6),

In particular, \(\tilde{\gamma }\) has the correct length. Since

we see that for all \(\tilde{\gamma }(t)=(z,z')\) with \(0 \le t \le \frac{1}{2}\), using (8.8) and then (8.7),

and similarly \(d_{\Omega '}(z') \ge \Lambda D' t/80(A')^3\). Thus, using (8.7) for the last inequality,

As a similar estimate holds for \(\frac{1}{2} \le t \le 1\), we see that \(\tilde{\gamma }\) is indeed an \(\tilde{A}\)-uniform curve.

\(\square \)

We next see that the projection map \(\pi :X\times _\varepsilon Y\rightarrow X\) given by \(\pi ((x,y))=x\) is Lipschitz continuous.

Proposition 8.4

The above-defined projection map \(\pi :X\times _\varepsilon Y\rightarrow X\) is \((C/\varepsilon )\)-Lipschitz continuous, with C depending only on \(\varepsilon _0\) and M.

Proof

Since \(X\times _\varepsilon Y\) is geodesic, it suffices to show that \(\pi \) is locally \((C/\varepsilon )\)-Lipschitz with C independent of the locality. With \(C_1=e^{-(1+\varepsilon M)}\) and \(C_2=2e(2e^{\varepsilon M}-1)\) as in Theorem 2.10, for \((x,y)\in X\times Y\) let

The last part of Theorem 2.10 together with Lemma 2.8 then gives

with comparison constants depending only on \(\varepsilon _0\) and M.

Let \(k_\varepsilon \) denote the quasihyperbolic metric on \(\Omega :=X_\varepsilon \times Y_\varepsilon \). Note that since both \(X_\varepsilon \) and \(Y_\varepsilon \) are length spaces, so is \(\Omega \). As \(C_2/C_1 >2e\), we see that

and thus (7.1) in Lemma 7.1 with \(L=e\) yields

It follows that

\(\square \)

Next, we shall see how \(X\times _\varepsilon Y\) compares to \(X\times _{\varepsilon ^\prime }Y\).

Proposition 8.5

Let \(0< \varepsilon ' < \varepsilon \le \varepsilon _0(\delta )\). The canonical identity maps

are Lipschitz continuous. More precisely, there is a constant \(C'\), depending only on \(\varepsilon _0\) and M, such that \(\Phi \) is \((C'\varepsilon '/\varepsilon )\)-Lipschitz while \(\Psi \) is \(C'\)-Lipschitz.

Moreover, neither \(\Phi ^{-1}\) nor \(\Psi ^{-1}\) is Lipschitz continuous.

Proof

We first consider \(\Phi \). Since \(X\times _\varepsilon Y\) is geodesic, it suffices to show that \(\Phi \) is locally \((C'\varepsilon '/\varepsilon )\)-Lipschitz with \(C'\) independent of the locality. As in the proof of Proposition 8.4, for \((x,y)\in X\times Y\) and \(C_1, C_2\) from Theorem 2.10, let

Theorem 2.10 then gives

Let \(\tilde{d}_\varepsilon \), \(\tilde{d}_{\varepsilon '}\), \(k_\varepsilon \) and \(k_{\varepsilon '}\) denote the product metrics as in (8.5) and the quasihyperbolic metrics on \(X_\varepsilon \times Y_\varepsilon \) and \(X_{\varepsilon '}\times Y_{\varepsilon '}\) respectively. As in (8.9), we conclude that

Without loss of generality we assume that \(\rho _\varepsilon (x)\le \rho _\varepsilon (y)\), and then using Lemma 2.8,

in which case we also have that

Therefore, using (8.10),

with a similar statement holding true for \(\varepsilon '\). Since

we conclude from (8.11) that

which proves the Lipschitz continuity of \(\Phi \).

We now compare the product uniform domains \(X_\varepsilon \times Y_\varepsilon \) and \(X_{\varepsilon ^\prime }\times Y_{\varepsilon ^\prime }\). With \((x,y), (x',y') \in X\times Y\) as in the first part of the proof, we have by (8.10) and the assumption \(0<\varepsilon '<\varepsilon \) that

which proves the Lipschitz continuity of \(\Psi \). On the other hand, choosing \(y=y'=z_Y\), with \(\rho _\varepsilon (z_Y)=1\), gives

Since \(\varepsilon ' <\varepsilon \), letting \(d(x,z_X)\rightarrow \infty \) and so \(\rho _\varepsilon (x)\rightarrow 0\) shows that \(\Psi ^{-1}\) is not Lipschitz.

To show that \(\Phi ^{-1}\) is not Lipschitz, let \(x_j\in X\) be such that \(\rho _\varepsilon (x_j)\rightarrow 0\) (and equivalently, \(\rho _{\varepsilon '}(x_j)\rightarrow 0\)) as \(j\rightarrow \infty \). With \(C(\delta )\) as in (2.2) and \(C_1,C_2\) as in Theorem 2.10, for \(j=1,2,\ldots \) we choose \(y_j\in Y\) such that

This is possible since Y is geodesic. Then, for sufficiently large j, we have \( \varepsilon d(z_Y,y_j) \le 1 \) and hence by (2.2),

Since also \(\rho _\varepsilon (x_j) \le 1 = \rho _\varepsilon (z_Y)\), we thus conclude from (8.11), with the choice \(x=x'=x_j\), \(y=z_Y\) and \(y'=y_j\), that

with a similar statement holding also for \(\varepsilon '\). This shows that

i.e. \(\Phi ^{-1}\) is not Lipschitz. \(\square \)

Remark 8.6

If \(X=Y={\mathbf {R}}\) then, according to Example 8.1, all the indirect products \({\mathbf {R}}\times _\varepsilon {\mathbf {R}}\) are mutually biLipschitz equivalent. However, Proposition 8.5 shows that this equivalence cannot be achieved by the canonical identity map \(\Phi \).

By Theorem 1.1 in Bonk–Heinonen–Koskela [14], \(\Phi \) is biLipschitz if and only if \(\Psi \) is a quasisimilarity. Note that \(X_\varepsilon \) and \(X_{\varepsilon ^\prime }\) are quasisymmetrically equivalent by [14], and so are \(Y_\varepsilon \) and \(Y_{\varepsilon ^\prime }\). On the other hand, products of quasisymmetric maps need not be quasisymmetric, as exhibited by the Rickman rug \(([0,1],d_{{{\,\mathrm{Euc}\,}}})\times ([0,1], d_{{{\,\mathrm{Euc}\,}}}^\alpha )\) for \(0<\alpha <1\), see Bishop–Tyson [2, Remark 1, Sect. 5] and DiMarco [21, Sect. 1]. This seems to happen whenever one of the component spaces has dimension 1 and the other has dimension larger than 1.

Example 8.7

Let X be the unit disk in \({\mathbf {R}}^2\), equipped with the Poincaré metric k, making it a Gromov hyperbolic space. Let \(Y=(-1,1)\) be equipped with the quasihyperbolic metric (and so it is isometric to \({\mathbf {R}}\), see Examples 4.2 and 4.3). For both X and Y we can choose \(\varepsilon =1\), resulting in \(X_1\) being the Euclidean unit disk and \(Y_1\) being the Euclidean interval \((-1,1)\). Thus \(X_1\times Y_1\) is a solid 3-dimensional Euclidean cylinder, with boundary made up of \({\mathbf {S}}^1\times [-1,1]\) together with two copies of the disk.