Abstract

Global optimization problems whose objective function is expensive to evaluate can be solved effectively by recursively fitting a surrogate function to function samples and minimizing an acquisition function to generate new samples. The acquisition step trades off between seeking a new optimization vector where the surrogate is minimum (exploitation of the surrogate) and looking for regions of the feasible space that have not yet been visited and that may contain better values of the objective function (exploration of the feasible space). This paper proposes a new global optimization algorithm that uses inverse distance weighting (IDW) and radial basis functions (RBF) to construct the acquisition function. Rather arbitrary constraints that are simple to evaluate can be easily taken into account. Compared to Bayesian optimization, the proposed algorithm, which we call GLIS (GLobal minimum using Inverse distance weighting and Surrogate radial basis functions), is competitive and computationally lighter, as we show on a set of benchmark global optimization and hyperparameter tuning problems. MATLAB and Python implementations of GLIS are available at http://cse.lab.imtlucca.it/~bemporad/glis.


1 Introduction

Many problems in machine learning and statistics, engineering design, physics, medicine, management science, and in many other fields, require finding a global minimum of a function without derivative information; see, e.g., the excellent survey on derivative-free optimization [32]. Some of the most successful approaches for derivative-free global optimization include deterministic methods based on recursively splitting the feasible space in rectangles, such as the DIRECT (DIvide a hyper-RECTangle) [22] and Multilevel Coordinate Search (MCS) [18] algorithms, and stochastic methods such as Particle Swarm Optimization (PSO) [12], genetic algorithms [43], and evolutionary algorithms [16].

The aforementioned methods can be very successful in reaching a global minimum without any assumption on convexity and smoothness of the function, but may require evaluating the function a large number of times during the execution of the algorithm. In many problems, however, the objective function is a black box that can be very time-consuming to evaluate. For example, in hyperparameter tuning of machine learning algorithms, one needs to run a large set of training tests per hyperparameter choice; in structural engineering design, testing the mechanical property resulting from a given choice of parameters may take several hours of computing solutions to partial differential equations; in control systems design, testing a combination of controller parameters involves running a real closed-loop experiment, which is time-consuming and costly. For this reason, many researchers have been studying algorithms for black-box global optimization that aim at minimizing the number of function evaluations by replacing the function to minimize with a surrogate function [21]. The latter is obtained by sampling the objective function and interpolating the samples with a map that, compared to the original function, is very cheap to evaluate. The surrogate is then used to solve a (much cheaper) global optimization problem that decides the new point where the original function must be evaluated. A better-quality surrogate is then created by also exploiting the new sample, and the procedure is iterated. For example, quadratic surrogate functions are used in the well-known global optimization method NEWUOA [30].

As underlined by several authors (see, e.g., [21]), purely minimizing the surrogate function may lead to convergence to a point that is not the global minimum of the black-box function. To account for the fact that the surrogate and the true objective function differ from each other in an unknown way, the surrogate is typically augmented by an extra term that accounts for such uncertainty. The resulting acquisition function is then minimized instead to generate a new sample of the optimization vector, trading off between seeking a new vector where the surrogate is small and looking for regions of the feasible space that have not yet been visited.

Bayesian Optimization (BO) is a popular class of surrogate-based global optimization methods that, by modeling the black-box function as a Gaussian process, quantify in statistical terms the discrepancy between the two functions, information that is then used to drive the search. BO has been studied since the sixties in global optimization [25] and in geostatistics [26] under the name of Kriging methods; it became popular for solving problems of Design and Analysis of Computer Experiments (DACE) [34], see for instance the popular Efficient Global Optimization (EGO) algorithm [23]. It is nowadays widely used in machine learning for tuning hyperparameters of different algorithms [9, 13, 35, 37].

Motivated by learning control systems from data [29] and self-calibration of optimal control parameters [14], in this paper we propose an alternative approach to solve global optimization problems with expensive-to-evaluate objective functions, based on Inverse Distance Weighting (IDW) interpolation [24, 36] and Radial Basis Functions (RBFs) [17, 27]. RBFs were already used for global optimization in [11, 15], where the acquisition function is constructed by introducing a “measure of bumpiness”. The author of [15] shows that such a measure is related to the probability that the underlying function attains a value lower than a given threshold, as used in Bayesian optimization. RBFs were also adopted in [31], with additional constraints imposed to ensure that the feasible set is adequately explored. In this paper we use a different acquisition function based on two exploration components: an estimate of the confidence interval associated with the interpolant function, defined as in [24], and a new measure based on inverse distance weighting that is totally independent of the underlying black-box function and its surrogate. Both terms aim at exploring the domain of the optimization vector. Moreover, arbitrary constraints that are simple to evaluate can also be taken into account, as they can easily be imposed during the minimization of the acquisition function.

Compared to Bayesian optimization, our non-probabilistic approach to global optimization is very competitive, as we show in a set of benchmark global optimization problems and on hyperparameter selection problems, and also computationally lighter than off-the-shelf implementations of BO.

A preliminary version of this manuscript was made available in [4] and later extended in [5] to solve preference-based optimization problems. MATLAB and Python implementations of the proposed approach and of the approach of [5] are available for download at http://cse.lab.imtlucca.it/~bemporad/glis. For an application of the GLIS algorithm proposed in this paper to learning optimal calibration parameters in embedded model predictive control applications, the reader is referred to [14].

The paper is organized as follows. After stating the global optimization problem we want to solve in Sect. 2, Sects. 3 and 4 deal with the construction of the surrogate and acquisition functions, respectively. The proposed global optimization algorithm is detailed in Sect. 5 and several results are reported in Sect. 6. Finally, some conclusions are drawn in Sect. 7.

2 Problem formulation

Consider the following constrained global optimization problem

\[
\min _{x}\ f(x)\quad \text{s.t.}\ \ \ell \le x\le u,\ \ x\in \mathcal {X} \tag{1}
\]

where \(f:{\mathbb R}^n\rightarrow {\mathbb R}\) is an arbitrary function of the optimization vector \(x\in {\mathbb R}^n\), \(\ell ,u\in {\mathbb R}^n\) are vectors of lower and upper bounds, and \(\mathcal {X}\subseteq {\mathbb R}^n\) imposes further arbitrary constraints on x. Typically \(\mathcal {X}=\{x\in {\mathbb R}^n:\ g(x)\le 0\}\), where the vector function \(g:{\mathbb R}^n\rightarrow {\mathbb R}^q\) defines inequality constraints, with \(q=0\) meaning that no inequality constraint is enforced; for example, linear inequality constraints are defined by setting \(g(x)=Ax-b\), with \(A\in {\mathbb R}^{q\times n}\), \(b\in {\mathbb R}^q\), \(q\ge 0\). We are particularly interested in problems as in (1) such that f(x) is expensive to evaluate and its gradient is not available, while the condition \(x\in \mathcal {X}\) is easy to evaluate. Although this is not comprehensively addressed in this paper, we will show that our approach also tolerates noisy evaluations of f, that is, if we measure \(y=f(x)+\varepsilon\) instead of f(x), where \(\varepsilon\) is an unknown quantity. We will not make any assumption on f, g, and \(\varepsilon\). In (1) we do not include possible linear equality constraints \(A_{e}x=b_e\), as they can be eliminated beforehand by reducing the number of optimization variables, so that exploration is performed more easily in a lower-dimensional space.

3 Surrogate function

Assume that we have collected a vector \(F=[f_1\ \ldots \ f_N]'\) of N samples \(f_i=f(x_i)\) of f, \(F\in {\mathbb R}^N\) at corresponding points \(X=[x_1\ \ldots \ x_N]'\), \(X\in {\mathbb R}^{N\times n}\), with \(x_i\ne x_j\), \(\forall i\ne j\), \(i,j=1,\ldots ,N\). We consider next two types of surrogate functions, namely Inverse Distance Weighting (IDW) functions [24, 36] and Radial Basis Functions (RBFs) [15, 27].

3.1 Inverse distance weighting functions

Given a generic new point \(x\in {\mathbb R}^n\), consider the squared Euclidean distance function \(d^2:{\mathbb R}^{n}\times {\mathbb R}^n\rightarrow {\mathbb R}\)

\[
d^2(x,x_i)=(x-x_i)'(x-x_i) \tag{2}
\]

In standard IDW functions [36] the weight functions \(w_i:{\mathbb R}^n\backslash \{x_i\}\rightarrow {\mathbb R}\) are defined by the inverse squared distances

\[
w_i(x)=\frac{1}{d^2(x,x_i)} \tag{3a}
\]

The alternative weighting function

\[
w_i(x)=\frac{e^{-d^2(x,x_i)}}{d^2(x,x_i)} \tag{3b}
\]

suggested in [24] has the advantage of being similar to the inverse squared distance in (3a) for small values of \(d^2\), but makes the effect of points \(x_i\) located far from x fade out quickly due to the exponential term.

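By defining for \(i=1,\ldots ,N\) the following functions \(v_i:{\mathbb R}^n\rightarrow {\mathbb R}\) as

\[
v_i(x)=\left\{ \begin{array}{ll} 1 & \text{if } x=x_i\\ 0 & \text{if } x=x_j,\ j\ne i\\ \dfrac{w_i(x)}{\sum _{j=1}^N w_j(x)} & \text{otherwise} \end{array}\right. \tag{4}
\]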

the surrogate function \({\hat{f}}:{\mathbb R}^n\rightarrow {\mathbb R}\)

\[
{\hat{f}}(x)=\sum _{i=1}^N v_i(x)f_i \tag{5}
\]

is an IDW interpolation of (X, F).
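As a minimal sketch of (2) to (5), assuming plain Python lists for the samples (the function name `idw_surrogate` and the toy data are hypothetical), the IDW surrogate can be evaluated as follows. Multiplying all weights (3b) by the common factor \(e^{\min _j d^2(x,x_j)}\) leaves the normalized weights \(v_i\) unchanged and only avoids floating-point underflow far from the samples.

```python
import math


def idw_surrogate(x, X, F, use_exp=True):
    """IDW interpolant f_hat(x) = sum_i v_i(x) f_i, cf. (2)-(5).

    x: point (sequence of floats); X: list of samples x_i; F: values f_i.
    use_exp=True uses the weights (3b), otherwise the inverse squared distances (3a).
    """
    d2 = [sum((a - b) ** 2 for a, b in zip(x, xi)) for xi in X]  # d^2(x, x_i), eq. (2)
    for di, fi in zip(d2, F):
        if di == 0.0:  # x coincides with a sample: v_i(x) = 1, all other v_j(x) = 0
            return fi
    if use_exp:
        d_min = min(d2)
        # exp(d_min - d_i) / d_i is proportional to (3b); the common factor cancels in v_i
        w = [math.exp(d_min - di) / di for di in d2]
    else:
        w = [1.0 / di for di in d2]
    total = sum(w)
    return sum(wi * fi for wi, fi in zip(w, F)) / total


X = [[-2.0], [-1.0], [0.5], [1.5], [2.5]]  # hypothetical samples
F = [1.2, 0.3, 0.9, 0.6, 1.1]
print(idw_surrogate([0.0], X, F))
```

Being a convex combination of the \(f_i\), the value returned is always between \(\min _j f_j\) and \(\max _j f_j\), which is property P2 below.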

Lemma 1

The IDW interpolation function \({\hat{f}}\) defined in (5) enjoys the following properties:

- P1. \({\hat{f}}(x_j)=f_j\), \(\forall j=1,\ldots ,N\);
- P2. \(\min _j\{f_j\}\le {\hat{f}}(x)\le \max _j\{f_j\}\), \(\forall x\in {\mathbb R}^n\);
- P3. \({\hat{f}}\) is differentiable everywhere on \({\mathbb R}^n\) and in particular \(\nabla {\hat{f}}(x_j)=0\) for all \(j=1,\ldots ,N\).

The proof of Lemma 1 is very simple and is reported in “Appendix A”.

Note that in [24] the authors suggest improving the surrogate function by adding a regression model in (5) to take global trends into account. In our numerical experiments, however, we found that adding such a term does not lead to significant improvements of the proposed global optimization algorithm.

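A one-dimensional example of the IDW surrogate \({\hat{f}}\) sampled at five different points of the scalar function

\[
f(x)=\left( 1+\frac{x\sin (2x)\cos (3x)}{1+x^2}\right) ^2+\frac{x^2}{12}+\frac{x}{10} \tag{6}
\]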

is depicted in Fig. 1. The global optimizer is \(x^*\approx -0.9599\) corresponding to the global minimum \(f(x^*)\approx 0.2795\).
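Because (6) is cheap to evaluate, the reported optimum can be checked by brute force. The sketch below uses a hypothetical grid of 60001 points on \([-3,3]\); such exhaustive search is exactly what becomes unaffordable when f is expensive, which is what motivates the surrogate-based approach.

```python
import math


def f(x):
    """Scalar benchmark function (6)."""
    return (1 + x * math.sin(2 * x) * math.cos(3 * x) / (1 + x ** 2)) ** 2 + x ** 2 / 12 + x / 10


# Dense grid search on [-3, 3]: affordable here only because f is cheap
grid = [-3 + 6 * k / 60000 for k in range(60001)]
x_star = min(grid, key=f)
print(f"x* = {x_star:.4f}, f(x*) = {f(x_star):.4f}")
```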

Fig. 1. A scalar example of f(x) as in (6) (blue) sampled at \(N=5\) points (blue circles), IDW surrogate \({\hat{f}}(x)\) (orange) with \(w_i(x)\) as in (3b), RBF inverse quadratic with \(\epsilon =0.5\) (red), RBF thin plate spline surrogate with \(\epsilon =0.01\) (green), global minimum (purple diamond) (Color figure online)

3.2 Radial basis functions

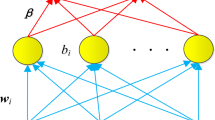

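A possible drawback of the IDW function \({\hat{f}}\) defined in (5) is due to property P3: as the number N of samples increases, the surrogate function tends to ripple, since its derivative is always zero at the samples. An alternative is to use a radial basis function (RBF) [15, 27] as a surrogate function. These are defined by setting

\[
{\hat{f}}(x)=\sum _{i=1}^N\beta _i\phi (\epsilon d(x,x_i)) \tag{7}
\]

where \(d:{\mathbb R}^{n}\times {\mathbb R}^n\rightarrow {\mathbb R}\) is the function defining the Euclidean distance as in (2), \(d(x,x_i)=\Vert x-x_i\Vert _2\), \(\epsilon >0\) is a scalar parameter, \(\beta _i\) are coefficients to be determined as explained below, and \(\phi :{\mathbb R}\rightarrow {\mathbb R}\) is an RBF. Popular examples of RBFs are

\[
\begin{array}{ll}
\text{inverse quadratic:} & \phi (\epsilon d)=\frac{1}{1+(\epsilon d)^2}\\
\text{Gaussian:} & \phi (\epsilon d)=e^{-(\epsilon d)^2}\\
\text{multiquadric:} & \phi (\epsilon d)=\sqrt{1+(\epsilon d)^2}\\
\text{inverse multiquadric:} & \phi (\epsilon d)=\frac{1}{\sqrt{1+(\epsilon d)^2}}\\
\text{linear:} & \phi (\epsilon d)=\epsilon d\\
\text{thin plate spline:} & \phi (\epsilon d)=(\epsilon d)^2\log (\epsilon d)
\end{array} \tag{8}
\]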

The coefficient vector \(\beta =[\beta _1\ \ldots \ \beta _N]'\) is obtained by imposing the interpolation condition

\[
{\hat{f}}(x_i)=f_i,\quad i=1,\ldots ,N \tag{9}
\]

Condition (9) leads to solving the linear system

\[
M\beta =F \tag{10a}
\]

where M is the \(N\times N\) symmetric matrix whose (i, j)-entry is

\[
M_{ij}=\phi (\epsilon d(x_i,x_j)) \tag{10b}
\]

with \(M_{ii}=1\) for all the RBF types listed in (8) except the linear and thin plate spline ones, for which \(M_{ii}=\lim _{d\rightarrow 0}\phi (\epsilon d)=0\). Note that if function f is evaluated at a new sample \(x_{N+1}\), updating matrix M only requires adding the last row/column, obtained by computing \(\phi (\epsilon d(x_{N+1},x_j))\) for all \(j=1,\ldots ,N+1\).

As highlighted in [15, 21], matrix M might be singular, even if \(x_i\ne x_j\) for all \(i\ne j\). To prevent issues due to a singular M, [15, 21] suggest using a surrogate function given by the sum of an RBF and a polynomial function of a certain degree. To also account for unavoidable numerical issues when distances between sampled points get close to zero, which easily happens as new samples are added while approaching a global minimum, in this paper we instead suggest using a singular value decomposition (SVD) \(M=U\Sigma V'\) of M (see Footnote 1). By neglecting singular values below a certain positive threshold \(\epsilon _{\mathrm{SVD}}\), we can approximate \(\Sigma =\left[ {\begin{matrix}\Sigma _1 &{} 0\\ 0 &{} 0 \end{matrix}} \right]\), where \(\Sigma _1\) collects all singular values \(\sigma _i\ge \epsilon _{\mathrm{SVD}}\), and accordingly split \(V=[V_1\ V_2]\), \(U=[U_1\ U_2]\) so that

\[
\beta =V_1\Sigma _1^{-1}U_1'F \tag{10c}
\]

The threshold \(\epsilon _{\mathrm{SVD}}\) turns out to be useful when dealing with noisy measurements \(y=f(x)+\varepsilon\) of f. Figure 2 shows the approximation \({\hat{f}}\) obtained from 50 samples with \(\varepsilon\) normally distributed around zero with standard deviation 0.1, when \(\epsilon _\mathrm{SVD}=10^{-2}\).
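The following stdlib-only sketch shows how the coefficients \(\beta\) of (7) can be computed with the truncated pseudo-inverse \(V_1\Sigma _1^{-1}U_1'\) applied to F. Since M is symmetric, its singular values are the absolute values of its eigenvalues \(\lambda _k\), and the truncated pseudo-inverse equals \(\sum _{|\lambda _k|\ge \epsilon _{\mathrm{SVD}}}q_kq_k'/\lambda _k\), with \(q_k\) the orthonormal eigenvectors. To avoid dependencies, the sketch uses a cyclic Jacobi eigensolver; this is our substitution for a library SVD (in practice one would rather call a routine such as NumPy's `numpy.linalg.svd` or `numpy.linalg.eigh`). All function names, the inverse quadratic default, and the data are illustrative assumptions.

```python
import math


def inverse_quadratic(r):
    """RBF phi(eps*d) = 1 / (1 + (eps*d)^2); r stands for eps*d."""
    return 1.0 / (1.0 + r * r)


def jacobi_eigh(A, tol=1e-14, max_sweeps=50):
    """Eigendecomposition of a symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, Q), with the eigenvectors stored as the columns of Q.
    """
    n = len(A)
    a = [list(map(float, row)) for row in A]
    q = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for r in range(p + 1, n):
                if a[p][r] == 0.0:
                    continue
                theta = (a[r][r] - a[p][p]) / (2.0 * a[p][r])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J: update columns p and r
                    akp, akr = a[k][p], a[k][r]
                    a[k][p], a[k][r] = c * akp - s * akr, s * akp + c * akr
                for k in range(n):  # A <- J' A: update rows p and r
                    apk, ark = a[p][k], a[r][k]
                    a[p][k], a[r][k] = c * apk - s * ark, s * apk + c * ark
                for k in range(n):  # accumulate eigenvectors: Q <- Q J
                    qkp, qkr = q[k][p], q[k][r]
                    q[k][p], q[k][r] = c * qkp - s * qkr, s * qkp + c * qkr
    return [a[i][i] for i in range(n)], q


def rbf_fit(X, F, eps=0.5, eps_svd=1e-6, phi=inverse_quadratic):
    """RBF coefficients beta via the truncated pseudo-inverse of M."""
    N = len(X)
    M = [[phi(eps * math.dist(X[i], X[j])) for j in range(N)] for i in range(N)]
    lam, Q = jacobi_eigh(M)
    beta = [0.0] * N
    for k in range(N):
        if abs(lam[k]) < eps_svd:  # singular value |lambda_k| below the threshold: drop it
            continue
        qk = [Q[i][k] for i in range(N)]
        coef = sum(qi * fi for qi, fi in zip(qk, F)) / lam[k]
        beta = [b + coef * qi for b, qi in zip(beta, qk)]
    return beta


def rbf_eval(x, X, beta, eps=0.5, phi=inverse_quadratic):
    """Evaluate the RBF surrogate f_hat(x) = sum_i beta_i phi(eps d(x, x_i))."""
    return sum(b * phi(eps * math.dist(x, xi)) for b, xi in zip(beta, X))


X = [[-2.0], [-1.0], [0.5], [1.5], [2.5]]  # hypothetical samples
F = [1.2, 0.3, 0.9, 0.6, 1.1]
beta = rbf_fit(X, F)
print([round(rbf_eval(xi, X, beta), 6) for xi in X])
```

Duplicating a sample makes M exactly singular; the truncation then simply discards the (numerically) zero eigenvalue instead of failing.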

Fig. 2. Function f(x) as in (6) is sampled 50 times, with each sample corrupted by noise \(\varepsilon \sim {{\mathcal {N}}}(0,10^{-2})\) (blue). The RBF thin plate spline surrogate with \(\epsilon =0.01\) (green) is obtained by setting \(\epsilon _{\mathrm{SVD}}=10^{-2}\) (Color figure online)

A drawback of RBFs, compared to IDW functions, is that property P2 no longer holds, with the consequence that the surrogate may extrapolate large values \({\hat{f}}(x)\) where f(x) is actually small, and vice versa (see the examples plotted in Fig. 1). On the other hand, RBF surrogates built from the smooth RBFs in (8) are differentiable everywhere but, unlike IDW surrogates, do not necessarily have zero gradients at the sample points as in P3, which helps them approximate the underlying function better from a limited number of samples. For these reasons, we mostly focus on RBF surrogates in our numerical experiments.

3.3 Scaling

To take into account that different components \(x^j\) of x may have different ranges \(u^j-\ell ^j\), we simply rescale the variables in optimization problem (1) so that they all range in \([-1,1]\). To this end, we first possibly tighten the given box constraints \(B_{\ell ,u}=\{x\in {\mathbb R}^n: \ell \le x\le u\}\) by computing the bounding box \(B_{\ell _g,u_g}\) of the set \(\{x\in {\mathbb R}^n:\ x\in \mathcal {X}\}\) and replacing \(B_{\ell ,u}\leftarrow B_{\ell ,u}\cap B_{\ell _g,u_g}\). The bounding box \(B_{\ell _g,u_g}\) is obtained by solving the following 2n optimization problems

\[
\ell _g^i=\min _{x\in \mathcal {X}}\ e_i'x,\qquad u_g^i=\max _{x\in \mathcal {X}}\ e_i'x \tag{11a}
\]

where \(e_i\) is the ith column of the identity matrix, \(i=1,\ldots ,n\). In case of linear inequality constraints \(\mathcal {X}=\{x\in {\mathbb R}^n:\ Ax\le b\}\), the problems in (11a) can be solved by linear programming (LP), as shown later in (17). From now on, we assume that \(\ell ,u\) are replaced by

\[
\ell \leftarrow \max \{\ell ,\ell _g\},\qquad u\leftarrow \min \{u,u_g\} \tag{11b}
\]

where “\(\min\)” and “\(\max\)” in (11b) operate component-wise. Next, we introduce scaled variables \({\bar{x}}\in {\mathbb R}^n\) whose relation with x is

\[
x^j=\frac{u^j-\ell ^j}{2}\,{\bar{x}}^j+\frac{u^j+\ell ^j}{2}
\]

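for all \(j=1,\ldots ,n\), and finally formulate the following scaled global optimization problem

\[
\min _{{\bar{x}}}\ f_s({\bar{x}})\quad \text{s.t.}\ \ -1\le {\bar{x}}\le 1,\ \ {\bar{x}}\in \mathcal {X}_s
\]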

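where \(f_s:{\mathbb R}^n\rightarrow {\mathbb R}\), \(\mathcal {X}_s\) are defined as

\[
f_s({\bar{x}})=f\left( \mathop {\mathrm{diag}}\nolimits \left( \tfrac{u-\ell }{2}\right) {\bar{x}}+\tfrac{u+\ell }{2}\right) ,\qquad \mathcal {X}_s=\left\{ {\bar{x}}\in {\mathbb R}^n:\ \mathop {\mathrm{diag}}\nolimits \left( \tfrac{u-\ell }{2}\right) {\bar{x}}+\tfrac{u+\ell }{2}\in \mathcal {X}\right\}
\]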

In case \(\mathcal {X}\) is a polyhedron, we have

\[
\mathcal {X}_s=\{{\bar{x}}\in {\mathbb R}^n:\ {\bar{A}}{\bar{x}}\le {\bar{b}}\}
\]

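where \({\bar{A}}\), \({\bar{b}}\) are a rescaled version of A, b defined as

\[
{\bar{A}}=A\mathop {\mathrm{diag}}\nolimits \left( \tfrac{u-\ell }{2}\right) ,\qquad {\bar{b}}=b-A\,\frac{u+\ell }{2}
\]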

and \(\mathop {\mathrm{diag}}\nolimits (\frac{u-\ell }{2})\) is the diagonal matrix whose diagonal elements are the components of \(\frac{u-\ell }{2}\).
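A minimal sketch of the scaling step, assuming box bounds and a polyhedron \(Ax\le b\) given as plain lists (all names and numbers are hypothetical): it maps x to \({\bar{x}}\in [-1,1]^n\) and back via \(x^j=\frac{u^j-\ell ^j}{2}{\bar{x}}^j+\frac{u^j+\ell ^j}{2}\), and forms \({\bar{A}}=A\,\mathrm{diag}(\frac{u-\ell }{2})\), \({\bar{b}}=b-A\frac{u+\ell }{2}\), so that \({\bar{A}}{\bar{x}}-{\bar{b}}=Ax-b\).

```python
def to_scaled(x, lb, ub):
    """x -> x_bar in [-1, 1]^n, inverse of x = (u - l)/2 * x_bar + (u + l)/2."""
    return [(2.0 * xj - (hi + lo)) / (hi - lo) for xj, lo, hi in zip(x, lb, ub)]


def from_scaled(x_bar, lb, ub):
    """x_bar -> x, component-wise."""
    return [(hi - lo) / 2.0 * xb + (hi + lo) / 2.0 for xb, lo, hi in zip(x_bar, lb, ub)]


def scale_polyhedron(A, b, lb, ub):
    """Return (A_bar, b_bar) with A_bar = A diag((u-l)/2) and b_bar = b - A (u+l)/2."""
    half = [(hi - lo) / 2.0 for lo, hi in zip(lb, ub)]
    mid = [(hi + lo) / 2.0 for lo, hi in zip(lb, ub)]
    A_bar = [[aij * hj for aij, hj in zip(row, half)] for row in A]
    b_bar = [bi - sum(aij * mj for aij, mj in zip(row, mid)) for row, bi in zip(A, b)]
    return A_bar, b_bar


lb, ub = [0.0, 10.0], [4.0, 30.0]          # hypothetical bounds
A, b = [[1.0, 1.0], [-1.0, 2.0]], [30.0, 50.0]
x = [1.0, 25.0]
x_bar = to_scaled(x, lb, ub)
A_bar, b_bar = scale_polyhedron(A, b, lb, ub)
print(x_bar, A_bar, b_bar)
```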

Note that, when approximating \(f_s\) with \({\hat{f}}_s\), we use the squared Euclidean distances

\[
d^2({\bar{x}},{\bar{x}}_i)=\sum _{h=1}^n({\bar{x}}^h-{\bar{x}}_i^h)^2=\sum _{h=1}^n\left( \frac{|x^h-x_i^h|}{\theta ^h}\right) ^{p^h}
\]

where the scaling factors \(\theta ^h=\frac{u^h-\ell ^h}{2}\) and \(p^h\equiv 2\) are constant. Therefore, finding a surrogate \({\hat{f}}_s\) of \(f_s\) in \([-1,1]\) is equivalent to finding a surrogate \({\hat{f}}\) of f under scaled distances. This is a much simpler scaling approach than computing the scaling factors \(\theta ^h\) and powers \(p^h\), as is common in stochastic process model approaches such as Kriging methods [23, 34]. As highlighted in [21], Kriging methods use radial basis functions \(\phi (x_i,x_j)=e^{-\sum _{h=1}^n\theta ^h|x_i^h-x_j^h|^{p^h}}\), a generalization of Gaussian RBFs in which the scaling factors and powers are recomputed as the data set X changes.

Note also that the approach adopted in [5] for automatically scaling the surrogate function via cross-validation could also be used here, as well as other approaches specific to RBFs such as Rippa’s method [33]. In our numerical experiments we found that adjusting the RBF parameter \(\epsilon\) via cross-validation increases the computational effort without providing significant benefit. What is in fact most critical is the tradeoff between exploitation of the surrogate and exploration of the feasible set, which we discuss in the next section.

4 Acquisition function

As mentioned earlier, minimizing the surrogate function to get a new sample \(x_{N+1}=\arg \min {\hat{f}}(x)\) subject to \(\ell \le x\le u\) and \(x\in \mathcal {X}\), evaluating \(f(x_{N+1})\), and iterating over N may easily miss the global minimum of f. This is particularly evident when \({\hat{f}}\) is the IDW surrogate (5), which, by properties P1 and P2 of Lemma 1, attains its global minimum at one of the existing samples \(x_i\). Besides exploiting the surrogate function \({\hat{f}}\), when looking for a new candidate optimizer \(x_{N+1}\) it is therefore necessary to add to \({\hat{f}}\) a term for exploring areas of the feasible space that have not yet been probed.

In Bayesian optimization, such an exploration term is provided by the covariance associated with the Gaussian process. A function measuring the “bumpiness” of an RBF surrogate was used in [15]. Here, instead, we propose two functions that provide exploration capabilities and can be used either as alternatives to each other or in combination. First, as suggested in [24] for IDW functions, we consider the confidence interval function \(s:{\mathbb R}^n\rightarrow {\mathbb R}\) for \({\hat{f}}\) defined by

\[
s(x)=\sqrt{\sum _{i=1}^N v_i(x)\left( f_i-{\hat{f}}(x)\right) ^2} \tag{13}
\]

We will refer to function s as the IDW variance function associated with (X, F). Clearly, when \({\hat{f}}(x_i)=f(x_i)\) then \(s(x_i)=0\) for all \(i=1,\ldots ,N\) (no uncertainty at points \(x_i\) where f is evaluated exactly). See Fig. 3 for a noise-free example and Fig. 4 for the case of noisy measurements of f.
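A minimal sketch of the IDW variance function s in (13), assuming the normalized weights \(v_i\) of (4) with \(w_i\) from (3b) and an arbitrary surrogate passed in as a callable. The helper names and sample data are hypothetical; here the surrogate is the IDW interpolant (5), but an RBF surrogate could be plugged in the same way.

```python
import math


def idw_v(x, X, use_exp=True):
    """Normalized IDW weights v_i(x) of (4), with w_i from (3b) or (3a)."""
    d2 = [sum((a - b) ** 2 for a, b in zip(x, xi)) for xi in X]
    if 0.0 in d2:  # x is a sample: indicator weights
        i = d2.index(0.0)
        return [float(j == i) for j in range(len(X))]
    d_min = min(d2)  # shifting the exponent avoids underflow; the factor cancels below
    w = [math.exp(d_min - d) / d if use_exp else 1.0 / d for d in d2]
    total = sum(w)
    return [wi / total for wi in w]


def idw_variance(x, X, F, f_hat):
    """IDW variance s(x) of (13); f_hat is any surrogate callable (IDW or RBF)."""
    fx = f_hat(x)
    return math.sqrt(sum(vi * (fi - fx) ** 2 for vi, fi in zip(idw_v(x, X), F)))


X = [[-2.0], [-1.0], [0.5], [1.5], [2.5]]  # hypothetical samples
F = [1.2, 0.3, 0.9, 0.6, 1.1]


def f_hat(x):
    """IDW surrogate (5) built from the same weights."""
    return sum(vi * fi for vi, fi in zip(idw_v(x, X), F))


print(idw_variance([0.0], X, F, f_hat))
```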

Fig. 4. Zoomed plot of \({\hat{f}}(x)\pm s(x)\) for the scalar example as in Fig. 3 when 50 samples of f(x) are measured with noise \(\varepsilon \sim {{\mathcal {N}}}(0,10^{-2})\) and \(\epsilon _{\mathrm{SVD}}=10^{-2}\)

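Second, we introduce the new IDW distance function \(z:{\mathbb R}^n\rightarrow {\mathbb R}\) defined by

\[
z(x)=\left\{ \begin{array}{ll} 0 & \text{if } x\in \{x_1,\ldots ,x_N\}\\ \frac{2}{\pi }\arctan \left( \frac{1}{\sum _{i=1}^N w_i(x)}\right) & \text{otherwise} \end{array}\right. \tag{14}
\]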

where \(w_i(x)\) is given by either (3a) or (3b). The rationale behind (14) is that z(x) is zero at sampled points and grows in between them. The arctangent in (14) prevents z(x) from growing excessively when x is located far away from all sampled points, as it keeps z(x) below 1. Figure 5 shows a scalar example of functions \(v_1\) and z.
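A minimal sketch of the IDW distance function z in (14), with hypothetical names and data. It uses `math.atan2(1, S)`, which equals \(\arctan (1/S)\) for \(S>0\) and also returns the correct limit \(\pi /2\) (so \(z=1\)) if the (3b) weights underflow to zero far from all samples.

```python
import math


def idw_distance(x, X, use_exp=False):
    """IDW distance function z(x) of (14); use_exp selects (3b) instead of (3a)."""
    d2 = [sum((a - b) ** 2 for a, b in zip(x, xi)) for xi in X]
    if min(d2) == 0.0:  # z vanishes at the samples
        return 0.0
    w_sum = sum(math.exp(-d) / d if use_exp else 1.0 / d for d in d2)
    # atan2(1, w_sum) = atan(1 / w_sum) for w_sum > 0, and pi/2 if w_sum underflows to 0
    return 2.0 / math.pi * math.atan2(1.0, w_sum)


X = [[0.0], [1.0]]  # hypothetical samples
for t in (0.0, 0.25, 0.5, 5.0):
    print(t, round(idw_distance([t], X), 4))
```

The printed values show z equal to zero at a sample, growing between and away from the samples, and staying below 1.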

Given parameters \(\alpha ,\delta \ge 0\) and N samples (X, F), we define the following acquisition function \(a:{\mathbb R}^n\rightarrow {\mathbb R}\)

\[
a(x)={\hat{f}}(x)-\alpha s(x)-\delta \Delta F z(x) \tag{15}
\]

where \(\Delta F=\max \{\max _i\{f_i\}-\min _i\{f_i\},\epsilon _{\Delta {\rm F}}\}\) is the range of the observed samples F, and the threshold \(\epsilon _{\Delta {\rm F}}>0\) is introduced to prevent \(\Delta F=0\) in the case in which f is not a constant function but, by chance, all sampled values \(f_i\) are equal. Scaling z by \(\Delta F\) eases the selection of the hyperparameter \(\delta\), as the amplitude of \(\Delta F z\) is comparable to that of \({\hat{f}}\).

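As we will detail next, given N samples (X, F), a global minimum of the acquisition function (15) is used to define the \((N+1)\)th sample \(x_{N+1}\) by solving the global optimization problem

\[
x_{N+1}=\arg \min _{x}\ a(x)\quad \text{s.t.}\ \ \ell \le x\le u,\ \ x\in \mathcal {X} \tag{16}
\]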

The rationale behind choosing (15) for acquisition is the following. The term \({\hat{f}}\) directs the search towards a new sample \(x_{N+1}\) where the objective function f is expected to be optimal, assuming that f and its surrogate \({\hat{f}}\) have a similar shape, and therefore allows a direct exploitation of the samples F already collected. The other two terms instead account for the exploration of the feasible set with the hope of finding better values of f, with s promoting areas in which \({\hat{f}}\) is more uncertain and z promoting areas that have not been explored yet. Both s and z provide exploration capabilities, but with an important difference: function z is totally independent of the samples F already collected and promotes a more uniform exploration, whereas s depends on F and on the surrogate \({\hat{f}}\). The coefficients \(\alpha\), \(\delta\) determine the desired exploitation/exploration tradeoff.

For the example of scalar function f in (6) sampled at five random points, the acquisition function a obtained by setting \(\alpha =1\), \(\delta =\frac{1}{2}\), using a thin plate spline RBF with \(\epsilon _{\mathrm{SVD}}=10^{-6}\), and \(w_i(x)\) as in (3a), and the corresponding minimum are depicted in Fig. 6.
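To see how the three terms of the acquisition function \(a={\hat{f}}-\alpha s-\delta \Delta Fz\) combine, the sketch below evaluates it for the scalar function (6) with \(\alpha =1\), \(\delta =\frac{1}{2}\), and picks \(x_{N+1}\) on a dense grid. Unlike Fig. 6, and purely for brevity, it uses the IDW surrogate (5) with weights (3a) instead of a thin plate spline RBF; the sample points, \(\epsilon _{\Delta {\rm F}}=10^{-4}\), and the grid search (a crude stand-in for the global solver of (16)) are all hypothetical choices.

```python
import math


def f(x):
    """Scalar benchmark (6), treated as the expensive black box."""
    return (1 + x * math.sin(2 * x) * math.cos(3 * x) / (1 + x ** 2)) ** 2 + x ** 2 / 12 + x / 10


def idw_terms(x, X, F):
    """Return (f_hat, s, z) at scalar x using inverse squared distance weights (3a)."""
    d2 = [(x - xi) ** 2 for xi in X]
    if 0.0 in d2:  # at a sample: f_hat = f_i, s = 0, z = 0
        return F[d2.index(0.0)], 0.0, 0.0
    w = [1.0 / d for d in d2]
    w_sum = sum(w)
    v = [wi / w_sum for wi in w]  # normalized weights (4)
    f_hat = sum(vi * fi for vi, fi in zip(v, F))  # IDW surrogate (5)
    s = math.sqrt(sum(vi * (fi - f_hat) ** 2 for vi, fi in zip(v, F)))  # IDW variance (13)
    z = 2.0 / math.pi * math.atan(1.0 / w_sum)  # IDW distance (14)
    return f_hat, s, z


def acquisition(x, X, F, alpha=1.0, delta=0.5, eps_dF=1e-4):
    """a(x) = f_hat(x) - alpha*s(x) - delta*DeltaF*z(x)."""
    dF = max(max(F) - min(F), eps_dF)
    f_hat, s, z = idw_terms(x, X, F)
    return f_hat - alpha * s - delta * dF * z


X = [-2.4, -1.2, 0.0, 1.2, 2.4]  # hypothetical initial samples
F = [f(xi) for xi in X]
grid = [-3 + 6 * k / 6000 for k in range(6001)]  # crude stand-in for a global solver
x_next = min(grid, key=lambda x: acquisition(x, X, F))
print(f"x_next = {x_next:.3f}, a(x_next) = {acquisition(x_next, X, F):.4f}")
```

At every sample both exploration terms vanish, so \(a(x_i)=f_i\); any point where \(a\) drops below \(\min _i f_i\) is either predicted to be better or rewarded for being unexplored.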

The following result, whose easy proof is reported in “Appendix A”, highlights a nice property of the acquisition function a.

Lemma 2

Function a is differentiable everywhere on \({\mathbb R}^n\).

Problem (16) is a global optimization problem whose objective function and constraints are very easy to evaluate. It can be solved very efficiently using various global optimization techniques, either derivative-free [32] or, if \(\mathcal {X}=\{x: g(x)\le 0\}\) and g is also differentiable, derivative-based. In case some components of vector x are restricted to be integer, (16) can be solved by mixed-integer programming.

5 Global optimization algorithm

Algorithm 1, that we will refer to as GLIS (GLobal minimum using Inverse distance weighting and Surrogate radial basis functions), summarizes the proposed approach to solve the global optimization problem (1) using surrogate functions (either IDW or RBF) and the IDW acquisition function (15).

As is common in global optimization based on surrogate functions, in Step 4 Latin Hypercube Sampling (LHS) [28] is used to generate the initial set X of samples in the given range \(\ell ,u\). Note that the generated initial points may not satisfy the inequality constraints \(x\in \mathcal {X}\). We distinguish between two cases:

- (i) the objective function f can be evaluated outside the feasible set \(\mathcal {F}\);
- (ii) f cannot be evaluated outside \(\mathcal {F}\).

In the first case, initial samples of f falling outside \(\mathcal {F}\) are still useful to define the surrogate function and can therefore be kept. In the second case, since f cannot be evaluated at initial samples outside \(\mathcal {F}\), a possible approach is to generate more than \(N_{\mathrm{init}}\) samples and discard the infeasible ones before evaluating f. For example, the author of [6] suggests the simple method reported in Algorithm 2, which requires the feasible set \(\mathcal {F}\) to be full-dimensional. In case of linear inequality constraints \(\mathcal {X}=\{x:\ Ax\le b\}\), full-dimensionality of the feasible set \(\mathcal {F}\) can be easily checked by computing the Chebyshev radius \(r_{\mathcal {F}}\) of \(\mathcal {F}\) via the LP [8]

\[
\begin{array}{rll}
r_{\mathcal {F}}=\max _{r,x} & r & \\
\text{s.t.} & A_ix+r\Vert A_i\Vert _2\le b_i, & i=1,\ldots ,q\\
 & x_i+r\le u_i,\ \ -x_i+r\le -\ell _i, & i=1,\ldots ,n
\end{array} \tag{17}
\]

where in (17) the subscript i denotes the ith row (component) of a matrix (vector). The polyhedron \(\mathcal {F}\) is full dimensional if and only if \(r_{\mathcal {F}}>0\). Clearly, the smaller the ratio between the volume of \(\mathcal {F}\) and the volume of the bounding box \(B_{\ell _g,u_g}\), the larger on average will be the number of samples generated by Algorithm 2.
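Algorithm 2 itself is not reproduced here, so the following is only a hypothetical rejection scheme matching the description above: draw LHS batches in the box, discard infeasible points before any evaluation of f, and enlarge the batch (here by doubling, an assumption of ours) until \(N_{\mathrm{init}}\) feasible points are found. The basic LHS generator, the constraint, and all names are illustrative.

```python
import random


def latin_hypercube(n_samples, lb, ub, rng):
    """Basic LHS: each coordinate range is split into n_samples strata, one point per stratum."""
    cols = []
    for lo, hi in zip(lb, ub):
        strata = list(range(n_samples))
        rng.shuffle(strata)  # random pairing of strata across coordinates
        cols.append([lo + (hi - lo) * (k + rng.random()) / n_samples for k in strata])
    return [list(point) for point in zip(*cols)]


def feasible_initial_samples(n_init, lb, ub, is_feasible, rng, max_rounds=20):
    """Draw LHS batches of growing size and keep only feasible points (hypothetical scheme)."""
    n_gen = n_init
    for _ in range(max_rounds):
        X = [x for x in latin_hypercube(n_gen, lb, ub, rng) if is_feasible(x)]
        if len(X) >= n_init:
            return X[:n_init]
        n_gen *= 2  # enlarge the batch; the growth rule is an assumption
    raise RuntimeError("too few feasible samples: is the feasible set full-dimensional?")


def is_feasible(x):  # hypothetical polyhedral constraint x1 + x2 <= 0.5
    return x[0] + x[1] <= 0.5


X0 = feasible_initial_samples(6, [-1.0, -1.0], [1.0, 1.0], is_feasible, random.Random(0))
print(len(X0))
```

Truncating a larger LHS batch to its first \(N_{\mathrm{init}}\) feasible points loses part of the stratification, which is one reason a small volume ratio between \(\mathcal {F}\) and the bounding box makes this approach less attractive.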

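Note that, as an alternative to LHS, the IDW distance function (14) could also be used to generate \(N_{\mathrm{init}}\) feasible points by solving

\[
x_{N+1}=\arg \max _{x}\ z(x)\quad \text{s.t.}\ \ \ell \le x\le u,\ \ x\in \mathcal {X}
\]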

for \(N=1,\ldots ,N_{\mathrm{init}}-1\), for any \(x_1\in \mathcal {F}\).
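A minimal sketch of this sequential design, assuming (our simplification) that the maximization of z is carried out over a finite pool of feasible candidate points instead of by a global solver; the candidate pool, \(x_1\), and the function names are hypothetical.

```python
import math


def idw_distance(x, X):
    """z(x) of (14) with the inverse squared distance weights (3a)."""
    d2 = [sum((a - b) ** 2 for a, b in zip(x, xi)) for xi in X]
    if min(d2) == 0.0:
        return 0.0
    return 2.0 / math.pi * math.atan(1.0 / sum(1.0 / d for d in d2))


def z_initial_design(x1, n_init, candidates):
    """Greedy design: x_{N+1} = argmax z(x) over a finite pool of feasible candidates."""
    X = [list(x1)]
    while len(X) < n_init:
        X.append(list(max(candidates, key=lambda c: idw_distance(c, X))))
    return X


pool = [[-1 + k / 100] for k in range(201)]  # hypothetical feasible candidates in [-1, 1]
design = z_initial_design([0.0], 3, pool)
print(design)
```

Starting from \(x_1=0\), the design first jumps to a boundary point and then to the opposite boundary, which is the space-filling behavior expected from maximizing z.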

The examples reported in this paper use the Particle Swarm Optimization (PSO) algorithm [41] to solve problem (16) at Step 6.2, although several other global optimization methods, such as DIRECT [22] or others [18, 32], could be used instead. Inequality constraints \(\mathcal {X}=\{x:\ g(x)\le 0\}\) can be handled as penalty functions, for example by replacing (16) with

\[
x_{N+1}=\arg \min _{x}\ a(x)+\rho \,\Delta F\sum _{i=1}^q\max \{g_i(x),0\}^2\quad \text{s.t.}\ \ \ell \le x\le u \tag{18}
\]

where in (18) \(\rho \gg 1\). Note that due to the heuristic involved in constructing function a, it is not crucial to find global solutions of very high optimality accuracy when solving problem (16). Regarding feasibility, in case \(x_{N+1}\) violates the constraints and f cannot be evaluated outside \(\mathcal {X}\), a remedy would be to increase the penalty parameter \(\rho\) and/or to slightly tighten the constraints by penalizing \(\max \{g_i(x)+\epsilon _g,0\}\) in (18) instead of \(\max \{g_i(x),0\}\), for some small positive scalar \(\epsilon _g\).
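A minimal sketch of the penalized objective, assuming a quadratic penalty on constraint violations scaled by \(\rho \Delta F\) (the \(\Delta F\) scaling is our assumption, chosen so that the penalty has the same amplitude as a), together with the tightening \(g_i(x)+\epsilon _g\) described above. The toy acquisition and constraint are hypothetical.

```python
def penalized_acquisition(a, g, rho, delta_F, eps_g=0.0):
    """Return x -> a(x) + rho*DeltaF*sum_i max(g_i(x) + eps_g, 0)^2.

    eps_g > 0 penalizes the tightened constraints g_i(x) + eps_g <= 0.
    """
    def a_pen(x):
        violation = sum(max(gi + eps_g, 0.0) ** 2 for gi in g(x))
        return a(x) + rho * delta_F * violation
    return a_pen


def a(x):  # hypothetical acquisition function
    return x[0] ** 2


def g(x):  # hypothetical constraint x >= 1, written as 1 - x <= 0
    return [1.0 - x[0]]


a_pen = penalized_acquisition(a, g, rho=1000.0, delta_F=1.0)
print(a_pen([2.0]), a_pen([0.0]))
```

With \(\epsilon _g>0\), points that are barely feasible are also penalized, which pushes the minimizer slightly inside \(\mathcal {X}\) when f cannot be evaluated outside it.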

The exploration parameter \(\alpha\) promotes visiting points in \([\ell ,u]\) where the surrogate has the largest variance, whereas \(\delta\) promotes pure exploration independently of the surrogate, as it is only based on the sampled points \(x_1,\ldots ,x_N\) and their mutual distances. For example, if \(\alpha =0\) and \(\delta \gg 1\), Algorithm 1 will try to explore the entire feasible region, with consequently slower detection of points x with low cost f(x). On the other hand, setting \(\delta =0\) will make GLIS proceed only based on the surrogate and its variance, which may lead it to miss regions in \([\ell ,u]\) where a global optimizer is located. For \(\alpha =\delta =0\), GLIS proceeds by pure minimization of \({\hat{f}}\) which, as observed earlier, can easily lead to convergence away from a global optimizer.

Figure 7 shows the first six iterations of the GLIS algorithm when applied to minimize the function f given in (6) in \([-3,3]\) with \(\alpha =1\), \(\delta =0.5\).

Fig. 7. GLIS steps when applied to minimize the function f given in (6) using the same settings as in Fig. 6 and \(\epsilon _{\mathrm{SVD}}=10^{-6}\). The plots show function f (blue), its samples \(f_i\) (blue circles), the thin plate spline interpolation \({\hat{f}}\) with \(\epsilon =0.01\) (green), the acquisition function a (yellow), and the minimum of the acquisition function reached at \(x_{N+1}\) (purple diamond) (Color figure online)

5.1 Computational complexity

The complexity of Algorithm 1 as a function of the number \(N_\mathrm{max}\) of iterations and of the dimension n of the optimization space, not counting the cost of evaluating f, depends on Steps 6.1 and 6.2. The latter depends on the global optimizer used to solve Problem (16), whose cost typically depends heavily on n. Step 6.1 involves computing \(N_{\mathrm{max}}(N_\mathrm{max}-1)\) RBF values \(\phi (\epsilon d(x_i,x_j))\), \(i,j=1,\ldots ,N_{\mathrm{max}}\), \(j\ne i\), and, at each step \(N=N_\mathrm{init},\ldots ,N_{\mathrm{max}}\), computing the SVD of the \(N\times N\) symmetric matrix M in (10a), whose complexity is \(O(N^3)\), and solving the linear system in (10a), whose complexity is \(O(N^2)\).

6 Numerical tests

In this section we report several numerical tests performed to assess the performance of the proposed algorithm (GLIS) and to compare it with Bayesian optimization (BO). For the latter, we used the off-the-shelf algorithm bayesopt implemented in the Statistics and Machine Learning Toolbox for MATLAB [39], with the lower confidence bound as acquisition function. All tests were run on an Intel i7-8550 CPU @1.8GHz machine. Algorithm 1 was run in MATLAB R2019b in interpreted code. The PSO solver [42] was used to solve problem (18). We restrict our comparison to BO, as it is one of the most efficient methods for optimizing expensive black-box functions.

6.1 GLIS optimizing its own parameters

We first use GLIS to optimize its own hyperparameters \(\alpha\), \(\delta\), \(\epsilon\) when solving the minimization problem with f(x) as in (6) and \(x\in [-3,3]\). In what follows, we use the subscript \(()_H\) to denote the parameters/function used in the execution of the outer instance of Algorithm 1 that optimizes \(\alpha\), \(\delta\), \(\epsilon\). To this end, we solve the global optimization problem (1) with \(x=[\alpha \ \delta \ \epsilon ]'\),

\[
f_H(x)=\sum _{i=1}^{N_t}\sum _{h=0}^{N_{\mathrm{max}}/2}(h+1)\left( \min _{N=1,\ldots ,N_{\mathrm{max}}/2+h}f(x_{i,N})-f^*\right) \tag{19}
\]

where f is the scalar function in (6) that we want to minimize in \([-3,3]\), and we set \(\ell _H=[0\ 0\ 0.1]'\), \(u_H=[3\ 3\ 3]'\), which in our numerical experience is a sufficiently large range. The \(\min\) in (19) provides the best objective value found up to iteration \(N_\mathrm{max}/2+h\), the factor \((h+1)\) penalizes high values of the best objective more heavily the later they occur during the iterations, \(N_t=20\) is the number of times Algorithm 1 is executed to minimize f for the same triplet \((\alpha ,\delta ,\epsilon )\), \(N_\mathrm{max}=20\) is the number of times f is evaluated per execution, and \(x_{i,N}\) is the sample generated by Algorithm 1 during the ith run at step N, \(i=1,\ldots ,N_t\), \(N=1,\ldots ,N_{\mathrm{max}}\). Clearly, (19) penalizes failure to converge close to the global optimum \(f^*\) within \(N_{\mathrm{max}}\) iterations, regardless of how the algorithm performs during the first \(N_{\mathrm{max}}/2-1\) iterations.
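As a sketch of the hyper-objective, following the verbal description above (best value up to iteration \(N_{\mathrm{max}}/2+h\), gap to \(f^*\), weight \((h+1)\), summed over the \(N_t\) runs), the function below scores recorded run histories. Summing over \(h=0,\ldots ,N_{\mathrm{max}}/2\) without averaging over runs is our reading of (19), not a verified transcription; the histories are hypothetical.

```python
def hyper_objective(histories, f_star):
    """Score GLIS runs: histories[i][N - 1] holds f(x_{i,N}) for run i and step N.

    For h = 0..N_max/2, the best value found within the first N_max/2 + h evaluations
    is compared with f_star and weighted by (h + 1), so late convergence costs more.
    """
    total = 0.0
    for hist in histories:
        half = len(hist) // 2
        for h in range(half + 1):
            best = min(hist[: half + h])  # best value within the first N_max/2 + h steps
            total += (h + 1) * (best - f_star)
    return total


runs = [[3.0, 2.0, 1.0, 0.0], [0.0, 5.0, 5.0, 5.0]]  # hypothetical histories, N_max = 4
print(hyper_objective(runs, f_star=0.0))
```

The second run reaches \(f^*\) at its first evaluation and contributes nothing, while the first run is charged for every window in which it has not yet reached \(f^*\).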

In optimizing (19), the outer instance of Algorithm 1 is run with \(\alpha _H=2\), \(\delta _H=0.5\), \(\epsilon _H=0.5\), \(N_{\mathrm{init,H}}=8\), \(N_{\mathrm{max,H}}=100\) [which means that \(f_H\) in (19) is evaluated 100 times, each evaluation requiring executing Algorithm 1 \(N_t=20\) times to minimize function f], and PSO as the global optimizer of the acquisition function. The RBF inverse quadratic function is used in both the inner and outer instances of Algorithm 1. The resulting optimal selection is

Figure 8 compares the behavior of GLIS (Algorithm 1) when minimizing f(x) as in (6) in \([-3,3]\) with tentative parameters \(\alpha =1\), \(\delta =1\), \(\epsilon =0.5\) and with the optimal values in (20). The figure also shows the results obtained by using BO on the same problem.

Clearly the results of the hyper-optimization depend on the function f which is minimized in the inner loop. For a more comprehensive and general optimization of GLIS hyperparameters, one could alternatively consider in \(f_H\) the average performance with respect to a collection of problems instead of just one problem.

Fig. 8. Minimization of f(x) as in (6) in \([-3,3]\): tentative hyperparameters (left) and optimal hyperparameters (right). The average performance obtained over \(N_{\mathrm{test}}=100\) runs as a function of evaluations of \(f_H\), along with the band defined by the best- and worst-case instances

6.2 Benchmark global optimization problems

We test the proposed global optimization algorithm on standard benchmark problems, summarized in Table 1. For each function, the table shows the number of variables, the lower and upper bounds, and the name of the example in [19] that defines the function. For lack of space, we only consider GLIS implemented with inverse quadratic RBFs for the surrogate and IDW only for exploration, since this combination was found experimentally to be a robust choice compared to other RBFs.
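As an illustration of the surrogate used in these experiments, the following minimal NumPy sketch fits a pure RBF interpolant (no polynomial tail, an assumption of this sketch) with the inverse quadratic function \(\phi (\epsilon d)=1/(1+(\epsilon d)^2)\), where \(\epsilon\) is the RBF shape parameter. The interpolation system is solved through an SVD that discards singular values below a threshold `eps_svd`; the exact truncation rule of (10c) may differ, and the names `fit_rbf` and `inverse_quadratic` are hypothetical, not part of the GLIS package.

```python
import numpy as np

def inverse_quadratic(d, eps):
    # Inverse quadratic RBF: phi(eps * d) = 1 / (1 + (eps * d)^2)
    return 1.0 / (1.0 + (eps * d) ** 2)

def fit_rbf(X, F, eps, eps_svd=1e-6):
    """Fit f_hat(x) = sum_j beta_j * phi(eps * ||x - x_j||) interpolating (X, F).

    X has shape (N, n), F has shape (N,). The system M beta = F is solved by SVD,
    zeroing the inverse of singular values below eps_svd (assumed truncation rule).
    """
    X = np.asarray(X, dtype=float)
    F = np.asarray(F, dtype=float)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)  # N x N pairwise distances
    M = inverse_quadratic(D, eps)                                # symmetric interpolation matrix
    U, S, Vt = np.linalg.svd(M)
    S_inv = np.zeros_like(S)
    keep = S > eps_svd                       # discard (near-)singular directions
    S_inv[keep] = 1.0 / S[keep]
    beta = Vt.T @ (S_inv * (U.T @ F))        # beta = V * pinv(Sigma) * U' * F

    def f_hat(x):
        d = np.linalg.norm(X - np.asarray(x, dtype=float), axis=1)
        return inverse_quadratic(d, eps) @ beta

    return f_hat
```

For example, `f_hat = fit_rbf(X, F, eps=1.0)` returns a callable that reproduces `F` at the samples up to round-off when the interpolation matrix is well conditioned.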

As a reference target for assessing the quality of the optimization results, the global optimum of each benchmark function was computed with the DIRECT algorithm [22] through the NLopt interface [20], except for the ackley and stepfunction2 benchmarks, for which PSO was used instead because DIRECT converged slowly on those problems. The resulting global minima were validated, when possible, against the results reported in [19] or, for one- and two-dimensional problems, by inspection.

Algorithm 1 is run using the inverse quadratic RBF, with hyperparameters obtained by dividing the values in (20) by the number n of variables. The rationale is that exploration is more difficult in higher dimensions, so it is better to rely more on the surrogate function during acquisition. The threshold \(\epsilon _\mathrm{SVD}=10^{-6}\) is used to compute the RBF coefficients in (10c), and the number of initial samples is \(N_\mathrm{init}=2n\).
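A minimal sketch of this setup, assuming NumPy: the tuned triplet is divided by n, and \(N_\mathrm{init}=2n\) initial samples are drawn in the box of bounds. The Latin hypercube design used here for the initial samples is an assumption of this sketch (the text only states \(N_\mathrm{init}=2n\)), and the names `scaled_hyperparameters` and `latin_hypercube` are hypothetical.

```python
import numpy as np

def scaled_hyperparameters(alpha, delta, eps, n):
    """Divide the tuned (alpha, delta, eps) by the number n of variables."""
    return alpha / n, delta / n, eps / n

def latin_hypercube(n_samples, lb, ub, rng=None):
    """Latin hypercube design in the box [lb, ub].

    Each dimension is split into n_samples equal strata and every stratum
    receives exactly one point, jittered uniformly inside it.
    """
    rng = np.random.default_rng(rng)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    n = lb.size
    # Independent random permutation of the strata for each dimension
    strata = np.column_stack([rng.permutation(n_samples) for _ in range(n)])
    u = (strata + rng.random((n_samples, n))) / n_samples   # points in [0, 1)^n
    return lb + u * (ub - lb)
```

For instance, with n = 2 one would call `latin_hypercube(2 * n, lb, ub)` to obtain \(N_\mathrm{init}=4\) points; any numerical triplet passed to `scaled_hyperparameters` in a test is a placeholder, not the values in (20).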

For each benchmark, the problem is solved \(N_{\mathrm{test}}=100\) times to collect statistically meaningful results. The last two columns of Table 1 report the average CPU time spent solving the \(N_{\mathrm{test}}=100\) instances of each benchmark using BO and GLIS. As the benchmark functions are very cheap to compute, the CPU time spent evaluating the \(N_{\mathrm{max}}\) function values F is negligible, so the reported times are essentially those due to the execution of the algorithms themselves. Algorithm 1 (GLIS) is between 4.6 and 9.4 times faster than Bayesian optimization (about 7 times faster on average). The execution time of the Python 3.7 implementation of GLIS on the same machine, using the PSO package pyswarm (https://pythonhosted.org/pyswarm) to optimize the acquisition function, is similar to that of the BO package GPyOpt [38].

The results of the tests are reported in Fig. 9, where each plot shows the average over \(N_{\mathrm{test}}=100\) runs of the best function value obtained as a function of the number of function evaluations, the band defined by the best-case and worst-case instances, and how the global optimum is approached.

Fig. 9 Comparison between Algorithm 1 (GLIS) and Bayesian optimization (BO) on benchmark problems. Each plot reports the best function value obtained as a function of the number of function evaluations: average over \(N_{\mathrm{test}}=100\) runs (thick lines), band defined by the best- and worst-case instances (shadowed areas), and global optimum to be attained (black dashed line)
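The statistics plotted in Fig. 9 can be computed as in the following NumPy sketch, assuming the objective values of all runs are stored in an \(N_{\mathrm{test}}\times N_{\mathrm{max}}\) array (a hypothetical layout; `convergence_band` is not a function of the GLIS package). The best-so-far value is taken along each run, then averaged and bounded across runs.

```python
import numpy as np

def convergence_band(F):
    """F[i, k] = objective value at evaluation k of run i, shape (N_test, N_max).

    Returns, for each evaluation index k, the mean, best case and worst case
    over runs of the best value found up to evaluation k.
    """
    best_so_far = np.minimum.accumulate(np.asarray(F, dtype=float), axis=1)
    return best_so_far.mean(axis=0), best_so_far.min(axis=0), best_so_far.max(axis=0)
```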

In order to test the algorithm in the presence of constraints, we consider the camelsixhumps problem and solve it under the following constraints

Algorithm 1 is run with hyperparameters obtained by dividing the values in (20) by \(n=2\), with \(\epsilon _{\mathrm{SVD}}=10^{-6}\) and \(N_{\mathrm{init}}=2n\), for \(N_{\mathrm{max}}=20\) iterations, and with penalty \(\rho =1000\) in (18). The results are plotted in Fig. 10. The two global minima of the unconstrained function are located at \(\left[ {\begin{matrix} -0.0898 \\ 0.7126 \end{matrix}} \right]\) and \(\left[ {\begin{matrix}0.0898\\ -0.7126 \end{matrix}} \right]\).
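The camelsixhumps objective is the standard six-hump camel function; the sketch below evaluates it at the two unconstrained minimizers quoted above, where it takes the value of about -1.0316. The constraints of this test are not reproduced in this section, so the penalty wrapper assumes generic constraints \(g(x)\le 0\) and a quadratic penalty \(\rho \sum \max (g(x),0)^2\); whether (18) uses exactly this form, and whether it is applied to the acquisition function rather than to f, is an assumption. The names `camelsixhumps` and `penalized` are hypothetical.

```python
import numpy as np

def camelsixhumps(x):
    """Six-hump camel function of two variables."""
    x1, x2 = x
    return (4 - 2.1 * x1**2 + x1**4 / 3) * x1**2 + x1 * x2 + (4 * x2**2 - 4) * x2**2

def penalized(fun, constraints, rho=1000.0):
    """Wrap fun with the (assumed) quadratic penalty rho * sum(max(g(x), 0)^2).

    constraints is a list of callables g with the convention g(x) <= 0 when feasible.
    """
    def wrapped(x):
        viol = np.array([max(g(x), 0.0) for g in constraints])
        return fun(x) + rho * np.sum(viol ** 2)
    return wrapped
```

Any concrete constraint list, for example `[lambda x: x[0] - 1.0]`, is illustrative only and is not the constraint set used in this test.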

6.3 ADMM hyperparameter tuning for QP

The Alternating Direction Method of Multipliers (ADMM) [7] is a popular method for solving optimization problems such as the following convex Quadratic Program (QP)

where \(z\in {\mathbb R}^n\) is the optimization vector, \(\theta \in {\mathbb R}^p\) is a vector of parameters affecting the problem, \(A\in {\mathbb R}^{q\times n}\), \(b\in {\mathbb R}^{q}\), \(S\in {\mathbb R}^{q\times p}\), and we assume \(Q=Q'\succ 0\). Problems of the form (21) arise, for example, in model predictive control applications [2, 3], where z represents a sequence of future control inputs to optimize and \(\theta\) collects signals that change continuously at runtime depending on measurements and set-point values. ADMM can be used effectively to solve QP problems of the form (21); see, for example, the solver described in [1]. A very simple ADMM formulation for QP is summarized in Algorithm 3.
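Algorithm 3 is not reproduced in this section. As a hedged illustration of what a very simple ADMM scheme for QP can look like, the sketch below implements the textbook scaled-form ADMM with over-relaxation described in [7], for a QP assumed to be written as \(\min \frac{1}{2}z'Qz+c'z\) subject to \(Az\le u\), with \(u=b+S\theta\) in the case of (21). The function name and the linear term c are assumptions of this sketch; `rho` and `alpha` play the roles of \({\bar{\rho }}\) and \({\bar{\alpha }}\), but the iteration is not necessarily identical to Algorithm 3.

```python
import numpy as np

def admm_qp(Q, c, A, ub, rho=1.0, alpha=1.6, iters=100):
    """Scaled-form ADMM with over-relaxation for min 0.5 z'Qz + c'z s.t. A z <= ub.

    Splitting: A z = s with s <= ub. rho > 0 is the penalty parameter and
    alpha in (0, 2) the relaxation parameter.
    """
    Q, A = np.asarray(Q, dtype=float), np.asarray(A, dtype=float)
    c, ub = np.asarray(c, dtype=float), np.asarray(ub, dtype=float)
    s = np.zeros(A.shape[0])            # slack variable, s = A z at convergence
    w = np.zeros(A.shape[0])            # scaled dual variable
    z = np.zeros(Q.shape[0])
    # The z-update matrix is constant: factor it once (positive definite since Q > 0)
    L = np.linalg.cholesky(Q + rho * A.T @ A)
    for _ in range(iters):
        rhs = -c + rho * A.T @ (s - w)
        z = np.linalg.solve(L.T, np.linalg.solve(L, rhs))   # solve (Q + rho A'A) z = rhs
        Az_hat = alpha * (A @ z) + (1.0 - alpha) * s         # over-relaxation step
        s = np.minimum(Az_hat + w, ub)                        # projection onto {s <= ub}
        w = w + Az_hat - s                                    # scaled dual update
    return z
```

For (21), one would call `admm_qp(Q, c, A, b + S @ theta, rho=rho_bar, alpha=alpha_bar, iters=N)`, with `c` standing for whatever linear term (21) contains (zero if none).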

We consider a randomly generated QP test problem with \(n=5\), \(q=10\), \(p=3\) that is feasible for all \(\theta \in [-1,1]^3\), whose matrices are reported in “Appendix B” for reference. We set \(N=100\) in Algorithm 3, and generate \(M=2000\) samples \(\theta _j\) uniformly distributed in \([-1,1]^3\). The aim is to find the hyperparameters \(x=[{\bar{\rho }}\ {\bar{\alpha }}]'\) that provide the best QP solution quality after N ADMM iterations. Quality is expressed by the following objective function

where \(\phi ^*_j(x)\), \(z^*_j(x)\) are the optimal value and optimizer found at run \(\#j\), respectively, and \(\phi ^*(x)\) is the optimal value of the QP problem computed by the very fast and accurate ODYS QP solver [10]. The first term in (22) measures relative optimality and the second term relative constraint violation; we set \({\bar{\beta }}=1\) to weight the two equally. Function f in (22) is minimized over the box defined by \(\ell =\left[ {\begin{matrix}0.01\\ 0.01 \end{matrix}} \right]\) and \(u=\left[ {\begin{matrix}3\\ 3 \end{matrix}} \right]\) using GLIS with the same parameters used in Sect. 6.2 and, for comparison, by Bayesian optimization. Since ADMM returns suboptimal solutions, the argument of the logarithm in (22) was always found to be positive when acquiring the samples \(f_i\) in our tests. The test is repeated \(N_{\mathrm{test}}=100\) times and the results are depicted in Fig. 11. GLIS attains slightly better function values than BO for the same number of function evaluations, both on average and in the worst case. The hyperparameters that minimize the selected ADMM performance index (22) are \({\bar{\rho }}=0.1566\), \({\bar{\alpha }}=1.9498\).

7 Conclusions

This paper has proposed an approach based on surrogate functions to address global optimization problems whose objective function is expensive to evaluate, possibly under constraints that are inexpensive to evaluate. Contrary to Bayesian optimization methods, the approach is driven by deterministic arguments: radial basis functions (or inverse distance weighting) are used to create the surrogate, and inverse distance weighting is used to characterize the uncertainty between the surrogate and the black-box function to optimize, as well as to promote the exploration of the feasible space. The computational burden of the algorithm is lighter than that of Bayesian optimization, while performance is comparable. Clearly, as for many other black-box global optimization algorithms, the main limitation of the algorithm is the dimension of the optimization vector it can cope with.

Current research is devoted to extending the approach to constraints that are also expensive to evaluate, and to exploring whether performance can be improved by adapting the parameters \(\alpha\) and \(\delta\) during the search. Future research should address the convergence properties of the approach by investigating assumptions on the black-box function f and on the parameters \(\alpha ,\delta ,\epsilon _{\rm{SVD}},\epsilon _{\Delta {\rm F}}>0\) of the algorithm that guarantee convergence, for example using the arguments in [15] based on the results in [40].

Notes

Matrices U and V have the same columns, modulo a change of sign. Indeed, as M is symmetric, we could instead solve the symmetric eigenvalue problem \(M=T'\Lambda T\), \(T'T=I\), which gives \(\Sigma _{ii}=|\Lambda _{ii}|\), and set \(U=V=T'\). As N is typically small, we neglect the computational advantages of this alternative and adopt the SVD here.
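The claim in this note can be checked numerically. The following NumPy sketch compares the SVD and the symmetric eigendecomposition of a random symmetric matrix standing in for M. Note that `numpy.linalg.eigh` returns the eigenvectors as columns, i.e. \(M=T\,\mathrm{diag}(\lambda )\,T'\) in NumPy's convention, whereas the note writes \(M=T'\Lambda T\).

```python
import numpy as np

# Random symmetric (generally indefinite) matrix standing in for M
rng = np.random.default_rng(0)
B = rng.standard_normal((5, 5))
M = B + B.T

U, S, Vt = np.linalg.svd(M)      # M = U diag(S) V'
lam, T = np.linalg.eigh(M)       # M = T diag(lam) T', eigenvectors in the columns of T

# Singular values are the absolute eigenvalues, sorted in decreasing order
sigma_from_eig = np.sort(np.abs(lam))[::-1]

# Dot product of matching columns of U and V: +1 or -1 (the sign of the eigenvalue)
col_signs = np.sum(U * Vt.T, axis=0)
```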

References

Banjac, G., Stellato, B., Moehle, N., Goulart, P., Bemporad, A., Boyd, S.: Embedded code generation using the OSQP solver. In: Proc. 56th IEEE Conf. on Decision and Control, pp. 1906–1911. Melbourne, Australia (2017). https://github.com/oxfordcontrol/osqp

Bemporad, A.: Model-based predictive control design: new trends and tools. In: Proc. 45th IEEE Conf. on Decision and Control, pp. 6678–6683, San Diego, CA (2006)

Bemporad, A.: A multiparametric quadratic programming algorithm with polyhedral computations based on nonnegative least squares. IEEE Trans. Autom. Control 60(11), 2892–2903 (2015)

Bemporad, A.: Global optimization via inverse distance weighting. arXiv:1906.06498 (2019). Code available at http://cse.lab.imtlucca.it/~bemporad/glis

Bemporad, A., Piga, D.: Active preference learning based on radial basis functions. arXiv:1909.13049 (2019). Code available at http://cse.lab.imtlucca.it/~bemporad/idwgopt

Blok, H.J.: The lhsdesigncon MATLAB function (2014). https://github.com/rikblok/matlab-lhsdesigncon

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, New York, NY, USA (2004). http://www.stanford.edu/~boyd/cvxbook.html

Brochu, E., Cora, V.M., De Freitas, N.: A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. arXiv preprint arXiv:1012.2599 (2010)

Cimini, G., Bemporad, A., Bernardini, D.: ODYS QP Solver. ODYS S.r.l. (September 2017). https://odys.it/qp

Costa, A., Nannicini, G.: RBFOpt: an open-source library for black-box optimization with costly function evaluations. Math. Program. Comput. 10(4), 597–629 (2018)

Eberhart, R., Kennedy, J.: A new optimizer using particle swarm theory. In: Proceedings of the Sixth International Symposium on Micro Machine and Human Science, pp. 39–43. Nagoya (1995)

Feurer, M., Klein, A., Eggensperger, K., Springenberg, J.T., Blum, M., Hutter, F.: Auto-sklearn: efficient and robust automated machine learning. In: Hutter, F., Kotthoff, L., Vanschoren, J. (eds.) Automated Machine Learning: Methods, Systems, Challenges, pp. 113–134. Springer International Publishing (2019)

Forgione, M., Piga, D., Bemporad, A.: Efficient calibration of embedded MPC. In: Proc. 21st IFAC World Congress (2020). https://arxiv.org/abs/1911.13021

Gutmann, H.-M.: A radial basis function method for global optimization. J. Glob. Optim. 19, 201–227 (2001)

Hansen, N., Ostermeier, A.: Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 9(2), 159–195 (2001)

Hardy, R.L.: Multiquadric equations of topography and other irregular surfaces. J. Geophys. Res. 76(8), 1905–1915 (1971)

Huyer, W., Neumaier, A.: Global optimization by multilevel coordinate search. J. Glob. Optim. 14(4), 331–355 (1999)

Jamil, M., Yang, X.-S.: A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 4(2), 150–194 (2013). arXiv:1308.4008

Johnson, S.G.: The NLopt nonlinear-optimization package. http://github.com/stevengj/nlopt

Jones, D.R.: A taxonomy of global optimization methods based on response surfaces. J. Glob. Optim. 21(4), 345–383 (2001)

Jones, D.R.: DIRECT global optimization algorithm. In: Encyclopedia of Optimization, pp. 725–735 (2009)

Jones, D.R., Schonlau, M., Welch, W.J.: Efficient global optimization of expensive black-box functions. J. Glob. Optim. 13(4), 455–492 (1998)

Joseph, V.R., Kang, L.: Regression-based inverse distance weighting with applications to computer experiments. Technometrics 53(3), 255–265 (2011)

Kushner, H.J.: A new method of locating the maximum point of an arbitrary multipeak curve in the presence of noise. J. Basic Eng. 86(1), 97–106 (1964)

Matheron, G.: Principles of geostatistics. Econ. Geol. 58(8), 1246–1266 (1963)

McDonald, D.B., Grantham, W.J., Tabor, W.L., Murphy, M.J.: Global and local optimization using radial basis function response surface models. Appl. Math. Model. 31(10), 2095–2110 (2007)

McKay, M.D., Beckman, R.J., Conover, W.J.: Comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21(2), 239–245 (1979)

Piga, D., Forgione, M., Formentin, S., Bemporad, A.: Performance-oriented model learning for data-driven MPC design. IEEE Control Syst. Lett. (2019). Also in: Proc. 58th IEEE Conf. on Decision and Control, Nice, France (2019). arXiv:1904.10839

Powell, M.J.D.: The NEWUOA software for unconstrained optimization without derivatives. In: Large-Scale Nonlinear Optimization, pp. 255–297. Springer (2006)

Regis, R.G., Shoemaker, C.A.: Constrained global optimization of expensive black box functions using radial basis functions. J. Glob. Optim. 31(1), 153–171 (2005)

Rios, L.M., Sahinidis, N.V.: Derivative-free optimization: a review of algorithms and comparison of software implementations. J. Glob. Optim. 56(3), 1247–1293 (2013)

Rippa, S.: An algorithm for selecting a good value for the parameter c in radial basis function interpolation. Adv. Comput. Math. 11(2–3), 193–210 (1999)

Sacks, J., Welch, W.J., Mitchell, T.J., Wynn, H.P.: Design and analysis of computer experiments. Stat. Sci. 4(4), 409–423 (1989)

Shahriari, B., Swersky, K., Wang, Z., Adams, R.P., De Freitas, N.: Taking the human out of the loop: a review of Bayesian optimization. Proc. IEEE 104(1), 148–175 (2015)

Shepard, D.: A two-dimensional interpolation function for irregularly-spaced data. In: Proc. ACM National Conference, pp. 517–524. New York (1968)

Snoek, J., Larochelle, H., Adams, R.P.: Practical Bayesian optimization of machine learning algorithms. In: Advances in Neural Information Processing Systems, pp. 2951–2959 (2012)

The GPyOpt authors. GPyOpt: a Bayesian optimization framework in Python. http://github.com/SheffieldML/GPyOpt (2016)

The Mathworks, Inc. Statistics and Machine Learning Toolbox User’s Guide (2019). https://www.mathworks.com/help/releases/R2019a/pdf_doc/stats/stats.pdf

Törn, A., Žilinskas, A.: Global Optimization, vol. 350. Springer, Berlin (1989)

Vaz, A.I.F., Vicente, L.N.: A particle swarm pattern search method for bound constrained global optimization. J. Glob. Optim. 39(2), 197–219 (2007)

Vaz, A.I.F., Vicente, L.N.: PSwarm: a hybrid solver for linearly constrained global derivative-free optimization. Optim. Methods Softw. 24, 669–685 (2009). http://www.norg.uminho.pt/aivaz/pswarm/

Whitley, D.: A genetic algorithm tutorial. Stat. Comput. 4(2), 65–85 (1994)

Acknowledgements

Open access funding provided by Scuola IMT Alti Studi Lucca within the CRUI-CARE Agreement.


Appendices


A Proofs

Proof of Lemma 1

Property P1 easily follows from (4). Property P2 also easily follows from the fact that for all \(x\in {\mathbb R}^n\) the values \(v_i(x)\in [0,1]\), \(\forall i=1,\ldots ,N\), and \(\sum _{i=1}^Nv_i(x)=1\), so that

Regarding differentiability, we first prove that for all \(i=1,\ldots ,N\), functions \(v_i\) are differentiable everywhere on \({\mathbb R}^n\), and that in particular \(\nabla v_i(x_j)=0\) for all \(j=1,\ldots ,N\). Clearly, functions \(v_i\) are differentiable for all \(x\not \in \{x_1,\ldots ,x_N\}\), \(\forall i=1,\ldots ,N\). Let \(e^h\) be the hth column of the identity matrix of order n. Consider first the case in which \(w_i(x)\) are given by (3b). The partial derivatives of \(v_i\) at \(x_i\) are

and similarly at \(x_j\), \(j\ne i\) are

In case \(w_i(x)\) are given by (3a) differentiability follows similarly, with \(e^{-t^2}\) replaced by 1. Therefore \({\hat{f}}\) is differentiable and

\(\square\)
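The two properties used in the proof of Lemma 1 (\(v_i(x)\in [0,1]\) with \(\sum _{i=1}^Nv_i(x)=1\), and interpolation at the samples) can be checked with the following NumPy sketch. It assumes that (3a) is \(w_i(x)=1/d_i^2\) and (3b) is \(w_i(x)=e^{-d_i^2}/d_i^2\), consistently with the replacement of \(e^{-t^2}\) by 1 in the proof, and that (4) is the IDW interpolant \({\hat{f}}(x)=\sum _{i=1}^Nv_i(x)f_i\); the function names are hypothetical. Weights are normalized in the log domain so that underflow of \(e^{-d^2}\) far from the samples does not produce 0/0.

```python
import numpy as np

def idw_weights(x, X, gaussian=True):
    """Normalized IDW weights v_i(x) = w_i(x) / sum_j w_j(x).

    gaussian=True : w_i(x) = exp(-d_i^2) / d_i^2  (assumed to be (3b))
    gaussian=False: w_i(x) = 1 / d_i^2            (assumed to be (3a))
    with d_i = ||x - x_i||. At a sample x = x_j the limit value v = e_j is returned.
    """
    d2 = np.sum((np.asarray(X, dtype=float) - np.asarray(x, dtype=float)) ** 2, axis=1)
    hit = np.flatnonzero(d2 == 0.0)
    if hit.size:                        # x coincides with sample x_j: v_j = 1, others 0
        v = np.zeros(d2.size)
        v[hit[0]] = 1.0
        return v
    logw = -np.log(d2) - (d2 if gaussian else 0.0)   # log w_i(x)
    w = np.exp(logw - logw.max())                     # rescaled weights, largest equal to 1
    return w / w.sum()

def idw_surrogate(x, X, F, gaussian=True):
    """IDW interpolant f_hat(x) = sum_i v_i(x) F_i (assumed form of (4))."""
    return idw_weights(x, X, gaussian) @ np.asarray(F, dtype=float)
```

Since the \(v_i(x)\) form a convex combination, the IDW surrogate always lies between the smallest and the largest observed value.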

Proof of Lemma 2

Since, by Lemma 1, \({\hat{f}}\) and \(v_i\), \(i=1,\ldots ,N\), are differentiable, it follows immediately that s(x) is differentiable. Regarding differentiability of z, clearly z is differentiable for all \(x\not \in \{x_1,\ldots ,x_N\}\). Let \(e^h\) be the hth column of the identity matrix of order n. Consider first the case in which \(w_i(x)\) are given by (3b). The partial derivatives of z at \(x_i\) are

In case \(w_i(x)\) are given by (3a) differentiability follows similarly, with \(e^{-t^2}\) replaced by 1. Therefore the acquisition function a is differentiable for all \(\alpha ,\delta \ge 0\). \(\square\)
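The functions s, z and a whose differentiability is established in Lemma 2 are not restated in this section. Assuming they take the form used in the GLIS formulation [4] (an IDW variance s, an arctangent-based exploration term z, and \(a(x)={\hat{f}}(x)-\alpha s(x)-\delta \Delta F z(x)\), with \(\Delta F\) taken here as the range of observed values), the following hypothetical NumPy sketch evaluates a at a point; the exact definitions in the paper may differ. It also makes visible the property exploited in the proof: at a sample, s and z vanish and a reduces to \({\hat{f}}\).

```python
import numpy as np

def acquisition(x, X, F, f_hat, alpha, delta):
    """a(x) = f_hat(x) - alpha*s(x) - delta*DeltaF*z(x) (assumed GLIS-style form).

    s(x): IDW variance sqrt(sum_i v_i(x) (F_i - f_hat(x))^2)
    z(x): exploration term (2/pi) * arctan(1 / sum_i w_i(x)), zero at the samples
    with w_i(x) = exp(-d_i^2) / d_i^2, v_i = w_i / sum_j w_j, DeltaF = max F - min F.
    f_hat is any callable surrogate (e.g. an RBF or IDW interpolant).
    """
    X, F = np.asarray(X, dtype=float), np.asarray(F, dtype=float)
    d2 = np.sum((X - np.asarray(x, dtype=float)) ** 2, axis=1)
    fx = f_hat(x)
    if np.any(d2 == 0.0):                   # at a sample s(x) = z(x) = 0, so a(x) = f_hat(x)
        return fx
    logw = -d2 - np.log(d2)                 # log w_i(x)
    v = np.exp(logw - logw.max())
    v /= v.sum()                            # normalized IDW weights v_i(x)
    s = np.sqrt(v @ (F - fx) ** 2)
    # arctan(1 / sum_i w_i); arctan2 returns pi/2 (so z = 1) if the sum underflows to 0
    z = (2.0 / np.pi) * np.arctan2(1.0, np.exp(logw).sum())
    dF = F.max() - F.min()
    return fx - alpha * s - delta * dF * z
```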

B Matrices of parametric QP considered in Sect. 6.3

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bemporad, A. Global optimization via inverse distance weighting and radial basis functions. Comput Optim Appl 77, 571–595 (2020). https://doi.org/10.1007/s10589-020-00215-w


DOI: https://doi.org/10.1007/s10589-020-00215-w