Abstract

We study the stochastic total variation flow (STVF) equation with linear multiplicative noise. By considering a limit of a sequence of regularized stochastic gradient flows with respect to a regularization parameter \(\varepsilon \) we obtain the existence of a unique variational solution of the STVF equation which satisfies a stochastic variational inequality. We propose an energy preserving fully discrete finite element approximation for the regularized gradient flow equation and show that the numerical solution converges to the solution of the unregularized STVF equation. We perform numerical experiments to demonstrate the practicability of the proposed numerical approximation.


1 Introduction

We study numerical approximation of the stochastic total variation flow (STVF)

where \(\mathcal {O}\subset \mathbb {R}^d\), \(d\ge 1\) is a bounded, convex domain with a piecewise \(C^{2}\)-smooth boundary \(\partial \mathcal {O}\), and \(\lambda \ge 0\), \(T>0\) are constants. We assume that \(x_0,\, g \in \mathbb {L}^2\) and, for simplicity, consider a one-dimensional real-valued Wiener process W; the generalization to a sufficiently regular trace-class noise is straightforward.

Equation (1) can be interpreted as a stochastically perturbed gradient flow of the penalized total variation energy functional

Minimization of the above functional, the so-called ROF method, is a prototypical approach to image denoising, cf. [13]; in this context the function g represents a given noisy image and \(\lambda \) serves as a penalization parameter. Further applications of the functional include, for instance, elastoplasticity and the modeling of damage and fracture; for more details see [4] and the references therein.

The use of stochastically perturbed gradient flows has proven useful in image processing. Stochastic numerical methods for models with nonconvex energy functionals are able to avoid local energy minima and thus achieve faster convergence and/or more accurate results than their deterministic counterparts; see [10], which applies a stochastic level-set method to image segmentation, and [14], which uses a stochastic gradient flow of a modified (nonconvex) total variation energy functional for binary tomography.

Due to the singular character of total variation flow (1), it is convenient to perform numerical simulations using a regularized problem

with a regularization parameter \(\varepsilon >0\). In the deterministic setting (\(W\equiv 0\)) Eq. (3) corresponds to the gradient flow of the regularized energy functional

It is well-known that the minimizers of the above regularized energy functional converge to the minimizers of (2) for \(\varepsilon \rightarrow 0\), cf. [7] and the references therein.
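For reference, the displays (1)–(4) referred to above can be written out as follows. This is a reconstruction under the standard formulation of the total variation flow with homogeneous Dirichlet boundary conditions and linear multiplicative noise; the normalization of the fidelity term is an assumption, chosen to agree with the identity \(\mathcal {J}_{\varepsilon ,\lambda }(0)=\varepsilon \vert \mathcal {O}\vert + \frac{\lambda }{2}\Vert g\Vert ^2\) used in Sect. 3.

\[
\begin{aligned}
\mathrm{d}X &= \operatorname{div}\Big(\frac{\nabla X}{\vert \nabla X\vert }\Big)\,\mathrm{d}t - \lambda (X-g)\,\mathrm{d}t + X\,\mathrm{d}W && \text{in } (0,T)\times \mathcal{O}, \\
X &= 0 && \text{on } (0,T)\times \partial \mathcal{O}, \\
X(0) &= x_0 && \text{in } \mathcal{O},
\end{aligned}
\tag{1}
\]

\[
\mathcal{J}_{\lambda }(u) := \int _{\mathcal{O}} \vert \nabla u\vert \,\mathrm{d}x + \frac{\lambda }{2}\int _{\mathcal{O}} \vert u-g\vert ^2\,\mathrm{d}x,
\tag{2}
\]

\[
\mathrm{d}X^{\varepsilon } = \operatorname{div}\Big(\frac{\nabla X^{\varepsilon }}{\sqrt{\vert \nabla X^{\varepsilon }\vert ^2+\varepsilon ^2}}\Big)\,\mathrm{d}t - \lambda (X^{\varepsilon }-g)\,\mathrm{d}t + X^{\varepsilon }\,\mathrm{d}W, \qquad X^{\varepsilon }(0)=x_0,
\tag{3}
\]

\[
\mathcal{J}_{\varepsilon ,\lambda }(u) := \int _{\mathcal{O}} \sqrt{\vert \nabla u\vert ^2+\varepsilon ^2}\,\mathrm{d}x + \frac{\lambda }{2}\int _{\mathcal{O}} \vert u-g\vert ^2\,\mathrm{d}x.
\tag{4}
\]

In this form the drift of (3) is, formally, the negative \(\mathbb {L}^2\)-gradient of (4), which is the sense in which (3) is a stochastically perturbed gradient flow.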

Owing to the singular character of the diffusion term in (1), the classical variational approach for the analysis of stochastic partial differential equations (SPDEs), see e.g. [11, 12], is not applicable to this problem. To study well-posedness of singular gradient flow problems it is convenient to apply the solution framework developed in [3], which characterizes the solutions of (1) as stochastic variational inequalities (SVIs). In this paper, we show the well-posedness of SVI solutions using the practically relevant regularization procedure (3) which, in the regularization limit, yields a SVI solution in the sense of [3]. Throughout the paper, we will refer to the solutions which satisfy a stochastic variational inequality as SVI solutions, and to the classical SPDE solutions as variational solutions. Convergence of a numerical approximation of (3) in the deterministic setting (\(W\equiv 0\)) has been shown in [7]. Analogously to the deterministic setting, we construct an implementable finite element approximation of the problem (1) via the numerical discretization of the regularized problem (3). The scheme is implicit in time and preserves the gradient structure of the problem, i.e., it satisfies a discrete energy inequality. The deterministic variational inequality framework used in the numerical analysis of [7] is not directly transferable to the stochastic setting. Instead, we show the convergence of the proposed numerical approximation of (3) to the SVI solution of (1) via an additional regularization step on the discrete level. The convergence analysis of the discrete approximation is inspired by the analytical approach of [8], where the SVI solution concept was applied to the stochastic p-Laplace equation. As far as we are aware, the present work is the first to show convergence of an implementable numerical approximation for singular stochastic gradient flows in the framework of stochastic variational inequalities.

The paper is organized as follows. In Sect. 2 we introduce the notation and state some auxiliary results. The existence of a unique SVI solution of the regularized stochastic TV flow (3) and its convergence towards a unique SVI solution of (1) for \(\varepsilon \rightarrow 0\) is shown in Sect. 3. In Sect. 4 we introduce a fully discrete finite element scheme for the regularized problem (3) and show its convergence to the SVI solution of (1). Numerical experiments are presented in Sect. 5.

2 Notation and preliminaries

Throughout the paper we denote by C a generic positive constant that may change from line to line. For \(1\le p \le \infty \), we denote by \((\mathbb {L}^p,\Vert \cdot \Vert _{\mathbb {L}^p})\) the standard spaces of pth order integrable functions on \(\mathcal {O}\), and use \(\Vert \cdot \Vert := \Vert \cdot \Vert _{\mathbb {L}^2}\) and \((\cdot ,\cdot ):=(\cdot ,\cdot )_{\mathbb {L}^2}\) for the \(\mathbb {L}^2\)-inner product. For \(k \in \mathbb {N}\) we denote the usual Sobolev space on \(\mathcal {O}\) as \((\mathbb {H}^k,\Vert \cdot \Vert _{\mathbb {H}^k})\), and \((\mathbb {H}^1_0,\Vert \cdot \Vert _{\mathbb {H}^1_0})\) stands for the \(\mathbb {H}^1\) space with zero trace on \(\partial \mathcal {O}\) with its dual space \((\mathbb {H}^{-1},\Vert \cdot \Vert _{\mathbb {H}^{-1}})\). Furthermore, we set \(\langle \cdot ,\cdot \rangle :=\langle \cdot ,\cdot \rangle _{\mathbb {H}^{-1}\times \mathbb {H}^1_0}\), where \(\langle \cdot ,\cdot \rangle _{\mathbb {H}^{-1}\times \mathbb {H}^1_0}\) is the duality pairing between \(\mathbb {H}^1_0\) and \(\mathbb {H}^{-1}\). The functional (4) with \(\lambda =0\) will be denoted as \(\mathcal {J}_\varepsilon := \mathcal {J}_{\varepsilon ,0}\). We say that a function \(X \in L^1(\Omega \times (0,T);\mathbb {L}^2)\) is \(\mathcal {F}_t\)-progressively measurable if \(X \mathbb {1}_{[0,t]}\) is \(\mathcal {F}_t\otimes \mathcal {B}([0,t])\)-measurable for all \(t \in [0,T]\).

For the convenience of the reader we state some basic definitions below.

Definition 2.1

Let \(\mathbb {H}\) be a real Banach space, \(A :D(A)\rightarrow \mathbb {H}\) a linear operator and \(\rho (A)\) its resolvent set. For a real number \(\xi \in \rho (A)\) we define the resolvent \(R_{\xi }: \mathbb {H} \rightarrow \mathbb {H}\) of A as

Furthermore we define the Yosida approximation of A as

Definition 2.2

The mapping \(\mathcal {P}_m : \mathbb {L}^2\rightarrow \mathbb {V}_m\subset \mathbb {L}^2\) which satisfies

defines the \(\mathbb {L}^2\)-orthogonal projection onto \(\mathbb {V}_m\).

Definition 2.3

A function \(u \in L^1(\mathcal {O})\) is called a function of bounded variation, if its total variation

is finite. The space of functions of bounded variation is denoted by \(BV(\mathcal {O})\).
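To make the notion concrete, the following sketch evaluates the total variation of a piecewise linear function on a uniform grid of \((0,1)\) together with a regularized counterpart \(\int \sqrt{\vert u'\vert ^2+\varepsilon ^2}\,\mathrm {d}x\). The one-dimensional setting, the grid and the function names are illustrative assumptions and are not taken from the paper. The elementary bound \(\vert s\vert \le \sqrt{s^2+\varepsilon ^2}\le \vert s\vert +\varepsilon \) implies that the gap between the two quantities lies in \([0,\varepsilon \vert \mathcal {O}\vert ]\).

```python
import numpy as np

def total_variation_1d(u):
    """Total variation of the piecewise linear interpolant: sum of absolute jumps."""
    return float(np.sum(np.abs(np.diff(u))))

def regularized_tv_1d(u, h, eps):
    """Integral of sqrt(|u'|^2 + eps^2) for the piecewise linear interpolant."""
    slopes = np.diff(u) / h
    return float(h * np.sum(np.sqrt(slopes**2 + eps**2)))

# Step-like grid function on (0, 1) with a single jump of height 1 (hypothetical test data).
n_cells = 200
h = 1.0 / n_cells
x = np.linspace(0.0, 1.0, n_cells + 1)
u = (x > 0.5).astype(float)

tv = total_variation_1d(u)
eps_values = (1e-1, 1e-2, 1e-3)
gaps = [regularized_tv_1d(u, h, eps) - tv for eps in eps_values]
```

The list `gaps` shrinks with `eps`, in line with the convergence of the regularized functional as \(\varepsilon \rightarrow 0\).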

For \(u \in BV(\mathcal {O})\) we denote

The following proposition plays an important role in the analysis below; the proposition holds for convex domains with piecewise smooth boundary, which includes the case of practically relevant polygonal domains, cf. [3, Proposition 8.2 and Remark 8.1].

Proposition 2.1

Let \(\mathcal {O}\subset \mathbb {R}^d\), \(d\ge 1\) be a bounded domain with a piecewise \(C^2\)-smooth and convex boundary. Let \(g :[0,\infty ) \rightarrow [0,\infty )\) be a continuous and convex function of at most quadratic growth such that \(g(0)=0\), then it holds

3 Well posedness of STVF

In this section we show existence and uniqueness of the SVI solution of (1) (see below for a precise definition) via a two-level regularization procedure. To be able to treat problems with \(\mathbb {L}^2\)-regular data, i.e., \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \(g\in \mathbb {L}^2\), we consider an \(\mathbb {H}^1_0\)-approximating sequence \(\{x^n_0\}_{n\in \mathbb {N}} \subset L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\) s.t. \(x^n_0 \rightarrow x_0\) in \(L^2(\Omega ,\mathcal {F}_0; \mathbb {L}^2)\) for \(n\rightarrow \infty \) and \(\{g^n\}_{n\in \mathbb {N}} \subset \mathbb {H}^1_0\) s.t. \(g^n \rightarrow g\) in \(\mathbb {L}^2\) for \(n\rightarrow \infty \). We introduce a regularization of (3) as

where \(\delta >0\) is an additional regularization parameter.

We define the operator \(A^{\varepsilon ,\delta }: \mathbb {H}^1_0\rightarrow \mathbb {H}^{-1}\) as

and note that (7) is equivalent to

The operator \(A^{\varepsilon ,\delta }\) is coercive, semicontinuous and satisfies (cf. [12, Remark 4.1.1])

The following monotonicity property, which follows from the convexity of the function \(\sqrt{\vert \cdot \vert ^2+\varepsilon ^2}\), will be used frequently in the subsequent arguments

The existence and uniqueness of a variational solution of (7) is established in the next lemma; we note that the result only requires \(\mathbb {L}^2\)-regularity of data.
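The monotonicity property mentioned above can be traced back to a pointwise inequality. The following is a sketch of the standard argument; the symbol \(\varphi _\varepsilon \) is introduced here for illustration only. Set \(\varphi _\varepsilon (a):=\sqrt{\vert a\vert ^2+\varepsilon ^2}\) for \(a\in \mathbb {R}^d\), so that \(\nabla \varphi _\varepsilon (a)=a/\sqrt{\vert a\vert ^2+\varepsilon ^2}\). Since \(\varphi _\varepsilon \) is convex and differentiable,

\[
\varphi _\varepsilon (b)\ge \varphi _\varepsilon (a)+\nabla \varphi _\varepsilon (a)\cdot (b-a), \qquad
\varphi _\varepsilon (a)\ge \varphi _\varepsilon (b)+\nabla \varphi _\varepsilon (b)\cdot (a-b),
\]

and adding the two inequalities gives

\[
\Big(\frac{a}{\sqrt{\vert a\vert ^2+\varepsilon ^2}}-\frac{b}{\sqrt{\vert b\vert ^2+\varepsilon ^2}}\Big)\cdot (a-b)\ge 0 \qquad \text{for all } a,b\in \mathbb {R}^d.
\]

Choosing \(a=\nabla X(x)\), \(b=\nabla Y(x)\) for \(X,Y\in \mathbb {H}^1_0\) and integrating over \(\mathcal {O}\) yields the corresponding inequality in integrated form.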

Lemma 3.1

For any \(\varepsilon , \delta >0\) and \(x^n_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), \(g^n\in \mathbb {H}^1_0\) there exists a unique variational solution \(X^{\varepsilon ,\delta }_{n}\in L^2(\Omega ;C([0,T];\mathbb {L}^2))\) of (7). Furthermore, there exists a \(C\equiv C(T)>0\) such that the following estimate holds

Proof of Lemma 3.1

On noting the properties (10)–(11) of the operator \(A^{\varepsilon ,\delta }\) for \(\varepsilon ,\delta >0\) the classical theory, cf. [12], implies that for any given data \(x^n_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), \(g^n\in \mathbb {H}^1_0\) there exists a unique variational solution \(X^{\varepsilon ,\delta }_{n}\in L^2(\Omega ;C([0,T];\mathbb {L}^2))\) of (7) which satisfies the stability estimate. \(\square \)

In the next step, we show an a priori estimate for the solution of (7) in stronger norms; the estimate requires \(\mathbb {H}^1_0\)-regularity of the data.

Lemma 3.2

Let \(x_0^n\, \in L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), \(g^n \in \mathbb {H}^1_0\). There exists a constant \(C\equiv C(T)\) such that for any \(\varepsilon ,\, \delta >0\) the corresponding variational solution \(X^{\varepsilon ,\delta }_{n}\) of (7) satisfies

Proof of Lemma 3.2

Let \(\{e_i\}_{i=0}^{\infty }\) be an orthonormal basis of eigenfunctions of the Dirichlet Laplacian \(-\Delta \) on \(\mathbb {L}^2\) and \(\mathbb {V}_m := \text {span}\{e_0,\ldots , e_m\}\). Let \(\mathcal {P}_m:\mathbb {L}^2\rightarrow \mathbb {V}_m\) be the \(\mathbb {L}^2\)-orthogonal projection onto \(\mathbb {V}_m\).

For fixed \(\varepsilon ,\,\delta ,\,n\) the Galerkin approximation \(X^{\varepsilon ,\delta }_{n,m}\in \mathbb {V}_m\) of \(X^{\varepsilon ,\delta }_{n}\) satisfies

By standard arguments, cf. [12, Theorem 5.2.6], there exists a \(X^{\varepsilon ,\delta }_{n}\in L^2(\Omega ;C([0,T];\mathbb {L}^2))\) such that \(X^{\varepsilon ,\delta }_{n,m}\rightharpoonup X^{\varepsilon ,\delta }_{n}\) in \(L^2(\Omega \times (0,T);\mathbb {L}^2)\) for \(m\rightarrow \infty \). We use Itô’s formula for \(\Vert \nabla X^{\varepsilon ,\delta }_{n,m}(t)\Vert ^2\) to obtain

Let \(T_\xi \) be the Yosida-approximation and \(R_{\xi }\) the resolvent of the Dirichlet Laplacian \(-\Delta \) on \(L^2\), respectively; see Definition 2.1. By the convexity, cf. (12), we get

where we used Proposition 2.1 in the last step above. The Burkholder–Davis–Gundy inequality for \(p=1\) implies that

After taking supremum over t and expectation in (15), using (16) along with the Tonelli and Gronwall lemmas we obtain

Hence, from the sequence \(\{X^{\varepsilon ,\delta }_{n,m}\}_{m\in \mathbb {N}}\) we can extract a subsequence (not relabeled), s.t. for \(m \rightarrow \infty \)

By lower-semicontinuity of the norms, we get

\(\square \)

We define the SVI solution of (3) and (1) analogously to [3, Definition 3.1] as a stochastic variational inequality.

Definition 3.1

Let \(0< T < \infty \), \(\varepsilon \in [0,1]\) and \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g \in \mathbb {L}^2\). Then a \(\mathcal {F}_t\)-progressively measurable map \(X^{\varepsilon }\in L^2(\Omega ; C([0,T];\mathbb {L}^2))\cap L^2(\Omega ; L^1((0,T);BV(\mathcal {O})))\) [denoted by \(X \in L^2(\Omega ; C([0,T];\mathbb {L}^2))\cap L^2(\Omega ; L^1((0,T); BV(\mathcal {O})))\) for \(\varepsilon =0\)] is called a SVI solution of (3) [or (1) if \(\varepsilon =0\)] if \(X^{\varepsilon }(0)=x_0\) (\(X(0)=x_0\)), and for each \((\mathcal {F}_t)\)-progressively measurable process \(G\in L^2(\Omega \times (0,T),\mathbb {L}^2) \) and for each \((\mathcal {F}_t)\)-adapted \(\mathbb {L}^2\)-valued process \(Z \in L^2(\Omega \times (0,T);\mathbb {H}^1_0)\) with \(\mathbb {P}\)-a.s. continuous sample paths which satisfy the equation

it holds for \(\varepsilon \in (0,1]\) that

and analogously for \(\varepsilon =0\) it holds that

In the next theorem we show the existence and uniqueness of a SVI solution to (3) for \(\varepsilon > 0\) in the sense of the Definition 3.1.

Theorem 3.1

Let \(0< T < \infty \) and \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \( g \in \mathbb {L}^2\). For each \(\varepsilon \in (0,1]\) there exists a unique SVI solution \(X^{\varepsilon }\) of (3). Moreover, any two SVI solutions \(X^{\varepsilon }_1,X^{\varepsilon }_2\) with \(x_0\equiv x^1_0\), \(g\equiv g^1\) and \(x_0\equiv x^2_0\), \(g\equiv g^2\) satisfy

for all \(t \in [0,T]\).

Proof of Theorem 3.1

We show that for fixed \(\varepsilon >0\) the sequence \(\{X^{\varepsilon ,\delta }_{n}\}_{\delta ,n}\) of variational solutions of (7) is a Cauchy-sequence w.r.t. \(\delta \) for any fixed \(n\in \mathbb {N}\), and then show that it is a Cauchy-sequence w.r.t. n for \(\delta \equiv 0\).

We denote by \(X^{\varepsilon ,\delta _1}_{n_1},X^{\varepsilon ,\delta _2}_{n_2}\) the solutions of (7) for \(\delta \equiv \delta _1\), \(\delta \equiv \delta _2\) and \(x_0\equiv x^{n_1}_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), \(x_0\equiv x^{n_2}_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), respectively, where \(x^{n_1}_0\), \(x^{n_2}_0\) belong to the \(\mathbb {H}^1_0\)-approximating sequence of \(x_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\). By Itô’s formula it follows that

We note that

Hence by using the convexity (12), Lemma 3.2, the Burkholder–Davis–Gundy inequality for \(p=1\), the Tonelli and Gronwall lemmas we obtain

Inequality (21) implies for \(x_0^{n_1} \equiv x_0^{n_2}\equiv x_0^n\) that

Hence for any fixed n, \(\varepsilon \) there exists a \(\{\mathcal {F}_t \}\)-adapted process \(X^{\varepsilon }_n \in L^2(\Omega ,C([0,T]; \mathbb {L}^2))\), s.t.

For fixed \(n_1\), \(n_2\), \(\varepsilon \) we get from (21) using (22) by the lower-semicontinuity of norms that

Since \(x_0^{n_1},\,x_0^{n_2}\rightarrow x_0\) for \(n_1,n_2\rightarrow \infty \) we deduce from (23) that for any fixed \(\varepsilon \) there exists an \(\{\mathcal {F}_t \}\)-adapted process \(X^{\varepsilon }\in L^2(\Omega ;C([0,T];\mathbb {L}^2))\) such that

In the next step, we show that the limiting process \(X^{\varepsilon }\) is a SVI solution of (3). We subtract the process

with \(Z(t)=z_0\) from (7) and obtain

The Itô formula implies

We rewrite the second term on the right-hand side in above inequality as

The convexity of \(\mathcal {J}_\varepsilon \) along with the Cauchy–Schwarz and Young’s inequalities imply that

By combining two inequalities above with (25) we get

The lower-semicontinuity of \(\mathcal {J}_{\varepsilon }\) in \(BV(\mathcal {O})\) with respect to convergence in \(\mathbb {L}^1\), cf. [1], and (22), (24) and the strong convergence \(g^n \rightarrow g \) in \(\mathbb {L}^2\) imply that for \(\delta \rightarrow 0\) and \(n \rightarrow \infty \) the limiting process \(X^{\varepsilon }\in L^2(\Omega ; C([0,T];\mathbb {L}^2))\) satisfies (18).

To conclude that \(X^{\varepsilon }\) is a SVI solution of (3) it remains to show that \(X^{\varepsilon }\in L^2(\Omega ; L^1((0,T);BV(\mathcal {O})))\). Setting \(G\equiv 0\) in (17) [which implies \(Z\equiv 0\) by (17)] yields

On noting that (cf. Definition 2.3 or [7, proof of Theorem 1.3])

and \(\mathcal {J}_{\varepsilon ,\lambda }(0)=\varepsilon \vert \mathcal {O}\vert + \frac{\lambda }{2}\Vert g\Vert ^2\), we deduce from (27) that

Hence, by the Tonelli and Gronwall lemmas it follows that

Hence \(X^{\varepsilon }\in L^2(\Omega ; C([0,T];\mathbb {L}^2))\cap L^2(\Omega ; L^1((0,T);BV(\mathcal {O})))\) is a SVI solution of (3) for \(\varepsilon \in (0,1]\).

In the next step we show the uniqueness of the SVI solution. Let \(X^{\varepsilon }_1, X^{\varepsilon }_2\) be two SVI solutions to (3) for a fixed \(\varepsilon \in (0,1]\) with initial values \(x_0\equiv x^1_0, x^2_0\) and \(g \equiv g^1,g^2\), respectively. Let \(\{x^{2,n}_0\}_{n\in \mathbb {N}} \subset L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\) be a sequence, s.t. \(x^{2,n}_0 \rightarrow x^2_0\) in \(L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(\{g^{2,n}\}_{n\in \mathbb {N}} \subset \mathbb {H}^1_0\) be a sequence, s.t. \(g^{2,n} \rightarrow g^2\) in \(\mathbb {L}^2\) for \(n \rightarrow \infty \) and let \(\{X^{\varepsilon ,\delta }_{2,n}\}_{n\in \mathbb {N},\delta >0}\) be a sequence of variational solutions of (7) (for fixed \(\varepsilon >0\)) with \(x_0\equiv x^{2,n}_0\), \(g\equiv g^{2,n}\). We note that the first part of the proof implies that \(X^{\varepsilon ,\delta }_{2,n} \rightarrow X^{\varepsilon }_2\) in \(L^2(\Omega ; C([0,T];\mathbb {L}^2))\) for \(\delta \rightarrow 0\), \(n\rightarrow \infty \). We set \(Z=X^{\varepsilon ,\delta }_{2,n}, G=A^{\varepsilon ,\delta }(X^{\varepsilon ,\delta }_{2,n})\) in (18) and observe that

The term III is estimated using Young’s inequality as

By the convexity (12) we estimate

Next, we obtain

After substituting III–V into (29) we arrive at

The convergences (22), (24) imply the convergence \(X^{\varepsilon ,\delta }_{2,n} \rightarrow X^{\varepsilon }_2\) in \(L^2(\Omega ;C([0,T];\mathbb {L}^2))\) for \(\delta \rightarrow 0\), \(n \rightarrow \infty \). We note that for \(\delta \rightarrow 0\) the fourth term on the right-hand side of (30) vanishes due to Lemma 3.2. Hence, by taking the limits for \(\delta \rightarrow 0\), \(n \rightarrow \infty \) in (30), using the strong convergence \(g^{2,n} \rightarrow g^2 \) in \(\mathbb {L}^2\) for \(n \rightarrow \infty \) , the lower-semicontinuity of norms and (22), (24) we obtain

for all \(t \in [0,T]\). After applying the Tonelli and Gronwall lemmas we obtain (20).

\(\square \)

Our second main theorem establishes existence and uniqueness of a SVI solution to (1) in the sense of Definition 3.1. The solution is obtained as a limit of solutions of the regularized gradient flow (3) for \(\varepsilon \rightarrow 0\).

Theorem 3.2

Let \(0< T < \infty \) and \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \( g \in \mathbb {L}^2\) be fixed. Let \(\{X^{\varepsilon }\}_{\varepsilon >0}\) be the SVI solutions of (3) for \(\varepsilon \in (0,1]\). Then \(X^{\varepsilon }\) converges to the unique SVI solution X of (1) in \(L^2(\Omega ;C([0,T];\mathbb {L}^2))\) for \(\varepsilon \rightarrow 0\), i.e., there holds

Furthermore, the following estimate holds

where \(X_1\) and \(X_2\) are SVI solutions of (1) with \(x_0\equiv x^1_0\), \(g\equiv g^1\) and \(x_0\equiv x^2_0\), \(g\equiv g^2\), respectively.

Proof of Theorem 3.2

We consider \(\mathbb {L}^2\)-approximating sequences \(\{x_0^n\}_{n\in \mathbb {N}} \subset L^2(\Omega ,\mathcal {F}_0; \mathbb {H}^1_0)\) and \(\{g^n\}_{n\in \mathbb {N}} \subset \mathbb {H}^1_0\) of the initial condition \(x_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g \in \mathbb {L}^2\), respectively. For \(n\in \mathbb {N}\), \(\delta >0\) we denote by \(X^{\varepsilon _1,\delta }_{n},X^{\varepsilon _2,\delta }_{n}\) the variational solutions of (7) with \(\varepsilon \equiv \varepsilon _1\), \(\varepsilon \equiv \varepsilon _2\), respectively. By Itô’s formula the difference satisfies

We estimate the second term on the right-hand side of (33) using the convexity (12)

Next, we observe that

Using the inequality above, we get

Substituting (34) along with the last inequality into (33) yields

After using the Burkholder–Davis–Gundy inequality for \(p=1\), the Tonelli and Gronwall lemmas we obtain that

We take the limit for \(\delta \rightarrow 0\) in (36) for fixed n and \(\varepsilon _1,\varepsilon _2\), and obtain using (22) by the lower-semicontinuity of norms that

Hence, by (24) and the lower-semicontinuity of norms, after taking the limit \(n\rightarrow \infty \) in (37) for fixed \(\varepsilon _1\), \(\varepsilon _2\) we get

The above inequality implies that \(\{X^{\varepsilon }\}_{\varepsilon >0}\) is a Cauchy sequence in \(\varepsilon \). Consequently there exists a unique \(\{\mathcal {F}_t\}\)-adapted process \(X \in L^2(\Omega ;C([0,T];\mathbb {L}^2))\) with \(X(0)=x_0 \) such that

This concludes the proof of (31).

Next, we show that the limiting process X is the SVI solution of (1), i.e., we show that (19) holds. We note that (28) implies that

Hence using (39), (40) we get by Fatou’s lemma and [2, Proposition 10.1.1] that

By Theorem 3.1 we know that \(X^{\varepsilon }\) satisfies (18) for any \(\varepsilon \in (0,1]\). By taking the limit for \(\varepsilon \rightarrow 0\) in (18), using the above inequality and (39) it follows that X satisfies (19). Finally, inequality (32) follows after taking the limit for \(\varepsilon \rightarrow 0\) in (39), by (20) and the lower semicontinuity of norms. \(\square \)

4 Numerical approximation

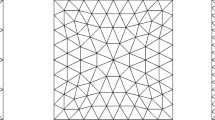

We construct a fully-discrete approximation of the STVF Eq. (1) via an implicit time-discretization of the regularized STVF Eq. (3). For \(N \in \mathbb {N}\) we consider the time-step \(\tau := T/N\), set \(t_i:=i\tau \) for \(i=0,\ldots ,N\) and denote the discrete Wiener increments as \(\Delta _i W:= W(t_i)-W(t_{i-1})\). We combine the discretization in time with the standard \(\mathbb {H}^1_0\)-conforming finite element method, see, e.g., [4, 5, 7]. Given a family of quasi-uniform triangulations \(\big \{\mathcal {T}_h\big \}_{h>0}\) of \(\mathcal {O}\) into open simplices with mesh size \(h=\max _{K\in \mathcal {T}_h}\{\mathrm {diam}(K)\}\) we consider the associated space of piecewise linear, globally continuous functions \(\mathbb {V}_h = \{v_h \in C^0(\overline{\mathcal {O}});\, v_h|_K \in \mathcal {P}^1(K)\,\, \forall K\in \mathcal {T}_h\}\subset \mathbb {H}^1_0\) and set \(L\equiv \text {dim}\mathbb {V}_h\) for the rest of the paper. We set \(x^h_0:=\mathcal {P}_h x_0\), \(g^h:=\mathcal {P}_h g\), where \(\mathcal {P}_h\) is the \(\mathbb {L}^2\)-projection onto \(\mathbb {V}_h\).
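The \(\mathbb {L}^2\)-projection of the data onto \(\mathbb {V}_h\) amounts to solving a linear system with the mass matrix. The following is a minimal sketch for piecewise linear elements with zero boundary values on a uniform mesh of \((0,1)\); the one-dimensional setting, the use of Simpson's rule for the load vector and all function names are assumptions made for illustration.

```python
import numpy as np

def p1_mass_matrix(n_cells):
    """Mass matrix of the interior P1 hat functions on a uniform mesh of (0, 1)."""
    h, n = 1.0 / n_cells, n_cells - 1  # n = number of interior nodes = dim V_h
    off = np.full(n - 1, h / 6.0)
    return np.diag(np.full(n, 2.0 * h / 3.0)) + np.diag(off, 1) + np.diag(off, -1)

def p1_load_vector(f, n_cells):
    """Entries (f, phi_l), computed cell by cell with Simpson's rule."""
    h = 1.0 / n_cells
    nodes = np.linspace(0.0, 1.0, n_cells + 1)
    b = np.zeros(n_cells + 1)
    for k in range(n_cells):
        xl, xr = nodes[k], nodes[k + 1]
        fl, fm, fr = f(xl), f(0.5 * (xl + xr)), f(xr)
        # The hat function of the left node equals 1, 1/2, 0 at xl, midpoint, xr;
        # the hat function of the right node is its mirror image.
        b[k] += h / 6.0 * (fl + 2.0 * fm)
        b[k + 1] += h / 6.0 * (2.0 * fm + fr)
    return b[1:-1]  # drop the boundary nodes (zero trace)

def l2_project(f, n_cells):
    """Nodal coefficients of the L2-projection of f onto V_h."""
    return np.linalg.solve(p1_mass_matrix(n_cells), p1_load_vector(f, n_cells))

def tent(x):
    """Piecewise linear test function with zero boundary values and a kink at 1/2."""
    return 1.0 - abs(2.0 * x - 1.0)

coeffs = l2_project(tent, n_cells=8)
```

Since the tent function already belongs to \(\mathbb {V}_h\) for an even number of cells and Simpson's rule integrates products of two linear functions exactly, `coeffs` coincides with the nodal values of `tent`. For general data the quadrature introduces an additional error that is not accounted for in this sketch.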

The implicit fully-discrete approximation of (3) is defined as follows: fix \(N\in \mathbb {N}\), \(h>0\), set \(X^0_{\varepsilon ,h} = x^h_0\in \mathbb {V}_h\) and determine \(X_{\varepsilon ,h}^{i}\in \mathbb {V}_h\), \(i=1,\dots , N\) as the solution of

To show convergence of the solution of the numerical scheme (41) we need to consider a discretization of the regularized problem (7). Given \(x_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \(g \in \mathbb {L}^2\) and \(n \in \mathbb {N}\) we choose \(x^n_0:=\mathcal {P}_n x_0\in \mathbb {V}_n\), \(g^n:=\mathcal {P}_n g\in \mathbb {V}_n\) in (7). Since \(\mathbb {V}_n\subset \mathbb {H}^1_0\) the sequences \(\{x^n_0\}_{n\in \mathbb {N}} \subset L^2(\Omega ,\mathcal {F}_0;\mathbb {H}^1_0)\), \(\{g^n\}_{n\in \mathbb {N}} \subset \mathbb {H}^1_0\) constitute \(\mathbb {H}^1_0\)-approximating sequences of \(x_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \(g \in \mathbb {L}^2\), respectively. We set \(x^{h,n}_0:=\mathcal {P}_h x_0^n\), \(g^{h,n}:=\mathcal {P}_h g^n\), where \(\mathcal {P}_h\) is the \(\mathbb {L}^2\)-projection onto \(\mathbb {V}_h\). The fully-discrete Galerkin approximation of (7) for fixed \(n \in \mathbb {N}\) is then defined as follows: fix \( N\in \mathbb {N}\), \(h>0\), set \(X_{\varepsilon ,\delta ,n,h}^{0}=x^{h,n}_0\) and determine \(X_{\varepsilon ,\delta ,n,h}^{i}\in \mathbb {V}_h\), \(i=1,\dots , N\) as the solution of

The next lemma, cf. [15, Lemma II.1.4] is used to show \(\mathbb {P}\)-a.s. existence of discrete solutions \(\{X_{\varepsilon ,h}^{i}\}_{i=1}^N\), \(\{X_{\varepsilon ,\delta ,n,h}^{i}\}_{i=1}^N\) of numerical schemes (41), (42), respectively.

Lemma 4.1

Let \(h: \mathbb {R}^L \rightarrow \mathbb {R}^L\) be continuous. If there is \(R>0 \) such that \(h(v)\cdot v\ge 0\) whenever \(\Vert v\Vert _{\mathbb {R}^L}=R \), then there exists \(\bar{v}\) satisfying \(\Vert \bar{v}\Vert _{\mathbb {R}^L} \le R\) and \(h(\bar{v})=0\).

In order to show \(\{\mathcal {F}_{t_i}\}_{i=1}^{N}\)-measurability of the random variables \(\{X_{\varepsilon ,h}^{i}\}_{i=1}^N\), \(\{X_{\varepsilon ,\delta ,n,h}^{i}\}_{i=1}^N\) we make use of the following lemma, cf. [6, 9].

Lemma 4.2

Let \((S,\Sigma )\) be a measurable space. Let \(f :S\times \mathbb {R}^L\rightarrow \mathbb {R}^L\) be a function that is \(\Sigma \)-measurable in its first argument for every \(x \in \mathbb {R}^L\), that is continuous in its second argument for every \(\alpha \in S\) and moreover such that for every \(\alpha \in S\) the equation \(f(\alpha , x)=0\) has a unique solution \(x=g(\alpha )\). Then \(g : S \rightarrow \mathbb {R}^L\) is \(\Sigma \)-measurable.

Below we show the existence, uniqueness and measurability of numerical solutions of (41), (42). We state the result for the scheme (42) only, since the proof also holds for \(\delta =0\) (i.e. for (41)) without any modifications.

Lemma 4.3

Let \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \(g\in \mathbb {L}^2\) and let \(L,n,N \in \mathbb {N}\) be fixed. Then for any \(\delta \ge 0\), \(\varepsilon > 0\), \(i=1,\ldots ,N,\) there exist \(\mathcal {F}_{t_i}\)-measurable \(\mathbb {P}\)-a.s. unique random variables \(X_{\varepsilon ,\delta ,n,h}^{i}\in \mathbb {V}_h\) which solve (42).

Proof of Lemma 4.3

Assume that the \(\mathbb {V}_h\)-valued random variables \(X_{\varepsilon ,\delta ,n,h}^{0},\ldots ,X_{\varepsilon ,\delta ,n,h}^{i-1}\) satisfy (42) and that \(X_{\varepsilon ,\delta ,n,h}^{k}\) is \(\mathcal {F}_{t_k}\)-measurable for \(k=1,\ldots ,i-1\). We show that there is an \(\mathcal {F}_{t_i}\)-measurable random variable \(X_{\varepsilon ,\delta ,n,h}^{i}\) that satisfies (42). Let \(\{\varphi _\ell \}_{\ell =1}^L\) be the basis of \(\mathbb {V}_h\). We identify every \(v \in \mathbb {V}_h\) with a vector \(\bar{v} \in \mathbb {R}^L\) with \(v = \sum _{\ell =1}^L \bar{v}_\ell \varphi _\ell \) and define a norm on \(\mathbb {R}^L\) as \(\Vert \bar{v}\Vert _{\mathbb {R}^L}:= \Vert v\Vert _{\mathbb {H}^1_0}\). For an arbitrary \(\omega \in \Omega \) we represent \(X_\omega \in \mathbb {V}_h\) as a vector \(\bar{X}_\omega \in \mathbb {R}^L\) and define a function \(h: \Omega \times \mathbb {R}^L \rightarrow \mathbb {R}^L\) component-wise for \(\ell =1,\ldots ,L\) as

We show, that for each \(\omega \in \Omega \) there exists an \(\bar{X}_\omega \) such that \(h(\omega ,\bar{X}_\omega )=0\). We note the following inequality

On choosing \(\Vert X_\omega \Vert =R_\omega \) large enough, the existence of \(X_{\varepsilon ,\delta ,n,h}^{i}(\omega )\in \mathbb {V}_h\) for each \(\omega \in \Omega \) then follows by Lemma 4.1, since \(h(\omega ,\cdot )\) is continuous by the semicontinuity of the operator \(A^{\varepsilon ,\delta }\), which follows from the hemicontinuity and monotonicity of \(A^{\varepsilon ,\delta }\) for \(\delta \ge 0\), \(\varepsilon >0\), see [12, Remark 4.1.1]. The \(\mathcal {F}_{t_i}\)-measurability follows by Lemma 4.2 for unique \(X_{\varepsilon ,\delta ,n,h}^{i}\).

Hence, it remains to show that \(X_{\varepsilon ,\delta ,n,h}^{i}\) is \(\mathbb {P}\)-a.s. unique. Assume there are two different solutions \(X_{1},X_{2}\), s.t. \(h(\omega ,\overline{X}_{1}(\omega ))=0=h(\omega ,\overline{X}_{2}(\omega ))\) for \(\omega \in \Omega \). Then by the convexity (12) we observe that

Hence \({X}_1\equiv {X}_2\) \(\mathbb {P}\)-a.s. \(\square \)
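Lemma 4.3 guarantees that every time step has a unique solution, but in practice the nonlinear system has to be solved iteratively. The following is a minimal one-dimensional sketch of one implicit step of a scheme of the type (41) on \((0,1)\) with piecewise linear elements. It assumes that the step reads \((X^i-X^{i-1},v_h)+\tau (\nabla X^i/\sqrt{\vert \nabla X^i\vert ^2+\varepsilon ^2},\nabla v_h)+\tau \lambda (X^i-g^h,v_h)=(X^{i-1},v_h)\Delta _iW\) for all \(v_h\in \mathbb {V}_h\). The lagged-diffusivity fixed-point iteration used as the nonlinear solver is a choice made here for simplicity and is not taken from the paper; all names and parameter values are hypothetical.

```python
import numpy as np

def mass_matrix(n_cells):
    """P1 mass matrix for the interior nodes of a uniform mesh of (0, 1)."""
    h, n = 1.0 / n_cells, n_cells - 1
    off = np.full(n - 1, h / 6.0)
    return np.diag(np.full(n, 2.0 * h / 3.0)) + np.diag(off, 1) + np.diag(off, -1)

def slopes(x, n_cells):
    """Cellwise derivative of the P1 function with interior values x and zero trace."""
    return np.diff(np.concatenate(([0.0], x, [0.0]))) * n_cells

def weighted_stiffness(w, n_cells):
    """Assemble sum_K w_K A_K and keep the block of the interior nodes."""
    A = np.zeros((n_cells + 1, n_cells + 1))
    cell = n_cells * np.array([[1.0, -1.0], [-1.0, 1.0]])  # A_K = (1/h) [[1,-1],[-1,1]]
    for k in range(n_cells):
        A[k:k + 2, k:k + 2] += w[k] * cell
    return A[1:-1, 1:-1]

def system(x, M, tau, lam, eps, n_cells):
    """Matrix B(x) such that the nonlinear step reads B(x) x = rhs."""
    w = 1.0 / np.sqrt(slopes(x, n_cells) ** 2 + eps ** 2)
    return (1.0 + tau * lam) * M + tau * weighted_stiffness(w, n_cells)

def implicit_step(x_prev, g, dW, tau, lam, eps, n_cells, tol=1e-10, max_iter=2000):
    """One implicit step; returns the new coefficients and the final residual norm."""
    M = mass_matrix(n_cells)
    rhs = M @ ((1.0 + dW) * x_prev + tau * lam * g)
    x = x_prev.copy()
    for _ in range(max_iter):
        B = system(x, M, tau, lam, eps, n_cells)
        if np.linalg.norm(B @ x - rhs) < tol:
            break
        x = np.linalg.solve(B, rhs)  # diffusivity frozen at the previous iterate
    residual = np.linalg.norm(system(x, M, tau, lam, eps, n_cells) @ x - rhs)
    return x, residual

n_cells, tau, lam, eps = 32, 0.01, 1.0, 0.5
nodes = np.linspace(0.0, 1.0, n_cells + 1)[1:-1]
x0 = 1.0 - np.abs(2.0 * nodes - 1.0)       # tent-shaped initial value, also used as g
rng = np.random.default_rng(0)
dW = np.sqrt(tau) * rng.standard_normal()  # Wiener increment with variance tau
x1, res = implicit_step(x0, x0, dW, tau, lam, eps, n_cells)
```

The fixed-point iteration converges only linearly and slows down for small \(\varepsilon \) relative to the slopes of the solution; a Newton-type solver would be a natural replacement in a serious implementation.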

We define the discrete Laplacian \(\Delta _h : \mathbb {V}_h \rightarrow \mathbb {V}_h\) by

To obtain the required stability properties of the numerical approximation (42) we need the following lemma.

Lemma 4.4

Let \(\Delta _h\) be the discrete Laplacian defined by (43). Then for any \(v_h \in \mathbb {V}_h\), \(\varepsilon ,h>0\) the following inequality holds:

Proof of Lemma 4.4

Let \(\{\varphi _\ell \}_{\ell =1}^L\) be the basis of \(\mathbb {V}_h\) consisting of continuous piecewise linear Lagrange basis functions associated with the nodes of the triangulation \(\mathcal {T}_h\). Then any \(v_h\in \mathbb {V}_h\) has the representation \(v_h=\sum _{\ell =1}^L (v_h)_{\ell } \varphi _{\ell }\), where \((v_h)_{\ell } \in \mathbb {R}, \ell =1,\ldots ,L \) and analogously \(\Delta _hv_h=\sum _{\ell =1}^L (\Delta _h v_h)_\ell \varphi _\ell \), with coefficients \((\Delta _h v_h)_\ell \in \mathbb {R}, \ell =1,\ldots ,L\). From (43) it follows that

where we denote \((v,w)_K := \int _{K}v(x)w(x)\mathrm {d}x\) for \(K\in \mathcal {T}_h\).

We rewrite (45) with the mass matrix \(M:=\{M\}_{i,k}:=(\varphi _i,\varphi _k)\) and the stiffness matrix \(A:=\{A\}_{i,k}:=(\nabla \varphi _i,\nabla \varphi _k)\) and \(A_K := \{A_K\}_{i,k}:=(\nabla \varphi _i,\nabla \varphi _k)_{K}\) as

where \(\Delta _h\bar{v}_h\in \mathbb {R}^L\) is the vector \(((\Delta _h v_h)_1,\ldots ,(\Delta _h v_h)_L)^T\) and \(\bar{v}_h\in \mathbb {R}^L\) is the vector \(((v_h)_1,\ldots ,(v_h)_L)^T\). Since \(\mathbb {V}_h\) consists of functions which are piecewise linear on the simplices \(K \in \mathcal {T}_h\), \((\vert \nabla v_h\vert ^2+\varepsilon ^2)^{-\frac{1}{2}}\) is constant on every simplex K. We note that the matrices M and \(M^{-1}\) are positive definite. Using Young's inequality we get

since \(M^{-1}\) is positive definite. \(\square \)
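The matrix objects appearing in the proof can be illustrated in one space dimension. The sketch below assumes that the discrete Laplacian is defined through \(-(\Delta _h v_h,w_h)=(\nabla v_h,\nabla w_h)\) for all \(w_h\in \mathbb {V}_h\), which in coefficients reads \(\Delta _h\bar{v}_h=-M^{-1}A\bar{v}_h\). It also assembles the weighted stiffness matrix \(\sum _K w_K A_K\) with the cellwise constant weights \(w_K=(\vert \nabla v_h\vert ^2+\varepsilon ^2)^{-1/2}\). The uniform mesh of \((0,1)\) and all names are illustrative assumptions.

```python
import numpy as np

n_cells = 16
h = 1.0 / n_cells
n = n_cells - 1  # number of interior nodes

# Mass matrix of the interior hat functions.
off = np.full(n - 1, h / 6.0)
M = np.diag(np.full(n, 2.0 * h / 3.0)) + np.diag(off, 1) + np.diag(off, -1)

def stiffness(weights):
    """Assemble sum_K w_K A_K from the 2x2 cell matrices and drop boundary rows/columns."""
    A_full = np.zeros((n_cells + 1, n_cells + 1))
    cell = np.array([[1.0, -1.0], [-1.0, 1.0]]) / h
    for k, w in enumerate(weights):
        A_full[k:k + 2, k:k + 2] += w * cell
    return A_full[1:-1, 1:-1]

A = stiffness(np.ones(n_cells))  # standard stiffness matrix

def discrete_laplacian(v):
    """Coefficients of Delta_h v, obtained from M (Delta_h v) = -A v."""
    return -np.linalg.solve(M, A @ v)

nodes = np.linspace(0.0, 1.0, n_cells + 1)[1:-1]
v = np.sin(np.pi * nodes)
lap_v = discrete_laplacian(v)

# Weighted stiffness matrix with weights that are constant on every cell.
eps = 0.1
cell_slopes = np.diff(np.concatenate(([0.0], v, [0.0]))) / h
A_eps = stiffness(1.0 / np.sqrt(cell_slopes**2 + eps**2))
flux = cell_slopes / np.sqrt(cell_slopes**2 + eps**2)
```

In this one-dimensional setting `A_eps @ v` reduces to differences of the cell fluxes, `flux[:-1] - flux[1:]`, which is the nonlinear diffusion term tested against the hat functions.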

In the next lemma we state the stability properties of the numerical solution of the scheme (42) which are discrete analogues of estimates in Lemmas 3.1 and 3.2.

Lemma 4.5

Let \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g\in \mathbb {L}^2\) be given. Then there exists a constant \(C \equiv C(\mathbb {E}\left[ \Vert x_0\Vert _{\mathbb {L}^2}\right] , \Vert g\Vert _{\mathbb {L}^2}) > 0\) such that for any \(n \in \mathbb {N}\), \(\tau ,h>0\) the solution of scheme (42) satisfies

and a constant \(C_{n} \equiv C( \mathbb {E}[\Vert x_0^n\Vert _{\mathbb {H}^1_0}], \Vert g^n\Vert _{\mathbb {H}^1_0}) > 0\) such that for any \(\tau ,h>0\)

Proof of Lemma 4.5

We set \(v_h=X_{\varepsilon ,\delta ,n,h}^{i}\) in (42), use the identity \(2(a-b)a =a^2 - b^2 + (a-b)^2\) and get for \(i=1,\ldots ,N\)

We take expected value in (49) and on noting the properties of Wiener increments \(\mathbb {E}\left[ \Delta _i W\right] =0\), \(\mathbb {E}\left[ \vert \Delta _i W\vert ^2\right] =\tau \) and the independence of \(\Delta _i W\) and \(X_{\varepsilon ,\delta ,n,h}^{i-1}\) we estimate the stochastic term as

We neglect the positive term

and get from (49) that

We sum up the above inequality for \(k=1,\ldots ,i\) and obtain

By the discrete Gronwall lemma it follows from (50) that

We substitute the above estimate into the right-hand side of (50) to conclude (47). To show the estimate (48) we set \(v_h = \Delta _h X_{\varepsilon ,\delta ,n,h}^{i}\) in (42), use integration by parts and proceed analogously to the first part of the proof. We note that by Lemma 4.4 it holds that

Hence we may neglect the positive term and get that

and obtain (48) after an application of the discrete Gronwall lemma. \(\square \)
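For the reader's convenience, the two elementary tools used in the proof above can be written out as follows. The identity is the one quoted in the proof. The Gronwall statement is one standard explicit form; the nonnegative numbers \(a_i\), \(b\) and the constant \(C\ge 0\) are notation assumed here, and the statement need not coincide with the precise variant applied to (50).

```latex
% Identity used after testing (42) with v_h = X^i:
\[
  a^2 - b^2 + (a-b)^2 = 2a^2 - 2ab = 2(a-b)a .
\]
% One explicit form of the discrete Gronwall lemma (assumed notation):
% if a_0 \le b and a_i \le b + C\tau \sum_{k=0}^{i-1} a_k for i = 1,\ldots,N, then
\[
  a_i \le b\,(1+C\tau)^i \le b\, e^{C t_i}, \qquad t_i = i\tau .
\]
```

If the sum on the right-hand side also contains \(a_i\), that term is first absorbed into the left-hand side, which requires \(C\tau <1\).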

We define piecewise constant time-interpolants of the numerical solution \(\{X_{\varepsilon ,\delta ,n,h}^{i}\}_{i=0}^{N}\) of (42) for \(t\in [0,T]\) as

and

We note that (42) can be reformulated as

where \(\theta _+(0):=0\) and \(\theta _+(t):=t_i\) if \(t\in (t_{i-1},t_{i}]\).
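The time-grid map \(\theta _+\) and the two piecewise constant interpolants can be illustrated by the following minimal sketch. It assumes a uniform grid \(t_i=i\tau \), assumes that the interpolants take the value \(X^{i}\), respectively \(X^{i-1}\), on \((t_{i-1},t_i]\) and the value \(X^0\) at \(t=0\) (the definitions (52), (53) are not reproduced above), and uses the hypothetical names `theta_plus` and `interpolants`.

```python
import math


def theta_plus(t, tau):
    """Return theta_+(t): 0 for t == 0, otherwise the grid point t_i
    with t in (t_{i-1}, t_i], assuming the uniform grid t_i = i * tau."""
    if t == 0:
        return 0.0
    # The small tolerance guards against round-off when t is a grid point.
    i = math.ceil(t / tau - 1e-12)
    return i * tau


def interpolants(values, t, tau):
    """values[i] stands for X^i, i = 0..N.

    Returns the pair (X^i, X^{i-1}) for t in (t_{i-1}, t_i]; this
    convention is an assumption about (52), (53), not a quotation."""
    if t == 0:
        return values[0], values[0]
    i = round(theta_plus(t, tau) / tau)
    return values[i], values[i - 1]
```

For example, with `tau = 0.25` the time `t = 0.3` lies in \((t_1,t_2]\), so `theta_plus` returns `0.5` and `interpolants` returns the pair stored at indices 2 and 1.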

Estimate (47) yields the bounds

Furthermore, (55) and (11) imply

The estimates in (55) imply for fixed \(n \in \mathbb {N}\), \(\varepsilon ,\,\delta >0\) the existence of a subsequence, still denoted by \(\{\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\}_{\tau ,h>0}\), and a \(Y \in L^2(\Omega \times (0,T);\mathbb {L}^2)\cap L^2(\Omega \times (0,T);\mathbb {H}^1_0)\cap L^{\infty }((0,T);L^2(\Omega ;\mathbb {L}^2))\), s.t., for \(\tau ,h \rightarrow 0\)

In addition, there exists a \(\nu \in L^2(\Omega ;\mathbb {L}^2)\) such that \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}(T) \rightharpoonup \nu \) in \(L^2(\Omega ;\mathbb {L}^2)\) as \(\tau ,h \rightarrow 0\) and the estimate (56) implies the existence of an \(a^{\varepsilon ,\delta } \in L^2(\Omega \times (0,T);\mathbb {H}^{-1})\), s.t.,

The estimates in (55) also imply for fixed \(n \in \mathbb {N}\), \(\varepsilon ,\, \delta > 0\) the existence of a subsequence, still denoted by \(\{\overline{X}_{\tau _-,h}^{\varepsilon ,\delta ,n}\}_{\tau ,h>0}\), and a \(Y^- \in L^2(\Omega \times (0,T);\mathbb {L}^2)\), s.t.,

Finally, the inequality (50) implies

which shows that the weak limits Y and \(Y^-\) coincide.

The following result shows that \(Y\equiv X^{\varepsilon ,\delta }_{n}\), i.e., that the numerical solution of scheme (42) converges to the unique variational solution of (7) for \(\tau ,h \rightarrow 0\). Owing to the properties (10), (11), the convergence proof follows standard arguments for the convergence of numerical approximations of monotone equations, see for instance [6, 9], and is therefore omitted. We note that the convergence of the whole sequence \(\{\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\}_{\tau ,h>0}\) follows by the uniqueness of the variational solution.

Lemma 4.6

Let \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g\in \mathbb {L}^2\) be given, let \(\varepsilon , \delta , \lambda >0\), \(n \in \mathbb {N}\) be fixed. Further, let \(X^{\varepsilon ,\delta }_{n}\) be the unique variational solution of (7) for \( x^n_0=\mathcal {P}_nx_0\), \(g^n = \mathcal {P}_n g\) and \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\), \(\overline{X}_{\tau _-,h}^{\varepsilon ,\delta ,n}\) be the respective time-interpolant (52), (53) of the numerical solution \(\{X_{\varepsilon ,\delta ,n,h}^{i}\}_{i=1}^N\) of (42). Then \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\), \(\overline{X}_{\tau _-,h}^{\varepsilon ,\delta ,n}\) converge to \(X^{\varepsilon ,\delta }_{n}\) for \(\tau ,h \rightarrow 0\) in the sense that the weak limits from (57), (58) satisfy \(Y\equiv X^{\varepsilon ,\delta }_{n}\), \(a^{\varepsilon ,\delta }\equiv A^{\varepsilon ,\delta }Y \equiv A^{\varepsilon ,\delta }X^{\varepsilon ,\delta }_{n}\) and \(\nu =Y(T)\equiv X^{\varepsilon ,\delta }_{n}(T)\). In addition it holds for almost all \((\omega ,t) \in \Omega \times (0,T)\) that

and there is an \(\mathbb {L}^2\)-valued continuous modification of \(Y\) (denoted again as \(Y\)) such that for all \(t \in [0,T]\)

The strong monotonicity property (10) of the operator \(A^{\varepsilon ,\delta }\) implies strong convergence of the numerical approximation in \(L^2(\Omega \times (0,T);\mathbb {L}^2)\).

Lemma 4.7

Let \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g\in \mathbb {L}^2\) be given, let \(\varepsilon , \delta , \lambda >0\), \(n \in \mathbb {N}\) be fixed. Further, let \(X^{\varepsilon ,\delta }_{n}\) be the variational solution of (7) for \( x^n_0=\mathcal {P}_nx_0\), \(g^n = \mathcal {P}_n g\) and \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\) be the time-interpolant (52) of the numerical solution \(\{X_{\varepsilon ,\delta ,n,h}^{i}\}_{i=1}^N\) of (42). Then the following convergence holds true

Proof of Lemma 4.7

The proof follows along the lines of [6, 9]. We sketch the main steps of the proof for the convenience of the reader.

We note that \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\) satisfies (cf. proof of Lemma 4.5)

where \(\displaystyle R_{\tau }(t):= \mathbb {E}\left[ \int _t^{\theta _+(t)}2\langle A^{\varepsilon ,\delta }\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}(s),\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}(s)\rangle -\Vert \overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}(s)\Vert ^2\,\mathrm {d}s \right] \).

We reformulate the third term on the right-hand side in (63) as

We substitute the equality above into (63) and obtain for \(\kappa \ge 1\) that

We observe that \( \int _0^T e^{-\kappa s}\vert R_\tau (s)\vert \,\mathrm {d}s \rightarrow 0\) for \(\tau \rightarrow 0\). Hence, by the lower-semicontinuity of norms, using the convergence properties from Lemma 4.6 and the monotonicity property (10), we get for \(\tau ,h \rightarrow 0\) that

It is not difficult to see that (61) for \(Y\equiv X^{\varepsilon ,\delta }_n\) implies

We subtract the equality (65) from (64) and obtain for \(\kappa \ge 1\)

Hence, we conclude that \( \overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\rightarrow X^{\varepsilon ,\delta }_{n}\) in \(L^2(\Omega ;L^2((0,T);\mathbb {L}^2))\). \(\square \)

Remark 4.1

It is obvious from the proof of Lemma 4.7 that the strong convergence in \(L^2(\Omega \times (0,T);\mathbb {L}^2)\) remains valid for \(\lambda =0\) due to (10) by the Poincaré inequality.

The next lemma guarantees the convergence of the numerical solution of scheme (42) to the numerical solution of scheme (41) for \(\delta \rightarrow 0\).

Lemma 4.8

Let \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g\in \mathbb {L}^2\) be given. Then there exist constants \(C\equiv C(T)>0\) and \(C_n\equiv C(\mathbb {E}[\Vert x_0^n\Vert _{\mathbb {H}^1_0}], \Vert g^n\Vert _{\mathbb {H}^1_0})>0\) such that for any \(N\in \mathbb {N}\), \(\delta >0\), \(n\in \mathbb {N}\), \(h,\varepsilon \in (0,1]\) the following estimate holds for the difference of numerical solutions of (41) and (42):

We note that the n-dependent constant \(C_n\) in the estimate above is due to the a priori estimate (48); for \(\mathbb {H}^1_0\)-regular data \(x_0\), g it holds that \(C_n\equiv C(\mathbb {E}[\Vert x_0\Vert _{\mathbb {H}^1_0}], \Vert g\Vert _{\mathbb {H}^1_0})\) by the stability of the discrete \(\mathbb {L}^2\)-projection \(\mathcal {P}_h:\mathbb {H}^1_0\rightarrow \mathbb {V}_h\) in \(\mathbb {H}^1_0\).

Proof of Lemma 4.8

We define \(Z^{i}_{\varepsilon ,h}:=X_{\varepsilon ,h}^{i}-X_{\varepsilon ,\delta ,n,h}^{i}\). From (41) and (42) we get

We set \(v_h=Z^{i}_{\varepsilon ,h}\) and obtain

We note that

and by the Cauchy–Schwarz and Young’s inequalities

From the convexity (12) it follows that

Hence, we obtain that

We estimate the last term on the right-hand side above as

and substitute the above identity into (66)

Next, we sum up the above inequality up to \(i\le N\) and obtain

After taking expectation in the above and using the independence properties of Wiener increments and the estimate (48) we arrive at

with \(C_n \equiv C(\Vert x_0^n\Vert _{\mathbb {H}^1_0}, \Vert g^n\Vert _{\mathbb {H}^1_0})\). Finally, the discrete Gronwall lemma yields for \(i=1,\ldots ,N\) that

which concludes the proof. \(\square \)

We define the piecewise constant time-interpolant of the discrete solution \(\{X_{\varepsilon ,h}^{i}\}_{i=0}^N\) of (41) for \(t\in [0,T)\) as

We are now ready to state the second main result of this paper which is the convergence of the numerical approximation (41) to the unique SVI solution of the total variation flow (1) (cf. Definition 3.1).

Theorem 4.1

Let X be the SVI solution of (1) and let \(\overline{X}_{\tau ,h}^{\varepsilon }\) be the time-interpolant (68) of the numerical solution of the scheme (41). Then the following convergence holds true

Proof of Theorem 4.1

For \(x_0 \in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\) and \(g\in \mathbb {L}^2\) we define the \(\mathbb {H}^1_0\)-approximating sequences \(\{x_0^n\}_{n\in \mathbb {N}}\subset \mathbb {H}^1_0\), \(x_0^n\rightarrow x_0\in L^2(\Omega ,\mathcal {F}_0;\mathbb {L}^2)\), \(\{g^n\}_{n\in \mathbb {N}}\subset \mathbb {H}^1_0\), \(n\in \mathbb {N}\), \(g^n\rightarrow g\in \mathbb {L}^2\) via the \(\mathbb {L}^2\)-projection onto \(\mathbb {V}_n\subset \mathbb {H}^1_0\). We consider the solutions \(X^{\varepsilon },X^{\varepsilon ,\delta }_{n}\) of (3), (7), respectively, and denote by \(X^{\varepsilon }_n\) the SVI solution of (3) for \(x_0\equiv x_0^n\), \(g\equiv g^n\). Furthermore, we recall that the interpolant \(\overline{X}_{\tau ,h}^{\varepsilon ,\delta ,n}\) of the numerical solution of (42) was defined in (52).

We split the numerical error as

By Theorem 3.1 it follows that

To estimate the second term we consider the solutions \(X^{\varepsilon }_n\) of (3) with \(x_0\equiv x_0^n\) and \(g\equiv g^n\). From (20) we deduce that

We use (22) to estimate the third term as

The fourth term is estimated by Lemma 4.7

For the last term we use Lemma 4.8

Finally, we consecutively take \(\tau ,h \rightarrow 0\), \(\delta \rightarrow 0\), \(n\rightarrow \infty \) and \(\varepsilon \rightarrow 0\) in (70) and use the above convergence of the terms I to V to obtain (69). \(\square \)
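The structure of the error splitting used in the proof can be sketched as follows. This is a reconstruction from the five steps described above, not a quotation of (70): the symbol \(|||\cdot |||\) is a placeholder (assumed here) for whichever norm appears in (69), and (70) may contain squares and constants that are not shown.

```latex
\[
\begin{aligned}
  |||X - \overline{X}^{\varepsilon}_{\tau,h}|||
  \le{}& |||X - X^{\varepsilon}|||
       + |||X^{\varepsilon} - X^{\varepsilon}_{n}|||
       + |||X^{\varepsilon}_{n} - X^{\varepsilon,\delta}_{n}||| \\
     & + |||X^{\varepsilon,\delta}_{n} - \overline{X}^{\varepsilon,\delta,n}_{\tau,h}|||
       + |||\overline{X}^{\varepsilon,\delta,n}_{\tau,h} - \overline{X}^{\varepsilon}_{\tau,h}||| \\
  =:{}& I + II + III + IV + V .
\end{aligned}
\]
```

In this reading, I is controlled by Theorem 3.1, II by (20), III by (22), IV by Lemma 4.7 and V by Lemma 4.8, which matches the order in which the limits are taken.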

Remark 4.2

We note that the convergence analysis simplifies in the case that the problem data have higher regularity. For \(x_0,g\in \mathbb {H}^1_0\) it is possible to show that the problem (3) admits a unique variational solution [which is also an SVI solution of (3) by uniqueness] by a slight modification of standard monotonicity arguments. This is due to the fact that the operator (8) retains all its properties for \(\delta =0\) except for the coercivity. The coercivity is only required to guarantee \(\mathbb {H}^1_0\)-stability of the solution; nevertheless, the stability can also be obtained directly by the Itô formula on the continuous level, cf. Lemma 3.2, or analogously to Lemma 4.5 on the discrete level, even for \(\delta =0\). Consequently, for \(\mathbb {H}^1_0\)-data the convergence of the numerical solution \(\overline{X}_{\tau ,h}^{\varepsilon }\) can be shown as in Theorem 4.1 without the additional \(\delta \)-regularization step.

We conclude this section by showing unconditional stability of scheme (41), i.e., we show that the numerical solution satisfies a discrete energy law which is an analogue of the energy estimate (27).

Lemma 4.9

Let \(x_0, g\in \mathbb {L}^2\) and \(T> 0\). Then there exists a constant \(C\equiv C(T)\) such that the solutions of scheme (41) satisfy for any \(\varepsilon ,h\in (0,1]\), \(N\in \mathbb {N}\)

Proof of Lemma 4.9

We set \(v_h\equiv X_{\varepsilon ,h}^{i}\) in (41) and obtain

Using the convexity of \(\mathcal {J}_\varepsilon \) along with the identity

we get from (72) that

After taking the expectation and summing up over i in (73), and noting that \(\mathcal {J}_{\varepsilon }(0) =\varepsilon \vert \mathcal {O}\vert \) we obtain

Hence (71) follows after an application of the discrete Gronwall lemma. \(\square \)
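The value \(\mathcal {J}_{\varepsilon }(0)=\varepsilon \vert \mathcal {O}\vert \) used above can be checked numerically with a minimal sketch. It assumes that \(\mathcal {J}_\varepsilon (u)=\int _{\mathcal {O}}\sqrt{\vert \nabla u\vert ^2+\varepsilon ^2}\,\mathrm {d}x\) (consistent with the value at zero), that \(\mathcal {O}\) is the unit square, and that the mesh is a uniform grid in which every square cell is split into two triangles; the function name `regularized_tv_energy` and this particular mesh are illustrative choices, not taken from the paper.

```python
import numpy as np


def regularized_tv_energy(u, h, eps):
    """Sum of sqrt(|grad u|^2 + eps^2) * area over all triangles.

    u[i, j] holds the nodal value at (i*h, j*h). Each square cell is
    split into a lower-left and an upper-right triangle (assumed mesh),
    on which the gradient of a piecewise linear function is constant."""
    # Lower-left triangles: vertices (i, j), (i+1, j), (i, j+1).
    dx_low = (u[1:, :-1] - u[:-1, :-1]) / h
    dy_low = (u[:-1, 1:] - u[:-1, :-1]) / h
    # Upper-right triangles: vertices (i+1, j+1), (i, j+1), (i+1, j).
    dx_up = (u[1:, 1:] - u[:-1, 1:]) / h
    dy_up = (u[1:, 1:] - u[1:, :-1]) / h
    area = 0.5 * h * h
    low = np.sqrt(dx_low**2 + dy_low**2 + eps**2).sum()
    up = np.sqrt(dx_up**2 + dy_up**2 + eps**2).sum()
    return area * (low + up)
```

For the zero function the sketch returns \(\varepsilon \) up to round-off, since \(\vert \mathcal {O}\vert =1\); for \(u(x,y)=x\) it returns \(\sqrt{1+\varepsilon ^2}\).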

5 Numerical experiments

We perform numerical experiments using a generalization of the fully discrete finite element scheme (41) on the unit square \(\mathcal {O}= (0,1)^2\). The scheme for \(i=1, \dots , N\) then reads as

where \(g^h,\,x_0^h\in \mathbb {V}_h\) are suitable approximations of g, \(x_0\) (e.g., the orthogonal projections onto \(\mathbb {V}_h\)), respectively, and \(\mu >0\) is a constant. The multiplicative space-time noise \(\sigma (X^{i-1}_{\varepsilon ,h})\Delta _i W^h\) is constructed as follows. The term \(W^h\) is taken to be a \(\mathbb {V}_h\)-valued space-time noise of the form

where \({\beta }_\ell \), \(\ell =1,\dots , L\) are independent scalar-valued Wiener processes and \(\{\varphi _\ell \}_{\ell =1}^L\) is the standard ‘nodal’ finite element basis of \(\mathbb {V}_h\). In the simulations below we employ three practically relevant choices of \(\sigma \): a tracking-type noise \(\sigma (X) \equiv \sigma _1(X) = |X - g^h|\), a gradient type noise \(\sigma (X) \equiv \sigma _2(X) = |\nabla X |\) and the additive noise \(\sigma (X)\equiv \sigma _3 = 1\); in the first case the noise is small when the solution is close to the ‘noisy image’ \(g^h\), in the gradient noise case the noise is localized along the edges of the image. We note that the fully discrete finite element scheme (74) corresponds to an approximation of the regularized Eq. (3) with a slightly more general space-time noise term of the form \(\mu \sigma (X^{\varepsilon }) \mathrm {d}W\).
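A minimal sketch of how one increment of the noise term could be sampled at the mesh nodes is given below. It assumes that \(\sigma (X)\Delta _i W^h\) is evaluated nodally, i.e. node \(\ell \) receives \(\mu \,\sigma (X)_\ell \,\Delta _i\beta _\ell \) with \(\Delta _i\beta _\ell \sim \mathcal {N}(0,\tau )\); how the product enters (74) is not reproduced above, so this nodal treatment and the name `noise_increment` are assumptions. The gradient-type noise \(\sigma _2\) is left out because \(\nabla X\) is piecewise constant on elements rather than defined at nodes.

```python
import numpy as np


def noise_increment(x_nodal, g_nodal, tau, mu=1.0, kind="tracking", rng=None):
    """Sample mu * sigma(X) * (Delta beta) at the nodes (assumed nodal form).

    kind="tracking" uses sigma_1(X) = |X - g^h|,
    kind="additive" uses sigma_3 = 1."""
    rng = np.random.default_rng() if rng is None else rng
    # Independent Wiener increments, one per node: N(0, tau).
    d_beta = rng.normal(0.0, np.sqrt(tau), size=x_nodal.shape)
    if kind == "tracking":
        sigma = np.abs(x_nodal - g_nodal)
    elif kind == "additive":
        sigma = np.ones_like(x_nodal)
    else:
        raise ValueError("kind must be 'tracking' or 'additive'")
    return mu * sigma * d_beta
```

With the tracking-type choice the increment vanishes wherever the nodal values of the solution and of \(g^h\) agree, which reflects the remark that this noise is small when the solution is close to the noisy image.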

In all experiments we set \(T=0.05\), \(\lambda =200\), \(x_0 \equiv x_0^h\equiv 0\). If not mentioned otherwise we use the time step \(\tau = 10^{-5}\), the mesh size \(h = 2^{-5}\) and set \(\varepsilon =h=2^{-5}\), \(\mu =1\). We define \(g \in \mathbb {V}_h\) as a piecewise linear interpolation of the characteristic function of a circle with radius 0.25 on the finite element mesh, see Fig. 1 (left), and set \(g^h = g + \xi _h \in \mathbb {V}_h\) with \( \xi _h(x) = \nu \sum _{\ell =1}^{L} \varphi _\ell (x) \xi _\ell \), \(x\in \mathcal {O}\) where \(\xi _\ell \), \(\ell =1,\dots , L\) are realizations of independent \(\mathcal {U}(-1,1)\)-distributed random variables. If not indicated otherwise we use \(\nu =0.1\); the corresponding realization of \(\xi _h\) is displayed in Fig. 1 (right).
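The construction of the test data can be sketched as follows. The sketch assumes a uniform grid of nodes on the unit square, a disc centered at \((0.5,0.5)\) (the center is not stated above), and a perturbation applied at every node including the boundary; `make_test_image` is a hypothetical helper and the arrays hold nodal values of the piecewise linear functions.

```python
import numpy as np


def make_test_image(h=2.0**-5, radius=0.25, nu=0.1, center=(0.5, 0.5), seed=0):
    """Return nodal values of g and of the noisy image g^h = g + xi_h.

    g is the nodal interpolation of the characteristic function of a
    disc; xi_h has nodal values nu * xi_l with xi_l ~ U(-1, 1).
    The disc center and the uniform node grid are assumptions."""
    n = int(round(1.0 / h)) + 1
    xs = np.linspace(0.0, 1.0, n)
    xx, yy = np.meshgrid(xs, xs, indexing="ij")
    inside = (xx - center[0]) ** 2 + (yy - center[1]) ** 2 <= radius**2
    g = inside.astype(float)
    rng = np.random.default_rng(seed)
    xi = rng.uniform(-1.0, 1.0, size=g.shape)
    return g, g + nu * xi
```

Calling `make_test_image()` with the default arguments yields two arrays of shape `(33, 33)` for \(h=2^{-5}\), and the perturbation never exceeds \(\nu \) in absolute value.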

We choose \(\varepsilon =h=2^{-5}\), \(\mu =1\), \(\sigma \equiv \sigma _1\) as parameters for the ‘baseline’ experiment; the individual parameters are then varied in order to demonstrate their influence on the evolution. The time-evolution of the discrete energy functional \(\mathcal {J}_{\varepsilon ,\lambda }(X_{\varepsilon ,h}^{i})\), \(i=1,\dots ,N\) for a typical realization of the space-time noise \(W^h\) is displayed in Fig. 2; in the legend of the graph we state parameters which differ from the parameters of the baseline experiment, e.g., the legend ‘\(sigma_2,\, mu=0.125\)’ corresponds to the parameters \(\sigma \equiv \sigma _2\), \(\mu =0.125\) and the remaining parameters are left unchanged, i.e., \(\varepsilon =h=2^{-5}\). For all considered parameter setups, except for the case of the noisier image \(\nu =0.2\), the evolution remained close to the discrete energy of the deterministic problem [i.e., (74) with \(\mu =0\)]. The energy decreases over time until the solution is close to the (discrete) minimum of \(\mathcal {J}_{\varepsilon ,\lambda }\); to highlight the differences we display a zoomed-in view of the graphs. We observe that in the early stages (not displayed) the energy of stochastic evolutions with sufficiently small noise typically remained below the energy of the deterministic problem, and the situation reversed as the solution approached the stationary state.

Fig. 2 Evolution of the discrete energy: \(\sigma \equiv \sigma _1\), \(h=2^{-5}\), \(\varepsilon =h,\frac{h}{2}\), \(\mu =1,2\) (left); \(\sigma \equiv \sigma _1,\sigma _2,\sigma _3\), \(\sigma \equiv \sigma _1\), \(h=2^{-5}\), \(\varepsilon =2h\), \(\sigma =\sigma _1\), \(\varepsilon =h=2^{-6}\) and \(\nu =0.2\) (middle and right)

In Fig. 3 we display the solution at the final time computed with \(\sigma \equiv \sigma _1\), \(\varepsilon =h\) for \(h=2^{-5}, 2^{-6}\), respectively, and \(\sigma \equiv \sigma _2\), \(\varepsilon =h=2^{-5}\); graphically the results of the remaining simulations did not significantly differ from the first case. The displayed results may indicate that the noise \(\sigma _2\) yields worse results than the noise \(\sigma _1\) and \(\sigma _3\); however, for a sufficiently small value of \(\mu \) the results would remain close to the deterministic simulation as well. We have magnified the noise intensity \(\mu \) to highlight the differences from the other noise types (i.e., the noise is concentrated along the edges of the image). We note that the gradient type noise \(\sigma _2\) might be a preferred choice for practical computations, cf. [14].

Change history

04 August 2022

A Correction to this paper has been published: https://doi.org/10.1007/s40072-022-00267-5

References

[1] Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford Mathematical Monographs. The Clarendon Press, Oxford University Press, New York (2000)

[2] Attouch, H., Buttazzo, G., Michaille, G.: Variational Analysis in Sobolev and BV Spaces. Applications to PDEs and Optimization. MPS/SIAM Series on Optimization, vol. 6. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA; Mathematical Programming Society (MPS), Philadelphia, PA (2006)

[3] Barbu, V., Röckner, M.: Stochastic variational inequalities and applications to the total variation flow perturbed by linear multiplicative noise. Arch. Ration. Mech. Anal. 209(3), 797–834 (2013)

[4] Bartels, S., Milicevic, M.: Stability and experimental comparison of prototypical iterative schemes for total variation regularized problems. Comput. Methods Appl. Math. 16(3), 361–388 (2016)

[5] Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods, 2nd edn. Springer, New York (2002)

[6] Emmrich, E., Šiška, D.: Nonlinear stochastic evolution equations of second order with damping. Stoch. Partial Differ. Equ. Anal. Comput. 5(1), 81–112 (2017)

[7] Feng, X., Prohl, A.: Analysis of total variation flow and its finite element approximations. M2AN Math. Model. Numer. Anal. 37(3), 533–556 (2003)

[8] Gess, B., Tölle, J.: Stability of solutions to stochastic partial differential equations. J. Differ. Equ. 260(6), 4973–5025 (2016)

[9] Gyöngy, I., Millet, A.: On discretization schemes for stochastic evolution equations. Potential Anal. 23(2), 99–134 (2005)

[10] Juan, O., Keriven, R., Postelnicu, G.: Stochastic motion and the level set method in computer vision: stochastic active contours. Int. J. Comput. Vis. 69, 7–25 (2006)

[11] Krylov, N.V., Rozovskii, B.L.: Stochastic evolution equations. In: Stochastic Differential Equations: Theory and Applications, Volume 2 of Interdiscip. Math. Sci., pp. 1–69. World Sci. Publ., Hackensack, NJ (2007)

[12] Liu, W., Röckner, M.: Stochastic Partial Differential Equations: an Introduction. Universitext. Springer, Cham (2015)

[13] Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D 60(1–4), 259–268 (1992)

[14] Sixou, B., Wang, L., Peyrin, F.: Stochastic diffusion equation with singular diffusivity and gradient-dependent noise in binary tomography. J. Phys Conf. Ser. 69, 012001 (2010). 542

[15] Temam, R.: Navier–Stokes Equations. Theory and Numerical Analysis. Studies in Mathematics and its Applications, vol. 2. North-Holland Publishing Co., Amsterdam-New York-Oxford (1977)

Acknowledgements

Open Access funding provided by Projekt DEAL. This work was supported by the Deutsche Forschungsgemeinschaft through SFB 1283 “Taming uncertainty and profiting from randomness and low regularity in analysis, stochastics and their applications”. The authors would like to thank the referee for careful reading of the manuscript and constructive comments, as well as Lars Diening for stimulating discussions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Baňas, L., Röckner, M. & Wilke, A. Convergent numerical approximation of the stochastic total variation flow. Stoch PDE: Anal Comp 9, 437–471 (2021). https://doi.org/10.1007/s40072-020-00169-4

DOI: https://doi.org/10.1007/s40072-020-00169-4