Abstract

We discuss Gaussians and mixtures of Gaussians arising as limits of open quantum random walks (OQRWs). Central limit theorems for OQRWs under certain conditions were proven by Attal et al (Ann Henri Poincaré 16(1):15–43, 2015) on the integer lattices and by Ko et al (J Stat Phys 176:710–735, 2019) on the crystal lattices. The purpose of this paper is to investigate the general situation. We show that the limiting Gaussian or mixture of Gaussians depends on the structure of the invariant states of the intrinsic quantum Markov semigroup whose generator is given by the Kraus operators that generate the OQRW. We then consider concrete models of OQRWs on crystal lattices, whose intrinsic structure makes it convenient to construct dynamics with the desired features. Specifically, we consider the crystal lattice \(\mathbb {Z}^2\) with two intrinsic points and the hexagonal, triangular, and Kagome lattices. We also discuss Fourier analysis on crystal lattices, which provides another method for obtaining the limit theorems.


References

[1] Attal, S., Guillotin-Plantard, N., Sabot, C.: Central limit theorems for open quantum random walks and quantum measurement records. Ann. Henri Poincaré 16(1), 15–43 (2015)

[2] Attal, S., Petruccione, F., Sabot, C., Sinayskiy, I.: Open quantum random walks. J. Stat. Phys. 147, 832–852 (2012)

[3] Attal, S., Petruccione, F., Sinayskiy, I.: Open quantum walks on graphs. Phys. Lett. A 376(18), 1545–1548 (2012)

[4] Fagnola, F., Rebolledo, R.: Transience and recurrence of quantum Markov semigroups. Probab. Theory Relat. Fields 126, 289–306 (2003)

[5] Fagnola, F., Rebolledo, R.: Quantum Markov semigroups and their stationary states. In: Stochastic Analysis and Mathematical Physics II. Trends Math., pp. 77–128 (2003)

[6] Fagnola, F., Pellicer, R.: Irreducible and periodic positive maps. Commun. Stoch. Anal. 3(3), 407–418 (2009)

[7] Ko, C.K., Konno, N., Segawa, E., Yoo, H.J.: How does Grover walk recognize the shape of crystal lattice? Quantum Inf. Process. 17(7), 167 (2018)

[8] Ko, C.K., Konno, N., Segawa, E., Yoo, H.J.: Central limit theorems for open quantum random walks on the crystal lattices. J. Stat. Phys. 176, 710–735 (2019)

[9] Konno, N., Yoo, H.J.: Limit theorems for open quantum random walks. J. Stat. Phys. 150, 299–319 (2013)

[10] Sunada, T.: Topological Crystallography with a View Towards Discrete Geometric Analysis. Surveys and Tutorials in Applied Mathematical Sciences, vol. 6. Springer (2013)

[11] Umanita, V.: Classification and decomposition of quantum Markov semigroups. Thesis, Politecnico di Milano (2005)

[12] Umanita, V.: Classification and decomposition of quantum Markov semigroups. Probab. Theory Relat. Fields 134, 603–623 (2006)

Acknowledgements

We are grateful to Professor Franco Fagnola and Professor Veronica Umanita for many helpful discussions and for providing reference [11]. We thank Mrs. Yoo Jin Cha for help with the figures. The research by H. J. Yoo was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2016R1D1A1B03936006).


Appendices

A Examples: central limit theorem

In this Appendix, we consider further examples satisfying the CLT. Examples of OQRWs on the hexagonal lattice were investigated in [8]; here, we consider the triangular and Kagome lattices.

A.1 Triangular lattice

The triangular lattice is a crystal lattice realized in \(\mathbb {R}^2\); see Figure 4.

A.1.1 Preparation

We let \(V_0=\{u\}\) and let \(\{e_i\}_{i=1,2,3}\) be the three self-loops in \(G_0\) with \(\mathrm {o}(e_i)=\mathrm {t}(e_i)=u\) (see Figure 4). The reversed self-loops are denoted by \(\overline{e}_i\), \(i=1,2,3\). It would be natural to take \(\mathfrak {h}\equiv \mathfrak {h}_u=\mathbb {C}^6\) and look for six \(6\times 6\) matrices B(e), \(e\in A(G_0)\), that satisfy (2.4). Investigating all such cases is beyond our scope, so we focus on simple examples that satisfy the central limit theorem. To this end, we let \(\mathfrak {h}=\mathbb {C}^3\oplus \mathbb {C}^3\) and write B(e), \(e\in A(G_0)\), as block matrices with \(3\times 3\) blocks, as follows. The construction is very similar to the example for the hexagonal lattice studied in [8]. First, we let

and \(\widehat{\theta }(\overline{e}_i)=-\widehat{\theta }(e_i)\), \(i=1,2,3\). To define the operators B(e), \(e\in A(G_0)\), let \(U=\left[ \begin{matrix} \mathbf{u}_1&\mathbf{u}_2&\mathbf{u}_3\end{matrix}\right] \) and \(V=\left[ \begin{matrix} \mathbf{v}_1&\mathbf{v}_2&\mathbf{v}_3\end{matrix}\right] \) be \(3\times 3\) unitary matrices with column vectors \(\mathbf{u}_i= [u_{1i}, u_{2i},u_{3i}]^T\) and \(\mathbf{v}_i= [v_{1i},v_{2i},v_{3i}]^T\), \(i=1,2,3\). For \(i=1,2,3\), let \(U_i\) be the \(3\times 3\) matrix whose ith column is \(\mathbf{u}_i\) and whose remaining columns are zero; similarly, let \(V_i\) be the \(3\times 3\) matrix whose ith column is \(\mathbf{v}_i\) and whose other columns are zero. For \(i=1,2,3\), let \(\widetilde{U}_i\) and \(\widetilde{V}_i\) be the \(6\times 6\) matrices whose blocks are given as follows:

Now, we define

Then, \(B(e_i),\, B(\overline{e}_i),\, i=1,2,3\) satisfy condition (2.4).
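The column-selector matrices \(U_i\) (and likewise \(V_i\)) always form a partition of the identity: because each column \(\mathbf{u}_i\) is a unit vector, \(U_i^*U_i\) is the projection onto the ith coordinate, so \(\sum _i U_i^*U_i=I_3\). Assuming (2.4) is the usual OQRW normalization \(\sum _e B(e)^*B(e)=I\), this \(3\times 3\) identity is presumably the ingredient behind it. Since the block layout of \(\widetilde{U}_i\) and \(\widetilde{V}_i\) is not reproduced here, the minimal sketch below checks only the \(3\times 3\) identity, with a randomly generated unitary (a hypothetical stand-in) in place of U.

```python
import numpy as np

def random_unitary(n, seed=0):
    """Random n x n unitary from the QR factorization of a complex Gaussian matrix."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    q, r = np.linalg.qr(z)
    # Rescale the columns of Q by the phases of diag(R); Q stays unitary.
    d = np.diag(r)
    return q * (d / np.abs(d))

def column_selectors(U):
    """Return [U_1, ..., U_n], where U_i keeps the i-th column of U and zeros the rest."""
    mats = []
    for i in range(U.shape[1]):
        Ui = np.zeros_like(U)
        Ui[:, i] = U[:, i]
        mats.append(Ui)
    return mats

U = random_unitary(3)          # hypothetical stand-in for the unitary U (or V)
Us = column_selectors(U)
# U_i^* U_i is the projection onto the i-th coordinate because ||u_i|| = 1.
total = sum(Ui.conj().T @ Ui for Ui in Us)
print(np.allclose(total, np.eye(3)))   # True
```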

It is easy to check that a state \(\rho \in \mathcal {E}(\mathfrak {h})\) is a solution to the equation \(\mathcal {L}_*(\rho )=0\), where \(\mathcal {L}_*(\rho )\) was defined in (3.1), if and only if \(\rho =\rho _1\oplus \rho _2\) and it holds that

Let us consider the following (doubly) stochastic matrices:

It was shown in [8, Proposition 4.1] that if the stochastic matrices \(P_UP_V\) and \(P_VP_U\) are irreducible, then the equation \(\mathcal {L}_*(\rho )=0\) has a unique state solution \(\rho =\rho _1\oplus \rho _2\) with \(\rho _1=\rho _2=\frac{1}{6}I\).
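The irreducibility hypothesis can be tested numerically. The sketch below is a minimal illustration under two assumptions: that \(P_U\) in (A.2) is the unistochastic matrix with entries \(|u_{ij}|^2\) (doubly stochastic whenever U is unitary), and that the matrix \(U_G\) of (A.3), used in the examples below, is the \(3\times 3\) Grover matrix \(\frac{2}{3}J-I\), with J the all-ones matrix. Irreducibility of a nonnegative \(n\times n\) matrix M is tested with the standard criterion that \((I+M)^{n-1}\) has no zero entry.

```python
import numpy as np

def unistochastic(U):
    """Assumed form of P_U: entrywise squared moduli of the unitary U."""
    return np.abs(U) ** 2

def is_irreducible(M):
    """A nonnegative n x n matrix is irreducible iff (I + M)^(n-1) has no zero entry."""
    n = M.shape[0]
    R = np.linalg.matrix_power(np.eye(n) + (M > 0), n - 1)
    return bool(np.all(R > 0))

# Hypothetical stand-in for U_G: the 3x3 Grover matrix (2/3)J - I.
U_G = 2.0 / 3.0 * np.ones((3, 3)) - np.eye(3)
P_U = unistochastic(U_G)
P_V = unistochastic(U_G)         # the case U = V = U_G
P_I = unistochastic(np.eye(3))   # the case V = I

print(np.allclose(P_U.sum(axis=0), 1), np.allclose(P_U.sum(axis=1), 1))  # True True
print(is_irreducible(P_U @ P_V), is_irreducible(P_V @ P_U))              # True True
print(is_irreducible(P_U @ P_I), is_irreducible(P_I @ P_U))              # True True
```

The last line covers the case \(V=I\) treated in the zero-covariance example, where \(P_V=I\) and both products reduce to \(P_U\).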

Example: nonzero covariance

Let us take \(U=V=U_G\), where

It is obvious that \(P_UP_V=P_VP_U\) is irreducible, where \(P_U\) and \(P_V\) are defined in (A.2). Therefore, the equation \(\mathcal {L}_*(\rho )=0\) has a unique state solution \(\rho =\frac{1}{6}I\oplus \frac{1}{6}I\). From equation (3.2), it is easy to see that \(m=0\). By direct computation from (3.3), we get, up to an additive constant multiple of the identity,

and

Notice that the transformation matrix \(\Theta \) in (2.1) is given by

By the linearity of equation (3.3), we have (see [8, Remark 3.6])

Therefore, we get

and

with

Now, we are ready to compute the covariance matrix \(\Sigma \) given in (3.4). Since the mean m is zero and \(\rho _\infty =\frac{1}{6}I\), we are left with

For the first term \(C^{(1)}_{ij}\), every trace factor equals 1, and thus we get

For the second term \(C^{(2)}_{ij}\), the computations before taking the trace give

and so we get

Summing these two terms, we obtain the covariance matrix

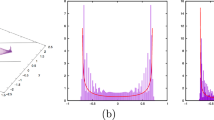

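The characteristic function of the Gaussian random variable X with mean zero and covariance \(\Sigma \) in (A.7) is \(\mathbb {E}\left[ e^{i\langle \mathbf{t},X\rangle }\right] =e^{-\frac{1}{2}\langle \mathbf{t},\Sigma \mathbf{t}\rangle }\).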

Note that the variance along the horizontal (x-) axis is smaller than that along the vertical (y-) axis. This reflects the fact that along the vertical direction there are “roads” (the vectors \(\widehat{\theta }(e_3)\) and \(\widehat{\theta }(\overline{e}_3)\)) along which the walker can travel.

Example: zero covariance

Let us consider one more example of an OQRW on the triangular lattice. This time, we take \(U=U_G\) in (A.3) and \(V=I\). In this case, the matrices \(P_UP_V\) and \(P_VP_U\) are also irreducible, and hence the equation \(\mathcal {L}_*(\rho )=0\) has a unique state solution \(\rho _\infty =\frac{1}{6}I\). From equation (3.2), it is easy to see that \(m=0\). As before, the solutions of (3.3) are, up to an additive constant multiple of the identity,

and

Recall \(\Theta \) in (A.4). We then get

and

The covariance matrix can be computed as before, and we get \(\Sigma =0\). Thus the limit is a Gaussian with zero mean and zero covariance, that is, the Dirac measure at the origin.
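For reference, a centred Gaussian X with covariance \(\Sigma \) has characteristic function \(\mathbb {E}[e^{i\langle \mathbf{t},X\rangle }]=e^{-\frac{1}{2}\langle \mathbf{t},\Sigma \mathbf{t}\rangle }\). When \(\Sigma =0\) this is identically 1, the characteristic function of the Dirac measure at the origin, which is the situation just described. The minimal sketch below compares the closed form with a Monte Carlo estimate; the covariance used is an arbitrary positive-definite placeholder, not the matrix (A.7).

```python
import numpy as np

def gaussian_cf(t, Sigma):
    """Characteristic function of N(0, Sigma) evaluated at the point t."""
    t = np.asarray(t, dtype=float)
    return np.exp(-0.5 * t @ Sigma @ t)

def empirical_cf(samples, t):
    """Monte Carlo estimate of E[exp(i <t, X>)] from samples of shape (N, 2)."""
    return np.mean(np.exp(1j * samples @ np.asarray(t, dtype=float)))

rng = np.random.default_rng(1)
Sigma = np.array([[0.5, 0.1], [0.1, 1.0]])   # placeholder covariance, not (A.7)
X = rng.multivariate_normal(mean=[0.0, 0.0], cov=Sigma, size=200_000)
t = [0.7, -1.2]
print(abs(empirical_cf(X, t) - gaussian_cf(t, Sigma)))   # small Monte Carlo error

# Zero covariance: the characteristic function is identically 1 (Dirac mass at 0).
print(gaussian_cf(t, np.zeros((2, 2))))   # 1.0
```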

A.2 Kagome lattice

In this subsection, we consider OQRWs on the Kagome lattice, shown in Fig. 5.

We let \(V_0=\{1, 2, 3\}\) by naming the vertices with numbers. For \(1\le i\ne j\le 3\), let \(e_{ij}\) and \(f_{ij}\) denote the directed edges in \(G_0\) (12 in total), with the convention \(\mathrm {o}(e_{ij})=j\) and \(\mathrm {t}(e_{ij})=i\), and similarly for the \(f_{ij}\). Note that \(\overline{e}_{ij}=e_{ji}\) and \(\overline{f}_{ij}=f_{ji}\). We let

and

In order to define the operators B(e), \(e\in A(G_0)\), let \(\mathfrak {h}_1=\mathfrak {h}_2=\mathfrak {h}_3=\mathbb {C}^4\), and \(\mathfrak {h}=\mathfrak {h}_1\oplus \mathfrak {h}_2\oplus \mathfrak {h}_3=\mathbb {C}^{12}\). Let H be a \(2\times 2\) unitary matrix given by

where

Notice that

Let \(U_L\), \(U_R\), \(V_L\), and \(V_R\) be \(4\times 4\) matrices given by

Notice that

For \(i,j=1,2,3\) \((i\ne j)\), let \(U_{ij}\) and \(V_{ij}\) be \(12\times 12\) matrices whose block matrices are given as follows:

and

Now, we define

Then, \(B(e_{ij}),\, B(f_{ij}),\, i,j=1,2,3\) \((i\ne j)\) satisfy condition (2.4).

Lemma A.1

The equation \(\mathcal {L}_*(\rho )=0\), where \(\mathcal {L}_*(\rho )\) was defined in (3.1), has a unique solution among states, namely \(\rho _\infty =\frac{1}{12}I\oplus \frac{1}{12}I\oplus \frac{1}{12}I\in \mathcal {E}(\mathfrak {h})\).

Proof

It is easy to check that a state \(\rho \in \mathcal {E}(\mathfrak {h})\) solves the equation \(\mathcal {L}_*(\rho )=0\) if and only if it has the form \(\rho =\rho ^{(1)}\oplus \rho ^{(2)}\oplus \rho ^{(3)}\) and satisfies

From equations (A.8), we see that the matrices \(\rho ^{(i)}\), \(i=1,2,3\), are block matrices of the form

here, \(\rho ^{(i)}_j\), \(j=1,2\), are \(2\times 2\) matrices, say

Using the form in (A.9), we can rewrite (A.8) in the following form:

where \(\rho \) and S are \(12\times 12\) block matrices defined by

It is easy to check that S is unitary. Therefore, multiplying equation (A.10) by \(S^*\) on the left and by S on the right, we also have

From (A.12), we see that \(\rho ^{(i)}_j\) are diagonal matrices:

Now equating the first block in (A.10) and (A.12), we get

or

Looking at the off-diagonal components, we get

Applying this relation to (A.15) and (A.14), we easily get

That is, \(\rho ^{(1)}_1=\rho ^{(2)}_2=\rho ^{(3)}_2\). By cyclic symmetry, all six matrices \(\rho ^{(i)}_j\), \(i=1,2,3\), \(j=1,2\), coincide. Since \(\rho \) is a state, we conclude that \(\rho =\frac{1}{12}I\oplus \frac{1}{12}I\oplus \frac{1}{12}I\in \mathcal {E}(\mathfrak {h})\), which completes the proof. \(\square \)

Let us compute the mean m and the covariance matrix \(\Sigma \). From equation (3.2), it is easy to see that \(m=0\). By direct computation from (3.3), we see that, up to an additive constant multiple of the identity,

and

Notice that

Therefore, we get by (A.5)

and

Now, we are ready to compute the covariance matrix \(\Sigma \) given in (3.4). Since the mean m is zero and \(\rho _\infty =\frac{1}{12}I\oplus \frac{1}{12}I\oplus \frac{1}{12}I\), we are left with

For the first term \(C^{(1)}_{ij}\), every trace factor equals 1, and thus we get

For the second term \(C^{(2)}_{ij}\), the terms before taking the trace are

Then, we get

Thus, the covariance matrix is

Notice that the covariance matrix (A.17) has eigenvalues \(\frac{1}{6}(3\pm \sqrt{5})\) with corresponding eigenvectors \([2\mp \sqrt{5},1]^T\).
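Since (A.17) is symmetric, the stated eigenpairs determine it. The eigenvectors \([2\mp \sqrt{5},1]^T\) are orthogonal, because \((2-\sqrt{5})(2+\sqrt{5})+1=0\), so the spectral decomposition \(\Sigma =\sum _{\pm }\lambda _\pm v_\pm v_\pm ^T/\Vert v_\pm \Vert ^2\) with \(\lambda _\pm =\frac{1}{6}(3\pm \sqrt{5})\) gives \(\Sigma =\frac{1}{6}\left[ \begin{matrix} 1&-1\\ -1&5\end{matrix}\right] \), with trace 1 and determinant 1/9. The sketch below rebuilds the matrix from the eigenpairs and checks them back; it verifies the consistency of the stated spectrum only, not the computation leading to (A.17).

```python
import numpy as np

s5 = np.sqrt(5.0)
eigvals = np.array([(3 + s5) / 6, (3 - s5) / 6])
eigvecs = np.array([[2 - s5, 1.0],    # eigenvector for (3 + sqrt 5)/6
                    [2 + s5, 1.0]])   # eigenvector for (3 - sqrt 5)/6

# Spectral reconstruction: Sigma = sum_k lambda_k v_k v_k^T / |v_k|^2.
Sigma = sum(lam * np.outer(v, v) / (v @ v) for lam, v in zip(eigvals, eigvecs))

# The reconstructed matrix is (1/6) [[1, -1], [-1, 5]].
assert np.allclose(6 * Sigma, [[1.0, -1.0], [-1.0, 5.0]])
# Each stated eigenpair is recovered.
for lam, v in zip(eigvals, eigvecs):
    assert np.allclose(Sigma @ v, lam * v)
```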

B Analytic proof of mixture of Gaussians for the hexagonal lattice

Let us recall Fourier analysis on crystal lattices and the dual process developed in [8, 9]. For a function \(f:\mathbb {L}\rightarrow \mathbb {C}\), its Fourier transform \(\widehat{f}:\Theta ({\mathbb {T}}^2)\rightarrow \mathbb {C}\) is defined by

and the inverse relation is given by

(See [8, Section 5.1] for details.) If \(\rho ^{(0)}\) is the initial condition, then the state at the nth step is given in Fourier space by [7]

Here, \(L_A\) and \(R_A\) are the left and right multiplication operators by A, respectively: \(L_A(X)=AX\) and \(R_A(X)=XA\).
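In finite dimensions, \(L_A\) and \(R_A\) become ordinary matrices once operators are vectorized: with column-stacking vec, \(\mathrm {vec}(AX)=(I\otimes A)\,\mathrm {vec}(X)\) and \(\mathrm {vec}(XA)=(A^T\otimes I)\,\mathrm {vec}(X)\). Left and right multiplications commute, and \(L_AR_{A^*}\) implements \(X\mapsto AXA^*\), the Kraus-type map from which OQRW dynamics are built. The minimal sketch below checks these identities with random matrices; it illustrates the operators only and does not reproduce the specific Fourier-space evolution above.

```python
import numpy as np

def vec(X):
    """Column-stacking vectorization."""
    return X.reshape(-1, order="F")

def left_mult(A):
    """Matrix of L_A : X -> A X acting on vec(X)."""
    return np.kron(np.eye(A.shape[0]), A)

def right_mult(A):
    """Matrix of R_A : X -> X A acting on vec(X)."""
    return np.kron(A.T, np.eye(A.shape[0]))

rng = np.random.default_rng(2)
n = 3
A = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
X = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))

print(np.allclose(left_mult(A) @ vec(X), vec(A @ X)))     # True
print(np.allclose(right_mult(A) @ vec(X), vec(X @ A)))    # True
# L_A R_{A^*} implements X -> A X A^*.
LR = left_mult(A) @ right_mult(A.conj().T)
print(np.allclose(LR @ vec(X), vec(A @ X @ A.conj().T)))  # True
```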

The dual process is the process \((Y_n(\mathbf{k}))_{\mathbf{k}\in \Theta (\mathbb {T}^2)}\in \widehat{\mathcal {A}}\) given by

Then, it holds that

In other words, the Fourier transform of the probability density \((p_x^{(n)})_{x\in \mathbb {L}}\) at time n is given by

Let us focus on the situation in which a mixture of Gaussians appears. Suppose that the stochastic matrices \(P_UP_V\) and \(P_VP_U\), defined on the state space \(\{1,2,3\}\), are reducible with a common decomposition into communicating classes, say \(\{\{1,2\}, \{3\}\}\). Put

where \(\mathrm {diag}(a,b,c)\) means the diagonal matrix with entries a, b, and c. We can show (cf. [8, Example 5.3]) that

where the components satisfy the following recurrence relations:

Therefore, we get

Here, the matrices \(\widetilde{A}_n(\mathbf{k})\) and \(\widetilde{B}_n(\mathbf{k})\) are given by

By assumption, the operators \(\widetilde{A}_n(\mathbf{k})\) and \(\widetilde{B}_n(\mathbf{k})\) are block diagonal with respect to the decomposition \(\mathbb {C}^2\oplus \mathbb {C}\). Since each block is irreducible, the restriction of \(\mathcal {L}_*\) to each block has a unique invariant state (see the proof of [8, Proposition 4.1]). Therefore, for every \(\lambda \in [0,1]\), the following states (density matrices) are invariant, i.e., satisfy \(\mathcal {L}_*(\rho ^{(\lambda )})=0\):

with

For a concrete model, let us consider \(U=V=U_H\) in (4.12). By (4.14), \(P_UP_V\) and \(P_VP_U\) are reducible with common communicating classes. Hence the equation \(\mathcal {L}_*(\rho )=0\) has infinitely many solutions: for every \(\lambda \in [0,1]\), the state \(\rho ^{(\lambda )}\) in (B.10) is invariant.
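The loss of uniqueness has a simple classical analogue: a stochastic matrix with two closed communicating classes has a one-parameter family of stationary distributions \(\lambda \pi _1+(1-\lambda )\pi _2\). The sketch below uses a made-up 3-state matrix with classes \(\{1,2\}\) and \(\{3\}\), mirroring the decomposition above; it illustrates the structure only and is not the Kraus map of the hexagonal model.

```python
import numpy as np

# Hypothetical stochastic matrix with closed classes {1, 2} and {3} (0-based: {0, 1} and {2}).
P = np.array([[0.3, 0.7, 0.0],
              [0.6, 0.4, 0.0],
              [0.0, 0.0, 1.0]])

def stationary_on_block(P, idx):
    """Stationary law of P restricted to the closed class idx, padded with zeros."""
    B = P[np.ix_(idx, idx)]
    w, v = np.linalg.eig(B.T)
    k = np.argmin(np.abs(w - 1.0))   # eigenvalue 1 of the irreducible block
    pi_block = np.real(v[:, k])
    pi_block = pi_block / pi_block.sum()
    pi = np.zeros(P.shape[0])
    pi[idx] = pi_block
    return pi

pi1 = stationary_on_block(P, [0, 1])
pi2 = stationary_on_block(P, [2])
for lam in (0.0, 0.25, 1.0):
    mix = lam * pi1 + (1 - lam) * pi2
    assert np.allclose(mix @ P, mix)   # every convex combination is stationary
print(pi1)   # stationary law supported on the class {1, 2}
```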

A Gaussian. Let us take the initial state

Hence, we have \(\widehat{\rho ^{(0)}}(\mathbf{k})=\frac{1}{2}\eta _0\oplus \frac{1}{2}\eta _0\). Therefore, putting \(u:=[1,1,1]^T\) and \(u_0:=\frac{1}{\sqrt{2}}[1,1,0]^T\), we see that (using also \(\widetilde{B}_n(\mathbf{k})=\overline{\widetilde{A}_n(\mathbf{k})}\))

Putting \(\theta _j=-\langle \mathbf{k},\widehat{\theta }_j\rangle \), \(j=1,2\), for simplicity, we have \(D=\mathrm {diag}(e^{i\theta _1},e^{i\theta _2},1)\). By defining \(P_{\pm }:=D^{\pm 1/2}PD^{\mp 1/2}\), we can write

(We have used \(Pu=u\).) Consider first the odd case \(n=2m+1\). Putting \(u_{\pm }:=D^{\pm 1/2}u_0\), we have

Note that D and P, and hence also \(P_{\pm }\), leave invariant the range of \(P_1\), i.e., the two-dimensional subspace spanned by the first two coordinates of \(\mathbb {C}^3\). Therefore, without loss of generality, we may let

We notice that

By directly computing, we get

Here,

Therefore,

Now, let us consider the asymptotics of \(\widehat{p_\cdot ^{(n)}}(\mathbf{k})\) for large n. Let \(X_n\in \mathbb {L}\) be the position of the walker at time n. We study the behavior of \(X_n/\sqrt{n}\) at large times by computing \(\mathbb {E}\left[ e^{i\langle \mathbf{t},X_n/\sqrt{n}\rangle }\right] \), which is exactly \(\widehat{p_\cdot ^{(n)}}(-\mathbf{t}/\sqrt{n})\) by (B.1). With \(\mathbf{k}=-\mathbf{t}/\sqrt{n}\), the previously defined \(\theta _j=-\langle \mathbf{k},\widehat{\theta }_j\rangle \) becomes \(\theta _j=\frac{1}{\sqrt{n}}\langle \mathbf{t}, \widehat{\theta }_j\rangle \), \(j=1,2\), and we get

where \(\varepsilon ^2(\mathbf{t})=\langle \mathbf{t},\widehat{\theta }_1-\widehat{\theta }_2\rangle ^2\). Therefore, as \(n\rightarrow \infty \),

We conclude that \(X_n/\sqrt{n}\) converges weakly to a Gaussian with covariance

The limit as \(n\rightarrow \infty \) along even n can be computed similarly and gives the same result. This result can also be anticipated from the dynamics of the walk: the movement in the y-direction is just an oscillation among the coordinates \(\{-1/\sqrt{2},0,1/\sqrt{2}\}\). Therefore, the variance in the y-direction of the walk scaled by \(1/\sqrt{n}\) converges to 0, as (B.12) shows.
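The identity \(\mathbb {E}[e^{i\langle \mathbf{t},X_n/\sqrt{n}\rangle }]=\widehat{p_\cdot ^{(n)}}(-\mathbf{t}/\sqrt{n})\) invoked above with (B.1) is consistent with the convention \(\widehat{f}(\mathbf{k})=\sum _{x\in \mathbb {L}}e^{-i\langle \mathbf{k},x\rangle }f(x)\), with no normalizing constant. Since (B.1) is not reproduced here, that convention is an assumption of this sketch, which checks the sign bookkeeping for an arbitrary finitely supported law on a lattice spanned by two hypothetical basis vectors.

```python
import numpy as np

def fourier(p, points, k):
    """Assumed convention for (B.1): f_hat(k) = sum_x exp(-i <k, x>) f(x), no normalization."""
    return np.sum(p * np.exp(-1j * (points @ k)))

rng = np.random.default_rng(3)
basis = np.array([[1.0, 0.0], [0.5, np.sqrt(3) / 2]])  # hypothetical lattice basis (rows)
coords = rng.integers(-3, 4, size=(20, 2))             # integer coordinates of support points
points = coords @ basis                                # lattice positions x in R^2
p = rng.random(20)
p /= p.sum()                                           # an arbitrary probability law on those points

n, t = 50, np.array([0.4, -1.1])
# E[exp(i <t, X / sqrt(n)>)] computed directly from the law p ...
direct = np.sum(p * np.exp(1j * (points @ t) / np.sqrt(n)))
# ... coincides with the transform evaluated at k = -t / sqrt(n).
print(np.isclose(direct, fourier(p, points, -t / np.sqrt(n))))   # True
```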

Another Gaussian. Let us take the initial state

Put \(v_0:=[0,0,1]^T\) and let \(P_2\) be the projection onto the third coordinate, so that \(v_0=P_2u\). Now, we have \(\widehat{\rho ^{(0)}}(\mathbf{k})=\frac{1}{2}\xi _0\oplus \frac{1}{2}\xi _0\). Therefore,

Here, we have used again the fact that \(\widetilde{B}_n(\mathbf{k})=\overline{\widetilde{A}_n(\mathbf{k})}\). Clearly, we have

This means that the limit is the Dirac measure at the origin. The dynamics explains why: with this initial condition, the walker never leaves the origin.

A mixture of Gaussians. Let us consider an initial condition given by a convex combination of the preceding examples:

where \(\rho ^{(0)}_1\) and \(\rho ^{(0)}_2\) are given in (B.11) and (B.13), respectively. As the preceding examples show, the contributions of \(\rho ^{(0)}_1\) and \(\rho ^{(0)}_2\) never mix as the dynamics evolves. Therefore, as \(n\rightarrow \infty \), \(X_n/\sqrt{n}\) converges weakly to the mixture of Gaussians

where \(\mu ^{(1)}\) is a Gaussian with mean 0 and covariance \(\Sigma \) in (B.12).
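The limit law above is a two-component mixture: a Gaussian with covariance \(\Sigma \) and a point mass at the origin (a degenerate Gaussian). Its characteristic function is the corresponding convex combination \(\lambda e^{-\frac{1}{2}\langle \mathbf{t},\Sigma \mathbf{t}\rangle }+(1-\lambda )\). By linearity of the evolution in the initial state, the weights should be the coefficients of the convex combination defining \(\rho ^{(0)}\). The sketch below samples such a mixture; the weight and the degenerate covariance (no spread in y, as in (B.12)) are placeholders, not values from the text.

```python
import numpy as np

rng = np.random.default_rng(4)
lam = 0.3                                    # placeholder mixture weight
Sigma = np.array([[0.5, 0.0], [0.0, 0.0]])   # placeholder degenerate covariance (no y-spread)

def mixture_cf(t, lam, Sigma):
    """Characteristic function of lam * N(0, Sigma) + (1 - lam) * delta_0."""
    t = np.asarray(t, dtype=float)
    return lam * np.exp(-0.5 * t @ Sigma @ t) + (1 - lam)

def sample_mixture(N, lam, Sigma, rng):
    """Draw N points: Gaussian with probability lam, the origin otherwise."""
    gauss = rng.multivariate_normal([0.0, 0.0], Sigma, size=N)
    keep = rng.random(N) < lam
    return gauss * keep[:, None]

X = sample_mixture(200_000, lam, Sigma, rng)
t = np.array([1.5, 2.0])
emp = np.mean(np.exp(1j * X @ t))
print(abs(emp - mixture_cf(t, lam, Sigma)))   # small Monte Carlo error
# For large |t| the Gaussian part decays and only the atom at 0 survives.
print(mixture_cf([50.0, 0.0], lam, Sigma))    # approximately 1 - lam
```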


About this article

Cite this article

Ko, C.K., Yoo, H.J. Mixture of Gaussians in the open quantum random walks. Quantum Inf Process 19, 244 (2020). https://doi.org/10.1007/s11128-020-02751-0


Keywords

- Open quantum random walks

- Quantum dynamical semigroup

- Invariant states

- Crystal lattices

- Central limit theorem

- Mixture of Gaussians