Abstract

Purpose

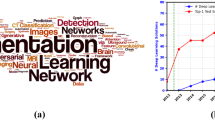

Accurate image segmentation is the first critical step in medical image analysis and interventions, and deep neural networks are a promising approach to it, provided that sufficiently large and diverse expert-annotated data are available. However, annotated datasets are often limited: images vary with acquisition parameters, annotation requires a high level of expert knowledge, and manually tracing target contours is laborious. Fast, interactive, and weakly supervised deep learning methods are therefore highly desirable.

Methods

We propose a new, efficient deep learning method that accurately segments targets in images while generating an annotated dataset for training deep learning methods. First, a generative neural network predicts prior knowledge (i.e., a contour proposal) from pseudo-contour landmarks. This contour proposal is then refined by a convolutional neural network that combines the information from the predicted prior and the raw input image. Our method was evaluated on a clinical database of 145 intraoperative transrectal ultrasound (TRUS) and 78 postoperative CT images from image-guided prostate brachytherapy. It was also evaluated on cardiac multi-structure segmentation in 450 2D echocardiographic images.
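The two-stage data flow described above (landmarks, then a contour proposal, then refinement from proposal plus raw image) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not say how pseudo-contour landmarks are encoded, so rendering them as a Gaussian heatmap is an assumption, and `generator` and `refiner` are hypothetical placeholder callables standing in for the trained generative network and the refinement CNN. The sketch only shows how the refinement stage can receive both the predicted prior and the raw image, here stacked as a two-channel input.

```python
import numpy as np


def landmarks_to_heatmap(points, shape, sigma=2.0):
    """Render (row, col) landmark points as the max of isotropic Gaussians.

    Assumption: Gaussian heatmaps are one common landmark encoding; the
    paper's actual encoding is not given in the abstract.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    heatmap = np.zeros(shape, dtype=np.float32)
    for r, c in points:
        g = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        heatmap = np.maximum(heatmap, g.astype(np.float32))
    return heatmap


def make_refinement_input(image, prior):
    """Stack the min-max normalized raw image and the prior as (2, H, W)."""
    image = np.asarray(image, dtype=np.float32)
    span = image.max() - image.min()
    norm = (image - image.min()) / span if span > 0 else np.zeros_like(image)
    prior = np.clip(np.asarray(prior, dtype=np.float32), 0.0, 1.0)
    if norm.shape != prior.shape:
        raise ValueError("image and prior must have the same shape")
    return np.stack([norm, prior], axis=0)


def two_stage_segment(image, points, generator, refiner, threshold=0.5):
    """Hypothetical pipeline: landmarks -> contour proposal -> refined mask.

    `generator` and `refiner` are placeholder callables (e.g. trained
    networks wrapped as functions); they are not part of any real library.
    """
    heatmap = landmarks_to_heatmap(points, np.shape(image))
    prior = generator(heatmap)                # stage 1: contour proposal
    x = make_refinement_input(image, prior)   # image + prior as channels
    prob = refiner(x)                         # stage 2: refined probability map
    return np.asarray(prob) >= threshold      # binary segmentation mask
```

Passing the prior as an extra input channel, rather than using it as the final answer, lets the refinement network correct the proposal using intensity information from the raw image. In a quick test, identity-like lambdas (for example `generator=lambda h: h` and `refiner=lambda x: x[1]`) can replace the trained networks.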

Results

Experimental results show that our model can segment the prostate clinical target volume in 0.499 s (i.e., 7.79 ms per image), with average Dice coefficients of 96.9 ± 0.9% and 95.4 ± 0.9%, 3D Hausdorff distances of 4.25 ± 4.58 mm and 5.17 ± 1.41 mm, and volumetric overlap ratios of 93.9 ± 1.80% and 91.3 ± 1.70% on TRUS and CT images, respectively. It also yielded an average Dice coefficient of 96.3 ± 1.3% on the echocardiographic images.
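The evaluation metrics reported above can be computed from binary masks roughly as sketched below. The abstract does not define the volumetric overlap ratio; this sketch assumes it is the Jaccard index (intersection over union), which is consistent with the reported averages because, for a single case, J = D / (2 - D) (e.g. 0.969 / 1.031 ≈ 0.940). The Hausdorff distance here is a brute-force, symmetric version over point sets already expressed in millimetres; the helper `mask_to_points_mm` and its `spacing` argument (voxel size per axis) are illustrative assumptions, not the paper's evaluation code.

```python
import numpy as np


def dice(pred, ref):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) of two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    total = pred.sum() + ref.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * np.logical_and(pred, ref).sum() / total


def jaccard(pred, ref):
    """Jaccard index |A ∩ B| / |A ∪ B| (assumed 'volumetric overlap ratio')."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    union = np.logical_or(pred, ref).sum()
    if union == 0:
        return 1.0
    return np.logical_and(pred, ref).sum() / union


def mask_to_points_mm(mask, spacing):
    """Foreground voxel indices scaled by voxel spacing (hypothetical helper)."""
    return np.argwhere(mask) * np.asarray(spacing, dtype=float)


def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between two point sets (brute force).

    Memory grows as len(a) * len(b); in practice contour or surface points,
    not every foreground voxel, are usually compared.
    """
    a = np.asarray(points_a, dtype=float)
    b = np.asarray(points_b, dtype=float)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # max over both directed distances: a -> b and b -> a
    return max(d.min(axis=1).max(), d.min(axis=0).max())
```

Note that the mean of per-case Jaccard values is not exactly D / (2 - D) applied to the mean Dice, so averages over a dataset agree only approximately.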

Conclusions

We proposed and evaluated a fast, interactive deep learning method for accurate medical image segmentation. Moreover, our approach has the potential to address a major bottleneck of deep learning methods, namely adapting to inter-clinical variations, and to speed up the annotation process.

Acknowledgements

The authors would like to thank NVIDIA for providing a GPU (NVIDIA TITAN X, 12 GB) through their GPU grant program.

Ethics declarations

Conflicts of interest

All authors declare no conflict of interest. Ethical approval and informed consent were not required for this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Girum, K.B., Créhange, G., Hussain, R. et al. Fast interactive medical image segmentation with weakly supervised deep learning method. Int J CARS 15, 1437–1444 (2020). https://doi.org/10.1007/s11548-020-02223-x

DOI: https://doi.org/10.1007/s11548-020-02223-x