Abstract

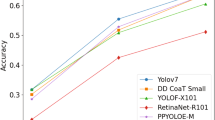

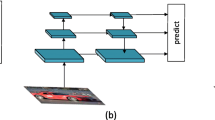

In this paper, a parallel SSD (Single Shot MultiBox Detector) fusion network based on the inverted residual structure (IR-PSN) is proposed to address two weaknesses of deep-learning-based object detection: insufficient extracted feature information and poor performance on small objects. First, the inverted residual (IR) structure is introduced into the SSD network in place of the pooling layer; this improved SSD network serves as the deep network of IR-PSN and extracts high-level feature information from the image. Second, a shallow network based on the inverted residual structure is constructed to extract low-level feature information. Finally, the shallow network is fused with the deep network so that small-object feature information is not lost and the small-object detection rate improves. Experimental results show that the proposed method achieves satisfactory small-object detection while maintaining the precision P and recall R of overall object detection.
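The abstract does not give the block configuration, so the following is only a minimal NumPy sketch, assuming a MobileNetV2-style inverted residual block (Sandler et al., 2018): a 1x1 expansion with ReLU6, a 3x3 depthwise convolution with ReLU6, and a linear 1x1 projection, with an identity shortcut when the stride is 1 and the channel count is unchanged. A stride-2 block stands in for a pooling layer that halves the feature-map resolution. The expansion factor of 6, the 38x38 input, the random weights, and the hypothetical `fuse` helper (nearest-neighbour upsampling of the deep map followed by channel concatenation) are illustrative assumptions, not the published IR-PSN settings.

```python
import numpy as np


def relu6(x):
    # ReLU6 activation, as used inside MobileNetV2 inverted residual blocks
    return np.clip(x, 0.0, 6.0)


def pointwise_conv(x, w):
    # 1x1 convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)
    return np.einsum("oc,chw->ohw", w, x)


def depthwise_conv3x3(x, k, stride):
    # 3x3 depthwise convolution with zero padding of 1; k has shape (C, 3, 3)
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    h_out = (h + 2 - 3) // stride + 1
    w_out = (w + 2 - 3) // stride + 1
    out = np.zeros((c, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = xp[:, i * stride:i * stride + 3, j * stride:j * stride + 3]
            # each channel is filtered only by its own 3x3 kernel
            out[:, i, j] = np.sum(patch * k, axis=(1, 2))
    return out


def inverted_residual(x, params, stride):
    # expand (1x1) -> depthwise (3x3) -> linear projection (1x1, no activation)
    c_in = x.shape[0]
    h = relu6(pointwise_conv(x, params["expand"]))
    h = relu6(depthwise_conv3x3(h, params["depthwise"], stride))
    h = pointwise_conv(h, params["project"])
    # identity shortcut only when spatial size and channel count are unchanged
    if stride == 1 and h.shape[0] == c_in:
        h = h + x
    return h


def make_params(c_in, c_out, expansion, rng):
    # hypothetical random weights, used here only to check shapes
    hidden = c_in * expansion
    return {
        "expand": rng.standard_normal((hidden, c_in)) * 0.1,
        "depthwise": rng.standard_normal((hidden, 3, 3)) * 0.1,
        "project": rng.standard_normal((c_out, hidden)) * 0.1,
    }


def fuse(shallow, deep):
    # hypothetical fusion: nearest-neighbour upsample the deep (low-resolution)
    # map to the shallow map's size, then concatenate along the channel axis
    fy = shallow.shape[1] // deep.shape[1]
    fx = shallow.shape[2] // deep.shape[2]
    up = deep.repeat(fy, axis=1).repeat(fx, axis=2)
    return np.concatenate([shallow, up], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shallow = rng.standard_normal((16, 38, 38))
    # stride-2 IR block used where a pooling layer would halve the resolution
    deep = inverted_residual(shallow, make_params(16, 32, 6, rng), stride=2)
    print(deep.shape)  # (32, 19, 19)
    print(fuse(shallow, deep).shape)  # (48, 38, 38)
```

Concatenation is only one plausible fusion choice; element-wise addition after a 1x1 projection to matching channels is another common option, and the abstract does not state which one IR-PSN uses.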



Funding

This work was financially supported by the National Natural Science Foundation of China (Grant nos. 6154055, 61863011); the Dean Project of Guangxi Key Laboratory of Wireless Wideband Communication and Signal Processing, China; and the Science Research and Technology Development Program of Hezhou (no. 1707041).


Ethics declarations

The authors declare that they have no conflicts of interest.

About this article

Cite this article

Yu, C., Liu, K. & Zou, W. A Method of Small Object Detection Based on Improved Deep Learning. Opt. Mem. Neural Networks 29, 69–76 (2020). https://doi.org/10.3103/S1060992X2002006X
