Abstract

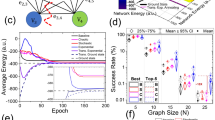

A variety of annealing-inspired computing accelerators have been proposed to tackle important combinatorial optimization problems, built on quantum, optical and electronic technology platforms. However, to be of use in industrial applications, further improvements in speed and energy efficiency are necessary. Here, we report a memristor-based annealing system that uses an energy-efficient neuromorphic architecture based on a Hopfield neural network. Our analogue–digital computing approach creates an optimization solver in which massively parallel operations are performed in a dense crossbar array. The array injects the needed computational noise through analogue array and device errors, which a novel feedback algorithm amplifies or dampens. We experimentally show that the approach can solve non-deterministic polynomial-time (NP)-hard max-cut problems by harnessing the intrinsic hardware noise. We also use experimentally grounded simulations to explore scalability with problem size. These simulations suggest that our memristor-based approach can offer a solution throughput per unit of power consumed that is over four orders of magnitude higher than that of current quantum, optical and fully digital approaches.
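To make the idea of noise-assisted Hopfield optimization concrete, the following is a minimal software sketch, not the hardware system or feedback algorithm reported in the article. It assumes a max-cut instance given as a symmetric weight matrix, maps it to a Hopfield network whose synaptic weights are the negated edge weights, and replaces the intrinsic memristor noise with software Gaussian noise whose amplitude decays linearly. The function names (`cut_value`, `noisy_hopfield_maxcut`), the noise schedule and all parameter values are illustrative assumptions.

```python
import random


def cut_value(weights, spins):
    """Total weight of edges whose endpoints lie in different partitions."""
    n = len(spins)
    return sum(
        weights[i][j]
        for i in range(n)
        for j in range(i + 1, n)
        if spins[i] != spins[j]
    )


def noisy_hopfield_maxcut(weights, sweeps=200, noise_start=2.0, seed=0):
    """Asynchronous Hopfield updates with decaying Gaussian noise.

    The noise source and its linear decay are assumptions standing in
    for the hardware noise described in the abstract.
    """
    rng = random.Random(seed)
    n = len(weights)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    best_spins, best_cut = spins[:], cut_value(weights, spins)
    for sweep in range(sweeps):
        # Hypothetical schedule: noise amplitude shrinks toward zero.
        sigma = noise_start * (1 - sweep / sweeps)
        # Visit the neurons once each, in random order.
        for i in rng.sample(range(n), n):
            # Hopfield weight is -w_ij, so the local field pushes node i
            # to the side opposite most of its neighbours' edge weight.
            field = -sum(weights[i][j] * spins[j] for j in range(n) if j != i)
            noisy_field = field + rng.gauss(0.0, sigma)
            spins[i] = 1 if noisy_field >= 0 else -1
        current = cut_value(weights, spins)
        if current > best_cut:
            best_spins, best_cut = spins[:], current
    return best_spins, best_cut


# Usage: a 4-node ring with unit weights, whose maximum cut is 4.
ring = [
    [0, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 0, 1, 0],
]
partition, cut = noisy_hopfield_maxcut(ring)
print(partition, cut)
```

The early, noisy sweeps let the state escape poor partitions, while the later, quieter sweeps settle into a local minimum of the Hopfield energy. On a small instance such as this ring, the sketch should typically recover the optimal alternating partition.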

Data availability

The data supporting plots within this paper and other findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

We are grateful to S. Mandrà for performing the CPU simulations used in Table 1 and early review of the article. We acknowledge discussions with H. Katzgraber, P. L. McMahon, E. Rothberg, K. Roenigk, C. Santos, R. Slusher and J. Weinschenk. This research was based upon work supported by the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via contract number 2017-17013000002.

Author information

Contributions

F.C., S.K., T.V.V., C.L. and J.P.S. performed the simulations. T.V.V., S.K., R.L., Z.L., M.F. and J.P.S. contributed to performance benchmarking. S.K. and J.P.S. performed the experiments. X.S., C.L., Q.X., J.J.Y. and J.P.S. contributed to the chip fabrication and experimental system development. All authors supported analysis of the results and commented on the article.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Supplementary information

Supplementary Figs. 1–15, Supplementary Discussion sections 1–10

About this article

Cite this article

Cai, F., Kumar, S., Van Vaerenbergh, T. et al. Power-efficient combinatorial optimization using intrinsic noise in memristor Hopfield neural networks. Nat Electron 3, 409–418 (2020). https://doi.org/10.1038/s41928-020-0436-6

DOI: https://doi.org/10.1038/s41928-020-0436-6
