Abstract

In this paper, the ill-posedness of derivative interpolation is discussed, and a regularized derivative interpolation for band-limited signals is presented. The ill-posedness is analyzed by the Shannon sampling theorem. The convergence of the regularized derivative interpolation is studied by the combination of a regularized Fourier transform and the Shannon sampling theorem. The error estimation is given, and high-order derivatives are also considered. The algorithm of the regularized derivative interpolation is compared with derivative interpolation using some other algorithms.

1 Introduction

The computation of the derivative is widely applied in engineering, signal processing, and neural networks [1–3]. It is also widely applied in complex dynamical systems in networks [4].

In this section, we describe the problem of finding the derivative of band-limited signals by the Shannon sampling theorem [5]. Recall that a function is called Ω-band-limited if its Fourier transform has the property that \(\hat f(\omega)=0\) for every ω∉[−Ω,Ω].

Shannon sampling theorem If f∈L2(R) is Ω-band-limited, then it can be exactly reconstructed from its samples f(nh):

\[f(t)=\sum_{n=-\infty}^{\infty} f(nh)\,\text{sinc}\,\Omega(t-nh), \tag{1}\]

where \(\text {sinc} \Omega (t-nh):= \frac {\sin \Omega (t-nh)} {\Omega (t-nh)}\) and h=π/Ω. Here, the convergence is in L2 and uniform on R, which means the series approaches f(t) according to the L2-norm and L∞-norm in R.
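As a quick numerical illustration of the sampling theorem, the following sketch (not part of the paper) evaluates a truncated version of the series with NumPy. The test signal, the truncation level, and the function name `sinc_interpolate` are illustrative assumptions.

```python
import numpy as np

def sinc_interpolate(samples, n, h, t):
    """Truncated Shannon series: sum_n f(nh) * sinc(Omega * (t - n*h)), with Omega = pi / h."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    # np.sinc(x) = sin(pi*x) / (pi*x), and Omega * (t - n*h) = pi * (t - n*h) / h.
    kernel = np.sinc((t[:, None] - n[None, :] * h) / h)
    return kernel @ samples

Omega = 1.5                     # assumed band limit
h = np.pi / Omega               # Nyquist step size h = pi / Omega
n = np.arange(-2000, 2001)      # finite truncation of the infinite series (assumption)

def f(t):
    # (sin(t/2) / (t/2))^2 has a triangular spectrum supported in [-1, 1], inside [-Omega, Omega].
    return np.sinc(np.asarray(t, dtype=float) / (2 * np.pi)) ** 2

t = np.array([-4.2, 0.3, 1.7])
approx = sinc_interpolate(f(n * h), n, h, t)
max_err = float(np.max(np.abs(approx - f(t))))
print("max reconstruction error:", max_err)
```

With noise-free samples the only error left is the truncation of the series, which is why a fast-decaying test signal was chosen here.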

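By the inversion formula, if f is band-limited, then

\[f(t)=\frac{1}{2\pi}\int_{-\Omega}^{\Omega}\hat f(\omega)e^{i\omega t}\,d\omega. \tag{2}\]

By the Paley-Wiener theorem ([6], p. 67), f is real analytic.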

It is an elementary property of the Fourier transform that \(F[f^{(k)}(t)]=(i\omega)^{k}F[f(t)]\), where \(f^{(k)}(t)\) is the kth derivative of f(t); consequently, if f is band-limited, so is \(f^{(k)}\).

In [7], Marks presented an algorithm to find the derivative of band-limited signals by the sampling theorem:

\[f^{(k)}(t)=\sum_{n=-\infty}^{\infty} f(nh)\,[\text{sinc}\,\Omega(t-nh)]^{(k)}. \tag{3}\]

Here, again, the convergence is in L2 and uniform on R.
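For k=1, the kernel derivative in this series has a closed form, so the formula can be tried out directly. The sketch below is an illustration under assumptions: the test signal, the truncation level, and the helper names `dsinc` and `derivative_interpolate` are not from the paper. It compares the truncated series with a finite-difference derivative of a noise-free band-limited signal.

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)   # avoid division by zero; patched below
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def derivative_interpolate(samples, n, h, t):
    """Truncated series sum_n f(nh) * d/dt sinc(Omega*(t - n*h)), with Omega = pi / h."""
    Omega = np.pi / h
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return dsinc(t[:, None] - n[None, :] * h, Omega) @ samples

Omega = 1.5
h = np.pi / Omega
n = np.arange(-2000, 2001)          # truncation level (assumption)

def f(t):
    # (sin(t/2) / (t/2))^2, band-limited to [-1, 1]
    return np.sinc(np.asarray(t, dtype=float) / (2 * np.pi)) ** 2

t = np.array([-4.2, 0.3, 1.7])
eps = 1e-5
reference = (f(t + eps) - f(t - eps)) / (2 * eps)   # central difference as a reference
approx = derivative_interpolate(f(n * h), n, h, t)
max_err = float(np.max(np.abs(approx - reference)))
print("max derivative error (noise-free samples):", max_err)
```

The agreement here relies on exact samples; the rest of the paper is about what happens when they are noisy.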

In this paper, we study the problem of computing \(f^{(k)}(t)\) in the case where the samples {f(nh)} are noisy:

\[f(nh)=f_{E}(nh)+\eta(nh), \tag{4}\]

where fE(nh) is the exact signal and {ηn}={η(nh)} is the noise with the bound δ>0.

It was pointed out in [7] that the interpolation noise level increases significantly with k. In [8], Ignjatovic stated that numerical evaluation of higher-order derivatives of a signal from its samples is very noise sensitive and used this to motivate the notion of chromatic derivatives. The chromatic derivatives in [8] are rather specialized, since they are the operator polynomials \({\mathcal {K}}^{n}_{t}=(-i)^{n} P^{L}_{n}(i\frac d {dt})\), where \(P^{L}_{n}\) are the normalized and scaled Legendre polynomials. A Fourier truncation method to compute high-order numerical derivatives was proposed in [9], and we will compare our algorithm with it in the simulation section. The advantage of [9] is that it does not require the function to be band-limited; the disadvantage is that its accuracy is poor for band-limited functions. In [10], a series that approximates a band-limited function and its derivative is given, but ill-posedness and noise are not considered. In [11], an optimal algorithm for approximating band-limited functions from localized sampling is given, and it is shown that a certain truncated series is the best estimate for the local information operator; however, the noisy case is not considered, that is, ηn=0 in (4). In [12], the noisy case is considered for approximating band-limited functions, but not for computing their derivatives.

In [13], pp. 235–249, cubic smoothing splines are discussed in the noisy case, but no proof of convergence is given. In [14–16], regularization methods are used to find stable solutions in the computation of derivatives. In [14, 16], the approximation error is measured in the L2-norm, that is, the regularized solution approximates the exact solution in the L2-norm. The noise models differ: in [14], the disturbance is assumed to be bounded in the L2-norm, whereas in [16], it is bounded in the maximum norm. On a finite interval in R, the maximum norm is stricter. In [14], the error of the first derivative is given by \(O(h) + O(\sqrt \delta)\). In [16], for the regularization parameter \(\alpha=\delta^{2}\), the error of the jth derivative is given by

for 0≤j≤k−1 and \(f\in H^{k}(0,1)=\{g: g\in L^{2}(0,1),\ g^{(k)}\in L^{2}(0,1)\}\), where the error is measured in the L2-norm. Here, \(f^{(j)}_{\alpha }\) is the approximate jth derivative obtained by regularization, δ is the error bound in (4), and h is the step size for the derivative interpolation. In the current paper, we measure the approximation error in the L∞-norm on any finite interval in R and assume additive l∞ noise. The notion of robustness differs significantly between these norms and spaces: convergence of the regularized solution to the exact solution in the maximum norm on a finite interval implies convergence in the L2-norm on the same interval, but not conversely. According to the error estimates in [14, 16], h must be close to zero to guarantee accuracy. This is also needed in [15]. In this paper, since f is band-limited, there is no O(h) term in the error estimate, so the estimate obtained here is better in the sense that the condition h→0 is not required.

In [17, 18], other kernels such as Gaussian functions and the power of sinc functions are studied. However, the noise and ill-posedness are not considered. In this paper, the kernel will be given by the regularized Fourier transform in [19]. The regularized transform is found by the Tikhonov regularization method in [20]. The estimate of approximation error is obtained in the noisy case. The ill-posedness is taken into account in the error estimation. In [21] and [22], the ill-posedness of the problem of computing f from the samples of f is analyzed and a regularized sampling algorithm is presented in [21].

In this paper, we consider a more complex problem. In Section 2, we analyze the ill-posedness of the problem of computing \(f^{(k)}\) from the samples of f and conclude that, in the presence of noise, formula (3) is not reliable even if k is relatively small. For the case k=1, the ill-posedness is demonstrated by the bipolar δ noise in Section 2. In Section 3, a regularized derivative interpolation formula is presented and its convergence is proved. We show that the bipolar δ noise that causes ill-posedness can be controlled by the regularized solution. In Section 4, a regularized high-order derivative interpolation formula is presented and its convergence is proved. In Section 5, applications are shown by some examples, where the error of the regularized solution is seen to be small for the bipolar δ noise. Finally, the conclusion is given in Section 6. In our algorithm, it is not necessary for the step size h of the samples to be close to 0.

2 The concepts of Paley-Wiener spaces and ill-posedness

In this section, we introduce the concepts of entire functions, Bernstein inequality, Plancherel-Pólya theorem, Paley-Wiener theorem ([6] pp. 48–68), and the ill-posedness.

Definition 1

A function \(f(z):\mathbb {C}\rightarrow \mathbb {C}\) is said to be entire if it is holomorphic throughout the set of complex numbers \(\mathbb {C}\).

By this definition, we can see that any band-limited function is an entire function.

Definition 2

An entire function f(z) is said to be of exponential type if there exist positive constants A and B such that

\[|f(z)|\leq A e^{B|z|}, \quad z\in \mathbb {C}.\]

Definition 3

An entire function f(z) is said to be of exponential type at most τ if, given ε>0, there exists a constant Aε such that

\[|f(z)|\leq A_{\varepsilon } e^{(\tau +\varepsilon)|z|}, \quad z\in \mathbb {C}.\]

Definition 4

By Eσ we denote the class whose members are entire functions in the complex plane and are of exponential type at most σ.

According to this definition, if f(z)∈Eσ, then, given ε>0, there exists a constant Aε such that

\[|f(z)|\leq A_{\varepsilon } e^{(\sigma +\varepsilon)|z|}, \quad z\in \mathbb {C}.\]

Definition 5

The Bernstein class \(B^{p}_{\sigma }\) consists of those functions which belong to Eσ and whose restriction to R belongs to Lp(R).

This means \(B^{p}_{\sigma }=\{f\in E_{\sigma }: f(z)|_{z=x+0i}\in L^{p}(\bf R)\}\).

Bernstein inequality For every \(f\in B^{p}_{\sigma }\), positive r∈Z and p≥1, we have

\[\|f^{(r)}\|_{L^{p}(\mathbf {R})}\leq \sigma ^{r}\|f\|_{L^{p}(\mathbf {R})}.\]

Plancherel-Pólya inequality Let \(f\in B^{p}_{\sigma }\), p>0, and let Λ=(λn), n∈Z be a real increasing sequence such that λn+1−λn≥2δ. Then,

By this inequality, we can see that \(f\in B^{p}_{\sigma }\) implies f∈L∞(R).

Paley-Wiener theorem ([6], p. 67) The class of functions {f(z)} whose members are entire, belong to L2 when restricted to the real axis, and are such that \(|f(z)|=o(e^{\sigma |z|})\) is identical to the class of functions whose members have a representation

for some φ∈L2(−σ, σ).

By the Paley-Wiener theorem, the space of Ω-band-limited functions is equivalent to the classical Paley-Wiener space of entire functions. We denote

Then, for each f∈PW2, the Plancherel-Pólya inequality implies that f∈L∞ as well. The Bernstein inequality can therefore be applied to f and its derivatives, which shows that every \(f^{(k)}\), k≥1, has a bounded L∞-norm. We will consider a restricted function space consisting of derivatives of functions f∈PW2 that are not only Ω-band-limited but also have a bounded Fourier transform. The condition \(\hat f\in L^{\infty } (\bf R)\) is required to prove the approximation property of the derivative interpolation by regularization. This can be seen in the proof of Lemma 3 in the Appendix. Let us denote the space by

The samples we consider are always bounded sequences in l∞.

The concept of ill-posed problems was introduced in [20]. Here, we borrow the following definition from it:

Definition 6

Assume \({\mathcal {A}}:D\rightarrow U\) is an operator in which D and U are metric spaces with distances ρD(∗,∗) and ρU(∗,∗), respectively. The problem

of determining a solution z in the space D from the “initial data” u in the space U is said to be well-posed on the pair of metric spaces (D,U) in the sense of Hadamard if the following three conditions are satisfied:

(i) For every element u∈U, there exists a solution z in the space D; in other words, the mapping \(\mathcal {A}\) is surjective.

(ii) The solution is unique; in other words, the mapping \(\mathcal {A}\) is one-to-one.

(iii) The problem is stable in the spaces (D,U): ∀ε>0,∃δ>0, such that

In other words, the inverse mapping \({\mathcal {A}}^{-1}\) is uniformly continuous.

Problems that violate any of the three conditions are said to be ill-posed.

In this section, we discuss the ill-posedness of derivative interpolation by the sampling theorem in the pair of spaces (PW∞, l∞). For f(k)∈PW∞, its norm is defined by

and

is the space of bounded sequences with the norm

We define the operator

Here, f(nh) is the coefficient of [sincΩ(t−nh)](k) in (3).

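Let us describe the case k=1 in (3). For the Ω-band-limited function f, the first derivative is

\[f'(t)=\sum_{n=-\infty}^{\infty} f(nh)\left[\frac{\cos \Omega (t-nh)}{t-nh}-\frac{\sin \Omega (t-nh)}{\Omega (t-nh)^{2}}\right], \tag{5}\]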

where h=π/Ω. Here, the convergence is both in L2 and uniformly on R. The proof of this fact is similar to the proof of the Shannon sampling theorem [23].

(i) The existence condition is not satisfied.

This follows from the example given in (iii), where the stability condition is shown to fail. There, we construct a noise sequence {η(nh):n∈Z} such that

at some point t0∈(−∞, ∞).

(ii) The uniqueness condition is satisfied.

Since S is a linear operator, it is one-to-one if and only if

Since f(nh)=0 for all n∈Z implies f(t)≡0 by the Shannon sampling theorem, then f(k)(t)≡0. So S is one-to-one.

(iii) The stability condition is not satisfied, in other words, S−1 is not continuous.

This can be seen from the following example.

Example 1

Consider any band-limited signal f along with the noisy samples f(nh)+ηb(nh), where ηb(nh) is noise.

Assume the noise has the form ηb(nh)=δ·sgn{cosΩ(t0−nh)/(t0−nh)}, −N≤n≤N and ηb(nh)=0, for |n|>N, where t0 is a given point in the time domain and δ is a small positive number. Then, the noise of the derivative in formula (5) is

This is the noise of f(k)(t) in which k=1.

At t=t0 the noise of the derivative is

as N→∞ if cosΩt0≠0, since

by the divergence of the harmonic series and

converges by the convergence property of p-series in the case p=2.

If we do not set ηb(nh)=0, for |n|>N and define them in the same way as |n|≤N, then ηb′(t0)=∞. This shows that the existence condition is not satisfied.

Also at any point, t=t0+kπ/Ω, k∈Z

as N→∞.

Thus, \(||\{\eta _{b}(nh)\}||_{l^{\infty }}\leq \delta \), yet \(||(f+\eta _{b})'-f'||_{\mbox{{PW}}^{\infty }}=||\eta _{b}'||_{L^{\infty }}\) can be made arbitrarily large. So (iii) in the definition of well-posedness fails.
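The growth in Example 1 can be observed numerically. The sketch below is an illustration, not the author's code: it evaluates the error that the bipolar noise produces in the unregularized first-derivative series at t0 for increasing N. The values Ω=1.5, t0=30, and δ=0.1 are the ones quoted in Section 5; the function names are assumptions.

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def bipolar_noise(n, h, t0, delta):
    """eta_b(nh) = delta * sgn(cos(Omega*(t0 - nh)) / (t0 - nh)), as in Example 1."""
    Omega = np.pi / h
    x = t0 - n * h                      # assumes t0 is not a sample point, so x != 0
    return delta * np.sign(np.cos(Omega * x) / x)

def noise_derivative_at_t0(N, Omega=1.5, t0=30.0, delta=0.1):
    """Error of the unregularized derivative series at t0 caused by bipolar noise on |n| <= N."""
    h = np.pi / Omega
    n = np.arange(-N, N + 1)
    eta = bipolar_noise(n, h, t0, delta)
    return float(np.sum(eta * dsinc(t0 - n * h, Omega)))

levels = [10**2, 10**3, 10**4, 10**5]
errors = [noise_derivative_at_t0(N) for N in levels]
for N, e in zip(levels, errors):
    print(N, e)
```

Each tenfold increase of N adds roughly the same amount to the error, which is the logarithmic divergence of the harmonic series showing through, even though every noise sample stays bounded by δ.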

Therefore, this is an ill-posed problem.

If we consider the problem on the pair of spaces (PW∞, l2), the problem is well posed. But the condition \(||\eta ||_{l^{2}}\leq \delta \rightarrow 0\) is too strict.

In Section 3, we will show that the regularized solution will converge to the exact signal as \(||\eta ||_{l^{\infty }}\leq \delta \rightarrow 0\) according to the l∞-norm for suitable regularization parameters.

3 The derivative interpolation by regularization

To solve the ill-posed problem in the last section, we introduce the regularized Fourier transform [19]:

Definition 7

For α>0, we define

where

is the function f(t) multiplied by the weight function

\[K_{\alpha }(t)=\frac{1}{1+2\pi \alpha +2\pi \alpha t^{2}}.\]

The regularized Fourier transform was obtained by minimizing a smoothing functional and solving the associated Euler equation. Details can be found in [19].
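To make the weight function concrete, the following sketch assumes the form \(K_{\alpha }(t)=1/(1+2\pi \alpha +2\pi \alpha t^{2})\) and checks numerically that its Fourier transform is \(\frac 1 {2a\alpha } e^{-a|\omega |}\) with \(a=\left (\frac {1+2\pi \alpha } {2\pi \alpha }\right)^{1/2}\), the closed form that enters Lemma 1. The integration window and grid are arbitrary choices, and the function names are illustrative.

```python
import numpy as np

def K(t, alpha):
    """Assumed weight function K_alpha(t) = 1 / (1 + 2*pi*alpha + 2*pi*alpha*t^2)."""
    return 1.0 / (1.0 + 2 * np.pi * alpha + 2 * np.pi * alpha * np.asarray(t, dtype=float) ** 2)

def K_hat_numeric(omega, alpha, T=5000.0, num=1_000_001):
    """Trapezoidal approximation of the integral of K_alpha(t) * exp(-i*omega*t) over [-T, T]."""
    t = np.linspace(-T, T, num)
    y = K(t, alpha) * np.cos(omega * t)     # K_alpha is even, so the transform is real
    dt = t[1] - t[0]
    return float(dt * (np.sum(y) - 0.5 * (y[0] + y[-1])))

def K_hat_closed(omega, alpha):
    """Closed form exp(-a*|omega|) / (2*a*alpha), with a = sqrt((1 + 2*pi*alpha) / (2*pi*alpha))."""
    a = np.sqrt((1 + 2 * np.pi * alpha) / (2 * np.pi * alpha))
    return float(np.exp(-a * abs(omega)) / (2 * a * alpha))

alpha = 0.01
for omega in (0.0, 0.5, 1.0):
    print(omega, K_hat_numeric(omega, alpha), K_hat_closed(omega, alpha))
```

The exponential decay rate a grows like \(\alpha ^{-1/2}\) as α→0, so the transform of the weight concentrates near ω=0 for small α.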

In [19], we have proved that

under the condition

if α(δ)→0 and \(\delta ^{2}/\alpha (\delta)\) is bounded as δ→0. The weight function Kα(t) therefore acts as a stabilizer. We have successfully used the regularization factor Kα(t) for band-limited extrapolation in [19]. In [24], we also used the factor Kα(nh) in the computation of the Fourier transform. There, the convergence analysis and the computation are in the frequency domain. In [21], the weight function Kα(nh) is applied to the Shannon sampling theorem. In this paper, we compute the derivatives by combining (5) and (6). The convergence analysis and the computation are carried out in the time domain, error estimates for the computed derivatives are given, and the proofs are quite different.

Definition 8

Given {f(nh):n∈Z} in l∞, define

The infinite series is uniformly convergent in R for any α>0 since both sincΩ(t−nh) and {f(nh):n∈Z} are bounded.

By differentiating fα(t) in Definition 8, we obtain the regularized derivative interpolation:

This derivative is well defined since the infinite series is also uniformly convergent on R.
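A minimal sketch of the regularized derivative interpolation follows. It assumes that Definition 8 weights each sample by \(K_{\alpha }(nh)=1/(1+2\pi \alpha +2\pi \alpha (nh)^{2})\) before the sinc kernel is applied, and it truncates the series at |n|≤N. The test signal sin(t)/t, the parameter values, and the function names are illustrative assumptions.

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def K(t, alpha):
    """Assumed weight function K_alpha(t) = 1 / (1 + 2*pi*alpha + 2*pi*alpha*t^2)."""
    return 1.0 / (1.0 + 2 * np.pi * alpha + 2 * np.pi * alpha * np.asarray(t, dtype=float) ** 2)

def regularized_derivative(samples, n, h, t, alpha):
    """Truncated series sum_n f(nh) * K_alpha(nh) * d/dt sinc(Omega*(t - n*h))."""
    Omega = np.pi / h
    t = np.atleast_1d(np.asarray(t, dtype=float))
    kernel = dsinc(t[:, None] - n[None, :] * h, Omega)
    return kernel @ (samples * K(n * h, alpha))

Omega, alpha, N = 1.5, 1e-4, 1000
h = np.pi / Omega
n = np.arange(-N, N + 1)

def f_exact(t):
    return np.sinc(np.asarray(t, dtype=float) / np.pi)   # sin(t)/t, band-limited to [-1, 1]

t = np.linspace(-5.0, 5.0, 11)
approx = regularized_derivative(f_exact(n * h), n, h, t, alpha)
exact = dsinc(t, 1.0)                                     # derivative of sin(t)/t
max_err = float(np.max(np.abs(approx - exact)))
print("max error with exact samples:", max_err)
```

With exact samples the remaining error is the bias introduced by the weights, which shrinks as α decreases; with noisy samples α has to be balanced against the noise level, as the theorems below make precise.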

Lemma 1

If f is band-limited, then \( \textbf {F}_{\alpha }[f](\omega)=\frac 1 {4\pi a\alpha } \int _{-\Omega }^{\Omega } \hat f(u)e^{-a|u-\omega |} du \) where \(a:=\left (\frac {1+2\pi \alpha } {2\pi \alpha }\right)^{\frac 1 2} \).

It can be seen from the convolution

where \(\hat K_{\alpha }(\omega)=\frac 1 {2 a\alpha } e^{-a |\omega |}\) is the Fourier transform of Kα(t). For the proof of the convergence of the regularized derivative interpolation, we will need the definition of the periodic extension of the function \(e^{i\omega t}\).

Definition 9

(eiωt)p[−Ω,Ω] denotes the periodic extension of the function eiωt defined on the interval [−Ω,Ω] to the interval (−∞,∞) with period 2Ω.

Let Et(ω):=(eiωt)p[−Ω,Ω]. Then, for ω∈[−Ω,Ω], Et(ω)=eiωt. For ω∈R∖[−Ω,Ω], Et(ω) is the periodic extension of Et(ω) for ω∈[−Ω,Ω].
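The periodic extension can be written in a few lines. This is only an illustrative sketch; the function name `E` and the choice to wrap ω into the half-open interval [−Ω, Ω) are assumptions (the two endpoints ±Ω are identified by periodicity).

```python
import numpy as np

def E(omega, t, Omega):
    """Periodic extension (period 2*Omega) of exp(i*omega*t) from [-Omega, Omega]."""
    omega = np.asarray(omega, dtype=float)
    wrapped = (omega + Omega) % (2 * Omega) - Omega   # map omega into [-Omega, Omega)
    return np.exp(1j * wrapped * t)

Omega, t = 1.5, 0.7
inside = np.array([-1.2, 0.0, 0.4])
shifted = inside + 2 * Omega * np.array([1, -2, 3])   # same points, shifted by whole periods
print(E(inside, t, Omega))
print(E(shifted, t, Omega))
```

Inside the band the function coincides with \(e^{i\omega t}\); outside it repeats, which is generally different from \(e^{i\omega t}\) itself unless t is a multiple of h=π/Ω.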

The next lemma is from [25].

Lemma 2

If \(\hat f\in L^{1}(-\infty, \infty)\), then

for each t∈R.

In order to prove the convergence property of the regularized derivative interpolation, we need some more lemmas which are listed in the Appendix.

We are now in a position to state and prove our main theorem.

Theorem 1

Suppose

where \(||\{\eta _{n}\}||_{l^{\infty }} \leq \delta \) and fE∈PW∞. If we choose \(\alpha =\alpha (\delta)=O(\delta ^{\mu })\), 0<μ<2, as δ→0, then \(f^{\prime }_{\alpha }(t) \rightarrow f'_{E}(t)\) uniformly on any finite interval [−T, T] as δ→0. Furthermore,

The proof is in the Appendix.
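The effect of the weights on the bipolar noise of Example 1 can be checked with a small experiment. The sketch below is illustrative only: it assumes the weight \(K_{\alpha }(t)=1/(1+2\pi \alpha +2\pi \alpha t^{2})\), uses Ω=1.5, t0=30, δ=0.1, and α=0.01 as in Section 5, and compares the noise contribution to the derivative at t0 with and without the weights.

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def K(t, alpha):
    """Assumed weight function K_alpha(t) = 1 / (1 + 2*pi*alpha + 2*pi*alpha*t^2)."""
    return 1.0 / (1.0 + 2 * np.pi * alpha + 2 * np.pi * alpha * np.asarray(t, dtype=float) ** 2)

def noise_error_at_t0(N, alpha=None, Omega=1.5, t0=30.0, delta=0.1):
    """Contribution of bipolar noise on |n| <= N to the derivative at t0.

    alpha=None gives the unregularized series; otherwise each sample is weighted by K_alpha(nh).
    """
    h = np.pi / Omega
    n = np.arange(-N, N + 1)
    x = t0 - n * h
    eta = delta * np.sign(np.cos(Omega * x) / x)
    weights = 1.0 if alpha is None else K(n * h, alpha)
    return float(np.sum(eta * weights * dsinc(x, Omega)))

plain_3 = noise_error_at_t0(10**3)
plain_5 = noise_error_at_t0(10**5)
reg_4 = noise_error_at_t0(10**4, alpha=0.01)
reg_5 = noise_error_at_t0(10**5, alpha=0.01)
print("unregularized:", plain_3, plain_5)
print("regularized:  ", reg_4, reg_5)
```

The unregularized error keeps growing with N, while the weighted sum settles to a small value because the terms now decay like \(1/|n|^{3}\).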

Theorem 2

Suppose

where {ηw(nh)} is white noise with \(E\eta _{w}=0\) and \(\text {Var}(\eta _{w})=\sigma ^{2}\), and fE∈PW∞. If we choose \(\alpha =\alpha (\sigma)=O(\sigma ^{\mu })\), 0<μ<2, as σ→0, then \(Ef^{\prime }_{\alpha }(t) \rightarrow f'_{E}(t)\) uniformly on any finite interval [−T, T] as σ→0. Furthermore,

and

The proof is in the Appendix.
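For white noise, the noise part of the regularized derivative is a linear combination of independent samples, so its mean is zero and its variance is \(\sigma ^{2}\) times the sum of the squared coefficients. The Monte Carlo sketch below illustrates this elementary fact; it is not the estimate stated in the theorem, and the weight function, the truncation level, the evaluation point, and the number of trials are all assumptions.

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def K(t, alpha):
    """Assumed weight function K_alpha(t) = 1 / (1 + 2*pi*alpha + 2*pi*alpha*t^2)."""
    return 1.0 / (1.0 + 2 * np.pi * alpha + 2 * np.pi * alpha * np.asarray(t, dtype=float) ** 2)

rng = np.random.default_rng(0)
Omega, alpha, sigma, N, trials = 1.5, 0.01, 0.1, 200, 4000
t_eval = 1.0                                   # evaluation point (assumption)
h = np.pi / Omega
n = np.arange(-N, N + 1)

# Deterministic coefficients multiplying the noise samples in the truncated series.
coeff = K(n * h, alpha) * dsinc(t_eval - n * h, Omega)

noise = rng.normal(0.0, sigma, size=(trials, n.size))   # white Gaussian noise, one row per trial
noise_part = noise @ coeff                               # noise contribution to the derivative

predicted_var = float(sigma**2 * np.sum(coeff**2))
print("empirical mean:", float(noise_part.mean()))
print("empirical var: ", float(noise_part.var()), "predicted:", predicted_var)
```

Because the coefficients are square-summable thanks to the weights, the predicted variance stays finite however many samples are included.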

4 Derivative interpolation of higher order

In this section, we prove the convergence property of the derivative interpolation formula of high order:

Some lemmas are in the Appendix.

We can now state and prove a version of Theorem 1 for higher-order derivatives.

Theorem 3

If we choose \(\alpha =\alpha (\delta)=O(\delta ^{\mu })\), 0<μ<2, as δ→0, then \(f^{(k)}_{\alpha }(t) \rightarrow f^{(k)}_{E}(t)\) uniformly on any finite interval [−T, T] as δ→0. Furthermore, we have the estimate

The proof is in the Appendix.

Remarks 1

This theorem shows that higher-order derivatives can be evaluated from Nyquist-rate samples to any desired accuracy, provided the noise level δ is small enough. Here, Nyquist-rate samples are samples with the step size \(h=\frac \pi \Omega \).

Theorem 4

Suppose

where {ηw(nh)} is white noise with \(E\eta _{w}=0\) and \(\text {Var}(\eta _{w})=\sigma ^{2}\), and fE∈PW∞. If we choose \(\alpha =\alpha (\sigma)=O(\sigma ^{\mu })\), 0<μ<2, as σ→0, then \(Ef^{(k)}_{\alpha }(t) \rightarrow f^{(k)}_{E}(t)\) uniformly on any finite interval [−T, T] as σ→0. Furthermore,

and

The proof is similar to the proof of Theorem 2. We omit it here.

5 Methods, experimental results, and discussion

In this section, we give some examples to show that the regularized sampling algorithm is more effective in controlling the noise than some other algorithms. We will compare it with the Fourier truncation method ([9]) and Tikhonov regularization method ([16]). The procedure of how the Tikhonov regularization method was performed is described in detail in [16].

In practice, only finitely many terms can be used in (8), so we choose a large integer N and use the following formula in the computation:

where f(nh) is the noisy sampling data given in (4) in Section 1. Due to the weight function, the series above converges much faster than the series (3) obtained from Shannon’s sampling theorem. We estimate the truncation error next.

Since t∈[−T, T], for |n|>N

Then, the truncation error

where

Therefore,

So, if N is large enough, the truncation error can be very small.
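The decay of the truncation error can be examined numerically. The sketch below sums the magnitudes of the discarded terms for samples bounded by 1, assuming the weight \(K_{\alpha }(t)=1/(1+2\pi \alpha +2\pi \alpha t^{2})\) and using a large cutoff M in place of infinity. The parameter values and the name `tail_bound` are illustrative; the observed scaling like \(1/(\alpha N^{2})\) matches the order stated in Theorem 5 below when \(\alpha =O(N^{\gamma })\).

```python
import numpy as np

def dsinc(x, Omega):
    """Derivative of sin(Omega*x)/(Omega*x) with respect to x; the value at x = 0 is 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    val = np.cos(Omega * safe) / safe - np.sin(Omega * safe) / (Omega * safe**2)
    return np.where(x == 0.0, 0.0, val)

def K(t, alpha):
    """Assumed weight function K_alpha(t) = 1 / (1 + 2*pi*alpha + 2*pi*alpha*t^2)."""
    return 1.0 / (1.0 + 2 * np.pi * alpha + 2 * np.pi * alpha * np.asarray(t, dtype=float) ** 2)

def tail_bound(N, t, alpha, Omega=1.5, M=200_000):
    """Sum over N < |n| <= M of K_alpha(nh) * |d/dt sinc(Omega*(t - nh))| (samples bounded by 1)."""
    h = np.pi / Omega
    n_pos = np.arange(N + 1, M + 1)
    n = np.concatenate((-n_pos[::-1], n_pos))
    return float(np.sum(K(n * h, alpha) * np.abs(dsinc(t - n * h, Omega))))

t = 0.5
ratio_N = tail_bound(200, t, 0.01) / tail_bound(400, t, 0.01)       # doubling N
ratio_alpha = tail_bound(200, t, 0.01) / tail_bound(200, t, 0.02)   # doubling alpha
print("tail(N) / tail(2N):            ", ratio_N)
print("tail(alpha) / tail(2 * alpha): ", ratio_alpha)
```

A ratio near 4 when N doubles and near 2 when α doubles is what a bound of order \(1/(\alpha N^{2})\) predicts.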

Theorem 5

If we choose α such that \(\alpha (\delta)=O(\delta ^{\mu })\), 0<μ<2, as δ→0, and \(\alpha =O(N^{\gamma })\), −2<γ<0, as N→∞, then we have the estimate

In this case, \( \text {TR}=O(\frac 1 {N^{2+\gamma }}), \) which will vanish as N→∞.

In the next three examples, we choose N=100 and α=0.01 in (9). We will consider three types of noise:

(i) Bipolar δ noise

where t0=30 and δ=0.1. This is the noise given in Section 2, for which we have shown that the stability condition is not satisfied.

(ii) White noise that is uniformly distributed in [−0.1,0.1].

(iii) White Gaussian noise whose variance is 0.01.

For the bipolar δ noise, we will give the square errors (SE). For the white noise, we use the three methods 100 times and will give the mean square errors (MSE).
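The three noise models can be generated as follows. This is a sketch under assumptions: the bipolar noise uses the form given in Section 2 with Ω=1.5 (the band limit of the examples), the random seed is arbitrary, and the variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)         # arbitrary seed for reproducibility
Omega, N, t0, delta = 1.5, 100, 30.0, 0.1
h = np.pi / Omega
n = np.arange(-N, N + 1)

# (i) Bipolar delta noise: delta * sgn(cos(Omega*(t0 - nh)) / (t0 - nh)) for |n| <= N.
x = t0 - n * h
bipolar = delta * np.sign(np.cos(Omega * x) / x)

# (ii) White noise uniformly distributed in [-0.1, 0.1].
uniform = rng.uniform(-0.1, 0.1, size=n.size)

# (iii) White Gaussian noise with variance 0.01, i.e. standard deviation 0.1.
gaussian = rng.normal(0.0, np.sqrt(0.01), size=n.size)

print(bipolar[:5], uniform[:5], gaussian[:5])
```

Only the first two models respect a bound |η(nh)|≤δ for every sample; the Gaussian noise is bounded in variance rather than in the maximum norm.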

Example 2

Suppose

Then,

where Ω=1.5.

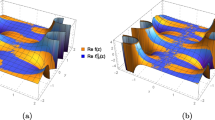

The simulation results for the bipolar δ noise are in Fig. 1. The solid curve is the exact derivative. The dotted curve is the result of the Fourier truncation method. We choose α=0.01. The dash-dotted curve is the result of the Tikhonov regularization method. The dashed curve is the result of the regularized sampling algorithm. We give the SE in the table:

The simulation results for the uniform noise are in Fig. 2. The MSE is in the table:

The simulation results for the Gaussian noise are in Fig. 3. The MSE is in the table:

Example 3

In this example, we choose a function that has a triangular spectrum. Let

Then,

where Ω=1.5.

We still choose α=0.01. The simulation results for the bipolar δ noise are in Fig. 4.

We give the SE in the table:

The simulation results for the uniform noise are in Fig. 5. The MSE is in the table:

The simulation results for the Gaussian noise are in Fig. 6. The MSE is in the table:

Example 4

In this example, we choose a function that is a raised-cosine filter. Let

Then,

where ωc=1 and β=0.5. Here, Ω=ωc(1+β)=1.5.

We still choose α=0.01. The simulation results for the bipolar δ noise are in Fig. 7. We give the SE in the table:

The simulation results for the uniform noise are in Fig. 8. The MSE is in the table:

The simulation results for the Gaussian noise are in Fig. 9. The MSE is in the table:

Next, we compare the three algorithms as the variance of the Gaussian noise varies. The MSE values for variance = 1, 0.25, 0.04, 0.01, 0.0025 for the three algorithms are in the next three tables.

Fourier truncation method:

Tikhonov regularization method:

Regularized sampling algorithm:

Remarks 2

The results of the Fourier truncation method in [9] are not good since the condition \(||\eta ||_{L^{2}}\leq \delta \) is not satisfied here. In this paper, the condition on the samples, |η(nh)|≤δ, is much weaker. The regularization method in [16] requires the same condition as ours, so its result is better than that of the Fourier truncation method, but it is not as good as the regularized sampling algorithm in this paper. This comparison holds only for band-limited signals. The Tikhonov regularization method is a general method for ill-posed problems and may be better for other signals, such as non-band-limited signals. For the Tikhonov regularization method in [16], one must solve a system of linear equations, so the amount of computation is of the order \(O(N^{3})\) to compute the derivative at N points. For each t, the amount of computation of (9) is of the order O(N). Hence, for N points, the amount of computation of (9) is of the order \(O(N^{2})\).

Example 5

In this example, we show how the square error depends on the regularization parameter α and give the optimal α. We choose the function in Example 2 and the bipolar δ noise. The results are in Fig. 10. For the first derivative, the optimal α=0.0590. For the second derivative, the optimal α=0.0650.

Remarks 3

By the proofs of Theorems 1 and 3, the error bound depends on the exact signal fE, which is not known. So it is not easy to find an optimal α. However, there are methods by which α can be determined, such as the discrepancy principle ([26]), the GCV, and the L-curve ([27], [28]). By Example 5, we can see that the regularization parameter α should be a little larger in the computation of the second derivative than in the computation of the first derivative. This reflects the fact that higher derivatives are more sensitive to noise, so a larger regularization parameter α is required.

6 Conclusion

The interpolation formula obtained by differentiating the formula of the Shannon sampling theorem is not stable. The presence of noise can give rise to unreliable results, and for certain kinds of noise, the error can even approach infinity. So, this is a highly ill-posed problem. Regularization is an effective approach to ill-posed problems. The derivative interpolation by the regularized sampling algorithm is presented, and the method is extended to high-order derivative interpolation. The convergence property is proved and tested by some examples. The numerical results show that, for band-limited signals, the derivative interpolation by the regularized sampling algorithm is more effective in reducing noise than the Fourier truncation and Tikhonov regularization methods it was compared with.

7 Appendix

Lemma 3

If f is band-limited and \(\hat f\in L^{\infty } (\textbf {R})\), then

where \(O(\alpha ^{\frac 1 2})\) means \(O(\alpha ^{\frac 1 2})\leq C\cdot \alpha ^{\frac 1 2}\), C=const.>0.

Proof

We will see that this improper integral is uniformly convergent, so we can interchange the order of the differentiation and integration.

where

Assume \(|\hat f(\omega)|\leq M\) where M is a positive constant.

In the case ω≥Ω,

In the case ω≤−Ω, \(|\hat f_{W}(\omega)| =\left |\frac 1 {4\pi a\alpha } \int _{-\Omega }^{\Omega } \hat f(u)e^{a(\omega -u)} du\right |\), and a similar inequality holds.

So

where the last equality uses the definition of a in Lemma 1. □

7.1 Proof of Theorem 1

Suppose t∈[−T,T], using formula (7), we obtain

By Lemma 3,

Since −T≤t≤T,

We can calculate \(\int _{0}^{\infty } K_{\alpha }(t) {dt}=\frac 1 {2a\alpha }\).

Since [sincΩ(t−nh)]′ is bounded and

we have

By the estimates above, we have

7.2 Proof of Theorem 2

By the proof of Theorem 1, we can see that

as σ→0.

Since \([K_{\alpha }(t)]^{2}\leq |K_{\alpha }(t)|\),

Lemma 4

where \(A_{k}^{l}=\prod _{j=1}^{l} (k-j+1)\) and \(A_{k}^{0}=1\).

Proof

We can prove it by integration by parts:

□

Lemma 5

If f is band-limited, then

Proof

Since \( (\text {sinc}\, \Omega t)^{(k)}= \frac 1 {2\Omega } \int _{-\Omega }^{\Omega }(i\omega)^{k}e^{i\omega t}d\omega \) is bounded on (−∞, ∞),

is uniformly convergent.

where \(A_{k}^{l}=\prod _{j=1}^{l} (k-j+1)\) and \(A_{k}^{0}=1\), by Lemma 4. □

Lemma 6

For t∈[−T,T], if k is even

and if k is odd

as α→0.

Proof

If k is even, this is of the order \(O\left (\frac {a^{k+1}} {\alpha a a^{2k+2}}\right)=O\left (\alpha ^{k/2}\right)\).

If k is odd, this is of the order \(O\left (\frac {a^{k}} {\alpha a a^{2k+2}}\right)=O\left (\alpha ^{(k+1)/2}\right)\). □

Lemma 7

For t∈[−T,T],

Proof

by Lemma 6. □

7.3 Proof of Theorem 3

where

by Lemma 5,

by Lemma 7, and

This implies

Availability of data and materials

NA

References

[1] Y. An, C. Shao, X. Wang, Z. Li, Geometric properties computation for discrete curves based on discrete derivatives, in IEEE International Conference on Intelligent Control and Information Processing, August 13-15 (2010), pp. 217–224. https://doi.org/10.1109/icicip.2010.5564203.

[2] P. Cardaliaguet, G. Euvrard, Approximation of a function and its derivative with a neural network. Neural Netw. 5(2), 207–220 (1992).

[3] S. Haykin, Kalman filtering and neural networks (Wiley, 2004). https://doi.org/10.1002/0471221546.

[4] Z. Levnajic, A. Pikovsky, Untangling complex dynamical systems via derivative-variable correlations. Sci. Rep. 4(5030) (2014).

[5] C. E. Shannon, A mathematical theory of communication. Bell Syst. Tech. J. 27 (1948). https://doi.org/10.1109/9780470544242.ch1.

[6] J. R. Higgins, Sampling Theory in Fourier and Signal Analysis (Oxford Science Publications, 1996).

[7] R. J. Marks II, Noise sensitivity of band-limited signal derivative interpolation. IEEE Trans. Acoust. Speech Sig. Process. ASSP-31, 1028–1032 (1983).

[8] A. Ignjatovic, Chromatic derivatives and local approximations. IEEE Trans. Sig. Process. 57(8) (2009).

[9] Z. Qian, C.-L. Fu, X.-T. Xiong, T. Wei, Fourier truncation method for high order numerical derivatives. Appl. Math. Comput. 181(2), 940–948 (2006).

[10] L. Qian, D. B. Creamer, Localization of the generalized sampling series and its numerical application. SIAM J. Numer. Anal. 43, 2500–2516 (2006).

[11] C. A. Micchelli, Y. Xu, H. Zhang, Optimal learning of bandlimited functions from localized sampling. J. Complex. 25, 85–114 (2009).

[12] L. Qian, D. B. Creamer, Localized sampling in the presence of noise. Appl. Math. Lett. 19, 351–355 (2006).

[13] C. De Boor, A Practical Guide to Splines (Springer Verlag, New York, 1978).

[14] M. Hanke, O. Scherzer, Inverse problems light: numerical differentiation. Amer. Math. Monthly 108(6), 512–521 (2001).

[15] A. G. Ramm, A. B. Smirnova, On stable numerical differentiation. Math. Comp. 70, 1131–1153 (2001).

[16] Y. B. Wang, Y. C. Hon, J. Cheng, Reconstruction of high order derivatives from input data. J. Inv. Ill-Posed Probl. 14(2), 205–218 (2006).

[17] L. W. Qian, On the regularized Whittaker-Kotel’nikov-Shannon sampling formula. Proc. Am. Math. Soc. 131, 1169–1176 (2003).

[18] G. Schmeisser, Interconnections between multiplier methods and window methods in generalized sampling. Sampl. Theory Sig. Image Process. 9, 1–24 (2010).

[19] W. Chen, An efficient method for an ill-posed problem—band-limited extrapolation by regularization. IEEE Trans. Sig. Process. 54, 4611–4618 (2006).

[20] A. N. Tikhonov, V. Y. Arsenin, Solution of Ill-Posed Problems (Winston/Wiley, Washington, D.C., 1977).

[21] W. Chen, The ill-posedness of the sampling problem and regularized sampling algorithm. Digit. Sig. Process. 21(2), 375–390 (2011).

[22] K. F. Cheung, R. J. Marks II, Ill-posed sampling theorems. IEEE Trans. Circ. Syst. CAS-32, 481–484 (1985).

[23] A. Steiner, Plancherel’s theorem and the Shannon series derived simultaneously. Am. Math. Monthly 87(3), 193–197 (1980).

[24] W. Chen, Computation of Fourier transforms for noisy bandlimited signals. SIAM J. Numer. Anal. 49(1), 1–14 (2011).

[25] J. L. Brown Jr., On the error in reconstructing a non-bandlimited function by means of the bandpass sampling theorem. J. Math. Anal. Appl. 18, 75–84 (1967).

[26] A. Griesbaum, B. Barbara, B. Vexler, Efficient computation of the Tikhonov regularization parameter by goal-oriented adaptive discretization. Inverse Probl. 24, 1–20 (2008).

[27] M. Belge, M. E. Kilmer, E. L. Miller, Efficient determination of multiple regularization parameters in a generalized L-curve framework. Inverse Probl. 18, 1161–1183 (2002).

[28] M. E. Kilmer, D. P. O’leary, Choosing regularization parameters in iterative methods for ill-posed problems. SIAM J. Matrix Anal. Appl. 22(4), 1204–1221 (2001).

Acknowledgments

The author would like to express appreciation to Professor Charles Moore for his very important discussion in the course of this paper.

Funding

NA

Ethics declarations

Consent for publication

NA

Competing interests

The author declares that there are no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, W. The ill-posedness of derivative interpolation and regularized derivative interpolation for band-limited functions by sampling. EURASIP J. Adv. Signal Process. 2020, 32 (2020). https://doi.org/10.1186/s13634-020-00668-5

DOI: https://doi.org/10.1186/s13634-020-00668-5