1. Introduction

Synthetic aperture radar (SAR) is a widely used remote sensing imaging system that can produce high-resolution images under all-day, all-weather conditions [1]. SAR images have found wide applications in resource monitoring, environmental monitoring, archaeology, the military and other fields [2]. SAR images contain a variety of landforms, such as rivers, crops and residential areas. When interpreting SAR images, it is usually necessary to treat these different landforms as independent regions. Image interpretation aims to obtain a reasonable description of the image after a series of processing steps. An important issue in SAR image applications is the correct segmentation and identification of targets [3]. As a fundamental step of SAR image interpretation, SAR image segmentation divides a SAR image into several non-overlapping, coherent regions. Pixels in the same region share similar features, while pixels in different regions have different features [4]. Dividing SAR images into such meaningful regions helps to understand the image at a high level and facilitates further processing and analysis. However, owing to its special imaging mechanism, a SAR image inherently contains considerable speckle noise [5]. This multiplicative noise makes SAR image processing very challenging.

According to texture complexity, the application scenarios of SAR image segmentation algorithms can be divided into simple-texture scenes (farm crops, water, etc.) and complex-texture scenes (residential areas, etc.). Among the various methods for SAR image segmentation, pixel-level algorithms have achieved good results. Because the processing unit is a single pixel, these methods preserve the detailed information in the image, but this also makes them susceptible to noise. These algorithms are therefore usually suitable for simple-texture scenes. In contrast, region-level algorithms tend to outperform pixel-level ones. A superpixel is a small image region with a uniform appearance, obtained by dividing the original image. Superpixel-based algorithms preserve structural information and improve computational efficiency by replacing thousands of pixels with hundreds of superpixels [6]. However, since mixed superpixels may exist in the over-segmented image, the segmentation result depends largely on the over-segmentation algorithm. Neither of these two types of algorithm can accurately achieve semantic segmentation of SAR images. Texture analysis and deep learning methods are effective tools for SAR semantic segmentation [7,8,9]. Deep learning methods are usually supervised and require large amounts of sample data. In addition, texture analysis methods usually need a classifier, and most of them are suitable only for specific scenes. For example, reference [7] proposed an unsupervised algorithm that identifies flooded areas in SAR images based on texture information derived from grey-level co-occurrence matrix (GLCM) analysis. This method is useful only for distinguishing flooded areas from dry areas.
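GLCM texture analysis, as used in [7], summarises how often pairs of grey levels co-occur at a fixed pixel offset. The sketch below computes a normalised, symmetric GLCM for a single offset and two common Haralick statistics (contrast and angular second moment) in plain Python. The offset, the assumption that the image is already quantised to small integer grey levels, and the choice of statistics are illustrative assumptions, not the settings used in [7].

```python
def glcm(image, dx=1, dy=0, levels=None, symmetric=True):
    """Normalised grey-level co-occurrence matrix for one offset (dx, dy).

    image: 2-D list of integer grey levels in [0, levels).
    Entry p[i][j] is the relative frequency with which a pixel of level i
    has a neighbour of level j at the given offset.
    """
    rows, cols = len(image), len(image[0])
    if levels is None:
        levels = max(max(row) for row in image) + 1
    p = [[0.0] * levels for _ in range(levels)]
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                p[i][j] += 1
                if symmetric:  # count the pair in both directions
                    p[j][i] += 1
    total = sum(map(sum, p))
    if total:
        p = [[v / total for v in row] for row in p]
    return p


def contrast(p):
    """Haralick contrast: large when co-occurring grey levels differ strongly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def angular_second_moment(p):
    """Angular second moment (uniformity): 1.0 for a perfectly flat patch."""
    return sum(v * v for row in p for v in row)
```

A flat patch gives zero contrast and maximal uniformity, while a checkerboard gives the opposite; this is the kind of contrast that lets GLCM statistics separate smooth water from rougher land surfaces.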

A pixel-level segmentation algorithm assigns a label to each pixel in the image. The most common pixel-level methods are clustering algorithms, which usually obtain cluster centres by iteratively updating them according to a similarity measure [10]. Fuzzy techniques are widely used in clustering, and the most representative one is the fuzzy C-means (FCM) algorithm [11]. The main idea of FCM is to decrease the value of an objective function by iteratively updating a fuzzy membership matrix and the cluster centres, and then to defuzzify the membership matrix to obtain the clustering result [12]. Xiang et al. [13] proposed a kernel clustering algorithm with a fuzzy factor (ILK_FCM), which performed a wavelet transform using the local information of the image. They also designed a new weighted fuzzy factor and added a kernel metric to the iterative process. Shang et al. [14] proposed an immune clone clustering algorithm based on non-local information (CKS_FCM). This algorithm first uses a multi-objective optimisation method to determine the cluster centres, and non-local mean information is then added to the clustering process to overcome the effect of speckle noise. To address the problem that clustering all pixels reduces efficiency, Pham et al. [15] proposed processing SAR images point by point: key points are extracted from the image, and only these key points are processed during clustering. Building on this, Shang et al. [16] put forward the fast key pixel fuzzy clustering (FKP_FCM) algorithm, which performs fuzzy clustering only on key pixels and uses the clustering results to determine the labels of non-key pixels. This method can obtain very accurate segmentation results in a relatively short time.
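The FCM iteration described above can be sketched as follows. This is the standard (Bezdek) formulation on one-dimensional features such as pixel or superpixel intensities, minimising J_m = Σ_i Σ_k u_ik^m (x_i − v_k)²; it is not ILK_FCM, CKS_FCM, FKP_FCM or the modified FCM used later in this paper. The fuzzifier m = 2, the random initial memberships and the stopping tolerance are illustrative assumptions.

```python
import random


def fcm(data, c, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Standard fuzzy C-means on 1-D feature values (e.g. intensities).

    Returns (centres, memberships, labels). Labels come from defuzzifying the
    membership matrix: each sample takes the cluster with the largest membership.
    """
    rng = random.Random(seed)
    n = len(data)
    # Random initial fuzzy memberships; each row sums to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])
    centres = [0.0] * c
    for _ in range(max_iter):
        # Centre update: v_k = sum_i u_ik^m x_i / sum_i u_ik^m
        for k in range(c):
            w = [u[i][k] ** m for i in range(n)]
            if sum(w) > 0:
                centres[k] = sum(wi * x for wi, x in zip(w, data)) / sum(w)
        # Membership update: u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1))
        max_change = 0.0
        for i, x in enumerate(data):
            d = [abs(x - v) for v in centres]
            zeros = [k for k in range(c) if d[k] == 0.0]
            if zeros:
                # Sample lies exactly on one or more centres: share membership among them.
                new_row = [1.0 / len(zeros) if k in zeros else 0.0 for k in range(c)]
            else:
                new_row = [1.0 / sum((d[k] / d[j]) ** (2.0 / (m - 1.0)) for j in range(c))
                           for k in range(c)]
            max_change = max(max_change, max(abs(a - b) for a, b in zip(new_row, u[i])))
            u[i] = new_row
        # Stop when memberships no longer change appreciably.
        if max_change < tol:
            break
    labels = [max(range(c), key=row.__getitem__) for row in u]
    return centres, u, labels
```

For example, `fcm([0.10, 0.12, 0.09, 0.90, 0.88, 0.91], c=2)` separates the dark and bright values into two clusters. Running this on every pixel of a large image is what key-pixel methods such as [15,16] try to avoid.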

Superpixel-level methods usually over-segment the image and extract features of each superpixel, such as intensity [17], texture [18] and edges [19]. These features are then used to classify the superpixels, and all pixels in the same superpixel receive the same label. Many over-segmentation algorithms have been proposed, including mean shift [20], normalized cuts (Ncuts) [21], graph-based segmentation [22], the watershed method [23], the TurboPixels method [24] and simple linear iterative clustering (SLIC) [25]. SLIC is prominent among these algorithms because it can produce superpixels quickly without sacrificing much segmentation accuracy [26]. SLIC, proposed by Achanta et al. [25], uses k-means clustering to generate superpixels efficiently. However, both pure and mixed superpixels exist in over-segmented SAR images. The pixels in a pure superpixel belong to a single class, whereas a mixed superpixel is composed of pixels with different labels, which can seriously affect segmentation accuracy [6]. Therefore, when region-level algorithms are used to segment SAR images, mixed superpixels must be handled accordingly. To this end, Xiang et al. [27] proposed a superpixel generation algorithm that used pixel intensity and position similarity to modify the similarity measure of SLIC, making it suitable for SAR images. To improve the boundary adherence of superpixels, Zou et al. [28] modelled SAR images with a generalised gamma distribution to exploit the likelihood information of SAR pixel clusters and removed small isolated regions in post-processing, thereby combining spatial context and likelihood information. Qin et al. [29] improved cluster centre initialisation and introduced SLIC into polarimetric synthetic aperture radar (PolSAR) image processing, using a symmetric revised Wishart distance for local iterative clustering. Wang et al. [30] integrated two different distances to measure the dissimilarity between adjacent pixels and introduced the entropy rate method into the over-segmentation of PolSAR images. Jiao et al. [6] proposed a new concept, fuzzy superpixels, to reduce the generation of mixed superpixels. With fuzzy superpixels, the pixels in the image are divided into two parts: superpixels and pending pixels. Pending pixels are pixels in the overlapping search regions, which require a further decision. That is, in fuzzy superpixels, not all pixels are immediately assigned a superpixel label.
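The core of SLIC [25] is its combined intensity and spatial distance, D = sqrt(d_c² + (d_s / S)² m²), where S = sqrt(N / K) is the grid interval for N pixels and K superpixels and m weights compactness. The sketch below shows only this distance and the local assignment step; centre initialisation, iterative centre updates and connectivity enforcement are omitted. Using an absolute intensity difference for d_c is an assumption for single-channel SAR data (the original SLIC uses CIELAB colour), and the default m = 10 is illustrative.

```python
import math


def grid_interval(num_pixels, num_superpixels):
    """S = sqrt(N / K): approximate spacing between initial cluster centres."""
    return math.sqrt(num_pixels / num_superpixels)


def slic_distance(pixel, centre, S, m=10.0):
    """SLIC distance between a pixel and a cluster centre.

    pixel, centre: (intensity, row, col) tuples. For single-channel images the
    colour term is taken as an intensity difference (an assumption here).
    """
    d_c = abs(pixel[0] - centre[0])
    d_s = math.hypot(pixel[1] - centre[1], pixel[2] - centre[2])
    return math.sqrt(d_c ** 2 + (d_s / S) ** 2 * m ** 2)


def assign(pixel, centres, S, m=10.0):
    """Index of the closest centre whose 2S x 2S search window covers the pixel.

    Returns None if no centre is close enough, mirroring SLIC's local search.
    """
    best, best_d = None, math.inf
    for k, c in enumerate(centres):
        if abs(pixel[1] - c[1]) <= S and abs(pixel[2] - c[2]) <= S:
            d = slic_distance(pixel, c, S, m)
            if d < best_d:
                best, best_d = k, d
    return best
```

Restricting the search to a 2S x 2S window is what makes SLIC fast: each pixel is compared with only a handful of nearby centres rather than all K. Because d_c depends on a single pixel value, speckle can push individual pixels towards the wrong centre, which is the weakness that the SAR-specific variants above address.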

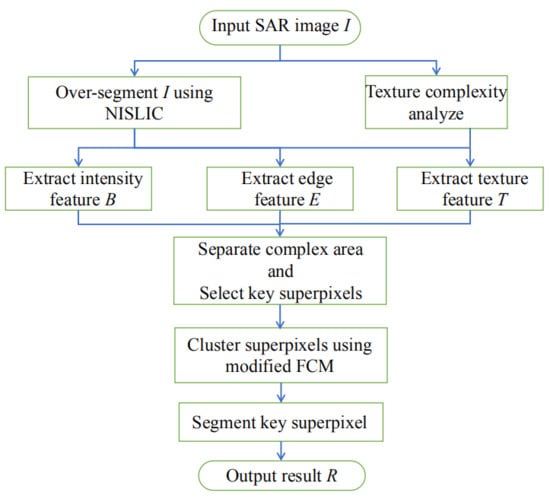

The main problems addressed in this paper are how to achieve land cover classification of SAR images and how to deal with mixed superpixels. Generally, the more complex the application scenario and the stronger the noise, the more likely mixed superpixels are to appear. Therefore, to overcome the influence of mixed superpixels and speckle noise and to improve the adaptability of the algorithm to different application scenarios, we were inspired by [16] to extend the key point idea to the region level using superpixels, and we proposed a semantic segmentation method based on texture complexity analysis and key superpixels. FCM converges quickly and obtains accurate results, so we used a modified FCM to perform the initial classification of superpixels. Because pixel-level methods give each pixel a label, and the pixels in a mixed superpixel belong to different categories, pixel-level information is used to segment key superpixels. Specifically, the proposed algorithm calculates the texture complexity of the input image with a new texture analysis method and then uses neighbourhood information simple linear iterative clustering (NISLIC) to over-segment the image. For images with high texture complexity, the complex areas are first separated, and key superpixels are selected according to certain rules. For images with low texture complexity, key superpixels are extracted directly. Finally, the superpixels are pre-segmented by fuzzy clustering based on the extracted features, and the key superpixels are processed at the pixel level to obtain the final result. To make the result smoother, a filtering method is used in the segmentation of key superpixels. The proposed algorithm not only uses texture analysis to improve its ability to segment complex-texture scenes, but also reduces the dependence of the final result on the over-segmentation algorithm by locating and processing key superpixels. In addition, pixel-level information is used to determine the category of each pixel in the key superpixels, which retains more detail and improves segmentation accuracy.
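This introduction states only that NISLIC replaces the single-pixel value with neighbourhood information in the similarity measure; the exact NISLIC formulation is defined in Section 2 and is not reproduced here. As a hypothetical illustration of why neighbourhood information helps, the sketch below substitutes a pixel's 3 x 3 local mean for its own intensity in a SLIC-style distance. The function names, the window radius and the use of a plain mean are all assumptions, not the paper's definition.

```python
import math


def local_mean(image, r, c, radius=1):
    """Mean intensity of the (2*radius+1)^2 window around (r, c), clipped at borders."""
    rows, cols = len(image), len(image[0])
    vals = [image[i][j]
            for i in range(max(0, r - radius), min(rows, r + radius + 1))
            for j in range(max(0, c - radius), min(cols, c + radius + 1))]
    return sum(vals) / len(vals)


def neighbourhood_distance(image, r, c, centre, S, m=10.0, radius=1):
    """Hypothetical SLIC-style distance using the local mean instead of the pixel value.

    centre: (intensity, row, col) tuple; S and m play the same roles as in SLIC.
    """
    d_c = abs(local_mean(image, r, c, radius) - centre[0])
    d_s = math.hypot(r - centre[1], c - centre[2])
    return math.sqrt(d_c ** 2 + (d_s / S) ** 2 * m ** 2)
```

In a homogeneous region of intensity 100 containing a single speckle spike of 190, the spike's own intensity differs from the region's centre by 90, but its 3 x 3 local mean is 110, so the intensity term shrinks to 10 and the pixel is far less likely to be split off into a spurious superpixel.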

The contributions of this paper are as follows:

- (1) To achieve land cover classification of SAR images, a new texture complexity analysis method is proposed. The texture complexity of the input image is calculated from the frequency of grey-value changes, so that the global texture information of the image can be grasped simply. The same method also defines the texture feature of each superpixel, which separates superpixels located in complex landforms from those located in simple landforms.

- (2) To overcome the impact of noise on over-segmentation, a new superpixel generation algorithm is proposed. It uses the neighbourhood information of each pixel to modify the similarity measure, which improves the robustness of superpixel generation to speckle noise.

- (3) To deal with mixed superpixels, the concept of key superpixels is proposed. Key superpixels are mixed superpixels selected by certain rules, so the segmentation no longer relies solely on the superpixel generation algorithm.
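The rules used to select key superpixels are given in Section 2 and are not reproduced here. The sketch below illustrates only the underlying definition of a mixed superpixel: given a superpixel map and a pixel-level class map (both assumed to be 2-D lists of integer labels with the same shape, for example the output of a pixel-level clustering), it computes each superpixel's purity, i.e. the fraction of its pixels carrying the majority class, and flags superpixels that are not pure. The `purity_threshold` parameter is a hypothetical knob, not part of the paper.

```python
from collections import Counter, defaultdict


def superpixel_purity(sp_map, class_map):
    """For each superpixel id, the fraction of its pixels that carry the majority class."""
    counts = defaultdict(Counter)
    for sp_row, cl_row in zip(sp_map, class_map):
        for sp, cl in zip(sp_row, cl_row):
            counts[sp][cl] += 1
    return {sp: c.most_common(1)[0][1] / sum(c.values()) for sp, c in counts.items()}


def mixed_superpixels(sp_map, class_map, purity_threshold=1.0):
    """Ids of superpixels whose purity is below the threshold.

    With the default threshold of 1.0 this is exactly the definition of a mixed
    superpixel (its pixels carry more than one label); a lower, hypothetical
    threshold tolerates a few noisy pixels before a superpixel is flagged.
    """
    purity = superpixel_purity(sp_map, class_map)
    return sorted(sp for sp, p in purity.items() if p < purity_threshold)
```

For instance, with `sp_map = [[0, 0, 1, 1], [0, 0, 1, 1]]` and `class_map = [[0, 0, 0, 1], [0, 0, 1, 1]]`, superpixel 0 is pure while superpixel 1 has purity 0.75 and is reported as mixed. Pixels in such superpixels are the ones that benefit from pixel-level labelling instead of a single superpixel label.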

The remainder of this paper is organised as follows. Section 2 details the proposed algorithm. Section 3 introduces the experimental setup and the evaluation of the algorithm. Section 4 analyses the experimental segmentation results, Section 5 presents the discussion, and Section 6 concludes the paper.

5. Discussion

This paper used four kinds of images to verify the advantages of the proposed algorithm: simulated SAR images, real SAR images, a large SAR image and texture images.

For the simulated image SI1, which contains regions of different intensities, the advantages of TKSFCM lie in the over-segmentation and in the localisation of mixed superpixels. The results obtained by clustering the superpixels are highly consistent, and the mixed superpixels are processed with pixel-level features, which overcomes the limitation that the superpixel generation algorithm imposes on the segmentation results. For the simulated image SI2, which contains a complex area, the advantage of the proposed algorithm is shown in the segmentation of the texture area. However, the segmentation of the edge areas in this image is not ideal. This is because the pixel-level information used in key superpixel segmentation is strongly affected by noise, and the effect of using neighbourhood information on edge areas is not considered. TKSFCM obtains relatively high segmentation accuracy for images with both high and low noise levels, which shows that the proposed algorithm is robust to noise.

For the Maricopa and Chinalake images, the advantage of TKSFCM lies in capturing edge areas. The experimental data show that the proposed algorithm better preserves details. TKSFCM segments the edges in the image more completely, but the segmented edges are wider than the actual ones. This is because all pixels in a superpixel containing structural information are given the same class label. For the Chinalake, Terracut and Ries images, the advantage of the proposed algorithm is shown in the segmentation of residential areas. Because the proposed key superpixels are combined with fuzzy clustering, the results are coherent as a whole while retaining detailed information, so the results on the real SAR images are good. For the large SAR image, the proposed algorithm achieves both efficiency and accuracy.

For texture images similar to SAR images, the proposed algorithm achieves good segmentation, and the results are robust to speckle noise. For images containing two different textures, TKSFCM segments them effectively. For images containing textures with similar intensities, however, the segmentation result is not ideal. Overall, the proposed texture descriptor is better suited to SAR images with speckle noise and is not the best choice for segmenting general texture images. Many algorithms are worth considering for that task, such as [52,53].

6. Conclusions

This paper has proposed a new semantic segmentation method for SAR images, named TKSFCM. Semantic segmentation is achieved by combining a texture complexity analysis method with key superpixels: texture complexity analysis is applied to the input image, and mixed superpixels are selected as key superpixels. First, to grasp the texture information of the image, a new texture information extraction method is proposed. This method can effectively distinguish complex-texture areas from simple-texture areas. The result of TKSFCM (e.g., Figure 17) shows that the urban area of the Ries image is segmented as a whole. Compared with SFFCM, which also achieves semantic segmentation, the accuracy is improved by 25.36%. Therefore, TKSFCM can achieve effective semantic segmentation results for SAR images. Meanwhile, to overcome the problem that traditional SLIC does not consider the influence of noise, this work improves SLIC by using the neighbourhood information of each pixel, instead of the single pixel, to constrain superpixel generation. This new method is called NISLIC. As Figure 10 shows, for the Chinalake image with low noise, NISLIC obtains superpixels that are little affected by noise and adhere well to edges. Furthermore, the key point idea is extended to the region level, and the mixed superpixels generated by over-segmentation are selected as key superpixels for processing. Thus, edge areas can be accurately captured and segmented. The results on the Maricopa image (e.g., Figure 14) show that TKSFCM segments edges more accurately and smoothly. This verifies its edge segmentation ability, and the rk of TKSFCM is high, which means that most pixels belonging to the water area are correctly labelled. In addition, TKSFCM is efficient and accurate on large SAR images (e.g., Figure 19 and Table 6).

For the four real SAR images, the average SA of our method is at least 13.16% higher than those of the compared algorithms, which shows the effectiveness of TKSFCM. However, some pixels are still wrongly labelled, because global information is not considered when performing fuzzy clustering on superpixels. The only guidance for segmenting the key superpixels is the fuzzy clustering result, which imposes certain limitations. Moreover, the texture complexity analysis method can only distinguish regions with a large feature gap.
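The exact definitions of SA and rk are given in Section 3 and are not reproduced here. The sketch below assumes the usual forms consistent with the description above: SA is the fraction of all pixels whose predicted label matches the ground truth, and r_k is the fraction of ground-truth class-k pixels that are labelled k. It also assumes that cluster labels have already been matched to the ground-truth class indices, since clustering labels are otherwise arbitrary.

```python
def segmentation_accuracy(pred, truth):
    """SA: fraction of pixels whose predicted label equals the ground-truth label."""
    pairs = [(p, t) for pr, tr in zip(pred, truth) for p, t in zip(pr, tr)]
    return sum(p == t for p, t in pairs) / len(pairs)


def class_accuracy(pred, truth, k):
    """r_k: fraction of ground-truth class-k pixels that are labelled k.

    Returns NaN if class k does not occur in the ground truth.
    """
    hits = total = 0
    for pr, tr in zip(pred, truth):
        for p, t in zip(pr, tr):
            if t == k:
                total += 1
                hits += (p == k)
    return hits / total if total else float("nan")
```

For a 2 x 4 ground truth `[[0, 0, 1, 1], [0, 0, 1, 1]]` and a prediction that mislabels one class-0 pixel as class 1, SA is 7/8 = 0.875, r_0 is 0.75 and r_1 is 1.0. A high r_k for the water class therefore means few water pixels were lost, even if SA is dragged down by errors elsewhere.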