Abstract

A comprehensive framework for model validation and reliability assessment of container crane structures is pursued in this paper. The framework is composed of three phases. In phase I, a parameterized finite element model (FEM) of a typical type of container crane structure at Yangshan deep-water port, Shanghai, is developed for simulating the structure performance at controllable input/parameter settings. Full-scale experiments are conducted at multiple locations of the container crane for the validation assessment of the parameterized FEM. In phase II, high fidelity reliability model (HFRM) of the crane structure is constructed by incorporating the parameterized FEM, failure criteria, and uncertainty from multiple sources. To alleviate the computational burden for running finite element analysis at each function evaluation, surrogate modeling techniques, i.e., the polynomial response surface (PRS) and artificial neural networks (ANN) are applied to build lower fidelity reliability models (LFRM) using simulations generated from the high fidelity reliability model. Quantitative validation metrics, i.e., the area metric and u-pooling metric are applied to assess the degree to which the surrogates represent the high fidelity model in presence of uncertainty. Finally in phase III, the reliability of the container crane structure is assessed by estimating the probability of failure based on the surrogates of the high fidelity reliability model through structure reliability methods. The proposed framework provides a scientific and organized procedure for model validation activities, surrogate model building, and efficient reliability assessment to container cranes and other complex structures in engineering if necessary.

Similar content being viewed by others

1 Introduction

Container cranes are heavy duty equipment that work at quayside container terminals for loading and unloading containers from container ships (Zrnic et al. 2005). To keep up with the increasing transportation demand as well as capacity that result from the prosperity of global trading, quay cranes often running nonstop day and night swing from ship to shore for repeating the picking up and setting down of volume. Therefore, it is critical to ensure the operational safety and structure reliability of a container crane for its productivity and working efficiency.

There are multiple sources of uncertainty that result from the variations in the weight and traveling location of the containers, fluctuations of material properties and geometric measures, environmental conditions, and etc. (Alyami et al. 2019; Soderberg and Jordan 2007; Azeloglu et al. 2014). Therefore, vigorous approaches for assessing the reliability of container crane structures impose challenges. One of the important aspects for structure reliability assessment is the propagation of uncertainty from the input variables to the system response of interest (Ditlevsen and Madsen 1996). In the work by Lee and Chen (2009), existing methods for uncertainty propagation were classified into five categories, i.e., (1) the simulation-based methods, (2) the local expansion-based methods, (3) the most probably point (MPP)-based methods, (4) the functional expansion-based methods, and (5) numerical integration-based methods. The representatives of the first category are the classical Monte Carlo simulation (MCS) (Madsen et al. 2006; Der Kiureghian 1996) and importance sampling (Melchers 1989; Engelund and Rackwitz 1993). These methods rely on random sample generated from the joint CDF of the input variables for analyzing the probability of failure. Methods like the Taylor series expansion or perturbation methods (Madsen et al. 2006; Der Kiureghian 1996; Ghanem and Spanos 1991) belong to the second category, this type of methods only suits for linear problems that with small input uncertainty. The most well-known MPP-based approaches are the first order reliability methods (FORM) and second order reliability methods (SORM) (Der Kiureghian and Dakessian 1998; Jackson 1982), and these methods assessing the reliability by searching for the most probable point on a limit state function. The accuracy of this category highly depends on the searching algorithm and the complexity of the analyzing object. A common type of the function expansion-based methods is the polynomial chaos expansion (Ghanem and Spanos 1991; Xiu and Karniadakis 2003), which is a series of orthogonal polynomials for representing stochastic quantities. The numerical integration-based methods estimate the statistical moments of the system output by direct numerical integration (Evans 1972; Seo and Kwak 2002; Sang and Kwak 2006; Rahman and Xu 2004; Xu and Rahman 2010). By incorporating the information of the statistical moments, the probability distribution of the output can be approximated by the empirical distribution systems (Johnson et al. 1994). The reliability can be assessed by evaluating the tail region probability of the empirical distribution. All these methods are applicable to black-box type models (Lee and Chen 2009), but generally have their pros and cons when facing specific problems. Among the aforementioned approaches, the applicability of the MCS method is most versatile compared with other alternative approaches, and it has no restrictions to the form of a limit state function, for example, the degree of nonlinearity, the problem dimension, and other aspects of complexity. As long as the sampling size is large enough, the reliability assessment by MCS is mostly convincing.

As the full-scaled experiments for the crane structure is often expensive and scarce, performance prediction of which has been extensively relied on the use of computational models, most frequently the finite element models (FEM). The reliability assessment of a crane structure requires a significant amount of repeated function evaluations from its FEM. The probability of failure of engineering structures is usually quite small (Ditlevsen and Madsen 1996), in order to make a plausible estimation of which, the simulation-based approach generally requires a large number of evaluations of the limit state function. For MPP-based approaches (Der Kiureghian and Dakessian 1998; Jackson 1982) and numerical integration-based methods (Evans 1972; Xu and Rahman 2010), depending on the convergence rates and the problem dimensionality, they generally require not less than a few thousands runs. Each trial run of the limit state function requires calling the FEM in ANSYS, which may take several seconds or even longer. Therefore, application of these methods directly based on the FEM will be extremely time consuming and sometimes infeasible. To address this issue, surrogate models are used instead of the FEM for carrying out the uncertainty quantification process with better efficiency (Ender and Balestrini-Robinson 2015; Montgomery 2012). A surrogate model is simpler and faster representations of a computationally expensive simulation model, which is usually built by doing regression according to the input and output data from the simulation object. There are several types of surrogate models, the most commonly used are the polynomial response surface (PRS) model (Bucher and Bourgund 1990; Gunst et al. 2016; Box 2008), artificial neural network (ANN) model (Klopf 1988; Murata et al. 1994), Gaussian process model (Rasmussen 2006), and etc.

The extensive usage of computational models and surrogate models leads to another problem for structural reliability assessment, which is the validity of these models for conducting the analysis. Thus, it is important to not only validate a computational model against experimental data, but also assess the predictive capability of a surrogate model by using simulation data from high fidelity computational model in presence of input uncertainty. Model validation is the process for determining the degree to which a model is an accurate representation of the real world from the perspective of the intended use of the model (Oberkampf et al. 1998; Oberkampf et al. 2004; ASME 2006; Sornette et al. 2007). In order to assess how well the models represent the real physical structure, quantitative validation metrics need to be employed. Existing metrics for model validation are classified into four categories by Liu and Chen (2011), i.e., the hypothesis testing-based metric (Hills and Trucano 2001), frequentist’s metric (Oberkampf et al. 2004; Oberkampf and Barone 2006), Bayes factor (Rebba and Mahadevan 2006; Rebba et al. 2006), and area-based metric (Ferson and Oberkampf 2009; Ferson et al. 2008; Li et al. 2014). Among these four categories, the area-based metric has many desired features for a validation metric (Oberkampf et al. 2004; Liu et al. 2011). It directly measures the difference between the overall distribution of the model prediction and the empirical distribution of the observations. More importantly, the area metric can be further extended to u-pooling metric for models with dynamic or multiple responses by using probability integral transformation (PIT) (Li et al. 2014; Angus 1994; Li et al. 2016). This allows for incorporating data collected from multiple validation sites or response quantities into a single measure for assessing the overall model credibility.

Above all, most existing works for crane structure reliability mainly focus on developing the state of art approaches for estimating the reliability, but the importance of validation assessment for ensuring credibility of the models used for conducting the analysis is overlooked. Therefore, the aim of this paper is to develop a comprehensive framework for guiding the model validation and reliability assessment activities of container crane and complex engineering structures. In the remainder of this paper, a short introduction of the proposed framework that consists of three phases is presented in Section 2. Section 3 introduces the construction and validation of computational models for container crane structure in phase I. Section 4 involves the high and low fidelity reliability model of phase II. The final reliability assessment of phase III is provided in Section 5. The summary of conclusion of this work is given in Section 6.

2 Proposed framework for model validation and reliability assessment of container crane structures

In order to guide the structure reliability analysis of container cranes in a scientific and organized way, a new framework for integrating the model validation activities and reliability assessment of container crane structures is proposed in this work. The essential elements as well as the interaction activities involved in the framework are presented in Fig. 1, which help to demonstrate the technical work flow of the overall process. Most of the current design and analysis of container crane structures are based on computational models, and among these studies, a significant amount is built by the finite element method. Thus, it is assumed that a finite element model (FEM) of the container crane structure is available at the very beginning of the whole process for model validation and reliability assessment of a container crane structure.

As shown in Fig. 1, the proposed framework is composed of three phases, i.e., phase I: experimental validation of the computational model of the container crane structure, phase II: constructing and validation of the reliability model of the container crane structure, and phase III: assessing the structure reliability. During phase I, a parameterized FEM is constructed for by combing FEM codes with critical parameter selections. The parameterization gains more flexibility than the original FEM as it allows for an automatic simulation of the structure at different input settings. Full-scale experimental tests of the container crane structure are conducted for generating the essential observations of the actual crane structure. Then the experimental observations are compared with the simulation data to test the credibility of the computational model. If the accuracy of the FEM is acceptable, it will be further used for the following phase. Otherwise, the model should be updated or modified until it reaches the analysts’ expectation. Generally, there are two sources of uncertainty that should be quantified before using the computational model for representing the structure in reality. One is the uncertainty in model parameters due to imperfect knowledge, and the other is the model discrepancy or bias that caused by approximations. There are a bunch of methods that have been developed in literature for learning the two sources of uncertainty through model calibration and bias correction by combing simulation data with physical observations, which can help to obtain an accurate prediction of the physical reality (Kennedy and O'Hagan 2001; Higdon et al. 2004; Bayarri et al. 2007). The interesting readers can refer to these articles for more detailed information on refining the computational model.

In phase II, the reliability model of the container crane structure, also known as the limit state function, is constructed by incorporating the parameterized FEM with the failure criteria of the structure. This reliability model is addressed as the high fidelity reliability model (HFRM) in this paper considering that the FEM is sophisticated and accurate. In order to obtain an accurate estimation of the reliability index or probability of failure of the container crane structure, a large number of function evaluations need to be conducted corresponding as the reliability model. Each function evaluation implies one finite element analysis of the structure. Therefore, reliability assessment of the container crane structure based on the HFRM can be computationally expensive and time consuming. In order to mitigate the computational cost, lower fidelity reliability model (LFRM) is generated based on the input and output data of the HFRM by employing surrogate modeling techniques during this phase. Simulations from LFRM are compared with extra observations from the HFRM using quantitative validation metrics for assessing the predictive capability of a LFRM. If the validation metric indicates that the LFRM is adequate for representing the HFRM, it will be used later in the reliability assessment, or else the LFRM will be updated or replaced by other surrogate models. The accuracy of the LFRMs highly depends on the adequacy of the training samples generated by the HFRM, the selection among alternative surrogate techniques, and the underlying function structure of the HFRM. Intuitively, adding more training samples will help gaining accuracy for the LFRMs. If not, it is suggested to try more forms of surrogates in order to achieve a good agreement between the HFRM and the LFRM.

Finally in phase III, the reliability index or probability of failure of the container crane structure is estimated using structure reliability methods such as FORM, SORM, Monte Carlo simulation, and numerical integration-based methods.

3 Phase I: construction and validation of the computational model for container crane structures

3.1 Finite element model (FEM) of the quayside container crane structure

A typical type of quayside container crane that operates at Yangshan deep-water port in Shanghai, China, is considered in this paper. A FEM of the crane structure is built in ANSYS for further analysis. The fundamental structure of the quayside container crane model is shown in Fig. 2. The crane structure system is mainly composed of three subsystems, i.e., (1) the portal frame subsystem (red tag), (2) the pull rod subsystem (green tag), and (3) the girder subsystem (purple tag). As shown in the picture, the portal frame subsystem provides support for the crane structure, which including the seaside and landside portal legs, the upper seaside and landside beams, the lower seaside and landside beams, portal beams, the trapezoidal frame, and the lower diagonal brace. The pull rod subsystem consists of the inner and outer forestay, the backstay, and the upper diagonal brace, which are mainly linking elements that cooperate to control the rising angle of the boom. The girder subsystem is a combination of the trolley girder and the boom, and they are steel structures that connected by hinges and welding. Due to the impact of from the container load the traveling trolley, the girder subsystem is often suffered from metal fatigue that induces the failure of the structure.

There are four types of elements defined for the structure FEM, i.e., the BEAM188, PIPE288, LINK180, and MASS21 elements. The first three types are defined for structure elements such as the boom, beams, portal legs, pull rods, and braces, while the mass element is defined for the static units such as the control center machine room, the front and rear pulley sets, and cables. The FEM consists of 1281 nodes and 910 elements in total. The structure is fixed at four places, which are the intersections of the four portal legs with the lower seaside beams. As the trolley travels back and forth on the girder and the boom during operation, the loads from the trolley, the container, as well as the lifting equipment can be added on multiple locations of the crane one at a time to simulate performance of the structure.

3.2 Parameterized model of the quayside container crane

Because the system input variables are treated as random variables instead of deterministic values during structure reliability assessment, an accurate estimation of the reliability index and the probability of failure (PoF) requires a significant amount of performance function evaluations, either through the sampling based (Madsen et al. 2006; Der Kiureghian 1996) or the numerical integration-based approaches (Evans 1972; Xu and Rahman 2010). In this case, each performance function evaluation is an individual running of the FEM at distinct input settings for extracting the related result. To make this step automatically, a parameterized model of the FEM is needed. The ANSYS Parametric Design Language (APDL) is connected with compatible MATLAB codes to manipulate the settings of inputs for gathering the associated simulations of the output. The parameterized model can be treated as an implicit function in a computational environment, in which the function inputs are the model parameters/variables, and the function outputs are the response of interests about the FEM.

There are plenty of model parameters/variables in the FEM for the crane structure, and it is impossible to treat all of them as design variables. The key is to select critical parameters/variables as inputs of the parameterized model, and the response quantities of interests as outputs of the model. Intuitively, the variations in the parameters/variables that have greater impact to the reliability of the structure are more preferred. For instance, the uncertainty in the load from the trolley, the grabbing equipment, and the container, which is denoted by 2 × P, directly affect the performance of the structure. Therefore, the load P is picked as one of the input to the parameterized model. The uncertainty in the material properties can also contribute to the failure of a structure. Hence the Yang’s modulus E, a material parameter that controls the whole structure, is selected either. Based on the working experience with the quayside container crane, the boom, the forestays, and the backstays are highly critical components for the safety of the structure. As a result, the dimensional parameters that related to these components are chosen as the parameterized model inputs. There are totally 11 dimensional parameters, in which L1, L2, and L3 are three length parameters that control the lengths of the boom, as shown in Fig. 2. The rest of the dimensional parameters are the cross-section dimensions as shown in Fig. 3, where t1, t2, w1, and w2 are the dimensional parameters of the box-type cross section of the boom, and t3, t4, w3, and w4 are the dimensional parameters of the I-type cross section of backstays and forestays. Outputs of the parameterized model are selected according to the response quantities of interests. Under the context of structure reliability assessment, the quantities of interests are the displacement and stress in the structure. Therefore, providing that the nodal displacement and stress respectively denoted by δ and σ, the parameterized model of the FEM can be expressed as follows:

in which nodeID represents the specific node that associated with the determined result among the displacement/stress field.

3.3 Experimental validation of the computational model

Since the reliability analysis is performed based on the computational model instead of the structure in reality, it is crucial to make sure that the accuracy of the computational model is satisfactory. In this step, full-scale experimental tests are designed and conducted at selected locations of the structure to obtain some real-world data of the crane structure in comparison with the parameterized model under same input settings.

In order to offset the influence from systematic error and structure weight, two testing conditions, i.e., testing condition 1 (TC1) and testing condition 2 (TC2) are designed. In TC1, the load (container weight: 60 tons) is not attached to the lifting equipment and the trolley, while in TC2, the load is lifted by the trolley and the grab. The trolley and the grabbing equipment are located at the maximum traveling distance on the boom under both testing conditions. Therefore, the difference between the testing data from TC2 and TC1 is essentially caused by container load. The arrangement of the measurement locations is shown in Fig. 4, and the explanation about these locations and the related measurement quantities are shown in Table 1. The displacement δ is measured at six locations on boom, i.e., D0, D1, D2, D3, D4, and D5, in which D0 is taken as a reference point for the rest of the others, while the stress σ is measured at three locations on boom, that is A-S2, B-S1, and B-S3. Two sets of experimental tests are conducted under TC1 and TC2 respectively for obtaining the measurement data of the displacement δ and stress σ at these locations.

On the other hand, the computational model is simulated at identical test settings to generate data for comparing with the validation data. The comparison between the experimental data and the simulations of the displacement and stress are shown in Fig. 5 and Fig. 6 respectively. In Fig. 5, the experimental data are represented by yellow squares with error bars, while the simulation data are shown as blue circles. All simulations lie inside the 5% error bars, which means the relative errors are less than 5%. For the stress comparison shown in Fig. 6, relative errors of simulations are less than 3%. Therefore, data in both figures show that the simulations from the computational model match really well with the data from physical tests. The root mean squared error (RMSE) is 6.31 mm for the displacement and 0.48 MPa for the stress. Therefore, the credibility of the computational model is acceptable and will be employed for further analysis. The FEM in this paper is actually a mature model for the tested quayside container crane structure in reality. There is very limited room left for model refinement. In the case that the discrepancy between computational model simulation and data is significant, the model should be checked, calibrated, or updated till satisfactory.

4 Phase II: reliability models of the crane structure

When the reliability of a structure is considered in engineering practice, there can be many possible failure modes that associated with the structure. Each failure mode corresponds to a failure criteria and a limit state function about the structure performance. For demonstration purpose, only two failure criteria are considered in this paper. One is regarding to the maximum deformation that permitted by the regular operation of the system, and the other is concerning the strength of the materials that build the structure, i.e., the allowed maximum stress. Validation of other structure capabilities will follow the same validation strategy if necessary. Therefore, the structure failure is further described as either the maximum displacement δ max larger than the allowable displacement [δ ], or the maximum stress σ max exceeds the allowable stress [σ ], which can be written as follows:

4.1 High fidelity reliability model (HFRM)

Guided by the failure criteria shown in (2), the remaining task is to determine the corresponding quantities of the quayside crane structure. During operation, the trolley lifts the grab with the container and travels on its track. The structure performance changes when the trolley is located at different sites of the structure. In order to determine the trolley location that induces the maximum displacement δ max and stress σ max, the parameterized FEM is simulated under 8 critical load settings for comparison. It turns out that both maximum stress σ max and displacement δ max appear around the load when the trolley is located at D1, which is the furthest location that allowed by the trolley. Therefore, the load P will be added at D1 in the parameterized FEM that used for constructing the reliability model. According to the design specification of this quay crane, the allowable displacement [δ ] at the end of the boom is as follows:

where Ltot is the total length of the boom. The boom is made of Q345 steel with the yield strength σ s of 345 MPa. Therefore, the allowable stress of the boom is as follows:

in which nwind is an empirical safe factor when the quay crane is working within wind environment considering the climate of Yangshan deep-water port.

Accordingly, the limit states of the quayside container crane can be written as follows:

and

The aim of the reliability model here is to calculate the structure probability of failure Pf, which is the probability of either the two limit state functions smaller than zero. Therefore, the reliability model can be expressed as follows:

For the reliability model shown in (7), the load P, Young’s modulus E, and all the dimensional parameters are treated as random input variables. The distribution parameters of which are shown in Table 2. This model is built upon the parameterized FEM shown in (1), which matches quite well with the real crane structure performance according to experimental validation. As a result, the reliability model in (7) is addressed as the high fidelity reliability model (HFRM) for the rest of the paper.

Theoretically, with the HFRM, the Pf can be estimated by many existing structure reliability methods, for instance, the simulation-based approaches like Monte Carlo method (Madsen et al. 2006; Der Kiureghian 1996) and importance sampling (Melchers 1989; Engelund and Rackwitz 1993), the most probable point (MPP)-based approaches such as the first order and second order reliability methods (Der Kiureghian and Dakessian 1998; Jackson 1982), and the numerical integration-based reliability methods (Evans 1972; Xu and Rahman 2010). However, the value of Pf for crane structure is usually quite small, in order to make a plausible estimation of which, the sampling-based approach generally requires at least hundred thousands of runs of the limit state function. As for the MPP-based approach and the numerical integration-based methods, depending on the convergence rate and the problem dimensionality, generally require not less than a few thousands runs. Each trial run of the limit state function requires calling the FEM in ANSYS, which may take several seconds or even longer. Therefore, application of these methods directly based on the HFRM will be extremely time consuming and sometimes infeasible. To address this issue, low fidelity reliability models (LFRM) are built instead of the HFRM in (7) to efficiently carry out the uncertainty quantification process.

4.2 Low fidelity reliability models (LFRMs)

The low fidelity reliability models (LFRMs) are simpler and faster representations of the HFRM that build by surrogate modeling techniques. These techniques often based on a regression of the statistical inputs and outputs from the more sophisticated high fidelity model. In this section, two types of LFRM are built based for representing the HFRM: i.e., (1) the LFRM based on polynomial response surface (PRS) and (2) the LFRM based on artificial neural networks (ANN).

4.2.1 LFRM based on PRS

The aim of the response surface method is to find an appropriate approximation for the underlying relationship between the output Gδ or Gσ and the input variables. As the form of the relationship between the responses of the limit state functions and the input variables is unknown, an explicit form must be assumed for the approximation. If the relationship between the response and the input variables is linear, the first-order polynomial model is often used. On the other hand, if the relationship is nonlinear, a polynomial model with higher order is usually the choice. Strictly speaking, an assumed polynomial form is not likely to be the true relation over the entire range of the input variables. However, for a relatively small space, it generally fits quite well (Ender and Balestrini-Robinson 2015; Montgomery 2012). Here, for building the LFRM based on PRS, the second order polynomial response surface model without cross-product terms is applied, which can be written as follows:

where G is the limit state output, and xi (i = 1,2,…,k) are the k input variables. In (8), a0 is the intercept regression coefficient, ai is the regression coefficient of a linear term, aii is the regression coefficient of a quadratic term, and εrsp is the error term that indicating the mean squared error (MSE).

Once the form of the response surface is determined, there are two tasks remaining for solving the problem. The first task is to design an appropriate sampling scheme for conducting numerical experiment on the HFRM; the second one is to apply comprehensive regression method for generating the response surface function. In this study, the classical response surface approach proposed by Bucher and Bourgund (1990) is applied for obtaining the PRS-based LFRM. Bucher’s sampling scheme for problems with two input variables x1 and x2 is illustrated in Fig. 7, where \( {\sigma}_{x_1} \) and \( {\sigma}_{x_2} \) are respectively the standard deviation of x1 and x2, f is the sampling coefficient, and (\( {x}_1^{\ast } \), \( {x}_2^{\ast } \)) is the coordinate of the sampling center x*, which is obtained by interpolating linearly from the mean vector μ to the design point xD in each iteration, i.e.,

Bucher’s sampling scheme (Bucher and Bourgund 1990)

The least squares method is applied for obtaining the regression coefficients. The readers are referred to (Bucher and Bourgund 1990; Rajashekhar and Ellingwood 1993) for more information on the technique details of the PRS-based approach for structural reliability analysis. The regression coefficients of the PRS-based LFRM for the limit state functions Gδ and Gσ are shown in Table 3.

4.2.2 LFRM based on ANN

As a comparison, another low fidelity reliability model (LFRM) is built by exploiting the relationship between the responses of the limit state function Gd and Gs and the input variables using artificial neural networks (ANN) (Klopf 1988; Murata et al. 1994). As a comprehensive machine learning tool, ANN-based regression has been gaining significant amount of attention in various engineering applications for model building. There are many training algorithms that can be used in ANN for building surrogates based on data from a high fidelity model, e.g., the gradient decent, Newton’s methods, scaled conjugate gradients, Bayesian regularization, and Levenberg-Marquardt algorithms (Bishop 1995; More 1977). The accuracy of these surrogates depends on the adequacy of training samples, the function form of the high fidelity model, and the settings of network. In this paper, the neural network regression is applied for limit state response predictions by training data generated from the HFRM. The form of the ANN-based LFRM model is written as follows:

where εann is the ANN model error that follows a zero mean Gaussian distribution, and the standard deviation of the distribution is the root mean squared error (RMSE).

A Latin Hypercube design with 10,000 samples of all input variables are generated and evaluated through the finite element model for obtaining the data source of the learning process. To determine the LFRM with the highest predictive power, a test set of 3000 data are separated from the training set (7000 data points). The fitted models will simulate at the input settings of the 3000 test sets after the training for demonstrating their performance. As the test set has no effect on training, the result of which will provide an independent measure of the predictive capability of the fitted models. Three types of parameter optimization algorithms are applied to training data set, i.e., the Bayesian regularization, Scaled conjugate gradient, and the Levenberg-Marquardt. Also, change in the structure settings of the neural networks will lead to different training results. Among the three algorithms with different neural network settings that have been tested, the Levenberg-Marquardt algorithm-based ANN (More 1977) with 2 hidden neurons produces the best training result. Therefore, it is selected as the low fidelity reliability model for the crane structure. The results for predicting the test data set are shown in Figs. 8 and 9, the RMSE is 8.90 × 10−3 for Gδ and 3.32 × 10−5 for Gσ respectively, and the coefficient of determination R between the predicted and actual response of the limit state response is 0.99982 for Gδ and 0.9998 for Gσ , which indicates a nearly perfect fit.

It should be noted that the selection of training algorithms and ANN structures can be different from one problem to the other. In order to obtain a desired LFRM for representing the HFRM, it is suggested to perform a group of training activities with different optimization algorithms and ANN structures until the training result reaches satisfaction.

4.3 Validation of the two LFRMs against extra observations from HFRM under uncertainty

In order to further assess the predictive capability of the two LFRMs in presence of input uncertainty, the area metric and u-pooling metric are employed for the validation assessment of the LFRMs. The validation data set is composed of 300 extra data points that randomly generated from the HFRM for Gδ and Gσ respectively.

4.3.1 Single response validation of the LFRMs by area metric

The area metric, which was originally suggested by Ferson et al. (2008), aims at measuring the disagreement between the complete distribution of the predictions and observations through a Minkowski-L1 distance (Rodrigues 2018), which can be expressed by the following:

where y is the model or system output and Fp(y) is the cumulative distribution function (CDF) of predictions by the model under validation. \( {S}_n^o(y) \) is the empirical cumulative distribution function (ECDF) of the observations from real physical experiment or models with higher fidelity, in which n denotes for the number of observations. The Minkowsiki-L1 distance \( d\left({F}^p,{S}_n^o\right) \) calculates the area difference between the CDF of the model prediction and ECDF of the observations. For a determined amount of data size n, a small area difference would indicate a better agreement between the model predictions and observations.

In this study, area metric in (11) is used for measuring the disagreement between the cumulative failure probability function of the LFRMs and the HFRM, i.e.,

The 300 validation data points are compared against with the simulations from the two LFRMs based on PRS and ANN. Figure 10 provides a graphical form of the area metric to the limit state of displacement Gδ , in which the cumulative failure probability function evaluated by LFRMs is compared with the ECDF of the 300 validation data for Gδ. The graphical comparison of the area metric between the PRS/ANN-based LFRM and the HFRM for Gσ is illustrated in Fig. 11. In both figures, the failure CDF predicted by PRS-based LFRM is shown by blue-shaded area, while the CDF of failure predicted by ANN-based LFRM is shown in purple-shaded area. The solid black curve stands for the ECDF of the validation data, and the dashed yellow curves are the 95% confidence bound of the empirical CDF. For both Gδ and Gσ , the failure CDFs from the two LFRMs are quite close to the ECDF of validation data from HFRM, and the CDF of failure predicted by ANN is almost overlap with the ECDF curve of validation. This indicates that the predictive capability of the ANN-based LFRM is better than PRS-based LFRM. Therefore, the reliability assessment by ANN-based LFRM is expected to be more accurate than that of PRS-based LFRM.

The area metric provides a straight forward way for comparing simulations of surrogate models with observations from high fidelity models. It compares the entire distributions of the model predictions and observations to draw a conclusion about the predictive capability of a model. The area metric is also applicable when only a few observation data points are available. However, it can only deal with single response problems at a single-input setting. For models with multiple responses, the area metric will independently yield multiple validation results for all the responses. This will cause problems when it comes to make decisions about the acceptance or reject a model because these results of area metric might lead to different conclusions that conflict with each other. Another issue about area metric is that it is not designed in universal scale; the metric value highly depends on the measurement unit of the response, which is a bit inconvenient for setting a model acceptance threshold.

4.3.2 Aggregated multiple response validation of the LFRMs by u-pooling metric

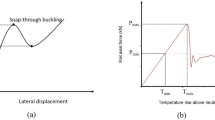

The u-pooling metric was proposed based upon the idea of the probability integral transformation (PIT) by Ferson et al. to provide an overall validation assessment for models with dynamic or multiple response by pulling data from multiple validation sites into a single aggregated measure (Ferson et al. 2008; Li et al. 2016). The underlying principle behind the u-pooling method is that the probability integral transform of any one dimensional continuous probability distribution is a standard uniform distribution (Li et al. 2014; Angus 1994). Assuming that the accuracy of a predictive model perfectly matches with the response of the system in reality, or in this case, a high fidelity model, then the observation data from physical experiment or high fidelity model resembling the random realizations of output from the predictive model. Consequently, substituting the validation data into the corresponding CDF of the predictive model will produce samples from the population of a standard uniform distribution. Therefore, the ECDF of these samples converges to the CDF of the standard uniform distribution as n increases. However, in most cases, a predictive model is imperfect. Hence, the produced samples will form a different ECDF compared with the CDF of the standard uniform distribution.

The transformation behind the u-pooling method for a model with three responses is illustrated in Fig. 12. As shown by the picture in the right hand side, the marginal CDFs of the three responses are denoted by \( {F}_1^p\left(\cdot \right),{F}_2^p\left(\cdot \right)\ \mathrm{and}\ {F}_3^p\left(\cdot \right) \)respectively, and there is one observation datum for each response, i.e., \( {y}^o=\left\{{y}_1^o,{y}_2^o,{y}_3^o\right\} \). Each observed datum is transformed by the PIT according to the corresponding CDF into a u value as shown in the picture in the left, i.e.,

Illustration of PIT of the u-pooling metric for a model with three responses (Li et al. 2014)

The ECDF (step function) of all the u values and the CDF of the standard uniform distribution are plotted in the left picture. The shaded areas indicate the amount of difference between the ECDF of the transformed observations and the transformed CDFs of the model predictions. Therefore, the summation of all shaded areas yields the metric value of the u-pooling method for models with multiple responses. The mathematical form for the u-pooling metric is expressed by the Minkowski-L1 distance between the ECDF of the u values and the CDF of the standard uniform distribution, i.e.,

The validation result by the u-pooling metric in (14) for any problem belongs to the interval of [0, 0.5]. The metric value of 0 suggests the predictive model perfectly agrees with the validation data, while the metric value of 0.5 indicates the worst match between the two.

The 300 observations from HFRM for Gδ and Gσ are converted via PIT corresponding to the related failure CDF of the two responses from the LFRMs respectively. The ECDF of the transformed data sets are plotted together with the CDF of the standard uniform distribution in Fig. 13 to illustrate the overall predictive capability of the LFRMs by taking into account the two responses simultaneously. In the picture, the dashed black line represents the CDF of the standard uniform distribution. The solid blue curve is the ECDF of the validation data transformed by the LFRM based on PRS method, and the 95% confidence interval of which is illustrated by the shaded area in light blue. The solid purple curve is the ECDF of the validation data transformed by the LFRM based on ANN regression, and the 95% confidence interval of which is shown by the shaded area in light blue. The solid blue curve by PRS method is close to the dashed black line for the CDF of the standard uniform distribution at the beginning, but a little off as the u value increases. The left half of the dashed black line is covered by the 95% confidence interval, but the rest of it is not. The solid purple curve by the ANN-based method is quite close to the dashed black line, and the confidence interval of which covers all of the dashed black line expect for a small region around the u value of 0.8 to 0.9. The metric value is 0.0695 for the PRS-based LFRM, and 0.0179 for the ANN-based LFRM, which are both small values for a u-pooling metric. This indicates that both models are acceptable. And as a comparison, the predictive capability of the ANN-based LFRM is superior than that of the PRS-based LFRM as the metric value of which is smaller. The results of u-poling again build confidence in employing the surrogate models for reliability assessment.

5 Phase III: reliability assessment

In this phase, the Monte Carlo simulation (MCS) is applied to the HFRM and the two LFRMs built by surrogate modeling techniques for the propagation of uncertainty in the random input variables to the limit state functions. The MCS-based reliability method is a classical sampling-based approach that depends on random samples from the joint distribution of the input variables to estimate the probability of failure Pf. Built upon the law of large numbers (LLN) in probability theory, the estimation of Pf by MCS will converge towards the exact solution of the probability of failure as the number of samples approaches infinite. The applicability of the MCS method is versatile compared with other reliability approaches, which has no restrictions to the form of a limit state function. For problems of different degrees of nonlinearity, dimensions and function types, as long as the sampling size is large enough, the results generated by MCS are credible. For this reason, the results of which are often used as references for demonstrating the performance of alternative approaches.

A comparison of the final reliability assessment results by MCS for the high fidelity reliability model (HFRM) and the two low fidelity reliability models (LFRM) is shown in Table 4. A total of 105 samples from the HFRM are generated for estimating the probability of failure Pf, which costs 105 runs of the finite element model (FEM). For the limit state function of the displacement Gδ , 21 samples are found in the failure region. Therefore, the corresponding reliability is 0.99979. On the other hand, for the limit state function of the stress Gσ, none of the samples falls in the failure region. This yields the result of zero Pf and reliability of one. However, it does not indicate that the structure performance is absolutely reliable. The truth is that the failure probability associated with Gσ is so small that 105 samples are not sufficient enough for the estimations of such a small Pf. For the LFRM based on the PRS method, it costs 8748 runs of the FEM for generating the response surfaces for Gδ and Gσ , while for the LRFM based on ANN, it costs 7000 runs for training the neural network. Five million MCS samples are evaluated via the two LFRMs respectively for the estimation of Pf. The reliability results of both LFRMs are both quite accurate compared with that of the HFRM. Between the two LFRMs, the precision of ANN-based LFRM is superior than that of the PRS-based LFRM, which matches with the results of area metric and u-pooling metric in Section 4.3.

It is worth noticing that for structural systems that usually have very small probability of failure, performing repeated simulations directly on high fidelity models is super computationally extensive. And the computational cost reduced significantly by introducing the LFRMs to structural reliability assessment. The CPU hours spent by the LFRMs are only 7% and 8.74% of that spent by the HFRM; this means the two LFRMs make 93% and 91.26% savings respectively in computational cost. The reliability results of the two LFRMs are quite consistent. Computational cost of the ANN-based LFRM is slightly lower than that of the PRS-based LFRM. Furthermore, considering that the validation assessment shows that the ANN-based LFRM matches better with the HFRM than PRS-based LFRM, the ANN-based LFRM is more recommended for the container crane structure in this paper.

6 Conclusion

In order to guide the reliability assessment of container crane structures in a scientific and organized way, a framework for incorporating model validation, surrogate model building, and efficient reliability assessment of complex engineering structures is presented in this paper. Three phases of the proposed framework are demonstrated by considering a typical type of quayside container crane at Yangshan deep-water port, Shanghai. In phase I, a parameterized FEM is built by combing ANSYS APDL with MATLAB codes for repeated simulations the quantities of interest, i.e., the nodal displacement and stress. Two sets of full-scale experimental data are collected for each response to the validation assessment of the parameterized FEM. In phase II, a high fidelity reliability model (HFRM) of the crane structure is established by integrating the parameterized FEM, the failure criteria of the structure, and uncertainty information about the input variables. Two low fidelity reliability models (LFRMs) are built using surrogate modeling techniques based on the polynomial response surface (PRS) and artificial neural networks (ANN). The predictive capability of the two LFRMs under uncertainty is assessed by area metric and u-pooling metric respectively using extra observations from the HFRM. Results show that the predictive performance of ANN-based LFRM is better than that of the PRS-based LFRM. Finally in phase III, Monte Carlo simulation (MCS) is performed on the two LFRMs for the probability of failure estimation of the container crane. As a comparison, the reliability assessment based on HFRM is also conducted via MCS. The credibility of the two LFRMs is further demonstrated by their consistent results on reliability estimations. The computational cost of reliability assessment is reduced significantly by using the LFRMs instead of HFRM, which further proves the benefit of efficiency via the proposed framework for reliability assessment of container crane structures and other analogous engineering structures.

References

Alyami H, Yang Z, Riahi R, Bonsall S, Wang J (2019) Advanced uncertainty modelling for container port risk analysis. Accid Anal Prev 123:411–421

Angus JE (1994) The probability integral transform and related results. SIAM Rev 36:652–654

ASME (2006) Guide for verification and validation in computational solid mechanics, V&V 10–2006. ASME, New York

Azeloglu O, Edinçliler A, Sagirli A (2014) Investigation of seismic behavior of container crane structures by shake table tests and mathematical modeling. Shock Vib 2014:1–9

Bayarri MJ, Berger JO, Rui P, Sacks J, Cafeo JA, Cavendish J, Lin C-H, Tu J (2007) A framework for validation of computer models. Technometrics 49:138–154

Bishop CM (1995) Neural networks for pattern recognition, Oxford University Press

Box GEP (2008) Response surfaces, mixtures and ridge analyses. J Am Stat Assoc 103:888–897

Bucher CG, Bourgund U (1990) A fast and efficient response surface approach for structural reliability problems. Struct Saf 7:57–66

Der Kiureghian A (1996) Structural reliability methods for seismic safety assessment: a review. Eng Struct 18:412–424

Der Kiureghian A, Dakessian T (1998) Multiple design points in first and second-order reliability. Struct Saf 20:37–49

Ditlevsen O, Madsen HO (1996) Structural reliability methods, 1st edn. Wiley, New York

Ender TR, Balestrini-Robinson S (2015) Surrogate modeling, modeling and simulation in the systems engineering life cycle: core concepts and accompanying lectures. Springer, London, pp 201–216

Engelund S, Rackwitz R (1993) A benchmark study on importance sampling techniques in structural reliability. Struct Saf 12:255–276

Evans D (1972) An application of numerical integration techniques to statistical tolerancing, II—A note on the error. Technometrics 13:315–324

Ferson S, Oberkampf WL (2009) Validation of imprecise probability models. Int J Reliab Saf 3:3–22

Ferson S, Oberkampf WL, Ginzburg L (2008) Model validation and predictive capability for the thermal challenge problem. Comput Methods Appl Mech Eng 197:2408–2430

Ghanem RG, Spanos PD (1991) Stochastic finite elements: a spectral approach. Springer, Berlin

Gunst RF, Myers RH, Montgomery DC (2016) Response surface methodology: process and product optimization using designed experiments, 4th edn. Wiley, New York

Higdon D, Kennedy M, Cavendish JC, Cafeo JA, Ryne RD (2004) Combining field data and computer simulations for calibration and prediction. SIAM J Sci Comput 26:448–466

Hills RG, Trucano TG (2001) Statistical validation of engineering and scientific models with application to CTH, Sandia National Laboratories

Jackson PS (1982) A second-order moments method for uncertainty analysis. IEEE Trans Reliab:382–384

Johnson NL, Kotz S, Balakrishna N (1994) Continuous univariate distributions, 2nd edn. Wiley-Interscience, New York

Kennedy MC, O'Hagan A (2001) Bayesian calibration of computer models. J R Stat Soc 63:425–464

Klopf A (1988) The drive-reinforcement neuronal model: a real-time learning mechanism for unsupervised learning, SPIE. Proceedings of the SPIE, United States, pp 119–121

Lee SH, Chen W (2009) A comparative study of uncertainty propagation methods for black-box-type problems. Struct Multidiscip Optim 37:239–253

Li W, Chen W, Jiang Z, Lu Z, Liu Y (2014) New validation metrics for models with multiple correlated responses. Reliability Engineering & System Safety 127:1–11

Li W, Chen S, Jiang Z, Apley DW, Lu Z, Chen W (2016) Integrating Bayesian calibration, bias correction, and machine learning for the 2014 Sandia Verification and Validation Challenge Problem, Journal of Verification. Validation and Uncertainty Quantification 1:1–18

Liu Y, Chen W, Arendt P, Huang H-Z (2011) Toward a better understanding of model validation metrics. J Mech Des 133:071005–071018

Madsen HO, Krenk S, Lind N (2006) Method of structural safety. Dover Publications, New York

Melchers RE (1989) Importance sampling in structural systems. Struct Saf 6:3–10

Montgomery DC (2012) Design and analysis of experiments, 9th edn. John Wiley & Sons, New York

More JJ (1977) The Levenberg- Marquardt algorithm: implementation and theory. Numerical Analysis:105–106

Murata N, Yoshizawa S, Amari SI (1994) Network information criterion-determining the number of hidden units for an artificial neural network model. IEEE Trans Neural Netw 5:865–872

Oberkampf WL, Barone MF (2006) Measures of agreement between computation and experiment: validation metrics. J Comput Phys 217:5–36

Oberkampf WL, Sindir M, Conlisk A (1998) Guide for the verification and validation of computational fluid dynamics simulations, American Institute of Aeronautics and Astronautics, AIAA

Oberkampf WL, Trucano TG, Hirsch C (2004) Verification, validation, and predictive capability in computational engineering and physics. Appl Mech Rev 57:345–384

Rahman S, Xu H (2004) A univariate dimension-reduction method for multi-dimensional integration in stochastic mechanics. Probabilistic Engineering Mechanics 19:393–408

Rajashekhar MR, Ellingwood BR (1993) A new look at the response surface approach for reliability analysis. Struct Saf 12:205–220

C.E. Rasmussen, Gaussian processes for machine learning, (2006)

Rebba R, Mahadevan S (2006) Validation of models with multivariate output. Reliability Engineering & System Safety 91:861–871

Rebba R, Huang S, Liu Y, Mahadevan S (2006) Statistical validation of simulation models. Int J Mater Prod Technol 25:164–181

Rodrigues ÉO (2018) Combining Minkowski and Cheyshev: new distance proposal and survey of distance metrics using k-nearest neighbours classifier. Pattern Recogn Lett 110:66–71

Sang HL, Kwak BM (2006) Response surface augmented moment method for efficient reliability analysis. Struct Saf 28:261–272

Seo HS, Kwak BM (2002) Efficient statistical tolerance analysis for general distribution using three-point information. Int J Prod Res 40:931–944

Soderberg E, Jordan M (2007) Seismic response of jumbo container cranes and design recommendations to limit damage and prevent collapse, American Society of Civil Engineers 11th triennial international conference on ports. San Diego, California, United States, pp 1–10

Sornette D, Davis A, Ide K, Vixie K, Pisarenko V, Kamm J (2007) Algorithm for model validation: theory and applications. Proc Natl Acad Sci 104:6562–6567

Xiu D, Karniadakis GE (2003) Modeling uncertainty in flow simulations via generalized polynomial chaos. J Comput Phys 187:137–167

Xu H, Rahman S (2010) A generalized dimension-reduction method for multidimensional integration in stochastic mechanics. Int J Numer Methods Eng 61:1992–2019

Zrnic N, Petković Z, Bošnjak S (2005) Automation of ship-to-shore container cranes: a review of state-of-the-art. FME Transactions 33:111–121

Funding

This work is supported by the National Natural Science Foundation of China (under Grant 51605279), the “Chenguang Program” supported by Shanghai Education Development Foundation and Shanghai Municipal Education Commission (No. 16CG54), and Opening Funding Supported by the Key Laboratory of Road Structure & Material Ministry of Transport (Research Institute of Highway Ministry of Transport).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

Unfortunately, the FEM and data of the crane structure are restricted and unable to share.

Additional information

Responsible Editor: Somanath Nagendra

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, W., Quan, L., Hu, X. et al. A comprehensive framework for model validation and reliability assessment of container crane structures. Struct Multidisc Optim 62, 2817–2832 (2020). https://doi.org/10.1007/s00158-020-02637-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-020-02637-w