Abstract

The classical ARCH model together with its extensions has been widely applied in the modeling of financial time series. We study a variant of the ARCH model that takes account of liquidity given by a positive stationary process. We provide minimal assumptions that ensure the existence and uniqueness of the stationary solution for this model. Moreover, we give necessary and sufficient conditions for the existence of the autocovariance function. After that, we derive an AR(1) characterization for the stationary solution yielding Yule–Walker type quadratic equations for the model parameters. In order to define a proper estimation method for the model, we first show that the autocovariance estimators of the stationary solution are consistent under relatively mild assumptions. Consequently, we prove that the natural estimators arising out of the quadratic equations inherit consistency from the autocovariance estimators. Finally, we illustrate our results with several examples and a simulation study.

1 Introduction

The ARCH and GARCH models have become important tools in time series analysis. The ARCH model was introduced in Engle (1982) and was then generalized to the GARCH model by Bollerslev (1986). Since then, a large collection of variants and extensions of these models has been produced by many authors. See for example Bollerslev (2008) for a glossary of models derived from ARCH and GARCH. As related work, we also mention Han (2013), where a GARCH-X model with liquidity arising from a certain fractional ARMA process is considered.

In this work, we also focus on a generalization of the ARCH model, namely the model (1). Our contribution proposes to include in the expression of the squared volatility \(\sigma _{t} ^{2} \) a factor \(L_{t-1}\), which we will call liquidity. The motivation to consider such a model comes from mathematical finance, where the factor \(L_{t}\), which constitutes a proxy for the trading volume at day t, has been included in order to capture the fluctuations of the intra-day price in financial markets. A more detailed explanation can be found in Bahamonde et al. (2018) or Tudor and Tudor (2014). In Bahamonde et al. (2018) we considered the particular case when \(L_{t}\) is the squared increment of the fractional Brownian motion (fBm in the sequel), i.e. \(L_{t}= (B ^{H}_{t+1} -B^{H}_{t}) ^{2} \), where \(B ^{H}\) is an fBm with Hurst parameter \( H\in (0,1)\).

In this work, our purpose is twofold. Firstly, we extend the ARCH model with fBm liquidity of Bahamonde et al. (2018) by considering, as a proxy for the liquidity, a general positive (strictly stationary) process \((L_{t}) _{t\in {\mathbb {Z}}}\). This includes, besides the above-mentioned case of the squared increment of the fBm, many other examples.

The second purpose is to provide a method to estimate the parameters of the model. As mentioned in Bahamonde et al. (2018), in the case when L is a process without independent increments, the usual approaches for the parameter estimation in ARCH models (such as the least squares method and the maximum likelihood method) do not work, in the sense that the estimators obtained by these classical methods are biased and not consistent. Here we adopt a different technique, based on the AR(1) characterization of the ARCH process, which has also been used in Voutilainen et al. (2017). The AR(1) characterization leads to Yule–Walker type equations for the parameters of the model. These equations are quadratic, so we are able to find explicit formulas for the estimators. We prove that the estimators are consistent by using an extended version of the law of large numbers and by assuming enough regularity for the correlation structure of the liquidity process. We also provide a numerical analysis of the estimators.

The rest of the paper is organised as follows. In Sect. 2 we introduce our model and discuss the existence and uniqueness of the solution. We also provide necessary and sufficient conditions for the existence of the autocovariance function. We derive the AR(1) characterization and Yule–Walker type equations for the parameters of the model. Section 3 is devoted to the estimation of the model parameters. We construct estimators in a closed form and we prove their consistency via extended versions of the law of large numbers and a control of the behaviour of the covariance of the liquidity process. Several examples are discussed in detail. In particular, we study squared increments of the fBm, squared increments of the compensated Poisson process, and the squared increments of the Rosenblatt process. We end the paper with a numerical analysis of our estimators. All the proofs and auxiliary lemmas are postponed to the appendix.

2 The model

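Our variant of the ARCH model is defined for every \(t\in {\mathbb {Z}}\) as

\[ X_t = \sigma _t \epsilon _t, \qquad \sigma _t^2 = \alpha _0 + \alpha _1 X_{t-1}^2 + l_1 L_{t-1}, \tag{1} \]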

where \(\alpha _0\ge 0\), \(\alpha _1,l_1>0\), and \((\epsilon _t)_{t\in {\mathbb {Z}}}\) is an i.i.d. process with \({\mathbb {E}}(\epsilon _0) = 0\) and \({\mathbb {E}}(\epsilon _0^2)=1\). Moreover, we assume that \((L_t)_{t\in {\mathbb {Z}}}\) is a positive process and independent of \((\epsilon _t)_{t\in {\mathbb {Z}}}\).

Remark 1

By setting \(L_{t-1} = \sigma ^2_{t-1}\) in (1) we would obtain the defining equations of the classical GARCH(1, 1) process. However, in this case, the processes L and \(\epsilon \) would not be independent of each other.

In Sect. 3, in the estimation of the model parameters, we further assume that \((L_t)_{t\in {\mathbb {Z}}}\) is strictly stationary with \({\mathbb {E}}(L_0) = 1\). However, we first give sufficient conditions to ensure the existence of a solution in a general setting where L is only assumed to be bounded in \(L^2\). This allows one to introduce ARCH models that are not based on stationarity.

Note that we have a recursion

\[ \sigma _t^2 = \alpha _0 + \alpha _1 \epsilon _{t-1}^2 \sigma _{t-1}^2 + l_1 L_{t-1}. \tag{2} \]

Let us denote

Using (2) \(k+1\) times we get

with the convention \(\prod _0^ {-1} = 1\).
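The unrolling of the recursion can be checked numerically. The following Python sketch assumes that the recursion has the affine form \(\sigma _{t+1}^2 = A_t \sigma _t^2 + B_t\) with \(A_t = \alpha _1 \epsilon _t^2\) and \(B_t = \alpha _0 + l_1 L_t\). The names `A` and `B`, the exponential liquidity and the parameter values are illustrative assumptions, not necessarily the notation of the text.

```python
import numpy as np

def sigma2_recursive(sigma2_start, A, B):
    """Iterate s -> A[m] * s + B[m] over the arrays, oldest time point first."""
    s = sigma2_start
    for a, b in zip(A, B):
        s = a * s + b
    return s

def sigma2_unrolled(sigma2_start, A, B):
    """Same quantity written as (product of all A) * start + weighted sum of B."""
    # Reverse so that index i = 0 refers to the most recent time point.
    A_rev, B_rev = A[::-1], B[::-1]
    # partial[i] = prod_{j=0}^{i-1} A_rev[j]; the empty product (i = 0) equals 1.
    partial = np.concatenate(([1.0], np.cumprod(A_rev)))
    return partial[-1] * sigma2_start + np.sum(partial[:-1] * B_rev)

rng = np.random.default_rng(0)
alpha0, alpha1, l1 = 1.0, 0.1, 0.5      # illustrative parameter values
k = 20                                   # the recursion is applied k + 1 times
eps = rng.standard_normal(k + 1)         # i.i.d. innovations
L = rng.exponential(1.0, size=k + 1)     # hypothetical positive liquidity, mean 1
A = alpha1 * eps**2
B = alpha0 + l1 * L

r = sigma2_recursive(1.7, A, B)
u = sigma2_unrolled(1.7, A, B)
print(r, u)
```

Both functions return the same value up to floating-point error, which is the content of applying the recursion \(k+1\) times with the empty-product convention.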

2.1 Existence and uniqueness of the solution

In the case of strictly stationary L, the existence and uniqueness of the solution is studied in Brandt (1986) and Karlsen (1990). However, in order to allow flexibility and non-stationarity in our class of ARCH models, we present a general existence and uniqueness result. Furthermore, our result allows one to consider only weakly stationary sequences. In addition, our proof is based on a different technique, adapted from Bahamonde et al. (2018).

We start with the following theorem providing the existence and uniqueness of a solution under relatively weak assumptions: we only assume the boundedness of L in \(L^2\) and the usual condition \(\alpha _{1} <1 \) [see e.g. Francq and Zakoian (2010)].

Theorem 1

Assume that \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}(L_t^ 2) < \infty \) and \(\alpha _1< 1\). Then (1) has the following solution

Moreover, the solution is unique in the class of processes satisfying \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}(\sigma _t^2) < \infty \).

The following result provides us a strictly stationary solution provided that L is strictly stationary. While the result is a special case of Karlsen (1990), for the reader’s convenience we present a different proof that can be applied to the case of weak stationarity as well (see Corollary 2).

Corollary 1

Let \(\alpha _1 < 1\). If L is strictly stationary with \({\mathbb {E}}(L_0^2) < \infty \), then the unique solution (4) is strictly stationary.

In the sequel, we consider a strictly or weakly stationary liquidity \((L_t)_{t\in {\mathbb {Z}}}\) and the corresponding unique solution given by (4). Therefore, we will implicitly assume that

In order to study the covariance function or weak stationarity of the solution (4), we require that the moments \({\mathbb {E}}(\sigma _t^4)\) exist. Necessary and sufficient conditions for this are given in the following lemma.

Lemma 1

Suppose \({\mathbb {E}}(\epsilon _0^4) < \infty \) and L is strictly stationary. Then \({\mathbb {E}}(\sigma ^4_0) < \infty \) if and only if \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^4)}}\).

Remark 2

As expected, in order to have finite moments of higher order we need to impose a more restrictive assumption \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^4)}} \le 1\), where the last inequality follows from Jensen's inequality since \({\mathbb {E}}(\epsilon _0^2) = 1\). For example, in the case of Gaussian innovations we obtain the well-known condition \(\alpha _1 < \frac{1}{\sqrt{3}}\) [see e.g. Francq and Zakoian (2010) or Lindner (2009)]. An explicit expression of the fourth moment can be obtained when L is the squared increment of fBm [see Lemma 4 in Bahamonde et al. (2018)].
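As a small worked example, the moment bounds on \(\alpha _1\) can be evaluated for standard normal innovations. The Python sketch below uses the double-factorial formula for even Gaussian moments. The function names are hypothetical, and the eighth-moment bound is the analogous condition that appears later in Lemma 3.

```python
from math import prod

def gaussian_even_moment(p):
    """E(Z^p) for a standard normal Z and even p, i.e. (p-1)!! = 1*3*...*(p-1)."""
    if p < 0 or p % 2 != 0:
        raise ValueError("p must be a non-negative even integer")
    return prod(range(1, p, 2))

def alpha1_bound(moment, root):
    """Upper bound moment**(-1/root) on alpha_1."""
    return moment ** (-1.0 / root)

m4 = gaussian_even_moment(4)           # fourth moment of N(0, 1)
m8 = gaussian_even_moment(8)           # eighth moment of N(0, 1)
bound_fourth = alpha1_bound(m4, 2)     # 1 / sqrt(E eps^4)
bound_eighth = alpha1_bound(m8, 4)     # 1 / (E eps^8)^(1/4)
print(m4, m8, bound_fourth, bound_eighth)
```

For other innovation distributions only the moment values change; the bounds are computed in the same way.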

We end this section with the following result similar to Corollary 1 on the existence of weakly stationary solutions.

Corollary 2

Suppose that \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^4)}}\) and L is weakly stationary. Then the unique solution (4) is weakly stationary.

2.2 Computation of the model parameters

In this section we consider the stationary solution and compute the parameters \(\alpha _{0}\), \(\alpha _{1}\), \(l_{1}\) in (1) by using the autocovariance functions of \(X^{2}\) and L. To this end, we use an AR(1) characterization of the ARCH process. From this characterization, we derive, using an idea from Voutilainen et al. (2017), a Yule–Walker equation of quadratic form for the parameters, that we can solve explicitly. This constitutes the basis of the construction of the estimators in the next section. From (1) it follows that if \((\sigma ^2_t)_{t\in {\mathbb {Z}}}\) is stationary, then so is \((X^2_t)_{t\in {\mathbb {Z}}}\). In addition

Now

and hence

Let us define an auxiliary process \((Y_t)_{t\in {\mathbb {Z}}}\) by

Now Y is a zero-mean stationary process satisfying

By denoting

we may write

corresponding to the AR(1) characterization (Voutilainen et al. 2017) of \(Y_t\) for \(0<\alpha _1 <1\).
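For orientation, the AR(1) structure can be sketched in a few lines. The derivation below is a sketch under the assumptions that model (1) reads \(X_t = \sigma _t\epsilon _t\) with \(\sigma _t^2 = \alpha _0 + \alpha _1 X_{t-1}^2 + l_1 L_{t-1}\), that \({\mathbb {E}}(L_0) = 1\), and that the solution is stationary. The symbol \(\eta _t\) for the noise term is introduced here for illustration only and need not agree with the notation of the text.

```latex
\begin{align*}
X_t^2 &= \sigma_t^2 \epsilon_t^2
       = \alpha_0 + \alpha_1 X_{t-1}^2 + l_1 L_{t-1} + \sigma_t^2(\epsilon_t^2 - 1), \\
\mu := \mathbb{E}(X_t^2) &= \alpha_0 + \alpha_1 \mu + l_1
       \quad\Longrightarrow\quad \mu = \frac{\alpha_0 + l_1}{1 - \alpha_1}, \\
Y_t := X_t^2 - \mu &= \alpha_1 Y_{t-1} + \eta_t,
       \qquad \eta_t = l_1 (L_{t-1} - 1) + \sigma_t^2(\epsilon_t^2 - 1).
\end{align*}
```

The second line uses that \(\epsilon _t\) is independent of \(\sigma _t^2\) with \({\mathbb {E}}(\epsilon _t^2) = 1\), and the third line uses \(\alpha _0 - (1-\alpha _1)\mu = -l_1\). The noise \(\eta _t\) has zero mean but is in general correlated through the liquidity term.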

In what follows, we denote the autocovariance functions of \(X^2\) and L by \(\gamma (n)={\mathbb {E}} (X_{t} ^{2} X_{t+n} ^{2}) - ( \frac{\alpha _0 + l_1}{1-\alpha _1})^2\) and \(s(n)= {\mathbb {E}} (L_{t} L_{t+n} ) - 1\) respectively.

Lemma 2

Suppose \({\mathbb {E}}(\epsilon _0^4) < \infty \) and \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^4)}}\). Then for any \(n\ne 0\) we have

and for \(n=0\) it holds that

Now, let first \(n\in {\mathbb {Z}}\) with \(n\ne 0\). Then

From the first equation we get

Substitution to (6) yields

Let us denote \({\pmb {\gamma }}_0 = [\gamma (n+1), \gamma (n), \gamma (n-1), \gamma (1), \gamma (0), {\mathbb {E}}(X_0^4)]\) and

Assuming that \(a_0({\pmb {\gamma }}_0) \ne 0\) we have the following solutions for the model parameters \(\alpha _1\) and \(l_1\):

and

Finally, denoting \(\mu = {\mathbb {E}}(X_0^2)\) and using (5) we may write

Now, let \(n_1, n_2 \in {\mathbb {Z}}\) with \(n_1\ne n_2\) and \(n_1,n_2\ne 0\). Then

Assuming that \(n_2\) is chosen in such a way that \(s(n_2) \ne 0\) we have

Substitution to (11) yields

Let us denote \({\pmb {\gamma }}= [\gamma (n_1+1), \gamma (n_2+1), \gamma (n_1), \gamma (n_2), \gamma (n_1-1), \gamma (n_2-1)]\) and

Assuming \(a({\pmb {\gamma }}) \ne 0\) we obtain the following solutions for the model parameters \(\alpha _1\) and \(l_1\):

and

Again, \(\alpha _0\) is given by

Remark 3

Note that here we assumed \(s(n_2)\ne 0\) and \(a({\pmb {\gamma }}) \ne 0\), which means that we choose \(n_1,n_2\) in a suitable way. Notice, however, that these assumptions are not a restriction. Firstly, the case where \(s(n_2)=0\) for all \(n_2\ne 0\) corresponds to the simpler case where L is a sequence of uncorrelated random variables. Secondly, if \(s(n_2)\ne 0\) and \(a({\pmb {\gamma }})=0\), the second order term vanishes and we get a linear equation for \(\alpha _1\). For a detailed discussion of this phenomenon, we refer to Voutilainen et al. (2017).

Remark 4

At first glance Eqs. (8) and (13) may seem useless as one needs to choose between signs. However, it usually suffices to know additional values of the covariance of the noise [see Voutilainen et al. (2017)]. In particular, it suffices that \(s(n) \rightarrow 0\) [see Voutilainen et al. (2019)].

3 Parameter estimation

In this section we discuss how to estimate the model parameters consistently from the observations under some knowledge of the covariance of the liquidity L. At this point we simply assume that the covariance structure of L is completely known. However, this is not necessary, as discussed in Remarks 5 and 6. As mentioned in the introduction, classical methods like maximum likelihood (MLE) or least squares (LSE) may fail in the presence of memory. Indeed, while MLE is in many cases preferable, it requires knowledge of the distributions so that the likelihood function can be computed. In comparison, our method only requires a certain kind of asymptotic independence for the process L in terms of third and fourth order covariances (see Lemma 3). Unlike MLE, the LSE estimator does not require the distributions to be known. However, in our model it may fail to be consistent. Indeed, this happens already in the case of squared increments of the fractional Brownian motion (Bahamonde et al. 2018).

Based on formulas for the parameters provided in Sect. 2.2, it suffices that the covariances of \(X^2\) can be estimated consistently. For simplicity, we assume that the liquidity \((L_{t})_{t\in {\mathbb {Z}}}\) is a strictly stationary sequence. The main reason why we prefer to keep the assumption of strict stationarity is that it simplifies the third and fourth order assumptions of Lemma 3 and also because our main examples of liquidities are strictly stationary processes (see Sect. 3.3). Nevertheless, the results either hold directly or can be modified with only a little effort to cover the case of weakly stationary sequences. We leave the details to the reader.

3.1 Consistency of autocovariance estimators

Assume that \((X^2_1, X^2_2, \ldots ,X^2_N)\) is an observed series from \((X_t)_{t\in {\mathbb {Z}}}\) that is given by our model (1). We use the following estimator of the autocovariance function of \(X_t^2\)

where \(\bar{X^2}\) is the sample mean of the observations. We show that the estimator above is consistent in two steps. Namely, we consider the sample mean and the term

separately. If both terms are consistent, consistency of the autocovariance estimator follows. Furthermore, if this holds for the lags involved in Theorems 2 and 3, the corresponding model parameter estimators are also consistent.
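The two-step structure (sample mean plus a lagged product term) can be illustrated in code. The following Python sketch implements a standard sample autocovariance of the observed squares. The normalization by \(N\) is an assumption made here, since \(1/(N-n)\) is an equally common choice, and the function name is hypothetical.

```python
import numpy as np

def sample_autocov(x2, n):
    """Sample autocovariance at lag n of the observed series x2 = (X_1^2, ..., X_N^2).

    Assumed form: (1/N) * sum_{t=1}^{N-|n|} (x2_t - mean) * (x2_{t+|n|} - mean).
    """
    x2 = np.asarray(x2, dtype=float)
    N = x2.size
    n = abs(n)
    if n >= N:
        raise ValueError("lag must be smaller than the sample size")
    centered = x2 - x2.mean()            # subtract the sample mean
    return np.sum(centered[: N - n] * centered[n:]) / N

example = [1.0, 2.0, 3.0, 4.0]
g0 = sample_autocov(example, 0)
g1 = sample_autocov(example, 1)
print(g0, g1)
```

Expanding the product shows that the estimator is a function of the sample mean and of the averaged lagged products, which is why the two pieces can be treated separately.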

Lemma 3

Suppose \({\mathbb {E}}(L_0^ 4)<\infty \) and \({\mathbb {E}}(\epsilon _0^ 8) <\infty \). In addition, assume that for every fixed \(n, n_1\) and \(n_2\) it holds that \(\mathrm {Cov}(L_0,L_t)\rightarrow 0\), \(\mathrm {Cov}(L_0L_n, L_{\pm t}) \rightarrow 0\) and \(\mathrm {Cov}(L_0L_{n_1}, L_tL_{t+n_2})\rightarrow 0\) as \(t\rightarrow \infty \). If \(\alpha _1 < \frac{1}{{\mathbb {E}}(\epsilon _0^8)^{\frac{1}{4}}}\), then

converges in probability to \({\mathbb {E}}(X_0^2X_n^2)\) for every \(n\in {\mathbb {Z}}\).

The next lemma provides us the missing piece of consistency of the covariance estimators. It can be proven similarly as Lemma 3, but as it is less involved, we leave the proof to the reader.

Lemma 4

Suppose \({\mathbb {E}}(\epsilon _0^4) < \infty \) and \(\mathrm {Cov}(L_0, L_t) \rightarrow 0\) as \(t\rightarrow \infty \). If \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^ 4)}}\), then the sample mean

converges in probability to \({\mathbb {E}}(X_0^2)\).

Remark 5

As one would expect, the assumptions of Lemma 4 are implied by the assumptions of Lemma 3, which on the other hand are only sufficient for our purpose. Indeed, it suffices that \(Y_t = X_t^2 X^2_{t+n}\) is mean-ergodic for the relevant lags n (see Theorems 2 and 3, and Remark 6). This happens when the dependence within the stationary process Y vanishes sufficiently fast. The condition \(\alpha _1 < {\mathbb {E}}(\epsilon _0^8)^{-\frac{1}{4}}\) ensures the existence of an autocovariance function of Y. Furthermore, the assumptions made related to the asymptotic behavior of the covariances of L guarantee that the dependence structure of Y (measured by the autocovariance function) is such that the desired consistency of the autocovariance estimators follows. Finally, the assumptions related to the liquidity are very natural. Indeed, we only assume that the (linear) dependencies within the process \(L_t\) vanish over time. Examples of L satisfying the required assumptions can be found in Sect. 3.3.

3.2 Estimation of the model parameters

Set, for \(N\ge 1\),

and

where \(a_0({\pmb {\gamma }}_0)\), \(b_0({\pmb {\gamma }}_0)\) and \(c_0({\pmb {\gamma }}_0)\) are as in (7). In addition, let

and \({\hat{{\pmb {\xi }}}}_{0, N} = [{\hat{\pmb {\gamma }}_{0,N}}, {\hat{\mu }}_N]\) for some fixed \(n\ne 0\). The following estimators are motivated by (8), (9) and (10).

Definition 1

We define estimators \({\hat{\alpha }}_1\), \({\hat{l}}_1\) and \({\hat{\alpha }}_0\) for the model parameters \(\alpha _1\), \(l_1\) and \(\alpha _0\) respectively through

and

where \(n \ne 0\).

Theorem 2

Assume that \(a_0({\pmb {\gamma }}_0) \ne 0\) and \(g_0({\pmb {\gamma }}_0) > 0\). Let the assumptions of Lemma 3 prevail. Then \({\hat{\alpha }}_1, {\hat{l}}_1\) and \({\hat{\alpha }}_0\) given by (16), (17) and (18) are consistent.

Remark 6

In addition to the mean-ergodicity discussed in Remark 5, it suffices that the autocovariance function \(s(\cdot )\) of the liquidity L is known for the chosen lags 0 and n. Furthermore, if we can observe L, which is often the case, these quantities can also be estimated.

Let us denote

where \(a({\pmb {\gamma }})\) and \(b({\pmb {\gamma }})\) are as in (12). In addition, let

and \({\hat{{\pmb {\xi }}}}_N = [{\hat{\pmb {\gamma }}_N}, {\hat{\mu }}_N]\) for some fixed \(n_1,n_2\ne 0\) with \(n_1 \ne n_2\). The following estimators are motivated by (13), (14) and (15).

Definition 2

We define estimators \({\hat{\alpha }}_1\), \({\hat{l}}_1\) and \({\hat{\alpha }}_0\) for the model parameters \(\alpha _1\), \(l_1\) and \(\alpha _0\) respectively through

and

where \(n_1, n_2\ne 0\) and \(n_1\ne n_2\).

The proof of the next theorem is essentially the same as the proof of Theorem 2.

Theorem 3

Assume that \(s(n_2) \ne 0, a({\pmb {\gamma }}) \ne 0\) and \(g({\pmb {\gamma }}) > 0\). Let the assumptions of Lemma 3 prevail. Then \({\hat{\alpha }}_1, {\hat{l}}_1\) and \({\hat{\alpha }}_0\) given by (19), (20) and (21) are consistent.

Remark 7

- Statements of Theorems 2 and 3 hold true also when \(g_0({\pmb {\gamma }}_0) = 0\) and \(g({\pmb {\gamma }}) = 0\), but in these cases the estimators do not necessarily become real valued as the sample size grows.

- The estimators from Definitions 1 and 2 are of course related. In practice (see the next section) we use those from Definition 1 while those from Definition 2 are needed just in case when we need more information in order to choose the correct sign for \({{\hat{\alpha }}} _1\), see Remark 4.

- Note that here we implicitly assumed that the correct sign can be chosen in \({\hat{\alpha }}_1\). However, this is not a restriction as discussed.

The approach of Voutilainen et al. (2017) was motivated by the classical Yule–Walker equations of an AR(p) process, for which the corresponding estimators have the property that they yield a causal AR(p) process agreeing with the underlying assumption of the equations [see e.g. Brockwell and Davis (2013)]. In comparison, with finite samples, the estimators introduced above may produce invalid values, such as complex numbers or negative reals. Moreover, for \(\alpha _1\) we may obtain an estimate \({\hat{\alpha }}_1 \ge {\mathbb {E}}(\epsilon _0^8)^{-\frac{1}{4}}\) violating the assumptions of Lemma 3. However, we would like to emphasize that, as discussed before, the assumptions of the lemma are not necessary for a consistent estimation procedure. It may also happen that \({\hat{\alpha }}_1 \ge 1\), and in this case Theorem 1 does not guarantee the existence of a unique solution for (1) together with its stationarity. However, even in this case, there might still exist a (unique) stationary solution (cf. AR(1) with \(|\phi | >1\)). A further analysis of the properties of (1) could be a potential topic for future research. The unwanted estimates described above are of course more prone to occur with small samples, although the probability of producing such values depends also on the different components of the model (1), such as the true values of the parameters. In practice, the issue can be avoided e.g. by using indicator functions as in Voutilainen et al. (2017), forcing the estimators to the demanded intervals. We also refer to our simulation study in Sect. 4 and Appendix B, which show that with the largest sample size we always obtained valid estimates.

3.3 Examples

We present several examples of stationary processes to which our main result, stated in Theorem 2, applies. Our examples are constructed as

\[ L_t = (Y_{t+1} - Y_t)^2, \]

where \((Y_{t})_{t\in {\mathbb {R}}}\) is a stochastic process with stationary increments. We discuss below the case when Y is a continuous Gaussian process (the fractional Brownian motion), a continuous non-Gaussian process (the Rosenblatt process), or a jump process (the compensated Poisson process).

3.3.1 The fractional Brownian motion

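Let \(Y_{t}: = B ^{H}_{t}\) for every \(t\in {\mathbb {R}} \) where \((B ^{H}_{t}) _{t\in {\mathbb {R}}} \) is a two-sided fractional Brownian motion with Hurst parameter \(H\in (0,1)\). Recall that \( B^{H} \) is a centered Gaussian process with covariance

\[ {\mathbb {E}}(B^{H}_{t} B^{H}_{s}) = \frac{1}{2}\left( \vert t\vert ^{2H} + \vert s\vert ^{2H} - \vert t-s\vert ^{2H}\right) , \quad s,t\in {\mathbb {R}}. \]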

Let us verify that the conditions from Lemma 3 and Theorem 2 are satisfied by \(L_{t}= (B ^{H}_{t+1}- B ^{H}_{t}) ^{2}\). First, notice [see Lemma 2 in Bahamonde et al. (2018)] that for \(t\ge 1\)

with

since \(r_{H}(t) \) behaves as \(t^{2H-2}\) for t large.
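The decay of the liquidity covariance can be made concrete with a short computation. The Python sketch below assumes that \(r_H\) denotes the autocovariance of the unit-variance fBm increments, \(r_H(t) = \frac{1}{2}(|t+1|^{2H} + |t-1|^{2H} - 2|t|^{2H})\), and uses the Gaussian identity \({\mathbb {E}}(Z_1^2Z_2^2) = 1 + 2({\mathbb {E}}(Z_1Z_2))^2\), which gives \(\mathrm {Cov}(L_0, L_t) = 2 r_H(t)^2\). The function names are hypothetical.

```python
import numpy as np

def fgn_autocov(t, H):
    """Autocovariance at lag t of the increments B^H_{t+1} - B^H_t (unit variance)."""
    t = np.abs(np.asarray(t, dtype=float))
    return 0.5 * ((t + 1) ** (2 * H) + np.abs(t - 1) ** (2 * H) - 2 * t ** (2 * H))

def liquidity_autocov(t, H):
    """Cov(L_0, L_t) for L_t = (B^H_{t+1} - B^H_t)^2, using Cov(Z1^2, Z2^2) = 2 rho^2."""
    return 2.0 * fgn_autocov(t, H) ** 2

H = 0.8
lags = np.array([1, 10, 100, 1000])
r = fgn_autocov(lags, H)
s = liquidity_autocov(lags, H)
# Ratio to the leading-order term H(2H-1) t^(2H-2); it approaches 1 for large lags.
ratio = r / (H * (2 * H - 1) * lags ** (2 * H - 2))
print(r, s, ratio)
```

For every \(H\in (0,1)\) both sequences tend to zero, so the first covariance condition of Lemma 3 holds; for \(H = \frac{1}{2}\) the increments are independent and \(r_H(t) = 0\) for \(t \ge 1\).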

Let us now turn to the third-order condition, i.e. \(\mathrm {Cov} (L_{0}L_{n}, L_{t})={\mathbb {E}} (L_{0} L_{n} L_{t} )-{\mathbb {E}} (L_{0} L_{n} ) \rightarrow 0\) as \(t\rightarrow \infty \). We can suppose \(n\ge 1\) is fixed and \(t>n\). For any three centered Gaussian random variables \(Z_{1}, Z_{2}, Z_{3}\) with unit variance we have \({\mathbb {E}} ( Z_{1} ^{2} Z_{2} ^{2}) = 1+2({\mathbb {E}}(Z_{1}Z_{2}) ) ^{2} \) and

By applying this formula to \(Z_{1}= B ^{H}_{1}, Z_{2}= B^H_{n+1}- B ^{H}_{n}, Z_{3}= B ^{H}_{t+1} -B ^{H}_{t}\), we find

where \(r_{H}\) is given by (22). By (22), the above expression converges to zero as \(t\rightarrow \infty \).

Similarly for the fourth-order condition, the formulas are more complex but we can verify by standard calculations that, for every \(n_{1}, n_{2}\ge 1\) and for every \(t> \max (n_{1}, n_{2})\), the quantity

can be expressed as a polynomial (without term of degree zero) in \(r_{H}(t)\), \(r_{H}(t-n_{1})\), \(r_{H}(t+n_{2})\), \(r_{H}(t+n_{2} -n_{1}) \) with coefficients depending on \(n_{1}, n_{2}\). The conclusion is obtained by (22).

3.3.2 The compensated Poisson process

Let \((N_{t})_{t\in {\mathbb {R}} }\) be a Poisson process with intensity \(\lambda =1\). Recall that N is a cadlag adapted stochastic process, with independent increments, such that for every \(s<t\), the random variable \(N_{t}-N_{s}\) follows a Poisson distribution with parameter \(t-s\). Define the compensated Poisson process \( ({\tilde{N}}_{t}) _{t\in {\mathbb {R}} } \) by \({\tilde{N}}_{t}=N_{t}-t\) for every \(t\in {\mathbb {R}}\) and let \(L_{t}= ({\tilde{N}}_{t+1}-{\tilde{N}}_{t})^ {2}\). Clearly \({\mathbb {E}}L_{t}= 1\) for every t and, by the independence of the increments of \({\tilde{N}}\), we have that for t large enough

so the conditions in Theorem 2 are fulfilled.
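A quick numerical illustration of this example: the unit-time increments of the compensated Poisson process with intensity 1 are independent Poisson(1) variables minus 1, so the liquidity can be sampled directly. The Python sketch below is illustrative only; the seed and the sample size are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000

# Increments of the compensated process over unit intervals: Poisson(1) - 1.
increments = rng.poisson(lam=1.0, size=n) - 1.0
L = increments**2                     # liquidity L_t = (increment)^2

mean_L = L.mean()                     # should be close to E(L_t) = 1
centered = L - mean_L
lag1_cov = np.mean(centered[:-1] * centered[1:])  # close to 0 by independence
print(mean_L, lag1_cov)
```

The empirical mean is close to 1 and the empirical lag-one covariance is close to 0, in line with the independence of the increments.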

3.3.3 The Rosenblatt process

The (one-sided) Rosenblatt process \((Z^{H}_{t}) _{t\ge 0} \) is a self-similar stochastic process with stationary increments and long memory in the second Wiener chaos, i.e. it can be expressed as a multiple stochastic integral of order two with respect to the Wiener process. The Hurst parameter H belongs to \((\frac{1}{2}, 1)\) and it characterizes the main properties of the process. Its representation is

where \((W(y))_{y\in {\mathbb {R}}}\) is a Wiener process and \(f_{H} \) is a deterministic function such that \(\int _{{\mathbb {R}}} \int _{{\mathbb {R}}} f_{H}(y_{1}, y_{2}) ^{2} dy_{1}dy_{2} <\infty \). See e.g. Tudor (2013) for a more complete exposition on the Rosenblatt process. The two-sided Rosenblatt process has been introduced in Coupek (2018). In particular, it has the same covariance as the fractional Brownian motion, so \({\mathbb {E}}(L_{t})= {\mathbb {E}}(Z^{H}_{t+1} -Z^{H}_{t}) ^{2}=1\) for every t. The use of the Rosenblatt process can be motivated by the presence of long memory in the empirical data for liquidity in financial markets, see Tsuji (2002).

The computation of the quantities \(\mathrm {Cov}(L_{0}, L_{t})\), \(\mathrm {Cov} (L_{0}L_{n}, L_{t})\) and \( \mathrm {Cov}(L_{0} L_{n_{1}}, L_{t} L_{t+n_{2}})\) requires rather technical tools from stochastic analysis, including properties of multiple integrals and the product formula, which we prefer to avoid here. We only mention that the term \(\mathrm {Cov}(L_{0}, L_{t})\) can be written as \(P( r_{H}(t), r_{H,1}(t) )\) where P is a polynomial without term of degree zero, \(r_{H}\) is given by (22), while

Note that

Since \(\vert u_{1}-u_{2}\vert ^{H-1}\vert u_{2}-u_{3}+t \vert ^{H-1}\vert u_{3}-u_{4}\vert ^{H-1}\vert u_{4}-u_{1}+t\vert ^{H-1}\) converges to zero as \(t\rightarrow \infty \) for every \(u_{i}\) and since this integrand is bounded for t large by \(\vert u_{1}-u_{2}\vert ^{H-1}\vert u_{2}-u_{3} \vert ^{H-1}\vert u_{3}-u_{4}\vert ^{H-1}\vert u_{4}-u_{1}\vert ^{H-1}\), which is integrable over \([0,1] ^{4}\), we obtain, via the dominated convergence theorem, that \(\mathrm {Cov}(L_{0}, L_{t})\rightarrow _{t\rightarrow \infty } 0.\) Similarly, the quantities \(\mathrm {Cov} (L_{0}L_{n}, L_{t})\) and \(\mathrm {Cov} (L_{0} L_{n_{1}}, L_{t} L_{t+n_{2}})\) can also be expressed as polynomials (without constant terms) in \(r_{H}, r_{H, k}\), \(k=1,2,3, 4\) where

where at least one set \(A_{i}\) is \((t, t+1)\). Thus we may apply a similar argument as above.

4 Simulations

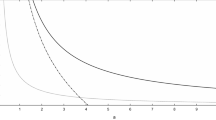

This section provides a visual illustration of convergence of the estimators (16), (17) and (18) when the liquidity process L is given by \(L_t = (B^H_{t+1} - B^H_{t})^2\) with \(H =\frac{4}{5}\).

The simulation setting is the following. The i.i.d. process \((\epsilon _t)_{t\in {\mathbb {Z}}}\) is assumed to be a sequence of standard normals. In this case the restriction given by Lemma 3 reads \(\alpha _1 < \frac{1}{105^{\frac{1}{4}}} \approx 0.31\). The lag used is \(n=1\) and the true values of the model parameters are \(\alpha _0=1\), \(\alpha _1 = 0.1\) and \(l_1 = 0.5\). The sample sizes used are \(N=100, N=1000\) and \(N=10000\). The initial value \(X_0^2\) is set equal to 1.7. After the processes \(L_t\) with \(t=0, 1, \ldots , N-2\) and \(\epsilon _t\) with \(t=1,2, \ldots , N-1\) are simulated, the initial value is used to generate \(\sigma _1^2\) using (1). Together with \(\epsilon _1\) this gives \(X_1^2\), after which (1) yields the sample \(\{X_0^2, X_1^2, \ldots , X^2_{N-1}\}\).
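The data-generating step of this setting can be sketched in Python as follows. This is an illustrative sketch, not the code used for the reported results: it assumes the model form \(\sigma _t^2 = \alpha _0 + \alpha _1 X_{t-1}^2 + l_1 L_{t-1}\), \(X_t^2 = \sigma _t^2\epsilon _t^2\), and it replaces the circulant fBm simulation with a Cholesky factorization of the covariance matrix of the fBm increments, which is simpler but slower for large \(N\). All function names are hypothetical.

```python
import numpy as np

def fgn_cholesky(n, H, rng):
    """Sample n consecutive unit-variance fBm increments via a Cholesky factor."""
    lags = np.arange(n, dtype=float)
    r = 0.5 * ((lags + 1) ** (2 * H) + np.abs(lags - 1) ** (2 * H) - 2 * lags ** (2 * H))
    idx = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    cov = r[idx]                                  # Toeplitz covariance matrix
    return np.linalg.cholesky(cov) @ rng.standard_normal(n)

def simulate_x2(N, alpha0, alpha1, l1, H, x2_init, rng):
    """Return the sample (X_0^2, ..., X_{N-1}^2) under the assumed model form."""
    L = fgn_cholesky(N - 1, H, rng) ** 2          # L_0, ..., L_{N-2}
    eps = rng.standard_normal(N - 1)              # eps_1, ..., eps_{N-1}
    x2 = np.empty(N)
    x2[0] = x2_init
    for t in range(1, N):
        sigma2 = alpha0 + alpha1 * x2[t - 1] + l1 * L[t - 1]
        x2[t] = sigma2 * eps[t - 1] ** 2
    return x2

rng = np.random.default_rng(2024)
x2 = simulate_x2(N=1000, alpha0=1.0, alpha1=0.1, l1=0.5, H=0.8, x2_init=1.7, rng=rng)
theoretical_mean = (1.0 + 0.5) / (1 - 0.1)        # (alpha0 + l1) / (1 - alpha1)
print(x2.mean(), theoretical_mean)
```

The sample mean of the simulated squares can be compared with the stationary mean \((\alpha _0 + l_1)/(1-\alpha _1)\) as a basic sanity check before any parameter estimation is attempted.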

We have simulated 1000 scenarios with each sample size and the corresponding histograms of the model parameter estimates are provided in Figs. 1, 2 and 3. Our simulations show that the distributions of the estimates become close to Gaussian as N increases. We also note that, since the estimators involve square roots, they may produce complex-valued estimates. However, asymptotically the estimates become real. In the simulations, the sample sizes \(N=100\) and \(N=1000\) resulted in complex-valued estimates in \(47.9\%\) and \(4.3\%\) of the iterations respectively, whereas with the largest sample size all the estimates were real. For the histograms the complex-valued estimates have simply been removed. Some illustrative tables are given in Appendix B.

It is straightforward to repeat the simulations with other Hurst indexes, or with completely different liquidities such as squared increments of the compensated Poisson process. In these cases, we obtain similar results.

The simulations have been carried out using version 1.1.456 of the RStudio software on the Ubuntu 16.04 LTS operating system. Fractional Brownian motion was simulated using the circFBM function from the package dvfBm.

References

Bahamonde N, Torres S, Tudor CA (2018) ARCH model and fractional Brownian motion. Stat Probab Lett 134:70–78

Bollerslev T (1986) Generalized autoregressive conditional heteroskedasticity. J Econom 31(3):307–327

Bollerslev T (2008) Glossary to ARCH (GARCH). CREATES Research Papers 2008-49

Brandt A (1986) The stochastic equation \({Y}_{n+1} = {A}_n {Y}_n + {B}_n\) with stationary coefficients. Adv Appl Probab 18(1):211–220

Brockwell P, Davis R (2013) Time series: theory and methods. Springer, Switzerland

Coupek P (2018) Limiting measure and stationarity of solutions to stochastic evolution equations with Volterra noise. Stoch Anal Appl 36(3):393–412

Engle RF (1982) Autoregressive conditional heteroskedasticity with estimates of the variance of the U.K. inflation. Econom J Econom Soc 50:987–1007

Francq C, Zakoian JM (2010) GARCH models. Wiley, New Jersey

Han H (2013) Asymptotic properties of GARCH-X processes. J Financ Econom 13(1):188–221

Karlsen H (1990) Existence of moments in a stationary stochastic difference equation. Adv Appl Probab 22(1):129–146

Kreiß JP, Neuhaus G (2006) Einführung in die Zeitreihenanalyse. Springer, Berlin

Lindgren G (2012) Stationary stochastic processes: theory and applications. Chapman and Hall/CRC, London

Lindner AM (2009) Stationarity, mixing, distributional properties and moments of GARCH(p, q)-processes. In: Mikosch T, Kreiß JP, Davis R, Andersen T (eds) Handbook of financial time series. Springer, Berlin, Heidelberg

Tsuji C (2002) Long-term memory and applying the multi-factor ARFIMA models in financial markets. Asia-Pac Mark 9(3–4):283–304

Tudor CA (2013) Analysis of variations for self-similar processes: a stochastic calculus approach. Springer, Switzerland

Tudor CA, Tudor C (2014) EGARCH model with weighted liquidity. Commun Stat Simul Comput 43(5):1133–1142

Voutilainen M, Viitasaari L, Ilmonen P (2017) On model fitting and estimation of strictly stationary processes. Mod Stoch Theory Appl 4(4):381–406

Voutilainen M, Viitasaari L, Ilmonen P (2019) Note on AR(1)-characterisation of stationary processes and model fitting. Mod Stoch Theory Appl 6(2):195–207

Acknowledgements

Open access funding provided by Aalto University. The authors wish to thank two anonymous referees for their insightful comments that helped to improve the manuscript. Pauliina Ilmonen and Lauri Viitasaari wish to thank Vilho, Yrjö and Kalle Väisälä Fund, Marko Voutilainen wishes to thank Magnus Ehrnrooth Foundation, and Soledad Torres wishes to thank National Fund for Scientific and Technological Development, Fondecyt 1171335, for support.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendices

Appendix A: Auxiliary lemmas and proofs

The following lemma ensures that we are able to continue the recursion in (3) infinitely many times.

Lemma 5

Suppose \(\alpha _1<1\) and \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}(\sigma _t^ 2) \le M_1<\infty \). Then, as \(k\rightarrow \infty \), we have

in \(L^ 1\). Furthermore, if \(\alpha _1 < \frac{1}{\sqrt{{\mathbb {E}}(\epsilon _0^ 4)}}\) and \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}(\sigma _t^4)\le M_2 < \infty \), then the convergence holds also almost surely.

Proof

By independence of \(\epsilon \), we have

proving the first part of the claim.

For the second part, Chebyshev’s inequality implies

which is summable by assumptions. Borel–Cantelli then implies

almost surely proving the claim. \(\square \)

Proof of Theorem 1

We begin by showing that (4) is well-defined. That is, we prove that

defines an almost surely finite random variable. First we observe that the summands above are non-negative and hence, the pathwise limits exist in \([0,\infty ]\). Write

and denote

By the root test it suffices to prove that

Here

where

by the law of large numbers and the continuous mapping theorem. By Jensen’s inequality we obtain that

That is

almost surely. This proves (25) which implies that the first series in (23) is almost surely convergent. To obtain (24), it remains to show \(\limsup _{n\rightarrow \infty } L_{t-n}^ {\frac{1}{n}} \le 1\) almost surely. We have

where we have used

Now

Consider now the function \(f_x(a) {:}{=}x^ a\) for \(x\ge 1\) and \(a\ge 0\). Since \(f_x'(a) = x^a\log x\) we obtain by the mean value theorem that

Hence

On the other hand, for \(n\ge 2\) and \(L_{t-n} \ge 1\) it holds that

since for \(x\ge 1\), the function \(g(x) {:}{=}\left( \log x\right) ^ 2x^ {-1}\) has the maximum \(g(e^2) = 4e^ {-2}\). Consequently,

Hence Borel–Cantelli implies

which by (26) shows (24). Let us next show that (4) satisfies (2):

This shows that (4) is a solution.
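As a sanity check on the bound for \(g(x) = \left( \log x\right) ^2x^{-1}\) used earlier in this proof, the following minimal sketch compares the closed-form maximum \(4e^{-2}\) at \(x = e^2\) with a grid search. The grid range and step size are arbitrary choices made for illustration only.

```python
import math


def g(x: float) -> float:
    """g(x) = (log x)^2 / x for x >= 1."""
    return math.log(x) ** 2 / x


# g'(x) = log(x) * (2 - log(x)) / x^2 vanishes at x = 1 and x = e^2.
# Since g(1) = 0, the maximum on [1, infinity) is g(e^2) = 4 / e^2.
x_star = math.exp(2)
g_max = 4 * math.exp(-2)

# Grid search on [1, 1000] with step 0.01 (arbitrary illustrative choices).
grid = [1 + 0.01 * i for i in range(99_901)]
grid_max = max(g(x) for x in grid)

print(f"g(e^2) = {g(x_star):.6f}, 4/e^2 = {g_max:.6f}, grid max = {grid_max:.6f}")
```

The grid maximum never exceeds \(4e^{-2}\) and approaches it as the grid is refined, in line with the analytic argument.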

It remains to prove the uniqueness. By (3) we have for every \(t\in {\mathbb {Z}}\) and \(k\in \{0,1,\ldots \}\) that

Suppose now that there exist two solutions \(\sigma _{t}^2\) and \({\tilde{\sigma }}_t^2\) satisfying \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}(\sigma _t^2) < \infty \) and \(\sup _{t\in {\mathbb {Z}}}{\mathbb {E}}({\tilde{\sigma }}_t^2) < \infty \). Then

As both terms on the right-hand side converge to zero in \(L^1\) by Lemma 5, we observe that

for all \(t\in {\mathbb {Z}}\) which implies the result. \(\square \)
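The relation between the one-step recursion and the series representation can be illustrated numerically. The sketch below assumes, since the formulas are not restated in this appendix, that the recursion has the form \(\sigma _{t+1}^2 = A_t\sigma _t^2 + B_t\) with \(A_t = \alpha _1\epsilon _t^2\) and \(B_t = \alpha _0 + l_1L_t\). The parameter values, the Gaussian innovations and the choice of \(L_t\) as squared independent Gaussians are purely illustrative assumptions. Starting the recursion from \(\sigma _0^2 = 0\), unrolling it gives exactly the truncated series, which the code verifies.

```python
import math
import random

random.seed(0)

# Illustrative (assumed) parameter values and sample length.
alpha0, alpha1, l1 = 0.1, 0.3, 0.5
n = 200

eps = [random.gauss(0.0, 1.0) for _ in range(n)]       # innovations
L = [random.gauss(0.0, 1.0) ** 2 for _ in range(n)]    # positive liquidity proxy

# Assumed coefficients of the recursion sigma^2_{t+1} = A_t * sigma^2_t + B_t.
A = [alpha1 * e * e for e in eps]
B = [alpha0 + l1 * x for x in L]


def by_recursion(t: int) -> float:
    """sigma^2_{t+1} obtained by iterating the recursion from sigma^2_0 = 0."""
    s2 = 0.0
    for s in range(t + 1):
        s2 = A[s] * s2 + B[s]
    return s2


def by_series(t: int) -> float:
    """Truncated series: sum over i = 0..t of (prod_{j<i} A_{t-j}) * B_{t-i}."""
    total, prod = 0.0, 1.0
    for i in range(t + 1):
        total += prod * B[t - i]
        prod *= A[t - i]
    return total


for t in (0, 1, 50, 199):
    print(t, by_recursion(t), by_series(t))
```

Both functions return the same value up to floating-point rounding. The remaining distance to the infinite series is governed by the product of the \(A\) terms, which is the quantity controlled in Lemma 5.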

Proof of Corollary 1

Let k be fixed and define

If L is strictly stationary, then so is \((A_t, B_t)\). Consequently, we have

for every t. That is, \(H_{k,t} \overset{\text {law}}{=} H_{k,0}\). Since the limits of both sides exist as \(k\rightarrow \infty \) we have

Treating multidimensional distributions similarly concludes the proof. \(\square \)

Proof of Lemma 1

The “if” part follows from Theorem 3.2 of Karlsen (1990). For the converse, denote \({\mathbb {E}}(\epsilon _0^4) = C_\epsilon \) and \({\mathbb {E}}(L_0^2) = C_L\). By (4) we have

and since all the terms above are positive, both sides are simultaneously finite or infinite. Note also that, as the terms are all positive, we may apply Tonelli’s theorem to change the order of summation and integration, obtaining

Consider now the first term above. By independence, we obtain

Consequently, \({\mathbb {E}}(\sigma _0^4) < \infty \) implies \(\alpha _1 < \frac{1}{\sqrt{C_\epsilon }}\), since it is the radius of convergence of the series above. \(\square \)

Proof of Corollary 2

Let \(H_{k,t}\) be defined by (27). By the proof of Lemma 1 we get

Thus \(H_{k,t}\) converges to \(\sigma _{t+1}^2\) in \(L^2\). To conclude the proof, it is straightforward to check that weak stationarity of L implies weak stationarity of \(H_{k,t}\) for every k. \(\square \)

Proof of Lemma 2

First we notice that

by Lemma 1. Hence, the stationary processes Y and Z have finite second moments. Furthermore, the covariance of Y coincides with the one of \(X^2\). Applying Lemma 1 of Voutilainen et al. (2017) we get

for every \(n\in {\mathbb {Z}}\), where \(r(\cdot )\) is the autocovariance function of Z. For r(n) with \(n\ge 1\) we obtain

since the sequences \((\epsilon _t)_{t\in {\mathbb {Z}}}\) and \((L_t)_{t\in {\mathbb {Z}}}\) are independent of each other, and \(\epsilon _t\) is independent of \(\sigma _s\) for \(s\le t\). By the same arguments, for \(n=0\) we have

Now using (28) and \(\gamma (-1) = \gamma (1)\) completes the proof. \(\square \)

In the remainder we denote

Lemma 6

Let \(t,s \in {\mathbb {Z}}\). Then

Proof

By (4) and Fubini-Tonelli

where the series converges since \(\alpha _1 <1\) and \({\mathbb {E}}(L_0^2)< \infty \). \(\square \)

We use the following variant of the law of large numbers for consistency of the covariance estimators. The proof, based on Chebyshev’s inequality, is rather standard and hence omitted. In the case of a weakly stationary sequence, a proof can be found in (Kreiß and Neuhaus 2006, p. 154), or in (Lindgren 2012, p. 65) concerning the continuous time setting.

Lemma 7

Let \((U_1, U_2, \ldots )\) be a sequence of random variables with a common expectation. In addition, assume that \(\mathrm {Var}(U_j) \le C\) and \(\left| \mathrm {Cov}(U_j,U_k)\right| \le g(|k-j|)\), where \(g(i)\rightarrow 0\) as \(i\rightarrow \infty \). Then

in \(L^2\).
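The mechanism behind Lemma 7 can be sketched numerically. The variance of the sample mean of \(U_1, \ldots , U_n\) is bounded by \(n^{-2}\sum _{j,k} g(|k-j|)\), provided \(g(0)\) is taken at least as large as the variance bound C (an assumption made here for convenience). The two covariance bounds below are hypothetical examples, one decaying geometrically and one decaying slowly. In both cases the bound tends to zero as n grows.

```python
def mean_variance_bound(n: int, g) -> float:
    """Upper bound n^{-2} * sum_{j,k=1..n} g(|k - j|) for Var of the sample mean.

    The double sum is grouped by lag i = |k - j|: lag 0 occurs n times,
    and each lag i >= 1 occurs 2 * (n - i) times.
    """
    total = n * g(0) + 2 * sum((n - i) * g(i) for i in range(1, n))
    return total / n**2


def geometric(i: int) -> float:
    """Hypothetical fast-decaying covariance bound."""
    return 0.5**i


def slow(i: int) -> float:
    """Hypothetical slowly decaying covariance bound, still tending to zero."""
    return 1.0 / (1.0 + i) ** 0.5


sizes = (10, 100, 1000, 10000)
geometric_bounds = [mean_variance_bound(n, geometric) for n in sizes]
slow_bounds = [mean_variance_bound(n, slow) for n in sizes]

for n, bg, bs in zip(sizes, geometric_bounds, slow_bounds):
    print(n, bg, bs)
```

The slowly decaying case converges at a slower rate, but the lemma only requires \(g(i)\rightarrow 0\), not summability of g.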

Proof of Lemma 3

By Lemma 7 it suffices to show that \(\mathrm {Cov}(X_0^2X_n^2, X_t^2X_{t+n}^2)\) converges to zero as t tends to infinity. Hence we assume that \(t > n\). By (4)

Since the summands are non-negative, we can take the expectation inside. Furthermore, by independence of the sequences \(\epsilon _t\) and \(L_t\) we observe

Next we justify the use of the dominated convergence theorem in order to interchange the summations and the limit. Consequently, it suffices to study the limits of the terms

Step 1: finding a summable upper bound.

First note that the latter term is bounded by a constant. Indeed, by stationarity of \((B_t)_{t\in {\mathbb {Z}}}\) we can write

which is bounded by a repeated application of the Cauchy–Schwarz inequality and the fact that the fourth moment of \(L_0\) is finite.

Consider now the first term in (31). First we recall the elementary fact

Next note that the first term in (31) is bounded for every set of indices. Indeed, this follows from the independence of \(\epsilon \) and the observation that we obtain terms of power at most 8, that is, terms of the form \(\epsilon _t^8\), and by assumption \({\mathbb {E}}(\epsilon _t^8) < \infty \). Let now \(n>0\). Then

By computing similarly for \(n=0\), using stationarity of A and Eq. (33), we deduce that

where C is a constant. Moreover, by using similar arguments we observe

Combining all the estimates above, it thus suffices to prove that

Now for \(i_1\le i_2 \le i_3 \le i_4\) we have

which yields

Denote

Then we need to show that

For this suppose first that \(1 \notin S {:}{=}\{a_1, a_2, a_3, a_1a_2, a_2a_3, a_1a_2a_3\}.\) Then we are able to use geometric sums to obtain

Continuing like this in the iterated sums in (34) we deduce

and

Consequently, it suffices that the following three series converge

yielding constraints

However, these follow from the assumption \(\alpha _1 <\frac{1}{{\mathbb {E}}(\epsilon _0^8)^{\frac{1}{4}}}\). Finally, if \(1 \in S\) it simply suffices to replace \(a_1,a_2, a_3\) with

such that

Choosing \(\delta <0\) small enough the claim follows from the fact that the inequality \(\alpha _1 <\frac{1}{{\mathbb {E}}(\epsilon _0^8)^{\frac{1}{4}}}\) is strict.
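To make the moment conditions on \(\alpha _1\) concrete, the following sketch evaluates them under the assumption that \(\epsilon _0\) is standard normal. This assumption is made here for illustration; it is consistent with the interval \(0< \alpha _1 < 105^{- \frac{1}{4}}\) quoted in Appendix B, since \({\mathbb {E}}(Z^8) = 105\) for a standard normal Z. The function name is hypothetical.

```python
import math


def gaussian_even_moment(m: int) -> int:
    """E(Z^(2m)) for Z ~ N(0, 1), which equals the double factorial (2m - 1)!!."""
    return math.prod(range(1, 2 * m, 2))


fourth = gaussian_even_moment(2)   # E(Z^4)
eighth = gaussian_even_moment(4)   # E(Z^8)

# Upper bounds on alpha_1 under the Gaussian assumption.
bound_fourth = 1 / math.sqrt(fourth)   # alpha_1 < 1 / sqrt(E(eps_0^4))
bound_eighth = eighth ** (-0.25)       # alpha_1 < 1 / E(eps_0^8)^(1/4)

print(f"E(Z^4) = {fourth}, bound = {bound_fourth:.4f}")
print(f"E(Z^8) = {eighth}, bound = {bound_eighth:.4f}")
```

Under this assumption the eighth-moment condition is the most restrictive of the three conditions \(\alpha _1 < 1\), \(\alpha _1 < {\mathbb {E}}(\epsilon _0^4)^{-\frac{1}{2}}\) and \(\alpha _1 < {\mathbb {E}}(\epsilon _0^8)^{-\frac{1}{4}}\) appearing in this appendix.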

Step 2: computing the limit of (30).

By Step 1 we can apply the dominated convergence theorem in (30). For this, let us analyze the limit behaviour of (31). For the latter term we use (32). By assumptions, we have e.g. the following identities:

Therefore the limit of the latter term of (31) is given by

The first term of (31) can be divided into two independent parts whenever t is large enough. More precisely, for \(t > \max \{n+i_3, i_4\}\), we have

where the last equality follows from stationarity of \(A_t\). Hence

On the other hand, by (4)

Consequently, we conclude that

proving the claim. \(\square \)

Proof of Theorem 2

Since the assumptions of Lemma 3 are satisfied, so are the assumptions of Lemma 4 implying that the autocovariance estimators, the mean and the second moment estimator of \(X_t^2\) are consistent. The claim follows from the continuous mapping theorem. \(\square \)

Appendix B: Tables

Table 1 presents means and standard deviations of the estimates with different sample sizes. In addition, Table 2 demonstrates how the estimates match their theoretical intervals \(0 \le \alpha _0\), \(0< \alpha _1 < 105^{- \frac{1}{4}}\) and \(0 < l_1\). Multiplying the root mean squared errors (RMSE) provided by Table 1 by \(N^H\), the power H of the sample size N, gives evidence of the convergence rates of the estimators.
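The scaling described above can be sketched as follows. The helper names and the input numbers are hypothetical and serve only to show the computation; they are not values from Table 1. If an estimator converges at rate \(N^{-H}\), the scaled RMSE should remain roughly constant as N grows.

```python
import math


def rmse(estimates, true_value: float) -> float:
    """Root mean squared error of a collection of estimates of true_value."""
    return math.sqrt(sum((e - true_value) ** 2 for e in estimates) / len(estimates))


def scaled_rmse(estimates, true_value: float, n: int, h: float) -> float:
    """RMSE multiplied by n**h, where n is the sample size and h the candidate rate."""
    return rmse(estimates, true_value) * n**h


# Hypothetical estimates of a parameter with true value 2.0 at two sample sizes.
small_sample = [1.0, 3.0]     # obtained with n = 100 (illustrative)
large_sample = [1.9, 2.1]     # obtained with n = 10000 (illustrative)

print(scaled_rmse(small_sample, 2.0, 100, 0.5))
print(scaled_rmse(large_sample, 2.0, 10_000, 0.5))
```

In this toy input the two scaled values coincide, which is the pattern one would look for when reading off a convergence rate from simulated RMSE values.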

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Voutilainen, M., Ilmonen, P., Torres, S. et al. On the ARCH model with stationary liquidity. Metrika 84, 195–224 (2021). https://doi.org/10.1007/s00184-020-00779-x


DOI: https://doi.org/10.1007/s00184-020-00779-x