Abstract

We study a scalar elliptic problem in the data driven context. Our interest is to study the relaxation of a data set that consists of the union of a linear relation and a single outlier. The data driven relaxation is given by the union of the linear relation and a truncated cone that connects the outlier with the linear subspace.


1 Introduction

The data driven perspective is new in the fields of materials science and partial differential equations; we mention [18] and [6] as the two fundamental contributions of this young field. In the data driven perspective, certain laws of physics are accepted as invariable, e.g. balance of forces or compatibility. Material laws (such as Hooke’s law), on the other hand, can be questionable. In the classical approach, measurements are used to estimate constants of material laws. The new paradigm is to use a set of data points obtained from measurements: the data points are not interpreted as realizations of some law; instead, calculations and analysis are based directly on the cloud of data points.

On a more formal level, one introduces a set \(\mathcal {E}\) of functions that satisfy the invariable physical laws. A second set \(\mathcal {D}\) denotes those functions that are consistent with the data. In this setting, the aim is to find functions in \(\mathcal {E}\) that minimize the distance to the data set \(\mathcal {D}\).

The emphasis in [18] was to derive computing algorithms for this new approach. The mathematical analysis in [6] establishes well-posedness properties and introduces, among other tools, data convergence and relaxation in the data driven context. It is shown that data driven relaxation differs markedly from traditional relaxation, see the discussion below.

In the work at hand, we investigate a scalar setting, which can be used, e.g., in the modelling of porous media. We seek two functions, G (a gradient) and J (a negative flux). Given a domain \(Q\subset \mathbb {R}^n\) and a source \(f:Q\rightarrow \mathbb {R}\), the invariable physical laws are the compatibility \(G = \nabla U\) for some \(U : Q\rightarrow \mathbb {R}\) and the mass conservation \(\nabla \cdot J = f\) (in other contexts, the second law is the balance of forces). We introduce

\[ \mathcal{E}_f := \left\{ (G,J)\in L^2(Q;\mathbb{R}^n)\times L^2(Q;\mathbb{R}^n) \,\middle|\, G = \nabla U \text{ for some } U\in H^1_0(Q),\ \nabla\cdot J = f \right\}. \tag{1.1} \]

In the classical approach, one might be interested in the linear material law given by \(J = A G\) for \(A\in \mathbb {R}^{n\times n}\). We note that a pair \((G,J) \in \mathcal {E}_f\) with \(J = A G\) can be found by solving the scalar elliptic equation \(\nabla \cdot (A\nabla U) = f\).

In the data driven perspective, the material law is replaced by a data set \(\mathcal {D}\). In a simple setting, we are given a local data set \(\mathcal {D}_\mathrm {loc}:= \{ (g_i, j_i) \,|\, i\in I \} \subset \mathbb {R}^n\times \mathbb {R}^n\) for some index set I. This data set might be obtained by measurements; in this case, the index set I is finite and \(\mathcal {D}_\mathrm {loc}\) is a cloud of points in \(\mathbb {R}^n\times \mathbb {R}^n\). The set of functions that respect the data is

\[ \mathcal{D} := \left\{ (g,j)\in L^2(Q;\mathbb{R}^n)\times L^2(Q;\mathbb{R}^n) \,\middle|\, (g(x),j(x))\in\mathcal{D}_\mathrm{loc} \text{ for a.e. } x\in Q \right\}. \tag{1.2} \]

In the data driven perspective, the task is: Find a pair \((G,J)\in \mathcal {E}_f\) that minimizes the distance to the set \(\mathcal {D}\).

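We remark that we recover the classical problem if we introduce

\[ \mathcal{D}^A_\mathrm{loc} := \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, j = Ag \right\} \tag{1.3} \]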

and the corresponding set of functions \(\mathcal {D}^A\) as in (1.2). For typical choices of Q, A, and f, the linear problem can be solved; in this case, there exists \((G,J)\in \mathcal {E}_f\cap \mathcal {D}^A\) and the minimization task has a solution that realizes the distance 0.

The advantage of the data driven perspective is the generality of the data set. In the minimization task above, an arbitrary data set \(\mathcal {D}\) can be considered. Three different types of questions can be asked:

1. Minimality conditions: When \(\mathcal {E}_f\cap \mathcal {D}\) is empty, what are conditions for minimizers of the distance?
2. Families of data sets: Given a family of data sets \(\mathcal {D}_h\) and solutions \((G_h, J_h)\) of the minimization problems, what can we say about limits?
3. Relaxation: Given \(\mathcal {D}\), consider sequences of pairs \((G^h, J^h)\in \mathcal {E}_f\) and \((g^h, j^h)\in \mathcal {D}\). Which limits are attainable in the sense of data convergence?

The present paper is devoted to the third question. We investigate a special data set: \(\mathcal {D}_\mathrm {loc}\) is the union of \(\mathcal {D}^A_\mathrm {loc}\) and \(\mathcal {D}^B_\mathrm {loc}\), where \(\mathcal {D}^A_\mathrm {loc}\) is as in (1.3) and \(\mathcal {D}^B_\mathrm {loc}\) is a one-point set of a single outlier. In this setting, the minimization problem is solvable with distance 0 since \(\mathcal {D}\) is larger than \(\mathcal {D}^A\). Our interest is to study the relaxation problem.

The motivation to study the data set \(\mathcal {D}_\mathrm {loc}= \mathcal {D}^A_\mathrm {loc}\cup \mathcal {D}^B_\mathrm {loc}\) is to understand the effect of a single outlier in a cloud of measurement points. When an increasing number of data points approximates the plane of Hooke’s law \(\mathcal {D}^A_\mathrm {loc}\), then the data driven solutions to these data sets approximate the classical solution with Hooke’s law; this is one of the results in [6]. Our interest is an outlier: When the measurements contain a single point that is not in \(\mathcal {D}^A_\mathrm {loc}\), the data driven solutions can always use this data point in the further process. How far off can the data driven solutions be because of the single outlier? Our result characterizes the relaxed data set and shows that it is only changed locally in the vicinity of the outlier. In this sense, the outlier has only a limited effect on the data driven solutions.

In more mathematical terms, the analysis of this article is concerned with sequences of pairs \((G^h, J^h)\in \mathcal {E}_f\) and \((g^h, j^h)\in \mathcal {D}\) that converge in the sense of data convergence. The set of all limits (g, j) constitutes the relaxed data set \(\mathcal {D}^{\mathrm {relax}}\). Our main result is the characterization of this set. We prove that it consists of functions that attain values in a local relaxed data set \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}\). This set contains \(\mathcal {D}_\mathrm {loc}\); it is the “data driven convexification” of \(\mathcal {D}_\mathrm {loc}\). We find that the set is strictly larger than \(\mathcal {D}_\mathrm {loc}\), but smaller than the convex hull of \(\mathcal {D}_\mathrm {loc}\). We will characterize \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}\) as the union of \(\mathcal {D}^A_\mathrm {loc}\) with a truncated cone that connects the additional point of \(\mathcal {D}^B_\mathrm {loc}\) with the linear subspace \(\mathcal {D}^A_\mathrm {loc}\). Denoting the truncated cone by C, our main result states \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}= C \cup \mathcal {D}_\mathrm {loc}^A\), see Theorem 1.

The proof consists of two parts. The fact that any pair (g, j) with values in \(C\cup \mathcal {D}_\mathrm {loc}^A\) belongs to \(\mathcal {D}^{\mathrm {relax}}\) requires the construction of a sequence of functions that use a fine mixture of materials. We will first approximate constant functions with values in \(C\cup \mathcal {D}_\mathrm {loc}^A\) by constructions of simple and iterated laminates. In order to realize a point in \(\mathcal {D}^A_\mathrm {loc}\), it suffices to use constant functions. In order to realize a point on the lateral boundary of the cone C, it is sufficient to construct a simple laminate with phases A and B. For a point in the interior of C, an iterated laminate must be constructed. Such iterated laminates are quite standard; we mention [13] and [22]. The technical difficulty in the derivation of the inclusion for nonconstant functions lies in the gluing process for the local constructions. We adapt an approach of [6] and use a suitable Vitali covering.

The other part of the proof regards necessary conditions for limits of data convergent sequences. More precisely, we have to show that limits take only values in \(C\cup \mathcal {D}_\mathrm {loc}^A\). This part of the proof relies on the div-curl lemma [24]. In our context, the notion of data convergence of [6] provides exactly the prerequisites in order to use the div-curl lemma for data convergent sequences.

Literature.

Relaxation is a classical problem in the calculus of variations. For a functional \(I:X\rightarrow {\bar{\mathbb {R}}}\) on a Banach space X, one introduces the relaxed functional \(I^{\mathrm {relax}}:X\rightarrow {\bar{\mathbb {R}}}\) as \(I^{\mathrm {relax}}(u) := \inf \left\{ \liminf _k I(u^k) \,|\, u^k\rightharpoonup u \right\} \). A related notion is that of quasiconvexity; loosely speaking, quasiconvex functionals coincide with their relaxation. For fundamental results on these important concepts we refer to [2, 8, 12]. For a functional I which is not quasiconvex, one can construct laminates or more complex patterns in order to find the relaxed functional and/or the quasiconvex envelope of the integrand, see e.g. [3] and [5]. For an introduction we refer to [22].

The data driven perspective introduces a new concept of a relaxation. For a data set \(\mathcal {D}\), the task is to study the relaxed data set, which consists of points that are attainable as limits in the sense of data convergence. A relaxed data set in this sense has been calculated in [6] for a problem in the vectorial case: For a data set that describes a non-monotone material law (corresponding to a non-convex energy), the authors determine the relaxed data set, compare (3.26) and Theorem 3.6 in [6]. The relaxed data set is larger than the original data set, but it is smaller than the convex hull of the original data set. A similar phenomenon appears in our main result.

We want to emphasize the close relation to homogenization. In the primal problem of homogenization, one prescribes different material laws in different points x of the macroscopic domain, and asks for the effective law for fine mixtures. Building upon such results, one then asks: With any material laws in different points x (material laws of some admissible set), which effective material laws can be obtained by homogenization? This leads to bounds for effective material laws as in [14, 15, 19] and to optimization of the distribution of the single material laws, see [1, 4]. For early results in this direction which also highlight the relation to relaxation see [20, 21].

Our main result may be interpreted from the perspective of homogenization. We use the two material laws \(\mathcal {D}^A\) and \(\mathcal {D}^B\) in different regions of the macroscopic domain, possibly in a fine mixture. We ask what effective laws can be obtained in the limit. A caveat of this description is that \(\mathcal {D}^B\) is not a linear relation and hence does not describe a material law in the classical setting of homogenization.

We will make use of the div-curl lemma in the second part of the proof. This lemma is also used in the compensated compactness method of homogenization, see [16, 23]. Related concepts are those of \(\Gamma \)-convergence [9], Young measures [12], and H-convergence [13].

For recent developments of the data driven approach we refer to [10] and [17], which are both concerned with numerical aspects. Finite plasticity in the context of data driven analysis is treated in [7].

1.1 The main result

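Let \(n\ge 2\) be the dimension, \(Q\subset \mathbb {R}^n\) be a bounded Lipschitz domain, \(f\in H^{-1}(Q; \mathbb {R})\) a given source, and \(A\in \mathbb {R}^{n\times n}\) a positive definite symmetric matrix. We consider the local material data sets

\[ \mathcal{D}^A_\mathrm{loc} := \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, j = Ag \right\} \]

and

\[ \mathcal{D}^B_\mathrm{loc} := \{(0,e_1)\}, \qquad \mathcal{D}_\mathrm{loc} := \mathcal{D}^A_\mathrm{loc}\cup\mathcal{D}^B_\mathrm{loc}. \]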

We therefore enrich the data set \(\mathcal {D}_\mathrm {loc}^A\) of the classical approach with the one-point set \(\mathcal {D}_\mathrm {loc}^B\). We choose here \((0,e_1)\not \in \mathcal {D}_\mathrm {loc}^A\) as the position of the outlier; by elementary transformations, an arbitrary outlier can be analyzed. Functions with values in the data set are defined by

\[ \mathcal{D} := \left\{ (g,j)\in L^2(Q;\mathbb{R}^n)\times L^2(Q;\mathbb{R}^n) \,\middle|\, (g(x),j(x))\in\mathcal{D}_\mathrm{loc} \text{ for a.e. } x\in Q \right\}. \tag{1.7} \]

We recall that the fundamental task in the data driven approach is to find a pair \((G,J)\in \mathcal {E}_f\) from (1.1) that minimizes the distance to \(\mathcal {D}\). In the above setting, a vanishing distance can be realized since \(\mathcal {D}\) is larger than \(\mathcal {D}^A\).
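For intuition about the minimization task, the following Python sketch computes the pointwise distance of a value \((G,J)\in \mathbb {R}^n\times \mathbb {R}^n\) to \(\mathcal {D}_\mathrm {loc} = \mathcal {D}^A_\mathrm {loc}\cup \mathcal {D}^B_\mathrm {loc}\). It is an illustration under our own assumptions: we use the plain Euclidean norm on \(\mathbb {R}^n\times \mathbb {R}^n\) (other norms are possible), matrices are stored as lists of rows, and the helper names `solve` and `dist_to_data` are hypothetical. The distance to the graph \(\{(g,Ag)\}\) follows from the normal equations \((I + A^TA)g = G + A^TJ\).

```python
import math


def matvec(A, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def solve(M, rhs):
    """Solve M x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, n):
            factor = aug[i][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[i][k] -= factor * aug[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def dist_to_data(G, J, A):
    """Euclidean distance of (G, J) to {(g, A g)} union {(0, e_1)}."""
    n = len(G)
    At = [list(col) for col in zip(*A)]          # transpose of A
    # Normal equations of min_g |g - G|^2 + |A g - J|^2
    M = [[(1.0 if i == k else 0.0) + sum(At[i][m] * A[m][k] for m in range(n))
          for k in range(n)] for i in range(n)]
    rhs = [G[i] + sum(At[i][m] * J[m] for m in range(n)) for i in range(n)]
    g = solve(M, rhs)
    Ag = matvec(A, g)
    d_plane = math.sqrt(sum((a - b) ** 2 for a, b in zip(g, G))
                        + sum((a - b) ** 2 for a, b in zip(Ag, J)))
    e1 = [1.0] + [0.0] * (n - 1)
    d_point = math.sqrt(sum(x * x for x in G)
                        + sum((a - b) ** 2 for a, b in zip(J, e1)))
    return min(d_plane, d_point)
```

Since \(\mathcal {D}\) is defined pointwise, under these assumptions the squared \(L^2\) distance of a pair of functions to \(\mathcal {D}\) is the integral over Q of the squared pointwise distance (modulo a measurable choice of nearest points); values in \(\mathcal {D}^A_\mathrm {loc}\) or at the outlier have distance 0.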

Our interest is to study the relaxed data set, which is the set of states that can be approximated in the sense of data convergence with sequences in \(\mathcal {E}_f\times \mathcal {D}\). We use the notion of data convergence of Definition 3.1 in [6].

Definition 1

(Relaxed data set \(\mathcal {D}^{\mathrm {relax}}\); data convergence) We use \(\mathcal {E}_f\) from (1.1) and \(\mathcal {D}\) from (1.7). A pair \((g,j) \in L^2(Q; \mathbb {R}^n)\times L^2(Q; \mathbb {R}^n)\) is in the relaxed data set and we write \((g,j)\in \mathcal {D}^{\mathrm {relax}}\) if the following holds:

There exists a sequence \(h\rightarrow 0\), sequences \((g^h, j^h)_h\) and \((G^h, J^h)_h\), and a limit \((G, J)\in \mathcal {E}_f\) such that, for every h,

\[ (g^h, j^h)\in\mathcal{D} \quad\text{and}\quad (G^h, J^h)\in\mathcal{E}_f. \]

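The sequence of pairs \(((g^h, j^h), (G^h, J^h))_h\) converges in the sense of data convergence to ((g, j), (G, J)), which means that

\[ g^h\rightharpoonup g,\quad j^h\rightharpoonup j,\quad G^h\rightharpoonup G,\quad J^h\rightharpoonup J \quad\text{weakly in } L^2(Q;\mathbb{R}^n), \]
\[ g^h - G^h\rightarrow g - G,\quad j^h - J^h\rightarrow j - J \quad\text{strongly in } L^2(Q;\mathbb{R}^n), \]

as \(h\rightarrow 0\).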
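Definition 1 asks only for weak convergence of the individual fields, while the differences \(g^h-G^h\) and \(j^h-J^h\) must converge strongly. The following Python sketch is our own one-dimensional illustration on \(Q=(0,1)\), with the hypothetical test function \(\varphi (x) = x\) and a midpoint rule. It shows the gap between the two notions for a laminate that equals 1 on a volume fraction \(1-b\) of each period h and 0 elsewhere: the pairing with the test function approaches \((1-b)/2\), while the \(L^2\) distance to the weak limit \(1-b\) equals \(\sqrt{b(1-b)}\) whenever \(1/h\) is an integer.

```python
import math


def laminate(x, h, b):
    """Scalar laminate with period h: 0 on (0, b h) + hZ, 1 on (b h, h) + hZ."""
    t = (x / h) % 1.0
    return 0.0 if t < b else 1.0


def midpoint_integral(fn, n=100_000):
    """Midpoint rule on Q = (0, 1)."""
    dx = 1.0 / n
    return sum(fn((i + 0.5) * dx) for i in range(n)) * dx


def weak_and_strong(h, b):
    """Return (pairing with phi(x) = x, L^2 distance to the weak limit 1 - b)."""
    pairing = midpoint_integral(lambda x: laminate(x, h, b) * x)
    l2 = math.sqrt(midpoint_integral(lambda x: (laminate(x, h, b) - (1 - b)) ** 2))
    return pairing, l2
```

In a data convergent sequence, such oscillations are allowed in each field separately but must cancel in the differences; in the laminate constructions of Section 2, \(G^h\) follows the oscillations of \(g^h\) up to a cut-off near \(\partial Q\).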

We remark that the relaxed data set \(\mathcal {D}^{\mathrm {relax}}\) can also be characterized as a Kuratowski limit. The precise statement is provided in Lemma 1 below.

We will characterize the relaxed data set \(\mathcal {D}^{\mathrm {relax}}\) in terms of a local relaxed data set \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}\) which, in turn, is the union of two sets: the linear subspace \(\mathcal {D}_\mathrm {loc}^A\) and a truncated cone C with vertex at the outlier \(\mathcal {D}_\mathrm {loc}^B\). The cone is truncated by the subspace \(\mathcal {D}_\mathrm {loc}^A\).

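We define the cone in the following steps. For \(b\in [0,1]\), we set

\[ C_b := \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, j = b e_1 + Ag,\ g\cdot Ag\le (1-b)\, g_1 \right\}. \tag{1.9} \]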

For fixed \(b\in [0,1)\), the set \(C_b\) is an n-dimensional closed ellipsoid in \(\mathbb {R}^n\times \mathbb {R}^n\). For \(b=1\), the ellipsoid degenerates to a point, \(C_1 = \{ (0,e_1)\} = \mathcal {D}_\mathrm {loc}^B\). On the other hand, for \(b=0\), every vector in \(C_0\) satisfies \(j = A g\), hence \(C_0\subset \mathcal {D}_\mathrm {loc}^A\). We define the truncated cone C as

\[ C := \bigcup_{b\in[0,1]} C_b. \tag{1.10} \]
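The definition of C translates directly into a membership test: \((g,j)\in C\) if and only if \(j - Ag = b\,e_1\) for some \(b\in [0,1]\) and \(g\cdot Ag\le (1-b)\,g_1\); the parameter b is determined by \((g,j)\). A minimal Python sketch, assuming matrices stored as lists of rows and a small tolerance for rounding (the function name is hypothetical):

```python
def matvec(A, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def in_truncated_cone(g, j, A, tol=1e-12):
    """Return True if (g, j) lies in C, the union of the sets C_b, b in [0, 1]."""
    Ag = matvec(A, g)
    r = [jk - ak for jk, ak in zip(j, Ag)]      # r = j - A g, must equal b e_1
    b = r[0]
    if any(abs(rk) > tol for rk in r[1:]):      # j - A g not parallel to e_1
        return False
    if b < -tol or b > 1 + tol:                 # cone parameter outside [0, 1]
        return False
    gAg = sum(x * y for x, y in zip(g, Ag))
    return gAg <= (1 - b) * g[0] + tol          # ellipsoid condition of C_b
```

For \(A=\mathrm {id}\), the vertex \((0,e_1)\), the point \(((1/2)e_1, e_1)\) on the lateral boundary, and the point \((e_1,e_1)\) of the base \(C_0\) all pass the test, whereas \((2e_1, 2e_1)\in \mathcal {D}^A_\mathrm {loc}\) does not.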

Our main result is the characterization of the relaxed data set.

Theorem 1

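(Characterization of the relaxed data set) The set \(\mathcal {D}^{\mathrm {relax}}\) of Definition 1 is characterized as

\[ \mathcal{D}^{\mathrm{relax}} = \left\{ (g,j)\in L^2(Q;\mathbb{R}^n)\times L^2(Q;\mathbb{R}^n) \,\middle|\, (g(x),j(x))\in\mathcal{D}^{\mathrm{relax}}_\mathrm{loc} \text{ for a.e. } x\in Q \right\}, \tag{1.11} \]

where the local relaxed data set \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}\) is given by

\[ \mathcal{D}^{\mathrm{relax}}_\mathrm{loc} := C\cup\mathcal{D}^A_\mathrm{loc}, \]

with the truncated cone C of (1.9)–(1.10).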

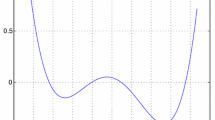

Theorem 1 characterizes the relaxation of the data set in the context of data driven analysis. The data driven convexification of a set consisting of a linear subspace and an outlier yields the union of the subspace with a truncated cone that connects the outlier with the subspace, compare Fig. 1 and Fig. 2. In particular, the data driven relaxation does not yield the (classical) convexification of the original set, which is an infinite strip (the infinite strip can be regarded as the truncated cone with opening angle \(\pi \); in this sense, the data driven relaxation yields a cone with smaller opening angle).

Fig. 1: A sketch for \(A=\mathrm {id}\), showing only the plane \((g_1, j_1)\in \mathbb {R}^2\). The diagonal line corresponds to the set \(\mathcal {D}_\mathrm {loc}^A\) of points with \(j = g\). The exceptional point P is \((g_1, j_1) = (0,1)\), corresponding to the one-point set of additional data points, \(\mathcal {D}_\mathrm {loc}^B = \{ (0,e_1) \}\).

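We note that the representation of \(\mathcal {D}^{\mathrm {relax}}\) in particular implies that the local relaxed data set \(\mathcal {D}_\mathrm {loc}^{\mathrm {relax}}\) coincides with the set of attainable values,

\[ \mathcal{D}^{\mathrm{relax}}_\mathrm{loc} = \mathcal{D}^{\mathrm{relax}}_* := \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, \text{the constant pair } (g,j) \text{ belongs to } \mathcal{D}^{\mathrm{relax}} \right\}. \]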

The inclusion \(C\cup \mathcal {D}_\mathrm {loc}^A\subset \mathcal {D}_*^{\mathrm {relax}}\) will be a crucial part in the proof of Theorem 1, see Section 2.1 below.

1.2 An alternative description of the relaxed data set

Our definition of \(\mathcal {D}^{\mathrm {relax}}\) was given in terms of sequences. As noted above, the set \(\mathcal {D}^{\mathrm {relax}}\) can also be described in terms of a Kuratowski limit as in [6].

Lemma 1

(Kuratowski limit) Let data convergence be denoted as \(\triangle -\lim \). We use Kuratowski convergence of sets, which coincides with \(\Gamma \)-convergence of the indicator functions. With these topological tools, the data relaxation can be written as a limit:

\[ \mathcal{D}^{\mathrm{relax}}\times\mathcal{E}_f = K(\triangle)\text{-}\lim\ \mathcal{D}\times\mathcal{E}_f. \]

Proof

As in [6], the sequential characterization of the Kuratowski limit follows from an (equi-)transversality condition.

Step 1: Transversality. We claim that there exist constants \(C_1,C_2>0\) such that every pair \(z=(g,j)\in \mathcal {D}\) and \(Z=(G,J)\in \mathcal {E}_f\) satisfies

The inequality is concluded with the help of the positivity of \(A\in \mathbb {R}^{n\times n}\), \(\xi \cdot A\xi \ge c_0|\xi |^2\) for some \(c_0>0\). From this estimate and the fact that \(z\in \mathcal {D}\) implies \(g=0\) on \(\{j\ne Ag\}\) we deduce

Since \(Z\in \mathcal {E}_f\) implies \(G=\nabla U\) and \(\nabla \cdot J=f\), we further obtain

where we have used Poincaré’s inequality and \(G=\nabla U\) in the last step; here and below, C denotes a constant that depends only on A, Q, n and that may change from line to line. Together with (1.16) we deduce that

The triangle inequality yields an analogous inequality for g,

Since \(j=e_1\) holds in \(\{j\ne Ag\}\) we next observe that

Using (1.19) and Young’s inequality, this provides

This estimate can be inserted in (1.18) and we obtain the corresponding estimate for G. By the triangle inequality, we control all functions g, G, j, J in \(L^2(Q;\mathbb {R}^n)\) by the right-hand side of (1.20). This proves the transversality (1.15).

Step 2: Sequential characterization of Kuratowski convergence. The Kuratowski limit \(K(\triangle )\text {-}\lim \ \mathcal {D}\times \mathcal {E}_f\) is given by the domain of the \(\Gamma \)-limit of the (constant sequence of the) indicator function of \(\mathcal {D}\times \mathcal {E}_f\). To characterize this set, consider any point \((z_0,Z_0)\in L^2(Q;\mathbb {R}^n)^2\). Since \(\Gamma \)-convergence is a local property, when computing the \(\Gamma \)-limit at this point we may restrict ourselves to any neighborhood of \((z_0,Z_0)\) with respect to the \(\triangle \)-topology. In particular, we may choose a neighborhood in which all pairs \((z,Z)\in L^2(Q;\mathbb {R}^n)^2\) satisfy \(\Vert (z-z_0)-(Z-Z_0)\Vert _{L^2(Q;\mathbb {R}^n)}<1\) (note that strong convergence of differences is part of the definition of \(\triangle \)-convergence). Then the transversality property implies that we can restrict the computation of the \(\Gamma \)-limit to a bounded set in \(L^2(Q;\mathbb {R}^n)^2\). On bounded sets, the data convergence topology is metrizable. Hence the topological and the sequential characterizations of \(\Gamma \)-convergence coincide [9, Proposition 8.1].

In sequential form, the \(\liminf \) and \(\limsup \) inequalities that characterize \(\Gamma \)-convergence of the indicator function of \(\mathcal {D}\times \mathcal {E}_f\) to the indicator function of \(\mathcal {D}^{\mathrm {relax}}\times \mathcal {E}_f\) are the following properties:

(i) For any sequence \((z^h,Z^h)\) in \(\mathcal {D}\times \mathcal {E}_f\) that \(\triangle \)-converges to a limit \((z,Z)\in L^2(Q;\mathbb {R}^n)^2\), there holds \((z,Z)\in \mathcal {D}^{\mathrm {relax}}\times \mathcal {E}_f\).

(ii) For any \((z,Z)\in \mathcal {D}^{\mathrm {relax}}\times \mathcal {E}_f\) there exists a sequence \((z^h,Z^h)\) in \(\mathcal {D}\times \mathcal {E}_f\) that \(\triangle \)-converges to (z, Z).

This is equivalent to the characterization of \(\mathcal {D}^{\mathrm {relax}}\) given in Definition 1. \(\square \)

1.3 Equivalent descriptions for the truncated cone C

The main purpose of this section is to derive a convenient description of the lateral boundary of C.

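Before we do so, let us briefly study the cone C in the special case that the dimension is \(n=2\) and that the linear law is given by \(A = \mathrm {id}\in \mathbb {R}^{2\times 2}\). In this situation, we can use the new variable \(r = (1-b)/2\) to write the condition \(g\cdot Ag \le (1-b)g_1\) as \(g_1^2 + g_2 ^2 \le 2 r g_1\). We find

\[ C = \bigcup_{r\in[0,1/2]} \left\{ (g,j)\in\mathbb{R}^2\times\mathbb{R}^2 \,\middle|\, j_1 = g_1 + 1 - 2r,\ j_2 = g_2,\ g_1^2 + g_2^2\le 2 r g_1 \right\}. \tag{1.21} \]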

The last condition expresses that \(g = (g_1, g_2)\) is contained in the disc \(B_{r}((r,0))\) with radius r and center (r, 0). Because of \(j_1 = g_1+1-2r\), the disc is mapped into an inclined plane.

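The lateral boundary of C. For \(b\in [0,1]\) fixed, the lateral boundary of \(C_b\) is

\[ \partial_{\mathrm{lat}} C_b := \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, j = b e_1 + Ag,\ g\cdot Ag = (1-b)\, g_1 \right\}. \]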

The lateral boundary of C can be expressed as \(\partial _{\mathrm {lat}}C := \bigcup _{b\in [0,1]}\partial _{\mathrm {lat}}C_b\). With this notation, the boundary of C is given by

\[ \partial C = C_0\cup\partial_{\mathrm{lat}} C. \]

We can generalize (1.21) as follows. Let \(y\in \mathbb {R}^n\) be a vector that satisfies \(Ay=e_1\). We introduce the scalar product \(\langle v_1,v_2\rangle _A := v_1\cdot Av_2\) and the associated norm \(|\cdot |_A\). The corresponding sphere with center \(\tfrac{1}{2}y\) that contains 0 is

\[ S^A_y := \left\{ g\in\mathbb{R}^n \,\middle|\, \left| g - \tfrac12 y\right|_A = \tfrac12 |y|_A \right\}. \]

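Then \((g,j)\in \partial _{\mathrm {lat}}C_b\) if and only if \(g \in (1-b)S^A_y\) and \(j=be_1+Ag\). In fact, for \(b=1\), there holds \(\partial _{\mathrm {lat}}C_b = \{(0,e_1)\}\) and the equivalence is valid. For \(b\in [0,1)\), we find, using \(\langle g,y\rangle _A = g\cdot Ay = g_1\),

\[ g\cdot Ag = (1-b)\, g_1 \iff |g|_A^2 - (1-b)\langle g, y\rangle_A = 0 \iff \left| g - \tfrac{1-b}{2}\, y\right|_A^2 = \left(\tfrac{1-b}{2}\right)^2 |y|_A^2 \iff g\in (1-b)\, S^A_y. \]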
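The equivalence rests on an algebraic identity: with \(c = \tfrac{1-b}{2}y\) and \(Ay = e_1\), one has \(|g-c|_A^2 - |c|_A^2 = g\cdot Ag - (1-b)g_1\) for every g, because A is symmetric. The following Python sketch checks this identity numerically for a \(2\times 2\) symmetric positive definite matrix of our choosing; the helper name and the sampling box are hypothetical.

```python
import random


def check_sphere_identity(A, b, trials=1000, seed=0):
    """Max deviation between |g - c|_A^2 - |c|_A^2 and g.Ag - (1-b) g_1,
    where c = (1-b) y / 2 and A y = e_1 (2x2 symmetric positive definite A)."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    y = [a22 / det, -a21 / det]                  # y = A^{-1} e_1 (first column)
    c = [(1 - b) * yi / 2 for yi in y]

    def form(u, v):                              # <u, v>_A = u . A v
        return sum(u[i] * (A[i][0] * v[0] + A[i][1] * v[1]) for i in range(2))

    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        g = [rng.uniform(-2, 2), rng.uniform(-2, 2)]
        d = [g[0] - c[0], g[1] - c[1]]
        lhs = form(d, d) - form(c, c)
        rhs = form(g, g) - (1 - b) * g[0]
        worst = max(worst, abs(lhs - rhs))
    return worst
```

A deviation at the level of rounding errors is consistent with the identity; the sphere condition \(|g-c|_A = |c|_A\) and the lateral boundary condition \(g\cdot Ag = (1-b)g_1\) then have the same solution set.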

For later use we include the following alternative characterization of \(\partial _{\mathrm {lat}}C_b\).

Lemma 2

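The lateral boundary can also be written as

\[ \partial_{\mathrm{lat}} C_b = \left\{ (g,j)\in\mathbb{R}^n\times\mathbb{R}^n \,\middle|\, j = b e_1 + Ag,\ g = (1-b)\,\frac{\nu_1}{\nu\cdot A\nu}\,\nu \text{ for some } \nu\in\mathbb{R}^n\setminus\{0\} \right\}. \tag{1.23} \]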

Proof

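We fix \(b\in [0,1]\) and denote by \(K_b\) the right-hand side of (1.23). Consider any (g, j) with \(j=be_1+Ag\) and \(g\ne 0\). Then

\[ (g,j)\in\partial_{\mathrm{lat}}C_b \iff g\cdot Ag = (1-b)\, g_1 \iff g = (1-b)\,\frac{g_1}{g\cdot Ag}\, g \implies (g,j)\in K_b, \]

where the choice \(\nu = g\) provides the last implication (Fig. 2).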

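Vice versa, let (g, j) with \(g\ne 0\) be in \(K_b\). By definition, there exists \(\nu \ne 0\) with \(g=(1-b)\frac{\nu _1}{\nu \cdot A\nu }\nu \). We can calculate \(g\cdot Ag = (1-b)^2 \nu _1^2/ (\nu \cdot A\nu )\) and \((1-b) g_1 = (1-b)^2 \nu _1^2 / (\nu \cdot A\nu )\), which shows \(g\cdot Ag = (1-b) g_1\). Finally, for \(g = 0\) the point \((0,be_1)\) lies in both sets: it satisfies \(g\cdot Ag = 0 = (1-b)g_1\), and it belongs to \(K_b\) via any \(\nu \ne 0\) with \(\nu _1 = 0\), which exists since \(n\ge 2\). \(\square \)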
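Lemma 2 gives an explicit parametrization of \(\partial _{\mathrm {lat}}C_b\) by directions \(\nu \ne 0\). A short Python sketch (hypothetical helper names, matrices as lists of rows) evaluates \(g(\nu ) = (1-b)\frac{\nu _1}{\nu \cdot A\nu }\nu \), checks that \(g\cdot Ag - (1-b)g_1\) vanishes, and checks that the choice \(\nu = g\) from the proof reproduces g when \(g_1\ne 0\).

```python
def matvec(A, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def lateral_point(nu, A, b):
    """g = (1 - b) * nu_1 / (nu . A nu) * nu, the parametrization of Lemma 2."""
    scale = (1 - b) * nu[0] / dot(nu, matvec(A, nu))
    return [scale * x for x in nu]


def boundary_defect(g, A, b):
    """g . A g - (1 - b) g_1, which vanishes exactly on the lateral boundary."""
    return dot(g, matvec(A, g)) - (1 - b) * g[0]
```

Directions with \(\nu _1 = 0\) produce \(g = 0\), i.e. the point \((0, be_1)\), in line with the last step of the proof.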

2 Construction of approximating sequences

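The goal of this section is to prove the inclusion

\[ \left\{ (g,j)\in L^2(Q;\mathbb{R}^n)\times L^2(Q;\mathbb{R}^n) \,\middle|\, (g(x),j(x))\in C\cup\mathcal{D}^A_\mathrm{loc} \text{ for a.e. } x\in Q \right\} \subset\mathcal{D}^{\mathrm{relax}}. \]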

This is one of the inclusions in (1.11). It is that part of Theorem 1 that must be verified by the construction of approximations. We show in Subsection 2.1 that constant functions with values in \(C\cup \mathcal {D}_\mathrm {loc}^A\) can be approximated by data convergence, i.e. the inclusion \(C\cup \mathcal {D}_\mathrm {loc}^A\subset \mathcal {D}_*^{\mathrm {relax}}\). In Subsection 2.2 the construction is extended to nonconstant functions with values in \(C\cup \mathcal {D}_\mathrm {loc}^A\).

2.1 Approximation of constant functions

The aim of this subsection is to verify \(C\cup \mathcal {D}_\mathrm {loc}^A\subset \mathcal {D}_*^{\mathrm {relax}}\). To this end, we choose an arbitrary point \((g,j) \in C\cup \mathcal {D}_\mathrm {loc}^A\subset \mathbb {R}^n\times \mathbb {R}^n\). We have to construct sequences \((g^h,j^h)\in \mathcal {D}\) and \((G^h,J^h)\in \mathcal {E}_f\) such that the pairs data converge, satisfying \((g^h,j^h) \rightharpoonup (g,j)\). To fulfil the condition \(\nabla \cdot J^h = f\), it is convenient to fix a vector field \(J_f\in L^2(Q;\mathbb {R}^n)\) with \(\nabla \cdot J_f=f\).

In the case \((g,j) \in \mathcal {D}_\mathrm {loc}^A\) there holds \(j = A g\) and we can use trivial sequences (in particular, \(j^h = j\) and \(g^h = g\)) in order to obtain \((g,j) \in \mathcal {D}_*^{\mathrm {relax}}\). When \((g,j) \in C\) is a point on the lateral boundary of the cone, we use simple laminates to construct data convergent sequences \((g^h, j^h)\) and \((G^h, J^h)\). Finally, when \((g,j) \in C\) is an inner point, we use iterated laminates to construct the data convergent sequences.

In order to motivate the subsequent constructions, we present what can be achieved in the case \(A = \mathrm {id}\) with simple laminates of horizontal or vertical layers. In terms of Fig. 1: the simple laminates show that all points on the vertical segment and all points on the horizontal segment of the cone can be constructed.

Remark 1

(Horizontal layers) We consider \(A = \mathrm {id}\) and fix \(b \in (0,1)\). We decompose Q into thin horizontal layers such that \(e_1\) is a tangential vector of the interfaces. The layers have widths \((1-b) h\) and \(b\, h\) in an alternating fashion. The layers with width \((1-b) h\) are called A-layers, the other layers are B-layers. In the A-layers, we set \(j^h := G^h := g^h := 0\), in the B-layers we set \(G^h := g^h := 0\) and \(j^h := e_1\). We finally set \(J^h := j^h + J_f\), for \(J_f\) as above.

By construction, \((g^h, j^h)\in \mathcal {D}\). Since the layers are horizontal, \(j^h\) is tangential to the interfaces and hence has a vanishing divergence, so that \(J^h\) has the divergence f. As a trivial function, \(G^h\) is a gradient. We find \((G^h, J^h)\in \mathcal {E}_f\). The functions converge weakly in \(L^2(Q;\mathbb {R}^n)\), and the differences \(g^h - G^h = 0\) and \(j^h - J^h = -J_f\) do not depend on h and hence converge strongly. We therefore obtain that the vertical line \(\{ (g,j) \,|\, g = 0, j = (j_1, 0, ..., 0), j_1\in [0,1] \}\) is contained in \(\mathcal {D}_*^{\mathrm {relax}}\).

Remark 2

(Vertical layers) We consider again \(A = \mathrm {id}\). We proceed as in Remark 1, but we now decompose Q into thin layers with normal vector \(e_1\). In the interior of Q, in the A-layers, we set \(j^h := G^h := g^h := e_1\), in the B-layers we set \(G^h := g^h := 0\) and \(j^h := e_1\), and \(J^h := j^h + J_f\).

Up to truncations near the boundary, one can verify \((G^h, J^h)\in \mathcal {E}_f\), \((g^h, j^h)\in \mathcal {D}\), and the convergence properties. Here \(j^h = e_1\) in both types of layers, so that \(j^h\) is divergence free and converges to \(e_1\), while \(g^h\) converges weakly to \((1-b)e_1\). We therefore obtain that the horizontal line \(\{ (g,j) \,|\, g = (g_1, 0, ..., 0), g_1\in [0,1], j = e_1 \}\) is contained in \(\mathcal {D}_*^{\mathrm {relax}}\).
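The two remarks can be double-checked by averaging the phases. The following Python sketch (our own check for \(A=\mathrm {id}\) and \(n=2\); helper names hypothetical) computes the weak limits of the horizontal and vertical laminates from the volume fractions and tests them against the definition of C: the horizontal laminate gives \((0, b e_1)\), and the vertical one gives \(((1-b)e_1, e_1)\), which lies on \(\partial _{\mathrm {lat}}C_b\).

```python
def average(phases):
    """Volume-weighted average of phases given as (fraction, g, j)."""
    n = len(phases[0][1])
    g = [sum(f * gp[i] for f, gp, _ in phases) for i in range(n)]
    j = [sum(f * jp[i] for f, _, jp in phases) for i in range(n)]
    return g, j


def horizontal(b, n=2):
    zero, e1 = [0.0] * n, [1.0] + [0.0] * (n - 1)
    # A-layers (fraction 1-b): g = j = 0; B-layers (fraction b): g = 0, j = e_1
    return average([(1 - b, zero, zero), (b, zero, e1)])


def vertical(b, n=2):
    zero, e1 = [0.0] * n, [1.0] + [0.0] * (n - 1)
    # A-layers (fraction 1-b): g = j = e_1; B-layers (fraction b): g = 0, j = e_1
    return average([(1 - b, e1, e1), (b, zero, e1)])


def in_cone_identity(g, j, tol=1e-12):
    """Membership in C for A = id: j - g = b e_1, b in [0, 1], |g|^2 <= (1-b) g_1."""
    r = [jk - gk for jk, gk in zip(j, g)]
    b = r[0]
    if any(abs(x) > tol for x in r[1:]) or not (-tol <= b <= 1 + tol):
        return False
    return sum(x * x for x in g) <= (1 - b) * g[0] + tol
```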

After these motivating examples, we move on to the construction in the general case.

Lemma 3

(Simple laminates) Let \((g,j) \in \mathcal {D}_\mathrm {loc}^A\cup \partial C\) be a point in the plane or on the boundary of the cone. Then, for every sequence \(h\searrow 0\), there exist \((G^h, J^h)\in \mathcal {E}_f\) and \((g^h, j^h)\in \mathcal {D}\) such that the sequence of pairs is data convergent with \((g^h, j^h) \rightharpoonup (g,j)\) and \((G^h, J^h) \rightharpoonup (0,j+J_f)\) in \(L^2(Q;\mathbb {R}^n)\). In particular, there holds

\[ (g,j)\in\mathcal{D}^{\mathrm{relax}}_*. \]

The sequence can be chosen such that \(|g^h|+|j^h|\le C(n,A)(1+|g|)\) in Q.

Proof

For a point \((g,j) \in \mathcal {D}_\mathrm {loc}^A\), i.e., \(j = A g\), trivial sequences can be used: We choose constant functions \(g^h = g\), \(G^h = 0\), \(j^h = j\), and \(J^h = j + J_f\).

The vertex of the cone (i.e.: the case \((g,j)\in \mathcal {D}_\mathrm {loc}^B\)) is also treated by choosing constant functions.

It remains to treat the case that, for some parameter \(b\in (0,1)\), there holds \((g,j)\in \partial _{\mathrm {lat}}C_b\). By Lemma 2, we can express this point in the form

\[ g = (1-b)\,\frac{\nu_1}{\nu\cdot A\nu}\,\nu, \qquad j = b e_1 + (1-b)\,\frac{\nu_1}{\nu\cdot A\nu}\,A\nu \]

for some \(\nu \in \mathbb {R}^n\setminus \{0\}\).

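Step 1: Construction of approximating sequences. For \(h>0\), we consider the following layered subdivision of Q, using the direction \(\nu \),

\[ B^h := \left\{ x\in Q \,\middle|\, x\cdot\nu\in [0,bh) + h\mathbb{Z} \right\}, \qquad A^h := \left\{ x\in Q \,\middle|\, x\cdot\nu\in [bh,h) + h\mathbb{Z} \right\}. \]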

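For the volume fractions we note that \(|B^h|\rightarrow b|Q|\) and \(|A^h|\rightarrow (1-b)|Q|\) as \(h\searrow 0\). The field \((g^h,j^h)\) is chosen as

\[ (g^h, j^h) := \begin{cases} \left( \dfrac{\nu_1}{\nu\cdot A\nu}\,\nu,\ \dfrac{\nu_1}{\nu\cdot A\nu}\,A\nu \right) & \text{in } A^h,\\[1ex] (0, e_1) & \text{in } B^h. \end{cases} \]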

By definition of the fields, \((g^h, j^h)\in \mathcal {D}\) is satisfied and \(|g^h|+|j^h|\le C(n,A)\) holds in Q.

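We note that the construction assures \(j^h\cdot \nu = e_1\cdot \nu \) in \(B^h\) and

\[ j^h\cdot\nu = \frac{\nu_1}{\nu\cdot A\nu}\, A\nu\cdot\nu = \nu_1 = e_1\cdot\nu \quad\text{in } A^h. \]

Since \(j^h\) depends on x only through \(x\cdot \nu \), its distributional divergence is the derivative of the scalar profile \(j^h\cdot \nu \) with respect to \(x\cdot \nu \). As \(j^h\cdot \nu \equiv \nu _1\) is constant, this shows \(\nabla \cdot j^h=0\) in Q.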
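The construction can be checked on a concrete example. The Python sketch below is an illustration with hypothetical helper names (the matrix A and the direction \(\nu \) in the check are arbitrary choices): it builds the two phases, verifies that \(j^h\cdot \nu \) takes the value \(\nu _1\) in both phases, and verifies that the volume-weighted average lies on \(\partial _{\mathrm {lat}}C_b\) with \(j - Ag = b e_1\).

```python
def matvec(A, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def simple_laminate(nu, A, b):
    """Phases and weak limit of the simple laminate in the proof of Lemma 3.

    Returns (p_a, p_b, p) with p_a = (s nu, s A nu), s = nu_1 / (nu . A nu),
    used in A^h (volume fraction 1 - b), p_b = (0, e_1) used in B^h
    (volume fraction b), and p the volume-weighted average.
    """
    n = len(nu)
    Anu = matvec(A, nu)
    s = nu[0] / dot(nu, Anu)
    g_a, j_a = [s * x for x in nu], [s * x for x in Anu]
    g_b, j_b = [0.0] * n, [1.0] + [0.0] * (n - 1)
    g = [(1 - b) * x + b * y for x, y in zip(g_a, g_b)]
    j = [(1 - b) * x + b * y for x, y in zip(j_a, j_b)]
    return (g_a, j_a), (g_b, j_b), (g, j)
```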

We want to find a function \(u^h : Q\rightarrow \mathbb {R}\) that satisfies

\[ \nabla u^h = g^h \quad\text{in } Q. \]

The function \(u^h\) can be constructed explicitly. We use the continuous (and piecewise affine) function \(v^h :\mathbb {R}\rightarrow \mathbb {R}\) with \(v^h(0) = 0\) and with the derivatives \(\partial _\xi v^h(\xi ) = 0\) for \(\xi \in (0,bh) + h\mathbb {Z}\) and \(\partial _\xi v^h(\xi ) = \nu _1/(\nu \cdot A\nu )\) for \(\xi \in (bh,h) + h\mathbb {Z}\). Using \(v^h\), we set

\[ u^h(x) := v^h(x\cdot\nu), \qquad\text{so that}\quad \nabla u^h(x) = \partial_\xi v^h(x\cdot\nu)\,\nu = g^h(x). \]

We may introduce

\[ u(x) := g\cdot x = (1-b)\,\frac{\nu_1}{\nu\cdot A\nu}\, x\cdot\nu. \]

Then \(u^h\rightarrow u\) uniformly, and \(\Vert u^h - u \Vert _{L^\infty } \le C h\) holds for a constant C that does not depend on h.
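The estimate \(\Vert u^h - u\Vert _{L^\infty }\le Ch\) can be made explicit. With \(s := \nu _1/(\nu \cdot A\nu )\), one has \(u^h(x) - u(x) = v^h(x\cdot \nu ) - (1-b)s\,x\cdot \nu \), and on each period the deviation \(|v^h(\xi ) - (1-b)s\xi |\) is largest at \(\xi \in bh + h\mathbb {Z}\), where it equals \(|s|\,b(1-b)h\). The following Python sketch (hypothetical helper names, sampling on a bounded interval) implements \(v^h\) and compares the sampled deviation with this bound.

```python
import math


def v_h(xi, h, b, s):
    """Continuous piecewise affine v^h with v^h(0) = 0: slope 0 on (0, b h) + hZ,
    slope s on (b h, h) + hZ, where s = nu_1 / (nu . A nu)."""
    k = math.floor(xi / h)           # index of the period containing xi
    t = xi - k * h                   # position inside the period, in [0, h)
    return s * (1 - b) * h * k + s * max(0.0, t - b * h)


def max_deviation(h, b, s, samples=20_001, span=3.0):
    """Sampled sup of |v^h(xi) - (1-b) s xi| on [-span, span]."""
    pts = (-span + 2 * span * i / (samples - 1) for i in range(samples))
    return max(abs(v_h(xi, h, b, s) - (1 - b) * s * xi) for xi in pts)
```

In particular, one admissible choice of the constant is \(C = |s|\,b(1-b)\le |s|/4\).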

In order to define a corresponding pair \((G^h,J^h)\), we choose a cut-off function \(\varphi _h\in C^1_c(Q)\) with values in [0, 1], satisfying \(\varphi _h=1\) in \(\{x\in Q \,|\, \mathrm {dist}(x,\partial Q)\ge 2h\}\), \(\varphi _h=0\) in \(\{x\in Q \,|\, \mathrm {dist}(x,\partial Q)\le h\}\), and \(|\nabla \varphi _h|\le \frac{2}{h}\). With these preparations we define

\[ G^h := \nabla\big(\varphi_h\,(u^h - u)\big), \qquad J^h := j^h + J_f. \]

Step 2: Verification of the properties. By definition, \(G^h\) is a gradient of a function in \(H^1_0(Q)\). The field \(J^h\) has the divergence \(\nabla \cdot J^h = \nabla \cdot j^h + \nabla \cdot J_f = f\). This shows \((G^h, J^h)\in \mathcal {E}_f\).

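We now verify the data convergence property. We clearly have

\[ g^h\rightharpoonup g,\qquad j^h\rightharpoonup j,\qquad G^h\rightharpoonup 0,\qquad J^h\rightharpoonup j + J_f \]

in \(L^2(Q;\mathbb {R}^n)\). Finally we have \(J^h-j^h =J_f\) and

\[ g^h - G^h = (1-\varphi_h)\, g^h + \varphi_h\nabla u - (u^h - u)\nabla\varphi_h \;\rightarrow\; g \quad\text{strongly in } L^2(Q;\mathbb{R}^n). \]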

Here, the convergence follows from the following facts: since \(g^h\) is uniformly bounded and \((1-\varphi _h)\rightarrow 0\) strongly in \(L^2(Q)\), the first term converges to 0. The pointwise convergence \(\varphi _h\nabla u \rightarrow \nabla u\) with the uniform bound \(|\varphi _h\nabla u| \le |\nabla u|\) implies strong convergence of the second term to \(g = \nabla u\). The last term \((u^h-u)\nabla \varphi _h\) is uniformly bounded, since \(|u^h-u|\le Ch\) and \(|\nabla \varphi _h|\le 2/h\), and it vanishes outside the strip \(\{x\in Q \,|\, \mathrm {dist}(x,\partial Q) < 2h\}\); hence it converges to zero almost everywhere and, by dominated convergence, strongly to 0.

Altogether, we obtain that \(\big ((g^h,j^h),(G^h,J^h)\big )\rightarrow \big ((g,j),(G,J)\big )\) in the sense of data convergence. In particular, \((g,j)\in \mathcal {D}^{{\mathrm {relax}}}_*\). \(\square \)

We next show that also the interior of the cone C will be reached by suitable iterated laminate constructions.

Lemma 4

(Iterated laminates) Let \((g,j) \in C\) be a point of the cone. Then, for every sequence \(h\searrow 0\), there exist \((G^h, J^h)\in \mathcal {E}_f\) and \((g^h, j^h)\in \mathcal {D}\) such that the sequence of pairs is data convergent with \((g^h, j^h) \rightharpoonup (g,j)\) and \((G^h, J^h) \rightharpoonup (0,J_f+j)\) in \(L^2(Q;\mathbb {R}^n)\). In particular, there holds

\[ (g,j)\in\mathcal{D}^{\mathrm{relax}}_*. \]

The sequence can be chosen such that \(|g^h|+|j^h|\le C(n,A)\) in Q and, in the case \(f = 0\), such that in addition \(J^h \equiv j\) holds in a neighborhood of \(\partial Q\).

Proof

In view of Lemma 3 it remains to consider interior points of the cone C. In this case we use iterated laminates for the construction.

Step 1: Preparations. Let \(p_C= (g_C, j_C)\in \mathring{C}\) be an arbitrary point in the interior of the cone. We show in Lemma A.1 of the Appendix that we can write \(p_C\) as a convex combination as follows: There exist two points \(p_A = (g_A, j_A) \in \mathcal {D}^A_\mathrm {loc}\) and \(p_L = (g_L, j_L) \in \partial _\mathrm {lat}C\) and a parameter \(\lambda \in (0,1)\) such that

\[ p_C = \lambda\, p_L + (1-\lambda)\, p_A \]

and such that, additionally,

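As in the proof of Lemma 3 we exploit Lemma 2: We can express the point \(p_L \in \partial _\mathrm {lat}C\) as a convex combination with some vector \(\nu \in \mathbb {R}^n\setminus \{0\}\):

\[ p_L = (1-b)\, p_a + b\, p_b \]

with

\[ p_a = (g_a, j_a) := \left( \frac{\nu_1}{\nu\cdot A\nu}\,\nu,\ \frac{\nu_1}{\nu\cdot A\nu}\,A\nu \right)\in\mathcal{D}^A_\mathrm{loc}, \qquad p_b = (g_b, j_b) := (0, e_1), \]

where \(b\in [0,1]\) is such that \(p_L\in \partial _\mathrm {lat}C_b\).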

The iterated laminate is constructed as a coarse laminate with layers of width \(\sqrt{h}\) and a fine laminate with layers of width of order h. Every second layer of the coarse laminate uses \(p_A = (g_A, j_A)\). The fine laminate uses \((g_a, j_a)\) and \((g_b, j_b)\); on average, these two phases produce \(p_L = (g_L, j_L)\). The mixture of the coarse layers with values \(p_A\) and \(p_L\) provides the desired value \(p_C\). For a sketch see Fig. 3.
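The averaging behind the iterated laminate can be checked on a hypothetical example with \(A=\mathrm {id}\) and \(n=2\); the numbers below are our own choice and are not taken from Lemma A.1. The three phases \(p_A\), \(p_a\), \(p_b\) occupy the volume fractions \(1-\lambda \), \(\lambda (1-b)\), \(\lambda b\). The sketch also checks the standard compatibility conditions for laminates (gradients differ by a multiple of the layer normal, normal components of the flux agree) for the fine layers with normal \(\nu \) and for the coarse layers with normal \(\theta \), where \(p_L\) enters as the average of the fine laminate; whether these coincide with the additional conditions of Lemma A.1 is an assumption on our part.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def combo(weighted_points):
    """Convex combination sum_i w_i p_i of points p = (g, j) in R^n x R^n."""
    n = len(weighted_points[0][1][0])
    g = [sum(w * p[0][i] for w, p in weighted_points) for i in range(n)]
    j = [sum(w * p[1][i] for w, p in weighted_points) for i in range(n)]
    return g, j


def parallel_2d(u, v, tol=1e-12):
    """True if two vectors in R^2 are parallel (vanishing 2x2 determinant)."""
    return abs(u[0] * v[1] - u[1] * v[0]) < tol


# Hypothetical data with A = id, n = 2 (so D^A_loc = {j = g}).
nu, b = [1.0, 1.0], 0.5            # fine-layer normal, volume fraction of p_b in L^h
theta, lam = [1.0, 0.0], 0.5       # coarse-layer normal, volume fraction of L^h
s = nu[0] / dot(nu, nu)            # nu_1 / (nu . A nu) for A = id
p_a = ([s * x for x in nu], [s * x for x in nu])  # phase in D^A_loc
p_b = ([0.0, 0.0], [1.0, 0.0])                     # the outlier (0, e_1)
p_L = combo([(1 - b, p_a), (b, p_b)])              # average of the fine laminate
p_A = ([0.75, 0.25], [0.75, 0.25])                 # phase in D^A_loc, chosen compatible
p_C = combo([(lam, p_L), (1 - lam, p_A)])          # average of the coarse laminate

# Volume fractions of the three phases of the iterated laminate.
fractions = [(1 - lam, p_A), (lam * (1 - b), p_a), (lam * b, p_b)]
p_avg = combo(fractions)
```

In this example \(p_L = ((0.25, 0.25), (0.75, 0.25))\) lies on \(\partial _{\mathrm {lat}}C_{1/2}\), and \(p_C = ((0.5, 0.25), (0.75, 0.25))\) is an interior point of \(C_{1/4}\).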

Step 2: Construction of the approximating sequence. From now on, the points \(p_C, p_A, p_L, p_a, p_b\), and the volume fractions \(\lambda \) and b are fixed. In addition to \(\nu \), we introduce the normal vector to the coarse layer interfaces

For every \(k\in \mathbb {Z}\), the coarse layers \(L^h_k\) and \(M^h_k\) are defined as

The unions are denoted as \(L^h := \bigcup _{k\in \mathbb {Z}} L^h_k\) and \(M^h := \bigcup _{k\in \mathbb {Z}} M^h_k\).

The iterated laminate is based on a subdivision of every layer \(L^h_k\). We set

The unions are denoted as \(L^h_b := \bigcup _{k\in \mathbb {Z}} L^h_{k,b}\) and \(L^h_a := \bigcup _{k\in \mathbb {Z}} L^h_{k,a}\).

We define the fields \(g^h\) and \(j^h\) as

By definition, \((g^h,j^h)\in \mathcal {D}\). Using (A.2), we deduce that \(|g^h|+|j^h|\le C(n,A)\) holds in Q. We next define a piecewise affine function \(u^h : \mathbb {R}^n \rightarrow \mathbb {R}\) whose gradient is \(g^h\) on each piece. To construct \(u^h\), we introduce the points \(x_k := k \sqrt{h}\, \theta \in \mathbb {R}^n\) for \(k\in \mathbb {Z}\). The point \(x_k\) is chosen such that, if \(x_k\) happens to lie in Q, it belongs to \(\partial L^h_k \cap \partial M^h_{k-1}\). By construction, the weak limit of \(g^h\) is \(g_C\); we therefore set \(u^h(x_k) := g_C\cdot x_k\). Accordingly, in the layer \(M^h_{k-1}\), we set \(u^h(x) := g_C\cdot x_k + g_A\cdot (x-x_k)\). In the layer \(L^h_{k}\), we define \(u^h\) as the unique continuous function with \(u^h(x_k) = g_C\cdot x_k\), gradient \(g_a\) in \(L^h_{k,a}\), and gradient \(g_b\) in \(L^h_{k,b}\). Such a continuous function exists in \(L^h_{k}\) since \((g_a - g_b)\parallel \nu \), i.e., the two gradients differ only in the direction normal to the fine interfaces.
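The existence of a continuous \(u^h\) rests on a standard compatibility condition: two affine functions with gradients \(g_a\) and \(g_b\) can be glued continuously along a hyperplane with normal \(\nu \) (after adjusting an additive constant) exactly when \(g_a - g_b\) is parallel to \(\nu \). The minimal Python sketch below removes the normal component of \(g_a - g_b\) and tests whether anything is left; it also checks the gluing directly at sample points of one interface. The helper name `glueable_across` and all numbers are hypothetical.

```python
import numpy as np

def glueable_across(g_a, g_b, nu, tol=1e-12):
    """Hypothetical helper: can x -> g_a.x + c and x -> g_b.x agree along a hyperplane
    {x . nu = const} for a suitable constant c?  This holds iff the tangential part of
    g_a - g_b vanishes, i.e. iff g_a - g_b is parallel to nu."""
    d = np.asarray(g_a, dtype=float) - np.asarray(g_b, dtype=float)
    nu = np.asarray(nu, dtype=float)
    tangential = d - (d @ nu) / (nu @ nu) * nu          # remove the component along nu
    return bool(np.linalg.norm(tangential) <= tol * max(1.0, np.linalg.norm(d)))

nu = np.array([0.6, 0.8])
g_b = np.array([1.0, 2.0])
g_a = g_b + 0.5 * nu                                    # jump parallel to nu

# Direct check on the interface {x . nu = c0}: fix the constant at one interface point.
c0 = 0.3
x0 = c0 * nu / (nu @ nu)
const = (g_b - g_a) @ x0
tau = np.array([-nu[1], nu[0]])                         # tangent direction in 2D
pts = x0 + np.outer(np.linspace(-2.0, 2.0, 9), tau)     # points on the interface
gap = np.abs(pts @ g_a + const - pts @ g_b).max()
print(glueable_across(g_a, g_b, nu), glueable_across([1.0, 2.0], [0.0, 2.0], nu), gap)
```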

As in the proof of Lemma 3, we can use a cutoff function \(\varphi _h^k\) in the layer \(L^h_{k}\) to construct \(U^h_k : L^h_{k}\rightarrow \mathbb {R}\) with bounded gradient such that

The function \(U^h\) can be defined on all of Q by setting

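By construction, the function \(U^h\) is continuous. To see this, we follow the affine pieces from \(x_k\) to \(x_{k+1}\): the vector \(\lambda \sqrt{h}\, \theta \) crosses the layer \(L^h_k\) in normal direction, where \(U^h\) has the averaged gradient \(g_L\), and the vector \((1-\lambda ) \sqrt{h}\, \theta \) crosses the layer \(M^h_k\) in normal direction, where \(U^h\) has the gradient \(g_A\). Since \(\lambda g_L + (1-\lambda ) g_A = g_C\), we obtain

\[ g_C\cdot x_k + \lambda \sqrt{h}\, g_L\cdot \theta + (1-\lambda ) \sqrt{h}\, g_A\cdot \theta = g_C\cdot x_k + \sqrt{h}\, g_C\cdot \theta = g_C\cdot x_{k+1}. \]

This is consistent with the choice of \(U^h(x_{k+1})\).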

Furthermore, the function \(U^h\) has a bounded gradient. This can be seen as in the proof of Lemma 3: in the layer \(L^h_k\), the difference between \(u^h(x)\) and \(g_C\cdot x_k + g_L\cdot (x-x_k)\) is of order h (uniformly in x), since \(g_L\) is the average slope of \(u^h\) and \(u^h\) oscillates on the scale h. The gradient of the cutoff function \(\varphi _h^k\) is of order \(h^{-1}\), so the product of these two quantities, which is the only potentially large contribution to \(\nabla U^h\), remains bounded.

The gradient of \(U^h\) coincides with \(g^h\) except on a set of volume at most \(C\sqrt{h}\): this set consists of strips of width h in the layers \(L^h_k\), and there are \(O(1/\sqrt{h})\) such layers. With the choice \(G^h := \nabla U^h\), and since both \(g^h\) and \(G^h\) are bounded, this guarantees \(\Vert g^h - G^h \Vert _{L^2(Q)} \le C h^{1/4} \rightarrow 0\).
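The volume estimate can be made concrete in the single coordinate \(s = x\cdot \theta \) across the coarse layers. The Python sketch below assumes, for illustration only, that the cutoff acts in strips of width h at both faces of each layer of width \(\lambda \sqrt{h}\) in a period of length \(\sqrt{h}\). It measures the fraction of \([0,1]\) covered by these strips and the resulting bound \(\sup |f| \cdot |\mathrm {strips}|^{1/2}\) on the \(L^2\) norm of a function f supported in the strips (with \(\sup |f| = 1\)). The fraction behaves like \(2\sqrt{h}\) and the bound like \(\sqrt{2}\, h^{1/4}\).

```python
import numpy as np

def cutoff_strip_fraction(h, lam=0.5, n=1_000_000):
    """Fraction of s in [0, 1] lying in the cutoff strips of width h at the two
    faces of the layers [k*sqrt(h), (k + lam)*sqrt(h)) (illustrative geometry)."""
    s = (np.arange(n) + 0.5) / n
    period = np.sqrt(h)
    r = np.mod(s, period)                     # position inside one coarse period
    in_L = r < lam * period
    near_face = (r < h) | (r > lam * period - h)
    return np.mean(in_L & near_face)

results = []
for h in (1e-2, 1e-3, 1e-4):
    frac = cutoff_strip_fraction(h)
    l2_bound = np.sqrt(frac)                  # ||f||_{L^2} <= sup|f| * |strips|^(1/2), sup|f| = 1
    results.append((h, frac / (2 * np.sqrt(h)), l2_bound / h ** 0.25))
    print(f"h={h:.0e}  fraction={frac:.4f}  L2 bound={l2_bound:.4f}")
```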

We do not carry out the modification of \(U^h\) at the boundary \(\partial Q\) here. We restrict ourselves to the observation that the weak limit of the sequence \(U^h\) is the function \(U:\mathbb {R}^n\rightarrow \mathbb {R}\), \(U(x) = g_C\cdot x\). Moreover, there holds \(\Vert U^h - U \Vert _{L^\infty } \le C \sqrt{h}\) for some constant \(C>0\) that is independent of h. This fact allows us to use the cutoff argument of Lemma 3 at \(\partial Q\). After this modification, we have \(U^h\rightharpoonup 0\), see Lemma 3.

The construction is complete up to the choice of the sequence \(J^h\), which we postpone to Step 3. At this point, we have found the following functions: \((g^h, j^h)\) are functions that are compatible with the data set, \(G^h\) is a gradient (after the modification at \(\partial Q\), it is the gradient of an \(H^1_0(Q)\)-function), and \(g^h - G^h\) converges strongly in \(L^2(Q;\mathbb {R}^n)\). All functions converge weakly in \(L^2(Q;\mathbb {R}^n)\) with

If an appropriate sequence \(J^h\) can be constructed (with the right divergence and such that the difference to \(j^h\) is strongly convergent), this shows that \(p_C = (g_C, j_C)\) is in the relaxed data set \(\mathcal {D}_*^{\mathrm {relax}}\).

Step 3: The divergence of the approximation. Let us calculate the divergence of \(j^h\). In \(M^h\), the flux is constant, hence \(\nabla \cdot j^h = \nabla \cdot j_A = 0\) in \(M^h\). In \(L^h\), the construction uses the fluxes \(j_a\) and \(j_b\), which satisfy \((j_a - j_b)\cdot \nu = (A g_a - e_1)\cdot \nu = \left( \frac{\nu _1}{\nu \cdot A\nu } A\nu - e_1\right) \cdot \nu = 0\). Thus the normal component of \(j^h\) is continuous across the fine interfaces (whose normal is \(\nu \)), and \(j^h\) satisfies \(\nabla \cdot j^h = 0\) in \(L^h\) in the sense of distributions.
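The identity above can be verified numerically. The Python sketch reads off from it that the fine laminate combines the outlier \((g_b, j_b) = (0, e_1)\) with the point \((g_a, A g_a)\), \(g_a = \frac{\nu _1}{\nu \cdot A\nu }\nu \), of the linear relation; this identification, the random symmetric positive definite matrix A, and the random direction \(\nu \) are our assumptions for illustration. Both jump conditions, tangential continuity of the gradient and normal continuity of the flux, should hold up to rounding.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
M = rng.standard_normal((n, n))
A = M @ M.T + n * np.eye(n)                  # illustrative symmetric positive definite matrix
nu = rng.standard_normal(n)                  # illustrative lamination direction
e1 = np.eye(n)[0]

# States of the fine laminate as read off from the identity in Step 3 (assumption):
g_b, j_b = np.zeros(n), e1                   # the outlier (0, e_1)
g_a = nu[0] / (nu @ A @ nu) * nu             # g_a parallel to nu
j_a = A @ g_a                                # (g_a, j_a) lies on the linear relation j = A g

normal_flux_jump = (j_a - j_b) @ nu          # must vanish: no divergence on the interfaces
d = g_a - g_b
tangential_gradient_jump = d - (d @ nu) / (nu @ nu) * nu   # must vanish: u^h continuous
print(normal_flux_jump, np.linalg.norm(tangential_gradient_jump))
```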

Along \(\partial L^h\), the function \(j^h\) has the jumps

Crucial for the following construction is the fact that the total flux through two consecutive pieces of \(\partial M^h\) vanishes:

After a rescaling by h and a shift to the origin, the local geometry is as follows:

compare the right part of Fig. 3. We emphasize that only three regions of unit dimensions are considered.

We claim that there exists a bounded vector field \(p : \Sigma _L \rightarrow \mathbb {R}^n\) with support in \(\{ x\in \Sigma _L \,|\, x\cdot \theta < 1 \}\) and with the properties

The divergence in the first line is understood in the sense of distributions. In \(\mathbb {R}^2\), the function can be constructed as follows. We use an ansatz with a rotated gradient, \(p := \nabla ^\perp \Phi = (-\partial _2 \Phi , \partial _1 \Phi )\), with a smooth function \(\Phi \) that is piecewise affine on the boundary \(\partial \left( \overline{\Sigma _{L,A}} \cup \overline{\Sigma _{L,B}}\right) \). Since the total flux vanishes by (2.14), \(\Phi \) can be chosen such that it vanishes on \(\partial \left( \overline{\Sigma _{L,A}} \cup \overline{\Sigma _{L,B}}\right) \setminus \partial \Sigma _L\). This allows us, in particular, to choose a compactly supported function \(\Phi \). The rotated gradient p then has all the desired properties. In higher dimensions, the two-dimensional function can be extended as a constant function in the remaining directions.
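The key property of the rotated-gradient ansatz is that \(\nabla \cdot \nabla ^\perp \Phi = -\partial _1\partial _2\Phi + \partial _2\partial _1\Phi = 0\) wherever \(\Phi \) is smooth. The Python sketch below checks this with NumPy finite differences (`np.gradient`, central in the interior) for an illustrative stream function on \([-1,1]^2\) that vanishes together with its first derivatives on the boundary of the square. Because difference operators acting in different coordinate directions commute, the discrete divergence vanishes up to rounding. The choice of \(\Phi \) is ours and is not the function used in the proof.

```python
import numpy as np

n = 201
x = np.linspace(-1.0, 1.0, n)
dx = x[1] - x[0]
X, Y = np.meshgrid(x, x, indexing="ij")        # axis 0 <-> x_1, axis 1 <-> x_2

# Illustrative stream function: vanishes with its first derivatives on the boundary.
Phi = (1 - X**2) ** 2 * (1 - Y**2) ** 2 * (X + 0.5 * Y)

dPhi_dx1 = np.gradient(Phi, dx, axis=0)
dPhi_dx2 = np.gradient(Phi, dx, axis=1)
p1, p2 = -dPhi_dx2, dPhi_dx1                   # p = rotated gradient (-d_2 Phi, d_1 Phi)

div_p = np.gradient(p1, dx, axis=0) + np.gradient(p2, dx, axis=1)
print(np.abs(div_p).max(), np.abs(p1).max())   # divergence ~ rounding, field itself O(1)
```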

Rescaling p as \(p^h(x) := p(x/h)\) and extending the function \(p^h\) first periodically with period h in all directions perpendicular to \(\theta \), then extending the result periodically with period \(\sqrt{h}\) in direction \(\theta \), we obtain a function \(p^h\) that has the same distributional divergence as \(j^h\), see (2.12) and (2.13).

We set \(J^h := j^h - p^h\). This choice ensures \(\nabla \cdot J^h = 0\). Furthermore, the strong convergence \(j^h - J^h = p^h \rightarrow 0\) in \(L^2(Q;\mathbb {R}^n)\) follows from the boundedness of p together with the fact that \(p^h\ne 0\) holds only on a set whose volume fraction is of order \(h/\sqrt{h} = \sqrt{h}\).

This concludes the proof for \(f=0\). If a function \(J^h\) with \(\nabla \cdot J^h = f \ne 0\) has to be constructed, it suffices to add an h-independent function \(J_f\) as in the proof of Lemma 3.

In the case \(f = 0\), the property \(J^h \equiv j\) in a neighborhood of \(\partial Q\) can be achieved by a similar construction: One defines all sequences as constructed above in the smaller domain \(Q_h := \{ x\in Q | \mathrm {dist}(x,\partial Q) > h^{1/4}\}\). In the boundary region \(Q\setminus Q_h\) we set \(U^h\equiv 0\), \(G^h := \nabla U^h = 0\), \(J^h=j\), \(j^h := g^h := 0\). The extension \(J^h\) to all of Q is defined as above with a local construction. The local construction is possible since all averages of \(J^h\) over periodicity cells are given by j. \(\square \)

Remark on the last step in the above proof. The property \(J^h \equiv j\) near \(\partial Q\) can also be obtained with cut-off functions: one constructs \(J^h\) in all of Q by means of a potential with respect to a suitable differential operator, and uses a cut-off function to construct a transition from \(J^h\) to j. Such a construction is used in Lemma 3.14 of [6].

2.2 Approximation of general functions

The next lemma is a variant of Lemma 4. It contains no more than the observation that we can perform a local construction and, at the same time, keep boundary conditions for \(J^h\).

Lemma 5

(Iterated laminates with boundary condition) Let \(({\bar{g}}, {\bar{j}}) \in C\cup \mathcal {D}_\mathrm {loc}^A\) be a point, let \(j \in L^2(Q;\mathbb {R}^n)\) be a function. We set \(F:=\nabla \cdot j\). Then there exist sequences \((G^h, J^h)\in \mathcal {E}_F\) and \((g^h, j^h)\in \mathcal {D}\) that are data convergent with \((g^h, j^h) \rightharpoonup ({\bar{g}}, {\bar{j}})\) and \((G^h, J^h) \rightharpoonup (0,j)\) in \(L^2(Q;\mathbb {R}^n)^2\). The sequences can be chosen such that \(|g^h|+|j^h| \le C(n,A) (1+|{\bar{g}}|)\) pointwise in Q and such that \(J^h \equiv j\) holds in a neighborhood of \(\partial Q\).

Proof

We set \(f=0\) and take \(({\bar{g}}, {\bar{j}})\) as the limit point. Let \((g^h,j^h)\) and \((G^h,{\tilde{J}}^h)\) be sequences in \(\mathcal {D}\) and \(\mathcal {E}_0\) as in Lemma 3 or Lemma 4, with limits \(({\bar{g}}, {\bar{j}})\) and \((0,{\bar{j}})\), respectively. Furthermore, we can assume that \({\tilde{J}}^h\) coincides with \({\bar{j}}\) close to \(\partial Q\).

We set \(J^h := {\tilde{J}}^h - {\bar{j}} + j\). The new sequence differs from \({\tilde{J}}^h\) by the h-independent function \(j - {\bar{j}}\), hence it is still data convergent. Since \({\bar{j}}\) is constant and \(\nabla \cdot {\tilde{J}}^h = 0\), there holds \(\nabla \cdot J^h = F\); moreover, \(J^h \rightharpoonup j\), and \(J^h = j\) near \(\partial Q\). \(\square \)

In the previous subsection, we have shown the local property \(C\cup \mathcal {D}_\mathrm {loc}^A\subset \mathcal {D}_*^{\mathrm {relax}}\). Glueing together the constructions, we now obtain the corresponding approximation result for non-constant functions with values in \(C\cup \mathcal {D}_\mathrm {loc}^A\).

Proposition 1

(Glueing the constructions) There holds

Proof

Let \((g,j) : Q \rightarrow C\cup \mathcal {D}_\mathrm {loc}^A\) of class \(L^2\) be given. Our aim is to construct a sequence \(((g^k, j^k), (G^k, J^k))_k\) in \(\mathcal {D}\times \mathcal {E}_f\).

We set \(F := \nabla \cdot j\). We will construct a sequence with \(\nabla \cdot J^k = F\). This is sufficient, since we can add a function \(J_{f-F}\) with \(\nabla \cdot J_{f-F} = f-F\) in the very last step of the construction. Without loss of generality we assume \(|Q| \le 1\).

Step 1: Lebesgue-points and covering. We use balls \(Q_r(x) := \{y\in \mathbb {R}^n\, |\, |y-x|<r \}\), where \(x\in Q\) is the center and \(r>0\) is the radius; we only consider balls that are contained in Q. Since almost all points \(x\in Q\) are Lebesgue points for g and j, there exists a set of points \(\omega \subset Q\) with full measure, \(|Q\setminus \omega | = 0\), such that, for every \(x\in \omega \):

For arbitrary \(\delta >0\), we consider the sets \(Q_r(x)\) with \(x\in \omega \), \(r>0\), and \(\int _{Q_r(x)} | (g,j) - ({\bar{g}}, {\bar{j}}) |^2 \le \delta |Q_r(x)|\). This family of balls forms a regular Vitali covering of \(\omega \). By the Vitali covering theorem, there exists a countable disjoint subfamily that covers \(\omega \setminus N\) for some \(N\subset Q\) with \(|N| = 0\). Furthermore, we find a finite disjoint family of balls \((Q_i)_{i\in I}\), \(Q_i = Q_{r_i}(x_i)\), with

and

Step 2: Local construction. We can now construct approximations with Lemma 5. In each ball \(Q_i\), \(i\in I\), of the finite and disjoint covering, we use the functions \((G^h_i, J^h_i)\in \mathcal {E}_F(Q_i)\) and \((g^h_i, j^h_i)\in \mathcal {D}(Q_i)\) provided by Lemma 5. Here, \(\mathcal {E}_F(Q_i)\) and \(\mathcal {D}(Q_i)\) are defined analogously to (1.1) and (1.2), with Q replaced by \(Q_i\). We note that \(G^h_i\) is the gradient of a function \(u^h_i\) with \(u^h_i = 0\) on \(\partial Q_i\). The function \(J^h_i\) satisfies \(J^h_i = j\) in a neighborhood of \(\partial Q_i\). This means that these functions can be extended to \(Q\setminus \cup _i Q_i\) by 0 and j, respectively. This provides pairs \((G^h, J^h) \in \mathcal {E}_F\).

Similarly, we can extend the functions \((g^h_i, j^h_i)\in \mathcal {D}(Q_i)\). Because of \((0,0)\in \mathcal {D}^A_\mathrm {loc}\), we can extend both functions by 0 and obtain pairs \((g^h, j^h) \in \mathcal {D}\).

Step 3: Properties of the constructed sequence. Our construction provides, for all \(\delta >0\), a sequence \(h\rightarrow 0\) (that depends on \(\delta \)), and sequences of pairs \((G^h_\delta , J^h_\delta ) \in \mathcal {E}_F\) and \((g^h_\delta , j^h_\delta ) \in \mathcal {D}\).

The data convergence property of the construction in Lemma 5 provides that, for all \(\delta >0\), along a subsequence \(h\rightarrow 0\),

in \(L^2(Q;\mathbb {R}^n)\), with limit functions \(g_\delta =\sum _i \bar{g}_i\, \mathbf{1}_{Q_i}\) and \(j_\delta =\sum _i \bar{j}_i\, \mathbf{1}_{Q_i}\). In particular,

and similarly \(\int _Q | j_\delta - j |^2\le 2 \delta \).
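The role of the balls \(Q_i\) in Steps 1 and 3 is to replace (g, j) by piecewise constant functions with small \(L^2\) error. As a simplified stand-in for the Vitali covering (our substitution: a uniform partition of [0, 1] into equal cells instead of balls centred at Lebesgue points), the following Python sketch replaces an \(L^2\) function of one variable by its cell averages and reports the \(L^2\) error, which decreases as the partition is refined. The function and the helper name are illustrative.

```python
import numpy as np

def cell_average_approximation(values, n_cells):
    """Replace samples of a function on [0, 1] by their averages over n_cells equal cells
    (a uniform-grid stand-in for the disjoint balls Q_i; requires len(values) % n_cells == 0)."""
    cells = values.reshape(n_cells, -1)
    return np.repeat(cells.mean(axis=1), cells.shape[1])

m = 2 ** 16
x = (np.arange(m) + 0.5) / m
# An illustrative L^2 function: discontinuous at x = 0.3 and unbounded at x = 0.
g = np.where(x < 0.3, 1.0, np.sin(8 * x)) + x ** -0.25

errors = []
for n_cells in (4, 16, 64, 256):
    g_delta = cell_average_approximation(g, n_cells)
    errors.append(np.sqrt(np.mean((g - g_delta) ** 2)))   # discrete L^2(0, 1) error
    print(n_cells, errors[-1])
```

Since the partitions are nested, each refinement is an orthogonal projection onto a larger space of piecewise constants, so the error cannot increase.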

The functions \((g^h_\delta ,j^h_\delta )\) are uniformly bounded for all \(0<\delta <1\). In fact, by Lemma 5, we have

Similarly, one obtains the uniform bound for \(j^h_\delta \). From (2.23) and (2.24) we deduce

and, for the fluxes,

The compact embedding \(L^2(Q)\hookrightarrow H^{-1}(Q)\) together with (2.23) and (2.24) yields

We now construct a suitable diagonal sequence \((G^k,J^k,g^k,j^k)_{k\in \mathbb {N}}\). By (2.25), (2.26), and (2.27), we can choose \(\delta (k) \searrow 0\) such that

We then choose \(h(k)\searrow 0\) such that

We finally define \(g^k:=g^{h(k)}_{\delta (k)}\) and analogously \(j^k,G^k,J^k\).

Let us verify the properties. By construction, \((G^k,J^k)\in \mathcal {E}_F\) and \((g^k,j^k)\in \mathcal {D}\). The boundedness of the sequences \((g^k,j^k)_k\), (2.28) and an identification argument show their weak convergence in \(L^2(Q;\mathbb {R}^n)\) to (g, j). By (2.28) we therefore have

This provides the required approximation of (g, j). \(\square \)

Remark on related statements in [6]. Both the statement and the method of proof of Proposition 1 can be compared with Theorem 3.16 in [6]. A difference is that we start from the explicit constructions and do not assume, e.g., that g is a gradient.

3 Necessary conditions for relaxed data points

The goal of this section is to prove the inclusion “\(\subset \)” in (1.11) of Theorem 1. For the proof, we fix an arbitrary pair of functions \((g,j)\in \mathcal {D}^{\mathrm {relax}}\) and show that \((g,j)(x)\in C\cup \mathcal {D}_\mathrm {loc}^A\) for almost all \(x\in Q\).

By Definition 1, the condition \((g,j)\in \mathcal {D}^{\mathrm {relax}}\) means that there exist sequences \((g^h, j^h)\) and \((G^h, J^h)\) and a limit \((G, J)\in \mathcal {E}_f\) such that

as \(h\rightarrow 0\). The pairs \((G^h, J^h)\) are in \(\mathcal {E}_f\), i.e.: \(G^h = \nabla U^h\) is the gradient of some \(U^h\in H^1_0(Q)\) and \(\nabla \cdot J^h = f\). The pairs \((g^h, j^h)\) are in the data set \(\mathcal {D}\) of (1.7).

In the arguments below, the div-curl lemma plays an important role. Data convergence provides the right properties to apply the lemma. The sequences \(G^h\), \(J^h\) have vanishing curl and controlled divergence since \((G^h,J^h)\) belong to \(\mathcal {E}_f\). Therefore the standard formulation of the div-curl lemma in \(L^2(Q;\mathbb {R}^n)\), see for example [11, Theorem 5.2.1], yields the distributional convergence of \(G^h\cdot J^h\). The convergence of the products \(g^h\cdot j^h\) can then be deduced from the strong convergence of differences, which is part of the definition of data convergence.
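A one-dimensional oscillation shows why the divergence control matters. In the Python sketch below (our own example on \((0,1)^2\), with fields depending only on \(x_1\)), \(G^h = \nabla (h\sin (x_1/h))\) is a gradient and \(J^h = (1, \cos (x_1/h))\) is divergence free; their product converges weakly to the product of the weak limits, \(0\cdot (1,0) = 0\). The field \(K^h = (\cos (x_1/h), 0)\) has the same weak limit 0 as \(G^h\) but divergence of order \(h^{-1}\), and \(G^h\cdot K^h = \cos ^2(x_1/h)\) converges weakly to 1/2 instead of 0.

```python
import numpy as np

m = 400_000
x1 = (np.arange(m) + 0.5) / m               # midpoint rule on (0, 1); fields depend on x_1 only
phi = np.sin(np.pi * x1) ** 2               # nonnegative test function vanishing at the ends

def pair(f):
    """Approximate the integral of f * phi over (0, 1)^2 (the x_2-integral is trivial)."""
    return np.mean(f * phi)

h = 1e-3
zero = np.zeros_like(x1)
G = np.stack([np.cos(x1 / h), zero])              # G^h = grad(h sin(x_1/h)): curl free, weak limit 0
J = np.stack([np.ones_like(x1), np.cos(x1 / h)])  # J^h: div free, weak limit (1, 0)
K = np.stack([np.cos(x1 / h), zero])              # K^h: weak limit 0, but div K^h = -sin(x_1/h)/h

pair_GJ = pair((G * J).sum(axis=0))         # div-curl lemma applies: limit G.J = 0
pair_GK = pair((G * K).sum(axis=0))         # no divergence control: tends to (1/2) int(phi) = 1/4
print(pair_GJ, pair_GK)
```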

Proposition 2

(Necessary condition on limit functions) There holds

Proof

We split the proof into several parts.

Step 1: Preparation. In order to prove (3.1), we fix a pair \((g,j)\in L^2(Q;\mathbb {R}^n)^2\) in the relaxed data set \(\mathcal {D}^{\mathrm {relax}}\), which means that there exist sequences \((g^h, j^h)\) in \(\mathcal {D}\) and \((G^h, J^h)\) in \(\mathcal {E}_f\) with data convergence such that \((g^h, j^h)\) weakly converges to (g, j). Our aim is to show \((g(x),j(x))\in C\cup \mathcal {D}_\mathrm {loc}^A\) for almost every \(x\in Q\).

The approximating sequences \((g^h, j^h)\) in \(\mathcal {D}\) and \((G^h, J^h)\) in \(\mathcal {E}_f\) with limit \((G, J)\in \mathcal {E}_f\) satisfy, as \(h\rightarrow 0\),

We denote by \(B^h\subset Q\) those points \(x\in Q\) for which \((g^h(x), j^h(x))\) is in \(\mathcal {D}_\mathrm {loc}^B\),

The complement is denoted as \(A^h := Q\setminus B^h\). Since \(0\le \mathbf{1}_{B^h} \le 1\), we can select a subsequence (not relabeled) and a limit \(b\in L^\infty (Q)\) such that

As a consequence, \(\mathbf{1}_{A^h}\rightarrow (1-b)\) weakly-* in \(L^\infty (Q)\).

Step 2: A relation for averages. For any \(\varphi \in L^2(Q;\mathbb {R}^n)\) we can calculate, using successively the weak convergence of \(g^h\), the property \(g^h(x)=0\) for \(x\in B^h\), the relation \(Ag^h(x)=j^h(x)\) for \(x\in A^h\), the property \(j^h(x)=e_1\) for \(x\in B^h\), and finally the weak convergence of \(j^h\) together with the weak-* convergence of \(\mathbf{1}_{B^h}\):

This shows

In particular, we find \((g,j)(x)\in \mathcal {D}_\mathrm {loc}^A\) for almost all \(x\in \{x\in Q \,|\, b(x) = 0\}\).
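The computation in Step 2 is linear, so the same identity already holds for plain averages over any cell. In the Python sketch below (all data random and purely illustrative), each sample point carries either the outlier \((0, e_1)\) or a pair \((g, Ag)\); the average of j then equals \(b e_1\) plus A times the average of g, where b is the fraction of outlier points. This mirrors the relation \(j = b e_1 + A g\) for the weak limits.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 2, 10_000                        # dimension, number of sample points in a cell
M = rng.standard_normal((n, n))
A = M @ M.T + np.eye(n)                 # illustrative symmetric positive definite matrix
e1 = np.eye(n)[0]

in_B = rng.random(m) < 0.35             # points where (g^h, j^h) is the outlier (0, e_1)
g = rng.standard_normal((m, n))
g[in_B] = 0.0                           # g^h = 0 on B^h
j = g @ A.T                             # j^h = A g^h on A^h (row-wise A g)
j[in_B] = e1                            # j^h = e_1 on B^h

b = in_B.mean()                         # average of the indicator of B^h
g_bar, j_bar = g.mean(axis=0), j.mean(axis=0)
print(np.allclose(j_bar, b * e1 + A @ g_bar))
```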

Step 3: Div-curl lemma. The data convergence properties allow us to calculate the distributional limit of the product \(g^h\cdot j^h\). In the subsequent calculation, we use the standard div-curl lemma in \(L^2(Q;\mathbb {R}^n)\) for the product \(G^h\cdot J^h\), and the strong convergence of differences in the other terms. In the limit \(h\rightarrow 0\), we obtain, for any \(\varphi \in C^\infty _c(Q)\),

Step 4: The cone condition. We choose \(\varepsilon \in (0,1)\) and set \(\beta _\varepsilon := (1-b+\varepsilon )^{-1}\). For arbitrary \(\varphi \in C^\infty _c(Q)\) with \(\varphi \ge 0\) we can calculate, exploiting the positivity and symmetry of A,

Using Step 3 and the weak-* convergence of \(\mathbf{1}_{A^h}\) we deduce

where we have used that \(j=be_1+Ag\). Since \(\varphi \) was arbitrary, almost everywhere in Q holds

Evaluating this inequality in \(\{b=1\} = \{\beta _\varepsilon =\varepsilon ^{-1}\}\), we find \(g\cdot Ag\le \frac{\varepsilon }{2-\varepsilon }g_1\) in this set. Since \(\varepsilon > 0\) was arbitrary and A is positive definite, we find \(g=0\) and, as a consequence of (3.5), \(j=e_1\) almost everywhere in \(\{b=1\}\). In particular, \((g,j)(x)\in \mathcal {D}_\mathrm {loc}^B\subset C\) for almost all \(x\in \{b=1\}\).
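For the reader's convenience, here is one way to spell out the computation behind Step 4. It is our reconstruction from the ingredients named in the text (positivity and symmetry of A, Step 3, the weak-* limit of \(\mathbf{1}_{A^h}\), and \(j = b e_1 + A g\)) and need not coincide line by line with the omitted displays. Since \(g^h = 0\) on \(B^h\) and \(j^h = A g^h\) on \(A^h\), we have \(g^h\cdot j^h = \mathbf{1}_{A^h}\, g^h\cdot A g^h\) and \(\mathbf{1}_{A^h} g^h = g^h\). Hence, for \(\varphi \ge 0\),

\[ 0 \le \mathbf{1}_{A^h}\,\varphi \,(g^h-\beta _\varepsilon g)\cdot A(g^h-\beta _\varepsilon g) = \varphi \, g^h\cdot j^h - 2\beta _\varepsilon \,\varphi \, g^h\cdot A g + \beta _\varepsilon ^2\,\mathbf{1}_{A^h}\,\varphi \, g\cdot A g . \]

Integrating and letting \(h\rightarrow 0\) (Step 3 for the first term, \(g^h\rightharpoonup g\) tested with \(\beta _\varepsilon \varphi A g\in L^2\), and \(\mathbf{1}_{A^h}\rightharpoonup (1-b)\) weakly-* tested with \(\beta _\varepsilon ^2\varphi \, g\cdot Ag\in L^1\)) gives

\[ 0 \le \int _Q \varphi \Bigl ( g\cdot j - 2\beta _\varepsilon \, g\cdot A g + \beta _\varepsilon ^2 (1-b)\, g\cdot A g \Bigr ), \qquad \text {hence}\qquad b\, g_1 + g\cdot A g \ge \beta _\varepsilon \bigl (2 - \beta _\varepsilon (1-b)\bigr )\, g\cdot A g \ \text { a.e. in } Q . \]

On \(\{b=1\}\), where \(\beta _\varepsilon = \varepsilon ^{-1}\), this reads \(g_1 + g\cdot Ag \ge \frac{2}{\varepsilon }\, g\cdot Ag\), which is equivalent to \(g\cdot Ag\le \frac{\varepsilon }{2-\varepsilon }\, g_1\).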

We next consider the set \(\{0<b<1\}\). In this set, for \(\varepsilon \rightarrow 0\), there holds \(\beta _\varepsilon \rightarrow 1/(1-b)\). Relation (3.8) implies

almost everywhere in \(\{0<b<1\}\). This is one of the defining relations of the cone C, compare (1.9). Combined with (3.5), we obtain that \((g,j)\in C\) almost everywhere in \(\{0<b<1\}\).
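Assuming the pointwise inequality of Step 4 reads \(b\,g_1 + g\cdot Ag \ge \beta (2-\beta (1-b))\, g\cdot Ag\) with \(\beta = \beta _\varepsilon \) (our reading of the omitted display), the limit \(\beta _\varepsilon \rightarrow 1/(1-b)\) is exactly the maximizer of the weight \(\beta (2-\beta (1-b))\), whose maximal value is \(1/(1-b)\). With this weight, the inequality is equivalent, for \(0<b<1\), to \(g\cdot Ag \le (1-b)\,g_1\). The Python sketch below checks both statements numerically on a grid of \(\beta \) and on random vectors g; the matrix A and the value of b are illustrative.

```python
import numpy as np

def step4_weight(beta, b):
    # Coefficient of g.Ag on the right-hand side of the assumed Step 4 inequality.
    return beta * (2.0 - beta * (1.0 - b))

b = 0.4
betas = np.linspace(0.01, 5.0, 100_001)
weights = step4_weight(betas, b)
beta_best = betas[np.argmax(weights)]
print(beta_best, 1 / (1 - b))              # maximizer vs. the limit of beta_eps
print(weights.max(), 1 / (1 - b))          # maximal weight vs. 1/(1-b)

# With the optimal weight, "b g_1 + g.Ag >= g.Ag / (1-b)" should be equivalent,
# for 0 < b < 1, to the relation "g.Ag <= (1-b) g_1".
rng = np.random.default_rng(2)
M = rng.standard_normal((3, 3))
A = M @ M.T + np.eye(3)                    # illustrative symmetric positive definite matrix
mismatches = 0
for _ in range(1000):
    g = rng.standard_normal(3)
    q = g @ A @ g
    optimal_form = b * g[0] + q >= q / (1 - b)
    cone_form = q <= (1 - b) * g[0]
    mismatches += int(optimal_form != cone_form)
print("mismatches:", mismatches)
```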

We recall that \((g,j)\in \mathcal {D}_\mathrm {loc}^A\) in the set \(\{ b=0\}\) was already obtained in Step 2. This implies (3.1) and concludes the proof of the proposition. \(\square \)

References

[1] Allaire, G.: Shape Optimization by the Homogenization Method. Applied Mathematical Sciences, vol. 146. Springer, New York (2002)

[2] Ball, J.M.: Convexity conditions and existence theorems in nonlinear elasticity. Arch. Ration. Mech. Anal. 63(4), 337–403 (1976/77)

[3] Ball, J.M., James, R.D.: Fine phase mixtures as minimizers of energy. Arch. Ration. Mech. Anal. 100(1), 13–52 (1987)

[4] Cherkaev, A.: Variational Methods for Structural Optimization. Applied Mathematical Sciences, vol. 140. Springer, New York (2000)

[5] Conti, S., Dolzmann, G.: On the theory of relaxation in nonlinear elasticity with constraints on the determinant. Arch. Ration. Mech. Anal. 217(2), 413–437 (2015)

[6] Conti, S., Müller, S., Ortiz, M.: Data-driven problems in elasticity. Arch. Ration. Mech. Anal. 229(1), 79–123 (2018)

[7] Conti, S., Müller, S., Ortiz, M.: Data-driven finite elasticity. Arch. Ration. Mech. Anal. 237, 1–33 (2020). https://doi.org/10.1007/s00205-020-01490-x

[8] Dacorogna, B.: Weak Continuity and Weak Lower Semicontinuity of Nonlinear Functionals. Lecture Notes in Mathematics, vol. 922. Springer, Berlin-New York (1982)

[9] Dal Maso, G.: An Introduction to \(\Gamma \)-Convergence. Progress in Nonlinear Differential Equations and their Applications, vol. 8. Birkhäuser Boston Inc, Boston, MA (1993)

[10] Eggersmann, R., Kirchdoerfer, T., Reese, S., Stainier, L., Ortiz, M.: Model-free data-driven inelasticity. Comput. Methods Appl. Mech. Eng. 350, 81–99 (2019)

[11] Evans, L.C.: Weak Convergence Methods for Nonlinear Partial Differential Equations. CBMS Regional Conference Series in Mathematics, vol. 74. Published for the Conference Board of the Mathematical Sciences, Washington, DC, by the American Mathematical Society, Providence, RI (1990)

[12] Fonseca, I., Müller, S.: \(A\)-quasiconvexity, lower semicontinuity, and Young measures. SIAM J. Math. Anal. 30(6), 1355–1390 (1999)

[13] Francfort, G.A., Milton, G.W.: Sets of conductivity and elasticity tensors stable under lamination. Commun. Pure Appl. Math. 47(3), 257–279 (1994)

[14] Francfort, G.A., Murat, F.: Homogenization and optimal bounds in linear elasticity. Arch. Ration. Mech. Anal. 94(4), 307–334 (1986)

[15] Hashin, Z., Shtrikman, S.: A variational approach to the theory of the elastic behaviour of multiphase materials. J. Mech. Phys. Solids 11, 127–140 (1963)

[16] Jikov, V.V., Kozlov, S.M., Oleĭnik, O.A.: Homogenization of Differential Operators and Integral Functionals. Translated from the Russian by G.A. Yosifian. Springer, Berlin (1994)

[17] Kanno, Y.: Simple heuristic for data-driven computational elasticity with material data involving noise and outliers: a local robust regression approach. Jpn. J. Ind. Appl. Math. 35(3), 1085–1101 (2018)

[18] Kirchdoerfer, T., Ortiz, M.: Data-driven computational mechanics. Comput. Methods Appl. Mech. Eng. 304, 81–101 (2016)

[19] Kohn, R.V., Milton, G.W.: On bounding the effective conductivity of anisotropic composites. In: Homogenization and Effective Moduli of Materials and Media (Minneapolis, Minn., 1984/1985). IMA Vol. Math. Appl., vol. 1, pp. 97–125. Springer, New York (1986)

[20] Kohn, R.V., Strang, G.: Optimal design and relaxation of variational problems. I. Commun. Pure Appl. Math. 39(1), 113–137 (1986)

[21] Kohn, R.V., Strang, G.: Optimal design and relaxation of variational problems. II. Commun. Pure Appl. Math. 39(2), 139–182 (1986)

[22] Müller, S.: Variational models for microstructure and phase transitions. In: Calculus of Variations and Geometric Evolution Problems (Cetraro, 1996). Lecture Notes in Math., vol. 1713, pp. 85–210. Springer, Berlin (1999)

[23] Murat, F.: Compacité par compensation. II. In: Proceedings of the International Meeting on Recent Methods in Nonlinear Analysis (Rome, 1978), pp. 245–256. Pitagora, Bologna (1979)

[24] Tartar, L.: Compensated compactness and applications to partial differential equations. Nonlinear Anal. Mech. Heriot-Watt Symp. 4, 136–212 (1979)

Acknowledgements

Open Access funding provided by Projekt DEAL.


Additional information

Communicated by J. Ball.


Appendix

We show here a property of the cone C of (1.9) and (1.10): every inner point of the cone can be written as a convex combination of two points on the boundary. The result is nontrivial, since the additional requirement (2.9) has to be satisfied. It was used in the construction of iterated laminates in Lemma 4.

Lemma A.1

Let \(p_C=(g_C,j_C)\in \mathring{C}\) be given. Then there exist two points \(p_A\in \mathcal {D}_{\mathrm {loc}}^A\) and \(p_L\in \partial _{\mathrm {lat}} C\) and a parameter \(\lambda \in (0,1)\) such that (2.8) and (2.9) hold.

Proof

Since \(p_C\in \mathring{C}\) is an inner point of the cone, there exists \(b\in (0,1)\) such that \(j_C = b e_1 + A g_C\) and

We set \(\nu := \alpha g_C\) with \(\alpha := (g_C\cdot A g_C)^{-1/2}\). The choice of \(\alpha \) implies \(\nu \cdot A\nu = 1\). Given \(\nu \), we set

This choice guarantees \((g_A,j_A)\in \mathcal {D}_{\mathrm {loc}}^A\). Next, for some \(b_L\in [0,1]\) to be determined below, we define

The condition \((g_L,j_L)\in \partial _{\mathrm {lat}}C\) is equivalent to the condition

We note that the expression on the right hand side is negative for \(b_L = b\) by (A.1). On the other hand, for \(b_L=1\), the expression on the right hand side is a product of two identical terms and hence nonnegative. By continuity in \(b_L\), there exists a value \(b_L\in (b,1]\) such that the expression vanishes. For this parameter \(b_L\), the above condition is satisfied and hence \((g_L,j_L)\in \partial _{\mathrm {lat}}C\).

We set \(\lambda := \frac{b}{b_L}\in (0,1)\). With this choice, by definition of \(g_L\) in (A.3), we obtain \(g_L = \frac{1}{\lambda }(g_C-g_A) + g_A\) and therefore

Regarding the component j, we find

Together with (A.5), this shows (2.8).

Finally, the definitions of \(g_A,g_L,j_A\), and \(j_L\) imply \(g_A-g_L = \frac{b_L}{b}(g_A-g_C) = b_L\nu _1\nu \) and hence

This shows (2.9) and completes the proof of the lemma. \(\square \)
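The proof of Lemma A.1 is constructive and can be reproduced numerically. The Python sketch below rests on our reading of the omitted displays, which is an assumption: the cone has the form \(\{(g, b e_1 + Ag) : 0\le b\le 1,\ g\cdot Ag \le (1-b) g_1\}\) with lateral boundary given by equality, and \(g_A = g_C + b\nu _1\nu \), \(j_A = A g_A\), \(g_L = g_A - b_L \nu _1 \nu \), \(j_L = b_L e_1 + A g_L\). This is consistent with the relations \(g_A - g_L = \frac{b_L}{b}(g_A - g_C) = b_L\nu _1\nu \) and \(\lambda = b/b_L\) stated in the proof. The parameter \(b_L\in (b,1]\) is found by bisection, using the sign change established in the proof; the function name, the matrix A, and the point \(p_C\) are illustrative.

```python
import numpy as np

def lemma_a1_decomposition(A, g_C, b):
    """Hypothetical numerical version of the construction in Lemma A.1 (see assumptions)."""
    n = len(g_C)
    e1 = np.eye(n)[0]
    nu = g_C / np.sqrt(g_C @ A @ g_C)              # nu = alpha g_C, so that nu.A nu = 1
    g_A = g_C + b * nu[0] * nu

    def g_L_of(bL):
        return g_A - bL * nu[0] * nu

    def defect(bL):                                # zero iff p_L lies on the lateral boundary
        gL = g_L_of(bL)
        return gL @ A @ gL - (1 - bL) * gL[0]

    lo, hi = b, 1.0                                # defect(lo) < 0 <= defect(hi)
    for _ in range(200):                           # bisection
        mid = 0.5 * (lo + hi)
        if defect(mid) < 0:
            lo = mid
        else:
            hi = mid
    b_L = hi
    g_L = g_L_of(b_L)
    p_A = np.concatenate([g_A, A @ g_A])
    p_L = np.concatenate([g_L, b_L * e1 + A @ g_L])
    return p_A, p_L, b / b_L, nu, defect(b_L)

A = np.array([[2.0, 0.5], [0.5, 1.0]])
g_C = np.array([0.1, 0.05])
b = 0.3                                            # g_C.A g_C = 0.0275 < (1 - b) * 0.1 = 0.07
e1 = np.array([1.0, 0.0])
p_C = np.concatenate([g_C, b * e1 + A @ g_C])
p_A, p_L, lam, nu, defect_L = lemma_a1_decomposition(A, g_C, b)

convex_ok = np.allclose(lam * p_L + (1 - lam) * p_A, p_C)   # convex combination
g_jump, j_jump = p_A[:2] - p_L[:2], p_A[2:] - p_L[2:]
tangential = g_jump - (g_jump @ nu) / (nu @ nu) * nu          # rank-one compatibility
print(lam, defect_L, convex_ok, np.linalg.norm(tangential), j_jump @ nu)
```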

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Röger, M., Schweizer, B. Relaxation analysis in a data driven problem with a single outlier. Calc. Var. 59, 119 (2020). https://doi.org/10.1007/s00526-020-01773-x
